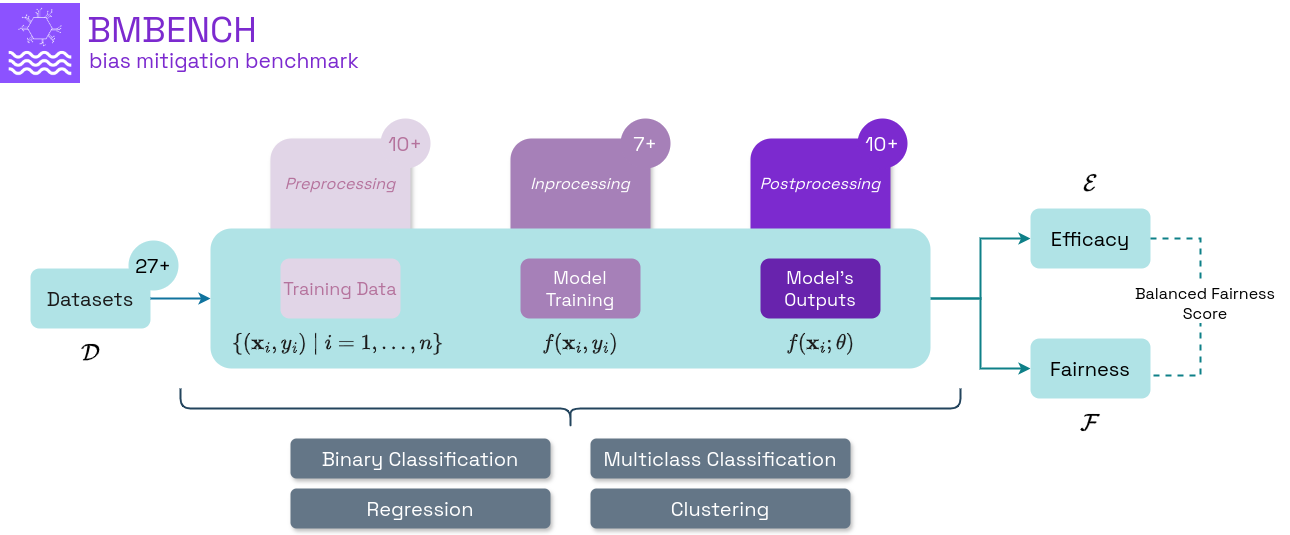

Developing and assessing bias mitigation methods requires rigorous benchmarks. This paper introduces BMBench, a comprehensive benchmarking framework for evaluating bias mitigation strategies across multiple machine learning tasks (binary classification, multiclass classification, regression, and clustering). The benchmark draws on both state-of-the-art and newly proposed datasets and offers a broad range of fairness metrics for robust evaluation of bias mitigation methods. We provide an open-source repository so that researchers can easily test and refine their bias mitigation approaches, supporting the development of fair machine learning models.

@article{dacosta2025bmbench,

author = {da Costa, K. and Munoz, C. and Modenesi, B. and Fernandez, F. and Koshiyama, A.},

title = {BMBENCH: Empirical Benchmarking of Algorithmic Fairness in Machine Learning Models},

journal = {ICCV},

year = {2025},

}