Commit 0d24b07 · Parent(s): c2d9b17

Initial commit from GitMoore-AnimateAnyone project

This view is limited to 50 files because it contains too many changes.

- LICENSE +203 -0

- NOTICE +20 -0

- README.md +272 -13

- app.py +263 -0

- assets/mini_program_maliang.png +0 -0

- configs/inference/inference_v1.yaml +23 -0

- configs/inference/inference_v2.yaml +35 -0

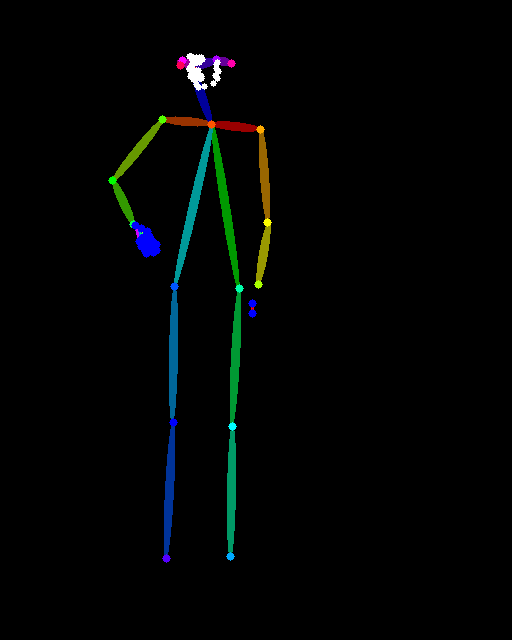

- configs/inference/pose_images/pose-1.png +0 -0

- configs/inference/pose_videos/anyone-video-1_kps.mp4 +0 -0

- configs/inference/pose_videos/anyone-video-2_kps.mp4 +0 -0

- configs/inference/pose_videos/anyone-video-4_kps.mp4 +0 -0

- configs/inference/pose_videos/anyone-video-5_kps.mp4 +0 -0

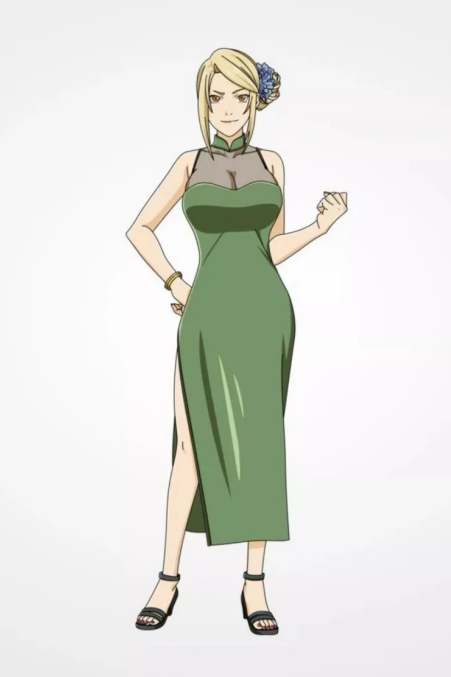

- configs/inference/ref_images/anyone-1.png +0 -0

- configs/inference/ref_images/anyone-10.png +0 -0

- configs/inference/ref_images/anyone-11.png +0 -0

- configs/inference/ref_images/anyone-2.png +0 -0

- configs/inference/ref_images/anyone-3.png +0 -0

- configs/inference/ref_images/anyone-5.png +0 -0

- configs/inference/talkinghead_images/1.png +0 -0

- configs/inference/talkinghead_images/2.png +0 -0

- configs/inference/talkinghead_images/3.png +0 -0

- configs/inference/talkinghead_images/4.png +0 -0

- configs/inference/talkinghead_images/5.png +0 -0

- configs/inference/talkinghead_videos/1.mp4 +0 -0

- configs/inference/talkinghead_videos/2.mp4 +0 -0

- configs/inference/talkinghead_videos/3.mp4 +0 -0

- configs/inference/talkinghead_videos/4.mp4 +0 -0

- configs/prompts/animation.yaml +26 -0

- configs/prompts/inference_reenact.yaml +48 -0

- configs/prompts/test_cases.py +33 -0

- configs/train/stage1.yaml +59 -0

- configs/train/stage2.yaml +59 -0

- output/gradio/20240710T1140.mp4 +0 -0

- output/gradio/20240710T1201.mp4 +0 -0

- pretrained_weights/DWPose/dw-ll_ucoco_384.onnx +3 -0

- pretrained_weights/DWPose/yolox_l.onnx +3 -0

- pretrained_weights/denoising_unet.pth +3 -0

- pretrained_weights/image_encoder/config.json +23 -0

- pretrained_weights/image_encoder/pytorch_model.bin +3 -0

- pretrained_weights/motion_module.pth +3 -0

- pretrained_weights/pose_guider.pth +3 -0

- pretrained_weights/reference_unet.pth +3 -0

- pretrained_weights/sd-vae-ft-mse/config.json +29 -0

- pretrained_weights/sd-vae-ft-mse/diffusion_pytorch_model.bin +3 -0

- pretrained_weights/sd-vae-ft-mse/diffusion_pytorch_model.safetensors +3 -0

- pretrained_weights/stable-diffusion-v1-5/feature_extractor/preprocessor_config.json +20 -0

- pretrained_weights/stable-diffusion-v1-5/model_index.json +32 -0

- pretrained_weights/stable-diffusion-v1-5/unet/config.json +37 -0

- pretrained_weights/stable-diffusion-v1-5/unet/diffusion_pytorch_model.bin +3 -0

- pretrained_weights/stable-diffusion-v1-5/v1-inference.yaml +70 -0

LICENSE

ADDED

|

@@ -0,0 +1,203 @@

| 1 |

+

Copyright @2023-2024 Moore Threads Technology Co., Ltd("Moore Threads"). All rights reserved.

|

| 2 |

+

|

| 3 |

+

Apache License

|

| 4 |

+

Version 2.0, January 2004

|

| 5 |

+

http://www.apache.org/licenses/

|

| 6 |

+

|

| 7 |

+

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

| 8 |

+

|

| 9 |

+

1. Definitions.

|

| 10 |

+

|

| 11 |

+

"License" shall mean the terms and conditions for use, reproduction,

|

| 12 |

+

and distribution as defined by Sections 1 through 9 of this document.

|

| 13 |

+

|

| 14 |

+

"Licensor" shall mean the copyright owner or entity authorized by

|

| 15 |

+

the copyright owner that is granting the License.

|

| 16 |

+

|

| 17 |

+

"Legal Entity" shall mean the union of the acting entity and all

|

| 18 |

+

other entities that control, are controlled by, or are under common

|

| 19 |

+

control with that entity. For the purposes of this definition,

|

| 20 |

+

"control" means (i) the power, direct or indirect, to cause the

|

| 21 |

+

direction or management of such entity, whether by contract or

|

| 22 |

+

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

| 23 |

+

outstanding shares, or (iii) beneficial ownership of such entity.

|

| 24 |

+

|

| 25 |

+

"You" (or "Your") shall mean an individual or Legal Entity

|

| 26 |

+

exercising permissions granted by this License.

|

| 27 |

+

|

| 28 |

+

"Source" form shall mean the preferred form for making modifications,

|

| 29 |

+

including but not limited to software source code, documentation

|

| 30 |

+

source, and configuration files.

|

| 31 |

+

|

| 32 |

+

"Object" form shall mean any form resulting from mechanical

|

| 33 |

+

transformation or translation of a Source form, including but

|

| 34 |

+

not limited to compiled object code, generated documentation,

|

| 35 |

+

and conversions to other media types.

|

| 36 |

+

|

| 37 |

+

"Work" shall mean the work of authorship, whether in Source or

|

| 38 |

+

Object form, made available under the License, as indicated by a

|

| 39 |

+

copyright notice that is included in or attached to the work

|

| 40 |

+

(an example is provided in the Appendix below).

|

| 41 |

+

|

| 42 |

+

"Derivative Works" shall mean any work, whether in Source or Object

|

| 43 |

+

form, that is based on (or derived from) the Work and for which the

|

| 44 |

+

editorial revisions, annotations, elaborations, or other modifications

|

| 45 |

+

represent, as a whole, an original work of authorship. For the purposes

|

| 46 |

+

of this License, Derivative Works shall not include works that remain

|

| 47 |

+

separable from, or merely link (or bind by name) to the interfaces of,

|

| 48 |

+

the Work and Derivative Works thereof.

|

| 49 |

+

|

| 50 |

+

"Contribution" shall mean any work of authorship, including

|

| 51 |

+

the original version of the Work and any modifications or additions

|

| 52 |

+

to that Work or Derivative Works thereof, that is intentionally

|

| 53 |

+

submitted to Licensor for inclusion in the Work by the copyright owner

|

| 54 |

+

or by an individual or Legal Entity authorized to submit on behalf of

|

| 55 |

+

the copyright owner. For the purposes of this definition, "submitted"

|

| 56 |

+

means any form of electronic, verbal, or written communication sent

|

| 57 |

+

to the Licensor or its representatives, including but not limited to

|

| 58 |

+

communication on electronic mailing lists, source code control systems,

|

| 59 |

+

and issue tracking systems that are managed by, or on behalf of, the

|

| 60 |

+

Licensor for the purpose of discussing and improving the Work, but

|

| 61 |

+

excluding communication that is conspicuously marked or otherwise

|

| 62 |

+

designated in writing by the copyright owner as "Not a Contribution."

|

| 63 |

+

|

| 64 |

+

"Contributor" shall mean Licensor and any individual or Legal Entity

|

| 65 |

+

on behalf of whom a Contribution has been received by Licensor and

|

| 66 |

+

subsequently incorporated within the Work.

|

| 67 |

+

|

| 68 |

+

2. Grant of Copyright License. Subject to the terms and conditions of

|

| 69 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 70 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 71 |

+

copyright license to reproduce, prepare Derivative Works of,

|

| 72 |

+

publicly display, publicly perform, sublicense, and distribute the

|

| 73 |

+

Work and such Derivative Works in Source or Object form.

|

| 74 |

+

|

| 75 |

+

3. Grant of Patent License. Subject to the terms and conditions of

|

| 76 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 77 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 78 |

+

(except as stated in this section) patent license to make, have made,

|

| 79 |

+

use, offer to sell, sell, import, and otherwise transfer the Work,

|

| 80 |

+

where such license applies only to those patent claims licensable

|

| 81 |

+

by such Contributor that are necessarily infringed by their

|

| 82 |

+

Contribution(s) alone or by combination of their Contribution(s)

|

| 83 |

+

with the Work to which such Contribution(s) was submitted. If You

|

| 84 |

+

institute patent litigation against any entity (including a

|

| 85 |

+

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

| 86 |

+

or a Contribution incorporated within the Work constitutes direct

|

| 87 |

+

or contributory patent infringement, then any patent licenses

|

| 88 |

+

granted to You under this License for that Work shall terminate

|

| 89 |

+

as of the date such litigation is filed.

|

| 90 |

+

|

| 91 |

+

4. Redistribution. You may reproduce and distribute copies of the

|

| 92 |

+

Work or Derivative Works thereof in any medium, with or without

|

| 93 |

+

modifications, and in Source or Object form, provided that You

|

| 94 |

+

meet the following conditions:

|

| 95 |

+

|

| 96 |

+

(a) You must give any other recipients of the Work or

|

| 97 |

+

Derivative Works a copy of this License; and

|

| 98 |

+

|

| 99 |

+

(b) You must cause any modified files to carry prominent notices

|

| 100 |

+

stating that You changed the files; and

|

| 101 |

+

|

| 102 |

+

(c) You must retain, in the Source form of any Derivative Works

|

| 103 |

+

that You distribute, all copyright, patent, trademark, and

|

| 104 |

+

attribution notices from the Source form of the Work,

|

| 105 |

+

excluding those notices that do not pertain to any part of

|

| 106 |

+

the Derivative Works; and

|

| 107 |

+

|

| 108 |

+

(d) If the Work includes a "NOTICE" text file as part of its

|

| 109 |

+

distribution, then any Derivative Works that You distribute must

|

| 110 |

+

include a readable copy of the attribution notices contained

|

| 111 |

+

within such NOTICE file, excluding those notices that do not

|

| 112 |

+

pertain to any part of the Derivative Works, in at least one

|

| 113 |

+

of the following places: within a NOTICE text file distributed

|

| 114 |

+

as part of the Derivative Works; within the Source form or

|

| 115 |

+

documentation, if provided along with the Derivative Works; or,

|

| 116 |

+

within a display generated by the Derivative Works, if and

|

| 117 |

+

wherever such third-party notices normally appear. The contents

|

| 118 |

+

of the NOTICE file are for informational purposes only and

|

| 119 |

+

do not modify the License. You may add Your own attribution

|

| 120 |

+

notices within Derivative Works that You distribute, alongside

|

| 121 |

+

or as an addendum to the NOTICE text from the Work, provided

|

| 122 |

+

that such additional attribution notices cannot be construed

|

| 123 |

+

as modifying the License.

|

| 124 |

+

|

| 125 |

+

You may add Your own copyright statement to Your modifications and

|

| 126 |

+

may provide additional or different license terms and conditions

|

| 127 |

+

for use, reproduction, or distribution of Your modifications, or

|

| 128 |

+

for any such Derivative Works as a whole, provided Your use,

|

| 129 |

+

reproduction, and distribution of the Work otherwise complies with

|

| 130 |

+

the conditions stated in this License.

|

| 131 |

+

|

| 132 |

+

5. Submission of Contributions. Unless You explicitly state otherwise,

|

| 133 |

+

any Contribution intentionally submitted for inclusion in the Work

|

| 134 |

+

by You to the Licensor shall be under the terms and conditions of

|

| 135 |

+

this License, without any additional terms or conditions.

|

| 136 |

+

Notwithstanding the above, nothing herein shall supersede or modify

|

| 137 |

+

the terms of any separate license agreement you may have executed

|

| 138 |

+

with Licensor regarding such Contributions.

|

| 139 |

+

|

| 140 |

+

6. Trademarks. This License does not grant permission to use the trade

|

| 141 |

+

names, trademarks, service marks, or product names of the Licensor,

|

| 142 |

+

except as required for reasonable and customary use in describing the

|

| 143 |

+

origin of the Work and reproducing the content of the NOTICE file.

|

| 144 |

+

|

| 145 |

+

7. Disclaimer of Warranty. Unless required by applicable law or

|

| 146 |

+

agreed to in writing, Licensor provides the Work (and each

|

| 147 |

+

Contributor provides its Contributions) on an "AS IS" BASIS,

|

| 148 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

| 149 |

+

implied, including, without limitation, any warranties or conditions

|

| 150 |

+

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

| 151 |

+

PARTICULAR PURPOSE. You are solely responsible for determining the

|

| 152 |

+

appropriateness of using or redistributing the Work and assume any

|

| 153 |

+

risks associated with Your exercise of permissions under this License.

|

| 154 |

+

|

| 155 |

+

8. Limitation of Liability. In no event and under no legal theory,

|

| 156 |

+

whether in tort (including negligence), contract, or otherwise,

|

| 157 |

+

unless required by applicable law (such as deliberate and grossly

|

| 158 |

+

negligent acts) or agreed to in writing, shall any Contributor be

|

| 159 |

+

liable to You for damages, including any direct, indirect, special,

|

| 160 |

+

incidental, or consequential damages of any character arising as a

|

| 161 |

+

result of this License or out of the use or inability to use the

|

| 162 |

+

Work (including but not limited to damages for loss of goodwill,

|

| 163 |

+

work stoppage, computer failure or malfunction, or any and all

|

| 164 |

+

other commercial damages or losses), even if such Contributor

|

| 165 |

+

has been advised of the possibility of such damages.

|

| 166 |

+

|

| 167 |

+

9. Accepting Warranty or Additional Liability. While redistributing

|

| 168 |

+

the Work or Derivative Works thereof, You may choose to offer,

|

| 169 |

+

and charge a fee for, acceptance of support, warranty, indemnity,

|

| 170 |

+

or other liability obligations and/or rights consistent with this

|

| 171 |

+

License. However, in accepting such obligations, You may act only

|

| 172 |

+

on Your own behalf and on Your sole responsibility, not on behalf

|

| 173 |

+

of any other Contributor, and only if You agree to indemnify,

|

| 174 |

+

defend, and hold each Contributor harmless for any liability

|

| 175 |

+

incurred by, or claims asserted against, such Contributor by reason

|

| 176 |

+

of your accepting any such warranty or additional liability.

|

| 177 |

+

|

| 178 |

+

END OF TERMS AND CONDITIONS

|

| 179 |

+

|

| 180 |

+

APPENDIX: How to apply the Apache License to your work.

|

| 181 |

+

|

| 182 |

+

To apply the Apache License to your work, attach the following

|

| 183 |

+

boilerplate notice, with the fields enclosed by brackets "[]"

|

| 184 |

+

replaced with your own identifying information. (Don't include

|

| 185 |

+

the brackets!) The text should be enclosed in the appropriate

|

| 186 |

+

comment syntax for the file format. We also recommend that a

|

| 187 |

+

file or class name and description of purpose be included on the

|

| 188 |

+

same "printed page" as the copyright notice for easier

|

| 189 |

+

identification within third-party archives.

|

| 190 |

+

|

| 191 |

+

Copyright [yyyy] [name of copyright owner]

|

| 192 |

+

|

| 193 |

+

Licensed under the Apache License, Version 2.0 (the "License");

|

| 194 |

+

you may not use this file except in compliance with the License.

|

| 195 |

+

You may obtain a copy of the License at

|

| 196 |

+

|

| 197 |

+

http://www.apache.org/licenses/LICENSE-2.0

|

| 198 |

+

|

| 199 |

+

Unless required by applicable law or agreed to in writing, software

|

| 200 |

+

distributed under the License is distributed on an "AS IS" BASIS,

|

| 201 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 202 |

+

See the License for the specific language governing permissions and

|

| 203 |

+

limitations under the License.

|

NOTICE

ADDED

|

@@ -0,0 +1,20 @@

| 1 |

+

==============================================================

|

| 2 |

+

This repo also contains various third-party components and some code modified from other repos under other open source licenses. The following sections contain licensing information for such third-party libraries.

|

| 3 |

+

|

| 4 |

+

-----------------------------

|

| 5 |

+

majic-animate

|

| 6 |

+

BSD 3-Clause License

|

| 7 |

+

Copyright (c) Bytedance Inc.

|

| 8 |

+

|

| 9 |

+

-----------------------------

|

| 10 |

+

animatediff

|

| 11 |

+

Apache License, Version 2.0

|

| 12 |

+

|

| 13 |

+

-----------------------------

|

| 14 |

+

Dwpose

|

| 15 |

+

Apache License, Version 2.0

|

| 16 |

+

|

| 17 |

+

-----------------------------

|

| 18 |

+

inference pipeline for animatediff-cli-prompt-travel

|

| 19 |

+

animatediff-cli-prompt-travel

|

| 20 |

+

Apache License, Version 2.0

|

README.md

CHANGED

|

@@ -1,13 +1,272 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

| 13 |

-

| 1 |

+

# 🤗 Introduction

|

| 2 |

+

**update** 🔥🔥🔥 We propose a face reenactment method based on our AnimateAnyone pipeline: it uses the facial landmarks of a driving video to control the pose of a given source image while preserving the identity of the source image. Specifically, we disentangle head pose (including eye blinks) and mouth motion from the landmarks of the driving video, which allows precise control of the expression and movements of the source face. We release our inference code and pretrained models for face reenactment!!

|

| 3 |

+

|

| 4 |

+

|

| 5 |

+

**update** 🏋️🏋️🏋️ We release our training code!! Now you can train your own AnimateAnyone models. See [here](#train) for more details. Have fun!

|

| 6 |

+

|

| 7 |

+

**update** 🔥🔥🔥 We have launched a HuggingFace Spaces demo of Moore-AnimateAnyone [here](https://huggingface.co/spaces/xunsong/Moore-AnimateAnyone)!!

|

| 8 |

+

|

| 9 |

+

This repository reproduces [AnimateAnyone](https://github.com/HumanAIGC/AnimateAnyone). To match the results demonstrated in the original paper, we adopt various approaches and tricks, which may differ somewhat from the paper and from another [implementation](https://github.com/guoqincode/Open-AnimateAnyone).

|

| 10 |

+

|

| 11 |

+

Note that this is a very preliminary version, which aims to approximate the performance (roughly 80% in our tests) shown in [AnimateAnyone](https://github.com/HumanAIGC/AnimateAnyone).

|

| 12 |

+

|

| 13 |

+

We will continue to develop it, and we welcome feedback and ideas from the community. The enhanced version will also be launched on our [MoBi MaLiang](https://maliang.mthreads.com/) AIGC platform, running on our own full-featured GPU S4000 cloud computing platform.

|

| 14 |

+

|

| 15 |

+

# 📝 Release Plans

|

| 16 |

+

|

| 17 |

+

- [x] Inference codes and pretrained weights of AnimateAnyone

|

| 18 |

+

- [x] Training scripts of AnimateAnyone

|

| 19 |

+

- [x] Inference codes and pretrained weights of face reenactment

|

| 20 |

+

- [ ] Training scripts of face reenactment

|

| 21 |

+

- [ ] Inference scripts of audio driven portrait video generation

|

| 22 |

+

- [ ] Training scripts of audio driven portrait video generation

|

| 23 |

+

# 🎞️ Examples

|

| 24 |

+

|

| 25 |

+

## AnimateAnyone

|

| 26 |

+

|

| 27 |

+

Here are some AnimateAnyone results we generated, at a resolution of 512x768.

|

| 28 |

+

|

| 29 |

+

https://github.com/MooreThreads/Moore-AnimateAnyone/assets/138439222/f0454f30-6726-4ad4-80a7-5b7a15619057

|

| 30 |

+

|

| 31 |

+

https://github.com/MooreThreads/Moore-AnimateAnyone/assets/138439222/337ff231-68a3-4760-a9f9-5113654acf48

|

| 32 |

+

|

| 33 |

+

<table class="center">

|

| 34 |

+

|

| 35 |

+

<tr>

|

| 36 |

+

<td width=50% style="border: none">

|

| 37 |

+

<video controls autoplay loop src="https://github.com/MooreThreads/Moore-AnimateAnyone/assets/138439222/9c4d852e-0a99-4607-8d63-569a1f67a8d2" muted="false"></video>

|

| 38 |

+

</td>

|

| 39 |

+

<td width=50% style="border: none">

|

| 40 |

+

<video controls autoplay loop src="https://github.com/MooreThreads/Moore-AnimateAnyone/assets/138439222/722c6535-2901-4e23-9de9-501b22306ebd" muted="false"></video>

|

| 41 |

+

</td>

|

| 42 |

+

</tr>

|

| 43 |

+

|

| 44 |

+

<tr>

|

| 45 |

+

<td width=50% style="border: none">

|

| 46 |

+

<video controls autoplay loop src="https://github.com/MooreThreads/Moore-AnimateAnyone/assets/138439222/17b907cc-c97e-43cd-af18-b646393c8e8a" muted="false"></video>

|

| 47 |

+

</td>

|

| 48 |

+

<td width=50% style="border: none">

|

| 49 |

+

<video controls autoplay loop src="https://github.com/MooreThreads/Moore-AnimateAnyone/assets/138439222/86f2f6d2-df60-4333-b19b-4c5abcd5999d" muted="false"></video>

|

| 50 |

+

</td>

|

| 51 |

+

</tr>

|

| 52 |

+

</table>

|

| 53 |

+

|

| 54 |

+

**Limitations**: We observe the following shortcomings in the current version:

|

| 55 |

+

1. Artifacts may appear in the background when the reference image has a clean background.

|

| 56 |

+

2. Suboptimal results may arise when there is a scale mismatch between the reference image and keypoints. We have yet to implement preprocessing techniques as mentioned in the [paper](https://arxiv.org/pdf/2311.17117.pdf).

|

| 57 |

+

3. Some flickering and jittering may occur when the motion sequence is subtle or the scene is static.

|

| 58 |

+

|

| 59 |

+

|

| 60 |

+

|

| 61 |

+

These issues will be addressed in the near future. Thank you for your patience!

|

| 62 |

+

|

| 63 |

+

## Face Reenactment

|

| 64 |

+

|

| 65 |

+

Here are some results we generated, at a resolution of 512x512.

|

| 66 |

+

|

| 67 |

+

<table class="center">

|

| 68 |

+

|

| 69 |

+

<tr>

|

| 70 |

+

<td width=50% style="border: none">

|

| 71 |

+

<video controls autoplay loop src="https://github.com/MooreThreads/Moore-AnimateAnyone/assets/117793823/8cfaddec-fb81-485e-88e9-229c0adb8bf9" muted="false"></video>

|

| 72 |

+

</td>

|

| 73 |

+

<td width=50% style="border: none">

|

| 74 |

+

<video controls autoplay loop src="https://github.com/MooreThreads/Moore-AnimateAnyone/assets/117793823/ad06ba29-5bb2-490e-a204-7242c724ba8b" muted="false"></video>

|

| 75 |

+

</td>

|

| 76 |

+

</tr>

|

| 77 |

+

|

| 78 |

+

<tr>

|

| 79 |

+

<td width=50% style="border: none">

|

| 80 |

+

<video controls autoplay loop src="https://github.com/MooreThreads/Moore-AnimateAnyone/assets/117793823/6843cdc0-830b-4f91-87c5-41cd12fbe8c2" muted="false"></video>

|

| 81 |

+

</td>

|

| 82 |

+

<td width=50% style="border: none">

|

| 83 |

+

<video controls autoplay loop src="https://github.com/MooreThreads/Moore-AnimateAnyone/assets/117793823/bb9b8b74-ba4b-4f62-8fd1-7ebf140acc81" muted="false"></video>

|

| 84 |

+

</td>

|

| 85 |

+

</tr>

|

| 86 |

+

</table>

|

| 87 |

+

|

| 88 |

+

|

| 89 |

+

# ⚒️ Installation

|

| 90 |

+

|

| 91 |

+

## Build Environment

|

| 92 |

+

|

| 93 |

+

We recommend Python `>=3.10` and CUDA `=11.7`. Then build the environment as follows:

|

| 94 |

+

|

| 95 |

+

```shell

|

| 96 |

+

# [Optional] Create a virtual env

|

| 97 |

+

python -m venv .venv

|

| 98 |

+

source .venv/bin/activate

|

| 99 |

+

# Install with pip:

|

| 100 |

+

pip install -r requirements.txt

|

| 101 |

+

# For face landmark extraction

|

| 102 |

+

git clone https://github.com/emilianavt/OpenSeeFace.git

|

| 103 |

+

```

|

| 104 |

+

|

| 105 |

+

## Download weights

|

| 106 |

+

|

| 107 |

+

**Automatic download**: You can run the following command to download the weights automatically:

|

| 108 |

+

|

| 109 |

+

```shell

|

| 110 |

+

python tools/download_weights.py

|

| 111 |

+

```

|

| 112 |

+

|

| 113 |

+

Weights will be placed under the `./pretrained_weights` directory. The whole download process may take a long time.

|

| 114 |

+

|

| 115 |

+

**Manual download**: You can also download the weights manually, in the following steps:

|

| 116 |

+

|

| 117 |

+

1. Download our AnimateAnyone trained [weights](https://huggingface.co/patrolli/AnimateAnyone/tree/main), which include four parts: `denoising_unet.pth`, `reference_unet.pth`, `pose_guider.pth` and `motion_module.pth`.

|

| 118 |

+

|

| 119 |

+

2. Download our trained [weights](https://pan.baidu.com/s/1lS5CynyNfYlDbjowKKfG8g?pwd=crci) of face reenactment, and place these weights under `pretrained_weights`.

|

| 120 |

+

|

| 121 |

+

3. Download the pretrained weights of the base models and other components:

|

| 122 |

+

- [StableDiffusion V1.5](https://huggingface.co/runwayml/stable-diffusion-v1-5)

|

| 123 |

+

- [sd-vae-ft-mse](https://huggingface.co/stabilityai/sd-vae-ft-mse)

|

| 124 |

+

- [image_encoder](https://huggingface.co/lambdalabs/sd-image-variations-diffusers/tree/main/image_encoder)

|

| 125 |

+

|

| 126 |

+

4. Download dwpose weights (`dw-ll_ucoco_384.onnx`, `yolox_l.onnx`) following [this](https://github.com/IDEA-Research/DWPose?tab=readme-ov-file#-dwpose-for-controlnet).

|

| 127 |

+

|

| 128 |

+

Finally, these weights should be organized as follows:

|

| 129 |

+

|

| 130 |

+

```text

|

| 131 |

+

./pretrained_weights/

|

| 132 |

+

|-- DWPose

|

| 133 |

+

| |-- dw-ll_ucoco_384.onnx

|

| 134 |

+

| `-- yolox_l.onnx

|

| 135 |

+

|-- image_encoder

|

| 136 |

+

| |-- config.json

|

| 137 |

+

| `-- pytorch_model.bin

|

| 138 |

+

|-- denoising_unet.pth

|

| 139 |

+

|-- motion_module.pth

|

| 140 |

+

|-- pose_guider.pth

|

| 141 |

+

|-- reference_unet.pth

|

| 142 |

+

|-- sd-vae-ft-mse

|

| 143 |

+

| |-- config.json

|

| 144 |

+

| |-- diffusion_pytorch_model.bin

|

| 145 |

+

| `-- diffusion_pytorch_model.safetensors

|

| 146 |

+

|-- reenact

|

| 147 |

+

| |-- denoising_unet.pth

|

| 148 |

+

| |-- reference_unet.pth

|

| 149 |

+

| |-- pose_guider1.pth

|

| 150 |

+

| |-- pose_guider2.pth

|

| 151 |

+

`-- stable-diffusion-v1-5

|

| 152 |

+

    |-- feature_extractor

|

| 153 |

+

    |   `-- preprocessor_config.json

|

| 154 |

+

    |-- model_index.json

|

| 155 |

+

    |-- unet

|

| 156 |

+

    |   |-- config.json

|

| 157 |

+

    |   `-- diffusion_pytorch_model.bin

|

| 158 |

+

    `-- v1-inference.yaml

|

| 159 |

+

```
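Before running inference, it can help to confirm that the layout above is complete. The following is a minimal sketch and not part of the repository: the helper name `missing_weights` and the `EXPECTED_FILES` list are hypothetical, and the list simply transcribes the directory tree shown above.

```python
from pathlib import Path

# Relative paths transcribed from the directory tree above.
# Assumption: the tree is complete; adjust the list if your checkout differs.
EXPECTED_FILES = [
    "DWPose/dw-ll_ucoco_384.onnx",
    "DWPose/yolox_l.onnx",
    "image_encoder/config.json",
    "image_encoder/pytorch_model.bin",
    "denoising_unet.pth",
    "motion_module.pth",
    "pose_guider.pth",
    "reference_unet.pth",
    "sd-vae-ft-mse/config.json",
    "sd-vae-ft-mse/diffusion_pytorch_model.bin",
    "sd-vae-ft-mse/diffusion_pytorch_model.safetensors",
    "reenact/denoising_unet.pth",
    "reenact/reference_unet.pth",
    "reenact/pose_guider1.pth",
    "reenact/pose_guider2.pth",
    "stable-diffusion-v1-5/feature_extractor/preprocessor_config.json",
    "stable-diffusion-v1-5/model_index.json",
    "stable-diffusion-v1-5/unet/config.json",
    "stable-diffusion-v1-5/unet/diffusion_pytorch_model.bin",
    "stable-diffusion-v1-5/v1-inference.yaml",
]


def missing_weights(root="./pretrained_weights"):
    """Return the expected relative paths that are not files under root."""
    base = Path(root)
    return [rel for rel in EXPECTED_FILES if not (base / rel).is_file()]


if __name__ == "__main__":
    missing = missing_weights()
    if missing:
        print("Missing weight files:")
        for rel in missing:
            print("  " + rel)
    else:
        print("All expected weight files are present.")
```

If some models are stored elsewhere and referenced through the config file, the corresponding entries will be reported as missing here even though inference may still work.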

|

| 160 |

+

|

| 161 |

+

Note: If you have already downloaded some of the pretrained models, such as `StableDiffusion V1.5`, you can specify their paths in the config file (e.g. `./configs/prompts/animation.yaml`).

|

| 162 |

+

|

| 163 |

+

# 🚀 Training and Inference

|

| 164 |

+

|

| 165 |

+

## Inference of AnimateAnyone

|

| 166 |

+

|

| 167 |

+

Here is the CLI command for running the inference script:

|

| 168 |

+

|

| 169 |

+

```shell

|

| 170 |

+

python -m scripts.pose2vid --config ./configs/prompts/animation.yaml -W 512 -H 784 -L 64

|

| 171 |

+

```

|

| 172 |

+

|

| 173 |

+

You can refer to the format of `animation.yaml` to add your own reference images or pose videos. To convert a raw video into a pose video (keypoint sequence), run the following command:

|

| 174 |

+

|

| 175 |

+

```shell
python tools/vid2pose.py --video_path /path/to/your/video.mp4
```
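
When several raw videos need converting, the command above can be generated once per file. The sketch below is an illustration under stated assumptions: the helper `build_vid2pose_commands` is hypothetical, and the only things taken from the README are the script path `tools/vid2pose.py` and its `--video_path` flag.

```python
import shlex
from pathlib import Path


def build_vid2pose_commands(video_dir, pattern="*.mp4"):
    """Return one `tools/vid2pose.py` command (as an argv list) per matching video.

    `video_dir` and `pattern` are illustrative parameters, not options of the tool.
    """
    videos = sorted(Path(video_dir).glob(pattern))
    return [
        ["python", "tools/vid2pose.py", "--video_path", str(video)]
        for video in videos
    ]


def format_commands(commands):
    """Render argv lists as shell-quoted command lines for review before running."""
    return [shlex.join(argv) for argv in commands]
```

Each argv list can then be passed to `subprocess.run(argv, check=True)` from the repository root, which avoids quoting problems with paths that contain spaces.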

|

| 178 |

+

|

| 179 |

+

## Inference of Face Reenactment

|

| 180 |

+

Here is the CLI command for running the inference script:

|

| 181 |

+

|

| 182 |

+

```shell
python -m scripts.lmks2vid --config ./configs/prompts/inference_reenact.yaml --driving_video_path YOUR_OWN_DRIVING_VIDEO_PATH --source_image_path YOUR_OWN_SOURCE_IMAGE_PATH
```

|

| 185 |

+

We provide some face images in `./configs/inference/talkinghead_images` and some face videos in `./configs/inference/talkinghead_videos` for inference.

|

| 186 |

+

|

| 187 |

+

## <span id="train"> Training of AnimateAnyone </span>

|

| 188 |

+

|

| 189 |

+

Note: Package dependencies have been updated; you may need to upgrade your environment via `pip install -r requirements.txt` before training.

|

| 190 |

+

|

| 191 |

+

### Data Preparation

|

| 192 |

+

|

| 193 |

+

Extract keypoints from raw videos:

|

| 194 |

+

|

| 195 |

+

```shell
python tools/extract_dwpose_from_vid.py --video_root /path/to/your/video_dir
```

|

| 198 |

+

|

| 199 |

+

Extract the meta info of dataset:

|

| 200 |

+

|

| 201 |

+

```shell
python tools/extract_meta_info.py --root_path /path/to/your/video_dir --dataset_name anyone
```

|

| 204 |

+

|

| 205 |

+

Update lines in the training config file:

|

| 206 |

+

|

| 207 |

+

```yaml
data:
  meta_paths:
    - "./data/anyone_meta.json"
```
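
Before starting a training run, it may be worth checking that the meta file points at files that actually exist. The sketch below rests on assumptions that the README does not state: it assumes the meta file is a JSON list of objects and that each object stores file locations under the keys `video_path` and `kps_path`. Both key names and the helper `check_meta_file` are hypothetical, so adjust them to whatever `tools/extract_meta_info.py` actually writes.

```python
import json
from pathlib import Path


def check_meta_file(meta_path, keys=("video_path", "kps_path")):
    """Return (entry_index, key, value) for every referenced file that is missing.

    Assumes `meta_path` is a JSON list of objects; `keys` are assumed field names.
    """
    with open(meta_path, "r", encoding="utf-8") as f:
        entries = json.load(f)

    problems = []
    for index, entry in enumerate(entries):
        for key in keys:
            value = entry.get(key)
            # A missing key and a path that is not a file are both reported.
            if value is None or not Path(value).is_file():
                problems.append((index, key, value))
    return problems
```

An empty return value from `check_meta_file("./data/anyone_meta.json")` means every referenced file was found; otherwise each tuple identifies the entry and field to fix.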

|

| 212 |

+

|

| 213 |

+

### Stage1

|

| 214 |

+

|

| 215 |

+

Put the [openpose controlnet weights](https://huggingface.co/lllyasviel/control_v11p_sd15_openpose/tree/main) under `./pretrained_weights`; they are used to initialize the pose_guider.

|

| 216 |

+

|

| 217 |

+

Put [sd-image-variation](https://huggingface.co/lambdalabs/sd-image-variations-diffusers/tree/main) under `./pretrained_weights`; it is used to initialize the unet weights.

|

| 218 |

+

|

| 219 |

+

Run command:

|

| 220 |

+

|

| 221 |

+

```shell
accelerate launch train_stage_1.py --config configs/train/stage1.yaml
```

|

| 224 |

+

|

| 225 |

+

### Stage2

|

| 226 |

+

|

| 227 |

+

Put the pretrained motion module weights `mm_sd_v15_v2.ckpt` ([download link](https://huggingface.co/guoyww/animatediff/blob/main/mm_sd_v15_v2.ckpt)) under `./pretrained_weights`.

|

| 228 |

+

|

| 229 |

+

Specify the stage1 training weights in the config file `stage2.yaml`, for example:

|

| 230 |

+

|

| 231 |

+

```yaml
stage1_ckpt_dir: './exp_output/stage1'
stage1_ckpt_step: 30000
```

|

| 235 |

+

|

| 236 |

+

Run command:

|

| 237 |

+

|

| 238 |

+

```shell
accelerate launch train_stage_2.py --config configs/train/stage2.yaml
```

|

| 241 |

+

|

| 242 |

+

# 🎨 Gradio Demo

|

| 243 |

+

|

| 244 |

+

**HuggingFace Demo**: We launch a quick preview demo of Moore-AnimateAnyone at [HuggingFace Spaces](https://huggingface.co/spaces/xunsong/Moore-AnimateAnyone)!!

|

| 245 |

+

We appreciate the assistance provided by the HuggingFace team in setting up this demo.

|

| 246 |

+

|

| 247 |

+

To reduce waiting time, we limit the size (width, height, and length) and inference steps when generating videos.

|

| 248 |

+

|

| 249 |

+

If you have your own GPU resources (>= 16 GB VRAM), you can run a local Gradio app with the following command:

|

| 250 |

+

|

| 251 |

+

`python app.py`

|

| 252 |

+

|

| 253 |

+

# Community Contributions

|

| 254 |

+

|

| 255 |

+

- Installation for Windows users: [Moore-AnimateAnyone-for-windows](https://github.com/sdbds/Moore-AnimateAnyone-for-windows)

|

| 256 |

+

|

| 257 |

+

# 🖌️ Try on Mobi MaLiang

|

| 258 |

+

|

| 259 |

+

We have launched this model on our [MoBi MaLiang](https://maliang.mthreads.com/) AIGC platform, running on our own full-featured GPU S4000 cloud computing platform. Mobi MaLiang has now integrated various AIGC applications and functionalities (e.g. text-to-image, controllable generation...). You can experience it by [clicking this link](https://maliang.mthreads.com/) or scanning the QR code below via WeChat!

|

| 260 |

+

|

| 261 |

+

<p align="left">

|

| 262 |

+

<img src="assets/mini_program_maliang.png" width="100

|

| 263 |

+

"/>

|

| 264 |

+

</p>

|

| 265 |

+

|

| 266 |

+

# ⚖️ Disclaimer

|

| 267 |

+

|

| 268 |

+

This project is intended for academic research, and we explicitly disclaim any responsibility for user-generated content. Users are solely liable for their actions while using the generative model. The project contributors have no legal affiliation with, nor accountability for, users' behaviors. It is imperative to use the generative model responsibly, adhering to both ethical and legal standards.

|

| 269 |

+

|

| 270 |

+

# 🙏🏻 Acknowledgements

|

| 271 |

+

|

| 272 |

+

We first thank the authors of [AnimateAnyone](). Additionally, we would like to thank the contributors to the [magic-animate](https://github.com/magic-research/magic-animate), [animatediff](https://github.com/guoyww/AnimateDiff) and [Open-AnimateAnyone](https://github.com/guoqincode/Open-AnimateAnyone) repositories for their open research and exploration. Furthermore, our repo incorporates some code from [dwpose](https://github.com/IDEA-Research/DWPose) and [animatediff-cli-prompt-travel](https://github.com/s9roll7/animatediff-cli-prompt-travel/), and we extend our thanks to them as well.

|

app.py

ADDED

|

@@ -0,0 +1,263 @@

|


import os
import random
from datetime import datetime

import gradio as gr
import numpy as np
import torch
from diffusers import AutoencoderKL, DDIMScheduler
from einops import repeat
from omegaconf import OmegaConf
from PIL import Image
from torchvision import transforms
from transformers import CLIPVisionModelWithProjection

from src.models.pose_guider import PoseGuider
from src.models.unet_2d_condition import UNet2DConditionModel
from src.models.unet_3d import UNet3DConditionModel
from src.pipelines.pipeline_pose2vid_long import Pose2VideoPipeline
from src.utils.util import get_fps, read_frames, save_videos_grid


class AnimateController:
    def __init__(
        self,
        config_path="./configs/prompts/animation.yaml",
        weight_dtype=torch.float16,
    ):
        # Read pretrained weights path from config
        self.config = OmegaConf.load(config_path)
        self.pipeline = None
        self.weight_dtype = weight_dtype

    def animate(
        self,
        ref_image,
        pose_video_path,
        width=512,
        height=768,
        length=24,
        num_inference_steps=25,
        cfg=3.5,
        seed=123,
    ):
        generator = torch.manual_seed(seed)
        if isinstance(ref_image, np.ndarray):
            ref_image = Image.fromarray(ref_image)
        if self.pipeline is None:
            vae = AutoencoderKL.from_pretrained(
                self.config.pretrained_vae_path,
            ).to("cuda", dtype=self.weight_dtype)

            reference_unet = UNet2DConditionModel.from_pretrained(
                self.config.pretrained_base_model_path,
                subfolder="unet",
            ).to(dtype=self.weight_dtype, device="cuda")

            inference_config_path = self.config.inference_config
            infer_config = OmegaConf.load(inference_config_path)
            denoising_unet = UNet3DConditionModel.from_pretrained_2d(
                self.config.pretrained_base_model_path,
                self.config.motion_module_path,
                subfolder="unet",
                unet_additional_kwargs=infer_config.unet_additional_kwargs,
            ).to(dtype=self.weight_dtype, device="cuda")

            pose_guider = PoseGuider(320, block_out_channels=(16, 32, 96, 256)).to(
                dtype=self.weight_dtype, device="cuda"
            )

            image_enc = CLIPVisionModelWithProjection.from_pretrained(
                self.config.image_encoder_path
            ).to(dtype=self.weight_dtype, device="cuda")
            sched_kwargs = OmegaConf.to_container(infer_config.noise_scheduler_kwargs)
            scheduler = DDIMScheduler(**sched_kwargs)

            # load pretrained weights
            denoising_unet.load_state_dict(
                torch.load(self.config.denoising_unet_path, map_location="cpu"),
                strict=False,
            )
            reference_unet.load_state_dict(
                torch.load(self.config.reference_unet_path, map_location="cpu"),
            )
            pose_guider.load_state_dict(
                torch.load(self.config.pose_guider_path, map_location="cpu"),
            )

            pipe = Pose2VideoPipeline(
                vae=vae,
                image_encoder=image_enc,
                reference_unet=reference_unet,
                denoising_unet=denoising_unet,
                pose_guider=pose_guider,
                scheduler=scheduler,
            )
            pipe = pipe.to("cuda", dtype=self.weight_dtype)
            self.pipeline = pipe

        pose_images = read_frames(pose_video_path)
        src_fps = get_fps(pose_video_path)

        pose_list = []
        pose_tensor_list = []
        pose_transform = transforms.Compose(
            [transforms.Resize((height, width)), transforms.ToTensor()]
        )
        for pose_image_pil in pose_images[:length]:
            pose_list.append(pose_image_pil)
            pose_tensor_list.append(pose_transform(pose_image_pil))

        video = self.pipeline(
            ref_image,
            pose_list,
            width=width,
            height=height,
            video_length=length,
            num_inference_steps=num_inference_steps,
            guidance_scale=cfg,
            generator=generator,
        ).videos

        ref_image_tensor = pose_transform(ref_image)  # (c, h, w)
        ref_image_tensor = ref_image_tensor.unsqueeze(1).unsqueeze(0)  # (1, c, 1, h, w)
        ref_image_tensor = repeat(
            ref_image_tensor, "b c f h w -> b c (repeat f) h w", repeat=length
        )
        pose_tensor = torch.stack(pose_tensor_list, dim=0)  # (f, c, h, w)
        pose_tensor = pose_tensor.transpose(0, 1)
        pose_tensor = pose_tensor.unsqueeze(0)
        video = torch.cat([ref_image_tensor, pose_tensor, video], dim=0)

        save_dir = f"./output/gradio"
        if not os.path.exists(save_dir):
            os.makedirs(save_dir, exist_ok=True)
        date_str = datetime.now().strftime("%Y%m%d")
        time_str = datetime.now().strftime("%H%M")
        out_path = os.path.join(save_dir, f"{date_str}T{time_str}.mp4")
        save_videos_grid(
            video,
            out_path,
            n_rows=3,
            fps=src_fps,
        )

        torch.cuda.empty_cache()

        return out_path


controller = AnimateController()



def ui():
    with gr.Blocks() as demo:
        gr.Markdown(
            """
            # Moore-AnimateAnyone Demo
            """
        )
        animation = gr.Video(
            format="mp4",
            label="Animation Results",
            height=448,
            autoplay=True,
        )

        with gr.Row():
            reference_image = gr.Image(label="Reference Image")
            motion_sequence = gr.Video(
                format="mp4", label="Motion Sequence", height=512
            )

            with gr.Column():
                width_slider = gr.Slider(
                    label="Width", minimum=448, maximum=768, value=512, step=64
                )
                height_slider = gr.Slider(
                    label="Height", minimum=512, maximum=1024, value=768, step=64
                )
                length_slider = gr.Slider(
                    label="Video Length", minimum=24, maximum=128, value=24, step=24
                )
                with gr.Row():
                    seed_textbox = gr.Textbox(label="Seed", value=-1)
                    seed_button = gr.Button(
                        value="\U0001F3B2", elem_classes="toolbutton"
                    )
                    seed_button.click(
                        # random.randint requires integer bounds, so use 10**8 rather than the float 1e8
                        fn=lambda: gr.Textbox.update(value=random.randint(1, 10**8)),
                        inputs=[],
                        outputs=[seed_textbox],
                    )
                with gr.Row():
                    sampling_steps = gr.Slider(
                        label="Sampling steps",
                        value=25,
                        info="default: 25",
                        step=5,
                        maximum=30,
                        minimum=10,
                    )
                    guidance_scale = gr.Slider(
                        label="Guidance scale",
                        value=3.5,
                        info="default: 3.5",
                        step=0.5,
                        maximum=10,
                        minimum=2.0,
                    )
                submit = gr.Button("Animate")

        def read_video(video):
            return video

        def read_image(image):
            return Image.fromarray(image)

        # when user uploads a new video
        motion_sequence.upload(read_video, motion_sequence, motion_sequence)
        # when user uploads a new reference image
        reference_image.upload(read_image, reference_image, reference_image)
        # when the `submit` button is clicked
        submit.click(
            controller.animate,
            [
                reference_image,
                motion_sequence,
                width_slider,
                height_slider,
                length_slider,
                sampling_steps,
                guidance_scale,
                seed_textbox,
            ],
            animation,
        )

        # Examples
        gr.Markdown("## Examples")
        gr.Examples(
            examples=[
                [
                    "./configs/inference/ref_images/anyone-5.png",
                    "./configs/inference/pose_videos/anyone-video-2_kps.mp4",
                ],
                [
                    "./configs/inference/ref_images/anyone-10.png",

|

| 248 |

+