Spaces:

Runtime error

Runtime error

Commit

·

e248cd9

1

Parent(s):

e0ae9ac

Upload 12 files

Browse files- README.md +110 -12

- app.py +320 -0

- config.json +33 -0

- demo.ipynb +0 -0

- figs/interface.png +0 -0

- figs/response.png +0 -0

- figs/title.png +0 -0

- prepare_data.ipynb +1545 -0

- requirements.txt +7 -0

- train.sh +21 -0

- train_lora.py +221 -0

- utils.py +162 -0

README.md

CHANGED

|

@@ -1,12 +1,110 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

|

| 2 |

+

|

| 3 |

+

## What is FinGPT-Forecaster?

|

| 4 |

+

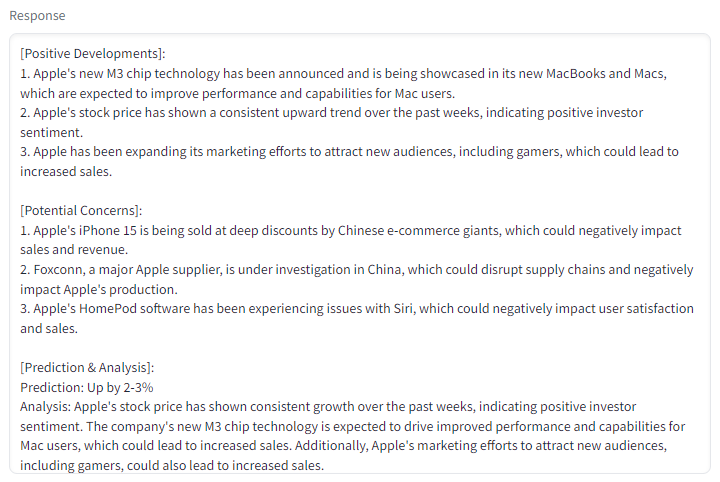

- FinGPT-Forecaster takes market news and optional basic financials related to the specified company from the past few weeks as input and responds with the company's **positive developments** and **potential concerns**. Then it gives out a **prediction** of stock price movement for the coming week and its **analysis** summary.

|

| 5 |

+

- FinGPT-Forecaster is finetuned on Llama-2-7b-chat-hf with LoRA on the past year's DOW30 market data. But also has shown great generalization ability on other ticker symbols.

|

| 6 |

+

- FinGPT-Forecaster is an easy-to-deploy junior robo-advisor, a milestone towards our goal.

|

| 7 |

+

|

| 8 |

+

## Try out the demo!

|

| 9 |

+

|

| 10 |

+

Try our demo at <https://huggingface.co/spaces/FinGPT/FinGPT-Forecaster>

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

|

| 14 |

+

Enter the following inputs:

|

| 15 |

+

|

| 16 |

+

1) ticker symbol (e.g. AAPL, MSFT, NVDA)

|

| 17 |

+

2) the day from which you want the prediction to happen (yyyy-mm-dd)

|

| 18 |

+

3) the number of past weeks where market news are retrieved

|

| 19 |

+

4) whether to add latest basic financials as additional information

|

| 20 |

+

|

| 21 |

+

Then, click Submit!You'll get a response like this

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

|

| 25 |

+

This is just a demo showing what this model is capable of. Results inferred from randomly chosen news can be strongly biased.

|

| 26 |

+

For more detailed and customized usage, scroll down and continue your reading.

|

| 27 |

+

|

| 28 |

+

## Deploy FinGPT-Forecaster

|

| 29 |

+

|

| 30 |

+

We have released our FinGPT-Forecaster trained on DOW30 market data from 2022-12-30 to 2023-9-1 on HuggingFace: [fingpt-forecaster_dow30_llama2-7b_lora](https://huggingface.co/FinGPT/fingpt-forecaster_dow30_llama2-7b_lora)

|

| 31 |

+

|

| 32 |

+

We have most of the key requirements in `requirements.txt`. Before you start, do `pip install -r requirements.txt`. Then you can refer to `demo.ipynb` for our deployment and evaluation script.

|

| 33 |

+

|

| 34 |

+

First let's load the model:

|

| 35 |

+

|

| 36 |

+

```

|

| 37 |

+

from datasets import load_dataset

|

| 38 |

+

from transformers import AutoTokenizer, AutoModelForCausalLM

|

| 39 |

+

from peft import PeftModel

|

| 40 |

+

|

| 41 |

+

|

| 42 |

+

base_model = AutoModelForCausalLM.from_pretrained(

|

| 43 |

+

'meta-llama/Llama-2-7b-chat-hf',

|

| 44 |

+

trust_remote_code=True,

|

| 45 |

+

device_map="auto",

|

| 46 |

+

torch_dtype=torch.float16, # optional if you have enough VRAM

|

| 47 |

+

)

|

| 48 |

+

tokenizer = AutoTokenizer.from_pretrained('meta-llama/Llama-2-7b-chat-hf')

|

| 49 |

+

|

| 50 |

+

model = PeftModel.from_pretrained(base_model, 'FinGPT/fingpt-forecaster_dow30_llama2-7b_lora')

|

| 51 |

+

model = model.eval()

|

| 52 |

+

```

|

| 53 |

+

|

| 54 |

+

Then you are ready to go, prepare your prompt with news & stock price movements in llama format (which we'll mention in the next section), and generate your own forecasting results!

|

| 55 |

+

```

|

| 56 |

+

B_INST, E_INST = "[INST]", "[/INST]"

|

| 57 |

+

B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"

|

| 58 |

+

|

| 59 |

+

prompt = B_INST + B_SYS + {SYSTEM_PROMPT} + E_SYS + {YOUR_PROMPT} + E_INST

|

| 60 |

+

inputs = tokenizer(

|

| 61 |

+

prompt, return_tensors='pt'

|

| 62 |

+

)

|

| 63 |

+

inputs = {key: value.to(model.device) for key, value in inputs.items()}

|

| 64 |

+

|

| 65 |

+

res = model.generate(

|

| 66 |

+

**inputs, max_length=4096, do_sample=True,

|

| 67 |

+

eos_token_id=tokenizer.eos_token_id,

|

| 68 |

+

use_cache=True

|

| 69 |

+

)

|

| 70 |

+

output = tokenizer.decode(res[0], skip_special_tokens=True)

|

| 71 |

+

answer = re.sub(r'.*\[/INST\]\s*', '', output, flags=re.DOTALL) # don't forget to import re

|

| 72 |

+

```

|

| 73 |

+

|

| 74 |

+

## Data Preparation

|

| 75 |

+

Company profile & Market news & Basic financials & Stock prices are retrieved using **yfinance & finnhub**.

|

| 76 |

+

|

| 77 |

+

Prompts used are organized as below:

|

| 78 |

+

|

| 79 |

+

```

|

| 80 |

+

SYSTEM_PROMPT = "You are a seasoned stock market analyst. Your task is to list the positive developments and potential concerns for companies based on relevant news and basic financials from the past weeks, then provide an analysis and prediction for the companies' stock price movement for the upcoming week. Your answer format should be as follows:\n\n[Positive Developments]:\n1. ...\n\n[Potential Concerns]:\n1. ...\n\n[Prediction & Analysis]:\n...\n"

|

| 81 |

+

|

| 82 |

+

prompt = """

|

| 83 |

+

[Company Introduction]:

|

| 84 |

+

|

| 85 |

+

{name} is a leading entity in the {finnhubIndustry} sector. Incorporated and publicly traded since {ipo}, the company has established its reputation as one of the key players in the market. As of today, {name} has a market capitalization of {marketCapitalization:.2f} in {currency}, with {shareOutstanding:.2f} shares outstanding. {name} operates primarily in the {country}, trading under the ticker {ticker} on the {exchange}. As a dominant force in the {finnhubIndustry} space, the company continues to innovate and drive progress within the industry.

|

| 86 |

+

|

| 87 |

+

From {startDate} to {endDate}, {name}'s stock price {increase/decrease} from {startPrice} to {endPrice}. Company news during this period are listed below:

|

| 88 |

+

|

| 89 |

+

[Headline]: ...

|

| 90 |

+

[Summary]: ...

|

| 91 |

+

|

| 92 |

+

[Headline]: ...

|

| 93 |

+

[Summary]: ...

|

| 94 |

+

|

| 95 |

+

Some recent basic financials of {name}, reported at {date}, are presented below:

|

| 96 |

+

|

| 97 |

+

[Basic Financials]:

|

| 98 |

+

{attr1}: {value1}

|

| 99 |

+

{attr2}: {value2}

|

| 100 |

+

...

|

| 101 |

+

|

| 102 |

+

Based on all the information before {curday}, let's first analyze the positive developments and potential concerns for {symbol}. Come up with 2-4 most important factors respectively and keep them concise. Most factors should be inferred from company-related news. Then make your prediction of the {symbol} stock price movement for next week ({period}). Provide a summary analysis to support your prediction.

|

| 103 |

+

|

| 104 |

+

"""

|

| 105 |

+

```

|

| 106 |

+

## Train your own FinGPT-Forecaster

|

| 107 |

+

|

| 108 |

+

|

| 109 |

+

|

| 110 |

+

**Disclaimer: Nothing herein is financial advice, and NOT a recommendation to trade real money. Please use common sense and always first consult a professional before trading or investing.**

|

app.py

ADDED

|

@@ -0,0 +1,320 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import os

|

| 2 |

+

import re

|

| 3 |

+

import time

|

| 4 |

+

import json

|

| 5 |

+

import random

|

| 6 |

+

import finnhub

|

| 7 |

+

import torch

|

| 8 |

+

import gradio as gr

|

| 9 |

+

import pandas as pd

|

| 10 |

+

import yfinance as yf

|

| 11 |

+

from pynvml import *

|

| 12 |

+

from peft import PeftModel

|

| 13 |

+

from collections import defaultdict

|

| 14 |

+

from datetime import date, datetime, timedelta

|

| 15 |

+

from transformers import AutoTokenizer, AutoModelForCausalLM, TextStreamer

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

access_token = os.environ["HF_TOKEN"]

|

| 19 |

+

finnhub_client = finnhub.Client(api_key=os.environ["FINNHUB_API_KEY"])

|

| 20 |

+

|

| 21 |

+

base_model = AutoModelForCausalLM.from_pretrained(

|

| 22 |

+

'meta-llama/Llama-2-7b-chat-hf',

|

| 23 |

+

token=access_token,

|

| 24 |

+

trust_remote_code=True,

|

| 25 |

+

device_map="auto",

|

| 26 |

+

torch_dtype=torch.float16,

|

| 27 |

+

offload_folder="offload/"

|

| 28 |

+

)

|

| 29 |

+

model = PeftModel.from_pretrained(

|

| 30 |

+

base_model,

|

| 31 |

+

'FinGPT/fingpt-forecaster_dow30_llama2-7b_lora',

|

| 32 |

+

offload_folder="offload/"

|

| 33 |

+

)

|

| 34 |

+

model = model.eval()

|

| 35 |

+

|

| 36 |

+

tokenizer = AutoTokenizer.from_pretrained(

|

| 37 |

+

'meta-llama/Llama-2-7b-chat-hf',

|

| 38 |

+

token=access_token

|

| 39 |

+

)

|

| 40 |

+

|

| 41 |

+

streamer = TextStreamer(tokenizer)

|

| 42 |

+

|

| 43 |

+

B_INST, E_INST = "[INST]", "[/INST]"

|

| 44 |

+

B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"

|

| 45 |

+

|

| 46 |

+

SYSTEM_PROMPT = "You are a seasoned stock market analyst. Your task is to list the positive developments and potential concerns for companies based on relevant news and basic financials from the past weeks, then provide an analysis and prediction for the companies' stock price movement for the upcoming week. " \

|

| 47 |

+

"Your answer format should be as follows:\n\n[Positive Developments]:\n1. ...\n\n[Potential Concerns]:\n1. ...\n\n[Prediction & Analysis]\nPrediction: ...\nAnalysis: ..."

|

| 48 |

+

|

| 49 |

+

|

| 50 |

+

def print_gpu_utilization():

|

| 51 |

+

|

| 52 |

+

nvmlInit()

|

| 53 |

+

handle = nvmlDeviceGetHandleByIndex(0)

|

| 54 |

+

info = nvmlDeviceGetMemoryInfo(handle)

|

| 55 |

+

print(f"GPU memory occupied: {info.used//1024**2} MB.")

|

| 56 |

+

|

| 57 |

+

|

| 58 |

+

def get_curday():

|

| 59 |

+

|

| 60 |

+

return date.today().strftime("%Y-%m-%d")

|

| 61 |

+

|

| 62 |

+

|

| 63 |

+

def n_weeks_before(date_string, n):

|

| 64 |

+

|

| 65 |

+

date = datetime.strptime(date_string, "%Y-%m-%d") - timedelta(days=7*n)

|

| 66 |

+

|

| 67 |

+

return date.strftime("%Y-%m-%d")

|

| 68 |

+

|

| 69 |

+

|

| 70 |

+

def get_stock_data(stock_symbol, steps):

|

| 71 |

+

|

| 72 |

+

stock_data = yf.download(stock_symbol, steps[0], steps[-1])

|

| 73 |

+

if len(stock_data) == 0:

|

| 74 |

+

raise gr.Error(f"Failed to download stock price data for symbol {stock_symbol} from yfinance!")

|

| 75 |

+

|

| 76 |

+

# print(stock_data)

|

| 77 |

+

|

| 78 |

+

dates, prices = [], []

|

| 79 |

+

available_dates = stock_data.index.format()

|

| 80 |

+

|

| 81 |

+

for date in steps[:-1]:

|

| 82 |

+

for i in range(len(stock_data)):

|

| 83 |

+

if available_dates[i] >= date:

|

| 84 |

+

prices.append(stock_data['Close'][i])

|

| 85 |

+

dates.append(datetime.strptime(available_dates[i], "%Y-%m-%d"))

|

| 86 |

+

break

|

| 87 |

+

|

| 88 |

+

dates.append(datetime.strptime(available_dates[-1], "%Y-%m-%d"))

|

| 89 |

+

prices.append(stock_data['Close'][-1])

|

| 90 |

+

|

| 91 |

+

return pd.DataFrame({

|

| 92 |

+

"Start Date": dates[:-1], "End Date": dates[1:],

|

| 93 |

+

"Start Price": prices[:-1], "End Price": prices[1:]

|

| 94 |

+

})

|

| 95 |

+

|

| 96 |

+

|

| 97 |

+

def get_news(symbol, data):

|

| 98 |

+

|

| 99 |

+

news_list = []

|

| 100 |

+

|

| 101 |

+

for end_date, row in data.iterrows():

|

| 102 |

+

start_date = row['Start Date'].strftime('%Y-%m-%d')

|

| 103 |

+

end_date = row['End Date'].strftime('%Y-%m-%d')

|

| 104 |

+

# print(symbol, ': ', start_date, ' - ', end_date)

|

| 105 |

+

time.sleep(1) # control qpm

|

| 106 |

+

weekly_news = finnhub_client.company_news(symbol, _from=start_date, to=end_date)

|

| 107 |

+

if len(weekly_news) == 0:

|

| 108 |

+

raise gr.Error(f"No company news found for symbol {symbol} from finnhub!")

|

| 109 |

+

weekly_news = [

|

| 110 |

+

{

|

| 111 |

+

"date": datetime.fromtimestamp(n['datetime']).strftime('%Y%m%d%H%M%S'),

|

| 112 |

+

"headline": n['headline'],

|

| 113 |

+

"summary": n['summary'],

|

| 114 |

+

} for n in weekly_news

|

| 115 |

+

]

|

| 116 |

+

weekly_news.sort(key=lambda x: x['date'])

|

| 117 |

+

news_list.append(json.dumps(weekly_news))

|

| 118 |

+

|

| 119 |

+

data['News'] = news_list

|

| 120 |

+

|

| 121 |

+

return data

|

| 122 |

+

|

| 123 |

+

|

| 124 |

+

def get_company_prompt(symbol):

|

| 125 |

+

|

| 126 |

+

profile = finnhub_client.company_profile2(symbol=symbol)

|

| 127 |

+

if not profile:

|

| 128 |

+

raise gr.Error(f"Failed to find company profile for symbol {symbol} from finnhub!")

|

| 129 |

+

|

| 130 |

+

company_template = "[Company Introduction]:\n\n{name} is a leading entity in the {finnhubIndustry} sector. Incorporated and publicly traded since {ipo}, the company has established its reputation as one of the key players in the market. As of today, {name} has a market capitalization of {marketCapitalization:.2f} in {currency}, with {shareOutstanding:.2f} shares outstanding." \

|

| 131 |

+

"\n\n{name} operates primarily in the {country}, trading under the ticker {ticker} on the {exchange}. As a dominant force in the {finnhubIndustry} space, the company continues to innovate and drive progress within the industry."

|

| 132 |

+

|

| 133 |

+

formatted_str = company_template.format(**profile)

|

| 134 |

+

|

| 135 |

+

return formatted_str

|

| 136 |

+

|

| 137 |

+

|

| 138 |

+

def get_prompt_by_row(symbol, row):

|

| 139 |

+

|

| 140 |

+

start_date = row['Start Date'] if isinstance(row['Start Date'], str) else row['Start Date'].strftime('%Y-%m-%d')

|

| 141 |

+

end_date = row['End Date'] if isinstance(row['End Date'], str) else row['End Date'].strftime('%Y-%m-%d')

|

| 142 |

+

term = 'increased' if row['End Price'] > row['Start Price'] else 'decreased'

|

| 143 |

+

head = "From {} to {}, {}'s stock price {} from {:.2f} to {:.2f}. Company news during this period are listed below:\n\n".format(

|

| 144 |

+

start_date, end_date, symbol, term, row['Start Price'], row['End Price'])

|

| 145 |

+

|

| 146 |

+

news = json.loads(row["News"])

|

| 147 |

+

news = ["[Headline]: {}\n[Summary]: {}\n".format(

|

| 148 |

+

n['headline'], n['summary']) for n in news if n['date'][:8] <= end_date.replace('-', '') and \

|

| 149 |

+

not n['summary'].startswith("Looking for stock market analysis and research with proves results?")]

|

| 150 |

+

|

| 151 |

+

basics = json.loads(row['Basics'])

|

| 152 |

+

if basics:

|

| 153 |

+

basics = "Some recent basic financials of {}, reported at {}, are presented below:\n\n[Basic Financials]:\n\n".format(

|

| 154 |

+

symbol, basics['period']) + "\n".join(f"{k}: {v}" for k, v in basics.items() if k != 'period')

|

| 155 |

+

else:

|

| 156 |

+

basics = "[Basic Financials]:\n\nNo basic financial reported."

|

| 157 |

+

|

| 158 |

+

return head, news, basics

|

| 159 |

+

|

| 160 |

+

|

| 161 |

+

def sample_news(news, k=5):

|

| 162 |

+

|

| 163 |

+

return [news[i] for i in sorted(random.sample(range(len(news)), k))]

|

| 164 |

+

|

| 165 |

+

|

| 166 |

+

def get_current_basics(symbol, curday):

|

| 167 |

+

|

| 168 |

+

basic_financials = finnhub_client.company_basic_financials(symbol, 'all')

|

| 169 |

+

if not basic_financials['series']:

|

| 170 |

+

raise gr.Error(f"Failed to find basic financials for symbol {symbol} from finnhub!")

|

| 171 |

+

|

| 172 |

+

final_basics, basic_list, basic_dict = [], [], defaultdict(dict)

|

| 173 |

+

|

| 174 |

+

for metric, value_list in basic_financials['series']['quarterly'].items():

|

| 175 |

+

for value in value_list:

|

| 176 |

+

basic_dict[value['period']].update({metric: value['v']})

|

| 177 |

+

|

| 178 |

+

for k, v in basic_dict.items():

|

| 179 |

+

v.update({'period': k})

|

| 180 |

+

basic_list.append(v)

|

| 181 |

+

|

| 182 |

+

basic_list.sort(key=lambda x: x['period'])

|

| 183 |

+

|

| 184 |

+

for basic in basic_list[::-1]:

|

| 185 |

+

if basic['period'] <= curday:

|

| 186 |

+

break

|

| 187 |

+

|

| 188 |

+

return basic

|

| 189 |

+

|

| 190 |

+

|

| 191 |

+

def get_all_prompts_online(symbol, data, curday, with_basics=True):

|

| 192 |

+

|

| 193 |

+

company_prompt = get_company_prompt(symbol)

|

| 194 |

+

|

| 195 |

+

prev_rows = []

|

| 196 |

+

|

| 197 |

+

for row_idx, row in data.iterrows():

|

| 198 |

+

head, news, _ = get_prompt_by_row(symbol, row)

|

| 199 |

+

prev_rows.append((head, news, None))

|

| 200 |

+

|

| 201 |

+

prompt = ""

|

| 202 |

+

for i in range(-len(prev_rows), 0):

|

| 203 |

+

prompt += "\n" + prev_rows[i][0]

|

| 204 |

+

sampled_news = sample_news(

|

| 205 |

+

prev_rows[i][1],

|

| 206 |

+

min(5, len(prev_rows[i][1]))

|

| 207 |

+

)

|

| 208 |

+

if sampled_news:

|

| 209 |

+

prompt += "\n".join(sampled_news)

|

| 210 |

+

else:

|

| 211 |

+

prompt += "No relative news reported."

|

| 212 |

+

|

| 213 |

+

period = "{} to {}".format(curday, n_weeks_before(curday, -1))

|

| 214 |

+

|

| 215 |

+

if with_basics:

|

| 216 |

+

basics = get_current_basics(symbol, curday)

|

| 217 |

+

basics = "Some recent basic financials of {}, reported at {}, are presented below:\n\n[Basic Financials]:\n\n".format(

|

| 218 |

+

symbol, basics['period']) + "\n".join(f"{k}: {v}" for k, v in basics.items() if k != 'period')

|

| 219 |

+

else:

|

| 220 |

+

basics = "[Basic Financials]:\n\nNo basic financial reported."

|

| 221 |

+

|

| 222 |

+

info = company_prompt + '\n' + prompt + '\n' + basics

|

| 223 |

+

prompt = info + f"\n\nBased on all the information before {curday}, let's first analyze the positive developments and potential concerns for {symbol}. Come up with 2-4 most important factors respectively and keep them concise. Most factors should be inferred from company related news. " \

|

| 224 |

+

f"Then make your prediction of the {symbol} stock price movement for next week ({period}). Provide a summary analysis to support your prediction."

|

| 225 |

+

|

| 226 |

+

return info, prompt

|

| 227 |

+

|

| 228 |

+

|

| 229 |

+

def construct_prompt(ticker, curday, n_weeks, use_basics):

|

| 230 |

+

|

| 231 |

+

try:

|

| 232 |

+

steps = [n_weeks_before(curday, n) for n in range(n_weeks + 1)][::-1]

|

| 233 |

+

except Exception:

|

| 234 |

+

raise gr.Error(f"Invalid date {curday}!")

|

| 235 |

+

|

| 236 |

+

data = get_stock_data(ticker, steps)

|

| 237 |

+

data = get_news(ticker, data)

|

| 238 |

+

data['Basics'] = [json.dumps({})] * len(data)

|

| 239 |

+

# print(data)

|

| 240 |

+

|

| 241 |

+

info, prompt = get_all_prompts_online(ticker, data, curday, use_basics)

|

| 242 |

+

|

| 243 |

+

prompt = B_INST + B_SYS + SYSTEM_PROMPT + E_SYS + prompt + E_INST

|

| 244 |

+

# print(prompt)

|

| 245 |

+

|

| 246 |

+

return info, prompt

|

| 247 |

+

|

| 248 |

+

|

| 249 |

+

def predict(ticker, date, n_weeks, use_basics):

|

| 250 |

+

|

| 251 |

+

print_gpu_utilization()

|

| 252 |

+

|

| 253 |

+

info, prompt = construct_prompt(ticker, date, n_weeks, use_basics)

|

| 254 |

+

|

| 255 |

+

inputs = tokenizer(

|

| 256 |

+

prompt, return_tensors='pt', padding=False

|

| 257 |

+

)

|

| 258 |

+

inputs = {key: value.to(model.device) for key, value in inputs.items()}

|

| 259 |

+

|

| 260 |

+

print("Inputs loaded onto devices.")

|

| 261 |

+

|

| 262 |

+

res = model.generate(

|

| 263 |

+

**inputs, max_length=4096, do_sample=True,

|

| 264 |

+

eos_token_id=tokenizer.eos_token_id,

|

| 265 |

+

use_cache=True, streamer=streamer

|

| 266 |

+

)

|

| 267 |

+

output = tokenizer.decode(res[0], skip_special_tokens=True)

|

| 268 |

+

answer = re.sub(r'.*\[/INST\]\s*', '', output, flags=re.DOTALL)

|

| 269 |

+

|

| 270 |

+

torch.cuda.empty_cache()

|

| 271 |

+

|

| 272 |

+

return info, answer

|

| 273 |

+

|

| 274 |

+

|

| 275 |

+

demo = gr.Interface(

|

| 276 |

+

predict,

|

| 277 |

+

inputs=[

|

| 278 |

+

gr.Textbox(

|

| 279 |

+

label="Ticker",

|

| 280 |

+

value="AAPL",

|

| 281 |

+

info="Companys from Dow-30 are recommended"

|

| 282 |

+

),

|

| 283 |

+

gr.Textbox(

|

| 284 |

+

label="Date",

|

| 285 |

+

value=get_curday,

|

| 286 |

+

info="Date from which the prediction is made, use format yyyy-mm-dd"

|

| 287 |

+

),

|

| 288 |

+

gr.Slider(

|

| 289 |

+

minimum=1,

|

| 290 |

+

maximum=4,

|

| 291 |

+

value=3,

|

| 292 |

+

step=1,

|

| 293 |

+

label="n_weeks",

|

| 294 |

+

info="Information of the past n weeks will be utilized, choose between 1 and 4"

|

| 295 |

+

),

|

| 296 |

+

gr.Checkbox(

|

| 297 |

+

label="Use Latest Basic Financials",

|

| 298 |

+

value=False,

|

| 299 |

+

info="If checked, the latest quarterly reported basic financials of the company is taken into account."

|

| 300 |

+

)

|

| 301 |

+

],

|

| 302 |

+

outputs=[

|

| 303 |

+

gr.Textbox(

|

| 304 |

+

label="Information"

|

| 305 |

+

),

|

| 306 |

+

gr.Textbox(

|

| 307 |

+

label="Response"

|

| 308 |

+

)

|

| 309 |

+

],

|

| 310 |

+

title="FinGPT-Forecaster",

|

| 311 |

+

description="""FinGPT-Forecaster takes random market news and optional basic financials related to the specified company from the past few weeks as input and responds with the company's **positive developments** and **potential concerns**. Then it gives out a **prediction** of stock price movement for the coming week and its **analysis** summary.

|

| 312 |

+

This model is finetuned on Llama2-7b-chat-hf with LoRA on the past year's DOW30 market data. Inference in this demo uses fp16 and **welcomes any ticker symbol**.

|

| 313 |

+

Company profile & Market news & Basic financials & Stock prices are retrieved using **yfinance & finnhub**.

|

| 314 |

+

This is just a demo showing what this model is capable of. Results inferred from randomly chosen news can be strongly biased.

|

| 315 |

+

For more detailed and customized implementation, refer to our FinGPT project: <https://github.com/AI4Finance-Foundation/FinGPT>

|

| 316 |

+

**Disclaimer: Nothing herein is financial advice, and NOT a recommendation to trade real money. Please use common sense and always first consult a professional before trading or investing.**

|

| 317 |

+

"""

|

| 318 |

+

)

|

| 319 |

+

|

| 320 |

+

demo.launch()

|

config.json

ADDED

|

@@ -0,0 +1,33 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"train_micro_batch_size_per_gpu": "auto",

|

| 3 |

+

"train_batch_size": "auto",

|

| 4 |

+

"gradient_accumulation_steps": "auto",

|

| 5 |

+

"optimizer": {

|

| 6 |

+

"type": "ZeroOneAdam",

|

| 7 |

+

"params": {

|

| 8 |

+

"lr": "auto",

|

| 9 |

+

"weight_decay": "auto",

|

| 10 |

+

"bias_correction": false,

|

| 11 |

+

"var_freeze_step": 1000,

|

| 12 |

+

"var_update_scaler": 16,

|

| 13 |

+

"local_step_scaler": 1000,

|

| 14 |

+

"local_step_clipper": 16,

|

| 15 |

+

"cuda_aware": true,

|

| 16 |

+

"comm_backend_name": "nccl"

|

| 17 |

+

}

|

| 18 |

+

},

|

| 19 |

+

"scheduler": {

|

| 20 |

+

"type": "WarmupLR",

|

| 21 |

+

"params": {

|

| 22 |

+

"warmup_min_lr": 0,

|

| 23 |

+

"warmup_max_lr": "auto",

|

| 24 |

+

"warmup_num_steps": "auto"

|

| 25 |

+

}

|

| 26 |

+

},

|

| 27 |

+

"fp16": {

|

| 28 |

+

"enabled": true

|

| 29 |

+

},

|

| 30 |

+

"zero_optimization": {

|

| 31 |

+

"stage": 0

|

| 32 |

+

}

|

| 33 |

+

}

|

demo.ipynb

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

figs/interface.png

ADDED

|

figs/response.png

ADDED

|

figs/title.png

ADDED

|

prepare_data.ipynb

ADDED

|

@@ -0,0 +1,1545 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|