Upload essential

- 6082308423334085331.jpg +0 -0

- a00e1c819a87fa56bb1e6058d9814bae.jpg +0 -0

- app.py +72 -0

- best_model.pth +3 -0

- model.py +168 -0

- requirements.txt +4 -0

6082308423334085331.jpg

ADDED

a00e1c819a87fa56bb1e6058d9814bae.jpg

ADDED

app.py

ADDED

@@ -0,0 +1,72 @@

| 1 |

+

import gradio as gr

|

| 2 |

+

from model import Trainer

|

| 3 |

+

import torch

|

| 4 |

+

import cv2

|

| 5 |

+

import tempfile

|

| 6 |

+

import numpy as np

|

| 7 |

+

|

| 8 |

+

def predict_beauty_score(img):

|

| 9 |

+

trainer = Trainer()

|

| 10 |

+

|

| 11 |

+

# Save the numpy array as an image temporarily

|

| 12 |

+

with tempfile.NamedTemporaryFile(suffix='.jpg', delete=False) as tmp:

|

| 13 |

+

# Convert RGB to BGR for cv2

|

| 14 |

+

img_bgr = cv2.cvtColor(img, cv2.COLOR_RGB2BGR)

|

| 15 |

+

cv2.imwrite(tmp.name, img_bgr)

|

| 16 |

+

# Use the temporary file path

|

| 17 |

+

image_tensor = trainer.image_to_tensor(tmp.name)

|

| 18 |

+

|

| 19 |

+

prediction = trainer.predict(image_tensor)

|

| 20 |

+

score = prediction.item() * 100

|

| 21 |

+

|

| 22 |

+

# Decide which GIF to show based on the score

|

| 23 |

+

if score < 20:

|

| 24 |

+

gif_url = "https://i.pinimg.com/originals/9f/79/2a/9f792aed5881d409425de1a4361bc06b.gif"

|

| 25 |

+

elif score < 40:

|

| 26 |

+

gif_url = "https://i.pinimg.com/originals/ba/f5/c8/baf5c89c099b34decb7f4507b5144366.gif"

|

| 27 |

+

elif score < 60:

|

| 28 |

+

gif_url = "https://i.pinimg.com/originals/87/b6/dc/87b6dcfeec6f38a3836b1caf1d8fceab.gif"

|

| 29 |

+

elif score < 80:

|

| 30 |

+

gif_url = "https://i.pinimg.com/originals/3a/90/b8/3a90b87a337b79b9c8b7a3d9bf7250d7.gif"

|

| 31 |

+

else:

|

| 32 |

+

gif_url = "https://i.pinimg.com/originals/f6/02/01/f6020120d9e99f7b106c557cdc1edb1f.gif"

|

| 33 |

+

|

| 34 |

+

# Create formatted HTML outpu

|

| 35 |

+

html_output = f"""

|

| 36 |

+

<div style='text-align: center; padding: 20px;'>

|

| 37 |

+

<h2 style='color: #FFFFFF; margin-bottom: 10px;'>Score</h2>

|

| 38 |

+

<div style='font-size: 48px; font-weight: bold; color: #1a73e8;'>

|

| 39 |

+

{score:.3f}

|

| 40 |

+

</div>

|

| 41 |

+

<br/>

|

| 42 |

+

<div style='display: flex; justify-content: center;'>

|

| 43 |

+

<img src="{gif_url}" alt="GIF" width="200" height="200" />

|

| 44 |

+

</div>

|

| 45 |

+

<p>Enjoy the fun! :)</p>

|

| 46 |

+

</div>

|

| 47 |

+

"""

|

| 48 |

+

|

| 49 |

+

return html_output

|

| 50 |

+

|

| 51 |

+

# Create Gradio interface

|

| 52 |

+

demo = gr.Interface(

|

| 53 |

+

fn=predict_beauty_score,

|

| 54 |

+

inputs=gr.Image(), # Simple image input

|

| 55 |

+

outputs=gr.HTML(), # Using HTML output for custom formatting

|

| 56 |

+

title="Image Beauty Score Predictor",

|

| 57 |

+

description="""

|

| 58 |

+

<ul>

|

| 59 |

+

<li>Upload an image to get its beauty score prediction</li>

|

| 60 |

+

<li>Please remember that this is just for fun and is not intended to downplay anybody and I, as the model creator believe everyone is beautiful the way it is</li>

|

| 61 |

+

<li>Respect each other and most importantly have fun :)</li>

|

| 62 |

+

</ul>

|

| 63 |

+

""",

|

| 64 |

+

examples=[

|

| 65 |

+

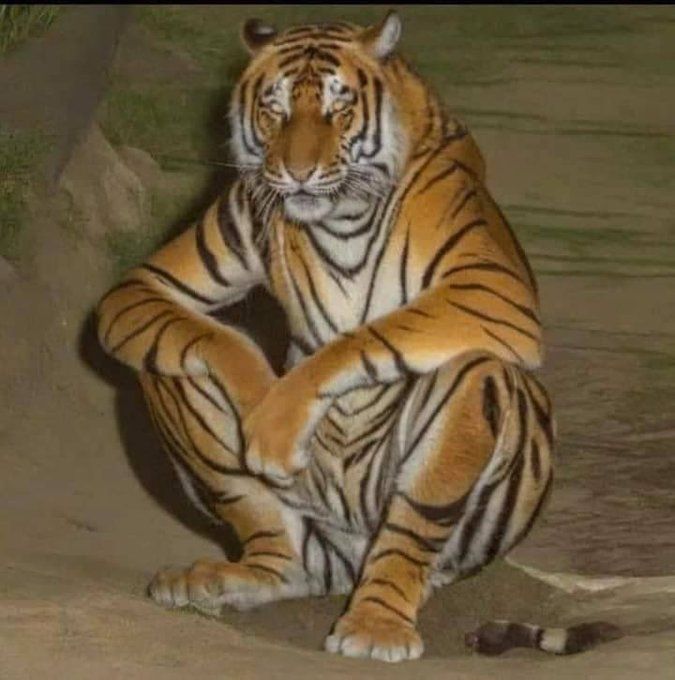

["6082308423334085331.jpg"],

|

| 66 |

+

["a00e1c819a87fa56bb1e6058d9814bae.jpg"]

|

| 67 |

+

],

|

| 68 |

+

cache_examples=True

|

| 69 |

+

)

|

| 70 |

+

|

| 71 |

+

if __name__ == "__main__":

|

| 72 |

+

demo.launch()

|
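The `if`/`elif` chain in `predict_beauty_score` maps a score to one of five GIFs in 20-point bands. As a reviewer's sketch (not part of this commit), the same mapping could be written as a table lookup with `bisect`. The names `THRESHOLDS`, `GIF_URLS`, and `pick_gif` are hypothetical; the URLs and cut-offs are copied from `app.py`.

```python
from bisect import bisect_right

# Exclusive upper bounds of the first four score bands, as used in app.py.
THRESHOLDS = [20, 40, 60, 80]

# One GIF per band, in the same order as the if/elif chain in app.py.
GIF_URLS = [
    "https://i.pinimg.com/originals/9f/79/2a/9f792aed5881d409425de1a4361bc06b.gif",
    "https://i.pinimg.com/originals/ba/f5/c8/baf5c89c099b34decb7f4507b5144366.gif",
    "https://i.pinimg.com/originals/87/b6/dc/87b6dcfeec6f38a3836b1caf1d8fceab.gif",
    "https://i.pinimg.com/originals/3a/90/b8/3a90b87a337b79b9c8b7a3d9bf7250d7.gif",
    "https://i.pinimg.com/originals/f6/02/01/f6020120d9e99f7b106c557cdc1edb1f.gif",
]


def pick_gif(score: float) -> str:
    """Return the GIF URL for the band that contains `score`."""
    # bisect_right counts how many thresholds are <= score, which is
    # exactly the index of the branch the if/elif chain would take.
    return GIF_URLS[bisect_right(THRESHOLDS, score)]
```

A score of exactly 20 falls into the second band here, matching `elif score < 40` in the original chain.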

best_model.pth

ADDED

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1
+oid sha256:defa774b8bc88ded913ee62d88aca8091398f852420e53b404de67bb51e7cea3
+size 292234402
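The three lines added for `best_model.pth` are a Git LFS pointer, not the weights themselves: each line is a key, a space, and a value. A minimal sketch of reading such a pointer follows; the function name `parse_lfs_pointer` and the returned dictionary layout are illustrative choices, not part of this repository.

```python
def parse_lfs_pointer(text: str) -> dict:
    """Parse the key/value lines of a Git LFS pointer file."""
    fields = {}
    for line in text.splitlines():
        if not line.strip():
            continue  # tolerate blank lines
        # Each pointer line is "<key> <value>"; split on the first space only.
        key, _, value = line.partition(" ")
        fields[key] = value

    # The oid value has the form "<hash algorithm>:<hex digest>".
    algo, _, digest = fields["oid"].partition(":")
    return {
        "version": fields["version"],
        "hash_algo": algo,
        "digest": digest,
        "size": int(fields["size"]),  # size of the real file, in bytes
    }


POINTER = """version https://git-lfs.github.com/spec/v1
oid sha256:defa774b8bc88ded913ee62d88aca8091398f852420e53b404de67bb51e7cea3
size 292234402
"""

info = parse_lfs_pointer(POINTER)
```

For this pointer, `info["size"]` is 292234402 bytes, so the actual checkpoint is roughly 292 MB.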

model.py

ADDED

@@ -0,0 +1,168 @@

+import numpy as np
+import torch
+import torch.nn as nn
+import torch.nn.functional as F
+import torch.optim as optim
+from torch.utils.data import DataLoader, TensorDataset, random_split
+from torch.optim.lr_scheduler import ReduceLROnPlateau
+import cv2
+
+device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
+
+class BeautyScore(nn.Module):
+    def __init__(self, first_neuron):
+        super(BeautyScore, self).__init__()
+
+        self.first_out_channels = first_neuron
+
+        self.features = nn.Sequential(
+            # First Convolutional Block
+            nn.Conv2d(in_channels=3, out_channels=self.first_out_channels, kernel_size=3, padding=1),  # dimension [batch_size, out_channel, 128, 128] -> padding = 1
+            nn.ReLU(),
+            nn.BatchNorm2d(self.first_out_channels),
+            nn.MaxPool2d(2),  # dimension [batch_size, out_channel, 64, 64]
+
+            # Second Convolutional Block
+            nn.Conv2d(in_channels=self.first_out_channels, out_channels=self.first_out_channels*2, kernel_size=3, padding=1),  # dimension [batch_size, out_channel*2, 64, 64]
+            nn.ReLU(),
+            nn.BatchNorm2d(self.first_out_channels*2),
+            nn.MaxPool2d(2),  # dimension [batch_size, out_channel*2, 32, 32]
+
+            # Third Convolutional Block
+            nn.Conv2d(in_channels=self.first_out_channels*2, out_channels=self.first_out_channels*4, kernel_size=3, padding=1),  # dimension [batch_size, out_channel*4, 32, 32]
+            nn.ReLU(),
+            nn.BatchNorm2d(self.first_out_channels*4),
+            nn.MaxPool2d(2),  # dimension [batch_size, out_channel*4, 16, 16]
+
+        )
+
+        # Calculate size of flattened features after the convolutional layers
+        self.flatten_size = self.first_out_channels * 4 * (128 // (2**3)) * (128 // (2**3))  # out_channel * 4 * (128 // 2^amount_of_max_pool) * (128 // 2^amount_of_max_pool)
+
+        self.classifier = nn.Sequential(
+            nn.Dropout(0.3),
+            nn.Linear(self.flatten_size, 256),  # dimension [batch_size, 256]
+            nn.ReLU(),
+            nn.Dropout(0.3),
+            nn.Linear(256, 128),  # dimension [batch_size, 128]
+            nn.ReLU(),
+            nn.Dropout(0.3),
+            nn.Linear(128, 1),  # dimension [batch_size, 1]
+            nn.Sigmoid()  # To get value from 0 to 1
+        )
+
+    def forward(self, x):
+        x = self.features(x)
+        x = x.reshape(x.size(0), -1)  # Flatten the tensor
+        x = self.classifier(x)
+        return x
+
+class Trainer:
+    def __init__(self, train_loader = None, val_loader = None):
+        self.model = BeautyScore(first_neuron=256)
+        self.train_loader = train_loader
+        self.val_loader = val_loader
+        self.criterion = nn.MSELoss()
+        self.optimizer = torch.optim.SGD(self.model.parameters(), lr=0.001)
+        self.scheduler = ReduceLROnPlateau(self.optimizer, mode='min', factor=0.1, patience=5, verbose=True)
+        self.num_epochs = 20
+
+    def load_data(self):
+        data_path = '/home/reynaldy/.cache/kagglehub/datasets/pranavchandane/scut-fbp5500-v2-facial-beauty-scores/versions/2/scut_fbp5500-cmprsd.npz'
+
+        data = np.load(data_path)
+        data['X'].shape, data['y'].shape
+
+        features_numpy = data['X'].astype(np.float32)
+        features_numpy = np.array([cv2.resize(img, (128, 128)) for img in features_numpy])  # Resize the images to 128x128
+        features = torch.tensor(features_numpy, dtype=torch.float32).to(device)
+        features = features.permute(0, 3, 1, 2).to(device)
+
+        label_numpy = data['y'].astype(np.float32)
+        labels = torch.tensor(label_numpy, dtype=torch.float32).to(device)
+        tensor_min = labels.min()
+        tensor_max = labels.max()
+
+        labels = (labels - tensor_min) / (tensor_max - tensor_min)
+        print("Finish loading data")
+
+        train_size = int(0.8 * len(features))
+        test_size = len(features) - train_size
+
+        train_dataset, test_dataset = random_split(TensorDataset(features, labels), [train_size, test_size])
+        train_loader = DataLoader(train_dataset, batch_size=32, shuffle=True)
+        val_loader = DataLoader(test_dataset, batch_size=32, shuffle=False)
+        return train_loader, val_loader
+
+    def train(self):
+        device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
+        self.model.to(device)
+        self.model.train()
+        running_loss = 0.0
+        train_loader, _= self.load_data()
+
+        for batch_idx, (inputs, labels) in enumerate(train_loader):
+            inputs, labels = inputs.to(device), labels.to(device).float()
+
+            self.optimizer.zero_grad()
+            outputs = self.model(inputs)
+            loss = self.criterion(outputs.squeeze(), labels)
+            loss.backward()
+            self.optimizer.step()
+
+            running_loss += loss.item()
+
+            if (batch_idx + 1) % 20 == 0:
+                print(f"Batch {batch_idx + 1}/{len(train_loader)} Loss: {loss.item()}")
+
+        epoch_loss = running_loss / len(train_loader)
+        if self.scheduler:
+            self.scheduler.step(epoch_loss)
+
+        print(f"Training Loss: {epoch_loss:.4f}")
+        return epoch_loss
+
+    def validate(self):
+        device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
+        self.model.to(device)
+        self.model.eval()
+        running_loss = 0.0
+        _, val_loader = self.load_data()
+
+        with torch.no_grad():
+            for batch_idx, (inputs, labels) in enumerate(val_loader):
+                inputs, labels = inputs.to(device), labels.to(device).float()
+                outputs = self.model(inputs)
+                loss = self.criterion(outputs.squeeze(), labels)
+
+                running_loss += loss.item()
+
+        epoch_loss = running_loss / len(val_loader)
+        print(f"Validation Loss: {epoch_loss:.4f}")
+        return epoch_loss
+
+    def image_to_tensor(self, image_path):
+        image = cv2.imread(image_path)
+        image = cv2.resize(image, (128, 128))
+        image = torch.tensor(image, dtype=torch.float32).to(device)
+        image = image.permute(2, 0, 1).unsqueeze(0)
+        return image
+
+    def predict(self, inputs):
+        device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
+        self.model.load_state_dict(torch.load('best_model.pth', weights_only=True))
+        self.model.to(device)
+        self.model.eval()
+        inputs = inputs.to(device)
+        with torch.no_grad():
+            outputs = self.model(inputs)
+        return outputs
+
+if __name__ == "__main__":
+    trainer = Trainer()
+
+    # Test the model
+    image_path = '6082308423334085331.jpg'
+    image_tensor = trainer.image_to_tensor(image_path)
+    prediction = trainer.predict(image_tensor)
+    print(f"Predicted Beauty Score: {prediction.item() * 100}")
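The per-layer dimension comments and `flatten_size` in `BeautyScore` can be checked with plain arithmetic. The sketch below traces the feature-map shape through three blocks of a 3x3 convolution (padding 1, stride 1) followed by a 2x2 max pool, assuming a 128x128 input as in `image_to_tensor`. The helper names `conv2d_out`, `trace_shapes`, and `flatten_size` are hypothetical and need no PyTorch install.

```python
def conv2d_out(size: int, kernel: int = 3, padding: int = 1, stride: int = 1) -> int:
    """Spatial output size of a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_shapes(first_out_channels: int, size: int = 128, blocks: int = 3):
    """Return (channels, height, width) after every conv and every pool."""
    shapes = []
    for i in range(blocks):
        channels = first_out_channels * 2 ** i  # channels double per block
        size = conv2d_out(size)                 # 3x3 conv, padding 1: size unchanged
        shapes.append((channels, size, size))
        size //= 2                              # 2x2 max pool halves each side
        shapes.append((channels, size, size))
    return shapes


def flatten_size(first_out_channels: int) -> int:
    """Number of features fed to the first Linear layer."""
    channels, height, width = trace_shapes(first_out_channels)[-1]
    return channels * height * width
```

With `first_neuron=256`, as in `Trainer`, the final feature map is 1024 x 16 x 16, so `flatten_size(256)` is 262144. The first `Linear` layer then holds 262144 x 256 weights, about 67 million values, which likely accounts for most of the roughly 292 MB checkpoint.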

requirements.txt

ADDED

@@ -0,0 +1,4 @@

+torch
+cv2
+numpy
+tempfile
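Two of the entries in `requirements.txt` are import names rather than installable packages: `cv2` is provided by the OpenCV distributions on PyPI, and `tempfile` ships with the Python standard library. A hedged sketch of translating import names into requirement lines follows. The mapping `IMPORT_TO_DIST`, the choice of `opencv-python-headless` over `opencv-python`, and the function name are assumptions made for illustration.

```python
import sys

# Hypothetical mapping from import name to PyPI distribution name.
# opencv-python-headless is assumed here because a hosted demo has no display;
# opencv-python would also provide the cv2 module.
IMPORT_TO_DIST = {"cv2": "opencv-python-headless"}


def normalize_requirements(import_names):
    """Drop standard-library modules and map import names to PyPI names."""
    # sys.stdlib_module_names exists on Python 3.10+; fall back to the one
    # standard-library module this file is known to list.
    stdlib = set(getattr(sys, "stdlib_module_names", ())) | {"tempfile"}
    requirements = []
    for name in import_names:
        if name in stdlib:
            continue  # nothing to install for standard-library modules
        requirements.append(IMPORT_TO_DIST.get(name, name))
    return requirements


fixed = normalize_requirements(["torch", "cv2", "numpy", "tempfile"])
```

Here `fixed` is `["torch", "opencv-python-headless", "numpy"]`. `app.py` also imports `gradio`, which is not listed; it may be supplied by the hosting environment, so whether it needs an explicit entry depends on how the Space is configured.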