diff --git a/.gitattributes b/.gitattributes

index c7d9f3332a950355d5a77d85000f05e6f45435ea..b442ae273b4bfd119296a7602d2cec21cd47ee17 100644

--- a/.gitattributes

+++ b/.gitattributes

@@ -32,3 +32,10 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

*.zip filter=lfs diff=lfs merge=lfs -text

*.zst filter=lfs diff=lfs merge=lfs -text

*tfevents* filter=lfs diff=lfs merge=lfs -text

+figure/figure1.png filter=lfs diff=lfs merge=lfs -text

+figure/figure2.png filter=lfs diff=lfs merge=lfs -text

+figure/figure3.png filter=lfs diff=lfs merge=lfs -text

+ultralytics/yolo/v8/detect/deep_sort_pytorch/deep_sort/deep/checkpoint/ckpt.t7 filter=lfs diff=lfs merge=lfs -text

+ultralytics/yolo/v8/detect/night_motorbikes.mp4 filter=lfs diff=lfs merge=lfs -text

+YOLOv8_DeepSORT_TRACKING_SCRIPT.ipynb filter=lfs diff=lfs merge=lfs -text

+YOLOv8_Detection_Tracking_CustomData_Complete.ipynb filter=lfs diff=lfs merge=lfs -text

diff --git a/.gitignore b/.gitignore

new file mode 100644

index 0000000000000000000000000000000000000000..ef69c309cee09a99ca81a02b4fa4b0fad608f7a4

--- /dev/null

+++ b/.gitignore

@@ -0,0 +1,150 @@

+# Byte-compiled / optimized / DLL files

+__pycache__/

+*.py[cod]

+*$py.class

+

+# C extensions

+*.so

+

+# Distribution / packaging

+.Python

+build/

+develop-eggs/

+dist/

+downloads/

+eggs/

+.eggs/

+lib/

+lib64/

+parts/

+sdist/

+var/

+wheels/

+pip-wheel-metadata/

+share/python-wheels/

+*.egg-info/

+.installed.cfg

+*.egg

+MANIFEST

+

+# PyInstaller

+# Usually these files are written by a python script from a template

+# before PyInstaller builds the exe, so as to inject date/other infos into it.

+*.manifest

+*.spec

+

+# Installer logs

+pip-log.txt

+pip-delete-this-directory.txt

+

+# Unit test / coverage reports

+htmlcov/

+.tox/

+.nox/

+.coverage

+.coverage.*

+.cache

+nosetests.xml

+coverage.xml

+*.cover

+*.py,cover

+.hypothesis/

+.pytest_cache/

+

+# Translations

+*.mo

+*.pot

+

+# Django stuff:

+*.log

+local_settings.py

+db.sqlite3

+db.sqlite3-journal

+

+# Flask stuff:

+instance/

+.webassets-cache

+

+# Scrapy stuff:

+.scrapy

+

+# Sphinx documentation

+docs/_build/

+

+# PyBuilder

+target/

+

+# Jupyter Notebook

+.ipynb_checkpoints

+

+# IPython

+profile_default/

+ipython_config.py

+

+# pyenv

+.python-version

+

+# pipenv

+# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

+# However, in case of collaboration, if having platform-specific dependencies or dependencies

+# having no cross-platform support, pipenv may install dependencies that don't work, or not

+# install all needed dependencies.

+#Pipfile.lock

+

+# PEP 582; used by e.g. github.com/David-OConnor/pyflow

+__pypackages__/

+

+# Celery stuff

+celerybeat-schedule

+celerybeat.pid

+

+# SageMath parsed files

+*.sage.py

+

+# Environments

+.env

+.venv

+env/

+venv/

+ENV/

+env.bak/

+venv.bak/

+

+# Spyder project settings

+.spyderproject

+.spyproject

+

+# Rope project settings

+.ropeproject

+

+# mkdocs documentation

+/site

+

+# mypy

+.mypy_cache/

+.dmypy.json

+dmypy.json

+

+# Pyre type checker

+.pyre/

+

+# datasets and projects

+datasets/

+runs/

+wandb/

+

+.DS_Store

+

+# Neural Network weights -----------------------------------------------------------------------------------------------

+*.weights

+*.pt

+*.pb

+*.onnx

+*.engine

+*.mlmodel

+*.torchscript

+*.tflite

+*.h5

+*_saved_model/

+*_web_model/

+*_openvino_model/

diff --git a/.pre-commit-config.yaml b/.pre-commit-config.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..480127f24b4f28e2dfd794f72c7f26f9168082f9

--- /dev/null

+++ b/.pre-commit-config.yaml

@@ -0,0 +1,65 @@

+# Define hooks for code formatting
+# Will be applied on any updated commit files if a user has installed and linked commit hook
+
+default_language_version:
+  python: python3.8
+
+exclude: 'docs/'
+# Define bot property if installed via https://github.com/marketplace/pre-commit-ci
+ci:
+  autofix_prs: true
+  autoupdate_commit_msg: '[pre-commit.ci] pre-commit suggestions'
+  autoupdate_schedule: monthly
+  # submodules: true
+
+repos:
+  - repo: https://github.com/pre-commit/pre-commit-hooks
+    rev: v4.3.0
+    hooks:
+      # - id: end-of-file-fixer
+      - id: trailing-whitespace
+      - id: check-case-conflict
+      - id: check-yaml
+      - id: check-toml
+      - id: pretty-format-json
+      - id: check-docstring-first
+
+  - repo: https://github.com/asottile/pyupgrade
+    rev: v2.37.3
+    hooks:
+      - id: pyupgrade
+        name: Upgrade code
+        args: [ --py37-plus ]
+
+  - repo: https://github.com/PyCQA/isort
+    rev: 5.10.1
+    hooks:
+      - id: isort
+        name: Sort imports
+
+  - repo: https://github.com/pre-commit/mirrors-yapf
+    rev: v0.32.0
+    hooks:
+      - id: yapf
+        name: YAPF formatting
+
+  - repo: https://github.com/executablebooks/mdformat
+    rev: 0.7.16
+    hooks:
+      - id: mdformat
+        name: MD formatting
+        additional_dependencies:
+          - mdformat-gfm
+          - mdformat-black
+        # exclude: "README.md|README.zh-CN.md|CONTRIBUTING.md"
+
+  - repo: https://github.com/PyCQA/flake8
+    rev: 5.0.4
+    hooks:
+      - id: flake8
+        name: PEP8
+
+  #- repo: https://github.com/asottile/yesqa
+  #  rev: v1.4.0
+  #  hooks:
+  #    - id: yesqa

\ No newline at end of file

diff --git a/CONTRIBUTING.md b/CONTRIBUTING.md

new file mode 100644

index 0000000000000000000000000000000000000000..5e9c66afa07f59291cfbec1ca28772501fdf2d76

--- /dev/null

+++ b/CONTRIBUTING.md

@@ -0,0 +1,113 @@

+## Contributing to YOLOv8 🚀

+

+We love your input! We want to make contributing to YOLOv8 as easy and transparent as possible, whether it's:

+

+- Reporting a bug

+- Discussing the current state of the code

+- Submitting a fix

+- Proposing a new feature

+- Becoming a maintainer

+

+YOLOv8 works so well due to our combined community effort, and for every small improvement you contribute you will be

+helping push the frontiers of what's possible in AI 😃!

+

+## Submitting a Pull Request (PR) 🛠️

+

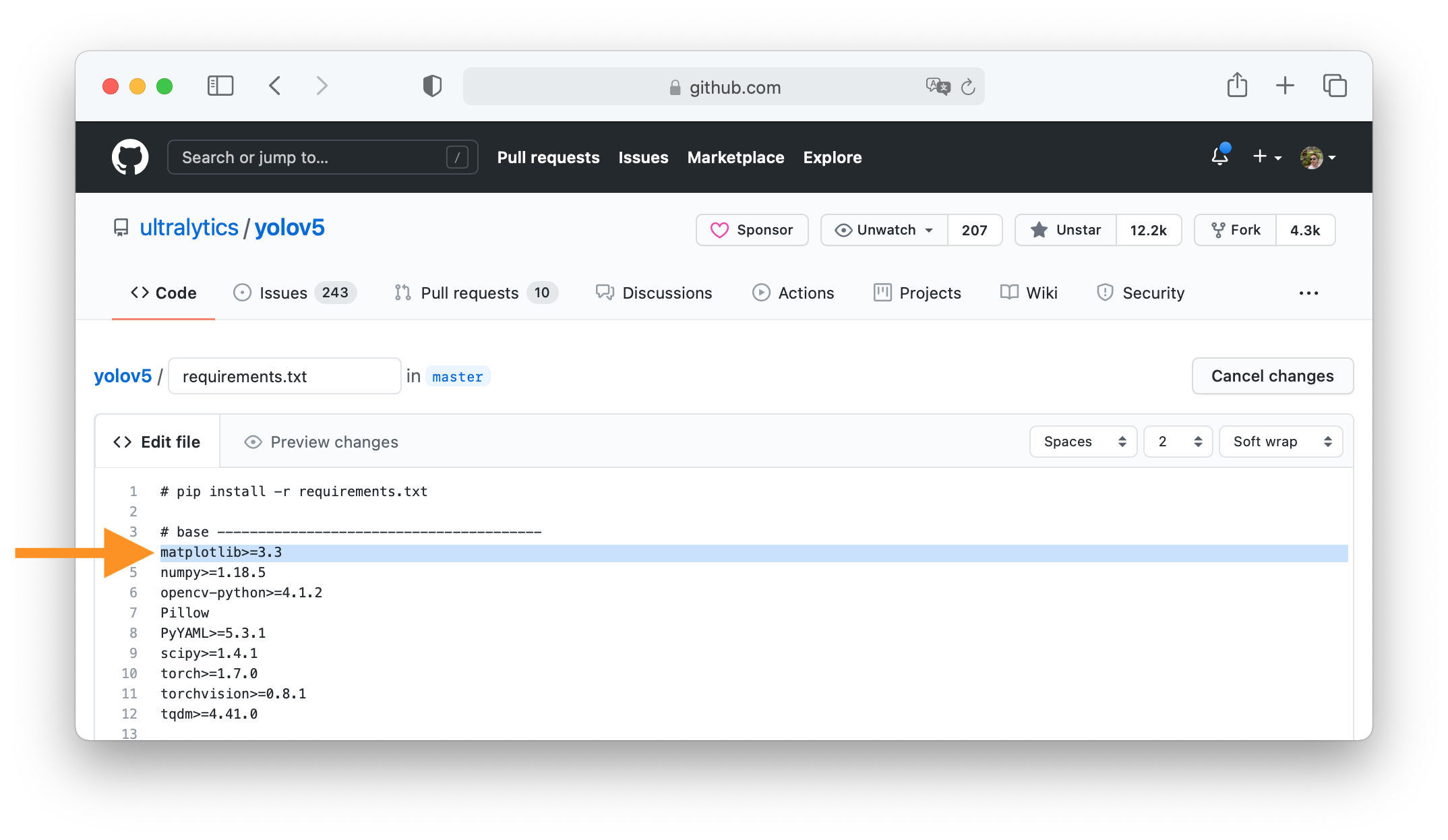

+Submitting a PR is easy! This example shows how to submit a PR for updating `requirements.txt` in 4 steps:

+

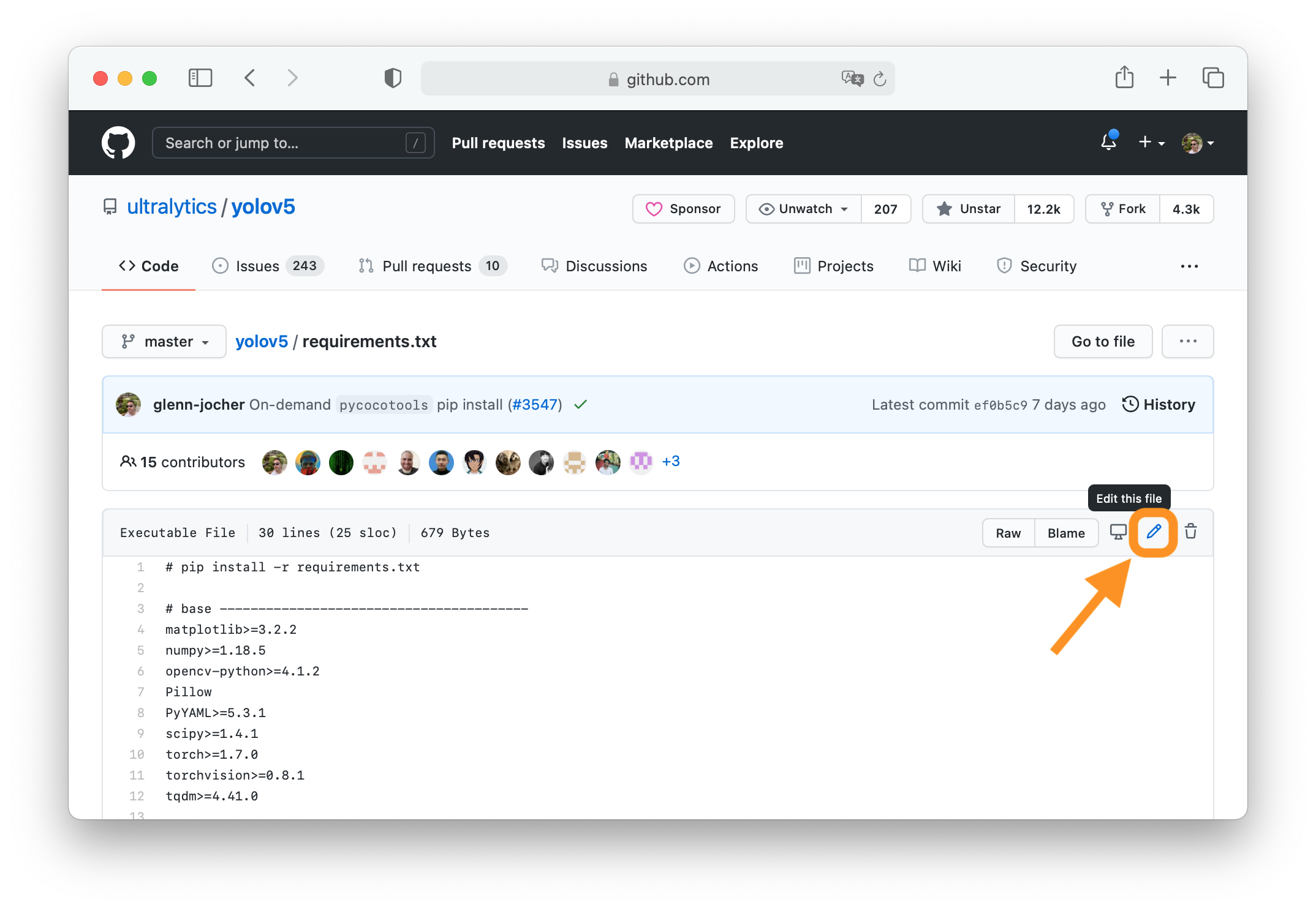

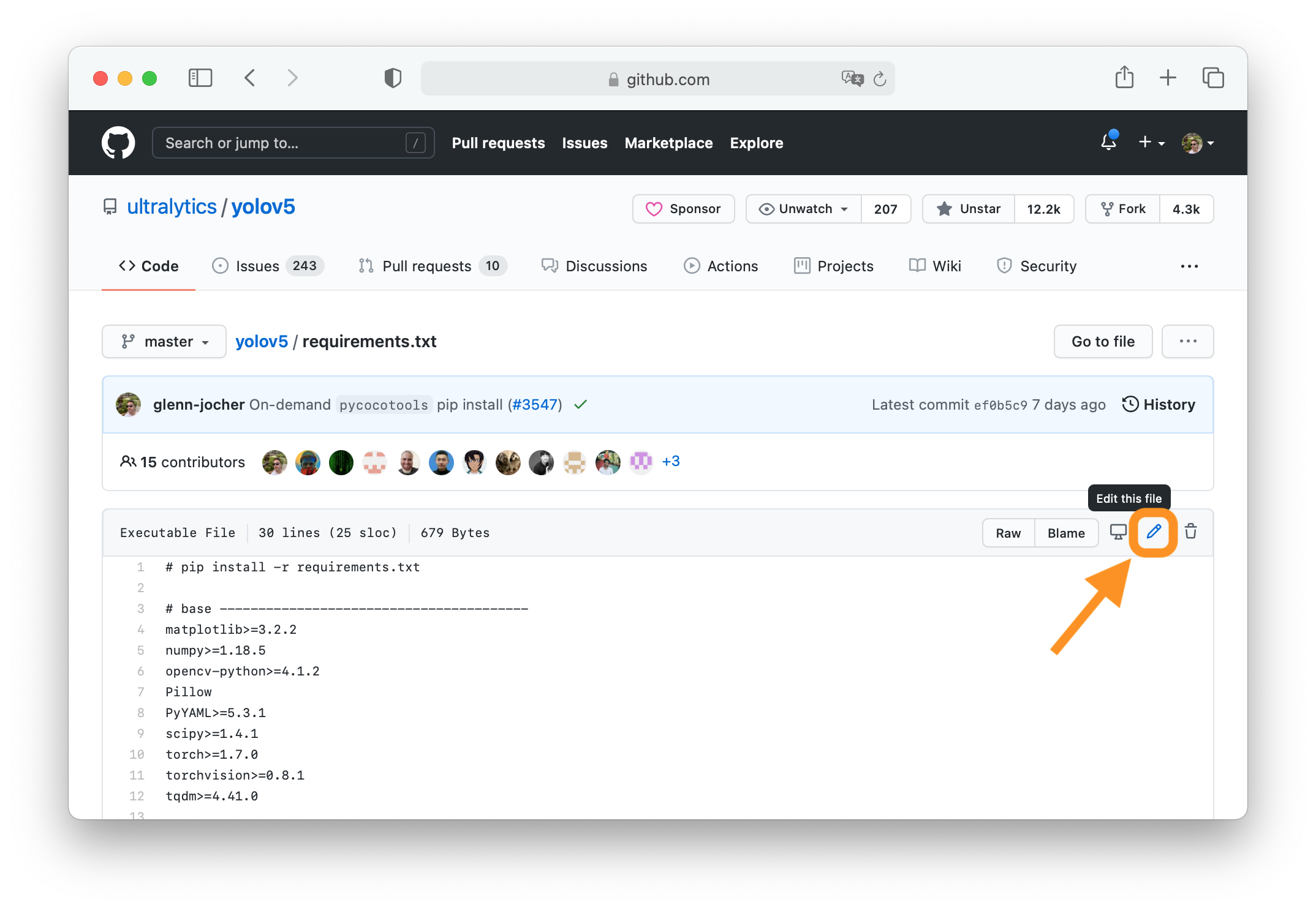

+### 1. Select File to Update

+

+Select `requirements.txt` to update by clicking on it in GitHub.

+

+

+

+### 2. Click 'Edit this file'

+

+Button is in top-right corner.

+

+

+

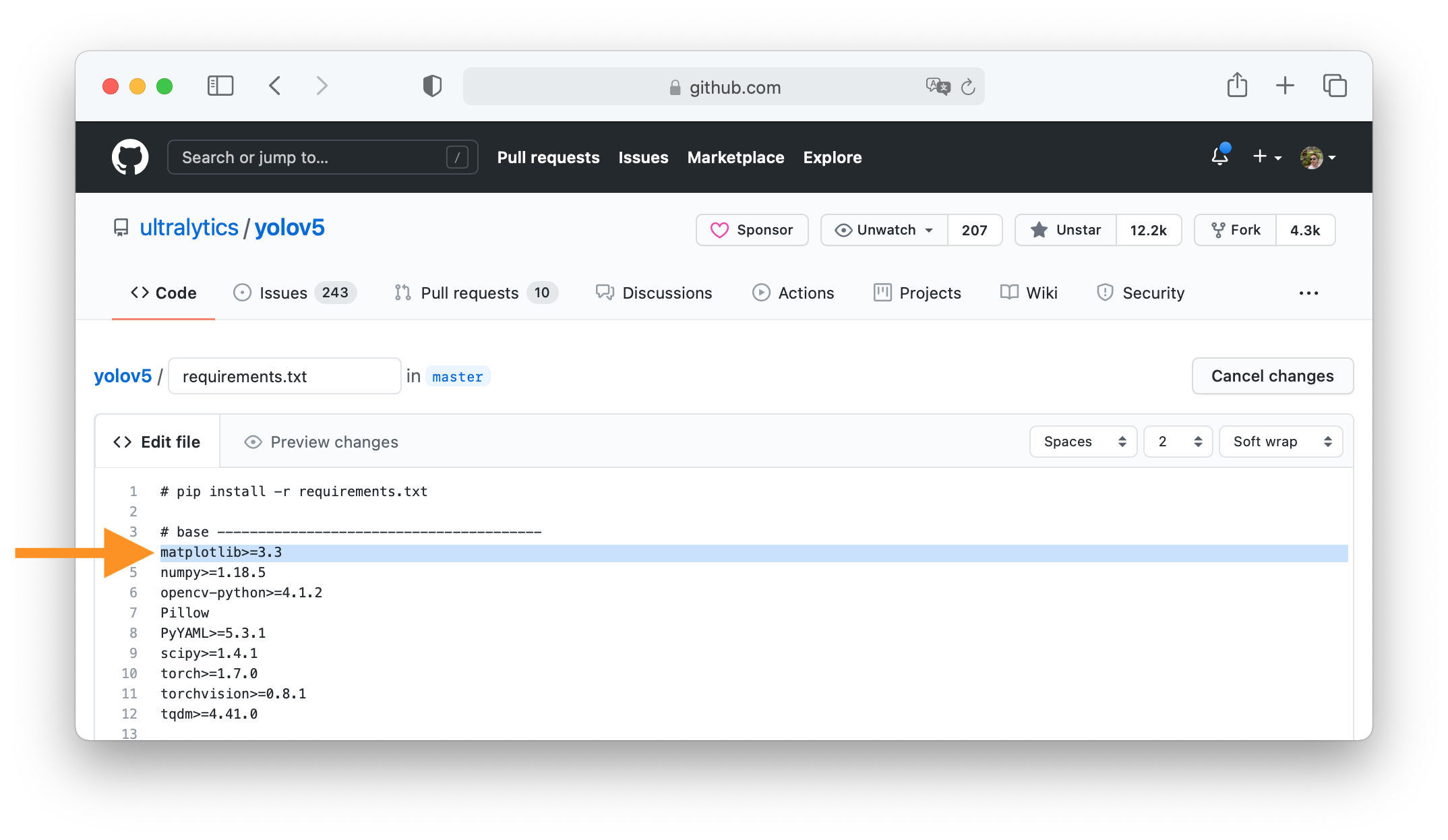

+### 3. Make Changes

+

+Change `matplotlib` version from `3.2.2` to `3.3`.

+

+

+

+### 4. Preview Changes and Submit PR

+

+Click on the **Preview changes** tab to verify your updates. At the bottom of the screen select 'Create a **new branch**

+for this commit', assign your branch a descriptive name such as `fix/matplotlib_version` and click the green **Propose

+changes** button. All done, your PR is now submitted to YOLOv8 for review and approval 😃!

+

+

+

+### PR recommendations

+

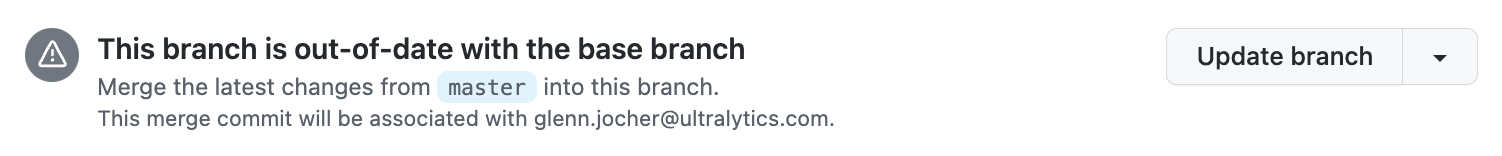

+To allow your work to be integrated as seamlessly as possible, we advise you to:

+

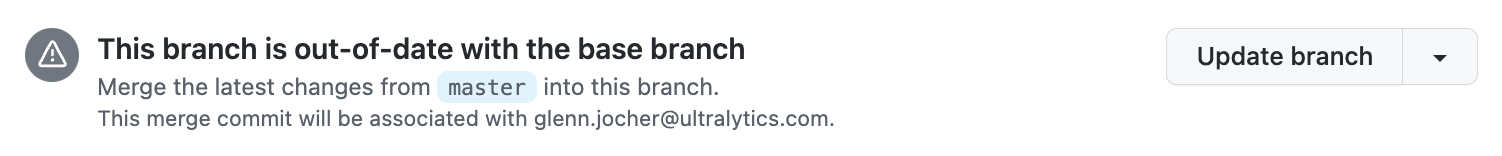

+- ✅ Verify your PR is **up-to-date** with `ultralytics/ultralytics` `master` branch. If your PR is behind you can update

+ your code by clicking the 'Update branch' button or by running `git pull` and `git merge master` locally.

+

+

+

+- ✅ Verify all YOLOv8 Continuous Integration (CI) **checks are passing**.

+

+

+

+- ✅ Reduce changes to the absolute **minimum** required for your bug fix or feature addition. _"It is not daily increase

+ but daily decrease, hack away the unessential. The closer to the source, the less wastage there is."_ — Bruce Lee

+

+### Docstrings

+

+Not all functions or classes require docstrings, but when they do, we follow the [Google-style docstring format](https://google.github.io/styleguide/pyguide.html#38-comments-and-docstrings). Here is an example:

+

+```python

+"""
+    What the function does - performs nms on given detection predictions
+
+    Args:
+        arg1: The description of the 1st argument
+        arg2: The description of the 2nd argument
+
+    Returns:
+        What the function returns. Empty if nothing is returned
+
+    Raises:
+        Exception Class: When and why this exception can be raised by the function.
+"""

+```

+

+## Submitting a Bug Report 🐛

+

+If you spot a problem with YOLOv8 please submit a Bug Report!

+

+For us to start investigating a possible problem we need to be able to reproduce it ourselves first. We've created a few

+short guidelines below to help users provide what we need in order to get started.

+

+When asking a question, people will be better able to provide help if you provide **code** that they can easily

+understand and use to **reproduce** the problem. This is referred to by community members as creating

+a [minimum reproducible example](https://stackoverflow.com/help/minimal-reproducible-example). Your code that reproduces

+the problem should be:

+

+- ✅ **Minimal** – Use as little code as possible that still produces the same problem

+- ✅ **Complete** – Provide **all** parts someone else needs to reproduce your problem in the question itself

+- ✅ **Reproducible** – Test the code you're about to provide to make sure it reproduces the problem

+

+In addition to the above requirements, for [Ultralytics](https://ultralytics.com/) to provide assistance your code

+should be:

+

+- ✅ **Current** – Verify that your code is up-to-date with current

+ GitHub [master](https://github.com/ultralytics/ultralytics/tree/main), and if necessary `git pull` or `git clone` a new

+ copy to ensure your problem has not already been resolved by previous commits.

+- ✅ **Unmodified** – Your problem must be reproducible without any modifications to the codebase in this

+ repository. [Ultralytics](https://ultralytics.com/) does not provide support for custom code ⚠️.

+

+If you believe your problem meets all of the above criteria, please close this issue and raise a new one using the 🐛

+**Bug Report** [template](https://github.com/ultralytics/ultralytics/issues/new/choose) and providing

+a [minimum reproducible example](https://stackoverflow.com/help/minimal-reproducible-example) to help us better

+understand and diagnose your problem.

+

+## License

+

+By contributing, you agree that your contributions will be licensed under

+the [GPL-3.0 license](https://choosealicense.com/licenses/gpl-3.0/)
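
To illustrate the docstring format described in the Docstrings section of `CONTRIBUTING.md` above, here is a minimal sketch of a Google-style docstring attached to a small function. `box_area` is a hypothetical helper invented for this example; it is not part of the YOLOv8 codebase.

```python
def box_area(x1: float, y1: float, x2: float, y2: float) -> float:
    """Compute the area of an axis-aligned bounding box.

    Args:
        x1: Left edge of the box.
        y1: Top edge of the box.
        x2: Right edge of the box; must not be less than x1.
        y2: Bottom edge of the box; must not be less than y1.

    Returns:
        The box area in squared input units.

    Raises:
        ValueError: If the corners are given in the wrong order.
    """
    # Hypothetical example function, used only to show the docstring layout.
    if x2 < x1 or y2 < y1:
        raise ValueError("expected x1 <= x2 and y1 <= y2")
    return (x2 - x1) * (y2 - y1)
```

The summary line, `Args`, `Returns`, and `Raises` sections map one-to-one onto the template in the contributing guide; sections that do not apply can simply be left out.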

diff --git a/MANIFEST.in b/MANIFEST.in

new file mode 100644

index 0000000000000000000000000000000000000000..1635ec154384b8857c15841c27f1845f90d6b988

--- /dev/null

+++ b/MANIFEST.in

@@ -0,0 +1,5 @@

+include *.md

+include requirements.txt

+include LICENSE

+include setup.py

+recursive-include ultralytics *.yaml

diff --git a/README.md b/README.md

index fdd03d7d6c6c1decda050b04e83df02f473f5805..acec568fa30cba210950bab8b08e7590455a4bf6 100644

--- a/README.md

+++ b/README.md

@@ -1,12 +1,82 @@

----

-title: YOLOv8 Real Time

-emoji: 🏢

-colorFrom: pink

-colorTo: green

-sdk: gradio

-sdk_version: 3.29.0

-app_file: app.py

-pinned: false

----

-

-Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

+

+YOLOv8 Object Detection with DeepSORT Tracking (ID + Trails)

+

+## Google Colab File Link (A Single Click Solution)

+The Google Colab notebook for YOLOv8 object detection and tracking is linked below. It is a single-click implementation: select GPU as the runtime type, then click Run All.

+

+[`Google Colab File`](https://colab.research.google.com/drive/1U6cnTQ0JwCg4kdHxYSl2NAhU4wK18oAu?usp=sharing)

+

+## Object Detection and Tracking (ID + Trails) using YOLOv8 on Custom Data

+## Google Colab File Link (A Single Click Solution)

+[`Google Colab File`](https://colab.research.google.com/drive/1dEpI2k3m1i0vbvB4bNqPRQUO0gSBTz25?usp=sharing)

+

+## YOLOv8 Segmentation with DeepSORT Object Tracking

+

+[`Github Repo Link`](https://github.com/MuhammadMoinFaisal/YOLOv8_Segmentation_DeepSORT_Object_Tracking.git)

+

+## Steps to run Code

+

+- Clone the repository

+```

+git clone https://github.com/MuhammadMoinFaisal/YOLOv8-DeepSORT-Object-Tracking.git

+```

+- Go to the cloned folder.

+```

+cd YOLOv8-DeepSORT-Object-Tracking

+```

+- Install the dependencies

+```

+pip install -e '.[dev]'

+

+```

+

+- Set the working directory.

+```

+cd ultralytics/yolo/v8/detect

+

+```

+- Download the DeepSORT files from Google Drive

+```

+

+https://drive.google.com/drive/folders/1kna8eWGrSfzaR6DtNJ8_GchGgPMv3VC8?usp=sharing

+```

+- After downloading the DeepSORT zip file from the drive, unzip it, go into the subfolders, and place the `deep_sort_pytorch` folder into the `ultralytics/yolo/v8/detect` folder.

+

+- Download a sample video from Google Drive

+```

+gdown "https://drive.google.com/uc?id=1rjBn8Fl1E_9d0EMVtL24S9aNQOJAveR5&confirm=t"

+```

+

+- Run the code with the commands below.

+

+- For yolov8 object detection + Tracking

+```

+python predict.py model=yolov8l.pt source="test3.mp4" show=True

+```

+- For yolov8 object detection + Tracking + Vehicle Counting

+- Download the updated predict.py file from the Google Drive and place it into ultralytics/yolo/v8/detect folder

+- Google Drive Link

+```

+https://drive.google.com/drive/folders/1awlzTGHBBAn_2pKCkLFADMd1EN_rJETW?usp=sharing

+```

+- For yolov8 object detection + Tracking + Vehicle Counting

+```

+python predict.py model=yolov8l.pt source="test3.mp4" show=True

+```

+

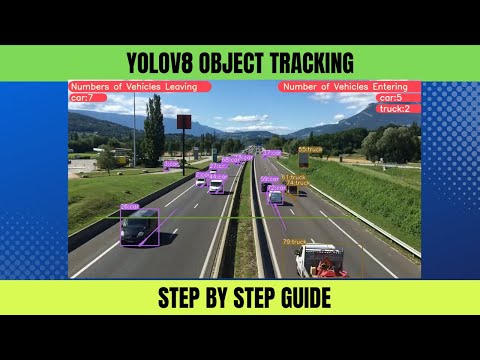

+### RESULTS

+

+#### Vehicles Detection, Tracking and Counting

+

+

+#### Vehicles Detection, Tracking and Counting

+

+

+

+### Watch the Complete Step by Step Explanation

+

+- Video Tutorial Link [`YouTube Link`](https://www.youtube.com/watch?v=9jRRZ-WL698)

+

+

+[Watch the Complete Step by Step Explanation](https://www.youtube.com/watch?v=9jRRZ-WL698)

+
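
The README steps above depend on several manually downloaded files ending up in `ultralytics/yolo/v8/detect`. As a rough sketch, a pre-flight check along the following lines could report what is still missing before `predict.py` is run. The helper name `missing_tracking_files` is hypothetical, and the default video name `test3.mp4` is taken from the README's example command; the file name that `gdown` actually produces is an assumption.

```python
from pathlib import Path


def missing_tracking_files(repo_root, video_name="test3.mp4"):
    """Return the expected paths (relative to repo_root) that do not exist yet.

    Hypothetical helper: the layout mirrors the README steps, nothing more.
    """
    root = Path(repo_root)
    detect_dir = root / "ultralytics" / "yolo" / "v8" / "detect"
    expected = [
        detect_dir / "predict.py",         # detection + tracking entry point
        detect_dir / "deep_sort_pytorch",  # folder unzipped from Google Drive
        detect_dir / video_name,           # sample video (name is an assumption)
    ]
    return [p.relative_to(root).as_posix() for p in expected if not p.exists()]


if __name__ == "__main__":
    for path in missing_tracking_files("."):
        print(f"missing: {path}")
```

Run from the repository root, this prints one line per missing item and nothing when the layout is complete.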

diff --git a/YOLOv8_DeepSORT_TRACKING_SCRIPT.ipynb b/YOLOv8_DeepSORT_TRACKING_SCRIPT.ipynb

new file mode 100644

index 0000000000000000000000000000000000000000..ba893bf990a58c3ab754e748230a83500f8217fa

--- /dev/null

+++ b/YOLOv8_DeepSORT_TRACKING_SCRIPT.ipynb

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:c0918af0bfa0ef2e0e9d26d9a8b06e2d706f5a5685d4e19eb58877a8036092ac

+size 16618677

diff --git a/YOLOv8_Detection_Tracking_CustomData_Complete.ipynb b/YOLOv8_Detection_Tracking_CustomData_Complete.ipynb

new file mode 100644

index 0000000000000000000000000000000000000000..7a8786db0d3690d707223a700bc5585bea2a395e

--- /dev/null

+++ b/YOLOv8_Detection_Tracking_CustomData_Complete.ipynb

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:3d514434f9b1d8f5f3a7fb72e782f82d3c136523a7fc7bb41c2a2a390f4aa783

+size 22625415

diff --git a/figure/figure1.png b/figure/figure1.png

new file mode 100644

index 0000000000000000000000000000000000000000..e5aca066a69033fc59f2a947f762f3253eb04c5c

--- /dev/null

+++ b/figure/figure1.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:664385ae049b026377706815fe377fe731aef846900f0343d50a214a269b4707

+size 2814838

diff --git a/figure/figure2.png b/figure/figure2.png

new file mode 100644

index 0000000000000000000000000000000000000000..6f3b640b32eb202e7528ccef1cc1045634ed04ae

--- /dev/null

+++ b/figure/figure2.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:a2c383e5fcfc524b385e41d33b0bbf56320a3a81d3a96f9500f7f254009c8f03

+size 2632436

diff --git a/figure/figure3.png b/figure/figure3.png

new file mode 100644

index 0000000000000000000000000000000000000000..7ef17e500eed453b2c5c2c70c04dec90f36ac0b7

--- /dev/null

+++ b/figure/figure3.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:cb719fd4505ae476bebe57131165764d11e6193d165cdecf70a760100cf6551f

+size 2941484

diff --git a/mkdocs.yml b/mkdocs.yml

new file mode 100644

index 0000000000000000000000000000000000000000..f71d4bd28dcff828114c35247a95440685c7c5ad

--- /dev/null

+++ b/mkdocs.yml

@@ -0,0 +1,95 @@

+site_name: Ultralytics Docs

+repo_url: https://github.com/ultralytics/ultralytics

+repo_name: Ultralytics

+

+theme:
+  name: "material"
+  logo: https://github.com/ultralytics/assets/raw/main/logo/Ultralytics-logomark-white.png
+  icon:
+    repo: fontawesome/brands/github
+    admonition:
+      note: octicons/tag-16
+      abstract: octicons/checklist-16
+      info: octicons/info-16
+      tip: octicons/squirrel-16
+      success: octicons/check-16
+      question: octicons/question-16
+      warning: octicons/alert-16
+      failure: octicons/x-circle-16
+      danger: octicons/zap-16
+      bug: octicons/bug-16
+      example: octicons/beaker-16
+      quote: octicons/quote-16
+
+  palette:
+    # Palette toggle for light mode
+    - scheme: default
+      toggle:
+        icon: material/brightness-7
+        name: Switch to dark mode
+
+    # Palette toggle for dark mode
+    - scheme: slate
+      toggle:
+        icon: material/brightness-4
+        name: Switch to light mode
+  features:
+    - content.code.annotate
+    - content.tooltips
+    - search.highlight
+    - search.share
+    - search.suggest
+    - toc.follow
+
+extra_css:
+  - stylesheets/style.css
+
+markdown_extensions:
+  # Div text decorators
+  - admonition
+  - pymdownx.details
+  - pymdownx.superfences
+  - tables
+  - attr_list
+  - def_list
+  # Syntax highlight
+  - pymdownx.highlight:
+      anchor_linenums: true
+  - pymdownx.inlinehilite
+  - pymdownx.snippets
+
+  # Button
+  - attr_list
+
+  # Content tabs
+  - pymdownx.superfences
+  - pymdownx.tabbed:
+      alternate_style: true
+
+  # Highlight
+  - pymdownx.critic
+  - pymdownx.caret
+  - pymdownx.keys
+  - pymdownx.mark
+  - pymdownx.tilde
+plugins:
+  - mkdocstrings
+
+# Primary navigation
+nav:
+  - Quickstart: quickstart.md
+  - CLI: cli.md
+  - Python Interface: sdk.md
+  - Configuration: config.md
+  - Customization Guide: engine.md
+  - Ultralytics HUB: hub.md
+  - iOS and Android App: app.md
+  - Reference:
+      - Python Model interface: reference/model.md
+      - Engine:
+          - Trainer: reference/base_trainer.md
+          - Validator: reference/base_val.md
+          - Predictor: reference/base_pred.md
+          - Exporter: reference/exporter.md
+      - nn Module: reference/nn.md
+      - operations: reference/ops.md

diff --git a/requirements.txt b/requirements.txt

new file mode 100644

index 0000000000000000000000000000000000000000..fbf5aaacfa1c0b665ef67a853d5c18f941b5cd8d

--- /dev/null

+++ b/requirements.txt

@@ -0,0 +1,46 @@

+# Ultralytics requirements

+# Usage: pip install -r requirements.txt

+

+# Base ----------------------------------------

+hydra-core>=1.2.0

+matplotlib>=3.2.2

+numpy>=1.18.5

+opencv-python>=4.1.1

+Pillow>=7.1.2

+PyYAML>=5.3.1

+requests>=2.23.0

+scipy>=1.4.1

+torch>=1.7.0

+torchvision>=0.8.1

+tqdm>=4.64.0

+

+# Logging -------------------------------------

+tensorboard>=2.4.1

+# clearml

+# comet

+

+# Plotting ------------------------------------

+pandas>=1.1.4

+seaborn>=0.11.0

+

+# Export --------------------------------------

+# coremltools>=6.0 # CoreML export

+# onnx>=1.12.0 # ONNX export

+# onnx-simplifier>=0.4.1 # ONNX simplifier

+# nvidia-pyindex # TensorRT export

+# nvidia-tensorrt # TensorRT export

+# scikit-learn==0.19.2 # CoreML quantization

+# tensorflow>=2.4.1 # TF exports (-cpu, -aarch64, -macos)

+# tensorflowjs>=3.9.0 # TF.js export

+# openvino-dev # OpenVINO export

+

+# Extras --------------------------------------

+ipython # interactive notebook

+psutil # system utilization

+thop>=0.1.1 # FLOPs computation

+# albumentations>=1.0.3

+# pycocotools>=2.0.6 # COCO mAP

+# roboflow

+

+# HUB -----------------------------------------

+GitPython>=3.1.24

diff --git a/setup.cfg b/setup.cfg

new file mode 100644

index 0000000000000000000000000000000000000000..d7c4cb3e1a4d34291816835b071ba0d75243d79b

--- /dev/null

+++ b/setup.cfg

@@ -0,0 +1,54 @@

+# Project-wide configuration file, can be used for package metadata and other tool configurations

+# Example usage: global configuration for PEP8 (via flake8) setting or default pytest arguments

+# Local usage: pip install pre-commit, pre-commit run --all-files

+

+[metadata]

+license_file = LICENSE

+description_file = README.md

+

+[tool:pytest]

+norecursedirs =

+ .git

+ dist

+ build

+addopts =

+ --doctest-modules

+ --durations=25

+ --color=yes

+

+[flake8]

+max-line-length = 120

+exclude = .tox,*.egg,build,temp

+select = E,W,F

+doctests = True

+verbose = 2

+# https://pep8.readthedocs.io/en/latest/intro.html#error-codes

+format = pylint

+# see: https://www.flake8rules.com/

+ignore = E731,F405,E402,F401,W504,E127,E231,E501,F403

+ # E731: Do not assign a lambda expression, use a def

+ # F405: name may be undefined, or defined from star imports: module

+ # E402: module level import not at top of file

+ # F401: module imported but unused

+ # W504: line break after binary operator

+ # E127: continuation line over-indented for visual indent

+ # E231: missing whitespace after ‘,’, ‘;’, or ‘:’

+ # E501: line too long

+ # F403: ‘from module import *’ used; unable to detect undefined names

+

+[isort]

+# https://pycqa.github.io/isort/docs/configuration/options.html

+line_length = 120

+# see: https://pycqa.github.io/isort/docs/configuration/multi_line_output_modes.html

+multi_line_output = 0

+

+[yapf]

+based_on_style = pep8

+spaces_before_comment = 2

+COLUMN_LIMIT = 120

+COALESCE_BRACKETS = True

+SPACES_AROUND_POWER_OPERATOR = True

+SPACE_BETWEEN_ENDING_COMMA_AND_CLOSING_BRACKET = False

+SPLIT_BEFORE_CLOSING_BRACKET = False

+SPLIT_BEFORE_FIRST_ARGUMENT = False

+# EACH_DICT_ENTRY_ON_SEPARATE_LINE = False

diff --git a/setup.py b/setup.py

new file mode 100644

index 0000000000000000000000000000000000000000..a5d13d953b4ac461dc8798c16e93dd19796d84bb

--- /dev/null

+++ b/setup.py

@@ -0,0 +1,53 @@

+# Ultralytics YOLO 🚀, GPL-3.0 license

+

+import re

+from pathlib import Path

+

+import pkg_resources as pkg

+from setuptools import find_packages, setup

+

+# Settings

+FILE = Path(__file__).resolve()

+ROOT = FILE.parent # root directory

+README = (ROOT / "README.md").read_text(encoding="utf-8")

+REQUIREMENTS = [f'{x.name}{x.specifier}' for x in pkg.parse_requirements((ROOT / 'requirements.txt').read_text())]

+

+

+def get_version():

+    file = ROOT / 'ultralytics/__init__.py'
+    return re.search(r'^__version__ = [\'"]([^\'"]*)[\'"]', file.read_text(), re.M)[1]

+

+

+setup(

+ name="ultralytics", # name of pypi package

+ version=get_version(), # version of pypi package

+ python_requires=">=3.7.0",

+ license='GPL-3.0',

+ description='Ultralytics YOLOv8 and HUB',

+ long_description=README,

+ long_description_content_type="text/markdown",

+ url="https://github.com/ultralytics/ultralytics",

+ project_urls={

+ 'Bug Reports': 'https://github.com/ultralytics/ultralytics/issues',

+ 'Funding': 'https://ultralytics.com',

+ 'Source': 'https://github.com/ultralytics/ultralytics',},

+ author="Ultralytics",

+ author_email='hello@ultralytics.com',

+ packages=find_packages(), # required

+ include_package_data=True,

+ install_requires=REQUIREMENTS,

+ extras_require={

+ 'dev':

+ ['check-manifest', 'pytest', 'pytest-cov', 'coverage', 'mkdocs', 'mkdocstrings[python]', 'mkdocs-material'],},

+ classifiers=[

+ "Intended Audience :: Developers", "Intended Audience :: Science/Research",

+ "License :: OSI Approved :: GNU General Public License v3 (GPLv3)", "Programming Language :: Python :: 3",

+ "Programming Language :: Python :: 3.7", "Programming Language :: Python :: 3.8",

+ "Programming Language :: Python :: 3.9", "Programming Language :: Python :: 3.10",

+ "Topic :: Software Development", "Topic :: Scientific/Engineering",

+ "Topic :: Scientific/Engineering :: Artificial Intelligence",

+ "Topic :: Scientific/Engineering :: Image Recognition", "Operating System :: POSIX :: Linux",

+ "Operating System :: MacOS", "Operating System :: Microsoft :: Windows"],

+ keywords="machine-learning, deep-learning, vision, ML, DL, AI, YOLO, YOLOv3, YOLOv5, YOLOv8, HUB, Ultralytics",

+ entry_points={

+ 'console_scripts': ['yolo = ultralytics.yolo.cli:cli', 'ultralytics = ultralytics.yolo.cli:cli'],})
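
`get_version()` in `setup.py` reads the package version by matching a regular expression against `ultralytics/__init__.py` rather than importing the package. The sketch below isolates that idea so the pattern can be tried on an in-memory string. `parse_version` and the explicit `ValueError` are additions for illustration and are not in the patch, which indexes the match directly.

```python
import re

# Same pattern as in setup.py: a line starting with `__version__ = `
# followed by a single- or double-quoted string.
VERSION_PATTERN = r'^__version__ = [\'"]([^\'"]*)[\'"]'


def parse_version(source: str) -> str:
    """Extract the version string from module source text."""
    match = re.search(VERSION_PATTERN, source, re.M)  # re.M lets ^ match at any line start
    if match is None:
        # Illustrative guard; the original code would raise TypeError here instead.
        raise ValueError("no __version__ assignment found")
    return match[1]


if __name__ == "__main__":
    sample = '# Ultralytics YOLO, GPL-3.0 license\n\n__version__ = "8.0.3"\n'
    print(parse_version(sample))
```

Parsing the file as text avoids importing `ultralytics` at install time, when its dependencies may not be installed yet.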

diff --git a/ultralytics/__init__.py b/ultralytics/__init__.py

new file mode 100644

index 0000000000000000000000000000000000000000..0dcb7110db6eed6e668354a20d00578feb03e811

--- /dev/null

+++ b/ultralytics/__init__.py

@@ -0,0 +1,9 @@

+# Ultralytics YOLO 🚀, GPL-3.0 license

+

+__version__ = "8.0.3"

+

+from ultralytics.hub import checks

+from ultralytics.yolo.engine.model import YOLO

+from ultralytics.yolo.utils import ops

+

+__all__ = ["__version__", "YOLO", "hub", "checks"] # allow simpler import

diff --git a/ultralytics/hub/__init__.py b/ultralytics/hub/__init__.py

new file mode 100644

index 0000000000000000000000000000000000000000..9c945d5025071f0e920c8b24eaaf606579f03e1a

--- /dev/null

+++ b/ultralytics/hub/__init__.py

@@ -0,0 +1,133 @@

+# Ultralytics YOLO 🚀, GPL-3.0 license

+

+import os

+import shutil

+

+import psutil

+import requests

+from IPython import display # to display images and clear console output

+

+from ultralytics.hub.auth import Auth

+from ultralytics.hub.session import HubTrainingSession

+from ultralytics.hub.utils import PREFIX, split_key

+from ultralytics.yolo.utils import LOGGER, emojis, is_colab

+from ultralytics.yolo.utils.torch_utils import select_device

+from ultralytics.yolo.v8.detect import DetectionTrainer

+

+

+def checks(verbose=True):
+    if is_colab():
+        shutil.rmtree('sample_data', ignore_errors=True)  # remove colab /sample_data directory
+
+    if verbose:
+        # System info
+        gib = 1 << 30  # bytes per GiB
+        ram = psutil.virtual_memory().total
+        total, used, free = shutil.disk_usage("/")
+        display.clear_output()
+        s = f'({os.cpu_count()} CPUs, {ram / gib:.1f} GB RAM, {(total - free) / gib:.1f}/{total / gib:.1f} GB disk)'
+    else:
+        s = ''
+
+    select_device(newline=False)
+    LOGGER.info(f'Setup complete ✅ {s}')

+

+

+def start(key=''):
+    # Start training models with Ultralytics HUB. Usage: from src.ultralytics import start; start('API_KEY')
+    def request_api_key(attempts=0):
+        """Prompt the user to input their API key"""
+        import getpass
+
+        max_attempts = 3
+        tries = f"Attempt {str(attempts + 1)} of {max_attempts}" if attempts > 0 else ""
+        LOGGER.info(f"{PREFIX}Login. {tries}")
+        input_key = getpass.getpass("Enter your Ultralytics HUB API key:\n")
+        auth.api_key, model_id = split_key(input_key)
+        if not auth.authenticate():
+            attempts += 1
+            LOGGER.warning(f"{PREFIX}Invalid API key ⚠️\n")
+            if attempts < max_attempts:
+                return request_api_key(attempts)
+            raise ConnectionError(emojis(f"{PREFIX}Failed to authenticate ❌"))
+        else:
+            return model_id
+
+    try:
+        api_key, model_id = split_key(key)
+        auth = Auth(api_key)  # attempts cookie login if no api key is present
+        attempts = 1 if len(key) else 0
+        if not auth.get_state():
+            if len(key):
+                LOGGER.warning(f"{PREFIX}Invalid API key ⚠️\n")
+            model_id = request_api_key(attempts)
+        LOGGER.info(f"{PREFIX}Authenticated ✅")
+        if not model_id:
+            raise ConnectionError(emojis('Connecting with global API key is not currently supported. ❌'))
+        session = HubTrainingSession(model_id=model_id, auth=auth)
+        session.check_disk_space()
+
+        # TODO: refactor, hardcoded for v8
+        args = session.model.copy()
+        args.pop("id")
+        args.pop("status")
+        args.pop("weights")
+        args["data"] = "coco128.yaml"
+        args["model"] = "yolov8n.yaml"
+        args["batch_size"] = 16
+        args["imgsz"] = 64
+
+        trainer = DetectionTrainer(overrides=args)
+        session.register_callbacks(trainer)
+        setattr(trainer, 'hub_session', session)
+        trainer.train()
+    except Exception as e:
+        LOGGER.warning(f"{PREFIX}{e}")

+

+

+def reset_model(key=''):
+    # Reset a trained model to an untrained state
+    api_key, model_id = split_key(key)
+    r = requests.post('https://api.ultralytics.com/model-reset', json={"apiKey": api_key, "modelId": model_id})
+
+    if r.status_code == 200:
+        LOGGER.info(f"{PREFIX}model reset successfully")
+        return
+    LOGGER.warning(f"{PREFIX}model reset failure {r.status_code} {r.reason}")

+

+

+def export_model(key='', format='torchscript'):
+    # Request export of a model to the given format
+    api_key, model_id = split_key(key)
+    formats = ('torchscript', 'onnx', 'openvino', 'engine', 'coreml', 'saved_model', 'pb', 'tflite', 'edgetpu', 'tfjs',
+               'ultralytics_tflite', 'ultralytics_coreml')
+    assert format in formats, f"ERROR: Unsupported export format '{format}' passed, valid formats are {formats}"
+
+    r = requests.post('https://api.ultralytics.com/export',
+                      json={
+                          "apiKey": api_key,
+                          "modelId": model_id,
+                          "format": format})
+    assert r.status_code == 200, f"{PREFIX}{format} export failure {r.status_code} {r.reason}"
+    LOGGER.info(f"{PREFIX}{format} export started ✅")
+
+
+def get_export(key='', format='torchscript'):
+    # Get an exported model dictionary with download URL
+    api_key, model_id = split_key(key)
+    formats = ('torchscript', 'onnx', 'openvino', 'engine', 'coreml', 'saved_model', 'pb', 'tflite', 'edgetpu', 'tfjs',
+               'ultralytics_tflite', 'ultralytics_coreml')
+    assert format in formats, f"ERROR: Unsupported export format '{format}' passed, valid formats are {formats}"
+
+    r = requests.post('https://api.ultralytics.com/get-export',
+                      json={
+                          "apiKey": api_key,
+                          "modelId": model_id,
+                          "format": format})
+    assert r.status_code == 200, f"{PREFIX}{format} get_export failure {r.status_code} {r.reason}"
+    return r.json()

+

+

+# temp. For checking

+if __name__ == "__main__":
+    start(key="b3fba421be84a20dbe68644e14436d1cce1b0a0aaa_HeMfHgvHsseMPhdq7Ylz")
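
The functions in `ultralytics/hub/__init__.py` all pass a single `key` string through `split_key`, which is imported from `ultralytics/hub/utils.py` and is not part of the hunks shown here. Based on the `"_"` separator used by `Auth._clean_api_key` and the shape of the key passed to `start`, the combined form appears to be `APIKEY_MODELID`. The following is a minimal sketch of such a split under that assumption; `split_combined_key` is a hypothetical stand-in, not the library's actual implementation.

```python
from typing import Tuple


def split_combined_key(key: str, separator: str = "_") -> Tuple[str, str]:
    """Split 'APIKEY_MODELID' into (api_key, model_id).

    Hypothetical stand-in for hub.utils.split_key; the model id is returned
    as an empty string when the key contains no separator.
    """
    api_key, _, model_id = key.partition(separator)
    return api_key, model_id


if __name__ == "__main__":
    api_key, model_id = split_combined_key("myapikey_mymodelid")
    print(api_key, model_id)
```

An empty `model_id` corresponds to the case that `start` rejects with "Connecting with global API key is not currently supported".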

diff --git a/ultralytics/hub/auth.py b/ultralytics/hub/auth.py

new file mode 100644

index 0000000000000000000000000000000000000000..e38f228bca451467b2a622a378a469ddef1d0c13

--- /dev/null

+++ b/ultralytics/hub/auth.py

@@ -0,0 +1,70 @@

+# Ultralytics YOLO 🚀, GPL-3.0 license

+

+import requests

+

+from ultralytics.hub.utils import HUB_API_ROOT, request_with_credentials

+from ultralytics.yolo.utils import is_colab

+

+API_KEY_PATH = "https://hub.ultralytics.com/settings?tab=api+keys"

+

+

+class Auth:

+ id_token = api_key = model_key = False

+

+ def __init__(self, api_key=None):

+ self.api_key = self._clean_api_key(api_key)

+ self.authenticate() if self.api_key else self.auth_with_cookies()

+

+ @staticmethod

+ def _clean_api_key(key: str) -> str:

+ """Strip model from key if present"""

+ separator = "_"

+ return key.split(separator)[0] if separator in key else key

+

+ def authenticate(self) -> bool:

+ """Attempt to authenticate with server"""

+ try:

+ header = self.get_auth_header()

+ if header:

+ r = requests.post(f"{HUB_API_ROOT}/v1/auth", headers=header)

+ if not r.json().get('success', False):

+ raise ConnectionError("Unable to authenticate.")

+ return True

+ raise ConnectionError("User has not authenticated locally.")

+ except ConnectionError:

+ self.id_token = self.api_key = False # reset invalid

+ return False

+

+ def auth_with_cookies(self) -> bool:

+ """

+ Attempt to fetch authentication via cookies and set id_token.

+ User must be logged in to HUB and running in a supported browser.

+ """

+ if not is_colab():

+ return False # Currently only works with Colab

+ try:

+ authn = request_with_credentials(f"{HUB_API_ROOT}/v1/auth/auto")

+ if authn.get("success", False):

+ self.id_token = authn.get("data", {}).get("idToken", None)

+ self.authenticate()

+ return True

+ raise ConnectionError("Unable to fetch browser authentication details.")

+ except ConnectionError:

+ self.id_token = False # reset invalid

+ return False

+

+ def get_auth_header(self):

+ if self.id_token:

+ return {"authorization": f"Bearer {self.id_token}"}

+ elif self.api_key:

+ return {"x-api-key": self.api_key}

+ else:

+ return None

+

+ def get_state(self) -> bool:

+ """Get the authentication state"""

+ return self.id_token or self.api_key

+

+ def set_api_key(self, key: str):

+ """Set the API key"""

+ self.api_key = key

diff --git a/ultralytics/hub/session.py b/ultralytics/hub/session.py

new file mode 100644

index 0000000000000000000000000000000000000000..58d268fe85c15360584116daa9e29d17c93b8e9b

--- /dev/null

+++ b/ultralytics/hub/session.py

@@ -0,0 +1,122 @@

+# Ultralytics YOLO 🚀, GPL-3.0 license

+

+import signal

+import sys

+from pathlib import Path

+from time import sleep

+

+import requests

+

+from ultralytics import __version__

+from ultralytics.hub.utils import HUB_API_ROOT, check_dataset_disk_space, smart_request

+from ultralytics.yolo.utils import LOGGER, is_colab, threaded

+

+AGENT_NAME = f'python-{__version__}-colab' if is_colab() else f'python-{__version__}-local'

+

+session = None

+

+

+def signal_handler(signum, frame):

+ """ Confirm exit """

+ global session

+ LOGGER.info(f'Signal received. {signum} {frame}')

+ if isinstance(session, HubTrainingSession):

+ session.alive = False  # stop the heartbeat loop

+ session = None

+ sys.exit(signum)

+

+

+signal.signal(signal.SIGTERM, signal_handler)

+signal.signal(signal.SIGINT, signal_handler)

+

+

+class HubTrainingSession:

+

+ def __init__(self, model_id, auth):

+ self.agent_id = None # identifies which instance is communicating with server

+ self.model_id = model_id

+ self.api_url = f'{HUB_API_ROOT}/v1/models/{model_id}'

+ self.auth_header = auth.get_auth_header()

+ self.rate_limits = {'metrics': 3.0, 'ckpt': 900.0, 'heartbeat': 300.0} # rate limits (seconds)

+ self.t = {} # rate limit timers (seconds)

+ self.metrics_queue = {} # metrics queue

+ self.alive = True # for heartbeats

+ self.model = self._get_model()

+ self._heartbeats() # start heartbeats

+

+ def __del__(self):

+ # Class destructor

+ self.alive = False

+

+ def upload_metrics(self):

+ payload = {"metrics": self.metrics_queue.copy(), "type": "metrics"}

+ smart_request(f'{self.api_url}', json=payload, headers=self.auth_header, code=2)

+

+ def upload_model(self, epoch, weights, is_best=False, map=0.0, final=False):

+ # Upload a model to HUB

+ file = None

+ if Path(weights).is_file():

+ with open(weights, "rb") as f:

+ file = f.read()

+ if final:

+ smart_request(f'{self.api_url}/upload',

+ data={

+ "epoch": epoch,

+ "type": "final",

+ "map": map},

+ files={"best.pt": file},

+ headers=self.auth_header,

+ retry=10,

+ timeout=3600,

+ code=4)

+ else:

+ smart_request(f'{self.api_url}/upload',

+ data={

+ "epoch": epoch,

+ "type": "epoch",

+ "isBest": bool(is_best)},

+ headers=self.auth_header,

+ files={"last.pt": file},

+ code=3)

+

+ def _get_model(self):

+ # Returns model from database by id

+ api_url = f"{HUB_API_ROOT}/v1/models/{self.model_id}"

+ headers = self.auth_header

+

+ try:

+ r = smart_request(api_url, method="get", headers=headers, thread=False, code=0)

+ data = r.json().get("data", None)

+ if not data:

+ return

+ assert data['data'], 'ERROR: Dataset may still be processing. Please wait a minute and try again.' # RF fix

+ self.model_id = data["id"]

+

+ return data

+ except requests.exceptions.ConnectionError as e:

+ raise ConnectionRefusedError('ERROR: The HUB server is not online. Please try again later.') from e

+

+ def check_disk_space(self):

+ if not check_dataset_disk_space(self.model['data']):

+ raise MemoryError("Not enough disk space")

+

+ # COMMENT: Should not be needed as HUB is now considered an integration and is in integrations_callbacks

+ # import ultralytics.yolo.utils.callbacks.hub as hub_callbacks

+ # @staticmethod

+ # def register_callbacks(trainer):

+ # for k, v in hub_callbacks.callbacks.items():

+ # trainer.add_callback(k, v)

+

+ @threaded

+ def _heartbeats(self):

+ while self.alive:

+ r = smart_request(f'{HUB_API_ROOT}/v1/agent/heartbeat/models/{self.model_id}',

+ json={

+ "agent": AGENT_NAME,

+ "agentId": self.agent_id},

+ headers=self.auth_header,

+ retry=0,

+ code=5,

+ thread=False)

+ self.agent_id = r.json().get('data', {}).get('agentId', None)

+ sleep(self.rate_limits['heartbeat'])

diff --git a/ultralytics/hub/utils.py b/ultralytics/hub/utils.py

new file mode 100644

index 0000000000000000000000000000000000000000..1b925399b951a666305684f390d82b3d900515a1

--- /dev/null

+++ b/ultralytics/hub/utils.py

@@ -0,0 +1,150 @@

+# Ultralytics YOLO 🚀, GPL-3.0 license

+

+import os

+import shutil

+import threading

+import time

+

+import requests

+

+from ultralytics.yolo.utils import DEFAULT_CONFIG_DICT, LOGGER, RANK, SETTINGS, TryExcept, colorstr, emojis

+

+PREFIX = colorstr('Ultralytics: ')

+HELP_MSG = 'If this issue persists please visit https://github.com/ultralytics/hub/issues for assistance.'

+HUB_API_ROOT = os.environ.get("ULTRALYTICS_HUB_API", "https://api.ultralytics.com")

+

+

+def check_dataset_disk_space(url='https://github.com/ultralytics/yolov5/releases/download/v1.0/coco128.zip', sf=2.0):

+ # Check that url fits on disk with safety factor sf, i.e. require 2GB free if url size is 1GB with sf=2.0

+ gib = 1 << 30 # bytes per GiB

+ data = int(requests.head(url).headers['Content-Length']) / gib # dataset size (GiB)

+ total, used, free = (x / gib for x in shutil.disk_usage("/")) # GiB

+ LOGGER.info(f'{PREFIX}{data:.3f} GB dataset, {free:.1f}/{total:.1f} GB free disk space')

+ if data * sf < free:

+ return True # sufficient space

+ LOGGER.warning(f'{PREFIX}WARNING: Insufficient free disk space {free:.1f} GB < {data * sf:.3f} GB required, '

+ f'training cancelled ❌. Please free {data * sf - free:.1f} GB additional disk space and try again.')

+ return False # insufficient space

+

+

+def request_with_credentials(url: str) -> any:

+ """ Make an ajax request with cookies attached """

+ from google.colab import output # noqa

+ from IPython import display # noqa

+ display.display(

+ display.Javascript("""

+ window._hub_tmp = new Promise((resolve, reject) => {

+ const timeout = setTimeout(() => reject("Failed authenticating existing browser session"), 5000)

+ fetch("%s", {

+ method: 'POST',

+ credentials: 'include'

+ })

+ .then((response) => resolve(response.json()))

+ .then((json) => {

+ clearTimeout(timeout);

+ }).catch((err) => {

+ clearTimeout(timeout);

+ reject(err);

+ });

+ });

+ """ % url))

+ return output.eval_js("_hub_tmp")

+

+

+# Deprecated TODO: eliminate this function?

+def split_key(key=''):

+ """

+ Verify and split a 'api_key[sep]model_id' string, sep is one of '.' or '_'

+

+ Args:

+ key (str): The model key to split. If not provided, the user will be prompted to enter it.

+

+ Returns:

+ Tuple[str, str]: A tuple containing the API key and model ID.

+ """

+

+ import getpass

+

+ error_string = emojis(f'{PREFIX}Invalid API key ⚠️\n') # error string

+ if not key:

+ key = getpass.getpass('Enter model key: ')

+ sep = '_' if '_' in key else '.' if '.' in key else None # separator

+ assert sep, error_string

+ api_key, model_id = key.split(sep)

+ assert len(api_key) and len(model_id), error_string

+ return api_key, model_id

+

+

+def smart_request(*args, retry=3, timeout=30, thread=True, code=-1, method="post", verbose=True, **kwargs):

+ """

+ Makes an HTTP request using the 'requests' library, with exponential backoff retries up to a specified timeout.

+

+ Args:

+ *args: Positional arguments to be passed to the requests function specified in method.

+ retry (int, optional): Number of retries to attempt before giving up. Default is 3.

+ timeout (int, optional): Timeout in seconds after which the function will give up retrying. Default is 30.

+ thread (bool, optional): Whether to execute the request in a separate daemon thread. Default is True.

+ code (int, optional): An identifier for the request, used for logging purposes. Default is -1.

+ method (str, optional): The HTTP method to use for the request. Choices are 'post' and 'get'. Default is 'post'.

+ verbose (bool, optional): A flag to determine whether to print out to console or not. Default is True.

+ **kwargs: Keyword arguments to be passed to the requests function specified in method.

+

+ Returns:

+ requests.Response: The HTTP response object. If the request is executed in a separate thread, returns None.

+ """

+ retry_codes = (408, 500) # retry only these codes

+

+ def func(*func_args, **func_kwargs):

+ r = None # response

+ t0 = time.time() # initial time for timer

+ for i in range(retry + 1):

+ if (time.time() - t0) > timeout:

+ break

+ if method == 'post':

+ r = requests.post(*func_args, **func_kwargs) # i.e. post(url, data, json, files)

+ elif method == 'get':

+ r = requests.get(*func_args, **func_kwargs) # i.e. get(url, data, json, files)

+ if r.status_code == 200:

+ break

+ try:

+ m = r.json().get('message', 'No JSON message.')

+ except (AttributeError, ValueError): # ValueError covers JSON decoding failures

+ m = 'Unable to read JSON.'

+ if i == 0:

+ if r.status_code in retry_codes:

+ m += f' Retrying {retry}x for {timeout}s.' if retry else ''

+ elif r.status_code == 429: # rate limit

+ h = r.headers # response headers

+ m = f"Rate limit reached ({h['X-RateLimit-Remaining']}/{h['X-RateLimit-Limit']}). " \

+ f"Please retry after {h['Retry-After']}s."

+ if verbose:

+ LOGGER.warning(f"{PREFIX}{m} {HELP_MSG} ({r.status_code} #{code})")

+ if r.status_code not in retry_codes:

+ return r

+ time.sleep(2 ** i) # exponential backoff

+ return r

+

+ if thread:

+ threading.Thread(target=func, args=args, kwargs=kwargs, daemon=True).start()

+ else:

+ return func(*args, **kwargs)

+

+

+@TryExcept()

+def sync_analytics(cfg, all_keys=False, enabled=False):

+ """

+ Sync analytics data if enabled in the global settings

+

+ Args:

+ cfg (DictConfig): Configuration for the task and mode.

+ all_keys (bool): Sync all items, not just non-default values.

+ enabled (bool): For debugging.

+ """

+ if SETTINGS['sync'] and RANK in {-1, 0} and enabled:

+ cfg = dict(cfg) # convert type from DictConfig to dict

+ if not all_keys:

+ cfg = {k: v for k, v in cfg.items() if v != DEFAULT_CONFIG_DICT.get(k, None)} # retain non-default values

+ cfg['uuid'] = SETTINGS['uuid'] # add the device UUID to the configuration data

+

+ # Send a request to the HUB API to sync analytics

+ smart_request(f'{HUB_API_ROOT}/v1/usage/anonymous', json=cfg, headers=None, code=3, retry=0, verbose=False)

diff --git a/ultralytics/models/README.md b/ultralytics/models/README.md

new file mode 100644

index 0000000000000000000000000000000000000000..7d726576fa52c402217d98845d41dec0a7ea969d

--- /dev/null

+++ b/ultralytics/models/README.md

@@ -0,0 +1,36 @@

+## Models

+

+Welcome to the Ultralytics Models directory! Here you will find a wide variety of pre-configured model configuration

+files (`*.yaml`s) that can be used to create custom YOLO models. The models in this directory have been expertly crafted

+and fine-tuned by the Ultralytics team to provide the best performance for a wide range of object detection and image

+segmentation tasks.

+

+These model configurations cover a wide range of scenarios, from simple object detection to more complex tasks like

+instance segmentation and object tracking. They are also designed to run efficiently on a variety of hardware platforms,

+from CPUs to GPUs. Whether you are a seasoned machine learning practitioner or just getting started with YOLO, this

+directory provides a great starting point for your custom model development needs.

+

+To get started, simply browse through the models in this directory and find one that best suits your needs. Once you've

+selected a model, you can use the provided `*.yaml` file to train and deploy your custom YOLO model with ease. See full

+details at the Ultralytics [Docs](https://docs.ultralytics.com), and if you need help or have any questions, feel free

+to reach out to the Ultralytics team for support. So, don't wait, start creating your custom YOLO model now!

+

+### Usage

+

+Model `*.yaml` files may be used directly in the Command Line Interface (CLI) with a `yolo` command:

+

+```bash

+yolo task=detect mode=train model=yolov8n.yaml data=coco128.yaml epochs=100

+```

+

+They may also be used directly in a Python environment, and accept the same

+[arguments](https://docs.ultralytics.com/config/) as in the CLI example above:

+

+```python

+from ultralytics import YOLO

+

+model = YOLO("yolov8n.yaml") # build a YOLOv8n model from scratch

+

+model.info() # display model information

+model.train(data="coco128.yaml", epochs=100) # train the model

+```

diff --git a/ultralytics/models/v3/yolov3-spp.yaml b/ultralytics/models/v3/yolov3-spp.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..5d6794f1d02e16563355c26aab7c96967941c92e

--- /dev/null

+++ b/ultralytics/models/v3/yolov3-spp.yaml

@@ -0,0 +1,47 @@

+# Ultralytics YOLO 🚀, GPL-3.0 license

+

+# Parameters

+nc: 80 # number of classes

+depth_multiple: 1.0 # model depth multiple

+width_multiple: 1.0 # layer channel multiple

+

+# darknet53 backbone

+backbone:

+ # [from, number, module, args]

+ [[-1, 1, Conv, [32, 3, 1]], # 0

+ [-1, 1, Conv, [64, 3, 2]], # 1-P1/2

+ [-1, 1, Bottleneck, [64]],

+ [-1, 1, Conv, [128, 3, 2]], # 3-P2/4

+ [-1, 2, Bottleneck, [128]],

+ [-1, 1, Conv, [256, 3, 2]], # 5-P3/8

+ [-1, 8, Bottleneck, [256]],

+ [-1, 1, Conv, [512, 3, 2]], # 7-P4/16

+ [-1, 8, Bottleneck, [512]],

+ [-1, 1, Conv, [1024, 3, 2]], # 9-P5/32

+ [-1, 4, Bottleneck, [1024]], # 10

+ ]

+

+# YOLOv3-SPP head

+head:

+ [[-1, 1, Bottleneck, [1024, False]],

+ [-1, 1, SPP, [512, [5, 9, 13]]],

+ [-1, 1, Conv, [1024, 3, 1]],

+ [-1, 1, Conv, [512, 1, 1]],

+ [-1, 1, Conv, [1024, 3, 1]], # 15 (P5/32-large)

+

+ [-2, 1, Conv, [256, 1, 1]],

+ [-1, 1, nn.Upsample, [None, 2, 'nearest']],

+ [[-1, 8], 1, Concat, [1]], # cat backbone P4

+ [-1, 1, Bottleneck, [512, False]],

+ [-1, 1, Bottleneck, [512, False]],

+ [-1, 1, Conv, [256, 1, 1]],

+ [-1, 1, Conv, [512, 3, 1]], # 22 (P4/16-medium)

+

+ [-2, 1, Conv, [128, 1, 1]],

+ [-1, 1, nn.Upsample, [None, 2, 'nearest']],

+ [[-1, 6], 1, Concat, [1]], # cat backbone P3

+ [-1, 1, Bottleneck, [256, False]],

+ [-1, 2, Bottleneck, [256, False]], # 27 (P3/8-small)

+

+ [[27, 22, 15], 1, Detect, [nc]], # Detect(P3, P4, P5)

+ ]

diff --git a/ultralytics/models/v3/yolov3-tiny.yaml b/ultralytics/models/v3/yolov3-tiny.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..d7921d37800c92832c52ba506a902ac82ea96a24

--- /dev/null

+++ b/ultralytics/models/v3/yolov3-tiny.yaml

@@ -0,0 +1,38 @@

+# Ultralytics YOLO 🚀, GPL-3.0 license

+

+# Parameters

+nc: 80 # number of classes

+depth_multiple: 1.0 # model depth multiple

+width_multiple: 1.0 # layer channel multiple

+

+# YOLOv3-tiny backbone

+backbone:

+ # [from, number, module, args]

+ [[-1, 1, Conv, [16, 3, 1]], # 0

+ [-1, 1, nn.MaxPool2d, [2, 2, 0]], # 1-P1/2

+ [-1, 1, Conv, [32, 3, 1]],

+ [-1, 1, nn.MaxPool2d, [2, 2, 0]], # 3-P2/4

+ [-1, 1, Conv, [64, 3, 1]],

+ [-1, 1, nn.MaxPool2d, [2, 2, 0]], # 5-P3/8

+ [-1, 1, Conv, [128, 3, 1]],

+ [-1, 1, nn.MaxPool2d, [2, 2, 0]], # 7-P4/16

+ [-1, 1, Conv, [256, 3, 1]],

+ [-1, 1, nn.MaxPool2d, [2, 2, 0]], # 9-P5/32

+ [-1, 1, Conv, [512, 3, 1]],

+ [-1, 1, nn.ZeroPad2d, [[0, 1, 0, 1]]], # 11

+ [-1, 1, nn.MaxPool2d, [2, 1, 0]], # 12

+ ]

+

+# YOLOv3-tiny head

+head:

+ [[-1, 1, Conv, [1024, 3, 1]],

+ [-1, 1, Conv, [256, 1, 1]],

+ [-1, 1, Conv, [512, 3, 1]], # 15 (P5/32-large)

+

+ [-2, 1, Conv, [128, 1, 1]],

+ [-1, 1, nn.Upsample, [None, 2, 'nearest']],

+ [[-1, 8], 1, Concat, [1]], # cat backbone P4

+ [-1, 1, Conv, [256, 3, 1]], # 19 (P4/16-medium)

+

+ [[19, 15], 1, Detect, [nc]], # Detect(P4, P5)

+ ]

diff --git a/ultralytics/models/v3/yolov3.yaml b/ultralytics/models/v3/yolov3.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..3ecb642457ebfa2dea684ea714837da4a879ada9

--- /dev/null

+++ b/ultralytics/models/v3/yolov3.yaml

@@ -0,0 +1,47 @@

+# Ultralytics YOLO 🚀, GPL-3.0 license

+

+# Parameters

+nc: 80 # number of classes

+depth_multiple: 1.0 # model depth multiple

+width_multiple: 1.0 # layer channel multiple

+

+# darknet53 backbone

+backbone:

+ # [from, number, module, args]

+ [[-1, 1, Conv, [32, 3, 1]], # 0

+ [-1, 1, Conv, [64, 3, 2]], # 1-P1/2

+ [-1, 1, Bottleneck, [64]],

+ [-1, 1, Conv, [128, 3, 2]], # 3-P2/4

+ [-1, 2, Bottleneck, [128]],

+ [-1, 1, Conv, [256, 3, 2]], # 5-P3/8

+ [-1, 8, Bottleneck, [256]],

+ [-1, 1, Conv, [512, 3, 2]], # 7-P4/16

+ [-1, 8, Bottleneck, [512]],

+ [-1, 1, Conv, [1024, 3, 2]], # 9-P5/32

+ [-1, 4, Bottleneck, [1024]], # 10

+ ]

+

+# YOLOv3 head

+head:

+ [[-1, 1, Bottleneck, [1024, False]],

+ [-1, 1, Conv, [512, 1, 1]],

+ [-1, 1, Conv, [1024, 3, 1]],

+ [-1, 1, Conv, [512, 1, 1]],

+ [-1, 1, Conv, [1024, 3, 1]], # 15 (P5/32-large)

+

+ [-2, 1, Conv, [256, 1, 1]],

+ [-1, 1, nn.Upsample, [None, 2, 'nearest']],

+ [[-1, 8], 1, Concat, [1]], # cat backbone P4

+ [-1, 1, Bottleneck, [512, False]],

+ [-1, 1, Bottleneck, [512, False]],

+ [-1, 1, Conv, [256, 1, 1]],

+ [-1, 1, Conv, [512, 3, 1]], # 22 (P4/16-medium)

+

+ [-2, 1, Conv, [128, 1, 1]],

+ [-1, 1, nn.Upsample, [None, 2, 'nearest']],

+ [[-1, 6], 1, Concat, [1]], # cat backbone P3

+ [-1, 1, Bottleneck, [256, False]],

+ [-1, 2, Bottleneck, [256, False]], # 27 (P3/8-small)

+

+ [[27, 22, 15], 1, Detect, [nc]], # Detect(P3, P4, P5)

+ ]

diff --git a/ultralytics/models/v5/yolov5l.yaml b/ultralytics/models/v5/yolov5l.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..ca3a85d364e84c90cf6df6457ecd51791baa7040

--- /dev/null

+++ b/ultralytics/models/v5/yolov5l.yaml

@@ -0,0 +1,44 @@

+# Ultralytics YOLO 🚀, GPL-3.0 license

+

+# Parameters

+nc: 80 # number of classes

+depth_multiple: 1.0 # model depth multiple

+width_multiple: 1.0 # layer channel multiple

+

+# YOLOv5 v6.0 backbone

+backbone:

+ # [from, number, module, args]

+ [[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2

+ [-1, 1, Conv, [128, 3, 2]], # 1-P2/4

+ [-1, 3, C3, [128]],

+ [-1, 1, Conv, [256, 3, 2]], # 3-P3/8

+ [-1, 6, C3, [256]],

+ [-1, 1, Conv, [512, 3, 2]], # 5-P4/16

+ [-1, 9, C3, [512]],

+ [-1, 1, Conv, [1024, 3, 2]], # 7-P5/32

+ [-1, 3, C3, [1024]],

+ [-1, 1, SPPF, [1024, 5]], # 9

+ ]

+

+# YOLOv5 v6.0 head

+head:

+ [[-1, 1, Conv, [512, 1, 1]],

+ [-1, 1, nn.Upsample, [None, 2, 'nearest']],

+ [[-1, 6], 1, Concat, [1]], # cat backbone P4

+ [-1, 3, C3, [512, False]], # 13

+

+ [-1, 1, Conv, [256, 1, 1]],

+ [-1, 1, nn.Upsample, [None, 2, 'nearest']],

+ [[-1, 4], 1, Concat, [1]], # cat backbone P3

+ [-1, 3, C3, [256, False]], # 17 (P3/8-small)

+

+ [-1, 1, Conv, [256, 3, 2]],

+ [[-1, 14], 1, Concat, [1]], # cat head P4

+ [-1, 3, C3, [512, False]], # 20 (P4/16-medium)

+

+ [-1, 1, Conv, [512, 3, 2]],

+ [[-1, 10], 1, Concat, [1]], # cat head P5

+ [-1, 3, C3, [1024, False]], # 23 (P5/32-large)

+

+ [[17, 20, 23], 1, Detect, [nc]], # Detect(P3, P4, P5)

+ ]

\ No newline at end of file

diff --git a/ultralytics/models/v5/yolov5m.yaml b/ultralytics/models/v5/yolov5m.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..fddcc63b744a717f3e12cebd4508fbd3778bd188

--- /dev/null

+++ b/ultralytics/models/v5/yolov5m.yaml

@@ -0,0 +1,44 @@

+# Ultralytics YOLO 🚀, GPL-3.0 license

+

+# Parameters

+nc: 80 # number of classes

+depth_multiple: 0.67 # model depth multiple

+width_multiple: 0.75 # layer channel multiple

+

+# YOLOv5 v6.0 backbone

+backbone:

+ # [from, number, module, args]

+ [[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2

+ [-1, 1, Conv, [128, 3, 2]], # 1-P2/4

+ [-1, 3, C3, [128]],

+ [-1, 1, Conv, [256, 3, 2]], # 3-P3/8

+ [-1, 6, C3, [256]],

+ [-1, 1, Conv, [512, 3, 2]], # 5-P4/16

+ [-1, 9, C3, [512]],

+ [-1, 1, Conv, [1024, 3, 2]], # 7-P5/32

+ [-1, 3, C3, [1024]],

+ [-1, 1, SPPF, [1024, 5]], # 9

+ ]

+

+# YOLOv5 v6.0 head

+head:

+ [[-1, 1, Conv, [512, 1, 1]],

+ [-1, 1, nn.Upsample, [None, 2, 'nearest']],

+ [[-1, 6], 1, Concat, [1]], # cat backbone P4

+ [-1, 3, C3, [512, False]], # 13

+

+ [-1, 1, Conv, [256, 1, 1]],

+ [-1, 1, nn.Upsample, [None, 2, 'nearest']],

+ [[-1, 4], 1, Concat, [1]], # cat backbone P3

+ [-1, 3, C3, [256, False]], # 17 (P3/8-small)

+

+ [-1, 1, Conv, [256, 3, 2]],

+ [[-1, 14], 1, Concat, [1]], # cat head P4

+ [-1, 3, C3, [512, False]], # 20 (P4/16-medium)

+

+ [-1, 1, Conv, [512, 3, 2]],

+ [[-1, 10], 1, Concat, [1]], # cat head P5

+ [-1, 3, C3, [1024, False]], # 23 (P5/32-large)

+

+ [[17, 20, 23], 1, Detect, [nc]], # Detect(P3, P4, P5)

+ ]

\ No newline at end of file

diff --git a/ultralytics/models/v5/yolov5n.yaml b/ultralytics/models/v5/yolov5n.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..259e1cf4bd4ad9f8391f2876273b7c82849c42db

--- /dev/null

+++ b/ultralytics/models/v5/yolov5n.yaml

@@ -0,0 +1,44 @@

+# Ultralytics YOLO 🚀, GPL-3.0 license

+

+# Parameters

+nc: 80 # number of classes

+depth_multiple: 0.33 # model depth multiple

+width_multiple: 0.25 # layer channel multiple

+

+# YOLOv5 v6.0 backbone

+backbone:

+ # [from, number, module, args]

+ [[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2

+ [-1, 1, Conv, [128, 3, 2]], # 1-P2/4

+ [-1, 3, C3, [128]],

+ [-1, 1, Conv, [256, 3, 2]], # 3-P3/8

+ [-1, 6, C3, [256]],

+ [-1, 1, Conv, [512, 3, 2]], # 5-P4/16

+ [-1, 9, C3, [512]],

+ [-1, 1, Conv, [1024, 3, 2]], # 7-P5/32

+ [-1, 3, C3, [1024]],

+ [-1, 1, SPPF, [1024, 5]], # 9

+ ]

+

+# YOLOv5 v6.0 head

+head:

+ [[-1, 1, Conv, [512, 1, 1]],

+ [-1, 1, nn.Upsample, [None, 2, 'nearest']],

+ [[-1, 6], 1, Concat, [1]], # cat backbone P4

+ [-1, 3, C3, [512, False]], # 13

+

+ [-1, 1, Conv, [256, 1, 1]],

+ [-1, 1, nn.Upsample, [None, 2, 'nearest']],

+ [[-1, 4], 1, Concat, [1]], # cat backbone P3

+ [-1, 3, C3, [256, False]], # 17 (P3/8-small)

+

+ [-1, 1, Conv, [256, 3, 2]],

+ [[-1, 14], 1, Concat, [1]], # cat head P4

+ [-1, 3, C3, [512, False]], # 20 (P4/16-medium)

+

+ [-1, 1, Conv, [512, 3, 2]],

+ [[-1, 10], 1, Concat, [1]], # cat head P5

+ [-1, 3, C3, [1024, False]], # 23 (P5/32-large)

+

+ [[17, 20, 23], 1, Detect, [nc]], # Detect(P3, P4, P5)

+ ]

\ No newline at end of file

diff --git a/ultralytics/models/v5/yolov5s.yaml b/ultralytics/models/v5/yolov5s.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..9e63349f9aa68d654bc9e55a19102e704ca32de9

--- /dev/null

+++ b/ultralytics/models/v5/yolov5s.yaml

@@ -0,0 +1,45 @@

+# Ultralytics YOLO 🚀, GPL-3.0 license

+

+# Parameters

+nc: 80 # number of classes

+depth_multiple: 0.33 # model depth multiple

+width_multiple: 0.50 # layer channel multiple

+

+

+# YOLOv5 v6.0 backbone

+backbone:

+ # [from, number, module, args]

+ [[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2

+ [-1, 1, Conv, [128, 3, 2]], # 1-P2/4

+ [-1, 3, C3, [128]],

+ [-1, 1, Conv, [256, 3, 2]], # 3-P3/8

+ [-1, 6, C3, [256]],

+ [-1, 1, Conv, [512, 3, 2]], # 5-P4/16

+ [-1, 9, C3, [512]],

+ [-1, 1, Conv, [1024, 3, 2]], # 7-P5/32

+ [-1, 3, C3, [1024]],

+ [-1, 1, SPPF, [1024, 5]], # 9

+ ]

+

+# YOLOv5 v6.0 head

+head:

+ [[-1, 1, Conv, [512, 1, 1]],

+ [-1, 1, nn.Upsample, [None, 2, 'nearest']],

+ [[-1, 6], 1, Concat, [1]], # cat backbone P4

+ [-1, 3, C3, [512, False]], # 13

+

+ [-1, 1, Conv, [256, 1, 1]],

+ [-1, 1, nn.Upsample, [None, 2, 'nearest']],

+ [[-1, 4], 1, Concat, [1]], # cat backbone P3

+ [-1, 3, C3, [256, False]], # 17 (P3/8-small)

+

+ [-1, 1, Conv, [256, 3, 2]],

+ [[-1, 14], 1, Concat, [1]], # cat head P4

+ [-1, 3, C3, [512, False]], # 20 (P4/16-medium)

+

+ [-1, 1, Conv, [512, 3, 2]],

+ [[-1, 10], 1, Concat, [1]], # cat head P5

+ [-1, 3, C3, [1024, False]], # 23 (P5/32-large)

+

+ [[17, 20, 23], 1, Detect, [nc]], # Detect(P3, P4, P5)

+ ]

\ No newline at end of file

diff --git a/ultralytics/models/v5/yolov5x.yaml b/ultralytics/models/v5/yolov5x.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..8217affcb61800e70f29bd30b08fb980c9254b19

--- /dev/null

+++ b/ultralytics/models/v5/yolov5x.yaml

@@ -0,0 +1,44 @@

+# Ultralytics YOLO 🚀, GPL-3.0 license

+

+# Parameters

+nc: 80 # number of classes

+depth_multiple: 1.33 # model depth multiple

+width_multiple: 1.25 # layer channel multiple

+

+# YOLOv5 v6.0 backbone

+backbone:

+ # [from, number, module, args]

+ [[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2

+ [-1, 1, Conv, [128, 3, 2]], # 1-P2/4

+ [-1, 3, C3, [128]],

+ [-1, 1, Conv, [256, 3, 2]], # 3-P3/8

+ [-1, 6, C3, [256]],

+ [-1, 1, Conv, [512, 3, 2]], # 5-P4/16

+ [-1, 9, C3, [512]],

+ [-1, 1, Conv, [1024, 3, 2]], # 7-P5/32

+ [-1, 3, C3, [1024]],

+ [-1, 1, SPPF, [1024, 5]], # 9

+ ]

+

+# YOLOv5 v6.0 head

+head:

+ [[-1, 1, Conv, [512, 1, 1]],

+ [-1, 1, nn.Upsample, [None, 2, 'nearest']],

+ [[-1, 6], 1, Concat, [1]], # cat backbone P4

+ [-1, 3, C3, [512, False]], # 13

+

+ [-1, 1, Conv, [256, 1, 1]],

+ [-1, 1, nn.Upsample, [None, 2, 'nearest']],

+ [[-1, 4], 1, Concat, [1]], # cat backbone P3

+ [-1, 3, C3, [256, False]], # 17 (P3/8-small)

+

+ [-1, 1, Conv, [256, 3, 2]],

+ [[-1, 14], 1, Concat, [1]], # cat head P4

+ [-1, 3, C3, [512, False]], # 20 (P4/16-medium)

+

+ [-1, 1, Conv, [512, 3, 2]],

+ [[-1, 10], 1, Concat, [1]], # cat head P5

+ [-1, 3, C3, [1024, False]], # 23 (P5/32-large)

+

+ [[17, 20, 23], 1, Detect, [nc]], # Detect(P3, P4, P5)

+ ]

\ No newline at end of file

diff --git a/ultralytics/models/v8/cls/yolov8l-cls.yaml b/ultralytics/models/v8/cls/yolov8l-cls.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..bf981a8434cb9ae0d66f31c539fbfa2cbdda2dc7

--- /dev/null

+++ b/ultralytics/models/v8/cls/yolov8l-cls.yaml

@@ -0,0 +1,23 @@

+# Ultralytics YOLO 🚀, GPL-3.0 license

+

+# Parameters

+nc: 1000 # number of classes

+depth_multiple: 1.00 # scales module repeats

+width_multiple: 1.00 # scales convolution channels

+

+# YOLOv8.0l backbone

+backbone:

+ # [from, repeats, module, args]

+ - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2

+ - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4

+ - [-1, 3, C2f, [128, True]]

+ - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8

+ - [-1, 6, C2f, [256, True]]

+ - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16

+ - [-1, 6, C2f, [512, True]]

+ - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32

+ - [-1, 3, C2f, [1024, True]]

+

+# YOLOv8.0l head

+head:

+ - [-1, 1, Classify, [nc]]

diff --git a/ultralytics/models/v8/cls/yolov8m-cls.yaml b/ultralytics/models/v8/cls/yolov8m-cls.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..7e91894ce7613efc0cf9fbac8b3800825e42a927

--- /dev/null

+++ b/ultralytics/models/v8/cls/yolov8m-cls.yaml

@@ -0,0 +1,23 @@

+# Ultralytics YOLO 🚀, GPL-3.0 license

+

+# Parameters

+nc: 1000 # number of classes

+depth_multiple: 0.67 # scales module repeats

+width_multiple: 0.75 # scales convolution channels

+

+# YOLOv8.0m backbone

+backbone:

+ # [from, repeats, module, args]

+ - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2

+ - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4

+ - [-1, 3, C2f, [128, True]]

+ - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8

+ - [-1, 6, C2f, [256, True]]

+ - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16

+ - [-1, 6, C2f, [512, True]]

+ - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32

+ - [-1, 3, C2f, [1024, True]]

+

+# YOLOv8.0m head

+head:

+ - [-1, 1, Classify, [nc]]

diff --git a/ultralytics/models/v8/cls/yolov8n-cls.yaml b/ultralytics/models/v8/cls/yolov8n-cls.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..29be2264b9f8e801d8b1dc044dd8c4cdf4cdc700

--- /dev/null

+++ b/ultralytics/models/v8/cls/yolov8n-cls.yaml

@@ -0,0 +1,23 @@

+# Ultralytics YOLO 🚀, GPL-3.0 license

+

+# Parameters

+nc: 1000 # number of classes

+depth_multiple: 0.33 # scales module repeats

+width_multiple: 0.25 # scales convolution channels

+

+# YOLOv8.0n backbone

+backbone:

+ # [from, repeats, module, args]

+ - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2

+ - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4

+ - [-1, 3, C2f, [128, True]]

+ - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8

+ - [-1, 6, C2f, [256, True]]

+ - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16

+ - [-1, 6, C2f, [512, True]]

+ - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32

+ - [-1, 3, C2f, [1024, True]]

+

+# YOLOv8.0n head

+head:

+ - [-1, 1, Classify, [nc]]

diff --git a/ultralytics/models/v8/cls/yolov8s-cls.yaml b/ultralytics/models/v8/cls/yolov8s-cls.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..00ddc55afe8ae8b4693399af29869ee50e6c50d3

--- /dev/null

+++ b/ultralytics/models/v8/cls/yolov8s-cls.yaml

@@ -0,0 +1,23 @@

+# Ultralytics YOLO 🚀, GPL-3.0 license

+

+# Parameters

+nc: 1000 # number of classes

+depth_multiple: 0.33 # scales module repeats

+width_multiple: 0.50 # scales convolution channels

+

+# YOLOv8.0s backbone

+backbone:

+ # [from, repeats, module, args]

+ - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2

+ - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4

+ - [-1, 3, C2f, [128, True]]

+ - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8

+ - [-1, 6, C2f, [256, True]]

+ - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16

+ - [-1, 6, C2f, [512, True]]

+ - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32

+ - [-1, 3, C2f, [1024, True]]

+

+# YOLOv8.0s head

+head:

+ - [-1, 1, Classify, [nc]]

diff --git a/ultralytics/models/v8/cls/yolov8x-cls.yaml b/ultralytics/models/v8/cls/yolov8x-cls.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..46c75d5a2a04b59632aa25c52602a9ada162c0e9

--- /dev/null

+++ b/ultralytics/models/v8/cls/yolov8x-cls.yaml

@@ -0,0 +1,23 @@

+# Ultralytics YOLO 🚀, GPL-3.0 license

+

+# Parameters

+nc: 1000 # number of classes

+depth_multiple: 1.00 # scales module repeats

+width_multiple: 1.25 # scales convolution channels

+

+# YOLOv8.0x backbone

+backbone:

+ # [from, repeats, module, args]

+ - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2

+ - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4

+ - [-1, 3, C2f, [128, True]]

+ - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8

+ - [-1, 6, C2f, [256, True]]

+ - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16

+ - [-1, 6, C2f, [512, True]]

+ - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32

+ - [-1, 3, C2f, [1024, True]]

+

+# YOLOv8.0x head

+head:

+ - [-1, 1, Classify, [nc]]

diff --git a/ultralytics/models/v8/seg/yolov8l-seg.yaml b/ultralytics/models/v8/seg/yolov8l-seg.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..235dc761eb3680955c33887000dc6ba8e32c8cd4

--- /dev/null

+++ b/ultralytics/models/v8/seg/yolov8l-seg.yaml

@@ -0,0 +1,40 @@

+# Ultralytics YOLO 🚀, GPL-3.0 license

+

+# Parameters

+nc: 80 # number of classes

+depth_multiple: 1.00 # scales module repeats

+width_multiple: 1.00 # scales convolution channels

+

+# YOLOv8.0l backbone

+backbone:

+ # [from, repeats, module, args]

+ - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2

+ - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4

+ - [-1, 3, C2f, [128, True]]

+ - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8

+ - [-1, 6, C2f, [256, True]]

+ - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16

+ - [-1, 6, C2f, [512, True]]

+ - [-1, 1, Conv, [512, 3, 2]] # 7-P5/32

+ - [-1, 3, C2f, [512, True]]

+ - [-1, 1, SPPF, [512, 5]] # 9

+

+# YOLOv8.0l head

+head:

+ - [-1, 1, nn.Upsample, [None, 2, 'nearest']]

+ - [[-1, 6], 1, Concat, [1]] # cat backbone P4

+ - [-1, 3, C2f, [512]] # 12

+

+ - [-1, 1, nn.Upsample, [None, 2, 'nearest']]

+ - [[-1, 4], 1, Concat, [1]] # cat backbone P3

+ - [-1, 3, C2f, [256]] # 15 (P3/8-small)

+

+ - [-1, 1, Conv, [256, 3, 2]]

+ - [[-1, 12], 1, Concat, [1]] # cat head P4

+ - [-1, 3, C2f, [512]] # 18 (P4/16-medium)

+

+ - [-1, 1, Conv, [512, 3, 2]]

+ - [[-1, 9], 1, Concat, [1]] # cat head P5

+ - [-1, 3, C2f, [512]] # 21 (P5/32-large)

+

+ - [[15, 18, 21], 1, Segment, [nc, 32, 256]] # Segment(P3, P4, P5)

diff --git a/ultralytics/models/v8/seg/yolov8m-seg.yaml b/ultralytics/models/v8/seg/yolov8m-seg.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..17c07f6bb0089de42190d467676c2434eed64bdc

--- /dev/null

+++ b/ultralytics/models/v8/seg/yolov8m-seg.yaml

@@ -0,0 +1,40 @@

+# Ultralytics YOLO 🚀, GPL-3.0 license

+

+# Parameters

+nc: 80 # number of classes

+depth_multiple: 0.67 # scales module repeats

+width_multiple: 0.75 # scales convolution channels

+

+# YOLOv8.0m backbone

+backbone:

+ # [from, repeats, module, args]

+ - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2

+ - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4

+ - [-1, 3, C2f, [128, True]]

+ - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8

+ - [-1, 6, C2f, [256, True]]

+ - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16

+ - [-1, 6, C2f, [512, True]]

+ - [-1, 1, Conv, [768, 3, 2]] # 7-P5/32

+ - [-1, 3, C2f, [768, True]]

+ - [-1, 1, SPPF, [768, 5]] # 9

+

+# YOLOv8.0m head

+head:

+ - [-1, 1, nn.Upsample, [None, 2, 'nearest']]

+ - [[-1, 6], 1, Concat, [1]] # cat backbone P4

+ - [-1, 3, C2f, [512]] # 12

+

+ - [-1, 1, nn.Upsample, [None, 2, 'nearest']]

+ - [[-1, 4], 1, Concat, [1]] # cat backbone P3

+ - [-1, 3, C2f, [256]] # 15 (P3/8-small)

+

+ - [-1, 1, Conv, [256, 3, 2]]

+ - [[-1, 12], 1, Concat, [1]] # cat head P4

+ - [-1, 3, C2f, [512]] # 18 (P4/16-medium)

+

+ - [-1, 1, Conv, [512, 3, 2]]

+ - [[-1, 9], 1, Concat, [1]] # cat head P5

+ - [-1, 3, C2f, [768]] # 21 (P5/32-large)

+

+ - [[15, 18, 21], 1, Segment, [nc, 32, 256]] # Segment(P3, P4, P5)

diff --git a/ultralytics/models/v8/seg/yolov8n-seg.yaml b/ultralytics/models/v8/seg/yolov8n-seg.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..ffecc9d9ba366d075a8655dba84ebd117a14afaf

--- /dev/null

+++ b/ultralytics/models/v8/seg/yolov8n-seg.yaml

@@ -0,0 +1,40 @@

+# Ultralytics YOLO 🚀, GPL-3.0 license

+

+# Parameters

+nc: 80 # number of classes

+depth_multiple: 0.33 # scales module repeats

+width_multiple: 0.25 # scales convolution channels

+

+# YOLOv8.0n backbone

+backbone:

+ # [from, repeats, module, args]

+ - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2

+ - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4

+ - [-1, 3, C2f, [128, True]]

+ - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8

+ - [-1, 6, C2f, [256, True]]

+ - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16

+ - [-1, 6, C2f, [512, True]]

+ - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32

+ - [-1, 3, C2f, [1024, True]]

+ - [-1, 1, SPPF, [1024, 5]] # 9

+

+# YOLOv8.0n head

+head:

+ - [-1, 1, nn.Upsample, [None, 2, 'nearest']]

+ - [[-1, 6], 1, Concat, [1]] # cat backbone P4

+ - [-1, 3, C2f, [512]] # 12

+

+ - [-1, 1, nn.Upsample, [None, 2, 'nearest']]

+ - [[-1, 4], 1, Concat, [1]] # cat backbone P3

+ - [-1, 3, C2f, [256]] # 15 (P3/8-small)

+

+ - [-1, 1, Conv, [256, 3, 2]]

+ - [[-1, 12], 1, Concat, [1]] # cat head P4

+ - [-1, 3, C2f, [512]] # 18 (P4/16-medium)

+

+ - [-1, 1, Conv, [512, 3, 2]]

+ - [[-1, 9], 1, Concat, [1]] # cat head P5

+ - [-1, 3, C2f, [1024]] # 21 (P5/32-large)

+

+ - [[15, 18, 21], 1, Segment, [nc, 32, 256]] # Segment(P3, P4, P5)

diff --git a/ultralytics/models/v8/seg/yolov8s-seg.yaml b/ultralytics/models/v8/seg/yolov8s-seg.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..dc828a1cd290871a6304e3cce47289d21c397c93

--- /dev/null

+++ b/ultralytics/models/v8/seg/yolov8s-seg.yaml

@@ -0,0 +1,40 @@

+# Ultralytics YOLO 🚀, GPL-3.0 license

+

+# Parameters

+nc: 80 # number of classes

+depth_multiple: 0.33 # scales module repeats

+width_multiple: 0.50 # scales convolution channels

+

+# YOLOv8.0s backbone

+backbone:

+ # [from, repeats, module, args]

+ - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2

+ - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4

+ - [-1, 3, C2f, [128, True]]

+ - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8

+ - [-1, 6, C2f, [256, True]]

+ - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16

+ - [-1, 6, C2f, [512, True]]

+ - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32

+ - [-1, 3, C2f, [1024, True]]

+ - [-1, 1, SPPF, [1024, 5]] # 9

+

+# YOLOv8.0s head

+head:

+ - [-1, 1, nn.Upsample, [None, 2, 'nearest']]

+ - [[-1, 6], 1, Concat, [1]] # cat backbone P4

+ - [-1, 3, C2f, [512]] # 12

+

+ - [-1, 1, nn.Upsample, [None, 2, 'nearest']]

+ - [[-1, 4], 1, Concat, [1]] # cat backbone P3

+ - [-1, 3, C2f, [256]] # 15 (P3/8-small)

+

+ - [-1, 1, Conv, [256, 3, 2]]

+ - [[-1, 12], 1, Concat, [1]] # cat head P4

+ - [-1, 3, C2f, [512]] # 18 (P4/16-medium)

+

+ - [-1, 1, Conv, [512, 3, 2]]

+ - [[-1, 9], 1, Concat, [1]] # cat head P5

+ - [-1, 3, C2f, [1024]] # 21 (P5/32-large)

+

+ - [[15, 18, 21], 1, Segment, [nc, 32, 256]] # Segment(P3, P4, P5)

diff --git a/ultralytics/models/v8/seg/yolov8x-seg.yaml b/ultralytics/models/v8/seg/yolov8x-seg.yaml

new file mode 100644

index 0000000000000000000000000000000000000000..0572283c987401b0f1a270a9a129f40fa0f51860

--- /dev/null

+++ b/ultralytics/models/v8/seg/yolov8x-seg.yaml

@@ -0,0 +1,40 @@

+# Ultralytics YOLO 🚀, GPL-3.0 license

+

+# Parameters

+nc: 80 # number of classes