krishnapal2308 committed

Commit 3a2d1fe · 1 parent: 7c6be45

Adding Sample Images.

- __pycache__/inference_script.cpython-310.pyc +0 -0
- __pycache__/vit_gpt2.cpython-310.pyc +0 -0
- app.py +26 -4
- sample_images/1.jpg +0 -0
- sample_images/2.jpg +0 -0
- sample_images/3.jpg +0 -0
- sample_images/4.jpg +0 -0
- sample_images/5.jpg +0 -0
- sample_images/6.jpg +0 -0
- sample_images/7.jpg +0 -0

__pycache__/inference_script.cpython-310.pyc CHANGED
Binary files a/__pycache__/inference_script.cpython-310.pyc and b/__pycache__/inference_script.cpython-310.pyc differ

__pycache__/vit_gpt2.cpython-310.pyc CHANGED
Binary files a/__pycache__/vit_gpt2.cpython-310.pyc and b/__pycache__/vit_gpt2.cpython-310.pyc differ

app.py CHANGED

@@ -5,10 +5,13 @@ import inference_script
 import vit_gpt2
 import os
 import warnings
+
 warnings.filterwarnings('ignore')
 
 
 def process_image_and_generate_output(image, model_selection):
+    if image is None:
+        return "Please select an image", None
 
     if model_selection == ('Basic Model (Trained only for 15 epochs without any hyperparameter tuning, utilizing '
                            'inception v3)'):
@@ -36,12 +39,31 @@ def process_image_and_generate_output(image, model_selection):
     return pred_caption, audio_content
 
 
+# Define your sample images
+# sample_images = [os.path.join(os.path.dirname(__file__), 'sample_images/1.jpg'),
+#                  os.path.join(os.path.dirname(__file__), 'sample_images/2.jpg'),
+#                  os.path.join(os.path.dirname(__file__), 'sample_images/3.jpg'),
+#                  os.path.join(os.path.dirname(__file__), 'sample_images/4.jpg'), ]
+sample_images = [
+    ["sample_images/1.jpg"],
+    ["sample_images/2.jpg"],
+    ["sample_images/3.jpg"],
+    ["sample_images/4.jpg"]
+]
+
+# Create a dropdown to select sample image
+image_input = gr.Image(label="Upload Image", sources=['upload', 'webcam'])
+
+# Create a dropdown to choose the model
+model_selection_input = gr.Radio(["Basic Model (Trained only for 15 epochs without any hyperparameter "
+                                  "tuning, utilizing inception v3)",
+                                  "ViT-GPT2 (SOTA model for Image captioning)"],
+                                 label="Choose Model")
+
 iface = gr.Interface(fn=process_image_and_generate_output,
-                     inputs=[
-                             "tuning, utilizing inception v3)", "ViT-GPT2 (SOTA model for Image "
-                             "captioning)"], label="Choose "
-                             "Model")],
+                     inputs=[image_input, model_selection_input],
                      outputs=["text", "audio"],
+                     examples=sample_images,
                      title="Eye For Blind | Image Captioning & TTS",
                      description="To be added")
 
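The `image is None` guard added at the top of `process_image_and_generate_output` can be exercised without loading either model. The following is a minimal sketch, assuming a hypothetical `fake_caption` helper in place of the real captioning and text-to-speech calls, which this diff does not show:

```python
def fake_caption(image, model_selection):
    """Hypothetical stand-in for the real captioning + TTS calls (not in the diff)."""
    return f"caption from {model_selection}", b"audio-bytes"


def process_image_and_generate_output(image, model_selection):
    # Same guard as the commit: with no image, return a message for the
    # text output and None for the audio output instead of running a model.
    if image is None:
        return "Please select an image", None
    return fake_caption(image, model_selection)


# Submitting without an image yields the message and no audio.
print(process_image_and_generate_output(None, "ViT-GPT2"))
```

Returning a two-element tuple in the guard keeps the shape consistent with `outputs=["text", "audio"]`, so the early exit fills both outputs the same way the normal path does.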

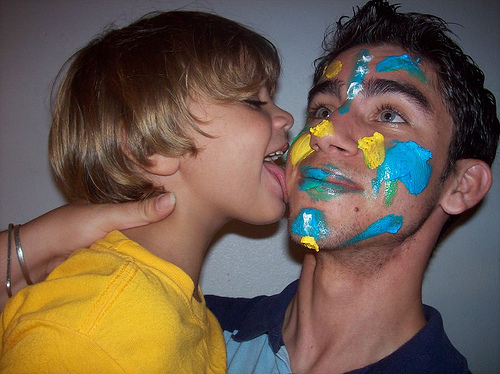

sample_images/1.jpg

ADDED

|

sample_images/2.jpg

ADDED

|

sample_images/3.jpg

ADDED

|

sample_images/4.jpg

ADDED

|

sample_images/5.jpg

ADDED

|

sample_images/6.jpg

ADDED

|

sample_images/7.jpg

ADDED

|
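The commit adds seven images, but the `sample_images` list in `app.py` references only the first four, using paths relative to the working directory. One possible alternative, sketched here rather than taken from the commit, is to build the list from the folder contents and resolve it relative to the script, as the commented-out `os.path.dirname(__file__)` lines hint. `build_examples` and its `default_model` parameter are hypothetical names introduced for illustration. The optional second value per row assumes each example row should supply one value per input component.

```python
from pathlib import Path


def build_examples(sample_dir, default_model=None):
    """Return one example row per .jpg file in sample_dir, sorted by path.

    Hypothetical helper: each row is a list holding the image path and,
    if default_model is given, that model choice as a second value.
    """
    directory = Path(sample_dir)
    if not directory.is_dir():
        return []  # no folder, no examples
    rows = []
    for path in sorted(directory.glob("*.jpg")):
        row = [str(path)]
        if default_model is not None:
            row.append(default_model)
        rows.append(row)
    return rows


# Resolve the folder next to this file; fall back to the working directory
# when __file__ is not defined (for example in an interactive session).
base_dir = Path(__file__).resolve().parent if "__file__" in globals() else Path.cwd()
sample_images = build_examples(base_dir / "sample_images")
```

The resulting nested list has the same shape as the hard-coded one in the diff and could be passed as `examples=sample_images`. Sorting is lexicographic, which is fine for `1.jpg` through `7.jpg` but would place `10.jpg` before `2.jpg`.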