Spaces:

No application file

No application file

Delete DenseAV

Browse files- DenseAV/.gitignore +0 -5

- DenseAV/LICENSE +0 -22

- DenseAV/README.md +0 -172

- DenseAV/__init__.py +0 -0

- DenseAV/demo.ipynb +0 -0

- DenseAV/denseav/__init__.py +0 -0

- DenseAV/denseav/aggregators.py +0 -517

- DenseAV/denseav/aligners.py +0 -300

- DenseAV/denseav/configs/av_align.yaml +0 -125

- DenseAV/denseav/constants.py +0 -12

- DenseAV/denseav/data/AVDatasets.py +0 -1249

- DenseAV/denseav/data/__init__.py +0 -0

- DenseAV/denseav/data/make_tarballs.py +0 -108

- DenseAV/denseav/eval_utils.py +0 -135

- DenseAV/denseav/evaluate.py +0 -87

- DenseAV/denseav/featurizers/AudioMAE.py +0 -570

- DenseAV/denseav/featurizers/CAVMAE.py +0 -1082

- DenseAV/denseav/featurizers/CLIP.py +0 -50

- DenseAV/denseav/featurizers/DAVENet.py +0 -162

- DenseAV/denseav/featurizers/DINO.py +0 -451

- DenseAV/denseav/featurizers/DINOv2.py +0 -49

- DenseAV/denseav/featurizers/Hubert.py +0 -70

- DenseAV/denseav/featurizers/ImageBind.py +0 -2033

- DenseAV/denseav/featurizers/__init__.py +0 -0

- DenseAV/denseav/plotting.py +0 -244

- DenseAV/denseav/saved_models.py +0 -262

- DenseAV/denseav/shared.py +0 -739

- DenseAV/denseav/train.py +0 -1213

- DenseAV/gradio_app.py +0 -196

- DenseAV/hubconf.py +0 -25

- DenseAV/samples/puppies.mp4 +0 -3

- DenseAV/setup.py +0 -37

DenseAV/.gitignore

DELETED

|

@@ -1,5 +0,0 @@

|

|

| 1 |

-

# Created by .ignore support plugin (hsz.mobi)

|

| 2 |

-

results/attention/*

|

| 3 |

-

results/features/*

|

| 4 |

-

|

| 5 |

-

.env

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

DenseAV/LICENSE

DELETED

|

@@ -1,22 +0,0 @@

|

|

| 1 |

-

MIT License

|

| 2 |

-

|

| 3 |

-

Copyright (c) Mark Hamilton. All rights reserved.

|

| 4 |

-

|

| 5 |

-

Permission is hereby granted, free of charge, to any person obtaining a

|

| 6 |

-

copy of this software and associated documentation files (the

|

| 7 |

-

"Software"), to deal in the Software without restriction, including

|

| 8 |

-

without limitation the rights to use, copy, modify, merge, publish,

|

| 9 |

-

distribute, sublicense, and/or sell copies of the Software, and to

|

| 10 |

-

permit persons to whom the Software is furnished to do so, subject to

|

| 11 |

-

the following conditions:

|

| 12 |

-

|

| 13 |

-

The above copyright notice and this permission notice shall be included

|

| 14 |

-

in all copies or substantial portions of the Software.

|

| 15 |

-

|

| 16 |

-

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS

|

| 17 |

-

OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF

|

| 18 |

-

MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND

|

| 19 |

-

NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE

|

| 20 |

-

LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION

|

| 21 |

-

OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION

|

| 22 |

-

WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

DenseAV/README.md

DELETED

|

@@ -1,172 +0,0 @@

|

|

| 1 |

-

# Separating the "Chirp" from the "Chat": Self-supervised Visual Grounding of Sound and Language

|

| 2 |

-

### CVPR 2024

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

[](https://aka.ms/denseav) [](https://arxiv.org/abs/2406.05629) [](https://colab.research.google.com/github/mhamilton723/DenseAV/blob/main/demo.ipynb)

|

| 6 |

-

|

| 7 |

-

[](https://huggingface.co/spaces/mhamilton723/DenseAV)

|

| 8 |

-

|

| 9 |

-

[//]: # ([](https://huggingface.co/papers/2403.10516))

|

| 10 |

-

[](https://paperswithcode.com/sota/speech-prompted-semantic-segmentation-on?p=separating-the-chirp-from-the-chat-self)

|

| 11 |

-

[](https://paperswithcode.com/sota/sound-prompted-semantic-segmentation-on?p=separating-the-chirp-from-the-chat-self)

|

| 12 |

-

|

| 13 |

-

|

| 14 |

-

[Mark Hamilton](https://mhamilton.net/),

|

| 15 |

-

[Andrew Zisserman](https://www.robots.ox.ac.uk/~az/),

|

| 16 |

-

[John R. Hershey](https://research.google/people/john-hershey/),

|

| 17 |

-

[William T. Freeman](https://billf.mit.edu/about/bio)

|

| 18 |

-

|

| 19 |

-

|

| 20 |

-

|

| 21 |

-

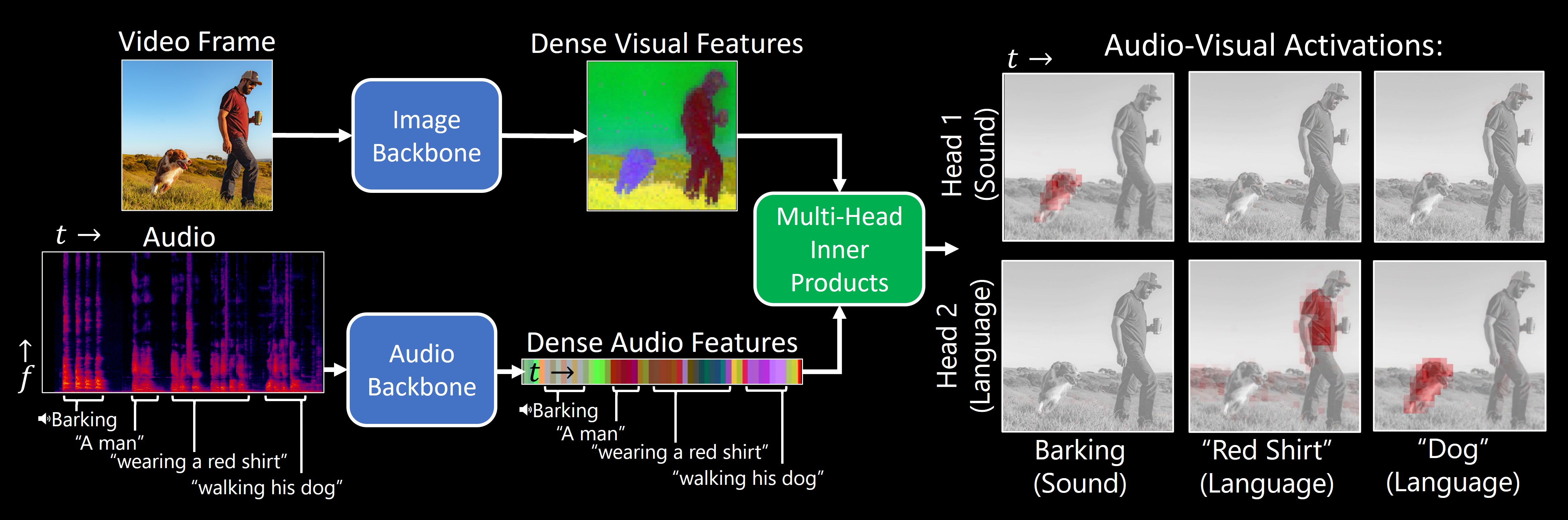

**TL;DR**:Our model, DenseAV, learns the meaning of words and the location of sounds (visual grounding) without supervision or text.

|

| 22 |

-

|

| 23 |

-

https://github.com/mhamilton723/DenseAV/assets/6456637/ba908ab5-9618-42f9-8d7a-30ecb009091f

|

| 24 |

-

|

| 25 |

-

|

| 26 |

-

## Contents

|

| 27 |

-

<!--ts-->

|

| 28 |

-

* [Install](#install)

|

| 29 |

-

* [Model Zoo](#model-zoo)

|

| 30 |

-

* [Getting Datasets](#getting-atasets)

|

| 31 |

-

* [Evaluate Models](#evaluate-models)

|

| 32 |

-

* [Train a Model](#train-model)

|

| 33 |

-

* [Local Gradio Demo](#local-gradio-demo)

|

| 34 |

-

* [Coming Soon](coming-soon)

|

| 35 |

-

* [Citation](#citation)

|

| 36 |

-

* [Contact](#contact)

|

| 37 |

-

<!--te-->

|

| 38 |

-

|

| 39 |

-

## Install

|

| 40 |

-

|

| 41 |

-

To use DenseAV locally clone the repository:

|

| 42 |

-

|

| 43 |

-

```shell script

|

| 44 |

-

git clone https://github.com/mhamilton723/DenseAV.git

|

| 45 |

-

cd DenseAV

|

| 46 |

-

pip install -e .

|

| 47 |

-

```

|

| 48 |

-

|

| 49 |

-

|

| 50 |

-

## Model Zoo

|

| 51 |

-

|

| 52 |

-

To see examples of pretrained model usage please see our [Collab notebook](https://colab.research.google.com/github/mhamilton723/DenseAV/blob/main/demo.ipynb). We currently supply the following pretrained models:

|

| 53 |

-

|

| 54 |

-

| Model Name | Checkpoint | Torch Hub Repository | Torch Hub Name |

|

| 55 |

-

|-------------------------------|----------------------------------------------------------------------------------------------------------------------------------|----------------------|--------------------|

|

| 56 |

-

| Sound | [Download](https://marhamilresearch4.blob.core.windows.net/denseav-public/hub/denseav_sound.ckpt) | mhamilton723/DenseAV | sound |

|

| 57 |

-

| Language | [Download](https://marhamilresearch4.blob.core.windows.net/denseav-public/hub/denseav_language.ckpt) | mhamilton723/DenseAV | language |

|

| 58 |

-

| Sound + Language (Two Headed) | [Download](https://marhamilresearch4.blob.core.windows.net/denseav-public/hub/denseav_2head.ckpt) | mhamilton723/DenseAV | sound_and_language |

|

| 59 |

-

|

| 60 |

-

For example, to load the model trained on both sound and language:

|

| 61 |

-

|

| 62 |

-

```python

|

| 63 |

-

model = torch.hub.load("mhamilton723/DenseAV", 'sound_and_language')

|

| 64 |

-

```

|

| 65 |

-

|

| 66 |

-

### Load from HuggingFace

|

| 67 |

-

|

| 68 |

-

```python

|

| 69 |

-

from denseav.train import LitAVAligner

|

| 70 |

-

|

| 71 |

-

model1 = LitAVAligner.from_pretrained("mhamilton723/DenseAV-sound")

|

| 72 |

-

model2 = LitAVAligner.from_pretrained("mhamilton723/DenseAV-language")

|

| 73 |

-

model3 = LitAVAligner.from_pretrained("mhamilton723/DenseAV-sound-language")

|

| 74 |

-

```

|

| 75 |

-

|

| 76 |

-

|

| 77 |

-

## Getting Datasets

|

| 78 |

-

|

| 79 |

-

Our code assumes that all data lives in a common directory on your system, in these examples we use `/path/to/your/data`. Our code will often reference this directory as the `data_root`

|

| 80 |

-

|

| 81 |

-

### Speech and Sound Prompted ADE20K

|

| 82 |

-

|

| 83 |

-

To download our new Speech and Sound prompted ADE20K Dataset:

|

| 84 |

-

|

| 85 |

-

```bash

|

| 86 |

-

cd /path/to/your/data

|

| 87 |

-

wget https://marhamilresearch4.blob.core.windows.net/denseav-public/datasets/ADE20KSoundPrompted.zip

|

| 88 |

-

unzip ADE20KSoundPrompted.zip

|

| 89 |

-

wget https://marhamilresearch4.blob.core.windows.net/denseav-public/datasets/ADE20KSpeechPrompted.zip

|

| 90 |

-

unzip ADE20KSpeechPrompted.zip

|

| 91 |

-

```

|

| 92 |

-

|

| 93 |

-

### Places Audio

|

| 94 |

-

|

| 95 |

-

First download the places audio dataset from its [original source](https://groups.csail.mit.edu/sls/downloads/placesaudio/downloads.cgi).

|

| 96 |

-

|

| 97 |

-

To run the code the data will need to be processed to be of the form:

|

| 98 |

-

|

| 99 |

-

```

|

| 100 |

-

[Instructions coming soon]

|

| 101 |

-

```

|

| 102 |

-

|

| 103 |

-

### Audioset

|

| 104 |

-

|

| 105 |

-

Because of copyright issues we cannot make [Audioset](https://research.google.com/audioset/dataset/index.html) easily availible to download.

|

| 106 |

-

First download this dataset through appropriate means. [This other project](https://github.com/ktonal/audioset-downloader) appears to make this simple.

|

| 107 |

-

|

| 108 |

-

To run the code the data will need to be processed to be of the form:

|

| 109 |

-

|

| 110 |

-

```

|

| 111 |

-

[Instructions coming soon]

|

| 112 |

-

```

|

| 113 |

-

|

| 114 |

-

|

| 115 |

-

## Evaluate Models

|

| 116 |

-

|

| 117 |

-

To evaluate a trained model first clone the repository for

|

| 118 |

-

[local development](#local-development). Then run

|

| 119 |

-

|

| 120 |

-

```shell

|

| 121 |

-

cd featup

|

| 122 |

-

python evaluate.py

|

| 123 |

-

```

|

| 124 |

-

|

| 125 |

-

After evaluation, see the results in tensorboard's hparams tab.

|

| 126 |

-

|

| 127 |

-

```shell

|

| 128 |

-

cd ../logs/evaluate

|

| 129 |

-

tensorboard --logdir .

|

| 130 |

-

```

|

| 131 |

-

|

| 132 |

-

Then visit [https://localhost:6006](https://localhost:6006) and click on hparams to browse results. We report "advanced" speech metrics and "basic" sound metrics in our paper.

|

| 133 |

-

|

| 134 |

-

|

| 135 |

-

## Train a Model

|

| 136 |

-

|

| 137 |

-

```shell

|

| 138 |

-

cd denseav

|

| 139 |

-

python train.py

|

| 140 |

-

```

|

| 141 |

-

|

| 142 |

-

## Local Gradio Demo

|

| 143 |

-

|

| 144 |

-

To run our [HuggingFace Spaces hosted DenseAV demo](https://huggingface.co/spaces/mhamilton723/FeatUp) locally first install DenseAV for local development. Then run:

|

| 145 |

-

|

| 146 |

-

```shell

|

| 147 |

-

python gradio_app.py

|

| 148 |

-

```

|

| 149 |

-

|

| 150 |

-

Wait a few seconds for the demo to spin up, then navigate to [http://localhost:7860/](http://localhost:7860/) to view the demo.

|

| 151 |

-

|

| 152 |

-

|

| 153 |

-

## Coming Soon:

|

| 154 |

-

|

| 155 |

-

- Bigger models!

|

| 156 |

-

|

| 157 |

-

## Citation

|

| 158 |

-

|

| 159 |

-

```

|

| 160 |

-

@misc{hamilton2024separating,

|

| 161 |

-

title={Separating the "Chirp" from the "Chat": Self-supervised Visual Grounding of Sound and Language},

|

| 162 |

-

author={Mark Hamilton and Andrew Zisserman and John R. Hershey and William T. Freeman},

|

| 163 |

-

year={2024},

|

| 164 |

-

eprint={2406.05629},

|

| 165 |

-

archivePrefix={arXiv},

|

| 166 |

-

primaryClass={cs.CV}

|

| 167 |

-

}

|

| 168 |

-

```

|

| 169 |

-

|

| 170 |

-

## Contact

|

| 171 |

-

|

| 172 |

-

For feedback, questions, or press inquiries please contact [Mark Hamilton](mailto:[email protected])

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

DenseAV/__init__.py

DELETED

|

File without changes

|

DenseAV/demo.ipynb

DELETED

|

The diff for this file is too large to render.

See raw diff

|

|

|

DenseAV/denseav/__init__.py

DELETED

|

File without changes

|

DenseAV/denseav/aggregators.py

DELETED

|

@@ -1,517 +0,0 @@

|

|

| 1 |

-

from abc import abstractmethod

|

| 2 |

-

|

| 3 |

-

import math

|

| 4 |

-

import torch

|

| 5 |

-

import torch.nn as nn

|

| 6 |

-

import torch.nn.functional as F

|

| 7 |

-

from tqdm import tqdm

|

| 8 |

-

|

| 9 |

-

from denseav.constants import *

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

@torch.jit.script

|

| 13 |

-

def masked_mean(x: torch.Tensor, mask: torch.Tensor, dim: int):

|

| 14 |

-

mask = mask.to(x)

|

| 15 |

-

return (x * mask).sum(dim, keepdim=True) / mask.sum(dim, keepdim=True).clamp_min(.001)

|

| 16 |

-

|

| 17 |

-

|

| 18 |

-

@torch.jit.script

|

| 19 |

-

def masked_max(x: torch.Tensor, mask: torch.Tensor, dim: int):

|

| 20 |

-

mask = mask.to(torch.bool)

|

| 21 |

-

eps = 1e7

|

| 22 |

-

# eps = torch.finfo(x.dtype).max

|

| 23 |

-

return (x - (~mask) * eps).max(dim, keepdim=True).values

|

| 24 |

-

|

| 25 |

-

|

| 26 |

-

def masked_lse(x: torch.Tensor, mask: torch.Tensor, dim: int, temp):

|

| 27 |

-

x = x.to(torch.float32)

|

| 28 |

-

mask = mask.to(torch.float32)

|

| 29 |

-

x_masked = (x - (1 - mask) * torch.finfo(x.dtype).max)

|

| 30 |

-

return (torch.logsumexp(x_masked * temp, dim, keepdim=True) - torch.log(mask.sum(dim, keepdim=True))) / temp

|

| 31 |

-

|

| 32 |

-

|

| 33 |

-

class BaseAggregator(torch.nn.Module):

|

| 34 |

-

|

| 35 |

-

def __init__(self, nonneg_sim, mask_silence, num_heads, head_agg, use_cls):

|

| 36 |

-

super().__init__()

|

| 37 |

-

|

| 38 |

-

self.nonneg_sim = nonneg_sim

|

| 39 |

-

self.mask_silence = mask_silence

|

| 40 |

-

self.num_heads = num_heads

|

| 41 |

-

self.head_agg = head_agg

|

| 42 |

-

self.use_cls = use_cls

|

| 43 |

-

|

| 44 |

-

@abstractmethod

|

| 45 |

-

def _agg_sim(self, sim, mask):

|

| 46 |

-

pass

|

| 47 |

-

|

| 48 |

-

def prepare_sims(self, sim, mask, agg_sim, agg_heads):

|

| 49 |

-

sim_size = sim.shape

|

| 50 |

-

assert len(mask.shape) == 2

|

| 51 |

-

assert len(sim_size) in {6, 7}, f"sim has wrong number of dimensions: {sim.shape}"

|

| 52 |

-

pairwise = len(sim_size) == 6

|

| 53 |

-

|

| 54 |

-

if self.mask_silence:

|

| 55 |

-

mask = mask

|

| 56 |

-

else:

|

| 57 |

-

mask = torch.ones_like(mask)

|

| 58 |

-

|

| 59 |

-

if self.nonneg_sim:

|

| 60 |

-

sim = sim.clamp_min(0)

|

| 61 |

-

|

| 62 |

-

if pairwise:

|

| 63 |

-

head_dim = 1

|

| 64 |

-

else:

|

| 65 |

-

head_dim = 2

|

| 66 |

-

|

| 67 |

-

if self.head_agg == "max_elementwise" and agg_heads:

|

| 68 |

-

sim = sim.max(head_dim, keepdim=True).values

|

| 69 |

-

|

| 70 |

-

if agg_sim:

|

| 71 |

-

sim = self._agg_sim(sim, mask)

|

| 72 |

-

|

| 73 |

-

if agg_heads:

|

| 74 |

-

if self.head_agg == "sum" or self.head_agg == "max_elementwise":

|

| 75 |

-

sim = sim.sum(head_dim)

|

| 76 |

-

elif self.head_agg == "max":

|

| 77 |

-

sim = sim.max(head_dim).values

|

| 78 |

-

else:

|

| 79 |

-

raise ValueError(f"Unknown head_agg: {self.head_agg}")

|

| 80 |

-

|

| 81 |

-

return sim

|

| 82 |

-

|

| 83 |

-

def _get_full_sims(self, preds, raw, agg_sim, agg_heads):

|

| 84 |

-

if agg_sim or agg_heads or raw:

|

| 85 |

-

assert (agg_sim or agg_heads) != raw, "Cannot have raw on at the same time as agg_sim or agg_heads"

|

| 86 |

-

|

| 87 |

-

audio_feats = preds[AUDIO_FEATS]

|

| 88 |

-

audio_mask = preds[AUDIO_MASK]

|

| 89 |

-

image_feats = preds[IMAGE_FEATS]

|

| 90 |

-

|

| 91 |

-

b1, c2, f, t1 = audio_feats.shape

|

| 92 |

-

b2, t2 = audio_mask.shape

|

| 93 |

-

d, c1, h, w = image_feats.shape

|

| 94 |

-

assert b1 == b2 and c1 == c2 and t1 == t2

|

| 95 |

-

assert c1 % self.num_heads == 0

|

| 96 |

-

new_c = c1 // self.num_heads

|

| 97 |

-

audio_feats = audio_feats.reshape(b1, self.num_heads, new_c, f, t1)

|

| 98 |

-

image_feats = image_feats.reshape(d, self.num_heads, new_c, h, w)

|

| 99 |

-

raw_sims = torch.einsum(

|

| 100 |

-

"akcft,vkchw->avkhwft",

|

| 101 |

-

audio_feats.to(torch.float32),

|

| 102 |

-

image_feats.to(torch.float32))

|

| 103 |

-

|

| 104 |

-

if self.use_cls:

|

| 105 |

-

audio_cls = preds[AUDIO_CLS].reshape(b1, self.num_heads, new_c)

|

| 106 |

-

image_cls = preds[IMAGE_CLS].reshape(d, self.num_heads, new_c)

|

| 107 |

-

cls_sims = torch.einsum(

|

| 108 |

-

"akc,vkc->avk",

|

| 109 |

-

audio_cls.to(torch.float32),

|

| 110 |

-

image_cls.to(torch.float32))

|

| 111 |

-

raw_sims += cls_sims.reshape(b1, d, self.num_heads, 1, 1, 1, 1)

|

| 112 |

-

|

| 113 |

-

if raw:

|

| 114 |

-

return raw_sims

|

| 115 |

-

else:

|

| 116 |

-

return self.prepare_sims(raw_sims, audio_mask, agg_sim, agg_heads)

|

| 117 |

-

|

| 118 |

-

def get_pairwise_sims(self, preds, raw, agg_sim, agg_heads):

|

| 119 |

-

if agg_sim or agg_heads or raw:

|

| 120 |

-

assert (agg_sim or agg_heads) != raw, "Cannot have raw on at the same time as agg_sim or agg_heads"

|

| 121 |

-

|

| 122 |

-

audio_feats = preds[AUDIO_FEATS]

|

| 123 |

-

audio_mask = preds[AUDIO_MASK]

|

| 124 |

-

image_feats = preds[IMAGE_FEATS]

|

| 125 |

-

|

| 126 |

-

a1, c1, f, t1 = audio_feats.shape

|

| 127 |

-

a2, t2 = audio_mask.shape

|

| 128 |

-

|

| 129 |

-

assert c1 % self.num_heads == 0

|

| 130 |

-

new_c = c1 // self.num_heads

|

| 131 |

-

audio_feats = audio_feats.reshape(a1, self.num_heads, new_c, f, t1)

|

| 132 |

-

|

| 133 |

-

if len(image_feats.shape) == 5:

|

| 134 |

-

print("Using similarity for video, should only be called during plotting")

|

| 135 |

-

v, vt, c2, h, w = image_feats.shape

|

| 136 |

-

image_feats = image_feats.reshape(v, vt, self.num_heads, new_c, h, w)

|

| 137 |

-

raw_sims = torch.einsum(

|

| 138 |

-

"bkcft,bskchw,bt->bskhwft",

|

| 139 |

-

audio_feats.to(torch.float32),

|

| 140 |

-

image_feats.to(torch.float32),

|

| 141 |

-

audio_mask.to(torch.float32))

|

| 142 |

-

|

| 143 |

-

if self.use_cls:

|

| 144 |

-

audio_cls = preds[AUDIO_CLS].reshape(v, self.num_heads, new_c)

|

| 145 |

-

image_cls = preds[IMAGE_CLS].reshape(v, vt, self.num_heads, new_c)

|

| 146 |

-

cls_sims = torch.einsum(

|

| 147 |

-

"bkc,bskc->bsk",

|

| 148 |

-

audio_cls.to(torch.float32),

|

| 149 |

-

image_cls.to(torch.float32))

|

| 150 |

-

raw_sims += cls_sims.reshape(v, vt, self.num_heads, 1, 1, 1, 1)

|

| 151 |

-

|

| 152 |

-

|

| 153 |

-

elif len(image_feats.shape) == 4:

|

| 154 |

-

v, c2, h, w = image_feats.shape

|

| 155 |

-

image_feats = image_feats.reshape(v, self.num_heads, new_c, h, w)

|

| 156 |

-

raw_sims = torch.einsum(

|

| 157 |

-

"bkcft,bkchw,bt->bkhwft",

|

| 158 |

-

audio_feats.to(torch.float32),

|

| 159 |

-

image_feats.to(torch.float32),

|

| 160 |

-

audio_mask.to(torch.float32))

|

| 161 |

-

|

| 162 |

-

if self.use_cls:

|

| 163 |

-

audio_cls = preds[AUDIO_CLS].reshape(v, self.num_heads, new_c)

|

| 164 |

-

image_cls = preds[IMAGE_CLS].reshape(v, self.num_heads, new_c)

|

| 165 |

-

cls_sims = torch.einsum(

|

| 166 |

-

"bkc,bkc->bk",

|

| 167 |

-

audio_cls.to(torch.float32),

|

| 168 |

-

image_cls.to(torch.float32))

|

| 169 |

-

raw_sims += cls_sims.reshape(v, self.num_heads, 1, 1, 1, 1)

|

| 170 |

-

else:

|

| 171 |

-

raise ValueError(f"Improper image shape: {image_feats.shape}")

|

| 172 |

-

|

| 173 |

-

assert a1 == a2 and c2 == c2 and t1 == t2

|

| 174 |

-

|

| 175 |

-

if raw:

|

| 176 |

-

return raw_sims

|

| 177 |

-

else:

|

| 178 |

-

return self.prepare_sims(raw_sims, audio_mask, agg_sim, agg_heads)

|

| 179 |

-

|

| 180 |

-

def forward(self, preds, agg_heads):

|

| 181 |

-

return self._get_full_sims(

|

| 182 |

-

preds, raw=False, agg_sim=True, agg_heads=agg_heads)

|

| 183 |

-

|

| 184 |

-

def forward_batched(self, preds, agg_heads, batch_size):

|

| 185 |

-

new_preds = {k: v for k, v in preds.items()}

|

| 186 |

-

big_image_feats = new_preds.pop(IMAGE_FEATS)

|

| 187 |

-

if self.use_cls:

|

| 188 |

-

big_image_cls = new_preds.pop(IMAGE_CLS)

|

| 189 |

-

|

| 190 |

-

n = big_image_feats.shape[0]

|

| 191 |

-

n_steps = math.ceil(n / batch_size)

|

| 192 |

-

outputs = []

|

| 193 |

-

for step in tqdm(range(n_steps), "Calculating Sim", leave=False):

|

| 194 |

-

new_preds[IMAGE_FEATS] = big_image_feats[step * batch_size:(step + 1) * batch_size].cuda()

|

| 195 |

-

if self.use_cls:

|

| 196 |

-

new_preds[IMAGE_CLS] = big_image_cls[step * batch_size:(step + 1) * batch_size].cuda()

|

| 197 |

-

|

| 198 |

-

sim = self.forward(new_preds, agg_heads=agg_heads)

|

| 199 |

-

outputs.append(sim.cpu())

|

| 200 |

-

return torch.cat(outputs, dim=1)

|

| 201 |

-

|

| 202 |

-

|

| 203 |

-

class ImageThenAudioAggregator(BaseAggregator):

|

| 204 |

-

|

| 205 |

-

def __init__(self, image_agg_type, audio_agg_type, nonneg_sim, mask_silence, num_heads, head_agg, use_cls):

|

| 206 |

-

super().__init__(nonneg_sim, mask_silence, num_heads, head_agg, use_cls)

|

| 207 |

-

if image_agg_type == "max":

|

| 208 |

-

self.image_agg = lambda x, dim: x.max(dim=dim, keepdim=True).values

|

| 209 |

-

elif image_agg_type == "avg":

|

| 210 |

-

self.image_agg = lambda x, dim: x.mean(dim=dim, keepdim=True)

|

| 211 |

-

else:

|

| 212 |

-

raise ValueError(f"Unknown image_agg_type {image_agg_type}")

|

| 213 |

-

|

| 214 |

-

if audio_agg_type == "max":

|

| 215 |

-

self.time_agg = masked_max

|

| 216 |

-

elif audio_agg_type == "avg":

|

| 217 |

-

self.time_agg = masked_mean

|

| 218 |

-

else:

|

| 219 |

-

raise ValueError(f"Unknown audio_agg_type {audio_agg_type}")

|

| 220 |

-

|

| 221 |

-

self.freq_agg = lambda x, dim: x.mean(dim=dim, keepdim=True)

|

| 222 |

-

|

| 223 |

-

def _agg_sim(self, sim, mask):

|

| 224 |

-

sim_shape = sim.shape

|

| 225 |

-

new_mask_shape = [1] * len(sim_shape)

|

| 226 |

-

new_mask_shape[0] = sim_shape[0]

|

| 227 |

-

new_mask_shape[-1] = sim_shape[-1]

|

| 228 |

-

mask = mask.reshape(new_mask_shape)

|

| 229 |

-

sim = self.image_agg(sim, -3)

|

| 230 |

-

sim = self.image_agg(sim, -4)

|

| 231 |

-

sim = self.freq_agg(sim, -2)

|

| 232 |

-

sim = self.time_agg(sim, mask, -1)

|

| 233 |

-

return sim.squeeze(-1).squeeze(-1).squeeze(-1).squeeze(-1)

|

| 234 |

-

|

| 235 |

-

|

| 236 |

-

class PairedAggregator(BaseAggregator):

|

| 237 |

-

|

| 238 |

-

def __init__(self, nonneg_sim, mask_silence, num_heads, head_agg, use_cls):

|

| 239 |

-

super().__init__(nonneg_sim, mask_silence, num_heads, head_agg, use_cls)

|

| 240 |

-

self.image_agg_max = lambda x, dim: x.max(dim=dim, keepdim=True).values

|

| 241 |

-

self.image_agg_mean = lambda x, dim: x.mean(dim=dim, keepdim=True)

|

| 242 |

-

|

| 243 |

-

self.time_agg_max = masked_max

|

| 244 |

-

self.time_agg_mean = masked_mean

|

| 245 |

-

|

| 246 |

-

self.freq_agg = lambda x, dim: x.mean(dim=dim, keepdim=True)

|

| 247 |

-

|

| 248 |

-

def _agg_sim(self, sim, mask):

|

| 249 |

-

sim_shape = sim.shape

|

| 250 |

-

new_mask_shape = [1] * len(sim_shape)

|

| 251 |

-

new_mask_shape[0] = sim_shape[0]

|

| 252 |

-

new_mask_shape[-1] = sim_shape[-1]

|

| 253 |

-

mask = mask.reshape(new_mask_shape)

|

| 254 |

-

|

| 255 |

-

sim_1 = self.image_agg_max(sim, -3)

|

| 256 |

-

sim_1 = self.image_agg_max(sim_1, -4)

|

| 257 |

-

sim_1 = self.freq_agg(sim_1, -2)

|

| 258 |

-

sim_1 = self.time_agg_mean(sim_1, mask, -1)

|

| 259 |

-

|

| 260 |

-

sim_2 = self.freq_agg(sim, -2)

|

| 261 |

-

sim_2 = self.time_agg_max(sim_2, mask, -1)

|

| 262 |

-

sim_2 = self.image_agg_mean(sim_2, -3)

|

| 263 |

-

sim_2 = self.image_agg_mean(sim_2, -4)

|

| 264 |

-

|

| 265 |

-

sim = 1 / 2 * (sim_1 + sim_2)

|

| 266 |

-

|

| 267 |

-

return sim.squeeze(-1).squeeze(-1).squeeze(-1).squeeze(-1)

|

| 268 |

-

|

| 269 |

-

|

| 270 |

-

|

| 271 |

-

class CAVMAEAggregator(BaseAggregator):

|

| 272 |

-

|

| 273 |

-

def __init__(self, *args, **kwargs):

|

| 274 |

-

super().__init__(False, False, 1, "sum", False)

|

| 275 |

-

|

| 276 |

-

def _get_full_sims(self, preds, raw, agg_sim, agg_heads):

|

| 277 |

-

if agg_sim:

|

| 278 |

-

audio_feats = preds[AUDIO_FEATS]

|

| 279 |

-

image_feats = preds[IMAGE_FEATS]

|

| 280 |

-

pool_audio_feats = F.normalize(audio_feats.mean(dim=[-1, -2]), dim=1)

|

| 281 |

-

pool_image_feats = F.normalize(image_feats.mean(dim=[-1, -2]), dim=1)

|

| 282 |

-

sims = torch.einsum(

|

| 283 |

-

"bc,dc->bd",

|

| 284 |

-

pool_audio_feats.to(torch.float32),

|

| 285 |

-

pool_image_feats.to(torch.float32))

|

| 286 |

-

if agg_heads:

|

| 287 |

-

return sims

|

| 288 |

-

else:

|

| 289 |

-

return sims.unsqueeze(-1)

|

| 290 |

-

|

| 291 |

-

else:

|

| 292 |

-

return BaseAggregator._get_full_sims(self, preds, raw, agg_sim, agg_heads)

|

| 293 |

-

|

| 294 |

-

def get_pairwise_sims(self, preds, raw, agg_sim, agg_heads):

|

| 295 |

-

if agg_sim:

|

| 296 |

-

audio_feats = preds[AUDIO_FEATS]

|

| 297 |

-

image_feats = preds[IMAGE_FEATS]

|

| 298 |

-

pool_audio_feats = F.normalize(audio_feats.mean(dim=[-1, -2]), dim=1)

|

| 299 |

-

pool_image_feats = F.normalize(image_feats.mean(dim=[-1, -2]), dim=1)

|

| 300 |

-

sims = torch.einsum(

|

| 301 |

-

"bc,bc->b",

|

| 302 |

-

pool_audio_feats.to(torch.float32),

|

| 303 |

-

pool_image_feats.to(torch.float32))

|

| 304 |

-

if agg_heads:

|

| 305 |

-

return sims

|

| 306 |

-

else:

|

| 307 |

-

return sims.unsqueeze(-1)

|

| 308 |

-

|

| 309 |

-

else:

|

| 310 |

-

return BaseAggregator.get_pairwise_sims(self, preds, raw, agg_sim, agg_heads)

|

| 311 |

-

|

| 312 |

-

|

| 313 |

-

class ImageBindAggregator(BaseAggregator):

|

| 314 |

-

|

| 315 |

-

def __init__(self, num_heads, *args, **kwargs):

|

| 316 |

-

super().__init__(False, False, num_heads, "sum", False)

|

| 317 |

-

|

| 318 |

-

def _get_full_sims(self, preds, raw, agg_sim, agg_heads):

|

| 319 |

-

if agg_sim:

|

| 320 |

-

sims = torch.einsum(

|

| 321 |

-

"bc,dc->bd",

|

| 322 |

-

preds[AUDIO_CLS].to(torch.float32),

|

| 323 |

-

preds[IMAGE_CLS].to(torch.float32))

|

| 324 |

-

if agg_heads:

|

| 325 |

-

return sims

|

| 326 |

-

else:

|

| 327 |

-

sims = sims.unsqueeze(-1)

|

| 328 |

-

return sims.repeat(*([1] * (sims.dim() - 1)), self.num_heads)

|

| 329 |

-

|

| 330 |

-

|

| 331 |

-

else:

|

| 332 |

-

return BaseAggregator._get_full_sims(self, preds, raw, agg_sim, agg_heads)

|

| 333 |

-

|

| 334 |

-

def get_pairwise_sims(self, preds, raw, agg_sim, agg_heads):

|

| 335 |

-

if agg_sim:

|

| 336 |

-

sims = torch.einsum(

|

| 337 |

-

"bc,dc->b",

|

| 338 |

-

preds[AUDIO_CLS].to(torch.float32),

|

| 339 |

-

preds[IMAGE_CLS].to(torch.float32))

|

| 340 |

-

if agg_heads:

|

| 341 |

-

return sims

|

| 342 |

-

else:

|

| 343 |

-

sims = sims.unsqueeze(-1)

|

| 344 |

-

return sims.repeat(*([1] * (sims.dim() - 1)), self.num_heads)

|

| 345 |

-

|

| 346 |

-

else:

|

| 347 |

-

return BaseAggregator.get_pairwise_sims(self, preds, raw, agg_sim, agg_heads)

|

| 348 |

-

|

| 349 |

-

def forward_batched(self, preds, agg_heads, batch_size):

|

| 350 |

-

return self.forward(preds, agg_heads)

|

| 351 |

-

|

| 352 |

-

|

| 353 |

-

class SimPool(nn.Module):

|

| 354 |

-

def __init__(self, dim, num_heads=1, qkv_bias=False, qk_scale=None, gamma=None, use_beta=False):

|

| 355 |

-

super().__init__()

|

| 356 |

-

self.num_heads = num_heads

|

| 357 |

-

head_dim = dim // num_heads

|

| 358 |

-

self.scale = qk_scale or head_dim ** -0.5

|

| 359 |

-

|

| 360 |

-

self.norm_patches = nn.LayerNorm(dim, eps=1e-6)

|

| 361 |

-

|

| 362 |

-

self.wq = nn.Linear(dim, dim, bias=qkv_bias)

|

| 363 |

-

self.wk = nn.Linear(dim, dim, bias=qkv_bias)

|

| 364 |

-

|

| 365 |

-

if gamma is not None:

|

| 366 |

-

self.gamma = torch.tensor([gamma])

|

| 367 |

-

if use_beta:

|

| 368 |

-

self.beta = nn.Parameter(torch.tensor([0.0]))

|

| 369 |

-

self.eps = torch.tensor([1e-6])

|

| 370 |

-

|

| 371 |

-

self.gamma = gamma

|

| 372 |

-

self.use_beta = use_beta

|

| 373 |

-

|

| 374 |

-

def prepare_input(self, x):

|

| 375 |

-

if len(x.shape) == 3: # Transformer

|

| 376 |

-

# Input tensor dimensions:

|

| 377 |

-

# x: (B, N, d), where B is batch size, N are patch tokens, d is depth (channels)

|

| 378 |

-

B, N, d = x.shape

|

| 379 |

-

gap_cls = x.mean(-2) # (B, N, d) -> (B, d)

|

| 380 |

-

gap_cls = gap_cls.unsqueeze(1) # (B, d) -> (B, 1, d)

|

| 381 |

-

return gap_cls, x

|

| 382 |

-

if len(x.shape) == 4: # CNN

|

| 383 |

-

# Input tensor dimensions:

|

| 384 |

-

# x: (B, d, H, W), where B is batch size, d is depth (channels), H is height, and W is width

|

| 385 |

-

B, d, H, W = x.shape

|

| 386 |

-

gap_cls = x.mean([-2, -1]) # (B, d, H, W) -> (B, d)

|

| 387 |

-

x = x.reshape(B, d, H * W).permute(0, 2, 1) # (B, d, H, W) -> (B, d, H*W) -> (B, H*W, d)

|

| 388 |

-

gap_cls = gap_cls.unsqueeze(1) # (B, d) -> (B, 1, d)

|

| 389 |

-

return gap_cls, x

|

| 390 |

-

else:

|

| 391 |

-

raise ValueError(f"Unsupported number of dimensions in input tensor: {len(x.shape)}")

|

| 392 |

-

|

| 393 |

-

def forward(self, x):

|

| 394 |

-

self.eps = self.eps.to(x.device)

|

| 395 |

-

# Prepare input tensor and perform GAP as initialization

|

| 396 |

-

gap_cls, x = self.prepare_input(x)

|

| 397 |

-

|

| 398 |

-

# Prepare queries (q), keys (k), and values (v)

|

| 399 |

-

q, k, v = gap_cls, self.norm_patches(x), self.norm_patches(x)

|

| 400 |

-

|

| 401 |

-

# Extract dimensions after normalization

|

| 402 |

-

Bq, Nq, dq = q.shape

|

| 403 |

-

Bk, Nk, dk = k.shape

|

| 404 |

-

Bv, Nv, dv = v.shape

|

| 405 |

-

|

| 406 |

-

# Check dimension consistency across batches and channels

|

| 407 |

-

assert Bq == Bk == Bv

|

| 408 |

-

assert dq == dk == dv

|

| 409 |

-

|

| 410 |

-

# Apply linear transformation for queries and keys then reshape

|

| 411 |

-

qq = self.wq(q).reshape(Bq, Nq, self.num_heads, dq // self.num_heads).permute(0, 2, 1,

|

| 412 |

-

3) # (Bq, Nq, dq) -> (B, num_heads, Nq, dq/num_heads)

|

| 413 |

-

kk = self.wk(k).reshape(Bk, Nk, self.num_heads, dk // self.num_heads).permute(0, 2, 1,

|

| 414 |

-

3) # (Bk, Nk, dk) -> (B, num_heads, Nk, dk/num_heads)

|

| 415 |

-

|

| 416 |

-

vv = v.reshape(Bv, Nv, self.num_heads, dv // self.num_heads).permute(0, 2, 1,

|

| 417 |

-

3) # (Bv, Nv, dv) -> (B, num_heads, Nv, dv/num_heads)

|

| 418 |

-

|

| 419 |

-

# Compute attention scores

|

| 420 |

-

attn = (qq @ kk.transpose(-2, -1)) * self.scale

|

| 421 |

-

# Apply softmax for normalization

|

| 422 |

-

attn = attn.softmax(dim=-1)

|

| 423 |

-

|

| 424 |

-

# If gamma scaling is used

|

| 425 |

-

if self.gamma is not None:

|

| 426 |

-

# Apply gamma scaling on values and compute the weighted sum using attention scores

|

| 427 |

-

x = torch.pow(attn @ torch.pow((vv - vv.min() + self.eps), self.gamma),

|

| 428 |

-

1 / self.gamma) # (B, num_heads, Nv, dv/num_heads) -> (B, 1, 1, d)

|

| 429 |

-

# If use_beta, add a learnable translation

|

| 430 |

-

if self.use_beta:

|

| 431 |

-

x = x + self.beta

|

| 432 |

-

else:

|

| 433 |

-

# Compute the weighted sum using attention scores

|

| 434 |

-

x = (attn @ vv).transpose(1, 2).reshape(Bq, Nq, dq)

|

| 435 |

-

|

| 436 |

-

return x.squeeze()

|

| 437 |

-

|

| 438 |

-

|

| 439 |

-

|

| 440 |

-

class SimPoolAggregator(BaseAggregator):

|

| 441 |

-

|

| 442 |

-

def __init__(self, num_heads, dim, *args, **kwargs):

|

| 443 |

-

super().__init__(False, False, num_heads, "sum", False)

|

| 444 |

-

self.pool = SimPool(dim, gamma=1.25)

|

| 445 |

-

|

| 446 |

-

def _get_full_sims(self, preds, raw, agg_sim, agg_heads):

|

| 447 |

-

if agg_sim:

|

| 448 |

-

device = self.pool.wq.weight.data.device

|

| 449 |

-

pooled_audio = self.pool(preds[AUDIO_FEATS].to(torch.float32).to(device))

|

| 450 |

-

pooled_image = self.pool(preds[IMAGE_FEATS].to(torch.float32).to(device))

|

| 451 |

-

|

| 452 |

-

sims = torch.einsum(

|

| 453 |

-

"bc,dc->bd",

|

| 454 |

-

pooled_audio,

|

| 455 |

-

pooled_image)

|

| 456 |

-

if agg_heads:

|

| 457 |

-

return sims

|

| 458 |

-

else:

|

| 459 |

-

sims = sims.unsqueeze(-1)

|

| 460 |

-

return sims.repeat(*([1] * (sims.dim() - 1)), self.num_heads)

|

| 461 |

-

|

| 462 |

-

|

| 463 |

-

else:

|

| 464 |

-

return BaseAggregator._get_full_sims(self, preds, raw, agg_sim, agg_heads)

|

| 465 |

-

|

| 466 |

-

def get_pairwise_sims(self, preds, raw, agg_sim, agg_heads):

|

| 467 |

-

if agg_sim:

|

| 468 |

-

device = self.pool.wq.weight.data.device

|

| 469 |

-

pooled_audio = self.pool(preds[AUDIO_FEATS].to(torch.float32).to(device))

|

| 470 |

-

pooled_image = self.pool(preds[IMAGE_FEATS].to(torch.float32).to(device))

|

| 471 |

-

|

| 472 |

-

sims = torch.einsum(

|

| 473 |

-

"bc,dc->b",

|

| 474 |

-

pooled_audio,

|

| 475 |

-

pooled_image)

|

| 476 |

-

if agg_heads:

|

| 477 |

-

return sims

|

| 478 |

-

else:

|

| 479 |

-

sims = sims.unsqueeze(-1)

|

| 480 |

-

return sims.repeat(*([1] * (sims.dim() - 1)), self.num_heads)

|

| 481 |

-

|

| 482 |

-

else:

|

| 483 |

-

return BaseAggregator.get_pairwise_sims(self, preds, raw, agg_sim, agg_heads)

|

| 484 |

-

|

| 485 |

-

def forward_batched(self, preds, agg_heads, batch_size):

|

| 486 |

-

return self.forward(preds, agg_heads)

|

| 487 |

-

|

| 488 |

-

|

| 489 |

-

|

| 490 |

-

def get_aggregator(sim_agg_type, nonneg_sim, mask_silence, num_heads, head_agg, use_cls, dim):

|

| 491 |

-

shared_args = dict(

|

| 492 |

-

nonneg_sim=nonneg_sim,

|

| 493 |

-

mask_silence=mask_silence,

|

| 494 |

-

num_heads=num_heads,

|

| 495 |

-

head_agg=head_agg,

|

| 496 |

-

use_cls=use_cls,

|

| 497 |

-

)

|

| 498 |

-

|

| 499 |

-

if sim_agg_type == "paired":

|

| 500 |

-

agg1 = PairedAggregator(**shared_args)

|

| 501 |

-

elif sim_agg_type == "misa":

|

| 502 |

-

agg1 = ImageThenAudioAggregator("max", "avg", **shared_args)

|

| 503 |

-

elif sim_agg_type == "mima":

|

| 504 |

-

agg1 = ImageThenAudioAggregator("max", "max", **shared_args)

|

| 505 |

-

elif sim_agg_type == "sisa":

|

| 506 |

-

agg1 = ImageThenAudioAggregator("avg", "avg", **shared_args)

|

| 507 |

-

elif sim_agg_type == "cavmae":

|

| 508 |

-

agg1 = CAVMAEAggregator()

|

| 509 |

-

elif sim_agg_type == "imagebind":

|

| 510 |

-

agg1 = ImageBindAggregator(num_heads=shared_args["num_heads"])

|

| 511 |

-

elif sim_agg_type == "simpool":

|

| 512 |

-

agg1 = SimPoolAggregator(num_heads=shared_args["num_heads"], dim=dim)

|

| 513 |

-

else:

|

| 514 |

-

raise ValueError(f"Unknown loss_type {sim_agg_type}")

|

| 515 |

-

|

| 516 |

-

return agg1

|

| 517 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

DenseAV/denseav/aligners.py

DELETED

|

@@ -1,300 +0,0 @@

|

|

| 1 |

-

from functools import partial

|

| 2 |

-

|

| 3 |

-

import torch

|

| 4 |

-

import torch.nn.functional as F

|

| 5 |

-

from torch.nn import ModuleList

|

| 6 |

-

|

| 7 |

-

from denseav.featurizers.DINO import Block

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

class ChannelNorm(torch.nn.Module):

|

| 11 |

-

|

| 12 |

-

def __init__(self, dim, *args, **kwargs):

|

| 13 |

-

super().__init__(*args, **kwargs)

|

| 14 |

-

self.norm = torch.nn.LayerNorm(dim, eps=1e-4)

|

| 15 |

-

|

| 16 |

-

def forward_spatial(self, x):

|

| 17 |

-

return self.norm(x.permute(0, 2, 3, 1)).permute(0, 3, 1, 2)

|

| 18 |

-

|

| 19 |

-

def forward(self, x, cls):

|

| 20 |

-

return self.forward_spatial(x), self.forward_cls(cls)

|

| 21 |

-

|

| 22 |

-

def forward_cls(self, cls):

|

| 23 |

-

if cls is not None:

|

| 24 |

-

return self.norm(cls)

|

| 25 |

-

else:

|

| 26 |

-

return None

|

| 27 |

-

|

| 28 |

-

|

| 29 |

-

def id_conv(dim, strength=.9):

|

| 30 |

-

conv = torch.nn.Conv2d(dim, dim, 1, padding="same")

|

| 31 |

-

start_w = conv.weight.data

|

| 32 |

-

conv.weight.data = torch.nn.Parameter(

|

| 33 |

-

torch.eye(dim, device=start_w.device).unsqueeze(-1).unsqueeze(-1) * strength + start_w * (1 - strength))

|

| 34 |

-

conv.bias.data = torch.nn.Parameter(conv.bias.data * (1 - strength))

|

| 35 |

-

return conv

|

| 36 |

-

|

| 37 |

-

|

| 38 |

-

class LinearAligner(torch.nn.Module):

|

| 39 |

-

def __init__(self, in_dim, out_dim, use_norm=True):

|

| 40 |

-

super().__init__()

|

| 41 |

-

self.in_dim = in_dim

|

| 42 |

-

self.out_dim = out_dim

|

| 43 |

-

if use_norm:

|

| 44 |

-

self.norm = ChannelNorm(in_dim)

|

| 45 |

-

else:

|

| 46 |

-

self.norm = Identity2()

|

| 47 |

-

|

| 48 |

-

if in_dim == out_dim:

|

| 49 |

-

self.layer = id_conv(in_dim, 0)

|

| 50 |

-

else:

|

| 51 |

-

self.layer = torch.nn.Conv2d(in_dim, out_dim, kernel_size=1, stride=1)

|

| 52 |

-

|

| 53 |

-

self.cls_layer = torch.nn.Linear(in_dim, out_dim)

|

| 54 |

-

|

| 55 |

-

def forward(self, spatial, cls):

|

| 56 |

-

norm_spatial, norm_cls = self.norm(spatial, cls)

|

| 57 |

-

|

| 58 |

-

if cls is not None:

|

| 59 |

-

aligned_cls = self.cls_layer(cls)

|

| 60 |

-

else:

|

| 61 |

-

aligned_cls = None

|

| 62 |

-

|

| 63 |

-

return self.layer(norm_spatial), aligned_cls

|

| 64 |

-

|

| 65 |

-

class IdLinearAligner(torch.nn.Module):

|

| 66 |

-

def __init__(self, in_dim, out_dim):

|

| 67 |

-

super().__init__()

|

| 68 |

-

self.in_dim = in_dim

|

| 69 |

-

self.out_dim = out_dim

|

| 70 |

-

assert self.out_dim == self.in_dim

|

| 71 |

-

self.layer = id_conv(in_dim, 1.0)

|

| 72 |

-

def forward(self, spatial, cls):

|

| 73 |

-

return self.layer(spatial), cls

|

| 74 |

-

|

| 75 |

-

|

| 76 |

-

class FrequencyAvg(torch.nn.Module):

|

| 77 |

-

def __init__(self):

|

| 78 |

-

super().__init__()

|

| 79 |

-

|

| 80 |

-

def forward(self, spatial, cls):

|

| 81 |

-

return spatial.mean(2, keepdim=True), cls

|

| 82 |

-

|

| 83 |

-

|

| 84 |

-

class LearnedTimePool(torch.nn.Module):

|

| 85 |

-

def __init__(self, dim, width, maxpool):

|

| 86 |

-

super().__init__()

|

| 87 |

-

self.dim = dim

|

| 88 |

-

self.width = width

|

| 89 |

-

self.norm = ChannelNorm(dim)

|

| 90 |

-

if maxpool:

|

| 91 |

-

self.layer = torch.nn.Sequential(

|

| 92 |

-

torch.nn.Conv2d(dim, dim, kernel_size=width, stride=1, padding="same"),

|

| 93 |

-

torch.nn.MaxPool2d(kernel_size=(1, width), stride=(1, width))

|

| 94 |

-

)

|

| 95 |

-

else:

|

| 96 |

-

self.layer = torch.nn.Conv2d(dim, dim, kernel_size=(1, width), stride=(1, width))

|

| 97 |

-

|

| 98 |

-

def forward(self, spatial, cls):

|

| 99 |

-

norm_spatial, norm_cls = self.norm(spatial, cls)

|

| 100 |

-

return self.layer(norm_spatial), norm_cls

|

| 101 |

-

|

| 102 |

-

|

| 103 |

-

class LearnedTimePool2(torch.nn.Module):

|

| 104 |

-

def __init__(self, in_dim, out_dim, width, maxpool, use_cls_layer):

|

| 105 |

-

super().__init__()

|

| 106 |

-

self.in_dim = in_dim

|

| 107 |

-

self.out_dim = out_dim

|

| 108 |

-

self.width = width

|

| 109 |

-

|

| 110 |

-

if maxpool:

|

| 111 |

-

self.layer = torch.nn.Sequential(

|

| 112 |

-

torch.nn.Conv2d(in_dim, out_dim, kernel_size=width, stride=1, padding="same"),

|

| 113 |

-

torch.nn.MaxPool2d(kernel_size=(1, width), stride=(1, width))

|

| 114 |

-

)

|

| 115 |

-

else:

|

| 116 |

-

self.layer = torch.nn.Conv2d(in_dim, out_dim, kernel_size=(1, width), stride=(1, width))

|

| 117 |

-

|

| 118 |

-

self.use_cls_layer = use_cls_layer

|

| 119 |

-

if use_cls_layer:

|

| 120 |

-

self.cls_layer = torch.nn.Linear(in_dim, out_dim)

|

| 121 |

-

|

| 122 |

-

def forward(self, spatial, cls):

|

| 123 |

-

|

| 124 |

-

if cls is not None:

|

| 125 |

-

if self.use_cls_layer:

|

| 126 |

-

aligned_cls = self.cls_layer(cls)

|

| 127 |

-

else:

|

| 128 |

-

aligned_cls = cls

|

| 129 |

-

else:

|

| 130 |

-

aligned_cls = None

|

| 131 |

-

|

| 132 |

-

return self.layer(spatial), aligned_cls

|

| 133 |

-

|

| 134 |

-

|

| 135 |

-

class Sequential2(torch.nn.Module):

|

| 136 |

-

|

| 137 |

-

def __init__(self, *modules):

|

| 138 |

-

super().__init__()

|

| 139 |

-

self.mod_list = ModuleList(modules)

|

| 140 |

-

|

| 141 |

-

def forward(self, x, y):

|

| 142 |

-

results = (x, y)

|

| 143 |

-

for m in self.mod_list:

|

| 144 |

-

results = m(*results)

|

| 145 |

-

return results

|

| 146 |

-

|

| 147 |

-

|

| 148 |

-

class ProgressiveGrowing(torch.nn.Module):

|

| 149 |

-

|

| 150 |

-

def __init__(self, stages, phase_lengths):

|

| 151 |

-

super().__init__()

|

| 152 |

-

self.stages = torch.nn.ModuleList(stages)

|

| 153 |

-

self.phase_lengths = torch.tensor(phase_lengths)

|

| 154 |

-

assert len(self.phase_lengths) + 1 == len(self.stages)

|

| 155 |

-

self.phase_boundaries = self.phase_lengths.cumsum(0)

|

| 156 |

-

self.register_buffer('phase', torch.tensor([1]))

|

| 157 |

-

|

| 158 |

-

def maybe_change_phase(self, global_step):

|

| 159 |

-

needed_phase = (global_step >= self.phase_boundaries).to(torch.int64).sum().item() + 1

|

| 160 |

-

if needed_phase != self.phase.item():

|

| 161 |

-

print(f"Changing aligner phase to {needed_phase}")

|

| 162 |

-