Commit 5693654

Parent(s):

init

- .env.example +42 -0

- .github/workflows/docker-build.yml +61 -0

- .github/workflows/security-scan.yml +43 -0

- .github/workflows/sync.yml +60 -0

- .gitignore +20 -0

- .python-version +1 -0

- Dockerfile +27 -0

- README.md +279 -0

- app/clients/__init__.py +5 -0

- app/clients/base_client.py +54 -0

- app/clients/claude_client.py +142 -0

- app/clients/deepseek_client.py +133 -0

- app/deepclaude/__init__.py +0 -0

- app/deepclaude/deepclaude.py +268 -0

- app/main.py +160 -0

- app/utils/auth.py +47 -0

- app/utils/logger.py +70 -0

- pyproject.toml +14 -0

- uv.lock +0 -0

.env.example

ADDED

|

@@ -0,0 +1,42 @@

|

| 1 |

+

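# API key that clients must present for their requests to be accepted; do not add the "Bearer" prefix here. Only one key is supported for now; this may later become an array, a toml/yaml file, or a dedicated management tool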

|

| 2 |

+

ALLOW_API_KEY=your_api_key

|

| 3 |

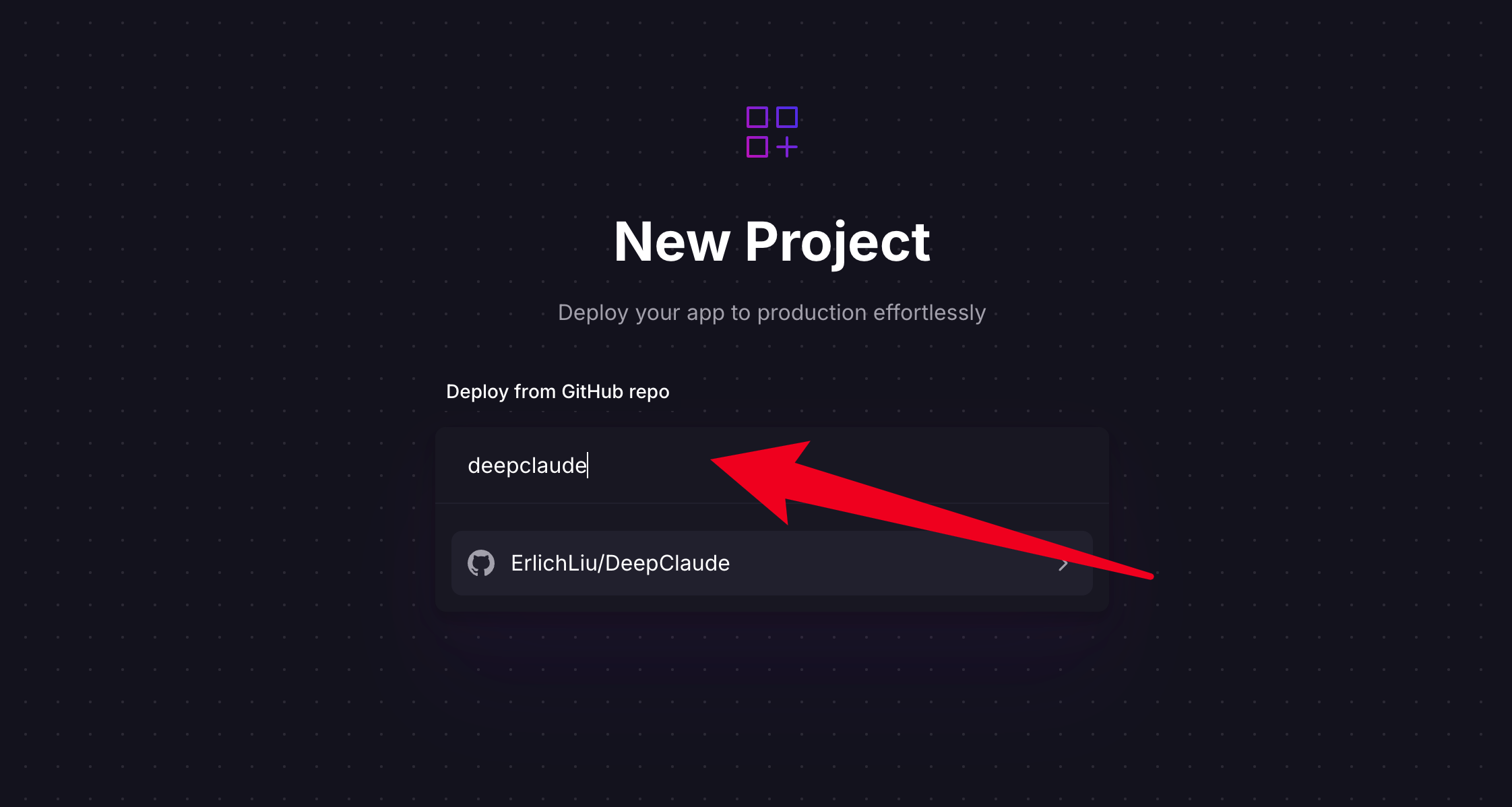

+

|

| 4 |

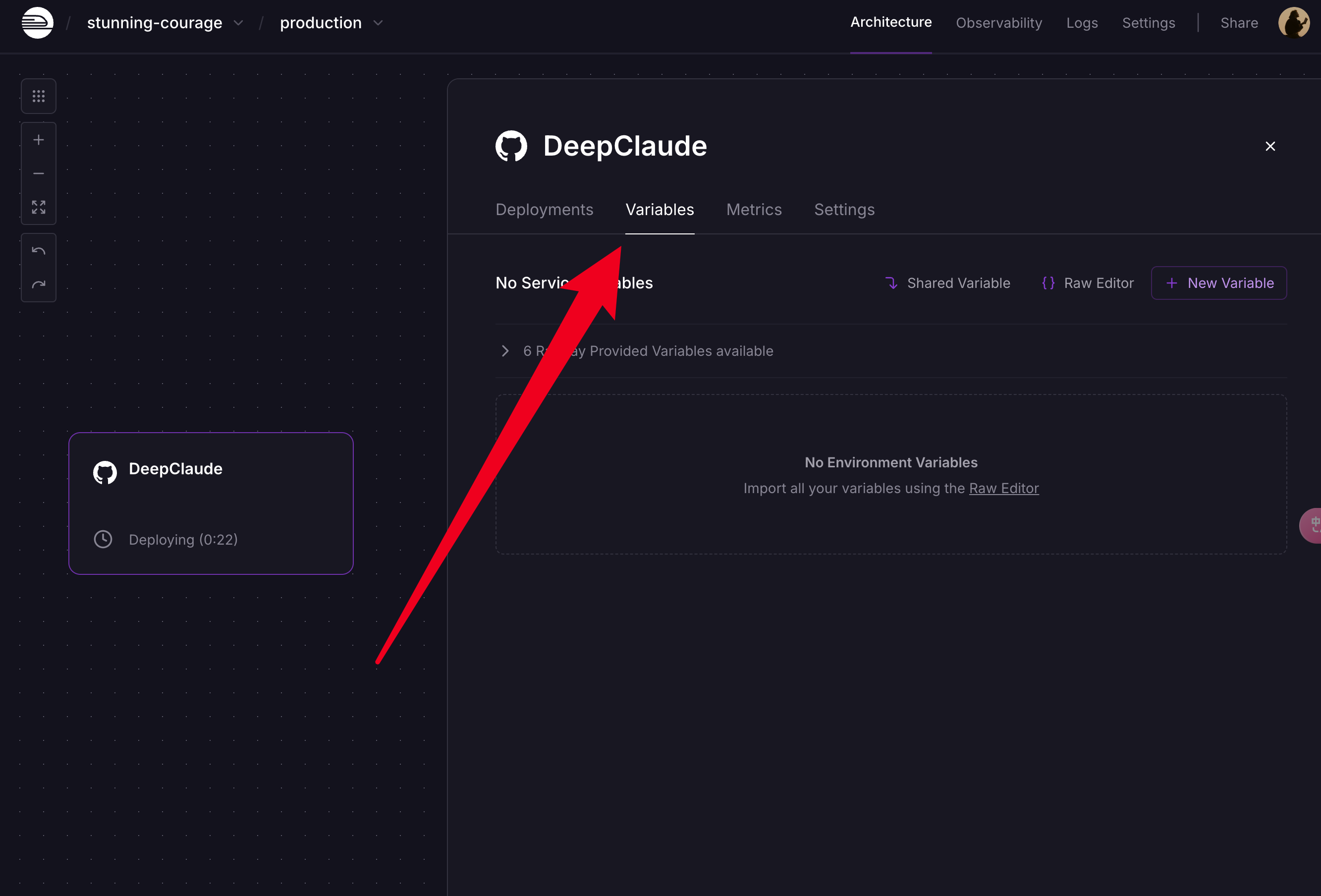

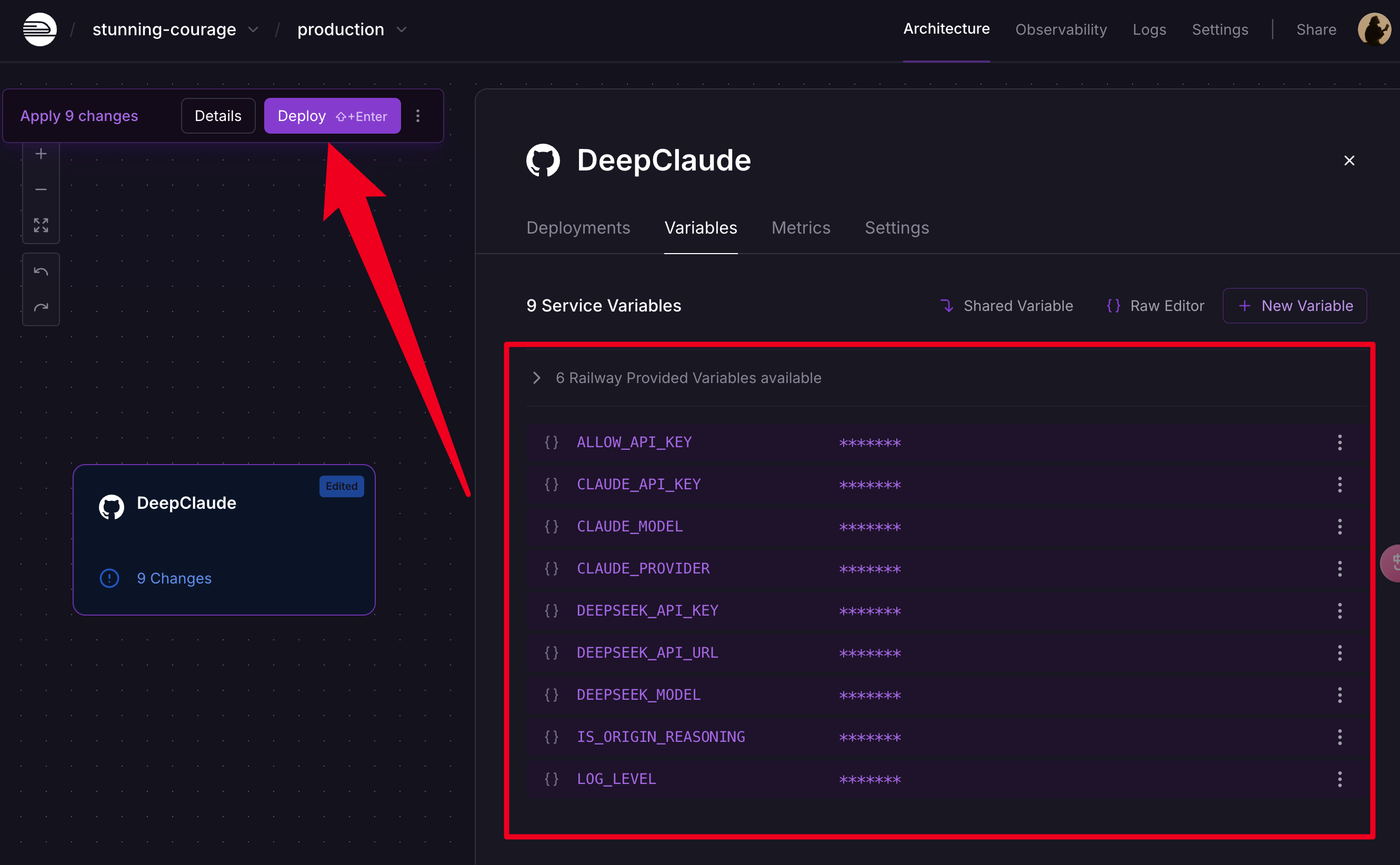

+

# Server-side CORS configuration

|

| 5 |

+

# 允许访问的域名,多个域名使用逗号分隔(中间不能有空格),例如:http://localhost:3000,https://chat.example.com

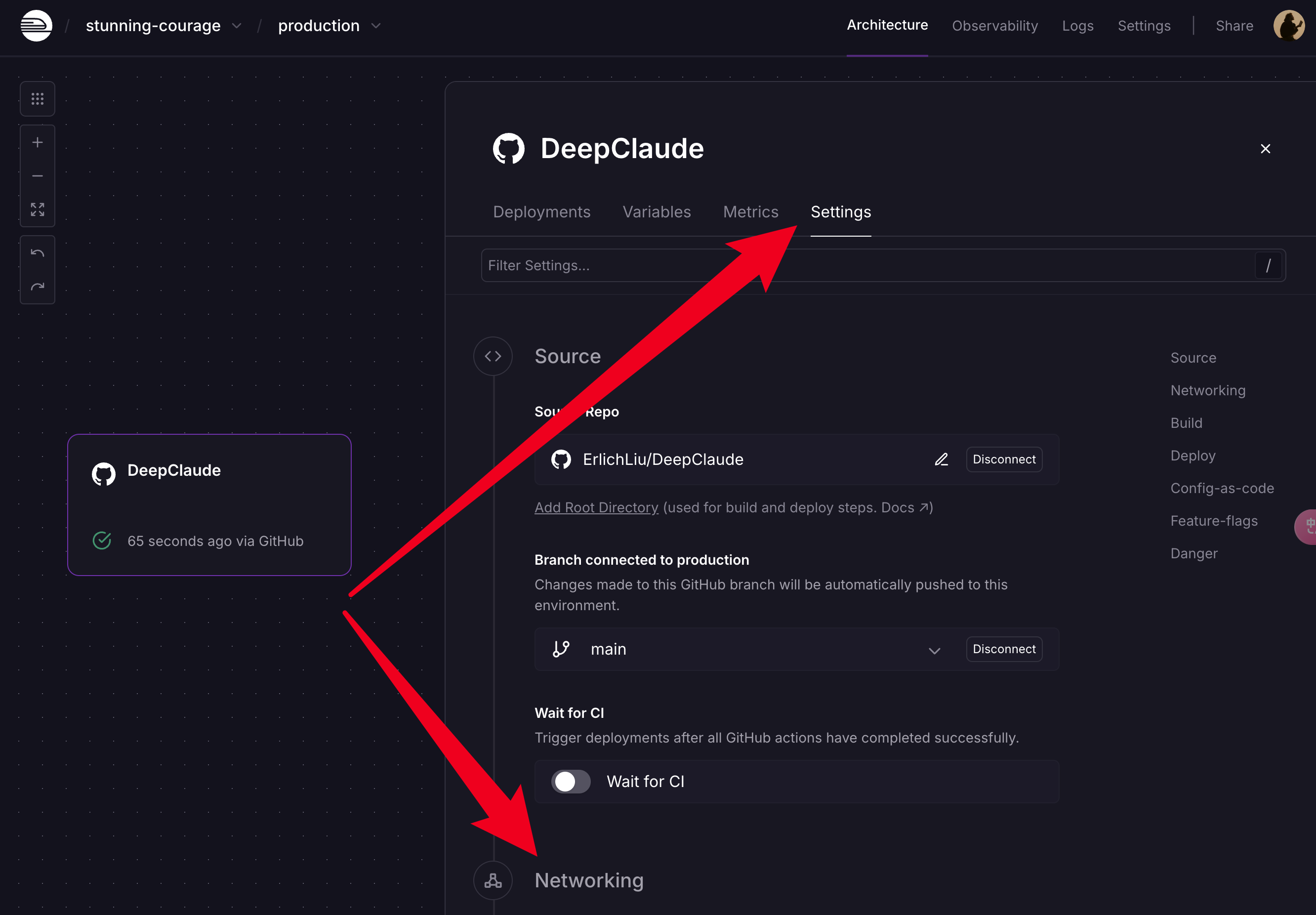

|

| 6 |

+

# To allow all origins, use *

|

| 7 |

+

ALLOW_ORIGINS=*

|

| 8 |

+

|

| 9 |

+

# DeepSeek API key. Defaults to the official DeepSeek API. Only r1-series models are supported (including the llama- and qwen-based dense models; 1.5b, 7b, 8b, 14b, 32b, 70b, 671b)

|

| 10 |

+

# Besides the official DeepSeek r1, the following are also recommended:

|

| 11 |

+

# 1. SiliconFlow's deepseek-ai/DeepSeek-R1. Get an API key at: https://cloud.siliconflow.cn/i/RXikvHE2 (this link grants 20 million free tokens)

|

| 12 |

+

# 2. deepseek-r1-distill-llama-70b hosted by Groq. Get an API key at: https://console.groq.com/docs/models

|

| 13 |

+

# 3. DeepSeek r1 running locally on Ollama; pick a size that fits your RAM and VRAM (7b, 8b, or 14b recommended). Download: https://ollama.com

|

| 14 |

+

DEEPSEEK_API_KEY=your_deepseek_api_key

|

| 15 |

+

DEEPSEEK_API_URL=https://api.deepseek.com/v1/chat/completions # for SiliconFlow, use https://api.siliconflow.cn/v1/chat/completions

|

| 16 |

+

DEEPSEEK_MODEL=deepseek-reasoner # for SiliconFlow, use deepseek-ai/DeepSeek-R1

|

| 17 |

+

|

| 18 |

+

# DeepSeek reasoning output format

|

| 19 |

+

# This variable selects the format in which the reasoning appears in the response body

|

| 20 |

+

# Two formats are currently supported:

|

| 21 |

+

# 1. Origin_Reasoning (reasoning returned in the reasoning_content field); supported by the official DeepSeek deepseek-reasoner model, SiliconFlow's deepseek-ai/DeepSeek-R1, and Volcano Engine's DeepSeek R1

|

| 22 |

+

# 2. <think></think> tag format (typical of models distilled from deepseek r1, including the llama- and qwen-based dense models hosted on various platforms, e.g. the 7b, 8b, 14b, 32b, and 70b versions)

|

| 23 |

+

# Set to true for the Origin_Reasoning format, false for the <think></think> tag format

|

| 24 |

+

IS_ORIGIN_REASONING=true

|

| 25 |

+

|

| 26 |

+

# Claude API key. Defaults to the official Claude API. Only Claude 3.5 Sonnet is recommended; other models are not

|

| 27 |

+

CLAUDE_API_KEY=your_claude_api_key

|

| 28 |

+

CLAUDE_MODEL=claude-3-5-sonnet-20241022

|

| 29 |

+

|

| 30 |

+

# Claude Provider

|

| 31 |

+

# Use anthropic for the official API

|

| 32 |

+

# Use oneapi for relay platforms such as OpenRouter or OneAPI-based relays in China

|

| 33 |

+

CLAUDE_PROVIDER=anthropic

|

| 34 |

+

|

| 35 |

+

# For OpenRouter, set this to the OpenRouter API URL: https://openrouter.ai/api/v1/chat/completions

|

| 36 |

+

# For another relay platform, set this to its API URL, e.g. https://api.oneapi.com/v1/chat/completions

|

| 37 |

+

# Defaults to the Anthropic API URL: https://api.anthropic.com/v1/messages

|

| 38 |

+

CLAUDE_API_URL=https://api.anthropic.com/v1/messages

|

| 39 |

+

|

| 40 |

+

# Logging configuration

|

| 41 |

+

# Allowed values: DEBUG, INFO, WARNING, ERROR, CRITICAL

|

| 42 |

+

LOG_LEVEL=INFO

|
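The comments in `.env.example` describe two values that need a little parsing before use: `ALLOW_ORIGINS` (a comma-separated list, or `*`) and `IS_ORIGIN_REASONING` (a `true`/`false` string). A minimal sketch of how such values could be read is shown here; the helper names `parse_allow_origins`, `parse_bool`, and `load_settings` are hypothetical and are not taken from the repository.

```python
import os
from typing import Mapping, Optional


def parse_allow_origins(raw: str) -> list[str]:
    """Turn an ALLOW_ORIGINS value into a list of origins ('*' means all)."""
    raw = raw.strip()
    if raw == "*":
        return ["*"]
    # Tolerate stray spaces even though the file asks for none.
    return [item.strip() for item in raw.split(",") if item.strip()]


def parse_bool(raw: str, default: bool = True) -> bool:
    """Interpret common true/false spellings; fall back to the default."""
    value = raw.strip().lower()
    if value in ("true", "1", "yes"):
        return True
    if value in ("false", "0", "no"):
        return False
    return default


def load_settings(env: Optional[Mapping[str, str]] = None) -> dict:
    """Collect a few settings from the environment (assumed defaults)."""
    env = os.environ if env is None else env
    return {
        "allow_origins": parse_allow_origins(env.get("ALLOW_ORIGINS", "*")),
        "is_origin_reasoning": parse_bool(env.get("IS_ORIGIN_REASONING", "true")),
        "log_level": env.get("LOG_LEVEL", "INFO").upper(),
    }
```

Passing a plain dict instead of `os.environ` makes the parsing easy to check without touching the real environment.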

.github/workflows/docker-build.yml

ADDED

|

@@ -0,0 +1,61 @@

|

| 1 |

+

name: Docker Build

|

| 2 |

+

|

| 3 |

+

on:

|

| 4 |

+

push:

|

| 5 |

+

branches: [ "main" ]

|

| 6 |

+

workflow_dispatch:

|

| 7 |

+

|

| 8 |

+

jobs:

|

| 9 |

+

check-repository:

|

| 10 |

+

runs-on: ubuntu-latest

|

| 11 |

+

outputs:

|

| 12 |

+

is_original: ${{ steps.check.outputs.is_original }}

|

| 13 |

+

steps:

|

| 14 |

+

- id: check

|

| 15 |

+

run: |

|

| 16 |

+

if [ "${{ github.repository }}" = "ErlichLiu/DeepClaude" ]; then

|

| 17 |

+

echo "is_original=true" >> $GITHUB_OUTPUT

|

| 18 |

+

else

|

| 19 |

+

echo "is_original=false" >> $GITHUB_OUTPUT

|

| 20 |

+

fi

|

| 21 |

+

|

| 22 |

+

build:

|

| 23 |

+

needs: check-repository

|

| 24 |

+

if: needs.check-repository.outputs.is_original == 'true'

|

| 25 |

+

runs-on: ubuntu-latest

|

| 26 |

+

permissions:

|

| 27 |

+

packages: write

|

| 28 |

+

contents: read

|

| 29 |

+

|

| 30 |

+

steps:

|

| 31 |

+

- uses: actions/checkout@v4

|

| 32 |

+

|

| 33 |

+

- name: Login to GitHub Container Registry

|

| 34 |

+

uses: docker/login-action@v3

|

| 35 |

+

with:

|

| 36 |

+

registry: ghcr.io

|

| 37 |

+

username: ${{ github.repository_owner }}

|

| 38 |

+

password: ${{ secrets.GITHUB_TOKEN }}

|

| 39 |

+

|

| 40 |

+

- name: Set lowercase variables

|

| 41 |

+

run: |

|

| 42 |

+

OWNER_LOWER=$(echo "${{ github.repository_owner }}" | tr '[:upper:]' '[:lower:]')

|

| 43 |

+

REPO_NAME_LOWER=$(echo "${{ github.event.repository.name }}" | tr '[:upper:]' '[:lower:]')

|

| 44 |

+

echo "OWNER_LOWER=$OWNER_LOWER" >> $GITHUB_ENV

|

| 45 |

+

echo "REPO_NAME_LOWER=$REPO_NAME_LOWER" >> $GITHUB_ENV

|

| 46 |

+

|

| 47 |

+

- name: Set up Docker Buildx

|

| 48 |

+

uses: docker/setup-buildx-action@v3

|

| 49 |

+

with:

|

| 50 |

+

driver: docker-container

|

| 51 |

+

|

| 52 |

+

- name: Build and push Docker image

|

| 53 |

+

uses: docker/build-push-action@v5

|

| 54 |

+

with:

|

| 55 |

+

context: .

|

| 56 |

+

file: ./Dockerfile

|

| 57 |

+

push: true

|

| 58 |

+

platforms: linux/amd64,linux/arm64

|

| 59 |

+

tags: |

|

| 60 |

+

ghcr.io/${{ env.OWNER_LOWER }}/${{ env.REPO_NAME_LOWER }}:latest

|

| 61 |

+

ghcr.io/${{ env.OWNER_LOWER }}/${{ env.REPO_NAME_LOWER }}:${{ github.sha }}

|
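The "Set lowercase variables" step exists because container image references must be lowercase, while GitHub owner and repository names may contain capitals. As a rough illustration of what the workflow computes, the same tag construction can be sketched in Python; `image_tags` is a hypothetical helper, not part of the repository.

```python
def image_tags(owner: str, repo: str, sha: str, registry: str = "ghcr.io") -> list[str]:
    """Build the two image tags the workflow pushes: ':latest' and ':<sha>'."""
    # Mirrors `tr '[:upper:]' '[:lower:]'` for ASCII owner/repository names.
    base = f"{registry}/{owner.lower()}/{repo.lower()}"
    return [f"{base}:latest", f"{base}:{sha}"]


if __name__ == "__main__":
    for tag in image_tags("ErlichLiu", "DeepClaude", "5693654"):
        print(tag)
```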

.github/workflows/security-scan.yml

ADDED

|

@@ -0,0 +1,43 @@

|

| 1 |

+

name: Security Scan

|

| 2 |

+

|

| 3 |

+

on:

|

| 4 |

+

schedule:

|

| 5 |

+

- cron: '0 0 * * 0' # runs once a week

|

| 6 |

+

workflow_dispatch:

|

| 7 |

+

|

| 8 |

+

jobs:

|

| 9 |

+

check-repository:

|

| 10 |

+

runs-on: ubuntu-latest

|

| 11 |

+

outputs:

|

| 12 |

+

is_original: ${{ steps.check.outputs.is_original }}

|

| 13 |

+

steps:

|

| 14 |

+

- id: check

|

| 15 |

+

run: |

|

| 16 |

+

if [ "${{ github.repository }}" = "ErlichLiu/DeepClaude" ]; then

|

| 17 |

+

echo "is_original=true" >> $GITHUB_OUTPUT

|

| 18 |

+

else

|

| 19 |

+

echo "is_original=false" >> $GITHUB_OUTPUT

|

| 20 |

+

fi

|

| 21 |

+

|

| 22 |

+

scan:

|

| 23 |

+

needs: check-repository

|

| 24 |

+

if: needs.check-repository.outputs.is_original == 'true'

|

| 25 |

+

runs-on: ubuntu-latest

|

| 26 |

+

steps:

|

| 27 |

+

- uses: actions/checkout@v4

|

| 28 |

+

|

| 29 |

+

- name: Set lowercase variables

|

| 30 |

+

run: |

|

| 31 |

+

OWNER_LOWER=$(echo "${{ github.repository_owner }}" | tr '[:upper:]' '[:lower:]')

|

| 32 |

+

REPO_NAME_LOWER=$(echo "${{ github.event.repository.name }}" | tr '[:upper:]' '[:lower:]')

|

| 33 |

+

echo "OWNER_LOWER=$OWNER_LOWER" >> $GITHUB_ENV

|

| 34 |

+

echo "REPO_NAME_LOWER=$REPO_NAME_LOWER" >> $GITHUB_ENV

|

| 35 |

+

|

| 36 |

+

- name: Run Trivy vulnerability scanner

|

| 37 |

+

uses: aquasecurity/trivy-action@master

|

| 38 |

+

with:

|

| 39 |

+

image-ref: ghcr.io/${{ env.OWNER_LOWER }}/${{ env.REPO_NAME_LOWER }}:latest

|

| 40 |

+

format: 'table'

|

| 41 |

+

exit-code: '1'

|

| 42 |

+

ignore-unfixed: true

|

| 43 |

+

severity: 'CRITICAL'

|

.github/workflows/sync.yml

ADDED

|

@@ -0,0 +1,60 @@

|

| 1 |

+

name: Upstream Sync

|

| 2 |

+

|

| 3 |

+

on:

|

| 4 |

+

schedule:

|

| 5 |

+

- cron: "0 0 * * *"

|

| 6 |

+

workflow_dispatch:

|

| 7 |

+

|

| 8 |

+

jobs:

|

| 9 |

+

# Check whether this repository is a fork

|

| 10 |

+

check-repository:

|

| 11 |

+

name: Check Repository Type

|

| 12 |

+

runs-on: ubuntu-latest

|

| 13 |

+

outputs:

|

| 14 |

+

is_fork: ${{ steps.check.outputs.is_fork }}

|

| 15 |

+

steps:

|

| 16 |

+

- id: check

|

| 17 |

+

run: |

|

| 18 |

+

if [ "${{ github.repository }}" != "ErlichLiu/DeepClaude" ]; then

|

| 19 |

+

echo "is_fork=true" >> $GITHUB_OUTPUT

|

| 20 |

+

else

|

| 21 |

+

echo "is_fork=false" >> $GITHUB_OUTPUT

|

| 22 |

+

fi

|

| 23 |

+

|

| 24 |

+

# Sync upstream changes

|

| 25 |

+

sync:

|

| 26 |

+

needs: check-repository

|

| 27 |

+

if: needs.check-repository.outputs.is_fork == 'true'

|

| 28 |

+

name: Sync Latest From Upstream

|

| 29 |

+

runs-on: ubuntu-latest

|

| 30 |

+

steps:

|

| 31 |

+

# Standard checkout

|

| 32 |

+

- name: Checkout target repo

|

| 33 |

+

uses: actions/checkout@v4

|

| 34 |

+

# If upstream changes files under .github/workflows/, a token with the workflow permission is required

|

| 35 |

+

# with:

|

| 36 |

+

# token: ${{ secrets.ACTION_TOKEN }}

|

| 37 |

+

|

| 38 |

+

# Get the branch name (pull-request and regular-commit cases are handled separately)

|

| 39 |

+

- name: Get branch name (merge)

|

| 40 |

+

if: github.event_name != 'pull_request'

|

| 41 |

+

shell: bash

|

| 42 |

+

run: echo "BRANCH_NAME=$(echo ${GITHUB_REF#refs/heads/} | tr / -)" >> $GITHUB_ENV

|

| 43 |

+

|

| 44 |

+

- name: Get branch name (pull request)

|

| 45 |

+

if: github.event_name == 'pull_request'

|

| 46 |

+

shell: bash

|

| 47 |

+

run: echo "BRANCH_NAME=$(echo ${GITHUB_HEAD_REF} | tr / -)" >> $GITHUB_ENV

|

| 48 |

+

|

| 49 |

+

# Run the sync action

|

| 50 |

+

- name: Sync upstream changes

|

| 51 |

+

id: sync

|

| 52 |

+

uses: aormsby/[email protected]

|

| 53 |

+

with:

|

| 54 |

+

upstream_sync_repo: ErlichLiu/DeepClaude

|

| 55 |

+

upstream_sync_branch: ${{ env.BRANCH_NAME }}

|

| 56 |

+

target_sync_branch: ${{ env.BRANCH_NAME }}

|

| 57 |

+

target_repo_token: ${{ secrets.GITHUB_TOKEN }}

|

| 58 |

+

upstream_pull_args: --allow-unrelated-histories --no-edit

|

| 59 |

+

shallow_since: '1 days ago'

|

| 60 |

+

test_mode: false

|
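The two "Get branch name" steps use shell parameter expansion (`${GITHUB_REF#refs/heads/}`) and `tr / -` to derive `BRANCH_NAME`. For readers less familiar with that syntax, the same logic can be sketched in Python; `branch_name` is a hypothetical function written only to illustrate the behaviour.

```python
def branch_name(event_name: str, github_ref: str, github_head_ref: str = "") -> str:
    """Derive the branch name the way the workflow's two shell steps do."""
    if event_name == "pull_request":
        # Pull requests expose the source branch in GITHUB_HEAD_REF.
        raw = github_head_ref
    else:
        # Equivalent of ${GITHUB_REF#refs/heads/}: strip the prefix if present.
        raw = github_ref.removeprefix("refs/heads/")
    # Equivalent of `tr / -`: every slash becomes a dash.
    return raw.replace("/", "-")
```

Note that `str.removeprefix` needs Python 3.9 or newer, which is compatible with the 3.11 pinned in `.python-version`.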

.gitignore

ADDED

|

@@ -0,0 +1,20 @@

|

| 1 |

+

# Python-generated files

|

| 2 |

+

__pycache__/

|

| 3 |

+

*.py[oc]

|

| 4 |

+

build/

|

| 5 |

+

dist/

|

| 6 |

+

wheels/

|

| 7 |

+

*.egg-info

|

| 8 |

+

|

| 9 |

+

# Environment

|

| 10 |

+

.env

|

| 11 |

+

.env.*

|

| 12 |

+

!.env.example

|

| 13 |

+

|

| 14 |

+

# Virtual environments

|

| 15 |

+

.venv

|

| 16 |

+

|

| 17 |

+

# Windsurf

|

| 18 |

+

.windsurfrules

|

| 19 |

+

|

| 20 |

+

docker-compose.yml

|

.python-version

ADDED

|

@@ -0,0 +1 @@

|

| 1 |

+

3.11

|

Dockerfile

ADDED

|

@@ -0,0 +1,27 @@

|

| 1 |

+

# Use the Python 3.11 slim image as the base

|

| 2 |

+

FROM python:3.11-slim

|

| 3 |

+

|

| 4 |

+

# Set the working directory

|

| 5 |

+

WORKDIR /app

|

| 6 |

+

|

| 7 |

+

# Set environment variables

|

| 8 |

+

ENV PYTHONUNBUFFERED=1 \

|

| 9 |

+

PYTHONDONTWRITEBYTECODE=1

|

| 10 |

+

|

| 11 |

+

# Install dependencies

|

| 12 |

+

RUN pip install --no-cache-dir \

|

| 13 |

+

aiohttp==3.11.11 \

|

| 14 |

+

colorlog==6.9.0 \

|

| 15 |

+

fastapi==0.115.8 \

|

| 16 |

+

python-dotenv==1.0.1 \

|

| 17 |

+

tiktoken==0.8.0 \

|

| 18 |

+

"uvicorn[standard]"

|

| 19 |

+

|

| 20 |

+

# Copy project files

|

| 21 |

+

COPY ./app ./app

|

| 22 |

+

|

| 23 |

+

# Expose the port

|

| 24 |

+

EXPOSE 8000

|

| 25 |

+

|

| 26 |

+

# Start command

|

| 27 |

+

CMD ["python", "-m", "uvicorn", "app.main:app", "--host", "0.0.0.0", "--port", "8000"]

|

README.md

ADDED

|

@@ -0,0 +1,279 @@

|

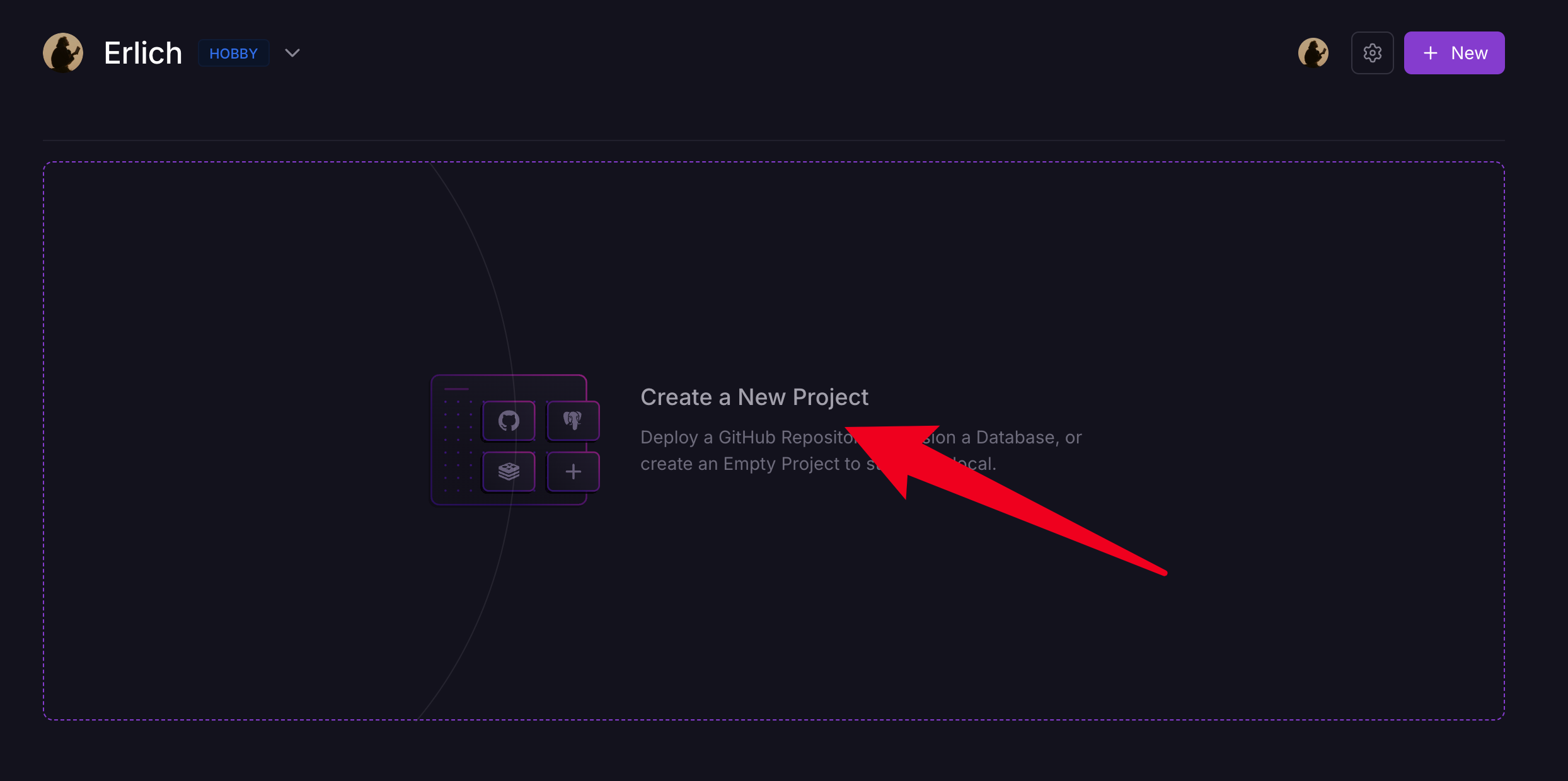

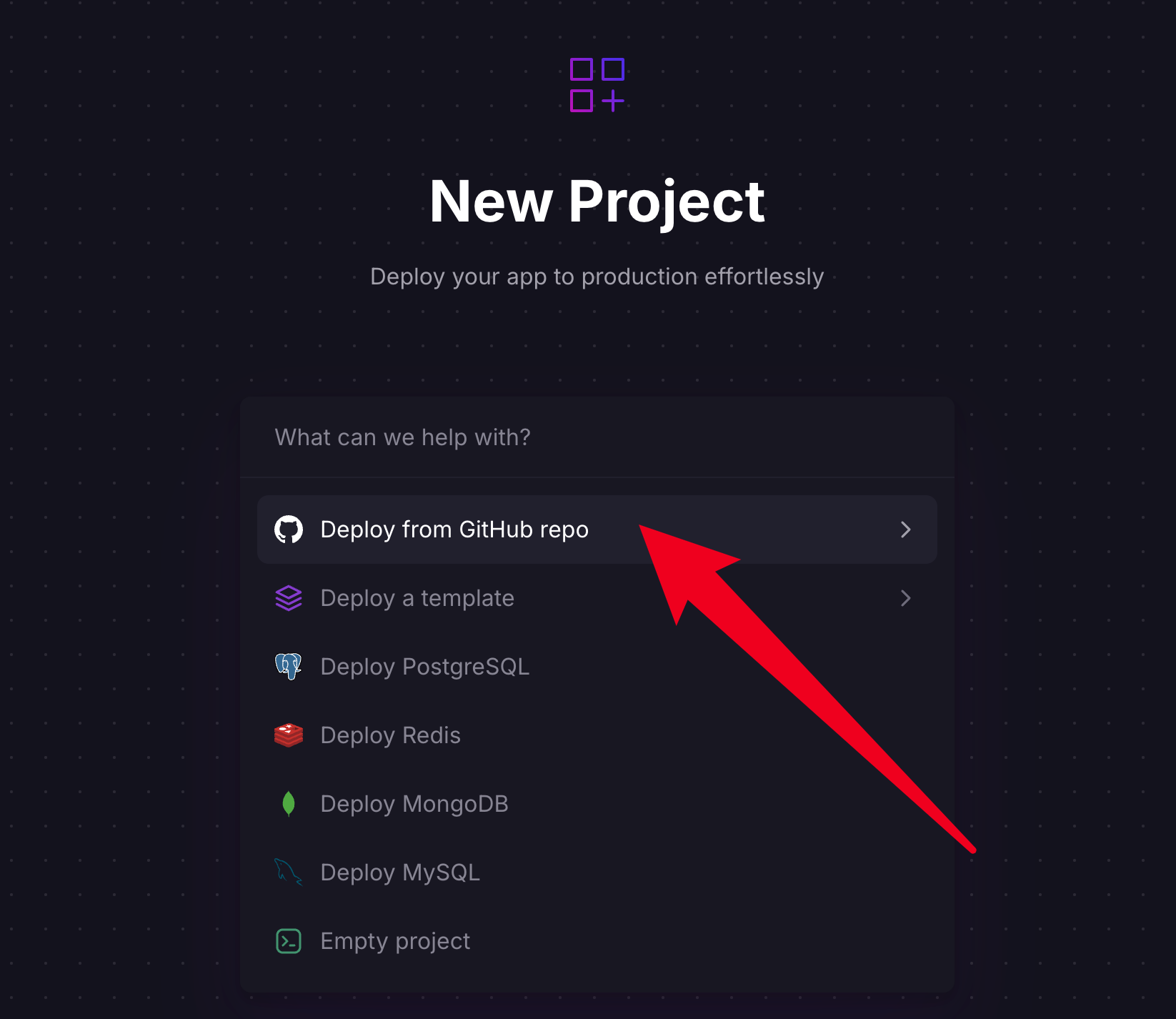

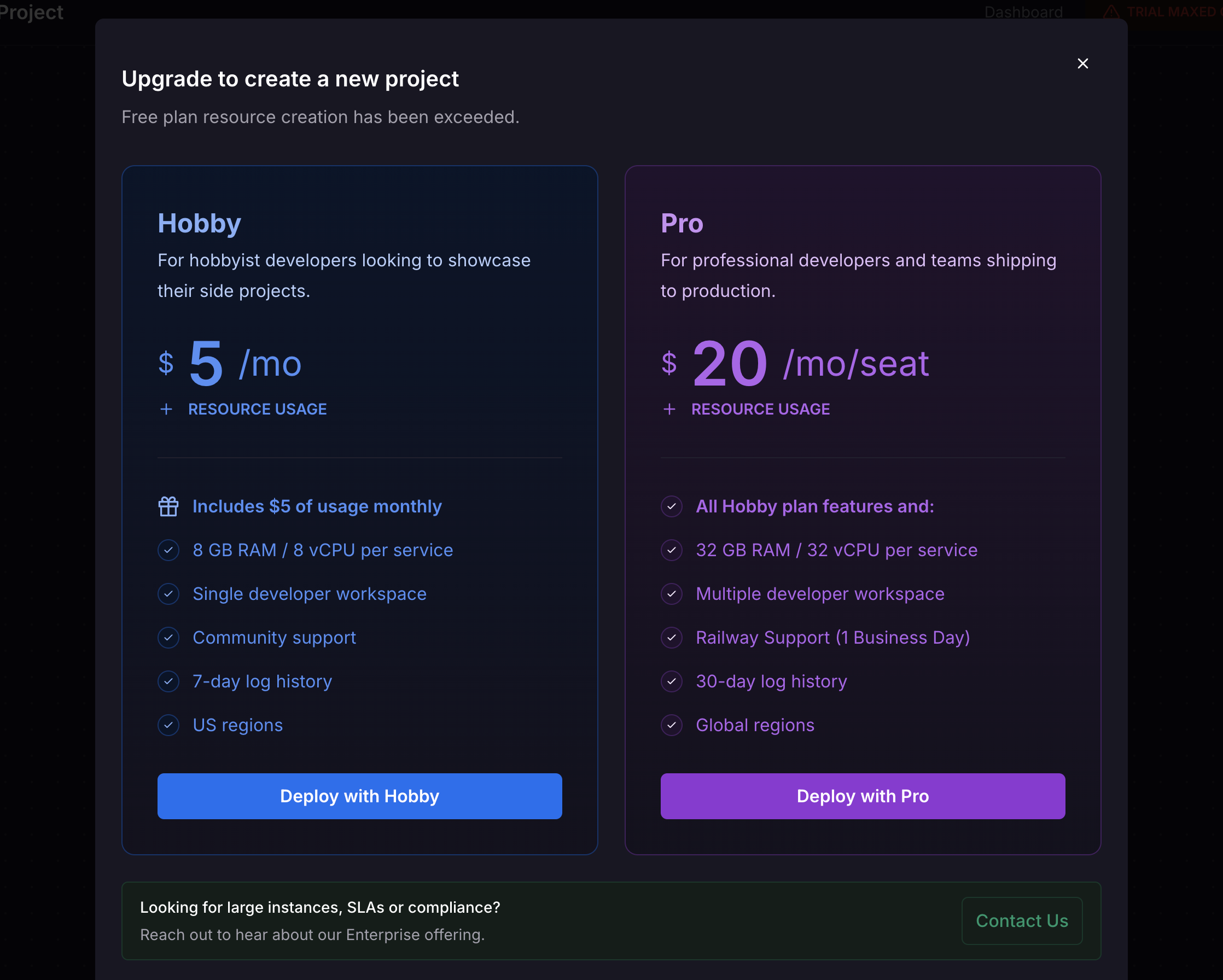

| 1 |

+

<div>

|

| 2 |

+

<h1>DeepClaude 🐬🧠 - OpenAI Compatible</h1>

|

| 3 |

+

|

| 4 |

+

<a href="https://github.com/getasterisk/deepclaude"> Inspiration from getasterisk/deepclaude</a>

|

| 5 |

+

|

| 6 |

+

[](#)

|

| 7 |

+

[](https://openai.com)

|

| 8 |

+

|

| 9 |

+

</div>

|

| 10 |

+

|

| 11 |

+

<div>

|

| 12 |

+

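<h3 style="color: #FF9909"> Note: if you are not comfortable with deployment and just want to use the strongest DeepClaude combination, contact Erlich (WeChat: geekthings) to buy a pay-as-you-go API, which is directly accessible from mainland China </h3>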

|

| 13 |

+

</div>

|

| 14 |

+

|

| 15 |

+

---

|

| 16 |

+

|

| 17 |

+

<details>

|

| 18 |

+

<summary><strong>Changelog:</strong></summary>

|

| 19 |

+

<div>

|

| 20 |

+

2025-02-08.2: Support non-streaming requests and an OpenAI-compatible models endpoint. (⚠️ Accurate token-usage accounting is not implemented yet; it will follow later)

|

| 21 |

+

|

| 22 |

+

2025-02-08.1: Add GitHub Actions: automatic fork sync, automatic builds of the latest Docker image, and docker-compose deployment

|

| 23 |

+

|

| 24 |

+

2025-02-07.2: Fix a bug where an out-of-range Claude temperature parameter could cause requests to fail

|

| 25 |

+

|

| 26 |

+

2025-02-07.1: Support Claude parameters such as temperature; add more detailed notes to .env.example

|

| 27 |

+

|

| 28 |

+

2025-02-06.1: Fix a bug where reasoning content could not be retrieved from models without native reasoning support

|

| 29 |

+

|

| 30 |

+

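2025-02-05.1: Add an environment variable that declares whether the model natively supports the reasoning field (the full-size version usually does)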

|

| 31 |

+

|

| 32 |

+

2025-02-04.2: Support CORS configuration via .env

|

| 33 |

+

|

| 34 |

+

2025-02-04.1: Support OpenRouter, OneAPI, and other relay providers as the supplier for the Claude part

|

| 35 |

+

|

| 36 |

+

2025-02-03.3: Support OpenRouter as a Claude provider; see .env.example for details

|

| 37 |

+

|

| 38 |

+

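2025-02-03.2: Since deepseek r1 has to some extent established a convention, we follow the same reasoning-annotation convention, for better compatibility with clients that support it well, such as Cherry Studio.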

|

| 39 |

+

|

| 40 |

+

2025-02-03.1: SiliconFlow changed the response structure of DeepSeek R1; the new structure is now supported

|

| 41 |

+

|

| 42 |

+

</div>

|

| 43 |

+

</details>

|

| 44 |

+

|

| 45 |

+

# Table of Contents

|

| 46 |

+

|

| 47 |

+

- [Table of Contents](#table-of-contents)

|

| 48 |

+

- [Introduction](#introduction)

|

| 49 |

+

- [Implementation](#implementation)

|

| 50 |

+

- [How to run](#how-to-run)

|

| 51 |

+

- [1. Get the required API keys](#1-获得运行所需的-api)

|

| 52 |

+

- [2. Run it (locally)](#2-开始运行本地运行)

|

| 53 |

+

- [Deployment](#deployment)

|

| 54 |

+

- [Railway one-click deployment (recommended)](#railway-一键部署推荐)

|

| 55 |

+

- [Zeabur one-click deployment (may occasionally hit a domain-generation issue that requires recreating the project)](#zeabur-一键部署一定概率下会遇到-domain-生成问题需要重新创建-project-部署)

|

| 56 |

+

- [Deploy with docker-compose (the Docker image is rebuilt automatically from the main branch)](#使用-docker-compose-部署docker-镜像将随着-main-分支自动更新到最新)

|

| 57 |

+

- [Docker deployment (build it yourself)](#docker-部署自行-build)

|

| 58 |

+

- [Automatic fork sync](#automatic-fork-sync)

|

| 59 |

+

- [Technology Stack](#technology-stack)

|

| 60 |

+

- [Star History](#star-history)

|

| 61 |

+

- [Buy me a coffee](#buy-me-a-coffee)

|

| 62 |

+

- [About Me](#about-me)

|

| 63 |

+

|

| 64 |

+

# Introduction

|

| 65 |

+

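DeepSeek recently released the [DeepSeek R1 model](https://platform.deepseek.com), whose reasoning ability is in the top tier. On some everyday tasks, however, its output may still fall short of Claude 3.5 Sonnet. The Aider team recently published a study showing that [combining DeepSeek R1 with Claude 3.5 Sonnet gives the best results](https://aider.chat/2025/01/24/r1-sonnet.html).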

|

| 66 |

+

|

| 67 |

+

<img src="https://img.erlich.fun/personal-blog/uPic/heiQYX.png" alt="deepseek r1 and sonnet benchmark" style="width: 400px;"/>

|

| 68 |

+

|

| 69 |

+

> **R1 as architect with Sonnet as editor has set a new SOTA of 64.0%** on the [aider polyglot benchmark](https://aider.chat/2024/12/21/polyglot.html). They achieve this at **14X less cost** compared to the previous o1 SOTA result.

|

| 70 |

+

|

| 71 |

+

An [open-source demo](https://github.com/getasterisk/deepclaude) is also available, and you can try it online from that project.

|

| 72 |

+

|

| 73 |

+

|

| 74 |

+

|

| 75 |

+

This project is inspired by that one. It is a complete rewrite in FastAPI that supports the OpenAI-compatible format, the official DeepSeek API, and third-party hosted APIs.

|

| 76 |

+

|

| 77 |

+

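You can run it on your own server and expose an open API, then plug it into [OneAPI](https://github.com/songquanpeng/one-api) or similar tools for unified distribution (token-usage accounting still needs work). You can also connect it to your everyday chat client, or to tools such as [Cursor](https://www.cursor.com/) for better coding results (Claude streaming output with tool use still needs work).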

|

| 78 |

+

|

| 79 |

+

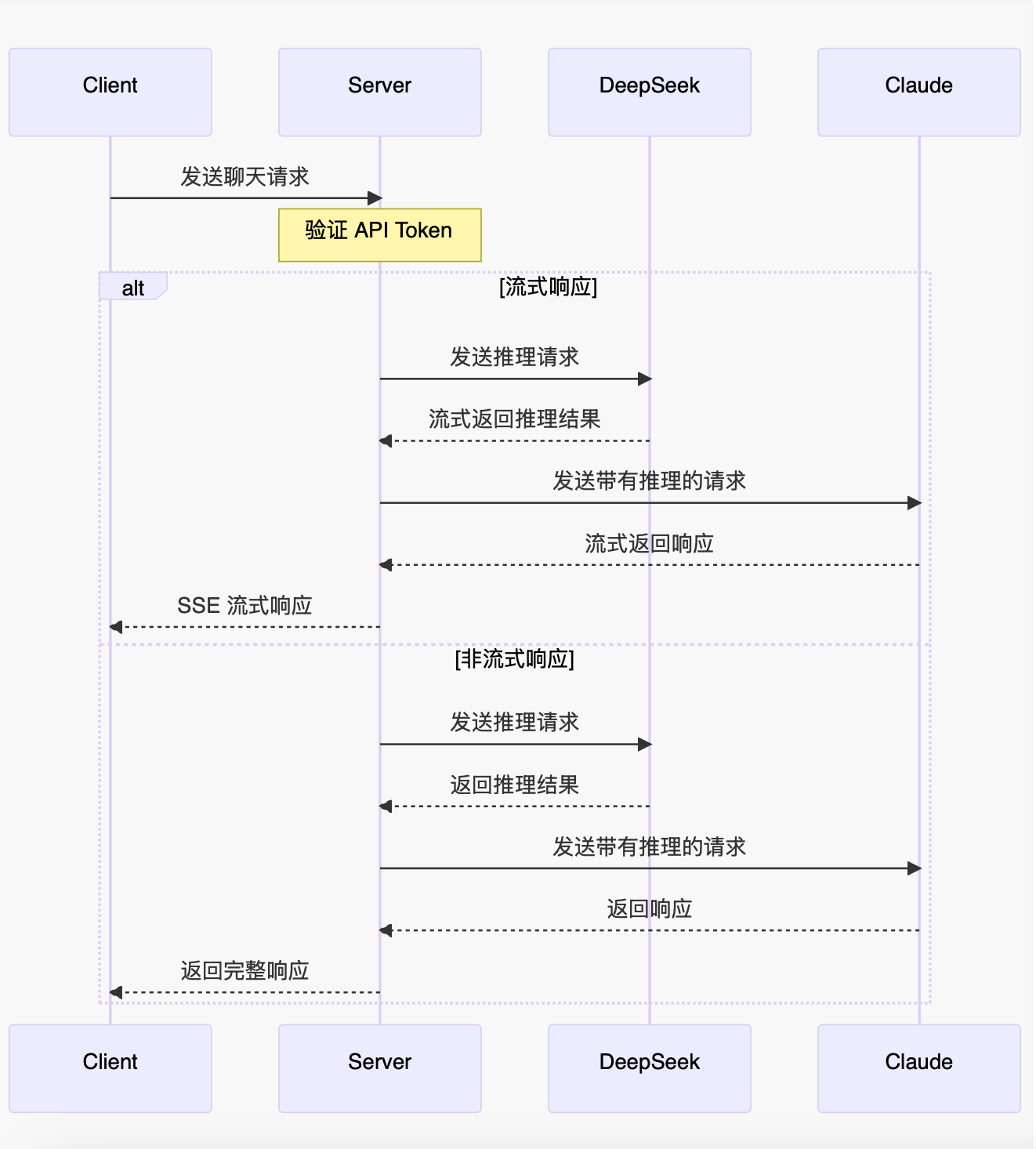

# Implementation

|

| 80 |

+

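⚠️Notice: Only streaming output is supported for now (it is the most efficient mode and wastes no time). Next up is a mode in which the first stage, DeepSeek reasoning, is streamed while Claude's output is non-streaming (to save time).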

|
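The first stage of the pipeline depends on pulling DeepSeek's reasoning out of the response, and `.env.example` describes two formats for it: a separate `reasoning_content` field, or `<think></think>` tags embedded in the content. A minimal sketch of separating reasoning from answer for a complete (non-streamed) message follows; `split_reasoning` is a hypothetical helper and is not the project's actual implementation.

```python
import re

# Non-greedy match so only the first <think>...</think> block is captured.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(message: dict, is_origin_reasoning: bool) -> tuple[str, str]:
    """Return (reasoning, answer) from a chat message dict."""
    content = message.get("content") or ""
    if is_origin_reasoning:
        # Origin_Reasoning: the provider returns reasoning in its own field.
        return (message.get("reasoning_content") or "", content)
    match = THINK_RE.search(content)
    if match is None:
        # No tags found: treat everything as the answer.
        return ("", content)
    reasoning = match.group(1).strip()
    answer = (content[:match.start()] + content[match.end():]).strip()
    return (reasoning, answer)
```

A streaming implementation would need to track whether it is currently inside the tags across chunks; this sketch only covers the simpler whole-message case.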

| 81 |

+

|

| 82 |

+

|

| 83 |

+

|

| 84 |

+

# How to run

|

| 85 |

+

|

| 86 |

+

> The project can run locally or on a server. When running locally, it can be paired with Ollama to combine a local DeepSeek R1 with Claude

|

| 87 |

+

|

| 88 |

+

|

| 89 |

+

## 1. 获得运行所需的 API

|

| 90 |

+

|

| 91 |

+

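1. Get a DeepSeek API key. DeepSeek has recently been under attack and is often unavailable, so SiliconFlow is recommended for better results (a local Ollama model also works): https://cloud.siliconflow.cn/i/RXikvHE2 (this link grants 20 million free tokens)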

|

| 92 |

+

2. Get a Claude API key (relay mode and compatibility with the Google- and AWS-hosted versions are not implemented yet; PRs welcome): https://console.anthropic.com

|

| 93 |

+

|

| 94 |

+

## 2. 开始运行(本地运行)

|

| 95 |

+

Step 1. Clone the project into a suitable folder and enter it

|

| 96 |

+

|

| 97 |

+

```bash

|

| 98 |

+

git clone git@github.com:ErlichLiu/DeepClaude.git

|

| 99 |

+

cd DeepClaude

|

| 100 |

+

```

|

| 101 |

+

|

| 102 |

+

Step 2. Install dependencies with uv (if you have not installed uv yet, see the note below)

|

| 103 |

+

|

| 104 |

+

```bash

|

| 105 |

+

# Create a local virtual environment with uv and install dependencies

|

| 106 |

+

uv sync

|

| 107 |

+

# Activate the virtual environment on macOS

|

| 108 |

+

source .venv/bin/activate

|

| 109 |

+

# Activate the virtual environment on Windows

|

| 110 |

+

.venv\Scripts\activate

|

| 111 |

+

```

|

| 112 |

+

|

| 113 |

+

Step 3. Configure environment variables

|

| 114 |

+

|

| 115 |

+

```bash

|

| 116 |

+

# Copy the example environment file to .env

|

| 117 |

+

cp .env.example .env

|

| 118 |

+

```

|

| 119 |

+

|

| 120 |

+

Step 4. Fill in the settings one by one, following the comments in the environment file (Ollama can be configured in this step)

|

| 121 |

+

|

| 122 |

+

Step 5. Run the program locally

|

| 123 |

+

|

| 124 |

+

```bash

|

| 125 |

+

# Run locally

|

| 126 |

+

uvicorn app.main:app

|

| 127 |

+

```

|

| 128 |

+

|

| 129 |

+

Step 6. Point your chat client at the program (recommended: [NextChat](https://nextchat.dev/), [ChatBox](https://chatboxai.app/zh), [LobeChat](https://lobechat.com/))

|

| 130 |

+

|

| 131 |

+

```bash

|

| 132 |

+

# The baseUrl is usually: http://127.0.0.1:8000/v1

|

| 133 |

+

```

|
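Besides a chat client, the local endpoint can be called from code. A minimal sketch of building an OpenAI-style chat request against the baseUrl above, using only the standard library, is shown here. The model name `"deepclaude"` and the helper `build_chat_request` are assumptions made for illustration; the `Authorization` value should be whatever was set as `ALLOW_API_KEY`.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, prompt: str,
                       model: str = "deepclaude") -> urllib.request.Request:
    """Build (but do not send) a POST request for /chat/completions."""
    payload = {
        "model": model,  # assumed model name, adjust to your deployment
        "messages": [{"role": "user", "content": prompt}],
        "stream": True,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
```

With the server running, the request could be sent with `urllib.request.urlopen(req)` and the streamed body read line by line.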

| 134 |

+

|

| 135 |

+

**Note: this project uses uv as its package manager, a faster, more modern replacement for pip. You can [learn more here](https://docs.astral.sh/uv/)**

|

| 136 |

+

|

| 137 |

+

|

| 138 |

+

|

| 139 |

+

# Deployment

|

| 140 |

+

|

| 141 |

+

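> The project supports Docker deployment on a server. You can call it from common chat clients, or treat it as a channel and plug it into products such as [OneAPI](https://github.com/songquanpeng/one-api) as a special `DeepClaude` model.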

|

| 142 |

+

|

| 143 |

+

## Railway 一键部署(推荐)

|

| 144 |

+

<details>

|

| 145 |

+

<summary><strong>One-click deployment to Railway</strong></summary>

|

| 146 |

+

|

| 147 |

+

<div>

|

| 148 |

+

1. First, fork the repository.

|

| 149 |

+

|

| 150 |

+

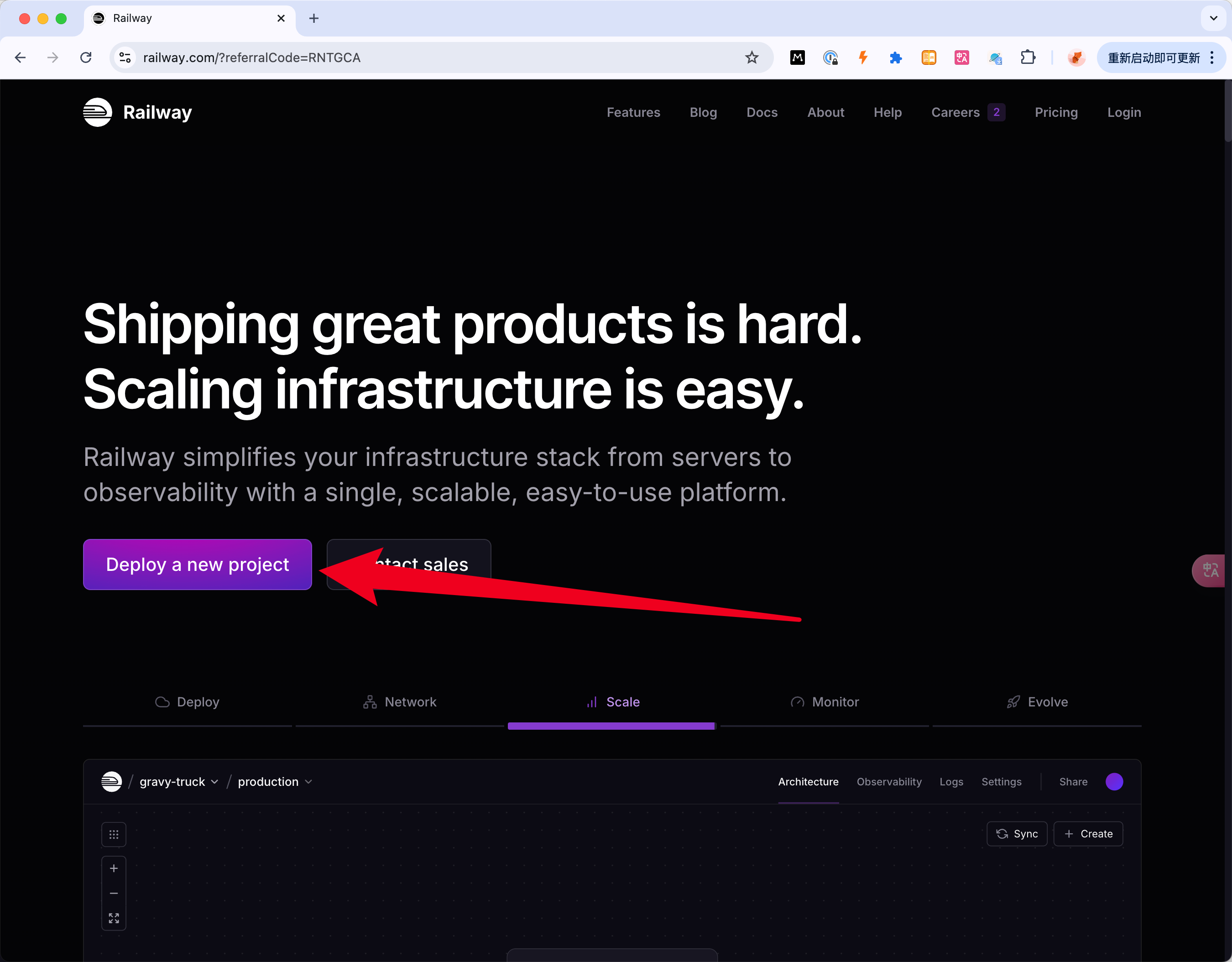

2. Open the Railway home page: https://railway.com?referralCode=RNTGCA

|

| 151 |

+

|

| 152 |

+

3. Click `Deploy a new project`

|

| 153 |

+

|

| 154 |

+

|

| 155 |

+

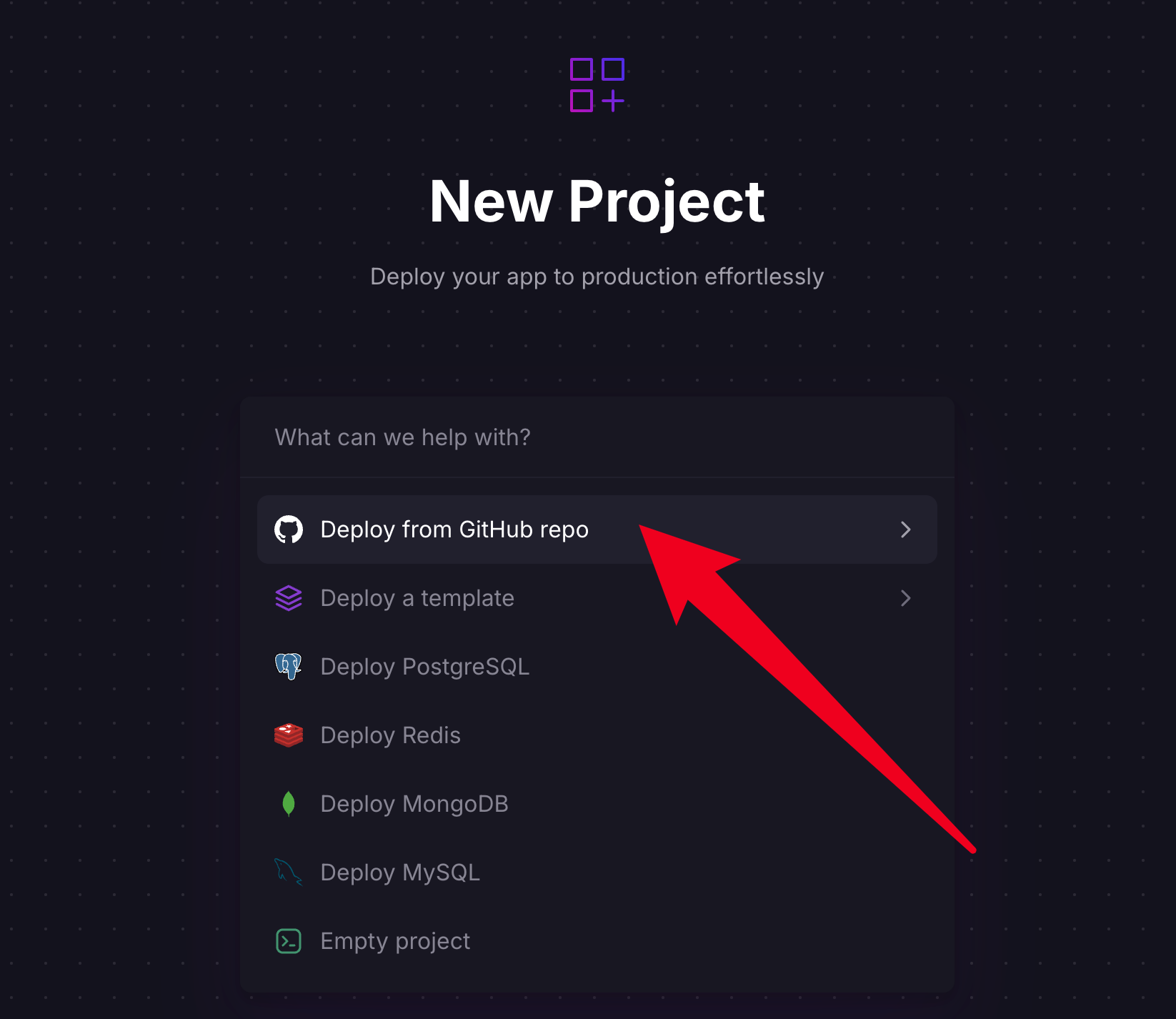

4. Click `Deploy from GitHub repo`

|

| 156 |

+

|

| 157 |

+

|

| 158 |

+

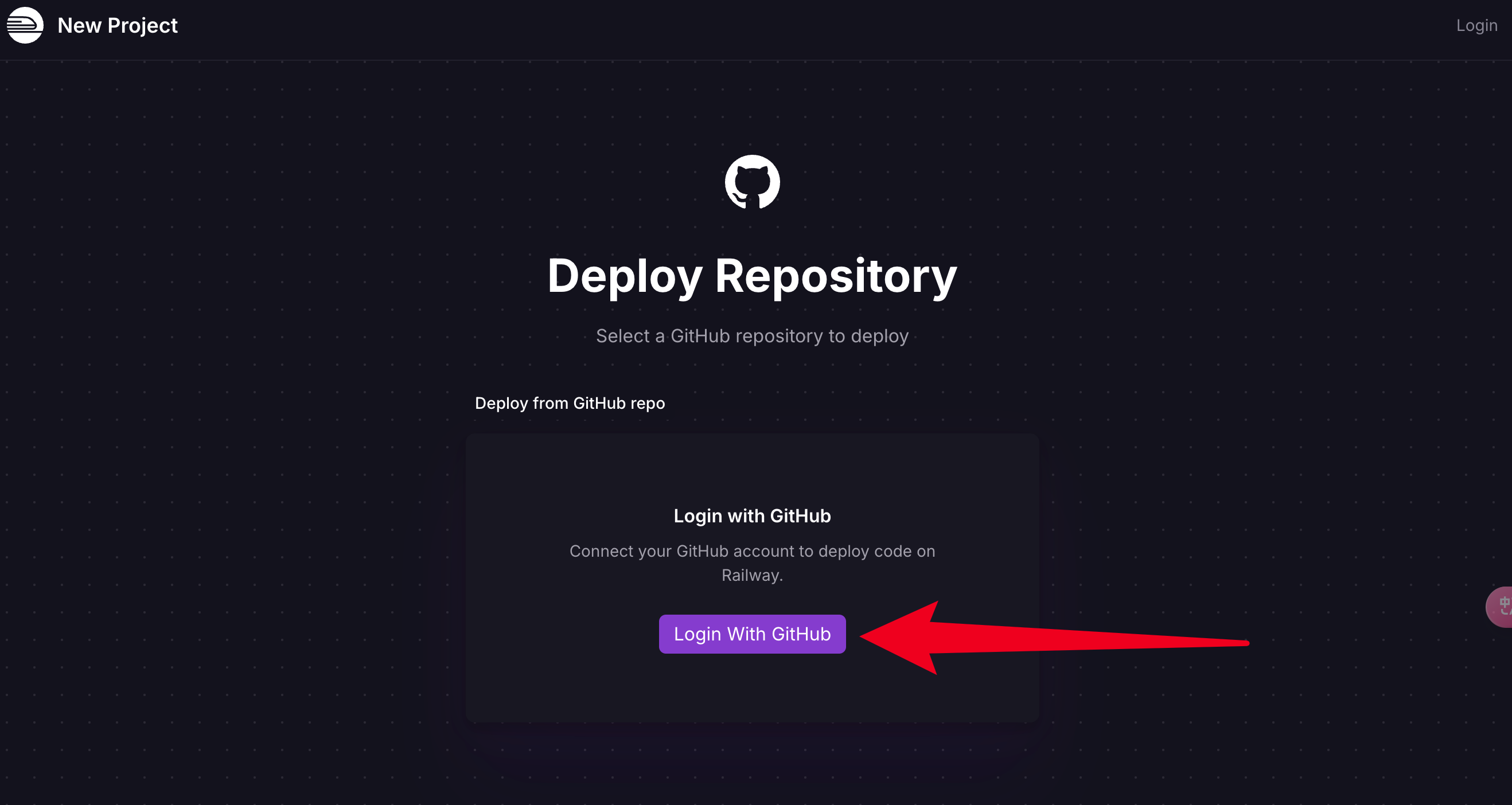

5. Click `Login with GitHub`

|

| 159 |

+

|

| 160 |

+

|

| 161 |

+

6. Choose to upgrade; the $5 Hobby Plan is enough

|

| 162 |

+

|

| 163 |

+

|

| 164 |

+

|

| 165 |

+

7. Click `Create a New Project`

|

| 166 |

+

|

| 167 |

+

|

| 168 |

+

8. Select `Deploy from GitHub repo` again

|

| 169 |

+

|

| 170 |

+

|

| 171 |

+

9. Search for `DeepClaude` in the input box and select it.

|

| 172 |

+

|

| 173 |

+

|

| 174 |

+

10. Select `Variable`, click the `New Variable` button, and fill in the key-value pairs from the environment file

|

| 175 |

+

|

| 176 |

+

|

| 177 |

+

11. When done, click the `Deploy` button again; deployment finishes after a few seconds

|

| 178 |

+

|

| 179 |

+

|

| 180 |

+

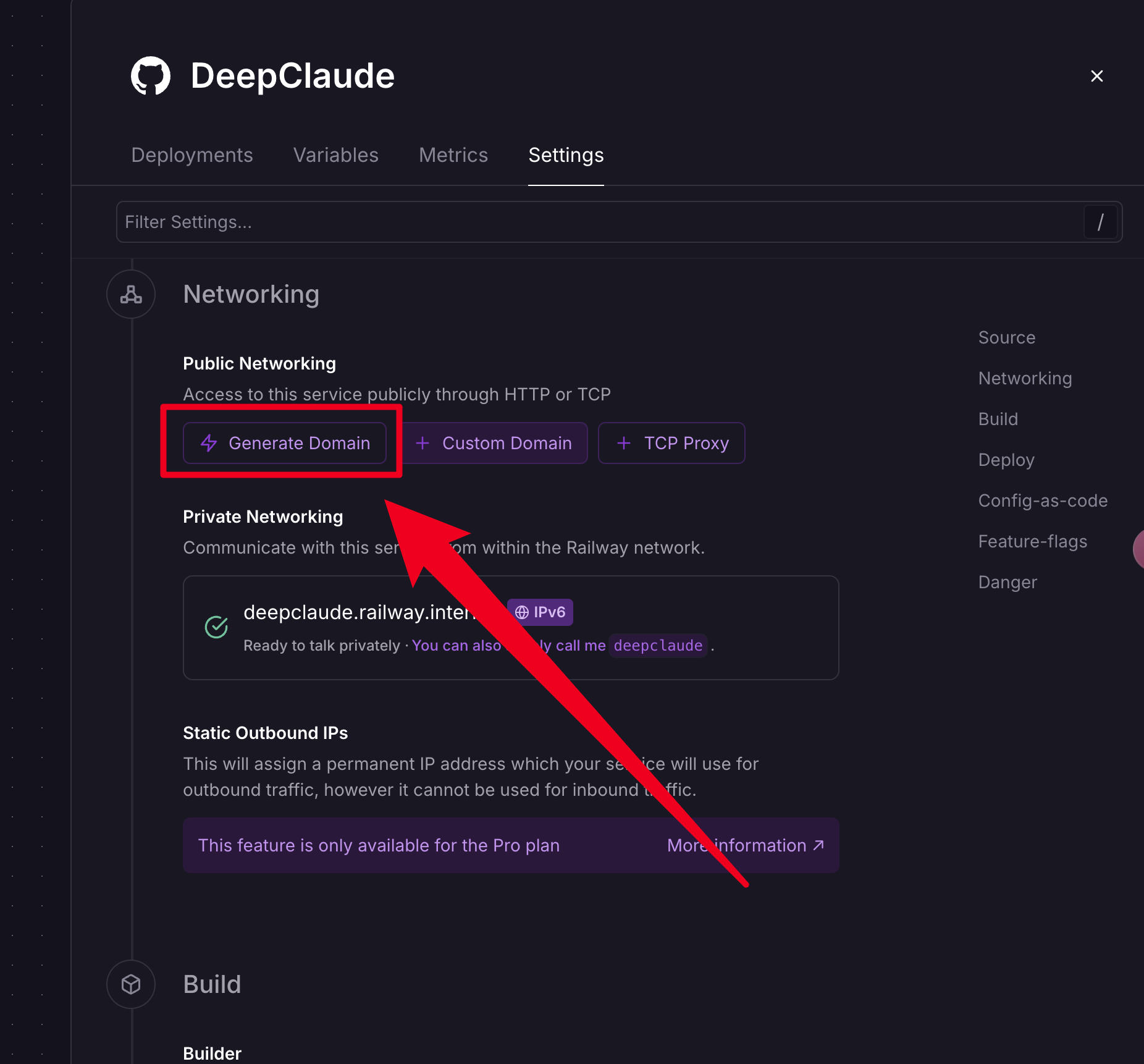

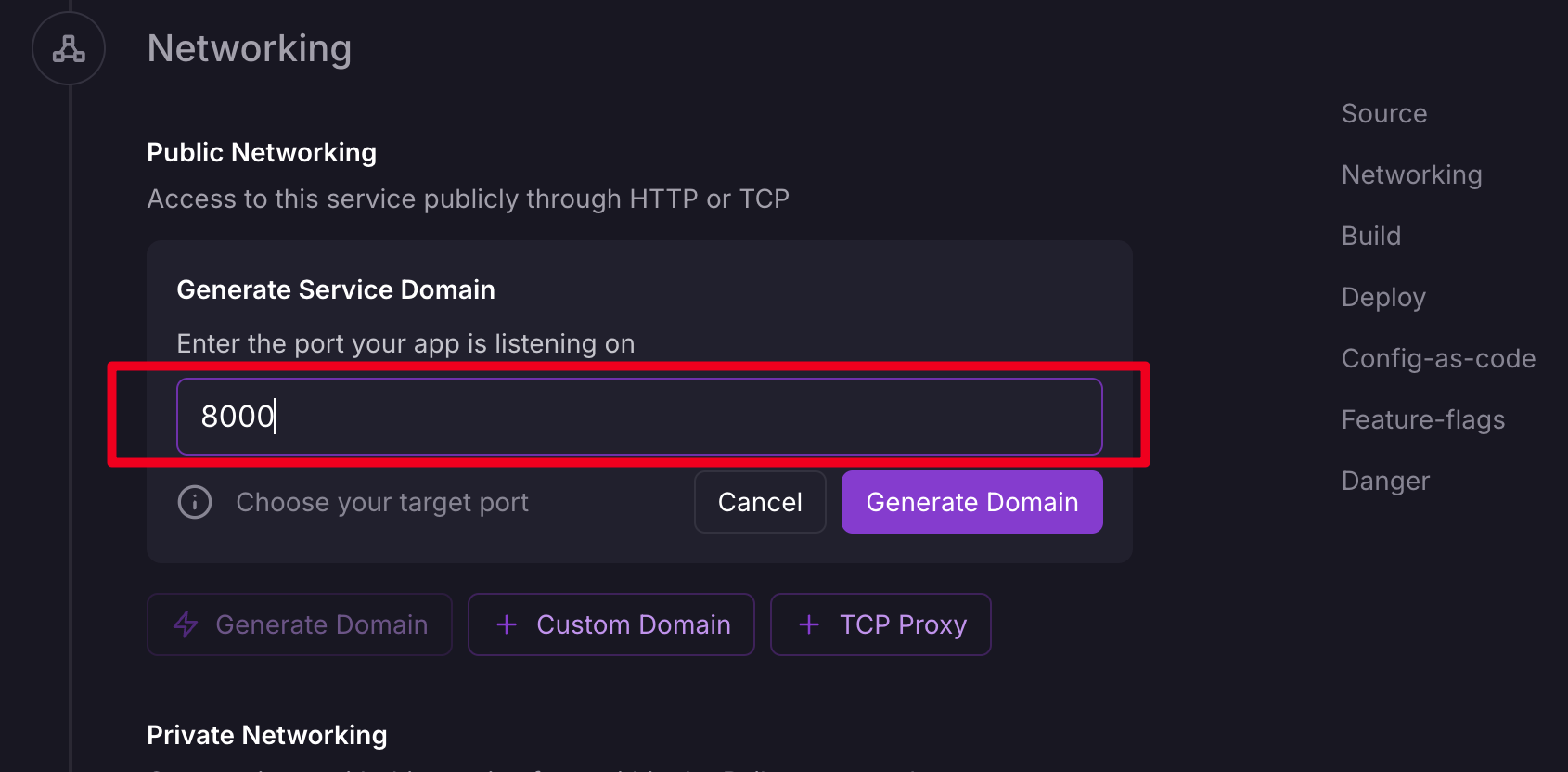

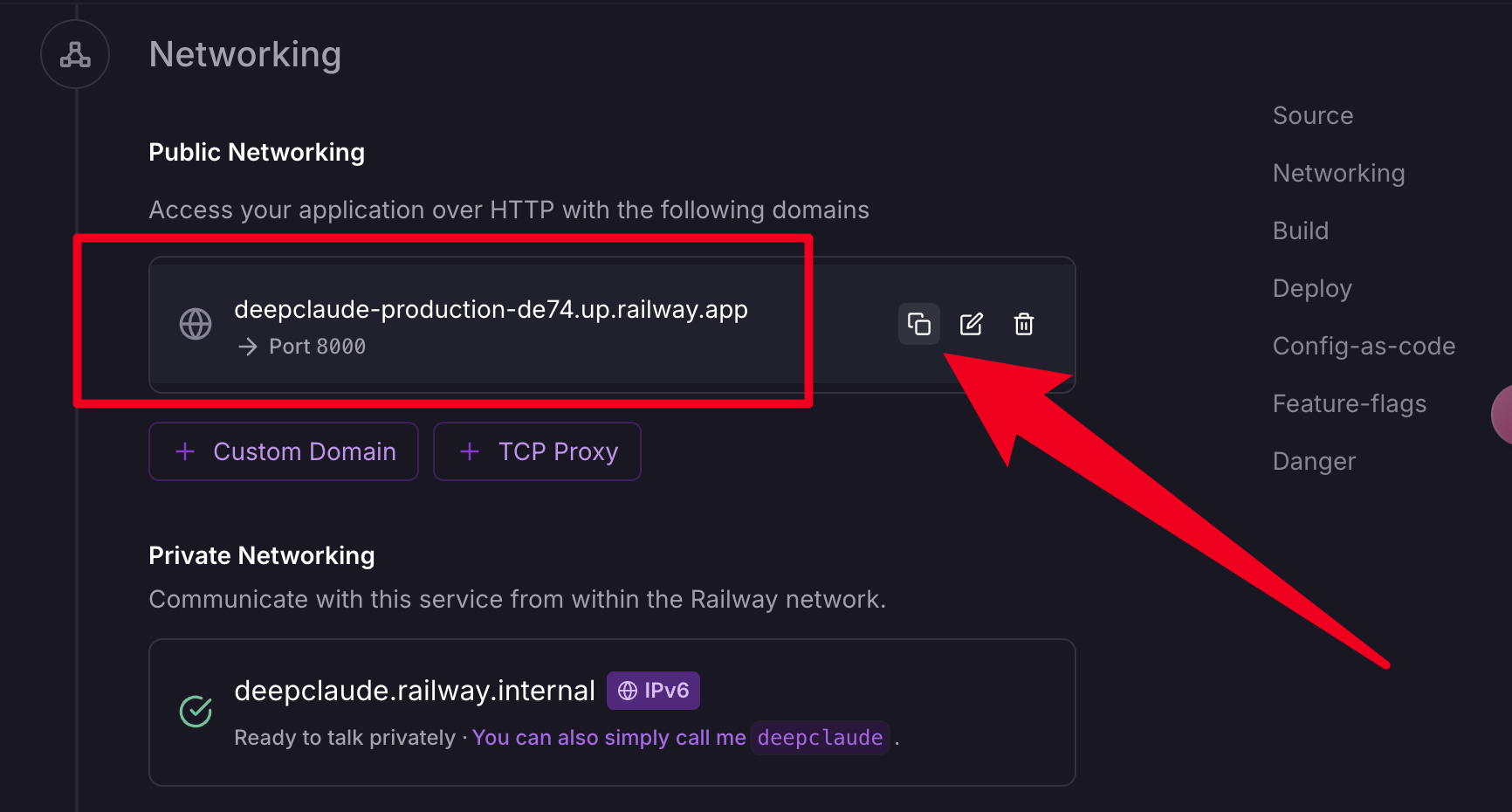

12. After deployment, click the `Settings` button, scroll down to the `Networking` section, select `Generate Domain`, and enter `8000` as the port

|

| 181 |

+

|

| 182 |

+

|

| 183 |

+

|

| 184 |

+

|

| 185 |

+

13. You can now configure it in your favorite chat client or use it as an API

|

| 186 |

+

|

| 187 |

+

|

| 188 |

+

</div>

|

| 189 |

+

</details>

|

| 190 |

+

|

| 191 |

+

## Zeabur 一键部署(一定概率下会遇到 Domain 生成问题,需要重新创建 project 部署)

|

| 192 |

+

<details>

|

| 193 |

+

<summary><strong>One-click deployment to Zeabur</strong></summary>

|

| 194 |

+

<div>

|

| 195 |

+

|

| 196 |

+

|

| 197 |

+

[](https://zeabur.com?referralCode=ErlichLiu&utm_source=ErlichLiu)

|

| 198 |

+

|

| 199 |

+

1. First, fork the repository.

|

| 200 |

+

2. Go to [Zeabur](https://zeabur.com?referralCode=ErlichLiu&utm_source=ErlichLiu) and log in.

|

| 201 |

+

3. Select Create New Project and choose the Singapore or Japan region.

|

| 202 |

+

4. Choose GitHub as the project source, search for DeepClaude and confirm, then click Config in the lower right.

|

| 203 |

+

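5. In the Environment Variables section, click Add Environment Variables and enter each setting from .env.example; the left and right sides of each equals sign are the Key and Value. (Note: ALLOW_API_KEY is a key you choose yourself, which callers must supply to access your service; any value works as long as it contains no spaces.)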

|

| 204 |

+

6. When everything is filled in, click Next, then Deploy, and wait a moment for the deployment to finish.

|

| 205 |

+

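7. After deployment, click Networking at the top of the panel, then Generate Domain in the Public section (you can also configure your own domain), and enter the domain name you want. The full xxx.zeabur.app address is the baseUrl to use in any open-source chat client, Cursor, Roo Code, and similar products.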

|

| 206 |

+

8. You can now configure your API in any of the products mentioned above, or add it to One API as an OpenAI channel. (Configuration instructions for this will be added later.)

|

| 207 |

+

</div>

|

| 208 |

+

</details>

|

| 209 |

+

|

| 210 |

+

## 使用 docker-compose 部署(Docker 镜像将随着 main 分支自动更新到最新)

|

| 211 |

+

|

| 212 |

+

Deploying with a `docker-compose.yml` file is recommended as the quicker, more convenient option.

|

| 213 |

+

|

| 214 |

+

1. Make sure Docker Compose is installed.

|

| 215 |

+

2. Copy the `docker-compose.yml` file to the project root.

|

| 216 |

+

3. Edit the environment variables in `docker-compose.yml`, replacing `your_allow_api_key`, `your_allow_origins`, `your_deepseek_api_key`, and `your_claude_api_key` with your actual values.

|

| 217 |

+

4. From the project root, start the service with Docker Compose:

|

| 218 |

+

|

| 219 |

+

```bash

|

| 220 |

+

docker-compose up -d

|

| 221 |

+

```

|

| 222 |

+

|

| 223 |

+

Once the service is up, the DeepClaude API is reachable at `http://<host-IP>:8000/v1/chat/completions`.

|

| 224 |

+

|

| 225 |

+

|

| 226 |

+

## Docker 部署(自行 Build)

|

| 227 |

+

|

| 228 |

+

1. **Build the Docker image**

|

| 229 |

+

|

| 230 |

+

From the project root, build the image with the Dockerfile. Make sure Docker is installed.

|

| 231 |

+

|

| 232 |

+

```bash

|

| 233 |

+

docker build -t deepclaude:latest .

|

| 234 |

+

```

|

| 235 |

+

|

| 236 |

+

2. **Run the Docker container**

|

| 237 |

+

|

| 238 |

+

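Run the built image, mapping container port 8000 to host port 8000. Use `-e` flags to set the required environment variables, including the API keys and allowed origins. Configure them according to the notes in `.env.example`.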

|

| 239 |

+

|

| 240 |

+

```bash

|

| 241 |

+

docker run -d \

|

| 242 |

+

-p 8000:8000 \

|

| 243 |

+

-e ALLOW_API_KEY=your_allow_api_key \

|

| 244 |

+

-e ALLOW_ORIGINS="*" \

|

| 245 |

+

-e DEEPSEEK_API_KEY=your_deepseek_api_key \

|

| 246 |

+

-e DEEPSEEK_API_URL=https://api.deepseek.com/v1/chat/completions \

|

| 247 |

+

-e DEEPSEEK_MODEL=deepseek-reasoner \

|

| 248 |

+

-e IS_ORIGIN_REASONING=true \

|

| 249 |

+

-e CLAUDE_API_KEY=your_claude_api_key \

|

| 250 |

+

-e CLAUDE_MODEL=claude-3-5-sonnet-20241022 \

|

| 251 |

+

-e CLAUDE_PROVIDER=anthropic \

|

| 252 |

+

-e CLAUDE_API_URL=https://api.anthropic.com/v1/messages \

|

| 253 |

+

-e LOG_LEVEL=INFO \

|

| 254 |

+

--restart always \

|

| 255 |

+

deepclaude:latest

|

| 256 |

+

```

|

| 257 |

+

|

| 258 |

+

Replace `your_allow_api_key`, `your_deepseek_api_key`, and `your_claude_api_key` in the command above with your actual API keys. Set `ALLOW_ORIGINS` to the allowed origins, e.g. `"http://localhost:3000,https://chat.example.com"`, or to `"*"` to allow all origins.

|
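`ALLOW_API_KEY` is the value clients send as a Bearer token to reach the service. The repository's own check lives in `app/utils/auth.py`, which is not shown in this excerpt; the following is only a hedged sketch of what such a comparison could look like, with `is_authorized` as a hypothetical name.

```python
import hmac
from typing import Optional


def is_authorized(authorization_header: Optional[str], allow_api_key: str) -> bool:
    """Check an 'Authorization: Bearer <key>' header against the allowed key."""
    if not authorization_header or not allow_api_key:
        return False
    scheme, _, token = authorization_header.partition(" ")
    if scheme.lower() != "bearer":
        return False
    # Constant-time comparison avoids leaking key contents through timing.
    return hmac.compare_digest(token.strip().encode(), allow_api_key.encode())
```

In a FastAPI application a function like this would typically sit behind a dependency that raises a 401 response when it returns `False`.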

| 259 |

+

|

| 260 |

+

|

| 261 |

+

# Automatic fork sync

|

| 262 |

+

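The project includes a GitHub Actions workflow that automatically updates a fork so that it stays in sync with the current main branch. To enable it, fork the repository and turn on Actions permissions in Settings.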

|

| 263 |

+

|

| 264 |

+

|

| 265 |

+

# Technology Stack

|

| 266 |

+

- [FastAPI](https://fastapi.tiangolo.com/)

|

| 267 |

+

- [UV as package manager](https://docs.astral.sh/uv/#project-management)

|

| 268 |

+

- [Docker](https://www.docker.com/)

|

| 269 |

+

|

| 270 |

+

# Star History

|

| 271 |

+

|

| 272 |

+

[](https://star-history.com/#ErlichLiu/DeepClaude&Date)

|

| 273 |

+

|

| 274 |

+

# Buy me a coffee

|

| 275 |

+

<img src="https://img.erlich.fun/personal-blog/uPic/IMG_3625.JPG" alt="WeChat tip QR code" style="width: 400px;"/>

|

| 276 |

+

|

| 277 |

+

# About Me

|

| 278 |

+

- Email: [email protected]

|

| 279 |

+

- Website: [Erlichliu](https://erlich.fun)

|

app/clients/__init__.py

ADDED

|

@@ -0,0 +1,5 @@

|

| 1 |

+

from .base_client import BaseClient

|

| 2 |

+

from .deepseek_client import DeepSeekClient

|

| 3 |

+

from .claude_client import ClaudeClient

|

| 4 |

+

|

| 5 |

+

__all__ = ['BaseClient', 'DeepSeekClient', 'ClaudeClient']

|

app/clients/base_client.py

ADDED

|

@@ -0,0 +1,54 @@

|

| 1 |

+

"""Base client class that defines the common interface"""

|

| 2 |

+

from typing import AsyncGenerator, Any

|

| 3 |

+

import aiohttp

|

| 4 |

+

from app.utils.logger import logger

|

| 5 |

+

from abc import ABC, abstractmethod

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

class BaseClient(ABC):

|

| 9 |

+

def __init__(self, api_key: str, api_url: str):

|

| 10 |

+

"""Initialize the base client

|

| 11 |

+

|

| 12 |

+

Args:

|

| 13 |

+

api_key: API key

|

| 14 |

+

api_url: API URL

|

| 15 |

+

"""

|

| 16 |

+

self.api_key = api_key

|

| 17 |

+

self.api_url = api_url

|

| 18 |

+

|

| 19 |

+

async def _make_request(self, headers: dict, data: dict) -> AsyncGenerator[bytes, None]:

|

| 20 |

+

"""Send the request and handle the response

|

| 21 |

+

|

| 22 |

+

Args:

|

| 23 |

+

headers: request headers

|

| 24 |

+

data: request payload

|

| 25 |

+

|

| 26 |

+

Yields:

|

| 27 |

+

bytes: raw response data

|

| 28 |

+

"""

|

| 29 |

+

try:

|

| 30 |

+

async with aiohttp.ClientSession() as session:

|

| 31 |

+

async with session.post(self.api_url, headers=headers, json=data) as response:

|

| 32 |

+

if response.status != 200:

|

| 33 |

+

error_text = await response.text()

|

| 34 |

+

logger.error(f"API 请求失败: {error_text}")

|

| 35 |

+

return

|

| 36 |

+

|

| 37 |

+

async for chunk in response.content.iter_any():

|

| 38 |

+

yield chunk

|

| 39 |

+

|

| 40 |

+

except Exception as e:

|

| 41 |

+

logger.error(f"请求 API 时发生错误: {e}")

|

| 42 |

+

|

| 43 |

+

@abstractmethod

|

| 44 |

+

async def stream_chat(self, messages: list, model: str) -> AsyncGenerator[tuple[str, str], None]:

|

| 45 |

+

"""Streaming chat; implemented by subclasses

|

| 46 |

+

|

| 47 |

+

Args:

|

| 48 |

+

messages: message list

|

| 49 |

+

model: model name

|

| 50 |

+

|

| 51 |

+

Yields:

|

| 52 |

+

tuple[str, str]: (content type, content)

|

| 53 |

+

"""

|

| 54 |

+

pass

|
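`_make_request` yields raw byte chunks, so each subclass still has to turn them into events. Assuming the upstream API streams OpenAI-style server-sent events (`data: {...}` lines ending with `data: [DONE]`), a buffering parser could be sketched as follows. `iter_sse_json` is a hypothetical helper, and it is written as a plain synchronous generator for simplicity, whereas the real clients are async.

```python
import json
from typing import Iterable, Iterator


def iter_sse_json(chunks: Iterable[bytes]) -> Iterator[dict]:
    """Yield decoded JSON payloads from SSE 'data:' lines split across chunks."""
    buffer = b""
    for chunk in chunks:
        buffer += chunk
        # Only complete lines are processed; a partial line stays buffered.
        while b"\n" in buffer:
            line, buffer = buffer.split(b"\n", 1)
            text = line.decode("utf-8").strip()
            if not text.startswith("data:"):
                continue  # skip blank separators and non-data fields
            payload = text[len("data:"):].strip()
            if payload == "[DONE]":
                return  # end-of-stream marker
            yield json.loads(payload)
```

Splitting on the newline byte before decoding keeps multi-byte UTF-8 characters intact even when a chunk boundary falls in the middle of one.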

app/clients/claude_client.py

ADDED

|

@@ -0,0 +1,142 @@

|

"""Claude API client"""
import json
from typing import AsyncGenerator
from app.utils.logger import logger
from .base_client import BaseClient


class ClaudeClient(BaseClient):
    def __init__(self, api_key: str, api_url: str = "https://api.anthropic.com/v1/messages", provider: str = "anthropic"):
        """Initialize the Claude client.

        Args:
            api_key: Claude API key
            api_url: Claude API URL
            provider: API provider, one of "anthropic", "openrouter", or "oneapi"
        """
        super().__init__(api_key, api_url)
        self.provider = provider

    async def stream_chat(
        self,
        messages: list,
        model_arg: tuple[float, float, float, float],
        model: str,
        stream: bool = True
    ) -> AsyncGenerator[tuple[str, str], None]:
        """Streaming or non-streaming chat.

        Args:
            messages: List of messages
            model_arg: Model parameter tuple (temperature, top_p, presence_penalty, frequency_penalty)
            model: Model name. For OpenRouter it is overridden with 'anthropic/claude-3.5-sonnet'
            stream: Whether to use streaming output, defaults to True

        Yields:
            tuple[str, str]: (content type, content)
                content type: "answer"
                content: the actual text
        """

        if self.provider == "openrouter":
            # Convert the model name to the OpenRouter format
            model = "anthropic/claude-3.5-sonnet"

            headers = {
                "Authorization": f"Bearer {self.api_key}",
                "Content-Type": "application/json",
                "HTTP-Referer": "https://github.com/ErlichLiu/DeepClaude",  # Required by OpenRouter
                "X-Title": "DeepClaude"  # Required by OpenRouter
            }

            data = {
                "model": model,  # OpenRouter uses the anthropic/claude-3.5-sonnet format
                "messages": messages,
                "stream": stream,
                "temperature": 1 if model_arg[0] < 0 or model_arg[0] > 1 else model_arg[0],
                "top_p": model_arg[1],
                "presence_penalty": model_arg[2],
                "frequency_penalty": model_arg[3]
            }
        elif self.provider == "oneapi":
            headers = {
                "Authorization": f"Bearer {self.api_key}",
                "Content-Type": "application/json"
            }

            data = {
                "model": model,
                "messages": messages,
                "stream": stream,
                "temperature": 1 if model_arg[0] < 0 or model_arg[0] > 1 else model_arg[0],
                "top_p": model_arg[1],
                "presence_penalty": model_arg[2],
                "frequency_penalty": model_arg[3]
            }
        elif self.provider == "anthropic":
            headers = {
                "x-api-key": self.api_key,
                "anthropic-version": "2023-06-01",
                "content-type": "application/json",
                "accept": "text/event-stream" if stream else "application/json",
            }

            data = {
                "model": model,
                "messages": messages,
                "max_tokens": 8192,
                "stream": stream,
                # Anthropic's API takes no presence/frequency penalty, so only temperature and top_p are sent
                "temperature": 1 if model_arg[0] < 0 or model_arg[0] > 1 else model_arg[0],
                "top_p": model_arg[1]
            }
        else:
            raise ValueError(f"Unsupported Claude provider: {self.provider}")

        logger.debug(f"Starting chat: {data}")

        if stream:
            async for chunk in self._make_request(headers, data):
                chunk_str = chunk.decode('utf-8')
                if not chunk_str.strip():
                    continue

                for line in chunk_str.split('\n'):
                    if line.startswith('data: '):
                        json_str = line[6:]  # Strip the 'data: ' prefix
                        if json_str.strip() == '[DONE]':
                            return

                        try:
                            data = json.loads(json_str)
                            if self.provider in ("openrouter", "oneapi"):
                                # OpenRouter/OneAPI format
                                content = data.get('choices', [{}])[0].get('delta', {}).get('content', '')
                                if content:
                                    yield "answer", content
                            elif self.provider == "anthropic":
                                # Anthropic format
                                if data.get('type') == 'content_block_delta':
                                    content = data.get('delta', {}).get('text', '')
                                    if content:
                                        yield "answer", content
                            else:
                                raise ValueError(f"Unsupported Claude provider: {self.provider}")
                        except json.JSONDecodeError:
                            continue
        else:
            # Non-streaming output
            async for chunk in self._make_request(headers, data):
                try:
                    response = json.loads(chunk.decode('utf-8'))
                    if self.provider in ("openrouter", "oneapi"):
                        content = response.get('choices', [{}])[0].get('message', {}).get('content', '')
                        if content:
                            yield "answer", content
                    elif self.provider == "anthropic":
                        content = response.get('content', [{}])[0].get('text', '')
                        if content:
                            yield "answer", content
                    else:
                        raise ValueError(f"Unsupported Claude provider: {self.provider}")
                except json.JSONDecodeError:
                    continue
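The streaming branch of `ClaudeClient.stream_chat` reads the text from two different event shapes: OpenAI-style `choices[0].delta.content` for OpenRouter/OneAPI, and `content_block_delta` events for Anthropic. A minimal sketch of that dispatch as a pure function follows. `extract_answer` is a hypothetical helper, not something this commit defines. Unlike the `.get('choices', [{}])[0]` lookup above, it also tolerates an empty `choices` list, which is an assumption about what a provider might send rather than documented behavior.

```python
def extract_answer(provider: str, event: dict) -> str:
    """Return the text carried by one parsed stream event, or "" if none.

    Hypothetical helper mirroring the provider dispatch in stream_chat.
    """
    if provider in ("openrouter", "oneapi"):
        # OpenAI-style chunk: choices[0].delta.content
        choices = event.get("choices") or [{}]
        return (choices[0].get("delta") or {}).get("content") or ""
    if provider == "anthropic":
        # Anthropic-style stream: only content_block_delta events carry text
        if event.get("type") == "content_block_delta":
            return (event.get("delta") or {}).get("text") or ""
        return ""
    raise ValueError(f"Unsupported Claude provider: {provider}")


# Usage with one event of each shape.
openai_style = {"choices": [{"delta": {"content": "hi"}}]}
anthropic_style = {"type": "content_block_delta", "delta": {"type": "text_delta", "text": "yo"}}
answers = [
    extract_answer("openrouter", openai_style),
    extract_answer("anthropic", anthropic_style),
]
```

Keeping the parsing separate from the network loop makes each provider format testable without issuing a request.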

app/clients/deepseek_client.py

ADDED

|

@@ -0,0 +1,133 @@

"""DeepSeek API client"""
import json
from typing import AsyncGenerator
from app.utils.logger import logger
from .base_client import BaseClient


class DeepSeekClient(BaseClient):
    def __init__(self, api_key: str, api_url: str = "https://api.siliconflow.cn/v1/chat/completions", provider: str = "deepseek"):
        """Initialize the DeepSeek client.

        Args:
            api_key: DeepSeek API key
            api_url: DeepSeek API URL
            provider: API provider name, defaults to "deepseek"
        """
        super().__init__(api_key, api_url)
        self.provider = provider

    def _process_think_tag_content(self, content: str) -> tuple[bool, str]:
        """Process content that contains think tags.

        Args:
            content: The content string to process

        Returns:
            tuple[bool, str]:
                bool: Whether a complete think tag pair was detected
                str: The processed content
        """
        has_start = "<think>" in content
        has_end = "</think>" in content

        if has_start and has_end:
            return True, content
        elif has_start:
            return False, content
        elif not has_start and not has_end:
            return False, content
        else:
            return True, content

    async def stream_chat(self, messages: list, model: str = "deepseek-ai/DeepSeek-R1", is_origin_reasoning: bool = True) -> AsyncGenerator[tuple[str, str], None]:
        """Streaming chat.

        Args:
            messages: List of messages
            model: Model name
            is_origin_reasoning: If True, read reasoning from the `reasoning_content`
                field; if False, detect it from <think> tags inside `content`

        Yields:
            tuple[str, str]: (content type, content)
                content type: "reasoning" or "content"
                content: the actual text
        """
        headers = {
            "Authorization": f"Bearer {self.api_key}",
            "Content-Type": "application/json",
            "Accept": "text/event-stream",
        }
        data = {
            "model": model,
            "messages": messages,
            "stream": True,
        }

        logger.debug(f"Starting streaming chat: {data}")

        accumulated_content = ""
        is_collecting_think = False

        async for chunk in self._make_request(headers, data):
            chunk_str = chunk.decode('utf-8')

            try:
                lines = chunk_str.splitlines()
                for line in lines:
                    if line.startswith("data: "):
                        json_str = line[len("data: "):]
                        if json_str == "[DONE]":
                            return

                        data = json.loads(json_str)
                        if data and data.get("choices") and data["choices"][0].get("delta"):
                            delta = data["choices"][0]["delta"]

                            if is_origin_reasoning:
                                # Handle reasoning_content
                                if delta.get("reasoning_content"):
                                    content = delta["reasoning_content"]
                                    logger.debug(f"Extracted reasoning content: {content}")
                                    yield "reasoning", content

                                if delta.get("reasoning_content") is None and delta.get("content"):
                                    content = delta["content"]
                                    logger.info(f"Extracted content, reasoning phase finished: {content}")
                                    yield "content", content
                            else:
                                # Handle output from other models
                                if delta.get("content"):
                                    content = delta["content"]
                                    if content == "":  # Skip only completely empty strings
                                        continue
                                    logger.debug(f"Non-native reasoning content: {content}")
                                    accumulated_content += content

                                    # Check whether the accumulated content contains a complete think tag pair
                                    is_complete, processed_content = self._process_think_tag_content(accumulated_content)

                                    if "<think>" in content and not is_collecting_think:
                                        # Start collecting reasoning content
                                        logger.debug(f"Start collecting reasoning content: {content}")
                                        is_collecting_think = True
                                        yield "reasoning", content
                                    elif is_collecting_think:
                                        if "</think>" in content:
                                            # Reasoning content ends
                                            logger.debug(f"Reasoning content ended: {content}")
                                            is_collecting_think = False
                                            yield "reasoning", content
                                            # Emit an empty content item to trigger Claude processing
                                            yield "content", ""
                                            # Reset the accumulated content
                                            accumulated_content = ""
                                        else:
                                            # Keep collecting reasoning content
                                            yield "reasoning", content
                                    else:
                                        # Ordinary content
                                        yield "content", content

            except json.JSONDecodeError as e:
                logger.error(f"JSON parse error: {e}")
            except Exception as e:
                logger.error(f"Error while processing chunk: {e}")
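In the non-native branch above, a chunk that contains `</think>` is yielded as reasoning in its entirety, so any answer text that follows the closing tag in the same chunk is labeled as reasoning too. The sketch below shows one possible way to split such a chunk at the tag. `ThinkTagSplitter` is a hypothetical class, not part of this commit. It assumes each tag arrives whole within a single piece of text (the same assumption the original code makes), and unlike the original it drops the tags themselves from the output.

```python
from typing import Iterator


class ThinkTagSplitter:
    """Label streamed text as "reasoning" (inside <think>...</think>) or "content".

    Hypothetical helper; assumes a tag is never split across two pieces.
    """

    OPEN, CLOSE = "<think>", "</think>"

    def __init__(self) -> None:
        self.in_think = False

    def feed(self, text: str) -> Iterator[tuple[str, str]]:
        while text:
            # Look for the tag that would end the current state.
            tag = self.CLOSE if self.in_think else self.OPEN
            kind = "reasoning" if self.in_think else "content"
            head, found, text = text.partition(tag)
            if head:
                yield kind, head
            if found:
                # Crossing a tag flips the state; the tag itself is dropped.
                self.in_think = not self.in_think


# Usage: the closing tag and the start of the answer share one piece.
splitter = ThinkTagSplitter()
out = []
for piece in ["<think>step 1", " step 2</think>The answer", " is 4"]:
    out.extend(splitter.feed(piece))
```

Here the text after `</think>` comes out labeled `"content"`, which is the distinction the downstream Claude step relies on.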

app/deepclaude/__init__.py

ADDED

|

File without changes

|

app/deepclaude/deepclaude.py

ADDED

|

@@ -0,0 +1,268 @@
