Spaces:

Runtime error

Runtime error

| from deepsparse import Pipeline | |

| import time | |

| import gradio as gr | |

| markdownn = ''' | |

| # Named Entity Recognition Pipeline with DeepSparse | |

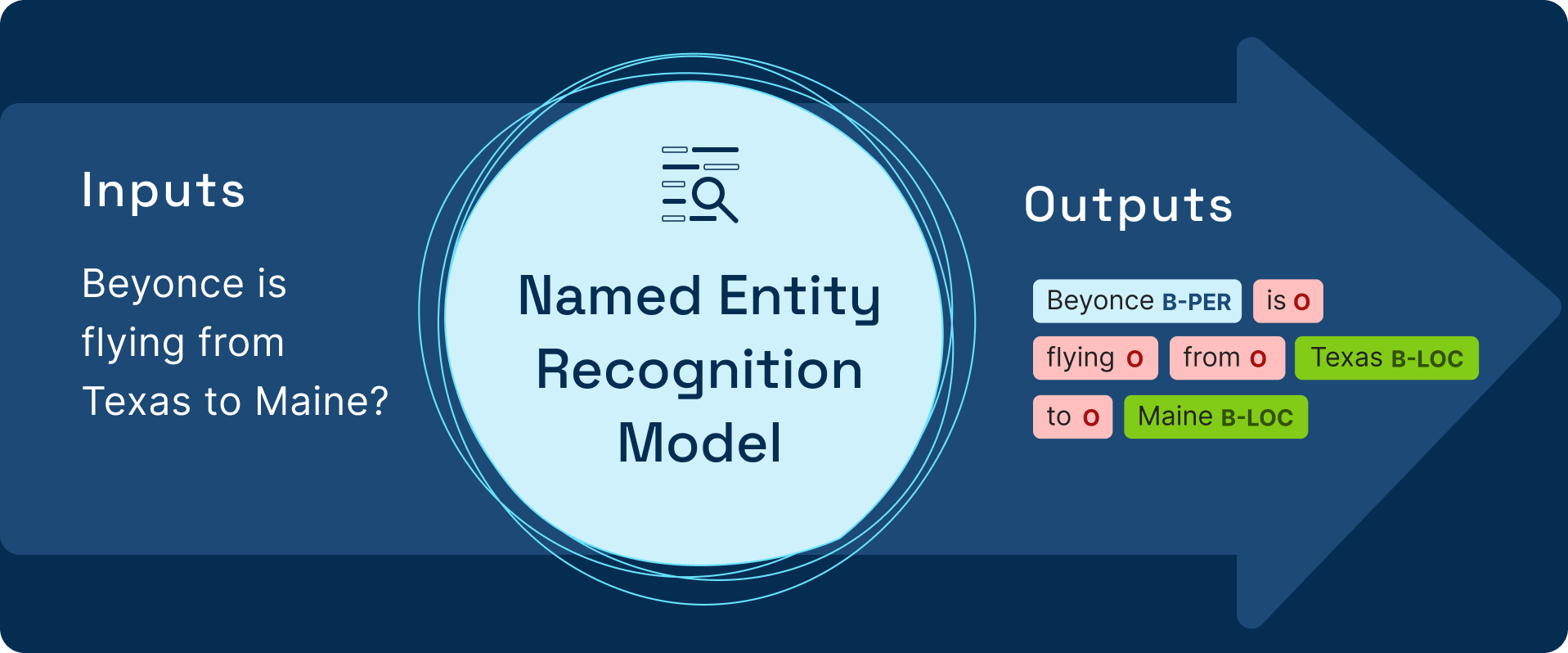

| Named Entity Recognition is the task of extracting and locating named entities in a sentence. The entities include, people's names, location, organizations, etc. | |

|  | |

| ## What is DeepSparse? | |

| DeepSparse is an inference runtime offering GPU-class performance on CPUs and APIs to integrate ML into your application. Sparsification is a powerful technique for optimizing models for inference, reducing the compute needed with a limited accuracy tradeoff. DeepSparse is designed to take advantage of model sparsity, enabling you to deploy models with the flexibility and scalability of software on commodity CPUs with the best-in-class performance of hardware accelerators, enabling you to standardize operations and reduce infrastructure costs. | |

| Similar to Hugging Face, DeepSparse provides off-the-shelf pipelines for computer vision and NLP that wrap the model with proper pre- and post-processing to run performantly on CPUs by using sparse models. | |

| SparseML Named Entity Recognition Pipelines integrate with Hugging Face’s Transformers library to enable the sparsification of a large set of transformers models. | |

| ### Inference API Example | |

| Here is sample code for a token classification pipeline: | |

| ```python | |

| from deepsparse import Pipeline | |

| pipeline = Pipeline.create(task="ner", model_path="zoo:nlp/token_classification/distilbert-none/pytorch/huggingface/conll2003/pruned80_quant-none-vnni") | |

| text = "Mary is flying from Nairobi to New York" | |

| inference = pipeline(text) | |

| print(inference) | |

| ``` | |

| ## Use Case Description | |

| The Named Entity Recognition Pipeline can process text before storing the information in a database. | |

| For example, you may want to process text and store the entities in different columns depending on the entity type. | |

| [Want to train a sparse model on your data? Checkout the documentation on sparse transfer learning](https://docs.neuralmagic.com/use-cases/natural-language-processing/question-answering) | |

| ''' | |

| task = "ner" | |

| sparse_qa_pipeline = Pipeline.create( | |

| task=task, | |

| model_path="zoo:distilbert-conll2003_wikipedia_bookcorpus-pruned90", | |

| ) | |

| def map_ner(inference): | |

| entities = [] | |

| for item in dict(inference)['predictions'][0]: | |

| dictionary = dict(item) | |

| entity = dictionary['entity'] | |

| if entity == "LABEL_0": | |

| value = "O" | |

| elif entity == "LABEL_1": | |

| value = "B-PER" | |

| elif entity == "LABEL_2": | |

| value = "I-PER" | |

| elif entity == "LABEL_3": | |

| value = "-ORG" | |

| elif entity == "LABEL_4": | |

| value = "I-ORG" | |

| elif entity == "LABEL_5": | |

| value = "B-LOC" | |

| elif entity == "LABEL_6": | |

| value = "I-LOC" | |

| elif entity == "LABEL_7": | |

| value = "B-MISC" | |

| else: | |

| value = "I-MISC" | |

| dictionary['entity'] = value | |

| entities.append(dictionary) | |

| return entities | |

| def run_pipeline(text): | |

| sparse_start = time.perf_counter() | |

| sparse_output = sparse_qa_pipeline(text) | |

| sparse_entities = map_ner(sparse_output) | |

| sparse_output = {"text": text, "entities": sparse_entities} | |

| sparse_result = dict(sparse_output) | |

| sparse_end = time.perf_counter() | |

| sparse_duration = (sparse_end - sparse_start) * 1000.0 | |

| return sparse_output, sparse_duration | |

| with gr.Blocks() as demo: | |

| with gr.Row(): | |

| with gr.Column(): | |

| gr.Markdown(markdownn) | |

| with gr.Column(): | |

| gr.Markdown(""" | |

| ### Named Entity Recognition Demo | |

| Using [token_classification/distilbert](https://sparsezoo.neuralmagic.com/models/nlp%2Ftoken_classification%2Fdistilbert-none%2Fpytorch%2Fhuggingface%2Fconll2003%2Fpruned80_quant-none-vnni) | |

| """) | |

| text = gr.Text(label="Text") | |

| btn = gr.Button("Submit") | |

| sparse_answers = gr.HighlightedText(label="Sparse model answers") | |

| sparse_duration = gr.Number(label="Sparse Latency (ms):") | |

| gr.Examples( [["We are flying from Texas to California"],["Mary is flying from Nairobi to New York"],["Norway is beautiful and has great hotels"] ],inputs=[text],) | |

| btn.click( | |

| run_pipeline, | |

| inputs=[text], | |

| outputs=[sparse_answers,sparse_duration], | |

| ) | |

| if __name__ == "__main__": | |

| demo.launch() |