sanbo committed

Commit · 08ca036 · 1 parent: 5e28307

update sth. at 2025-01-20 14:08:58

- .dockerignore +9 -0
- .env.example +4 -0
- .github/workflows/build_docker_dev.yml +70 -0
- .github/workflows/build_docker_main.yml +70 -0
- .gitignore +8 -0
- Dockerfile +11 -0
- LICENSE +21 -0
- README——xxx.md +217 -0
- api/chat2api.py +124 -0
- api/files.py +144 -0
- api/models.py +28 -0
- api/tokens.py +86 -0
- app.py +57 -0
- chatgpt/ChatService.py +530 -0
- chatgpt/authorization.py +78 -0
- chatgpt/chatFormat.py +436 -0
- chatgpt/chatLimit.py +34 -0
- chatgpt/fp.py +61 -0
- chatgpt/proofofWork.py +504 -0
- chatgpt/refreshToken.py +57 -0
- chatgpt/turnstile.py +268 -0
- chatgpt/wssClient.py +36 -0
- docker-compose-warp.yml +56 -0
- docker-compose.yml +22 -0
- gateway/admin.py +0 -0
- gateway/backend.py +381 -0
- gateway/chatgpt.py +32 -0
- gateway/gpts.py +24 -0
- gateway/login.py +10 -0
- gateway/reverseProxy.py +293 -0
- gateway/route.py +0 -0
- gateway/share.py +251 -0
- gateway/v1.py +33 -0
- requirements.txt +13 -0
- templates/chatgpt.html +385 -0
- templates/chatgpt_context.json +0 -0
- templates/gpts_context.json +0 -0
- templates/login.html +82 -0
- templates/tokens.html +82 -0
- utils/Client.py +56 -0
- utils/Logger.py +24 -0
- utils/configs.py +106 -0
- utils/globals.py +107 -0
- utils/kv_utils.py +10 -0
- utils/retry.py +32 -0
- version.txt +1 -0

.dockerignore

ADDED

@@ -0,0 +1,9 @@

.env
*.pyc
/.git/
/.idea/
/docs/
/tmp/
/data/
/.venv/
/.vscode/

.env.example

ADDED

@@ -0,0 +1,4 @@

API_PREFIX=your_prefix
CHATGPT_BASE_URL=https://chatgpt.com
PROXY_URL=your_first_proxy, your_second_proxy
SCHEDULED_REFRESH=false
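The `.env.example` values are plain `KEY=value` strings. Below is a minimal sketch of how they could be turned into typed settings, assuming comma-separated lists (as `PROXY_URL` uses) are split and stripped and booleans are spelled `true`/`false`. `utils/configs.py` is not shown in this diff, so `parse_env_file`, `env_list`, and `env_bool` are hypothetical helpers, not the project's actual loader.

```python
def parse_env_file(text):
    """Parse KEY=value lines, skipping blanks and # comments (hypothetical helper)."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")  # split on the first '=' only
        values[key.strip()] = value.strip()
    return values


def env_list(value):
    """Split a comma-separated value, dropping surrounding spaces and empty items."""
    return [item.strip() for item in value.split(",") if item.strip()] if value else []


def env_bool(value, default=False):
    """Return True only for 'true' (case-insensitive); a missing value uses the default."""
    if value is None:
        return default
    return value.strip().lower() == "true"


example = """API_PREFIX=your_prefix
CHATGPT_BASE_URL=https://chatgpt.com
PROXY_URL=your_first_proxy, your_second_proxy
SCHEDULED_REFRESH=false
"""

settings = parse_env_file(example)
proxies = env_list(settings.get("PROXY_URL"))
scheduled_refresh = env_bool(settings.get("SCHEDULED_REFRESH"))
```

Stripping each list item means the space after the comma in the example `PROXY_URL` value is harmless under this sketch; whether the real loader does the same is not visible here.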

.github/workflows/build_docker_dev.yml

ADDED

@@ -0,0 +1,70 @@

name: Build Docker Image (dev)

on:
  push:
    branches:
      - dev
    paths-ignore:
      - 'README.md'
      - 'docker-compose.yml'
      - 'docker-compose-warp.yml'
      - 'docs/**'
      - '.github/workflows/build_docker_main.yml'
      - '.github/workflows/build_docker_dev.yml'
  workflow_dispatch:

jobs:
  main:
    runs-on: ubuntu-latest

    steps:
      - name: Check out the repository
        uses: actions/checkout@v2

      - name: Read the version from version.txt
        id: get_version
        run: |
          version=$(cat version.txt)
          echo "Current version: v$version-dev"
          echo "version=v$version-dev" >> "$GITHUB_OUTPUT"

      - name: Commit and push version tag
        env:
          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
        run: |
          version=${{ steps.get_version.outputs.version }}
          git config --local user.email "[email protected]"
          git config --local user.name "GitHub Action"
          git tag "$version"
          git push https://x-access-token:${GITHUB_TOKEN}@github.com/lanqian528/chat2api.git "$version"

      - name: Set up QEMU
        uses: docker/setup-qemu-action@v3

      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v3

      - name: Log in to Docker Hub
        uses: docker/login-action@v3
        with:
          username: ${{ secrets.DOCKER_USERNAME }}
          password: ${{ secrets.DOCKER_PASSWORD }}

      - name: Docker meta
        id: meta
        uses: docker/metadata-action@v5
        with:
          images: lanqian528/chat2api
          tags: |
            type=raw,value=latest-dev
            type=raw,value=${{ steps.get_version.outputs.version }}

      - name: Build and push
        uses: docker/build-push-action@v5
        with:
          context: .
          platforms: linux/amd64,linux/arm64
          file: Dockerfile
          push: true
          tags: ${{ steps.meta.outputs.tags }}
          labels: ${{ steps.meta.outputs.labels }}

.github/workflows/build_docker_main.yml

ADDED

@@ -0,0 +1,70 @@

name: Build Docker Image

on:
  push:
    branches:
      - main
    paths-ignore:
      - 'README.md'
      - 'docker-compose.yml'
      - 'docker-compose-warp.yml'
      - 'docs/**'
      - '.github/workflows/build_docker_main.yml'
      - '.github/workflows/build_docker_dev.yml'
  workflow_dispatch:

jobs:
  main:
    runs-on: ubuntu-latest

    steps:
      - name: Check out the repository
        uses: actions/checkout@v2

      - name: Read the version from version.txt
        id: get_version
        run: |
          version=$(cat version.txt)
          echo "Current version: v$version"
          echo "version=v$version" >> "$GITHUB_OUTPUT"

      - name: Commit and push version tag
        env:
          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
        run: |
          version=${{ steps.get_version.outputs.version }}
          git config --local user.email "[email protected]"
          git config --local user.name "GitHub Action"
          git tag "$version"
          git push https://x-access-token:${GITHUB_TOKEN}@github.com/lanqian528/chat2api.git "$version"

      - name: Set up QEMU
        uses: docker/setup-qemu-action@v3

      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v3

      - name: Log in to Docker Hub
        uses: docker/login-action@v3
        with:
          username: ${{ secrets.DOCKER_USERNAME }}
          password: ${{ secrets.DOCKER_PASSWORD }}

      - name: Docker meta
        id: meta
        uses: docker/metadata-action@v5
        with:
          images: lanqian528/chat2api
          tags: |
            type=raw,value=latest,enable={{is_default_branch}}
            type=raw,value=${{ steps.get_version.outputs.version }}

      - name: Build and push
        uses: docker/build-push-action@v5
        with:
          context: .
          platforms: linux/amd64,linux/arm64
          file: Dockerfile
          push: true
          tags: ${{ steps.meta.outputs.tags }}
          labels: ${{ steps.meta.outputs.labels }}
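Both workflows derive Docker tags from `version.txt`: the dev build tags the image `latest-dev` and `v<version>-dev`, while the main build tags it `v<version>` plus `latest`, with `enable={{is_default_branch}}` limiting `latest` to the default branch. The function below is only an illustrative model of that tag logic, assuming `version.txt` holds a bare version string such as `1.2.3`; `docker_tags` is a hypothetical name, and it does not call GitHub Actions or `docker/metadata-action`.

```python
def docker_tags(version_txt, dev, is_default_branch=True, image="lanqian528/chat2api"):
    """Model the image tags produced by build_docker_dev.yml / build_docker_main.yml."""
    # $(cat version.txt) drops the trailing newline; strip() approximates that.
    version = version_txt.strip()
    if dev:
        # Dev workflow: a fixed latest-dev tag plus v<version>-dev.
        names = ["latest-dev", f"v{version}-dev"]
    else:
        # Main workflow: "latest" only when building the default branch.
        names = (["latest"] if is_default_branch else []) + [f"v{version}"]
    return [f"{image}:{name}" for name in names]


dev_tags = docker_tags("1.2.3\n", dev=True)
main_tags = docker_tags("1.2.3", dev=False)
```

The same `v<version>` (or `v<version>-dev`) string is also pushed as a git tag in the "Commit and push version tag" step, so pushing twice without bumping `version.txt` would make `git tag` fail on the existing tag.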

.gitignore

ADDED

@@ -0,0 +1,8 @@

.env
*.pyc
/.git/
/.idea/
/tmp/
/data/
/.venv/
/.vscode/

Dockerfile

ADDED

@@ -0,0 +1,11 @@

FROM python:3.11-slim

WORKDIR /app

COPY . /app

RUN pip install --no-cache-dir -r requirements.txt

EXPOSE 5005

CMD ["python", "app.py"]

LICENSE

ADDED

@@ -0,0 +1,21 @@

MIT License

Copyright (c) 2024 aurora-develop

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.

README——xxx.md

ADDED

@@ -0,0 +1,217 @@

# CHAT2API

🤖 A simple ChatGPT-to-API proxy

🌟 Free, unlimited `GPT-3.5` with no account required

💥 Use your own account via AccessToken, with support for `O1-Preview/mini`, `GPT-4`, `GPT-4o/mini`, and `GPTs`

🔍 Responses use exactly the same format as the official API, so almost every client works with it

👮 Works with the companion user-management frontend [Chat-Share](https://github.com/h88782481/Chat-Share); before using it, set the environment variables `ENABLE_GATEWAY` to True and `AUTO_SEED` to False

## Community group

[https://t.me/chat2api](https://t.me/chat2api)

Before asking a question, please read the repository documentation in full, especially the FAQ section.

When asking, please provide:

1. A screenshot of the startup log (mask sensitive information, including environment variables and the version number)
2. The error log (mask sensitive information)
3. The status code and response body returned by the API

## Sponsors

Thanks to Capsolver for sponsoring this project. For any CAPTCHA on the market, you can use [Capsolver](https://www.capsolver.com/zh?utm_source=github&utm_medium=repo&utm_campaign=scraping&utm_term=chat2api) to solve it.

## Features

### The latest version number is stored in `version.txt`

### Reverse-engineered API features

> - [x] Streaming and non-streaming responses
> - [x] Login-free GPT-3.5 conversations
> - [x] GPT-3.5 conversations (if the model name passed in does not contain gpt-4, gpt-3.5 is used by default, i.e. text-davinci-002-render-sha)
> - [x] GPT-4 series conversations (a model name containing gpt-4, gpt-4o, gpt-4o-mini, or gpt-4-mobile selects the corresponding model; requires an AccessToken)
> - [x] O1 series conversations (a model name containing o1-preview or o1-mini selects the corresponding model; requires an AccessToken)
> - [x] GPT-4 image generation, code, and web browsing
> - [x] GPTs support (model name: gpt-4-gizmo-g-*)
> - [x] Team Plus account support (requires the team account ID)
> - [x] Image and file uploads (in the API's own format, as a URL or base64)
> - [x] Can act as a gateway and be deployed across multiple machines
> - [x] Multi-account polling, supporting both `AccessToken` and `RefreshToken`
> - [x] Retries failed requests, automatically rotating to the next Token
> - [x] Token management, with upload and clear
> - [x] Scheduled refresh of `AccessToken` via `RefreshToken`: every startup performs a non-forced refresh of all tokens, and all tokens are force-refreshed every 4 days at 3 a.m.
> - [x] File downloads (requires chat history to be enabled)
> - [x] Output of the reasoning process for `O1-Preview/mini` models
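File uploads use the API's own message format, with each file given either as a URL or as a base64 `data:` URL. `api/files.py` (later in this diff) splits a `data:` URL into its MIME type and base64 payload; the standard-library sketch below mirrors that split so it runs without `pybase64`. The message layout assumes OpenAI's `image_url` content-part format, and `make_data_url` / `split_data_url` are hypothetical helper names.

```python
import base64


def make_data_url(content, mime_type):
    """Encode raw bytes as a base64 data URL: data:<mime>;base64,<payload>."""
    return f"data:{mime_type};base64,{base64.b64encode(content).decode('ascii')}"


def split_data_url(url):
    """Same split as get_file_content(): MIME type before ';', payload after ','."""
    mime_type = url.split(";")[0].split(":")[1]
    payload = url.split(",")[1]
    return base64.b64decode(payload), mime_type


png_header = b"\x89PNG\r\n\x1a\n"  # first 8 bytes of any PNG file, used as sample data
url = make_data_url(png_header, "image/png")

# A user message carrying text plus an image, in OpenAI chat-completions format.
message = {
    "role": "user",
    "content": [
        {"type": "text", "text": "What is in this image?"},
        {"type": "image_url", "image_url": {"url": url}},
    ],
}
```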

### Official-site mirror features

> - [x] Native mirror of the official website
> - [x] Random selection from the backend account pool; a `Seed` selects a random account
> - [x] Log in directly by entering a `RefreshToken` or `AccessToken`
> - [x] Supports O1-Preview/mini, GPT-4, GPT-4o/mini
> - [x] Endpoints exposing sensitive information, and some settings endpoints, are disabled
> - [x] /login page; after logging out you are redirected to the login page automatically
> - [x] /?token=xxx for direct login, where xxx is a `RefreshToken`, `AccessToken`, or `SeedToken` (random seed)
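For the `/?token=xxx` direct login, the token travels in the query string, so it should be percent-encoded when it contains characters such as `+` or `/`. A minimal sketch, assuming the gateway is served at a base URL of your choosing; `build_login_url` is a hypothetical helper. Keep in mind that tokens placed in URLs can end up in browser history and server logs.

```python
from urllib.parse import parse_qs, urlencode, urlsplit


def build_login_url(base_url, token):
    """Return <base_url>/?token=<token>, percent-encoding the token."""
    return f"{base_url.rstrip('/')}/?{urlencode({'token': token})}"


login_url = build_login_url("http://127.0.0.1:5005/", "seed+abc/123")

# Decoding the query string recovers the original token unchanged.
decoded = parse_qs(urlsplit(login_url).query)["token"][0]
```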

> TODO
> - [ ] `GPTs` support in the mirror
> - [ ] Nothing else for now; `issue`s are welcome

## Reverse-engineered API

A fully `OpenAI`-compatible API. Pass in an `AccessToken` or `RefreshToken` to use GPT-4, GPT-4o, GPTs, O1-Preview, and O1-Mini:

```bash
curl --location 'http://127.0.0.1:5005/v1/chat/completions' \
--header 'Content-Type: application/json' \
--header 'Authorization: Bearer {{Token}}' \
--data '{
     "model": "gpt-3.5-turbo",
     "messages": [{"role": "user", "content": "Say this is a test!"}],
     "stream": true
   }'
```

Pass your account's `AccessToken` or `RefreshToken` as `{{Token}}`.
You can also pass the value of the `AUTHORIZATION` environment variable you configured; a backend account is then selected for you (at random by default, see `RANDOM_TOKEN`).

If you have a Team account, you can pass a `ChatGPT-Account-ID` to use the Team workspace:

- Option 1:
  Pass the `ChatGPT-Account-ID` value in the request `headers`

- Option 2:
  `Authorization: Bearer <AccessToken or RefreshToken>,<ChatGPT-Account-ID>`

If the `AUTHORIZATION` environment variable is set, you can pass its value as `{{Token}}` to poll across multiple Tokens.
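The request above can also be assembled from Python with only the standard library. This sketch builds, but does not send, the same POST, and shows both ways of passing a Team `ChatGPT-Account-ID` plus the `/<API_PREFIX>/v1/chat/completions` path used when `API_PREFIX` is set. `build_chat_request` is a hypothetical helper, and the base URL assumes the default port 5005 from the Dockerfile.

```python
import json
import urllib.request


def build_chat_request(base_url, token, messages, model="gpt-3.5-turbo", stream=False,
                       account_id=None, account_in_bearer=False, api_prefix=None):
    """Build (but do not send) a POST to chat2api's OpenAI-style endpoint."""
    bearer = token
    headers = {"Content-Type": "application/json"}
    if account_id and account_in_bearer:
        # Option 2: "Bearer <AccessToken or RefreshToken>,<ChatGPT-Account-ID>"
        bearer = f"{token},{account_id}"
    elif account_id:
        # Option 1: send the Team workspace ID as its own header.
        headers["ChatGPT-Account-ID"] = account_id
    headers["Authorization"] = f"Bearer {bearer}"

    # With API_PREFIX set, the route becomes /<prefix>/v1/chat/completions.
    path = f"/{api_prefix}/v1/chat/completions" if api_prefix else "/v1/chat/completions"
    body = json.dumps({"model": model, "messages": messages, "stream": stream}).encode("utf-8")
    return urllib.request.Request(base_url.rstrip("/") + path, data=body,
                                  headers=headers, method="POST")


messages = [{"role": "user", "content": "Say this is a test!"}]
req = build_chat_request("http://127.0.0.1:5005", "<AccessToken>", messages,
                         account_id="<account-id>")
# urllib.request.urlopen(req) would send it; with stream=False the reply is one JSON body.
```

Note that `urllib.request.Request` stores header names via `str.capitalize()`, so the Team header is read back as `Chatgpt-account-id`; HTTP header names are case-insensitive, so the server sees the same header.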

|

| 95 |

+

|

| 96 |

+

> - `AccessToken` 获取: chatgpt官网登录后,再打开 [https://chatgpt.com/api/auth/session](https://chatgpt.com/api/auth/session) 获取 `accessToken` 这个值。

|

| 97 |

+

> - `RefreshToken` 获取: 此处不提供获取方法。

|

| 98 |

+

> - 免登录 gpt-3.5 无需传入 Token。

|

| 99 |

+

|

| 100 |

+

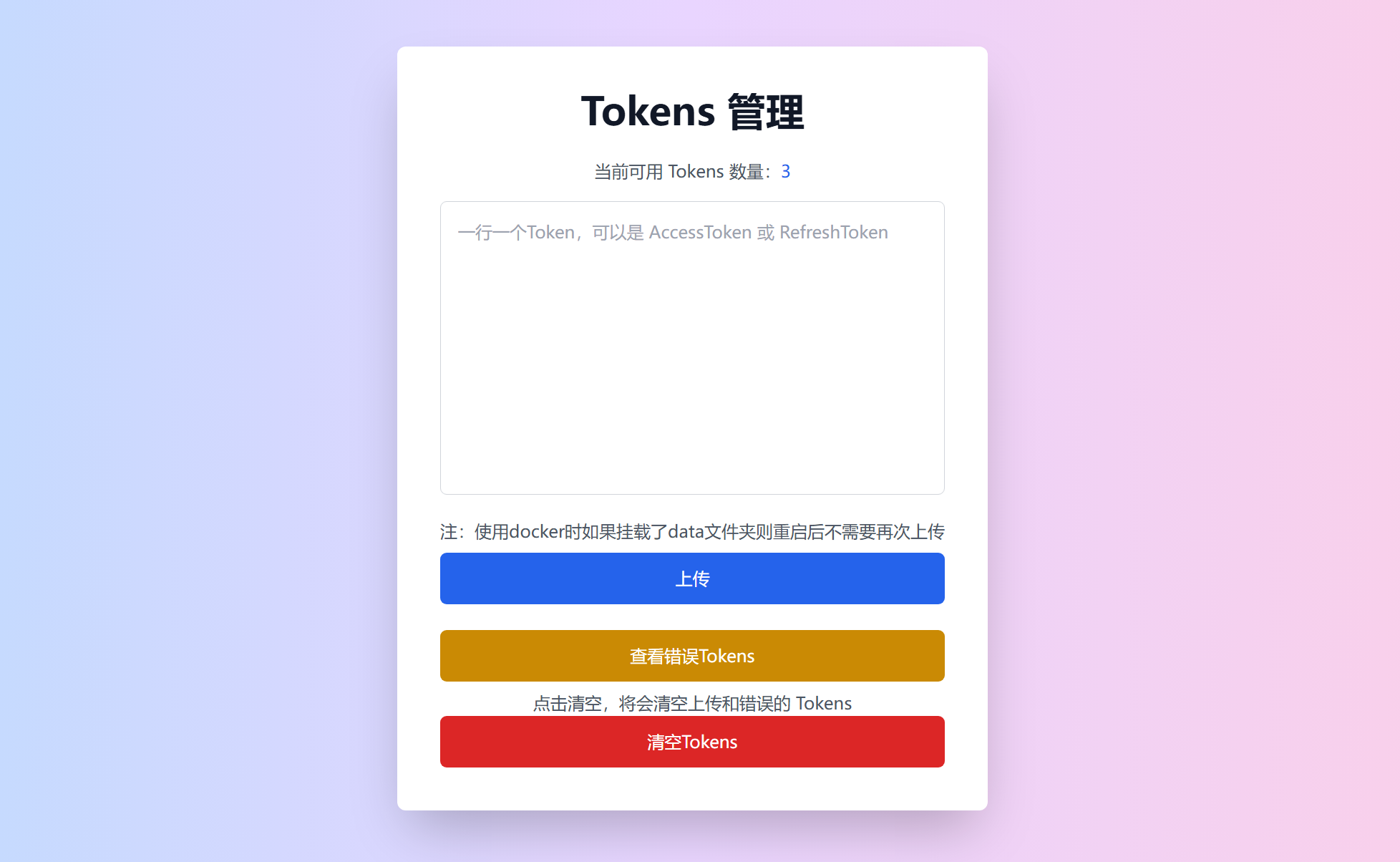
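The session page returns JSON, and only its `accessToken` field is needed. The sketch below pulls that field out of a saved copy of the response; the sample payload is a made-up stand-in containing just the one field this README names, since the endpoint's other fields are not documented here. `extract_access_token` is a hypothetical helper.

```python
import json


def extract_access_token(session_json):
    """Return the accessToken from a /api/auth/session response body, or None if absent."""
    data = json.loads(session_json)
    token = data.get("accessToken") if isinstance(data, dict) else None
    return token or None


sample = '{"accessToken": "example-access-token"}'
access_token = extract_access_token(sample)
```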

## Tokens 管理

|

| 101 |

+

|

| 102 |

+

1. 配置环境变量 `AUTHORIZATION` 作为 `授权码` ,然后运行程序。

|

| 103 |

+

|

| 104 |

+

2. 访问 `/tokens` 或者 `/{api_prefix}/tokens` 可以查看现有 Tokens 数量,也可以上传新的 Tokens ,或者清空 Tokens。

|

| 105 |

+

|

| 106 |

+

3. 请求时传入 `AUTHORIZATION` 中配置的 `授权码` 即可使用轮询的Tokens进行对话

|

| 107 |

+

|

| 108 |

+

|

| 109 |

+

|

| 110 |

+

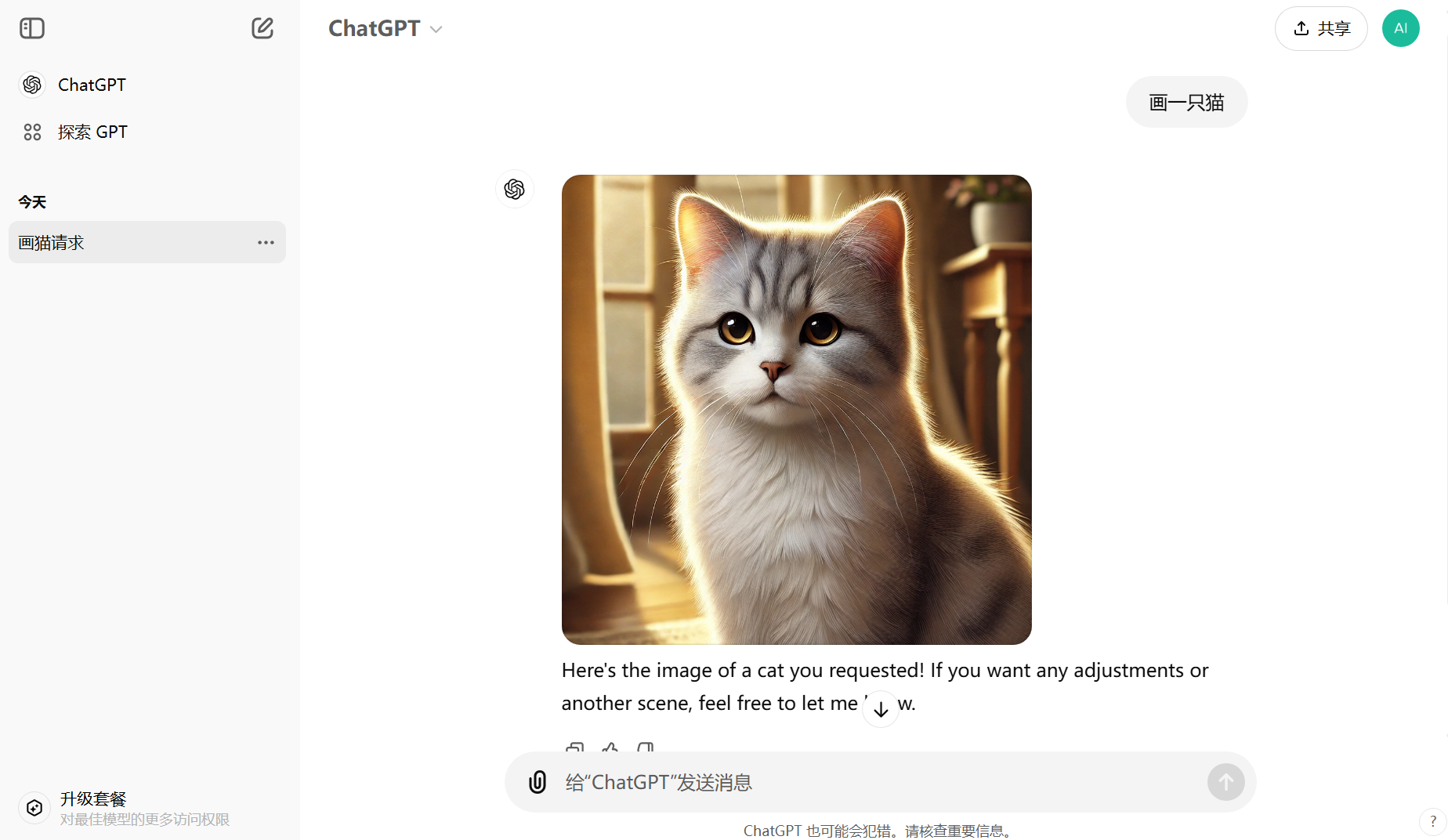
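Uploading from a script instead of the Tokens page means posting to `/tokens/upload` (or `/{api_prefix}/tokens/upload`) with a form field named `text`; per `api/chat2api.py` in this diff, that text is split on newlines, blank lines and lines starting with `#` are skipped, and each remaining token is appended. A minimal client-side sketch that builds such a request without sending it; the helper name and base URL are assumptions.

```python
import urllib.parse
import urllib.request


def build_upload_request(base_url, tokens, api_prefix=None):
    """Build a form POST for the tokens/upload route: one token per line in 'text'."""
    path = f"/{api_prefix}/tokens/upload" if api_prefix else "/tokens/upload"
    form = urllib.parse.urlencode({"text": "\n".join(tokens)}).encode("ascii")
    return urllib.request.Request(
        base_url.rstrip("/") + path,
        data=form,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


# The "# comment line" entry is sent too, but the server-side rule above skips it.
req = build_upload_request("http://127.0.0.1:5005", ["token-a", "# comment line", "token-b"])
```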

## Official-site native mirror

1. Set the environment variable `ENABLE_GATEWAY` to `true`, then run the program. Note that once it is enabled, anyone can reach your gateway directly through your domain.

2. Upload a `RefreshToken` or `AccessToken` on the Token management page.

3. Visit `/login` to open the login page.

4. Enter the native mirror page and use it.

## Environment variables

Every environment variable has a default value. If you do not understand what a variable means, do not set it, and never set it to an empty value. Strings do not need quotes.

| Category | Variable | Example | Default | Description |
|----------|----------|---------|---------|-------------|
| Security | API_PREFIX | `your_prefix` | `None` | API prefix that acts as a password; without it, others can easily access your service. Once set, requests must go to `/your_prefix/v1/chat/completions` |
| | AUTHORIZATION | `your_first_authorization`,<br/>`your_second_authorization` | `[]` | Authorization codes you define yourself for multi-account Token polling, separated by ASCII commas |
| | AUTH_KEY | `your_auth_key` | `None` | Set this only if your private gateway requires an `auth_key` request header |
| Requests | CHATGPT_BASE_URL | `https://chatgpt.com` | `https://chatgpt.com` | ChatGPT gateway address; setting it changes the site requests are sent to. Separate multiple gateways with commas |
| | PROXY_URL | `http://ip:port`,<br/>`http://username:password@ip:port` | `[]` | Global proxy URL; enable it when you get 403 errors. Separate multiple proxies with commas |
| | EXPORT_PROXY_URL | `http://ip:port` or<br/>`http://username:password@ip:port` | `None` | Egress proxy URL; prevents leaking the origin server's IP when fetching images and files |
| Features | HISTORY_DISABLED | `true` | `true` | Whether to skip saving chat history (and returning a conversation_id) |
| | POW_DIFFICULTY | `00003a` | `00003a` | Proof-of-work difficulty to solve; leave it alone unless you know what it does |
| | RETRY_TIMES | `3` | `3` | Number of retries on error; with `AUTHORIZATION`, the next account is chosen automatically (randomly or in rotation) |
| | CONVERSATION_ONLY | `false` | `false` | Whether to call the conversation endpoint directly; enable only if your gateway solves `POW` automatically |
| | ENABLE_LIMIT | `true` | `true` | When enabled, no attempt is made to bypass official usage limits, to minimize the risk of account bans |
| | UPLOAD_BY_URL | `false` | `false` | When enabled, send messages as `URL + space + text`; URL contents are fetched and uploaded automatically. Separate multiple URLs with spaces |
| | SCHEDULED_REFRESH | `false` | `false` | Whether to refresh `AccessToken`s on a schedule. When enabled, every startup performs a non-forced refresh of all tokens, and all tokens are force-refreshed every 4 days at 3 a.m. |
| | RANDOM_TOKEN | `true` | `true` | Whether backend `Token`s are picked at random; when disabled, they are polled in order |
| Gateway | ENABLE_GATEWAY | `false` | `false` | Whether to enable gateway mode; enabling it allows using the mirror site, but leaves it unprotected |
| | AUTO_SEED | `false` | `true` | Whether to enable random-account mode (on by default): after a `seed` is entered, a backend `Token` is matched at random. When disabled, you must integrate with the endpoints yourself to manage `Token`s. |
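`RANDOM_TOKEN` switches between random selection and in-order polling of backend Tokens, and `RETRY_TIMES` moves on to another account after an error. The class below is a hypothetical, simplified model of that selection behavior, not the project's `utils/retry.py` or `utils/globals.py` (neither is shown in this diff). It skips tokens recorded as failed, mirroring how `api/chat2api.py` counts usable tokens as `set(token_list) - set(error_token_list)`.

```python
import itertools
import random


class TokenPool:
    """Hypothetical model of backend Token selection (RANDOM_TOKEN on/off)."""

    def __init__(self, tokens, random_token=True, rng=None):
        self.tokens = list(dict.fromkeys(tokens))  # de-duplicate, keep first-seen order
        self.errors = set()
        self.random_token = random_token
        self.rng = rng or random.Random()
        self._cycle = itertools.cycle(self.tokens)

    def usable(self):
        return [t for t in self.tokens if t not in self.errors]

    def mark_error(self, token):
        self.errors.add(token)

    def next_token(self):
        usable = self.usable()
        if not usable:
            raise LookupError("no usable tokens")
        if self.random_token:
            return self.rng.choice(usable)
        # Sequential polling: advance the cycle until a non-failed token comes up.
        for token in self._cycle:
            if token not in self.errors:
                return token


def call_with_retry(pool, send, retry_times=3):
    """Try up to retry_times + 1 tokens, marking each failing one as an error.

    Raises the last send() error, or LookupError if every token has failed.
    """
    last_error = None
    for _ in range(retry_times + 1):
        token = pool.next_token()
        try:
            return send(token)
        except Exception as exc:  # a real client would retry only specific failures
            pool.mark_error(token)
            last_error = exc
    raise last_error


# Usage: the first token fails (e.g. with a 429), so the call moves on to the next one.
def flaky_send(token):
    if token == "token-1":
        raise RuntimeError("429 Too Many Requests")
    return f"ok with {token}"


pool = TokenPool(["token-1", "token-2"], random_token=False)
result = call_with_retry(pool, flaky_send)
```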

## Deployment

### Zeabur deployment

[Deploy on Zeabur](https://zeabur.com/templates/6HEGIZ?referralCode=LanQian528)

### Direct deployment

```bash
git clone https://github.com/LanQian528/chat2api
cd chat2api
pip install -r requirements.txt
python app.py
```

### Docker deployment

You need to have Docker and Docker Compose installed.

```bash
docker run -d \
  --name chat2api \
  -p 5005:5005 \
  lanqian528/chat2api:latest
```

### (Recommended, works with PLUS accounts) Docker Compose deployment

Create a new directory, for example chat2api, and enter it:

```bash
mkdir chat2api
cd chat2api
```

Download the repository's docker-compose-warp.yml file into this directory:

```bash
wget https://raw.githubusercontent.com/LanQian528/chat2api/main/docker-compose-warp.yml
```

Edit the environment variables in docker-compose-warp.yml, save it, then run:

```bash
docker-compose -f docker-compose-warp.yml up -d
```

## FAQ

> - Error codes:
>   - `401`: The current IP does not support login-free access. Try a different IP address or set a proxy in the `PROXY_URL` environment variable; otherwise, your authentication failed.
>   - `403`: Check the logs for the specific error message.
>   - `429`: The current IP has exceeded the request limit within the past hour. Try again later or change your IP.
>   - `500`: Internal server error; the request failed.
>   - `502`: Bad gateway, or the network is unavailable. Try a different network environment.

> - Known issues:
>   - Many Japanese IPs do not support login-free access; for login-free GPT-3.5, a US IP is recommended.
>   - 99% of accounts support free `GPT-4o`, but it is enabled by IP region; Japanese and Singaporean IPs are currently known to have a higher chance of it being enabled.

> - What is the `AUTHORIZATION` environment variable?
>   - An authentication credential you set for chat2api yourself. Once it is set, saved Tokens can be polled; pass it as the `APIKEY` when making requests.
> - How do I get an AccessToken?
>   - After logging in on the ChatGPT website, open [https://chatgpt.com/api/auth/session](https://chatgpt.com/api/auth/session) and copy the `accessToken` value.

## License

MIT License

api/chat2api.py

ADDED

@@ -0,0 +1,124 @@

import asyncio
import types

from apscheduler.schedulers.asyncio import AsyncIOScheduler
from fastapi import Request, HTTPException, Form, Security
from fastapi.responses import HTMLResponse, StreamingResponse, JSONResponse
from fastapi.security import HTTPAuthorizationCredentials
from starlette.background import BackgroundTask

import utils.globals as globals
from app import app, templates, security_scheme
from chatgpt.ChatService import ChatService
from chatgpt.authorization import refresh_all_tokens
from utils.Logger import logger
from utils.configs import api_prefix, scheduled_refresh
from utils.retry import async_retry

scheduler = AsyncIOScheduler()


@app.on_event("startup")
async def app_start():
    if scheduled_refresh:
        scheduler.add_job(id='refresh', func=refresh_all_tokens, trigger='cron', hour=3, minute=0, day='*/2',
                          kwargs={'force_refresh': True})
        scheduler.start()
        asyncio.get_event_loop().call_later(0, lambda: asyncio.create_task(refresh_all_tokens(force_refresh=False)))


async def to_send_conversation(request_data, req_token):
    chat_service = ChatService(req_token)
    try:
        await chat_service.set_dynamic_data(request_data)
        await chat_service.get_chat_requirements()
        return chat_service
    except HTTPException as e:
        await chat_service.close_client()
        raise HTTPException(status_code=e.status_code, detail=e.detail)
    except Exception as e:
        await chat_service.close_client()
        logger.error(f"Server error, {str(e)}")
        raise HTTPException(status_code=500, detail="Server error")


async def process(request_data, req_token):
    chat_service = await to_send_conversation(request_data, req_token)
    await chat_service.prepare_send_conversation()
    res = await chat_service.send_conversation()
    return chat_service, res


@app.post(f"/{api_prefix}/v1/chat/completions" if api_prefix else "/v1/chat/completions")
async def send_conversation(request: Request, credentials: HTTPAuthorizationCredentials = Security(security_scheme)):
    req_token = credentials.credentials
    try:
        request_data = await request.json()
    except Exception:
        raise HTTPException(status_code=400, detail={"error": "Invalid JSON body"})
    chat_service, res = await async_retry(process, request_data, req_token)
    try:
        if isinstance(res, types.AsyncGeneratorType):
            background = BackgroundTask(chat_service.close_client)
            return StreamingResponse(res, media_type="text/event-stream", background=background)
        else:
            background = BackgroundTask(chat_service.close_client)
            return JSONResponse(res, media_type="application/json", background=background)
    except HTTPException as e:
        await chat_service.close_client()
        if e.status_code == 500:
            logger.error(f"Server error, {str(e)}")
            raise HTTPException(status_code=500, detail="Server error")
        raise HTTPException(status_code=e.status_code, detail=e.detail)
    except Exception as e:
        await chat_service.close_client()
        logger.error(f"Server error, {str(e)}")
        raise HTTPException(status_code=500, detail="Server error")


@app.get(f"/{api_prefix}/tokens" if api_prefix else "/tokens", response_class=HTMLResponse)
async def upload_html(request: Request):
    tokens_count = len(set(globals.token_list) - set(globals.error_token_list))
    return templates.TemplateResponse("tokens.html",
                                      {"request": request, "api_prefix": api_prefix, "tokens_count": tokens_count})


@app.post(f"/{api_prefix}/tokens/upload" if api_prefix else "/tokens/upload")
async def upload_post(text: str = Form(...)):
    lines = text.split("\n")
    for line in lines:
        if line.strip() and not line.startswith("#"):
            globals.token_list.append(line.strip())
            with open(globals.TOKENS_FILE, "a", encoding="utf-8") as f:
                f.write(line.strip() + "\n")
    logger.info(f"Token count: {len(globals.token_list)}, Error token count: {len(globals.error_token_list)}")
    tokens_count = len(set(globals.token_list) - set(globals.error_token_list))
    return {"status": "success", "tokens_count": tokens_count}


@app.post(f"/{api_prefix}/tokens/clear" if api_prefix else "/tokens/clear")
async def clear_tokens():
    globals.token_list.clear()
    globals.error_token_list.clear()
    with open(globals.TOKENS_FILE, "w", encoding="utf-8") as f:
        pass
    logger.info(f"Token count: {len(globals.token_list)}, Error token count: {len(globals.error_token_list)}")
    tokens_count = len(set(globals.token_list) - set(globals.error_token_list))
    return {"status": "success", "tokens_count": tokens_count}


@app.post(f"/{api_prefix}/tokens/error" if api_prefix else "/tokens/error")
async def error_tokens():
    error_tokens_list = list(set(globals.error_token_list))
    return {"status": "success", "error_tokens": error_tokens_list}


@app.get(f"/{api_prefix}/tokens/add/{{token}}" if api_prefix else "/tokens/add/{token}")
async def add_token(token: str):
    if token.strip() and not token.startswith("#"):
        globals.token_list.append(token.strip())
        with open(globals.TOKENS_FILE, "a", encoding="utf-8") as f:
            f.write(token.strip() + "\n")
    logger.info(f"Token count: {len(globals.token_list)}, Error token count: {len(globals.error_token_list)}")
    tokens_count = len(set(globals.token_list) - set(globals.error_token_list))
    return {"status": "success", "tokens_count": tokens_count}
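The upload and add routes in `api/chat2api.py` share one rule: split the submitted text on newlines, skip blank lines and lines beginning with `#`, strip the rest, and report `len(set(token_list) - set(error_token_list))` as the usable count. The pure-function sketch below isolates that rule so it can be exercised without FastAPI or the `globals` module; `parse_token_text` and `usable_token_count` are hypothetical names.

```python
def parse_token_text(text):
    """Apply the upload rule: keep stripped, non-blank lines that don't start with '#'.

    As in the route, the '#' check runs on the unstripped line, so an indented
    '  # note' line is kept (as '# note').
    """
    return [line.strip() for line in text.split("\n") if line.strip() and not line.startswith("#")]


def usable_token_count(token_list, error_token_list):
    """Count distinct tokens not marked as errors, as the routes report it."""
    return len(set(token_list) - set(error_token_list))


uploaded = parse_token_text("tok-1\n\n# a comment\n  tok-2  \ntok-1\n")
count = usable_token_count(uploaded, ["tok-2"])
```

Duplicates survive parsing (and, in the route, are appended to `TOKENS_FILE` again); only the reported count de-duplicates them.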

api/files.py

ADDED

@@ -0,0 +1,144 @@

import io

import pybase64
from PIL import Image

from utils.Client import Client
from utils.configs import export_proxy_url, cf_file_url


async def get_file_content(url):
    if url.startswith("data:"):
        mime_type, base64_data = url.split(';')[0].split(':')[1], url.split(',')[1]
        file_content = pybase64.b64decode(base64_data)
        return file_content, mime_type
    else:
        client = Client()
        try:
            if cf_file_url:
                body = {"file_url": url}
                r = await client.post(cf_file_url, timeout=60, json=body)
            else:
                r = await client.get(url, proxy=export_proxy_url, timeout=60)
            if r.status_code != 200:
                return None, None
            file_content = r.content
            mime_type = r.headers.get('Content-Type', '').split(';')[0].strip()
            return file_content, mime_type
        finally:
            await client.close()
            del client
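`get_file_content` splits a `data:` URL by hand: the MIME type is the text between `data:` and the first `;`, and the payload is everything after the comma. A stdlib-only sketch of the same parsing, using `base64.b64decode` in place of the `pybase64.b64decode` call above (a substitution for portability). Like the original, it assumes a base64-encoded payload, but unlike the original it rejects other data URLs explicitly; `parse_data_url` is a hypothetical name:

```python
import base64


def parse_data_url(url: str) -> tuple[bytes, str]:
    """Decode a base64 data: URL into (bytes, mime_type).

    Sketch only: assumes the form data:<mime>[;params];base64,<payload>
    and raises ValueError for anything else instead of guessing.
    """
    if not url.startswith("data:"):
        raise ValueError("not a data: URL")
    header, _, payload = url.partition(",")
    if not header.endswith(";base64"):
        raise ValueError("only base64-encoded data: URLs are handled here")
    # "data:image/png;base64" -> "image/png"
    mime_type = header[len("data:"):].split(";")[0]
    return base64.b64decode(payload), mime_type


if __name__ == "__main__":
    content, mime = parse_data_url("data:image/png;base64,aGVsbG8=")
    print(mime, content)  # image/png b'hello'
```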

async def determine_file_use_case(mime_type):
    multimodal_types = ["image/jpeg", "image/webp", "image/png", "image/gif"]
    my_files_types = ["text/x-php", "application/msword", "text/x-c", "text/html",
                      "application/vnd.openxmlformats-officedocument.wordprocessingml.document",
                      "application/json", "text/javascript", "application/pdf",
                      "text/x-java", "text/x-tex", "text/x-typescript", "text/x-sh",
                      "text/x-csharp", "application/vnd.openxmlformats-officedocument.presentationml.presentation",
                      "text/x-c++", "application/x-latex", "text/markdown", "text/plain",
                      "text/x-ruby", "text/x-script.python"]

    if mime_type in multimodal_types:
        return "multimodal"
    elif mime_type in my_files_types:
        return "my_files"
    else:
        return "ace_upload"
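`determine_file_use_case` is a three-way lookup: four image types take the multimodal path, a fixed list of document and source-code types goes to "my_files", and everything else falls back to "ace_upload". A sketch of the same routing using frozensets for constant-time membership, plus a normalisation step that drops parameters such as `; charset=utf-8` and lowercases the type; that normalisation is an addition of this sketch, not something the original does. `file_use_case` is a hypothetical name:

```python
MULTIMODAL_TYPES = frozenset({"image/jpeg", "image/webp", "image/png", "image/gif"})
MY_FILES_TYPES = frozenset({
    "text/x-php", "application/msword", "text/x-c", "text/html",
    "application/vnd.openxmlformats-officedocument.wordprocessingml.document",
    "application/json", "text/javascript", "application/pdf",
    "text/x-java", "text/x-tex", "text/x-typescript", "text/x-sh",
    "text/x-csharp",
    "application/vnd.openxmlformats-officedocument.presentationml.presentation",
    "text/x-c++", "application/x-latex", "text/markdown", "text/plain",
    "text/x-ruby", "text/x-script.python",
})


def file_use_case(mime_type: str) -> str:
    """Route a MIME type to 'multimodal', 'my_files' or 'ace_upload'."""
    # Sketch addition: strip parameters and normalise case before lookup.
    base = mime_type.split(";", 1)[0].strip().lower()
    if base in MULTIMODAL_TYPES:
        return "multimodal"
    if base in MY_FILES_TYPES:
        return "my_files"
    return "ace_upload"


if __name__ == "__main__":
    for t in ("image/PNG", "text/plain; charset=utf-8", "application/zip"):
        print(t, "->", file_use_case(t))
```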

async def get_image_size(file_content):
    with Image.open(io.BytesIO(file_content)) as img:
        return img.width, img.height
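`get_image_size` delegates to Pillow, which reads just enough of the file to report its dimensions. For PNG specifically, width and height sit at fixed offsets in the IHDR chunk that must directly follow the 8-byte signature, so they can be read with `struct` alone. This is a swapped-in, PNG-only technique for illustration (`png_size` is a hypothetical name); it does not cover the JPEG, WebP and GIF inputs that Pillow handles:

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_size(data: bytes) -> tuple[int, int]:
    """Return (width, height) read from a PNG's IHDR chunk."""
    if len(data) < 24 or not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG stream")
    # Bytes 8-11: chunk length, 12-15: chunk type, 16-23: big-endian width, height.
    if data[12:16] != b"IHDR":
        raise ValueError("IHDR chunk missing")
    width, height = struct.unpack(">II", data[16:24])
    return width, height


if __name__ == "__main__":
    header = PNG_SIGNATURE + struct.pack(">I", 13) + b"IHDR" + struct.pack(">II", 640, 480)
    print(png_size(header))  # (640, 480)
```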

async def get_file_extension(mime_type):
    extension_mapping = {
        "image/jpeg": ".jpg",
        "image/png": ".png",
        "image/gif": ".gif",
        "image/webp": ".webp",
        "text/x-php": ".php",
        "application/msword": ".doc",
        "text/x-c": ".c",
        "text/html": ".html",
        "application/vnd.openxmlformats-officedocument.wordprocessingml.document": ".docx",
        "application/json": ".json",
        "text/javascript": ".js",
        "application/pdf": ".pdf",
        "text/x-java": ".java",
        "text/x-tex": ".tex",
        "text/x-typescript": ".ts",
        "text/x-sh": ".sh",
        "text/x-csharp": ".cs",
        "application/vnd.openxmlformats-officedocument.presentationml.presentation": ".pptx",
        "text/x-c++": ".cpp",
        "application/x-latex": ".latex",
        "text/markdown": ".md",
        "text/plain": ".txt",
        "text/x-ruby": ".rb",
        "text/x-script.python": ".py",
        "application/zip": ".zip",
        "application/x-zip-compressed": ".zip",
        "application/x-tar": ".tar",
        "application/x-compressed-tar": ".tar.gz",
        "application/vnd.rar": ".rar",
        "application/x-rar-compressed": ".rar",
        "application/x-7z-compressed": ".7z",
        "application/octet-stream": ".bin",
        "audio/mpeg": ".mp3",
        "audio/wav": ".wav",
        "audio/ogg": ".ogg",
        "audio/aac": ".aac",
        "video/mp4": ".mp4",
        "video/x-msvideo": ".avi",
        "video/x-matroska": ".mkv",
        "video/webm": ".webm",
        "application/rtf": ".rtf",
        "application/vnd.ms-excel": ".xls",
        "application/vnd.openxmlformats-officedocument.spreadsheetml.sheet": ".xlsx",
        "text/css": ".css",
        "text/xml": ".xml",
        "application/xml": ".xml",
        "application/vnd.android.package-archive": ".apk",
        "application/vnd.apple.installer+xml": ".mpkg",
        "application/x-bzip": ".bz",
        "application/x-bzip2": ".bz2",
        "application/x-csh": ".csh",
        "application/x-debian-package": ".deb",
        "application/x-dvi": ".dvi",
        "application/java-archive": ".jar",
        "application/x-java-jnlp-file": ".jnlp",
        "application/vnd.mozilla.xul+xml": ".xul",
        "application/vnd.ms-fontobject": ".eot",
        "application/ogg": ".ogx",
        "application/x-font-ttf": ".ttf",
        "application/font-woff": ".woff",
        "application/x-shockwave-flash": ".swf",
        "application/vnd.visio": ".vsd",
        "application/xhtml+xml": ".xhtml",
        "application/vnd.ms-powerpoint": ".ppt",
        "application/vnd.oasis.opendocument.text": ".odt",
        "application/vnd.oasis.opendocument.spreadsheet": ".ods",
        "application/x-xpinstall": ".xpi",
        "application/vnd.google-earth.kml+xml": ".kml",
        "application/vnd.google-earth.kmz": ".kmz",
        "application/x-font-otf": ".otf",
        "application/vnd.ms-excel.addin.macroEnabled.12": ".xlam",
        "application/vnd.ms-excel.sheet.binary.macroEnabled.12": ".xlsb",
        "application/vnd.ms-excel.template.macroEnabled.12": ".xltm",
        "application/vnd.ms-powerpoint.addin.macroEnabled.12": ".ppam",
        "application/vnd.ms-powerpoint.presentation.macroEnabled.12": ".pptm",
        "application/vnd.ms-powerpoint.slideshow.macroEnabled.12": ".ppsm",
        "application/vnd.ms-powerpoint.template.macroEnabled.12": ".potm",
        "application/vnd.ms-word.document.macroEnabled.12": ".docm",
        "application/vnd.ms-word.template.macroEnabled.12": ".dotm",
        "application/x-ms-application": ".application",
        "application/x-ms-wmd": ".wmd",
        "application/x-ms-wmz": ".wmz",
        "application/x-ms-xbap": ".xbap",
        "application/vnd.ms-xpsdocument": ".xps",
        "application/x-silverlight-app": ".xap"
    }
    return extension_mapping.get(mime_type, "")
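`get_file_extension` returns an empty string for any type missing from its table. One possible extension, sketched here as an assumption rather than as the project's behaviour: normalise the MIME type, consult the project table first (abbreviated to a few entries below), and only then fall back to the standard library's `mimetypes.guess_extension`, whose answers depend on the host's MIME database. `file_extension` and `EXTENSION_MAPPING` are hypothetical names:

```python
import mimetypes

# Abbreviated, all-lowercase stand-in for the full extension_mapping table.
EXTENSION_MAPPING = {
    "image/jpeg": ".jpg",
    "application/x-compressed-tar": ".tar.gz",
    "text/x-script.python": ".py",
    "application/octet-stream": ".bin",
}


def file_extension(mime_type: str) -> str:
    """Map a MIME type to a file extension, or '' if nothing is known."""
    base = mime_type.split(";", 1)[0].strip().lower()
    if base in EXTENSION_MAPPING:
        return EXTENSION_MAPPING[base]
    # Fallback: the stdlib registry (its contents vary by platform).
    return mimetypes.guess_extension(base) or ""


if __name__ == "__main__":
    print(file_extension("image/JPEG"))  # .jpg
    print(file_extension("application/x-compressed-tar; foo=bar"))  # .tar.gz
```

If the full table were used with this lowercasing step, its mixed-case keys (the `macroEnabled` entries) would need to be lowercased as well, or they would never match.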

api/models.py

ADDED

@@ -0,0 +1,28 @@

model_proxy = {
    "gpt-3.5-turbo": "gpt-3.5-turbo-0125",
    "gpt-3.5-turbo-16k": "gpt-3.5-turbo-16k-0613",
    "gpt-4": "gpt-4-0613",
    "gpt-4-32k": "gpt-4-32k-0613",
    "gpt-4-turbo-preview": "gpt-4-0125-preview",
    "gpt-4-vision-preview": "gpt-4-1106-vision-preview",
    "gpt-4-turbo": "gpt-4-turbo-2024-04-09",
    "gpt-4o": "gpt-4o-2024-08-06",
    "gpt-4o-mini": "gpt-4o-mini-2024-07-18",
    "o1-preview": "o1-preview-2024-09-12",
    "o1-mini": "o1-mini-2024-09-12",
    "claude-3-opus": "claude-3-opus-20240229",
    "claude-3-sonnet": "claude-3-sonnet-20240229",
    "claude-3-haiku": "claude-3-haiku-20240307",
}
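`model_proxy` maps the short model names a client sends to dated snapshot names. A minimal sketch of how such a table might be consulted, assuming unknown names should pass through unchanged; how this project actually uses the table is not shown in this file. `resolve_model` is a hypothetical helper, and the table below is an excerpt copied from above:

```python
MODEL_PROXY = {
    "gpt-4o": "gpt-4o-2024-08-06",
    "gpt-4o-mini": "gpt-4o-mini-2024-07-18",
    "o1-mini": "o1-mini-2024-09-12",
}


def resolve_model(name: str) -> str:
    """Return the dated snapshot for a known alias, else the name unchanged."""
    return MODEL_PROXY.get(name, name)


if __name__ == "__main__":
    print(resolve_model("gpt-4o"))             # gpt-4o-2024-08-06
    print(resolve_model("gpt-4o-2024-05-13"))  # gpt-4o-2024-05-13
```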

model_system_fingerprint = {
    "gpt-3.5-turbo-0125": ["fp_b28b39ffa8"],
    "gpt-3.5-turbo-1106": ["fp_592ef5907d"],
    "gpt-4-0125-preview": ["fp_f38f4d6482", "fp_2f57f81c11", "fp_a7daf7c51e", "fp_a865e8ede4", "fp_13c70b9f70",
                           "fp_b77cb481ed"],
    "gpt-4-1106-preview": ["fp_e467c31c3d", "fp_d986a8d1ba", "fp_99a5a401bb", "fp_123d5a9f90", "fp_0d1affc7a6",
                           "fp_5c95a4634e"],
    "gpt-4-turbo-2024-04-09": ["fp_d1bac968b4"],
    "gpt-4o-2024-05-13": ["fp_3aa7262c27"],
    "gpt-4o-mini-2024-07-18": ["fp_c9aa9c0491"]
}
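`model_system_fingerprint` lists candidate `system_fingerprint` values per snapshot, the field an OpenAI-style chat completion response carries. A sketch of picking one, assuming a random choice and `None` when a snapshot has no entry (for example `gpt-4o-2024-08-06`, which `model_proxy` targets but this table does not list). `pick_fingerprint` is hypothetical, the table is an excerpt, and the optional seeded `random.Random` only makes the example repeatable:

```python
import random
from typing import Optional

MODEL_SYSTEM_FINGERPRINT = {
    "gpt-3.5-turbo-0125": ["fp_b28b39ffa8"],
    "gpt-4o-2024-05-13": ["fp_3aa7262c27"],
    "gpt-4o-mini-2024-07-18": ["fp_c9aa9c0491"],
}


def pick_fingerprint(snapshot: str, rng: Optional[random.Random] = None) -> Optional[str]:
    """Return one fingerprint for the snapshot, or None if none is listed."""
    candidates = MODEL_SYSTEM_FINGERPRINT.get(snapshot)
    if not candidates:
        return None
    # Use the caller's generator if given, else the module-level one.
    return (rng or random).choice(candidates)


if __name__ == "__main__":
    print(pick_fingerprint("gpt-4o-mini-2024-07-18"))  # fp_c9aa9c0491
    print(pick_fingerprint("gpt-4o-2024-08-06"))       # None
```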

api/tokens.py

ADDED

@@ -0,0 +1,86 @@

import math

import tiktoken


async def calculate_image_tokens(width, height, detail):
    if detail == "low":
        return 85
    else:
        max_dimension = max(width, height)
        if max_dimension > 2048:
            scale_factor = 2048 / max_dimension
            new_width = int(width * scale_factor)
            new_height = int(height * scale_factor)
        else:
            new_width = width
            new_height = height

        width, height = new_width, new_height
        min_dimension = min(width, height)
        if min_dimension > 768:
            scale_factor = 768 / min_dimension
            new_width = int(width * scale_factor)
            new_height = int(height * scale_factor)
        else:
            new_width = width
            new_height = height

        width, height = new_width, new_height
        num_masks_w = math.ceil(width / 512)
        num_masks_h = math.ceil(height / 512)
        total_masks = num_masks_w * num_masks_h

        tokens_per_mask = 170
        total_tokens = total_masks * tokens_per_mask + 85

        return total_tokens
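`calculate_image_tokens` charges a flat 85 tokens for `detail == "low"`; any other value is treated as high detail. In that case the image is scaled to fit within 2048x2048, scaled again so its shorter side is at most 768 px, and then charged 170 tokens per 512x512 tile plus a fixed 85. A synchronous restatement with two worked cases; `image_tokens` is a hypothetical name, and its `"high"` default is a convenience of this sketch (the original has no default):

```python
import math


def image_tokens(width: int, height: int, detail: str = "high") -> int:
    """Synchronous mirror of calculate_image_tokens."""
    if detail == "low":
        return 85
    # Step 1: fit inside a 2048x2048 box (only ever shrinks).
    longest = max(width, height)
    if longest > 2048:
        scale = 2048 / longest
        width, height = int(width * scale), int(height * scale)
    # Step 2: shrink so the shorter side is at most 768 px.
    shortest = min(width, height)
    if shortest > 768:
        scale = 768 / shortest
        width, height = int(width * scale), int(height * scale)
    # Step 3: 170 tokens per 512x512 tile, plus a fixed 85.
    tiles = math.ceil(width / 512) * math.ceil(height / 512)
    return tiles * 170 + 85


if __name__ == "__main__":
    # 1024x1024 -> 768x768 -> 2x2 tiles -> 4*170 + 85
    print(image_tokens(1024, 1024))  # 765
    # 2048x4096 -> 1024x2048 -> 768x1536 -> 2x3 tiles -> 6*170 + 85
    print(image_tokens(2048, 4096))  # 1105
```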

async def num_tokens_from_messages(messages, model=''):
    try:
        encoding = tiktoken.encoding_for_model(model)
    except KeyError:
        encoding = tiktoken.get_encoding("cl100k_base")
    if model == "gpt-3.5-turbo-0301":
        tokens_per_message = 4
    else:
        tokens_per_message = 3
    num_tokens = 0
    for message in messages:
        num_tokens += tokens_per_message
        for key, value in message.items():
            if isinstance(value, list):
                for item in value:
                    if item.get("type") == "text":
                        num_tokens += len(encoding.encode(item.get("text")))
                    if item.get("type") == "image_url":
                        pass
            else:
                num_tokens += len(encoding.encode(value))
    num_tokens += 3
    return num_tokens
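`num_tokens_from_messages` adds a fixed overhead per message (4 for `gpt-3.5-turbo-0301`, 3 otherwise), the encoded length of every string field including `role`, the text parts of list-valued content (image parts are skipped), and 3 more tokens at the end for priming the assistant reply. The sketch below reproduces only that bookkeeping. To stay dependency-free it swaps tiktoken for a whitespace tokenizer, which is an assumption of the sketch, so its counts will not match real BPE counts. `count_message_tokens` and `whitespace_tokenize` are hypothetical names:

```python
from typing import Callable, Iterable


def whitespace_tokenize(text: str) -> list[str]:
    """Stand-in tokenizer for illustration; tiktoken produces BPE tokens instead."""
    return text.split()


def count_message_tokens(
    messages: Iterable[dict],
    model: str = "",
    tokenize: Callable[[str], list] = whitespace_tokenize,
) -> int:
    tokens_per_message = 4 if model == "gpt-3.5-turbo-0301" else 3
    total = 0
    for message in messages:
        total += tokens_per_message
        for value in message.values():
            if isinstance(value, list):
                # Multimodal content: count text parts, skip image_url parts.
                for part in value:
                    if part.get("type") == "text":
                        total += len(tokenize(part.get("text", "")))
            else:
                total += len(tokenize(value))
    return total + 3  # priming tokens for the assistant reply


if __name__ == "__main__":
    msgs = [
        {"role": "system", "content": "be brief"},
        {"role": "user", "content": [
            {"type": "text", "text": "what is this"},
            {"type": "image_url", "image_url": {"url": "data:image/png;base64,AAAA"}},
        ]},
    ]
    print(count_message_tokens(msgs))  # 16
```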

async def num_tokens_from_content(content, model=None):
    try:
        encoding = tiktoken.encoding_for_model(model)
    except KeyError:
        encoding = tiktoken.get_encoding("cl100k_base")
    encoded_content = encoding.encode(content)
    len_encoded_content = len(encoded_content)
    return len_encoded_content


async def split_tokens_from_content(content, max_tokens, model=None):
    try:
        encoding = tiktoken.encoding_for_model(model)
    except KeyError:
        encoding = tiktoken.get_encoding("cl100k_base")
    encoded_content = encoding.encode(content)
    len_encoded_content = len(encoded_content)
    if len_encoded_content >= max_tokens:
        content = encoding.decode(encoded_content[:max_tokens])
        return content, max_tokens, "length"
    else:
        return content, len_encoded_content, "stop"
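`split_tokens_from_content` truncates text to `max_tokens` and returns an OpenAI-style finish reason: "length" when the budget is reached, "stop" otherwise. Because the comparison is `>=`, text that lands exactly on the budget is also reported as "length". A dependency-free sketch of the same contract, again with a whitespace tokenizer standing in for tiktoken (an assumption; decoding a BPE slice can cut words differently). `truncate_to_budget` is a hypothetical name:

```python
def truncate_to_budget(content: str, max_tokens: int) -> tuple[str, int, str]:
    """Return (text, token_count, finish_reason), mirroring split_tokens_from_content."""
    tokens = content.split()  # stand-in for encoding.encode(content)
    if len(tokens) >= max_tokens:
        # Same ">=" as the original: hitting the budget exactly reports "length".
        return " ".join(tokens[:max_tokens]), max_tokens, "length"
    return content, len(tokens), "stop"


if __name__ == "__main__":
    print(truncate_to_budget("one two three four", 2))  # ('one two', 2, 'length')
    print(truncate_to_budget("one two", 5))             # ('one two', 2, 'stop')
    print(truncate_to_budget("one two", 2))             # ('one two', 2, 'length')
```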

app.py

ADDED

@@ -0,0 +1,57 @@

import warnings

import uvicorn
from fastapi import FastAPI, HTTPException
from fastapi.security import HTTPBearer, HTTPAuthorizationCredentials
from fastapi.middleware.cors import CORSMiddleware
from fastapi.templating import Jinja2Templates

from utils.configs import enable_gateway, api_prefix

warnings.filterwarnings("ignore")


log_config = uvicorn.config.LOGGING_CONFIG
default_format = "%(asctime)s | %(levelname)s | %(message)s"
access_format = r'%(asctime)s | %(levelname)s | %(client_addr)s: %(request_line)s %(status_code)s'
log_config["formatters"]["default"]["fmt"] = default_format