Merge pull request #37 from seanpedrick-case/dev

Browse filesCan remove all redactions with same text on button, added more config options

- README.md +9 -8

- app.py +100 -87

- pyproject.toml +1 -1

- tools/config.py +76 -5

- tools/data_anonymise.py +18 -10

- tools/file_conversion.py +9 -10

- tools/file_redaction.py +31 -31

- tools/helper_functions.py +24 -33

- tools/redaction_review.py +14 -4

README.md

CHANGED

|

@@ -10,7 +10,7 @@ license: agpl-3.0

|

|

| 10 |

---

|

| 11 |

# Document redaction

|

| 12 |

|

| 13 |

-

version: 0.6.

|

| 14 |

|

| 15 |

Redact personally identifiable information (PII) from documents (pdf, images), open text, or tabular data (xlsx/csv/parquet). Please see the [User Guide](#user-guide) for a walkthrough on how to use the app. Below is a very brief overview.

|

| 16 |

|

|

@@ -223,13 +223,13 @@ On the 'Review redactions' tab you have a visual interface that allows you to in

|

|

| 223 |

|

| 224 |

### Uploading documents for review

|

| 225 |

|

| 226 |

-

The top area has a file upload area where you can upload original, unredacted PDFs, alongside the '..._review_file.csv' that is produced by the redaction process. Once you have uploaded these two files, click the 'Review PDF

|

| 227 |

|

| 228 |

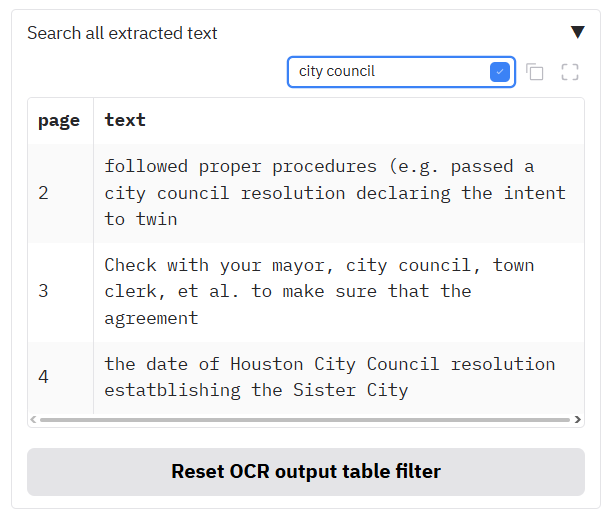

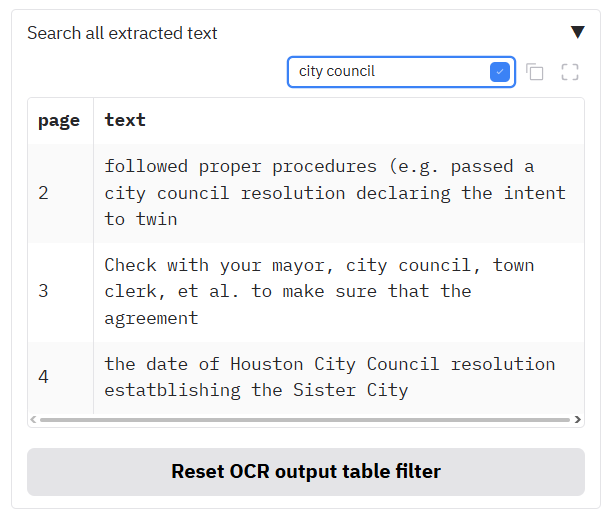

Optionally, you can also upload one of the '..._ocr_output.csv' files here that comes out of a redaction task, so that you can navigate the extracted text from the document.

|

| 229 |

|

| 230 |

|

| 231 |

|

| 232 |

-

You can upload the three review files in the box (unredacted document, '..._review_file.csv' and '..._ocr_output.csv' file) before clicking 'Review PDF

|

| 233 |

|

| 234 |

|

| 235 |

|

|

@@ -293,7 +293,7 @@ The table shows a list of all the suggested redactions in the document alongside

|

|

| 293 |

|

| 294 |

|

| 295 |

|

| 296 |

-

If you click on one of the rows in this table, you will be taken to the page of the redaction. Clicking on a redaction row on the same page

|

| 297 |

|

| 298 |

|

| 299 |

|

|

@@ -303,12 +303,13 @@ To filter the 'Search suggested redactions' table you can:

|

|

| 303 |

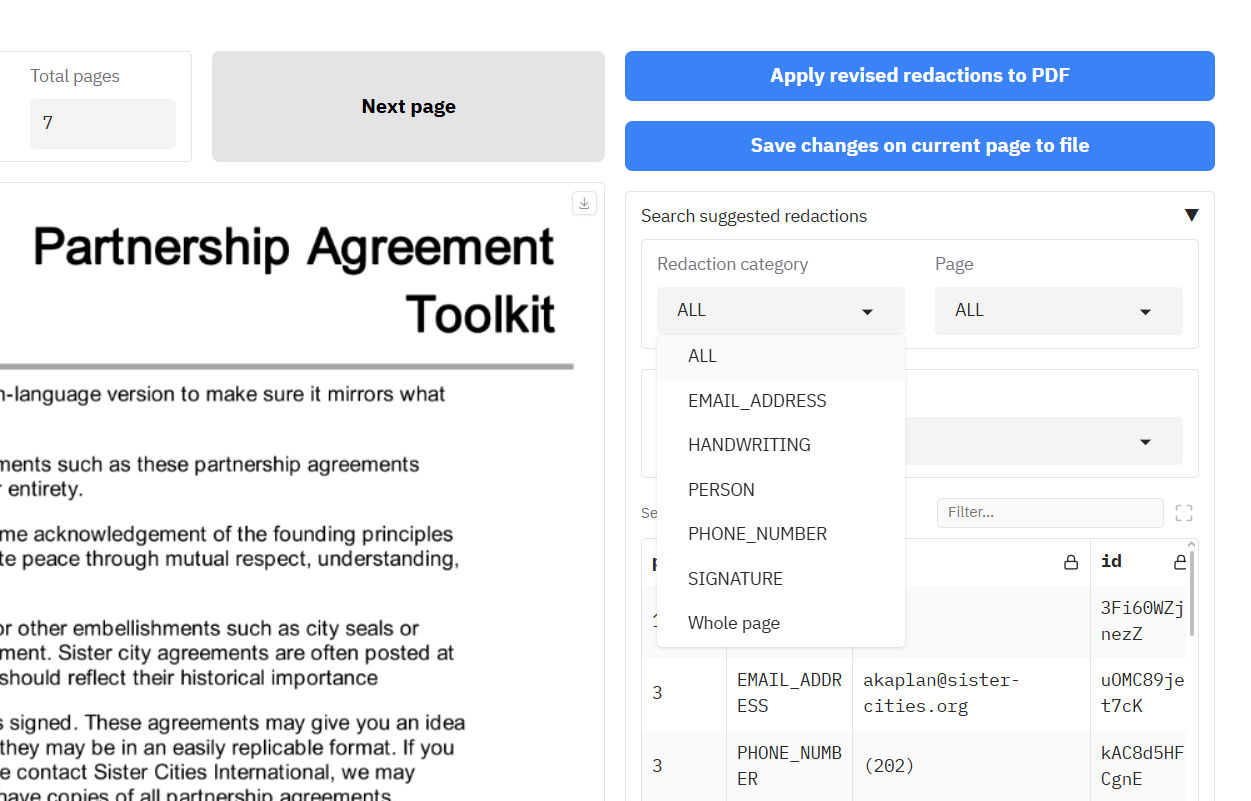

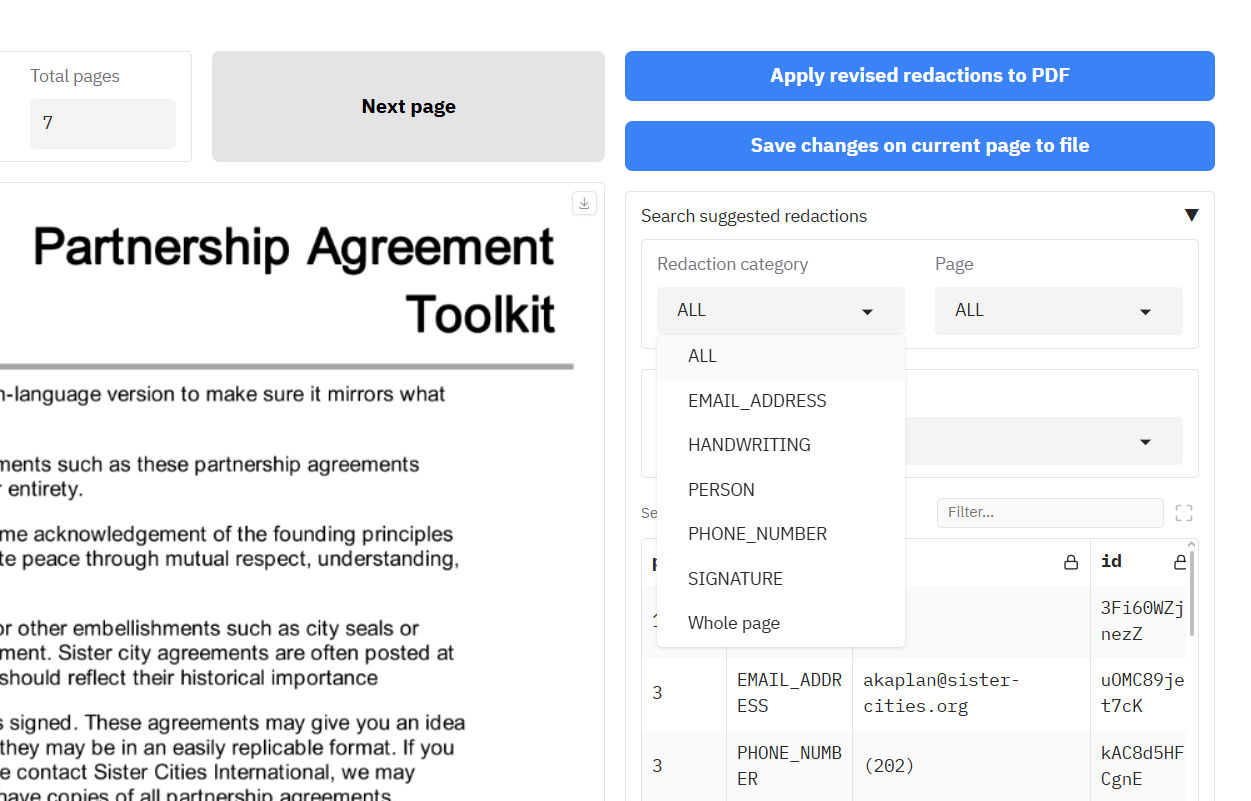

1. Click on one of the dropdowns (Redaction category, Page, Text), and select an option, or

|

| 304 |

2. Write text in the 'Filter' box just above the table. Click the blue box to apply the filter to the table.

|

| 305 |

|

| 306 |

-

Once you have filtered the table, you have a few options underneath on what you can do with the filtered rows:

|

| 307 |

|

| 308 |

-

- Click the

|

| 309 |

-

- Click the

|

|

|

|

| 310 |

|

| 311 |

-

**NOTE**: After excluding redactions using

|

| 312 |

|

| 313 |

If you made a mistake, click the 'Undo last element removal' button to restore the Search suggested redactions table to its previous state (can only undo the last action).

|

| 314 |

|

|

|

|

| 10 |

---

|

| 11 |

# Document redaction

|

| 12 |

|

| 13 |

+

version: 0.6.8

|

| 14 |

|

| 15 |

Redact personally identifiable information (PII) from documents (pdf, images), open text, or tabular data (xlsx/csv/parquet). Please see the [User Guide](#user-guide) for a walkthrough on how to use the app. Below is a very brief overview.

|

| 16 |

|

|

|

|

| 223 |

|

| 224 |

### Uploading documents for review

|

| 225 |

|

| 226 |

+

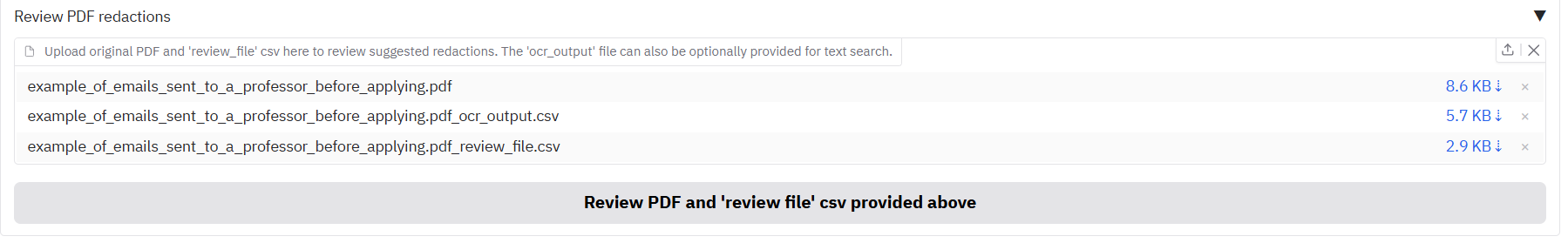

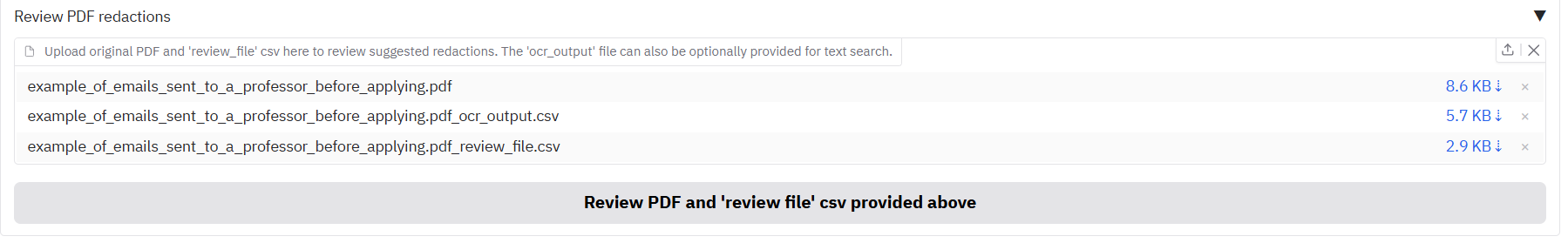

The top area has a file upload area where you can upload original, unredacted PDFs, alongside the '..._review_file.csv' that is produced by the redaction process. Once you have uploaded these two files, click the '**Review redactions based on original PDF...**' button to load in the files for review. This will allow you to visualise and modify the suggested redactions using the interface below.

|

| 227 |

|

| 228 |

Optionally, you can also upload one of the '..._ocr_output.csv' files here that comes out of a redaction task, so that you can navigate the extracted text from the document.

|

| 229 |

|

| 230 |

|

| 231 |

|

| 232 |

+

You can upload the three review files in the box (unredacted document, '..._review_file.csv' and '..._ocr_output.csv' file) before clicking '**Review redactions based on original PDF...**', as in the image below:

|

| 233 |

|

| 234 |

|

| 235 |

|

|

|

|

| 293 |

|

| 294 |

|

| 295 |

|

| 296 |

+

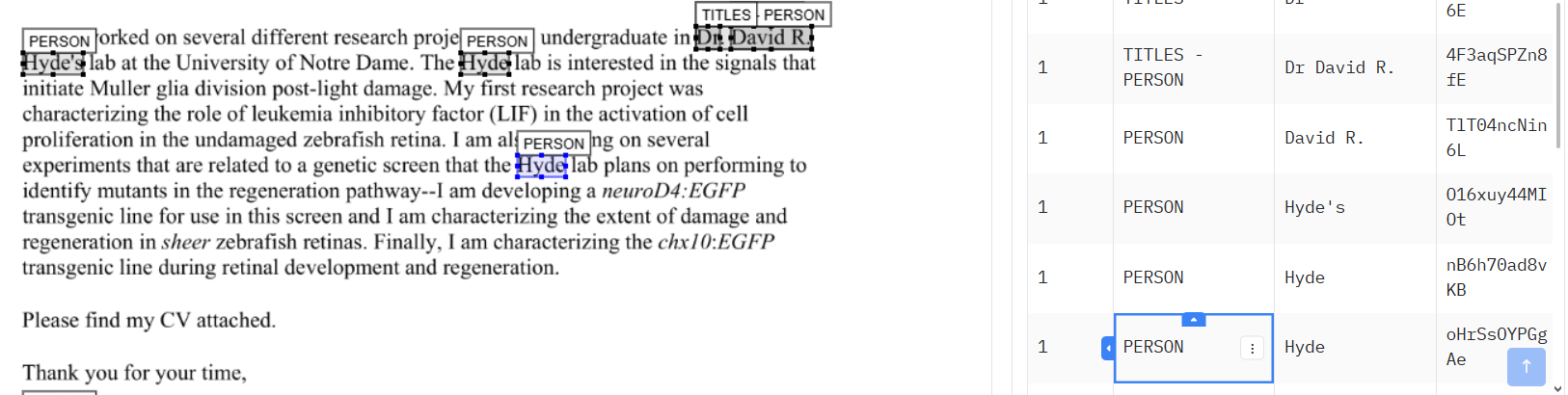

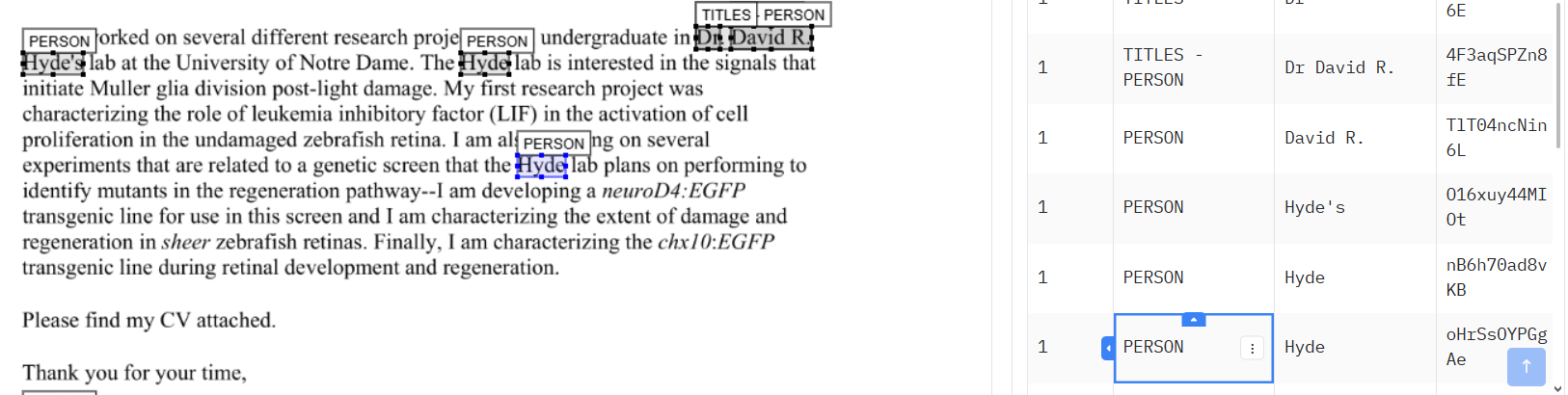

If you click on one of the rows in this table, you will be taken to the page of the redaction. Clicking on a redaction row on the same page will change the colour of redaction box to blue to help you locate it in the document viewer (just when using the app, not in redacted output PDFs).

|

| 297 |

|

| 298 |

|

| 299 |

|

|

|

|

| 303 |

1. Click on one of the dropdowns (Redaction category, Page, Text), and select an option, or

|

| 304 |

2. Write text in the 'Filter' box just above the table. Click the blue box to apply the filter to the table.

|

| 305 |

|

| 306 |

+

Once you have filtered the table, or selected a row from the table, you have a few options underneath on what you can do with the filtered rows:

|

| 307 |

|

| 308 |

+

- Click the **Exclude all redactions in table** button to remove all redactions visible in the table from the document. **Important:** ensure that you have clicked the blue tick icon next to the search box before doing this, or you will remove all redactions from the document. If you do end up doing this, click the 'Undo last element removal' button below to restore the redactions.

|

| 309 |

+

- Click the **Exclude specific redaction row** button to remove only the redaction from the last row you clicked on from the document. The currently selected row is visible below.

|

| 310 |

+

- Click the **Exclude all redactions with the same text as selected row** button to remove all redactions from the document that are exactly the same as the selected row text.

|

| 311 |

|

| 312 |

+

**NOTE**: After excluding redactions using any of the above options, click the 'Reset filters' button below to ensure that the dropdowns and table return to seeing all remaining redactions in the document.

|

| 313 |

|

| 314 |

If you made a mistake, click the 'Undo last element removal' button to restore the Search suggested redactions table to its previous state (can only undo the last action).

|

| 315 |

|

app.py

CHANGED

|

@@ -1,15 +1,13 @@

|

|

| 1 |

import os

|

| 2 |

-

import logging

|

| 3 |

import pandas as pd

|

| 4 |

import gradio as gr

|

| 5 |

from gradio_image_annotation import image_annotator

|

| 6 |

-

|

| 7 |

-

from tools.

|

| 8 |

-

from tools.

|

| 9 |

-

from tools.aws_functions import upload_file_to_s3, download_file_from_s3, upload_log_file_to_s3

|

| 10 |

from tools.file_redaction import choose_and_run_redactor

|

| 11 |

from tools.file_conversion import prepare_image_or_pdf, get_input_file_names

|

| 12 |

-

from tools.redaction_review import apply_redactions_to_review_df_and_files, update_all_page_annotation_object_based_on_previous_page, decrease_page, increase_page, update_annotator_object_and_filter_df, update_entities_df_recogniser_entities, update_entities_df_page, update_entities_df_text,

|

| 13 |

from tools.data_anonymise import anonymise_data_files

|

| 14 |

from tools.auth import authenticate_user

|

| 15 |

from tools.load_spacy_model_custom_recognisers import custom_entities

|

|

@@ -20,30 +18,7 @@ from tools.textract_batch_call import analyse_document_with_textract_api, poll_w

|

|

| 20 |

# Suppress downcasting warnings

|

| 21 |

pd.set_option('future.no_silent_downcasting', True)

|

| 22 |

|

| 23 |

-

|

| 24 |

-

|

| 25 |

-

full_comprehend_entity_list = ['BANK_ACCOUNT_NUMBER','BANK_ROUTING','CREDIT_DEBIT_NUMBER','CREDIT_DEBIT_CVV','CREDIT_DEBIT_EXPIRY','PIN','EMAIL','ADDRESS','NAME','PHONE','SSN','DATE_TIME','PASSPORT_NUMBER','DRIVER_ID','URL','AGE','USERNAME','PASSWORD','AWS_ACCESS_KEY','AWS_SECRET_KEY','IP_ADDRESS','MAC_ADDRESS','ALL','LICENSE_PLATE','VEHICLE_IDENTIFICATION_NUMBER','UK_NATIONAL_INSURANCE_NUMBER','CA_SOCIAL_INSURANCE_NUMBER','US_INDIVIDUAL_TAX_IDENTIFICATION_NUMBER','UK_UNIQUE_TAXPAYER_REFERENCE_NUMBER','IN_PERMANENT_ACCOUNT_NUMBER','IN_NREGA','INTERNATIONAL_BANK_ACCOUNT_NUMBER','SWIFT_CODE','UK_NATIONAL_HEALTH_SERVICE_NUMBER','CA_HEALTH_NUMBER','IN_AADHAAR','IN_VOTER_NUMBER', "CUSTOM_FUZZY"]

|

| 26 |

-

|

| 27 |

-

# Add custom spacy recognisers to the Comprehend list, so that local Spacy model can be used to pick up e.g. titles, streetnames, UK postcodes that are sometimes missed by comprehend

|

| 28 |

-

chosen_comprehend_entities.extend(custom_entities)

|

| 29 |

-

full_comprehend_entity_list.extend(custom_entities)

|

| 30 |

-

|

| 31 |

-

# Entities for local PII redaction option

|

| 32 |

-

chosen_redact_entities = ["TITLES", "PERSON", "PHONE_NUMBER", "EMAIL_ADDRESS", "STREETNAME", "UKPOSTCODE", "CUSTOM"]

|

| 33 |

-

|

| 34 |

-

full_entity_list = ["TITLES", "PERSON", "PHONE_NUMBER", "EMAIL_ADDRESS", "STREETNAME", "UKPOSTCODE", 'CREDIT_CARD', 'CRYPTO', 'DATE_TIME', 'IBAN_CODE', 'IP_ADDRESS', 'NRP', 'LOCATION', 'MEDICAL_LICENSE', 'URL', 'UK_NHS', 'CUSTOM', 'CUSTOM_FUZZY']

|

| 35 |

-

|

| 36 |

-

log_file_name = 'log.csv'

|

| 37 |

-

|

| 38 |

-

file_input_height = 200

|

| 39 |

-

|

| 40 |

-

if RUN_AWS_FUNCTIONS == "1":

|

| 41 |

-

default_ocr_val = textract_option

|

| 42 |

-

default_pii_detector = local_pii_detector

|

| 43 |

-

else:

|

| 44 |

-

default_ocr_val = text_ocr_option

|

| 45 |

-

default_pii_detector = local_pii_detector

|

| 46 |

-

|

| 47 |

SAVE_LOGS_TO_CSV = eval(SAVE_LOGS_TO_CSV)

|

| 48 |

SAVE_LOGS_TO_DYNAMODB = eval(SAVE_LOGS_TO_DYNAMODB)

|

| 49 |

|

|

@@ -55,6 +30,17 @@ if DYNAMODB_ACCESS_LOG_HEADERS: DYNAMODB_ACCESS_LOG_HEADERS = eval(DYNAMODB_ACCE

|

|

| 55 |

if DYNAMODB_FEEDBACK_LOG_HEADERS: DYNAMODB_FEEDBACK_LOG_HEADERS = eval(DYNAMODB_FEEDBACK_LOG_HEADERS)

|

| 56 |

if DYNAMODB_USAGE_LOG_HEADERS: DYNAMODB_USAGE_LOG_HEADERS = eval(DYNAMODB_USAGE_LOG_HEADERS)

|

| 57 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 58 |

# Create the gradio interface

|

| 59 |

app = gr.Blocks(theme = gr.themes.Base(), fill_width=True)

|

| 60 |

|

|

@@ -66,8 +52,7 @@ with app:

|

|

| 66 |

|

| 67 |

# Pymupdf doc and all image annotations objects need to be stored as State objects as they do not have a standard Gradio component equivalent

|

| 68 |

pdf_doc_state = gr.State([])

|

| 69 |

-

all_image_annotations_state = gr.State([])

|

| 70 |

-

|

| 71 |

|

| 72 |

all_decision_process_table_state = gr.Dataframe(value=pd.DataFrame(), headers=None, col_count=0, row_count = (0, "dynamic"), label="all_decision_process_table", visible=False, type="pandas", wrap=True)

|

| 73 |

review_file_state = gr.Dataframe(value=pd.DataFrame(), headers=None, col_count=0, row_count = (0, "dynamic"), label="review_file_df", visible=False, type="pandas", wrap=True)

|

|

@@ -105,11 +90,11 @@ with app:

|

|

| 105 |

backup_recogniser_entity_dataframe_base = gr.Dataframe(visible=False)

|

| 106 |

|

| 107 |

# Logging state

|

| 108 |

-

feedback_logs_state = gr.Textbox(label= "feedback_logs_state", value=FEEDBACK_LOGS_FOLDER +

|

| 109 |

feedback_s3_logs_loc_state = gr.Textbox(label= "feedback_s3_logs_loc_state", value=FEEDBACK_LOGS_FOLDER, visible=False)

|

| 110 |

-

access_logs_state = gr.Textbox(label= "access_logs_state", value=ACCESS_LOGS_FOLDER +

|

| 111 |

access_s3_logs_loc_state = gr.Textbox(label= "access_s3_logs_loc_state", value=ACCESS_LOGS_FOLDER, visible=False)

|

| 112 |

-

usage_logs_state = gr.Textbox(label= "usage_logs_state", value=USAGE_LOGS_FOLDER +

|

| 113 |

usage_s3_logs_loc_state = gr.Textbox(label= "usage_s3_logs_loc_state", value=USAGE_LOGS_FOLDER, visible=False)

|

| 114 |

|

| 115 |

session_hash_textbox = gr.Textbox(label= "session_hash_textbox", value="", visible=False)

|

|

@@ -172,8 +157,8 @@ with app:

|

|

| 172 |

s3_whole_document_textract_input_subfolder = gr.Textbox(label = "Default Textract whole_document S3 input folder", value=TEXTRACT_WHOLE_DOCUMENT_ANALYSIS_INPUT_SUBFOLDER, visible=False)

|

| 173 |

s3_whole_document_textract_output_subfolder = gr.Textbox(label = "Default Textract whole_document S3 output folder", value=TEXTRACT_WHOLE_DOCUMENT_ANALYSIS_OUTPUT_SUBFOLDER, visible=False)

|

| 174 |

successful_textract_api_call_number = gr.Number(precision=0, value=0, visible=False)

|

| 175 |

-

no_redaction_method_drop = gr.Radio(label = """Placeholder for no redaction method after downloading Textract outputs""", value =

|

| 176 |

-

textract_only_method_drop = gr.Radio(label="""Placeholder for Textract method after downloading Textract outputs""", value =

|

| 177 |

|

| 178 |

load_s3_whole_document_textract_logs_bool = gr.Textbox(label = "Load Textract logs or not", value=LOAD_PREVIOUS_TEXTRACT_JOBS_S3, visible=False)

|

| 179 |

s3_whole_document_textract_logs_subfolder = gr.Textbox(label = "Default Textract whole_document S3 input folder", value=TEXTRACT_JOBS_S3_LOC, visible=False)

|

|

@@ -190,6 +175,14 @@ with app:

|

|

| 190 |

all_line_level_ocr_results_df_placeholder = gr.Dataframe(visible=False)

|

| 191 |

cost_code_dataframe_base = gr.Dataframe(value=pd.DataFrame(), row_count = (0, "dynamic"), label="Cost codes", type="pandas", interactive=True, show_fullscreen_button=True, show_copy_button=True, show_search='filter', wrap=True, max_height=200, visible=False)

|

| 192 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 193 |

# Duplicate page detection

|

| 194 |

in_duplicate_pages_text = gr.Textbox(label="in_duplicate_pages_text", visible=False)

|

| 195 |

duplicate_pages_df = gr.Dataframe(value=pd.DataFrame(), headers=None, col_count=0, row_count = (0, "dynamic"), label="duplicate_pages_df", visible=False, type="pandas", wrap=True)

|

|

@@ -225,7 +218,7 @@ with app:

|

|

| 225 |

job_output_textbox = gr.Textbox(value="", label="Textract call outputs", visible=False)

|

| 226 |

job_input_textbox = gr.Textbox(value=TEXTRACT_JOBS_S3_INPUT_LOC, label="Textract call outputs", visible=False)

|

| 227 |

|

| 228 |

-

textract_job_output_file = gr.File(label="Textract job output files", height=

|

| 229 |

convert_textract_outputs_to_ocr_results = gr.Button("Placeholder - Convert Textract job outputs to OCR results (needs relevant document file uploaded above)", variant="secondary", visible=False)

|

| 230 |

|

| 231 |

###

|

|

@@ -248,15 +241,15 @@ with app:

|

|

| 248 |

###

|

| 249 |

with gr.Tab("Redact PDFs/images"):

|

| 250 |

with gr.Accordion("Redact document", open = True):

|

| 251 |

-

in_doc_files = gr.File(label="Choose a document or image file (PDF, JPG, PNG)", file_count= "multiple", file_types=['.pdf', '.jpg', '.png', '.json', '.zip'], height=

|

| 252 |

|

| 253 |

-

text_extract_method_radio = gr.Radio(label="""Choose text extraction method. Local options are lower quality but cost nothing - they may be worth a try if you are willing to spend some time reviewing outputs. AWS Textract has a cost per page - £2.66 ($3.50) per 1,000 pages with signature detection (default), £1.14 ($1.50) without. Change the settings in the tab below (AWS Textract signature detection) to change this.""", value =

|

| 254 |

|

| 255 |

with gr.Accordion("AWS Textract signature detection (default is on)", open = False):

|

| 256 |

handwrite_signature_checkbox = gr.CheckboxGroup(label="AWS Textract extraction settings", choices=["Extract handwriting", "Extract signatures"], value=["Extract handwriting", "Extract signatures"])

|

| 257 |

|

| 258 |

with gr.Row(equal_height=True):

|

| 259 |

-

pii_identification_method_drop = gr.Radio(label = """Choose personal information detection method. The local model is lower quality but costs nothing - it may be worth a try if you are willing to spend some time reviewing outputs, or if you are only interested in searching for custom search terms (see Redaction settings - custom deny list). AWS Comprehend has a cost of around £0.0075 ($0.01) per 10,000 characters.""", value =

|

| 260 |

|

| 261 |

if SHOW_COSTS == "True":

|

| 262 |

with gr.Accordion("Estimated costs and time taken. Note that costs shown only include direct usage of AWS services and do not include other running costs (e.g. storage, run-time costs)", open = True, visible=True):

|

|

@@ -303,7 +296,7 @@ with app:

|

|

| 303 |

|

| 304 |

with gr.Row():

|

| 305 |

redaction_output_summary_textbox = gr.Textbox(label="Output summary", scale=1)

|

| 306 |

-

output_file = gr.File(label="Output files", scale = 2)#, height=

|

| 307 |

latest_file_completed_text = gr.Number(value=0, label="Number of documents redacted", interactive=False, visible=False)

|

| 308 |

|

| 309 |

# Feedback elements are invisible until revealed by redaction action

|

|

@@ -318,8 +311,8 @@ with app:

|

|

| 318 |

with gr.Tab("Review redactions", id="tab_object_annotation"):

|

| 319 |

|

| 320 |

with gr.Accordion(label = "Review PDF redactions", open=True):

|

| 321 |

-

output_review_files = gr.File(label="Upload original PDF and 'review_file' csv here to review suggested redactions. The 'ocr_output' file can also be optionally provided for text search.", file_count='multiple', height=

|

| 322 |

-

upload_previous_review_file_btn = gr.Button("Review PDF and '

|

| 323 |

with gr.Row():

|

| 324 |

annotate_zoom_in = gr.Button("Zoom in", visible=False)

|

| 325 |

annotate_zoom_out = gr.Button("Zoom out", visible=False)

|

|

@@ -368,19 +361,18 @@ with app:

|

|

| 368 |

recogniser_entity_dropdown = gr.Dropdown(label="Redaction category", value="ALL", allow_custom_value=True)

|

| 369 |

page_entity_dropdown = gr.Dropdown(label="Page", value="ALL", allow_custom_value=True)

|

| 370 |

text_entity_dropdown = gr.Dropdown(label="Text", value="ALL", allow_custom_value=True)

|

| 371 |

-

|

|

|

|

| 372 |

|

| 373 |

-

with gr.Row(equal_height=True):

|

| 374 |

-

|

| 375 |

-

exclude_selected_btn = gr.Button(value="Exclude all items in table from redactions")

|

| 376 |

-

with gr.Row(equal_height=True):

|

| 377 |

-

reset_dropdowns_btn = gr.Button(value="Reset filters")

|

| 378 |

-

|

| 379 |

-

undo_last_removal_btn = gr.Button(value="Undo last element removal")

|

| 380 |

|

| 381 |

-

|

| 382 |

-

|

| 383 |

-

|

|

|

|

|

|

|

|

|

|

| 384 |

|

| 385 |

with gr.Accordion("Search all extracted text", open=True):

|

| 386 |

all_line_level_ocr_results_df = gr.Dataframe(value=pd.DataFrame(), headers=["page", "text"], col_count=(2, 'fixed'), row_count = (0, "dynamic"), label="All OCR results", visible=True, type="pandas", wrap=True, show_fullscreen_button=True, show_search='filter', show_label=False, show_copy_button=True, max_height=400)

|

|

@@ -396,12 +388,12 @@ with app:

|

|

| 396 |

###

|

| 397 |

with gr.Tab(label="Identify duplicate pages"):

|

| 398 |

with gr.Accordion("Identify duplicate pages to redact", open = True):

|

| 399 |

-

in_duplicate_pages = gr.File(label="Upload multiple 'ocr_output.csv' data files from redaction jobs here to compare", file_count="multiple", height=

|

| 400 |

with gr.Row():

|

| 401 |

duplicate_threshold_value = gr.Number(value=0.9, label="Minimum similarity to be considered a duplicate (maximum = 1)", scale =1)

|

| 402 |

find_duplicate_pages_btn = gr.Button(value="Identify duplicate pages", variant="primary", scale = 4)

|

| 403 |

|

| 404 |

-

duplicate_pages_out = gr.File(label="Duplicate pages analysis output", file_count="multiple", height=

|

| 405 |

|

| 406 |

###

|

| 407 |

# TEXT / TABULAR DATA TAB

|

|

@@ -411,13 +403,13 @@ with app:

|

|

| 411 |

with gr.Accordion("Redact open text", open = False):

|

| 412 |

in_text = gr.Textbox(label="Enter open text", lines=10)

|

| 413 |

with gr.Accordion("Upload xlsx or csv files", open = True):

|

| 414 |

-

in_data_files = gr.File(label="Choose Excel or csv files", file_count= "multiple", file_types=['.xlsx', '.xls', '.csv', '.parquet', '.csv.gz'], height=

|

| 415 |

|

| 416 |

in_excel_sheets = gr.Dropdown(choices=["Choose Excel sheets to anonymise"], multiselect = True, label="Select Excel sheets that you want to anonymise (showing sheets present across all Excel files).", visible=False, allow_custom_value=True)

|

| 417 |

|

| 418 |

in_colnames = gr.Dropdown(choices=["Choose columns to anonymise"], multiselect = True, label="Select columns that you want to anonymise (showing columns present across all files).")

|

| 419 |

|

| 420 |

-

pii_identification_method_drop_tabular = gr.Radio(label = "Choose PII detection method. AWS Comprehend has a cost of approximately $0.01 per 10,000 characters.", value =

|

| 421 |

|

| 422 |

with gr.Accordion("Anonymisation output format", open = False):

|

| 423 |

anon_strat = gr.Radio(choices=["replace with 'REDACTED'", "replace with <ENTITY_NAME>", "redact completely", "hash", "mask"], label="Select an anonymisation method.", value = "replace with 'REDACTED'") # , "encrypt", "fake_first_name" are also available, but are not currently included as not that useful in current form

|

|

@@ -443,13 +435,13 @@ with app:

|

|

| 443 |

with gr.Accordion("Custom allow, deny, and full page redaction lists", open = True):

|

| 444 |

with gr.Row():

|

| 445 |

with gr.Column():

|

| 446 |

-

in_allow_list = gr.File(label="Import allow list file - csv table with one column of a different word/phrase on each row (case insensitive). Terms in this file will not be redacted.", file_count="multiple", height=

|

| 447 |

in_allow_list_text = gr.Textbox(label="Custom allow list load status")

|

| 448 |

with gr.Column():

|

| 449 |

-

in_deny_list = gr.File(label="Import custom deny list - csv table with one column of a different word/phrase on each row (case insensitive). Terms in this file will always be redacted.", file_count="multiple", height=

|

| 450 |

in_deny_list_text = gr.Textbox(label="Custom deny list load status")

|

| 451 |

with gr.Column():

|

| 452 |

-

in_fully_redacted_list = gr.File(label="Import fully redacted pages list - csv table with one column of page numbers on each row. Page numbers in this file will be fully redacted.", file_count="multiple", height=

|

| 453 |

in_fully_redacted_list_text = gr.Textbox(label="Fully redacted page list load status")

|

| 454 |

with gr.Accordion("Manually modify custom allow, deny, and full page redaction lists (NOTE: you need to press Enter after modifying/adding an entry to the lists to apply them)", open = False):

|

| 455 |

with gr.Row():

|

|

@@ -458,8 +450,8 @@ with app:

|

|

| 458 |

in_fully_redacted_list_state = gr.Dataframe(value=pd.DataFrame(), headers=["fully_redacted_pages_list"], col_count=(1, "fixed"), row_count = (0, "dynamic"), label="Fully redacted pages", visible=True, type="pandas", interactive=True, show_fullscreen_button=True, show_copy_button=True, datatype='number', wrap=True)

|

| 459 |

|

| 460 |

with gr.Accordion("Select entity types to redact", open = True):

|

| 461 |

-

in_redact_entities = gr.Dropdown(value=

|

| 462 |

-

in_redact_comprehend_entities = gr.Dropdown(value=

|

| 463 |

|

| 464 |

with gr.Row():

|

| 465 |

max_fuzzy_spelling_mistakes_num = gr.Number(label="Maximum number of spelling mistakes allowed for fuzzy matching (CUSTOM_FUZZY entity).", value=1, minimum=0, maximum=9, precision=0)

|

|

@@ -478,8 +470,6 @@ with app:

|

|

| 478 |

aws_access_key_textbox = gr.Textbox(value='', label="AWS access key for account with permissions for AWS Textract and Comprehend", visible=True, type="password")

|

| 479 |

aws_secret_key_textbox = gr.Textbox(value='', label="AWS secret key for account with permissions for AWS Textract and Comprehend", visible=True, type="password")

|

| 480 |

|

| 481 |

-

|

| 482 |

-

|

| 483 |

with gr.Accordion("Log file outputs", open = False):

|

| 484 |

log_files_output = gr.File(label="Log file output", interactive=False)

|

| 485 |

|

|

@@ -492,7 +482,7 @@ with app:

|

|

| 492 |

all_output_files = gr.File(label="All files in output folder", file_count='multiple', file_types=['.csv'], interactive=False)

|

| 493 |

|

| 494 |

###

|

| 495 |

-

|

| 496 |

###

|

| 497 |

|

| 498 |

###

|

|

@@ -565,7 +555,6 @@ with app:

|

|

| 565 |

|

| 566 |

textract_job_detail_df.select(df_select_callback_textract_api, inputs=[textract_output_found_checkbox], outputs=[job_id_textbox, job_type_dropdown, selected_job_id_row])

|

| 567 |

|

| 568 |

-

|

| 569 |

convert_textract_outputs_to_ocr_results.click(replace_existing_pdf_input_for_whole_document_outputs, inputs = [s3_whole_document_textract_input_subfolder, doc_file_name_no_extension_textbox, output_folder_textbox, s3_whole_document_textract_default_bucket, in_doc_files, input_folder_textbox], outputs = [in_doc_files, doc_file_name_no_extension_textbox, doc_file_name_with_extension_textbox, doc_full_file_name_textbox, doc_file_name_textbox_list, total_pdf_page_count]).\

|

| 570 |

success(fn = prepare_image_or_pdf, inputs=[in_doc_files, text_extract_method_radio, latest_file_completed_text, redaction_output_summary_textbox, first_loop_state, annotate_max_pages, all_image_annotations_state, prepare_for_review_bool_false, in_fully_redacted_list_state, output_folder_textbox, input_folder_textbox, prepare_images_bool_false], outputs=[redaction_output_summary_textbox, prepared_pdf_state, images_pdf_state, annotate_max_pages, annotate_max_pages_bottom, pdf_doc_state, all_image_annotations_state, review_file_state, document_cropboxes, page_sizes, textract_output_found_checkbox, all_img_details_state, all_line_level_ocr_results_df_base, local_ocr_output_found_checkbox]).\

|

| 571 |

success(fn=check_for_existing_textract_file, inputs=[doc_file_name_no_extension_textbox, output_folder_textbox], outputs=[textract_output_found_checkbox]).\

|

|

@@ -587,17 +576,34 @@ with app:

|

|

| 587 |

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number, review_file_state, page_sizes, doc_full_file_name_textbox, input_folder_textbox], outputs = [annotator, annotate_current_page, annotate_current_page_bottom, annotate_previous_page, recogniser_entity_dropdown, recogniser_entity_dataframe, recogniser_entity_dataframe_base, text_entity_dropdown, page_entity_dropdown, page_sizes, all_image_annotations_state])

|

| 588 |

|

| 589 |

# Page number controls

|

| 590 |

-

annotate_current_page.

|

| 591 |

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number, review_file_state, page_sizes, doc_full_file_name_textbox, input_folder_textbox], outputs = [annotator, annotate_current_page, annotate_current_page_bottom, annotate_previous_page, recogniser_entity_dropdown, recogniser_entity_dataframe, recogniser_entity_dataframe_base, text_entity_dropdown, page_entity_dropdown, page_sizes, all_image_annotations_state]).\

|

| 592 |

success(apply_redactions_to_review_df_and_files, inputs=[annotator, doc_full_file_name_textbox, pdf_doc_state, all_image_annotations_state, annotate_current_page, review_file_state, output_folder_textbox, do_not_save_pdf_state, page_sizes], outputs=[pdf_doc_state, all_image_annotations_state, output_review_files, log_files_output, review_file_state])

|

| 593 |

|

| 594 |

-

annotation_last_page_button.click(fn=decrease_page, inputs=[annotate_current_page], outputs=[annotate_current_page, annotate_current_page_bottom])

|

| 595 |

-

|

|

|

|

|

|

|

| 596 |

|

| 597 |

-

|

| 598 |

-

|

|

|

|

|

|

|

| 599 |

|

| 600 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 601 |

|

| 602 |

# Apply page redactions

|

| 603 |

annotation_button_apply.click(apply_redactions_to_review_df_and_files, inputs=[annotator, doc_full_file_name_textbox, pdf_doc_state, all_image_annotations_state, annotate_current_page, review_file_state, output_folder_textbox, save_pdf_state, page_sizes], outputs=[pdf_doc_state, all_image_annotations_state, output_review_files, log_files_output, review_file_state], scroll_to_output=True)

|

|

@@ -613,7 +619,7 @@ with app:

|

|

| 613 |

text_entity_dropdown.select(update_entities_df_text, inputs=[text_entity_dropdown, recogniser_entity_dataframe_base, recogniser_entity_dropdown, page_entity_dropdown], outputs=[recogniser_entity_dataframe, recogniser_entity_dropdown, page_entity_dropdown])

|

| 614 |

|

| 615 |

# Clicking on a cell in the recogniser entity dataframe will take you to that page, and also highlight the target redaction box in blue

|

| 616 |

-

recogniser_entity_dataframe.select(

|

| 617 |

success(update_selected_review_df_row_colour, inputs=[selected_entity_dataframe_row, review_file_state, selected_entity_id, selected_entity_colour], outputs=[review_file_state, selected_entity_id, selected_entity_colour]).\

|

| 618 |

success(update_annotator_page_from_review_df, inputs=[review_file_state, images_pdf_state, page_sizes, all_image_annotations_state, annotator, selected_entity_dataframe_row, input_folder_textbox, doc_full_file_name_textbox], outputs=[annotator, all_image_annotations_state, annotate_current_page, page_sizes, review_file_state, annotate_previous_page])

|

| 619 |

|

|

@@ -621,25 +627,32 @@ with app:

|

|

| 621 |

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number, review_file_state, page_sizes, doc_full_file_name_textbox, input_folder_textbox], outputs = [annotator, annotate_current_page, annotate_current_page_bottom, annotate_previous_page, recogniser_entity_dropdown, recogniser_entity_dataframe, recogniser_entity_dataframe_base, text_entity_dropdown, page_entity_dropdown, page_sizes, all_image_annotations_state])

|

| 622 |

|

| 623 |

# Exclude current selection from annotator and outputs

|

| 624 |

-

# Exclude only row

|

| 625 |

exclude_selected_row_btn.click(exclude_selected_items_from_redaction, inputs=[review_file_state, selected_entity_dataframe_row, images_pdf_state, page_sizes, all_image_annotations_state, recogniser_entity_dataframe_base], outputs=[review_file_state, all_image_annotations_state, recogniser_entity_dataframe_base, backup_review_state, backup_image_annotations_state, backup_recogniser_entity_dataframe_base]).\

|

| 626 |

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number, review_file_state, page_sizes, doc_full_file_name_textbox, input_folder_textbox], outputs = [annotator, annotate_current_page, annotate_current_page_bottom, annotate_previous_page, recogniser_entity_dropdown, recogniser_entity_dataframe, recogniser_entity_dataframe_base, text_entity_dropdown, page_entity_dropdown, page_sizes, all_image_annotations_state]).\

|

| 627 |

success(apply_redactions_to_review_df_and_files, inputs=[annotator, doc_full_file_name_textbox, pdf_doc_state, all_image_annotations_state, annotate_current_page, review_file_state, output_folder_textbox, do_not_save_pdf_state, page_sizes], outputs=[pdf_doc_state, all_image_annotations_state, output_review_files, log_files_output, review_file_state]).\

|

| 628 |

success(update_all_entity_df_dropdowns, inputs=[recogniser_entity_dataframe_base, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown], outputs=[recogniser_entity_dropdown, text_entity_dropdown, page_entity_dropdown])

|

| 629 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 630 |

# Exclude everything visible in table

|

| 631 |

exclude_selected_btn.click(exclude_selected_items_from_redaction, inputs=[review_file_state, recogniser_entity_dataframe, images_pdf_state, page_sizes, all_image_annotations_state, recogniser_entity_dataframe_base], outputs=[review_file_state, all_image_annotations_state, recogniser_entity_dataframe_base, backup_review_state, backup_image_annotations_state, backup_recogniser_entity_dataframe_base]).\

|

| 632 |

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number, review_file_state, page_sizes, doc_full_file_name_textbox, input_folder_textbox], outputs = [annotator, annotate_current_page, annotate_current_page_bottom, annotate_previous_page, recogniser_entity_dropdown, recogniser_entity_dataframe, recogniser_entity_dataframe_base, text_entity_dropdown, page_entity_dropdown, page_sizes, all_image_annotations_state]).\

|

| 633 |

success(apply_redactions_to_review_df_and_files, inputs=[annotator, doc_full_file_name_textbox, pdf_doc_state, all_image_annotations_state, annotate_current_page, review_file_state, output_folder_textbox, do_not_save_pdf_state, page_sizes], outputs=[pdf_doc_state, all_image_annotations_state, output_review_files, log_files_output, review_file_state]).\

|

| 634 |

success(update_all_entity_df_dropdowns, inputs=[recogniser_entity_dataframe_base, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown], outputs=[recogniser_entity_dropdown, text_entity_dropdown, page_entity_dropdown])

|

|

|

|

|

|

|

| 635 |

|

| 636 |

undo_last_removal_btn.click(undo_last_removal, inputs=[backup_review_state, backup_image_annotations_state, backup_recogniser_entity_dataframe_base], outputs=[review_file_state, all_image_annotations_state, recogniser_entity_dataframe_base]).\

|

| 637 |

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number, review_file_state, page_sizes, doc_full_file_name_textbox, input_folder_textbox], outputs = [annotator, annotate_current_page, annotate_current_page_bottom, annotate_previous_page, recogniser_entity_dropdown, recogniser_entity_dataframe, recogniser_entity_dataframe_base, text_entity_dropdown, page_entity_dropdown, page_sizes, all_image_annotations_state]).\

|

| 638 |

success(apply_redactions_to_review_df_and_files, inputs=[annotator, doc_full_file_name_textbox, pdf_doc_state, all_image_annotations_state, annotate_current_page, review_file_state, output_folder_textbox, do_not_save_pdf_state, page_sizes], outputs=[pdf_doc_state, all_image_annotations_state, output_review_files, log_files_output, review_file_state])

|

| 639 |

-

|

| 640 |

-

|

| 641 |

-

|

| 642 |

-

# Review OCR text buttom

|

| 643 |

all_line_level_ocr_results_df.select(df_select_callback_ocr, inputs=[all_line_level_ocr_results_df], outputs=[annotate_current_page, selected_entity_dataframe_row])

|

| 644 |

reset_all_ocr_results_btn.click(reset_ocr_base_dataframe, inputs=[all_line_level_ocr_results_df_base], outputs=[all_line_level_ocr_results_df])

|

| 645 |

|

|

@@ -737,7 +750,7 @@ with app:

|

|

| 737 |

|

| 738 |

### ACCESS LOGS

|

| 739 |

# Log usernames and times of access to file (to know who is using the app when running on AWS)

|

| 740 |

-

access_callback = CSVLogger_custom(dataset_file_name=

|

| 741 |

access_callback.setup([session_hash_textbox, host_name_textbox], ACCESS_LOGS_FOLDER)

|

| 742 |

session_hash_textbox.change(lambda *args: access_callback.flag(list(args), save_to_csv=SAVE_LOGS_TO_CSV, save_to_dynamodb=SAVE_LOGS_TO_DYNAMODB, dynamodb_table_name=ACCESS_LOG_DYNAMODB_TABLE_NAME, dynamodb_headers=DYNAMODB_ACCESS_LOG_HEADERS, replacement_headers=CSV_ACCESS_LOG_HEADERS), [session_hash_textbox, host_name_textbox], None, preprocess=False).\

|

| 743 |

success(fn = upload_log_file_to_s3, inputs=[access_logs_state, access_s3_logs_loc_state], outputs=[s3_logs_output_textbox])

|

|

@@ -745,25 +758,25 @@ with app:

|

|

| 745 |

### FEEDBACK LOGS

|

| 746 |

if DISPLAY_FILE_NAMES_IN_LOGS == 'True':

|

| 747 |

# User submitted feedback for pdf redactions

|

| 748 |

-

pdf_callback = CSVLogger_custom(dataset_file_name=

|

| 749 |

pdf_callback.setup([pdf_feedback_radio, pdf_further_details_text, doc_file_name_no_extension_textbox], FEEDBACK_LOGS_FOLDER)

|

| 750 |

pdf_submit_feedback_btn.click(lambda *args: pdf_callback.flag(list(args), save_to_csv=SAVE_LOGS_TO_CSV, save_to_dynamodb=SAVE_LOGS_TO_DYNAMODB, dynamodb_table_name=FEEDBACK_LOG_DYNAMODB_TABLE_NAME, dynamodb_headers=DYNAMODB_FEEDBACK_LOG_HEADERS, replacement_headers=CSV_FEEDBACK_LOG_HEADERS), [pdf_feedback_radio, pdf_further_details_text, doc_file_name_no_extension_textbox], None, preprocess=False).\

|

| 751 |

success(fn = upload_log_file_to_s3, inputs=[feedback_logs_state, feedback_s3_logs_loc_state], outputs=[pdf_further_details_text])

|

| 752 |

|

| 753 |

# User submitted feedback for data redactions

|

| 754 |

-

data_callback = CSVLogger_custom(dataset_file_name=

|

| 755 |

data_callback.setup([data_feedback_radio, data_further_details_text, data_full_file_name_textbox], FEEDBACK_LOGS_FOLDER)

|

| 756 |

data_submit_feedback_btn.click(lambda *args: data_callback.flag(list(args), save_to_csv=SAVE_LOGS_TO_CSV, save_to_dynamodb=SAVE_LOGS_TO_DYNAMODB, dynamodb_table_name=FEEDBACK_LOG_DYNAMODB_TABLE_NAME, dynamodb_headers=DYNAMODB_FEEDBACK_LOG_HEADERS, replacement_headers=CSV_FEEDBACK_LOG_HEADERS), [data_feedback_radio, data_further_details_text, data_full_file_name_textbox], None, preprocess=False).\

|

| 757 |

success(fn = upload_log_file_to_s3, inputs=[feedback_logs_state, feedback_s3_logs_loc_state], outputs=[data_further_details_text])

|

| 758 |

else:

|

| 759 |

# User submitted feedback for pdf redactions

|

| 760 |

-

pdf_callback = CSVLogger_custom(dataset_file_name=

|

| 761 |

pdf_callback.setup([pdf_feedback_radio, pdf_further_details_text, doc_file_name_no_extension_textbox], FEEDBACK_LOGS_FOLDER)

|

| 762 |

pdf_submit_feedback_btn.click(lambda *args: pdf_callback.flag(list(args), save_to_csv=SAVE_LOGS_TO_CSV, save_to_dynamodb=SAVE_LOGS_TO_DYNAMODB, dynamodb_table_name=FEEDBACK_LOG_DYNAMODB_TABLE_NAME, dynamodb_headers=DYNAMODB_FEEDBACK_LOG_HEADERS, replacement_headers=CSV_FEEDBACK_LOG_HEADERS), [pdf_feedback_radio, pdf_further_details_text, placeholder_doc_file_name_no_extension_textbox_for_logs], None, preprocess=False).\

|

| 763 |

success(fn = upload_log_file_to_s3, inputs=[feedback_logs_state, feedback_s3_logs_loc_state], outputs=[pdf_further_details_text])

|

| 764 |

|

| 765 |

# User submitted feedback for data redactions

|

| 766 |

-

data_callback = CSVLogger_custom(dataset_file_name=

|

| 767 |

data_callback.setup([data_feedback_radio, data_further_details_text, data_full_file_name_textbox], FEEDBACK_LOGS_FOLDER)

|

| 768 |

data_submit_feedback_btn.click(lambda *args: data_callback.flag(list(args), save_to_csv=SAVE_LOGS_TO_CSV, save_to_dynamodb=SAVE_LOGS_TO_DYNAMODB, dynamodb_table_name=FEEDBACK_LOG_DYNAMODB_TABLE_NAME, dynamodb_headers=DYNAMODB_FEEDBACK_LOG_HEADERS, replacement_headers=CSV_FEEDBACK_LOG_HEADERS), [data_feedback_radio, data_further_details_text, placeholder_data_file_name_no_extension_textbox_for_logs], None, preprocess=False).\

|

| 769 |

success(fn = upload_log_file_to_s3, inputs=[feedback_logs_state, feedback_s3_logs_loc_state], outputs=[data_further_details_text])

|

|

@@ -771,7 +784,7 @@ with app:

|

|

| 771 |

### USAGE LOGS

|

| 772 |

# Log processing usage - time taken for redaction queries, and also logs for queries to Textract/Comprehend

|

| 773 |

|

| 774 |

-

usage_callback = CSVLogger_custom(dataset_file_name=

|

| 775 |

|

| 776 |

if DISPLAY_FILE_NAMES_IN_LOGS == 'True':

|

| 777 |

usage_callback.setup([session_hash_textbox, doc_file_name_no_extension_textbox, data_full_file_name_textbox, total_pdf_page_count, actual_time_taken_number, textract_query_number, pii_identification_method_drop, comprehend_query_number, cost_code_choice_drop, handwrite_signature_checkbox, host_name_textbox, text_extract_method_radio, is_a_textract_api_call], USAGE_LOGS_FOLDER)

|

|

@@ -809,7 +822,7 @@ if __name__ == "__main__":

|

|

| 809 |

|

| 810 |

main(first_loop_state, latest_file_completed=0, redaction_output_summary_textbox="", output_file_list=None,

|

| 811 |

log_files_list=None, estimated_time=0, textract_metadata="", comprehend_query_num=0,

|

| 812 |

-

current_loop_page=0, page_break=False, pdf_doc_state = [], all_image_annotations = [], all_line_level_ocr_results_df = pd.DataFrame(), all_decision_process_table = pd.DataFrame(),

|

| 813 |

|

| 814 |

# AWS options - placeholder for possibility of storing data on s3 and retrieving it in app

|

| 815 |

# with gr.Tab(label="Advanced options"):

|

|

|

|

| 1 |

import os

|

|

|

|

| 2 |

import pandas as pd

|

| 3 |

import gradio as gr

|

| 4 |

from gradio_image_annotation import image_annotator

|

| 5 |

+

from tools.config import OUTPUT_FOLDER, INPUT_FOLDER, RUN_DIRECT_MODE, MAX_QUEUE_SIZE, DEFAULT_CONCURRENCY_LIMIT, MAX_FILE_SIZE, GRADIO_SERVER_PORT, ROOT_PATH, GET_DEFAULT_ALLOW_LIST, ALLOW_LIST_PATH, S3_ALLOW_LIST_PATH, FEEDBACK_LOGS_FOLDER, ACCESS_LOGS_FOLDER, USAGE_LOGS_FOLDER, REDACTION_LANGUAGE, GET_COST_CODES, COST_CODES_PATH, S3_COST_CODES_PATH, ENFORCE_COST_CODES, DISPLAY_FILE_NAMES_IN_LOGS, SHOW_COSTS, RUN_AWS_FUNCTIONS, DOCUMENT_REDACTION_BUCKET, SHOW_WHOLE_DOCUMENT_TEXTRACT_CALL_OPTIONS, TEXTRACT_WHOLE_DOCUMENT_ANALYSIS_BUCKET, TEXTRACT_WHOLE_DOCUMENT_ANALYSIS_INPUT_SUBFOLDER, TEXTRACT_WHOLE_DOCUMENT_ANALYSIS_OUTPUT_SUBFOLDER, SESSION_OUTPUT_FOLDER, LOAD_PREVIOUS_TEXTRACT_JOBS_S3, TEXTRACT_JOBS_S3_LOC, TEXTRACT_JOBS_LOCAL_LOC, HOST_NAME, DEFAULT_COST_CODE, OUTPUT_COST_CODES_PATH, OUTPUT_ALLOW_LIST_PATH, COGNITO_AUTH, SAVE_LOGS_TO_CSV, SAVE_LOGS_TO_DYNAMODB, ACCESS_LOG_DYNAMODB_TABLE_NAME, DYNAMODB_ACCESS_LOG_HEADERS, CSV_ACCESS_LOG_HEADERS, FEEDBACK_LOG_DYNAMODB_TABLE_NAME, DYNAMODB_FEEDBACK_LOG_HEADERS, CSV_FEEDBACK_LOG_HEADERS, USAGE_LOG_DYNAMODB_TABLE_NAME, DYNAMODB_USAGE_LOG_HEADERS, CSV_USAGE_LOG_HEADERS, TEXTRACT_JOBS_S3_INPUT_LOC, TEXTRACT_TEXT_EXTRACT_OPTION, NO_REDACTION_PII_OPTION, TEXT_EXTRACTION_MODELS, PII_DETECTION_MODELS, DEFAULT_TEXT_EXTRACTION_MODEL, DEFAULT_PII_DETECTION_MODEL, LOG_FILE_NAME, CHOSEN_COMPREHEND_ENTITIES, FULL_COMPREHEND_ENTITY_LIST, CHOSEN_REDACT_ENTITIES, FULL_ENTITY_LIST, FILE_INPUT_HEIGHT, TABULAR_PII_DETECTION_MODELS

|

| 6 |

+

from tools.helper_functions import put_columns_in_df, get_connection_params, reveal_feedback_buttons, custom_regex_load, reset_state_vars, load_in_default_allow_list, reset_review_vars, merge_csv_files, load_all_output_files, update_dataframe, check_for_existing_textract_file, load_in_default_cost_codes, enforce_cost_codes, calculate_aws_costs, calculate_time_taken, reset_base_dataframe, reset_ocr_base_dataframe, update_cost_code_dataframe_from_dropdown_select, check_for_existing_local_ocr_file, reset_data_vars, reset_aws_call_vars

|

| 7 |

+

from tools.aws_functions import download_file_from_s3, upload_log_file_to_s3

|

|

|

|

| 8 |

from tools.file_redaction import choose_and_run_redactor

|

| 9 |

from tools.file_conversion import prepare_image_or_pdf, get_input_file_names

|

| 10 |

+

from tools.redaction_review import apply_redactions_to_review_df_and_files, update_all_page_annotation_object_based_on_previous_page, decrease_page, increase_page, update_annotator_object_and_filter_df, update_entities_df_recogniser_entities, update_entities_df_page, update_entities_df_text, df_select_callback_dataframe_row, convert_df_to_xfdf, convert_xfdf_to_dataframe, reset_dropdowns, exclude_selected_items_from_redaction, undo_last_removal, update_selected_review_df_row_colour, update_all_entity_df_dropdowns, df_select_callback_cost, update_other_annotator_number_from_current, update_annotator_page_from_review_df, df_select_callback_ocr, df_select_callback_textract_api, get_all_rows_with_same_text

|

| 11 |

from tools.data_anonymise import anonymise_data_files

|

| 12 |

from tools.auth import authenticate_user

|

| 13 |

from tools.load_spacy_model_custom_recognisers import custom_entities

|

|

|

|

| 18 |

# Suppress downcasting warnings

|

| 19 |

pd.set_option('future.no_silent_downcasting', True)

|

| 20 |

|

| 21 |

+

# Convert string environment variables to string or list

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 22 |

SAVE_LOGS_TO_CSV = eval(SAVE_LOGS_TO_CSV)

|

| 23 |

SAVE_LOGS_TO_DYNAMODB = eval(SAVE_LOGS_TO_DYNAMODB)

|

| 24 |

|

|

|

|

| 30 |

if DYNAMODB_FEEDBACK_LOG_HEADERS: DYNAMODB_FEEDBACK_LOG_HEADERS = eval(DYNAMODB_FEEDBACK_LOG_HEADERS)

|

| 31 |

if DYNAMODB_USAGE_LOG_HEADERS: DYNAMODB_USAGE_LOG_HEADERS = eval(DYNAMODB_USAGE_LOG_HEADERS)

|

| 32 |

|

| 33 |

+

if CHOSEN_COMPREHEND_ENTITIES: CHOSEN_COMPREHEND_ENTITIES = eval(CHOSEN_COMPREHEND_ENTITIES)

|

| 34 |

+

if FULL_COMPREHEND_ENTITY_LIST: FULL_COMPREHEND_ENTITY_LIST = eval(FULL_COMPREHEND_ENTITY_LIST)

|

| 35 |

+

if CHOSEN_REDACT_ENTITIES: CHOSEN_REDACT_ENTITIES = eval(CHOSEN_REDACT_ENTITIES)

|

| 36 |

+

if FULL_ENTITY_LIST: FULL_ENTITY_LIST = eval(FULL_ENTITY_LIST)

|

| 37 |

+

|

| 38 |

+

# Add custom spacy recognisers to the Comprehend list, so that local Spacy model can be used to pick up e.g. titles, streetnames, UK postcodes that are sometimes missed by comprehend

|

| 39 |

+

CHOSEN_COMPREHEND_ENTITIES.extend(custom_entities)

|

| 40 |

+

FULL_COMPREHEND_ENTITY_LIST.extend(custom_entities)

|

| 41 |

+

|

| 42 |

+

FILE_INPUT_HEIGHT = int(FILE_INPUT_HEIGHT)

|

| 43 |

+

|

| 44 |

# Create the gradio interface

|

| 45 |

app = gr.Blocks(theme = gr.themes.Base(), fill_width=True)

|

| 46 |

|

|

|

|

| 52 |

|

| 53 |

# Pymupdf doc and all image annotations objects need to be stored as State objects as they do not have a standard Gradio component equivalent

|

| 54 |

pdf_doc_state = gr.State([])

|

| 55 |

+

all_image_annotations_state = gr.State([])

|

|

|

|

| 56 |

|

| 57 |

all_decision_process_table_state = gr.Dataframe(value=pd.DataFrame(), headers=None, col_count=0, row_count = (0, "dynamic"), label="all_decision_process_table", visible=False, type="pandas", wrap=True)

|

| 58 |

review_file_state = gr.Dataframe(value=pd.DataFrame(), headers=None, col_count=0, row_count = (0, "dynamic"), label="review_file_df", visible=False, type="pandas", wrap=True)

|

|

|

|

| 90 |

backup_recogniser_entity_dataframe_base = gr.Dataframe(visible=False)

|

| 91 |

|

| 92 |

# Logging state

|

| 93 |

+

feedback_logs_state = gr.Textbox(label= "feedback_logs_state", value=FEEDBACK_LOGS_FOLDER + LOG_FILE_NAME, visible=False)

|

| 94 |

feedback_s3_logs_loc_state = gr.Textbox(label= "feedback_s3_logs_loc_state", value=FEEDBACK_LOGS_FOLDER, visible=False)

|

| 95 |

+

access_logs_state = gr.Textbox(label= "access_logs_state", value=ACCESS_LOGS_FOLDER + LOG_FILE_NAME, visible=False)

|

| 96 |

access_s3_logs_loc_state = gr.Textbox(label= "access_s3_logs_loc_state", value=ACCESS_LOGS_FOLDER, visible=False)

|

| 97 |

+

usage_logs_state = gr.Textbox(label= "usage_logs_state", value=USAGE_LOGS_FOLDER + LOG_FILE_NAME, visible=False)

|

| 98 |

usage_s3_logs_loc_state = gr.Textbox(label= "usage_s3_logs_loc_state", value=USAGE_LOGS_FOLDER, visible=False)

|

| 99 |

|

| 100 |

session_hash_textbox = gr.Textbox(label= "session_hash_textbox", value="", visible=False)

|

|

|

|

| 157 |

s3_whole_document_textract_input_subfolder = gr.Textbox(label = "Default Textract whole_document S3 input folder", value=TEXTRACT_WHOLE_DOCUMENT_ANALYSIS_INPUT_SUBFOLDER, visible=False)

|

| 158 |

s3_whole_document_textract_output_subfolder = gr.Textbox(label = "Default Textract whole_document S3 output folder", value=TEXTRACT_WHOLE_DOCUMENT_ANALYSIS_OUTPUT_SUBFOLDER, visible=False)

|

| 159 |

successful_textract_api_call_number = gr.Number(precision=0, value=0, visible=False)

|

| 160 |

+

no_redaction_method_drop = gr.Radio(label = """Placeholder for no redaction method after downloading Textract outputs""", value = NO_REDACTION_PII_OPTION, choices=[NO_REDACTION_PII_OPTION], visible=False)

|

| 161 |

+

textract_only_method_drop = gr.Radio(label="""Placeholder for Textract method after downloading Textract outputs""", value = TEXTRACT_TEXT_EXTRACT_OPTION, choices=[TEXTRACT_TEXT_EXTRACT_OPTION], visible=False)

|

| 162 |

|

| 163 |

load_s3_whole_document_textract_logs_bool = gr.Textbox(label = "Load Textract logs or not", value=LOAD_PREVIOUS_TEXTRACT_JOBS_S3, visible=False)

|

| 164 |

s3_whole_document_textract_logs_subfolder = gr.Textbox(label = "Default Textract whole_document S3 input folder", value=TEXTRACT_JOBS_S3_LOC, visible=False)

|

|

|

|

| 175 |

all_line_level_ocr_results_df_placeholder = gr.Dataframe(visible=False)

|

| 176 |

cost_code_dataframe_base = gr.Dataframe(value=pd.DataFrame(), row_count = (0, "dynamic"), label="Cost codes", type="pandas", interactive=True, show_fullscreen_button=True, show_copy_button=True, show_search='filter', wrap=True, max_height=200, visible=False)

|

| 177 |

|

| 178 |

+

# Placeholder for selected entity dataframe row

|

| 179 |

+

selected_entity_id = gr.Textbox(value="", label="selected_entity_id", visible=False)

|

| 180 |

+

selected_entity_colour = gr.Textbox(value="", label="selected_entity_colour", visible=False)

|

| 181 |

+

selected_entity_dataframe_row_text = gr.Textbox(value="", label="selected_entity_dataframe_row_text", visible=False)

|

| 182 |

+

|

| 183 |

+

# This is an invisible dataframe that holds all items from the redaction outputs that have the same text as the selected row

|

| 184 |

+

recogniser_entity_dataframe_same_text = gr.Dataframe(pd.DataFrame(data={"page":[], "label":[], "text":[], "id":[]}), col_count=(4,"fixed"), type="pandas", label="Click table row to select and go to page", headers=["page", "label", "text", "id"], show_fullscreen_button=True, wrap=True, max_height=400, static_columns=[0,1,2,3], visible=False)

|

| 185 |

+

|

| 186 |

# Duplicate page detection

|

| 187 |

in_duplicate_pages_text = gr.Textbox(label="in_duplicate_pages_text", visible=False)

|

| 188 |

duplicate_pages_df = gr.Dataframe(value=pd.DataFrame(), headers=None, col_count=0, row_count = (0, "dynamic"), label="duplicate_pages_df", visible=False, type="pandas", wrap=True)

|

|

|

|

| 218 |

job_output_textbox = gr.Textbox(value="", label="Textract call outputs", visible=False)

|

| 219 |

job_input_textbox = gr.Textbox(value=TEXTRACT_JOBS_S3_INPUT_LOC, label="Textract call outputs", visible=False)

|

| 220 |

|

| 221 |

+

textract_job_output_file = gr.File(label="Textract job output files", height=FILE_INPUT_HEIGHT, visible=False)

|

| 222 |

convert_textract_outputs_to_ocr_results = gr.Button("Placeholder - Convert Textract job outputs to OCR results (needs relevant document file uploaded above)", variant="secondary", visible=False)

|

| 223 |

|

| 224 |

###

|

|

|

|

| 241 |

###

|

| 242 |

with gr.Tab("Redact PDFs/images"):

|

| 243 |

with gr.Accordion("Redact document", open = True):

|

| 244 |

+

in_doc_files = gr.File(label="Choose a document or image file (PDF, JPG, PNG)", file_count= "multiple", file_types=['.pdf', '.jpg', '.png', '.json', '.zip'], height=FILE_INPUT_HEIGHT)

|

| 245 |

|

| 246 |

+

text_extract_method_radio = gr.Radio(label="""Choose text extraction method. Local options are lower quality but cost nothing - they may be worth a try if you are willing to spend some time reviewing outputs. AWS Textract has a cost per page - £2.66 ($3.50) per 1,000 pages with signature detection (default), £1.14 ($1.50) without. Change the settings in the tab below (AWS Textract signature detection) to change this.""", value = DEFAULT_TEXT_EXTRACTION_MODEL, choices=TEXT_EXTRACTION_MODELS)

|

| 247 |

|

| 248 |

with gr.Accordion("AWS Textract signature detection (default is on)", open = False):

|

| 249 |

handwrite_signature_checkbox = gr.CheckboxGroup(label="AWS Textract extraction settings", choices=["Extract handwriting", "Extract signatures"], value=["Extract handwriting", "Extract signatures"])

|

| 250 |

|

| 251 |

with gr.Row(equal_height=True):

|

| 252 |

+

pii_identification_method_drop = gr.Radio(label = """Choose personal information detection method. The local model is lower quality but costs nothing - it may be worth a try if you are willing to spend some time reviewing outputs, or if you are only interested in searching for custom search terms (see Redaction settings - custom deny list). AWS Comprehend has a cost of around £0.0075 ($0.01) per 10,000 characters.""", value = DEFAULT_PII_DETECTION_MODEL, choices=PII_DETECTION_MODELS)

|

| 253 |

|

| 254 |

if SHOW_COSTS == "True":

|

| 255 |

with gr.Accordion("Estimated costs and time taken. Note that costs shown only include direct usage of AWS services and do not include other running costs (e.g. storage, run-time costs)", open = True, visible=True):

|

|

|

|

| 296 |

|

| 297 |

with gr.Row():

|

| 298 |

redaction_output_summary_textbox = gr.Textbox(label="Output summary", scale=1)

|

| 299 |

+

output_file = gr.File(label="Output files", scale = 2)#, height=FILE_INPUT_HEIGHT)

|

| 300 |

latest_file_completed_text = gr.Number(value=0, label="Number of documents redacted", interactive=False, visible=False)

|

| 301 |

|

| 302 |

# Feedback elements are invisible until revealed by redaction action

|

|

|

|

| 311 |

with gr.Tab("Review redactions", id="tab_object_annotation"):

|

| 312 |

|

| 313 |

with gr.Accordion(label = "Review PDF redactions", open=True):

|

| 314 |

+

output_review_files = gr.File(label="Upload original PDF and 'review_file' csv here to review suggested redactions. The 'ocr_output' file can also be optionally provided for text search.", file_count='multiple', height=FILE_INPUT_HEIGHT)

|

| 315 |

+

upload_previous_review_file_btn = gr.Button("Review redactions based on original PDF and 'review_file' csv provided above ('ocr_output' csv optional)", variant="secondary")

|

| 316 |

with gr.Row():

|

| 317 |

annotate_zoom_in = gr.Button("Zoom in", visible=False)

|

| 318 |

annotate_zoom_out = gr.Button("Zoom out", visible=False)

|

|

|

|

| 361 |

recogniser_entity_dropdown = gr.Dropdown(label="Redaction category", value="ALL", allow_custom_value=True)

|

| 362 |

page_entity_dropdown = gr.Dropdown(label="Page", value="ALL", allow_custom_value=True)

|

| 363 |

text_entity_dropdown = gr.Dropdown(label="Text", value="ALL", allow_custom_value=True)

|

| 364 |

+

reset_dropdowns_btn = gr.Button(value="Reset filters")

|

| 365 |

+

recogniser_entity_dataframe = gr.Dataframe(pd.DataFrame(data={"page":[], "label":[], "text":[], "id":[]}), col_count=(4,"fixed"), type="pandas", label="Click table row to select and go to page", headers=["page", "label", "text", "id"], show_fullscreen_button=True, wrap=True, max_height=400, static_columns=[0,1,2,3])

|

| 366 |

|

| 367 |

+

with gr.Row(equal_height=True):

|

| 368 |

+

exclude_selected_btn = gr.Button(value="Exclude all redactions in table")

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 369 |

|

| 370 |

+

with gr.Accordion("Selected redaction row", open=True):

|

| 371 |

+

selected_entity_dataframe_row = gr.Dataframe(pd.DataFrame(data={"page":[], "label":[], "text":[], "id":[]}), col_count=4, type="pandas", visible=True, headers=["page", "label", "text", "id"], wrap=True)

|

| 372 |

+

exclude_selected_row_btn = gr.Button(value="Exclude specific redaction row")

|

| 373 |

+

exclude_text_with_same_as_selected_row_btn = gr.Button(value="Exclude all redactions with same text as selected row")

|

| 374 |

+

|

| 375 |

+

undo_last_removal_btn = gr.Button(value="Undo last element removal", variant="primary")

|

| 376 |

|

| 377 |

with gr.Accordion("Search all extracted text", open=True):

|

| 378 |

all_line_level_ocr_results_df = gr.Dataframe(value=pd.DataFrame(), headers=["page", "text"], col_count=(2, 'fixed'), row_count = (0, "dynamic"), label="All OCR results", visible=True, type="pandas", wrap=True, show_fullscreen_button=True, show_search='filter', show_label=False, show_copy_button=True, max_height=400)

|

|

|

|

| 388 |

###

|

| 389 |

with gr.Tab(label="Identify duplicate pages"):

|

| 390 |

with gr.Accordion("Identify duplicate pages to redact", open = True):

|

| 391 |

+

in_duplicate_pages = gr.File(label="Upload multiple 'ocr_output.csv' data files from redaction jobs here to compare", file_count="multiple", height=FILE_INPUT_HEIGHT, file_types=['.csv'])

|

| 392 |

with gr.Row():

|

| 393 |

duplicate_threshold_value = gr.Number(value=0.9, label="Minimum similarity to be considered a duplicate (maximum = 1)", scale =1)

|

| 394 |

find_duplicate_pages_btn = gr.Button(value="Identify duplicate pages", variant="primary", scale = 4)

|

| 395 |

|

| 396 |

+

duplicate_pages_out = gr.File(label="Duplicate pages analysis output", file_count="multiple", height=FILE_INPUT_HEIGHT, file_types=['.csv'])

|

| 397 |

|

| 398 |

###

|

| 399 |

# TEXT / TABULAR DATA TAB

|

|

|

|

| 403 |

with gr.Accordion("Redact open text", open = False):

|

| 404 |

in_text = gr.Textbox(label="Enter open text", lines=10)

|

| 405 |

with gr.Accordion("Upload xlsx or csv files", open = True):

|

| 406 |

+

in_data_files = gr.File(label="Choose Excel or csv files", file_count= "multiple", file_types=['.xlsx', '.xls', '.csv', '.parquet', '.csv.gz'], height=FILE_INPUT_HEIGHT)

|

| 407 |

|

| 408 |

in_excel_sheets = gr.Dropdown(choices=["Choose Excel sheets to anonymise"], multiselect = True, label="Select Excel sheets that you want to anonymise (showing sheets present across all Excel files).", visible=False, allow_custom_value=True)

|

| 409 |

|

| 410 |

in_colnames = gr.Dropdown(choices=["Choose columns to anonymise"], multiselect = True, label="Select columns that you want to anonymise (showing columns present across all files).")

|

| 411 |

|

| 412 |

+

pii_identification_method_drop_tabular = gr.Radio(label = "Choose PII detection method. AWS Comprehend has a cost of approximately $0.01 per 10,000 characters.", value = DEFAULT_PII_DETECTION_MODEL, choices=TABULAR_PII_DETECTION_MODELS)

|

| 413 |

|

| 414 |

with gr.Accordion("Anonymisation output format", open = False):

|

| 415 |

anon_strat = gr.Radio(choices=["replace with 'REDACTED'", "replace with <ENTITY_NAME>", "redact completely", "hash", "mask"], label="Select an anonymisation method.", value = "replace with 'REDACTED'") # , "encrypt", "fake_first_name" are also available, but are not currently included as not that useful in current form

|

|

|

|

| 435 |

with gr.Accordion("Custom allow, deny, and full page redaction lists", open = True):

|

| 436 |

with gr.Row():

|

| 437 |

with gr.Column():

|

| 438 |

+

in_allow_list = gr.File(label="Import allow list file - csv table with one column of a different word/phrase on each row (case insensitive). Terms in this file will not be redacted.", file_count="multiple", height=FILE_INPUT_HEIGHT)

|

| 439 |

in_allow_list_text = gr.Textbox(label="Custom allow list load status")

|

| 440 |

with gr.Column():

|

| 441 |

+

in_deny_list = gr.File(label="Import custom deny list - csv table with one column of a different word/phrase on each row (case insensitive). Terms in this file will always be redacted.", file_count="multiple", height=FILE_INPUT_HEIGHT)

|

| 442 |

in_deny_list_text = gr.Textbox(label="Custom deny list load status")

|

| 443 |

with gr.Column():

|

| 444 |

+

in_fully_redacted_list = gr.File(label="Import fully redacted pages list - csv table with one column of page numbers on each row. Page numbers in this file will be fully redacted.", file_count="multiple", height=FILE_INPUT_HEIGHT)

|

| 445 |

in_fully_redacted_list_text = gr.Textbox(label="Fully redacted page list load status")

|

| 446 |

with gr.Accordion("Manually modify custom allow, deny, and full page redaction lists (NOTE: you need to press Enter after modifying/adding an entry to the lists to apply them)", open = False):

|

| 447 |

with gr.Row():

|

|

|

|

| 450 |

in_fully_redacted_list_state = gr.Dataframe(value=pd.DataFrame(), headers=["fully_redacted_pages_list"], col_count=(1, "fixed"), row_count = (0, "dynamic"), label="Fully redacted pages", visible=True, type="pandas", interactive=True, show_fullscreen_button=True, show_copy_button=True, datatype='number', wrap=True)

|

| 451 |

|

| 452 |

with gr.Accordion("Select entity types to redact", open = True):

|

| 453 |

+

in_redact_entities = gr.Dropdown(value=CHOSEN_REDACT_ENTITIES, choices=FULL_ENTITY_LIST, multiselect=True, label="Local PII identification model (click empty space in box for full list)")

|

| 454 |

+

in_redact_comprehend_entities = gr.Dropdown(value=CHOSEN_COMPREHEND_ENTITIES, choices=FULL_COMPREHEND_ENTITY_LIST, multiselect=True, label="AWS Comprehend PII identification model (click empty space in box for full list)")

|

| 455 |

|

| 456 |

with gr.Row():

|

| 457 |

max_fuzzy_spelling_mistakes_num = gr.Number(label="Maximum number of spelling mistakes allowed for fuzzy matching (CUSTOM_FUZZY entity).", value=1, minimum=0, maximum=9, precision=0)

|

|

|

|

| 470 |

aws_access_key_textbox = gr.Textbox(value='', label="AWS access key for account with permissions for AWS Textract and Comprehend", visible=True, type="password")

|

| 471 |

aws_secret_key_textbox = gr.Textbox(value='', label="AWS secret key for account with permissions for AWS Textract and Comprehend", visible=True, type="password")

|

| 472 |

|

|

|

|

|

|

|

| 473 |

with gr.Accordion("Log file outputs", open = False):

|

| 474 |

log_files_output = gr.File(label="Log file output", interactive=False)

|

| 475 |

|

|

|

|

| 482 |

all_output_files = gr.File(label="All files in output folder", file_count='multiple', file_types=['.csv'], interactive=False)

|

| 483 |

|

| 484 |

###

|

| 485 |

+

# UI INTERACTION

|

| 486 |

###

|

| 487 |

|

| 488 |

###

|

|

|

|

| 555 |

|

| 556 |

textract_job_detail_df.select(df_select_callback_textract_api, inputs=[textract_output_found_checkbox], outputs=[job_id_textbox, job_type_dropdown, selected_job_id_row])

|

| 557 |

|

|

|

|

| 558 |

convert_textract_outputs_to_ocr_results.click(replace_existing_pdf_input_for_whole_document_outputs, inputs = [s3_whole_document_textract_input_subfolder, doc_file_name_no_extension_textbox, output_folder_textbox, s3_whole_document_textract_default_bucket, in_doc_files, input_folder_textbox], outputs = [in_doc_files, doc_file_name_no_extension_textbox, doc_file_name_with_extension_textbox, doc_full_file_name_textbox, doc_file_name_textbox_list, total_pdf_page_count]).\

|

| 559 |

success(fn = prepare_image_or_pdf, inputs=[in_doc_files, text_extract_method_radio, latest_file_completed_text, redaction_output_summary_textbox, first_loop_state, annotate_max_pages, all_image_annotations_state, prepare_for_review_bool_false, in_fully_redacted_list_state, output_folder_textbox, input_folder_textbox, prepare_images_bool_false], outputs=[redaction_output_summary_textbox, prepared_pdf_state, images_pdf_state, annotate_max_pages, annotate_max_pages_bottom, pdf_doc_state, all_image_annotations_state, review_file_state, document_cropboxes, page_sizes, textract_output_found_checkbox, all_img_details_state, all_line_level_ocr_results_df_base, local_ocr_output_found_checkbox]).\

|

| 560 |

success(fn=check_for_existing_textract_file, inputs=[doc_file_name_no_extension_textbox, output_folder_textbox], outputs=[textract_output_found_checkbox]).\

|

|

|

|

| 576 |

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number, review_file_state, page_sizes, doc_full_file_name_textbox, input_folder_textbox], outputs = [annotator, annotate_current_page, annotate_current_page_bottom, annotate_previous_page, recogniser_entity_dropdown, recogniser_entity_dataframe, recogniser_entity_dataframe_base, text_entity_dropdown, page_entity_dropdown, page_sizes, all_image_annotations_state])

|

| 577 |

|

| 578 |

# Page number controls

|

| 579 |

+

annotate_current_page.submit(update_all_page_annotation_object_based_on_previous_page, inputs = [annotator, annotate_current_page, annotate_previous_page, all_image_annotations_state, page_sizes], outputs = [all_image_annotations_state, annotate_previous_page, annotate_current_page_bottom]).\

|

| 580 |

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number, review_file_state, page_sizes, doc_full_file_name_textbox, input_folder_textbox], outputs = [annotator, annotate_current_page, annotate_current_page_bottom, annotate_previous_page, recogniser_entity_dropdown, recogniser_entity_dataframe, recogniser_entity_dataframe_base, text_entity_dropdown, page_entity_dropdown, page_sizes, all_image_annotations_state]).\

|

| 581 |

success(apply_redactions_to_review_df_and_files, inputs=[annotator, doc_full_file_name_textbox, pdf_doc_state, all_image_annotations_state, annotate_current_page, review_file_state, output_folder_textbox, do_not_save_pdf_state, page_sizes], outputs=[pdf_doc_state, all_image_annotations_state, output_review_files, log_files_output, review_file_state])

|

| 582 |

|

| 583 |

+

annotation_last_page_button.click(fn=decrease_page, inputs=[annotate_current_page], outputs=[annotate_current_page, annotate_current_page_bottom]).\

|

| 584 |

+

success(update_all_page_annotation_object_based_on_previous_page, inputs = [annotator, annotate_current_page, annotate_previous_page, all_image_annotations_state, page_sizes], outputs = [all_image_annotations_state, annotate_previous_page, annotate_current_page_bottom]).\

|

| 585 |

+

success(update_annotator_object_and_filter_df, inputs=[all_image_annotations_state, annotate_current_page, recogniser_entity_dropdown, page_entity_dropdown, text_entity_dropdown, recogniser_entity_dataframe_base, annotator_zoom_number, review_file_state, page_sizes, doc_full_file_name_textbox, input_folder_textbox], outputs = [annotator, annotate_current_page, annotate_current_page_bottom, annotate_previous_page, recogniser_entity_dropdown, recogniser_entity_dataframe, recogniser_entity_dataframe_base, text_entity_dropdown, page_entity_dropdown, page_sizes, all_image_annotations_state]).\

|

| 586 |

+