Upload 186 files

This view is limited to 50 files because it contains too many changes.

See raw diff

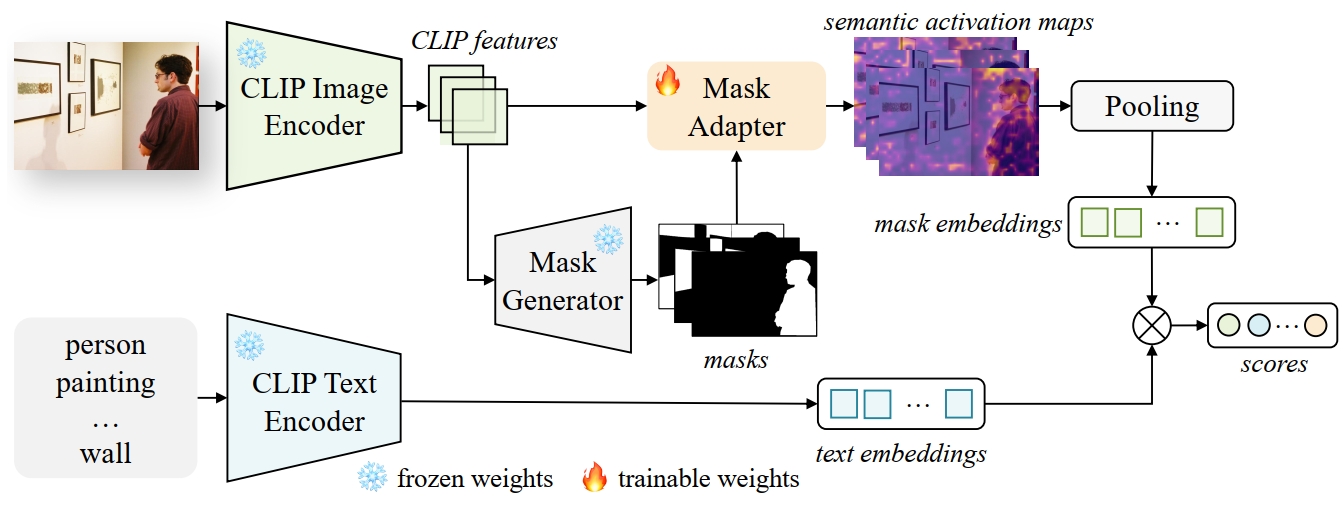

- assets/main_fig.png +0 -0

- configs/ground-truth-warmup/Base-COCO-PanopticSegmentation.yaml +60 -0

- configs/ground-truth-warmup/mask-adapter/mask_adapter_convnext_large_cocopan_eval_ade20k.yaml +40 -0

- configs/ground-truth-warmup/mask-adapter/mask_adapter_maft_convnext_base_cocostuff_eval_ade20k.yaml +40 -0

- configs/ground-truth-warmup/mask-adapter/mask_adapter_maft_convnext_large_cocostuff_eval_ade20k.yaml +40 -0

- configs/ground-truth-warmup/maskformer2_R50_bs16_50ep.yaml +45 -0

- configs/mixed-mask-training/fc-clip/Base-COCO-PanopticSegmentation.yaml +49 -0

- configs/mixed-mask-training/fc-clip/fcclip/fcclip_convnext_large_eval_a847.yaml +12 -0

- configs/mixed-mask-training/fc-clip/fcclip/fcclip_convnext_large_eval_ade20k.yaml +55 -0

- configs/mixed-mask-training/fc-clip/fcclip/fcclip_convnext_large_eval_coco.yaml +4 -0

- configs/mixed-mask-training/fc-clip/fcclip/fcclip_convnext_large_eval_pas20.yaml +12 -0

- configs/mixed-mask-training/fc-clip/fcclip/fcclip_convnext_large_eval_pc459.yaml +12 -0

- configs/mixed-mask-training/fc-clip/fcclip/fcclip_convnext_large_eval_pc59.yaml +12 -0

- configs/mixed-mask-training/fc-clip/maskformer2_R50_bs16_50ep.yaml +45 -0

- configs/mixed-mask-training/maftp/Base-COCO-PanopticSegmentation.yaml +62 -0

- configs/mixed-mask-training/maftp/maskformer2_R50_bs16_50ep.yaml +45 -0

- configs/mixed-mask-training/maftp/semantic/eval_a847.yaml +13 -0

- configs/mixed-mask-training/maftp/semantic/eval_pas20.yaml +12 -0

- configs/mixed-mask-training/maftp/semantic/eval_pas21.yaml +13 -0

- configs/mixed-mask-training/maftp/semantic/eval_pc459.yaml +12 -0

- configs/mixed-mask-training/maftp/semantic/eval_pc59.yaml +12 -0

- configs/mixed-mask-training/maftp/semantic/train_semantic_base_eval_a150.yaml +50 -0

- configs/mixed-mask-training/maftp/semantic/train_semantic_large_eval_a150.yaml +46 -0

- demo/demo.py +201 -0

- demo/images/000000000605.jpg +0 -0

- demo/images/000000001025.jpg +0 -0

- demo/images/000000290833.jpg +0 -0

- demo/images/ADE_val_00000739.jpg +0 -0

- demo/images/ADE_val_00000979.jpg +0 -0

- demo/images/ADE_val_00001200.jpg +0 -0

- demo/predictor.py +280 -0

- mask_adapter/.DS_Store +0 -0

- mask_adapter/__init__.py +44 -0

- mask_adapter/__pycache__/__init__.cpython-310.pyc +0 -0

- mask_adapter/__pycache__/__init__.cpython-38.pyc +0 -0

- mask_adapter/__pycache__/config.cpython-310.pyc +0 -0

- mask_adapter/__pycache__/config.cpython-38.pyc +0 -0

- mask_adapter/__pycache__/fcclip.cpython-310.pyc +0 -0

- mask_adapter/__pycache__/fcclip.cpython-38.pyc +0 -0

- mask_adapter/__pycache__/mask_adapter.cpython-310.pyc +0 -0

- mask_adapter/__pycache__/mask_adapter.cpython-38.pyc +0 -0

- mask_adapter/__pycache__/sam_maskadapter.cpython-310.pyc +0 -0

- mask_adapter/__pycache__/test_time_augmentation.cpython-310.pyc +0 -0

- mask_adapter/__pycache__/test_time_augmentation.cpython-38.pyc +0 -0

- mask_adapter/config.py +150 -0

- mask_adapter/data/.DS_Store +0 -0

- mask_adapter/data/__init__.py +16 -0

- mask_adapter/data/__pycache__/__init__.cpython-310.pyc +0 -0

- mask_adapter/data/__pycache__/__init__.cpython-38.pyc +0 -0

- mask_adapter/data/__pycache__/custom_dataset_dataloader.cpython-310.pyc +0 -0

assets/main_fig.png

ADDED


configs/ground-truth-warmup/Base-COCO-PanopticSegmentation.yaml

ADDED

@@ -0,0 +1,60 @@
+MODEL:
+  BACKBONE:
+    FREEZE_AT: 0
+    NAME: "build_resnet_backbone"
+  WEIGHTS: "detectron2://ImageNetPretrained/torchvision/R-50.pkl"
+  PIXEL_MEAN: [123.675, 116.280, 103.530]
+  PIXEL_STD: [58.395, 57.120, 57.375]
+  RESNETS:
+    DEPTH: 50
+    STEM_TYPE: "basic" # not used
+    STEM_OUT_CHANNELS: 64
+    STRIDE_IN_1X1: False
+    OUT_FEATURES: ["res2", "res3", "res4", "res5"]
+    # NORM: "SyncBN"
+    RES5_MULTI_GRID: [1, 1, 1] # not used
+
+SOLVER:
+  IMS_PER_BATCH: 8
+  BASE_LR: 0.0001
+  STEPS: (260231, 283888)
+  MAX_ITER: 295717
+  WARMUP_FACTOR: 1.0
+  WARMUP_ITERS: 10
+  CHECKPOINT_PERIOD: 10000
+  WEIGHT_DECAY: 0.05
+  OPTIMIZER: "ADAMW"
+  BACKBONE_MULTIPLIER: 0.1
+  CLIP_GRADIENTS:
+    ENABLED: True
+    CLIP_TYPE: "full_model"
+    CLIP_VALUE: 1.0
+    NORM_TYPE: 2.0
+  AMP:
+    ENABLED: True
+INPUT:
+  IMAGE_SIZE: 768
+  MIN_SCALE: 0.1
+  MAX_SCALE: 2.0
+  FORMAT: "RGB"
+  MIN_SIZE_TRAIN: (1024,)
+  MAX_SIZE_TRAIN: 1024
+  DATASET_MAPPER_NAME: "coco_combine_lsj"
+  MASK_FORMAT: "bitmask"
+  COLOR_AUG_SSD: True
+
+DATASETS:
+  TRAIN: ("openvocab_coco_2017_train_panoptic_with_sem_seg",)
+  TEST: ("openvocab_ade20k_panoptic_val",) # to evaluate instance and semantic performance as well
+DATALOADER:
+  SAMPLER_TRAIN: "MultiDatasetSampler"
+  USE_DIFF_BS_SIZE: False
+  DATASET_RATIO: [1.0]
+  DATASET_BS: [2]
+  USE_RFS: [False]
+  NUM_WORKERS: 8
+  DATASET_ANN: ['mask']
+  ASPECT_RATIO_GROUPING: True
+TEST:
+  EVAL_PERIOD: 10000
+VERSION: 2
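The `SOLVER` block above places its two `STEPS` milestones at roughly 88% and 96% of `MAX_ITER`. As a rough sketch of what that implies for the learning rate, assuming a plain multi-step schedule that multiplies the rate by 0.1 at each milestone (the factor `GAMMA` is an assumption, since this config does not set it) and ignoring the 10-iteration warmup:

```python
from bisect import bisect_right

# Values copied from the SOLVER block above.
BASE_LR = 0.0001
STEPS = (260231, 283888)
MAX_ITER = 295717

# Assumption: each milestone multiplies the rate by GAMMA (not set in this config).
GAMMA = 0.1


def lr_at(iteration, base_lr=BASE_LR, steps=STEPS, gamma=GAMMA):
    """Learning rate at `iteration` under multi-step decay (warmup ignored)."""
    passed = bisect_right(steps, iteration)  # milestones already reached
    return base_lr * gamma ** passed


def milestone_percent(steps=STEPS, max_iter=MAX_ITER):
    """Position of each milestone as a rounded percentage of the full run."""
    return [(s * 100 + max_iter // 2) // max_iter for s in steps]


if __name__ == "__main__":
    print(milestone_percent())
    for it in (0, STEPS[0], STEPS[1], MAX_ITER - 1):
        print(it, lr_at(it))
```

Under these assumptions the rate stays at `BASE_LR` for most of training and drops twice near the end of the run.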

configs/ground-truth-warmup/mask-adapter/mask_adapter_convnext_large_cocopan_eval_ade20k.yaml

ADDED

@@ -0,0 +1,40 @@
+_BASE_: ../maskformer2_R50_bs16_50ep.yaml
+MODEL:
+  META_ARCHITECTURE: "MASK_Adapter"
+  MASK_ADAPTER:
+    NAME: "MASKAdapterHead"
+    MASK_IN_CHANNELS: 16
+    NUM_CHANNELS: 768
+    USE_CHECKPOINT: False
+    NUM_OUTPUT_MAPS: 16
+  # backbone part.
+  BACKBONE:
+    NAME: "CLIP"
+  WEIGHTS: ""
+  PIXEL_MEAN: [122.7709383, 116.7460125, 104.09373615]
+  PIXEL_STD: [68.5005327, 66.6321579, 70.32316305]
+  FC_CLIP:
+    CLIP_MODEL_NAME: "convnext_large_d_320"
+    CLIP_PRETRAINED_WEIGHTS: "laion2b_s29b_b131k_ft_soup"
+    EMBED_DIM: 768
+    GEOMETRIC_ENSEMBLE_ALPHA: -1.0
+    GEOMETRIC_ENSEMBLE_BETA: -1.0
+  MASK_FORMER:
+    NUM_OBJECT_QUERIES: 250
+    TEST:
+      SEMANTIC_ON: True
+      INSTANCE_ON: True
+      PANOPTIC_ON: True
+      OVERLAP_THRESHOLD: 0.8
+      OBJECT_MASK_THRESHOLD: 0.0
+
+INPUT:
+  DATASET_MAPPER_NAME: "coco_panoptic_lsj"
+
+DATALOADER:
+  SAMPLER_TRAIN: "TrainingSampler"
+
+DATASETS:
+  TRAIN: ("openvocab_coco_2017_train_panoptic_with_sem_seg",)
+  TEST: ("openvocab_ade20k_panoptic_val",)
+OUTPUT_DIR: ./training/first-phase/fcclip-l-adapter
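The `PIXEL_MEAN` and `PIXEL_STD` values in the config above differ from the ImageNet statistics in the base file. They coincide with the per-channel constants commonly used for CLIP preprocessing once those are rescaled from the 0 to 1 range to 0 to 255. A small check, with the 0 to 1 constants written out by hand as an assumption about where the numbers come from (the config itself does not say):

```python
# Per-channel RGB statistics commonly used for CLIP preprocessing, in the 0..1 range.
# Assumption: the config values were derived from these constants.
CLIP_MEAN = (0.48145466, 0.4578275, 0.40821073)
CLIP_STD = (0.26862954, 0.26130258, 0.27577711)

# Values copied from the config above (0..255 range).
CONFIG_PIXEL_MEAN = [122.7709383, 116.7460125, 104.09373615]
CONFIG_PIXEL_STD = [68.5005327, 66.6321579, 70.32316305]


def to_255(values):
    """Rescale 0..1 statistics to the 0..255 pixel range."""
    return [v * 255.0 for v in values]


def normalize_pixel(rgb, mean, std):
    """Channel-wise (x - mean) / std, the usual input normalization."""
    return [(c - m) / s for c, m, s in zip(rgb, mean, std)]


if __name__ == "__main__":
    print(to_255(CLIP_MEAN))
    print(to_255(CLIP_STD))
    print(normalize_pixel([255.0, 255.0, 255.0], CONFIG_PIXEL_MEAN, CONFIG_PIXEL_STD))
```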

configs/ground-truth-warmup/mask-adapter/mask_adapter_maft_convnext_base_cocostuff_eval_ade20k.yaml

ADDED

@@ -0,0 +1,40 @@
+_BASE_: ../maskformer2_R50_bs16_50ep.yaml
+MODEL:
+  META_ARCHITECTURE: "MASK_Adapter"
+  MASK_ADAPTER:
+    NAME: "MASKAdapterHead"
+    MASK_IN_CHANNELS: 16
+    NUM_CHANNELS: 768
+    USE_CHECKPOINT: False
+    NUM_OUTPUT_MAPS: 16
+    TRAIN_MAFT: True
+  # backbone part.
+  BACKBONE:
+    NAME: "CLIP"
+  WEIGHTS: ""
+  PIXEL_MEAN: [122.7709383, 116.7460125, 104.09373615]
+  PIXEL_STD: [68.5005327, 66.6321579, 70.32316305]
+  FC_CLIP:
+    CLIP_MODEL_NAME: "convnext_base_w_320"
+    CLIP_PRETRAINED_WEIGHTS: "laion_aesthetic_s13b_b82k_augreg"
+    EMBED_DIM: 640
+    GEOMETRIC_ENSEMBLE_ALPHA: -1.0
+    GEOMETRIC_ENSEMBLE_BETA: -1.0
+  MASK_FORMER:
+    NUM_OBJECT_QUERIES: 250
+    TEST:
+      SEMANTIC_ON: True
+      INSTANCE_ON: True
+      PANOPTIC_ON: True
+      OVERLAP_THRESHOLD: 0.8
+      OBJECT_MASK_THRESHOLD: 0.0
+
+INPUT:
+  DATASET_MAPPER_NAME: "mask_former_semantic"
+
+DATASETS:
+  TRAIN: ("openvocab_coco_2017_train_stuff_sem_seg",)
+  TEST: ("openvocab_ade20k_panoptic_val",)
+DATALOADER:
+  SAMPLER_TRAIN: "TrainingSampler"
+OUTPUT_DIR: ./training/first-phase/maft_b_adapter

configs/ground-truth-warmup/mask-adapter/mask_adapter_maft_convnext_large_cocostuff_eval_ade20k.yaml

ADDED

@@ -0,0 +1,40 @@
+_BASE_: ../maskformer2_R50_bs16_50ep.yaml
+MODEL:
+  META_ARCHITECTURE: "MASK_Adapter"
+  MASK_ADAPTER:
+    NAME: "MASKAdapterHead"
+    MASK_IN_CHANNELS: 16
+    NUM_CHANNELS: 768
+    USE_CHECKPOINT: False
+    NUM_OUTPUT_MAPS: 16
+    TRAIN_MAFT: True
+  # backbone part.
+  BACKBONE:
+    NAME: "CLIP"
+  WEIGHTS: ""
+  PIXEL_MEAN: [122.7709383, 116.7460125, 104.09373615]
+  PIXEL_STD: [68.5005327, 66.6321579, 70.32316305]
+  FC_CLIP:
+    CLIP_MODEL_NAME: "convnext_large_d_320"
+    CLIP_PRETRAINED_WEIGHTS: "laion2b_s29b_b131k_ft_soup"
+    EMBED_DIM: 768
+    GEOMETRIC_ENSEMBLE_ALPHA: -1.0
+    GEOMETRIC_ENSEMBLE_BETA: -1.0
+  MASK_FORMER:
+    NUM_OBJECT_QUERIES: 250
+    TEST:
+      SEMANTIC_ON: True
+      INSTANCE_ON: True
+      PANOPTIC_ON: True
+      OVERLAP_THRESHOLD: 0.8
+      OBJECT_MASK_THRESHOLD: 0.0
+
+INPUT:
+  DATASET_MAPPER_NAME: "mask_former_semantic"
+
+DATASETS:
+  TRAIN: ("openvocab_coco_2017_train_stuff_sem_seg",)
+  TEST: ("openvocab_ade20k_panoptic_val",)
+DATALOADER:
+  SAMPLER_TRAIN: "TrainingSampler"
+OUTPUT_DIR: ./training/first-phase/maft_l_adapter

configs/ground-truth-warmup/maskformer2_R50_bs16_50ep.yaml

ADDED

@@ -0,0 +1,45 @@
+_BASE_: Base-COCO-PanopticSegmentation.yaml
+MODEL:
+  META_ARCHITECTURE: "MaskFormer"
+  SEM_SEG_HEAD:
+    NAME: "FCCLIPMASKHead"
+    IN_FEATURES: ["res2", "res3", "res4", "res5"]
+    IGNORE_VALUE: 255
+    NUM_CLASSES: 133
+    LOSS_WEIGHT: 1.0
+    CONVS_DIM: 256
+    MASK_DIM: 256
+    NORM: "GN"
+    # pixel decoder
+    PIXEL_DECODER_NAME: "MSDeformAttnPixelDecoder"
+    IN_FEATURES: ["res2", "res3", "res4", "res5"]
+    DEFORMABLE_TRANSFORMER_ENCODER_IN_FEATURES: ["res3", "res4", "res5"]
+    COMMON_STRIDE: 4
+    TRANSFORMER_ENC_LAYERS: 6
+  MASK_FORMER:
+    TRANSFORMER_DECODER_NAME: "MultiScaleMaskedTransformerDecoder"
+    TRANSFORMER_IN_FEATURE: "multi_scale_pixel_decoder"
+    DEEP_SUPERVISION: True
+    NO_OBJECT_WEIGHT: 0.1
+    CLASS_WEIGHT: 2.0
+    MASK_WEIGHT: 5.0
+    DICE_WEIGHT: 5.0
+    HIDDEN_DIM: 256
+    NUM_OBJECT_QUERIES: 100
+    NHEADS: 8
+    DROPOUT: 0.0
+    DIM_FEEDFORWARD: 2048
+    ENC_LAYERS: 0
+    PRE_NORM: False
+    ENFORCE_INPUT_PROJ: False
+    SIZE_DIVISIBILITY: 32
+    DEC_LAYERS: 10 # 9 decoder layers, add one for the loss on learnable query
+    TRAIN_NUM_POINTS: 12544
+    OVERSAMPLE_RATIO: 3.0
+    IMPORTANCE_SAMPLE_RATIO: 0.75
+    TEST:
+      SEMANTIC_ON: True
+      INSTANCE_ON: True
+      PANOPTIC_ON: True
+      OVERLAP_THRESHOLD: 0.8
+      OBJECT_MASK_THRESHOLD: 0.8

configs/mixed-mask-training/fc-clip/Base-COCO-PanopticSegmentation.yaml

ADDED

@@ -0,0 +1,49 @@
+MODEL:
+  BACKBONE:
+    FREEZE_AT: 0
+    NAME: "build_resnet_backbone"
+  WEIGHTS: "detectron2://ImageNetPretrained/torchvision/R-50.pkl"
+  PIXEL_MEAN: [123.675, 116.280, 103.530]
+  PIXEL_STD: [58.395, 57.120, 57.375]
+  RESNETS:
+    DEPTH: 50
+    STEM_TYPE: "basic" # not used
+    STEM_OUT_CHANNELS: 64
+    STRIDE_IN_1X1: False
+    OUT_FEATURES: ["res2", "res3", "res4", "res5"]
+    # NORM: "SyncBN"
+    RES5_MULTI_GRID: [1, 1, 1] # not used
+DATASETS:
+  TRAIN: ("openvocab_coco_2017_train_stuff_sem_seg",)
+  TEST: ("openvocab_ade20k_panoptic_val",) # to evaluate instance and semantic performance as well
+SOLVER:
+  IMS_PER_BATCH: 18
+  BASE_LR: 0.0001
+  STEPS: (216859, 236574)
+  MAX_ITER: 246431
+  WARMUP_FACTOR: 1.0
+  WARMUP_ITERS: 10
+  WEIGHT_DECAY: 0.05
+  OPTIMIZER: "ADAMW"
+  BACKBONE_MULTIPLIER: 0.1
+  CLIP_GRADIENTS:
+    ENABLED: True
+    CLIP_TYPE: "full_model"
+    CLIP_VALUE: 1.0
+    NORM_TYPE: 2.0
+  AMP:
+    ENABLED: True
+INPUT:
+  IMAGE_SIZE: 1024
+  MIN_SCALE: 0.1
+  MAX_SCALE: 2.0
+  MIN_SIZE_TEST: 896
+  MAX_SIZE_TEST: 896
+  FORMAT: "RGB"
+  DATASET_MAPPER_NAME: "coco_panoptic_lsj"
+TEST:
+  EVAL_PERIOD: 5000
+DATALOADER:
+  FILTER_EMPTY_ANNOTATIONS: True
+  NUM_WORKERS: 4
+VERSION: 2

configs/mixed-mask-training/fc-clip/fcclip/fcclip_convnext_large_eval_a847.yaml

ADDED

@@ -0,0 +1,12 @@
+_BASE_: ./fcclip_convnext_large_eval_ade20k.yaml
+
+MODEL:
+  MASK_FORMER:
+    TEST:
+      PANOPTIC_ON: False
+      INSTANCE_ON: False
+
+DATASETS:
+  TEST: ("openvocab_ade20k_full_sem_seg_val",)
+
+OUTPUT_DIR: ./evaluation/fc-clip/a847
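The evaluation config above overrides only a few keys and inherits everything else through `_BASE_`. A minimal sketch of that override behaviour, assuming a plain recursive dictionary merge; the helper `merge_config` is hypothetical and is not the loader the repository actually uses, and the `base` dictionary holds only a handful of keys picked for illustration:

```python
import copy


def merge_config(base, override):
    """Return a new dict where `override` wins, merging nested dicts key by key."""
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_config(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# A few keys from the ADE20K config that the file above names as its _BASE_.
base = {
    "MODEL": {
        "MASK_FORMER": {
            "NUM_OBJECT_QUERIES": 250,
            "TEST": {"SEMANTIC_ON": True, "INSTANCE_ON": True, "PANOPTIC_ON": True},
        }
    },
    "DATASETS": {"TEST": ("openvocab_ade20k_panoptic_val",)},
}

# The overrides from the A-847 evaluation file above.
override = {
    "MODEL": {"MASK_FORMER": {"TEST": {"PANOPTIC_ON": False, "INSTANCE_ON": False}}},
    "DATASETS": {"TEST": ("openvocab_ade20k_full_sem_seg_val",)},
    "OUTPUT_DIR": "./evaluation/fc-clip/a847",
}

cfg = merge_config(base, override)

if __name__ == "__main__":
    print(cfg)
```

In this sketch, sibling keys such as `NUM_OBJECT_QUERIES` and `SEMANTIC_ON` survive the merge, which is why the per-dataset files can stay this short.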

configs/mixed-mask-training/fc-clip/fcclip/fcclip_convnext_large_eval_ade20k.yaml

ADDED

@@ -0,0 +1,55 @@
+_BASE_: ../maskformer2_R50_bs16_50ep.yaml
+MODEL:
+  META_ARCHITECTURE: "FCCLIP"
+  SEM_SEG_HEAD:
+    NAME: "FCCLIPHead"
+  # backbone part.
+  MASK_ADAPTER:
+    NAME: "MASKAdapterHead"
+    MASK_IN_CHANNELS: 16
+    NUM_CHANNELS: 768
+    USE_CHECKPOINT: False
+    NUM_OUTPUT_MAPS: 16
+    MASK_THRESHOLD: 0.5
+  BACKBONE:
+    NAME: "CLIP"
+  WEIGHTS: ""
+  PIXEL_MEAN: [122.7709383, 116.7460125, 104.09373615]
+  PIXEL_STD: [68.5005327, 66.6321579, 70.32316305]
+  FC_CLIP:
+    CLIP_MODEL_NAME: "convnext_large_d_320"
+    CLIP_PRETRAINED_WEIGHTS: "laion2b_s29b_b131k_ft_soup"
+    EMBED_DIM: 768
+    GEOMETRIC_ENSEMBLE_ALPHA: 0.7
+    GEOMETRIC_ENSEMBLE_BETA: 0.9
+  MASK_FORMER:
+    NUM_OBJECT_QUERIES: 250
+    TEST:
+      SEMANTIC_ON: True
+      INSTANCE_ON: True
+      PANOPTIC_ON: True
+      OBJECT_MASK_THRESHOLD: 0.0
+
+INPUT:
+  IMAGE_SIZE: 1024
+  MIN_SCALE: 0.1
+  MAX_SCALE: 2.0
+  COLOR_AUG_SSD: False
+SOLVER:
+  IMS_PER_BATCH: 24
+  BASE_LR: 0.0001
+  WARMUP_FACTOR: 1.0
+  WARMUP_ITERS: 0
+  WEIGHT_DECAY: 0.05
+  STEPS: (86743, 94629)
+  MAX_ITER: 98572
+  CHECKPOINT_PERIOD: 3300
+TEST:
+  EVAL_PERIOD: 3300
+
+#SEED: 9782623
+DATASETS:
+  TRAIN: ("openvocab_coco_2017_train_panoptic_with_sem_seg",)
+  TEST: ("openvocab_ade20k_panoptic_val",)
+
+OUTPUT_DIR: ./evaluation/fc-clip/ade20k
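`GEOMETRIC_ENSEMBLE_ALPHA` and `GEOMETRIC_ENSEMBLE_BETA` in the config above look like weights for blending two classifier outputs. A sketch under the assumption that they are used as in the geometric ensemble described for FC-CLIP, where in-vocabulary and out-of-vocabulary class probabilities are combined as `p_in ** (1 - w) * p_out ** w`, with `w = alpha` for classes seen during training and `w = beta` for unseen ones. The function and argument names are illustrative and not taken from this repository:

```python
def geometric_ensemble(p_in, p_out, seen, alpha=0.7, beta=0.9):
    """Blend per-class probabilities from two classifiers.

    p_in  : probabilities from the in-vocabulary (trained) classifier
    p_out : probabilities from the out-of-vocabulary (frozen CLIP) classifier
    seen  : booleans, True where the class appeared in the training vocabulary
    """
    blended = []
    for pi, po, is_seen in zip(p_in, p_out, seen):
        w = alpha if is_seen else beta  # unseen classes lean harder on p_out
        blended.append(pi ** (1.0 - w) * po ** w)
    return blended


if __name__ == "__main__":
    # Same inputs, once treated as a seen class and once as an unseen class.
    print(geometric_ensemble([0.2, 0.2], [0.8, 0.8], [True, False]))
```

With `beta` larger than `alpha`, the blended score for an unseen class sits closer to the out-of-vocabulary probability than it does for a seen class.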

configs/mixed-mask-training/fc-clip/fcclip/fcclip_convnext_large_eval_coco.yaml

ADDED

@@ -0,0 +1,4 @@
+_BASE_: ./fcclip_convnext_large_eval_ade20k.yaml
+DATASETS:
+  TEST: ("openvocab_coco_2017_val_panoptic_with_sem_seg",)
+OUTPUT_DIR: ./coco-test

configs/mixed-mask-training/fc-clip/fcclip/fcclip_convnext_large_eval_pas20.yaml

ADDED

@@ -0,0 +1,12 @@
+_BASE_: ./fcclip_convnext_large_eval_ade20k.yaml
+
+MODEL:
+  MASK_FORMER:
+    TEST:
+      PANOPTIC_ON: False
+      INSTANCE_ON: False
+
+DATASETS:
+  TEST: ("openvocab_pascal20_sem_seg_val",)
+
+OUTPUT_DIR: ./evaluation/fc-clip/pas20

configs/mixed-mask-training/fc-clip/fcclip/fcclip_convnext_large_eval_pc459.yaml

ADDED

@@ -0,0 +1,12 @@
+_BASE_: ./fcclip_convnext_large_eval_ade20k.yaml
+
+MODEL:
+  MASK_FORMER:
+    TEST:
+      PANOPTIC_ON: False
+      INSTANCE_ON: False
+
+DATASETS:
+  TEST: ("openvocab_pascal_ctx459_sem_seg_val",)
+
+OUTPUT_DIR: ./evaluation/fc-clip/pc459

configs/mixed-mask-training/fc-clip/fcclip/fcclip_convnext_large_eval_pc59.yaml

ADDED

@@ -0,0 +1,12 @@
+_BASE_: ./fcclip_convnext_large_eval_ade20k.yaml
+
+MODEL:
+  MASK_FORMER:
+    TEST:
+      PANOPTIC_ON: False
+      INSTANCE_ON: False
+
+DATASETS:
+  TEST: ("openvocab_pascal_ctx59_sem_seg_val",)
+
+OUTPUT_DIR: ./evaluation/fc-clip/pc59

configs/mixed-mask-training/fc-clip/maskformer2_R50_bs16_50ep.yaml

ADDED

@@ -0,0 +1,45 @@
+_BASE_: Base-COCO-PanopticSegmentation.yaml
+MODEL:
+  META_ARCHITECTURE: "MaskFormer"
+  SEM_SEG_HEAD:
+    NAME: "MaskFormerHead"
+    IN_FEATURES: ["res2", "res3", "res4", "res5"]
+    IGNORE_VALUE: 255
+    NUM_CLASSES: 133
+    LOSS_WEIGHT: 1.0
+    CONVS_DIM: 256
+    MASK_DIM: 256
+    NORM: "GN"
+    # pixel decoder
+    PIXEL_DECODER_NAME: "MSDeformAttnPixelDecoder"
+    IN_FEATURES: ["res2", "res3", "res4", "res5"]
+    DEFORMABLE_TRANSFORMER_ENCODER_IN_FEATURES: ["res3", "res4", "res5"]
+    COMMON_STRIDE: 4
+    TRANSFORMER_ENC_LAYERS: 6
+  MASK_FORMER:
+    TRANSFORMER_DECODER_NAME: "MultiScaleMaskedTransformerDecoder"
+    TRANSFORMER_IN_FEATURE: "multi_scale_pixel_decoder"
+    DEEP_SUPERVISION: True
+    NO_OBJECT_WEIGHT: 0.1
+    CLASS_WEIGHT: 2.0
+    MASK_WEIGHT: 5.0
+    DICE_WEIGHT: 5.0
+    HIDDEN_DIM: 256
+    NUM_OBJECT_QUERIES: 100
+    NHEADS: 8
+    DROPOUT: 0.0
+    DIM_FEEDFORWARD: 2048
+    ENC_LAYERS: 0
+    PRE_NORM: False
+    ENFORCE_INPUT_PROJ: False
+    SIZE_DIVISIBILITY: 32
+    DEC_LAYERS: 10 # 9 decoder layers, add one for the loss on learnable query
+    TRAIN_NUM_POINTS: 12544
+    OVERSAMPLE_RATIO: 3.0
+    IMPORTANCE_SAMPLE_RATIO: 0.75
+    TEST:
+      SEMANTIC_ON: True
+      INSTANCE_ON: True
+      PANOPTIC_ON: True
+      OVERLAP_THRESHOLD: 0.8
+      OBJECT_MASK_THRESHOLD: 0.8

configs/mixed-mask-training/maftp/Base-COCO-PanopticSegmentation.yaml

ADDED

@@ -0,0 +1,62 @@
+MODEL:
+  BACKBONE:
+    FREEZE_AT: 0
+    NAME: "CLIP"
+  # WEIGHTS: "detectron2://ImageNetPretrained/torchvision/R-50.pkl"
+  PIXEL_MEAN: [122.7709383, 116.7460125, 104.09373615]
+  PIXEL_STD: [68.5005327, 66.6321579, 70.32316305]
+  RESNETS:
+    DEPTH: 50
+    STEM_TYPE: "basic" # not used
+    STEM_OUT_CHANNELS: 64
+    STRIDE_IN_1X1: False
+    OUT_FEATURES: ["res2", "res3", "res4", "res5"]
+    # NORM: "SyncBN"
+    RES5_MULTI_GRID: [1, 1, 1] # not used
+DATASETS:
+  TRAIN: ("coco_2017_train_panoptic",)
+  TEST: ("coco_2017_val_panoptic_with_sem_seg",) # to evaluate instance and semantic performance as well
+SOLVER:
+  IMS_PER_BATCH: 8
+  BASE_LR: 0.0001
+  BIAS_LR_FACTOR: 1.0
+  CHECKPOINT_PERIOD: 50000000
+  MAX_ITER: 55000
+  LR_SCHEDULER_NAME: WarmupPolyLR
+  MOMENTUM: 0.9
+  NESTEROV: false
+  OPTIMIZER: ADAMW
+  POLY_LR_CONSTANT_ENDING: 0.0
+  POLY_LR_POWER: 0.9
+  REFERENCE_WORLD_SIZE: 0
+  WARMUP_FACTOR: 1.0
+  WARMUP_ITERS: 10
+  WARMUP_METHOD: linear
+  WEIGHT_DECAY: 2.0e-05
+  #WEIGHT_DECAY: 0.05
+  WEIGHT_DECAY_BIAS: null
+  WEIGHT_DECAY_EMBED: 0.0
+  WEIGHT_DECAY_NORM: 0.0
+  STEPS: (327778, 355092)
+  BACKBONE_MULTIPLIER: 0.1
+  CLIP_GRADIENTS:
+    ENABLED: True
+    CLIP_TYPE: "full_model"
+    CLIP_VALUE: 1.0
+    NORM_TYPE: 2.0
+  AMP:
+    ENABLED: True
+INPUT:
+  IMAGE_SIZE: 1024
+  MIN_SCALE: 0.1
+  MAX_SCALE: 2.0
+  MIN_SIZE_TEST: 896
+  MAX_SIZE_TEST: 896
+  FORMAT: "RGB"
+  DATASET_MAPPER_NAME: "coco_panoptic_lsj"
+TEST:
+  EVAL_PERIOD: 5000
+DATALOADER:
+  FILTER_EMPTY_ANNOTATIONS: True
+  NUM_WORKERS: 8
+VERSION: 2
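Unlike the two other base configs, the `SOLVER` block above selects `WarmupPolyLR` with `POLY_LR_POWER: 0.9`. A minimal sketch of the decay curve this implies, assuming the usual polynomial form `base_lr * (1 - iter / max_iter) ** power`, leaving out the 10-iteration warmup and treating `POLY_LR_CONSTANT_ENDING: 0.0` as "no floor" (the function name is illustrative):

```python
# Values copied from the SOLVER block above.
BASE_LR = 0.0001
MAX_ITER = 55000
POLY_LR_POWER = 0.9


def poly_lr(iteration, base_lr=BASE_LR, max_iter=MAX_ITER, power=POLY_LR_POWER):
    """Polynomially decayed learning rate (warmup and constant ending omitted)."""
    return base_lr * (1.0 - iteration / max_iter) ** power


if __name__ == "__main__":
    for it in (0, 13750, 27500, 41250, 55000):
        print(it, poly_lr(it))
```

The `STEPS` milestones in the same block lie beyond `MAX_ITER`, so they presumably have no effect under this scheduler.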

configs/mixed-mask-training/maftp/maskformer2_R50_bs16_50ep.yaml

ADDED

@@ -0,0 +1,45 @@
+_BASE_: Base-COCO-PanopticSegmentation.yaml
+MODEL:
+  META_ARCHITECTURE: "MaskFormer"
+  SEM_SEG_HEAD:
+    NAME: "MaskFormerHead"
+    IN_FEATURES: ["res2", "res3", "res4", "res5"]
+    IGNORE_VALUE: 255
+    NUM_CLASSES: 133
+    LOSS_WEIGHT: 1.0
+    CONVS_DIM: 256
+    MASK_DIM: 256
+    NORM: "GN"
+    # pixel decoder
+    PIXEL_DECODER_NAME: "MSDeformAttnPixelDecoder"
+    IN_FEATURES: ["res2", "res3", "res4", "res5"]
+    DEFORMABLE_TRANSFORMER_ENCODER_IN_FEATURES: ["res3", "res4", "res5"]
+    COMMON_STRIDE: 4
+    TRANSFORMER_ENC_LAYERS: 6
+  MASK_FORMER:
+    TRANSFORMER_DECODER_NAME: "MultiScaleMaskedTransformerDecoder"
+    TRANSFORMER_IN_FEATURE: "multi_scale_pixel_decoder"
+    DEEP_SUPERVISION: True
+    NO_OBJECT_WEIGHT: 0.1
+    CLASS_WEIGHT: 2.0
+    MASK_WEIGHT: 5.0
+    DICE_WEIGHT: 5.0
+    HIDDEN_DIM: 256
+    NUM_OBJECT_QUERIES: 100
+    NHEADS: 8
+    DROPOUT: 0.0
+    DIM_FEEDFORWARD: 2048
+    ENC_LAYERS: 0
+    PRE_NORM: False
+    ENFORCE_INPUT_PROJ: False
+    SIZE_DIVISIBILITY: 32
+    DEC_LAYERS: 10 # 9 decoder layers, add one for the loss on learnable query
+    TRAIN_NUM_POINTS: 12544
+    OVERSAMPLE_RATIO: 3.0
+    IMPORTANCE_SAMPLE_RATIO: 0.75
+    TEST:
+      SEMANTIC_ON: True
+      INSTANCE_ON: False
+      PANOPTIC_ON: False
+      OBJECT_MASK_THRESHOLD: 0.2
+      OVERLAP_THRESHOLD: 0.7

configs/mixed-mask-training/maftp/semantic/eval_a847.yaml

ADDED

@@ -0,0 +1,13 @@
+_BASE_: ./eval.yaml
+
+MODEL:
+  MASK_FORMER:
+    TEST:
+      PANOPTIC_ON: False
+      INSTANCE_ON: False
+
+DATASETS:
+  TEST: ("openvocab_ade20k_full_sem_seg_val",)
+
+
+OUTPUT_DIR: ./eval/a847

configs/mixed-mask-training/maftp/semantic/eval_pas20.yaml

ADDED

@@ -0,0 +1,12 @@
+_BASE_: ./eval.yaml
+
+MODEL:
+  MASK_FORMER:
+    TEST:
+      PANOPTIC_ON: False
+      INSTANCE_ON: False
+
+DATASETS:
+  TEST: ("openvocab_pascal20_sem_seg_val",)
+
+OUTPUT_DIR: ./eval/pas20

configs/mixed-mask-training/maftp/semantic/eval_pas21.yaml

ADDED

@@ -0,0 +1,13 @@
+_BASE_: ./eval.yaml
+
+MODEL:
+  MASK_FORMER:
+    TEST:
+      PANOPTIC_ON: False
+      INSTANCE_ON: False
+
+DATASETS:
+  TEST: ("openvocab_pascal21_sem_seg_val",)
+
+
+OUTPUT_DIR: ./eval/pas21

configs/mixed-mask-training/maftp/semantic/eval_pc459.yaml

ADDED

@@ -0,0 +1,12 @@
+_BASE_: ./eval.yaml
+
+MODEL:
+  MASK_FORMER:
+    TEST:
+      PANOPTIC_ON: False
+      INSTANCE_ON: False
+
+DATASETS:
+  TEST: ("openvocab_pascal_ctx459_sem_seg_val",)
+
+OUTPUT_DIR: ./eval/pc459

configs/mixed-mask-training/maftp/semantic/eval_pc59.yaml

ADDED

@@ -0,0 +1,12 @@
+_BASE_: ./eval.yaml
+
+MODEL:
+  MASK_FORMER:
+    TEST:
+      PANOPTIC_ON: False
+      INSTANCE_ON: False
+
+DATASETS:
+  TEST: ("openvocab_pascal_ctx59_sem_seg_val",)
+
+OUTPUT_DIR: ./eval/pc59
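Each of the eval configs above points at `./eval.yaml` through `_BASE_` and then overrides only a few keys (the test flags, the test dataset, and the output directory). As a rough illustration of that layering, the sketch below merges a child mapping over a parent mapping recursively. It is not the loader the framework actually uses: the function name `merge_over_base` is hypothetical, and the contents of `base_cfg` are invented placeholders, since `./eval.yaml` itself is not shown here.

```python
from copy import deepcopy


def merge_over_base(base, child):
    """Return a new dict in which `child` keys override `base` keys.

    Nested dicts are merged key by key; any other value is replaced wholesale.
    """
    merged = deepcopy(base)
    for key, value in child.items():
        if key == "_BASE_":
            # The pointer to the parent file is not a setting in its own right.
            continue
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_over_base(merged[key], value)
        else:
            merged[key] = deepcopy(value)
    return merged


# Hypothetical parent settings, standing in for whatever ./eval.yaml defines.
base_cfg = {
    "MODEL": {
        "MASK_FORMER": {
            "TEST": {"SEMANTIC_ON": True, "PANOPTIC_ON": True, "INSTANCE_ON": True}
        }
    },
    "DATASETS": {"TEST": ("placeholder_val_set",)},
}

# Overrides copied from the eval_pas20.yaml hunk.
child_cfg = {
    "_BASE_": "./eval.yaml",
    "MODEL": {"MASK_FORMER": {"TEST": {"PANOPTIC_ON": False, "INSTANCE_ON": False}}},
    "DATASETS": {"TEST": ("openvocab_pascal20_sem_seg_val",)},
    "OUTPUT_DIR": "./eval/pas20",
}

effective = merge_over_base(base_cfg, child_cfg)
print(effective["MODEL"]["MASK_FORMER"]["TEST"])
print(effective["DATASETS"]["TEST"], effective["OUTPUT_DIR"])
```

Under this reading, keys the child does not mention (here the placeholder `SEMANTIC_ON`) keep the parent's value, which is why the five eval files can stay at 12 or 13 lines each.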

configs/mixed-mask-training/maftp/semantic/train_semantic_base_eval_a150.yaml

ADDED

@@ -0,0 +1,50 @@
+# python train_net.py --config-file configs/semantic/train_semantic_base.yaml --num-gpus 8
+
+_BASE_: ../maskformer2_R50_bs16_50ep.yaml
+MODEL:
+  META_ARCHITECTURE: "MAFT_Plus" # FCCLIP MAFT_Plus
+  SEM_SEG_HEAD:
+    NAME: "FCCLIPHead"
+    NUM_CLASSES: 171
+  MASK_ADAPTER:
+    NAME: "MASKAdapterHead"
+    MASK_IN_CHANNELS: 16
+    NUM_CHANNELS: 768
+    USE_CHECKPOINT: False
+    NUM_OUTPUT_MAPS: 16
+    MASK_THRESHOLD: 0.5
+  FC_CLIP:
+    CLIP_MODEL_NAME: "convnext_base_w_320"
+    CLIP_PRETRAINED_WEIGHTS: "laion_aesthetic_s13b_b82k_augreg"
+    EMBED_DIM: 640
+    GEOMETRIC_ENSEMBLE_ALPHA: 0.7
+    GEOMETRIC_ENSEMBLE_BETA: 1.0
+  rc_weights: 0.1
+  MASK_FORMER:
+    TEST:
+      SEMANTIC_ON: True
+      INSTANCE_ON: False
+      PANOPTIC_ON: False
+      OBJECT_MASK_THRESHOLD: 0.0
+  cdt_params:
+  - 640
+  - 8
+
+INPUT:
+  DATASET_MAPPER_NAME: "mask_former_semantic" # mask_former_semantic coco_panoptic_lsj
+DATASETS:
+  TRAIN: ("openvocab_coco_2017_train_stuff_sem_seg",)
+  TEST: ('openvocab_ade20k_panoptic_val',)
+
+SOLVER:
+  IMS_PER_BATCH: 24
+  BASE_LR: 0.0001
+  STEPS: (43371, 47314)
+  MAX_ITER: 49286
+  CHECKPOINT_PERIOD: 2500
+TEST:
+  EVAL_PERIOD: 2500
+INPUT:
+  DATASET_MAPPER_NAME: "mask_former_semantic" #
+OUTPUT_DIR: ../evaluation/maftp-base/ade20k
+

configs/mixed-mask-training/maftp/semantic/train_semantic_large_eval_a150.yaml

ADDED

@@ -0,0 +1,46 @@
+# python train_net.py --config-file configs/semantic/train_semantic_large.yaml --num-gpus 8
+
+_BASE_: ../maskformer2_R50_bs16_50ep.yaml
+MODEL:
+  META_ARCHITECTURE: "MAFT_Plus" # FCCLIP MAFT_Plus
+  SEM_SEG_HEAD:
+    NAME: "FCCLIPHead"
+    NUM_CLASSES: 171
+  MASK_ADAPTER:
+    NAME: "MASKAdapterHead"
+    MASK_IN_CHANNELS: 16
+    NUM_CHANNELS: 768
+    USE_CHECKPOINT: False
+    NUM_OUTPUT_MAPS: 16
+    MASK_THRESHOLD: 0.5
+  FC_CLIP:
+    CLIP_MODEL_NAME: "convnext_large_d_320"
+    CLIP_PRETRAINED_WEIGHTS: "laion2b_s29b_b131k_ft_soup"
+    EMBED_DIM: 768
+    GEOMETRIC_ENSEMBLE_ALPHA: 0.8
+    GEOMETRIC_ENSEMBLE_BETA: 1.0
+  rc_weights: 0.1
+  MASK_FORMER:
+    TEST:
+      SEMANTIC_ON: True
+      INSTANCE_ON: True
+      PANOPTIC_ON: True
+      OBJECT_MASK_THRESHOLD: 0.0
+
+SOLVER:
+  IMS_PER_BATCH: 24
+  BASE_LR: 0.0001
+  STEPS: (43371, 47314)
+  MAX_ITER: 49286
+  CHECKPOINT_PERIOD: 2500
+TEST:
+  EVAL_PERIOD: 2500
+INPUT:
+  DATASET_MAPPER_NAME: "mask_former_semantic" # mask_former_semantic coco_panoptic_lsj
+DATASETS:
+  TRAIN: ("openvocab_coco_2017_train_stuff_sem_seg",) # openvocab_coco_2017_train_panoptic_with_sem_seg
+  TEST: ('openvocab_ade20k_panoptic_val',)
+
+
+
+OUTPUT_DIR: ../evaluation/maftp-large/ade20k
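Both training configs share the same `SOLVER` numbers (`IMS_PER_BATCH: 24`, `MAX_ITER: 49286`, `STEPS: (43371, 47314)`). To get a feel for what those iteration counts mean, the sketch below converts them into passes over the training set. The dataset size is an assumption that does not appear in the configs: it uses 118,287 images, the size of COCO train2017, on the guess that the `openvocab_coco_2017_train_stuff_sem_seg` split covers the same images. The helper name `iterations_to_epochs` is hypothetical.

```python
# Values copied from the SOLVER block of the two training configs.
IMS_PER_BATCH = 24
MAX_ITER = 49286
STEPS = (43371, 47314)

# Assumption: the training split holds 118,287 images (the size of COCO train2017).
# This number is not stated anywhere in the configs themselves.
ASSUMED_TRAIN_IMAGES = 118_287


def iterations_to_epochs(iterations, batch_size=IMS_PER_BATCH, dataset_size=ASSUMED_TRAIN_IMAGES):
    """Convert an iteration count into (fractional) passes over the dataset."""
    return iterations * batch_size / dataset_size


total_epochs = iterations_to_epochs(MAX_ITER)
step_epochs = [iterations_to_epochs(step) for step in STEPS]
step_fractions = [step / MAX_ITER for step in STEPS]

print(f"total: {total_epochs:.2f} epochs")
print("STEPS in epochs:", [round(e, 2) for e in step_epochs])
print("STEPS as a fraction of the run:", [round(f, 2) for f in step_fractions])
```

If the dataset-size assumption holds, the schedule works out to roughly ten passes over the data, with the two `STEPS` entries falling late in the run. Whether those entries actually trigger learning-rate drops depends on the scheduler selected in the base config, which is not shown in this hunk.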

demo/demo.py

ADDED

@@ -0,0 +1,201 @@
+"""
+This file may have been modified by Bytedance Ltd. and/or its affiliates (“Bytedance's Modifications”).
+All Bytedance's Modifications are Copyright (year) Bytedance Ltd. and/or its affiliates.
+
+Reference: https://github.com/facebookresearch/Mask2Former/blob/main/demo/demo.py
+"""
+
+import argparse
+import glob
+import multiprocessing as mp
+import os
+
+# fmt: off
+import sys
+sys.path.insert(1, os.path.join(sys.path[0], '..'))
+# fmt: on
+
+import tempfile
+import time
+import warnings
+
+import cv2
+import numpy as np
+import tqdm
+
+from detectron2.config import get_cfg
+from detectron2.data.detection_utils import read_image
+from detectron2.projects.deeplab import add_deeplab_config
+from detectron2.utils.logger import setup_logger
+
+from fcclip import add_maskformer2_config, add_fcclip_config, add_mask_adapter_config
+from predictor import VisualizationDemo
+
+
+# constants
+WINDOW_NAME = "mask-adapter demo"
+
+
+def setup_cfg(args):
+    # load config from file and command-line arguments
+    cfg = get_cfg()
+    add_deeplab_config(cfg)
+    add_maskformer2_config(cfg)
+    add_fcclip_config(cfg)
+    add_mask_adapter_config(cfg)
+    cfg.merge_from_file(args.config_file)
+    cfg.merge_from_list(args.opts)
+    cfg.freeze()
+    return cfg
+
+
+def get_parser():
+    parser = argparse.ArgumentParser(description="mask-adapter demo for builtin configs")
+    parser.add_argument(
+        "--config-file",
+        default="configs/mixed-mask-training/fc-clip/fcclip/fcclip_convnext_large_eval_ade20k.yaml",
+        metavar="FILE",
+        help="path to config file",
+    )
+    parser.add_argument("--webcam", action="store_true", help="Take inputs from webcam.")
+    parser.add_argument("--video-input", help="Path to video file.")
+    parser.add_argument(
+        "--input",
+        nargs="+",
+        help="A list of space separated input images; "
+        "or a single glob pattern such as 'directory/*.jpg'",
+    )
+    parser.add_argument(
+        "--output",
+        help="A file or directory to save output visualizations. "
+        "If not given, will show output in an OpenCV window.",
+    )
+
+    parser.add_argument(
+        "--confidence-threshold",
+        type=float,
+        default=0.5,
+        help="Minimum score for instance predictions to be shown",
+    )
+    parser.add_argument(
+        "--opts",
+        help="Modify config options using the command-line 'KEY VALUE' pairs",
+        default=[],
+        nargs=argparse.REMAINDER,
+    )
+    return parser
+
+
+def test_opencv_video_format(codec, file_ext):
+    with tempfile.TemporaryDirectory(prefix="video_format_test") as dir:
+        filename = os.path.join(dir, "test_file" + file_ext)
+        writer = cv2.VideoWriter(
+            filename=filename,
+            fourcc=cv2.VideoWriter_fourcc(*codec),
+            fps=float(30),
+            frameSize=(10, 10),
+            isColor=True,
+        )
+        [writer.write(np.zeros((10, 10, 3), np.uint8)) for _ in range(30)]
+        writer.release()
+        if os.path.isfile(filename):
+            return True
+        return False
+
+
+if __name__ == "__main__":
+    mp.set_start_method("spawn", force=True)
+    args = get_parser().parse_args()
+    setup_logger(name="fvcore")
+    logger = setup_logger()
+    logger.info("Arguments: " + str(args))
+
+    cfg = setup_cfg(args)
+
+    demo = VisualizationDemo(cfg)
+
+    if args.input:
+        if len(args.input) == 1:
+            args.input = glob.glob(os.path.expanduser(args.input[0]))
+            assert args.input, "The input path(s) was not found"
+        for path in tqdm.tqdm(args.input, disable=not args.output):
+            # use PIL, to be consistent with evaluation
+            img = read_image(path, format="BGR")
+            start_time = time.time()
+            predictions, visualized_output = demo.run_on_image(img)

| 126 |

+

logger.info(

|

| 127 |

+