W&B: Add advanced features tutorial (#4384)

* Improve docstrings and run names

* default wandb login prompt with timeout

* return key

* Update api_key check logic

* Properly support zipped dataset feature

* update docstring

* Revert tuorial change

* extend changes to log_dataset

* add run name

* bug fix

* bug fix

* Update comment

* fix import check

* remove unused import

* Hardcore .yaml file extension

* reduce code

* Reformat using pycharm

* Remove redundant try catch

* More refactoring and bug fixes

* retry

* Reformat using pycharm

* respect LOGGERS include list

* Initial readme update

* Update README.md

* Update README.md

* Update README.md

* Update README.md

* Update README.md

Co-authored-by: Glenn Jocher <[email protected]>

- utils/loggers/wandb/README.md +140 -0

utils/loggers/wandb/README.md

ADDED

|

@@ -0,0 +1,140 @@


| 1 |

+

📚 This guide explains how to use **Weights & Biases** (W&B) with YOLOv5 🚀.

|

| 2 |

+

* [About Weights & Biases](#about-weights--biases)

|

| 3 |

+

* [First-Time Setup](#first-time-setup)

|

| 4 |

+

* [Viewing Runs](#viewing-runs)

|

| 5 |

+

* [Advanced Usage: Dataset Versioning and Evaluation](#advanced-usage)

|

| 6 |

+

* [Reports: Share your work with the world!](#reports)

|

| 7 |

+

|

| 8 |

+

## About Weights & Biases

|

| 9 |

+

Think of [W&B](https://wandb.ai/site?utm_campaign=repo_yolo_wandbtutorial) like GitHub for machine learning models. With a few lines of code, save everything you need to debug, compare and reproduce your models — architecture, hyperparameters, git commits, model weights, GPU usage, and even datasets and predictions.

|

| 10 |

+

|

| 11 |

+

Used by top researchers including teams at OpenAI, Lyft, GitHub, and MILA, W&B is part of the new standard of best practices for machine learning. Here is how W&B can help you optimize your machine learning workflows:

|

| 12 |

+

|

| 13 |

+

* [Debug](https://wandb.ai/wandb/getting-started/reports/Visualize-Debug-Machine-Learning-Models--VmlldzoyNzY5MDk#Free-2) model performance in real time

|

| 14 |

+

* [GPU usage](https://wandb.ai/wandb/getting-started/reports/Visualize-Debug-Machine-Learning-Models--VmlldzoyNzY5MDk#System-4), visualized automatically

|

| 15 |

+

* [Custom charts](https://wandb.ai/wandb/customizable-charts/reports/Powerful-Custom-Charts-To-Debug-Model-Peformance--VmlldzoyNzY4ODI) for powerful, extensible visualization

|

| 16 |

+

* [Share insights](https://wandb.ai/wandb/getting-started/reports/Visualize-Debug-Machine-Learning-Models--VmlldzoyNzY5MDk#Share-8) interactively with collaborators

|

| 17 |

+

* [Optimize hyperparameters](https://docs.wandb.com/sweeps) efficiently

|

| 18 |

+

* [Track](https://docs.wandb.com/artifacts) datasets, pipelines, and production models

|

| 19 |

+

|

| 20 |

+

## First-Time Setup

|

| 21 |

+

<details open>

|

| 22 |

+

<summary> Toggle Details </summary>

|

| 23 |

+

When you first train, W&B will prompt you to create a new account and will generate an **API key** for you. If you are an existing user, you can retrieve your key from https://wandb.ai/authorize. This key tells W&B where to log your data. You only need to supply your key once; it is then remembered on the same device.

|

| 24 |

+

|

| 25 |

+

W&B will create a cloud **project** (default is 'YOLOv5') for your training runs, and each new training run will be provided a unique run **name** within that project as project/name. You can also manually set your project and run name as:

|

| 26 |

+

|

| 27 |

+

```shell

|

| 28 |

+

$ python train.py --project ... --name ...

|

| 29 |

+

```
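
As a rough illustration of how the two flags above compose, here is a minimal Python sketch that assembles the command as an argument list. The helper name `build_train_command` and the example values are hypothetical; only `train.py`, `--project`, and `--name` come from this guide.

```python
import shlex
import sys


def build_train_command(project=None, name=None):
    """Assemble a train.py invocation, adding only the flags that were set.

    Hypothetical helper for illustration; not part of YOLOv5 or W&B.
    """
    cmd = [sys.executable, "train.py"]
    if project:
        cmd += ["--project", project]
    if name:
        cmd += ["--name", name]
    return cmd


if __name__ == "__main__":
    # Example values are placeholders, not defaults taken from the repo.
    cmd = build_train_command(project="my-project", name="baseline-run")
    print(shlex.join(cmd[1:]))  # omit the interpreter path for readability
```

The returned list can be passed directly to `subprocess.run`, which avoids shell quoting issues when a project or run name contains spaces.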

|

| 30 |

+

|

| 31 |

+

<img alt="" width="800" src="https://user-images.githubusercontent.com/26833433/98183367-4acbc600-1f08-11eb-9a23-7266a4192355.jpg">

|

| 32 |

+

</details>

|

| 33 |

+

|

| 34 |

+

## Viewing Runs

|

| 35 |

+

<details open>

|

| 36 |

+

<summary> Toggle Details </summary>

|

| 37 |

+

Run information streams from your environment to the W&B cloud console as you train. This allows you to monitor and even cancel runs in <b>real time</b>. All important information is logged:

|

| 38 |

+

|

| 39 |

+

* Training & Validation losses

|

| 40 |

+

* Metrics: Precision, Recall, mAP@0.5, mAP@0.5:0.95

|

| 41 |

+

* Learning Rate over time

|

| 42 |

+

* A bounding box debugging panel, showing the training progress over time

|

| 43 |

+

* GPU: Type, **GPU Utilization**, power, temperature, **CUDA memory usage**

|

| 44 |

+

* System: Disk I/O, CPU utilization, RAM usage

|

| 45 |

+

* Your trained model as a W&B Artifact

|

| 46 |

+

* Environment: OS and Python types, Git repository and state, **training command**

|

| 47 |

+

|

| 48 |

+

<img alt="" width="800" src="https://user-images.githubusercontent.com/26833433/98184457-bd3da580-1f0a-11eb-8461-95d908a71893.jpg">

|

| 49 |

+

</details>

|

| 50 |

+

|

| 51 |

+

## Advanced Usage

|

| 52 |

+

You can leverage the W&B Artifacts and Tables integration to visualize and manage your datasets, models, and training evaluations. Here are some quick examples to get you started.

|

| 53 |

+

<details open>

|

| 54 |

+

<h3>1. Visualize and Version Datasets</h3>

|

| 55 |

+

Log, visualize, dynamically query, and understand your data with <a href='https://docs.wandb.ai/guides/data-vis/tables'>W&B Tables</a>. You can use the following command to log your dataset as a W&B Table. This will generate a <code>{dataset}_wandb.yaml</code> file, which can be used to train from the dataset artifact.

|

| 56 |

+

<details>

|

| 57 |

+

<summary> <b>Usage</b> </summary>

|

| 58 |

+

<b>Code</b> <code> $ python utils/loggers/wandb/log_dataset.py --project ... --name ... --data .. </code>

|

| 59 |

+

|

| 60 |

+

|

| 61 |

+

</details>
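
The `{dataset}_wandb.yaml` naming described above can be sketched in a few lines of Python. This is only an illustration: the helper `wandb_config_path` is hypothetical, and it assumes the generated file sits next to the original dataset config, which this guide does not state.

```python
from pathlib import Path


def wandb_config_path(data_yaml):
    """Return the expected `{dataset}_wandb.yaml` path for a dataset config.

    Assumption (not confirmed by this guide): the generated file is written
    alongside the original config file.
    """
    path = Path(data_yaml)
    return path.with_name(f"{path.stem}_wandb.yaml")


if __name__ == "__main__":
    # "data/coco128.yaml" is an example path chosen for illustration.
    print(wandb_config_path("data/coco128.yaml").as_posix())
```

A helper like this can be handy in scripts that first log a dataset and then start training from the resulting config.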

|

| 62 |

+

|

| 63 |

+

<h3> 2: Train and Log Evaluation simultaneously </h3>

|

| 64 |

+

This is an extension of the previous section, but it also starts training after uploading the dataset. <b>This also logs the evaluation Table.</b>

|

| 65 |

+

The evaluation Table compares your predictions and ground truths across the validation set for each epoch. It uses references to the already uploaded datasets,

|

| 66 |

+

so no images will be uploaded from your system more than once.

|

| 67 |

+

<details>

|

| 68 |

+

<summary> <b>Usage</b> </summary>

|

| 69 |

+

<b>Code</b> <code> $ python train.py --data .. --upload_dataset </code>

|

| 70 |

+

|

| 71 |

+

|

| 72 |

+

</details>

|

| 73 |

+

|

| 74 |

+

<h3> 3: Train using dataset artifact </h3>

|

| 75 |

+

When you upload a dataset as described in the first section, you get a new config file with `_wandb` appended to its name. This file contains the information that

|

| 76 |

+

can be used to train a model directly from the dataset artifact. <b>This also logs the evaluation Table.</b>

|

| 77 |

+

<details>

|

| 78 |

+

<summary> <b>Usage</b> </summary>

|

| 79 |

+

<b>Code</b> <code> $ python train.py --data {data}_wandb.yaml </code>

|

| 80 |

+

|

| 81 |

+

|

| 82 |

+

</details>

|

| 83 |

+

|

| 84 |

+

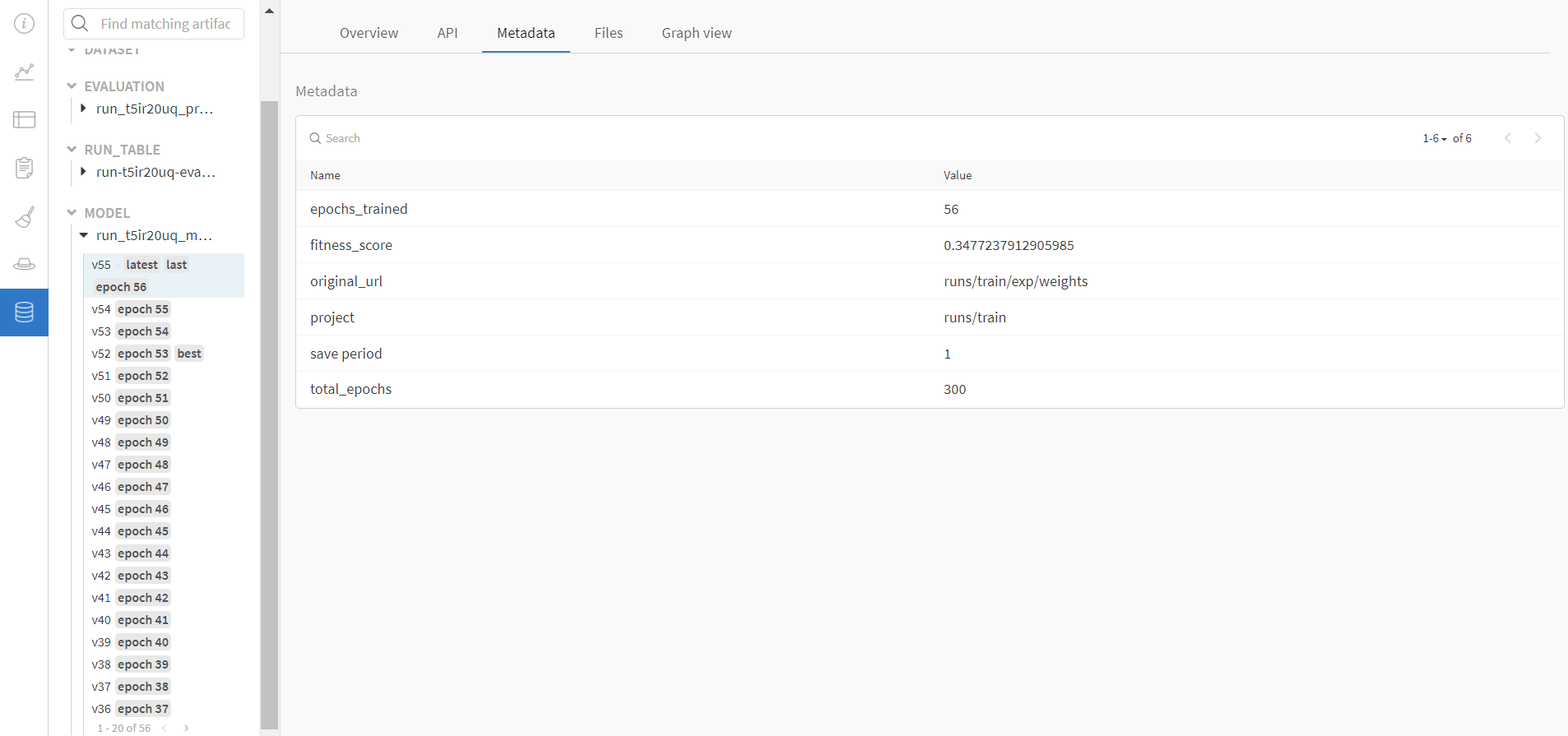

<h3> 4: Save model checkpoints as artifacts </h3>

|

| 85 |

+

To enable saving and versioning checkpoints of your experiment, pass `--save_period n` with the base command, where `n` represents the checkpoint interval.

|

| 86 |

+

You can also log both the dataset and model checkpoints simultaneously. If `--save_period` is not passed, only the final model will be logged.

|

| 87 |

+

|

| 88 |

+

<details>

|

| 89 |

+

<summary> <b>Usage</b> </summary>

|

| 90 |

+

<b>Code</b> <code> $ python train.py --save_period 1 </code>

|

| 91 |

+

|

| 92 |

+

|

| 93 |

+

</details>
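
To make the effect of the checkpoint interval concrete, here is a minimal Python sketch of which epochs would produce a logged checkpoint. It is not YOLOv5's actual implementation: the function `checkpoint_epochs` is hypothetical, and it assumes that `n` counts epochs, that a checkpoint is logged after every `n`-th epoch, and that the final model is always logged.

```python
def checkpoint_epochs(total_epochs, save_period):
    """List the 1-based epochs after which a checkpoint would be logged.

    Assumptions for this sketch: a checkpoint is logged every `save_period`
    epochs, and the final model is always logged. A non-positive period
    means only the final model is logged.
    """
    if total_epochs < 1:
        return []
    epochs = []
    if save_period > 0:
        epochs = list(range(save_period, total_epochs + 1, save_period))
    # Always include the final epoch exactly once.
    if not epochs or epochs[-1] != total_epochs:
        epochs.append(total_epochs)
    return epochs


if __name__ == "__main__":
    print(checkpoint_epochs(total_epochs=10, save_period=3))
```

Under these assumptions, a smaller interval gives finer-grained versions to resume from at the cost of more stored artifacts.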

|

| 94 |

+

|

| 95 |

+

</details>

|

| 96 |

+

|

| 97 |

+

<h3> 5: Resume runs from checkpoint artifacts. </h3>

|

| 98 |

+

Any run can be resumed from artifacts if the <code>--resume</code> argument starts with the <code>wandb-artifact://</code> prefix followed by the run path, i.e., <code>wandb-artifact://username/project/runid</code>. This doesn't require the model checkpoint to be present on the local system.

|

| 99 |

+

|

| 100 |

+

<details>

|

| 101 |

+

<summary> <b>Usage</b> </summary>

|

| 102 |

+

<b>Code</b> <code> $ python train.py --resume wandb-artifact://{run_path} </code>

|

| 103 |

+

|

| 104 |

+

|

| 105 |

+

</details>
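
The shape of the resume argument can be illustrated with a small Python sketch that splits it into its three parts. The names `parse_resume_uri` and `RunPath` are hypothetical and are not the code YOLOv5 uses internally; the sketch only mirrors the `wandb-artifact://username/project/runid` format described above.

```python
from typing import NamedTuple

PREFIX = "wandb-artifact://"


class RunPath(NamedTuple):
    username: str
    project: str
    run_id: str


def parse_resume_uri(uri):
    """Split `wandb-artifact://username/project/runid` into its three parts.

    Hypothetical helper for illustration only.
    """
    if not uri.startswith(PREFIX):
        raise ValueError(f"expected a URI starting with {PREFIX!r}")
    parts = uri[len(PREFIX):].strip("/").split("/")
    if len(parts) != 3 or not all(parts):
        raise ValueError("expected username/project/runid after the prefix")
    return RunPath(*parts)


if __name__ == "__main__":
    # The user, project, and run id below are made-up example values.
    print(parse_resume_uri("wandb-artifact://alice/YOLOv5/abc123"))
```

Validating the argument up front gives a clear error for a malformed run path before any download is attempted.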

|

| 106 |

+

|

| 107 |

+

<h3> 6: Resume runs from dataset artifact & checkpoint artifacts. </h3>

|

| 108 |

+

<b> Local dataset or model checkpoints are not required. This can be used to resume runs directly on a different device. </b>

|

| 109 |

+

The syntax is the same as in the previous section, but you'll need to log both the dataset and model checkpoints as artifacts, i.e., either set <code>--upload_dataset</code> or

|

| 110 |

+

train from a <code>_wandb.yaml</code> file, and set <code>--save_period</code>.

|

| 111 |

+

|

| 112 |

+

<details>

|

| 113 |

+

<summary> <b>Usage</b> </summary>

|

| 114 |

+

<b>Code</b> <code> $ python train.py --resume wandb-artifact://{run_path} </code>

|

| 115 |

+

|

| 116 |

+

|

| 117 |

+

</details>

|

| 118 |

+

|

| 119 |

+

</details>

|

| 120 |

+

|

| 121 |

+

|

| 122 |

+

|

| 123 |

+

<h3> Reports </h3>

|

| 124 |

+

W&B Reports can be created from your saved runs for sharing online. Once a report is created, you will receive a link you can use to publicly share your results. Here is an example report created from the COCO128 tutorial trainings of all four YOLOv5 models ([link](https://wandb.ai/glenn-jocher/yolov5_tutorial/reports/YOLOv5-COCO128-Tutorial-Results--VmlldzozMDI5OTY)).

|

| 125 |

+

|

| 126 |

+

<img alt="" width="800" src="https://user-images.githubusercontent.com/26833433/98185222-794ba000-1f0c-11eb-850f-3e9c45ad6949.jpg">

|

| 127 |

+

|

| 128 |

+

## Environments

|

| 129 |

+

YOLOv5 may be run in any of the following up-to-date verified environments (with all dependencies including [CUDA](https://developer.nvidia.com/cuda)/[CUDNN](https://developer.nvidia.com/cudnn), [Python](https://www.python.org/) and [PyTorch](https://pytorch.org/) preinstalled):

|

| 130 |

+

|

| 131 |

+

* **Google Colab and Kaggle** notebooks with free GPU: [Open in Colab](https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb) [Open in Kaggle](https://www.kaggle.com/ultralytics/yolov5)

|

| 132 |

+

* **Google Cloud** Deep Learning VM. See [GCP Quickstart Guide](https://github.com/ultralytics/yolov5/wiki/GCP-Quickstart)

|

| 133 |

+

* **Amazon** Deep Learning AMI. See [AWS Quickstart Guide](https://github.com/ultralytics/yolov5/wiki/AWS-Quickstart)

|

| 134 |

+

* **Docker Image**. See [Docker Quickstart Guide](https://github.com/ultralytics/yolov5/wiki/Docker-Quickstart) and [Docker Hub](https://hub.docker.com/r/ultralytics/yolov5)

|

| 135 |

+

|

| 136 |

+

## Status

|

| 137 |

+

|

| 138 |

+

|

| 139 |

+

If this badge is green, all [YOLOv5 GitHub Actions](https://github.com/ultralytics/yolov5/actions) Continuous Integration (CI) tests are currently passing. CI tests verify correct operation of YOLOv5 training ([train.py](https://github.com/ultralytics/yolov5/blob/master/train.py)), validation ([val.py](https://github.com/ultralytics/yolov5/blob/master/val.py)), inference ([detect.py](https://github.com/ultralytics/yolov5/blob/master/detect.py)) and export ([export.py](https://github.com/ultralytics/yolov5/blob/master/export.py)) on macOS, Windows, and Ubuntu every 24 hours and on every commit.

|

| 140 |

+

|