W&B: refactor W&B tables (#5737)

* update

* [pre-commit.ci] auto fixes from pre-commit.com hooks

for more information, see https://pre-commit.ci

* reformat

* Single-line argparser argument

* Update README.md

* [pre-commit.ci] auto fixes from pre-commit.com hooks

for more information, see https://pre-commit.ci

* Update README.md

* [pre-commit.ci] auto fixes from pre-commit.com hooks

for more information, see https://pre-commit.ci

Co-authored-by: pre-commit-ci[bot] <66853113+pre-commit-ci[bot]@users.noreply.github.com>

Co-authored-by: Glenn Jocher <[email protected]>

- train.py +1 -1

- utils/loggers/wandb/README.md +19 -14

- utils/loggers/wandb/wandb_utils.py +48 -20

train.py

CHANGED

|

@@ -475,7 +475,7 @@ def parse_opt(known=False):

|

|

| 475 |

|

| 476 |

# Weights & Biases arguments

|

| 477 |

parser.add_argument('--entity', default=None, help='W&B: Entity')

|

| 478 |

-

parser.add_argument('--upload_dataset',

|

| 479 |

parser.add_argument('--bbox_interval', type=int, default=-1, help='W&B: Set bounding-box image logging interval')

|

| 480 |

parser.add_argument('--artifact_alias', type=str, default='latest', help='W&B: Version of dataset artifact to use')

|

| 481 |

|

|

|

|

| 475 |

|

| 476 |

# Weights & Biases arguments

|

| 477 |

parser.add_argument('--entity', default=None, help='W&B: Entity')

|

| 478 |

+

parser.add_argument('--upload_dataset', nargs='?', const=True, default=False, help='W&B: Upload data, "val" option')

|

| 479 |

parser.add_argument('--bbox_interval', type=int, default=-1, help='W&B: Set bounding-box image logging interval')

|

| 480 |

parser.add_argument('--artifact_alias', type=str, default='latest', help='W&B: Version of dataset artifact to use')

|

| 481 |

|
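The new `--upload_dataset` definition turns the option into a three-state argument. The sketch below isolates that one `add_argument` call to show what each invocation parses to; the helper names `build_parser` and `upload_mode` are made up for illustration and are not part of `train.py`.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    # Only the option changed in this hunk; the rest of parse_opt() is omitted.
    parser = argparse.ArgumentParser()
    parser.add_argument('--upload_dataset', nargs='?', const=True, default=False,
                        help='W&B: Upload data, "val" option')
    return parser


def upload_mode(argv):
    """Return the parsed value of --upload_dataset for a given argument list."""
    return build_parser().parse_args(argv).upload_dataset


print(upload_mode([]))                           # flag omitted -> default (False)
print(upload_mode(['--upload_dataset']))         # bare flag -> const (True)
print(upload_mode(['--upload_dataset', 'val']))  # flag with value -> the string 'val'
```

Because `argparse` accepts unambiguous prefixes by default, `--upload_data val` parses the same way as `--upload_dataset val`, provided no other option shares that prefix.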

utils/loggers/wandb/README.md

CHANGED

|

@@ -2,6 +2,7 @@

|

|

| 2 |

* [About Weights & Biases](#about-weights-&-biases)

|

| 3 |

* [First-Time Setup](#first-time-setup)

|

| 4 |

* [Viewing runs](#viewing-runs)

|

|

|

|

| 5 |

* [Advanced Usage: Dataset Versioning and Evaluation](#advanced-usage)

|

| 6 |

* [Reports: Share your work with the world!](#reports)

|

| 7 |

|

|

@@ -49,31 +50,36 @@ Run information streams from your environment to the W&B cloud console as you tr

|

|

| 49 |

* Environment: OS and Python types, Git repository and state, **training command**

|

| 50 |

|

| 51 |

<p align="center"><img width="900" alt="Weights & Biases dashboard" src="https://user-images.githubusercontent.com/26833433/135390767-c28b050f-8455-4004-adb0-3b730386e2b2.png"></p>

|

|

|

|

| 52 |

|

|

|

|

|

|

|

|

|

|

| 53 |

|

| 54 |

-

|

|

|

|

| 55 |

|

| 56 |

## Advanced Usage

|

| 57 |

You can leverage W&B artifacts and Tables integration to easily visualize and manage your datasets, models and training evaluations. Here are some quick examples to get you started.

|

| 58 |

<details open>

|

| 59 |

-

<h3>1

|

| 60 |

-

|

| 61 |

-

|

|

|

|

|

|

|

| 62 |

<summary> <b>Usage</b> </summary>

|

| 63 |

-

<b>Code</b> <code> $ python

|

| 64 |

|

| 65 |

-

|

| 66 |

</details>

|

| 67 |

|

| 68 |

-

<h3>

|

| 69 |

-

|

| 70 |

-

Evaluation table compares your predictions and ground truths across the validation set for each epoch. It uses the references to the already uploaded datasets,

|

| 71 |

-

so no images will be uploaded from your system more than once.

|

| 72 |

<details>

|

| 73 |

<summary> <b>Usage</b> </summary>

|

| 74 |

-

<b>Code</b> <code> $ python utils/logger/wandb/log_dataset.py --data ..

|

| 75 |

|

| 76 |

-

|

| 87 |

</details>

|

|

@@ -123,7 +129,6 @@ Any run can be resumed using artifacts if the <code>--resume</code> argument sta

|

|

| 123 |

|

| 124 |

</details>

|

| 125 |

|

| 126 |

-

|

| 127 |

<h3> Reports </h3>

|

| 128 |

W&B Reports can be created from your saved runs for sharing online. Once a report is created you will receive a link you can use to publicly share your results. Here is an example report created from the COCO128 tutorial trainings of all four YOLOv5 models ([link](https://wandb.ai/glenn-jocher/yolov5_tutorial/reports/YOLOv5-COCO128-Tutorial-Results--VmlldzozMDI5OTY)).

|

| 129 |

|

|

|

|

| 2 |

* [About Weights & Biases](#about-weights-&-biases)

|

| 3 |

* [First-Time Setup](#first-time-setup)

|

| 4 |

* [Viewing runs](#viewing-runs)

|

| 5 |

+

* [Disabling wandb](#disabling-wandb)

|

| 6 |

* [Advanced Usage: Dataset Versioning and Evaluation](#advanced-usage)

|

| 7 |

* [Reports: Share your work with the world!](#reports)

|

| 8 |

|

|

|

|

| 50 |

* Environment: OS and Python types, Git repository and state, **training command**

|

| 51 |

|

| 52 |

<p align="center"><img width="900" alt="Weights & Biases dashboard" src="https://user-images.githubusercontent.com/26833433/135390767-c28b050f-8455-4004-adb0-3b730386e2b2.png"></p>

|

| 53 |

+

</details>

|

| 54 |

|

| 55 |

+

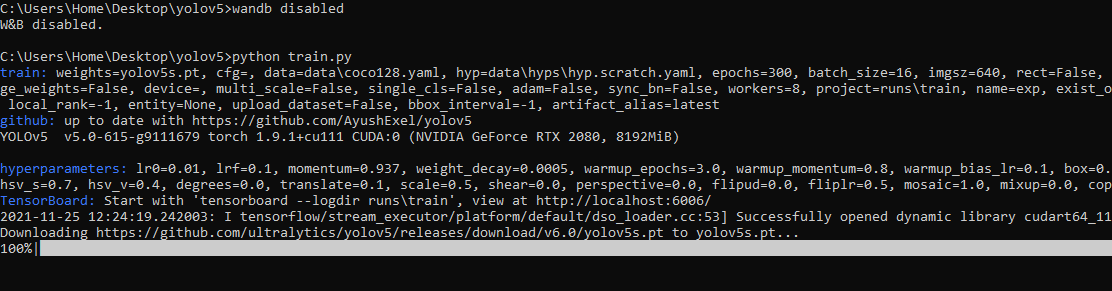

## Disabling wandb

|

| 56 |

+

* training after running `wandb disabled` inside that directory creates no wandb run

|

| 57 |

+

|

| 58 |

|

| 59 |

+

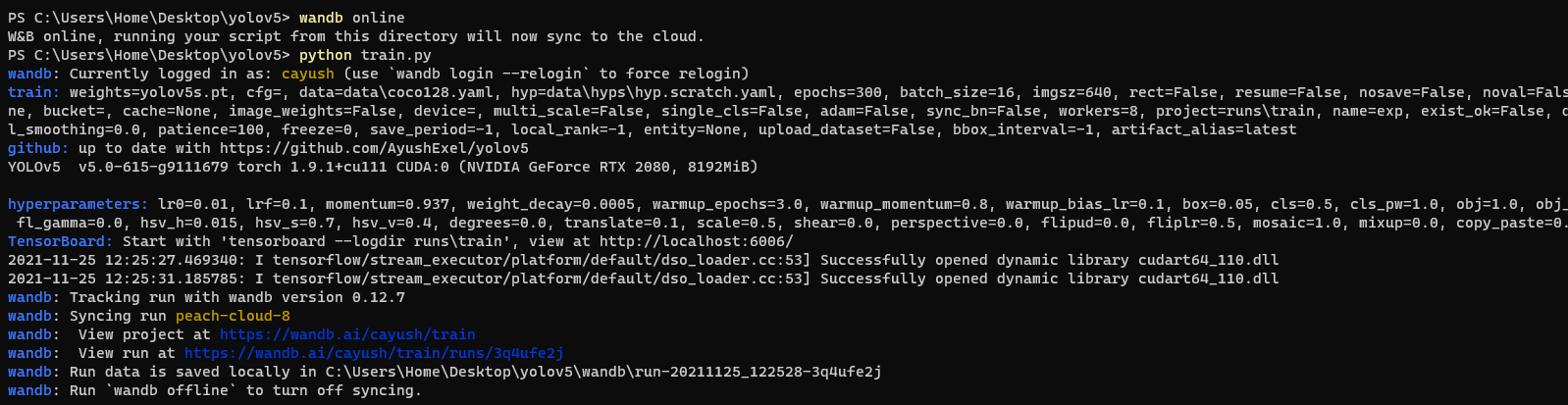

* To enable wandb again, run `wandb online`

|

| 60 |

+

|

| 61 |

|

| 62 |

## Advanced Usage

|

| 63 |

You can leverage W&B artifacts and Tables integration to easily visualize and manage your datasets, models and training evaluations. Here are some quick examples to get you started.

|

| 64 |

<details open>

|

| 65 |

+

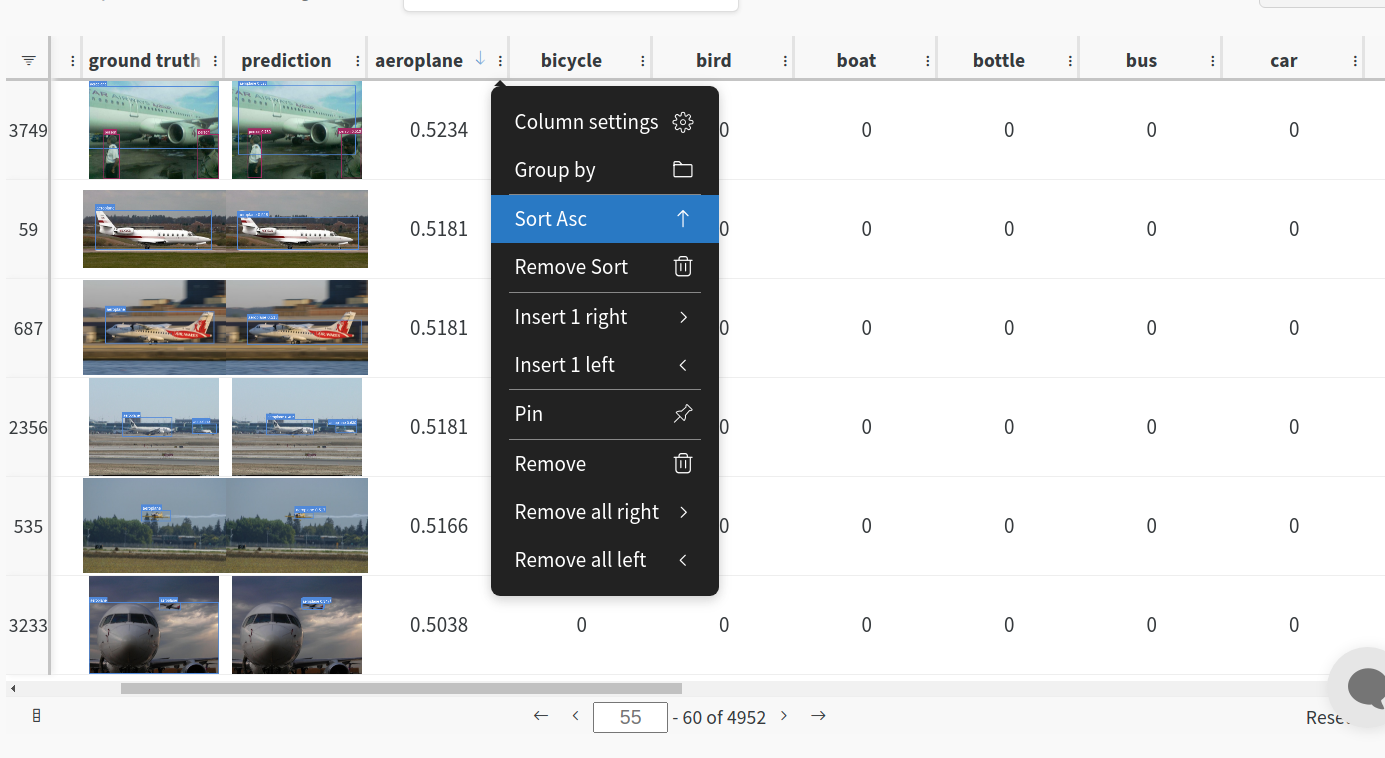

<h3> 1: Train and Log Evaluation simultaneously </h3>

|

| 66 |

+

This is an extension of the previous section, but it also starts training after uploading the dataset. <b> This also logs the evaluation Table</b>

|

| 67 |

+

Evaluation table compares your predictions and ground truths across the validation set for each epoch. It uses the references to the already uploaded datasets,

|

| 68 |

+

so no images will be uploaded from your system more than once.

|

| 69 |

+

<details open>

|

| 70 |

<summary> <b>Usage</b> </summary>

|

| 71 |

+

<b>Code</b> <code> $ python train.py --upload_data val</code>

|

| 72 |

|

| 73 |

+

|

| 74 |

</details>

|

| 75 |

|

| 76 |

+

<h3>2. Visualize and Version Datasets</h3>

|

| 77 |

+

Log, visualize, dynamically query, and understand your data with <a href='https://docs.wandb.ai/guides/data-vis/tables'>W&B Tables</a>. You can use the following command to log your dataset as a W&B Table. This will generate a <code>{dataset}_wandb.yaml</code> file which can be used to train from dataset artifact.

|

|

|

|

|

|

|

| 78 |

<details>

|

| 79 |

<summary> <b>Usage</b> </summary>

|

| 80 |

+

<b>Code</b> <code> $ python utils/loggers/wandb/log_dataset.py --project ... --name ... --data .. </code>

|

| 81 |

|

| 82 |

+

|

| 83 |

</details>

|

| 84 |

|

| 85 |

<h3> 3: Train using dataset artifact </h3>

|

|

|

|

| 87 |

can be used to train a model directly from the dataset artifact. <b> This also logs evaluation </b>

|

| 88 |

<details>

|

| 89 |

<summary> <b>Usage</b> </summary>

|

| 90 |

+

<b>Code</b> <code> $ python train.py --data {data}_wandb.yaml </code>

|

| 91 |

|

| 92 |

|

| 93 |

</details>

|

|

|

|

| 129 |

|

| 130 |

</details>

|

| 131 |

|

|

|

|

| 132 |

<h3> Reports </h3>

|

| 133 |

W&B Reports can be created from your saved runs for sharing online. Once a report is created you will receive a link you can use to publicly share your results. Here is an example report created from the COCO128 tutorial trainings of all four YOLOv5 models ([link](https://wandb.ai/glenn-jocher/yolov5_tutorial/reports/YOLOv5-COCO128-Tutorial-Results--VmlldzozMDI5OTY)).

|

| 134 |

|

utils/loggers/wandb/wandb_utils.py

CHANGED

|

@@ -202,7 +202,6 @@ class WandbLogger():

|

|

| 202 |

config_path = self.log_dataset_artifact(opt.data,

|

| 203 |

opt.single_cls,

|

| 204 |

'YOLOv5' if opt.project == 'runs/train' else Path(opt.project).stem)

|

| 205 |

-

LOGGER.info(f"Created dataset config file {config_path}")

|

| 206 |

with open(config_path, errors='ignore') as f:

|

| 207 |

wandb_data_dict = yaml.safe_load(f)

|

| 208 |

return wandb_data_dict

|

|

@@ -244,7 +243,9 @@ class WandbLogger():

|

|

| 244 |

|

| 245 |

if self.val_artifact is not None:

|

| 246 |

self.result_artifact = wandb.Artifact("run_" + wandb.run.id + "_progress", "evaluation")

|

| 247 |

-

|

|

|

|

|

|

|

| 248 |

self.val_table = self.val_artifact.get("val")

|

| 249 |

if self.val_table_path_map is None:

|

| 250 |

self.map_val_table_path()

|

|

@@ -331,28 +332,41 @@ class WandbLogger():

|

|

| 331 |

returns:

|

| 332 |

the new .yaml file with artifact links. it can be used to start training directly from artifacts

|

| 333 |

"""

|

|

|

|

|

|

|

| 334 |

self.data_dict = check_dataset(data_file) # parse and check

|

| 335 |

data = dict(self.data_dict)

|

| 336 |

nc, names = (1, ['item']) if single_cls else (int(data['nc']), data['names'])

|

| 337 |

names = {k: v for k, v in enumerate(names)} # to index dictionary

|

| 338 |

-

|

| 339 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 340 |

self.val_artifact = self.create_dataset_table(LoadImagesAndLabels(

|

| 341 |

data['val'], rect=True, batch_size=1), names, name='val') if data.get('val') else None

|

| 342 |

-

if data.get('train'):

|

| 343 |

-

data['train'] = WANDB_ARTIFACT_PREFIX + str(Path(project) / 'train')

|

| 344 |

if data.get('val'):

|

| 345 |

data['val'] = WANDB_ARTIFACT_PREFIX + str(Path(project) / 'val')

|

| 346 |

-

|

| 347 |

-

path = (

|

| 348 |

-

|

| 349 |

-

|

| 350 |

-

|

| 351 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 352 |

|

| 353 |

if self.job_type == 'Training': # builds correct artifact pipeline graph

|

|

|

|

|

|

|

|

|

|

| 354 |

self.wandb_run.use_artifact(self.val_artifact)

|

| 355 |

-

self.wandb_run.use_artifact(self.train_artifact)

|

| 356 |

self.val_artifact.wait()

|

| 357 |

self.val_table = self.val_artifact.get('val')

|

| 358 |

self.map_val_table_path()

|

|

@@ -371,7 +385,7 @@ class WandbLogger():

|

|

| 371 |

for i, data in enumerate(tqdm(self.val_table.data)):

|

| 372 |

self.val_table_path_map[data[3]] = data[0]

|

| 373 |

|

| 374 |

-

def create_dataset_table(self, dataset: LoadImagesAndLabels, class_to_id: Dict[int,str], name: str = 'dataset'):

|

| 375 |

"""

|

| 376 |

Create and return W&B artifact containing W&B Table of the dataset.

|

| 377 |

|

|

@@ -424,23 +438,34 @@ class WandbLogger():

|

|

| 424 |

"""

|

| 425 |

class_set = wandb.Classes([{'id': id, 'name': name} for id, name in names.items()])

|

| 426 |

box_data = []

|

| 427 |

-

|

|

|

|

| 428 |

for *xyxy, conf, cls in predn.tolist():

|

| 429 |

if conf >= 0.25:

|

|

|

|

| 430 |

box_data.append(

|

| 431 |

{"position": {"minX": xyxy[0], "minY": xyxy[1], "maxX": xyxy[2], "maxY": xyxy[3]},

|

| 432 |

-

"class_id":

|

| 433 |

"box_caption": f"{names[cls]} {conf:.3f}",

|

| 434 |

"scores": {"class_score": conf},

|

| 435 |

"domain": "pixel"})

|

| 436 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 437 |

boxes = {"predictions": {"box_data": box_data, "class_labels": names}} # inference-space

|

| 438 |

id = self.val_table_path_map[Path(path).name]

|

| 439 |

self.result_table.add_data(self.current_epoch,

|

| 440 |

id,

|

| 441 |

self.val_table.data[id][1],

|

| 442 |

wandb.Image(self.val_table.data[id][1], boxes=boxes, classes=class_set),

|

| 443 |

-

|

| 444 |

)

|

| 445 |

|

| 446 |

def val_one_image(self, pred, predn, path, names, im):

|

|

@@ -490,7 +515,8 @@ class WandbLogger():

|

|

| 490 |

try:

|

| 491 |

wandb.log(self.log_dict)

|

| 492 |

except BaseException as e:

|

| 493 |

-

LOGGER.info(

|

|

|

|

| 494 |

self.wandb_run.finish()

|

| 495 |

self.wandb_run = None

|

| 496 |

|

|

@@ -502,7 +528,9 @@ class WandbLogger():

|

|

| 502 |

('best' if best_result else '')])

|

| 503 |

|

| 504 |

wandb.log({"evaluation": self.result_table})

|

| 505 |

-

|

|

|

|

|

|

|

| 506 |

self.result_artifact = wandb.Artifact("run_" + wandb.run.id + "_progress", "evaluation")

|

| 507 |

|

| 508 |

def finish_run(self):

|

|

|

|

| 202 |

config_path = self.log_dataset_artifact(opt.data,

|

| 203 |

opt.single_cls,

|

| 204 |

'YOLOv5' if opt.project == 'runs/train' else Path(opt.project).stem)

|

|

|

|

| 205 |

with open(config_path, errors='ignore') as f:

|

| 206 |

wandb_data_dict = yaml.safe_load(f)

|

| 207 |

return wandb_data_dict

|

|

|

|

| 243 |

|

| 244 |

if self.val_artifact is not None:

|

| 245 |

self.result_artifact = wandb.Artifact("run_" + wandb.run.id + "_progress", "evaluation")

|

| 246 |

+

columns = ["epoch", "id", "ground truth", "prediction"]

|

| 247 |

+

columns.extend(self.data_dict['names'])

|

| 248 |

+

self.result_table = wandb.Table(columns)

|

| 249 |

self.val_table = self.val_artifact.get("val")

|

| 250 |

if self.val_table_path_map is None:

|

| 251 |

self.map_val_table_path()

|

|

|

|

| 332 |

returns:

|

| 333 |

the new .yaml file with artifact links. it can be used to start training directly from artifacts

|

| 334 |

"""

|

| 335 |

+

upload_dataset = self.wandb_run.config.upload_dataset

|

| 336 |

+

log_val_only = isinstance(upload_dataset, str) and upload_dataset == 'val'

|

| 337 |

self.data_dict = check_dataset(data_file) # parse and check

|

| 338 |

data = dict(self.data_dict)

|

| 339 |

nc, names = (1, ['item']) if single_cls else (int(data['nc']), data['names'])

|

| 340 |

names = {k: v for k, v in enumerate(names)} # to index dictionary

|

| 341 |

+

|

| 342 |

+

# log train set

|

| 343 |

+

if not log_val_only:

|

| 344 |

+

self.train_artifact = self.create_dataset_table(LoadImagesAndLabels(

|

| 345 |

+

data['train'], rect=True, batch_size=1), names, name='train') if data.get('train') else None

|

| 346 |

+

if data.get('train'):

|

| 347 |

+

data['train'] = WANDB_ARTIFACT_PREFIX + str(Path(project) / 'train')

|

| 348 |

+

|

| 349 |

self.val_artifact = self.create_dataset_table(LoadImagesAndLabels(

|

| 350 |

data['val'], rect=True, batch_size=1), names, name='val') if data.get('val') else None

|

|

|

|

|

|

|

| 351 |

if data.get('val'):

|

| 352 |

data['val'] = WANDB_ARTIFACT_PREFIX + str(Path(project) / 'val')

|

| 353 |

+

|

| 354 |

+

path = Path(data_file)

|

| 355 |

+

# create a _wandb.yaml file with artifacts links if both train and test set are logged

|

| 356 |

+

if not log_val_only:

|

| 357 |

+

path = (path.stem if overwrite_config else path.stem + '_wandb') + '.yaml' # updated data.yaml path

|

| 358 |

+

path = Path('data') / path

|

| 359 |

+

data.pop('download', None)

|

| 360 |

+

data.pop('path', None)

|

| 361 |

+

with open(path, 'w') as f:

|

| 362 |

+

yaml.safe_dump(data, f)

|

| 363 |

+

LOGGER.info(f"Created dataset config file {path}")

|

| 364 |

|

| 365 |

if self.job_type == 'Training': # builds correct artifact pipeline graph

|

| 366 |

+

if not log_val_only:

|

| 367 |

+

self.wandb_run.log_artifact(

|

| 368 |

+

self.train_artifact) # calling use_artifact downloads the dataset. NOT NEEDED!

|

| 369 |

self.wandb_run.use_artifact(self.val_artifact)

|

|

|

|

| 370 |

self.val_artifact.wait()

|

| 371 |

self.val_table = self.val_artifact.get('val')

|

| 372 |

self.map_val_table_path()

|

|
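The hunk above gates the train-set upload and the `_wandb.yaml` file on a `log_val_only` check, and derives the output path from the input data file. Here is a minimal standalone sketch of those two pieces of logic. The function names `is_val_only` and `wandb_config_path` are hypothetical, and the `False` default for `overwrite_config` is an assumption, since the method signature is not shown in the diff.

```python
from pathlib import Path


def is_val_only(upload_dataset) -> bool:
    # Mirrors the check in the hunk: only the exact string 'val' selects
    # validation-only logging; True (bare flag) or False do not.
    return isinstance(upload_dataset, str) and upload_dataset == 'val'


def wandb_config_path(data_file: str, overwrite_config: bool = False) -> Path:
    # Same path arithmetic as the hunk: keep the stem, append '_wandb'
    # unless overwriting, and place the result under data/.
    stem = Path(data_file).stem
    name = (stem if overwrite_config else stem + '_wandb') + '.yaml'
    return Path('data') / name


print(is_val_only('val'), is_val_only(True))
print(wandb_config_path('data/coco128.yaml'))
print(wandb_config_path('data/coco128.yaml', overwrite_config=True))
```

In the committed code the file is written only when `log_val_only` is false, so a `--upload_dataset val` run produces no new dataset config.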

|

|

| 385 |

for i, data in enumerate(tqdm(self.val_table.data)):

|

| 386 |

self.val_table_path_map[data[3]] = data[0]

|

| 387 |

|

| 388 |

+

def create_dataset_table(self, dataset: LoadImagesAndLabels, class_to_id: Dict[int, str], name: str = 'dataset'):

|

| 389 |

"""

|

| 390 |

Create and return W&B artifact containing W&B Table of the dataset.

|

| 391 |

|

|

|

|

| 438 |

"""

|

| 439 |

class_set = wandb.Classes([{'id': id, 'name': name} for id, name in names.items()])

|

| 440 |

box_data = []

|

| 441 |

+

avg_conf_per_class = [0] * len(self.data_dict['names'])

|

| 442 |

+

pred_class_count = {}

|

| 443 |

for *xyxy, conf, cls in predn.tolist():

|

| 444 |

if conf >= 0.25:

|

| 445 |

+

cls = int(cls)

|

| 446 |

box_data.append(

|

| 447 |

{"position": {"minX": xyxy[0], "minY": xyxy[1], "maxX": xyxy[2], "maxY": xyxy[3]},

|

| 448 |

+

"class_id": cls,

|

| 449 |

"box_caption": f"{names[cls]} {conf:.3f}",

|

| 450 |

"scores": {"class_score": conf},

|

| 451 |

"domain": "pixel"})

|

| 452 |

+

avg_conf_per_class[cls] += conf

|

| 453 |

+

|

| 454 |

+

if cls in pred_class_count:

|

| 455 |

+

pred_class_count[cls] += 1

|

| 456 |

+

else:

|

| 457 |

+

pred_class_count[cls] = 1

|

| 458 |

+

|

| 459 |

+

for pred_class in pred_class_count.keys():

|

| 460 |

+

avg_conf_per_class[pred_class] = avg_conf_per_class[pred_class] / pred_class_count[pred_class]

|

| 461 |

+

|

| 462 |

boxes = {"predictions": {"box_data": box_data, "class_labels": names}} # inference-space

|

| 463 |

id = self.val_table_path_map[Path(path).name]

|

| 464 |

self.result_table.add_data(self.current_epoch,

|

| 465 |

id,

|

| 466 |

self.val_table.data[id][1],

|

| 467 |

wandb.Image(self.val_table.data[id][1], boxes=boxes, classes=class_set),

|

| 468 |

+

*avg_conf_per_class

|

| 469 |

)

|

| 470 |

|

| 471 |

def val_one_image(self, pred, predn, path, names, im):

|

|
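The per-class averaging added to this method can be checked in isolation. The following sketch reproduces the idea without `wandb` or tensors: it takes rows shaped like `predn.tolist()` and returns one average confidence per class, which lines up with the extra class-name columns of the result table. The function names are illustrative, and a list of counts is used here in place of the `pred_class_count` dict from the diff.

```python
def average_confidence_per_class(predictions, num_classes, conf_threshold=0.25):
    """predictions: iterable of [x1, y1, x2, y2, conf, cls] rows."""
    sums = [0.0] * num_classes
    counts = [0] * num_classes
    for *xyxy, conf, cls in predictions:
        if conf >= conf_threshold:
            cls = int(cls)  # class ids arrive as floats after tolist()
            sums[cls] += conf
            counts[cls] += 1
    # Classes with no kept prediction stay at 0, as in the diff.
    return [s / c if c else 0.0 for s, c in zip(sums, counts)]


def table_columns(class_names):
    # Fixed columns first, then one column per class name.
    return ["epoch", "id", "ground truth", "prediction", *class_names]


preds = [
    [0, 0, 10, 10, 0.9, 0.0],
    [0, 0, 10, 10, 0.5, 0.0],
    [0, 0, 10, 10, 0.1, 1.0],  # below the threshold, ignored
    [0, 0, 10, 10, 0.4, 2.0],
]
print(average_confidence_per_class(preds, num_classes=3))
print(table_columns(["person", "car", "dog"]))
```

Each row passed to the table then has four fixed values followed by `num_classes` averages, so its length matches the column list.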

|

|

| 515 |

try:

|

| 516 |

wandb.log(self.log_dict)

|

| 517 |

except BaseException as e:

|

| 518 |

+

LOGGER.info(

|

| 519 |

+

f"An error occurred in wandb logger. The training will proceed without interruption. More info\n{e}")

|

| 520 |

self.wandb_run.finish()

|

| 521 |

self.wandb_run = None

|

| 522 |

|

|
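The logging change above follows a "report and disable" pattern: if a log call fails, the message is emitted, the run is finished, and training carries on without W&B. A rough sketch of that pattern follows, using a hypothetical `FakeRun` stand-in rather than a real W&B run. Note that it catches `Exception` where the diff catches `BaseException`, so that a keyboard interrupt would still propagate.

```python
import logging

LOGGER = logging.getLogger("wandb_sketch")


class FakeRun:
    """Hypothetical stand-in for a W&B run; not part of the wandb API."""

    def __init__(self):
        self.finished = False

    def log(self, data):
        raise RuntimeError("simulated logging failure")

    def finish(self):
        self.finished = True


class SafeLogger:
    """Wraps a run and disables itself after the first logging error."""

    def __init__(self, run):
        self.run = run

    def log(self, data) -> bool:
        if self.run is None:  # already disabled, skip silently
            return False
        try:
            self.run.log(data)
            return True
        except Exception as e:  # the diff uses BaseException here
            LOGGER.info(
                "An error occurred in wandb logger. "
                f"The training will proceed without interruption. More info\n{e}")
            self.run.finish()
            self.run = None
            return False
```

After the first failure every later call returns immediately, so the training loop needs no special handling.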

|

|

| 528 |

('best' if best_result else '')])

|

| 529 |

|

| 530 |

wandb.log({"evaluation": self.result_table})

|

| 531 |

+

columns = ["epoch", "id", "ground truth", "prediction"]

|

| 532 |

+

columns.extend(self.data_dict['names'])

|

| 533 |

+

self.result_table = wandb.Table(columns)

|

| 534 |

self.result_artifact = wandb.Artifact("run_" + wandb.run.id + "_progress", "evaluation")

|

| 535 |

|

| 536 |

def finish_run(self):

|