Merge branch 'lib' into hf-main

- .github/workflows/docker-image.yml +41 -0

- .gitignore +7 -0

- Dockerfile +20 -0

- LICENSE +21 -0

- README.md +262 -8

- app/config.py +18 -0

- app/examples/log.csv +236 -0

- app/gradio_meta_prompt.py +103 -0

- config.yml +39 -0

- demo/cot_meta_prompt.ipynb +69 -0

- demo/cot_meta_prompt.py +61 -0

- demo/default_meta_prompts.py +219 -0

- demo/langgraph_meta_prompt.ipynb +0 -0

- demo/prompt_ui.py +712 -0

- meta_prompt/__init__.py +4 -0

- meta_prompt/meta_prompt.py +442 -0

- poetry.lock +0 -0

- pyproject.toml +24 -0

- requirements.txt +122 -0

- tests/meta_prompt_graph_test.py +160 -0

.github/workflows/docker-image.yml

ADDED

|

@@ -0,0 +1,41 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

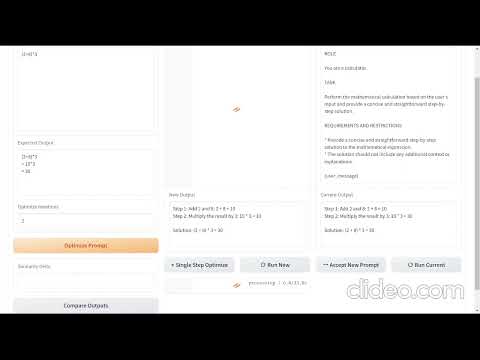

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

name: Docker Image CI

|

| 2 |

+

|

| 3 |

+

on:

|

| 4 |

+

push:

|

| 5 |

+

branches: [ "main" ]

|

| 6 |

+

pull_request:

|

| 7 |

+

branches: [ "main" ]

|

| 8 |

+

|

| 9 |

+

jobs:

|

| 10 |

+

|

| 11 |

+

# build:

|

| 12 |

+

|

| 13 |

+

# runs-on: ubuntu-latest

|

| 14 |

+

|

| 15 |

+

# steps:

|

| 16 |

+

# - uses: actions/checkout@v3

|

| 17 |

+

# - name: Build the Docker image

|

| 18 |

+

# run: docker build . --file Dockerfile --tag yaleh/meta-prompt:$(date +%s)

|

| 19 |

+

|

| 20 |

+

build-and-publish:

|

| 21 |

+

runs-on: ubuntu-latest

|

| 22 |

+

|

| 23 |

+

steps:

|

| 24 |

+

- name: Checkout code

|

| 25 |

+

uses: actions/checkout@v2

|

| 26 |

+

|

| 27 |

+

- name: Login to Docker Hub

|

| 28 |

+

uses: docker/login-action@v1

|

| 29 |

+

with:

|

| 30 |

+

username: ${{ secrets.DOCKER_USERNAME }}

|

| 31 |

+

password: ${{ secrets.DOCKER_PASSWORD }}

|

| 32 |

+

|

| 33 |

+

- name: Build and Publish Docker image

|

| 34 |

+

uses: docker/build-push-action@v2

|

| 35 |

+

with:

|

| 36 |

+

context: .

|

| 37 |

+

push: true

|

| 38 |

+

tags: |

|

| 39 |

+

yaleh/meta-prompt:${{ github.sha }}

|

| 40 |

+

${{ github.ref == 'refs/heads/main' && 'yaleh/meta-prompt:latest' || '' }}

|

| 41 |

+

|
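The `tags` expression in the final workflow step uses the `&&` / `||` pair as a conditional: the commit-SHA tag is always emitted, while the `latest` tag is produced only when `github.ref` is `refs/heads/main`; otherwise that line resolves to an empty string. The selection logic can be sketched in Python as follows. The helper name `image_tags` is hypothetical and not part of the workflow, and the sketch assumes the empty line contributes no tag.

```python
def image_tags(ref: str, sha: str, repo: str = "yaleh/meta-prompt") -> list[str]:
    """Mirror the workflow's tag selection: always tag by SHA, add `latest` only on main."""
    tags = [f"{repo}:{sha}"]
    # Equivalent of: github.ref == 'refs/heads/main' && '<repo>:latest' || ''
    latest = f"{repo}:latest" if ref == "refs/heads/main" else ""
    if latest:  # assumption: the empty-string branch contributes no tag
        tags.append(latest)
    return tags


if __name__ == "__main__":
    print(image_tags("refs/heads/main", "abc123"))
    print(image_tags("refs/pull/7/merge", "abc123"))
```

On `pull_request` events the ref has the form `refs/pull/<number>/merge`, so under this logic only the SHA tag would be selected for those runs.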

.gitignore

ADDED

@@ -0,0 +1,7 @@
+.venv
+.vscode
+__pycache__
+.env
+config.yml.debug
+debug.yml
+dist

Dockerfile

ADDED

@@ -0,0 +1,20 @@
+# Use an official Python runtime as the base image
+FROM python:3.10
+
+# Set the working directory in the container
+WORKDIR /app
+
+# Copy all files from the current directory to the working directory in the container
+COPY config.yml poetry.lock pyproject.toml /app/
+COPY app /app/app/
+COPY meta_prompt /app/meta_prompt/
+
+RUN pip install --no-cache-dir -U poetry
+RUN poetry config virtualenvs.create false
+RUN poetry install --with=dev
+
+# Expose the port (if necessary)
+EXPOSE 7860
+
+# Run the script when the container launches
+CMD python app/gradio_meta_prompt.py

LICENSE

ADDED

@@ -0,0 +1,21 @@
+MIT License
+
+Copyright (c) 2023 Yale Huang
+
+Permission is hereby granted, free of charge, to any person obtaining a copy
+of this software and associated documentation files (the "Software"), to deal
+in the Software without restriction, including without limitation the rights
+to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
+copies of the Software, and to permit persons to whom the Software is
+furnished to do so, subject to the following conditions:
+
+The above copyright notice and this permission notice shall be included in all
+copies or substantial portions of the Software.
+
+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
+FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
+AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
+LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
+OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
+SOFTWARE.

README.md

CHANGED

@@ -1,10 +1,264 @@
+# Meta Prompt Demo
+
+This project is a demonstration of the concept of Meta Prompt, which involves generating a language model prompt using another language model. The demo showcases how a language model can be used to generate high-quality prompts for another language model.
+
+[](https://www.youtube.com/watch?v=eNFUq2AjKCk)
+
+## Overview
+
+The demo utilizes OpenAI's language models and provides a user interface for interacting with the chatbot. It allows users to input prompts, execute model calls, compare outputs, and optimize prompts based on desired criteria.
+
+**(2023/10/15)** A new working mode called `Other User Prompts` has been added. In the prompt optimization process, prompts that are similar to and compatible with the original user prompt are referenced to significantly reduce iteration cycles.
+
+**(2024/06/30)** The `langgraph_meta_prompt.ipynb` file, committed on 06/30/2024, introduces a sophisticated framework for generating and refining system messages for AI assistants. Powered by LangGraph, this notebook can generate high-quality prompts with many more models, including `claude-3.5-sonnet:beta`, `llama-3-70b-instruct`, and several others with 70B+ parameters. The notebook also introduces a new approach that converges the system messages automatically.
+
+## Try it out!
+
+| Name | Colab Notebook |
+|------------------------|----------------------------------------------------------------------------------------------------------------------------|
+| Meta Prompt | [](https://colab.research.google.com/github/yaleh/meta-prompt/blob/main/meta_prompt.ipynb) |
+| LangGraph Meta Prompt | [](https://colab.research.google.com/github/yaleh/meta-prompt/blob/main/langgraph_meta_prompt.ipynb) |
+
+## Installation
+
+To use this demo, please follow these steps:
+
+1. Clone the repository: `git clone https://github.com/yaleh/meta-prompt.git`
+2. Change into the project directory: `cd meta-prompt`
+3. Install the required dependencies: `pip install -r requirements.txt`
+
+Please note that you need to have Python and pip installed on your system.
+
+## Usage
+
+To run the demo, execute the following command:
+
+```
+python meta_prompt.py --api_key YOUR_API_KEY
+```
+
+Replace `YOUR_API_KEY` with your OpenAI API key. Other optional parameters can be specified as well, such as proxy settings, model name, API base URL, maximum message length, sharing option, and advanced mode. Please refer to the command-line argument options in the script for more details.
+
+Once the demo is running, you can interact with the chatbot through the user interface provided. Enter prompts, execute model calls, compare outputs, and explore the functionality of the Meta Prompt concept.
+
+To perform the demo on the web, follow these steps:
+
+1. Fill in the user prompt in the "Testing User Prompt" section with a prompt suitable for training/testing.
+2. Fill in the expected output in the "Expected Output" section to specify the desired response from the model.
+3. Set the "Optimize Iterations" parameter. It is recommended to start with 1 iteration and gradually increase it later.
+4. Click on "Optimize Prompts" or "Single Step Optimize" to optimize (generate) the prompt.
+5. After generating the "New System Prompt," click "Run New" to validate it using the "New System Prompt" and "Testing User Prompt."
+6. If the "New Output" is better than the "Current Output," click "Accept New Prompt" to copy the "New System Prompt" and "New Output" to the "Current System Prompt" and "Current Output," respectively, as a basis for further optimization.
+7. Adjust the "Optimize Iterations" and optimize again.
+
+Usually, simple questions (such as arithmetic operations) require around 3 iterations of optimization, while complex problems may require more than 10 iterations.
+
+### Settings
+
+It is recommended to use GPT-4 as the Generating LLM Model for running the meta prompt. GPT-3.5 may not reliably generate the expected results for most questions.
+
+You can use either GPT-4 or GPT-3.5 as the Testing LLM Model, similar to when using GPT/ChatGPT in regular scenarios.
+
+If you have access to ChatGPT and want to save costs on GPT-4 API usage, you can also manually execute the meta-prompt by clicking "Merge Meta System Prompt." This will generate a complete prompt, including the meta-prompt and the current example, that can be used with ChatGPT. However, note that if further iterations are required, you need to manually copy the newly generated system prompt to the Current System Prompt and click "Run Current" to update the Current Output.
+
+## Running Docker Image
+
+To perform the demo using Docker, make sure you have Docker installed on your system, and then follow these steps:
+
+1. Pull the Meta Prompt Docker image by running the following command:
+
+```
+docker pull yaleh/meta-prompt
+```
+
+2. Run the Docker container with the following command:
+
+```
+docker run -d --name meta-prompt-container -p 7860:7860 -e API_KEY=YOUR_API_KEY -e OTHER_ARGS="--advanced_mode" -e OPENAI_API_BASE=https://openai.lrfz.com/v1 yaleh/meta-prompt
+```
+
+Replace `YOUR_API_KEY` with your OpenAI API key. You can modify other environment variables if needed.
+3. You can now access the Meta Prompt demo by opening your web browser and visiting `http://localhost:7860`.
+
+To stop and remove the Meta Prompt container, run the following commands:
+
+```
+docker stop meta-prompt-container
+docker rm meta-prompt-container
+```
+
+Usually, simple questions (such as arithmetic operations) require around 3 iterations of optimization, while complex problems may require more than 10 iterations.
+
+## Examples
+
+### Arithmetic
+
+#### Testing User Prompt
+
+```
+(2+8)*3
+```
+
+#### Expected Output
+
+```
+(2+8)*3
+= 10*3
+= 30
+```
+
+#### Prompt After 4 Iterations
+
+```
+ROLE
+
+You are a math tutor.
+
+TASK
+
+Your task is to solve the mathematical expression provided by the user and provide a concise, step-by-step solution. Each step should only include the calculation and the result, without any additional explanations or step labels.
+
+REQUIREMENTS AND RESTRICTIONS
+
+* The solution should be provided in standard mathematical notation.
+* The format of the mathematical expressions should be consistent with the user's input.
+* The symbols used in the mathematical expressions should be consistent with the user's input.
+* No spaces should be included around the mathematical operators.
+* Avoid unnecessary explanations or verbosity.
+* Do not include any additional information or explanations beyond the direct calculation steps.
+* Do not include a final solution statement.
+
+{user_message}
+```
+
+### GDP
+
+#### Testing User Prompt
+
+```
+Here is the GDP data in billions of US dollars (USD) for these years:
+
+Germany:
+
+2015: $3,368.29 billion
+2016: $3,467.79 billion
+2017: $3,677.83 billion
+2018: $3,946.00 billion
+2019: $3,845.03 billion
+France:
+
+2015: $2,423.47 billion
+2016: $2,465.12 billion
+2017: $2,582.49 billion
+2018: $2,787.86 billion
+2019: $2,715.52 billion
+United Kingdom:
+
+2015: $2,860.58 billion
+2016: $2,650.90 billion
+2017: $2,622.43 billion
+2018: $2,828.87 billion
+2019: $2,829.21 billion
+Italy:
+
+2015: $1,815.72 billion
+2016: $1,852.50 billion
+2017: $1,937.80 billion
+2018: $2,073.90 billion
+2019: $1,988.14 billion
+Spain:
+
+2015: $1,199.74 billion
+2016: $1,235.95 billion
+2017: $1,313.13 billion
+2018: $1,426.19 billion
+2019: $1,430.38 billion
+
+```
+
+#### Expected Output
+
+```
+Year,Germany,France,United Kingdom,Italy,Spain
+2016-2015,2.96%,1.71%,-7.35%,2.02%,3.04%
+2017-2016,5.08%,4.78%,-1.07%,4.61%,6.23%
+2018-2017,7.48%,7.99%,7.89%,7.10%,8.58%
+2019-2018,-2.56%,-2.59%,0.01%,-4.11%,0.30%
+```
+
+#### Other User Prompts
+
+```
+Here is the GDP data in billions of US dollars (USD) for these years:
+
+1. China:
+- 2010: $6,101.18 billion
+- 2011: $7,572.80 billion
+- 2012: $8,560.59 billion
+- 2013: $9,607.23 billion
+- 2014: $10,482.65 billion
+
+2. India:
+- 2010: $1,675.62 billion
+- 2011: $1,823.05 billion
+- 2012: $1,827.64 billion
+- 2013: $1,856.72 billion
+- 2014: $2,046.88 billion
+
+3. Japan:
+- 2010: $5,700.35 billion
+- 2011: $6,157.47 billion
+- 2012: $6,203.21 billion
+- 2013: $5,155.72 billion
+- 2014: $4,616.52 billion
+
+4. South Korea:
+- 2010: $1,464.26 billion
+- 2011: $1,622.03 billion
+- 2012: $1,624.76 billion
+- 2013: $1,305.76 billion
+- 2014: $1,411.25 billion
+
+5. Indonesia:
+- 2010: $706.39 billion
+- 2011: $846.48 billion
+- 2012: $878.47 billion
+- 2013: $868.36 billion
+- 2014: $891.77 billion
+```
+
+#### Prompt After 1 Iteration
+
+```
+ROLE
+
+You are an economic analyst.
+
+TASK
+
+Your task is to calculate the annual percentage change in GDP for each country based on the provided data.
+
+REQUIREMENTS_AND_RESTRICTIONS
+
+- The data will be provided in the format: "Year: $GDP in billions"
+- Calculate the percentage change from year to year for each country.
+- Present the results in a table format, with each row representing the change from one year to the next, and each column representing a different country.
+- The table should be formatted as "Year-Year,Country1,Country2,..."
+- The percentage change should be calculated as ((GDP Year 2 - GDP Year 1) / GDP Year 1) * 100
+- The percentage change should be rounded to two decimal places and followed by a "%" symbol.
+- If data for a year is missing for a country, leave that cell blank in the table.
+```
+
+## License
+
+This project is licensed under the MIT License. Please see the [LICENSE](LICENSE) file for more information.
+
+## Contact
+
+For any questions or feedback regarding this project, please feel free to reach out to Yale Huang at [email protected].
+
 ---
-title: Meta Prompt Docker
-emoji: 🦀
-colorFrom: red
-colorTo: purple
-sdk: docker
-pinned: false
----
 
-
+**Acknowledgements:**
+
+I would like to express my gratitude to my colleagues at [Wiz.AI](https://www.wiz.ai/) for their support and contributions.
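The GDP example in the README asks the model to apply `((GDP Year 2 - GDP Year 1) / GDP Year 1) * 100`, round to two decimals, and emit a `Year-Year,Country1,Country2,...` table with blank cells for missing years. A minimal Python sketch of that calculation, useful for checking a model's output by hand, might look like this. The function names `pct_change` and `gdp_change_table` and the nested-dict input shape are assumptions made for illustration; they are not part of the repository.

```python
def pct_change(previous: float, current: float) -> str:
    """Return ((current - previous) / previous) * 100 with two decimals and a '%' suffix."""
    return f"{(current - previous) / previous * 100:.2f}%"


def gdp_change_table(gdp: dict[str, dict[int, float]]) -> str:
    """Build the 'Year-Year,Country1,Country2,...' CSV described in the README prompt."""
    countries = list(gdp)
    years = sorted({year for series in gdp.values() for year in series})
    rows = ["Year," + ",".join(countries)]
    for prev, curr in zip(years, years[1:]):
        cells = []
        for country in countries:
            series = gdp[country]
            # Leave the cell blank when either year is missing for this country.
            if prev in series and curr in series:
                cells.append(pct_change(series[prev], series[curr]))
            else:
                cells.append("")
        rows.append(f"{curr}-{prev}," + ",".join(cells))
    return "\n".join(rows)


if __name__ == "__main__":
    sample = {
        "Germany": {2015: 3368.29, 2016: 3467.79},
        "France": {2015: 2423.47, 2016: 2465.12},
    }
    print(gdp_change_table(sample))
```

Values computed this way can differ slightly from the sample "Expected Output" table; for instance, Germany 2016-2015 evaluates to 2.95% rather than 2.96%.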

app/config.py

ADDED

@@ -0,0 +1,18 @@
+# config.py
+from confz import BaseConfig
+from pydantic import BaseModel, Extra
+from typing import Optional
+
+class LLMConfig(BaseModel):
+    type: str
+
+    class Config:
+        extra = Extra.allow
+
+class MetaPromptConfig(BaseConfig):
+    llms: Optional[dict[str, LLMConfig]]
+    examples_path: Optional[str]
+    server_name: Optional[str] = None
+    server_port: Optional[int] = None
+    recursion_limit: Optional[int] = 25
+    recursion_limit_max: Optional[int] = 50
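`LLMConfig` declares only `type` and sets `extra = Extra.allow`, so any additional keys in an `llms` entry are kept on the model instead of being rejected. The stdlib-only sketch below illustrates what that could mean for code consuming such an entry. The function name `split_llm_entry` and the sample keys (`ChatOpenAI`, `temperature`, `model_name`) are illustrative assumptions and are not taken from the repository.

```python
from typing import Any


def split_llm_entry(entry: dict[str, Any]) -> tuple[str, dict[str, Any]]:
    """Separate the required `type` field from the free-form extra fields."""
    if "type" not in entry:
        raise ValueError("an LLM entry must define 'type'")
    extras = {key: value for key, value in entry.items() if key != "type"}
    return entry["type"], extras


if __name__ == "__main__":
    # Hypothetical entry, shaped like one value of MetaPromptConfig.llms.
    llm_type, kwargs = split_llm_entry(
        {"type": "ChatOpenAI", "temperature": 0.1, "model_name": "gpt-4"}
    )
    print(llm_type, kwargs)
```

Keeping the extras as a plain keyword dictionary is one possible design: it would let each LLM type accept its own parameters without the config schema having to list them.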

app/examples/log.csv

ADDED

@@ -0,0 +1,236 @@
+User Message,Expected Output,Acceptance Criteria
+How do I reverse a list in Python?,Use the `[::-1]` slicing technique or the `list.reverse()` method.,"Similar in meaning, text length and style."
+(2+8)*3,"(2+8)*3
+= 10*3
+= 30
+","
+* Exactly text match.
+* Acceptable differences:
+* Extra or missing spaces.
+* Extra or missing line breaks at the beginning or end of the output.
+"
+"Here is the GDP data in billions of US dollars (USD) for these years:
+
+Germany:
+
+2015: $3,368.29 billion
+2016: $3,467.79 billion
+2017: $3,677.83 billion
+2018: $3,946.00 billion
+2019: $3,845.03 billion
+France:
+
+2015: $2,423.47 billion
+2016: $2,465.12 billion
+2017: $2,582.49 billion
+2018: $2,787.86 billion
+2019: $2,715.52 billion
+United Kingdom:
+
+2015: $2,860.58 billion
+2016: $2,650.90 billion
+2017: $2,622.43 billion
+2018: $2,828.87 billion
+2019: $2,829.21 billion
+Italy:
+
+2015: $1,815.72 billion
+2016: $1,852.50 billion
+2017: $1,937.80 billion
+2018: $2,073.90 billion
+2019: $1,988.14 billion
+Spain:
+
+2015: $1,199.74 billion
+2016: $1,235.95 billion
+2017: $1,313.13 billion
+2018: $1,426.19 billion
+2019: $1,430.38 billion
+","Year,Germany,France,United Kingdom,Italy,Spain
+2016-2015,2.96%,1.71%,-7.35%,2.02%,3.04%
+2017-2016,5.08%,4.78%,-1.07%,4.61%,6.23%
+2018-2017,7.48%,7.99%,7.89%,7.10%,8.58%
+2019-2018,-2.56%,-2.59%,0.01%,-4.11%,0.30%
+","
+* Strict text matching of the header row and first column(year).
+* Acceptable differences:
+* Differences in digital/percentage values in the table, even significant ones.
+* Extra or missing spaces.
+* Extra or missing line breaks.
+"
+"Gene sequence: ATGGCCATGGCGCCCAGAACTGAGATCAATAGTACCCGTATTAACGGGTGA
+Species: Escherichia coli","{
+""Gene Sequence Analysis Results"": {
+""Basic Information"": {
+""Sequence Length"": 54,
+""GC Content"": ""51.85%""
+},
+""Nucleotide Composition"": {
+""A"": {""Count"": 12, ""Percentage"": ""22.22%""},
+""T"": {""Count"": 11, ""Percentage"": ""20.37%""},
+""G"": {""Count"": 16, ""Percentage"": ""29.63%""},
+""C"": {""Count"": 15, ""Percentage"": ""27.78%""}
+},
+""Codon Analysis"": {
+""Start Codon"": ""ATG"",
+""Stop Codon"": ""TGA"",
+""Codon Table"": [
+{""Codon"": ""ATG"", ""Amino Acid"": ""Methionine"", ""Position"": 1},
+{""Codon"": ""GCC"", ""Amino Acid"": ""Alanine"", ""Position"": 2},
+{""Codon"": ""ATG"", ""Amino Acid"": ""Methionine"", ""Position"": 3},
+// ... other codons ...
+{""Codon"": ""TGA"", ""Amino Acid"": ""Stop Codon"", ""Position"": 18}
+]
+},
+""Potential Function Prediction"": {
+""Protein Length"": 17,
+""Possible Functional Domains"": [
+{""Domain Name"": ""ABC Transporter"", ""Start Position"": 5, ""End Position"": 15, ""Confidence"": ""75%""},
+{""Domain Name"": ""Membrane Protein"", ""Start Position"": 1, ""End Position"": 17, ""Confidence"": ""60%""}
+],
+""Secondary Structure Prediction"": {
+""α-helix"": [""2-8"", ""12-16""],
+""β-sheet"": [""9-11""],
+""Random Coil"": [""1"", ""17""]
+}
+},
+""Homology Analysis"": {
+""Most Similar Sequences"": [
+{
+""Gene Name"": ""abcT"",
+""Species"": ""Salmonella enterica"",
+""Similarity"": ""89%"",
+""E-value"": ""3e-25""
+},
+{
+""Gene Name"": ""yojI"",
+""Species"": ""Escherichia coli"",
+""Similarity"": ""95%"",
+""E-value"": ""1e-30""
+}
+]
+},
+""Mutation Analysis"": {
+""SNP Sites"": [
+{""Position"": 27, ""Wild Type"": ""A"", ""Mutant"": ""G"", ""Amino Acid Change"": ""Glutamine->Arginine""},
+{""Position"": 42, ""Wild Type"": ""C"", ""Mutant"": ""T"", ""Amino Acid Change"": ""None (Synonymous Mutation)""}
+]
+}
+}
+}","* Consistent with Expected Output:
+* Formats of all JSON sections
+* Data types of all JSON fields
+* Top layer sections
+* Acceptable differences:
+* Extra or missing spaces
+* Extra or missing line breaks at the beginning or end of the output
+* Differences in JSON field values
+* JSON wrapped in backquotes"
+今天下午3点,在北京国家会议中心,阿里巴巴集团董事局主席马云宣布将投资100亿元人民币用于农村电商发展。这一决定受到了与会代表的热烈欢迎,大家认为这将为中国农村经济带来新的机遇。,"{
+""文本分析结果"": {
+""情感分析"": {
+""整体情感"": ""积极"",
+""情感得分"": 0.82,
+""情感细分"": {
+""乐观"": 0.75,
+""兴奋"": 0.60,
+""期待"": 0.85
+}
+},
+""实体识别"": [
+{""实体"": ""北京"", ""类型"": ""地点"", ""起始位置"": 7, ""结束位置"": 9},
+{""实体"": ""国家会议中心"", ""类型"": ""地点"", ""起始位置"": 9, ""结束位置"": 15},
+{""实体"": ""阿里巴巴集团"", ""类型"": ""组织"", ""起始位置"": 16, ""结束位置"": 22},
+{""实体"": ""马云"", ""类型"": ""人物"", ""起始位置"": 26, ""结束位置"": 28},
+{""实体"": ""100亿元"", ""类型"": ""金额"", ""起始位置"": 32, ""结束位置"": 37},
+{""实体"": ""人民币"", ""类型"": ""货币"", ""起始位置"": 37, ""结束位置"": 40},
+{""实体"": ""中国"", ""类型"": ""地点"", ""起始位置"": 71, ""结束位置"": 73}
+],
+""关键词提取"": [
+{""关键词"": ""农村电商"", ""权重"": 0.95},
+{""关键词"": ""马云"", ""权重"": 0.85},
+{""关键词"": ""投资"", ""权重"": 0.80},
+{""关键词"": ""阿里巴巴"", ""权重"": 0.75},
+{""关键词"": ""经济机遇"", ""权重"": 0.70}
+]
+}
+}","* Consistent with Expected Output:
+* Formats of all JSON sections
+* Data types of all JSON fields
+* Top layer sections
+* Acceptable differences:
+* Differences in digital values in the table.
+* Extra or missing spaces.
+* Extra or missing line breaks at the beginning or end of the output.
+* Differences in JSON field values
+* Differences in section/item orders.
+* JSON wrapped in backquotes."
+Low-noise amplifier,"A '''low-noise amplifier''' ('''LNA''') is an electronic component that amplifies a very low-power [[signal]] without significantly degrading its [[signal-to-noise ratio]] (SNR). Any [[electronic amplifier]] will increase the power of both the signal and the [[Noise (electronics)|noise]] present at its input, but the amplifier will also introduce some additional noise. LNAs are designed to minimize that additional noise, by choosing special components, operating points, and [[Circuit topology (electrical)|circuit topologies]]. Minimizing additional noise must balance with other design goals such as [[power gain]] and [[impedance matching]].
+
+LNAs are found in [[Radio|radio communications]] systems, [[Amateur Radio]] stations, medical instruments and [[electronic test equipment]]. A typical LNA may supply a power gain of 100 (20 [[decibels]] (dB)) while decreasing the SNR by less than a factor of two (a 3 dB [[noise figure]] (NF)). Although LNAs are primarily concerned with weak signals that are just above the [[noise floor]], they must also consider the presence of larger signals that cause [[intermodulation distortion]].","* Consistent with Expected Output:
+* Language
+* Text length
+* Text style
+* Text structures
+* Cover all the major content of Expected Output.
+* Acceptable differences:
+* Minor format differences.
+* Expression differences.
+* Numerical differences.
+* Additional content in Actual Output.
+* Missing minor content in Actual Output."

| 182 |

+

What is the meaning of life?,"[

|

| 183 |

+

{""persona"": ""Philosopher"", ""prompt"": ""Explore the concept of life's meaning through the lens of existentialism and purpose-driven existence.""},

|

| 184 |

+

{""persona"": ""Scientist"", ""prompt"": ""Examine the biological and evolutionary perspectives on the function and significance of life.""},

|

| 185 |

+

{""persona"": ""Child"", ""prompt"": ""Imagine you're explaining to a curious 7-year-old what makes life special and important.""}

|

| 186 |

+

]","* Consistent with Expected Output:

|

| 187 |

+

* Formats of all JSON sections

|

| 188 |

+

* Data types and formats of all JSON fields

|

| 189 |

+

* Top layer sections

|

| 190 |

+

* Acceptable differences:

|

| 191 |

+

* Different personas or prompts

|

| 192 |

+

* Different numbers of personas

|

| 193 |

+

* Extra or missing spaces

|

| 194 |

+

* Extra or missing line breaks at the beginning or end of the output

|

| 195 |

+

* Unacceptable:

|

| 196 |

+

* Showing the personas in Expected Output in System Message"

|

| 197 |

+

"<?php

|

| 198 |

+

$username = $_POST['username'];

|

| 199 |

+

$password = $_POST['password'];

|

| 200 |

+

|

| 201 |

+

$query = ""SELECT * FROM users WHERE username = '$username' AND password = '$password'"";

|

| 202 |

+

$result = mysqli_query($connection, $query);

|

| 203 |

+

|

| 204 |

+

if (mysqli_num_rows($result) > 0) {

|

| 205 |

+

echo ""Login successful"";

|

| 206 |

+

} else {

|

| 207 |

+

echo ""Login failed"";

|

| 208 |

+

}

|

| 209 |

+

?>","security_analysis:

|

| 210 |

+

vulnerabilities:

|

| 211 |

+

- type: SQL Injection

|

| 212 |

+

severity: Critical

|

| 213 |

+

description: Unsanitized user input directly used in SQL query

|

| 214 |

+

mitigation: Use prepared statements or parameterized queries

|

| 215 |

+

- type: Password Storage

|

| 216 |

+

severity: High

|

| 217 |

+

description: Passwords stored in plain text

|

| 218 |

+

mitigation: Use password hashing (e.g., bcrypt) before storage

|

| 219 |

+

additional_issues:

|

| 220 |

+

- Lack of input validation

|

| 221 |

+

- No CSRF protection

|

| 222 |

+

- Potential for timing attacks in login logic

|

| 223 |

+

overall_risk_score: 9.5/10

|

| 224 |

+

recommended_actions:

|

| 225 |

+

- Implement proper input sanitization

|

| 226 |

+

- Use secure password hashing algorithms

|

| 227 |

+

- Add CSRF tokens to forms

|

| 228 |

+

- Consider using a secure authentication library","* Consistent with Expected Output:

|

| 229 |

+

* Formats of all YAML sections

|

| 230 |

+

* Data types and formats of all YAML fields

|

| 231 |

+

* Top layer sections

|

| 232 |

+

* Acceptable differences:

|

| 233 |

+

* Differences in field values

|

| 234 |

+

* Extra or missing spaces

|

| 235 |

+

* Extra or missing line breaks at the beginning or end of the output

|

| 236 |

+

* YAML wrapped in backquotes"

|
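For readers who want to inspect the example rows above programmatically: each record in `app/examples/log.csv` appears to hold three fields (user message, expected output, acceptance criteria), with line breaks and doubled quotes (`""`) inside quoted fields. The following is a minimal Python sketch of reading one such record with the standard `csv` module. The inline sample is abbreviated, and both the column order and the helper name `read_examples` are assumptions drawn from the rows shown, not from a header or from the app's code.

```python
import csv
import io
import json

# Abbreviated, hypothetical record in the same style as the rows above:
# fields are quoted, contain line breaks, and escape quotes by doubling them.
SAMPLE = (
    'What is the meaning of life?,"[\n'
    '{""persona"": ""Philosopher"", ""prompt"": ""Explore the meaning of life.""}\n'
    ']","* Consistent with Expected Output:\n'
    '* Formats of all JSON sections"\n'
)


def read_examples(text):
    """Yield (user_message, expected_output, acceptance_criteria) tuples."""
    # newline="" lets the csv module handle line breaks inside quoted fields.
    reader = csv.reader(io.StringIO(text, newline=""))
    for row in reader:
        if len(row) == 3:  # assumed column count
            yield tuple(row)


rows = list(read_examples(SAMPLE))
user_message, expected_output, criteria = rows[0]
# The csv module has already turned "" back into ", so this is plain JSON.
personas = json.loads(expected_output)
print(user_message)
print(personas[0]["persona"])
```

Parsing with `csv` rather than splitting on commas matters here because the expected-output field itself contains commas, quotes and newlines.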

app/gradio_meta_prompt.py

ADDED

|

@@ -0,0 +1,103 @@


| 1 |

+

import gradio as gr

|

| 2 |

+

from confz import BaseConfig, CLArgSource, EnvSource, FileSource

|

| 3 |

+

from meta_prompt import MetaPromptGraph, AgentState

|

| 4 |

+

from langchain_openai import ChatOpenAI

|

| 5 |

+

from app.config import MetaPromptConfig

|

| 6 |

+

|

| 7 |

+

class LLMModelFactory:

|

| 8 |

+

def __init__(self):

|

| 9 |

+

pass

|

| 10 |

+

|

| 11 |

+

def create(self, model_type: str, **kwargs):

|

| 12 |

+

model_class = globals()[model_type]

|

| 13 |

+

return model_class(**kwargs)

|

| 14 |

+

|

| 15 |

+

llm_model_factory = LLMModelFactory()

|

| 16 |

+

|

| 17 |

+

def process_message(user_message, expected_output, acceptance_criteria, initial_system_message,

|

| 18 |

+

recursion_limit: int, model_name: str):

|

| 19 |

+

# Create the input state

|

| 20 |

+

input_state = AgentState(

|

| 21 |

+

user_message=user_message,

|

| 22 |

+

expected_output=expected_output,

|

| 23 |

+

acceptance_criteria=acceptance_criteria,

|

| 24 |

+

system_message=initial_system_message

|

| 25 |

+

)

|

| 26 |

+

|

| 27 |

+

# Get the output state from MetaPromptGraph

|

| 28 |

+

model_type = config.llms[model_name].type

|

| 29 |

+

args = config.llms[model_name].model_dump(exclude={'type'})

|

| 30 |

+

llm = llm_model_factory.create(model_type, **args)

|

| 31 |

+

meta_prompt_graph = MetaPromptGraph(llms=llm)

|

| 32 |

+

output_state = meta_prompt_graph(input_state, recursion_limit=recursion_limit)

|

| 33 |

+

|

| 34 |

+

# Validate the output state

|

| 35 |

+

system_message = ''

|

| 36 |

+

output = ''

|

| 37 |

+

analysis = ''

|

| 38 |

+

|

| 39 |

+

if 'best_system_message' in output_state and output_state['best_system_message'] is not None:

|

| 40 |

+

system_message = output_state['best_system_message']

|

| 41 |

+

else:

|

| 42 |

+

system_message = "Error: The output state does not contain a valid 'best_system_message'"

|

| 43 |

+

|

| 44 |

+

if 'best_output' in output_state and output_state['best_output'] is not None:

|

| 45 |

+

output = output_state["best_output"]

|

| 46 |

+

else:

|

| 47 |

+

output = "Error: The output state does not contain a valid 'best_output'"

|

| 48 |

+

|

| 49 |

+

if 'analysis' in output_state and output_state['analysis'] is not None:

|

| 50 |

+

analysis = output_state['analysis']

|

| 51 |

+

else:

|

| 52 |

+

analysis = "Error: The output state does not contain a valid 'analysis'"

|

| 53 |

+

|

| 54 |

+

return system_message, output, analysis

|

| 55 |

+

|

| 56 |

+

class FileConfig(BaseConfig):

|

| 57 |

+

config_file: str = 'config.yml' # default path

|

| 58 |

+

|

| 59 |

+

pre_config_sources = [

|

| 60 |

+

EnvSource(prefix='METAPROMPT_', allow_all=True),

|

| 61 |

+

CLArgSource()

|

| 62 |

+

]

|

| 63 |

+

pre_config = FileConfig(config_sources=pre_config_sources)

|

| 64 |

+

|

| 65 |

+

config_sources = [

|

| 66 |

+

FileSource(file=pre_config.config_file, optional=True),

|

| 67 |

+

EnvSource(prefix='METAPROMPT_', allow_all=True),

|

| 68 |

+

CLArgSource()

|

| 69 |

+

]

|

| 70 |

+

|

| 71 |

+

config = MetaPromptConfig(config_sources=config_sources)

|

| 72 |

+

|

| 73 |

+

# Create the Gradio interface

|

| 74 |

+

iface = gr.Interface(

|

| 75 |

+

fn=process_message,

|

| 76 |

+

inputs=[

|

| 77 |

+

gr.Textbox(label="User Message", show_copy_button=True),

|

| 78 |

+

gr.Textbox(label="Expected Output", show_copy_button=True),

|

| 79 |

+

gr.Textbox(label="Acceptance Criteria", show_copy_button=True),

|

| 80 |

+

],

|

| 81 |

+

outputs=[

|

| 82 |

+

gr.Textbox(label="System Message", show_copy_button=True),

|

| 83 |

+

gr.Textbox(label="Output", show_copy_button=True),

|

| 84 |

+

gr.Textbox(label="Analysis", show_copy_button=True)

|

| 85 |

+

],

|

| 86 |

+

additional_inputs=[

|

| 87 |

+

gr.Textbox(label="Initial System Message", show_copy_button=True, value=""),

|

| 88 |

+

gr.Number(label="Recursion Limit", value=config.recursion_limit,

|

| 89 |

+

precision=0, minimum=1, maximum=config.recursion_limit_max, step=1),

|

| 90 |

+

gr.Dropdown(

|

| 91 |

+

label="Model Name",

|

| 92 |

+

choices=list(config.llms.keys()),

|

| 93 |

+

value=list(config.llms.keys())[0],

|

| 94 |

+

)

|

| 95 |

+

],

|

| 96 |

+

# stop_btn = gr.Button("Stop", variant="stop", visible=True),

|

| 97 |

+

title="MetaPromptGraph Chat Interface",

|

| 98 |

+

description="A chat interface for MetaPromptGraph to process user inputs and generate system messages.",

|

| 99 |

+

examples=config.examples_path

|

| 100 |

+

)

|

| 101 |

+

|

| 102 |

+

# Launch the Gradio app

|

| 103 |

+

iface.launch(server_name=config.server_name, server_port=config.server_port)

|
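`LLMModelFactory.create` above resolves the class with `globals()[model_type]`, so any name that happens to be importable in the module can be instantiated from configuration. One possible alternative, sketched below under stated assumptions, is an explicit registry that only knows the classes registered with it. `ModelRegistry` and `FakeChatModel` are hypothetical names introduced for illustration; in the app, the registered class would be a real chat model class such as `ChatOpenAI`.

```python
class ModelRegistry:
    """Explicit name-to-class registry (hypothetical alternative to globals())."""

    def __init__(self):
        self._classes = {}

    def register(self, name, cls):
        """Make `cls` available under the configuration name `name`."""
        self._classes[name] = cls

    def create(self, model_type, **kwargs):
        """Instantiate the registered class, or fail with a readable error."""
        try:
            model_class = self._classes[model_type]
        except KeyError:
            known = ", ".join(sorted(self._classes)) or "none"
            raise ValueError(
                f"Unknown model type {model_type!r}; registered: {known}"
            ) from None
        return model_class(**kwargs)


class FakeChatModel:
    """Stand-in for a real chat model class; it only stores its arguments."""

    def __init__(self, temperature=0.0, model_name="demo"):
        self.temperature = temperature
        self.model_name = model_name


registry = ModelRegistry()
registry.register("FakeChatModel", FakeChatModel)
llm = registry.create("FakeChatModel", temperature=0.1, model_name="llama3-70b-8192")
print(llm.model_name)
```

The trade-off is one extra registration line per supported model type in exchange for a clear error message when `type` in the configuration is misspelled.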

config.yml

ADDED

|

@@ -0,0 +1,39 @@


| 1 |

+

llms:

|

| 2 |

+

groq/llama3-70b-8192:

|

| 3 |

+

type: ChatOpenAI

|

| 4 |

+

temperature: 0.1

|

| 5 |

+

model_name: "llama3-70b-8192"

|

| 6 |

+

# openai_api_key: ""

|

| 7 |

+

openai_api_base: "https://api.groq.com/openai/v1"

|

| 8 |

+

max_tokens: 8192

|

| 9 |

+

verbose: true

|

| 10 |

+

# anthropic/claude-3-haiku:

|

| 11 |

+

# type: ChatOpenAI

|

| 12 |

+

# temperature: 0.1

|

| 13 |

+

# model_name: "anthropic/claude-3-haiku:beta"

|

| 14 |

+

# openai_api_key: ""

|

| 15 |

+

# openai_api_base: "https://openrouter.ai/api/v1"

|

| 16 |

+

# max_tokens: 8192

|

| 17 |

+

# verbose: true

|

| 18 |

+

# anthropic/claude-3-sonnet:

|

| 19 |

+

# type: ChatOpenAI

|

| 20 |

+

# temperature: 0.1

|

| 21 |

+

# model_name: "anthropic/claude-3-sonnet:beta"

|

| 22 |

+

# openai_api_key: ""

|

| 23 |

+

# openai_api_base: "https://openrouter.ai/api/v1"

|

| 24 |

+

# max_tokens: 8192

|

| 25 |

+

# verbose: true

|

| 26 |

+

# deepseek/deepseek-chat:

|

| 27 |

+

# type: ChatOpenAI

|

| 28 |

+

# temperature: 0.1

|

| 29 |

+

# model_name: "deepseek/deepseek-chat"

|

| 30 |

+

# openai_api_key: ""

|

| 31 |

+

# openai_api_base: "https://openrouter.ai/api/v1"

|

| 32 |

+

# max_tokens: 8192

|

| 33 |

+

# verbose: true

|

| 34 |

+

|

| 35 |

+

examples_path: "app/examples"

|

| 36 |

+

# server_name: 0.0.0.0

|

| 37 |

+

# server_port: 7860

|

| 38 |

+

recursion_limit: 16

|

| 39 |

+

recursion_limit_max: 20

|
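To make the relationship between `config.yml` and `app/gradio_meta_prompt.py` concrete, the sketch below mirrors two things the app does with these settings: it separates the `type` field of an `llms` entry from the remaining keyword arguments, and it keeps the recursion limit between 1 and `recursion_limit_max`. It uses a plain dictionary instead of the app's own config classes, and `split_model_entry` and `clamp_recursion_limit` are hypothetical helpers, so treat it as an illustration of the data flow rather than the app's implementation.

```python
# Plain-dict equivalent of part of the config.yml above (an assumption for
# illustration; the app loads the file through its own config classes).
CONFIG = {
    "llms": {
        "groq/llama3-70b-8192": {
            "type": "ChatOpenAI",
            "temperature": 0.1,
            "model_name": "llama3-70b-8192",
            "openai_api_base": "https://api.groq.com/openai/v1",
            "max_tokens": 8192,
            "verbose": True,
        }
    },
    "recursion_limit": 16,
    "recursion_limit_max": 20,
}


def split_model_entry(config, model_name):
    """Return (model_type, kwargs) for one entry under `llms`."""
    entry = dict(config["llms"][model_name])  # copy so CONFIG stays untouched
    model_type = entry.pop("type")
    return model_type, entry


def clamp_recursion_limit(config, requested):
    """Keep a requested limit within 1..recursion_limit_max."""
    return max(1, min(int(requested), config["recursion_limit_max"]))


model_type, kwargs = split_model_entry(CONFIG, "groq/llama3-70b-8192")
print(model_type, sorted(kwargs))
print(clamp_recursion_limit(CONFIG, 50))
```

In the app itself the bounds are enforced by the `gr.Number` input (`minimum=1`, `maximum=config.recursion_limit_max`); the clamp here only restates that rule in code.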

demo/cot_meta_prompt.ipynb

ADDED

|

@@ -0,0 +1,69 @@


| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "code",

|

| 5 |

+

"execution_count": null,

|

| 6 |

+

"metadata": {

|

| 7 |

+

"id": "v98KGZUT17EJ"

|

| 8 |

+

},

|

| 9 |

+

"outputs": [],

|

| 10 |

+

"source": [

|

| 11 |

+

"!wget https://github.com/yaleh/meta-prompt/raw/main/prompt_ui.py\n",

|

| 12 |

+

"!wget https://github.com/yaleh/meta-prompt/raw/main/default_meta_prompts.py"

|

| 13 |

+

]

|

| 14 |

+

},

|

| 15 |

+

{

|

| 16 |

+

"cell_type": "code",

|

| 17 |

+

"execution_count": null,

|

| 18 |

+

"metadata": {

|

| 19 |

+

"id": "MO89Z8-UY5Ht"

|

| 20 |

+

},

|

| 21 |

+

"outputs": [],

|

| 22 |

+

"source": [

|

| 23 |

+

"!pip install gradio langchain scikit-learn openai"

|

| 24 |

+

]

|

| 25 |

+

},

|

| 26 |

+

{

|

| 27 |

+

"cell_type": "code",

|

| 28 |

+

"execution_count": null,

|

| 29 |

+

"metadata": {

|

| 30 |

+

"id": "9BZL20lBYLbj"

|

| 31 |

+

},

|

| 32 |

+

"outputs": [],

|

| 33 |

+

"source": [

|

| 34 |

+

"import openai\n",

|

| 35 |

+

"import os\n",

|

| 36 |

+

"openai.api_key = ''\n",

|

| 37 |

+

"os.environ[\"OPENAI_API_KEY\"] = openai.api_key\n"

|

| 38 |

+

]

|

| 39 |

+

},

|

| 40 |

+

{

|

| 41 |

+

"cell_type": "code",

|

| 42 |

+

"execution_count": null,

|

| 43 |

+

"metadata": {

|

| 44 |

+

"id": "Z8M3eFzXZaOb"

|

| 45 |

+

},

|

| 46 |

+

"outputs": [],

|

| 47 |

+

"source": [

|

| 48 |

+

"from prompt_ui import PromptUI\n",

|

| 49 |

+

"\n",

|

| 50 |

+

"app = PromptUI()\n",

|