
init

This view is limited to 50 files because it contains too many changes.

- CLIP/.github/workflows/test.yml +33 -0

- CLIP/.gitignore +10 -0

- CLIP/CLIP.png +0 -0

- CLIP/LICENSE +22 -0

- CLIP/MANIFEST.in +1 -0

- CLIP/README.md +199 -0

- CLIP/clip/__init__.py +1 -0

- CLIP/clip/bpe_simple_vocab_16e6.txt.gz +3 -0

- CLIP/clip/clip.py +245 -0

- CLIP/clip/model.py +436 -0

- CLIP/clip/simple_tokenizer.py +132 -0

- CLIP/data/country211.md +12 -0

- CLIP/data/prompts.md +3401 -0

- CLIP/data/rendered-sst2.md +11 -0

- CLIP/data/yfcc100m.md +14 -0

- CLIP/hubconf.py +42 -0

- CLIP/model-card.md +120 -0

- CLIP/notebooks/Interacting_with_CLIP.ipynb +0 -0

- CLIP/notebooks/Prompt_Engineering_for_ImageNet.ipynb +1107 -0

- CLIP/requirements.txt +5 -0

- CLIP/setup.py +21 -0

- CLIP/tests/test_consistency.py +25 -0

- Dockerfile +6 -0

- Dockerfile~ +6 -0

- LICENSE +33 -0

- README.md +10 -12

- app.py +33 -141

- configs/polos-trainer.yaml +46 -0

- docker.sh +4 -0

- install.sh +4 -0

- pacscore/README.md +135 -0

- pacscore/compute_correlations.py +111 -0

- pacscore/compute_metrics.py +110 -0

- pacscore/data/__init__.py +6 -0

- pacscore/data/dataset.py +55 -0

- pacscore/data/tokenizer/__init__.py +0 -0

- pacscore/data/tokenizer/bpe_simple_vocab_16e6.txt.gz +3 -0

- pacscore/data/tokenizer/simple_tokenizer.py +144 -0

- pacscore/environment.yml +92 -0

- pacscore/evaluation/__init__.py +44 -0

- pacscore/evaluation/pac_score/__init__.py +1 -0

- pacscore/evaluation/pac_score/pac_score.py +133 -0

- pacscore/evaluation/tokenizer.py +63 -0

- pacscore/example/bad_captions.json +5 -0

- pacscore/example/good_captions.json +4 -0

- pacscore/example/images/image1.jpg +0 -0

- pacscore/example/images/image2.jpg +0 -0

- pacscore/example/refs.json +18 -0

- pacscore/images/model.png +0 -0

- pacscore/models/__init__.py +0 -0

CLIP/.github/workflows/test.yml

ADDED

@@ -0,0 +1,33 @@
+name: test
+on:
+  push:
+    branches:
+      - main
+  pull_request:
+    branches:
+      - main
+jobs:
+  CLIP-test:
+    runs-on: ubuntu-latest
+    strategy:
+      matrix:
+        python-version: [3.8]
+        pytorch-version: [1.7.1, 1.9.1, 1.10.1]
+        include:
+          - python-version: 3.8
+            pytorch-version: 1.7.1
+            torchvision-version: 0.8.2
+          - python-version: 3.8
+            pytorch-version: 1.9.1
+            torchvision-version: 0.10.1
+          - python-version: 3.8
+            pytorch-version: 1.10.1
+            torchvision-version: 0.11.2
+    steps:
+      - uses: conda-incubator/setup-miniconda@v2
+      - run: conda install -n test python=${{ matrix.python-version }} pytorch=${{ matrix.pytorch-version }} torchvision=${{ matrix.torchvision-version }} cpuonly -c pytorch
+      - uses: actions/checkout@v2
+      - run: echo "$CONDA/envs/test/bin" >> $GITHUB_PATH
+      - run: pip install pytest
+      - run: pip install .
+      - run: pytest
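
Note on the workflow above: the `include` entries pair each `pytorch-version` with a matching `torchvision-version`, so every matrix cell installs a compatible pair. The sketch below mirrors that pairing in Python to show which command each cell would run. `TORCHVISION_FOR` and `conda_command` are hypothetical names used only for illustration; they are not part of the repository.

```python
# Hypothetical helper mirroring the matrix pairing in test.yml (not repo code).
TORCHVISION_FOR = {
    "1.7.1": "0.8.2",
    "1.9.1": "0.10.1",
    "1.10.1": "0.11.2",
}


def conda_command(python_version: str, pytorch_version: str) -> str:
    """Build the conda install command the workflow runs for one matrix cell."""
    torchvision_version = TORCHVISION_FOR[pytorch_version]
    return (
        f"conda install -n test python={python_version} "
        f"pytorch={pytorch_version} torchvision={torchvision_version} "
        "cpuonly -c pytorch"
    )


# One command per PyTorch version, all on Python 3.8 as in the workflow.
commands = [conda_command("3.8", version) for version in TORCHVISION_FOR]
for command in commands:
    print(command)
```

Keeping the pairing in one table makes it harder to add a PyTorch version without also choosing its torchvision counterpart.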

CLIP/.gitignore

ADDED

@@ -0,0 +1,10 @@
+__pycache__/
+*.py[cod]
+*$py.class
+*.egg-info
+.pytest_cache
+.ipynb_checkpoints
+
+thumbs.db
+.DS_Store
+.idea

CLIP/CLIP.png

ADDED


CLIP/LICENSE

ADDED

@@ -0,0 +1,22 @@
+MIT License
+
+Copyright (c) 2021 OpenAI
+
+Permission is hereby granted, free of charge, to any person obtaining a copy
+of this software and associated documentation files (the "Software"), to deal
+in the Software without restriction, including without limitation the rights
+to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
+copies of the Software, and to permit persons to whom the Software is
+furnished to do so, subject to the following conditions:
+
+The above copyright notice and this permission notice shall be included in all
+copies or substantial portions of the Software.
+
+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
+FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
+AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
+LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
+OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
+SOFTWARE.
+

CLIP/MANIFEST.in

ADDED

@@ -0,0 +1 @@
+include clip/bpe_simple_vocab_16e6.txt.gz

CLIP/README.md

ADDED

|

@@ -0,0 +1,199 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# CLIP

|

| 2 |

+

|

| 3 |

+

[[Blog]](https://openai.com/blog/clip/) [[Paper]](https://arxiv.org/abs/2103.00020) [[Model Card]](model-card.md) [[Colab]](https://colab.research.google.com/github/openai/clip/blob/master/notebooks/Interacting_with_CLIP.ipynb)

|

| 4 |

+

|

| 5 |

+

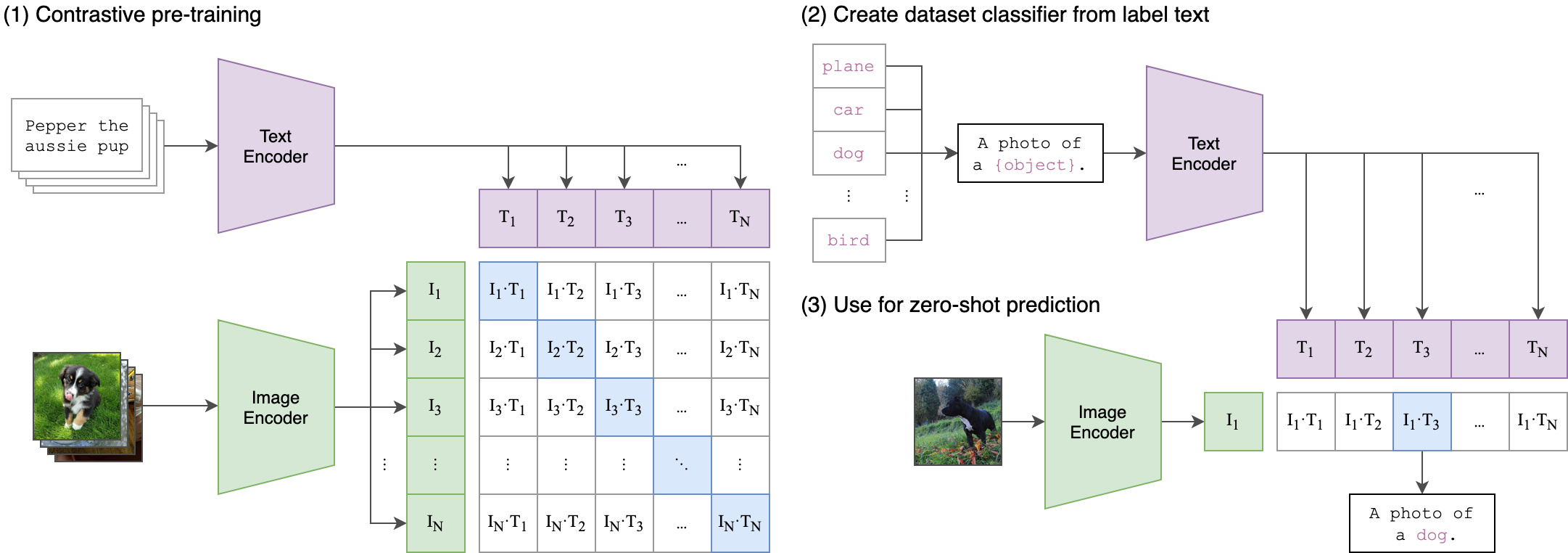

CLIP (Contrastive Language-Image Pre-Training) is a neural network trained on a variety of (image, text) pairs. It can be instructed in natural language to predict the most relevant text snippet, given an image, without directly optimizing for the task, similarly to the zero-shot capabilities of GPT-2 and 3. We found CLIP matches the performance of the original ResNet50 on ImageNet “zero-shot” without using any of the original 1.28M labeled examples, overcoming several major challenges in computer vision.

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

## Approach

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

|

| 14 |

+

|

| 15 |

+

## Usage

|

| 16 |

+

|

| 17 |

+

First, [install PyTorch 1.7.1](https://pytorch.org/get-started/locally/) (or later) and torchvision, as well as small additional dependencies, and then install this repo as a Python package. On a CUDA GPU machine, the following will do the trick:

|

| 18 |

+

|

| 19 |

+

```bash

|

| 20 |

+

$ conda install --yes -c pytorch pytorch=1.7.1 torchvision cudatoolkit=11.0

|

| 21 |

+

$ pip install ftfy regex tqdm

|

| 22 |

+

$ pip install git+https://github.com/openai/CLIP.git

|

| 23 |

+

```

|

| 24 |

+

|

| 25 |

+

Replace `cudatoolkit=11.0` above with the appropriate CUDA version on your machine or `cpuonly` when installing on a machine without a GPU.

|

| 26 |

+

|

| 27 |

+

```python

|

| 28 |

+

import torch

|

| 29 |

+

import clip

|

| 30 |

+

from PIL import Image

|

| 31 |

+

|

| 32 |

+

device = "cuda" if torch.cuda.is_available() else "cpu"

|

| 33 |

+

model, preprocess = clip.load("ViT-B/32", device=device)

|

| 34 |

+

|

| 35 |

+

image = preprocess(Image.open("CLIP.png")).unsqueeze(0).to(device)

|

| 36 |

+

text = clip.tokenize(["a diagram", "a dog", "a cat"]).to(device)

|

| 37 |

+

|

| 38 |

+

with torch.no_grad():

|

| 39 |

+

image_features = model.encode_image(image)

|

| 40 |

+

text_features = model.encode_text(text)

|

| 41 |

+

|

| 42 |

+

logits_per_image, logits_per_text = model(image, text)

|

| 43 |

+

probs = logits_per_image.softmax(dim=-1).cpu().numpy()

|

| 44 |

+

|

| 45 |

+

print("Label probs:", probs) # prints: [[0.9927937 0.00421068 0.00299572]]

|

| 46 |

+

```

|

| 47 |

+

|

| 48 |

+

|

| 49 |

+

## API

|

| 50 |

+

|

| 51 |

+

The CLIP module `clip` provides the following methods:

|

| 52 |

+

|

| 53 |

+

#### `clip.available_models()`

|

| 54 |

+

|

| 55 |

+

Returns the names of the available CLIP models.

|

| 56 |

+

|

| 57 |

+

#### `clip.load(name, device=..., jit=False)`

|

| 58 |

+

|

| 59 |

+

Returns the model and the TorchVision transform needed by the model, specified by the model name returned by `clip.available_models()`. It will download the model as necessary. The `name` argument can also be a path to a local checkpoint.

|

| 60 |

+

|

| 61 |

+

The device to run the model can be optionally specified, and the default is to use the first CUDA device if there is any, otherwise the CPU. When `jit` is `False`, a non-JIT version of the model will be loaded.

|

| 62 |

+

|

| 63 |

+

#### `clip.tokenize(text: Union[str, List[str]], context_length=77)`

|

| 64 |

+

|

| 65 |

+

Returns a LongTensor containing tokenized sequences of given text input(s). This can be used as the input to the model

|

| 66 |

+

|

| 67 |

+

---

|

| 68 |

+

|

| 69 |

+

The model returned by `clip.load()` supports the following methods:

|

| 70 |

+

|

| 71 |

+

#### `model.encode_image(image: Tensor)`

|

| 72 |

+

|

| 73 |

+

Given a batch of images, returns the image features encoded by the vision portion of the CLIP model.

|

| 74 |

+

|

| 75 |

+

#### `model.encode_text(text: Tensor)`

|

| 76 |

+

|

| 77 |

+

Given a batch of text tokens, returns the text features encoded by the language portion of the CLIP model.

|

| 78 |

+

|

| 79 |

+

#### `model(image: Tensor, text: Tensor)`

|

| 80 |

+

|

| 81 |

+

Given a batch of images and a batch of text tokens, returns two Tensors, containing the logit scores corresponding to each image and text input. The values are cosine similarities between the corresponding image and text features, times 100.

|

| 82 |

+

|

| 83 |

+

|

| 84 |

+

|
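
Note on the `model(image, text)` description above: taking the README's wording at face value (logits are cosine similarities times 100), the arithmetic can be sketched in plain Python without loading a model. The feature vectors below are made-up toy values, and `cosine_similarity` and `softmax` are illustrative helpers, not functions from the `clip` package.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Toy 3-dimensional features; real CLIP features have hundreds of dimensions.
image_feature = [0.9, 0.1, 0.0]
text_features = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

# One logit per text: cosine similarity scaled by 100, as the README describes.
logits_per_image = [100.0 * cosine_similarity(image_feature, t) for t in text_features]
probs = softmax(logits_per_image)
print(logits_per_image)
print(probs)
```

The factor of 100 sharpens the softmax: small differences in cosine similarity become large differences in probability, which is why the README example reports one label near 0.99.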

+## More Examples
+
+### Zero-Shot Prediction
+
+The code below performs zero-shot prediction using CLIP, as shown in Appendix B in the paper. This example takes an image from the [CIFAR-100 dataset](https://www.cs.toronto.edu/~kriz/cifar.html), and predicts the most likely labels among the 100 textual labels from the dataset.
+
+```python
+import os
+import clip
+import torch
+from torchvision.datasets import CIFAR100
+
+# Load the model
+device = "cuda" if torch.cuda.is_available() else "cpu"
+model, preprocess = clip.load('ViT-B/32', device)
+
+# Download the dataset
+cifar100 = CIFAR100(root=os.path.expanduser("~/.cache"), download=True, train=False)
+
+# Prepare the inputs
+image, class_id = cifar100[3637]
+image_input = preprocess(image).unsqueeze(0).to(device)
+text_inputs = torch.cat([clip.tokenize(f"a photo of a {c}") for c in cifar100.classes]).to(device)
+
+# Calculate features
+with torch.no_grad():
+    image_features = model.encode_image(image_input)
+    text_features = model.encode_text(text_inputs)
+
+# Pick the top 5 most similar labels for the image
+image_features /= image_features.norm(dim=-1, keepdim=True)
+text_features /= text_features.norm(dim=-1, keepdim=True)
+similarity = (100.0 * image_features @ text_features.T).softmax(dim=-1)
+values, indices = similarity[0].topk(5)
+
+# Print the result
+print("\nTop predictions:\n")
+for value, index in zip(values, indices):
+    print(f"{cifar100.classes[index]:>16s}: {100 * value.item():.2f}%")
+```
+
+The output will look like the following (the exact numbers may be slightly different depending on the compute device):
+
+```
+Top predictions:
+
+           snake: 65.31%
+          turtle: 12.29%
+    sweet_pepper: 3.83%
+          lizard: 1.88%
+       crocodile: 1.75%
+```
+
+Note that this example uses the `encode_image()` and `encode_text()` methods that return the encoded features of given inputs.
+
+
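
Note on the zero-shot example above: the two steps that do not need a model, building one prompt per class and picking the top-k labels, can be sketched with the standard library. The class list is shortened and the probabilities are invented stand-ins for the softmax output; they are not model results.

```python
import heapq

classes = ["snake", "turtle", "sweet_pepper", "lizard", "crocodile", "apple"]

# One text prompt per class, using the same template as the README example.
prompts = [f"a photo of a {c}" for c in classes]

# Made-up probabilities standing in for the softmax output (not real results).
similarity = [0.40, 0.25, 0.05, 0.15, 0.10, 0.05]

# Equivalent in spirit to tensor.topk(3): the largest values with their labels.
top = heapq.nlargest(3, zip(similarity, classes))
for value, name in top:
    print(f"{name:>16s}: {100 * value:.2f}%")
```

The `:>16s` format specifier right-aligns each label in a 16-character column, which is what lines up the colons in the README's sample output.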

+### Linear-probe evaluation
+
+The example below uses [scikit-learn](https://scikit-learn.org/) to perform logistic regression on image features.
+
+```python
+import os
+import clip
+import torch
+
+import numpy as np
+from sklearn.linear_model import LogisticRegression
+from torch.utils.data import DataLoader
+from torchvision.datasets import CIFAR100
+from tqdm import tqdm
+
+# Load the model
+device = "cuda" if torch.cuda.is_available() else "cpu"
+model, preprocess = clip.load('ViT-B/32', device)
+
+# Load the dataset
+root = os.path.expanduser("~/.cache")
+train = CIFAR100(root, download=True, train=True, transform=preprocess)
+test = CIFAR100(root, download=True, train=False, transform=preprocess)
+
+
+def get_features(dataset):
+    all_features = []
+    all_labels = []
+
+    with torch.no_grad():
+        for images, labels in tqdm(DataLoader(dataset, batch_size=100)):
+            features = model.encode_image(images.to(device))
+
+            all_features.append(features)
+            all_labels.append(labels)
+
+    return torch.cat(all_features).cpu().numpy(), torch.cat(all_labels).cpu().numpy()
+
+# Calculate the image features
+train_features, train_labels = get_features(train)
+test_features, test_labels = get_features(test)
+
+# Perform logistic regression
+classifier = LogisticRegression(random_state=0, C=0.316, max_iter=1000, verbose=1)
+classifier.fit(train_features, train_labels)
+
+# Evaluate using the logistic regression classifier
+predictions = classifier.predict(test_features)
+accuracy = np.mean((test_labels == predictions).astype(float)) * 100.
+print(f"Accuracy = {accuracy:.3f}")
+```
+
+Note that the `C` value should be determined via a hyperparameter sweep using a validation split.
+
+
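
Note on the `C` sweep mentioned above: the README does not show the sweep itself, so the following is only a minimal sketch of its shape. `sweep_c`, the candidate grid, and `toy_score` are all hypothetical. In practice the scoring callable would fit a classifier with the given `C` on a training subset and return its accuracy on a held-out validation subset.

```python
from typing import Callable, Sequence


def sweep_c(candidates: Sequence[float], score_on_validation: Callable[[float], float]) -> float:
    """Return the candidate C with the highest validation score."""
    return max(candidates, key=score_on_validation)


# Log-spaced grid from 1e-3 to 1e3 (an arbitrary illustrative range).
candidates = [10.0 ** exponent for exponent in range(-3, 4)]


def toy_score(c: float) -> float:
    """Stand-in for 'fit on the training split, score on the validation split'."""
    return -abs(c - 1.0)  # pretends that C = 1.0 generalizes best


best_c = sweep_c(candidates, toy_score)
print(best_c)
```

The important design point is that the test split never enters the sweep: the chosen `C` is fixed first, and only then is the final classifier evaluated on test data.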

+## See Also
+
+* [OpenCLIP](https://github.com/mlfoundations/open_clip): includes larger and independently trained CLIP models up to ViT-G/14
+* [Hugging Face implementation of CLIP](https://huggingface.co/docs/transformers/model_doc/clip): for easier integration with the HF ecosystem

CLIP/clip/__init__.py

ADDED

@@ -0,0 +1 @@
+from .clip import *

CLIP/clip/bpe_simple_vocab_16e6.txt.gz

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:924691ac288e54409236115652ad4aa250f48203de50a9e4722a6ecd48d6804a
+size 1356917
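
Note on the three lines above: they are a Git LFS pointer, not the gzip archive itself. The pointer records the spec version, the SHA-256 object id, and the size in bytes of the real file. A small sketch of reading those fields follows; `parse_lfs_pointer` is a hypothetical helper written for illustration, not part of Git LFS or this repository.

```python
def parse_lfs_pointer(text: str) -> dict:
    """Parse the 'key value' lines of a Git LFS pointer file into a dict."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    # The oid has the form "<algorithm>:<hex digest>".
    algorithm, _, digest = fields["oid"].partition(":")
    return {
        "version": fields["version"],
        "hash_algorithm": algorithm,
        "digest": digest,
        "size_bytes": int(fields["size"]),
    }


pointer = """\
version https://git-lfs.github.com/spec/v1
oid sha256:924691ac288e54409236115652ad4aa250f48203de50a9e4722a6ecd48d6804a
size 1356917
"""
info = parse_lfs_pointer(pointer)
print(info)
```

If the tokenizer fails to load the vocabulary after a clone, one plausible cause is that the checkout still contains this small pointer file rather than the roughly 1.3 MB archive it refers to.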

CLIP/clip/clip.py

ADDED

@@ -0,0 +1,245 @@
+import hashlib
+import os
+import urllib
+import warnings
+from typing import Any, Union, List
+from pkg_resources import packaging
+
+import torch
+from PIL import Image
+from torchvision.transforms import Compose, Resize, CenterCrop, ToTensor, Normalize
+from tqdm import tqdm
+
+from .model import build_model
+from .simple_tokenizer import SimpleTokenizer as _Tokenizer
+
+try:
+    from torchvision.transforms import InterpolationMode
+    BICUBIC = InterpolationMode.BICUBIC
+except ImportError:
+    BICUBIC = Image.BICUBIC
+
+
+if packaging.version.parse(torch.__version__) < packaging.version.parse("1.7.1"):
+    warnings.warn("PyTorch version 1.7.1 or higher is recommended")
+
+
+__all__ = ["available_models", "load", "tokenize"]
+_tokenizer = _Tokenizer()
+
+_MODELS = {
+    "RN50": "https://openaipublic.azureedge.net/clip/models/afeb0e10f9e5a86da6080e35cf09123aca3b358a0c3e3b6c78a7b63bc04b6762/RN50.pt",
+    "RN101": "https://openaipublic.azureedge.net/clip/models/8fa8567bab74a42d41c5915025a8e4538c3bdbe8804a470a72f30b0d94fab599/RN101.pt",
+    "RN50x4": "https://openaipublic.azureedge.net/clip/models/7e526bd135e493cef0776de27d5f42653e6b4c8bf9e0f653bb11773263205fdd/RN50x4.pt",
+    "RN50x16": "https://openaipublic.azureedge.net/clip/models/52378b407f34354e150460fe41077663dd5b39c54cd0bfd2b27167a4a06ec9aa/RN50x16.pt",
+    "RN50x64": "https://openaipublic.azureedge.net/clip/models/be1cfb55d75a9666199fb2206c106743da0f6468c9d327f3e0d0a543a9919d9c/RN50x64.pt",
+    "ViT-B/32": "https://openaipublic.azureedge.net/clip/models/40d365715913c9da98579312b702a82c18be219cc2a73407c4526f58eba950af/ViT-B-32.pt",
+    "ViT-B/16": "https://openaipublic.azureedge.net/clip/models/5806e77cd80f8b59890b7e101eabd078d9fb84e6937f9e85e4ecb61988df416f/ViT-B-16.pt",
+    "ViT-L/14": "https://openaipublic.azureedge.net/clip/models/b8cca3fd41ae0c99ba7e8951adf17d267cdb84cd88be6f7c2e0eca1737a03836/ViT-L-14.pt",
+    "ViT-L/14@336px": "https://openaipublic.azureedge.net/clip/models/3035c92b350959924f9f00213499208652fc7ea050643e8b385c2dac08641f02/ViT-L-14-336px.pt",
+}
+
+
+def _download(url: str, root: str):
+    os.makedirs(root, exist_ok=True)
+    filename = os.path.basename(url)
+
+    expected_sha256 = url.split("/")[-2]
+    download_target = os.path.join(root, filename)
+
+    if os.path.exists(download_target) and not os.path.isfile(download_target):
+        raise RuntimeError(f"{download_target} exists and is not a regular file")
+
+    if os.path.isfile(download_target):
+        if hashlib.sha256(open(download_target, "rb").read()).hexdigest() == expected_sha256:
+            return download_target
+        else:
+            warnings.warn(f"{download_target} exists, but the SHA256 checksum does not match; re-downloading the file")
+
+    with urllib.request.urlopen(url) as source, open(download_target, "wb") as output:
+        with tqdm(total=int(source.info().get("Content-Length")), ncols=80, unit='iB', unit_scale=True, unit_divisor=1024) as loop:
+            while True:
+                buffer = source.read(8192)
+                if not buffer:
+                    break
+
+                output.write(buffer)
+                loop.update(len(buffer))
+
+    if hashlib.sha256(open(download_target, "rb").read()).hexdigest() != expected_sha256:
+        raise RuntimeError("Model has been downloaded but the SHA256 checksum does not match")
+
+    return download_target
+
+
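
Note on `_download` above: it verifies the checkpoint by reading the whole file into memory and hashing it, and it takes the expected digest from the second-to-last segment of the URL. For large checkpoints, a chunked hash gives the same digest with bounded memory. The sketch below is one way to write that; `sha256_of_file` and `expected_sha256_from_url` are hypothetical names, not functions in `clip.py`, and the URL in the usage note is a placeholder.

```python
import hashlib
import os
import tempfile


def sha256_of_file(path: str, chunk_size: int = 8192) -> str:
    """Hash a file in fixed-size chunks so it never has to fit in memory at once."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def expected_sha256_from_url(url: str) -> str:
    """Return the second-to-last path segment, where the URLs above embed the checksum."""
    return url.split("/")[-2]


# Demonstration on a throwaway file rather than a real checkpoint.
payload = b"example bytes" * 1000
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(payload)
streamed = sha256_of_file(tmp.name)
os.remove(tmp.name)
print(streamed == hashlib.sha256(payload).hexdigest())
```

For example, `expected_sha256_from_url("https://example.com/clip/models/abc123/RN50.pt")` returns `"abc123"`. Using `with open(...)` also closes the file handle promptly, which the one-line `open(...).read()` form leaves to the garbage collector.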

+def _convert_image_to_rgb(image):
+    return image.convert("RGB")
+
+
+def _transform(n_px):
+    return Compose([
+        Resize(n_px, interpolation=BICUBIC),
+        CenterCrop(n_px),
+        _convert_image_to_rgb,
+        ToTensor(),
+        Normalize((0.48145466, 0.4578275, 0.40821073), (0.26862954, 0.26130258, 0.27577711)),
+    ])
+
+
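
Note on `_transform` above: the pipeline resizes the shorter side to `n_px`, takes a centered `n_px` by `n_px` crop, and then normalizes each channel as `(value - mean) / std`. The sketch below reproduces only the geometry and the arithmetic in plain Python. The helper names are hypothetical, and the integer rounding is an approximation of what torchvision does, not a guaranteed match.

```python
# Per-channel statistics copied from the Normalize call in _transform.
MEAN = (0.48145466, 0.4578275, 0.40821073)
STD = (0.26862954, 0.26130258, 0.27577711)


def resized_size(width: int, height: int, n_px: int):
    """Scale so the shorter side becomes n_px, keeping the aspect ratio (truncating)."""
    if width <= height:
        return n_px, int(n_px * height / width)
    return int(n_px * width / height), n_px


def center_crop_box(width: int, height: int, n_px: int):
    """Left, top, right, bottom of a centered n_px x n_px crop."""
    left = (width - n_px) // 2
    top = (height - n_px) // 2
    return left, top, left + n_px, top + n_px


def normalize_pixel(rgb):
    """Apply (value - mean) / std per channel to one RGB pixel scaled to [0, 1]."""
    return tuple((value - mean) / std for value, mean, std in zip(rgb, MEAN, STD))


size = resized_size(640, 480, 224)
box = center_crop_box(size[0], size[1], 224)
print(size, box, normalize_pixel((1.0, 1.0, 1.0)))
```

A pixel equal to the channel means maps to zero, so the normalized tensor is centered around the statistics the model was trained with.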

+def available_models() -> List[str]:
+    """Returns the names of available CLIP models"""
+    return list(_MODELS.keys())
+
+
+def load(name: str, device: Union[str, torch.device] = "cuda" if torch.cuda.is_available() else "cpu", jit: bool = False, download_root: str = None):
+    """Load a CLIP model
+
+    Parameters
+    ----------
+    name : str
+        A model name listed by `clip.available_models()`, or the path to a model checkpoint containing the state_dict
+
+    device : Union[str, torch.device]
+        The device to put the loaded model
+
+    jit : bool
+        Whether to load the optimized JIT model or more hackable non-JIT model (default).
+
+    download_root: str
+        path to download the model files; by default, it uses "~/.cache/clip"
+
+    Returns
+    -------
+    model : torch.nn.Module
+        The CLIP model
+
+    preprocess : Callable[[PIL.Image], torch.Tensor]
+        A torchvision transform that converts a PIL image into a tensor that the returned model can take as its input
+    """
+    if name in _MODELS:
+        model_path = _download(_MODELS[name], download_root or os.path.expanduser("~/.cache/clip"))
+    elif os.path.isfile(name):
+        model_path = name
+    else:
+        raise RuntimeError(f"Model {name} not found; available models = {available_models()}")
+
+    with open(model_path, 'rb') as opened_file:
+        try:
+            # loading JIT archive
+            model = torch.jit.load(opened_file, map_location=device if jit else "cpu").eval()
+            state_dict = None
+        except RuntimeError:
+            # loading saved state dict
+            if jit:
+                warnings.warn(f"File {model_path} is not a JIT archive. Loading as a state dict instead")
+                jit = False
+            state_dict = torch.load(opened_file, map_location="cpu")
+
+    if not jit:
+        model = build_model(state_dict or model.state_dict()).to(device)
+        if str(device) == "cpu":
+            model.float()
+        return model, _transform(model.visual.input_resolution)
+
+    # patch the device names
+    device_holder = torch.jit.trace(lambda: torch.ones([]).to(torch.device(device)), example_inputs=[])
+    device_node = [n for n in device_holder.graph.findAllNodes("prim::Constant") if "Device" in repr(n)][-1]
+
+    def _node_get(node: torch._C.Node, key: str):
+        """Gets attributes of a node which is polymorphic over return type.
+
+        From https://github.com/pytorch/pytorch/pull/82628
+        """
+        sel = node.kindOf(key)
+        return getattr(node, sel)(key)
+
+    def patch_device(module):
+        try:
+            graphs = [module.graph] if hasattr(module, "graph") else []
+        except RuntimeError:
+            graphs = []
+
+        if hasattr(module, "forward1"):
+            graphs.append(module.forward1.graph)
+
+        for graph in graphs:
+            for node in graph.findAllNodes("prim::Constant"):
+                if "value" in node.attributeNames() and str(_node_get(node, "value")).startswith("cuda"):
+                    node.copyAttributes(device_node)
+
+    model.apply(patch_device)
+    patch_device(model.encode_image)
+    patch_device(model.encode_text)
+
+    # patch dtype to float32 on CPU
+    if str(device) == "cpu":
+        float_holder = torch.jit.trace(lambda: torch.ones([]).float(), example_inputs=[])
+        float_input = list(float_holder.graph.findNode("aten::to").inputs())[1]
+        float_node = float_input.node()
+
+        def patch_float(module):
+            try:
+                graphs = [module.graph] if hasattr(module, "graph") else []
+            except RuntimeError:
+                graphs = []
+
+            if hasattr(module, "forward1"):
+                graphs.append(module.forward1.graph)
+
+            for graph in graphs:
+                for node in graph.findAllNodes("aten::to"):
+                    inputs = list(node.inputs())
+                    for i in [1, 2]:  # dtype can be the second or third argument to aten::to()
+                        if _node_get(inputs[i].node(), "value") == 5:
+                            inputs[i].node().copyAttributes(float_node)
+
+        model.apply(patch_float)
+        patch_float(model.encode_image)
+        patch_float(model.encode_text)
+
+        model.float()
+
+    return model, _transform(model.input_resolution.item())
+
+

| 205 |

+

def tokenize(texts: Union[str, List[str]], context_length: int = 77, truncate: bool = False) -> Union[torch.IntTensor, torch.LongTensor]:

|

| 206 |

+

"""

|

| 207 |

+

Returns the tokenized representation of given input string(s)

|

| 208 |

+

|

| 209 |

+

Parameters

|

| 210 |

+

----------

|

| 211 |

+

texts : Union[str, List[str]]

|

| 212 |

+

An input string or a list of input strings to tokenize

|

| 213 |

+

|

| 214 |

+

context_length : int

|

| 215 |

+

The context length to use; all CLIP models use 77 as the context length

|

| 216 |

+

|

| 217 |

+

truncate: bool

|

| 218 |

+

Whether to truncate the text in case its encoding is longer than the context length

|

| 219 |

+

|

| 220 |

+

Returns

|

| 221 |

+

-------

|

| 222 |

+

A two-dimensional tensor containing the resulting tokens, shape = [number of input strings, context_length].

|

| 223 |

+

We return LongTensor when torch version is <1.8.0, since older index_select requires indices to be long.

|

| 224 |

+

"""

|

| 225 |

+

if isinstance(texts, str):

|

| 226 |

+

texts = [texts]

|

| 227 |

+

|

| 228 |

+

sot_token = _tokenizer.encoder["<|startoftext|>"]

|

| 229 |

+

eot_token = _tokenizer.encoder["<|endoftext|>"]

|

| 230 |

+

all_tokens = [[sot_token] + _tokenizer.encode(text) + [eot_token] for text in texts]

|

| 231 |

+

if packaging.version.parse(torch.__version__) < packaging.version.parse("1.8.0"):

|

| 232 |

+

result = torch.zeros(len(all_tokens), context_length, dtype=torch.long)

|

| 233 |

+

else:

|

| 234 |

+

result = torch.zeros(len(all_tokens), context_length, dtype=torch.int)

|

| 235 |

+

|

| 236 |

+

for i, tokens in enumerate(all_tokens):

|

| 237 |

+

if len(tokens) > context_length:

|

| 238 |

+

if truncate:

|

| 239 |

+

tokens = tokens[:context_length]

|

| 240 |

+

tokens[-1] = eot_token

|

| 241 |

+

else:

|

| 242 |

+

raise RuntimeError(f"Input {texts[i]} is too long for context length {context_length}")

|

| 243 |

+

result[i, :len(tokens)] = torch.tensor(tokens)

|

| 244 |

+

|

| 245 |

+

return result

|
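Note on `tokenize` above: each sequence is wrapped in start and end tokens, then written into a zero-filled row of width `context_length`. With `truncate=True`, an over-long sequence is cut to `context_length` and its last position is overwritten with the end-of-text token. The sketch below reproduces only that padding and truncation step on plain Python lists, so it runs without `torch` or the CLIP tokenizer. The helper name `pad_or_truncate` and the token IDs `SOT = 1` and `EOT = 2` are hypothetical and chosen for illustration; they are not the real vocabulary IDs.

```python
from typing import List


def pad_or_truncate(token_lists: List[List[int]], context_length: int,
                    eot_token: int, truncate: bool = False) -> List[List[int]]:
    """Mimic the padding/truncation loop of `tokenize` on plain lists."""
    rows = []
    for tokens in token_lists:
        tokens = list(tokens)  # copy so the caller's list is not modified
        if len(tokens) > context_length:
            if not truncate:
                raise RuntimeError(
                    f"Input is too long for context length {context_length}")
            # Cut to the context length and force the last slot to be EOT.
            tokens = tokens[:context_length]
            tokens[-1] = eot_token
        # Right-pad with zeros, matching the zero-initialised result tensor.
        rows.append(tokens + [0] * (context_length - len(tokens)))
    return rows


# Hypothetical token IDs, for illustration only.
SOT, EOT = 1, 2
short = [SOT, 10, 11, EOT]
long = [SOT, 10, 11, 12, 13, 14, EOT]

rows = pad_or_truncate([short, long], context_length=5,
                       eot_token=EOT, truncate=True)
print(rows)  # [[1, 10, 11, 2, 0], [1, 10, 11, 12, 2]]
```

With the default `truncate=False`, the second input raises `RuntimeError` instead of being cut, which matches the behaviour of the function in the diff.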

CLIP/clip/model.py

ADDED

|

@@ -0,0 +1,436 @@

|

| 1 |

+

from collections import OrderedDict

|

| 2 |

+

from typing import Tuple, Union

|

| 3 |

+

|

| 4 |

+

import numpy as np

|

| 5 |

+

import torch

|

| 6 |

+

import torch.nn.functional as F

|

| 7 |

+

from torch import nn

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

class Bottleneck(nn.Module):

|

| 11 |

+

expansion = 4

|

| 12 |

+

|

| 13 |

+

def __init__(self, inplanes, planes, stride=1):

|

| 14 |

+

super().__init__()

|

| 15 |

+

|

| 16 |

+

# all conv layers have stride 1. an avgpool is performed after the second convolution when stride > 1

|

| 17 |

+

self.conv1 = nn.Conv2d(inplanes, planes, 1, bias=False)

|

| 18 |

+

self.bn1 = nn.BatchNorm2d(planes)

|

| 19 |

+

self.relu1 = nn.ReLU(inplace=True)

|

| 20 |

+

|

| 21 |

+

self.conv2 = nn.Conv2d(planes, planes, 3, padding=1, bias=False)

|

| 22 |

+

self.bn2 = nn.BatchNorm2d(planes)

|

| 23 |

+

self.relu2 = nn.ReLU(inplace=True)

|

| 24 |

+

|

| 25 |

+

self.avgpool = nn.AvgPool2d(stride) if stride > 1 else nn.Identity()

|

| 26 |

+

|

| 27 |

+

self.conv3 = nn.Conv2d(planes, planes * self.expansion, 1, bias=False)

|

| 28 |

+

self.bn3 = nn.BatchNorm2d(planes * self.expansion)

|

| 29 |

+

self.relu3 = nn.ReLU(inplace=True)

|

| 30 |

+

|

| 31 |

+

self.downsample = None

|

| 32 |

+

self.stride = stride

|

| 33 |

+

|

| 34 |

+

if stride > 1 or inplanes != planes * Bottleneck.expansion:

|

| 35 |

+

# downsampling layer is prepended with an avgpool, and the subsequent convolution has stride 1

|

| 36 |

+

self.downsample = nn.Sequential(OrderedDict([

|

| 37 |

+

("-1", nn.AvgPool2d(stride)),

|

| 38 |

+

("0", nn.Conv2d(inplanes, planes * self.expansion, 1, stride=1, bias=False)),

|

| 39 |

+

("1", nn.BatchNorm2d(planes * self.expansion))

|

| 40 |

+

]))

|

| 41 |

+

|

| 42 |

+

def forward(self, x: torch.Tensor):

|

| 43 |

+

identity = x

|

| 44 |

+

|

| 45 |

+

out = self.relu1(self.bn1(self.conv1(x)))

|

| 46 |

+

out = self.relu2(self.bn2(self.conv2(out)))

|

| 47 |

+

out = self.avgpool(out)

|

| 48 |

+

out = self.bn3(self.conv3(out))

|

| 49 |

+

|

| 50 |

+

if self.downsample is not None:

|

| 51 |

+

identity = self.downsample(x)

|

| 52 |

+

|

| 53 |

+

out += identity

|

| 54 |

+

out = self.relu3(out)

|

| 55 |

+

return out

|

| 56 |

+

|

| 57 |

+

|

| 58 |

+

class AttentionPool2d(nn.Module):

|

| 59 |

+

def __init__(self, spacial_dim: int, embed_dim: int, num_heads: int, output_dim: int = None):

|

| 60 |

+

super().__init__()

|

| 61 |

+

self.positional_embedding = nn.Parameter(torch.randn(spacial_dim ** 2 + 1, embed_dim) / embed_dim ** 0.5)

|

| 62 |

+

self.k_proj = nn.Linear(embed_dim, embed_dim)

|

| 63 |

+

self.q_proj = nn.Linear(embed_dim, embed_dim)

|

| 64 |

+

self.v_proj = nn.Linear(embed_dim, embed_dim)

|

| 65 |

+

self.c_proj = nn.Linear(embed_dim, output_dim or embed_dim)

|

| 66 |

+

self.num_heads = num_heads

|

| 67 |

+

|

| 68 |

+

def forward(self, x):

|

| 69 |

+

x = x.flatten(start_dim=2).permute(2, 0, 1) # NCHW -> (HW)NC

|

| 70 |

+

x = torch.cat([x.mean(dim=0, keepdim=True), x], dim=0) # (HW+1)NC

|

| 71 |

+

x = x + self.positional_embedding[:, None, :].to(x.dtype) # (HW+1)NC

|

| 72 |

+

x, _ = F.multi_head_attention_forward(

|

| 73 |

+

query=x[:1], key=x, value=x,

|

| 74 |

+

embed_dim_to_check=x.shape[-1],

|

| 75 |

+

num_heads=self.num_heads,

|

| 76 |

+

q_proj_weight=self.q_proj.weight,

|

| 77 |

+

k_proj_weight=self.k_proj.weight,

|

| 78 |

+

v_proj_weight=self.v_proj.weight,

|

| 79 |

+

in_proj_weight=None,

|

| 80 |

+

in_proj_bias=torch.cat([self.q_proj.bias, self.k_proj.bias, self.v_proj.bias]),

|

| 81 |

+

bias_k=None,

|

| 82 |

+

bias_v=None,

|

| 83 |

+

add_zero_attn=False,

|

| 84 |

+

dropout_p=0,

|

| 85 |

+

out_proj_weight=self.c_proj.weight,

|

| 86 |

+

out_proj_bias=self.c_proj.bias,

|

| 87 |

+

use_separate_proj_weight=True,

|

| 88 |

+

training=self.training,

|

| 89 |

+

need_weights=False

|

| 90 |

+

)

|

| 91 |

+

return x.squeeze(0)

|

| 92 |

+

|

| 93 |

+

|

| 94 |

+

class ModifiedResNet(nn.Module):

|

| 95 |

+

"""

|

| 96 |

+

A ResNet class that is similar to torchvision's but contains the following changes:

|

| 97 |

+

- There are now 3 "stem" convolutions as opposed to 1, with an average pool instead of a max pool.

|

| 98 |

+

- Performs anti-aliasing strided convolutions, where an avgpool is prepended to convolutions with stride > 1

|

| 99 |

+

- The final pooling layer is a QKV attention instead of an average pool

|

| 100 |

+

"""

|

| 101 |

+

|

| 102 |

+

def __init__(self, layers, output_dim, heads, input_resolution=224, width=64):

|

| 103 |

+

super().__init__()

|

| 104 |

+

self.output_dim = output_dim

|

| 105 |

+

self.input_resolution = input_resolution

|

| 106 |

+

|

| 107 |

+

# the 3-layer stem

|

| 108 |

+

self.conv1 = nn.Conv2d(3, width // 2, kernel_size=3, stride=2, padding=1, bias=False)

|

| 109 |

+

self.bn1 = nn.BatchNorm2d(width // 2)

|

| 110 |

+

self.relu1 = nn.ReLU(inplace=True)

|

| 111 |

+

self.conv2 = nn.Conv2d(width // 2, width // 2, kernel_size=3, padding=1, bias=False)

|

| 112 |

+

self.bn2 = nn.BatchNorm2d(width // 2)

|

| 113 |

+

self.relu2 = nn.ReLU(inplace=True)

|

| 114 |

+

self.conv3 = nn.Conv2d(width // 2, width, kernel_size=3, padding=1, bias=False)

|

| 115 |

+

self.bn3 = nn.BatchNorm2d(width)

|

| 116 |

+

self.relu3 = nn.ReLU(inplace=True)

|

| 117 |

+

self.avgpool = nn.AvgPool2d(2)

|

| 118 |

+

|

| 119 |

+

# residual layers

|

| 120 |

+

self._inplanes = width # this is a *mutable* variable used during construction

|

| 121 |

+

self.layer1 = self._make_layer(width, layers[0])

|

| 122 |

+

self.layer2 = self._make_layer(width * 2, layers[1], stride=2)

|

| 123 |

+

self.layer3 = self._make_layer(width * 4, layers[2], stride=2)

|

| 124 |

+

self.layer4 = self._make_layer(width * 8, layers[3], stride=2)

|

| 125 |

+

|

| 126 |

+

embed_dim = width * 32 # the ResNet feature dimension

|

| 127 |

+

self.attnpool = AttentionPool2d(input_resolution // 32, embed_dim, heads, output_dim)

|

| 128 |

+

|

| 129 |

+

def _make_layer(self, planes, blocks, stride=1):

|

| 130 |

+

layers = [Bottleneck(self._inplanes, planes, stride)]

|

| 131 |

+

|

| 132 |

+

self._inplanes = planes * Bottleneck.expansion

|

| 133 |

+

for _ in range(1, blocks):

|

| 134 |

+

layers.append(Bottleneck(self._inplanes, planes))

|

| 135 |

+

|

| 136 |

+

return nn.Sequential(*layers)

|

| 137 |

+

|

| 138 |

+

def forward(self, x):

|

| 139 |

+

def stem(x):

|

| 140 |

+

x = self.relu1(self.bn1(self.conv1(x)))

|

| 141 |

+

x = self.relu2(self.bn2(self.conv2(x)))

|

| 142 |

+

x = self.relu3(self.bn3(self.conv3(x)))

|

| 143 |

+

x = self.avgpool(x)

|

| 144 |

+

return x

|

| 145 |

+

|

| 146 |

+

x = x.type(self.conv1.weight.dtype)

|

| 147 |

+

x = stem(x)

|

| 148 |

+

x = self.layer1(x)

|

| 149 |

+

x = self.layer2(x)

|

| 150 |

+

x = self.layer3(x)

|

| 151 |

+

x = self.layer4(x)

|

| 152 |

+

x = self.attnpool(x)

|

| 153 |

+

|

| 154 |

+

return x

|

| 155 |

+

|

| 156 |

+

|

| 157 |

+

class LayerNorm(nn.LayerNorm):

|

| 158 |

+

"""Subclass torch's LayerNorm to handle fp16."""

|

| 159 |

+

|

| 160 |

+

def forward(self, x: torch.Tensor):

|

| 161 |

+

orig_type = x.dtype

|

| 162 |

+

ret = super().forward(x.type(torch.float32))

|

| 163 |

+

return ret.type(orig_type)

|

| 164 |

+

|

| 165 |

+

|

| 166 |

+

class QuickGELU(nn.Module):

|

| 167 |

+

def forward(self, x: torch.Tensor):

|

| 168 |

+

return x * torch.sigmoid(1.702 * x)

|

| 169 |

+

|

| 170 |

+

|

| 171 |

+

class ResidualAttentionBlock(nn.Module):

|

| 172 |

+

def __init__(self, d_model: int, n_head: int, attn_mask: torch.Tensor = None):

|

| 173 |

+

super().__init__()

|

| 174 |

+

|

| 175 |

+

self.attn = nn.MultiheadAttention(d_model, n_head)

|

| 176 |

+

self.ln_1 = LayerNorm(d_model)

|

| 177 |

+

self.mlp = nn.Sequential(OrderedDict([

|

| 178 |

+

("c_fc", nn.Linear(d_model, d_model * 4)),

|

| 179 |

+

("gelu", QuickGELU()),

|

| 180 |

+

("c_proj", nn.Linear(d_model * 4, d_model))

|

| 181 |

+

]))

|

| 182 |

+

self.ln_2 = LayerNorm(d_model)

|

| 183 |

+

self.attn_mask = attn_mask

|

| 184 |

+

|

| 185 |

+

def attention(self, x: torch.Tensor):

|

| 186 |

+

self.attn_mask = self.attn_mask.to(dtype=x.dtype, device=x.device) if self.attn_mask is not None else None

|

| 187 |

+

return self.attn(x, x, x, need_weights=False, attn_mask=self.attn_mask)[0]

|

| 188 |

+

|

| 189 |

+

def forward(self, x: torch.Tensor):

|

| 190 |

+

x = x + self.attention(self.ln_1(x))

|

| 191 |

+

x = x + self.mlp(self.ln_2(x))

|

| 192 |

+

return x

|

| 193 |

+

|

| 194 |

+

|

| 195 |

+

class Transformer(nn.Module):

|

| 196 |

+

def __init__(self, width: int, layers: int, heads: int, attn_mask: torch.Tensor = None):

|

| 197 |

+

super().__init__()

|

| 198 |

+

self.width = width

|

| 199 |

+

self.layers = layers

|

| 200 |

+

self.resblocks = nn.Sequential(*[ResidualAttentionBlock(width, heads, attn_mask) for _ in range(layers)])

|

| 201 |

+

|

| 202 |

+

def forward(self, x: torch.Tensor):

|

| 203 |

+

return self.resblocks(x)

|

| 204 |

+

|

| 205 |

+

|

| 206 |

+

class VisionTransformer(nn.Module):

|

| 207 |

+

def __init__(self, input_resolution: int, patch_size: int, width: int, layers: int, heads: int, output_dim: int):

|

| 208 |

+

super().__init__()

|

| 209 |

+

self.input_resolution = input_resolution

|

| 210 |

+

self.output_dim = output_dim

|

| 211 |

+

self.conv1 = nn.Conv2d(in_channels=3, out_channels=width, kernel_size=patch_size, stride=patch_size, bias=False)

|

| 212 |

+

|

| 213 |

+

scale = width ** -0.5

|

| 214 |

+

self.class_embedding = nn.Parameter(scale * torch.randn(width))

|

| 215 |

+

self.positional_embedding = nn.Parameter(scale * torch.randn((input_resolution // patch_size) ** 2 + 1, width))

|

| 216 |

+

self.ln_pre = LayerNorm(width)

|

| 217 |

+

|

| 218 |

+

self.transformer = Transformer(width, layers, heads)

|

| 219 |

+