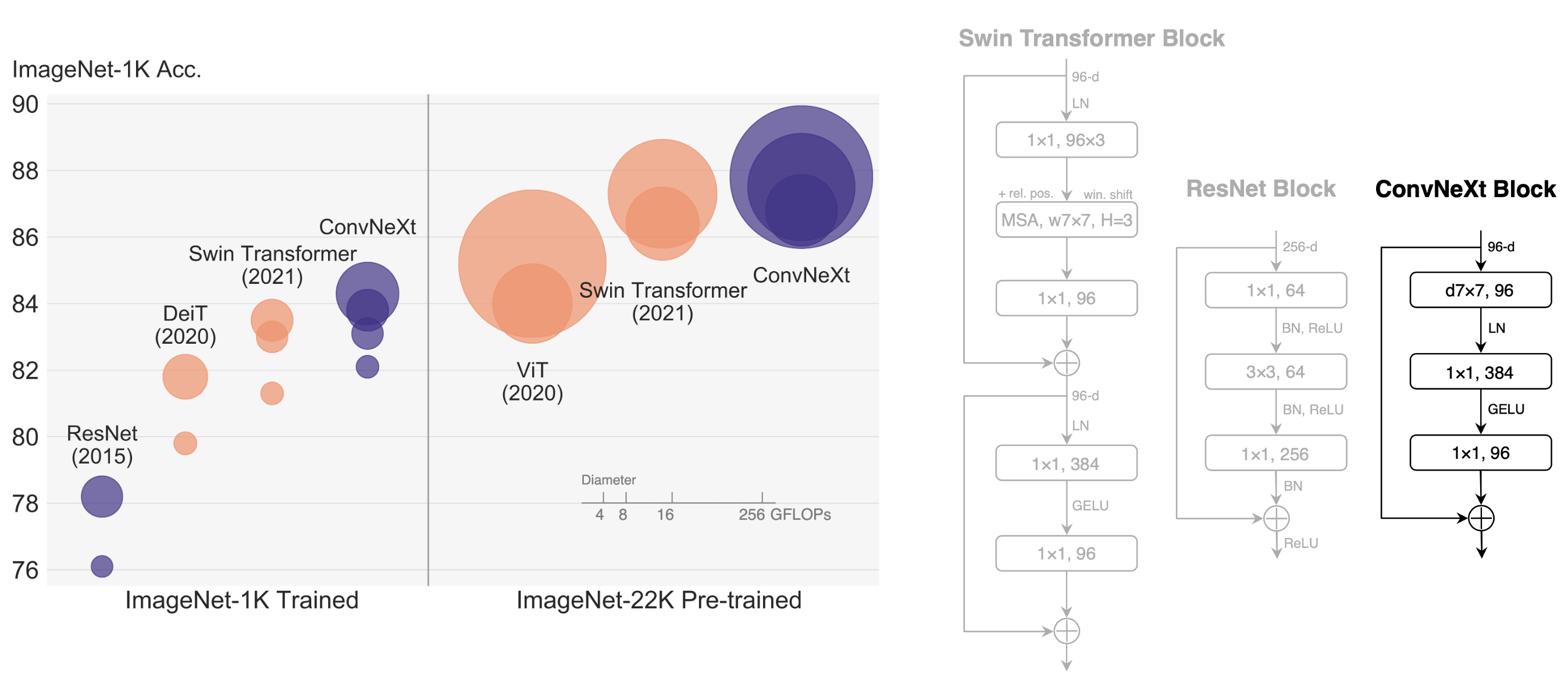

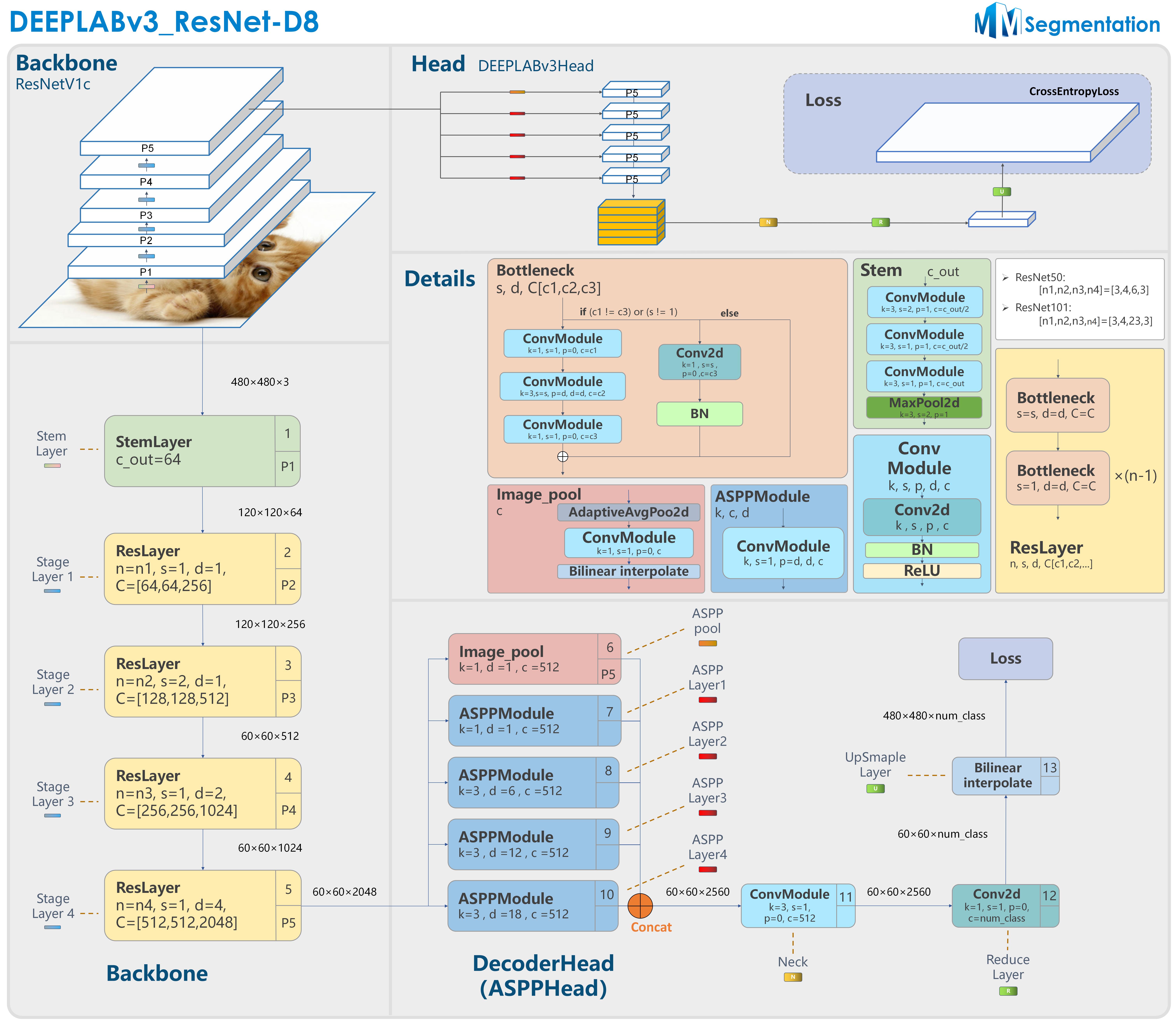

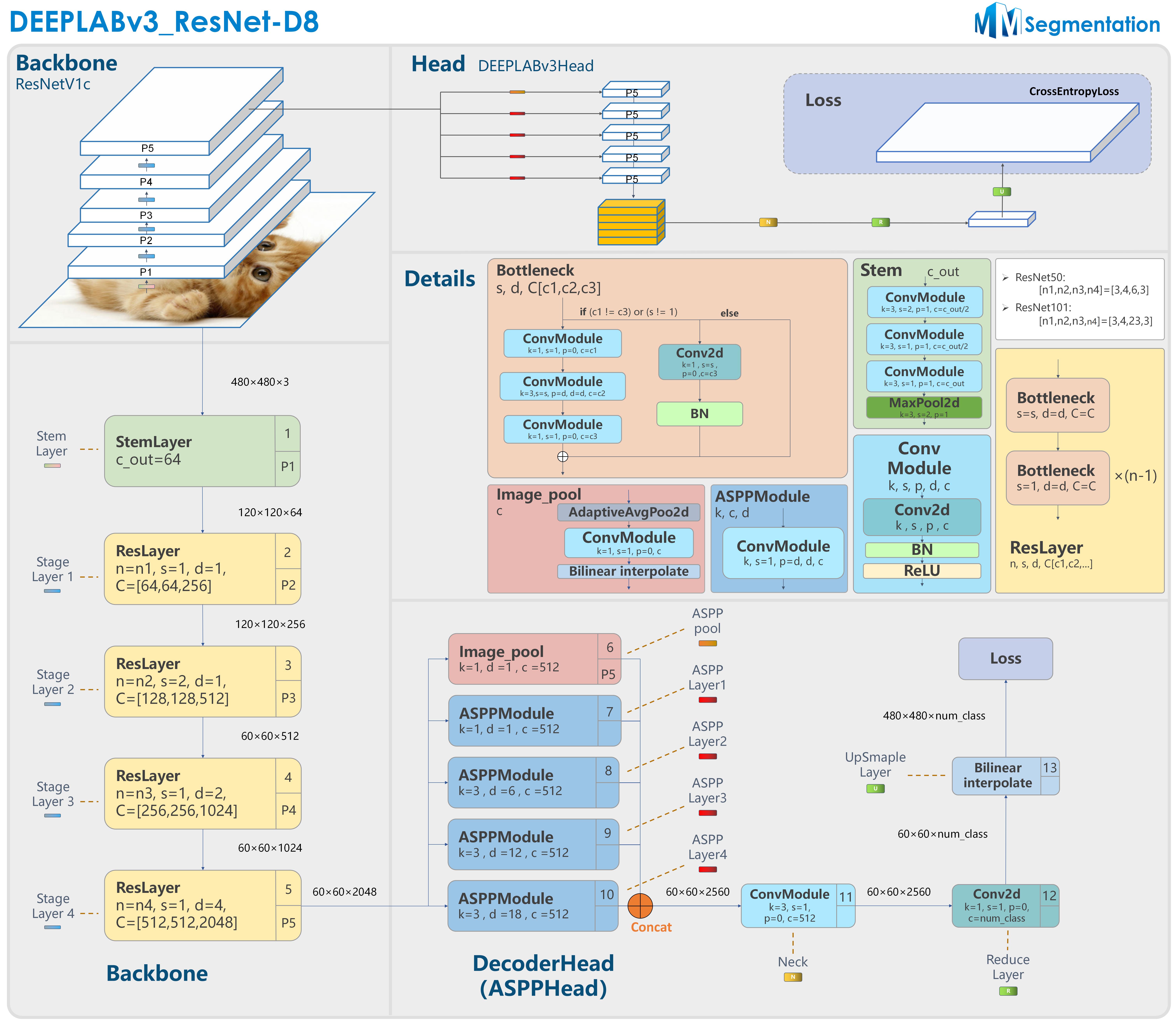

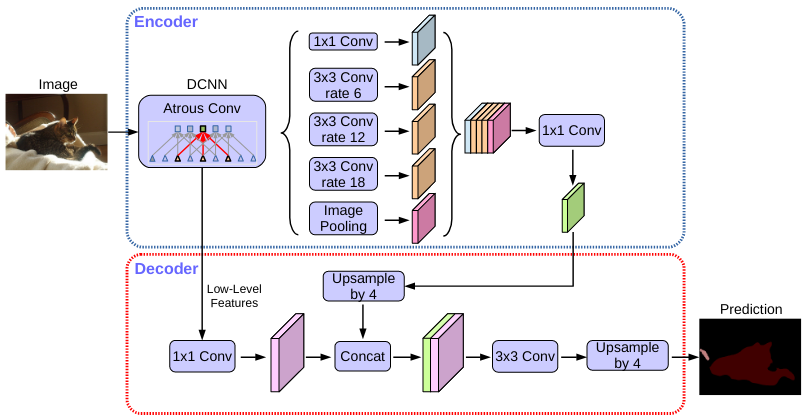

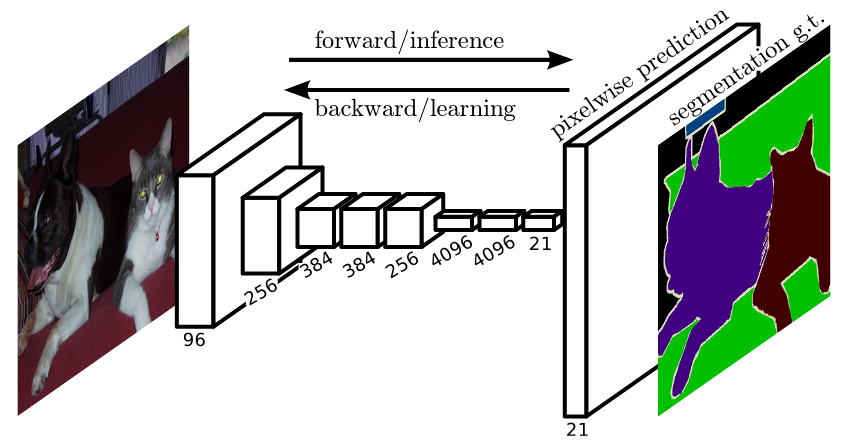

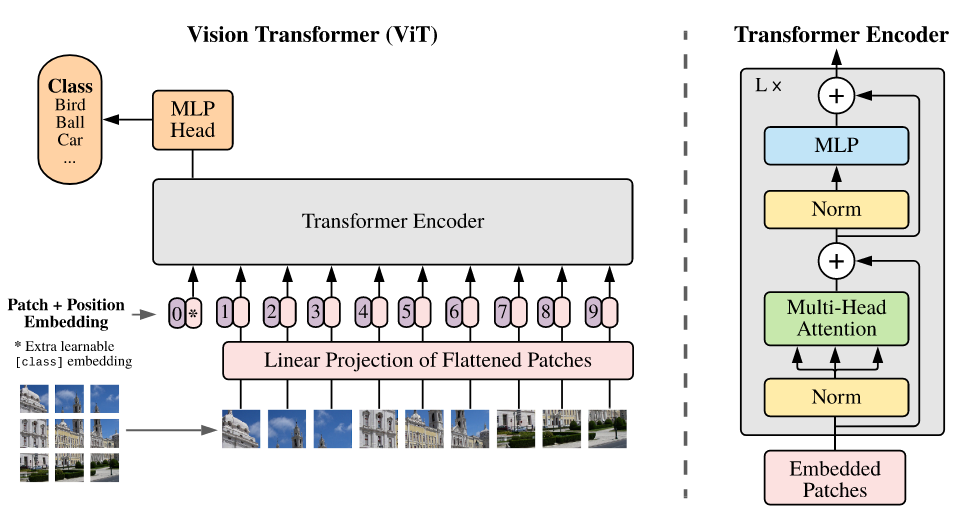

DeepLabv3 ResNet-D8 model structure
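
The D8 variant keeps the backbone at an output stride of 8 instead of the usual 32. The sketch below illustrates that spatial bookkeeping without any dependencies. It rests on assumptions this document does not state: the common DeepLabv3 setting where the last two ResNet stages use stride 1 with dilations 2 and 4, and 3x3 ASPP branches with rates 12, 24 and 36. The function names are hypothetical helpers, not part of any library.

```python
from typing import List, Tuple

Stage = Tuple[int, int, int]  # (cumulative output stride, feature size, dilation)


def dilated_resnet_geometry(
    input_size: int,
    strides: Tuple[int, ...] = (1, 2, 1, 1),    # assumed D8 strides per stage
    dilations: Tuple[int, ...] = (1, 1, 2, 4),  # assumed D8 dilations per stage
    stem_stride: int = 4,
) -> List[Stage]:
    """Track output stride and feature-map size through the four ResNet stages.

    Assumes the input size is divisible by the final output stride.
    """
    stages: List[Stage] = []
    total = stem_stride  # stride-2 stem followed by a stride-2 max-pool
    for stride, dilation in zip(strides, dilations):
        total *= stride
        stages.append((total, input_size // total, dilation))
    return stages


def effective_kernel(k: int, d: int) -> int:
    """Spatial extent covered by a k x k kernel with dilation d."""
    return k + (k - 1) * (d - 1)


def conv_out_size(n: int, k: int, s: int = 1, p: int = 0, d: int = 1) -> int:
    """Standard convolution output-size formula."""
    return (n + 2 * p - d * (k - 1) - 1) // s + 1


if __name__ == "__main__":
    for i, (out_stride, size, dil) in enumerate(dilated_resnet_geometry(512), start=1):
        print(f"stage {i}: output stride {out_stride}, {size}x{size}, dilation {dil}")
    # ASPP 3x3 branches; rates 12/24/36 are an assumption for output stride 8
    for rate in (12, 24, 36):
        print(rate, effective_kernel(3, rate), conv_out_size(64, 3, p=rate, d=rate))
```

For a 512x512 input, the last two stages stay at 64x64 while their dilation grows, and each ASPP branch (padding equal to its rate) keeps that 64x64 size while covering a much wider window.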

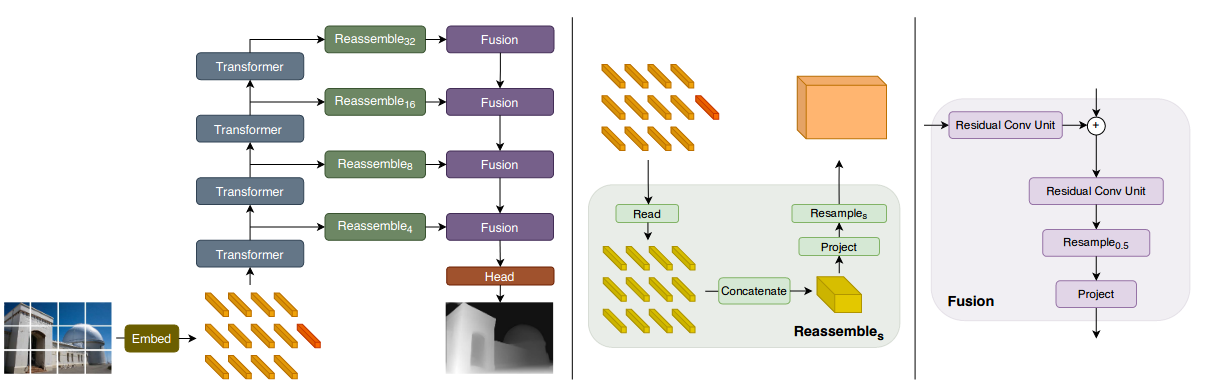

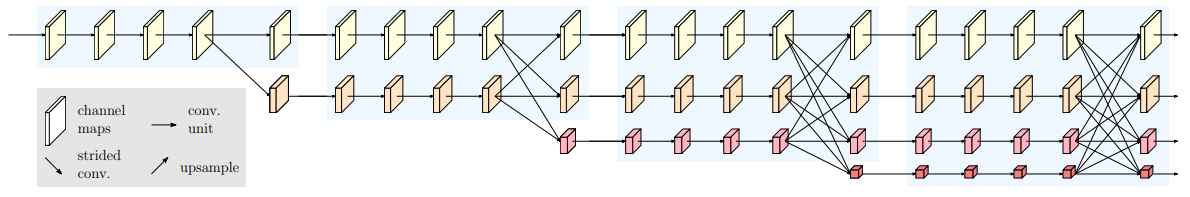

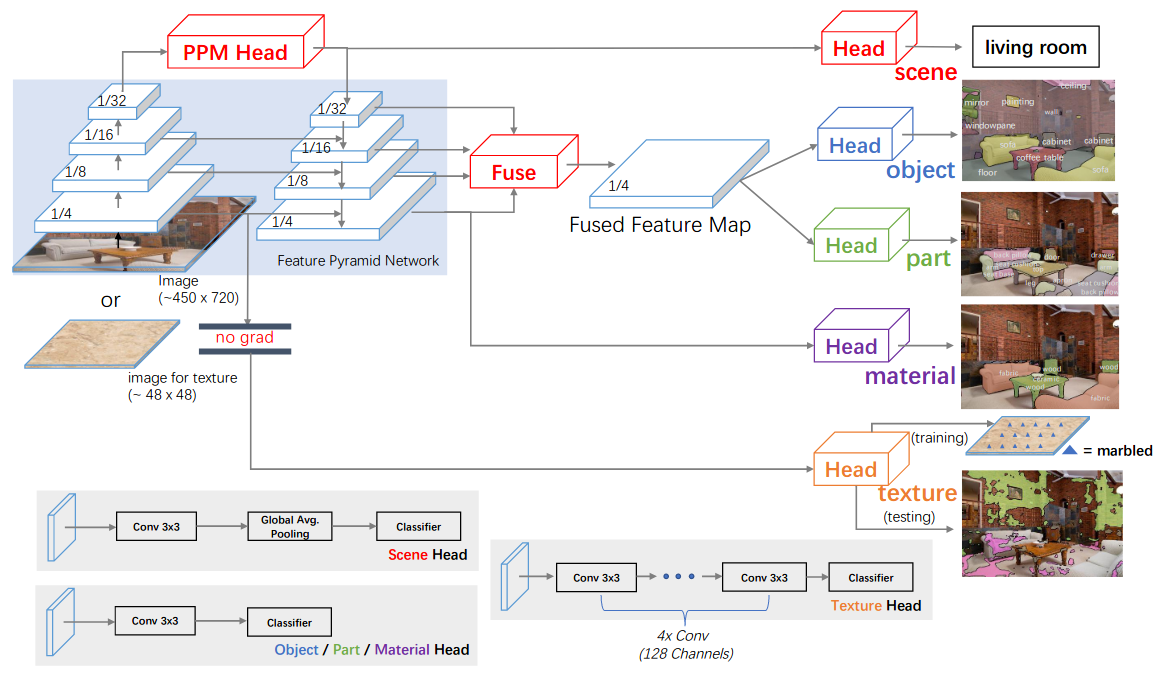

PSPNet-R50 D8 model structure
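
PSPNet-R50 D8 pairs a dilated ResNet-50 backbone at output stride 8 with a pyramid pooling module. The rough, single-channel sketch below shows only the pooling step. It assumes the 1, 2, 3 and 6 bin sizes from the original PSPNet paper and PyTorch-style adaptive-pooling bin edges. The learned 1x1 convolutions and the bilinear upsampling of each branch are omitted, and all names are hypothetical.

```python
from typing import Dict, List, Sequence

Grid = List[List[float]]


def adaptive_avg_pool2d(x: Grid, out_size: int) -> Grid:
    """Average-pool a 2D grid into out_size x out_size bins.

    Bin i spans [floor(i * n / out), ceil((i + 1) * n / out)), the same
    edge rule PyTorch uses, so bins may overlap when n is not divisible.
    """
    h, w = len(x), len(x[0])

    def edges(i: int, n: int) -> range:
        start = (i * n) // out_size
        end = -(-((i + 1) * n) // out_size)  # integer ceil division
        return range(start, end)

    pooled: Grid = []
    for i in range(out_size):
        row = []
        for j in range(out_size):
            cells = [x[r][c] for r in edges(i, h) for c in edges(j, w)]
            row.append(sum(cells) / len(cells))
        pooled.append(row)
    return pooled


def pyramid_pool(x: Grid, bins: Sequence[int] = (1, 2, 3, 6)) -> Dict[int, Grid]:
    """Pool one feature channel at every pyramid level."""
    return {b: adaptive_avg_pool2d(x, b) for b in bins}


def psp_concat_channels(in_channels: int, num_levels: int = 4) -> int:
    """Channels after concatenation, if each level is reduced to in/N by a 1x1 conv."""
    return in_channels + num_levels * (in_channels // num_levels)


if __name__ == "__main__":
    feat = [[float(r * 6 + c) for c in range(6)] for r in range(6)]
    for b, grid in pyramid_pool(feat).items():
        print(b, grid)
    print(psp_concat_channels(2048))
```

Assume a 2048-channel ResNet-50 output and the paper's 1/N channel reduction. Concatenating the input with four 512-channel branches then yields 4096 channels. Actual implementations may use a different per-branch width.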
