Update README.md

README.md

CHANGED

@@ -16,7 +16,7 @@ tags:

# Breeze-7B-FC-v1_0-GGUF

-- Original model:

A conversion of [Breeze-7B-FC-v1_0](https://huggingface.co/MediaTek-Research/Breeze-7B-FC-v1_0) into different quantisation levels via [llama.cpp](https://github.com/ggerganov/llama.cpp).

@@ -111,3 +111,120 @@ for text in llm(tokenizer.apply_chat_template(chat, tokenize=False), stream=True

# 4. 珍珠奶茶 (Bubble tea) - 珍珠奶茶是一種以紅茶為基底的飲品,加入珍珠(Q彈的小湯圓)和鮮奶。它起源於台灣,並迅速成為全球流行的飲料。珍珠奶茶在全台灣都有不少知名品牌,例如茶湯會、五桐號等。
# 5. 臭豆腐 (Stinky tofu) - 臭豆腐是一種以發酵豆腐為原料製作的傳統小吃。它具有強烈的氣味,但味道獨特且深受台灣人喜愛。臭豆腐通常會搭配多種調味料和配料,例如辣椒醬、蒜泥、酸菜等。臭豆腐在全台灣都有不少知名店家,例如阿宗麵線、大勇街臭豆腐等。
```


# Breeze-7B-FC-v1_0-GGUF

+- Original model: [Breeze-7B-FC-v1_0](https://huggingface.co/MediaTek-Research/Breeze-7B-FC-v1_0)

A conversion of [Breeze-7B-FC-v1_0](https://huggingface.co/MediaTek-Research/Breeze-7B-FC-v1_0) into different quantisation levels via [llama.cpp](https://github.com/ggerganov/llama.cpp).

# 4. 珍珠奶茶 (Bubble tea) - 珍珠奶茶是一種以紅茶為基底的飲品,加入珍珠(Q彈的小湯圓)和鮮奶。它起源於台灣,並迅速成為全球流行的飲料。珍珠奶茶在全台灣都有不少知名品牌,例如茶湯會、五桐號等。
# 5. 臭豆腐 (Stinky tofu) - 臭豆腐是一種以發酵豆腐為原料製作的傳統小吃。它具有強烈的氣味,但味道獨特且深受台灣人喜愛。臭豆腐通常會搭配多種調味料和配料,例如辣椒醬、蒜泥、酸菜等。臭豆腐在全台灣都有不少知名店家,例如阿宗麵線、大勇街臭豆腐等。
```


**Instruction following**

```python
from mtkresearch.llm.prompt import MRPromptV2

sys_prompt = ('You are a helpful AI assistant built by MediaTek Research. '
              'The user you are helping speaks Traditional Chinese and comes from Taiwan.')

prompt_engine = MRPromptV2()

conversations = [
    {"role": "system", "content": sys_prompt},
    {"role": "user", "content": "請問什麼是深度學習?"},  # "What is deep learning?"
]

# Render the conversation into the model's prompt format.
prompt = prompt_engine.get_prompt(conversations)

# NOTE: `_inference`, `llm`, and `params` are not defined in this snippet;
# they are assumed to come from the surrounding README.
output_str = _inference(prompt, llm, params)
result = prompt_engine.parse_generated_str(output_str)

print(result)
# {'role': 'assistant',
#  'content': '深度學習(Deep Learning)是一種機器學習方法,它模仿人類大腦的神經網路結構來
#              處理複雜的數據和任務。在深度學習中,模型由多層人工神經元組成,每個神經元之間有
#              權重連接,並通過非線性轉換進行計算。這些層與層之間的相互作用使模型能夠學習複雜
#              的函數關係或模式,從而解決各種問題,如圖像識別、自然語言理解、語音辨識等。深度
#              學習通常需要大量的數據和強大的計算能力,因此經常使用圖形處理器(GPU)或特殊的
#              加速器來執行。'}
# English gloss of the reply: deep learning is a machine learning method that
# mimics the brain's neural network structure, stacking layers of weighted,
# non-linear neurons; it usually needs large datasets and GPUs or accelerators.
```


**Function Calling**

```python
import json

from mtkresearch.llm.prompt import MRPromptV2

# Function schema advertised to the model.
functions = [
    {
        "name": "get_current_weather",
        "description": "Get the current weather in a given location",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {
                    "type": "string",
                    "description": "The city and state, e.g. San Francisco, CA"
                },
                "unit": {
                    "type": "string",
                    "enum": ["celsius", "fahrenheit"]
                }
            },
            "required": ["location"]
        }
    }
]

def fake_get_current_weather(location, unit=None):
    # Stub implementation: always reports 30 degrees.
    return {'temperature': 30}

# Maps function names the model may call to local Python callables.
mapping = {
    'get_current_weather': fake_get_current_weather
}

prompt_engine = MRPromptV2()

# Stage 1: send the user query together with the function schema.
conversations = [
    # "What is the current temperature in Taipei, in degrees Celsius?"
    {"role": "user", "content": "請問台北目前溫度是攝氏幾度?"},
]

prompt = prompt_engine.get_prompt(conversations, functions=functions)

# NOTE: `_inference`, `llm`, and `params` are not defined in this snippet;
# they are assumed to come from the surrounding README.
output_str = _inference(prompt, llm, params)
result = prompt_engine.parse_generated_str(output_str)

print(result)
# {'role': 'assistant',
#  'tool_calls': [
#    {'id': 'call_U9bYCBRAbF639uUqfwehwSbw', 'type': 'function',
#     'function': {'name': 'get_current_weather', 'arguments': '{"location": "台北, 台灣", "unit": "celsius"}'}}]}

# Stage 2: execute the function the model asked for.
conversations.append(result)

tool_call = result['tool_calls'][0]
func_name = tool_call['function']['name']
func = mapping[func_name]
arguments = json.loads(tool_call['function']['arguments'])  # arguments arrive as a JSON string
called_result = func(**arguments)

# Stage 3: append the tool result and ask the model for the final answer.
conversations.append(
    {
        'role': 'tool',
        'tool_call_id': tool_call['id'],
        'name': func_name,
        'content': json.dumps(called_result)
    }
)

prompt = prompt_engine.get_prompt(conversations, functions=functions)

output_str2 = _inference(prompt, llm, params)
result2 = prompt_engine.parse_generated_str(output_str2)
print(result2)
# {'role': 'assistant', 'content': '台北目前的溫度是攝氏30度'}
# ("The current temperature in Taipei is 30 degrees Celsius")
```
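
Stage 2 above handles only the first entry in `tool_calls` and assumes the function name and arguments are always valid. The following is a minimal sketch of a more defensive dispatch step, assuming the same message shapes shown in the example output. `run_tool_calls`, the `get_stock_price` call, and the error payload format are hypothetical and are not part of `mtkresearch`.

```python
import json


def run_tool_calls(assistant_msg, mapping):
    """Execute every tool call in an assistant message and build the tool replies."""
    tool_messages = []
    for tool_call in assistant_msg.get("tool_calls", []):
        func_name = tool_call["function"]["name"]
        func = mapping.get(func_name)
        if func is None:
            # The model asked for a function that is not registered locally.
            content = {"error": f"unknown function: {func_name}"}
        else:
            try:
                arguments = json.loads(tool_call["function"]["arguments"])
                content = func(**arguments)
            except (json.JSONDecodeError, TypeError) as exc:
                # Malformed JSON or arguments that do not match the signature.
                content = {"error": str(exc)}
        tool_messages.append({
            "role": "tool",
            "tool_call_id": tool_call["id"],
            "name": func_name,
            "content": json.dumps(content, ensure_ascii=False),
        })
    return tool_messages


def fake_get_current_weather(location, unit=None):
    # Same stub as in the example above.
    return {"temperature": 30}


assistant_msg = {
    "role": "assistant",
    "tool_calls": [
        {"id": "call_1", "type": "function",
         "function": {"name": "get_current_weather",
                      "arguments": '{"location": "台北, 台灣", "unit": "celsius"}'}},
        {"id": "call_2", "type": "function",
         "function": {"name": "get_stock_price", "arguments": "{}"}},
    ],
}

tool_messages = run_tool_calls(
    assistant_msg, {"get_current_weather": fake_get_current_weather}
)
print(tool_messages)
```

The returned list can be passed to `conversations.extend(...)` before building the next prompt. Reporting failures back as tool content, rather than raising, gives the model a chance to recover in its next turn.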

|

| 226 |

+

|

| 227 |

+

|

| 228 |

+

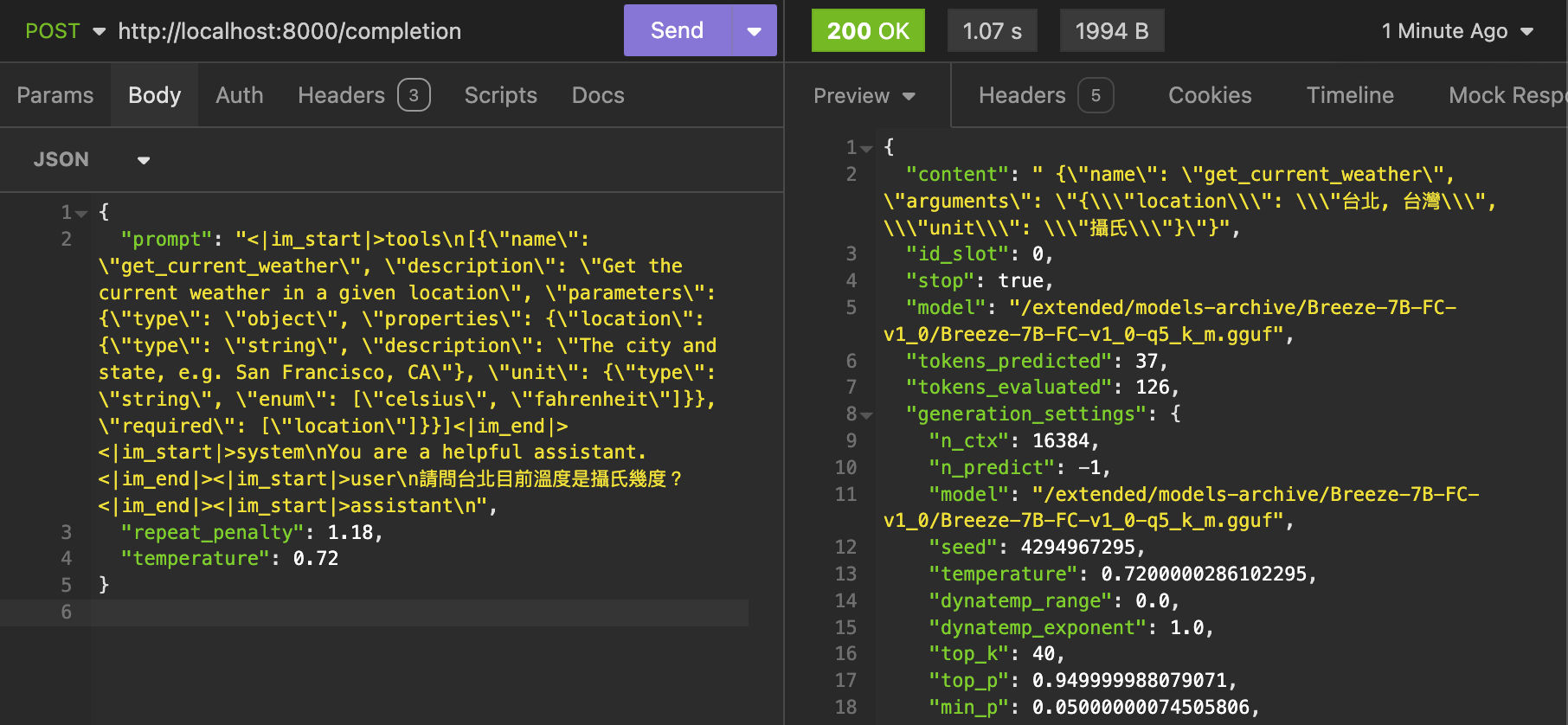

3. Example function calling via `llama.cpp` server:

|

| 229 |

+

|

| 230 |

+

|