A-LLM: Korean Large Language Model based on Llama-3

Introduction

A-LLM is a Korean large language model built on Meta's Llama-3-8B architecture and optimized for Korean language understanding and generation. It was trained with DoRA (Weight-Decomposed Low-Rank Adaptation) on a comprehensive Korean dataset and achieves state-of-the-art performance among open-source Korean language models.
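For readers unfamiliar with DoRA, the method splits a pretrained weight matrix W0 into a magnitude vector m and a direction. It applies a LoRA-style low-rank update BA to the direction only, then recombines the two as W' = m · (W0 + BA) / ||W0 + BA||_c, where ||·||_c is the norm of each column. The NumPy sketch below illustrates only that merge step. The shapes and rank are arbitrary, `dora_weight` is a hypothetical helper, and the card does not state the rank, target modules, or other DoRA hyperparameters used to train A-LLM.

```python
import numpy as np


def dora_weight(W0, A, B, m):
    """Merged DoRA weight: m * (W0 + B @ A) / column-wise norm of (W0 + B @ A)."""
    V = W0 + B @ A                                        # direction: frozen weight plus low-rank update
    col_norm = np.linalg.norm(V, axis=0, keepdims=True)   # shape (1, k): one norm per column
    return m * (V / col_norm)                             # rescale each unit-norm column by its magnitude


rng = np.random.default_rng(0)
d, k, r = 8, 6, 2                                   # illustrative sizes and rank (hypothetical)
W0 = rng.standard_normal((d, k))                    # frozen pretrained weight
A = rng.standard_normal((r, k)) * 0.01              # trainable low-rank factor
B = np.zeros((d, r))                                # B starts at zero, as in LoRA
m = np.linalg.norm(W0, axis=0, keepdims=True)       # magnitude initialized to ||W0||_c

print(np.allclose(dora_weight(W0, A, B, m), W0))    # prints True
```

At initialization (B = 0, m = ||W0||_c) the merged weight equals W0, so fine-tuning starts exactly from the pretrained model. After training, each column of W' has norm equal to its learned magnitude, which lets DoRA adjust magnitude and direction separately.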

Performance Benchmarks

Horangi Korean LLM Leaderboard

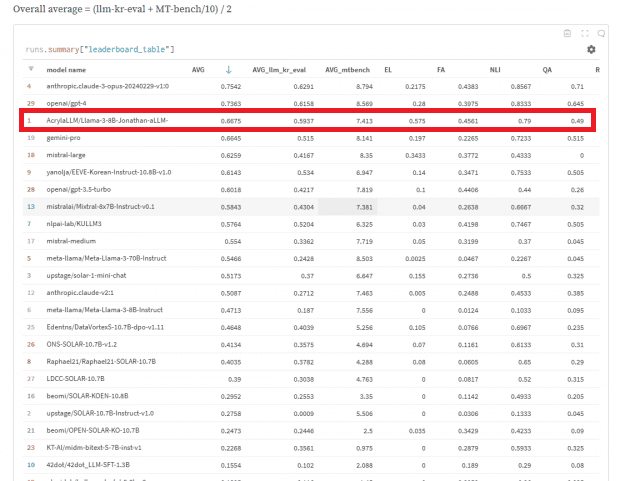

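The model was evaluated on the Horangi Korean LLM Leaderboard, which combines two major evaluation frameworks. Each framework's score is normalized to a 0–1 scale (MT-Bench scores, given out of 10, are divided by 10), and the Total Score is the average of the two normalized scores.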

1. LLM-KR-EVAL

A comprehensive benchmark that measures fundamental NLP capabilities across 5 core tasks:

- Natural Language Inference (NLI)

- Question Answering (QA)

- Reading Comprehension (RC)

- Entity Linking (EL)

- Fundamental Analysis (FA)

The benchmark comprises 10 datasets spread across these tasks, providing a thorough assessment of Korean language understanding and processing.

2. MT-Bench

A diverse evaluation framework of 80 questions (10 from each of 8 categories), with GPT-4 serving as the judge. The categories are:

- Writing

- Roleplay

- Extraction

- Reasoning

- Math

- Coding

- Knowledge (STEM)

- Knowledge (Humanities/social science)

Performance Results (documented on 10/04/24)

| Model | Total Score | AVG_llm_kr_eval | AVG_mtbench (out of 10) |
|---|---|---|---|
| A-LLM (Ours) | 0.6675 | 0.5937 | 7.413 |
| Mixtral-8x7B | 0.5843 | 0.4304 | 7.381 |
| KULLM3 | 0.5764 | 0.5204 | 6.325 |
| SOLAR-1-mini | 0.5173 | 0.37 | 6.647 |
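The Total Score column can be reproduced from the other two columns under one reading of the normalization described above. LLM-KR-EVAL is already on a 0–1 scale, MT-Bench scores (out of 10) are divided by 10, and the two values are averaged. The sketch below applies that formula to the values in the table. `total_score` is a hypothetical helper, not leaderboard code.

```python
# Scores copied from the table above: (AVG_llm_kr_eval, AVG_mtbench)
results = {
    "A-LLM (Ours)": (0.5937, 7.413),
    "Mixtral-8x7B": (0.4304, 7.381),
    "KULLM3": (0.5204, 6.325),
    "SOLAR-1-mini": (0.37, 6.647),
}


def total_score(llm_kr_eval: float, mtbench: float) -> float:
    """Average of LLM-KR-EVAL (already 0-1) and MT-Bench rescaled from a 10-point scale."""
    return (llm_kr_eval + mtbench / 10) / 2


for model, (kr_eval, mt) in results.items():
    print(f"{model}: {total_score(kr_eval, mt):.5f}")
```

With these inputs, the computed totals match the reported Total Score column to within 0.0001, which is consistent with rounding to four decimal places.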

Our model scores highest in every column of the table above, showing strong capabilities in both general language understanding (LLM-KR-EVAL) and diverse task-specific applications (MT-Bench). At the time of evaluation, it achieved the highest performance among open-source Korean large language models on this leaderboard. It also outperforms similarly scaled models with fewer than roughly 10B parameters.

Model Components

This repository provides:

- Tokenizer configuration

- Model weights in safetensors format

Usage Instructions

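```python
from typing import Optional

import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

# Load tokenizer and model
model_path = "AcrylaLLM/Llama-3-8B-Jonathan-aLLM-Instruct-v1.0"
tokenizer = AutoTokenizer.from_pretrained(model_path)
model = AutoModelForCausalLM.from_pretrained(
    model_path,
    torch_dtype=torch.float16,  # half precision to reduce GPU memory
    device_map="auto",          # spread layers over available devices (requires accelerate)
)


# Example prompt template
def generate_prompt(instruction: str, context: Optional[str] = None) -> str:
    """Build the instruction prompt, adding a context section when one is given."""
    if context:
        return f"""### Instruction:
{instruction}

### Context:
{context}

### Response:"""
    return f"""### Instruction:
{instruction}

### Response:"""


# Example usage
# "Please answer the following question: What impact does the advancement of AI have on our society?"
instruction = "다음 질문에 답변해주세요: 인공지능의 발전이 우리 사회에 미치는 영향은 무엇일까요?"
prompt = generate_prompt(instruction)

# Tokenize and generate
inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
outputs = model.generate(
    **inputs,
    max_new_tokens=512,       # limit on generated tokens, not counting the prompt
    temperature=0.7,
    top_p=0.9,
    repetition_penalty=1.2,
    do_sample=True,
    pad_token_id=tokenizer.eos_token_id,  # Llama-3 tokenizers define no pad token by default
)

# outputs[0] contains the prompt tokens followed by the generated tokens
response = tokenizer.decode(outputs[0], skip_special_tokens=True)
print(response)
```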
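`tokenizer.decode(outputs[0], ...)` returns the prompt together with the completion. This template also has no dedicated stop marker, so the model may go on to write a new instruction block. A small post-processing step can keep only the answer. `extract_response` is a hypothetical convenience function, not part of the repository. It assumes the instruction text itself does not contain the `### Response:` header.

```python
def extract_response(decoded: str) -> str:
    """Keep the text after the first '### Response:' header, cut at any new '### Instruction:' block."""
    # Drop the echoed prompt: everything up to and including the response header
    _, marker, answer = decoded.partition("### Response:")
    if not marker:
        answer = decoded  # no header found: treat the text as the completion itself
    # Stop at a follow-up instruction block if the model started one
    answer = answer.split("### Instruction:", 1)[0]
    return answer.strip()


decoded = "### Instruction:\nWhat is DoRA?\n\n### Response:\nA fine-tuning method.\n\n### Instruction:\nExtra turn"
print(extract_response(decoded))  # A fine-tuning method.
```

In the usage example above, this would be applied as `print(extract_response(response))`.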

Generation Settings

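```python
# Generation parameters
generation_config = {
    "bos_token_id": 128000,
    "do_sample": True,
    "eos_token_id": 128001,
    "max_length": 4096,               # total length: prompt plus generated tokens
    "temperature": 0.6,
    "top_p": 0.9,
    "transformers_version": "4.40.1",  # version metadata, not a generation parameter
}

# Generate with this config, leaving out the metadata key
gen_kwargs = {k: v for k, v in generation_config.items() if k != "transformers_version"}
outputs = model.generate(**inputs, **gen_kwargs)
```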
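To show what the temperature and top_p settings do, here is a toy, pure-Python version of temperature scaling followed by nucleus (top-p) filtering over a few logits. It is an illustrative sketch, not the Transformers implementation, which works on tensors and may treat the top_p boundary slightly differently. `nucleus_sample` is a hypothetical name.

```python
import math
import random


def nucleus_sample(logits, temperature=0.6, top_p=0.9, rng=random):
    """Toy temperature + nucleus (top-p) sampling; returns (sampled index, kept indices)."""
    # Temperature < 1 sharpens the distribution; > 1 flattens it
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]  # subtract the max for numerical stability
    total = sum(exps)
    probs = [e / total for e in exps]

    # Keep the smallest set of most likely tokens whose cumulative probability reaches top_p
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break

    # Sample among the kept tokens in proportion to their probabilities
    choice = rng.choices(kept, weights=[probs[i] for i in kept], k=1)[0]
    return choice, kept


logits = [2.0, 1.0, 0.0, -1.0]
print(nucleus_sample(logits, temperature=0.6)[1])  # [0, 1]
print(nucleus_sample(logits, temperature=1.0)[1])  # [0, 1, 2]
```

Lowering the temperature from 1.0 to 0.6 concentrates probability on the most likely token, so fewer tokens survive the 0.9 nucleus cutoff.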

Prerequisites

- Python 3.8 or higher

- PyTorch 2.0 or higher

- Transformers (the generation settings above record version 4.40.1)

- Accelerate (required for device_map="auto")
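One way to confirm these prerequisites is to check installed versions with the standard library's importlib.metadata. `check_environment` and `major_minor` are hypothetical helpers written for this card, and they compare only major and minor version numbers. Accelerate is included because the usage example loads the model with device_map="auto".

```python
import re
import sys
# importlib.metadata is part of the standard library from Python 3.8
from importlib.metadata import PackageNotFoundError, version


def major_minor(v):
    """Parse the leading 'major.minor' of a version string, e.g. '2.1.0+cu118' -> (2, 1)."""
    match = re.match(r"(\d+)\.(\d+)", v)
    return (int(match.group(1)), int(match.group(2))) if match else (0, 0)


def check_environment():
    """Return a list of unmet prerequisites (empty if everything looks fine)."""
    problems = []
    if sys.version_info < (3, 8):
        problems.append(f"Python 3.8+ required, found {sys.version.split()[0]}")
    # (distribution name, minimum major.minor, or None if any version is accepted)
    requirements = [("torch", (2, 0)), ("transformers", None), ("accelerate", None)]
    for dist, minimum in requirements:
        try:
            installed = version(dist)
        except PackageNotFoundError:
            problems.append(f"{dist} is not installed")
            continue
        if minimum is not None and major_minor(installed) < minimum:
            problems.append(f"{dist} {'.'.join(map(str, minimum))}+ required, found {installed}")
    return problems


for problem in check_environment():
    print("Missing prerequisite:", problem)
```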

Acknowledgement

The authors thank the following contributors and projects.

- This work was supported by an Institute of Information & Communications Technology Planning & Evaluation (IITP) grant funded by the Korean government (MSIT) (2022-0-00043, Adaptive Personality for Intelligent Agents).

- This work was supported by an Institute of Information & Communications Technology Planning & Evaluation (IITP) grant funded by the Korean government (MSIT) (RS-2023-00228255, PIM-NPU Based Processing System Software Developments for Hyper-scale Artificial Neural Network Processing).

License

Please refer to the model card on HuggingFace for licensing information.
