Model Card for Stefan Zweig Language Model

This model is a fine-tuned version of ibm-granite/granite-3.1-2b-instruct. It has been trained using TRL.

Model Details

This model is designed to emulate Stefan Zweig's distinctive writing and conversational style in a chat format. Fine-tuning follows the methodology described in the DeepSeek-V3 technical report and uses a two-stage training process: Supervised Fine-Tuning (SFT) followed by Group Relative Policy Optimization (GRPO).

Quick start

import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

# Use a GPU when one is available, otherwise fall back to CPU
device = "cuda" if torch.cuda.is_available() else "cpu"

model = AutoModelForCausalLM.from_pretrained("Chan-Y/Stefan-Zweig-Granite", device_map=device)
tokenizer = AutoTokenizer.from_pretrained("Chan-Y/Stefan-Zweig-Granite")

input_text = "As an experienced and famous writer Stefan Zweig, what's your opinion on artificial intelligence?"
inputs = tokenizer(input_text, return_tensors="pt").to(device)

# Sample a single completion without tracking gradients
with torch.no_grad():
    outputs = model.generate(
        **inputs,
        max_length=512,  # total length in tokens, prompt included
        num_return_sequences=1,
        do_sample=True,
        temperature=0.7,
        top_p=0.9,
    )

# Decode the generated text
generated_text = tokenizer.decode(outputs[0], skip_special_tokens=True)

# Print only the continuation that follows the prompt
print(generated_text.split(input_text)[-1])
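Since the model is meant to be used in chat format, it may help to pass the prompt through the tokenizer's chat template instead of tokenizing raw text. The following is a minimal sketch, assuming the repository's tokenizer ships a chat template (this card does not confirm it). The helper names `build_messages` and `chat` are hypothetical and introduced here only for illustration.

```python
def build_messages(user_prompt, system_prompt=None):
    """Build a chat-style message list (hypothetical helper, not part of the model repo)."""
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_prompt})
    return messages


def chat(user_prompt, model_id="Chan-Y/Stefan-Zweig-Granite", max_new_tokens=256):
    """Generate one reply using the tokenizer's chat template.

    Assumption: the tokenizer for `model_id` defines a chat template.
    """
    # Heavy imports stay inside the function so build_messages can be used on its own.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)
    model.eval()

    # Render the conversation and append the marker that opens the assistant turn.
    input_ids = tokenizer.apply_chat_template(
        build_messages(user_prompt),
        add_generation_prompt=True,
        return_tensors="pt",
    )

    with torch.no_grad():
        output_ids = model.generate(
            input_ids,
            max_new_tokens=max_new_tokens,
            do_sample=True,
            temperature=0.7,
            top_p=0.9,
        )

    # Keep only the tokens generated after the prompt.
    new_tokens = output_ids[0, input_ids.shape[-1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `chat("What is your opinion on artificial intelligence?")` would download the model and return only the newly generated reply. Slicing by token count avoids relying on the prompt text reappearing verbatim in the decoded output.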

Training procedure

Dataset: Custom synthetic dataset generated using argilla/synthetic-data-generator with Qwen2.5:14b

Data Format: Structured conversations with specific role markers and custom tokens.

Data Processing: Implementation of special tokens for style consistency

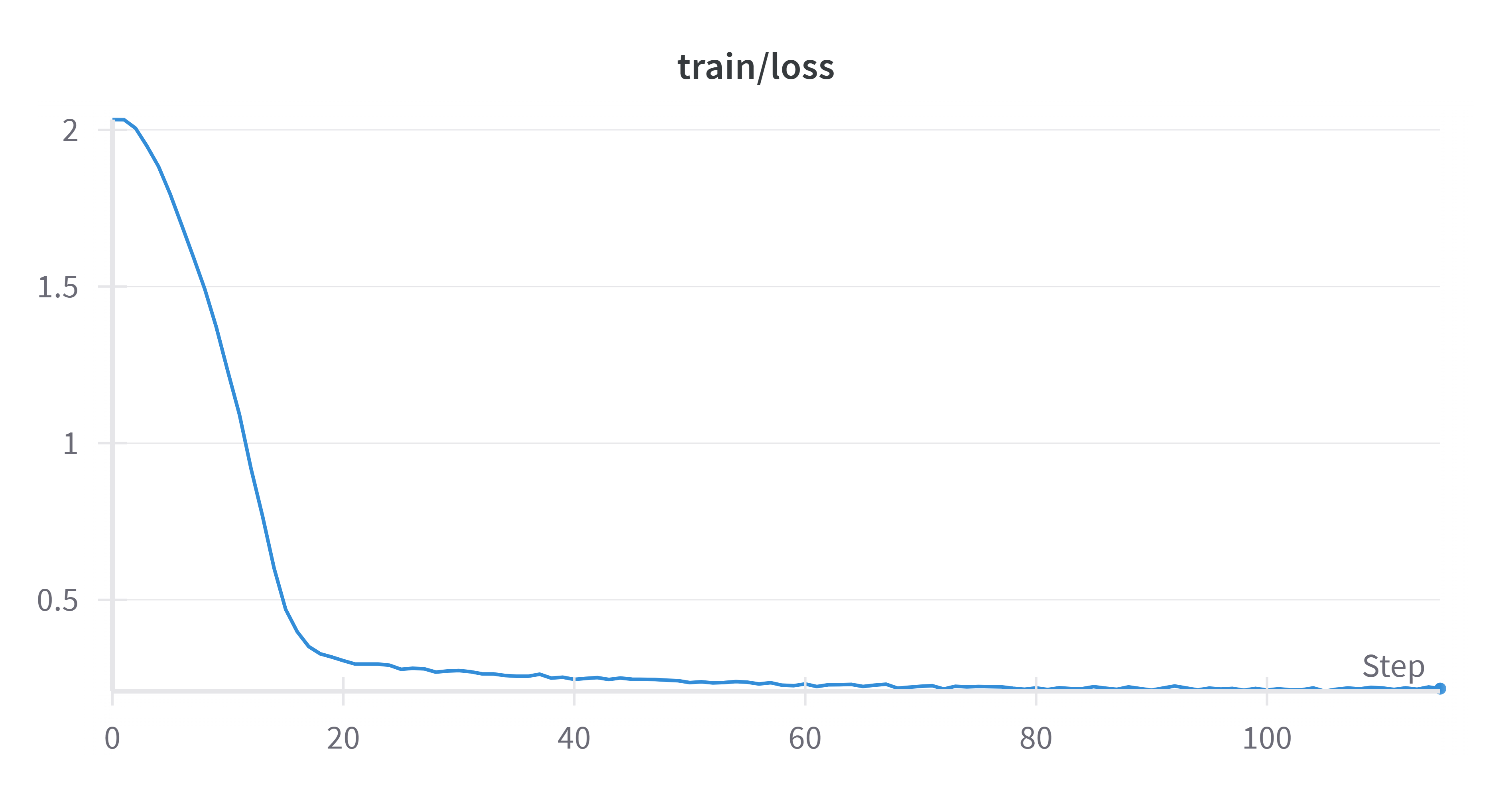
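To make the data format more concrete, the sketch below shows one way a question/answer pair could be wrapped into a structured conversation with role markers and custom tokens. It is an illustration only: the card does not name the actual tokens or the system prompt, so `STYLE_OPEN`, `STYLE_CLOSE`, `SYSTEM_PROMPT`, and `to_conversation` are all hypothetical. The `messages` layout with `role` and `content` keys is the conversational format accepted by TRL's SFT tooling.

```python
# Hypothetical placeholders: the card does not state the real tokens or system prompt.
STYLE_OPEN = "<zweig>"
STYLE_CLOSE = "</zweig>"
SYSTEM_PROMPT = "You are Stefan Zweig."


def to_conversation(question, answer, system_prompt=SYSTEM_PROMPT):
    """Wrap one question/answer pair as a chat-style training record."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": question},
            # The assistant turn is wrapped in the (hypothetical) custom tokens.
            {"role": "assistant", "content": f"{STYLE_OPEN}{answer}{STYLE_CLOSE}"},
        ]
    }


record = to_conversation(
    "What do you think of Vienna?",
    "Vienna was, for me, a city of music and memory.",
)
print([m["role"] for m in record["messages"]])
```

If custom tokens like these were used, they would also need to be registered with the tokenizer before training so that each one maps to a single token id.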

Training Type: Two-stage training pipeline

- Supervised Fine-Tuning (SFT)

- Group Relative Policy Optimization (GRPO)
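The core idea of the GRPO stage can be sketched in a few lines: several completions are sampled for the same prompt, each is scored by a reward function, and each reward is then normalized against the mean and standard deviation of its own group. The snippet below is a simplified illustration of that normalization step only, not the training code used for this model. The function name, the use of the population standard deviation, and the `eps` value are assumptions made for the example.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-4):
    """Normalize the rewards of one group of completions sampled for the same prompt.

    Each advantage is (reward - group mean) / (group std + eps).
    `eps` (an assumed value) guards against division by zero when all rewards are equal.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population standard deviation of the group
    return [(r - mu) / (sigma + eps) for r in rewards]


# Three completions for one prompt, scored by some reward function.
advantages = group_relative_advantages([1.0, 2.0, 3.0])
print(advantages)
```

Completions that score above their group's average receive a positive advantage and are reinforced, while those below the average are discouraged. When every completion in a group gets the same reward, all advantages are zero and that group contributes no learning signal.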

Framework versions

- TRL: 0.14.0.dev0

- Transformers: 4.48.1

- PyTorch: 2.5.1+cu124

- Datasets: 3.2.0

- Tokenizers: 0.21.0
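To compare a local environment against the versions listed above, a small check along these lines may be useful. It is a sketch only: the distribution names in `EXPECTED` (for example `torch` for PyTorch) are the usual package names and are assumed here, and `compare_versions` is a hypothetical helper.

```python
from importlib.metadata import PackageNotFoundError, version

# Versions listed in this card, keyed by the assumed distribution names.
EXPECTED = {
    "trl": "0.14.0.dev0",
    "transformers": "4.48.1",
    "torch": "2.5.1+cu124",
    "datasets": "3.2.0",
    "tokenizers": "0.21.0",
}


def compare_versions(expected, lookup=version):
    """Return {package: (wanted, found, matches)}; found is None if not installed."""
    report = {}
    for name, wanted in expected.items():
        try:
            found = lookup(name)
        except PackageNotFoundError:
            found = None
        report[name] = (wanted, found, found == wanted)
    return report


for name, (wanted, found, ok) in compare_versions(EXPECTED).items():
    status = "ok" if ok else "differs"
    print(f"{name}: expected {wanted}, found {found} ({status})")
```

A mismatch does not necessarily mean the model will fail to load. The report only shows where an environment departs from the one used for training.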
