metadata

license: creativeml-openrail-m

Google Safesearch Mini Model Card

This model is trained on InceptionV3 and 2,224,000 images scraped from Google Safesearch, Reddit, Imgur, and Github. It predicts the likelihood of an image being nsfw_gore, nsfw_suggestive, and safe.

After 20 epochs on PyTorch, the model achieves 94% train and test accuracy.

PyTorch

pip install --upgrade transformers

from transformers import AutoModelForImageClassification

from torch import cuda

model = AutoModelForImageClassification.from_pretrained("FredZhang7/google-safesearch-mini", trust_remote_code=True, revision="d0b4c6be6d908c39c0dd83d25dce50c0e861e46a")

PATH_TO_IMAGE = 'https://images.unsplash.com/photo-1594568284297-7c64464062b1'

PRINT_TENSOR = False

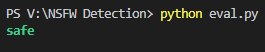

prediction = model.predict(PATH_TO_IMAGE, device="cuda" if cuda.is_available() else "cpu", print_tensor=PRINT_TENSOR)

print(f"\033[1;{'32' if prediction == 'safe' else '33'}m{prediction}\033[0m")

Bias and Limitations

Each person's definition of "safe" is different. The images in the dataset are classified as safe/unsafe by Google SafeSearch, Reddit, and Imgur. It is possible that some images may be safe to others but not to you.