Model Card for GemmaX2-28

Model Details

Model Description

GemmaX2-28-9B-Pretrain is a language model obtained by continual pretraining of Gemma2-9B on a mix of 56 billion tokens of monolingual and parallel data covering 28 languages: Arabic, Bengali, Czech, German, English, Spanish, Persian, French, Hebrew, Hindi, Indonesian, Italian, Japanese, Khmer, Korean, Lao, Malay, Burmese, Dutch, Polish, Portuguese, Russian, Thai, Tagalog, Turkish, Urdu, Vietnamese, Chinese.

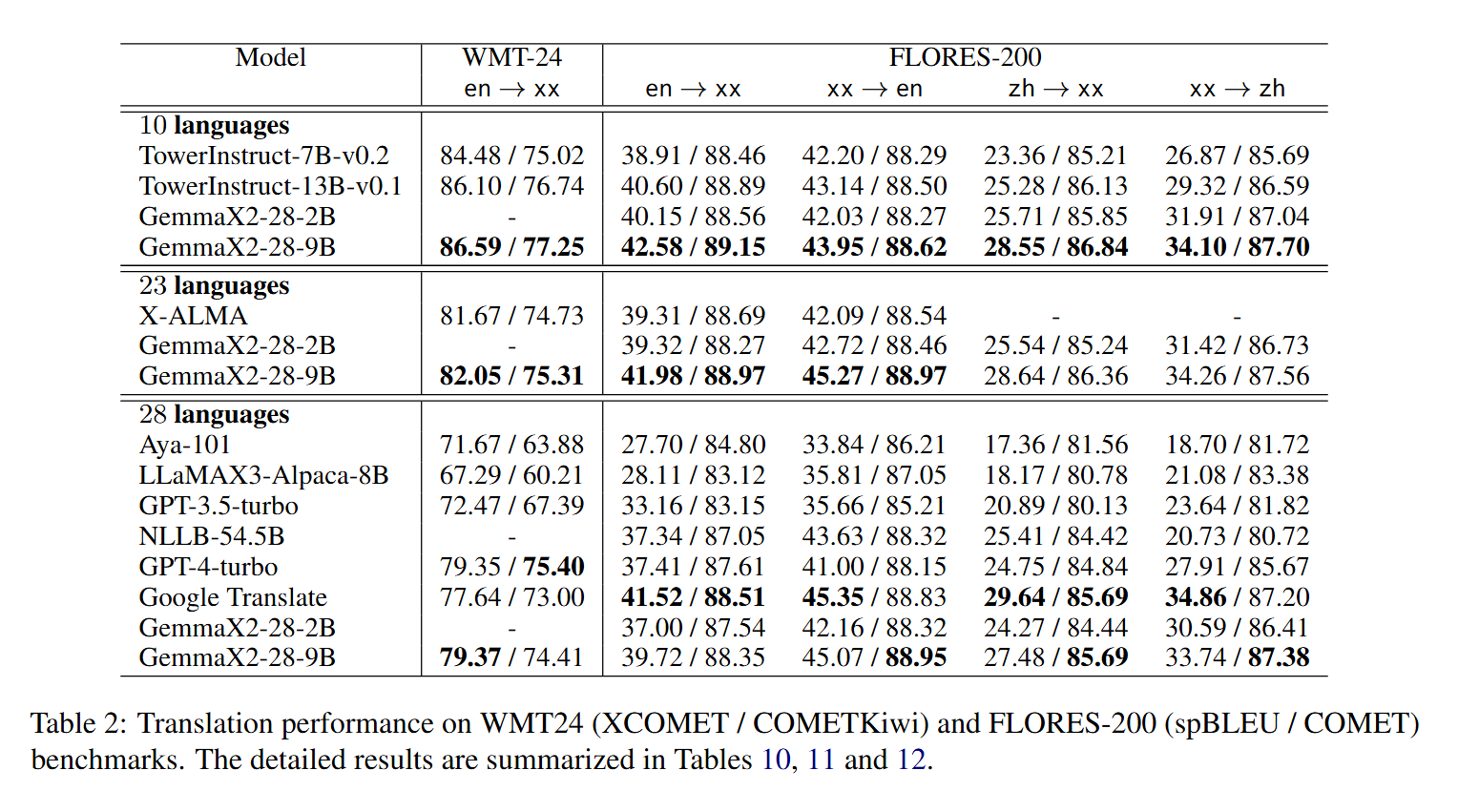

GemmaX2-28-9B-v0.1 is the first model in the series. Compared with current state-of-the-art (SOTA) open-source models, it achieves the best translation performance across the 28 languages and reaches performance comparable to GPT-4 and Google Translate, putting its translation quality on par with industry-standard systems.

- Developed by: Xiaomi

- Model type: A 9B-parameter model based on Gemma2. GemmaX2-28-9B-Pretrain was obtained by continual pretraining on a large amount of monolingual and parallel data; GemmaX2-28-9B-v0.1 was then derived through supervised fine-tuning on a small set of high-quality instruction data.

- Language(s) (NLP): Arabic, Bengali, Czech, German, English, Spanish, Persian, French, Hebrew, Hindi, Indonesian, Italian, Japanese, Khmer, Korean, Lao, Malay, Burmese, Dutch, Polish, Portuguese, Russian, Thai, Tagalog, Turkish, Urdu, Vietnamese, Chinese.

- License: gemma

Model Source

- Paper: coming soon.

Model Performance

Limitations

GemmaX2-28-9B-v0.1 supports only the 28 commonly used languages listed above and may not translate well in other languages. We will continue to improve GemmaX2-28-9B's translation performance and will release future models in due course.

Run the model

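```python
from transformers import AutoModelForCausalLM, AutoTokenizer

model_id = "ModelSpace/GemmaX2-28-9B-Pretrain"

# Load the tokenizer and model weights from the Hugging Face Hub.
tokenizer = AutoTokenizer.from_pretrained(model_id)
model = AutoModelForCausalLM.from_pretrained(model_id)

# Prompt template: task line, then the source text, then the target-language cue.
text = "Translate this from Chinese to English:\nChinese: 我爱机器翻译\nEnglish:"
inputs = tokenizer(text, return_tensors="pt")

# Generate up to 50 new tokens after the prompt.
outputs = model.generate(**inputs, max_new_tokens=50)

# The decoded sequence contains the prompt followed by the generated translation.
print(tokenizer.decode(outputs[0], skip_special_tokens=True))
```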
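The example above hard-codes a single Chinese-to-English prompt. The sketch below assumes the same template carries over to any pair of the 28 supported languages (an assumption; the card shows only this one pair) and defines two hypothetical helpers, `build_prompt` and `extract_translation`, which are not part of any released API.

```python
# Hypothetical helpers. The template generalizes the single Chinese-to-English
# example in this card; using it for other pairs is an assumption.

SUPPORTED_LANGUAGES = {
    "Arabic", "Bengali", "Czech", "German", "English", "Spanish", "Persian",
    "French", "Hebrew", "Hindi", "Indonesian", "Italian", "Japanese", "Khmer",
    "Korean", "Lao", "Malay", "Burmese", "Dutch", "Polish", "Portuguese",
    "Russian", "Thai", "Tagalog", "Turkish", "Urdu", "Vietnamese", "Chinese",
}


def build_prompt(src_lang: str, tgt_lang: str, text: str) -> str:
    """Fill the translation template for one source sentence."""
    for lang in (src_lang, tgt_lang):
        if lang not in SUPPORTED_LANGUAGES:
            raise ValueError(f"{lang} is not one of the 28 supported languages")
    return f"Translate this from {src_lang} to {tgt_lang}:\n{src_lang}: {text}\n{tgt_lang}:"


def extract_translation(generated: str) -> str:
    """Return the first non-empty line of the generated continuation.

    Heuristic: the model may keep generating past the translation
    (for example, starting a new "Translate this ..." block).
    """
    for line in generated.splitlines():
        if line.strip():
            return line.strip()
    return ""
```

For example, build the prompt with `build_prompt("Chinese", "English", "我爱机器翻译")`, decode only the newly generated tokens with `tokenizer.decode(outputs[0][inputs["input_ids"].shape[-1]:], skip_special_tokens=True)`, and pass that string to `extract_translation`. Slicing off the prompt tokens avoids relying on the decoded text reproducing the prompt character for character.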

Training Data

We collected monolingual data from CulturaX and MADLAD-400. For parallel data, we collected all Chinese-centric and English-centric parallel datasets in the OPUS collection up to August 2024 and passed them through a series of filtering steps, including language detection, semantic deduplication, and quality filtering.
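The card names the filtering stages but not how they are implemented. As an illustration only, the sketch below chains the three named stages over (source, target) sentence pairs using deliberately simple stand-ins: a caller-supplied `detect_lang` function in place of a real language identifier, exact matching on normalized text in place of semantic (embedding-based) deduplication, and a character-length-ratio check in place of a quality model. The function names and the `max_len_ratio` threshold are hypothetical; this is not the authors' pipeline.

```python
# Illustrative sketch only; every stage is a simplified stand-in.
from typing import Callable, Iterable, Iterator, Tuple

Pair = Tuple[str, str]  # (source sentence, target sentence)


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants collide."""
    return " ".join(text.lower().split())


def filter_pairs(
    pairs: Iterable[Pair],
    src_lang: str,
    tgt_lang: str,
    detect_lang: Callable[[str], str],
    max_len_ratio: float = 6.0,
) -> Iterator[Pair]:
    """Yield pairs that pass language, duplicate, and length-ratio checks."""
    seen = set()
    for src, tgt in pairs:
        src, tgt = src.strip(), tgt.strip()
        if not src or not tgt:
            continue
        # 1. Language detection: each side must be in its expected language.
        if detect_lang(src) != src_lang or detect_lang(tgt) != tgt_lang:
            continue
        # 2. Deduplication: exact match after normalization (a simplification;
        #    semantic deduplication would compare sentence embeddings instead).
        key = (normalize(src), normalize(tgt))
        if key in seen:
            continue
        seen.add(key)
        # 3. Quality heuristic: drop pairs whose character lengths differ wildly.
        ratio = max(len(src), len(tgt)) / min(len(src), len(tgt))
        if ratio > max_len_ratio:
            continue
        yield src, tgt
```

Because character counts differ sharply across scripts (a Chinese sentence is usually much shorter in characters than its English translation), any length-ratio threshold would need tuning per language pair.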

Citation

```bibtex
@misc{gemmax2,
  title = {Multilingual Machine Translation with Open Large Language Models at Practical Scale: An Empirical Study},
  url = {},
  author = {XiaoMi Team},
  month = {October},
  year = {2024}
}
```
