PAIR-Diffusion Model Card - Stable Diffusion 1.5 fine-tuned on COCO-Stuff

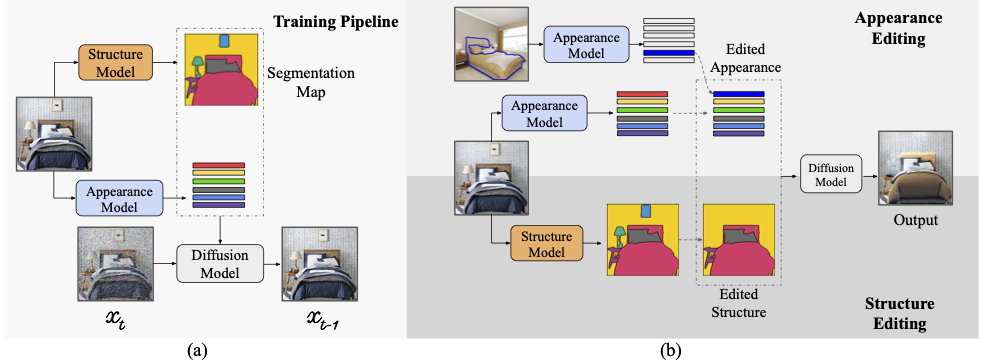

PAIR Diffusion models an image as a composition of multiple objects and aims to control the structural and appearance properties of each object. It supports reference-image-guided appearance manipulation and structure editing at the object level. Describing an object's appearance in text can be difficult and ambiguous, so PAIR Diffusion lets the user control appearance with images instead. Beyond image editing, fine-grained object-level control over appearance and structure could benefit future work in video and 3D, where appearance must stay consistent across time (video) or across viewpoints (3D).
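The paragraph above separates each object's structure (where it is) from its appearance (what it looks like). The sketch below is a rough, hypothetical illustration of that split and is not the authors' implementation. It assumes structure is given as an integer segmentation map and that each object's appearance is summarized by averaging some image encoder's feature map over that object's mask. The function names `object_appearance`, `swap_appearance` and `appearance_map`, the encoder features and the pooling choice are all assumptions. The diffusion model that would consume this conditioning is not shown.

```python
import numpy as np


def object_appearance(features: np.ndarray, seg: np.ndarray) -> dict[int, np.ndarray]:
    """Hypothetical: one appearance vector per object via masked average pooling.

    features: (C, H, W) feature map from some image encoder (assumed).
    seg:      (H, W) integer object labels at the same resolution (the structure).
    """
    appearance = {}
    for label in np.unique(seg):
        mask = seg == label                    # (H, W) boolean mask for this object
        pixels = features[:, mask]             # (C, N) features of the object's pixels
        appearance[int(label)] = pixels.mean(axis=1)  # average over pixels -> (C,)
    return appearance


def swap_appearance(target: dict, reference: dict, target_label: int, ref_label: int) -> dict:
    """Hypothetical edit: give one target object the appearance of a reference object.

    Structure (the segmentation map) is untouched; only the appearance vector changes.
    """
    edited = dict(target)                      # shallow copy keeps the original intact
    edited[target_label] = reference[ref_label].copy()
    return edited


def appearance_map(seg: np.ndarray, appearance: dict) -> np.ndarray:
    """Hypothetical: spread each object's vector over its mask -> (C, H, W) map."""
    channels = len(next(iter(appearance.values())))
    out = np.zeros((channels,) + seg.shape, dtype=np.float64)
    for label, vec in appearance.items():
        out[:, seg == label] = vec[:, None]    # broadcast (C, 1) over the object's pixels
    return out
```

For example, with a 2×2 image split into a top object (label 0) and a bottom object (label 1), `swap_appearance(app, ref_app, 1, 3)` replaces only the bottom object's appearance vector. The layout in `seg` stays fixed, which mirrors the idea of editing appearance independently of structure.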

For more information, please refer to the GitHub repository and the arXiv paper.

This model card applies the method to Stable Diffusion 1.5 (SDv1.5). We fine-tuned SDv1.5 on the COCO-Stuff dataset using ControlNet, chosen for its efficiency. The model can be tested using the publicly available demo.

Model Details

- Developed by: Vidit Goel, Elia Peruzzo, Yifan Jiang, Dejia Xu, Nicu Sebe, Trevor Darrell, Atlas Wang, Humphrey Shi

- Model type: Object-level image editing using diffusion models

- Language(s): English

- License: MIT

- Resources for more information: GitHub Repository, Paper.

- Cite as:

@article{goel2023pair,
  title={PAIR-Diffusion: Object-Level Image Editing with Structure-and-Appearance Paired Diffusion Models},
  author={Goel, Vidit and Peruzzo, Elia and Jiang, Yifan and Xu, Dejia and Sebe, Nicu and Darrell, Trevor and Wang, Zhangyang and Shi, Humphrey},
  journal={arXiv preprint arXiv:2303.17546},
  year={2023}
}