QuantFactory/Gemma-2-2b-Chinese-it-GGUF

This is a quantized version of stvlynn/Gemma-2-2b-Chinese-it, created using llama.cpp.

Original Model Card

Gemma-2-2b-Chinese-it (Gemma-2-2b-中文)

Intro

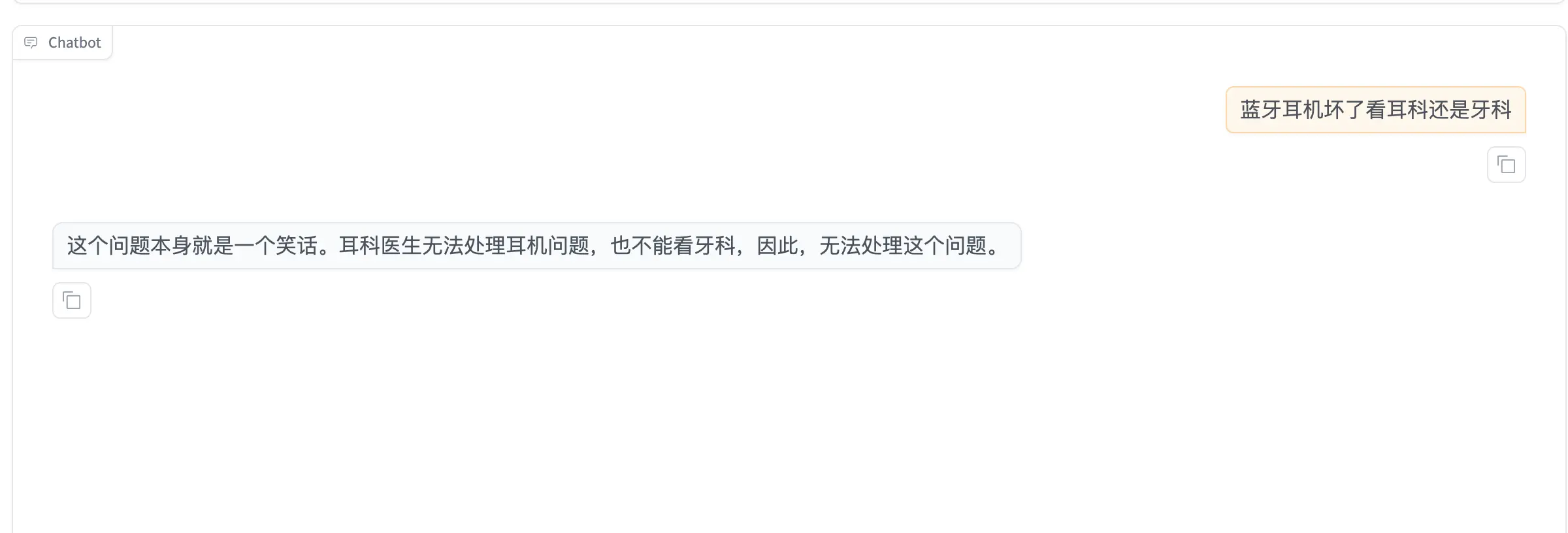

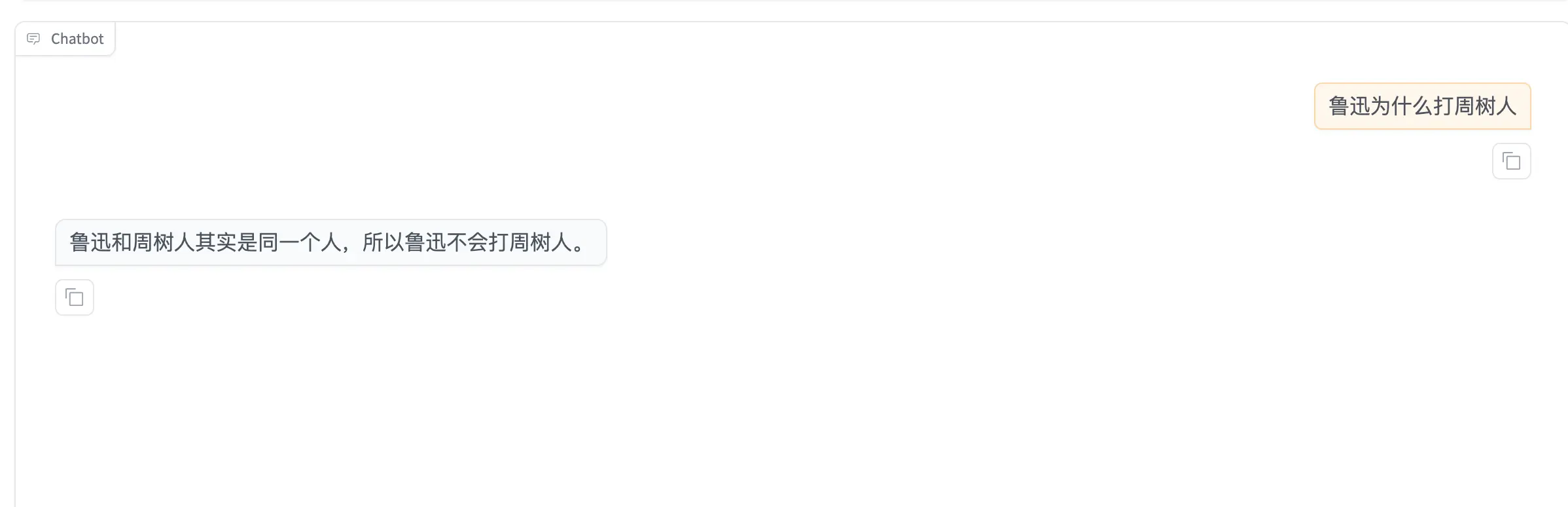

Gemma-2-2b-Chinese-it (Gemma-2-2b-中文) is Gemma-2-2b-it fine-tuned on approximately 6.4k rows of the ruozhiba (弱智吧) dataset.

Demo

Usage

See Google's documentation:
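This model was fine-tuned from Gemma-2-2b-it, so a reasonable working assumption is that it expects the same turn-based chat markup as the base model. Here is a minimal sketch that renders a message list into that markup (`<start_of_turn>` / `<end_of_turn>`, with `user` and `model` roles) before the text is passed to a llama.cpp-based runtime. Several details are assumptions and are not confirmed by this card: the template itself, the helper name `build_gemma_prompt`, and the mapping of `assistant` to `model`.

```python
# Minimal sketch: render chat messages into Gemma-2-style turn markup.
# ASSUMPTION: Gemma-2-2b-Chinese-it keeps the base Gemma-2-2b-it chat template.
# build_gemma_prompt is a hypothetical helper, not part of any library.


def build_gemma_prompt(messages: list[dict[str, str]], add_bos: bool = True) -> str:
    """Turn [{"role": ..., "content": ...}] into a single prompt string.

    Gemma's template only knows "user" and "model" turns, so "assistant" is
    mapped to "model" and any other role (e.g. "system") is rejected.
    """
    parts = ["<bos>"] if add_bos else []
    for msg in messages:
        role = msg["role"]
        if role == "assistant":
            role = "model"
        if role not in ("user", "model"):
            raise ValueError(f"unsupported role: {msg['role']!r}")
        # Each turn: <start_of_turn>{role}\n{content}<end_of_turn>\n
        parts.append(f"<start_of_turn>{role}\n{msg['content'].strip()}<end_of_turn>\n")
    # Leave a model turn open so generation continues as the model's reply.
    parts.append("<start_of_turn>model\n")
    return "".join(parts)


if __name__ == "__main__":
    prompt = build_gemma_prompt([{"role": "user", "content": "为什么天空是蓝色的？"}])
    print(prompt)
```

The `add_bos` flag exists because some runtimes insert the `<bos>` token themselves during tokenization. Adding it twice is a common source of degraded output, so check your runtime's behaviour before you leave it on.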

If you have any questions or suggestions, feel free to contact me.
