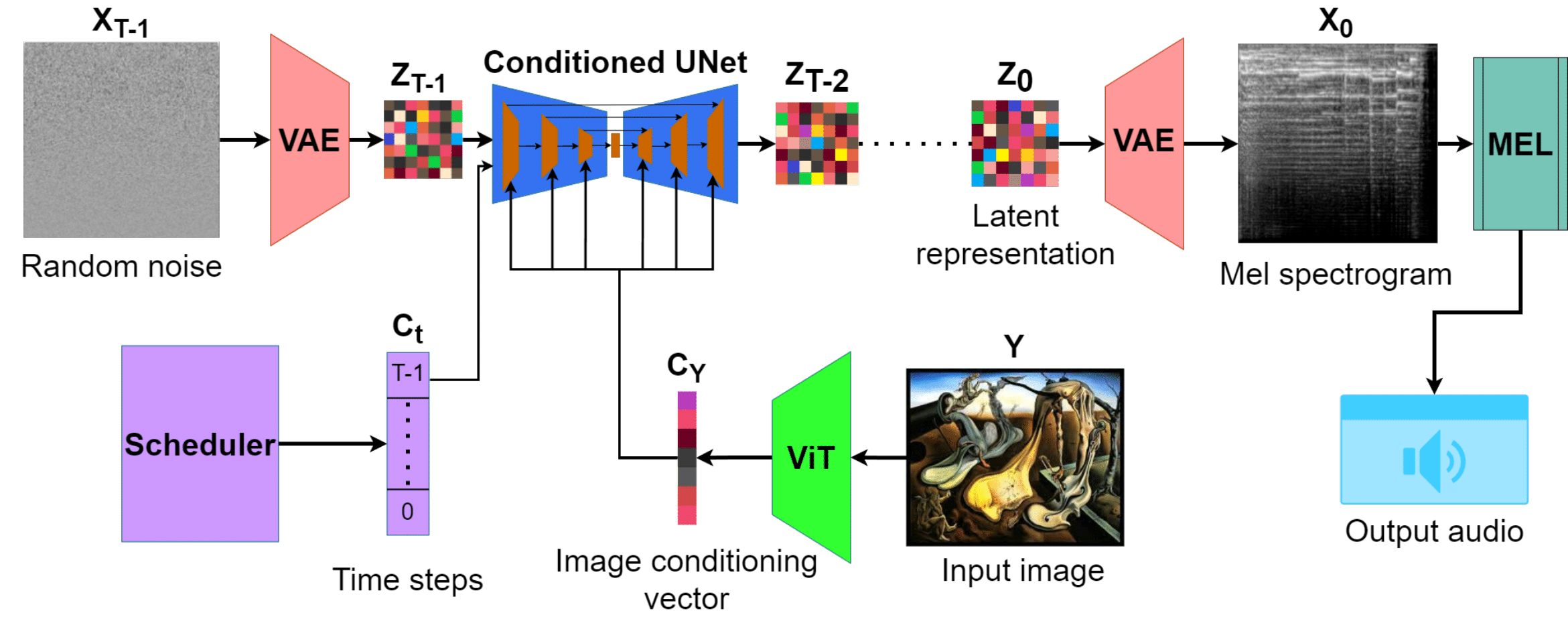

Model description

clMusDiff is a conditional latent diffusion model. It runs the reverse diffusion process, conditioned on an input image, to generate Mel spectrograms, which can then be converted into audio. Soundtrack generation starts from three things: an input image, a chosen number of denoising steps, and a randomly generated noise sample whose size matches the spectrogram. Generation then proceeds as follows:

- Image encoding: the ViT module encodes the input image.
- Denoising scheduling: the scheduler generates a vector of time steps, which controls the denoising process.
- Latent mapping: the initial noise is mapped into a latent (probabilistic) distribution, which reduces the size of the data and speeds up generation.
- Denoising: at every step, the model predicts the noise to be removed from the sample, and the scheduler scales this prediction. The scaled noise is then applied to the sample, producing a less noisy version.
- Decoding: the VAE reconstructs the final latent representation into a Mel spectrogram.
- Audio reconstruction: the output audio is recovered by inverting the Mel spectrogram.
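The card does not name the scheduler or its settings, so the following is only a minimal, stdlib-only sketch of the denoising loop. It assumes a DDIM-style scheduler (deterministic, eta = 0) with a linear beta schedule over 1,000 training steps. `predict_noise` is a hypothetical stand-in for the image-conditioned noise predictor, and the latent is a flat list of floats. The ViT encoder, the VAE decoder and the spectrogram inversion are left out.

```python
import math
import random
from typing import Callable, List, Sequence

# Assumed scheduler settings; the model card does not specify them.
NUM_TRAIN_STEPS = 1000
BETA_START, BETA_END = 1e-4, 0.02

# Hypothetical noise predictor signature: (latent, timestep, condition) -> predicted noise
NoisePredictor = Callable[[List[float], int, Sequence[float]], List[float]]


def make_alphas_cumprod() -> List[float]:
    """Cumulative products of (1 - beta_t) for a linear beta schedule."""
    alphas_cumprod, prod = [], 1.0
    for i in range(NUM_TRAIN_STEPS):
        beta = BETA_START + (BETA_END - BETA_START) * i / (NUM_TRAIN_STEPS - 1)
        prod *= 1.0 - beta
        alphas_cumprod.append(prod)
    return alphas_cumprod


def make_timesteps(num_inference_steps: int) -> List[int]:
    """Evenly spaced training timesteps, noisiest first (980, 960, ..., 0 for 50 steps)."""
    step_ratio = NUM_TRAIN_STEPS // num_inference_steps
    return [i * step_ratio for i in reversed(range(num_inference_steps))]


def ddim_sample(predict_noise: NoisePredictor, condition: Sequence[float], size: int,
                num_inference_steps: int = 50, seed: int = 0) -> List[float]:
    """Deterministic DDIM (eta = 0) reverse diffusion over a flat latent vector."""
    alphas_cumprod = make_alphas_cumprod()
    step_ratio = NUM_TRAIN_STEPS // num_inference_steps
    rng = random.Random(seed)
    latent = [rng.gauss(0.0, 1.0) for _ in range(size)]  # initial Gaussian noise

    for t in make_timesteps(num_inference_steps):
        eps = predict_noise(latent, t, condition)  # predicted noise in the current sample
        a_t = alphas_cumprod[t]
        prev_t = t - step_ratio
        a_prev = alphas_cumprod[prev_t] if prev_t >= 0 else 1.0
        # Estimate the clean sample, then re-noise it to the previous (lower) noise level.
        latent = [
            math.sqrt(a_prev) * ((x - math.sqrt(1.0 - a_t) * e) / math.sqrt(a_t))
            + math.sqrt(1.0 - a_prev) * e
            for x, e in zip(latent, eps)
        ]
    return latent


if __name__ == "__main__":
    alphas = make_alphas_cumprod()

    def oracle_noise(latent, t, condition):
        # Toy stand-in for the image-conditioned network: it treats `condition`
        # as the clean latent and returns the exact noise separating the two.
        a_t = alphas[t]
        return [(x - math.sqrt(a_t) * c) / math.sqrt(1.0 - a_t)
                for x, c in zip(latent, condition)]

    target = [0.5, -1.0, 2.0, 0.0]
    result = ddim_sample(oracle_noise, target, size=len(target))
    print(max(abs(r - c) for r, c in zip(result, target)))  # prints a value close to 0.0
```

With a perfect (oracle) noise predictor, the loop recovers the target latent exactly up to rounding. This makes it a convenient check that the timestep schedule and the scheduler update are wired correctly. In the real model, a trained network would replace the oracle.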

Pipeline

Training data

The model was trained on the EMID dataset.

Model tree for Woleek/clMusDiff

Base model

google/vit-base-patch16-224-in21k
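The base model name encodes its input format. google/vit-base-patch16-224-in21k takes 224×224 images and splits them into 16×16 patches, and ViT-Base uses a hidden size of 768. The short sketch here works out the resulting encoder output shape: one token per patch plus a [CLS] token. The card does not say how clMusDiff feeds these embeddings to the denoiser (for example, the full token sequence or only the [CLS] vector), so that part is not shown.

```python
from typing import Tuple


def vit_token_shape(image_size: int = 224, patch_size: int = 16,
                    hidden_size: int = 768) -> Tuple[int, int]:
    """Return (sequence_length, hidden_size) of a ViT encoder's output for one image."""
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    patches_per_side = image_size // patch_size  # 224 // 16 = 14
    num_patches = patches_per_side ** 2  # 14 * 14 = 196 patch tokens
    return num_patches + 1, hidden_size  # +1 for the [CLS] token


print(vit_token_shape())  # (197, 768)
```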