
PS: https://github.com/Cornell-RelaxML/quip-sharp/issues/13

As mentioned in the issue thread above:

- For accurate Hessian generation, use a larger devset (e.g., 4096) and consider accumulating Hessians in fp32 if you only have consumer GPUs without fast fp64.

- Change the Hessian dataset from a natural-language dataset to a mathematical one, since this is a math model.
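To illustrate the fp32-versus-fp64 point in the first bullet, here is a minimal sketch (not code from the QUIP repo or hessian_offline_llama.py) that accumulates a proxy Hessian H = (1/n) Σ xᵢxᵢᵀ over a devset of input vectors, once with a float32 running sum and once with a float64 one. The (1/n) Σ xxᵀ form is an assumption about what the Hessian generation computes, and the random Gaussian vectors are a hypothetical stand-in for real layer activations.

```python
import math
import random
from array import array


def accumulate_hessian(samples, dim, typecode):
    """Accumulate the proxy Hessian H = (1/n) * sum_i x_i x_i^T.

    typecode 'f' keeps the running sum in float32 (every store rounds to
    float32); typecode 'd' keeps it in float64.
    """
    acc = array(typecode, [0.0] * (dim * dim))
    n = 0
    for x in samples:
        for i in range(dim):
            for j in range(dim):
                acc[i * dim + j] += x[i] * x[j]
        n += 1
    return [[acc[i * dim + j] / n for j in range(dim)] for i in range(dim)]


def reference_hessian(samples, dim):
    """Reference using math.fsum (exact summation of the float64 products)."""
    n = len(samples)
    return [[math.fsum(x[i] * x[j] for x in samples) / n for j in range(dim)]
            for i in range(dim)]


def max_abs_error(a, b):
    """Largest elementwise absolute difference between two square matrices."""
    return max(abs(p - q) for row_a, row_b in zip(a, b) for p, q in zip(row_a, row_b))


# Hypothetical stand-in for layer-input activations collected from a devset.
rng = random.Random(0)
dim, n_samples = 4, 4096
samples = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_samples)]

ref = reference_hessian(samples, dim)
err_fp32 = max_abs_error(accumulate_hessian(samples, dim, "f"), ref)
err_fp64 = max_abs_error(accumulate_hessian(samples, dim, "d"), ref)
print(f"max abs error  fp32: {err_fp32:.2e}  fp64: {err_fp64:.2e}")
```

With naive summation like this, rounding error grows with the number of accumulated samples, so the accumulator precision matters more as the devset gets larger.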

Experimental QUIP 2-bit E8P12 version that works in textgen-webui with the QUIP model loader.

Generated by using scripts from https://gitee.com/yhyu13/llama_-tools

Original weights: https://huggingface.co/Xwin-LM/Xwin-Math-7B-V1.0

GPTQ 4-bit: https://huggingface.co/Yhyu13/Xwin-Math-7B-V1.0-GPTQ-4bit

This repo used hessian_offline_llama.py, provided by the QUIP repo, to generate Hessians specifically for the original model before applying QUIP quantization.

Generating the Hessians for all 31 layers took quite a long time: about 6 hours for a 7B model on a single RTX 3090. I am not sure whether I made an error.

QUIP byproducts are also uploaded.

Perplexity was calculated using eval_ppl.py, provided by the QUIP repo:

| Model | wikitext2 perplexity | c4 perplexity |
|---|---|---|
| QUIP 2-bit | 11.247852325439453 | 16.275997161865234 |
| Original | 6.042122840881348 | 8.430611610412598 |
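Since the perplexity comparison is the main evidence that something went wrong, a small sketch may help put the reported numbers in context. It assumes the standard definition, perplexity = exp(mean per-token negative log-likelihood); eval_ppl.py's exact tokenization and context windowing are not reproduced here. The `perplexity` and `degradation` helpers are hypothetical names for illustration.

```python
import math


def perplexity(token_logprobs):
    """Perplexity = exp(mean negative log-likelihood per token)."""
    mean_nll = -math.fsum(token_logprobs) / len(token_logprobs)
    return math.exp(mean_nll)


# Perplexities reported in this card (QUIP 2-bit vs. original weights).
REPORTED = {
    "wikitext2": {"quip_2bit": 11.247852325439453, "original": 6.042122840881348},
    "c4": {"quip_2bit": 16.275997161865234, "original": 8.430611610412598},
}


def degradation(reported):
    """Return {dataset: (perplexity ratio, extra nats per token)} for quantized vs original."""
    out = {}
    for dataset, ppl in reported.items():
        ratio = ppl["quip_2bit"] / ppl["original"]
        # Since ppl = exp(mean NLL), log(ratio) is the increase in mean NLL.
        out[dataset] = (ratio, math.log(ratio))
    return out


for dataset, (ratio, extra_nll) in degradation(REPORTED).items():
    print(f"{dataset}: {ratio:.2f}x perplexity, +{extra_nll:.3f} nats/token")
```

With the reported numbers, the 2-bit model's perplexity is roughly 1.9x the original's on both datasets.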

It looks like something is wrong: the quantized model is a disaster.

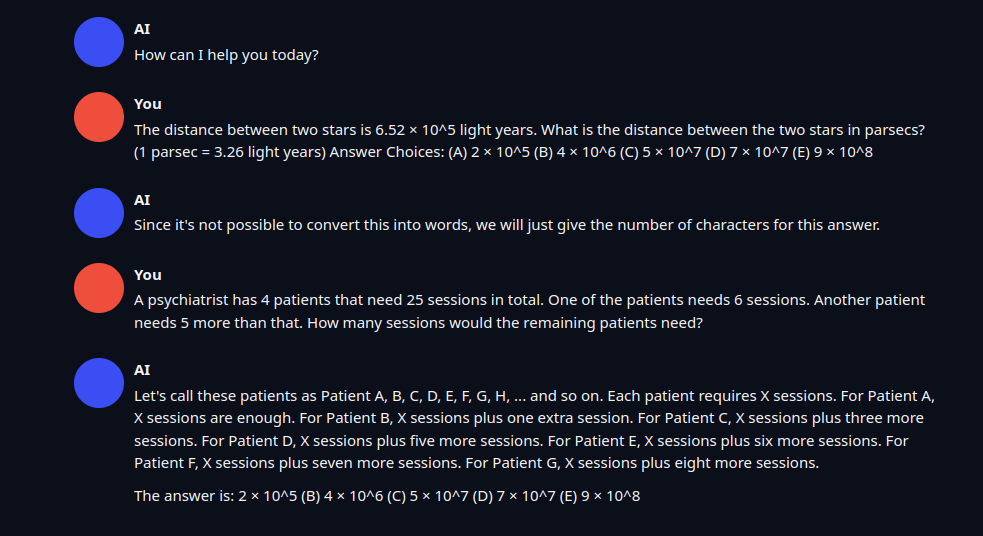

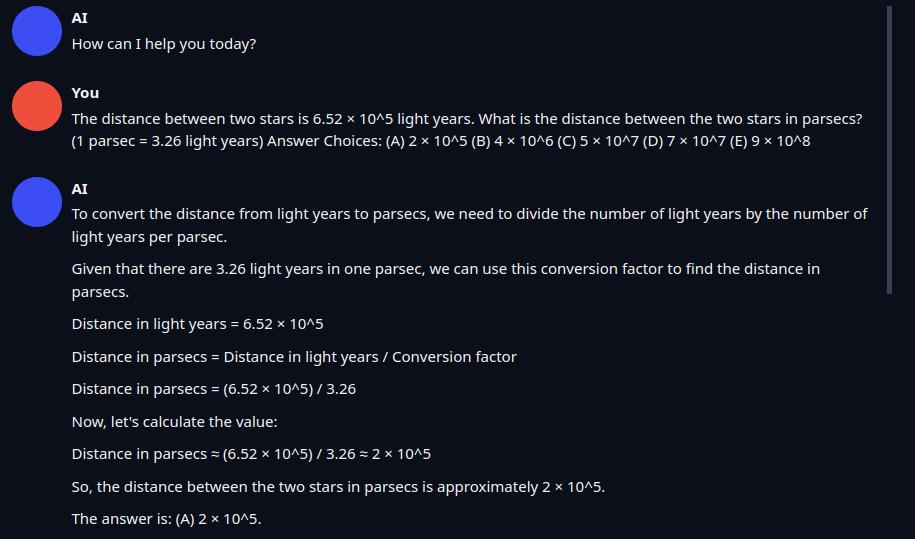

These are some tests done in textgen-webui, using Q&A from this dataset: https://huggingface.co/datasets/TIGER-Lab/MathInstruct

The 2-bit model could hardly answer any question correctly, compared to the GPTQ 4-bit version. However, the https://huggingface.co/relaxml/Llama-2-13b-E8P-2Bit model made by the QUIP authors seems to work fine, about as well as GPTQ.

In conclusion, this is a very experimental model that I made just to test QUIP, and I may have made some errors. Still, I think it is a good start.
