ExLlamaV2 quant (EXL2, 2.5 bpw) made with ExLlamaV2 v0.1.1

Other EXL2 quants:

| Quant | Model Size | lm_head |
|---|---|---|
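As a rough guide to what 2.5 bpw (bits per weight) means in practice, the weight footprint can be estimated as parameters × bpw / 8 bytes. The sketch below is a back-of-the-envelope approximation, not a formula taken from ExLlamaV2: it assumes every parameter is stored at the average bpw and ignores the `lm_head` (which EXL2 quants can store at a different bit width), embeddings, and file metadata. The helper name `estimate_weight_gb` is hypothetical, and the parameter counts are only the nominal sizes from the ReflectionCoder model names.

```python
def estimate_weight_gb(num_params: float, bpw: float) -> float:
    """Approximate size of quantized weights in gigabytes (10**9 bytes).

    Assumption: all parameters use the average `bpw`; the lm_head, embeddings
    and file metadata are not modeled separately.
    """
    total_bits = num_params * bpw
    return total_bits / 8 / 1e9  # bits -> bytes -> GB


if __name__ == "__main__":
    # Nominal parameter counts taken from the model names (7B, 6.7B, 34B, 33B).
    for name, params in [("7B", 7e9), ("6.7B", 6.7e9), ("34B", 34e9), ("33B", 33e9)]:
        print(f"{name} @ 2.5 bpw ≈ {estimate_weight_gb(params, 2.5):.1f} GB")
```

For a 33B model this gives about 10.3 GB; real files will differ somewhat because of the simplifications above.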

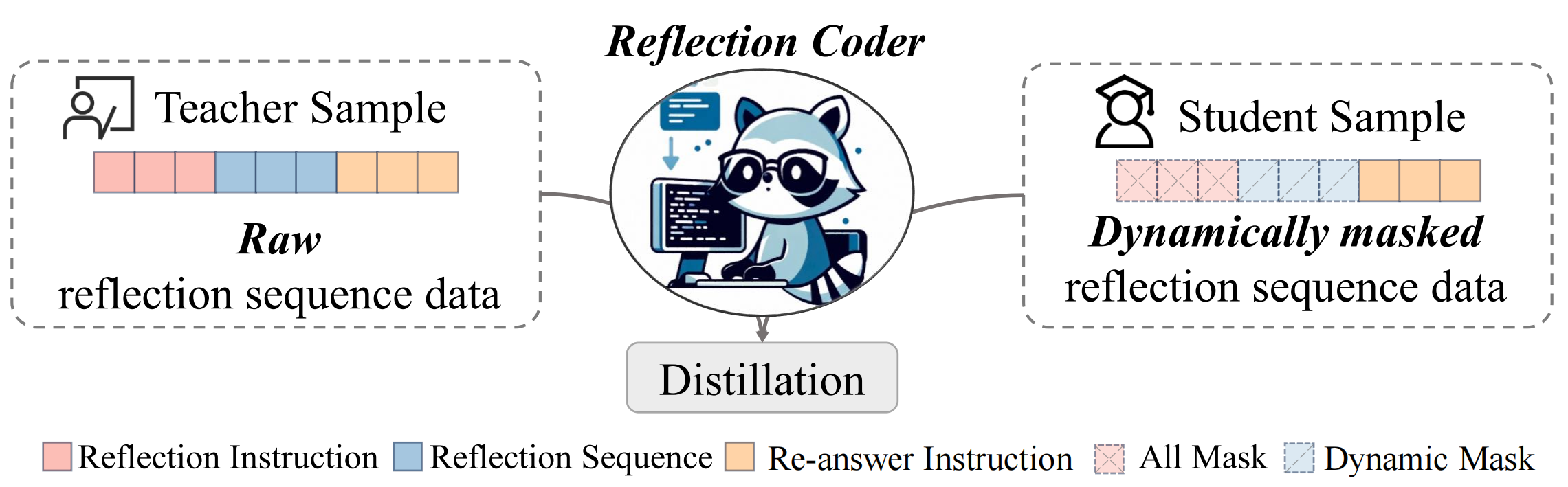

ReflectionCoder: Learning from Reflection Sequence for Enhanced One-off Code Generation

📄 Paper • 🏠 Repo • 🤖 Models • 📚 Datasets

Introduction

ReflectionCoder is a novel approach that uses reflection sequences, constructed by integrating compiler feedback, to improve one-off code generation. Please refer to our paper and repo for more details!

Models

| Model | Checkpoint | Size | HumanEval (HumanEval+) | MBPP (MBPP+) | License |
|---|---|---|---|---|---|
| ReflectionCoder-CL-7B | 🤗 HF Link | 7B | 75.0 (68.9) | 72.2 (61.4) | Llama2 |
| ReflectionCoder-CL-34B | 🤗 HF Link | 34B | 70.7 (66.5) | 68.4 (56.6) | Llama2 |
| ReflectionCoder-DS-6.7B | 🤗 HF Link | 6.7B | 80.5 (74.4) | 81.5 (69.6) | DeepSeek |
| ReflectionCoder-DS-33B | 🤗 HF Link | 33B | 82.9 (76.8) | 84.1 (72.0) | DeepSeek |

Datasets

How to Use

Chat Format

Following the chat templates of most models, we use three special tokens to wrap the user and assistant messages: `<|user|>`, `<|assistant|>`, and `<|endofmessage|>`. In addition, we use two special tokens to wrap the content of different blocks: `<|text|>` and `<|endofblock|>`. You can use the following template to prompt ReflectionCoder.

```
<|user|><|text|>
Your Instruction
<|endofblock|><|endofmessage|><|assistant|>
```
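A minimal Python sketch of filling this template for a single-turn request, assuming the line breaks shown in the template are part of the prompt. The helper names `build_prompt` and `truncate_reply` are hypothetical (they are not from the ReflectionCoder repo); `truncate_reply` simply cuts generated text at the first `<|endofmessage|>`, the token that closes a message in this format.

```python
# Special tokens as listed in the Chat Format description.
USER = "<|user|>"
ASSISTANT = "<|assistant|>"
END_OF_MESSAGE = "<|endofmessage|>"
TEXT = "<|text|>"
END_OF_BLOCK = "<|endofblock|>"


def build_prompt(instruction: str) -> str:
    """Wrap one user instruction in a text block and open the assistant turn.

    Assumption: the newlines around the instruction mirror the template layout.
    """
    return (
        f"{USER}{TEXT}\n"
        f"{instruction}\n"
        f"{END_OF_BLOCK}{END_OF_MESSAGE}{ASSISTANT}"
    )


def truncate_reply(generated: str) -> str:
    """Keep only the text before the first end-of-message token, if present."""
    return generated.split(END_OF_MESSAGE, 1)[0]


if __name__ == "__main__":
    print(build_prompt("Write a Python function that checks whether a number is prime."))
```

Configuring `<|endofmessage|>` as a stop string in your inference backend (for this quant, ExLlamaV2) should have a similar effect to `truncate_reply`; check that backend's documentation for how stop conditions are set.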

Inference Code

Please refer to our GitHub Repo for more technical details.

Citation

If you find this repo useful for your research, please cite our paper:

```bibtex
@misc{ren2024reflectioncoder,
      title={ReflectionCoder: Learning from Reflection Sequence for Enhanced One-off Code Generation},
      author={Houxing Ren and Mingjie Zhan and Zhongyuan Wu and Aojun Zhou and Junting Pan and Hongsheng Li},
      year={2024},
      eprint={2405.17057},
      archivePrefix={arXiv},
      primaryClass={cs.CL}
}
```

Acknowledgments

We thank the following amazing projects that truly inspired us:
