issue_owner_repo

listlengths 2

2

| issue_body

stringlengths 0

261k

⌀ | issue_title

stringlengths 1

925

| issue_comments_url

stringlengths 56

81

| issue_comments_count

int64 0

2.5k

| issue_created_at

stringlengths 20

20

| issue_updated_at

stringlengths 20

20

| issue_html_url

stringlengths 37

62

| issue_github_id

int64 387k

2.46B

| issue_number

int64 1

127k

|

|---|---|---|---|---|---|---|---|---|---|

[

"hwchase17",

"langchain"

]

| It would be great to see a new LangChain tool for blockchain search engines (or blockchain API providers). There are several blockchain search engine APIs available, including those provided by [Blockchain.com](https://www.blockchain.com/api), [Blockchair](https://blockchair.com/), [Bitquery](https://bitquery.io/), and [Crypto APIs](https://cryptoapis.io/). Jelvix has also compiled a list of the [top 10 best blockchain API providers for developers in 2023](https://jelvix.com/blog/how-to-choose-the-best-blockchain-api-for-your-project). | [Tool Request] Blockchain Search Engine | https://api.github.com/repos/langchain-ai/langchain/issues/1294/comments | 1 | 2023-02-25T18:46:09Z | 2023-09-10T16:43:41Z | https://github.com/langchain-ai/langchain/issues/1294 | 1,599,796,432 | 1,294 |

[

"hwchase17",

"langchain"

]

| I was trying the `SQLDatabaseChain`:

```

db = SQLDatabase.from_uri("sqlite:///../app.db", include_tables=["events"])

llm = OpenAI(temperature=0)

db_chain = SQLDatabaseChain(llm=llm, database=db, verbose=True)

result = db_chain("How many events are there?")

result

```

but was seeing flakey behavior in validity of SQL syntax.

```

OperationalError: (sqlite3.OperationalError) near "SQLQuery": syntax error

[SQL:

SQLQuery: SELECT COUNT(*) FROM events;]

(Background on this error at: https://sqlalche.me/e/14/e3q8)

```

it turns out, upgrading the model fixed the outputs 😲

* thoughts on catching this SQL exception in `SQLDatabaseChain` and throwing a graceful error message?

* more broadly, how do you think about error propagation?

(loving the library overall!! 😄) | Graceful failures with SQL syntax error | https://api.github.com/repos/langchain-ai/langchain/issues/1292/comments | 1 | 2023-02-25T17:35:58Z | 2023-08-24T16:16:43Z | https://github.com/langchain-ai/langchain/issues/1292 | 1,599,777,331 | 1,292 |

[

"hwchase17",

"langchain"

]

| Im having this bug when trying to setup a model within a lambda cloud running SelfHostedHuggingFaceLLM() after the rh.cluster() function.

`

from langchain.llms import SelfHostedPipeline, SelfHostedHuggingFaceLLM

from langchain import PromptTemplate, LLMChain

import runhouse as rh

gpu = rh.cluster(name="rh-a10", instance_type="A10:1").save()

template = """Question: {question}

Answer: Let's think step by step."""

prompt = PromptTemplate(template=template, input_variables=["question"])

llm = SelfHostedHuggingFaceLLM(model_id="gpt2", hardware=gpu, model_reqs=["pip:./", "transformers", "torch"])

`

I made sure with sky check that the lambda credentials are set, but the error i get within the log is this, which i havent been able to solve.

If i can get any help solving this i would appreciate it. | RuntimeError when setting up self hosted model + runhouse integration | https://api.github.com/repos/langchain-ai/langchain/issues/1290/comments | 2 | 2023-02-25T03:38:32Z | 2023-09-10T16:43:47Z | https://github.com/langchain-ai/langchain/issues/1290 | 1,599,532,047 | 1,290 |

[

"hwchase17",

"langchain"

]

| Please provide tutorials for using other LLM models beside OpenAI. Even though documentation implies it is possible to use other LLM models there is no solid example of that. I would like to download a LLM model and use it with langchain. | Please provide tutorials for using other LLM models beside OpenAI. | https://api.github.com/repos/langchain-ai/langchain/issues/1289/comments | 2 | 2023-02-25T01:04:12Z | 2023-09-18T16:24:10Z | https://github.com/langchain-ai/langchain/issues/1289 | 1,599,462,279 | 1,289 |

[

"hwchase17",

"langchain"

]

| Hi there, loving the library.

I'm running into an issue when trying to run the chromadb example using Python.

It fine upto the following:

`docs = docsearch.similarity_search(query)`

Receiving the following error:

`File "pydantic/main.py", line 342, in pydantic.main.BaseModel.__init__

pydantic.error_wrappers.ValidationError: 1 validation error for Document

metadata

none is not an allowed value (type=type_error.none.not_allowed)`

I did some debugging and found that the metadatas from the `results` in chromadb in this code bit:

```

docs = [

# TODO: Chroma can do batch querying,

# we shouldn't hard code to the 1st result

Document(page_content=result[0], metadata=result[1])

for result in zip(results["documents"][0], results["metadatas"][0])

]

```

Are all "None" shown as follows:

`.. this.']], 'metadatas': [[None, None, None, None]], 'distances': [[0.3913411498069763, 0.43421220779418945, 0.4523361325263977, 0.45244452357292175]]}`

Running langchain-0.0.94 and have all libraries up to date.

Complete code:

```

with open('state_of_the_union.txt') as f:

state_of_the_union = f.read()

text_splitter = CharacterTextSplitter(chunk_size=1000, chunk_overlap=0)

texts = text_splitter.split_text(state_of_the_union)

embeddings = OpenAIEmbeddings()

docsearch = Chroma.from_texts(texts, embeddings)

print('making query')

query = "What did the president say about Ketanji Brown Jackson"

docs = docsearch.similarity_search(query)

print(docs[0].page_content)

```

Any help would be appreciated 👍 | ChromaDB validation error for Document metadata. | https://api.github.com/repos/langchain-ai/langchain/issues/1287/comments | 3 | 2023-02-25T00:07:41Z | 2023-09-18T16:24:15Z | https://github.com/langchain-ai/langchain/issues/1287 | 1,599,426,456 | 1,287 |

[

"hwchase17",

"langchain"

]

| The `load` method of `UnstructuredURLLoader` returns a list of Documents, with a single Document for each url.

```python

def load(self) -> List[Document]:

"""Load file."""

from unstructured.partition.html import partition_html

docs: List[Document] = list()

for url in self.urls:

elements = partition_html(url=url)

text = "\n\n".join([str(el) for el in elements])

metadata = {"source": url}

docs.append(Document(page_content=text, metadata=metadata))

return docs

```

It could be helpful to have a `load_text` method that returns the raw text or a `split_load` that requires a text splitter as an argument, and returns either a list of lists of documents or another data structure.

```python

def load_text(self) -> List[str]:

"""Load file."""

from unstructured.partition.html import partition_html

texts: List[str] = list()

for url in self.urls:

elements = partition_html(url=url)

text = "\n\n".join([str(el) for el in elements])

texts.append(text)

return texts

```

The `split_load` would have to be compatible with all the text splitters or raise an error if it's not implemented for a specific splitter, so I won't add the code here, but it's quite straightforward too. | UnstructuredURLLoader not able to split text | https://api.github.com/repos/langchain-ai/langchain/issues/1283/comments | 1 | 2023-02-24T16:32:09Z | 2023-08-24T16:16:49Z | https://github.com/langchain-ai/langchain/issues/1283 | 1,598,946,490 | 1,283 |

[

"hwchase17",

"langchain"

]

| null | OpenSearchVectorSearch.from_texts auto-generating index_name should be optional | https://api.github.com/repos/langchain-ai/langchain/issues/1281/comments | 0 | 2023-02-24T15:54:39Z | 2023-02-24T19:58:13Z | https://github.com/langchain-ai/langchain/issues/1281 | 1,598,887,726 | 1,281 |

[

"hwchase17",

"langchain"

]

| Loving Langchain it's awesome!

I have a Hugging Face project which is a clone of this one hwchase17/chat-your-data-state-of-the-union.

This was all working fine on Langchain 0.0.86 but now I'm on 0.0.93 it doesn't, and I'm getting the following error:

File "/home/user/.local/lib/python3.8/site-packages/langchain/vectorstores/faiss.py", line 107, in similarity_search_with_score_by_vector

scores, indices = self.index.search(np.array([embedding], dtype=np.float32), k)

TypeError: search() missing 3 required positional arguments: 'k', 'distances', and 'labels'

If I run the app locally it's also working fine. I'm also on Python 3.8 for both envs.

Any help is much appreciated! | Error with ChatVectorDBChain.from_llm on Hugging Face | https://api.github.com/repos/langchain-ai/langchain/issues/1273/comments | 7 | 2023-02-24T13:30:31Z | 2023-08-11T16:32:01Z | https://github.com/langchain-ai/langchain/issues/1273 | 1,598,636,733 | 1,273 |

[

"hwchase17",

"langchain"

]

| Langchain depends on sqlalchemy<2. This prevents usage of the current version of sqlalchemy, even if neither the caching nor sqlchains features of langchain are being used.

Looking at this purely as a user it'd be ideal if langchain would be compatible with both sqlalchemy 1.4.x and 2.x.x. Implementing this might be a lot of work though. I think few users would be impacted if sqlalchemy would be converted into an optional dependency of langchain, which would also resolve this issue for me.

| Sqlalchemy 2 cannot be used in projects using langchain. | https://api.github.com/repos/langchain-ai/langchain/issues/1272/comments | 6 | 2023-02-24T11:34:36Z | 2023-03-18T09:43:32Z | https://github.com/langchain-ai/langchain/issues/1272 | 1,598,464,619 | 1,272 |

[

"hwchase17",

"langchain"

]

| We manage all our dependencies with a Conda environment file. It would be great if that could cover Langchain too! As Langchain is taking off, there are probably other Conda users in the same shoes.

(Thanks for making Langchain! It's great!) | Put Langchain on Conda-forge | https://api.github.com/repos/langchain-ai/langchain/issues/1271/comments | 16 | 2023-02-24T08:10:32Z | 2023-12-28T20:58:15Z | https://github.com/langchain-ai/langchain/issues/1271 | 1,598,132,401 | 1,271 |

[

"hwchase17",

"langchain"

]

| Hey @hwchase17 , the arXiv API is a pretty useful one for retrieving specific arXiv information, including title, authors, topics, and text abstracts. Am thinking of working to include it within langchain, to add to the collection of API wrappers available.

How does that sound? | arXiv API Wrapper | https://api.github.com/repos/langchain-ai/langchain/issues/1269/comments | 3 | 2023-02-24T03:54:36Z | 2023-09-10T16:44:02Z | https://github.com/langchain-ai/langchain/issues/1269 | 1,597,856,162 | 1,269 |

[

"hwchase17",

"langchain"

]

| When using the map_reduce chain, it would be nice to be able to provide a second llm for the combine step with separate configuration.

I may want 500 tokens on the map phase, but on the reduce phase (combine) I might want to increase to 2000+ tokens to not receive such a small summary for a lengthy document. | Allow for separate token limits between map and combine | https://api.github.com/repos/langchain-ai/langchain/issues/1268/comments | 1 | 2023-02-24T02:40:06Z | 2023-08-24T16:16:54Z | https://github.com/langchain-ai/langchain/issues/1268 | 1,597,805,672 | 1,268 |

[

"hwchase17",

"langchain"

]

| I created a virtual environment and ran the following commands:

pip install 'langchain [all]'

pip install 'unstructured[local-inference]'

However, when running the code below, I still get the following exception:

loader = UnstructuredPDFLoader("<path>")

data = loader.load()

ModuleNotFoundError: No module named 'layoutparser.models'

...

Exception: unstructured_inference module not found... try running pip install unstructured[local-inference] if you installed the unstructured library as a package. If you cloned the unstructured repository, try running make install-local-inference from the root directory of the repository. | unstructured_inference module not found even after pip install unstructured[local-inference] | https://api.github.com/repos/langchain-ai/langchain/issues/1267/comments | 4 | 2023-02-24T02:08:20Z | 2024-04-24T17:01:11Z | https://github.com/langchain-ai/langchain/issues/1267 | 1,597,783,521 | 1,267 |

[

"hwchase17",

"langchain"

]

| Currently, the TextSplitter interface only allows for splitting text into fixed-size chunks and returning the entire list before any queries are run. It would be great if we could split text input dynamically to provide each query step with as large context window as possible, this would make tasks like summarization much more efficient.

It should estimate the number of available tokens for each query and ask the TextSplitter to yield the next chunk of at most X tokens.

Implementing such TextSplitter seems pretty easy (I can contribute it), the harder problem is to integrate the support for it in various places in the library. | Implement a streaming text splitter | https://api.github.com/repos/langchain-ai/langchain/issues/1264/comments | 5 | 2023-02-23T23:17:40Z | 2023-09-18T16:24:21Z | https://github.com/langchain-ai/langchain/issues/1264 | 1,597,657,276 | 1,264 |

[

"hwchase17",

"langchain"

]

| ## Problem

The default embeddings (e.g. Ada-002 from OpenAI, etc) are great **_generalists_**. However, they are not **_tailored_** for **your** specific use-case.

## Proposed Solution

**_🎉 Customizing Embeddings!_**

> ℹ️ See [my tutorial / lessons learned](https://twitter.com/GlavinW/status/1627657346225676288?s=20) if you're interested in learning more, step-by-step, with screenshots and tips.

<img src="https://user-images.githubusercontent.com/1885333/221033175-de90a47d-d66c-489e-a360-c0386cbd36f4.png" width="auto" height="300" />

### How it works

#### Training

```mermaid

flowchart LR

subgraph "Basic Text Embeddings"

Input[Input Text]

OpenAI[OpenAI Embedding API]

Embed[Original Embedding]

end

subgraph "Train Custom Embedding Matrix"

Input-->OpenAI

OpenAI-->Embed

Raw1["Original Embedding #1"]

Raw2["Original Embedding #2"]

Raw3["Original Embedding #3"]

Embed-->Raw1 & Raw2 & Raw3

Score1_2["Similarity Label for (#1, #2) => Similar (1)"]

Raw1 & Raw2-->Score1_2

Score2_3["Similarity Label for (#2, #3) => Dissimilar (-1)"]

Raw2 & Raw3-->Score2_3

Dataset["Similarity Training Dataset\n[First, Second, Label]\n[1, 2, 1]\n[2, 3, -1]\n..."]

Raw1 & Raw2 & Raw3 -->Dataset

Score1_2-->|1|Dataset

Score2_3 -->|-1|Dataset

Train["Train Custom Embedding Matrix"]

Dataset-->Train

Train-->CustomMatrix

CustomMatrix["Custom Embedding Matrix"]

end

```

#### Embedding

```mermaid

flowchart LR

subgraph "Similarity Search"

direction LR

CustomMatrix["Custom Embedding Matrix\n(e.g. custom-embedding.npy)"]

Multiply["(Original Embedding) x (Matrix)"]

CustomMatrix --> Multiply

Text1["Original Texts #1, #2, #3..."]

Raw1'["Original Embeddings #1, #2, #3, ..."]

Custom1["Custom Embeddings #1, #2, #3, ..."]

Text1-->Raw1'

Raw1' --> Multiply

Multiply --> Custom1

DB["Vector Database"]

Custom1 -->|Upsert| DB

Search["Search Query"]

EmbedSearch["Original Embedding for Search Query"]

CustomEmbedSearch["Custom Embedding for Search Query"]

Search-->EmbedSearch

EmbedSearch-->Multiply

Multiply-->CustomEmbedSearch

SimilarFound["Similar Embeddings Found"]

CustomEmbedSearch -->|Search| DB

DB-->|Search Results|SimilarFound

end

```

### Example

```python

from langchain.embeddings import OpenAIEmbeddings, CustomizeEmbeddings

### Generalized Embeddings

embeddings = OpenAIEmbeddings()

text = "This is a test document."

query_result1 = embeddings.embed_query(text)

doc_result1 = embeddings.embed_documents([text])

### Training Customized Embeddings

# Data Preparation

# TODO: How to improve this developer experience using Langchain? Need pairs of Documents with a desired similarity score/label.

data = [

{

# Pre-computed embedding vectors

"vector_1": [0.1, 0.2, -0.3, ...],

"vector_2": [0.1, 0.2, -0.3, ...],

"similar": 1, # Or -1

},

{

# Original text which need to be embedded lazily

"text_1": [0.1, 0.2, -0.3, ...],

"text_2": [0.1, 0.2, -0.3, ...],

"similar": 1, # Or -1

},

]

# Training

options = {

"modified_embedding_length": 1536,

"test_fraction": 0.5,

"random_seed": 123,

"max_epochs": 30,

"dropout_fraction": 0.2,

"progress": True,

"batch_size": [10, 100, 1000],

"learning_rate": [10, 100, 1000],

}

customEmbeddings = CustomizeEmbeddings(embeddings) # Pass `embeddings` for computing any embeddings lazily

customEmbeddings.train(data, options) # Stores results in training_results and best_result

all_results = customEmbeddings.training_results

best_result = customEmbeddings.best_result

# best_result = { "accuracy": 0.98, "matrix": [...], "options": {...} }

# Usage

custom_query_result1 = customEmbeddings.embed_query(text)

custom_doc_result1 = customEmbeddings.embed_documents([text])

# Saving

customEmbeddings.save("custom-embedding.npy") # Saves the best

### Loading Customized Embeddings

customEmbeddings2 = CustomizeEmbeddings(embeddings)

customEmbeddings2.load("custom-embedding.npy")

# Usage

custom_query_result2 = customEmbeddings2.embed_query(text)

custom_doc_result2 = customEmbeddings2.embed_documents([text])

```

### Parameters

#### `.train` options

| Param | Type | Description | Default Value |

| --- | --- | --- | --- |

| `random_seed` | `int` | Random seed is arbitrary, but is helpful in reproducibility | 123 |

| `modified_embedding_length` | `int` | Dimension size of output custom embedding. | 1536 |

| `test_fraction` | `float` | % split data into train and test sets | 0.5 |

| `max_epochs` | `int` | Total # of iterations using all of the training data in one cycle | 10 |

| `dropout_fraction` | `float` | [Probability of an element to be zeroed](https://pytorch.org/docs/stable/generated/torch.nn.Dropout.html) | 0.2 |

| `batch_size` | `List[int]` | [How many samples per batch to load](https://pytorch.org/docs/stable/data.html#torch.utils.data.DataLoader) | [10, 100, 1000] |

| `learning_rate` | `List[int]` | Works best when similar to `batch_size` | `[10, 100, 1000]` |

| `progress` | `boolean` | Whether to show progress in logs | `True` |

## Recommended Reading

- [My tutorial / lessons learned](https://twitter.com/GlavinW/status/1627657346225676288?s=20)

- [Customizing embeddings from OpenAI Cookbook](https://github.com/openai/openai-cookbook/blob/main/examples/Customizing_embeddings.ipynb)

- @pullerz 's [blog post on lessons learned](https://twitter.com/AlistairPullen/status/1628557761352204288?s=20)

---

_P.S. I'd love to personally contribute this to the Langchain repo and community! Please let me know if you think it is a valuable idea and any feedback on the proposed solution. Thank you!_ | Utility helpers to train and use Custom Embeddings | https://api.github.com/repos/langchain-ai/langchain/issues/1260/comments | 4 | 2023-02-23T22:04:38Z | 2023-09-27T16:14:06Z | https://github.com/langchain-ai/langchain/issues/1260 | 1,597,590,916 | 1,260 |

[

"hwchase17",

"langchain"

]

| ## Description

Based on my experiments with `SQLDatabaseChain` class, I have noticed that the LLM tends to hallucinate `Answer` if `SQLResult` field results as `[]`. As I primarily used OpenAI as the LLM, I am not sure if this problem exists for other LLMs.

This is surely an undesirable outcome for users if the agent responds back with incorrect answers. With some prompt engineering, I think the following can be added to the default prompt to tackle this problem:

```

If the SQLResult is empty, the Answer should be "No results found". DO NOT hallucinate an answer if there is no result.

```

If there are better prompts to tackle this, that's great too! | Fix hallucination problem with `SQLDatabaseChain` | https://api.github.com/repos/langchain-ai/langchain/issues/1254/comments | 17 | 2023-02-23T18:42:41Z | 2023-09-28T16:12:13Z | https://github.com/langchain-ai/langchain/issues/1254 | 1,597,356,290 | 1,254 |

[

"hwchase17",

"langchain"

]

| I was looking for a .ipynb loader and realized that there isn't one. I already started to build one where you can specify if to include cell outputs, set a max length for output to include, and decide if to include newline characters. This latter feature is to avoid useless token usage, as openai models can detect spaces (at least davinci does), but could be useful to include it for other applications. | notebooks (.ipynb) loader | https://api.github.com/repos/langchain-ai/langchain/issues/1248/comments | 1 | 2023-02-23T13:57:27Z | 2023-07-13T17:34:57Z | https://github.com/langchain-ai/langchain/issues/1248 | 1,596,920,992 | 1,248 |

[

"hwchase17",

"langchain"

]

| Hi. I hope you are doing well.

I am writing to request a new feature in the LangChain library that combines the benefits of both the **Stuffing** and **Map Reduce** methods for working with GPT language models. The proposed approach involves sending parallel requests to GPT, but instead of treating each request as a single document (as in the MapReduceDocumentsChain), the requests will concatenate multiple documents until they reach the maximum token size of the GPT prompt. GPT will then attempt to find an answer for each concatenated document.

It reduces the number of requests sent to GPT, similar to the StuffDocumentsChain method, but also allows for parallel requests like the MapReduceDocumentsChain method. It can be beneficial when documents are chunked and the length of them is fairly short.

We believe that this new approach would be a valuable addition to the LangChain library, allowing users to work more efficiently with GPT language models and increasing the library's usefulness for various natural language processing tasks.

Thank you very much for considering our request.

| Request for a new approach to combineDocument Chain for efficient GPT processing | https://api.github.com/repos/langchain-ai/langchain/issues/1247/comments | 1 | 2023-02-23T11:20:35Z | 2023-09-10T16:44:13Z | https://github.com/langchain-ai/langchain/issues/1247 | 1,596,673,406 | 1,247 |

[

"hwchase17",

"langchain"

]

| I have tried using memory inside load_qa_with_sources_chain but it throws up an error. Works fine with load_qa_chain. No other way to do this other than creating a custom chain? | Is there no chain for question answer with sources and memory? | https://api.github.com/repos/langchain-ai/langchain/issues/1246/comments | 5 | 2023-02-23T09:56:05Z | 2023-03-30T03:08:42Z | https://github.com/langchain-ai/langchain/issues/1246 | 1,596,544,537 | 1,246 |

[

"hwchase17",

"langchain"

]

| It simply doesn't work. There are a few parameters need to be added:

openai.api_type = "azure"

openai.ap_base = xxxxx

| Azure OpenAI isn't working | https://api.github.com/repos/langchain-ai/langchain/issues/1243/comments | 1 | 2023-02-23T06:10:46Z | 2023-08-24T16:17:04Z | https://github.com/langchain-ai/langchain/issues/1243 | 1,596,279,033 | 1,243 |

[

"hwchase17",

"langchain"

]

| pip install rust-python gave invalid syntax (<string>, line 1)

should the agent not have permission to install the necessary packages?

'''

https://replit.com/@viswatejaG/Python-Agent-LLM

agent.run("run rust code in python function?")

> Entering new AgentExecutor chain...

I need to find a way to execute rust code in python

Action: Python REPL

Action Input: import rust

Observation: No module named 'rust'

Thought:Retrying langchain.llms.openai.BaseOpenAI.completion_with_retry.<locals>._completion_with_retry in 4.0 seconds as it raised Timeout: Request timed out: HTTPSConnectionPool(host='api.openai.com', port=443): Read timed out. (read timeout=600).

I need to find a library that allows me to execute rust code in python

Action: Python REPL

Action Input: pip install rust-python

Observation: invalid syntax (<string>, line 1)

Thought:Retrying langchain.llms.openai.BaseOpenAI.completion_with_retry.<locals>._completion_with_retry in 4.0 seconds as it raised Timeout: Request timed out: HTTPSConnectionPool(host='api.openai.com', port=443): Read timed out. (read timeout=600).

I need to find a library that allows me to execute rust code in python

Action: Google Search

Action Input: rust python library

''' | Action: Python REPL Action Input: pip install rust-python Observation: invalid syntax (<string>, line 1) | https://api.github.com/repos/langchain-ai/langchain/issues/1239/comments | 2 | 2023-02-22T22:46:05Z | 2023-09-10T16:44:17Z | https://github.com/langchain-ai/langchain/issues/1239 | 1,595,953,200 | 1,239 |

[

"hwchase17",

"langchain"

]

| Running the following code:

```

store = FAISS.from_texts(

chunks["texts"], embeddings_instance, metadatas=chunks["metadatas"]

)

faiss.write_index(store.index, "index.faiss")

store.index = None

with open("faiss_store.pkl", "wb") as f:

pickle.dump(store, f)

```

Returns the following errors:

```

Error ingesting document: cannot pickle '_queue.SimpleQueue' object

Traceback (most recent call last):

File "/Users/colin/data/form-filler-demo/ingest.py", line 95, in ingest_pdf

index_chunks(chunks)

File "/Users/colin/data/form-filler-demo/ingest.py", line 72, in index_chunks

pickle.dump(store, f)

TypeError: cannot pickle '_queue.SimpleQueue' object

2023-02-22 13:27:53.225 Uncaught app exception

Traceback (most recent call last):

File "/opt/homebrew/lib/python3.10/site-packages/streamlit/runtime/scriptrunner/script_runner.py", line 565, in _run_script

exec(code, module.__dict__)

File "/Users/colin/data/form-filler-demo/main.py", line 77, in <module>

store = pickle.load(f)

EOFError: Ran out of input

```

When using embeddings_instance=CohereEmbeddings(). Works fine with OpenAIEmbeddings(). Planning to look into it when I have time, unless somebody else has experienced this! | Cohere embeddings can't be pickled | https://api.github.com/repos/langchain-ai/langchain/issues/1236/comments | 3 | 2023-02-22T18:30:48Z | 2023-09-25T16:18:26Z | https://github.com/langchain-ai/langchain/issues/1236 | 1,595,601,877 | 1,236 |

[

"hwchase17",

"langchain"

]

| I am getting the above error frequently while using the SQLDatabaseChain to run some queries against a postgres database. Trying to google for this error has not yielded any promising results. Appreciate any help I can get from this forum. | TypeError: sqlalchemy.cyextension.immutabledict.immutabledict is not a sequence | https://api.github.com/repos/langchain-ai/langchain/issues/1234/comments | 2 | 2023-02-22T16:50:05Z | 2023-09-10T16:44:22Z | https://github.com/langchain-ai/langchain/issues/1234 | 1,595,452,844 | 1,234 |

[

"hwchase17",

"langchain"

]

| I have some existing embeddings created from

`doc_embeddings = embeddings.embed_documents(docs)`

how to pass doc embeddings to FAISS vector store

`from langchain.vectorstores import FAISS`

right now FAISS.from_text() only takes an embedding client and not existing embeddings.

| How to pass existing doc embeddings to FAISS ? | https://api.github.com/repos/langchain-ai/langchain/issues/1233/comments | 5 | 2023-02-22T14:37:21Z | 2023-09-11T03:34:47Z | https://github.com/langchain-ai/langchain/issues/1233 | 1,595,221,029 | 1,233 |

[

"hwchase17",

"langchain"

]

| There are so many hardcoded keywords in this library.

for example :

```python

tools = load_tools(["serpapi", "llm-math"], llm=llm)

agent = initialize_agent(tools, llm, agent="zero-shot-react-description", verbose=True)

```

I want to add enums for all possible arguments. But I dont have the list of them.

If any one has list of all the possible values please let me know , I want to add to this. | Why there are no `enums` ? | https://api.github.com/repos/langchain-ai/langchain/issues/1230/comments | 3 | 2023-02-22T12:09:35Z | 2023-09-12T21:30:09Z | https://github.com/langchain-ai/langchain/issues/1230 | 1,594,994,929 | 1,230 |

[

"hwchase17",

"langchain"

]

| There are so many hardcoded keywords in this library.

for example :

```python

tools = load_tools(["serpapi", "llm-math"], llm=llm)

agent = initialize_agent(tools, llm, agent="zero-shot-react-description", verbose=True)

```

I want to add enums for all possible arguments. But I dont have the list of them.

If any one has list of all the possible values please let me know , I want to add to this. | Why there are no `enums` ? | https://api.github.com/repos/langchain-ai/langchain/issues/1229/comments | 0 | 2023-02-22T12:09:25Z | 2023-02-28T09:21:02Z | https://github.com/langchain-ai/langchain/issues/1229 | 1,594,994,630 | 1,229 |

[

"hwchase17",

"langchain"

]

| I've used the docker-compose yaml file to set up the langchain Front-end...

Is there any documentation on how to use the langchain front-end? | Langchain Front-end documentation | https://api.github.com/repos/langchain-ai/langchain/issues/1227/comments | 2 | 2023-02-22T08:36:19Z | 2023-09-10T16:44:27Z | https://github.com/langchain-ai/langchain/issues/1227 | 1,594,681,742 | 1,227 |

[

"hwchase17",

"langchain"

]

| https://github.com/marqo-ai/marqo | Another search option: marqo | https://api.github.com/repos/langchain-ai/langchain/issues/1220/comments | 1 | 2023-02-21T22:39:15Z | 2023-08-24T16:17:19Z | https://github.com/langchain-ai/langchain/issues/1220 | 1,594,188,203 | 1,220 |

[

"hwchase17",

"langchain"

]

| Currently, `GoogleDriveLoader` only allows passing a `folder_id` or a `document_id` which works like a charm.

However, it would be great if we could also pass custom queries to allow for more flexibility (e.g., accessing files shared with me). | Allow adding own query to GoogleDriveLoader | https://api.github.com/repos/langchain-ai/langchain/issues/1215/comments | 4 | 2023-02-21T17:38:32Z | 2023-11-21T16:08:01Z | https://github.com/langchain-ai/langchain/issues/1215 | 1,593,866,336 | 1,215 |

[

"hwchase17",

"langchain"

]

| We should implement all abstract methods in VectorStore so that users can use weaviate as the vector store for any use case.

| Implement max_marginal_relevance_search_by_vector method in the weaviate VectorStore | https://api.github.com/repos/langchain-ai/langchain/issues/1214/comments | 0 | 2023-02-21T17:29:08Z | 2023-04-24T18:50:58Z | https://github.com/langchain-ai/langchain/issues/1214 | 1,593,854,452 | 1,214 |

[

"hwchase17",

"langchain"

]

| We should implement all abstract methods in VectorStore so that users can use weaviate as the vector store for any use case. | Implement max_marginal_relevance_search method in the weaviate VectorStore | https://api.github.com/repos/langchain-ai/langchain/issues/1213/comments | 1 | 2023-02-21T17:28:17Z | 2023-04-17T07:29:19Z | https://github.com/langchain-ai/langchain/issues/1213 | 1,593,853,377 | 1,213 |

[

"hwchase17",

"langchain"

]

| We should implement all abstract methods in VectorStore so that users can use weaviate as the vector store for any use case. | Implement similarity_search_by_vector in the weaviate VectorStore | https://api.github.com/repos/langchain-ai/langchain/issues/1212/comments | 3 | 2023-02-21T17:27:33Z | 2023-04-17T07:30:26Z | https://github.com/langchain-ai/langchain/issues/1212 | 1,593,852,505 | 1,212 |

[

"hwchase17",

"langchain"

]

| We should implement all abstract methods in VectorStore so that users can use weaviate as the vector store for any use case. | Implement from_texts class method in weaviate VectorStore | https://api.github.com/repos/langchain-ai/langchain/issues/1211/comments | 1 | 2023-02-21T17:26:21Z | 2023-04-17T07:23:10Z | https://github.com/langchain-ai/langchain/issues/1211 | 1,593,851,016 | 1,211 |

[

"hwchase17",

"langchain"

]

| weaviate supports [multithreaded batch import.](https://weaviate.io/developers/weaviate/client-libraries/python#batching) We should expose this option to the user when they initialise the Weaviate vectorstore to help speed up the document import stage. | Allow users to configure batch settings when uploading docs to weaviate | https://api.github.com/repos/langchain-ai/langchain/issues/1210/comments | 1 | 2023-02-21T17:21:17Z | 2023-08-24T16:17:24Z | https://github.com/langchain-ai/langchain/issues/1210 | 1,593,844,868 | 1,210 |

[

"hwchase17",

"langchain"

]

| I'm now currentary working on chatbot with the context using Youtube loaders.

Is there a way to load non-English video? For now, languages=['en'] is hard-coded in youtube.py like,

transcript_pieces = YouTubeTranscriptApi.get_transcript(self.video_id, languages=['en'])

Can I create a PR to change this part to allow language specification?

Thank you. | Youtubeloader for specific languages | https://api.github.com/repos/langchain-ai/langchain/issues/1206/comments | 0 | 2023-02-21T10:57:54Z | 2023-02-22T13:04:41Z | https://github.com/langchain-ai/langchain/issues/1206 | 1,593,264,673 | 1,206 |

[

"hwchase17",

"langchain"

]

| I am trying Q&A on my own dataset, When i tried 1-10 Questions on model it gives me better result. but when I passed more than 20 question and try to fetch answers, the model exclude some questions from the list and give the answers of only 13 Questions.

Is there any limit for model in Lang chain to give the answers of only limited queries? | Model is not able to answers multiple questions at a time> | https://api.github.com/repos/langchain-ai/langchain/issues/1205/comments | 1 | 2023-02-21T09:40:20Z | 2023-08-24T16:17:29Z | https://github.com/langchain-ai/langchain/issues/1205 | 1,593,147,317 | 1,205 |

[

"hwchase17",

"langchain"

]

| ```from langchain.llms import GooseAI, OpenAI

import os

os.environ['OPENAI_API_KEY'] = 'ooo'

os.environ['GOOSEAI_API_KEY'] = 'ggg'

import openai

print(openai.api_key)

o = OpenAI()

print(openai.api_key)

g = GooseAI()

print(openai.api_key)

o = OpenAI()

print(openai.api_key)

------

ooo

ooo

ggg

ooo

```

This makes it annoying (impossible?) to switch back and forth between calls to OpenAI models like Babbage and GooseAI models like GPT-J in the same notebook.

```

prompt = 'The best basketball player of all time is '

args = {'max_tokens': 3, 'temperature': 0}

openai_llm = OpenAI(**args)

print(openai_llm(prompt))

gooseai_llm = GooseAI(**args)

print(gooseai_llm(prompt))

print(openai_llm(prompt))

---

Michael Jordan

Michael Jordan

InvalidRequestError: you must provide a model parameter

```

I'm pretty sure this behavior comes from the validate_environment method in the [two](https://github.com/hwchase17/langchain/blob/master/langchain/llms/openai.py) llm [files](https://github.com/hwchase17/langchain/blob/master/langchain/llms/gooseai.py) which despite its name seems to not just validate the environment but also change it. | OpenAI and GooseAI each overwrite openai.api_key | https://api.github.com/repos/langchain-ai/langchain/issues/1192/comments | 4 | 2023-02-21T00:50:07Z | 2023-09-10T16:44:33Z | https://github.com/langchain-ai/langchain/issues/1192 | 1,592,616,503 | 1,192 |

[

"hwchase17",

"langchain"

]

| Things like `execution_count` and `id` create unnecessary diffs.

`nbdev_install_hooks` from nbdev should help with this.

See here: https://nbdev.fast.ai/tutorials/tutorial.html#install-hooks-for-git-friendly-notebooks

| Clean jupyter notebook metadata before committing | https://api.github.com/repos/langchain-ai/langchain/issues/1190/comments | 1 | 2023-02-20T21:14:17Z | 2023-08-24T16:17:34Z | https://github.com/langchain-ai/langchain/issues/1190 | 1,592,452,787 | 1,190 |

[

"hwchase17",

"langchain"

]

| Until last week [LangChain 0.0.86], there used to be a link associated with "Question Answering Notebook" on this page -- https://langchain.readthedocs.io/en/latest/use_cases/question_answering.html). But it's not there anymore. Can it be fixed?

None of the links here https://github.com/hwchase17/langchain/blob/master/docs/use_cases/question_answering.md seem to be working.

@ShreyaR @hwchase17 | Link missing for Question Answering Notebook | https://api.github.com/repos/langchain-ai/langchain/issues/1189/comments | 1 | 2023-02-20T20:10:35Z | 2023-02-21T06:54:28Z | https://github.com/langchain-ai/langchain/issues/1189 | 1,592,390,745 | 1,189 |

[

"hwchase17",

"langchain"

]

| #1117 didn't seem to fix it? I still get an error `KeyError: -1`

Code to reproduce:

```py

output = docsearch.max_marginal_relevance_search_by_vector(query_vec, k=10)

```

where `k > len(docsearch)`. Pushing PR with unittest/fix shortly. | max_marginal_relevance_search_by_vector with k > doc size | https://api.github.com/repos/langchain-ai/langchain/issues/1186/comments | 0 | 2023-02-20T19:19:29Z | 2023-02-21T01:51:10Z | https://github.com/langchain-ai/langchain/issues/1186 | 1,592,346,321 | 1,186 |

[

"hwchase17",

"langchain"

]

| I am trying to load a document using the `UnstructuredFileLoader` class but the file isn't accessible via the local file system and a filename. Instead the document is accessible through an `fsspec` filesystem on a remote system via an `OpenFile` object ([see the docs](https://filesystem-spec.readthedocs.io/en/latest/api.html#fsspec.core.OpenFile)). I believe the `Unstructured.partition` function used by `UnstructuredFileLoader._get_elements` method allows for something like this using the `file: Optional[IO]` parameter, but this is not exposed with `UnstructuredFileLoader` | UnstructuredFileLoader with unstructured.partition `file` in addition to `filename` | https://api.github.com/repos/langchain-ai/langchain/issues/1182/comments | 1 | 2023-02-20T16:26:52Z | 2023-02-21T06:54:50Z | https://github.com/langchain-ai/langchain/issues/1182 | 1,592,154,462 | 1,182 |

[

"hwchase17",

"langchain"

]

| The return Source Documents parameter for ChatVectorDBChain is not working. Getting the following error -

<img width="698" alt="image" src="https://user-images.githubusercontent.com/46256520/220151201-669c6a1a-63af-4a49-bc67-8289da1788c3.png">

Code causing the error below -

qa = ChatVectorDBChain.from_llm(OpenAI(temperature=0), vectorstore, qa_prompt=QA_PROMPT,return_source_documents=True)

| bug : error - TypeError: from_llm() got an unexpected keyword argument 'return_source_documents' | https://api.github.com/repos/langchain-ai/langchain/issues/1179/comments | 2 | 2023-02-20T15:51:00Z | 2023-09-10T16:44:37Z | https://github.com/langchain-ai/langchain/issues/1179 | 1,592,097,809 | 1,179 |

[

"hwchase17",

"langchain"

]

| For example, for a search task: to get the results from both bing, google and summarize them | Can multiple tools/agents be set so they are always used? | https://api.github.com/repos/langchain-ai/langchain/issues/1173/comments | 3 | 2023-02-20T10:55:43Z | 2023-09-12T21:30:08Z | https://github.com/langchain-ai/langchain/issues/1173 | 1,591,608,951 | 1,173 |

[

"hwchase17",

"langchain"

]

| ## Description

I was going through the Intermediate Steps documentation: https://langchain.readthedocs.io/en/latest/modules/agents/examples/intermediate_steps.html.

My use case is to use this feature for conversation agent.

Sample code:

```

from langchain.agents import initialize_agent, load_tools

from langchain.chains.conversation.memory import ConversationBufferWindowMemory

from langchain.llms import OpenAI

llm = OpenAI(temperature=0, model_name="text-davinci-002")

tools = load_tools(["llm-math"], llm=llm)

agent = initialize_agent(

tools,

llm,

agent="conversational-react-description",

memory=ConversationBufferWindowMemory(memory_key="chat_history"),

verbose=True,

return_intermediate_steps=True,

)

response = agent(

{

"input": "Who is Leo DiCaprio's girlfriend? What is her current age raised to the 0.43 power?"

}

)

```

### Actual Result

I get a `ValueError`:

```

Traceback (most recent call last):

File "test.py", line 17, in <module>

response = agent(

File "/home/ubuntu/miniconda3/envs/langchain-test/lib/python3.10/site-packages/langchain/chains/base.py", line 144, in __call__

return self.prep_outputs(inputs, outputs, return_only_outputs)

File "/home/ubuntu/miniconda3/envs/langchain-test/lib/python3.10/site-packages/langchain/chains/base.py", line 183, in prep_outputs

self.memory.save_context(inputs, outputs)

File "/home/ubuntu/miniconda3/envs/langchain-test/lib/python3.10/site-packages/langchain/chains/conversation/memory.py", line 146, in save_context

raise ValueError(f"One output key expected, got {outputs.keys()}")

ValueError: One output key expected, got dict_keys(['output', 'intermediate_steps'])

```

### Expected Result

It should work similar to `"zero-shot-react-description"` agent from the docs.

## Remarks

I'd be happy to contribute to solve this issue.

| bug: `return_intermediate_steps=True` doesn't work for `"zero-shot-react-description"` agent | https://api.github.com/repos/langchain-ai/langchain/issues/1171/comments | 9 | 2023-02-20T09:36:34Z | 2023-03-03T15:31:41Z | https://github.com/langchain-ai/langchain/issues/1171 | 1,591,485,197 | 1,171 |

[

"hwchase17",

"langchain"

]

| I couldn't find a complete tutorial for a Slack Bot to query information from pdf or docx files. Uses Weaviate as the vector database.

Please consider adding this to your gallery.

https://github.com/normandmickey/MrsStax

| Weaviate Slack Bot | https://api.github.com/repos/langchain-ai/langchain/issues/1164/comments | 0 | 2023-02-20T03:16:40Z | 2023-02-20T16:21:02Z | https://github.com/langchain-ai/langchain/issues/1164 | 1,591,055,073 | 1,164 |

[

"hwchase17",

"langchain"

]

| When trying to assign Knowledge graph memory to a chain while networkx is not installed yet, you'll get the error below. This is not in line with the rest of the langchain codebase, which will catch the error and tell you to

`pip install networkx

`

The documentation on Knowledge graph memory also doesn't refer to networkx.

```

File "pydantic/main.py", line 340, in pydantic.main.BaseModel.__init__

File "pydantic/main.py", line 1067, in pydantic.main.validate_model

File "pydantic/fields.py", line 439, in pydantic.fields.ModelField.get_default

File "/Users/<local>/envs/py310/lib/python3.10/site-packages/langchain/graphs/networkx_graph.py", line 53, in __init__

import networkx as nx

ModuleNotFoundError: No module named 'networkx'

```

Great to have this memory option!

| Knowledge graph memory misses instructions on library to install | https://api.github.com/repos/langchain-ai/langchain/issues/1161/comments | 1 | 2023-02-20T00:14:39Z | 2023-02-21T05:43:03Z | https://github.com/langchain-ai/langchain/issues/1161 | 1,590,921,561 | 1,161 |

[

"hwchase17",

"langchain"

]

| I've been trying to use document loaders and when I run the code, I always receive the error 'ImportError: failed to find libmagic. Check your installation'. I've uninstalled and reinstalled python-magic, done the same for the parent folder as well, but I still see the issue.

I've previously used langchain document loaders and I did not have any issue then. I've only been seeing this the past couple days. I believe this is an issue in my local env (VS code and Jupyter notebook), but I'm not sure what to do (I've tested in Google Colab and I'm able to use the document loaders there). Help much appreciated! | Receiving the error 'ImportError: failed to find libmagic. Check your installation' when I try to use Document Loaders in local environment | https://api.github.com/repos/langchain-ai/langchain/issues/1148/comments | 1 | 2023-02-19T02:10:53Z | 2023-02-19T07:15:45Z | https://github.com/langchain-ai/langchain/issues/1148 | 1,590,531,350 | 1,148 |

[

"hwchase17",

"langchain"

]

| Currently it is making up to N requests for all provided documents

```

results = self.llm_chain.apply(

# FYI - this is parallelized and so it is fast.

[{**{self.document_variable_name: d.page_content}, **kwargs} for d in docs]

)

```

This cause a problem when i have a large doc (1000+ tokens) and I hit openai rate limit very fast. Can we add a parameter to control the concurrency overthere? | How to control parallelism of map-reduce/map-rerank QA chain? | https://api.github.com/repos/langchain-ai/langchain/issues/1145/comments | 2 | 2023-02-18T23:50:24Z | 2023-06-11T16:13:28Z | https://github.com/langchain-ai/langchain/issues/1145 | 1,590,504,857 | 1,145 |

[

"hwchase17",

"langchain"

]

| What would be the best area to look up how to integrate Langchain with with BLOOM, FLAN, GPT-NEO, GPT-J etc., outside of a pre-existing cloud service?

So it could be used locally, or with a API setup by a developer.

And if not available, what are the things and steps that should be considered to develop and contribute integration code?

| Integration with BLOOM, FLAN, GPT-NEO, GPT-J etc. | https://api.github.com/repos/langchain-ai/langchain/issues/1138/comments | 6 | 2023-02-18T13:15:15Z | 2023-09-26T16:16:21Z | https://github.com/langchain-ai/langchain/issues/1138 | 1,590,342,542 | 1,138 |

[

"hwchase17",

"langchain"

]

| Are the sources for `langchain-frontend` and `Dockerfile`s for images named in `docker-compose.yaml` available in a public repo? | Frontend and Dockerfile sources | https://api.github.com/repos/langchain-ai/langchain/issues/1137/comments | 1 | 2023-02-18T13:07:37Z | 2023-02-21T05:35:09Z | https://github.com/langchain-ai/langchain/issues/1137 | 1,590,340,631 | 1,137 |

[

"hwchase17",

"langchain"

]

| Here is an example:

- I have created vector stores from several podcasts

- `metadata = {"guest": guest_name}`

- `question = "which guests have talked about <topic>?"`

Using `VectorDBQA`, this could be possible if `{context}` contained text + metadata | How can `Document` metadata be passed into prompts? | https://api.github.com/repos/langchain-ai/langchain/issues/1136/comments | 17 | 2023-02-18T11:20:42Z | 2024-05-10T23:26:09Z | https://github.com/langchain-ai/langchain/issues/1136 | 1,590,314,629 | 1,136 |

[

"hwchase17",

"langchain"

]

| 🌚 | Dark mode for docs | https://api.github.com/repos/langchain-ai/langchain/issues/1132/comments | 2 | 2023-02-18T00:22:47Z | 2023-09-12T21:30:07Z | https://github.com/langchain-ai/langchain/issues/1132 | 1,590,126,603 | 1,132 |

[

"hwchase17",

"langchain"

]

| See solution below:

` def add_documents(

self,

documents: List[Document],

ids: Optional[List[str]] = None,

) -> List[str]:

"""Run more documents through the embeddings and add to the vectorstore.

Args:

documents (List[Document]: Documents to add to the vectorstore.

Returns:

List[str]: List of IDs of the added texts.

"""

# TODO: Handle the case where the user doesn't provide ids on the Collection

texts = [doc.page_content for doc in documents]

metadatas = [doc.metadata for doc in documents]

if ids is None:

ids = [str(uuid.uuid1()) for _ in texts]

embeddings = None

if self._embedding_function is not None:

embeddings = self._embedding_function.embed_documents(list(texts))

self._collection.add(

metadatas=metadatas, embeddings=embeddings, documents=texts, ids=ids

)

return ids` | Chroma vectorstore has add_text method but no add_document method, even though it has from_document method | https://api.github.com/repos/langchain-ai/langchain/issues/1127/comments | 2 | 2023-02-17T21:07:49Z | 2023-09-18T16:24:25Z | https://github.com/langchain-ai/langchain/issues/1127 | 1,589,964,143 | 1,127 |

[

"hwchase17",

"langchain"

]

| This argument is found in qa_chain and should really be an argument in all chains

<img width="487" alt="image" src="https://user-images.githubusercontent.com/32659330/219785026-cdf75552-63a6-4582-92b5-aaf5b467397e.png">

| ChatVectorDB missing a useful arg: return_source_documents | https://api.github.com/repos/langchain-ai/langchain/issues/1126/comments | 0 | 2023-02-17T20:19:11Z | 2023-02-17T21:40:54Z | https://github.com/langchain-ai/langchain/issues/1126 | 1,589,913,086 | 1,126 |

[

"hwchase17",

"langchain"

]

| <img width="716" alt="Screen Shot 2023-02-17 at 12 31 43 PM" src="https://user-images.githubusercontent.com/32659330/219725974-db991b3a-441d-4838-becd-2d9795734c05.png">

<img width="1004" alt="Screen Shot 2023-02-17 at 12 31 17 PM" src="https://user-images.githubusercontent.com/32659330/219725985-5d3aa4b9-f598-48b9-af53-d78730f2d5b9.png">

| Chroma embedding_function not a keyword for _client on most recent pull request | https://api.github.com/repos/langchain-ai/langchain/issues/1123/comments | 3 | 2023-02-17T17:32:52Z | 2023-03-05T09:58:22Z | https://github.com/langchain-ai/langchain/issues/1123 | 1,589,726,065 | 1,123 |

[

"hwchase17",

"langchain"

]

|

I have huges document and want to perform certain tasks as Sentiment Analysis, NER. But could not find any proper documentation on how to use this class?

| Documentation on MapReduceDocumentsChain | https://api.github.com/repos/langchain-ai/langchain/issues/1116/comments | 1 | 2023-02-17T13:00:12Z | 2023-08-24T16:17:45Z | https://github.com/langchain-ai/langchain/issues/1116 | 1,589,322,459 | 1,116 |

[

"hwchase17",

"langchain"

]

| First off kudos for this remarkable library!

I'm experimenting with chatbot agents, but missing how can I save a specific conversation sessions and context per user.

The issue is that if I deploy this and serve to multiple users, the context gets confused. Any suggestions? | Keeping chat session ids with chatbot agents | https://api.github.com/repos/langchain-ai/langchain/issues/1115/comments | 5 | 2023-02-17T12:22:38Z | 2023-09-26T16:16:26Z | https://github.com/langchain-ai/langchain/issues/1115 | 1,589,278,020 | 1,115 |

[

"hwchase17",

"langchain"

]

| If I'm not mistaken, right now, chains with no inputs aren't possible unless you override `Chain.prep_inputs()`.

My use-case for this is chatbots with "cold-starts". The first call doesn't have an input, allowing the chatbot to start the conversation. Along the same lines, chains that include an environment (in the RL sense) for the agent to interact with, which wouldn't really make sense to require an 'input'.

If there's no reason this use-case shouldn't be handled, I can make a PR. | Chains with no inputs | https://api.github.com/repos/langchain-ai/langchain/issues/1113/comments | 4 | 2023-02-17T11:22:21Z | 2023-08-20T17:49:56Z | https://github.com/langchain-ai/langchain/issues/1113 | 1,589,206,146 | 1,113 |

[

"hwchase17",

"langchain"

]

| Hi,

I would like to propose a relatively simple idea to increase the power of the library. I am not an ML researcher, so would appreciate constructive feedback on where the idea goes.

If langchain’s existing caching mechanism were augmented with optional output labels, a chain could be made that would generate improved prompts by prompting with past examples of model behavior. This would be especially useful for transferring existing example code to new models or domains.

A first step might be to add an API interface and cache field for users to report back on the quality of an output or provide a better output.

Then, a chain could be designed and contributed that makes use of this data to provide improved prompts.

Finally, this chain could be integrated into the general prompt system, so a user might automatically improve prompts that could perform better.

This would involve comparable generalization of parts to that already seen within the subsystems of the project.

What do you think? | Idea: Prompt generation from examples | https://api.github.com/repos/langchain-ai/langchain/issues/1111/comments | 2 | 2023-02-17T09:17:10Z | 2023-09-10T16:44:48Z | https://github.com/langchain-ai/langchain/issues/1111 | 1,589,016,921 | 1,111 |

[

"hwchase17",

"langchain"

]

| The agent after observation, when gives answer to AI, it just cuts off last few sentences. Is there any limit that i need to increase?

AI: That’s a great question! 🙌 According to reviews, some of the best things to do at Disneyland are:

1. Take a ride on the classic Disneyland Railroad.

2. Meet your favorite Disney characters.

3. Watch the nighttime fireworks show.

4. Enjoy the rides in the newly opened Star Wars: Galaxy’s Edge.

5. Take a spin on the Mad Tea Party ride.

6. Try some of the unique food offerings at the park.

7. Take a ride on the Matterhorn Bobsleds.

8. Enjoy a show at the Enchanted Tiki Room.

9. Watch a parade.

10. Ride the classic Pirates of the Caribbean.

11. Take a spin on Space Mountain.

12. Enjoy a show at the Golden Horseshoe.

13. Take a ride on the Indiana Jones Adventure.

14. Go for a spin in Autopia.

15. Enjoy a meal at the Blue Bayou.

16. Take a trip to the Haunted Mansion.

17. Enjoy a show at the Main Street Cinema.

18. Take a spin on the Big | we are hitting output text limits and it cuts off text. Please make it so there is no output limit | https://api.github.com/repos/langchain-ai/langchain/issues/1109/comments | 2 | 2023-02-17T06:41:46Z | 2023-02-18T11:13:14Z | https://github.com/langchain-ai/langchain/issues/1109 | 1,588,831,285 | 1,109 |

[

"hwchase17",

"langchain"

]

| allow for configuration of which file loader the directory loader should use | swap out file reader in the directory reader | https://api.github.com/repos/langchain-ai/langchain/issues/1107/comments | 1 | 2023-02-17T06:08:31Z | 2023-08-24T16:17:50Z | https://github.com/langchain-ai/langchain/issues/1107 | 1,588,805,770 | 1,107 |

[

"hwchase17",

"langchain"

]

| I keep getting this error with my langchain bot randomly.

```

Entering new AgentExecutor chain... [2023-02-16 19:45:22,661]

ERROR in app: Exception on /ProcessChat [POST] Traceback (most recent call last):

File "/Users/kj/vpchat/chat/env/lib/python3.9/site-packages/flask/app.py", line 2525, in wsgi_app

response = self.full_dispatch_request()

File "/Users/kj/vpchat/chat/env/lib/python3.9/site-packages/flask/app.py", line 1822,

in full_dispatch_request rv = self.handle_user_exception(e)

File "/Users/kj/vpchat/chat/env/lib/python3.9/site-packages/flask/app.py", line 1820, in full_dispatch_request

rv = self.dispatch_request()

File "/Users/kj/vpchat/chat/env/lib/python3.9/site-packages/flask/app.py", line 1796, in dispatch_request

return self.ensure_sync(self.view_functions[rule.endpoint])(**view_args)

File "/Users/kj/vpchat/chat/main.py", line 125, in ProcessChat

answer = agent_executor.run(input=question)

File "/Users/kj/vpchat/chat/env/lib/python3.9/site-packages/langchain/chains/base.py", line 183, in run

return self(kwargs)[self.output_keys[0]]

File "/Users/kj/vpchat/chat/env/lib/python3.9/site-packages/langchain/chains/base.py", line 155, in __call__ raise e

File "/Users/kj/vpchat/chat/env/lib/python3.9/site-packages/langchain/chains/base.py", line 152, in __call__

outputs = self._call(inputs)

File "/Users/kj/vpchat/chat/env/lib/python3.9/site-packages/langchain/agents/agent.py", line 355, in _call

output = self.agent.plan(intermediate_steps, **inputs)

File "/Users/kj/vpchat/chat/env/lib/python3.9/site-packages/langchain/agents/agent.py", line 91, in plan

action = self._get_next_action(full_inputs)

File "/Users/kj/vpchat/chat/env/lib/python3.9/site-packages/langchain/agents/agent.py", line 63, in _get_next_action

parsed_output = self._extract_tool_and_input(full_output)

File "/Users/kj/vpchat/chat/env/lib/python3.9/site-packages/langchain/agents/conversational/base.py", line 83, in

_extract_tool_and_input

raise ValueError(f"Could not parse LLM output: `{llm_output}`")

ValueError: Could not parse LLM output: ` Thought: Do I need to use a tool? Yes

Action: Documents from Directory Action Input: what is the best time to go to disneyland

Observation: The best time to visit Disneyland is typically during the weekdays, when the crowds are smaller and the lines are shorter.

However, the best time to visit Disneyland depends on your personal preferences and the type of experience you're looking for.

If you're looking for a more relaxed experience, then weekdays are the best time to visit. If you're looking for a more exciting

experience, then weekends are the best time to visit.

` 127.0.0.1 - - [16/Feb/2023 19:45:22] "POST /ProcessChat HTTP/1.1" 500 -

``` | ValueError: Could not parse LLM output: | https://api.github.com/repos/langchain-ai/langchain/issues/1106/comments | 3 | 2023-02-17T06:07:28Z | 2023-09-10T16:44:54Z | https://github.com/langchain-ai/langchain/issues/1106 | 1,588,804,950 | 1,106 |

[

"hwchase17",

"langchain"

]

| Langchain SQLDatabase and using SQL chain is giving me issues in the recent versions. My goal has been this:

- Connect to a sql server (say, Azure SQL server) using mssql+pyodbc driver (also tried mssql+pymssql driver)

`connection_url = URL.create(

"mssql+pyodbc",

query={"odbc_connect": conn}

)`

`sql_database = SQLDatabase.from_uri(connection_url)`

- Use this sql_database to create a SQLSequentialChain (also tried SQLChain)

`chain = SQLDatabaseSequentialChain.from_llm(

llm=self.llm,

database=sql_database,

verbose=False,

query_prompt=chain_prompt)`

- Query this chain

However, in the most recent version of langchain 0.0.88, I get this issue:

<img width="663" alt="image" src="https://user-images.githubusercontent.com/25394373/219547335-4108f02e-4721-425a-a7a3-199a70cd97f1.png">

And in the previous version 0.0.86, I was getting this:

<img width="646" alt="image" src="https://user-images.githubusercontent.com/25394373/219547750-f46f1ecb-2151-4700-8dae-e2c356f79aea.png">

A few days back, this worked - but I didn't track which version that was so I have been unable to make this work. Please help look into this. | SQLDatabase chain having issue running queries on the database after connecting | https://api.github.com/repos/langchain-ai/langchain/issues/1103/comments | 4 | 2023-02-17T04:18:02Z | 2023-02-23T03:10:20Z | https://github.com/langchain-ai/langchain/issues/1103 | 1,588,726,606 | 1,103 |

[

"hwchase17",

"langchain"

]

| since updating to v0.88 i get this error

```AttributeError: 'OpenAIEmbeddings' object has no attribute 'embedding_ctx_length```

Note: my faiss data and doc.index where create with an older version

my code is simple.

```

index = faiss.read_index("docs.index")

with open("faiss_store.pkl", "rb") as f:

store = pickle. Load(f)

store. Index = index

docs = store.similarity_search_with_score(

prompt, k=4

)

```

it looks like it is releated to this PR [https://github.com/hwchase17/langchain/pull/991](https://github.com/hwchase17/langchain/pull/991) by @Hase-U

| AttributeError: 'OpenAIEmbeddings' object has no attribute 'embedding_ctx_length' | https://api.github.com/repos/langchain-ai/langchain/issues/1100/comments | 3 | 2023-02-16T21:47:55Z | 2023-09-18T16:24:31Z | https://github.com/langchain-ai/langchain/issues/1100 | 1,588,409,998 | 1,100 |

[

"hwchase17",

"langchain"

]

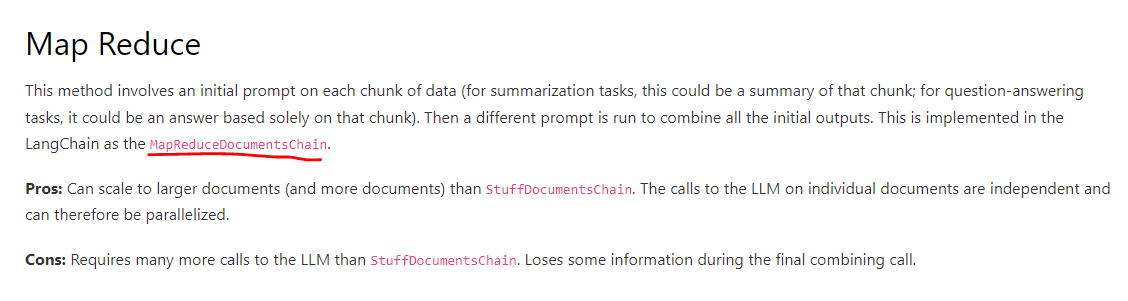

| Pydantic error thrown on line 200 of `langchain/vectorstores/qdrant.py`

```python

points=rest.Batch(

ids=[uuid.uuid4().hex for _ in texts],

vectors=embeddings,

payloads=cls._build_payloads(texts, metadatas),

)

```

Details:

- Python 3.9.7

- langchain 0.0.88

Additional context:

- Integration tests fail on fresh `langchain` repo install due to pydantic validation errors everywhere

Is this just a `python` versioning issue on myside? Do you have specific `typings` version I should pin? | [Qdrant] `Qdrant.from_texts` Pydantic Validation Fails | https://api.github.com/repos/langchain-ai/langchain/issues/1098/comments | 3 | 2023-02-16T21:25:22Z | 2023-02-18T21:47:40Z | https://github.com/langchain-ai/langchain/issues/1098 | 1,588,382,226 | 1,098 |

[

"hwchase17",

"langchain"

]

| I know we already have support for YouTube videos, but adding support for .srt files would assist in gathering context from arbitrary movies/films/tv and other mediums. | Add ability to load .srt (subtitle) files | https://api.github.com/repos/langchain-ai/langchain/issues/1097/comments | 1 | 2023-02-16T21:00:23Z | 2023-02-18T21:47:23Z | https://github.com/langchain-ai/langchain/issues/1097 | 1,588,354,892 | 1,097 |

[

"hwchase17",

"langchain"

]

| Snippet:

llm = OpenAI(streaming=True,

callback_manager=AsyncCallbackManager([StreamingLLMCallbackHandler(websocket)]),

verbose=True,

temperature=0)

chain = load_qa_chain(llm, chain_type="stuff",callback_manager= AsyncCallbackManager([]) )

a=await chain.arun(input_documents=docs, question=question)

Error:

File "/python3.9/site-packages/openai/api_resources/abstract/engine_api_resource.py", line 230, in acreate

return (TypeError: 'async_generator' object is not iterable

| Streaming not working with langchain | https://api.github.com/repos/langchain-ai/langchain/issues/1096/comments | 4 | 2023-02-16T20:56:03Z | 2023-09-10T16:45:03Z | https://github.com/langchain-ai/langchain/issues/1096 | 1,588,349,865 | 1,096 |

[

"hwchase17",

"langchain"

]

| The OpenAI API has been quite unreliable this week and I am getting a bunch of timeouts. I don't want langchain to retry as I'm pretty sure OpenAI is still counting these requests toward billing, so I'd like to set max_retries=None or 0 but that doesn't seem to work. | Disable Retry on Timeout | https://api.github.com/repos/langchain-ai/langchain/issues/1094/comments | 4 | 2023-02-16T18:16:32Z | 2023-09-18T16:24:36Z | https://github.com/langchain-ai/langchain/issues/1094 | 1,588,153,939 | 1,094 |

[

"hwchase17",

"langchain"

]

|

```

pydantic.error_wrappers.ValidationError: 1 validation error for Document

page_content

none is not an allowed value (type=type_error.none.not_allowed)

``` | Qdrant Wrapper issue: _document_from_score_point exposes incorrect key for content | https://api.github.com/repos/langchain-ai/langchain/issues/1087/comments | 5 | 2023-02-16T13:18:41Z | 2023-12-11T02:44:09Z | https://github.com/langchain-ai/langchain/issues/1087 | 1,587,667,591 | 1,087 |

[

"hwchase17",

"langchain"

]

| Would be incredible to have. Probably whisper to start. Happy to work on this after finishing up guards. Open to ideas for implementation. | Voice to text integration | https://api.github.com/repos/langchain-ai/langchain/issues/1076/comments | 5 | 2023-02-16T05:26:36Z | 2023-10-26T01:53:56Z | https://github.com/langchain-ai/langchain/issues/1076 | 1,587,043,373 | 1,076 |

[

"hwchase17",

"langchain"

]

| Tools are currently chosen based on a prompt. If you have a few tools this works well but if you have dozens it will not. A possible solution is to optimally embed tool descriptions and have the agent choose tools based on top embeddings. This would allow applications to create highly specialized tools and pass in dozens of them to an agent minimizing errors over an application that adds a few tools which do many things. | Add optional embedding layer to agent tools | https://api.github.com/repos/langchain-ai/langchain/issues/1075/comments | 2 | 2023-02-16T05:25:12Z | 2023-09-18T16:24:41Z | https://github.com/langchain-ai/langchain/issues/1075 | 1,587,042,229 | 1,075 |

[

"hwchase17",

"langchain"

]

| Need to use async calls for the collapse/combine steps | MapReduceChain `acombine` not fully async | https://api.github.com/repos/langchain-ai/langchain/issues/1074/comments | 1 | 2023-02-16T05:24:50Z | 2023-08-24T16:17:55Z | https://github.com/langchain-ai/langchain/issues/1074 | 1,587,041,895 | 1,074 |

[

"hwchase17",

"langchain"

]

| Hi guys,

I'm trying build a map_reduce chain to handle the long document summarization. Per my understanding, a long document will be cut into several parts firstly and then query the summary in map_reduce mode, that really make sense. However seems like OpenAI has a limitation on the query token per minute, is there a way to control the number of parallelization?

`openai.error.RateLimitError: Rate limit reached for default-text-davinci-003 in organization org-xxx on tokens per min. Limit: 150000.000000 / min. Current: 2457600.000000 / min. Contact [email protected] if you continue to have issues. Please add a payment method to your account to increase your rate limit. Visit https://platform.openai.com/account/billing to add a payment method.` | How to control the number of parallel jobs in the MapReduce chain? | https://api.github.com/repos/langchain-ai/langchain/issues/1073/comments | 3 | 2023-02-16T05:12:29Z | 2023-07-17T06:20:58Z | https://github.com/langchain-ai/langchain/issues/1073 | 1,587,030,340 | 1,073 |

[

"hwchase17",

"langchain"

]

| we miss out on tracing the combine docs chain in ChatVectorDB bc we invoke combine_docs directly instead of through run or dunder call (which is where the callback manager is wired up) | Callbacks/tracing not triggering in combine docs chains within ChatVectorDBChain | https://api.github.com/repos/langchain-ai/langchain/issues/1072/comments | 1 | 2023-02-16T04:41:06Z | 2023-08-24T16:18:00Z | https://github.com/langchain-ai/langchain/issues/1072 | 1,587,003,936 | 1,072 |

[

"hwchase17",

"langchain"

]

| - Mac M1

- Conda env on Python 3.9

- langchain==0.0.87

`from langchain import AI21`

`Traceback (most recent call last):`

`File "<stdin>", line 1, in <module>`

`ImportError: cannot import name 'AI21' from 'langchain' (/Users/reletreby/miniforge3/envs/gpt/lib/python3.9/site-packages/langchain/__init__.py)` | ImportError: cannot import name 'AI21' from 'langchain' | https://api.github.com/repos/langchain-ai/langchain/issues/1071/comments | 2 | 2023-02-16T01:09:26Z | 2023-02-16T07:34:01Z | https://github.com/langchain-ai/langchain/issues/1071 | 1,586,829,589 | 1,071 |

[

"hwchase17",

"langchain"

]

| This issue pertains to tasks related to creating a deployment template for deploying an API Gateway + Lambda + langchain backed service to AWS. | Deployment template for AWS | https://api.github.com/repos/langchain-ai/langchain/issues/1067/comments | 10 | 2023-02-15T19:39:11Z | 2023-10-25T20:50:16Z | https://github.com/langchain-ai/langchain/issues/1067 | 1,586,439,909 | 1,067 |

[

"hwchase17",

"langchain"

]

| add support for chat vector db with sources | chatvectordb with sources | https://api.github.com/repos/langchain-ai/langchain/issues/1065/comments | 0 | 2023-02-15T15:59:11Z | 2023-02-16T08:29:49Z | https://github.com/langchain-ai/langchain/issues/1065 | 1,586,106,129 | 1,065 |

[

"hwchase17",

"langchain"

]

| It would be great to get the score output of the LLM (e.g. using Huggingface models) for use cases like NLU. It doesn't look possible with the current LLM and chain classes as it specifically selects the "text" in the output only. I'm resorting to writing my own classes to allow this, but haven't thought much about how that would integrate with the rest of the framework.

Is it something you have considered?

Edit: just to be clear, I'm talking about the logit scores directly from the HF model. The map-rerank example that asks the model to generate scores in the text output doesn't work very well. | Output score in LLMChain | https://api.github.com/repos/langchain-ai/langchain/issues/1063/comments | 3 | 2023-02-15T10:34:25Z | 2023-09-25T16:18:52Z | https://github.com/langchain-ai/langchain/issues/1063 | 1,585,613,662 | 1,063 |

[

"hwchase17",

"langchain"

]

| I'm trying to use map_reduce qa chain together with chat vector and it does not work.

```

question_generator = LLMChain(llm=llm, prompt=CONDENSE_QUESTION_PROMPT)

doc_chain = load_qa_chain(llm, chain_type="map_reduce", verbose=True)

chain = ChatVectorDBChain(

vectorstore=self.main_context.index,

question_generator=question_generator,

combine_docs_chain=doc_chain,

)

print(self.chain({

'question': message,

'chat_history': [],

})['answer'])

```

It keeps throwing `KeyError: {'chat_history'}` when I tried to run the feed in the input. Do you guys have any idea? | ChatVectorDBChain and map_reduce qa chain does not seem to work | https://api.github.com/repos/langchain-ai/langchain/issues/1061/comments | 1 | 2023-02-15T07:50:06Z | 2023-02-16T07:57:14Z | https://github.com/langchain-ai/langchain/issues/1061 | 1,585,379,366 | 1,061 |

[

"hwchase17",

"langchain"

]

| OpenSearch supports approximate vector search powered by Lucene engine, nmslib engine, faiss engine and also bruteforce vector search using painless scripting functions. As OpenSearch is popular search engine, it would be good to have this available as one of the supported vector database

| Add support for OpenSearch Vector database | https://api.github.com/repos/langchain-ai/langchain/issues/1054/comments | 12 | 2023-02-14T22:11:20Z | 2024-03-15T19:10:06Z | https://github.com/langchain-ai/langchain/issues/1054 | 1,584,900,958 | 1,054 |

[

"hwchase17",

"langchain"

]

| Getting a context length exceeded error with `VectorDBQAWithSourcesChain`.

Reproducible example:

```py

from langchain.chains import VectorDBQAWithSourcesChain

from langchain.chains.qa_with_sources import load_qa_with_sources_chain

from langchain.embeddings import OpenAIEmbeddings

from langchain.llms import OpenAI

from langchain.vectorstores import FAISS

texts = ["Star Wars" * 1650, "Star Trek" * 1000]

embeddings = OpenAIEmbeddings() # type: ignore

docsearch = FAISS.from_texts(

texts, embeddings, metadatas=[{"source": f"{i}-pl"} for i in range(len(texts))]

)

qa_chain = load_qa_with_sources_chain(OpenAI(temperature=0), chain_type="stuff")

qa = VectorDBQAWithSourcesChain(

combine_documents_chain=qa_chain,

vectorstore=docsearch,

reduce_k_below_max_tokens=True,

)

print(qa({"question": "What is this"}))

```

Setting the parameter `reduce_k_below_max_tokens=True` does not seem to account for the size of the default prompt template nor the default `max_tokens` value.

| Model's context length exceeded on `VectorDBQAWithSourcesChain` | https://api.github.com/repos/langchain-ai/langchain/issues/1048/comments | 4 | 2023-02-14T12:34:29Z | 2023-09-27T16:14:22Z | https://github.com/langchain-ai/langchain/issues/1048 | 1,584,092,030 | 1,048 |

[

"hwchase17",

"langchain"

]

| Tried out code example from the [docs](https://langchain.readthedocs.io/en/latest/modules/chains/async_chain.html) and ran into this error:

**Code**:

```python

from langchain.llms import OpenAI

from langchain.prompts import PromptTemplate

from langchain.chains import LLMChain

async def async_generate(chain):

resp = await chain.arun(product="toothpaste")

print(resp)

llm = OpenAI(temperature=0.9)

prompt = PromptTemplate(

input_variables=["product"],

template="What is a good name for a company that makes {product}?",

)

chain = LLMChain(llm=llm, prompt=prompt)

await async_generate(chain)

```

**Stacktrace**:

```bash

AttributeError Traceback (most recent call last)

Cell In[3], line 3

1 from llm_bot.lang.chains.temporal_reasoning import temporal_reasoning_with_thoughts_chain

----> 3 await temporal_reasoning_with_thoughts_chain.arun("Hello")

File ~/.cache/pypoetry/virtualenvs/lm-bot-w2c4PdJl-py3.8/lib/python3.8/site-packages/langchain/chains/base.py:247, in Chain.arun(self, *args, **kwargs)

245 if len(args) != 1:

246 raise ValueError("`run` supports only one positional argument.")

--> 247 return (await self.acall(args[0]))[self.output_keys[0]]

249 if kwargs and not args:

250 return (await self.acall(kwargs))[self.output_keys[0]]

File ~/.cache/pypoetry/virtualenvs/lm-bot-w2c4PdJl-py3.8/lib/python3.8/site-packages/langchain/chains/base.py:170, in Chain.acall(self, inputs, return_only_outputs)

168 except (KeyboardInterrupt, Exception) as e:

169 self.callback_manager.on_chain_error(e, verbose=self.verbose)

--> 170 raise e

171 self.callback_manager.on_chain_end(outputs, verbose=self.verbose)

172 return self.prep_outputs(inputs, outputs, return_only_outputs)

File ~/.cache/pypoetry/virtualenvs/lm-bot-w2c4PdJl-py3.8/lib/python3.8/site-packages/langchain/chains/base.py:167, in Chain.acall(self, inputs, return_only_outputs)

161 self.callback_manager.on_chain_start(

162 {"name": self.__class__.__name__},

163 inputs,

164 verbose=self.verbose,

165 )

166 try:

--> 167 outputs = await self._acall(inputs)

168 except (KeyboardInterrupt, Exception) as e:

169 self.callback_manager.on_chain_error(e, verbose=self.verbose)

File ~/.cache/pypoetry/virtualenvs/lm-bot-w2c4PdJl-py3.8/lib/python3.8/site-packages/langchain/chains/llm.py:112, in LLMChain._acall(self, inputs)

111 async def _acall(self, inputs: Dict[str, Any]) -> Dict[str, str]:

--> 112 return (await self.aapply([inputs]))[0]

File ~/.cache/pypoetry/virtualenvs/lm-bot-w2c4PdJl-py3.8/lib/python3.8/site-packages/langchain/chains/llm.py:96, in LLMChain.aapply(self, input_list)

94 async def aapply(self, input_list: List[Dict[str, Any]]) -> List[Dict[str, str]]:

95 """Utilize the LLM generate method for speed gains."""

---> 96 response = await self.agenerate(input_list)

97 return self.create_outputs(response)

File ~/.cache/pypoetry/virtualenvs/lm-bot-w2c4PdJl-py3.8/lib/python3.8/site-packages/langchain/chains/llm.py:65, in LLMChain.agenerate(self, input_list)

63 """Generate LLM result from inputs."""

64 prompts, stop = self.prep_prompts(input_list)

---> 65 response = await self.llm.agenerate(prompts, stop=stop)

66 return response

File ~/.cache/pypoetry/virtualenvs/lm-bot-w2c4PdJl-py3.8/lib/python3.8/site-packages/langchain/llms/base.py:194, in BaseLLM.agenerate(self, prompts, stop)

192 except (KeyboardInterrupt, Exception) as e:

193 self.callback_manager.on_llm_error(e, verbose=self.verbose)

--> 194 raise e

195 self.callback_manager.on_llm_end(new_results, verbose=self.verbose)

196 llm_output = update_cache(

197 existing_prompts, llm_string, missing_prompt_idxs, new_results, prompts

198 )

File ~/.cache/pypoetry/virtualenvs/lm-bot-w2c4PdJl-py3.8/lib/python3.8/site-packages/langchain/llms/base.py:191, in BaseLLM.agenerate(self, prompts, stop)

187 self.callback_manager.on_llm_start(

188 {"name": self.__class__.__name__}, missing_prompts, verbose=self.verbose

189 )

190 try:

--> 191 new_results = await self._agenerate(missing_prompts, stop=stop)

192 except (KeyboardInterrupt, Exception) as e:

193 self.callback_manager.on_llm_error(e, verbose=self.verbose)

File ~/.cache/pypoetry/virtualenvs/lm-bot-w2c4PdJl-py3.8/lib/python3.8/site-packages/langchain/llms/openai.py:235, in BaseOpenAI._agenerate(self, prompts, stop)

232 _keys = {"completion_tokens", "prompt_tokens", "total_tokens"}

233 for _prompts in sub_prompts:

234 # Use OpenAI's async api https://github.com/openai/openai-python#async-api

--> 235 response = await self.acompletion_with_retry(prompt=_prompts, **params)

236 choices.extend(response["choices"])

237 update_token_usage(_keys, response, token_usage)