url (string, length 62-66) | repository_url (string, 1 value) | labels_url (string, length 76-80) | comments_url (string, length 71-75) | events_url (string, length 69-73) | html_url (string, length 50-56) | id (int64, 377M-2.15B) | node_id (string, length 18-32) | number (int64, 1-29.2k) | title (string, length 1-487) | user (dict) | labels (list) | state (string, 2 values) | locked (bool, 2 classes) | assignee (dict) | assignees (list) | comments (list) | created_at (int64, 1.54k-1.71k) | updated_at (int64, 1.54k-1.71k) | closed_at (int64, 1.54k-1.71k, nullable) | author_association (string, 4 values) | active_lock_reason (string, 2 values) | body (string, length 0-234k, nullable) | reactions (dict) | timeline_url (string, length 71-75) | state_reason (string, 3 values) | draft (bool, 2 classes) | pull_request (dict) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/transformers/issues/12444 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/12444/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/12444/comments | https://api.github.com/repos/huggingface/transformers/issues/12444/events | https://github.com/huggingface/transformers/issues/12444 | 933,902,336 | MDU6SXNzdWU5MzM5MDIzMzY= | 12,444 | Cannot load model saved with AutoModelForMaskedLM.from_pretrained if state_dict = True | {

"login": "q-clint",

"id": 77411256,

"node_id": "MDQ6VXNlcjc3NDExMjU2",

"avatar_url": "https://avatars.githubusercontent.com/u/77411256?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/q-clint",

"html_url": "https://github.com/q-clint",

"followers_url": "https://api.github.com/users/q-clint/followers",

"following_url": "https://api.github.com/users/q-clint/following{/other_user}",

"gists_url": "https://api.github.com/users/q-clint/gists{/gist_id}",

"starred_url": "https://api.github.com/users/q-clint/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/q-clint/subscriptions",

"organizations_url": "https://api.github.com/users/q-clint/orgs",

"repos_url": "https://api.github.com/users/q-clint/repos",

"events_url": "https://api.github.com/users/q-clint/events{/privacy}",

"received_events_url": "https://api.github.com/users/q-clint/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Hello! You can find the documentation regarding `from_pretrained` here: https://huggingface.co/transformers/main_classes/model.html#transformers.PreTrainedModel.from_pretrained\r\n\r\nNamely, regarding the state dict:\r\n```\r\nstate_dict (Dict[str, torch.Tensor], optional) –\r\n A state dictionary to use instead of a state dictionary loaded from saved weights file.\r\n\r\n This option can be used if you want to create a model from a pretrained configuration but load your own weights. In this case \r\n though, you should check if using save_pretrained() and from_pretrained() is not a simpler option.\r\n```\r\nIt accepts a state dict, not a boolean.\r\n\r\nWhat are you trying to do by passing it `state_dict=True`?",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored."

] | 1,625 | 1,628 | 1,628 | NONE | null | Hello,

It seems that setting the `state_dict=True` flag in a model's `.save_pretrained` method breaks the load process for `AutoModelForMaskedLM.from_pretrained`

The following code works

```
from transformers import AutoModelForMaskedLM

model = AutoModelForMaskedLM.from_pretrained('distilroberta-base')
model.save_pretrained(
    save_directory = './deleteme',
    save_config = True,
    #state_dict = True,
    push_to_hub = False,
)
model = AutoModelForMaskedLM.from_pretrained('./deleteme')
```

However, the following code does not

```
from transformers import AutoModelForMaskedLM

model = AutoModelForMaskedLM.from_pretrained('distilroberta-base')
model.save_pretrained(
    save_directory = './deleteme',
    save_config = True,
    state_dict = True,
    push_to_hub = False,
)
model = AutoModelForMaskedLM.from_pretrained('./deleteme')
```

with error:

```
---------------------------------------------------------------------------
AttributeError                            Traceback (most recent call last)
<ipython-input-17-3ab36ee4e5d3> in <module>
      8     push_to_hub = False,
      9 )
---> 10 model = AutoModelForMaskedLM.from_pretrained('./deleteme')

~/anaconda3/envs/pytorch/lib/python3.8/site-packages/transformers/models/auto/auto_factory.py in from_pretrained(cls, pretrained_model_name_or_path, *model_args, **kwargs)
    393         if type(config) in cls._model_mapping.keys():
    394             model_class = _get_model_class(config, cls._model_mapping)
--> 395             return model_class.from_pretrained(pretrained_model_name_or_path, *model_args, config=config, **kwargs)
    396         raise ValueError(
    397             f"Unrecognized configuration class {config.__class__} for this kind of AutoModel: {cls.__name__}.\n"

~/anaconda3/envs/pytorch/lib/python3.8/site-packages/transformers/modeling_utils.py in from_pretrained(cls, pretrained_model_name_or_path, *model_args, **kwargs)
   1217             )
   1218
-> 1219             model, missing_keys, unexpected_keys, error_msgs = cls._load_state_dict_into_model(
   1220                 model, state_dict, pretrained_model_name_or_path, _fast_init=_fast_init
   1221             )

~/anaconda3/envs/pytorch/lib/python3.8/site-packages/transformers/modeling_utils.py in _load_state_dict_into_model(cls, model, state_dict, pretrained_model_name_or_path, _fast_init)
   1243         old_keys = []
   1244         new_keys = []
-> 1245         for key in state_dict.keys():
   1246             new_key = None
   1247             if "gamma" in key:

AttributeError: 'bool' object has no attribute 'keys'
```

Environment:

```
>>> transformers.__version__
'4.8.2'
>>> torch.__version__
'1.8.0+cu111'
```

Fixes tried (but same error):

- tried with transformers 4.8.1

- tried with absolute paths (rather than relative)

- tried also saving configuration with:

```
from transformers import AutoConfig

config = AutoConfig.from_pretrained('distilroberta-base')
config.save_pretrained('./deleteme')
``` | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/12444/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/12444/timeline | completed | null | null |
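The failure reported in issue 12444 can be illustrated without the library. The sketch below is not the transformers implementation: `save_weights`, `load_weights`, and `checked_state_dict` are hypothetical stand-ins, and the use of `pickle` is an assumption made only to keep the example self-contained. It shows the apparent mechanism: when a boolean is passed where a state dictionary is expected, the boolean itself is written to disk, and the later load fails as soon as it iterates over `.keys()`.

```python
import io
import pickle
from collections.abc import Mapping


def save_weights(buffer, model_weights, state_dict=None):
    # Hypothetical stand-in for a save step: an explicit `state_dict`
    # replaces the model's own weights, whatever its type.
    pickle.dump(model_weights if state_dict is None else state_dict, buffer)


def load_weights(buffer):
    # Hypothetical stand-in for a load step: it assumes a mapping and
    # walks its keys, as the loop in the traceback does.
    buffer.seek(0)
    state_dict = pickle.load(buffer)
    return [key for key in state_dict.keys()]


def checked_state_dict(state_dict):
    # Illustrative guard: reject anything that is neither None nor a mapping.
    if state_dict is not None and not isinstance(state_dict, Mapping):
        raise TypeError(
            f"state_dict must be a mapping, got {type(state_dict).__name__}"
        )
    return state_dict


weights = {"encoder.weight": [0.1, 0.2], "encoder.bias": [0.0]}

good = io.BytesIO()
save_weights(good, weights)  # state_dict omitted: the weights are written
loaded_keys = load_weights(good)

bad = io.BytesIO()
save_weights(bad, weights, state_dict=True)  # the boolean itself is written
try:
    load_weights(bad)
    load_error = None
except AttributeError as exc:
    load_error = str(exc)

try:
    checked_state_dict(True)
    guard_error = None
except TypeError as exc:
    guard_error = str(exc)

print(loaded_keys)
print(load_error)
print(guard_error)
```

A guard such as `checked_state_dict` at save time would surface the mistake where it is made rather than at the next load. With the real library, the simpler route is to omit `state_dict` or pass an actual dictionary of tensors, as the maintainer's reply indicates.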

https://api.github.com/repos/huggingface/transformers/issues/12443 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/12443/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/12443/comments | https://api.github.com/repos/huggingface/transformers/issues/12443/events | https://github.com/huggingface/transformers/issues/12443 | 933,889,837 | MDU6SXNzdWU5MzM4ODk4Mzc= | 12,443 | [Wav2Vec2] Better names for internal classes | {

"login": "patrickvonplaten",

"id": 23423619,

"node_id": "MDQ6VXNlcjIzNDIzNjE5",

"avatar_url": "https://avatars.githubusercontent.com/u/23423619?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/patrickvonplaten",

"html_url": "https://github.com/patrickvonplaten",

"followers_url": "https://api.github.com/users/patrickvonplaten/followers",

"following_url": "https://api.github.com/users/patrickvonplaten/following{/other_user}",

"gists_url": "https://api.github.com/users/patrickvonplaten/gists{/gist_id}",

"starred_url": "https://api.github.com/users/patrickvonplaten/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/patrickvonplaten/subscriptions",

"organizations_url": "https://api.github.com/users/patrickvonplaten/orgs",

"repos_url": "https://api.github.com/users/patrickvonplaten/repos",

"events_url": "https://api.github.com/users/patrickvonplaten/events{/privacy}",

"received_events_url": "https://api.github.com/users/patrickvonplaten/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 2796628563,

"node_id": "MDU6TGFiZWwyNzk2NjI4NTYz",

"url": "https://api.github.com/repos/huggingface/transformers/labels/WIP",

"name": "WIP",

"color": "234C99",

"default": false,

"description": "Label your PR/Issue with WIP for some long outstanding Issues/PRs that are work in progress"

}

] | open | false | null | [] | [

"This also concerns a badly chosen duplicate of `Wav2Vec2FeatureExtractor` as part of `modeling_wav2vec2.py` since it's also the \"FeatureExtractor\" in `feature_extraction_wav2Vec2.py` -> should be corrected as well.",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored."

] | 1,625 | 1,629 | null | MEMBER | null | Wav2Vec2's classes have too many names, *e.g.*: FlaxWav2Vec2EncoderLayerStableLayerNormCollection.

We should make those names easier (reminder for myself @patrickvonplaten to do this) | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/12443/reactions",

"total_count": 2,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 1,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/12443/timeline | null | null | null |

https://api.github.com/repos/huggingface/transformers/issues/12442 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/12442/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/12442/comments | https://api.github.com/repos/huggingface/transformers/issues/12442/events | https://github.com/huggingface/transformers/pull/12442 | 933,782,775 | MDExOlB1bGxSZXF1ZXN0NjgwOTM5NjI3 | 12,442 | Add to talks section | {

"login": "suzana-ilic",

"id": 27798583,

"node_id": "MDQ6VXNlcjI3Nzk4NTgz",

"avatar_url": "https://avatars.githubusercontent.com/u/27798583?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/suzana-ilic",

"html_url": "https://github.com/suzana-ilic",

"followers_url": "https://api.github.com/users/suzana-ilic/followers",

"following_url": "https://api.github.com/users/suzana-ilic/following{/other_user}",

"gists_url": "https://api.github.com/users/suzana-ilic/gists{/gist_id}",

"starred_url": "https://api.github.com/users/suzana-ilic/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/suzana-ilic/subscriptions",

"organizations_url": "https://api.github.com/users/suzana-ilic/orgs",

"repos_url": "https://api.github.com/users/suzana-ilic/repos",

"events_url": "https://api.github.com/users/suzana-ilic/events{/privacy}",

"received_events_url": "https://api.github.com/users/suzana-ilic/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [] | 1,625 | 1,625 | 1,625 | CONTRIBUTOR | null | Added DeepMind details, switched time slots Ben Wang and DeepMind | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/12442/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/12442/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/12442",

"html_url": "https://github.com/huggingface/transformers/pull/12442",

"diff_url": "https://github.com/huggingface/transformers/pull/12442.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/12442.patch",

"merged_at": 1625065083000

} |

https://api.github.com/repos/huggingface/transformers/issues/12441 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/12441/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/12441/comments | https://api.github.com/repos/huggingface/transformers/issues/12441/events | https://github.com/huggingface/transformers/pull/12441 | 933,695,474 | MDExOlB1bGxSZXF1ZXN0NjgwODY0OTg5 | 12,441 | Add template for adding flax models | {

"login": "cccntu",

"id": 31893406,

"node_id": "MDQ6VXNlcjMxODkzNDA2",

"avatar_url": "https://avatars.githubusercontent.com/u/31893406?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/cccntu",

"html_url": "https://github.com/cccntu",

"followers_url": "https://api.github.com/users/cccntu/followers",

"following_url": "https://api.github.com/users/cccntu/following{/other_user}",

"gists_url": "https://api.github.com/users/cccntu/gists{/gist_id}",

"starred_url": "https://api.github.com/users/cccntu/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/cccntu/subscriptions",

"organizations_url": "https://api.github.com/users/cccntu/orgs",

"repos_url": "https://api.github.com/users/cccntu/repos",

"events_url": "https://api.github.com/users/cccntu/events{/privacy}",

"received_events_url": "https://api.github.com/users/cccntu/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"It's mostly done. I can successfully create a new model with flax. See #12454\r\nTODO:\r\n* Finish modeling_flax for encoder-decoder architecture\r\n* Tests are mapped from tf to np, need fixes.\r\n* Refactor cookiecutter workflow, make flax blend in with pytorch, tensorflow.\r\n* ??",

"I think only the test for template is failing now, can someone take a quick look? Thanks!\r\n@patil-suraj @patrickvonplaten @LysandreJik ",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored.",

"Maybe I should rebase and open another PR?",

"Sorry for being so late on this one! I'll take a look tomorrow!",

"yaaaay - finally all the tests are green! @cccntu - amazing work. I've done a lot of small fixes that are quite time-consuming and tedious to make everything pass, but the main work was all done by you - thanks a mille!",

"Thank you very much @patrickvonplaten! 🤗\r\nThis is my biggest open source contribution so far, so I really appreciate your help fixing so many things and writing the tests! (I merely copied the tf version and did some simple substitution.)\r\n\r\nI am curious: how do you run the template tests? It was confusing to run them locally because it would overwrite the tracked files and leave unused files, making the work tree messy. I guess `commit -> test -> clean, reset --hard` would work, but I always fear that I would accidentally delete the wrong thing."

] | 1,625 | 1,630 | 1,630 | CONTRIBUTOR | null | # What does this PR do?

Fixes #12440

## From Patrick

@LysandreJik @sgugger Previously both the PT and TF encoder-decoder templates tests weren't run because the test name didn't match the "*template*" regex - I've fixed that here as pointed out in the comment below.

@cccntu added both FlaxBERT and FlaxBART templates and we've added two tests to make sure those work as expected.

The PR should be good for review now :-) | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/12441/reactions",

"total_count": 5,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 5,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/12441/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/12441",

"html_url": "https://github.com/huggingface/transformers/pull/12441",

"diff_url": "https://github.com/huggingface/transformers/pull/12441.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/12441.patch",

"merged_at": 1630482544000

} |

https://api.github.com/repos/huggingface/transformers/issues/12440 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/12440/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/12440/comments | https://api.github.com/repos/huggingface/transformers/issues/12440/events | https://github.com/huggingface/transformers/issues/12440 | 933,666,639 | MDU6SXNzdWU5MzM2NjY2Mzk= | 12,440 | cookiecutter template for adding flax model | {

"login": "cccntu",

"id": 31893406,

"node_id": "MDQ6VXNlcjMxODkzNDA2",

"avatar_url": "https://avatars.githubusercontent.com/u/31893406?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/cccntu",

"html_url": "https://github.com/cccntu",

"followers_url": "https://api.github.com/users/cccntu/followers",

"following_url": "https://api.github.com/users/cccntu/following{/other_user}",

"gists_url": "https://api.github.com/users/cccntu/gists{/gist_id}",

"starred_url": "https://api.github.com/users/cccntu/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/cccntu/subscriptions",

"organizations_url": "https://api.github.com/users/cccntu/orgs",

"repos_url": "https://api.github.com/users/cccntu/repos",

"events_url": "https://api.github.com/users/cccntu/events{/privacy}",

"received_events_url": "https://api.github.com/users/cccntu/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored."

] | 1,625 | 1,630 | 1,630 | CONTRIBUTOR | null | # 🚀 Feature request

Add cookiecutter template for adding flax model.

## Motivation

There is no cookiecutter template for adding flax model.

## Your contribution

I am trying to add a flax model (#12411), I think it's a good opportunity to create a template at the same time. Can I work on this? Any suggestions?

@patil-suraj @patrickvonplaten

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/12440/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/12440/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/12439 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/12439/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/12439/comments | https://api.github.com/repos/huggingface/transformers/issues/12439/events | https://github.com/huggingface/transformers/issues/12439 | 933,657,370 | MDU6SXNzdWU5MzM2NTczNzA= | 12,439 | Expand text-generation pipeline support for other causal models e.g., BigBirdForCausalLM | {

"login": "AliOskooeiTR",

"id": 60223746,

"node_id": "MDQ6VXNlcjYwMjIzNzQ2",

"avatar_url": "https://avatars.githubusercontent.com/u/60223746?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/AliOskooeiTR",

"html_url": "https://github.com/AliOskooeiTR",

"followers_url": "https://api.github.com/users/AliOskooeiTR/followers",

"following_url": "https://api.github.com/users/AliOskooeiTR/following{/other_user}",

"gists_url": "https://api.github.com/users/AliOskooeiTR/gists{/gist_id}",

"starred_url": "https://api.github.com/users/AliOskooeiTR/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/AliOskooeiTR/subscriptions",

"organizations_url": "https://api.github.com/users/AliOskooeiTR/orgs",

"repos_url": "https://api.github.com/users/AliOskooeiTR/repos",

"events_url": "https://api.github.com/users/AliOskooeiTR/events{/privacy}",

"received_events_url": "https://api.github.com/users/AliOskooeiTR/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 2648621985,

"node_id": "MDU6TGFiZWwyNjQ4NjIxOTg1",

"url": "https://api.github.com/repos/huggingface/transformers/labels/Feature%20request",

"name": "Feature request",

"color": "FBCA04",

"default": false,

"description": "Request for a new feature"

}

] | open | false | null | [] | [] | 1,625 | 1,625 | null | NONE | null | # 🚀 Feature request

Tried using the text generation pipeline (TextGenerationPipeline) with BigBirdForCausalLM but seems like the pipeline currently only supports a limited number of models. Is there a reason for this? Is there a workaround short of implementing the pipeline myself? Thank you.

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/12439/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/12439/timeline | null | null | null |

https://api.github.com/repos/huggingface/transformers/issues/12438 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/12438/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/12438/comments | https://api.github.com/repos/huggingface/transformers/issues/12438/events | https://github.com/huggingface/transformers/issues/12438 | 933,649,789 | MDU6SXNzdWU5MzM2NDk3ODk= | 12,438 | IndexError: index out of bound, MLM+XLA (pre-training) | {

"login": "neel04",

"id": 11617870,

"node_id": "MDQ6VXNlcjExNjE3ODcw",

"avatar_url": "https://avatars.githubusercontent.com/u/11617870?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/neel04",

"html_url": "https://github.com/neel04",

"followers_url": "https://api.github.com/users/neel04/followers",

"following_url": "https://api.github.com/users/neel04/following{/other_user}",

"gists_url": "https://api.github.com/users/neel04/gists{/gist_id}",

"starred_url": "https://api.github.com/users/neel04/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/neel04/subscriptions",

"organizations_url": "https://api.github.com/users/neel04/orgs",

"repos_url": "https://api.github.com/users/neel04/repos",

"events_url": "https://api.github.com/users/neel04/events{/privacy}",

"received_events_url": "https://api.github.com/users/neel04/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Maybe @lhoestq has an idea for the error in `datasets`",

"@lhoestq Any possible leads as to who can solve this bug?",

"This is the full traceback BTW, If it may help things going. I am also willing to create a reproducible Colab if you guys want:-\r\n````js\r\n06/28/2021 17:23:13 - WARNING - run_mlm - Process rank: -1, device: xla:0, n_gpu: 0distributed training: False, 16-bits training: False\r\n06/28/2021 17:23:13 - WARNING - datasets.builder - Using custom data configuration default-e8bc7b301aa1b353\r\n06/28/2021 17:23:13 - WARNING - datasets.builder - Reusing dataset text (/root/.cache/huggingface/datasets/text/default-e8bc7b301aa1b353/0.0.0/e16f44aa1b321ece1f87b07977cc5d70be93d69b20486d6dacd62e12cf25c9a5)\r\nDownloading and preparing dataset text/default (download: Unknown size, generated: Unknown size, post-processed: Unknown size, total: Unknown size) to /root/.cache/huggingface/datasets/text/default-e8bc7b301aa1b353/0.0.0/e16f44aa1b321ece1f87b07977cc5d70be93d69b20486d6dacd62e12cf25c9a5...\r\nDataset text downloaded and prepared to /root/.cache/huggingface/datasets/text/default-e8bc7b301aa1b353/0.0.0/e16f44aa1b321ece1f87b07977cc5d70be93d69b20486d6dacd62e12cf25c9a5. 
Subsequent calls will reuse this data.\r\n\r\nWARNING:root:TPU has started up successfully with version pytorch-1.9\r\nWARNING:root:TPU has started up successfully with version pytorch-1.9\r\nWARNING:run_mlm:Process rank: -1, device: xla:1, n_gpu: 0distributed training: False, 16-bits training: False\r\nINFO:run_mlm:Training/evaluation parameters TrainingArguments(\r\n_n_gpu=0,\r\nadafactor=False,\r\nadam_beta1=0.9,\r\nadam_beta2=0.98,\r\nadam_epsilon=1e-08,\r\ndataloader_drop_last=False,\r\ndataloader_num_workers=0,\r\ndataloader_pin_memory=True,\r\nddp_find_unused_parameters=None,\r\ndebug=[],\r\ndeepspeed=None,\r\ndisable_tqdm=False,\r\ndo_eval=True,\r\ndo_predict=False,\r\ndo_train=True,\r\neval_accumulation_steps=10,\r\neval_steps=50,\r\nevaluation_strategy=IntervalStrategy.STEPS,\r\nfp16=False,\r\nfp16_backend=auto,\r\nfp16_full_eval=False,\r\nfp16_opt_level=O1,\r\ngradient_accumulation_steps=1,\r\ngreater_is_better=True,\r\ngroup_by_length=False,\r\nignore_data_skip=False,\r\nlabel_names=None,\r\nlabel_smoothing_factor=0.0,\r\nlearning_rate=0.0003,\r\nlength_column_name=length,\r\nload_best_model_at_end=True,\r\nlocal_rank=-1,\r\nlog_level=-1,\r\nlog_level_replica=-1,\r\nlog_on_each_node=True,\r\nlogging_dir=./logs,\r\nlogging_first_step=False,\r\nlogging_steps=50,\r\nlogging_strategy=IntervalStrategy.STEPS,\r\nlr_scheduler_type=SchedulerType.LINEAR,\r\nmax_grad_norm=1.0,\r\nmax_steps=-1,\r\nmetric_for_best_model=validation,\r\nmp_parameters=,\r\nno_cuda=False,\r\nnum_train_epochs=5.0,\r\noutput_dir=./results,\r\noverwrite_output_dir=True,\r\npast_index=-1,\r\nper_device_eval_batch_size=1,\r\nper_device_train_batch_size=1,\r\nprediction_loss_only=False,\r\npush_to_hub=False,\r\npush_to_hub_model_id=results,\r\npush_to_hub_organization=None,\r\npush_to_hub_token=None,\r\nremove_unused_columns=True,\r\nreport_to=['tensorboard'],\r\nresume_from_checkpoint=None,\r\nrun_name=./results,\r\nsave_steps=500,\r\nsave_strategy=IntervalStrategy.EPOCH,\r\nsave_total_limit
=None,\r\nseed=42,\r\nsharded_ddp=[],\r\nskip_memory_metrics=True,\r\ntpu_metrics_debug=False,\r\ntpu_num_cores=8,\r\nuse_legacy_prediction_loop=False,\r\nwarmup_ratio=0.0,\r\nwarmup_steps=1000,\r\nweight_decay=0.01,\r\n)\r\nWARNING:datasets.builder:Using custom data configuration default-e8bc7b301aa1b353\r\nINFO:datasets.utils.filelock:Lock 139795201622480 acquired on /root/.cache/huggingface/datasets/_root_.cache_huggingface_datasets_text_default-e8bc7b301aa1b353_0.0.0_e16f44aa1b321ece1f87b07977cc5d70be93d69b20486d6dacd62e12cf25c9a5.lock\r\nINFO:datasets.utils.filelock:Lock 139795201622480 released on /root/.cache/huggingface/datasets/_root_.cache_huggingface_datasets_text_default-e8bc7b301aa1b353_0.0.0_e16f44aa1b321ece1f87b07977cc5d70be93d69b20486d6dacd62e12cf25c9a5.lock\r\nINFO:datasets.utils.filelock:Lock 139795201622864 acquired on /root/.cache/huggingface/datasets/_root_.cache_huggingface_datasets_text_default-e8bc7b301aa1b353_0.0.0_e16f44aa1b321ece1f87b07977cc5d70be93d69b20486d6dacd62e12cf25c9a5.lock\r\nINFO:datasets.builder:Generating dataset text (/root/.cache/huggingface/datasets/text/default-e8bc7b301aa1b353/0.0.0/e16f44aa1b321ece1f87b07977cc5d70be93d69b20486d6dacd62e12cf25c9a5)\r\n100%|██████████| 2/2 [00:00<00:00, 2330.17it/s]\r\nINFO:datasets.utils.download_manager:Downloading took 0.0 min\r\nINFO:datasets.utils.download_manager:Checksum Computation took 0.0 min\r\n100%|██████████| 2/2 [00:00<00:00, 920.91it/s]\r\nINFO:datasets.utils.info_utils:Unable to verify checksums.\r\nINFO:datasets.builder:Generating split train\r\nINFO:datasets.arrow_writer:Done writing 8 examples in 172 bytes /root/.cache/huggingface/datasets/text/default-e8bc7b301aa1b353/0.0.0/e16f44aa1b321ece1f87b07977cc5d70be93d69b20486d6dacd62e12cf25c9a5.incomplete/text-train.arrow.\r\nINFO:datasets.builder:Generating split validation\r\nINFO:datasets.arrow_writer:Done writing 8 examples in 172 bytes 
/root/.cache/huggingface/datasets/text/default-e8bc7b301aa1b353/0.0.0/e16f44aa1b321ece1f87b07977cc5d70be93d69b20486d6dacd62e12cf25c9a5.incomplete/text-validation.arrow.\r\nINFO:datasets.utils.info_utils:Unable to verify splits sizes.\r\nINFO:datasets.utils.filelock:Lock 139795201625808 acquired on /root/.cache/huggingface/datasets/_root_.cache_huggingface_datasets_text_default-e8bc7b301aa1b353_0.0.0_e16f44aa1b321ece1f87b07977cc5d70be93d69b20486d6dacd62e12cf25c9a5.incomplete.lock\r\nINFO:datasets.utils.filelock:Lock 139795201625808 released on /root/.cache/huggingface/datasets/_root_.cache_huggingface_datasets_text_default-e8bc7b301aa1b353_0.0.0_e16f44aa1b321ece1f87b07977cc5d70be93d69b20486d6dacd62e12cf25c9a5.incomplete.lock\r\nINFO:datasets.utils.filelock:Lock 139795201622864 released on /root/.cache/huggingface/datasets/_root_.cache_huggingface_datasets_text_default-e8bc7b301aa1b353_0.0.0_e16f44aa1b321ece1f87b07977cc5d70be93d69b20486d6dacd62e12cf25c9a5.lock\r\nINFO:datasets.builder:Constructing Dataset for split train, validation, from /root/.cache/huggingface/datasets/text/default-e8bc7b301aa1b353/0.0.0/e16f44aa1b321ece1f87b07977cc5d70be93d69b20486d6dacd62e12cf25c9a5\r\n100%|██████████| 2/2 [00:00<00:00, 458.74it/s]\r\n[INFO|configuration_utils.py:528] 2021-06-28 17:23:13,619 >> loading configuration file ./config/config.json\r\n[INFO|configuration_utils.py:566] 2021-06-28 17:23:13,619 >> Model config BigBirdConfig {\r\n \"architectures\": [\r\n \"BigBirdForMaskedLM\"\r\n ],\r\n \"attention_probs_dropout_prob\": 0.1,\r\n \"attention_type\": \"block_sparse\",\r\n \"block_size\": 64,\r\n \"bos_token_id\": 1,\r\n \"eos_token_id\": 2,\r\n \"gradient_checkpointing\": false,\r\n \"hidden_act\": \"gelu_new\",\r\n \"hidden_dropout_prob\": 0.1,\r\n \"hidden_size\": 768,\r\n \"initializer_range\": 0.02,\r\n \"intermediate_size\": 3072,\r\n \"layer_norm_eps\": 1e-12,\r\n \"max_position_embeddings\": 16000,\r\n \"model_type\": \"big_bird\",\r\n \"num_attention_heads\": 
4,\r\n \"num_hidden_layers\": 4,\r\n \"num_random_blocks\": 3,\r\n \"pad_token_id\": 0,\r\n \"rescale_embeddings\": false,\r\n \"sep_token_id\": 66,\r\n \"transformers_version\": \"4.9.0.dev0\",\r\n \"type_vocab_size\": 2,\r\n \"use_bias\": true,\r\n \"use_cache\": true,\r\n \"vocab_size\": 40000\r\n}\r\n\r\n[INFO|tokenization_utils_base.py:1651] 2021-06-28 17:23:13,620 >> Didn't find file ./tokenizer/spiece.model. We won't load it.\r\n[INFO|tokenization_utils_base.py:1651] 2021-06-28 17:23:13,620 >> Didn't find file ./tokenizer/added_tokens.json. We won't load it.\r\n[INFO|tokenization_utils_base.py:1715] 2021-06-28 17:23:13,620 >> loading file None\r\n[INFO|tokenization_utils_base.py:1715] 2021-06-28 17:23:13,620 >> loading file ./tokenizer/tokenizer.json\r\n[INFO|tokenization_utils_base.py:1715] 2021-06-28 17:23:13,620 >> loading file None\r\n[INFO|tokenization_utils_base.py:1715] 2021-06-28 17:23:13,620 >> loading file ./tokenizer/special_tokens_map.json\r\n[INFO|tokenization_utils_base.py:1715] 2021-06-28 17:23:13,620 >> loading file ./tokenizer/tokenizer_config.json\r\nINFO:run_mlm:Training new model from scratch\r\nException in device=TPU:6: Default process group has not been initialized, please make sure to call init_process_group.\r\nTraceback (most recent call last):\r\n File \"/usr/local/lib/python3.7/dist-packages/torch_xla/distributed/xla_multiprocessing.py\", line 329, in _mp_start_fn\r\n _start_fn(index, pf_cfg, fn, args)\r\n File \"/usr/local/lib/python3.7/dist-packages/torch_xla/distributed/xla_multiprocessing.py\", line 323, in _start_fn\r\n fn(gindex, *args)\r\n File \"/content/run_mlm.py\", line 529, in _mp_fn\r\n main()\r\n File \"/content/run_mlm.py\", line 386, in main\r\n with training_args.main_process_first(desc=\"dataset map tokenization\"):\r\n File \"/usr/lib/python3.7/contextlib.py\", line 112, in __enter__\r\n return next(self.gen)\r\n File \"/usr/local/lib/python3.7/dist-packages/transformers/training_args.py\", line 1005, in 
main_process_first\r\n torch.distributed.barrier()\r\n File \"/usr/local/lib/python3.7/dist-packages/torch/distributed/distributed_c10d.py\", line 2523, in barrier\r\n default_pg = _get_default_group()\r\n File \"/usr/local/lib/python3.7/dist-packages/torch/distributed/distributed_c10d.py\", line 358, in _get_default_group\r\n raise RuntimeError(\"Default process group has not been initialized, \"\r\nRuntimeError: Default process group has not been initialized, please make sure to call init_process_group.\r\nINFO:datasets.arrow_writer:Done writing 1 indices in 8 bytes .\r\nINFO:datasets.arrow_writer:Done writing 1 indices in 8 bytes .\r\nINFO:datasets.arrow_writer:Done writing 1 indices in 8 bytes .\r\nINFO:datasets.arrow_writer:Done writing 1 indices in 8 bytes .\r\nINFO:datasets.arrow_writer:Done writing 1 indices in 8 bytes .\r\nINFO:datasets.arrow_writer:Done writing 1 indices in 8 bytes .\r\nINFO:datasets.arrow_writer:Done writing 1 indices in 8 bytes .\r\nINFO:datasets.arrow_writer:Done writing 1 indices in 8 bytes .\r\nINFO:datasets.arrow_writer:Done writing 0 indices in 0 bytes .\r\nException in device=TPU:0: Default process group has not been initialized, please make sure to call init_process_group.\r\nTraceback (most recent call last):\r\n File \"/usr/local/lib/python3.7/dist-packages/transformers/training_args.py\", line 1006, in main_process_first\r\n yield\r\n File \"/content/run_mlm.py\", line 393, in main\r\n desc=\"Running tokenizer on dataset line_by_line\",\r\n File \"/usr/local/lib/python3.7/dist-packages/datasets/dataset_dict.py\", line 489, in map\r\n for k, dataset in self.items()\r\n File \"/usr/local/lib/python3.7/dist-packages/datasets/dataset_dict.py\", line 489, in <dictcomp>\r\n for k, dataset in self.items()\r\n File \"/usr/local/lib/python3.7/dist-packages/datasets/arrow_dataset.py\", line 1664, in map\r\n for rank in range(num_proc)\r\n File \"/usr/local/lib/python3.7/dist-packages/datasets/arrow_dataset.py\", line 1664, in 
<listcomp>\r\n for rank in range(num_proc)\r\n File \"/usr/local/lib/python3.7/dist-packages/datasets/arrow_dataset.py\", line 2664, in shard\r\n writer_batch_size=writer_batch_size,\r\n File \"/usr/local/lib/python3.7/dist-packages/datasets/arrow_dataset.py\", line 186, in wrapper\r\n out: Union[\"Dataset\", \"DatasetDict\"] = func(self, *args, **kwargs)\r\n File \"/usr/local/lib/python3.7/dist-packages/datasets/fingerprint.py\", line 397, in wrapper\r\n out = func(self, *args, **kwargs)\r\n File \"/usr/local/lib/python3.7/dist-packages/datasets/arrow_dataset.py\", line 2254, in select\r\n return self._new_dataset_with_indices(indices_buffer=buf_writer.getvalue(), fingerprint=new_fingerprint)\r\n File \"/usr/local/lib/python3.7/dist-packages/datasets/arrow_dataset.py\", line 2170, in _new_dataset_with_indices\r\n fingerprint=fingerprint,\r\n File \"/usr/local/lib/python3.7/dist-packages/datasets/arrow_dataset.py\", line 297, in __init__\r\n self._indices.column(0)[0].type\r\n File \"pyarrow/table.pxi\", line 162, in pyarrow.lib.ChunkedArray.__getitem__\r\n File \"pyarrow/array.pxi\", line 549, in pyarrow.lib._normalize_index\r\nIndexError: index out of bounds\r\n\r\nDuring handling of the above exception, another exception occurred:\r\n\r\nTraceback (most recent call last):\r\n File \"/usr/local/lib/python3.7/dist-packages/torch_xla/distributed/xla_multiprocessing.py\", line 329, in _mp_start_fn\r\n _start_fn(index, pf_cfg, fn, args)\r\n File \"/usr/local/lib/python3.7/dist-packages/torch_xla/distributed/xla_multiprocessing.py\", line 323, in _start_fn\r\n fn(gindex, *args)\r\n File \"/content/run_mlm.py\", line 529, in _mp_fn\r\n main()\r\n File \"/content/run_mlm.py\", line 393, in main\r\n desc=\"Running tokenizer on dataset line_by_line\",\r\n File \"/usr/lib/python3.7/contextlib.py\", line 130, in __exit__\r\n self.gen.throw(type, value, traceback)\r\n File \"/usr/local/lib/python3.7/dist-packages/transformers/training_args.py\", line 1011, in 
main_process_first\r\n torch.distributed.barrier()\r\n File \"/usr/local/lib/python3.7/dist-packages/torch/distributed/distributed_c10d.py\", line 2523, in barrier\r\n default_pg = _get_default_group()\r\n File \"/usr/local/lib/python3.7/dist-packages/torch/distributed/distributed_c10d.py\", line 358, in _get_default_group\r\n raise RuntimeError(\"Default process group has not been initialized, \"\r\nRuntimeError: Default process group has not been initialized, please make sure to call init_process_group.\r\nTraceback (most recent call last):\r\n File \"xla_spawn.py\", line 85, in <module>\r\n main()\r\n File \"xla_spawn.py\", line 81, in main\r\n xmp.spawn(mod._mp_fn, args=(), nprocs=args.num_cores)\r\n File \"/usr/local/lib/python3.7/dist-packages/torch_xla/distributed/xla_multiprocessing.py\", line 394, in spawn\r\n start_method=start_method)\r\n File \"/usr/local/lib/python3.7/dist-packages/torch/multiprocessing/spawn.py\", line 188, in start_processes\r\n while not context.join():\r\n File \"/usr/local/lib/python3.7/dist-packages/torch/multiprocessing/spawn.py\", line 144, in join\r\n exit_code=exitcode\r\ntorch.multiprocessing.spawn.ProcessExitedException: process 6 terminated with exit code 17\r\n```",

"Hi !\r\nThis might be because `num_proc` is set to a value higher than the size of the dataset (so you end up with an empty dataset in one process).\r\nThis has recently been solved by this PR https://github.com/huggingface/datasets/pull/2566. There will be a new release of `datasets` today to make this fix available. In the meantime, you can try using a bigger dataset or reduce the number of data processing workers.",

"Hmmm...my dataset is about 25k sequences, which I cut down to 15k to save memory :thinking: so the `num_proc` shouldn't pose any issue. Right now, following up on your suggestion I ve set it to the default.\r\n\r\nAnyways, following up with the suggestion made by @LysandreJik, it seems that there might be some inconsistency while creating the dataset - putting it at a `max_length` of `512` and a few other flags for grad accumulation seems that it can train properly. \r\n\r\n> Could you try this out for me: set the max_seq_length value to something low, like 512 or 256. Does it still crash then?\r\n\r\nFor such lower values, it definitely doesn't crash which means you might be right. I would look to double-check my dataset generation process, but it still irks me why I can't see `max_seq_length` in the accepted TrainingArguments. Also, even if there aren't enough tokens to generate the require `16k` limit, why doesn't `pad_to_max_length` flag act here in this case, and pad till the max length?",

"In such case, should I crop long sequences and pad smaller sequences manually - or is this supposed to be done automatically by the dataset processing part of the script?",

 it still irks me">
"> it still irks me why I can't see max_seq_length in the accepted TrainingArguments.\r\n\r\n`max_seq_length` isn't a `TrainingArguments`, it's a `DataTrainingArguments`. The difference is that the former is used by the `Trainer`, while the latter is only used by the script to do pre/post-processing, and is [not passed to the `Trainer`](https://github.com/huggingface/transformers/blob/master/examples/pytorch/language-modeling/run_mlm.py#L470).\r\n\r\n> why doesn't pad_to_max_length flag act here in this case, and pad till the max length?\r\n\r\nI'm thinking the issue happens before the `pad_to_max_length` flag is consumed. I can reproduce with the following:\r\n\r\n```bash\r\necho \"This is a random sentence\" > small_file.txt\r\npython ~/transformers/examples/pytorch/language-modeling/run_mlm.py \\\r\n --output_dir=output_dir \\\r\n --model_name_or_path=google/bigbird-roberta-base \\\r\n --train_file=small_file.txt \\\r\n --do_train\r\n```\r\n\r\nThe error comes from the dataset map that is calling the [`group_texts`](https://github.com/huggingface/transformers/blob/master/examples/pytorch/language-modeling/run_mlm.py#L414-L426) method. This method tries to put all of the tokenized examples in the `result` dictionary, but drops the small remainder. 
As we don't have enough data to complete a single sequence, then this method returns an empty result:\r\n```\r\n{'attention_mask': [], 'input_ids': [], 'special_tokens_mask': []}\r\n```\r\n\r\n@sgugger can chime in if my approach is wrong, but the following modifications to the `group_texts` method seems to do the trick:\r\n\r\n```diff\r\n def group_texts(examples):\r\n # Concatenate all texts.\r\n concatenated_examples = {k: sum(examples[k], []) for k in examples.keys()}\r\n total_length = len(concatenated_examples[list(examples.keys())[0]])\r\n # We drop the small remainder, we could add padding if the model supported it instead of this drop, you can\r\n # customize this part to your needs.\r\n- total_length = (total_length // max_seq_length) * max_seq_length\r\n+ truncated_total_length = (total_length // max_seq_length) * max_seq_length\r\n # Split by chunks of max_len.\r\n- result = {\r\n- k: [t[i : i + max_seq_length] for i in range(0, total_length, max_seq_length)]\r\n- for k, t in concatenated_examples.items()\r\n- }\r\n+ if total_length == 0:\r\n+ result = {\r\n+ k: [t[i : i + max_seq_length] for i in range(0, truncated_total_length, max_seq_length)]\r\n+ for k, t in concatenated_examples.items()\r\n+ }\r\n+ else:\r\n+ result = {\r\n+ k: [t[i: i + max_seq_length] for i in range(0, total_length, max_seq_length)]\r\n+ for k, t in concatenated_examples.items()\r\n+ }\r\n return result\r\n``` ",

"That clears up a lot of things @LysandreJik! Thanx a ton :rocket: :cake: :1st_place_medal:\r\n\r\n~~Just a minor peek, When running the scripts it apparently doesn't log anything to the Colab's cell output. tried using different logging levels and setting to defaults to no avail~~ (no bother, simply bash piped it to a file to save time and `tail -f` to view updates to file in real-time)",

"I don't understand your diff @LysandreJik . If `total_length==0` then `truncated_total_length` is also 0. I think you meant something more like this maybe?\r\n```diff\r\n- total_length = (total_length // max_seq_length) * max_seq_length\r\n+ if total_length >= max_seq_length:\r\n+ total_length = (total_length // max_seq_length) * max_seq_length\r\n```",

"Ah I think I did a typo when copying the code, my local code has the following:\r\n`if truncated_total_length != 0:` instead of `if total_length == 0:`.\r\n\r\nThis way, if the truncated total length is equal to 0 (like in this case), then it will use the `total_length` (which is of 7) to create the example.\r\n\r\nIf the truncated total length is not 0, then it will use this value to create the example; which was the case before.\r\n\r\nFeel free to modify as you wish so that it's clearer for you!\r\n",

"Yes, then it's equivalent to my suggestion. Thanks!",

"@LysandreJik I may be misunderstanding how argument parsing works, but for flags like `evaluation_strategy`, it doesn't seem that the script parses it at all? I have a logging problem (https://discuss.huggingface.co/t/no-step-wise-logging-for-xla-mlm-scripts-in-colab-jupyter/8134) which seems to ignore the arguments/fails to override them. I am getting log of loss only at the start of epoch (`0.19`) somewhere again (`epoch-1.89`) and never again, when set for 5 epochs.\r\n\r\nThis seems strange, nor can I judge my models as to how they are performing. any ideas?"

] | 1,625 | 1,625 | 1,625 | NONE | null | ## Environment info

- `transformers` version: 4.9.0.dev0

- Platform: Linux-5.4.104+-x86_64-with-Ubuntu-18.04-bionic

- Python version: 3.7.10

- PyTorch version (GPU?): 1.9.0+cu102 (False)

- Tensorflow version (GPU?): 2.5.0 (False)

- Flax version (CPU?/GPU?/TPU?): 0.3.4 (cpu)

- Jax version: 0.2.13

- JaxLib version: 0.1.66

- Using GPU in script?: False

- Using distributed or parallel set-up in script?: False (Only `TPU` cores)

### Who can help

Not sure who might be the most appropriate person

## Information

Model I am using (Bert, XLNet ...): `BigBird` (MLM)

The problem arises when using:

* [x] the official example scripts: (give details below)

* [ ] my own modified scripts: (give details below)

The tasks I am working on is:

* [ ] an official GLUE/SQUaD task: (give the name)

* [x] my own task or dataset: (give details below)

## To reproduce

This is an error with the `MLM` script (PyTorch) when attempting to pre-train BigBird on TPUs over XLA. The dataset in question is a custom dataset, and the model config and tokenizer have been initialized appropriately.

This is a continuation of [this unanswered](https://discuss.huggingface.co/t/indexerror-index-out-of-bounds/2859) forum post, which reports the same error.

Command used to run the script:

```py

%%bash
python xla_spawn.py --num_cores=8 ./run_mlm.py --output_dir="./results" \
    --model_type="big_bird" \
    --config_name="./config" \
    --tokenizer_name="./tokenizer" \
    --train_file="./dataset.txt" \
    --validation_file="./val.txt" \
    --line_by_line="True" \
    --max_seq_length="16000" \
    --weight_decay="0.01" \
    --per_device_train_batch_size="1" \
    --per_device_eval_batch_size="1" \
    --learning_rate="3e-4" \
    --tpu_num_cores='8' \
    --warmup_steps="1000" \
    --overwrite_output_dir \
    --pad_to_max_length \
    --num_train_epochs="5" \
    --adam_beta1="0.9" \
    --adam_beta2="0.98" \
    --do_train \
    --do_eval \
    --logging_steps="50" \
    --evaluation_strategy="steps" \
    --eval_accumulation_steps='10' \
    --report_to="tensorboard" \
    --logging_dir='./logs' \
    --save_strategy="epoch" \
    --load_best_model_at_end='True' \
    --metric_for_best_model='validation' \
    --preprocessing_num_workers='15'

```

To be precise, I am facing two errors:

```py

Exception in device=TPU:0: Default process group has not been initialized, please make sure to call init_process_group.

Traceback (most recent call last):

File "/usr/local/lib/python3.7/dist-packages/transformers/training_args.py", line 1006, in main_process_first

yield

File "/content/run_mlm.py", line 393, in main

desc="Running tokenizer on dataset line_by_line",

File "/usr/local/lib/python3.7/dist-packages/datasets/dataset_dict.py", line 489, in map

for k, dataset in self.items()

File "/usr/local/lib/python3.7/dist-packages/datasets/dataset_dict.py", line 489, in <dictcomp>

for k, dataset in self.items()

File "/usr/local/lib/python3.7/dist-packages/datasets/arrow_dataset.py", line 1664, in map

for rank in range(num_proc)

File "/usr/local/lib/python3.7/dist-packages/datasets/arrow_dataset.py", line 1664, in <listcomp>

for rank in range(num_proc)

File "/usr/local/lib/python3.7/dist-packages/datasets/arrow_dataset.py", line 2664, in shard

writer_batch_size=writer_batch_size,

File "/usr/local/lib/python3.7/dist-packages/datasets/arrow_dataset.py", line 186, in wrapper

out: Union["Dataset", "DatasetDict"] = func(self, *args, **kwargs)

File "/usr/local/lib/python3.7/dist-packages/datasets/fingerprint.py", line 397, in wrapper

out = func(self, *args, **kwargs)

File "/usr/local/lib/python3.7/dist-packages/datasets/arrow_dataset.py", line 2254, in select

return self._new_dataset_with_indices(indices_buffer=buf_writer.getvalue(), fingerprint=new_fingerprint)

File "/usr/local/lib/python3.7/dist-packages/datasets/arrow_dataset.py", line 2170, in _new_dataset_with_indices

fingerprint=fingerprint,

File "/usr/local/lib/python3.7/dist-packages/datasets/arrow_dataset.py", line 297, in __init__

self._indices.column(0)[0].type

File "pyarrow/table.pxi", line 162, in pyarrow.lib.ChunkedArray.__getitem__

File "pyarrow/array.pxi", line 549, in pyarrow.lib._normalize_index

IndexError: index out of bounds

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/usr/local/lib/python3.7/dist-packages/torch_xla/distributed/xla_multiprocessing.py", line 329, in _mp_start_fn

_start_fn(index, pf_cfg, fn, args)

File "/usr/local/lib/python3.7/dist-packages/torch_xla/distributed/xla_multiprocessing.py", line 323, in _start_fn

fn(gindex, *args)

File "/content/run_mlm.py", line 529, in _mp_fn

main()

File "/content/run_mlm.py", line 393, in main

desc="Running tokenizer on dataset line_by_line",

File "/usr/lib/python3.7/contextlib.py", line 130, in __exit__

self.gen.throw(type, value, traceback)

File "/usr/local/lib/python3.7/dist-packages/transformers/training_args.py", line 1011, in main_process_first

torch.distributed.barrier()

File "/usr/local/lib/python3.7/dist-packages/torch/distributed/distributed_c10d.py", line 2523, in barrier

default_pg = _get_default_group()

File "/usr/local/lib/python3.7/dist-packages/torch/distributed/distributed_c10d.py", line 358, in _get_default_group

raise RuntimeError("Default process group has not been initialized, "

RuntimeError: Default process group has not been initialized, please make sure to call init_process_group.

```

I haven't modified the script to call `init_process_group` yet, since I am focusing on the earlier index-out-of-bounds error. Clearly, the problem arises from my own dataset, which was working before, however. Interestingly, the error appears during the tokenization stage.

At some point, constructing the Arrow dataset fails. I am not familiar with Apache Arrow, so I can't debug further :sweat_smile:
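The traceback above shows two chained errors: the `IndexError` is raised inside the `with training_args.main_process_first(...)` block, and the `RuntimeError` is raised afterwards, while the context manager exits. A minimal sketch of that pattern in plain Python follows. Note that `barrier` and `main_process_first_sketch` are hypothetical stand-ins written for illustration, not the actual `transformers` or `torch` code:

```python
import contextlib


def barrier():
    # Hypothetical stand-in for torch.distributed.barrier() when no
    # default process group has been initialized.
    raise RuntimeError("Default process group has not been initialized")


@contextlib.contextmanager
def main_process_first_sketch():
    # Assumed simplification: synchronization happens in a `finally`
    # clause, so it also runs when the body of the `with` block raises.
    try:
        yield
    finally:
        barrier()


def run():
    with main_process_first_sketch():
        # Hypothetical stand-in for the failing dataset.map(...) call.
        raise IndexError("index out of bounds")


try:
    run()
except RuntimeError as err:
    outer = err
    # The first failure is kept as the implicit context of the second one.
    original = err.__context__
    print(type(original).__name__, "->", type(outer).__name__)
```

If this reading is right, the `RuntimeError` is a follow-up failure in the cleanup path, and the `IndexError` is the one to investigate first.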

As for the dataset to use, a few simple lines of code with random numbers are more than enough to reproduce it.

```py

import random

# Write 50 lines, each holding 16000 space-separated random integers
# drawn from 0 to 40000 (both ends included).
with open('./dataset.txt', 'w') as f:
    for _ in range(50):
        f.write(' '.join(str(random.randint(0, 40000)) for _ in range(16000)) + '\n')

```
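Before launching the run, it may help to confirm that each text file has the shape you expect. The helper below is only a sketch: `describe_text_file` is a name made up for this example, and it counts whitespace-separated words, not tokenizer tokens.

```python
import os
import tempfile


def describe_text_file(path):
    """Return (non-empty line count, min words per line, max words per line)."""
    counts = []
    with open(path, encoding="utf-8") as f:
        for line in f:
            words = line.split()
            if words:
                counts.append(len(words))
    if not counts:
        return 0, 0, 0
    return len(counts), min(counts), max(counts)


# Small self-contained demo on a temporary two-line file.
with tempfile.TemporaryDirectory() as tmp:
    demo_path = os.path.join(tmp, "demo.txt")
    with open(demo_path, "w", encoding="utf-8") as f:
        f.write("1 2 3\n4 5\n")
    summary = describe_text_file(demo_path)
    print(summary)
```

Calling `describe_text_file("./dataset.txt")` and `describe_text_file("./val.txt")` would then show how many rows each split really contains.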

Can anyone give me some guidance on where I should start investigating the error, and some possible leads as to its origin?

Any ideas how I can solve it?
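One possible lead (a guess, not a confirmed diagnosis): the full output contains `Done writing 1 indices` eight times followed by `Done writing 0 indices` right before the crash, and the command sets `--preprocessing_num_workers='15'`. If one of the splits had only 8 rows (an assumption), splitting it across 15 workers would leave some workers with an empty shard. The sketch below only illustrates the arithmetic of a contiguous split; `shard_sizes` is a hypothetical helper, not a function of the `datasets` library:

```python
def shard_sizes(num_rows, num_shards):
    """Rows per shard when num_rows are split contiguously into num_shards."""
    base, extra = divmod(num_rows, num_shards)
    # The first `extra` shards receive one additional row.
    return [base + (1 if i < extra else 0) for i in range(num_shards)]


sizes = shard_sizes(num_rows=8, num_shards=15)
empty = [i for i, n in enumerate(sizes) if n == 0]
print(sizes)
print("empty shards:", empty)
```

Under that assumption, lowering the number of preprocessing workers to at most the number of rows in the smallest split would avoid empty shards.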

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/12438/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/12438/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/12437 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/12437/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/12437/comments | https://api.github.com/repos/huggingface/transformers/issues/12437/events | https://github.com/huggingface/transformers/pull/12437 | 933,594,990 | MDExOlB1bGxSZXF1ZXN0NjgwNzc4MTc2 | 12,437 | Add test for a WordLevel tokenizer model | {

"login": "SaulLu",

"id": 55560583,

"node_id": "MDQ6VXNlcjU1NTYwNTgz",

"avatar_url": "https://avatars.githubusercontent.com/u/55560583?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/SaulLu",

"html_url": "https://github.com/SaulLu",

"followers_url": "https://api.github.com/users/SaulLu/followers",

"following_url": "https://api.github.com/users/SaulLu/following{/other_user}",

"gists_url": "https://api.github.com/users/SaulLu/gists{/gist_id}",

"starred_url": "https://api.github.com/users/SaulLu/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/SaulLu/subscriptions",

"organizations_url": "https://api.github.com/users/SaulLu/orgs",

"repos_url": "https://api.github.com/users/SaulLu/repos",

"events_url": "https://api.github.com/users/SaulLu/events{/privacy}",

"received_events_url": "https://api.github.com/users/SaulLu/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [] | 1,625 | 1,625 | 1,625 | CONTRIBUTOR | null | # What does this PR do?

In this PR I propose to add a test for the feature developed in PR #12361. Unless I'm mistaken, none of the language models currently tested uses a tokenizer based on the WordLevel model.

The tokenizer created for this test is hosted here: [https://hf.co/robot-test/dummy-tokenizer-wordlevel](https://huggingface.co/robot-test/dummy-tokenizer-wordlevel)

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/12437/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/12437/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/12437",

"html_url": "https://github.com/huggingface/transformers/pull/12437",

"diff_url": "https://github.com/huggingface/transformers/pull/12437.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/12437.patch",

"merged_at": 1625135827000

} |

https://api.github.com/repos/huggingface/transformers/issues/12436 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/12436/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/12436/comments | https://api.github.com/repos/huggingface/transformers/issues/12436/events | https://github.com/huggingface/transformers/issues/12436 | 933,588,599 | MDU6SXNzdWU5MzM1ODg1OTk= | 12,436 | Add DEBERTA-base model for usage in EncoderDecoderModel. | {

"login": "avacaondata",

"id": 35173563,

"node_id": "MDQ6VXNlcjM1MTczNTYz",

"avatar_url": "https://avatars.githubusercontent.com/u/35173563?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/avacaondata",

"html_url": "https://github.com/avacaondata",

"followers_url": "https://api.github.com/users/avacaondata/followers",

"following_url": "https://api.github.com/users/avacaondata/following{/other_user}",

"gists_url": "https://api.github.com/users/avacaondata/gists{/gist_id}",

"starred_url": "https://api.github.com/users/avacaondata/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/avacaondata/subscriptions",

"organizations_url": "https://api.github.com/users/avacaondata/orgs",

"repos_url": "https://api.github.com/users/avacaondata/repos",

"events_url": "https://api.github.com/users/avacaondata/events{/privacy}",

"received_events_url": "https://api.github.com/users/avacaondata/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 2648621985,

"node_id": "MDU6TGFiZWwyNjQ4NjIxOTg1",

"url": "https://api.github.com/repos/huggingface/transformers/labels/Feature%20request",

"name": "Feature request",

"color": "FBCA04",

"default": false,

"description": "Request for a new feature"

}

] | open | false | null | [] | [

"Great idea! Do you want to take a stab at it?",

"Hi @alexvaca0,\r\nThis is an interesting feature, \r\nBut I was curious that Deberta, Bert, and Roberta are encoder-based models so there is no decoder part right? I checked their model class and I could not find the Decoder / EncoderDecoder class!\r\nCan you please give more insight into it? ",

"That's right, there's no decoder in those models, but there is a class in Transformers, EncoderDecoderModel, that enables to create encoder-decoder architectures from encoder-only architectures :)\r\n\r\nPerfect, let me have a look at it and see if I can code that adaptation @LysandreJik",

"Great! If you run into any blockers, feel free to ping us. If you want to add the possibility for DeBERTa to be a decoder, you'll probably need to add the cross attention layers. \r\n\r\ncc @patrickvonplaten and @patil-suraj which have extensive experience with enc-dec models.",

"Hey, is this feature being worked on by someone? If not then I can pick it up! @LysandreJik ",

"Would be great if you could pick it up @manish-p-gupta :-) ",

"Great!. Any specific things I should go through before taking it up? I'm familiar with the Code of conduct and contributing guidelines. I'll also open a draft PR to carry on the discussions there. Let me know if you think I need to look at anything else. @patrickvonplaten ",

"@ArthurZucker has been working with DeBERTa models recently and can likely help and give advice!",

"Yes! Feel free to ping me for an early review if you have any doubts"

] | 1,625 | 1,674 | null | NONE | null | # 🚀 Feature request

Add DEBERTA-base model as an option for creating an EncoderDecoderModel.

## Motivation

Currently only BERT and RoBERTa models can be turned into a Seq2Seq model via the EncoderDecoder class, and for those of us developing DeBERTa models from scratch it would be wonderful to be able to generate a Seq2Seq model from them. Also, the DeBERTa-base model works much better than BERT and RoBERTa.

## Your contribution

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/12436/reactions",

"total_count": 2,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 2,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/12436/timeline | null | null | null |

https://api.github.com/repos/huggingface/transformers/issues/12435 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/12435/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/12435/comments | https://api.github.com/repos/huggingface/transformers/issues/12435/events | https://github.com/huggingface/transformers/issues/12435 | 933,586,798 | MDU6SXNzdWU5MzM1ODY3OTg= | 12,435 | Using huggingface Pipeline in industry | {

"login": "Lassehhansen",

"id": 54820693,

"node_id": "MDQ6VXNlcjU0ODIwNjkz",

"avatar_url": "https://avatars.githubusercontent.com/u/54820693?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Lassehhansen",

"html_url": "https://github.com/Lassehhansen",

"followers_url": "https://api.github.com/users/Lassehhansen/followers",

"following_url": "https://api.github.com/users/Lassehhansen/following{/other_user}",

"gists_url": "https://api.github.com/users/Lassehhansen/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Lassehhansen/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Lassehhansen/subscriptions",

"organizations_url": "https://api.github.com/users/Lassehhansen/orgs",

"repos_url": "https://api.github.com/users/Lassehhansen/repos",

"events_url": "https://api.github.com/users/Lassehhansen/events{/privacy}",

"received_events_url": "https://api.github.com/users/Lassehhansen/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"The pipeline is a simple wrapper over the model and tokenizer. When using a pipeline in production you should be aware of:\r\n- The pipeline is doing simple pre and post-processing reflecting the model's behavior. If you want to understand how the pipeline behaves, you should understand how the model behaves as the pipeline isn't doing anything fancy (true for sentiment analysis, less true for token classification or QA which have very specific post-processing).\r\n- Pipelines are very simple to use, but they remain an abstraction over the model and tokenizer. If you're looking for performance and you have a very specific use-case, you will get on par or better performance when using the model and tokenizer directly.\r\n\r\nDoes that answer your question?",

"I think my question was minded for whether the pipeline is free to use in industry, and or whether there is any security issues with using it in an automated work process for industry projects? Not so much minded for the bias of the model.\r\n\r\nThanks in advance\r\nLasse",

"For industry usage including security guidance etc I would recommend getting in touch with our Expert Acceleration Program at https://huggingface.co/support\r\n\r\nCheers",

"Perfect, I will try and do that. Thanks for the help\r\n"

] | 1,625 | 1,625 | 1,625 | NONE | null | Hi,

I was wondering if there is anything to be aware of when using your sentiment-analysis pipeline for industry projects at work. Are there any limitations to what I can or cannot do?

Thank you for your always amazing service.

Lasse | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/12435/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/12435/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/12434 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/12434/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/12434/comments | https://api.github.com/repos/huggingface/transformers/issues/12434/events | https://github.com/huggingface/transformers/issues/12434 | 933,535,430 | MDU6SXNzdWU5MzM1MzU0MzA= | 12,434 | TPU not initialized when running official `run_mlm_flax.py` example. | {

"login": "patrickvonplaten",

"id": 23423619,

"node_id": "MDQ6VXNlcjIzNDIzNjE5",

"avatar_url": "https://avatars.githubusercontent.com/u/23423619?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/patrickvonplaten",

"html_url": "https://github.com/patrickvonplaten",

"followers_url": "https://api.github.com/users/patrickvonplaten/followers",

"following_url": "https://api.github.com/users/patrickvonplaten/following{/other_user}",

"gists_url": "https://api.github.com/users/patrickvonplaten/gists{/gist_id}",

"starred_url": "https://api.github.com/users/patrickvonplaten/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/patrickvonplaten/subscriptions",

"organizations_url": "https://api.github.com/users/patrickvonplaten/orgs",

"repos_url": "https://api.github.com/users/patrickvonplaten/repos",

"events_url": "https://api.github.com/users/patrickvonplaten/events{/privacy}",

"received_events_url": "https://api.github.com/users/patrickvonplaten/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Given that when running this command I'm getting ~30 training iterations per second, I'm assuming though that it's just a warning and not an error.",

"I am getting the same errors here. Also noting that the parameters from TrainingArguments are written at the start of the run_mlm_script, I see a few weird settings, like:\r\n```\r\nno_cuda=False,\r\ntpu_num_cores=None,\r\n``` \r\nI am not getting a stable report on iterations per second, so it is hard to see if this is a going well. Progress is still at 0%. Occationally, I am also getting error messages like this written to the screen roughly each minute:\r\n\r\n```\r\ntcmalloc: large alloc 25737314304 bytes == 0x770512000 @ 0x7ff6c92be680 0x7ff6c92df824 0x7ff6c92dfb8a 0x7ff49fbb6417 0x7ff49a9c43d0 0x7ff49a9d1ef4 0x7ff49a9d4e77 0x7ff49a9261dd 0x7ff49a6a0563 0x7ff49a68e460 0x5f5b29 0x5f66f6 0x50ad17 0x570296 0x56951a 0x5f60b3 0x5f6b6b 0x664e8d 0x5f556e 0x56ca9e 0x56951a 0x5f60b3 0x5f54e7 0x56ca9e 0x5f5ed6 0x56b3fe 0x5f5ed6 0x56b3fe 0x56951a 0x5f60b3 0x5f54e7\r\n```\r\nIt might also be mentioned that I am initially getting \"Missing XLA configuration\". I had to manually set the environment variable: `export XRT_TPU_CONFIG=\"localservice;0;localhost:51011\".` for the script to run. I am not sure if this really does the right thing. Maybe also the tpu driver needs to be specified?\r\n\r\n",

"...and ... I see this warning, tat does not look good:\r\n`[10:28:02] - WARNING - absl - No GPU/TPU found, falling back to CPU. (Set TF_CPP_MIN_LOG_LEVEL=0 and rerun for more info.)`",

"If of interest, this is my notes on installing this. It is mainly based on Patricks tutorial:\r\nhttps://github.com/NBAiLab/notram/blob/master/guides/flax.md",

"The simplest way to see if you're running on TPU is to call `jax.devices()`. E.g. you may see:\r\n\r\n```\r\n[TpuDevice(id=0, process_index=0, coords=(0,0,0), core_on_chip=0),\r\n TpuDevice(id=1, process_index=0, coords=(0,0,0), core_on_chip=1),\r\n TpuDevice(id=2, process_index=0, coords=(1,0,0), core_on_chip=0),\r\n TpuDevice(id=3, process_index=0, coords=(1,0,0), core_on_chip=1),\r\n TpuDevice(id=4, process_index=0, coords=(0,1,0), core_on_chip=0),\r\n TpuDevice(id=5, process_index=0, coords=(0,1,0), core_on_chip=1),\r\n TpuDevice(id=6, process_index=0, coords=(1,1,0), core_on_chip=0),\r\n TpuDevice(id=7, process_index=0, coords=(1,1,0), core_on_chip=1)]\r\n ```",

"@avital. \"import jax;jax.devices()\" gives me exactly the same response.\r\n\r\nI am also able to make simple calculations on the TPU. The problem seem only to be related to the run_mlm_flax-script.",

"@avital. I also found this log file. It seems to think that the TPU is busy.\r\n```\r\nE0630 13:24:28.491443 20778 kernel_dma_mapper.cc:88] Error setting number simples with FAILED_PRECONDITION: ioctl failed [type.googleapis.com/util.ErrorSpacePayload='util::PosixErrorSpace::Device or resource busy']\r\nE0630 13:24:28.491648 20778 tensor_node.cc:436] [0000:00:04.0 PE0 C0 MC-1 TN0] Failed to set number of simple DMA addresses: FAILED_PRECONDITION: ioctl failed [type.googleapis.com/util.ErrorSpacePayload='util::PosixErrorSpace::Device or resource busy']\r\nE0630 13:24:28.491660 20778 driver.cc:806] [0000:00:04.0 PE0 C0 MC-1] tensor node 0 open failed: FAILED_PRECONDITION: ioctl failed [type.googleapis.com/util.ErrorSpacePayload='util::PosixErrorSpace::Device or resource busy']\r\nE0630 13:24:28.491678 20778 driver.cc:194] [0000:00:04.0 PE0 C0 MC-1] Device has failed. Status:FAILED_PRECONDITION: ioctl failed [type.googleapis.com/util.ErrorSpacePayload='util::PosixErrorSpace::Device or resource busy']\r\nE0630 13:24:28.492048 20777 kernel_dma_mapper.cc:88] Error setting number simples with FAILED_PRECONDITION: ioctl failed [type.googleapis.com/util.ErrorSpacePayload='util::PosixErrorSpace::Device or resource busy']\r\nE0630 13:24:28.492093 20777 tensor_node.cc:436] [0000:00:05.0 PE0 C1 MC-1 TN0] Failed to set number of simple DMA addresses: FAILED_PRECONDITION: ioctl failed [type.googleapis.com/util.ErrorSpacePayload='util::PosixErrorSpace::Device or resource busy']\r\nE0630 13:24:28.492100 20777 driver.cc:806] [0000:00:05.0 PE0 C1 MC-1] tensor node 0 open failed: FAILED_PRECONDITION: ioctl failed [type.googleapis.com/util.ErrorSpacePayload='util::PosixErrorSpace::Device or resource busy']\r\nE0630 13:24:28.492110 20777 driver.cc:194] [0000:00:05.0 PE0 C1 MC-1] Device has failed. 
Status:FAILED_PRECONDITION: ioctl failed [type.googleapis.com/util.ErrorSpacePayload='util::PosixErrorSpace::Device or resource busy']\r\nE0630 13:24:28.492996 20778 driver.cc:165] [0000:00:04.0 PE0 C0 MC-1] Transitioned to State::FAILED, dumping core\r\nE0630 13:24:28.493215 20777 driver.cc:165] [0000:00:05.0 PE0 C1 MC-1] Transitioned to State::FAILED, dumping core\r\nE0630 13:24:28.494112 20786 kernel_dma_mapper.cc:88] Error setting number simples with FAILED_PRECONDITION: ioctl failed [type.googleapis.com/util.ErrorSpacePayload='util::PosixErrorSpace::Device or resource busy']\r\nE0630 13:24:28.494225 20786 tensor_node.cc:436] [0000:00:07.0 PE0 C3 MC-1 TN0] Failed to set number of simple DMA addresses: FAILED_PRECONDITION: ioctl failed [type.googleapis.com/util.ErrorSpacePayload='util::PosixErrorSpace::Device or resource busy']\r\n```\r\n",

"I am now able to get this to run, and think I understand why this is happening.\r\n\r\nThe initial error I am seeing is: \"RuntimeError: tensorflow/compiler/xla/xla_client/computation_client.cc:273 : Missing XLA configuration\"\r\n\r\nI have been getting around this error by setting \"export XRT_TPU_CONFIG=\"localservice;0;localhost:51011\". This is probably the right way of doing it on torch system, but it also leads to torch stealing the TPU from Jax, and letting the run_mlm_flax.py train on CPU.\r\n\r\nHowever, it seems like there is a function in transformers/training_args.py called \"is_torch_tpu_available\". If this returns TRUE, it also asks for the XRT_TPU_CONFIG. I am really not sure why it is returning TRUE on my system but it might be because the VM I am using have other preinstalled software. \r\n\r\nLots of ways of fixing this of course. You guys probably know the best way. ",

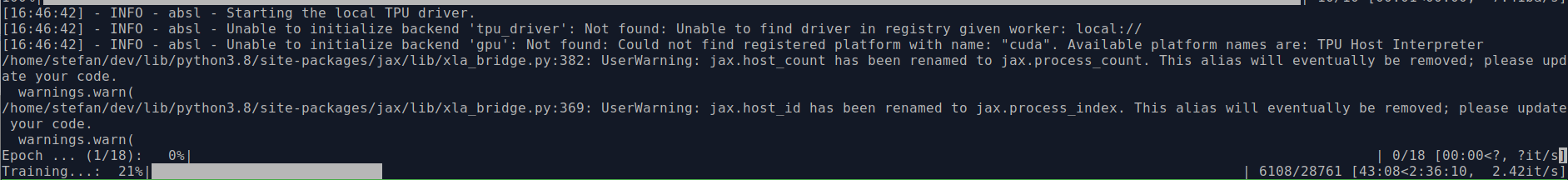

"I've seen the same `INFO` message from `absl`:\r\n\r\n\r\n\r\nBut training seems to work (batch size 128 as from the mlm flax documentation)",

"Closing this issue now as it is expected",

"I am having the exact same issue described here. I see that @peregilk found a work around, but he/she hasn't shared what it was.\r\n\r\nCould you describe how you overcame this issue? @peregilk ",

"@erensezener I think a lot has changed in the code here since this was written. I am linking to my internal notes above. I have repeated that one several times, and know it gets a working system up and running.\r\n\r\nJust a wild guess: Have you tried setting ```export USE_TORCH=False```",

"> Just a wild guess: Have you tried setting `export USE_TORCH=False`\r\n\r\nThis solves the issue indeed! Thank you, you saved me many more hours of debugging :)\r\n\r\n"

] | 1,625 | 1,635 | 1,626 | MEMBER | null | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version: 4.9.0.dev0

- Platform: Linux-5.4.0-1043-gcp-x86_64-with-glibc2.29

- Python version: 3.8.5

- PyTorch version (GPU?): 1.9.0+cu102 (False)

- Tensorflow version (GPU?): 2.5.0 (False)

- Flax version (CPU?/GPU?/TPU?): 0.3.4 (tpu)

- Jax version: 0.2.16

- JaxLib version: 0.1.68

- Using GPU in script?: No

- Using distributed or parallel set-up in script?: No

### Who can help

@avital @marcvanzee

## Information

I am setting up a new TPU VM according to the [Cloud TPU VM JAX quickstart](https://cloud.google.com/tpu/docs/jax-quickstart-tpu-vm) and following the installation steps described here: https://github.com/huggingface/transformers/tree/master/examples/research_projects/jax-projects#how-to-install-relevant-libraries to install `flax`, `jax`, `transformers`, and `datasets`.

Then, when running a simple example using the [`run_mlm_flax.py`](https://github.com/huggingface/transformers/blob/master/examples/flax/language-modeling/run_mlm_flax.py) script, I'm encountering an error/warning:

```

INFO:absl:Starting the local TPU driver.

INFO:absl:Unable to initialize backend 'tpu_driver': Not found: Unable to find driver in registry given worker: local://

INFO:absl:Unable to initialize backend 'gpu': Not found: Could not find registered platform with name: "cuda". Available platform names are: TPU Interpreter Host

```

=> I am now unsure whether the code actually runs on TPU or instead on CPU.
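
One way to settle the TPU-versus-CPU question is to look at the `platform` attribute of the devices JAX reports. The snippet below is only a minimal sketch: the helper names `summarize_platforms` and `runs_on_tpu` are made up for illustration, and it assumes that `jax.devices()` returns objects exposing a `platform` string such as `"tpu"` or `"cpu"`.

```python
from collections import Counter


def summarize_platforms(devices):
    """Count visible devices per platform name, e.g. {'tpu': 8}."""
    return dict(Counter(d.platform for d in devices))


def runs_on_tpu(devices):
    """Return True only if there is at least one device and all report 'tpu'."""
    platforms = summarize_platforms(devices)
    return bool(platforms) and set(platforms) == {"tpu"}


if __name__ == "__main__":
    try:
        import jax  # only needed for the live check, not for the helpers
    except ImportError:
        print("jax is not installed in this environment")
    else:
        devices = jax.devices()
        print(summarize_platforms(devices), "on TPU:", runs_on_tpu(devices))
```

If this reports only `cpu` devices inside the training process, the `absl` messages are more than noise and the run has fallen back to CPU.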

## To reproduce

The problem can be easily reproduced by:

1. sshing into a TPU, *e.g.* `patrick-test` (Flax, JAX, & Transformers should already be installed)

If one goes into `patrick-test`, the libraries are already installed. On a newly created TPU VM, one can follow [these](https://github.com/huggingface/transformers/tree/master/examples/research_projects/jax-projects#how-to-install-relevant-libraries) steps to install the relevant libraries.

2. Going to home folder

```

cd ~/

```

3. creating a new dir:

```

mkdir test && cd test

```

4. cloning a dummy repo into it

```

GIT_LFS_SKIP_SMUDGE=1 git clone https://huggingface.co/patrickvonplaten/norwegian-roberta-als

```

5. Linking the `run_mlm_flax.py` script

```

ln -s $(realpath ~/transformers/examples/flax/language-modeling/run_mlm_flax.py) ./

```

6. Running the following command (which should show the above warning/error again):

```
./run_mlm_flax.py \
--output_dir="norwegian-roberta-als" \
--model_type="roberta" \
--config_name="norwegian-roberta-als" \
--tokenizer_name="norwegian-roberta-als" \
--dataset_name="oscar" \
--dataset_config_name="unshuffled_deduplicated_als" \
--max_seq_length="128" \
--per_device_train_batch_size="8" \
--per_device_eval_batch_size="8" \
--learning_rate="3e-4" \
--overwrite_output_dir \
--num_train_epochs="3"
```

=>

You should see a console print that says:

```

[10:15:48] - INFO - absl - Starting the local TPU driver.

[10:15:48] - INFO - absl - Unable to initialize backend 'tpu_driver': Not found: Unable to find driver in registry given worker: local://

[10:15:48] - INFO - absl - Unable to initialize backend 'gpu': Not found: Could not find registered platform with name: "cuda". Available platform names are: TPU Host Interpreter

```

## Expected behavior

I think this warning / error should not be displayed and the TPU should be correctly configured.
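
The comments on this issue report two observations: exporting `XRT_TPU_CONFIG` let PyTorch claim the TPU so that the Flax script trained on CPU, and setting `USE_TORCH=False` resolved the problem for a later reader. The following is a hedged sketch of how one might build such an environment before launching the script. The helper name `flax_training_env` is hypothetical, and whether these two variables are sufficient on a given VM is an assumption taken from the thread, not something verified here.

```python
import os


def flax_training_env(base_env=None):
    """Return a copy of the environment adjusted for a JAX/Flax run.

    The input mapping is never modified; os.environ is used when no
    mapping is given.
    """
    env = dict(os.environ if base_env is None else base_env)
    # Reported in the thread as the fix: disable the PyTorch code path.
    env["USE_TORCH"] = "False"
    # Reported in the thread: with this set, torch took the TPU away from JAX.
    env.pop("XRT_TPU_CONFIG", None)
    return env


if __name__ == "__main__":
    env = flax_training_env()
    print("USE_TORCH =", env["USE_TORCH"])
    print("XRT_TPU_CONFIG set:", "XRT_TPU_CONFIG" in env)
```

The returned mapping can be passed as the `env` argument of `subprocess.run` when starting `run_mlm_flax.py`, which keeps the change local to that one process instead of altering the login shell.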

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/12434/reactions",

"total_count": 1,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 1

} | https://api.github.com/repos/huggingface/transformers/issues/12434/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/12433 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/12433/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/12433/comments | https://api.github.com/repos/huggingface/transformers/issues/12433/events | https://github.com/huggingface/transformers/pull/12433 | 933,502,288 | MDExOlB1bGxSZXF1ZXN0NjgwNjk3OTc3 | 12,433 | Added to talks section | {

"login": "suzana-ilic",

"id": 27798583,

"node_id": "MDQ6VXNlcjI3Nzk4NTgz",

"avatar_url": "https://avatars.githubusercontent.com/u/27798583?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/suzana-ilic",

"html_url": "https://github.com/suzana-ilic",

"followers_url": "https://api.github.com/users/suzana-ilic/followers",

"following_url": "https://api.github.com/users/suzana-ilic/following{/other_user}",

"gists_url": "https://api.github.com/users/suzana-ilic/gists{/gist_id}",

"starred_url": "https://api.github.com/users/suzana-ilic/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/suzana-ilic/subscriptions",

"organizations_url": "https://api.github.com/users/suzana-ilic/orgs",

"repos_url": "https://api.github.com/users/suzana-ilic/repos",

"events_url": "https://api.github.com/users/suzana-ilic/events{/privacy}",

"received_events_url": "https://api.github.com/users/suzana-ilic/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [] | 1,625 | 1,625 | 1,625 | CONTRIBUTOR | null | Added one more confirmed speaker, zoom links and gcal event links | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/12433/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/12433/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/12433",

"html_url": "https://github.com/huggingface/transformers/pull/12433",

"diff_url": "https://github.com/huggingface/transformers/pull/12433.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/12433.patch",

"merged_at": 1625051651000

} |

https://api.github.com/repos/huggingface/transformers/issues/12432 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/12432/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/12432/comments | https://api.github.com/repos/huggingface/transformers/issues/12432/events | https://github.com/huggingface/transformers/pull/12432 | 933,498,457 | MDExOlB1bGxSZXF1ZXN0NjgwNjk0ODI0 | 12,432 | fix typo in mt5 configuration docstring | {

"login": "fcakyon",

"id": 34196005,

"node_id": "MDQ6VXNlcjM0MTk2MDA1",