url

stringlengths 62

66

| repository_url

stringclasses 1

value | labels_url

stringlengths 76

80

| comments_url

stringlengths 71

75

| events_url

stringlengths 69

73

| html_url

stringlengths 50

56

| id

int64 377M

2.15B

| node_id

stringlengths 18

32

| number

int64 1

29.2k

| title

stringlengths 1

487

| user

dict | labels

list | state

stringclasses 2

values | locked

bool 2

classes | assignee

dict | assignees

list | comments

sequence | created_at

int64 1.54k

1.71k

| updated_at

int64 1.54k

1.71k

| closed_at

int64 1.54k

1.71k

⌀ | author_association

stringclasses 4

values | active_lock_reason

stringclasses 2

values | body

stringlengths 0

234k

⌀ | reactions

dict | timeline_url

stringlengths 71

75

| state_reason

stringclasses 3

values | draft

bool 2

classes | pull_request

dict |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/transformers/issues/10535 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/10535/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/10535/comments | https://api.github.com/repos/huggingface/transformers/issues/10535/events | https://github.com/huggingface/transformers/issues/10535 | 822,826,159 | MDU6SXNzdWU4MjI4MjYxNTk= | 10,535 | tensorflow model convert onnx | {

"login": "Zjq9409",

"id": 62974595,

"node_id": "MDQ6VXNlcjYyOTc0NTk1",

"avatar_url": "https://avatars.githubusercontent.com/u/62974595?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Zjq9409",

"html_url": "https://github.com/Zjq9409",

"followers_url": "https://api.github.com/users/Zjq9409/followers",

"following_url": "https://api.github.com/users/Zjq9409/following{/other_user}",

"gists_url": "https://api.github.com/users/Zjq9409/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Zjq9409/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Zjq9409/subscriptions",

"organizations_url": "https://api.github.com/users/Zjq9409/orgs",

"repos_url": "https://api.github.com/users/Zjq9409/repos",

"events_url": "https://api.github.com/users/Zjq9409/events{/privacy}",

"received_events_url": "https://api.github.com/users/Zjq9409/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"and when I upgrade transformers version to 4.3.3, onnx version is 1.8.1,another error occurred:\r\nTraceback (most recent call last):\r\n File \"test_segment.py\", line 104, in <module>\r\n session = onnxruntime.InferenceSession(output_model_path, sess_options, providers=['CPUExecutionProvider'])\r\n File \"/usr/lib64/python3.6/site-packages/onnxruntime/capi/onnxruntime_inference_collection.py\", line 206, in __init__\r\n self._create_inference_session(providers, provider_options)\r\n File \"/usr/lib64/python3.6/site-packages/onnxruntime/capi/onnxruntime_inference_collection.py\", line 226, in _create_inference_session\r\n sess = C.InferenceSession(session_options, self._model_path, True, self._read_config_from_model)\r\nonnxruntime.capi.onnxruntime_pybind11_state.Fail: [ONNXRuntimeError] : 1 : FAIL : Load model from onnx_tf/segment.onnx failed:Fatal error: BroadcastTo is not a registered function/op",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored."

] | 1,614 | 1,619 | 1,619 | NONE | null | I use this code to transfer TFBertModel to onnx:

”convert(framework="tf", model=model, tokenizer=tokenizer, output=Path("onnx_tf/segment.onnx"), opset=12)”

and the output log as follows:

`Using framework TensorFlow: 2.2.0, keras2onnx: 1.7.0

Found input input_ids with shape: {0: 'batch', 1: 'sequence'}

Found input token_type_ids with shape: {0: 'batch', 1: 'sequence'}

Found input attention_mask with shape: {0: 'batch', 1: 'sequence'}

Found output output_0 with shape: {0: 'batch'}

Found output output_1 with shape: {0: 'batch', 1: 'sequence'}

Found output output_2 with shape: {0: 'batch', 1: 'sequence'}

Found output output_3 with shape: {0: 'batch', 1: 'sequence'}

Found output output_4 with shape: {0: 'batch', 1: 'sequence'}

Found output output_5 with shape: {0: 'batch', 1: 'sequence'}

Found output output_6 with shape: {0: 'batch', 1: 'sequence'}

Found output output_7 with shape: {0: 'batch', 1: 'sequence'}

Found output output_8 with shape: {0: 'batch', 1: 'sequence'}

Found output output_9 with shape: {0: 'batch', 1: 'sequence'}

Found output output_10 with shape: {0: 'batch', 1: 'sequence'}

Found output output_11 with shape: {0: 'batch', 1: 'sequence'}

Found output output_12 with shape: {0: 'batch', 1: 'sequence'}

Found output output_13 with shape: {0: 'batch', 1: 'sequence'}`

but,I run the output onnx,an error is occured as follows:

logits = session.run(None, inputs_onnx)

File "/usr/lib64/python3.6/site-packages/onnxruntime/capi/onnxruntime_inference_collection.py", line 124, in run

return self._sess.run(output_names, input_feed, run_options)

onnxruntime.capi.onnxruntime_pybind11_state.InvalidArgument: [ONNXRuntimeError] : 2 : INVALID_ARGUMENT : Invalid Feed Input Name:token_type_ids:0

transformers 3.0.2 | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/10535/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/10535/timeline | completed | null | null |

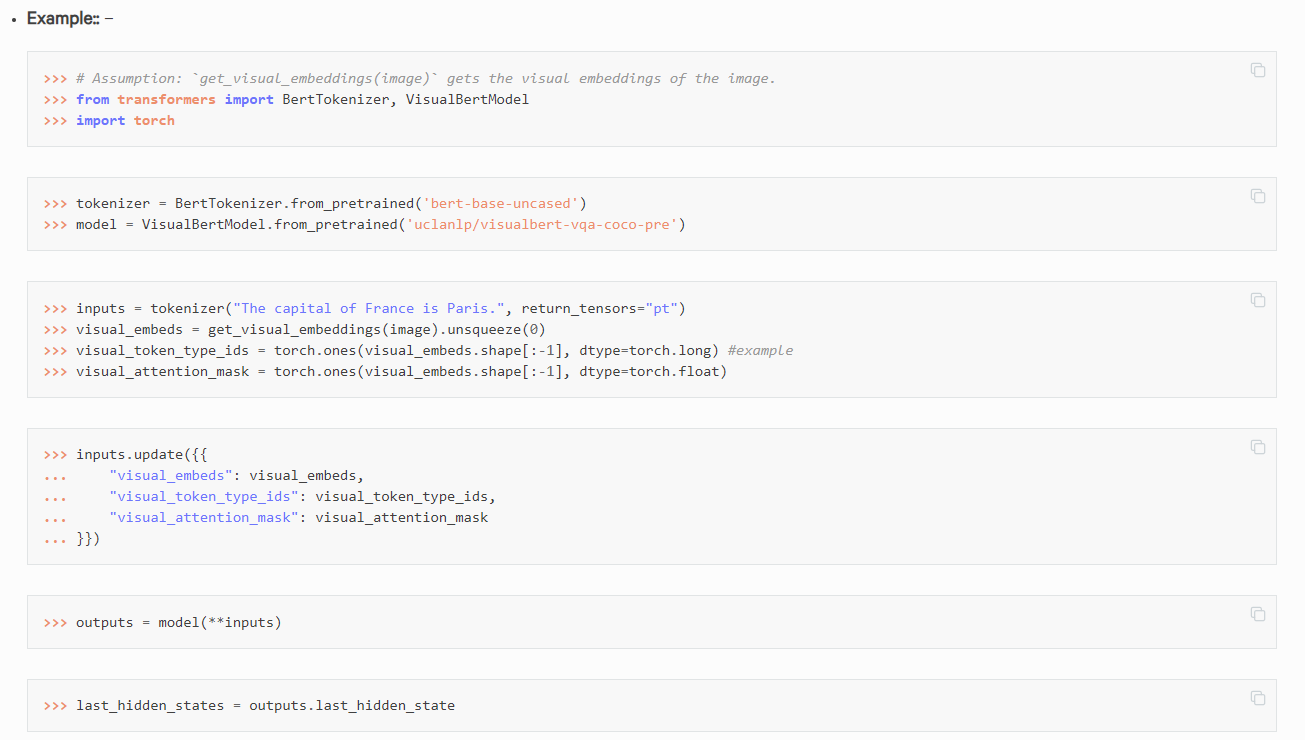

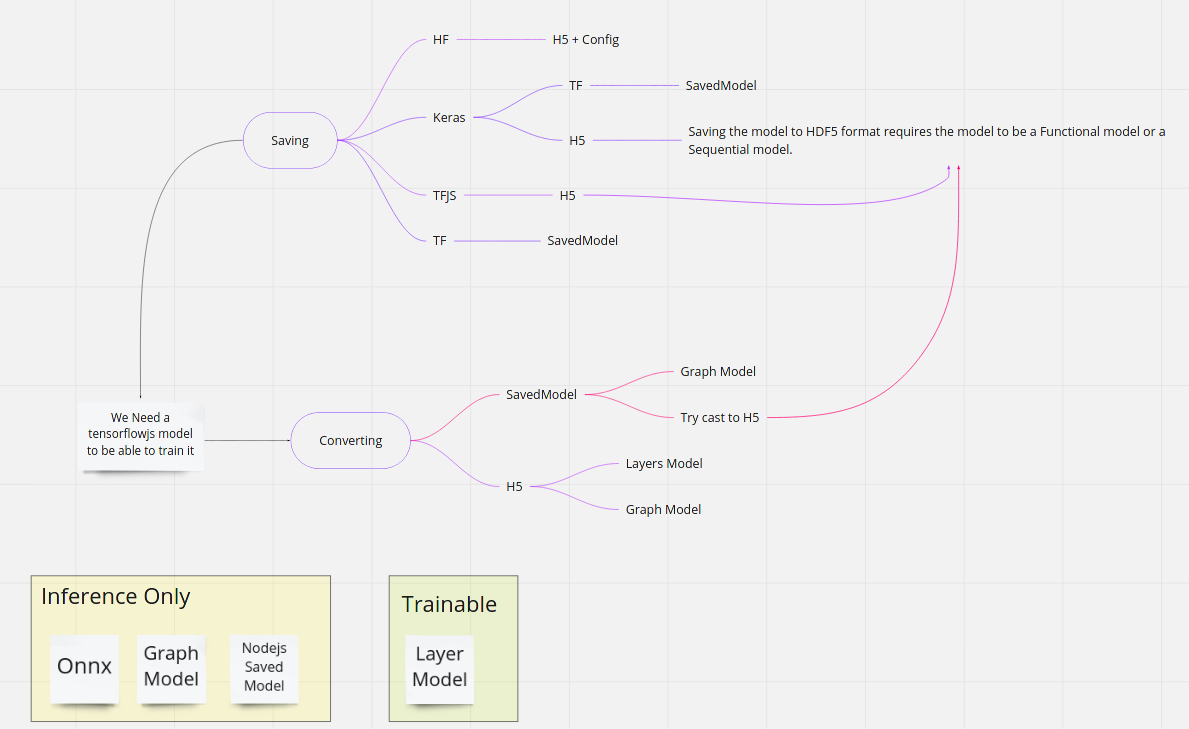

https://api.github.com/repos/huggingface/transformers/issues/10534 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/10534/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/10534/comments | https://api.github.com/repos/huggingface/transformers/issues/10534/events | https://github.com/huggingface/transformers/pull/10534 | 822,739,024 | MDExOlB1bGxSZXF1ZXN0NTg1MzE0MjM3 | 10,534 | VisualBERT | {

"login": "gchhablani",

"id": 29076344,

"node_id": "MDQ6VXNlcjI5MDc2MzQ0",

"avatar_url": "https://avatars.githubusercontent.com/u/29076344?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/gchhablani",

"html_url": "https://github.com/gchhablani",

"followers_url": "https://api.github.com/users/gchhablani/followers",

"following_url": "https://api.github.com/users/gchhablani/following{/other_user}",

"gists_url": "https://api.github.com/users/gchhablani/gists{/gist_id}",

"starred_url": "https://api.github.com/users/gchhablani/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/gchhablani/subscriptions",

"organizations_url": "https://api.github.com/users/gchhablani/orgs",

"repos_url": "https://api.github.com/users/gchhablani/repos",

"events_url": "https://api.github.com/users/gchhablani/events{/privacy}",

"received_events_url": "https://api.github.com/users/gchhablani/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 2796628563,

"node_id": "MDU6TGFiZWwyNzk2NjI4NTYz",

"url": "https://api.github.com/repos/huggingface/transformers/labels/WIP",

"name": "WIP",

"color": "234C99",

"default": false,

"description": "Label your PR/Issue with WIP for some long outstanding Issues/PRs that are work in progress"

}

] | closed | false | null | [] | [

"Hi @gchhablani \r\n\r\nThis is great! You can ping me if you need any help with this model.\r\n\r\n\r\nAlso, we have now added a step-by-step doc for how to add a model, you can find it here \r\nhttps://huggingface.co/transformers/add_new_model.html\r\n\r\nAlso have a look at the `cookiecutter` tool, which will help you generate lots of boilerplate code.\r\nhttps://github.com/huggingface/transformers/tree/master/templates/adding_a_new_model",

"Hi @patil-suraj\r\n\r\nThanks a lot.\r\n\r\nI wanted to add this model in 2020, but I faced a lot of issues and got busy with some other work. Seems like the code structure has changed quite a bit after that. I'll check the shared links and get back.",

"Hi @patil-suraj,\r\n\r\nI have started adding some code. For comparison, you can see: https://github.com/uclanlp/visualbert/blob/master/pytorch_pretrained_bert/modeling.py.\r\n\r\nPlease look at the `VisualBertEmbeddings` and `VisualBertModel`. I have checked these using dummy tensors. I am adding/fixing other kinds of down-stream models. Please tell me if you think this is going in the right direction, and if there are any things that to need to be kept in mind.\r\n\r\nI skipped testing the original repository for now, they don't have an entry point, and require a bunch of installs for loading /handling the dataset(s) which are huge.\r\n\r\nThere are several different checkpoints, even for the pre-trained models (with different embedding dimensions, etc.). For each we'll have to make a separate checkpoint, I guess.\r\n\r\nIn addition, I was wondering if we want to provide encoder-decoder features (`cross-attention`, `past_key_value`, etc.). I don't think it has been used in the original code, but it will certainly be a nice feature to have in case we have some task in the future which involves generation of text given an image and a text (probably there is something already).\r\n\r\nThanks :)",

"Hi @patil-suraj\r\n\r\nI would appreciate some feedback :) It'll help me know if I am going in the right direction,\r\n\r\nThanks,\r\nGunjan",

"Hey @gchhablani \r\n\r\nI'm thinking about this and will get back to you tomorrow :)",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored.",

"Unstale",

"Hi @LysandreJik \r\nThanks for reviewing.\r\n\r\nI'll make the suggested changes in a few (4-5) hours.\r\n\r\nThe `position_embeddings_type` is not exactly being used by the authors. They use absolute embeddings. They do have an `embedding_strategy_type` argument but it is unused and kept as `'plain'`.\r\n\r\nYes, almost all of it is copied from BERT. The new additions are the embeddings and the model classes.\r\n\r\nInitially, I did have plans to add actual examples and notebooks initially in the same PR :stuck_out_tongue: I guess I'll work on it now.",

"@LysandreJik Do you wanna take a final look?",

":') Thanks a lot for all your help @patil-suraj @LysandreJik @sgugger @patrickvonplaten\r\n\r\nEdit:\r\nI will be working on another PR soon to add more/better examples, and to use the `SimpleDetector` as used by the original authors. Probably will also attempt to create `TF`/`Flax` models.",

"The PR looks awesome! I still think however that we should add `get_visual_embeddings` with a Processor in this PR to have it complete. @gchhablani @patil-suraj - do you think it would be too time-consuming to add a `VisualBERTFeatureExtractor` here? Just don't really think people will be able to run an example after we merge it at the moment",

"@patrickvonplaten here `visual_embeddings` comes from a cnn backbone (ResNet) or object detection model. So I don't think\r\n we can add `VisualBERTFeatureExtractor`. The plan is to add the cnn model and a demo notebook in `research_projects` dir in a follow-up a PR.",

"> @patrickvonplaten here `visual_embeddings` comes from a cnn backbone (ResNet) or object detection model. So I don't think\r\n> we can add `VisualBERTFeatureExtractor`. The plan is to add the cnn model and a demo notebook in `research_projects` dir in a follow-up a PR.\r\n\r\nOk! Do we have another model that could give us those embeddings? ViT maybe?",

"@patrickvonplaten, I am not sure about ViT as I haven't used or read about it yet. The VisualBERT authors used Detectron-based (MaskRCNN with FPN-ResNet-101 backbone) features for 3 out of 4 tasks. Each \"token\" is actually an object detected and the token features/embeddings from the detectron classifier layer. In case of VCR task, they use a ResNet on given box coordinates. \r\n\r\nUnless ViT has/is an extractor similar to this, if we could use ViT, it'd be very different from the original and might not work with the provided pre-trained weights. :/\r\n",

"Adding a common Fast/Faster/MaskRCNN feature extractor, however, will help with LXMERT/VisualBERT and other models I'm planning to contribute in the future - ViLBERT (#11986), VL-BERT, (and possibly MCAN). \r\n\r\n**Edit**: There's already an example for LXMERT: https://github.com/huggingface/transformers/blob/master/examples/research_projects/lxmert/modeling_frcnn.py which I'll build upon.",

"Ok! Good to merge for me then",

"maybe not promote it yet",

"@patrickvonplaten Yes, won't be promoted before adding an example.\r\n\r\nThe plan forward is to add a detector and example notebook in `research_projects` dir in a follow-up PR.\r\n\r\nVerified that all slow tests are passing :)\r\n\r\nMerging!",

"Great model addition @gchhablani. Small note: can you fix the code examples of the HTML page?\r\n\r\nCurrently they look like this:\r\n\r\n\r\n\r\nThe Returns: statement should be below the Example: statement in `modeling_visual_bert.py`. Sorry for nitpicking ;) "

] | 1,614 | 1,622 | 1,622 | CONTRIBUTOR | null | This PR adds VisualBERT (See Closed Issue #5095). | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/10534/reactions",

"total_count": 4,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 4,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/10534/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/10534",

"html_url": "https://github.com/huggingface/transformers/pull/10534",

"diff_url": "https://github.com/huggingface/transformers/pull/10534.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/10534.patch",

"merged_at": 1622637788000

} |

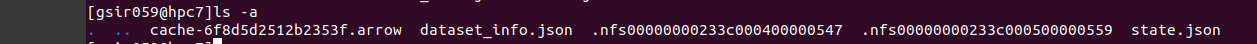

https://api.github.com/repos/huggingface/transformers/issues/10533 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/10533/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/10533/comments | https://api.github.com/repos/huggingface/transformers/issues/10533/events | https://github.com/huggingface/transformers/issues/10533 | 822,734,299 | MDU6SXNzdWU4MjI3MzQyOTk= | 10,533 | RAG with RAY workers keep repetitive copies of knowledge base as .nfs files until the process is done. | {

"login": "shamanez",

"id": 16892570,

"node_id": "MDQ6VXNlcjE2ODkyNTcw",

"avatar_url": "https://avatars.githubusercontent.com/u/16892570?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/shamanez",

"html_url": "https://github.com/shamanez",

"followers_url": "https://api.github.com/users/shamanez/followers",

"following_url": "https://api.github.com/users/shamanez/following{/other_user}",

"gists_url": "https://api.github.com/users/shamanez/gists{/gist_id}",

"starred_url": "https://api.github.com/users/shamanez/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/shamanez/subscriptions",

"organizations_url": "https://api.github.com/users/shamanez/orgs",

"repos_url": "https://api.github.com/users/shamanez/repos",

"events_url": "https://api.github.com/users/shamanez/events{/privacy}",

"received_events_url": "https://api.github.com/users/shamanez/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"I don't know what those .nfs are used for in Ray, is it safe to remove them @amogkam ?"

] | 1,614 | 1,617 | 1,617 | CONTRIBUTOR | null |

As mentioned in [this PR](https://github.com/huggingface/transformers/pull/10410), I update the **my_knowledge_dataset** object all the time. I save the new my_knowledge_dataset in the same place by removing previously saved stuff. But still, I see there are always some hidden files left. Please check the screenshot below.

I did some checks and found that these .nfs files being used by RAY. But my local KB is 30GB, so I do not want to add a .nfs file in every iteration. Is there a way to get over this?

@amogkam

@lhoestq

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/10533/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/10533/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/10532 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/10532/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/10532/comments | https://api.github.com/repos/huggingface/transformers/issues/10532/events | https://github.com/huggingface/transformers/issues/10532 | 822,728,018 | MDU6SXNzdWU4MjI3MjgwMTg= | 10,532 | Calling Inference API returns input text | {

"login": "gstranger",

"id": 36181416,

"node_id": "MDQ6VXNlcjM2MTgxNDE2",

"avatar_url": "https://avatars.githubusercontent.com/u/36181416?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/gstranger",

"html_url": "https://github.com/gstranger",

"followers_url": "https://api.github.com/users/gstranger/followers",

"following_url": "https://api.github.com/users/gstranger/following{/other_user}",

"gists_url": "https://api.github.com/users/gstranger/gists{/gist_id}",

"starred_url": "https://api.github.com/users/gstranger/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/gstranger/subscriptions",

"organizations_url": "https://api.github.com/users/gstranger/orgs",

"repos_url": "https://api.github.com/users/gstranger/repos",

"events_url": "https://api.github.com/users/gstranger/events{/privacy}",

"received_events_url": "https://api.github.com/users/gstranger/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Could you try to add `\"max_length\": 200` to your payload ? (also cc @Narsil )",

"Hi @gstranger ,\r\n\r\nCan you reproduce the problem locally ? It could be that your model simply produces EOS token with high probability (leading to having the exact same prompt as output)\r\n\r\nIf not, do you mind telling us your username by DM `[email protected]` so we can investigate this issue ?",

"Hello @Narsil ,\r\n\r\nWhen I run the same model locally, without either `max_length` or `min_length` I receive additional output, typically it will generate about 20-40 tokens. Also @patrickvonplaten when I add either that parameter or the `min_length` parameter the model still returns in less than a second with the same input text. I've sent an email with additional information. ",

"Hi @gstranger,\r\n\r\nIt does seem like a `max_length`. Your config defines it as `20` which is not long enough for the prompt that gets automatically added (because it's a transfo-xl model): https://github.com/huggingface/transformers/blob/master/src/transformers/pipelines/text_generation.py#L23\r\n\r\nYou can override both `max_length` and `prefix` within the config to override the default `transfo-xl` behavior (depending on how it was trained it might lead to significant perf boost, or loss).\r\n\r\nBy default the API will read the config first. Replying also with more information by email with more information. Just sharing here so that the community can get help too.\r\n",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored."

] | 1,614 | 1,619 | 1,619 | NONE | null | - `transformers` version: 4.4.0dev0

- Platform: MACosx

- Python version: 3.7

- PyTorch version (GPU?): N/A

- Tensorflow version (GPU?): N/A

- Using GPU in script?: No

- Using distributed or parallel set-up in script?: No

### Who can help

Library:

@patrickvonplaten

@LysandreJik

@sgugger

## Information

Model I am using (Bert, XLNet ...): TransformerXL

The problem arises when using:

* [ ] the official example scripts: (give details below)

* [x] my own modified scripts: (give details below)

The tasks I am working on is:

* [ ] an official GLUE/SQUaD task: (give the name)

* [x] my own task or dataset: (give details below)

## To reproduce

Steps to reproduce the behavior:

1. Upload private model to hub

2. Follow the tutorial around calling the Inference API (pasted below)

I've trained a `TransformerXLLMHeadModel` and am using the equivalent tokenizer class `TransfoXLTokenizer` on a custom dataset. I've saved both of these classes and have verified that loading the directory using the Auto classes succeeds and that the model and tokenizer are usable. When attempting to call the Inference API, I only get back my input text.

### Specific code from tutorial

```python

import json

import requests

API_TOKEN = "api_1234"

API_URL = "https://api-inference.huggingface.co/models/private/model"

headers = {"Authorization": f"Bearer {API_TOKEN}"}

def query(payload):

data = json.dumps(payload)

response = requests.request("POST", API_URL, headers=headers, data=data)

return json.loads(response.content.decode("utf-8"))

data = query({"inputs": "Begin 8Bars ", "temperature": .85, "num_return_sequences": 5})

# data = [{'generated_text': 'Begin First '}]

```

## Expected behavior

When calling the Inference API on my private model I would expect it to return additional output rather than just my input text.

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/10532/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/10532/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/10531 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/10531/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/10531/comments | https://api.github.com/repos/huggingface/transformers/issues/10531/events | https://github.com/huggingface/transformers/pull/10531 | 822,720,356 | MDExOlB1bGxSZXF1ZXN0NTg1Mjk4NTYy | 10,531 | Typo correction. | {

"login": "cliang1453",

"id": 14855272,

"node_id": "MDQ6VXNlcjE0ODU1Mjcy",

"avatar_url": "https://avatars.githubusercontent.com/u/14855272?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/cliang1453",

"html_url": "https://github.com/cliang1453",

"followers_url": "https://api.github.com/users/cliang1453/followers",

"following_url": "https://api.github.com/users/cliang1453/following{/other_user}",

"gists_url": "https://api.github.com/users/cliang1453/gists{/gist_id}",

"starred_url": "https://api.github.com/users/cliang1453/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/cliang1453/subscriptions",

"organizations_url": "https://api.github.com/users/cliang1453/orgs",

"repos_url": "https://api.github.com/users/cliang1453/repos",

"events_url": "https://api.github.com/users/cliang1453/events{/privacy}",

"received_events_url": "https://api.github.com/users/cliang1453/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [] | 1,614 | 1,614 | 1,614 | CONTRIBUTOR | null | # What does this PR do?

Fix a typo: DEBERTA_PRETRAINED_MODEL_ARCHIVE_LIST => DEBERTA_V2_PRETRAINED_MODEL_ARCHIVE_LIST in line 31.

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

Fixes #10529

## Before submitting

- [x] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors which may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @jplu

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @n1t0, @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

HF projects:

- nlp datasets: [different repo](https://github.com/huggingface/nlp)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/10531/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/10531/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/10531",

"html_url": "https://github.com/huggingface/transformers/pull/10531",

"diff_url": "https://github.com/huggingface/transformers/pull/10531.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/10531.patch",

"merged_at": 1614976030000

} |

https://api.github.com/repos/huggingface/transformers/issues/10530 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/10530/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/10530/comments | https://api.github.com/repos/huggingface/transformers/issues/10530/events | https://github.com/huggingface/transformers/issues/10530 | 822,713,227 | MDU6SXNzdWU4MjI3MTMyMjc= | 10,530 | Test/Predict on summarization task | {

"login": "zyxnlp",

"id": 31751455,

"node_id": "MDQ6VXNlcjMxNzUxNDU1",

"avatar_url": "https://avatars.githubusercontent.com/u/31751455?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/zyxnlp",

"html_url": "https://github.com/zyxnlp",

"followers_url": "https://api.github.com/users/zyxnlp/followers",

"following_url": "https://api.github.com/users/zyxnlp/following{/other_user}",

"gists_url": "https://api.github.com/users/zyxnlp/gists{/gist_id}",

"starred_url": "https://api.github.com/users/zyxnlp/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/zyxnlp/subscriptions",

"organizations_url": "https://api.github.com/users/zyxnlp/orgs",

"repos_url": "https://api.github.com/users/zyxnlp/repos",

"events_url": "https://api.github.com/users/zyxnlp/events{/privacy}",

"received_events_url": "https://api.github.com/users/zyxnlp/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Hi,\r\n\r\ncould you please ask questions related to training of models on the [forum](https://discuss.huggingface.co/)?\r\n\r\nAll questions related to fine-tuning a model for summarization on CNN can be found [here](https://discuss.huggingface.co/search?q=summarization%20cnn) for example. \r\n\r\n",

"> Hi,\r\n> \r\n> could you please ask questions related to training of models on the [forum](https://discuss.huggingface.co/)?\r\n> \r\n> All questions related to fine-tuning a model for summarization on CNN can be found [here](https://discuss.huggingface.co/search?q=summarization%20cnn) for example.\r\n\r\n@NielsRogge Oh, thank you for your reminder, that really helped!",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored."

] | 1,614 | 1,619 | 1,619 | NONE | null | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version: 4.4.0.dev0

- Platform: Linux-5.3.0-53-generic-x86_64-with-glibc2.10

- Python version: 3.8.3

- PyTorch version (GPU?): 1.7.1 (True)

- Tensorflow version (GPU?): not installed (NA)

- Using GPU in script?: <fill in>

- Using distributed or parallel set-up in script?: <fill in>

### Who can help

<!-- Your issue will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

@patrickvonplaten, @patil-suraj

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @jplu

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

HF projects:

- nlp datasets: [different repo](https://github.com/huggingface/nlp)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

- maintained examples using bart: @patrickvonplaten, @patil-suraj

## Information

Model I am using (Bart):

The problem arises when using:

* [ ] only have train and eval example, do not have test/predict example script

* [ ] my own modified scripts: (give details below)

* [ ] CUDA_VISIBLE_DEVICES=2,3 python examples/seq2seq/run_seq2seq.py \

--model_name_or_path /home/yxzhou/experiment/ASBG/output/xsum_bart_large/ \

--do_predict \

--task summarization \

--dataset_name xsum \

--output_dir /home/yxzhou/experiment/ASBG/output/xsum_bart_large/test/ \

--num_beams 1 \

The tasks I am working on is:

* [ ] CNNDAILYMAIL , XSUM

## Expected behavior

<!-- A clear and concise description of what you would expect to happen. -->

Use the above script to test on CNNDAILYMAIL and XSUM dataset, the program seems will be always stuck at training_step (e.g., 26/709)

Could you please kindly provide a test/predict example script of the summarization task (e.g., XSUM). Thank you so much! | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/10530/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/10530/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/10529 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/10529/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/10529/comments | https://api.github.com/repos/huggingface/transformers/issues/10529/events | https://github.com/huggingface/transformers/issues/10529 | 822,685,396 | MDU6SXNzdWU4MjI2ODUzOTY= | 10,529 | Typo in deberta_v2/__init__.py | {

"login": "cliang1453",

"id": 14855272,

"node_id": "MDQ6VXNlcjE0ODU1Mjcy",

"avatar_url": "https://avatars.githubusercontent.com/u/14855272?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/cliang1453",

"html_url": "https://github.com/cliang1453",

"followers_url": "https://api.github.com/users/cliang1453/followers",

"following_url": "https://api.github.com/users/cliang1453/following{/other_user}",

"gists_url": "https://api.github.com/users/cliang1453/gists{/gist_id}",

"starred_url": "https://api.github.com/users/cliang1453/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/cliang1453/subscriptions",

"organizations_url": "https://api.github.com/users/cliang1453/orgs",

"repos_url": "https://api.github.com/users/cliang1453/repos",

"events_url": "https://api.github.com/users/cliang1453/events{/privacy}",

"received_events_url": "https://api.github.com/users/cliang1453/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"That's correct! Do you want to open a PR to fix it?"

] | 1,614 | 1,614 | 1,614 | CONTRIBUTOR | null | https://github.com/huggingface/transformers/blob/c503a1c15ec1b11e69a3eaaf06edfa87c05a2849/src/transformers/models/deberta_v2/__init__.py#L31

Should be '' DEBERTA_V2_PRETRAINED_MODEL_ARCHIVE_LIST ''. | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/10529/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/10529/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/10528 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/10528/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/10528/comments | https://api.github.com/repos/huggingface/transformers/issues/10528/events | https://github.com/huggingface/transformers/issues/10528 | 822,678,374 | MDU6SXNzdWU4MjI2NzgzNzQ= | 10,528 | Different vocab_size between model and tokenizer of mT5 | {

"login": "cih9088",

"id": 11530592,

"node_id": "MDQ6VXNlcjExNTMwNTky",

"avatar_url": "https://avatars.githubusercontent.com/u/11530592?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/cih9088",

"html_url": "https://github.com/cih9088",

"followers_url": "https://api.github.com/users/cih9088/followers",

"following_url": "https://api.github.com/users/cih9088/following{/other_user}",

"gists_url": "https://api.github.com/users/cih9088/gists{/gist_id}",

"starred_url": "https://api.github.com/users/cih9088/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/cih9088/subscriptions",

"organizations_url": "https://api.github.com/users/cih9088/orgs",

"repos_url": "https://api.github.com/users/cih9088/repos",

"events_url": "https://api.github.com/users/cih9088/events{/privacy}",

"received_events_url": "https://api.github.com/users/cih9088/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Hello! This is a duplicate of https://github.com/huggingface/transformers/issues/4875, https://github.com/huggingface/transformers/issues/10144 and https://github.com/huggingface/transformers/issues/9247\r\n\r\n@patrickvonplaten, maybe we could do something about this in the docs? In the docs we recommend doing this:\r\n```py\r\nmodel.resize_token_embedding(len(tokenizer))\r\n```\r\nbut this is unfortunately false for T5!",

"@LysandreJik @cih9088 , actually I think doing: \r\n\r\n```python\r\nmodel.resize_token_embedding(len(tokenizer))\r\n```\r\n\r\nis fine -> it shouldn't throw an error & logically it should also be correct...Can you try it out?\r\n",

"```python\r\nmodel.resize_token_embedding(len(tokenizer))\r\n```\r\nThis works perfectly fine but here is the thing.\r\n\r\nOne might add the `model.resize_token_embedding(len(tokenizer))` in their code and use other configuration packages such as `hydra` from Facebook to train models with additional tokens or without them dynamically at runtime.\r\nHe would naturally think that `vocab_size` of tokenizer (no tokens added) and `vocab_size` of model are the same because other models are.\r\nEventually, he fine-tunes the `google/mt5-base` model without added tokens but because of `model.resize_token_embedding(len(tokenizer))`, model he will fine-tune is not the same with `google/mt5-base`.\r\nAfter training, he wants to load the trained model to test but the model complains about inconsistent embedding size between a loaded model which is `google/mt5-base`, and the trained model which has a smaller size of token embedding.\r\n\r\nOf course, we could resize token embedding before loading model, but what matters is inconsistency with other models I think.\r\nI reckon that people would not very much care about how the dictionary is composed in tokenizer. Maybe we add some dummy tokens to the tokenizer to keep consistency with other huggingface models or add documentation about it (I could not find any).\r\n\r\nWhat do you think?",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored.",

"\r\n> Hello! This is a duplicate of #4875, #10144 and #9247\r\n> \r\n> @patrickvonplaten, maybe we could do something about this in the docs? In the docs we recommend doing this:\r\n> \r\n> ```python\r\n> model.resize_token_embedding(len(tokenizer))\r\n> ```\r\n> \r\n> but this is unfortunately false for T5!\r\n\r\nWhat is the correct way to resize_token_embedding for T5/mT5?"

] | 1,614 | 1,628 | 1,619 | NONE | null | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version: 4.1.1

- Platform: ubuntu 18.04

- Python version: 3.8.5

- PyTorch version (GPU?): 1.7.1

### Who can help

@patrickvonplaten

<!-- Your issue will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @jplu

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

HF projects:

- nlp datasets: [different repo](https://github.com/huggingface/nlp)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

## To reproduce

Steps to reproduce the behavior:

```python

from transformers import AutoModelForSeq2SeqLM

from transformers import AutoTokenizer

mt5s = ['google/mt5-base', 'google/mt5-small', 'google/mt5-large', 'google/mt5-xl', 'google/mt5-xxl']

for mt5 in mt5s:

model = AutoModelForSeq2SeqLM.from_pretrained(mt5)

tokenizer = AutoTokenizer.from_pretrained(mt5)

print()

print(mt5)

print(f"tokenizer vocab: {tokenizer.vocab_size}, model vocab: {model.config.vocab_size}")

```

This is problematic in case when one addes some (special) tokens to tokenizer and resizes the token embedding of the model with `model.resize_token_embedding(len(tokenizer))`

<!-- If you have code snippets, error messages, stack traces please provide them here as well.

Important! Use code tags to correctly format your code. See https://help.github.com/en/github/writing-on-github/creating-and-highlighting-code-blocks#syntax-highlighting

Do not use screenshots, as they are hard to read and (more importantly) don't allow others to copy-and-paste your code.-->

## Expected behavior

vocab_size for model and tokenizer should be the same?

<!-- A clear and concise description of what you would expect to happen. -->

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/10528/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/10528/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/10527 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/10527/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/10527/comments | https://api.github.com/repos/huggingface/transformers/issues/10527/events | https://github.com/huggingface/transformers/pull/10527 | 822,658,264 | MDExOlB1bGxSZXF1ZXN0NTg1MjQ3NjE0 | 10,527 | Refactoring checkpoint names for multiple models | {

"login": "danielpatrickhug",

"id": 38571110,

"node_id": "MDQ6VXNlcjM4NTcxMTEw",

"avatar_url": "https://avatars.githubusercontent.com/u/38571110?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/danielpatrickhug",

"html_url": "https://github.com/danielpatrickhug",

"followers_url": "https://api.github.com/users/danielpatrickhug/followers",

"following_url": "https://api.github.com/users/danielpatrickhug/following{/other_user}",

"gists_url": "https://api.github.com/users/danielpatrickhug/gists{/gist_id}",

"starred_url": "https://api.github.com/users/danielpatrickhug/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/danielpatrickhug/subscriptions",

"organizations_url": "https://api.github.com/users/danielpatrickhug/orgs",

"repos_url": "https://api.github.com/users/danielpatrickhug/repos",

"events_url": "https://api.github.com/users/danielpatrickhug/events{/privacy}",

"received_events_url": "https://api.github.com/users/danielpatrickhug/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"...The test pasts on my local machine, I ran make test, style, quality, fixup. I dont know why this failed..",

"I just rebased the PR to ensure that the tests pass. We'll merge if all is green!",

"Thanks guys"

] | 1,614 | 1,615 | 1,614 | CONTRIBUTOR | null | Hi, @sgugger reupload without datasets dir and added tf_modeling files, removed extra decorator in distilbert.

Linked to #10193, this PR refactors the checkpoint names in one private constant.

one note: longformer_tf has two checkpoints "allenai/longformer-base-4096" & "allenai/longformer-large-4096-finetuned-triviaqa". I set the checkpoint constant to "allenai/longformer-base-4096" and left the one decorator with "allenai/longformer-large-4096-finetuned-triviaqa".

Fixes #10193

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [x] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/10527/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/10527/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/10527",

"html_url": "https://github.com/huggingface/transformers/pull/10527",

"diff_url": "https://github.com/huggingface/transformers/pull/10527.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/10527.patch",

"merged_at": 1614985615000

} |

https://api.github.com/repos/huggingface/transformers/issues/10526 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/10526/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/10526/comments | https://api.github.com/repos/huggingface/transformers/issues/10526/events | https://github.com/huggingface/transformers/pull/10526 | 822,581,103 | MDExOlB1bGxSZXF1ZXN0NTg1MTgzMjYy | 10,526 | Fix Adafactor documentation (recommend correct settings) | {

"login": "jsrozner",

"id": 1113285,

"node_id": "MDQ6VXNlcjExMTMyODU=",

"avatar_url": "https://avatars.githubusercontent.com/u/1113285?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/jsrozner",

"html_url": "https://github.com/jsrozner",

"followers_url": "https://api.github.com/users/jsrozner/followers",

"following_url": "https://api.github.com/users/jsrozner/following{/other_user}",

"gists_url": "https://api.github.com/users/jsrozner/gists{/gist_id}",

"starred_url": "https://api.github.com/users/jsrozner/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/jsrozner/subscriptions",

"organizations_url": "https://api.github.com/users/jsrozner/orgs",

"repos_url": "https://api.github.com/users/jsrozner/repos",

"events_url": "https://api.github.com/users/jsrozner/events{/privacy}",

"received_events_url": "https://api.github.com/users/jsrozner/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | {

"login": "stas00",

"id": 10676103,

"node_id": "MDQ6VXNlcjEwNjc2MTAz",

"avatar_url": "https://avatars.githubusercontent.com/u/10676103?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/stas00",

"html_url": "https://github.com/stas00",

"followers_url": "https://api.github.com/users/stas00/followers",

"following_url": "https://api.github.com/users/stas00/following{/other_user}",

"gists_url": "https://api.github.com/users/stas00/gists{/gist_id}",

"starred_url": "https://api.github.com/users/stas00/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/stas00/subscriptions",

"organizations_url": "https://api.github.com/users/stas00/orgs",

"repos_url": "https://api.github.com/users/stas00/repos",

"events_url": "https://api.github.com/users/stas00/events{/privacy}",

"received_events_url": "https://api.github.com/users/stas00/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "stas00",

"id": 10676103,

"node_id": "MDQ6VXNlcjEwNjc2MTAz",

"avatar_url": "https://avatars.githubusercontent.com/u/10676103?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/stas00",

"html_url": "https://github.com/stas00",

"followers_url": "https://api.github.com/users/stas00/followers",

"following_url": "https://api.github.com/users/stas00/following{/other_user}",

"gists_url": "https://api.github.com/users/stas00/gists{/gist_id}",

"starred_url": "https://api.github.com/users/stas00/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/stas00/subscriptions",

"organizations_url": "https://api.github.com/users/stas00/orgs",

"repos_url": "https://api.github.com/users/stas00/repos",

"events_url": "https://api.github.com/users/stas00/events{/privacy}",

"received_events_url": "https://api.github.com/users/stas00/received_events",

"type": "User",

"site_admin": false

}

] | [

"Is this part correct?\r\n\r\n> Recommended T5 finetuning settings:\r\n> - Scheduled LR warm-up to fixed LR\r\n> - disable relative updates\r\n> - use clip threshold: https://arxiv.org/abs/2004.14546\r\n\r\nIn particular:\r\n- are we supposed to do scheduled LR? adafactor handles this no?\r\n- we *should not* disable relative updates\r\n- i don't know what clip threshold means in this context",

"@sshleifer can you accept this documentation change?",

"No but @stas00 can!",

"@jsrozner, thank you for the PR. \r\n\r\nReading https://github.com/huggingface/transformers/issues/7789 it appears that the `Recommended T5 finetuning settings` are invalid.\r\n\r\nSo if we are fixing this, in addition to changing the example the prose above it should be synced as well. \r\n\r\nI don't know where the original recommendation came from - do you by chance have a source we could point to for the corrected recommendation? If you know that is, if not, please don't worry.\r\n\r\nThank you.\r\n\r\n",

"I receive the following error when using this the \"recommended way\":\r\n```{python}\r\nTraceback (most recent call last):\r\n File \"./folder_aws/transformers/examples/seq2seq/run_seq2seq.py\", line 759, in <module>\r\n main()\r\n File \"./folder_aws/transformers/examples/seq2seq/run_seq2seq.py\", line 651, in main\r\n train_result = trainer.train(resume_from_checkpoint=checkpoint)\r\n File \"/home/alejandro.vaca/SpainAI_Hackaton_2020/folder_aws/transformers/src/transformers/trainer.py\", line 909, in train\r\n self.create_optimizer_and_scheduler(num_training_steps=max_steps)\r\n File \"/home/alejandro.vaca/SpainAI_Hackaton_2020/folder_aws/transformers/src/transformers/trainer.py\", line 660, in create_optimizer_and_scheduler\r\n self.optimizer = optimizer_cls(optimizer_grouped_parameters, **optimizer_kwargs)\r\n File \"/home/alejandro.vaca/SpainAI_Hackaton_2020/folder_aws/transformers/src/transformers/optimization.py\", line 452, in __init__\r\n raise ValueError(\"warmup_init requires relative_step=True\")\r\nValueError: warmup_init requires relative_step=True\r\n```\r\n\r\nFollowing this example in documentation:\r\n\r\n```{python}\r\nAdafactor(model.parameters(), lr=1e-3, relative_step=False, warmup_init=True)\r\n```\r\n@sshleifer @stas00 @jsrozner ",

"@alexvaca0, what was the command line you run when you received this error?\r\n\r\nHF Trainer doesn't set `warmup_init=True`. Unless you modified the script?\r\n\r\nhttps://github.com/huggingface/transformers/blob/21e86f99e6b91af2e4df3790ba6c781e85fa0eb5/src/transformers/trainer.py#L649-L651\r\n\r\nIt is possible that the whole conflict comes from misunderstanding how this optimizer has to be used?\r\n\r\n> To use a manual (external) learning rate schedule you should set `scale_parameter=False` and `relative_step=False`.\r\n\r\nwhich is what the Trainer does at the moment. \r\n\r\nand:\r\n> relative_step (:obj:`bool`, `optional`, defaults to :obj:`True`):\r\n> If True, time-dependent learning rate is computed instead of external learning rate\r\n\r\nIs it possible that you are trying to use both an external and the internal scheduler at the same time?\r\n\r\nIt'd help a lot of you could show us the code that breaks, (perhaps on colab?) and how you invoke it. Basically, help us reproduce it.\r\n\r\nThank you.",

"Hi, @stas00 , first thank you very much for looking into it so fast. I forgot to say it, but yes, I changed the code in Trainer because I was trying to use the recommended settings for training T5 (I mean, setting an external learning rate with warmup_init = True as in the documentation. `Training without LR warmup or clip threshold is not recommended. Additional optimizer operations like gradient clipping should not be used alongside Adafactor.` From your answer, I understand that Trainer was designed for using Adam, as it uses the external learning rate scheduler and doesn't let you pass None as learning rate. Is there a workaround to be able to use the Trainer class with Adafactor following Adafactor settings recommended in the documentation? I'd also like to try using Adafactor without specifying the learning rate, would that be possible? I think maybe this documentation causes a little bit of confusion, because when you set the parameters specified in it `Adafactor(model.parameters(), lr=1e-3, relative_step=False, warmup_init=True)` it breaks. \r\n\r\n\r\n",

"OK, so first, as @jsrozner PR goes and others commented, the current recommendation appears to be invalid.\r\n\r\nSo we want to gather all the different combinations that work and identify which of them provides the best outcome. I originally replied to this PR asking if someone knows of an authoritative paper we could copy the recommendation from - i.e. finding someone who already did the quality studies, so that we won't have it. So I'm all ears if any of you knows of such source.\r\n\r\nNow to your commentary, @alexvaca0, while HF trainer has Adam as the default it has `--adafactor` which enables it in the mode with using the external scheduler. Surely, we could change the Trainer to skip the external scheduler (or perhaps simpler feeding it some no-op scheduler) and instead use this recommendation if @sgugger agrees with that. But we first need to see that it provides a better outcome in the general case. Or alternatively to make `--adafactor` configurable so it could support more than just one way.\r\n\r\nFor me personally I want to understand first the different combinations, what are the impacts and how many of those combinations should we expose through the Trainer. e.g. like apex optimization level, we could have named combos `--adafactor setup1`, `--adafactor setup2` and would activate the corresponding configuration. But first let's compile the list of desirable combos.\r\n\r\nWould any of the current participants be interested in taking a lead on that? I'm asking you since you are already trying to get the best outcome with your data and so are best positioned to judge which combinations work the best for what situation.\r\n\r\nOnce we compiled the data it'd be trivial to update the documented recommendation and potentially extend HF Trainer to support more than one setting for Adafactor.\r\n\r\n",

"I only ported the `--adafactor` option s it was implemented for the `Seq2SeqTrainer` to keep the commands using it working as they were. The `Trainer` does not have vocation to support all the optimizers and all their possible setups, just one sensible default that works well, that is the reason you can:\r\n- pass an `optimizer` at init\r\n- subclass and override `create_optimizer`.\r\n\r\nIn retrospect, I shouldn't have ported the Adafactor option and it should have stayed just in the script using it.",

"Thank you for your feedback, @sgugger. \r\n\r\nSo let's leave the trainer as it is and let's then solve this for Adafactor as just an optimizer and then document the best combinations. ",

"Per my comment on #7789, I observed that \r\n> I can confirm that `Adafactor(lr=1e-3, relative_step=False, warmup_init=False)` seems to break training (i.e. I observe no learning over 4 epochs, whereas `Adafactor(model.parameters(), relative_step=True, warmup_init=True, lr=None)` works well (much better than adam)\r\n\r\nGiven that relative_step and warmup_init must take on the same value, it seems like there is only one configuration that is working?\r\n\r\nBut, this is also confusing (see my comment above): https://github.com/huggingface/transformers/pull/10526#issuecomment-791012771\r\n\r\n> > Recommended T5 finetuning settings:\r\n> > - Scheduled LR warm-up to fixed LR\r\n> > - disable relative updates\r\n> > - use clip threshold: https://arxiv.org/abs/2004.14546\r\n> \r\n> In particular:\r\n> - are we supposed to do scheduled LR? adafactor handles this no?\r\n> - we *should not* disable relative updates\r\n> - i don't know what clip threshold means in this context\r\n",

"I first validated that HF `Adafactor` is 100% identical to the latest [fairseq version](https://github.com/pytorch/fairseq/blob/5273bbb7c18a9b147e3f0cfc97121cc945a962bd/fairseq/optim/adafactor.py).\r\n\r\nI then tried to find out the source of these recommendations and found:\r\n\r\n1. https://discuss.huggingface.co/t/t5-finetuning-tips/684/3\r\n```\r\nlr=0.001, scale_parameter=False, relative_step=False\r\n```\r\n2. https://discuss.huggingface.co/t/t5-finetuning-tips/684/22 which is your comment @jsrozner where you propose that the opposite combination works well:\r\n\r\n```\r\nlr=None, relative_step=True, warmup_init=True\r\n```\r\n\r\nIf both found to be working, I propose we solve this conundrum by documenting this as following:\r\n```\r\n Recommended T5 finetuning settings (https://discuss.huggingface.co/t/t5-finetuning-tips/684/3):\r\n\r\n - Scheduled LR warm-up to fixed LR\r\n - disable relative updates\r\n - scale_parameter=False\r\n - use clip threshold: https://arxiv.org/abs/2004.14546\r\n\r\n Example::\r\n\r\n Adafactor(model.parameters(), scale_parameter=False, relative_step=False, warmup_init=False, lr=1e-3)\r\n\r\n Others reported the following combination to work well::\r\n\r\n Adafactor(model.parameters(), scale_parameter=False, relative_step=True, warmup_init=True, lr=None)\r\n\r\n - Training without LR warmup or clip threshold is not recommended. Additional optimizer operations like\r\n gradient clipping should not be used alongside Adafactor.\r\n```\r\n\r\nhttps://discuss.huggingface.co/t/t5-finetuning-tips/684/22 Also highly recommends to turn `scale_parameter=False` - so I added that to the documentation and the example above in both cases. Please correct me if I'm wrong.\r\n\r\nAnd @jsrozner's correction in this PR is absolutely right to the point.\r\n\r\nPlease let me know if my proposal makes sense, in particular I'd like your validation, @jsrozner, since I added your alternative proposal. And don't have any other voices to agree or disagree with it.\r\n\r\nThank you!\r\n\r\n\r\n\r\n\r\n",

"> Is this part correct?\r\n> \r\n> > ```\r\n> > Recommended T5 finetuning settings:\r\n> > - Scheduled LR warm-up to fixed LR\r\n> > - disable relative updates\r\n> > - use clip threshold: https://arxiv.org/abs/2004.14546\r\n> > ```\r\n> \r\n> In particular:\r\n> \r\n> * are we supposed to do scheduled LR? adafactor handles this no?\r\n\r\nsee my last comment - it depends on whether we use the external LR scheduler or not.\r\n\r\n> * we _should not_ disable relative updates\r\n\r\nsee my last comment - it depends on `warmup_init`'s value\r\n\r\n> * i don't know what clip threshold means in this context\r\n\r\nthis?\r\n```\r\n def __init__(\r\n [....]\r\n clip_threshold=1.0,\r\n```",

"I'm running some experiments, playing around with Adafactor parameters. I'll post here which configuration has best results. From T5 paper, they used the following parameters for fine-tuning: Adafactor with *constant* lr 1e-3, with batch size 128, if I understood the paper well. Therefore, I find it appropriate the documentation changes mentioned above, leaving the recommendations from the paper while mentioning other configs that have worked well for other users. In my case, for example, the configuration from the paper doesn't work very well and I quickly overfit. ",

"Finally, I'm trying to understand the confusing:\r\n```\r\n - use clip threshold: https://arxiv.org/abs/2004.14546\r\n [...]\r\n gradient clipping should not be used alongside Adafactor.\r\n```\r\n\r\nAs the paper explains these are 2 different types of clipping.\r\n\r\nSince the code is:\r\n```\r\nupdate.div_((self._rms(update) / group[\"clip_threshold\"]).clamp_(min=1.0))\r\n```\r\nthis probably means that the default `clip_threshold=1.0` is in effect disables clip threshold. \r\n\r\nI can't find any mentioning of clip threshold in https://arxiv.org/abs/2004.14546 - is this a wrong paper? Perhaps it needed to link to the original paper https://arxiv.org/abs/1804.04235 where clipping is actually discussed? I think it's the param `d` in the latter paper and it proposes to get the best results with `d=1.0` without learning rate warmup:\r\n\r\npage 5 from https://arxiv.org/pdf/1804.04235:\r\n> We added update clipping to the previously described fast-\r\n> decay experiments. For the experiment without learning rate\r\n> warmup, update clipping with d = 1 significantly amelio-\r\n> rated the instability problem – see Table 2 (A) vs. (H). With\r\n> d = 2, the instability was not improved. Update clipping\r\n> did not significantly affect the experiments with warmup\r\n> (with no instability problems).\r\n\r\nSo I will change the doc to a non-ambiguous:\r\n\r\n`use clip_threshold=1.0 `",

"OK, so here is the latest proposal. I re-organized the notes:\r\n\r\n```\r\n Recommended T5 finetuning settings (https://discuss.huggingface.co/t/t5-finetuning-tips/684/3):\r\n\r\n - Training without LR warmup or clip_threshold is not recommended. \r\n * use scheduled LR warm-up to fixed LR\r\n * use clip_threshold=1.0 (https://arxiv.org/abs/1804.04235)\r\n - Disable relative updates\r\n - Use scale_parameter=False\r\n - Additional optimizer operations like gradient clipping should not be used alongside Adafactor\r\n\r\n Example::\r\n\r\n Adafactor(model.parameters(), scale_parameter=False, relative_step=False, warmup_init=False, lr=1e-3)\r\n\r\n Others reported the following combination to work well::\r\n\r\n Adafactor(model.parameters(), scale_parameter=False, relative_step=True, warmup_init=True, lr=None)\r\n```\r\n\r\nI added these into this PR, please have a look.\r\n",

"Let's just wait to hear from both @jsrozner and @alexvaca0 to ensure that my edits are valid before merging.",

"I observed that `Adafactor(lr=1e-3, relative_step=False, warmup_init=False)` failed to lead to any learning. I guess this is because I didn't change `scale_parameter` to False? I can try rerunning with scale_param false.\r\n\r\nAnd when I ran with `Adafactor(model.parameters(), relative_step=True, warmup_init=True, lr=None)`, I *did not* set `scale_parameter=False`. Before adding the \"others seem to have success with ...\" bit, we should check on the effect of scale_parameter.\r\n\r\nRegarding clip_threshold - just confirming that the comment is correct that when using adafactor we should *not* have any other gradient clipping (e.g. `nn.utils.clip_grad_norm_()`)?\r\n\r\nSemi-related per @alexvaca0, regarding T5 paper's recommended batch_size: is the 128 recommendation agnostic to the length of input sequences? Or is there a target number of tokens per batch that would be optimal? (E.g. input sequences of max length 10 tokens vs input sequences of max length 100 tokens -- should we expect 128 to work optimally for both?)\r\n\r\nBut most importantly, shouldn't we change the defaults so that a call to `Adafactor(model.paramaters())` == `Adafactor(model.parameters(), scale_parameter=False, relative_step=False, warmup_init=False, lr=1e-3)`\r\n\r\ni.e, we default to what we suggest?",

"> I observed that Adafactor(lr=1e-3, relative_step=False, warmup_init=False) failed to lead to any learning. I guess this is because I didn't change scale_parameter to False? I can try rerunning with scale_param false.\r\n\r\nYes, please and thank you!\r\n\r\n\r\n\r\n\r\n> Regarding clip_threshold - just confirming that the comment is correct that when using adafactor we should not have any other gradient clipping (e.g. nn.utils.clip_grad_norm_())?\r\n\r\nThank you for validating this, @jsrozner \r\n\r\nIs the current incarnation of the doc clear wrt this subject matter or should we add an explicit example?\r\n\r\nOne thing I'm concerned about is that the Trainer doesn't validate this and will happily run `clip_grad_norm` with Adafactor\r\n\r\nMight we need to add to:\r\n\r\nhttps://github.com/huggingface/transformers/blob/9f8fa4e9730b8e658bcd5625610cc70f3a019818/src/transformers/trainer.py#L649-L651\r\n\r\n```\r\nif self.args.max_grad_norm:\r\n raise ValueError(\"don't use max_grad_norm with adafactor\")\r\n```\r\n\r\n\r\n> But most importantly, shouldn't we change the defaults so that a call to Adafactor(model.paramaters()) == `Adafactor(model.parameters(), scale_parameter=False, relative_step=False, warmup_init=False, lr=1e-3)`\r\n\r\nSince we copied the code verbatim from fairseq, it might be a good idea to keep the defaults the same? I'm not attached to either way. @sgugger what do you think?\r\n\r\nedit: I don't think we can/should since it may break people's code that relies on the current defaults.",

"> > Regarding clip_threshold - just confirming that the comment is correct that when using adafactor we should not have any other gradient clipping (e.g. nn.utils.clip_grad_norm_())?\r\n> \r\n> Thank you for validating this, @jsrozner\r\n\r\nSorry, I didn't validate this. I wanted to confirm with you all that this is correct.\r\n\r\n> > But most importantly, shouldn't we change the defaults so that a call to Adafactor(model.paramaters()) == `Adafactor(model.parameters(), scale_parameter=False, relative_step=False, warmup_init=False, lr=1e-3)`\r\n> \r\n> Since we copied the code verbatim from fairseq, it might be a good idea to keep the defaults the same? I'm not attached to either way. @sgugger what do you think?\r\n\r\nAlternative is to not provide defaults for these values and force the user to read documentation and decide what he/she wants. Can provide the default implementation as well as Adafactor's recommended settings\r\n\r\n",

"> I'm running some experiments, playing around with Adafactor parameters. I'll post here which configuration has best results. From T5 paper, they used the following parameters for fine-tuning: Adafactor with _constant_ lr 1e-3, with batch size 128, if I understood the paper well. Therefore, I find it appropriate the documentation changes mentioned above, leaving the recommendations from the paper while mentioning other configs that have worked well for other users. In my case, for example, the configuration from the paper doesn't work very well and I quickly overfit.\r\n\r\n@alexvaca0 \r\nWhat set of adafactor params did you find work well when you were finetuning t5?",

"> Sorry, I didn't validate this. I wanted to confirm with you all that this is correct.\r\n\r\nI meant validating as in reading over and checking that it makes sense. So all is good. Thank you for extra clarification so we were on the same page, @jsrozner \r\n \r\n> Alternative is to not provide defaults for these values and force the user to read documentation and decide what he/she wants. Can provide the default implementation as well as Adafactor's recommended settings\r\n\r\nas I appended to my initial comment, this would be a breaking change. So if it's crucial that we do that, this would need to happen in the next major release.\r\n",

"Or maybe add a warning message that indicates that default params may not be optimal? It will be logged only a single time at optimizer init so not too annoying.\r\n\r\n`log.warning('Initializing Adafactor. If you are using default settings, it is recommended that you read the documentation to ensure that these are optimal for your use case.)`",

"But we now changed it propose two different ways - which one is the recommended one? The one used by the Trainer?\r\n\r\nSince it's pretty clear that there is more than one way, surely the user will find their way to the doc if they aren't happy with the results.\r\n\r\n",

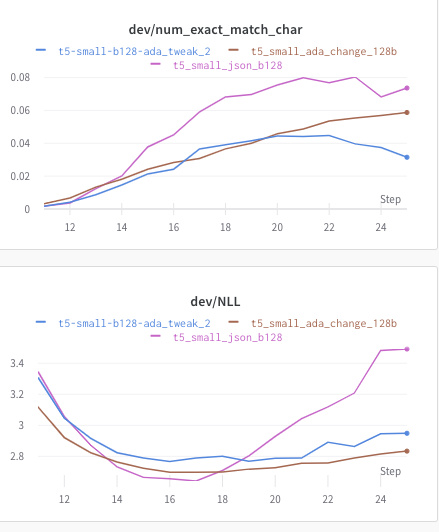

"I ran my model under three different adafactor setups:\r\n\r\n```python\r\n optimizer = Adafactor(self.model.parameters(),\r\n relative_step=True,\r\n warmup_init=True)\r\n```\r\n```python\r\noptimizer = Adafactor(self.model.parameters(),\r\n relative_step=True,\r\n warmup_init=True,\r\n scale_parameter=False)\r\n```\r\n```python\r\n optimizer = Adafactor(self.model.parameters(),\r\n lr=1e-3,\r\n relative_step=False,\r\n warmup_init=False,\r\n scale_parameter=False)\r\n```\r\n\r\nI track exact match and NLL on the dev set. Epochs are tracked at the bottom. They start at 11 because of how I'm doing things. (i.e. x=11 => epoch=1)\r\n\r\nNote that I'm training a t5-small model on 80,000 examples, so maybe there's some variability with the sort of model we're training?\r\n\r\n\r\n(Image works if you navigate to the link, but seems not to appear?)\r\n\r\npurple is (1)\r\nblue is (2) and by far the worst (i.e. shows that scale_param should be set to True if we are using relative_step)\r\nbrown is (3)\r\n\r\nIn particular, it looks like scale_param should be True for the setting under \" Others reported the following combination to work well::\"\r\n\r\nOn the other hand, it looks like for a t5-large model, (3) does better than (1) (although I also had substantially different batch sizes). ",

"Thank you, @jsrozner, for running these experiments and the analysis.\r\n\r\nSo basically we should adjust \" Others reported the following combination to work well::\" to `scale_param=True`, correct? \r\n\r\nInterestingly we end up with 2 almost total opposites.",

"@jsrozner Batch size and learning rate configuration go hand in hand, therefore it's difficult to know about your last reflexion, as having different different batch sizes lead to different gradient estimations (in particular, the lower the batch size, the worse your gradient estimation is), the larger your batch size, the larger your learning rate can be without negatively affecting performance. \r\nFor the rest of the experiments, thanks a lot, the comparison is very clear and this will be very helpful for those of us who want to have some \"default\" parameters to start from. I see a clear advantage to leave the learning rate as None, as when setting an external learning rate we typically have to make experiments to find the optimal one for that concrete problem, so if it provides results similar or better to the ones provided by the paper's recommended parameters, I'd go with that as default. \r\nI hope I can have my experiments done soon, which will be with t5-large probably, to see if they coincide with your findings.",

"OK, let's merge this and if we need to make updates for any new findings we will do it then. ",

"Although I didn't really run an experiment, I have found that my settings for adafactor (relative step, warmup, scale all true) do well when training t5-large, also.\r\n\r\n@alexvaca0 please post your results when you have them!"

] | 1,614 | 1,617 | 1,617 | CONTRIBUTOR | null | This PR fixes documentation to reflect optimal settings for Adafactor:

- fix an impossible arg combination erroneously proposed in the example

- use the correct link to the adafactor paper where `clip_treshold` is discussed

- document the recommended `scale_parameter=False`

- add other recommended settings combinations, which are quite different from the original

- re-org notes

- make the errors less ambiguous

Fixes #7789

@sgugger

(edited by @stas00 to reflect it's pre-merge state as the PR evolved since it's original submission) | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/10526/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/10526/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/10526",

"html_url": "https://github.com/huggingface/transformers/pull/10526",

"diff_url": "https://github.com/huggingface/transformers/pull/10526.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/10526.patch",

"merged_at": 1617249818000

} |

https://api.github.com/repos/huggingface/transformers/issues/10525 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/10525/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/10525/comments | https://api.github.com/repos/huggingface/transformers/issues/10525/events | https://github.com/huggingface/transformers/issues/10525 | 822,490,543 | MDU6SXNzdWU4MjI0OTA1NDM= | 10,525 | fine-tune Pegasus with xsum using Colab but generation results have no difference | {

"login": "harrywang",

"id": 595772,

"node_id": "MDQ6VXNlcjU5NTc3Mg==",

"avatar_url": "https://avatars.githubusercontent.com/u/595772?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/harrywang",

"html_url": "https://github.com/harrywang",

"followers_url": "https://api.github.com/users/harrywang/followers",

"following_url": "https://api.github.com/users/harrywang/following{/other_user}",

"gists_url": "https://api.github.com/users/harrywang/gists{/gist_id}",

"starred_url": "https://api.github.com/users/harrywang/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/harrywang/subscriptions",

"organizations_url": "https://api.github.com/users/harrywang/orgs",

"repos_url": "https://api.github.com/users/harrywang/repos",

"events_url": "https://api.github.com/users/harrywang/events{/privacy}",

"received_events_url": "https://api.github.com/users/harrywang/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Hi,\r\n\r\ncould you please ask this question on the [forum](https://discuss.huggingface.co/)? We're happy to help you there!\r\n\r\nQuestions regarding training of models are a perfect use case for the forum :) for example, [here](https://discuss.huggingface.co/search?q=pegasus) you can find all questions related to fine-tuning PEGASUS.",

"@NielsRogge thanks a lot! I will post the question in forum 😄🤝",