| url (string, len 62-66) | repository_url (string, 1 value) | labels_url (string, len 76-80) | comments_url (string, len 71-75) | events_url (string, len 69-73) | html_url (string, len 50-56) | id (int64, 377M-2.15B) | node_id (string, len 18-32) | number (int64, 1-29.2k) | title (string, len 1-487) | user (dict) | labels (list) | state (string, 2 values) | locked (bool, 2 classes) | assignee (dict) | assignees (list) | comments (list) | created_at (int64, 1.54k-1.71k) | updated_at (int64, 1.54k-1.71k) | closed_at (int64, 1.54k-1.71k, nullable) | author_association (string, 4 values) | active_lock_reason (string, 2 values) | body (string, len 0-234k, nullable) | reactions (dict) | timeline_url (string, len 71-75) | state_reason (string, 3 values) | draft (bool, 2 classes) | pull_request (dict) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/transformers/issues/25220

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/25220/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/25220/comments

|

https://api.github.com/repos/huggingface/transformers/issues/25220/events

|

https://github.com/huggingface/transformers/issues/25220

| 1,830,368,736 |

I_kwDOCUB6oc5tGTXg

| 25,220 |

OASST model is unavailable for Transformer Agent: `'inputs' must have less than 1024 tokens.`

|

{

"login": "sim-so",

"id": 96299403,

"node_id": "U_kgDOBb1piw",

"avatar_url": "https://avatars.githubusercontent.com/u/96299403?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/sim-so",

"html_url": "https://github.com/sim-so",

"followers_url": "https://api.github.com/users/sim-so/followers",

"following_url": "https://api.github.com/users/sim-so/following{/other_user}",

"gists_url": "https://api.github.com/users/sim-so/gists{/gist_id}",

"starred_url": "https://api.github.com/users/sim-so/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/sim-so/subscriptions",

"organizations_url": "https://api.github.com/users/sim-so/orgs",

"repos_url": "https://api.github.com/users/sim-so/repos",

"events_url": "https://api.github.com/users/sim-so/events{/privacy}",

"received_events_url": "https://api.github.com/users/sim-so/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"Hi there. We temporarily increased the max length for this endpoint when releasing the Agents framework, but it's not back to its normal value. So yes, this one won't work anymore.",

"Thank you for the info, @sgugger!\r\n\r\n> So yes, this one won't work anymore.\r\n\r\nThen other OpenAssisant models may also only work with customizing a prompt. For now, I believe removing that model from the notebook or replacing it with another one would reduce the inconvenience.\r\n\r\nMay I try to edit the prompt so that other models with less input max length will be available?",

"You can definitely try!",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored."

] | 1,690 | 1,694 | 1,694 |

CONTRIBUTOR

| null |

### System Info

- transformers version: 4.29.0

- huggingface_hub version: 0.16.4

- python version: 3.10.6

- OS: Ubuntu 22.04.2 LTS

* run on Google Colab using [the provided notebook](https://colab.research.google.com/drive/1c7MHD-T1forUPGcC_jlwsIptOzpG3hSj?usp=sharing).

* [my notebook](https://colab.research.google.com/drive/1UBIWVCIXowlUJpp5gwD-Z0hmVlLLCr9I?usp=sharing), copied from the above.

### Who can help?

@sgugger

`OpenAssistant/oasst-sft-4-pythia-12b-epoch-3.5`, one of the models listed as available in the official notebook, is unusable because the default agent prompt exceeds the endpoint's input token limit. When executing `agent.chat()` or `agent.run()` with the model, the following error is raised:

```

ValueError: Error 422: {'error': 'Input validation error: `inputs` must have less than 1024 tokens. Given: 1553', 'error_type': 'validation'}

```

I guess that the model's `max_input_length` is `1024`, if it follows the model configuration [here](https://github.com/LAION-AI/Open-Assistant/blob/main/oasst-shared/oasst_shared/model_configs.py#L50). Could you check this error? In addition, I would like to know whether you plan to shorten the default prompt for the Agent.
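The limit can be checked before calling the endpoint. Below is a minimal sketch, not part of `transformers`: `check_prompt_length` and `whitespace_token_count` are hypothetical helpers, and the whitespace counter is only a stand-in for the model's real tokenizer, so its counts will not match the endpoint's.

```python
from typing import Callable


def whitespace_token_count(text: str) -> int:
    """Stand-in token counter (assumption): splits on whitespace only.

    A real check would count tokens with the model's own tokenizer.
    """
    return len(text.split())


def check_prompt_length(
    prompt: str,
    max_input_tokens: int = 1024,
    count_tokens: Callable[[str], int] = whitespace_token_count,
) -> int:
    """Return the token count, or raise if the prompt is not below the limit."""
    n_tokens = count_tokens(prompt)
    # The endpoint requires strictly fewer tokens than the limit.
    if n_tokens >= max_input_tokens:
        raise ValueError(
            f"`inputs` must have less than {max_input_tokens} tokens. Given: {n_tokens}"
        )
    return n_tokens
```

Passing a tokenizer-based counter as `count_tokens` would make the check reflect the model's actual tokenization.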

### Information

- [X] The official example scripts

- [ ] My own modified scripts

### Tasks

- [X] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [ ] My own task or dataset (give details below)

### Reproduction

Below is the code for the first three cells of the official code provided in the notebook.

```
transformers_version = "v4.29.0"

print(f"Setting up everything with transformers version {transformers_version}")

# The version specifier is quoted so the shell does not treat `>=` as a redirect.
!pip install "huggingface_hub>=0.14.1" git+https://github.com/huggingface/transformers@$transformers_version -q diffusers accelerate datasets torch soundfile sentencepiece opencv-python openai

import IPython
import soundfile as sf


def play_audio(audio):
    sf.write("speech_converted.wav", audio.numpy(), samplerate=16000)
    return IPython.display.Audio("speech_converted.wav")


from huggingface_hub import notebook_login

notebook_login()
```

```
agent_name = "OpenAssistant (HF Token)"

import getpass

if agent_name == "StarCoder (HF Token)":
    from transformers.tools import HfAgent
    agent = HfAgent("https://api-inference.huggingface.co/models/bigcode/starcoder")
    print("StarCoder is initialized 💪")
elif agent_name == "OpenAssistant (HF Token)":
    from transformers.tools import HfAgent
    agent = HfAgent(url_endpoint="https://api-inference.huggingface.co/models/OpenAssistant/oasst-sft-4-pythia-12b-epoch-3.5")
    print("OpenAssistant is initialized 💪")
if agent_name == "OpenAI (API Key)":
    from transformers.tools import OpenAiAgent
    pswd = getpass.getpass('OpenAI API key:')
    agent = OpenAiAgent(model="text-davinci-003", api_key=pswd)
    print("OpenAI is initialized 💪")
```

```

boat = agent.run("Generate an image of a boat in the water")

boat

```

### Expected behavior

```

==Explanation from the agent==

I will use the following tool: `image_generator` to generate an image.

==Code generated by the agent==

image = image_generator(prompt="a boat in the water")

==Result==

<image.png>

```

as with `bigcode/starcoder` or `text-davinci-003`, but instead I got

```

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

[<ipython-input-3-4578d52c5ccf>](https://localhost:8080/#) in <cell line: 1>()

----> 1 boat = agent.run("Generate an image of a boat in the water")

2 boat

1 frames

[/usr/local/lib/python3.10/dist-packages/transformers/tools/agents.py](https://localhost:8080/#) in run(self, task, return_code, remote, **kwargs)

312 """

313 prompt = self.format_prompt(task)

--> 314 result = self.generate_one(prompt, stop=["Task:"])

315 explanation, code = clean_code_for_run(result)

316

[/usr/local/lib/python3.10/dist-packages/transformers/tools/agents.py](https://localhost:8080/#) in generate_one(self, prompt, stop)

486 return self._generate_one(prompt)

487 elif response.status_code != 200:

--> 488 raise ValueError(f"Error {response.status_code}: {response.json()}")

489

490 result = response.json()[0]["generated_text"]

ValueError: Error 422: {'error': 'Input validation error: `inputs` must have less than 1024 tokens. Given: 1553', 'error_type': 'validation'}

```

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/25220/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/25220/timeline

|

completed

| null | null |

https://api.github.com/repos/huggingface/transformers/issues/25219

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/25219/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/25219/comments

|

https://api.github.com/repos/huggingface/transformers/issues/25219/events

|

https://github.com/huggingface/transformers/issues/25219

| 1,830,148,402 |

I_kwDOCUB6oc5tFdky

| 25,219 |

Trainer.model.push_to_hub() should allow private repository flag

|

{

"login": "arikanev",

"id": 16505410,

"node_id": "MDQ6VXNlcjE2NTA1NDEw",

"avatar_url": "https://avatars.githubusercontent.com/u/16505410?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/arikanev",

"html_url": "https://github.com/arikanev",

"followers_url": "https://api.github.com/users/arikanev/followers",

"following_url": "https://api.github.com/users/arikanev/following{/other_user}",

"gists_url": "https://api.github.com/users/arikanev/gists{/gist_id}",

"starred_url": "https://api.github.com/users/arikanev/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/arikanev/subscriptions",

"organizations_url": "https://api.github.com/users/arikanev/orgs",

"repos_url": "https://api.github.com/users/arikanev/repos",

"events_url": "https://api.github.com/users/arikanev/events{/privacy}",

"received_events_url": "https://api.github.com/users/arikanev/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"Hi @arikanev, thanks for raising this issue. \r\n\r\nIn `TrainingArguments` you can set [hub_private_repo to `True`](https://huggingface.co/docs/transformers/v4.31.0/en/main_classes/trainer#transformers.TrainingArguments.hub_private_repo) to control this. ",

"Thanks for the heads up! Time saver :) ",

"Please note, I tried using this in TrainingArguments and it did not work! I set hub_private_repo to True.",

"Hi @arikanev, OK thanks for reporting.. \r\n\r\nSo that we can help, could you provide some more details: \r\n* A minimal code snippet to reproduce the issue \r\n* Information about the running environment: run `transformers-cli env` in the terminal and copy-paste the output \r\n* More information about the expected and observed behaviour: when you say it didn't work, what specifically? Did it fail with an error, not create a repo, create a public repo etc? ",

"```\r\ntraining_args = TrainingArguments(\r\n output_dir=\"./myLM\", # output directory for model predictions and checkpoints\r\n overwrite_output_dir=True,\r\n num_train_epochs=50, # total number of training epochs\r\n per_device_train_batch_size=16, # batch size per device during training\r\n per_device_eval_batch_size=64, # batch size for evaluation\r\n warmup_steps=warmup_steps, # number of warmup steps for learning rate scheduler\r\n weight_decay=weight_decay, # strength of weight decay\r\n logging_dir=\"./logs\", # directory for storing logs\r\n logging_steps=10000, # when to print log\r\n evaluation_strategy=\"steps\",\r\n report_to='wandb',\r\n save_total_limit=2,\r\n hub_private_repo=True,\r\n fp16=True,\r\n )\r\n \r\n```\r\n\r\n\r\ntokenizers-0.13.3 transformers-4.31.0\r\n\r\nIt created a public repository even though I set private_hub_repo=True\r\n\r\nThanks!\r\n\r\n\r\n\r\n\r\n \r\n ",

"Hi @arikanev, thanks for confirming. \r\n\r\nThat's really weird 🤔 I'm not able to reproduce on my end. Could you try running the following and let me know if it works:\r\n\r\n```\r\npython examples/pytorch/language-modeling/run_clm.py \\\r\n --model_name_or_path gpt2 \\\r\n --dataset_name wikitext \\\r\n --dataset_config_name wikitext-2-raw-v1 \\\r\n --per_device_train_batch_size 8 \\\r\n --per_device_eval_batch_size 8 \\\r\n --do_train \\\r\n --do_eval \\\r\n --output_dir /tmp/test-clm \\\r\n --hub_private_repo \\\r\n --push_to_hub \\\r\n --max_train_samples 10 \\\r\n --max_eval_samples 10\r\n```\r\n\r\nThis is running [this example script](https://github.com/huggingface/transformers/blob/5ee9693a1c77c617ebc43ef20194b6d3b674318e/examples/pytorch/language-modeling/run_clm.py) in transformers.",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored."

] | 1,690 | 1,694 | 1,694 |

NONE

| null |

### Feature request

`Trainer.model.push_to_hub()` should allow pushing to a private repository, rather than only pushing to a public one that then has to be made private afterwards.
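For illustration, a minimal sketch of how a private push might be requested through `TrainingArguments`. The helper names `private_hub_kwargs` and `build_training_args` are hypothetical; `push_to_hub`, `hub_model_id`, and `hub_private_repo` are existing `TrainingArguments` fields. The sketch assumes the repository is created by `Trainer` itself; a direct `model.push_to_hub()` call does not read `TrainingArguments`, so these settings would likely not apply to it.

```python
def private_hub_kwargs(repo_id: str) -> dict:
    """Keyword arguments asking Trainer to create `repo_id` as a private repo."""
    return {
        "push_to_hub": True,       # let Trainer create and push to the repo
        "hub_model_id": repo_id,   # target repository, e.g. "user/my-model"
        "hub_private_repo": True,  # request a private repository
    }


def build_training_args(output_dir: str, repo_id: str):
    """Build TrainingArguments with the private-repo settings applied."""
    # Imported lazily so the helper above can be used without transformers installed.
    from transformers import TrainingArguments

    return TrainingArguments(output_dir=output_dir, **private_hub_kwargs(repo_id))
```

The resulting arguments would then be passed to `Trainer`, and the push done with `trainer.push_to_hub()` rather than on the bare model.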

### Motivation

It is frustrating to have to make my repositories private by hand instead of being able to upload models to a private repo programmatically by default.

### Your contribution

I'm not sure I have the bandwidth at the moment, or the infrastructure know-how, to contribute this option, but if it is of interest to many people and you could use the help, I can work on a PR.

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/25219/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/25219/timeline

|

completed

| null | null |

https://api.github.com/repos/huggingface/transformers/issues/25218

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/25218/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/25218/comments

|

https://api.github.com/repos/huggingface/transformers/issues/25218/events

|

https://github.com/huggingface/transformers/pull/25218

| 1,830,127,630 |

PR_kwDOCUB6oc5W2ZB1

| 25,218 |

inject automatic end of utterance tokens

|

{

"login": "stas00",

"id": 10676103,

"node_id": "MDQ6VXNlcjEwNjc2MTAz",

"avatar_url": "https://avatars.githubusercontent.com/u/10676103?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/stas00",

"html_url": "https://github.com/stas00",

"followers_url": "https://api.github.com/users/stas00/followers",

"following_url": "https://api.github.com/users/stas00/following{/other_user}",

"gists_url": "https://api.github.com/users/stas00/gists{/gist_id}",

"starred_url": "https://api.github.com/users/stas00/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/stas00/subscriptions",

"organizations_url": "https://api.github.com/users/stas00/orgs",

"repos_url": "https://api.github.com/users/stas00/repos",

"events_url": "https://api.github.com/users/stas00/events{/privacy}",

"received_events_url": "https://api.github.com/users/stas00/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"_The documentation is not available anymore as the PR was closed or merged._",

"The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/transformers/pr_25218). All of your documentation changes will be reflected on that endpoint."

] | 1,690 | 1,690 | 1,690 |

CONTRIBUTOR

| null |

This adds a new feature:

For select models, add an `<end_of_utterance>` token at the end of each utterance.

The user can now easily break up their prompt without having to worry about inserting the tokens by hand.

So for this prompt:

```

[

"User:",

image,

"Describe this image.",

"Assistant: An image of two kittens in grass.",

"User:",

"https://hips.hearstapps.com/hmg-prod/images/dog-puns-1581708208.jpg",

"Describe this image.",

"Assistant:",

],

```

With `add_end_of_utterance_token=True`, the new code will generate:

`full_text='<s>User:<fake_token_around_image><image><fake_token_around_image>Describe this image.<end_of_utterance>Assistant: An image of two kittens in grass.<end_of_utterance>User:<fake_token_around_image><image><fake_token_around_image>Describe this image.<end_of_utterance>Assistant:'`
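The flattening shown above can be sketched roughly as follows. This is not the PR's implementation: `build_full_text` and `is_image` are hypothetical helpers, and the rules (URLs and non-string items count as images; a new utterance starts at an item beginning with `User:` or `Assistant:`) are assumptions inferred from the example output only.

```python
IMAGE = "<fake_token_around_image><image><fake_token_around_image>"
EOU = "<end_of_utterance>"
ROLE_PREFIXES = ("User:", "Assistant:")


def is_image(item) -> bool:
    """Assumed rule: non-string objects and http(s) URLs are treated as images."""
    return not isinstance(item, str) or item.startswith(("http://", "https://"))


def build_full_text(prompt, add_end_of_utterance_token=True, bos="<s>") -> str:
    """Concatenate prompt items, closing each utterance with the EOU token."""
    parts = [bos]
    for index, item in enumerate(prompt):
        if is_image(item):
            parts.append(IMAGE)
            continue
        # A role prefix opens a new utterance, so the previous one is closed first.
        if add_end_of_utterance_token and index > 0 and item.startswith(ROLE_PREFIXES):
            parts.append(EOU)
        parts.append(item)
    return "".join(parts)
```

Because the token is only emitted when a new role prefix appears, the trailing `Assistant:` stays open for generation.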

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/25218/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/25218/timeline

| null | false |

{

"url": "https://api.github.com/repos/huggingface/transformers/pulls/25218",

"html_url": "https://github.com/huggingface/transformers/pull/25218",

"diff_url": "https://github.com/huggingface/transformers/pull/25218.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/25218.patch",

"merged_at": 1690846918000

}

|

https://api.github.com/repos/huggingface/transformers/issues/25217

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/25217/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/25217/comments

|

https://api.github.com/repos/huggingface/transformers/issues/25217/events

|

https://github.com/huggingface/transformers/issues/25217

| 1,829,805,442 |

I_kwDOCUB6oc5tEJ2C

| 25,217 |

Scoring translations is unacceptably slow

|

{

"login": "erip",

"id": 2348806,

"node_id": "MDQ6VXNlcjIzNDg4MDY=",

"avatar_url": "https://avatars.githubusercontent.com/u/2348806?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/erip",

"html_url": "https://github.com/erip",

"followers_url": "https://api.github.com/users/erip/followers",

"following_url": "https://api.github.com/users/erip/following{/other_user}",

"gists_url": "https://api.github.com/users/erip/gists{/gist_id}",

"starred_url": "https://api.github.com/users/erip/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/erip/subscriptions",

"organizations_url": "https://api.github.com/users/erip/orgs",

"repos_url": "https://api.github.com/users/erip/repos",

"events_url": "https://api.github.com/users/erip/events{/privacy}",

"received_events_url": "https://api.github.com/users/erip/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"cc @gante ",

"Hey @erip 👋 \r\n\r\nSadly, I'm out of bandwidth to dive into the performance of very specific generation modes (in this case, beam search with `PrefixConstrainedLogitsProcessor`). If you'd like to explore the issue and pinpoint the cause of the performance issue, I may be able to help, depending on the complexity of the fix.\r\n\r\nMeanwhile, I've noticed that you use `torch.compile`. I would advise you not to use it with text generation, as your model observes different shapes at each forward pass call, resulting in potential slowdowns :) ",

"Cheers, @gante. I'll try removing the compilation to see how far that moves the needle. I'm trying to score ~17m translations which tqdm is reporting will take ~50 days so we'll see what the delta is without `torch.compile`. I'll post updates here as well.\r\n\r\nEdit: 96 days w/o `torch.compile` :-)",

"@erip have you considered applying 4-bit quantization ([docs](https://huggingface.co/docs/transformers/v4.31.0/en/main_classes/quantization#load-a-large-model-in-4bit), reduces the GPU ram requirements to ~1/6 of the original size AND should result in speedups) and then increasing the batch size as much as possible? \r\n\r\nYou may be able to get it <1 week this way, and the noise introduced by 4 bit quantization is small.",

"I guess I'm more concerned that this is going to take a lot of time at all. Fairseq, Marian, and Sockeye can score translations extremely quickly (17m would probably take ~1-2 days on similar hardware). Transformers can translate in that amount of time, so I'm lead to conclude that logits processors are just performance killers.",

"@erip some of them are performance killers (e.g. `PrefixConstrainedLogitsProcessor ` seems to need vectorization). Our Pytorch beam search implementation is not optimized either, compared to our TF/FLAX implementation.\r\n\r\nWe focus on breadth of techniques and models, but welcome optimization contributions 🤗 ",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored."

] | 1,690 | 1,694 | 1,694 |

CONTRIBUTOR

| null |

### System Info

- `transformers` version: 4.29.0

- Platform: Linux-3.10.0-862.11.6.el7.x86_64-x86_64-with-glibc2.17

- Python version: 3.9.16

- Huggingface_hub version: 0.12.1

- Safetensors version: 0.3.1

- PyTorch version (GPU?): 2.0.1+cu117 (True)

- Tensorflow version (GPU?): not installed (NA)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

- Using GPU in script?: Yes

- Using distributed or parallel set-up in script?: No

### Who can help?

@ArthurZucker and @younesbelkada

### Information

- [ ] The official example scripts

- [X] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [X] My own task or dataset (give details below)

### Reproduction

1. Install transformers, pytorch, tqdm

2. Create `forced_decode.py` [^1]

3. Create `repro.sh` [^2]

4. Run `bash repro.sh` and observe extremely slow scoring speeds.

[^1]:

```python
#!/usr/bin/env python3

import itertools

from argparse import ArgumentParser, FileType

from tqdm import tqdm

import torch

from transformers import PrefixConstrainedLogitsProcessor, AutoTokenizer, AutoModelForSeq2SeqLM


def setup_argparse():
    parser = ArgumentParser()
    parser.add_argument("-t", "--tokenizer", type=str, required=True)
    parser.add_argument("-m", "--model", type=str, required=True)
    parser.add_argument("-bs", "--batch-size", type=int, default=16)
    parser.add_argument("-i", "--input", type=FileType("r"), default="-")
    parser.add_argument("-o", "--output", type=FileType("w"), default="-")
    parser.add_argument("-d", "--delimiter", type=str, default="\t")
    parser.add_argument("--device", type=str, default="cpu")
    return parser


def create_processor_fn(ref_tokens_by_segment):
    # For each batch element, allow only the token ids of its reference.
    def inner(batch_id, _):
        return ref_tokens_by_segment[batch_id]
    return inner


def tokenize(src, tgt, tokenizer):
    # Tokenize sources and references together; references end up in `labels`.
    inputs = tokenizer(src, text_target=tgt, padding=True, return_tensors="pt")
    return inputs


def forced_decode(inputs, model, num_beams=5):
    inputs = inputs.to(model.device)
    logit_processor = PrefixConstrainedLogitsProcessor(create_processor_fn(inputs["labels"]), num_beams=num_beams)
    output = model.generate(**inputs, num_beams=num_beams, logits_processor=[logit_processor], return_dict_in_generate=True, output_scores=True)
    return output.sequences_scores.tolist()


def batch_lines(it, batch_size):
    # Yield successive lists of at most `batch_size` items.
    it = iter(it)
    item = list(itertools.islice(it, batch_size))
    while item:
        yield item
        item = list(itertools.islice(it, batch_size))


if __name__ == "__main__":
    args = setup_argparse().parse_args()

    f_tokenizer = AutoTokenizer.from_pretrained(args.tokenizer)
    f_model = torch.compile(AutoModelForSeq2SeqLM.from_pretrained(args.model).to(args.device))

    with args.input as fin:
        inputs = list(batch_lines(map(str.strip, fin), args.batch_size))

    # Pre-tokenize every batch of (source, reference) pairs.
    inputs_logits = []
    for batch in tqdm(inputs):
        src, tgt = zip(*[line.split(args.delimiter) for line in batch])
        inputs_logits.append(tokenize(src, tgt, f_tokenizer))

    with args.output as fout, torch.no_grad():
        for input in tqdm(inputs_logits):
            scores = forced_decode(input, f_model)
            print(*scores, sep="\n", file=fout)
```

[^2]:

```bash
#!/usr/bin/env bash

function get_input {
  curl -s https://gist.githubusercontent.com/erip/e37283b8f51d4e2c16996fc8a6a01aa7/raw/f5a3daffb04dad76464188c2a6949649f5cf3f9c/en-de.tsv
}

python forced_decode.py \
  -t Helsinki-NLP/opus-mt-en-de -m Helsinki-NLP/opus-mt-en-de \
  -i <(get_input) \
  --device cuda:0 \
  -bs 16
```

### Expected behavior

Scoring should be _very fast_ since the beam doesn't actually need to be searched, but I'm finding speeds on the order of seconds per batch which is far slower than generating.
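To illustrate why scoring should not need a search: when the reference is known, a translation's score can be computed as the sum of the log-probabilities of its tokens from a single teacher-forced pass. The sketch below shows only that arithmetic on plain Python lists. `log_softmax` and `sequence_log_prob` are hypothetical helper names, the per-step logits are assumed to be already available (for example from a forward pass with `labels`), and the result is an unnormalized sum that may differ from the `sequences_scores` reported by beam search.

```python
import math
from typing import Optional, Sequence


def log_softmax(row: Sequence[float]) -> list:
    """Numerically stable log-softmax over one row of logits."""
    peak = max(row)
    log_sum_exp = peak + math.log(sum(math.exp(x - peak) for x in row))
    return [x - log_sum_exp for x in row]


def sequence_log_prob(
    step_logits: Sequence[Sequence[float]],
    target_ids: Sequence[int],
    pad_id: Optional[int] = None,
) -> float:
    """Sum the log-probabilities of the reference tokens, skipping padding."""
    total = 0.0
    for row, token_id in zip(step_logits, target_ids):
        if pad_id is not None and token_id == pad_id:
            continue  # padded positions do not contribute to the score
        total += log_softmax(row)[token_id]
    return total
```

With real model outputs the same gather-and-sum would be done on tensors for a whole batch at once, which is what makes this approach a single forward pass per batch.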

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/25217/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/25217/timeline

|

completed

| null | null |

https://api.github.com/repos/huggingface/transformers/issues/25216

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/25216/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/25216/comments

|

https://api.github.com/repos/huggingface/transformers/issues/25216/events

|

https://github.com/huggingface/transformers/pull/25216

| 1,829,739,665 |

PR_kwDOCUB6oc5W1EHH

| 25,216 |

[`Docs`/`quantization`] Clearer explanation on how things work under the hood + remove outdated info

|

{

"login": "younesbelkada",

"id": 49240599,

"node_id": "MDQ6VXNlcjQ5MjQwNTk5",

"avatar_url": "https://avatars.githubusercontent.com/u/49240599?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/younesbelkada",

"html_url": "https://github.com/younesbelkada",

"followers_url": "https://api.github.com/users/younesbelkada/followers",

"following_url": "https://api.github.com/users/younesbelkada/following{/other_user}",

"gists_url": "https://api.github.com/users/younesbelkada/gists{/gist_id}",

"starred_url": "https://api.github.com/users/younesbelkada/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/younesbelkada/subscriptions",

"organizations_url": "https://api.github.com/users/younesbelkada/orgs",

"repos_url": "https://api.github.com/users/younesbelkada/repos",

"events_url": "https://api.github.com/users/younesbelkada/events{/privacy}",

"received_events_url": "https://api.github.com/users/younesbelkada/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"_The documentation is not available anymore as the PR was closed or merged._"

] | 1,690 | 1,690 | 1,690 |

CONTRIBUTOR

| null |

# What does this PR do?

As discussed internally with @amyeroberts , this PR makes things clearer to users on how things work under the hood for quantized models. Before this PR it was not clear to users how the other modules (non `torch.nn.Linear`) were treated under the hood when quantizing a model.

cc @amyeroberts

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/25216/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/25216/timeline

| null | false |

{

"url": "https://api.github.com/repos/huggingface/transformers/pulls/25216",

"html_url": "https://github.com/huggingface/transformers/pull/25216",

"diff_url": "https://github.com/huggingface/transformers/pull/25216.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/25216.patch",

"merged_at": 1690880212000

}

|

https://api.github.com/repos/huggingface/transformers/issues/25215

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/25215/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/25215/comments

|

https://api.github.com/repos/huggingface/transformers/issues/25215/events

|

https://github.com/huggingface/transformers/issues/25215

| 1,829,715,520 |

I_kwDOCUB6oc5tDz5A

| 25,215 |

config.json file not available

|

{

"login": "andysingal",

"id": 20493493,

"node_id": "MDQ6VXNlcjIwNDkzNDkz",

"avatar_url": "https://avatars.githubusercontent.com/u/20493493?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/andysingal",

"html_url": "https://github.com/andysingal",

"followers_url": "https://api.github.com/users/andysingal/followers",

"following_url": "https://api.github.com/users/andysingal/following{/other_user}",

"gists_url": "https://api.github.com/users/andysingal/gists{/gist_id}",

"starred_url": "https://api.github.com/users/andysingal/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/andysingal/subscriptions",

"organizations_url": "https://api.github.com/users/andysingal/orgs",

"repos_url": "https://api.github.com/users/andysingal/repos",

"events_url": "https://api.github.com/users/andysingal/events{/privacy}",

"received_events_url": "https://api.github.com/users/andysingal/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"Hi @andysingal \r\nit seems you are trying to load an adapter model. You can load it with\r\n\r\n```python\r\nfrom peft import AutoPeftModelForCausalLM\r\n\r\nmodel = AutoPeftModelForCausalLM.from_pretrained(\"Andyrasika/qlora-2-7b-andy\")\r\n```\r\n\r\nIf you want to load the base model in 4bit:\r\n\r\n```python\r\nfrom peft import AutoPeftModelForCausalLM\r\n\r\nmodel = AutoPeftModelForCausalLM.from_pretrained(\"Andyrasika/qlora-2-7b-andy\", load_in_4bit=True)\r\n```\r\n\r\nOnce https://github.com/huggingface/transformers/pull/25077 will get merged you'll be able to load the model directly with `AutoModelForCausalLM`.",

"Thanks for your email. But why am I getting the error message?. I already\r\nhave adapter_config. JSON .\r\n\r\nOn Mon, Jul 31, 2023 at 23:09 Younes Belkada ***@***.***>\r\nwrote:\r\n\r\n> Hi @andysingal <https://github.com/andysingal>\r\n> it seems you are trying to load an adapter model. You can load it with\r\n>\r\n> from peft import AutoPeftModelForCausalLM\r\n> model = AutoPeftModelForCausalLM.from_pretrained(\"Andyrasika/qlora-2-7b-andy\")\r\n>\r\n> If you want to load the base model in 4bit:\r\n>\r\n> from peft import AutoPeftModelForCausalLM\r\n> model = AutoPeftModelForCausalLM.from_pretrained(\"Andyrasika/qlora-2-7b-andy\", load_in_4bit=True)\r\n>\r\n> Once #25077 <https://github.com/huggingface/transformers/pull/25077> will\r\n> get merged you'll be able to load the model directly with\r\n> AutoModelForCausalLM.\r\n>\r\n> —\r\n> Reply to this email directly, view it on GitHub\r\n> <https://github.com/huggingface/transformers/issues/25215#issuecomment-1658859481>,\r\n> or unsubscribe\r\n> <https://github.com/notifications/unsubscribe-auth/AE4LJNPF5H4NF3BC4XKTXL3XS7UUDANCNFSM6AAAAAA26SP5AE>\r\n> .\r\n> You are receiving this because you were mentioned.Message ID:\r\n> ***@***.***>\r\n>\r\n",

"Hi @andysingal \r\nIt is because `AutoModelForCausalLM` will look if there is any `config.json` file present on that model folder and not `adapter_config.json` which are two different file names",

"When you run the model created it gives the same error. Assume I am making\r\nan error in the notebook, but inference does not need to show the error on\r\nyour website?\r\nPlease advise on how to fix it?\r\n\r\nOn Mon, Jul 31, 2023 at 23:22 Younes Belkada ***@***.***>\r\nwrote:\r\n\r\n> Hi @andysingal <https://github.com/andysingal>\r\n> It is because AutoModelForCausalLM will look if there is any config.json\r\n> file present on that model folder and not adapter_config.json which are\r\n> two different file names\r\n>\r\n> —\r\n> Reply to this email directly, view it on GitHub\r\n> <https://github.com/huggingface/transformers/issues/25215#issuecomment-1658883214>,\r\n> or unsubscribe\r\n> <https://github.com/notifications/unsubscribe-auth/AE4LJNK3H374C3UHR2ZABBTXS7WF7ANCNFSM6AAAAAA26SP5AE>\r\n> .\r\n> You are receiving this because you were mentioned.Message ID:\r\n> ***@***.***>\r\n>\r\n",

"@younesbelkada Any updates?",

"Hi @andysingal \r\nThanks for the ping, as stated above, in your repository only adapter weights and config are stored. Currently it is not supported to load apapted models directly using `AutoModelForCausalLM.from_pretrained(xxx)`, please refer to this comment https://github.com/huggingface/transformers/issues/25215#issuecomment-1658859481 to effectively load the adapted model using PEFT library.",

 \r\n\r\nThanks @younesbelkada">
"Thanks @younesbelkada for your instant reply. My question is: when I run text generation inference on your website, it gives that error. **I understand I need to use PEFT to load the adapter and config files in my preferred env.**\r\n\r\nLooking forward to hearing from you @ArthurZucker ",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored.",

 > \r\n> \r\n> Thanks">
"> Thanks @younesbelkada for your instant reply. My question is: when I run text generation inference on your website, it gives that error. **I understand I need to use PEFT to load the adapter and config files in my preferred env.**\r\n> \r\n> Looking forward to hearing from you @ArthurZucker\r\n\r\nYou may need to load the LoRA adapter into the model using the following code:\r\n```python\r\nfrom peft import PeftModel\r\nfrom transformers import AutoModelForCausalLM\r\nmodel = AutoModelForCausalLM.from_pretrained(\"./original_model_path\", trust_remote_code=True)\r\nmodel = PeftModel.from_pretrained(model, \"./lora_model_path\")\r\n```",

"Im running into the same issue through the user interface",

"it is throwing the same OS error even with \r\n`model = AutoPeftModelForCausalLM.from_pretrained(\"Andyrasika/qlora-2-7b-andy\"`"

] | 1,690 | 1,706 | 1,694 |

NONE

| null |

### System Info

colab

notebook: https://colab.research.google.com/drive/118RTcKAQFIICDsgTcabIF-_XKmOgM-cc?usp=sharing

### Who can help?

@sgugger @younesbelkada

### Information

- [ ] The official example scripts

- [ ] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [ ] My own task or dataset (give details below)

### Reproduction

```

RepositoryNotFoundError: 404 Client Error. (Request ID:

Root=1-64c7ee9d-240cd76b269a914d67b458fa;dcab1901-0ebf-4282-b8a4-9d1e087de5b4)

Repository Not Found for url: https://huggingface.co/None/resolve/main/config.json.

Please make sure you specified the correct `repo_id` and `repo_type`.

If you are trying to access a private or gated repo, make sure you are authenticated.

During handling of the above exception, another exception occurred:

```

### Expected behavior

https://huggingface.co/Andyrasika/qlora-2-7b-andy gives this error:

```

Andyrasika/qlora-2-7b-andy does not appear to have a file named config.json. Checkout 'https://huggingface.co/Andyrasika/qlora-2-7b-andy/7a0facc5b1f630824ac5b38853dec5e988a5569e' for available files.

```
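As explained in the comments, the repository holds only adapter files, so a loader that expects `config.json` fails. A minimal sketch of that distinction for a local checkpoint folder follows; `checkpoint_kind` is a hypothetical helper written for illustration and is not part of transformers or PEFT.

```python
import tempfile
from pathlib import Path


def checkpoint_kind(folder):
    """Classify a local checkpoint folder by the config file it contains.

    Hypothetical helper for illustration; not a transformers or PEFT API.
    """
    folder = Path(folder)
    if (folder / "config.json").is_file():
        return "full-model"  # has the file AutoModelForCausalLM looks for
    if (folder / "adapter_config.json").is_file():
        return "adapter"  # adapter only: load through PEFT on a base model
    return "unknown"


# Demo with a throwaway directory that mimics an adapter-only repository.
with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "adapter_config.json").write_text("{}")
    print(checkpoint_kind(tmp))
```

A check like this only tells you which loading path to try; the actual loading still goes through PEFT as suggested in the thread.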

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/25215/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/25215/timeline

|

completed

| null | null |

https://api.github.com/repos/huggingface/transformers/issues/25214

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/25214/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/25214/comments

|

https://api.github.com/repos/huggingface/transformers/issues/25214/events

|

https://github.com/huggingface/transformers/pull/25214

| 1,829,588,972 |

PR_kwDOCUB6oc5W0jNa

| 25,214 |

Fix docker image build failure

|

{

"login": "ydshieh",

"id": 2521628,

"node_id": "MDQ6VXNlcjI1MjE2Mjg=",

"avatar_url": "https://avatars.githubusercontent.com/u/2521628?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/ydshieh",

"html_url": "https://github.com/ydshieh",

"followers_url": "https://api.github.com/users/ydshieh/followers",

"following_url": "https://api.github.com/users/ydshieh/following{/other_user}",

"gists_url": "https://api.github.com/users/ydshieh/gists{/gist_id}",

"starred_url": "https://api.github.com/users/ydshieh/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/ydshieh/subscriptions",

"organizations_url": "https://api.github.com/users/ydshieh/orgs",

"repos_url": "https://api.github.com/users/ydshieh/repos",

"events_url": "https://api.github.com/users/ydshieh/events{/privacy}",

"received_events_url": "https://api.github.com/users/ydshieh/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"_The documentation is not available anymore as the PR was closed or merged._"

] | 1,690 | 1,690 | 1,690 |

COLLABORATOR

| null |

# What does this PR do?

We are again hitting a not-enough-disk-space error in the docker image build CI. I should learn some ways to reduce the image size and avoid this error, but this PR fixes the situation quickly: install torch/tensorflow before running `pip install .[dev]`, so they are only installed once and fewer docker layers are produced.

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/25214/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/25214/timeline

| null | false |

{

"url": "https://api.github.com/repos/huggingface/transformers/pulls/25214",

"html_url": "https://github.com/huggingface/transformers/pull/25214",

"diff_url": "https://github.com/huggingface/transformers/pull/25214.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/25214.patch",

"merged_at": 1690827196000

}

|

https://api.github.com/repos/huggingface/transformers/issues/25213

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/25213/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/25213/comments

|

https://api.github.com/repos/huggingface/transformers/issues/25213/events

|

https://github.com/huggingface/transformers/pull/25213

| 1,829,494,005 |

PR_kwDOCUB6oc5W0NhC

| 25,213 |

Update tiny model info. and pipeline testing

|

{

"login": "ydshieh",

"id": 2521628,

"node_id": "MDQ6VXNlcjI1MjE2Mjg=",

"avatar_url": "https://avatars.githubusercontent.com/u/2521628?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/ydshieh",

"html_url": "https://github.com/ydshieh",

"followers_url": "https://api.github.com/users/ydshieh/followers",

"following_url": "https://api.github.com/users/ydshieh/following{/other_user}",

"gists_url": "https://api.github.com/users/ydshieh/gists{/gist_id}",

"starred_url": "https://api.github.com/users/ydshieh/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/ydshieh/subscriptions",

"organizations_url": "https://api.github.com/users/ydshieh/orgs",

"repos_url": "https://api.github.com/users/ydshieh/repos",

"events_url": "https://api.github.com/users/ydshieh/events{/privacy}",

"received_events_url": "https://api.github.com/users/ydshieh/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"_The documentation is not available anymore as the PR was closed or merged._",

"The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/transformers/pr_25213). All of your documentation changes will be reflected on that endpoint."

] | 1,690 | 1,690 | 1,690 |

COLLABORATOR

| null |

# What does this PR do?

Just a regular update.

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/25213/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/25213/timeline

| null | false |

{

"url": "https://api.github.com/repos/huggingface/transformers/pulls/25213",

"html_url": "https://github.com/huggingface/transformers/pull/25213",

"diff_url": "https://github.com/huggingface/transformers/pull/25213.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/25213.patch",

"merged_at": 1690824933000

}

|

https://api.github.com/repos/huggingface/transformers/issues/25212

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/25212/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/25212/comments

|

https://api.github.com/repos/huggingface/transformers/issues/25212/events

|

https://github.com/huggingface/transformers/issues/25212

| 1,829,319,221 |

I_kwDOCUB6oc5tCTI1

| 25,212 |

MinNewTokensLengthLogitsProcessor

|

{

"login": "gante",

"id": 12240844,

"node_id": "MDQ6VXNlcjEyMjQwODQ0",

"avatar_url": "https://avatars.githubusercontent.com/u/12240844?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/gante",

"html_url": "https://github.com/gante",

"followers_url": "https://api.github.com/users/gante/followers",

"following_url": "https://api.github.com/users/gante/following{/other_user}",

"gists_url": "https://api.github.com/users/gante/gists{/gist_id}",

"starred_url": "https://api.github.com/users/gante/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/gante/subscriptions",

"organizations_url": "https://api.github.com/users/gante/orgs",

"repos_url": "https://api.github.com/users/gante/repos",

"events_url": "https://api.github.com/users/gante/events{/privacy}",

"received_events_url": "https://api.github.com/users/gante/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[] | 1,690 | 1,690 | 1,690 |

MEMBER

| null | null |

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/25212/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/25212/timeline

|

completed

| null | null |

https://api.github.com/repos/huggingface/transformers/issues/25211

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/25211/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/25211/comments

|

https://api.github.com/repos/huggingface/transformers/issues/25211/events

|

https://github.com/huggingface/transformers/pull/25211

| 1,829,299,673 |

PR_kwDOCUB6oc5WziM6

| 25,211 |

Fix `all_model_classes` in `FlaxBloomGenerationTest`

|

{

"login": "ydshieh",

"id": 2521628,

"node_id": "MDQ6VXNlcjI1MjE2Mjg=",

"avatar_url": "https://avatars.githubusercontent.com/u/2521628?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/ydshieh",

"html_url": "https://github.com/ydshieh",

"followers_url": "https://api.github.com/users/ydshieh/followers",

"following_url": "https://api.github.com/users/ydshieh/following{/other_user}",

"gists_url": "https://api.github.com/users/ydshieh/gists{/gist_id}",

"starred_url": "https://api.github.com/users/ydshieh/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/ydshieh/subscriptions",

"organizations_url": "https://api.github.com/users/ydshieh/orgs",

"repos_url": "https://api.github.com/users/ydshieh/repos",

"events_url": "https://api.github.com/users/ydshieh/events{/privacy}",

"received_events_url": "https://api.github.com/users/ydshieh/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"_The documentation is not available anymore as the PR was closed or merged._"

] | 1,690 | 1,691 | 1,690 |

COLLABORATOR

| null |

# What does this PR do?

It should be a tuple (which requires the ending `,`)
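A small illustration of why the trailing comma matters. `DummyModel` is a placeholder class, and the loop assumes the test code iterates over `all_model_classes`; neither is taken from the actual test file.

```python
class DummyModel:
    """Stand-in for a model class; purely illustrative."""


not_a_tuple = (DummyModel)  # parentheses only group: this is the class itself
one_tuple = (DummyModel,)   # the trailing comma makes a one-element tuple

print(type(not_a_tuple).__name__)
print(type(one_tuple).__name__)

# Iterating works on the tuple; iterating over the bare class would raise
# a TypeError because a class object is not iterable.
for model_class in one_tuple:
    print(model_class.__name__)
```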

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/25211/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/25211/timeline

| null | false |

{

"url": "https://api.github.com/repos/huggingface/transformers/pulls/25211",

"html_url": "https://github.com/huggingface/transformers/pull/25211",

"diff_url": "https://github.com/huggingface/transformers/pull/25211.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/25211.patch",

"merged_at": 1690817526000

}

|

https://api.github.com/repos/huggingface/transformers/issues/25210

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/25210/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/25210/comments

|

https://api.github.com/repos/huggingface/transformers/issues/25210/events

|

https://github.com/huggingface/transformers/issues/25210

| 1,829,299,671 |

I_kwDOCUB6oc5tCOXX

| 25,210 |

importlib.metadata.PackageNotFoundError: bitsandbytes

|

{

"login": "looperEit",

"id": 46367388,

"node_id": "MDQ6VXNlcjQ2MzY3Mzg4",

"avatar_url": "https://avatars.githubusercontent.com/u/46367388?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/looperEit",

"html_url": "https://github.com/looperEit",

"followers_url": "https://api.github.com/users/looperEit/followers",

"following_url": "https://api.github.com/users/looperEit/following{/other_user}",

"gists_url": "https://api.github.com/users/looperEit/gists{/gist_id}",

"starred_url": "https://api.github.com/users/looperEit/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/looperEit/subscriptions",

"organizations_url": "https://api.github.com/users/looperEit/orgs",

"repos_url": "https://api.github.com/users/looperEit/repos",

"events_url": "https://api.github.com/users/looperEit/events{/privacy}",

"received_events_url": "https://api.github.com/users/looperEit/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"Hi @looperEit, thanks for reporting this issue! \r\n\r\nCould you share the installed version of bitsandbytes and how you installed it? \r\n\r\ncc @younesbelkada ",

"i used the `pip install -r *requriment.txt\"`,and the txt file like:\r\n\r\n\r\naccelerate\r\ncolorama~=0.4.6\r\ncpm_kernels\r\nsentencepiece~=0.1.99\r\nstreamlit~=1.25.0\r\ntransformers_stream_generator\r\ntorch~=2.0.1\r\ntransformers~=4.31.0",

"Hi @looperEit \r\nCan you try to run\r\n```bash\r\npip install bitsandbytes\r\n```\r\nit looks like this is missing in `requirements.txt` file",

 Hi @looperEit Can you try to run">
"> Hi @looperEit Can you try to run\r\n> \r\n> ```shell\r\n> pip install bitsandbytes\r\n> ```\r\n> \r\n> it looks like this is missing in `requirements.txt` file\r\n\r\nWhen I installed bitsandbytes, it shows:\r\n\r\nMay I add it to my `requirements.txt` file?",

"@looperEit Yes, you can certainly add it to your own requirements.txt file. \r\n\r\nFor the error being raised, could you copy paste the full text of the traceback, rather than a screenshot? This makes it easier for us to debug, as we highlight and copy the text, and also makes the issue findable through search for anyone else who's had the issue. \r\n\r\nIn the screenshot for the error after installing bitsandbytes, could you show the full trackback? The final error message / exception appears to be missing. ",

"i'm so sorry QAQ ,here is the problem when i installed the bitsandbytes:\r\n`/root/anaconda3/envs/baichuan/bin/python3.9 /tmp/Baichuan-13B/ demo. pyTraceback (most recent call last):\r\nFile \"/root/anacondaS/envs/baichuan/1io/pythons.9/site-packages/transfonmens/utils/import_utils.py\",line 1099,in _get_modulereturn importlib.import_module(\".\" + module_name,self.__name_-)\r\nFile \"/root/anaconda3/envs/baichuan/lib/python3.9/impontlib/.-init...py\",line 127,in impont_ modulereturn _bootstrap. _gcd_import(name[level:], package,level)\r\nFile \"<frozen importlib._bootstrap>\",line 1030,in _gcd_importFile \"<frozen importlib._bootstrap>\",line 1007,in _find_and_load\r\nFile \"<frozen importlib._bootstrap>\",line 986, in _find_and_load_unlockedFile \"<frozen importlib._bootstrap>\",line 680, in _load_unlocked\r\nFile \"<frozen importlib._bootstrap_externals\", line 850, in exec_module\r\nFile \"<frozen importlib._bootstrap>\", line 228,in _call_with_frames_removed`\r\nbut finally when i installed the `spicy`, i make it. i didn't know why. Maybe the transfomer package and bitsandbytes must coexist with spicy?",

"maybe 🤷♀️ although the package manager should have installed any dependencies alongside the library itself. Do you mean `scipy` for the dependency? I've never heard of spicy. \r\n\r\nEither way, I'm glad to hear that you were able to resolve the issue :) Managing python environments is a perpetual juggling act. ",

 maybe 🤷♀️ although">
"> maybe 🤷♀️ although the package manager should have installed any dependencies alongside the library itself. Do you mean `scipy` for the dependency? I've never heard of spicy.\r\n> \r\n> Either way, I'm glad to hear that you were able to resolve the issue :) Managing python environments is a perpetual juggling act.\r\n\r\nI'm so sorry, I know where the problem is. The model requirements I use do not include the scipy package. I'm really sorry for wasting your time and disturbing you. Thanks.\r\n\r\n",

"`pip install bitsandbytes ` works for me.",

"`pip install bitsandbytes` really works for me. But the bitsandbytes library only works on CUDA GPU. What a pity! I want to use it on Intel cpu.",

"> Hi @looperEit Can you try to run\r\n> \r\n> ```shell\r\n> pip install bitsandbytes\r\n> ```\r\n> \r\n> it looks like this is missing in `requirements.txt` file\r\n\r\nNice, thank you bro",

"> ```shell\r\n> pip install bitsandbytes\r\n> ```\r\n\r\nThanks! works for me"

] | 1,690 | 1,701 | 1,690 |

NONE

| null |

### System Info

`transformers` version: 4.32.0.dev0

- Platform: Linux-5.4.0-153-generic-x86_64-with-glibc2.27

- Python version: 3.9.17

- Huggingface_hub version: 0.16.4

- Safetensors version: 0.3.1

- Accelerate version: 0.21.0

- Accelerate config: not found

- PyTorch version (GPU?): 2.0.1+cu117 (True)

- Tensorflow version (GPU?): not installed (NA)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

- Using GPU in script?: <fill in>

- Using distributed or parallel set-up in script?: <fill in>

### Who can help?

_No response_

### Information

- [ ] The official example scripts

- [X] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [X] My own task or dataset (give details below)

### Reproduction

import torch

from transformers import AutoTokenizer, AutoModelForCausalLM, AutoConfig, AutoModel

from transformers import BitsAndBytesConfig

from transformers.generation.utils import GenerationConfig

import torch.nn as nn

model_name_or_path = "Baichuan-13B-Chat"

bnb_config = BitsAndBytesConfig(load_in_4bit=True, bnb_4bit_compute_dtype=torch.float16, bnb_4bit_use_double_quant=True, bnb_4bit_quant_type="nf4", llm_int8_threshold=6.0, llm_int8_has_fp16_weight=False)

tokenizer = AutoTokenizer.from_pretrained(model_name_or_path, use_fast=False, trust_remote_code=True)

model = AutoModelForCausalLM.from_pretrained(model_name_or_path, trust_remote_code=True)

model.generation_config = GenerationConfig.from_pretrained(model_name_or_path)

messages = []

messages.append({"role": "user", "content": "世界上第二高的山峰是哪座"})

response = model.chat(tokenizer, messages)

print(response)
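The `PackageNotFoundError` in the title comes from `importlib.metadata`, which raises it when a distribution is not installed. A minimal sketch for checking this before running the script is below; `missing_packages` is a hypothetical helper, and the package names are simply the ones mentioned in this thread.

```python
from importlib import metadata


def missing_packages(names):
    """Return the distribution names from `names` that are not installed."""
    missing = []
    for name in names:
        try:
            metadata.version(name)
        except metadata.PackageNotFoundError:
            missing.append(name)
    return missing


# Names taken from this thread; adjust to match your own requirements file.
print(missing_packages(["bitsandbytes", "scipy", "accelerate"]))
```

Anything the helper reports can then be installed with `pip install` or added to the requirements file.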

### Expected behavior

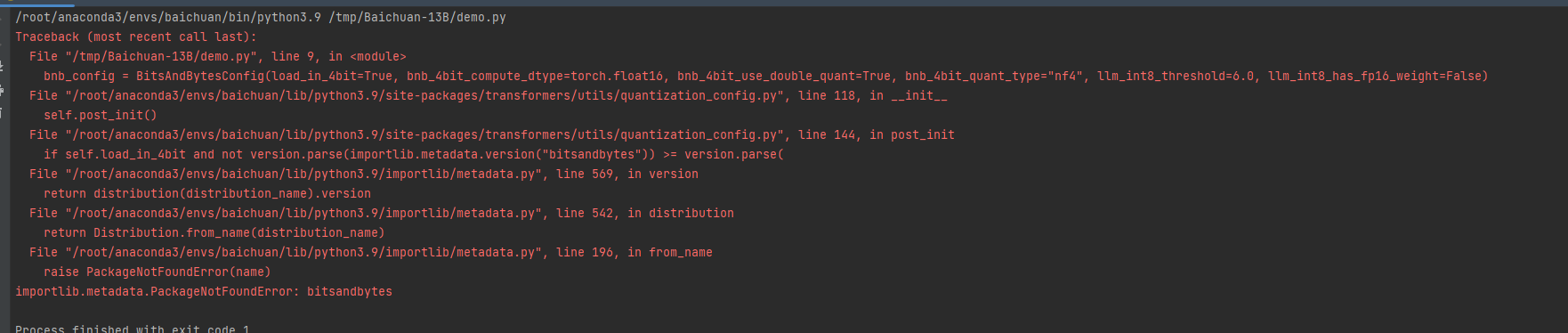

I got an error after importing `BitsAndBytesConfig` from `transformers`:

But after I installed bitsandbytes, I still got an error:

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/25210/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/25210/timeline

|

completed

| null | null |

https://api.github.com/repos/huggingface/transformers/issues/25209

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/25209/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/25209/comments

|

https://api.github.com/repos/huggingface/transformers/issues/25209/events

|

https://github.com/huggingface/transformers/pull/25209

| 1,829,156,249 |

PR_kwDOCUB6oc5WzCvk

| 25,209 |

Update InstructBLIP & Align values after rescale update

|

{

"login": "amyeroberts",

"id": 22614925,

"node_id": "MDQ6VXNlcjIyNjE0OTI1",

"avatar_url": "https://avatars.githubusercontent.com/u/22614925?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/amyeroberts",

"html_url": "https://github.com/amyeroberts",

"followers_url": "https://api.github.com/users/amyeroberts/followers",

"following_url": "https://api.github.com/users/amyeroberts/following{/other_user}",

"gists_url": "https://api.github.com/users/amyeroberts/gists{/gist_id}",

"starred_url": "https://api.github.com/users/amyeroberts/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/amyeroberts/subscriptions",

"organizations_url": "https://api.github.com/users/amyeroberts/orgs",

"repos_url": "https://api.github.com/users/amyeroberts/repos",

"events_url": "https://api.github.com/users/amyeroberts/events{/privacy}",

"received_events_url": "https://api.github.com/users/amyeroberts/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"_The documentation is not available anymore as the PR was closed or merged._",

"Agreed with your plan!",

"I also prefer 2., but I am a bit confused\r\n\r\n> Update rescale and ViVit config\r\n\r\nSo this only changes `ViVit` config and its `rescale`. And Align uses `EfficientNet` image processor. So when we change something in `ViVitf`, how this fixes the CI failing ... 🤔 ?",

 So this only changes ViVit config">
"> So this only changes ViVit config and its rescale. And Align uses EfficientNet image processor. So when we change something in ViVit, how this fixes the CI failing ... 🤔 ?\r\n\r\n@ydshieh Sorry, it wasn't super clear. The CI is failing because:\r\n* Align doesn't have its own image processor - it uses EfficientNet's\r\n* EfficientNet and ViVit both have the option to 'offset' when rescaling i.e. centering the pixel values around 0. \r\n* As both EfficientNet and ViVit's image processors have a rescale_factor of `1/255` by default, their docstrings mention that setting `rescale_offset=True` rescales between `[-1, 1]`, and they offset before rescaling, I assumed that the intention was to optionally rescale by `2 * rescale_factor` if `rescale_offset=True` for both\r\n* This was true for ViVit.\r\n* Align's image processor config values are actually already updated so `rescale_factor` is `2 * (1 / 255) = 1 / 127.5`\r\n* Therefore, the resulting pixel values from Align's image processor weren't in the range `[-1, 1]` when rescale was changed. \r\n\r\nUpdating something in ViVit doesn't fix the CI directly. I'll also have to update `rescale` for both image processors to use Align's intended logic. \r\n\r\n",

"@ydshieh I've made the updates for option 2: \r\n\r\n* Reverted to the previous `rescale` behaviour for EfficientNet: 7c3b3bb\r\n* Same behaviour is copied across to ViVit, also in 7c3b3bb\r\n* Made PRs to update the rescale values in ViVit models - `rescale_factor` 1/255 -> 1/127.5\r\n - https://huggingface.co/google/vivit-b-16x2/discussions/1#64c92542c96a10fa85bbca0b\r\n - https://huggingface.co/google/vivit-b-16x2-kinetics400/discussions/2#64c9253aaf935d3927ec1409\r\n\r\n",

"Oh I know why I get confused now \r\n\r\n> Update the values in the ViVit model config. Revert the rescale behaviour so that rescale_offset and rescale_factor are independent.\r\n\r\nI thought only ViVit would be changed in this PR, but actually you mean both ViVit and `EfficientNet` (but the revert to before #25174).\r\n\r\nThanks for the update!\r\n"

] | 1,690 | 1,691 | 1,691 |

COLLABORATOR

| null |

# What does this PR do?

After #25174 the integration tests for Align and InstructBLIP fail.

### InstructBLIP

The difference in the output logits is small. Additionally, when debugging to check the differences and resolve the failing tests, it was noticed that the InstructBLIP tests are not independent. Running

```

RUN_SLOW=1 pytest tests/models/instructblip/test_modeling_instructblip.py::InstructBlipModelIntegrationTest::test_inference_vicuna_7b

```

produces different logits than running:

```

RUN_SLOW=1 pytest tests/models/instructblip/test_modeling_instructblip.py::InstructBlipModelIntegrationTest

```

The size of the differences between these two runs was similar to the size of the differences seen with the update in `rescale`. Hence, I decided that updating the logits was OK.

### Align

The differences in align come from the model's image processor config values. Align uses EfficientNet's image processor. By default, [EfficientNet has `rescale_offset` set to `False`](https://github.com/huggingface/transformers/blob/0fd8d2aa2cc9e172a8af9af8508b2530f55ca14c/src/transformers/models/efficientnet/image_processing_efficientnet.py#L92) and [`rescale_factor` set to `1 / 255`](https://github.com/huggingface/transformers/blob/0fd8d2aa2cc9e172a8af9af8508b2530f55ca14c/src/transformers/models/efficientnet/image_processing_efficientnet.py#L91). Whereas Align has it set to `True` e.g. for [this config](https://huggingface.co/kakaobrain/align-base/blob/e96a37facc7b1f59090ece82293226b817afd6ba/preprocessor_config.json#L25) and the [`rescale_factor` set to `1 / 127.5`](https://huggingface.co/kakaobrain/align-base/blob/e96a37facc7b1f59090ece82293226b817afd6ba/preprocessor_config.json#L24).

In #25174, the `rescale` logic was updated so that if `rescale` is called with `offset=True`, the image values are rescaled by `scale * 2`. This was because I was working from the EfficientNet and ViVit `rescale_factor` values, which were both `1 / 255`, so I assumed the intention was for the factor to adjust if `rescale_offset` was `True`.

There are three options for resolving this:
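The interaction between the two settings can be sketched in plain Python. Both functions below are simplified assumptions based on the description in this PR (multiply by the factor, optionally double it, then shift by 1); they are not the library's actual `rescale` implementation and they operate on a flat list rather than an image array.

```python
def rescale_independent(pixels, scale, offset=False):
    """Multiply by `scale`; if `offset`, also subtract 1. The two are independent."""
    return [p * scale - 1 if offset else p * scale for p in pixels]


def rescale_coupled(pixels, scale, offset=False):
    """Variant where `offset=True` also doubles the scale before shifting."""
    if offset:
        return [p * scale * 2 - 1 for p in pixels]
    return [p * scale for p in pixels]


pixels = [0, 127.5, 255]

# Coupled logic with the default factor 1/255 maps [0, 255] to about [-1, 1].
print(rescale_coupled(pixels, 1 / 255, offset=True))
# Coupled logic with Align's factor 1/127.5 overshoots to about [-1, 3].
print(rescale_coupled(pixels, 1 / 127.5, offset=True))
# Independent logic with 1/127.5 lands back in about [-1, 1].
print(rescale_independent(pixels, 1 / 127.5, offset=True))
```

Under these assumptions, a checkpoint config that already stores `1 / 127.5` only produces values in `[-1, 1]` when the factor and the offset are treated independently.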

1. Update Align Config

Update the values in the Align checkpoint configs so that `rescale_factor` is `1 / 255` instead of `1 / 127.5`.

* ✅ Rescale behaviour and config flags consistent across image processors

* ❌ Remaining unexpected behaviour for anyone who has their own checkpoints of this model.

2. Update rescale and ViVit config

Update the values in the ViVit model config. Revert the rescale behaviour so that `rescale_offset` and `rescale_factor` are independent.

* ✅ Rescale behaviour and config flags consistent across image processors

* ❌ Remaining unexpected behaviour for anyone who has their own checkpoints of this model.

* 🟡 No magic behaviour (adjusting `rescale_factor`) but relies on the user correctly updating two arguments to rescale between `[-1, 1]`

3. Revert EfficientNet's rescale method to previous behaviour.

* ✅ Both models fully backwards compatible with previous rescale behaviour and config values

* ❌ Rescale behaviour and config flags not consistent across image processors

I think option 2 is best. ViVit is a newly added model, it keeps behaviour consistent between Align / EfficientNet and ViVit, and the `rescale` method doesn't do anything magic to make the other arguments work. @sgugger @ydshieh It would be good to have your opinion on what you think is best here.

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/25209/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/25209/timeline

| null | false |

{

"url": "https://api.github.com/repos/huggingface/transformers/pulls/25209",

"html_url": "https://github.com/huggingface/transformers/pull/25209",

"diff_url": "https://github.com/huggingface/transformers/pull/25209.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/25209.patch",

"merged_at": 1691056870000

}

|

https://api.github.com/repos/huggingface/transformers/issues/25208

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/25208/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/25208/comments

|

https://api.github.com/repos/huggingface/transformers/issues/25208/events

|

https://github.com/huggingface/transformers/issues/25208

| 1,829,119,355 |

I_kwDOCUB6oc5tBiV7

| 25,208 |

Getting error while implementing Falcon-7B model: AttributeError: module 'signal' has no attribute 'SIGALRM'

|

{

"login": "amitkedia007",

"id": 83700281,

"node_id": "MDQ6VXNlcjgzNzAwMjgx",

"avatar_url": "https://avatars.githubusercontent.com/u/83700281?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/amitkedia007",

"html_url": "https://github.com/amitkedia007",

"followers_url": "https://api.github.com/users/amitkedia007/followers",

"following_url": "https://api.github.com/users/amitkedia007/following{/other_user}",

"gists_url": "https://api.github.com/users/amitkedia007/gists{/gist_id}",

"starred_url": "https://api.github.com/users/amitkedia007/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/amitkedia007/subscriptions",

"organizations_url": "https://api.github.com/users/amitkedia007/orgs",

"repos_url": "https://api.github.com/users/amitkedia007/repos",

"events_url": "https://api.github.com/users/amitkedia007/events{/privacy}",

"received_events_url": "https://api.github.com/users/amitkedia007/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"Hey @amitkedia007 ! I'm suspecting you are using Windows? Have you tried [this](https://huggingface.co/tiiuae/falcon-7b-instruct/discussions/57)?\r\n\r\nMaybe adding `trust_remote_code = True` to `tokenizer = AutoTokenizer.from_pretrained(model_name)` in order to allow downloading the appropriate tokenizer would work. \r\nPlease let me know if this works. Trying to help you fast here :)",

"Yes I tried this as well, as you said. But still I am getting the same error: \r\nTraceback (most recent call last):\r\n File \"C:\\DissData\\Dissertation-Brunel\\Falcon-7b.py\", line 8, in <module>\r\n text_generator = pipeline(\"text-generation\", model=model_name, tokenizer=tokenizer)\r\n File \"C:\\Users\\2267302\\AppData\\Roaming\\Python\\Python39\\site-packages\\transformers\\pipelines\\__init__.py\", line 705, in pipeline\r\n config = AutoConfig.from_pretrained(model, _from_pipeline=task, **hub_kwargs, **model_kwargs)\r\n File \"C:\\Users\\2267302\\AppData\\Roaming\\Python\\Python39\\site-packages\\transformers\\models\\auto\\configuration_auto.py\", line 986, in from_pretrained\r\n trust_remote_code = resolve_trust_remote_code(\r\n File \"C:\\Users\\2267302\\AppData\\Roaming\\Python\\Python39\\site-packages\\transformers\\dynamic_module_utils.py\", line 535, in resolve_trust_remote_code\r\n signal.signal(signal.SIGALRM, _raise_timeout_error)\r\nAttributeError: module 'signal' has no attribute 'SIGALRM'",

"I'm going through the code, and I'm finding the dynamic_module_utils.py [verbose trace](https://github.com/huggingface/transformers/blob/main/src/transformers/dynamic_module_utils.py#L556C47-L556C47) at line 556 instead of 535. Have a look at the [function as well](https://github.com/huggingface/transformers/blob/9ca3aa01564bb81e1362288a8fdf5ac6e0e63126/src/transformers/dynamic_module_utils.py#L550)\r\nWhich version of the transformers library are you using?",

"See #25049, but basically\r\n\r\n> \"Loading this model requires you to execute some code in that repo on your local machine. \"\r\n> \"Make sure you have read the code at https://hf.co/{model_name} to avoid malicious use, then set \"\r\n> \"the option `trust_remote_code=True` to remove this error.\"",

"This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.\n\nPlease note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored."

] | 1,690 | 1,694 | 1,694 |

NONE

| null |

### System Info

### Who can help?

_No response_

### Information

- [ ] The official example scripts

- [ ] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [ ] My own task or dataset (give details below)

### Reproduction

from transformers import AutoTokenizer, pipeline

# Load the tokenizer

model_name = "tiiuae/falcon-7b"

tokenizer = AutoTokenizer.from_pretrained(model_name)

# Create a text generation pipeline

text_generator = pipeline("text-generation", model=model_name, tokenizer=tokenizer)

# Generate text

input_text = "Hello! How are you?"

output = text_generator(input_text, max_length=100, do_sample=True)

generated_text = output[0]["generated_text"]

# Print the generated text

print(generated_text)

### Expected behavior

The script should print the text generated by the model. Instead, it fails with this error:

"Traceback (most recent call last):

File "C:\DissData\Dissertation-Brunel\Falcon-7b.py", line 8, in <module>

text_generator = pipeline("text-generation", model=model_name, tokenizer=tokenizer)

File "C:\Users\2267302\AppData\Roaming\Python\Python39\site-packages\transformers\pipelines\__init__.py", line 705, in pipeline

config = AutoConfig.from_pretrained(model, _from_pipeline=task, **hub_kwargs, **model_kwargs)

File "C:\Users\2267302\AppData\Roaming\Python\Python39\site-packages\transformers\models\auto\configuration_auto.py", line 986, in from_pretrained

trust_remote_code = resolve_trust_remote_code(

File "C:\Users\2267302\AppData\Roaming\Python\Python39\site-packages\transformers\dynamic_module_utils.py", line 535, in resolve_trust_remote_code

signal.signal(signal.SIGALRM, _raise_timeout_error)

AttributeError: module 'signal' has no attribute 'SIGALRM'"

Is it possible to resolve this error as soon as possible?
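For context, the traceback ends in `signal.signal(signal.SIGALRM, ...)`, and `SIGALRM` is not defined by Python's `signal` module on Windows. The sketch below shows one portable way to guard such a timeout. It is only an illustration: `call_with_timeout` is a hypothetical helper, not part of transformers and not the library's actual fix.

```python
import signal
import threading


def call_with_timeout(func, seconds):
    """Run func() and raise TimeoutError if it exceeds `seconds` (an int).

    Hypothetical helper: uses SIGALRM where the platform defines it and
    falls back to a worker thread elsewhere (for example on Windows).
    """
    use_alarm = (
        hasattr(signal, "SIGALRM")
        and threading.current_thread() is threading.main_thread()
    )
    if use_alarm:
        def _raise_timeout(signum, frame):
            raise TimeoutError(f"call timed out after {seconds}s")

        previous = signal.signal(signal.SIGALRM, _raise_timeout)
        signal.alarm(seconds)
        try:
            return func()
        finally:
            signal.alarm(0)  # cancel any pending alarm
            # Restore the earlier handler (it may be None if it was not set from Python)
            signal.signal(
                signal.SIGALRM,
                previous if previous is not None else signal.SIG_DFL,
            )

    # Portable fallback: run the call in a daemon thread and wait for it
    outcome = {}

    def _target():
        try:
            outcome["value"] = func()
        except BaseException as exc:  # re-raised in the caller below
            outcome["error"] = exc

    worker = threading.Thread(target=_target, daemon=True)
    worker.start()
    worker.join(seconds)
    if worker.is_alive():
        raise TimeoutError(f"call timed out after {seconds}s")
    if "error" in outcome:
        raise outcome["error"]
    return outcome["value"]
```

The `hasattr` check is the important part: code that references `signal.SIGALRM` unconditionally raises the `AttributeError` shown above on Windows before any timeout logic runs.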

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/25208/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/25208/timeline

|

completed

| null | null |

https://api.github.com/repos/huggingface/transformers/issues/25207

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/25207/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/25207/comments

|

https://api.github.com/repos/huggingface/transformers/issues/25207/events

|

https://github.com/huggingface/transformers/pull/25207

| 1,828,990,463 |

PR_kwDOCUB6oc5WyeKl

| 25,207 |

[`pipeline`] revisit device check for pipeline

|

{

"login": "younesbelkada",

"id": 49240599,

"node_id": "MDQ6VXNlcjQ5MjQwNTk5",

"avatar_url": "https://avatars.githubusercontent.com/u/49240599?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/younesbelkada",

"html_url": "https://github.com/younesbelkada",

"followers_url": "https://api.github.com/users/younesbelkada/followers",

"following_url": "https://api.github.com/users/younesbelkada/following{/other_user}",

"gists_url": "https://api.github.com/users/younesbelkada/gists{/gist_id}",

"starred_url": "https://api.github.com/users/younesbelkada/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/younesbelkada/subscriptions",

"organizations_url": "https://api.github.com/users/younesbelkada/orgs",

"repos_url": "https://api.github.com/users/younesbelkada/repos",

"events_url": "https://api.github.com/users/younesbelkada/events{/privacy}",

"received_events_url": "https://api.github.com/users/younesbelkada/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] |

[

"After thinking about it, maybe this isn't the right fix; it is a mistake on the user's side to pass both a `device_map` and a `device` argument.\r\nLet me know what you think.",

"_The documentation is not available anymore as the PR was closed or merged._",

"Yeah let's raise an error!"

] | 1,690 | 1,690 | 1,690 |

CONTRIBUTOR

| null |

# What does this PR do?

Fixes https://github.com/huggingface/transformers/issues/23336#issuecomment-1657792271

Currently, `pipeline` calls `.to` on the model even if the model was loaded with accelerate. This is bad practice and can lead to unexpected behaviour if the model is loaded across multiple GPUs or offloaded to CPU/disk.

This PR revisits the check for device assignment.

Simple snippet to reproduce the issue:

```python

from transformers import AutoModelForCausalLM, AutoConfig, AutoTokenizer, pipeline
import torch

model_path = "facebook/opt-350m"

config = AutoConfig.from_pretrained(model_path)
model = AutoModelForCausalLM.from_pretrained(model_path, load_in_8bit=True, device_map="auto")
tokenizer = AutoTokenizer.from_pretrained(model_path)

params = {
    "max_length": 1024,
    "pad_token_id": 0,
    "device_map": "auto",
    "load_in_8bit": True,
    # "torch_dtype": "auto"
}

pipe = pipeline(
    task="text-generation",
    model=model,
    tokenizer=tokenizer,
    device=0,
    model_kwargs=params,
)

```
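One way to express the revised check is sketched below. This is a minimal illustration under assumptions, not the code merged in this PR: `resolve_pipeline_device` is a hypothetical function, and it assumes that a model dispatched by accelerate exposes its placement as an `hf_device_map` mapping.

```python
def resolve_pipeline_device(device=None, hf_device_map=None):
    """Decide which device the pipeline should move the model to.

    Hypothetical helper. Returns None when the pipeline must not call
    `.to` on the model, either because accelerate already placed it or
    because no device was requested.
    """
    if hf_device_map is not None:
        if device is not None:
            # Both placements were requested: refuse instead of guessing
            raise ValueError(
                "The model was loaded with a device map, so `device` must not "
                "be passed to the pipeline as well. Drop one of the two."
            )
        return None  # leave the accelerate placement untouched
    return device
```

With this shape, the snippet above would fail fast with a clear message rather than moving an 8-bit, auto-dispatched model with `.to`.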

cc @sgugger @Narsil

|

{

"url": "https://api.github.com/repos/huggingface/transformers/issues/25207/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/transformers/issues/25207/timeline

| null | false |

{

"url": "https://api.github.com/repos/huggingface/transformers/pulls/25207",

"html_url": "https://github.com/huggingface/transformers/pull/25207",

"diff_url": "https://github.com/huggingface/transformers/pull/25207.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/25207.patch",

"merged_at": 1690821802000

}

|

https://api.github.com/repos/huggingface/transformers/issues/25206

|

https://api.github.com/repos/huggingface/transformers

|

https://api.github.com/repos/huggingface/transformers/issues/25206/labels{/name}

|

https://api.github.com/repos/huggingface/transformers/issues/25206/comments

|

https://api.github.com/repos/huggingface/transformers/issues/25206/events

|

https://github.com/huggingface/transformers/pull/25206

| 1,828,925,185 |

PR_kwDOCUB6oc5WyPw1

| 25,206 |

[`PreTrainedModel`] Wrap `cuda` and `to` method correctly

|

{

"login": "younesbelkada",

"id": 49240599,

"node_id": "MDQ6VXNlcjQ5MjQwNTk5",

"avatar_url": "https://avatars.githubusercontent.com/u/49240599?v=4",

"gravatar_id": "",