CVPR 2023 Accepted Paper Meta Info Dataset

This dataset is collected from the CVPR 2023 Open Access website (https://openaccess.thecvf.com/CVPR2023) and from the DeepNLP arXiv paper pages (http://www.deepnlp.org/content/paper/cvpr2023). Researchers interested in analyzing CVPR 2023 accepted papers and emerging research trends can use the already cleaned JSON files directly. Each row contains the meta information of one paper accepted at the CVPR 2023 conference. To explore more AI and robotics papers (NIPS/ICML/ICLR/IROS/ICRA, etc.) and AI equations, feel free to use the Equation Search Engine (http://www.deepnlp.org/search/equation), as well as the AI Agent Search Engine (http://www.deepnlp.org/search/agent) to find deployed AI apps and agents in your domain.
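As a starting point for trend analysis, the sketch below loads the records and counts how many papers mention given keywords in their title or abstract. It assumes a JSON Lines layout (one JSON object per line), which is only inferred from "each row" above; if the files are a single JSON array, replace the loader with one `json.load` call. The function names `load_papers` and `keyword_counts` are hypothetical, not part of the dataset.

```python
import json
from collections import Counter
from typing import Iterable


def load_papers(lines: Iterable[str]) -> list[dict]:
    """Parse one JSON object per non-empty line.

    Assumption: the files use a JSON Lines layout. Any iterable of
    strings works, including an open text file.
    """
    return [json.loads(line) for line in lines if line.strip()]


def keyword_counts(papers: Iterable[dict], keywords: list[str]) -> Counter:
    """Count the papers whose title or abstract mentions each keyword.

    Matching is a case-insensitive substring test; a paper is counted
    at most once per keyword.
    """
    counts: Counter = Counter()
    for paper in papers:
        text = f"{paper.get('title', '')} {paper.get('abstract', '')}".lower()
        for kw in keywords:
            if kw.lower() in text:
                counts[kw] += 1
    return counts
```

Usage, with a hypothetical filename: `with open("cvpr2023_papers.jsonl", encoding="utf-8") as f: print(keyword_counts(load_papers(f), ["diffusion", "NeRF", "attention"]))`. Substring matching is deliberately simple; tokenization or phrase matching would reduce false hits such as "attention" inside "self-attention-free".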

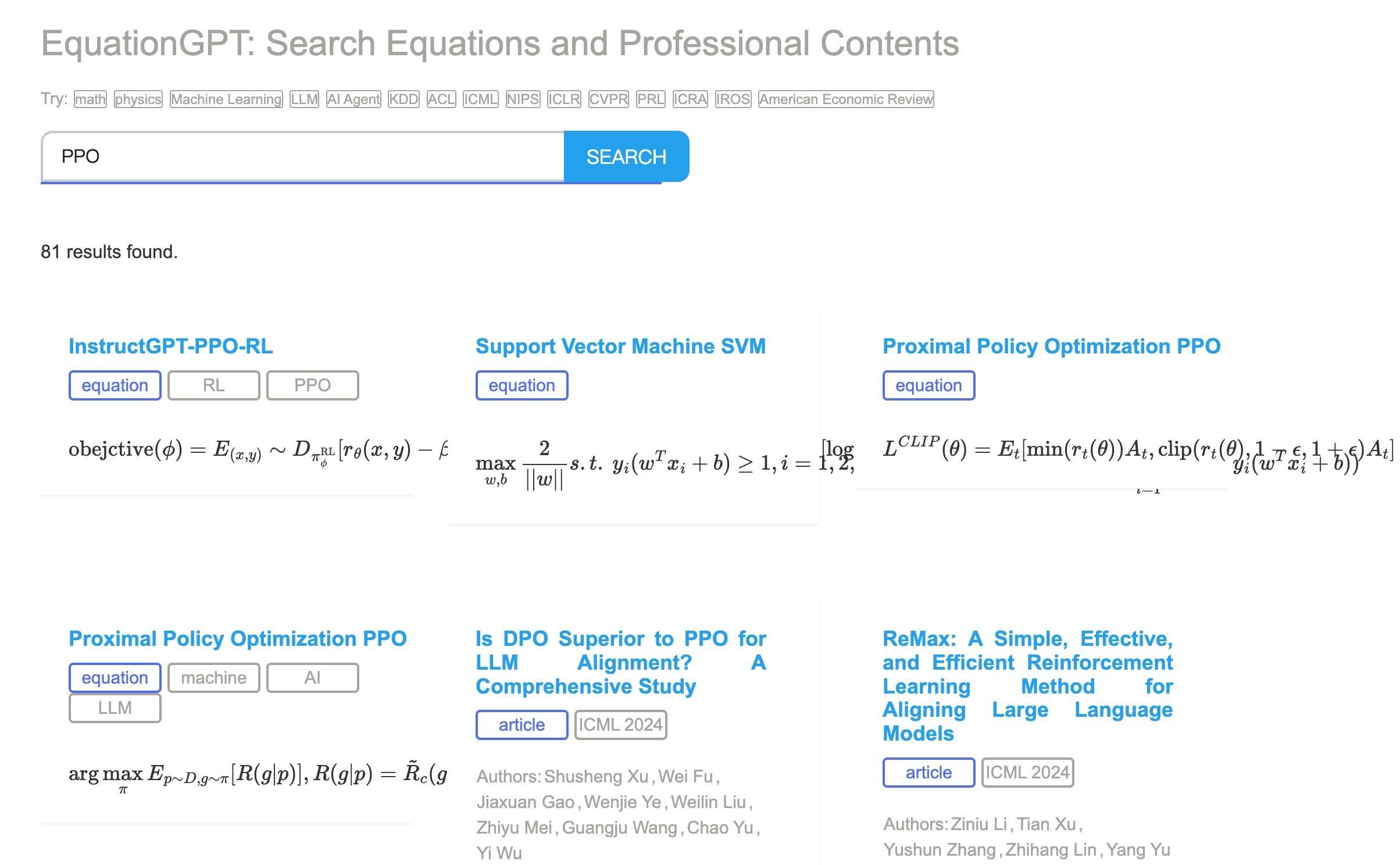

Equation LaTeX Code and Paper Search Engine

Meta Information of a Paper in the JSON File

{
  "title": "Dual Cross-Attention Learning for Fine-Grained Visual Categorization and Object Re-Identification",
  "authors": "Haowei Zhu, Wenjing Ke, Dong Li, Ji Liu, Lu Tian, Yi Shan",
  "abstract": "Recently, self-attention mechanisms have shown impressive performance in various NLP and CV tasks, which can help capture sequential characteristics and derive global information. In this work, we explore how to extend self-attention modules to better learn subtle feature embeddings for recognizing fine-grained objects, e.g., different bird species or person identities. To this end, we propose a dual cross-attention learning (DCAL) algorithm to coordinate with self-attention learning. First, we propose global-local cross-attention (GLCA) to enhance the interactions between global images and local high-response regions, which can help reinforce the spatial-wise discriminative clues for recognition. Second, we propose pair-wise cross-attention (PWCA) to establish the interactions between image pairs. PWCA can regularize the attention learning of an image by treating another image as distractor and will be removed during inference. We observe that DCAL can reduce misleading attentions and diffuse the attention response to discover more complementary parts for recognition. We conduct extensive evaluations on fine-grained visual categorization and object re-identification. Experiments demonstrate that DCAL performs on par with state-of-the-art methods and consistently improves multiple self-attention baselines, e.g., surpassing DeiT-Tiny and ViT-Base by 2.8% and 2.4% mAP on MSMT17, respectively.",
  "pdf": "https://openaccess.thecvf.com/content/CVPR2022/papers/Zhu_Dual_Cross-Attention_Learning_for_Fine-Grained_Visual_Categorization_and_Object_Re-Identification_CVPR_2022_paper.pdf",
  "supp": "https://openaccess.thecvf.com/content/CVPR2022/supplemental/Zhu_Dual_Cross-Attention_Learning_CVPR_2022_supplemental.pdf",
  "arXiv": "http://arxiv.org/abs/2205.02151",
  "bibtex": "https://openaccess.thecvf.com",
  "url": "https://openaccess.thecvf.com/content/CVPR2022/html/Zhu_Dual_Cross-Attention_Learning_for_Fine-Grained_Visual_Categorization_and_Object_Re-Identification_CVPR_2022_paper.html",
  "detail_url": "https://openaccess.thecvf.com/content/CVPR2022/html/Zhu_Dual_Cross-Attention_Learning_for_Fine-Grained_Visual_Categorization_and_Object_Re-Identification_CVPR_2022_paper.html",
  "tags": "CVPR 2022"
}
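Several fields in the record above are flat strings that usually need light parsing before analysis: `authors` is a comma-separated list, `arXiv` is an abstract-page URL, and `tags` carries the venue and year. Note that the sample record is tagged "CVPR 2022", so extracting the year from `tags` may be a useful filter. The sketch below converts one record into a typed object; the `Paper` class, `parse_record` function, and regular expressions are hypothetical helpers, and it assumes new-style arXiv IDs (such as 2205.02151) and comma-separated author names as in the sample.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Assumption: new-style arXiv IDs (YYMM.NNNNN), optionally with a version suffix.
ARXIV_ABS = re.compile(r"arxiv\.org/abs/(\d{4}\.\d{4,5})(?:v\d+)?", re.IGNORECASE)
# First four-digit year in the tag string, e.g. "CVPR 2022" -> 2022.
YEAR = re.compile(r"\b(?:19|20)\d{2}\b")


@dataclass
class Paper:
    title: str
    authors: list[str]
    arxiv_id: Optional[str]
    year: Optional[int]
    pdf: str


def parse_record(rec: dict) -> Paper:
    """Turn one raw JSON record into a Paper, tolerating missing fields."""
    # Assumption: authors are separated by commas, as in the sample record.
    authors = [a.strip() for a in (rec.get("authors") or "").split(",") if a.strip()]
    arxiv_match = ARXIV_ABS.search(rec.get("arXiv") or "")
    year_match = YEAR.search(rec.get("tags") or "")
    return Paper(
        title=(rec.get("title") or "").strip(),
        authors=authors,
        arxiv_id=arxiv_match.group(1) if arxiv_match else None,
        year=int(year_match.group(0)) if year_match else None,
        pdf=rec.get("pdf") or "",
    )
```

Returning `None` for an absent arXiv link or year, rather than raising, keeps bulk processing going when some records lack those fields; filter on `paper.year == 2023` afterwards if only CVPR 2023 entries are wanted.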
