file_name

stringlengths 3

137

| prefix

stringlengths 0

918k

| suffix

stringlengths 0

962k

| middle

stringlengths 0

812k

|

|---|---|---|---|

smoke.rs

|

use futures::join;

use lazy_static::lazy_static;

use native_tls::{Certificate, Identity};

use std::{fs, io::Error, path::PathBuf, process::Command};

use tokio::{

io::{AsyncReadExt, AsyncWrite, AsyncWriteExt},

net::{TcpListener, TcpStream},

};

use tokio_native_tls::{TlsAcceptor, TlsConnector};

lazy_static! {

static ref CERT_DIR: PathBuf = {

if cfg!(unix) {

let dir = tempfile::TempDir::new().unwrap();

let path = dir.path().to_str().unwrap();

Command::new("sh")

.arg("-c")

.arg(format!("./scripts/generate-certificate.sh {}", path))

.output()

.expect("failed to execute process");

dir.into_path()

} else {

PathBuf::from("tests")

}

};

}

#[tokio::test]

async fn client_to_server() {

let srv = TcpListener::bind("127.0.0.1:0").await.unwrap();

let addr = srv.local_addr().unwrap();

let (server_tls, client_tls) = context();

// Create a future to accept one socket, connect the ssl stream, and then

// read all the data from it.

let server = async move {

let (socket, _) = srv.accept().await.unwrap();

let mut socket = server_tls.accept(socket).await.unwrap();

// Verify access to all of the nested inner streams (e.g. so that peer

// certificates can be accessed). This is just a compile check.

let native_tls_stream: &native_tls::TlsStream<_> = socket.get_ref();

let _peer_cert = native_tls_stream.peer_certificate().unwrap();

let allow_std_stream: &tokio_native_tls::AllowStd<_> = native_tls_stream.get_ref();

let _tokio_tcp_stream: &tokio::net::TcpStream = allow_std_stream.get_ref();

let mut data = Vec::new();

socket.read_to_end(&mut data).await.unwrap();

data

};

// Create a future to connect to our server, connect the ssl stream, and

// then write a bunch of data to it.

let client = async move {

let socket = TcpStream::connect(&addr).await.unwrap();

let socket = client_tls.connect("foobar.com", socket).await.unwrap();

copy_data(socket).await

};

// Finally, run everything!

let (data, _) = join!(server, client);

// assert_eq!(amt, AMT);

assert!(data == vec![9; AMT]);

}

#[tokio::test]

async fn server_to_client() {

// Create a server listening on a port, then figure out what that port is

let srv = TcpListener::bind("127.0.0.1:0").await.unwrap();

let addr = srv.local_addr().unwrap();

let (server_tls, client_tls) = context();

let server = async move {

let (socket, _) = srv.accept().await.unwrap();

let socket = server_tls.accept(socket).await.unwrap();

copy_data(socket).await

};

let client = async move {

let socket = TcpStream::connect(&addr).await.unwrap();

let mut socket = client_tls.connect("foobar.com", socket).await.unwrap();

let mut data = Vec::new();

socket.read_to_end(&mut data).await.unwrap();

data

};

// Finally, run everything!

let (_, data) = join!(server, client);

assert!(data == vec![9; AMT]);

}

#[tokio::test]

async fn

|

() {

const AMT: usize = 1024;

let srv = TcpListener::bind("127.0.0.1:0").await.unwrap();

let addr = srv.local_addr().unwrap();

let (server_tls, client_tls) = context();

let server = async move {

let (socket, _) = srv.accept().await.unwrap();

let mut socket = server_tls.accept(socket).await.unwrap();

let mut amt = 0;

for b in std::iter::repeat(9).take(AMT) {

let data = [b as u8];

socket.write_all(&data).await.unwrap();

amt += 1;

}

amt

};

let client = async move {

let socket = TcpStream::connect(&addr).await.unwrap();

let mut socket = client_tls.connect("foobar.com", socket).await.unwrap();

let mut data = Vec::new();

loop {

let mut buf = [0; 1];

match socket.read_exact(&mut buf).await {

Ok(_) => data.extend_from_slice(&buf),

Err(ref err) if err.kind() == std::io::ErrorKind::UnexpectedEof => break,

Err(err) => panic!(err),

}

}

data

};

let (amt, data) = join!(server, client);

assert_eq!(amt, AMT);

assert!(data == vec![9; AMT as usize]);

}

fn context() -> (TlsAcceptor, TlsConnector) {

let pkcs12 = fs::read(CERT_DIR.join("identity.p12")).unwrap();

let der = fs::read(CERT_DIR.join("root-ca.der")).unwrap();

let identity = Identity::from_pkcs12(&pkcs12, "mypass").unwrap();

let acceptor = native_tls::TlsAcceptor::builder(identity).build().unwrap();

let cert = Certificate::from_der(&der).unwrap();

let connector = native_tls::TlsConnector::builder()

.add_root_certificate(cert)

.build()

.unwrap();

(acceptor.into(), connector.into())

}

const AMT: usize = 128 * 1024;

async fn copy_data<W: AsyncWrite + Unpin>(mut w: W) -> Result<usize, Error> {

let mut data = vec![9; AMT as usize];

let mut amt = 0;

while !data.is_empty() {

let written = w.write(&data).await?;

if written <= data.len() {

amt += written;

data.resize(data.len() - written, 0);

} else {

w.write_all(&data).await?;

amt += data.len();

break;

}

println!("remaining: {}", data.len());

}

Ok(amt)

}

|

one_byte_at_a_time

|

scene_setting.py

|

#!/usr/bin/env python3

# -*- coding: utf-8 -*-

"""

@author: DIYer22@github

@mail: [email protected]

Created on Thu Jan 16 18:17:20 2020

"""

from boxx import *

from boxx import deg2rad, np, pi

import bpy

import random

def set_cam_pose(cam_radius=1, cam_deg=45, cam_x_deg=None, cam=None):

cam_rad = deg2rad(cam_deg)

if cam_x_deg is None:

cam_x_deg = random.uniform(0, 360)

cam_x_rad = deg2rad(cam_x_deg)

z = cam_radius * np.sin(cam_rad)

xy = (cam_radius ** 2 - z ** 2) ** 0.5

x = xy * np.cos(cam_x_rad)

y = xy * np.sin(cam_x_rad)

cam = cam or bpy.data.objects["Camera"]

cam.location = x, y, z

cam.rotation_euler = pi / 2 - cam_rad, 0.1, pi / 2 + cam_x_rad

cam.scale = (0.1,) * 3

return cam

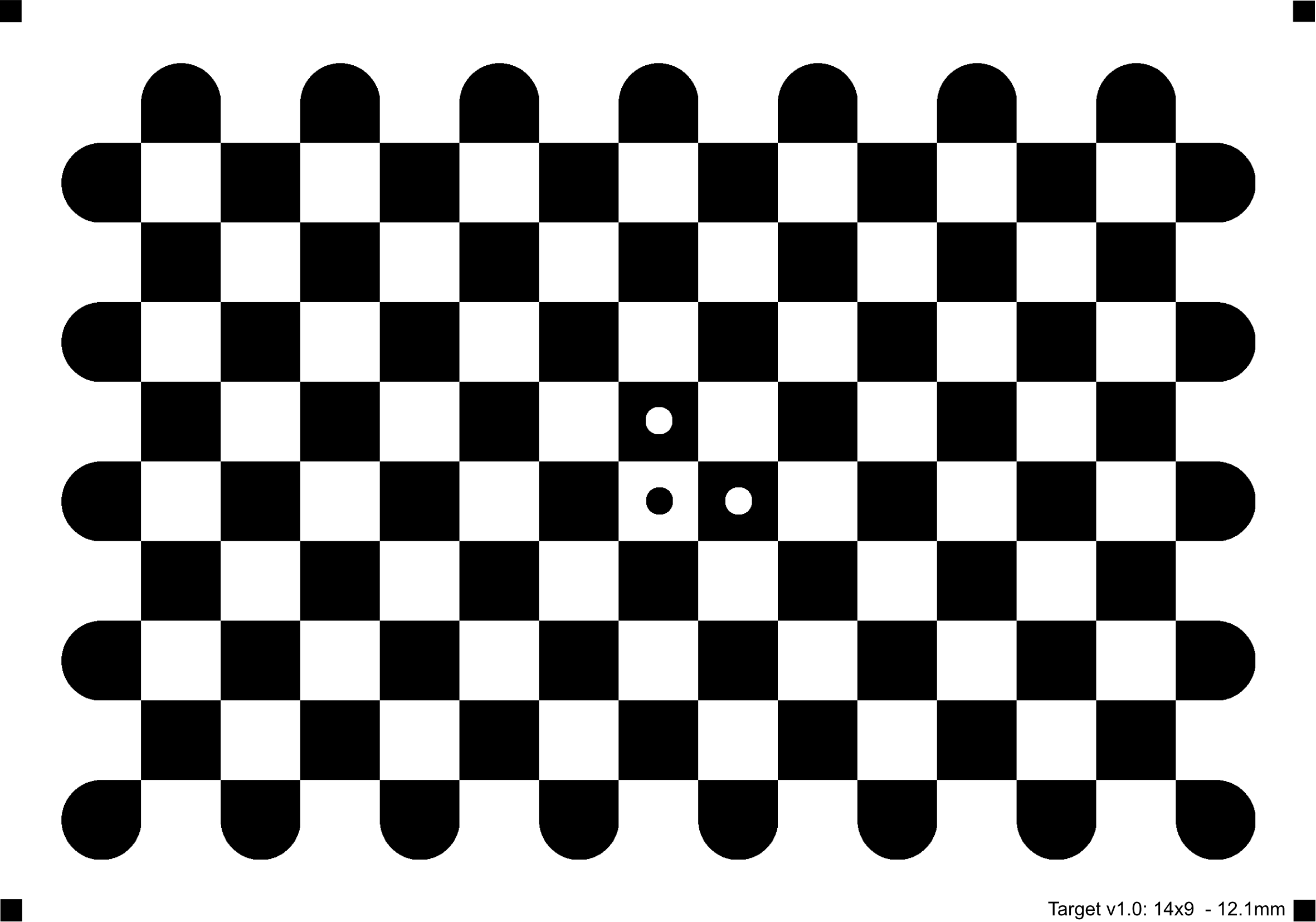

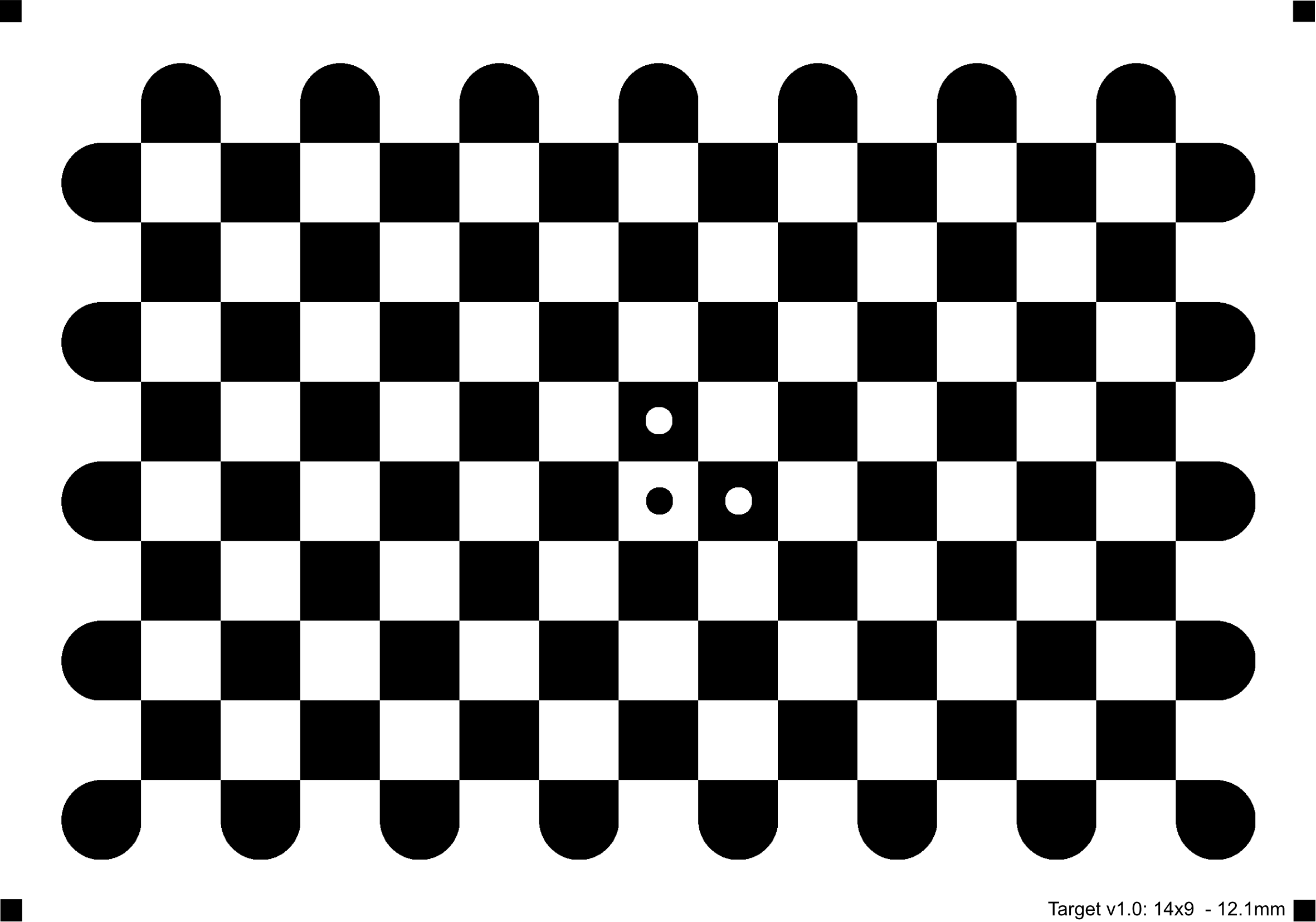

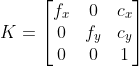

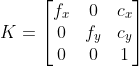

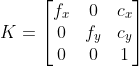

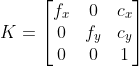

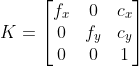

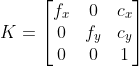

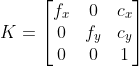

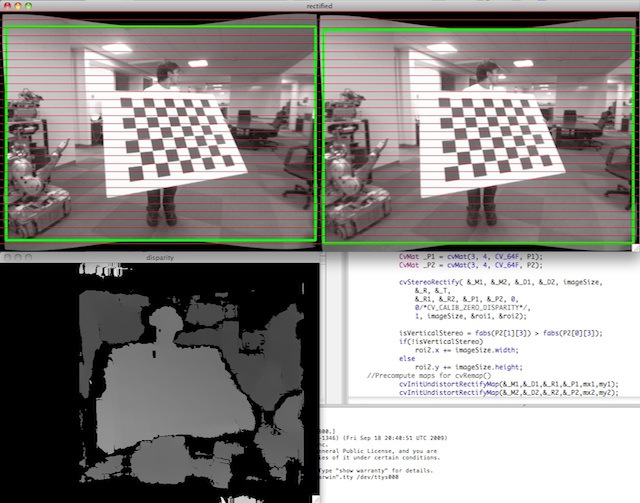

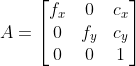

def set_cam_intrinsic(cam, intrinsic_K, hw=None):

"""

K = [[f_x, 0, c_x],

[0, f_y, c_y],

[0, 0, 1]]

Refrence: https://www.rojtberg.net/1601/from-blender-to-opencv-camera-and-back/

"""

if hw is None:

scene = bpy.context.scene

hw = scene.render.resolution_y, scene.render.resolution_x

near = lambda x, y=0, eps=1e-5: abs(x - y) < eps

assert near(intrinsic_K[0][1], 0)

assert near(intrinsic_K[1][0], 0)

h, w = hw

f_x = intrinsic_K[0][0]

f_y = intrinsic_K[1][1]

c_x = intrinsic_K[0][2]

c_y = intrinsic_K[1][2]

cam = cam.data

cam.shift_x = -(c_x / w - 0.5)

cam.shift_y = (c_y - 0.5 * h) / w

cam.lens = f_x / w * cam.sensor_width

pixel_aspect = f_y / f_x

scene.render.pixel_aspect_x = 1.0

scene.render.pixel_aspect_y = pixel_aspect

def remove_useless_data():

"""

remove all data and release RAM

"""

for block in bpy.data.meshes:

if block.users == 0:

bpy.data.meshes.remove(block)

for block in bpy.data.materials:

if block.users == 0:

bpy.data.materials.remove(block)

for block in bpy.data.textures:

if block.users == 0:

bpy.data.textures.remove(block)

for block in bpy.data.images:

if block.users == 0:

bpy.data.images.remove(block)

def clear_all():

[

bpy.data.objects.remove(obj)

for obj in bpy.data.objects

if obj.type in ("MESH", "LIGHT", "CURVE")

]

remove_useless_data()

def

|

(mode="SOLID", screens=[]):

"""

Performs an action analogous to clicking on the display/shade button of

the 3D view. Mode is one of "RENDERED", "MATERIAL", "SOLID", "WIREFRAME".

The change is applied to the given collection of bpy.data.screens.

If none is given, the function is applied to bpy.context.screen (the

active screen) only. E.g. set all screens to rendered mode:

set_shading_mode("RENDERED", bpy.data.screens)

"""

screens = screens if screens else [bpy.context.screen]

for s in screens:

for spc in s.areas:

if spc.type == "VIEW_3D":

spc.spaces[0].shading.type = mode

break # we expect at most 1 VIEW_3D space

def add_stage(size=2, transparency=False):

"""

add PASSIVE rigidbody cube for physic stage or depth background

Parameters

----------

size : float, optional

size of stage. The default is 2.

transparency : bool, optional

transparency for rgb but set limit for depth. The default is False.

"""

import bpycv

bpy.ops.mesh.primitive_cube_add(size=size, location=(0, 0, -size / 2))

stage = bpy.context.active_object

stage.name = "stage"

with bpycv.activate_obj(stage):

bpy.ops.rigidbody.object_add()

stage.rigid_body.type = "PASSIVE"

if transparency:

stage.rigid_body.use_margin = True

stage.rigid_body.collision_margin = 0.04

stage.location.z -= stage.rigid_body.collision_margin

material = bpy.data.materials.new("transparency_stage_bpycv")

material.use_nodes = True

material.node_tree.nodes.clear()

with bpycv.activate_node_tree(material.node_tree):

bpycv.Node("ShaderNodeOutputMaterial").Surface = bpycv.Node(

"ShaderNodeBsdfPrincipled", Alpha=0

).BSDF

stage.data.materials.append(material)

return stage

if __name__ == "__main__":

pass

|

set_shading_mode

|

build.rs

|

use std::env;

use std::path::PathBuf;

#[cfg(feature = "libsodium-bundled")]

use std::process::Command;

#[cfg(all(feature = "libsodium-bundled", not(target_os = "windows")))]

const LIBSODIUM_NAME: &'static str = "libsodium-1.0.18";

#[cfg(all(feature = "libsodium-bundled", not(target_os = "windows")))]

const LIBSODIUM_URL: &'static str =

"https://download.libsodium.org/libsodium/releases/libsodium-1.0.18.tar.gz";

// skip the build script when building doc on docs.rs

#[cfg(feature = "docs-rs")]

fn main() {}

#[cfg(not(feature = "docs-rs"))]

fn main() {

#[cfg(feature = "libsodium-bundled")]

download_and_install_libsodium();

#[cfg(not(feature = "libsodium-bundled"))]

{

println!("cargo:rerun-if-env-changed=SODIUM_LIB_DIR");

println!("cargo:rerun-if-env-changed=SODIUM_STATIC");

}

// add libsodium link options

if let Ok(lib_dir) = env::var("SODIUM_LIB_DIR") {

println!("cargo:rustc-link-search=native={}", lib_dir);

let mode = match env::var_os("SODIUM_STATIC") {

Some(_) => "static",

None => "dylib",

};

if cfg!(target_os = "windows") {

println!("cargo:rustc-link-lib={0}=libsodium", mode);

} else {

println!("cargo:rustc-link-lib={0}=sodium", mode);

}

} else {

// the static linking doesn't work if libsodium is installed

// under '/usr' dir, in that case use the environment variables

// mentioned above

pkg_config::Config::new()

.atleast_version("1.0.18")

.statik(true)

.probe("libsodium")

.unwrap();

}

// add liblz4 link options

if let Ok(lib_dir) = env::var("LZ4_LIB_DIR") {

println!("cargo:rustc-link-search=native={}", lib_dir);

if cfg!(target_os = "windows") {

println!("cargo:rustc-link-lib=static=liblz4");

} else {

println!("cargo:rustc-link-lib=static=lz4");

}

} else {

// build lz4 static library

let out_dir = PathBuf::from(env::var("OUT_DIR").unwrap());

if !out_dir.join("liblz4.a").exists() {

let mut compiler = cc::Build::new();

|

.file("vendor/lz4/lz4frame.c")

.file("vendor/lz4/lz4hc.c")

.file("vendor/lz4/xxhash.c")

.define("XXH_NAMESPACE", "LZ4_")

.opt_level(3)

.debug(false)

.pic(true)

.shared_flag(false);

if !cfg!(windows) {

compiler.static_flag(true);

}

compiler.compile("liblz4.a");

}

}

}

// This downloads function and builds the libsodium from source for linux and

// unix targets.

// The steps are taken from the libsodium installation instructions:

// https://libsodium.gitbook.io/doc/installation

// effectively:

// $ ./configure

// $ make && make check

// $ sudo make install

#[cfg(all(feature = "libsodium-bundled", not(target_os = "windows")))]

fn download_and_install_libsodium() {

let out_dir = PathBuf::from(env::var("OUT_DIR").unwrap());

let source_dir = out_dir.join(LIBSODIUM_NAME);

let prefix_dir = out_dir.join("libsodium");

let sodium_lib_dir = prefix_dir.join("lib");

let src_file_name = format!("{}.tar.gz", LIBSODIUM_NAME);

// check if command tools exist

Command::new("curl")

.arg("--version")

.output()

.expect("curl not found");

Command::new("tar")

.arg("--version")

.output()

.expect("tar not found");

Command::new("gpg")

.arg("--version")

.output()

.expect("gpg not found");

Command::new("make")

.arg("--version")

.output()

.expect("make not found");

if !source_dir.exists() {

// download source code file

let output = Command::new("curl")

.current_dir(&out_dir)

.args(&[LIBSODIUM_URL, "-sSfL", "-o", &src_file_name])

.output()

.expect("failed to download libsodium");

assert!(output.status.success());

// download signature file

let sig_file_name = format!("{}.sig", src_file_name);

let sig_url = format!("{}.sig", LIBSODIUM_URL);

let output = Command::new("curl")

.current_dir(&out_dir)

.args(&[&sig_url, "-sSfL", "-o", &sig_file_name])

.output()

.expect("failed to download libsodium signature file");

assert!(output.status.success());

// import libsodium author's public key

let output = Command::new("gpg")

.arg("--import")

.arg("libsodium.gpg.key")

.output()

.expect("failed to import libsodium author's gpg key");

assert!(output.status.success());

// verify signature

let output = Command::new("gpg")

.current_dir(&out_dir)

.arg("--verify")

.arg(&sig_file_name)

.output()

.expect("failed to verify libsodium file");

assert!(output.status.success());

// unpack source code files

let output = Command::new("tar")

.current_dir(&out_dir)

.args(&["zxf", &src_file_name])

.output()

.expect("failed to unpack libsodium");

assert!(output.status.success());

}

if !sodium_lib_dir.exists() {

let configure = source_dir.join("./configure");

let output = Command::new(&configure)

.current_dir(&source_dir)

.args(&[std::path::Path::new("--prefix"), &prefix_dir])

.output()

.expect("failed to execute configure");

assert!(output.status.success());

let output = Command::new("make")

.current_dir(&source_dir)

.output()

.expect("failed to execute make");

assert!(output.status.success());

let output = Command::new("make")

.current_dir(&source_dir)

.arg("install")

.output()

.expect("failed to execute make install");

assert!(output.status.success());

}

assert!(

&sodium_lib_dir.exists(),

"libsodium lib directory was not created."

);

env::set_var("SODIUM_LIB_DIR", &sodium_lib_dir);

env::set_var("SODIUM_STATIC", "true");

}

// This downloads function and builds the libsodium from source for windows msvc target.

// The binaries are pre-compiled, so we simply download and link.

// The binary is compressed in zip format.

#[cfg(all(

feature = "libsodium-bundled",

target_os = "windows",

target_env = "msvc"

))]

fn download_and_install_libsodium() {

use std::fs;

use std::fs::OpenOptions;

use std::io;

use std::path::PathBuf;

#[cfg(target_env = "msvc")]

static LIBSODIUM_ZIP: &'static str = "https://download.libsodium.org/libsodium/releases/libsodium-1.0.18-msvc.zip";

#[cfg(target_env = "mingw")]

static LIBSODIUM_ZIP: &'static str = "https://download.libsodium.org/libsodium/releases/libsodium-1.0.18-mingw.tar.gz";

let out_dir = PathBuf::from(env::var("OUT_DIR").unwrap());

let sodium_lib_dir = out_dir.join("libsodium");

if !sodium_lib_dir.exists() {

fs::create_dir(&sodium_lib_dir).unwrap();

}

let sodium_lib_file_path = sodium_lib_dir.join("libsodium.lib");

if !sodium_lib_file_path.exists() {

let mut tmpfile = tempfile::tempfile().unwrap();

reqwest::get(LIBSODIUM_ZIP)

.unwrap()

.copy_to(&mut tmpfile)

.unwrap();

let mut zip = zip::ZipArchive::new(tmpfile).unwrap();

#[cfg(target_arch = "x86_64")]

let mut lib = zip

.by_name("x64/Release/v142/static/libsodium.lib")

.unwrap();

#[cfg(target_arch = "x86")]

let mut lib = zip

.by_name("Win32/Release/v142/static/libsodium.lib")

.unwrap();

#[cfg(not(any(target_arch = "x86_64", target_arch = "x86")))]

compile_error!("Bundled libsodium is only supported on x86 or x86_64 target architecture.");

let mut libsodium_file = OpenOptions::new()

.create(true)

.write(true)

.open(&sodium_lib_file_path)

.unwrap();

io::copy(&mut lib, &mut libsodium_file).unwrap();

}

assert!(

&sodium_lib_dir.exists(),

"libsodium lib directory was not created."

);

env::set_var("SODIUM_LIB_DIR", &sodium_lib_dir);

env::set_var("SODIUM_STATIC", "true");

}

// This downloads function and builds the libsodium from source for windows mingw target.

// The binaries are pre-compiled, so we simply download and link.

// The binary is compressed in tar.gz format.

#[cfg(all(

feature = "libsodium-bundled",

target_os = "windows",

target_env = "gnu"

))]

fn download_and_install_libsodium() {

use libflate::non_blocking::gzip::Decoder;

use std::fs;

use std::fs::OpenOptions;

use std::io;

use std::path::PathBuf;

use tar::Archive;

static LIBSODIUM_ZIP: &'static str = "https://download.libsodium.org/libsodium/releases/libsodium-1.0.18-mingw.tar.gz";

let out_dir = PathBuf::from(env::var("OUT_DIR").unwrap());

let sodium_lib_dir = out_dir.join("libsodium");

if !sodium_lib_dir.exists() {

fs::create_dir(&sodium_lib_dir).unwrap();

}

let sodium_lib_file_path = sodium_lib_dir.join("libsodium.lib");

if !sodium_lib_file_path.exists() {

let response = reqwest::get(LIBSODIUM_ZIP).unwrap();

let decoder = Decoder::new(response);

let mut ar = Archive::new(decoder);

#[cfg(target_arch = "x86_64")]

let filename = PathBuf::from("libsodium-win64/lib/libsodium.a");

#[cfg(target_arch = "x86")]

let filename = PathBuf::from("libsodium-win32/lib/libsodium.a");

#[cfg(not(any(target_arch = "x86_64", target_arch = "x86")))]

compile_error!("Bundled libsodium is only supported on x86 or x86_64 target architecture.");

for file in ar.entries().unwrap() {

let mut f = file.unwrap();

if f.path().unwrap() == *filename {

let mut libsodium_file = OpenOptions::new()

.create(true)

.write(true)

.open(&sodium_lib_file_path)

.unwrap();

io::copy(&mut f, &mut libsodium_file).unwrap();

break;

}

}

}

assert!(

&sodium_lib_dir.exists(),

"libsodium lib directory was not created."

);

env::set_var("SODIUM_LIB_DIR", &sodium_lib_dir);

env::set_var("SODIUM_STATIC", "true");

}

|

compiler

.file("vendor/lz4/lz4.c")

|

proxy.go

|

// Package proxy is a cli proxy

package proxy

import (

"os"

"strings"

"time"

"github.com/go-acme/lego/v3/providers/dns/cloudflare"

"github.com/micro/cli/v2"

"github.com/micro/go-micro/v2"

"github.com/micro/go-micro/v2/api/server/acme"

"github.com/micro/go-micro/v2/api/server/acme/autocert"

"github.com/micro/go-micro/v2/api/server/acme/certmagic"

"github.com/micro/go-micro/v2/auth"

bmem "github.com/micro/go-micro/v2/broker/memory"

"github.com/micro/go-micro/v2/client"

mucli "github.com/micro/go-micro/v2/client"

"github.com/micro/go-micro/v2/config/cmd"

log "github.com/micro/go-micro/v2/logger"

"github.com/micro/go-micro/v2/proxy"

"github.com/micro/go-micro/v2/proxy/grpc"

"github.com/micro/go-micro/v2/proxy/http"

"github.com/micro/go-micro/v2/proxy/mucp"

"github.com/micro/go-micro/v2/registry"

rmem "github.com/micro/go-micro/v2/registry/memory"

"github.com/micro/go-micro/v2/router"

rs "github.com/micro/go-micro/v2/router/service"

"github.com/micro/go-micro/v2/server"

sgrpc "github.com/micro/go-micro/v2/server/grpc"

cfstore "github.com/micro/go-micro/v2/store/cloudflare"

"github.com/micro/go-micro/v2/sync/lock/memory"

"github.com/micro/go-micro/v2/util/mux"

"github.com/micro/go-micro/v2/util/wrapper"

"github.com/micro/micro/v2/internal/helper"

)

var (

// Name of the proxy

Name = "go.micro.proxy"

// The address of the proxy

Address = ":8081"

// the proxy protocol

Protocol = "grpc"

// The endpoint host to route to

Endpoint string

// ACME (Cert management)

ACMEProvider = "autocert"

ACMEChallengeProvider = "cloudflare"

ACMECA = acme.LetsEncryptProductionCA

)

func run(ctx *cli.Context, srvOpts ...micro.Option)

|

func Commands(options ...micro.Option) []*cli.Command {

command := &cli.Command{

Name: "proxy",

Usage: "Run the service proxy",

Flags: []cli.Flag{

&cli.StringFlag{

Name: "router",

Usage: "Set the router to use e.g default, go.micro.router",

EnvVars: []string{"MICRO_ROUTER"},

},

&cli.StringFlag{

Name: "router_address",

Usage: "Set the router address",

EnvVars: []string{"MICRO_ROUTER_ADDRESS"},

},

&cli.StringFlag{

Name: "address",

Usage: "Set the proxy http address e.g 0.0.0.0:8081",

EnvVars: []string{"MICRO_PROXY_ADDRESS"},

},

&cli.StringFlag{

Name: "protocol",

Usage: "Set the protocol used for proxying e.g mucp, grpc, http",

EnvVars: []string{"MICRO_PROXY_PROTOCOL"},

},

&cli.StringFlag{

Name: "endpoint",

Usage: "Set the endpoint to route to e.g greeter or localhost:9090",

EnvVars: []string{"MICRO_PROXY_ENDPOINT"},

},

&cli.StringFlag{

Name: "auth",

Usage: "Set the proxy auth e.g jwt",

EnvVars: []string{"MICRO_PROXY_AUTH"},

},

},

Action: func(ctx *cli.Context) error {

run(ctx, options...)

return nil

},

}

for _, p := range Plugins() {

if cmds := p.Commands(); len(cmds) > 0 {

command.Subcommands = append(command.Subcommands, cmds...)

}

if flags := p.Flags(); len(flags) > 0 {

command.Flags = append(command.Flags, flags...)

}

}

return []*cli.Command{command}

}

|

{

log.Init(log.WithFields(map[string]interface{}{"service": "proxy"}))

// because MICRO_PROXY_ADDRESS is used internally by the go-micro/client

// we need to unset it so we don't end up calling ourselves infinitely

os.Unsetenv("MICRO_PROXY_ADDRESS")

if len(ctx.String("server_name")) > 0 {

Name = ctx.String("server_name")

}

if len(ctx.String("address")) > 0 {

Address = ctx.String("address")

}

if len(ctx.String("endpoint")) > 0 {

Endpoint = ctx.String("endpoint")

}

if len(ctx.String("protocol")) > 0 {

Protocol = ctx.String("protocol")

}

if len(ctx.String("acme_provider")) > 0 {

ACMEProvider = ctx.String("acme_provider")

}

// Init plugins

for _, p := range Plugins() {

p.Init(ctx)

}

// service opts

srvOpts = append(srvOpts, micro.Name(Name))

if i := time.Duration(ctx.Int("register_ttl")); i > 0 {

srvOpts = append(srvOpts, micro.RegisterTTL(i*time.Second))

}

if i := time.Duration(ctx.Int("register_interval")); i > 0 {

srvOpts = append(srvOpts, micro.RegisterInterval(i*time.Second))

}

// set the context

var popts []proxy.Option

// create new router

var r router.Router

routerName := ctx.String("router")

routerAddr := ctx.String("router_address")

ropts := []router.Option{

router.Id(server.DefaultId),

router.Client(client.DefaultClient),

router.Address(routerAddr),

router.Registry(registry.DefaultRegistry),

}

// check if we need to use the router service

switch {

case routerName == "go.micro.router":

r = rs.NewRouter(ropts...)

case routerName == "service":

r = rs.NewRouter(ropts...)

case len(routerAddr) > 0:

r = rs.NewRouter(ropts...)

default:

r = router.NewRouter(ropts...)

}

// start the router

if err := r.Start(); err != nil {

log.Errorf("Proxy error starting router: %s", err)

os.Exit(1)

}

popts = append(popts, proxy.WithRouter(r))

// new proxy

var p proxy.Proxy

var srv server.Server

// set endpoint

if len(Endpoint) > 0 {

switch {

case strings.HasPrefix(Endpoint, "grpc://"):

ep := strings.TrimPrefix(Endpoint, "grpc://")

popts = append(popts, proxy.WithEndpoint(ep))

p = grpc.NewProxy(popts...)

case strings.HasPrefix(Endpoint, "http://"):

// TODO: strip prefix?

popts = append(popts, proxy.WithEndpoint(Endpoint))

p = http.NewProxy(popts...)

default:

// TODO: strip prefix?

popts = append(popts, proxy.WithEndpoint(Endpoint))

p = mucp.NewProxy(popts...)

}

}

serverOpts := []server.Option{

server.Address(Address),

server.Registry(rmem.NewRegistry()),

server.Broker(bmem.NewBroker()),

}

// enable acme will create a net.Listener which

if ctx.Bool("enable_acme") {

var ap acme.Provider

switch ACMEProvider {

case "autocert":

ap = autocert.NewProvider()

case "certmagic":

if ACMEChallengeProvider != "cloudflare" {

log.Fatal("The only implemented DNS challenge provider is cloudflare")

}

apiToken, accountID := os.Getenv("CF_API_TOKEN"), os.Getenv("CF_ACCOUNT_ID")

kvID := os.Getenv("KV_NAMESPACE_ID")

if len(apiToken) == 0 || len(accountID) == 0 {

log.Fatal("env variables CF_API_TOKEN and CF_ACCOUNT_ID must be set")

}

if len(kvID) == 0 {

log.Fatal("env var KV_NAMESPACE_ID must be set to your cloudflare workers KV namespace ID")

}

cloudflareStore := cfstore.NewStore(

cfstore.Token(apiToken),

cfstore.Account(accountID),

cfstore.Namespace(kvID),

cfstore.CacheTTL(time.Minute),

)

storage := certmagic.NewStorage(

memory.NewLock(),

cloudflareStore,

)

config := cloudflare.NewDefaultConfig()

config.AuthToken = apiToken

config.ZoneToken = apiToken

challengeProvider, err := cloudflare.NewDNSProviderConfig(config)

if err != nil {

log.Fatal(err.Error())

}

// define the provider

ap = certmagic.NewProvider(

acme.AcceptToS(true),

acme.CA(ACMECA),

acme.Cache(storage),

acme.ChallengeProvider(challengeProvider),

acme.OnDemand(false),

)

default:

log.Fatalf("Unsupported acme provider: %s\n", ACMEProvider)

}

// generate the tls config

config, err := ap.TLSConfig(helper.ACMEHosts(ctx)...)

if err != nil {

log.Fatalf("Failed to generate acme tls config: %v", err)

}

// set the tls config

serverOpts = append(serverOpts, server.TLSConfig(config))

// enable tls will leverage tls certs and generate a tls.Config

} else if ctx.Bool("enable_tls") {

// get certificates from the context

config, err := helper.TLSConfig(ctx)

if err != nil {

log.Fatal(err)

return

}

serverOpts = append(serverOpts, server.TLSConfig(config))

}

// add auth wrapper to server

if ctx.IsSet("auth") {

a, ok := cmd.DefaultAuths[ctx.String("auth")]

if !ok {

log.Fatalf("%v is not a valid auth", ctx.String("auth"))

return

}

var authOpts []auth.Option

if ctx.IsSet("auth_exclude") {

authOpts = append(authOpts, auth.Exclude(ctx.StringSlice("auth_exclude")...))

}

if ctx.IsSet("auth_public_key") {

authOpts = append(authOpts, auth.PublicKey(ctx.String("auth_public_key")))

}

if ctx.IsSet("auth_private_key") {

authOpts = append(authOpts, auth.PublicKey(ctx.String("auth_private_key")))

}

authFn := func() auth.Auth { return a(authOpts...) }

authOpt := server.WrapHandler(wrapper.AuthHandler(authFn))

serverOpts = append(serverOpts, authOpt)

}

// set proxy

if p == nil && len(Protocol) > 0 {

switch Protocol {

case "http":

p = http.NewProxy(popts...)

// TODO: http server

case "mucp":

popts = append(popts, proxy.WithClient(mucli.NewClient()))

p = mucp.NewProxy(popts...)

serverOpts = append(serverOpts, server.WithRouter(p))

srv = server.NewServer(serverOpts...)

default:

p = mucp.NewProxy(popts...)

serverOpts = append(serverOpts, server.WithRouter(p))

srv = sgrpc.NewServer(serverOpts...)

}

}

if len(Endpoint) > 0 {

log.Infof("Proxy [%s] serving endpoint: %s", p.String(), Endpoint)

} else {

log.Infof("Proxy [%s] serving protocol: %s", p.String(), Protocol)

}

// new service

service := micro.NewService(srvOpts...)

// create a new proxy muxer which includes the debug handler

muxer := mux.New(Name, p)

// set the router

service.Server().Init(

server.WithRouter(muxer),

)

// Start the proxy server

if err := srv.Start(); err != nil {

log.Fatal(err)

}

// Run internal service

if err := service.Run(); err != nil {

log.Fatal(err)

}

// Stop the server

if err := srv.Stop(); err != nil {

log.Fatal(err)

}

}

|

s3.go

|

// Copyright 2020 PingCAP, Inc.

//

// Licensed under the Apache License, Version 2.0 (the "License");

// you may not use this file except in compliance with the License.

// You may obtain a copy of the License at

//

// http://www.apache.org/licenses/LICENSE-2.0

//

// Unless required by applicable law or agreed to in writing, software

// distributed under the License is distributed on an "AS IS" BASIS,

// See the License for the specific language governing permissions and

// limitations under the License.

package cdclog

import (

"context"

"net/url"

"strings"

"time"

"github.com/pingcap/errors"

backup "github.com/pingcap/kvproto/pkg/brpb"

"github.com/pingcap/log"

"github.com/pingcap/ticdc/cdc/model"

"github.com/pingcap/ticdc/cdc/sink/codec"

cerror "github.com/pingcap/ticdc/pkg/errors"

"github.com/pingcap/ticdc/pkg/quotes"

"github.com/pingcap/tidb/br/pkg/storage"

parsemodel "github.com/pingcap/tidb/parser/model"

"github.com/uber-go/atomic"

"go.uber.org/zap"

)

const (

maxPartFlushSize = 5 << 20 // The minimal multipart upload size is 5Mb.

maxCompletePartSize = 100 << 20 // rotate row changed event file if one complete file larger than 100Mb

maxDDLFlushSize = 10 << 20 // rotate ddl event file if one complete file larger than 10Mb

defaultBufferChanSize = 20480

defaultFlushRowChangedEventDuration = 5 * time.Second // TODO make it as a config

)

type tableBuffer struct {

// for log

tableID int64

dataCh chan *model.RowChangedEvent

sendSize *atomic.Int64

sendEvents *atomic.Int64

encoder codec.EventBatchEncoder

uploadParts struct {

writer storage.ExternalFileWriter

uploadNum int

byteSize int64

}

}

func (tb *tableBuffer) dataChan() chan *model.RowChangedEvent {

return tb.dataCh

}

func (tb *tableBuffer) TableID() int64 {

return tb.tableID

}

func (tb *tableBuffer) Events() *atomic.Int64 {

return tb.sendEvents

}

func (tb *tableBuffer) Size() *atomic.Int64 {

return tb.sendSize

}

func (tb *tableBuffer) isEmpty() bool {

return tb.sendEvents.Load() == 0 && tb.uploadParts.uploadNum == 0

}

func (tb *tableBuffer) shouldFlush() bool {

// if sendSize > 5 MB or data chennal is full, flush it

return tb.sendSize.Load() > maxPartFlushSize || tb.sendEvents.Load() == defaultBufferChanSize

}

func (tb *tableBuffer) flush(ctx context.Context, sink *logSink) error {

hashPart := tb.uploadParts

sendEvents := tb.sendEvents.Load()

if sendEvents == 0 && hashPart.uploadNum == 0 {

log.Info("nothing to flush", zap.Int64("tableID", tb.tableID))

return nil

}

firstCreated := false

if tb.encoder == nil {

// create encoder for each file

tb.encoder = sink.encoder()

firstCreated = true

}

var newFileName string

flushedSize := int64(0)

for event := int64(0); event < sendEvents; event++ {

row := <-tb.dataCh

flushedSize += row.ApproximateSize

if event == sendEvents-1 {

// if last event, we record ts as new rotate file name

newFileName = makeTableFileObject(row.Table.TableID, row.CommitTs)

}

_, err := tb.encoder.AppendRowChangedEvent(row)

if err != nil {

return err

}

}

rowDatas := tb.encoder.MixedBuild(firstCreated)

// reset encoder buf for next round append

defer func() {

if tb.encoder != nil {

tb.encoder.Reset()

}

}()

log.Debug("[FlushRowChangedEvents[Debug]] flush table buffer",

zap.Int64("table", tb.tableID),

zap.Int64("event size", sendEvents),

zap.Int("row data size", len(rowDatas)),

zap.Int("upload num", hashPart.uploadNum),

zap.Int64("upload byte size", hashPart.byteSize),

// zap.ByteString("rowDatas", rowDatas),

)

if len(rowDatas) > maxPartFlushSize || hashPart.uploadNum > 0 {

// S3 multi-upload need every chunk(except the last one) is greater than 5Mb

// so, if this batch data size is greater than 5Mb or it has uploadPart already

// we will use multi-upload this batch data

if len(rowDatas) > 0 {

if hashPart.writer == nil {

fileWriter, err := sink.storage().Create(ctx, newFileName)

if err != nil {

return cerror.WrapError(cerror.ErrS3SinkStorageAPI, err)

}

hashPart.writer = fileWriter

}

_, err := hashPart.writer.Write(ctx, rowDatas)

if err != nil {

return cerror.WrapError(cerror.ErrS3SinkStorageAPI, err)

}

hashPart.byteSize += int64(len(rowDatas))

hashPart.uploadNum++

}

if hashPart.byteSize > maxCompletePartSize || len(rowDatas) <= maxPartFlushSize {

// we need do complete when total upload size is greater than 100Mb

// or this part data is less than 5Mb to avoid meet EntityTooSmall error

log.Info("[FlushRowChangedEvents] complete file", zap.Int64("tableID", tb.tableID))

err := hashPart.writer.Close(ctx)

if err != nil {

return cerror.WrapError(cerror.ErrS3SinkStorageAPI, err)

}

hashPart.byteSize = 0

hashPart.uploadNum = 0

hashPart.writer = nil

tb.encoder = nil

}

} else {

// generate normal file because S3 multi-upload need every part at least 5Mb.

log.Info("[FlushRowChangedEvents] normal upload file", zap.Int64("tableID", tb.tableID))

err := sink.storage().WriteFile(ctx, newFileName, rowDatas)

if err != nil {

return cerror.WrapError(cerror.ErrS3SinkStorageAPI, err)

}

tb.encoder = nil

}

tb.sendEvents.Sub(sendEvents)

tb.sendSize.Sub(flushedSize)

tb.uploadParts = hashPart

return nil

}

func newTableBuffer(tableID int64) logUnit

|

type s3Sink struct {

*logSink

prefix string

storage storage.ExternalStorage

logMeta *logMeta

// hold encoder for ddl event log

ddlEncoder codec.EventBatchEncoder

}

func (s *s3Sink) EmitRowChangedEvents(ctx context.Context, rows ...*model.RowChangedEvent) error {

return s.emitRowChangedEvents(ctx, newTableBuffer, rows...)

}

func (s *s3Sink) flushLogMeta(ctx context.Context) error {

data, err := s.logMeta.Marshal()

if err != nil {

return cerror.WrapError(cerror.ErrMarshalFailed, err)

}

return cerror.WrapError(cerror.ErrS3SinkWriteStorage, s.storage.WriteFile(ctx, logMetaFile, data))

}

func (s *s3Sink) FlushRowChangedEvents(ctx context.Context, resolvedTs uint64) (uint64, error) {

// we should flush all events before resolvedTs, there are two kind of flush policy

// 1. flush row events to a s3 chunk: if the event size is not enough,

// TODO: when cdc crashed, we should repair these chunks to a complete file

// 2. flush row events to a complete s3 file: if the event size is enough

return s.flushRowChangedEvents(ctx, resolvedTs)

}

// EmitCheckpointTs update the global resolved ts in log meta

// sleep 5 seconds to avoid update too frequently

func (s *s3Sink) EmitCheckpointTs(ctx context.Context, ts uint64) error {

s.logMeta.GlobalResolvedTS = ts

return s.flushLogMeta(ctx)

}

// EmitDDLEvent write ddl event to S3 directory, all events split by '\n'

// Because S3 doesn't support append-like write.

// we choose a hack way to read origin file then write in place.

func (s *s3Sink) EmitDDLEvent(ctx context.Context, ddl *model.DDLEvent) error {

switch ddl.Type {

case parsemodel.ActionCreateTable:

s.logMeta.Names[ddl.TableInfo.TableID] = quotes.QuoteSchema(ddl.TableInfo.Schema, ddl.TableInfo.Table)

err := s.flushLogMeta(ctx)

if err != nil {

return err

}

case parsemodel.ActionRenameTable:

delete(s.logMeta.Names, ddl.PreTableInfo.TableID)

s.logMeta.Names[ddl.TableInfo.TableID] = quotes.QuoteSchema(ddl.TableInfo.Schema, ddl.TableInfo.Table)

err := s.flushLogMeta(ctx)

if err != nil {

return err

}

}

firstCreated := false

if s.ddlEncoder == nil {

s.ddlEncoder = s.encoder()

firstCreated = true

}

// reset encoder buf for next round append

defer s.ddlEncoder.Reset()

var (

name string

size int64

fileData []byte

)

opt := &storage.WalkOption{

SubDir: ddlEventsDir,

ListCount: 1,

}

err := s.storage.WalkDir(ctx, opt, func(key string, fileSize int64) error {

log.Debug("[EmitDDLEvent] list content from s3",

zap.String("key", key),

zap.Int64("size", size),

zap.Any("ddl", ddl))

name = strings.ReplaceAll(key, s.prefix, "")

size = fileSize

return nil

})

if err != nil {

return cerror.WrapError(cerror.ErrS3SinkStorageAPI, err)

}

// only reboot and (size = 0 or size >= maxRowFileSize) should we add version to s3

withVersion := firstCreated && (size == 0 || size >= maxDDLFlushSize)

// clean ddlEncoder version part

// if we reboot cdc and size between (0, maxDDLFlushSize), we should skip version part in

// JSONEventBatchEncoder.keyBuf, JSONEventBatchEncoder consturctor func has

// alreay filled with version part, see NewJSONEventBatchEncoder and

// JSONEventBatchEncoder.MixedBuild

if firstCreated && size > 0 && size < maxDDLFlushSize {

s.ddlEncoder.Reset()

}

_, er := s.ddlEncoder.EncodeDDLEvent(ddl)

if er != nil {

return er

}

data := s.ddlEncoder.MixedBuild(withVersion)

if size == 0 || size >= maxDDLFlushSize {

// no ddl file exists or

// exists file is oversized. we should generate a new file

fileData = data

name = makeDDLFileObject(ddl.CommitTs)

log.Debug("[EmitDDLEvent] create first or rotate ddl log",

zap.String("name", name), zap.Any("ddl", ddl))

if size > maxDDLFlushSize {

// reset ddl encoder for new file

s.ddlEncoder = nil

}

} else {

// hack way: append data to old file

log.Debug("[EmitDDLEvent] append ddl to origin log",

zap.String("name", name), zap.Any("ddl", ddl))

fileData, err = s.storage.ReadFile(ctx, name)

if err != nil {

return cerror.WrapError(cerror.ErrS3SinkStorageAPI, err)

}

fileData = append(fileData, data...)

}

return s.storage.WriteFile(ctx, name, fileData)

}

func (s *s3Sink) Initialize(ctx context.Context, tableInfo []*model.SimpleTableInfo) error {

if tableInfo != nil {

// update log meta to record the relationship about tableName and tableID

s.logMeta = makeLogMetaContent(tableInfo)

data, err := s.logMeta.Marshal()

if err != nil {

return cerror.WrapError(cerror.ErrMarshalFailed, err)

}

return s.storage.WriteFile(ctx, logMetaFile, data)

}

return nil

}

func (s *s3Sink) Close(ctx context.Context) error {

return nil

}

func (s *s3Sink) Barrier(ctx context.Context) error {

// Barrier does nothing because FlushRowChangedEvents in s3 sink has flushed

// all buffered events forcedlly.

return nil

}

// NewS3Sink creates new sink support log data to s3 directly

func NewS3Sink(ctx context.Context, sinkURI *url.URL, errCh chan error) (*s3Sink, error) {

if len(sinkURI.Host) == 0 {

return nil, errors.Errorf("please specify the bucket for s3 in %s", sinkURI)

}

prefix := strings.Trim(sinkURI.Path, "/")

s3 := &backup.S3{Bucket: sinkURI.Host, Prefix: prefix}

options := &storage.BackendOptions{}

storage.ExtractQueryParameters(sinkURI, &options.S3)

if err := options.S3.Apply(s3); err != nil {

return nil, cerror.WrapError(cerror.ErrS3SinkInitialize, err)

}

// we should set this to true, since br set it by default in parseBackend

s3.ForcePathStyle = true

backend := &backup.StorageBackend{

Backend: &backup.StorageBackend_S3{S3: s3},

}

s3storage, err := storage.New(ctx, backend, &storage.ExternalStorageOptions{

SendCredentials: false,

SkipCheckPath: true,

HTTPClient: nil,

})

if err != nil {

return nil, cerror.WrapError(cerror.ErrS3SinkInitialize, err)

}

s := &s3Sink{

prefix: prefix,

storage: s3storage,

logMeta: newLogMeta(),

logSink: newLogSink("", s3storage),

}

// important! we should flush asynchronously in another goroutine

go func() {

if err := s.startFlush(ctx); err != nil && errors.Cause(err) != context.Canceled {

select {

case <-ctx.Done():

return

case errCh <- err:

default:

log.Error("error channel is full", zap.Error(err))

}

}

}()

return s, nil

}

|

{

return &tableBuffer{

tableID: tableID,

dataCh: make(chan *model.RowChangedEvent, defaultBufferChanSize),

sendSize: atomic.NewInt64(0),

sendEvents: atomic.NewInt64(0),

uploadParts: struct {

writer storage.ExternalFileWriter

uploadNum int

byteSize int64

}{

writer: nil,

uploadNum: 0,

byteSize: 0,

},

}

}

|

main.go

|

// Copyright 2015 Keybase, Inc. All rights reserved. Use of

// this source code is governed by the included BSD license.

package main

import (

"errors"

"fmt"

"io/ioutil"

"os"

"os/signal"

"runtime"

"runtime/debug"

"runtime/pprof"

"syscall"

"time"

"github.com/keybase/client/go/client"

"github.com/keybase/client/go/externals"

"github.com/keybase/client/go/install"

"github.com/keybase/client/go/libcmdline"

"github.com/keybase/client/go/libkb"

"github.com/keybase/client/go/logger"

keybase1 "github.com/keybase/client/go/protocol/keybase1"

"github.com/keybase/client/go/service"

"github.com/keybase/client/go/uidmap"

"github.com/keybase/go-framed-msgpack-rpc/rpc"

"golang.org/x/net/context"

)

var cmd libcmdline.Command

var errParseArgs = errors.New("failed to parse command line arguments")

func handleQuickVersion() bool {

if len(os.Args) == 3 && os.Args[1] == "version" && os.Args[2] == "-S" {

fmt.Printf("%s\n", libkb.VersionString())

return true

}

return false

}

func keybaseExit(exitCode int) {

logger.Shutdown()

logger.RestoreConsoleMode()

os.Exit(exitCode)

}

func main() {

// Preserve non-critical errors that happen very early during

// startup, where logging is not set up yet, to be printed later

// when logging is functioning.

var startupErrors []error

if err := libkb.SaferDLLLoading(); err != nil {

// Don't abort here. This should not happen on any known

// version of Windows, but new MS platforms may create

// regressions.

startupErrors = append(startupErrors,

fmt.Errorf("SaferDLLLoading error: %v", err.Error()))

}

// handle a Quick version query

if handleQuickVersion() {

return

}

g := externals.NewGlobalContextInit()

go HandleSignals(g)

err := mainInner(g, startupErrors)

if g.Env.GetDebug() {

// hack to wait a little bit to receive all the log messages from the

// service before shutting down in debug mode.

time.Sleep(100 * time.Millisecond)

}

mctx := libkb.NewMetaContextTODO(g)

e2 := g.Shutdown(mctx)

if err == nil {

err = e2

}

if err != nil {

// if errParseArgs, the error was already output (along with usage)

if err != errParseArgs {

g.Log.Errorf("%s", stripFieldsFromAppStatusError(err).Error())

}

if g.ExitCode == keybase1.ExitCode_OK {

g.ExitCode = keybase1.ExitCode_NOTOK

}

}

if g.ExitCode != keybase1.ExitCode_OK {

keybaseExit(int(g.ExitCode))

}

}

func tryToDisableProcessTracing(log logger.Logger, e *libkb.Env) {

if e.GetRunMode() != libkb.ProductionRunMode || e.AllowPTrace() {

return

}

if !e.GetFeatureFlags().Admin(e.GetUID()) {

// Admin only for now

return

}

// We do our best but if it's not possible on some systems or

// configurations, it's not a fatal error. Also see documentation

// in ptrace_*.go files.

if err := libkb.DisableProcessTracing(); err != nil {

log.Debug("Unable to disable process tracing: %v", err.Error())

} else {

log.Debug("DisableProcessTracing call succeeded")

}

}

func logStartupIssues(errors []error, log logger.Logger) {

for _, err := range errors {

log.Warning(err.Error())

}

}

func warnNonProd(log logger.Logger, e *libkb.Env) {

mode := e.GetRunMode()

if mode != libkb.ProductionRunMode {

log.Warning("Running in %s mode", mode)

}

}

func checkSystemUser(log logger.Logger) {

if isAdminUser, match, _ := libkb.IsSystemAdminUser(); isAdminUser {

log.Errorf("Oops, you are trying to run as an admin user (%s). This isn't supported.", match)

keybaseExit(int(keybase1.ExitCode_NOTOK))

}

}

func osPreconfigure(g *libkb.GlobalContext) {

switch libkb.RuntimeGroup() {

case keybase1.RuntimeGroup_LINUXLIKE:

// On Linux, we used to put the mountdir in a different location, and

// then we changed it, and also added a default mountdir config var so

// we'll know if the user has changed it.

// Update the mountdir to the new location, but only if they're still

// using the old mountpoint *and* they haven't changed it since we

// added a default. This functionality was originally in the

// run_keybase script.

configReader := g.Env.GetConfig()

if configReader == nil {

// some commands don't configure config.

return

}

userMountdir := configReader.GetMountDir()

userMountdirDefault := configReader.GetMountDirDefault()

oldMountdirDefault := g.Env.GetOldMountDirDefault()

mountdirDefault := g.Env.GetMountDirDefault()

// User has not set a mountdir yet; e.g., on initial install.

nonexistentMountdir := userMountdir == ""

// User does not have a mountdirdefault; e.g., if last used Keybase

// before the change mentioned above.

nonexistentMountdirDefault := userMountdirDefault == ""

usingOldMountdirByDefault := userMountdir == oldMountdirDefault && (userMountdirDefault == oldMountdirDefault || nonexistentMountdirDefault)

shouldResetMountdir := nonexistentMountdir || usingOldMountdirByDefault

if nonexistentMountdirDefault || shouldResetMountdir {

configWriter := g.Env.GetConfigWriter()

if configWriter == nil {

// some commands don't configure config.

return

}

// Set the user's mountdirdefault to the current one if it's

// currently empty.

_ = configWriter.SetStringAtPath("mountdirdefault", mountdirDefault)

if shouldResetMountdir {

_ = configWriter.SetStringAtPath("mountdir", mountdirDefault)

}

}

default:

}

}

func mainInner(g *libkb.GlobalContext, startupErrors []error) error {

cl := libcmdline.NewCommandLine(true, client.GetExtraFlags())

cl.AddCommands(client.GetCommands(cl, g))

cl.AddCommands(service.GetCommands(cl, g))

cl.AddHelpTopics(client.GetHelpTopics())

var err error

cmd, err = cl.Parse(os.Args)

if err != nil {

g.Log.Errorf("Error parsing command line arguments: %s\n\n", err)

if _, isHelp := cmd.(*libcmdline.CmdSpecificHelp); isHelp {

// Parse returned the help command for this command, so run it:

_ = cmd.Run()

}

return errParseArgs

}

if cmd == nil {

return nil

}

if !cmd.GetUsage().AllowRoot && !g.Env.GetAllowRoot() {

checkSystemUser(g.Log)

}

if cl.IsService() {

startProfile(g)

}

if !cl.IsService() {

if logger.SaveConsoleMode() == nil {

defer logger.RestoreConsoleMode()

}

client.InitUI(g)

}

if err = g.ConfigureCommand(cl, cmd); err != nil {

return err

}

g.StartupMessage()

warnNonProd(g.Log, g.Env)

logStartupIssues(startupErrors, g.Log)

tryToDisableProcessTracing(g.Log, g.Env)

// Don't configure mountdir on a nofork command like nix configure redirector.

if cl.GetForkCmd() != libcmdline.NoFork {

osPreconfigure(g)

}

if err := configOtherLibraries(g); err != nil {

return err

}

if err = configureProcesses(g, cl, &cmd); err != nil {

return err

}

err = cmd.Run()

if !cl.IsService() && !cl.SkipOutOfDateCheck() {

// Errors that come up in printing this warning are logged but ignored.

client.PrintOutOfDateWarnings(g)

}

// Warn the user if there is an account reset in progress

if !cl.IsService() && !cl.SkipAccountResetCheck() {

// Errors that come up in printing this warning are logged but ignored.

client.PrintAccountResetWarning(g)

}

return err

}

func configOtherLibraries(g *libkb.GlobalContext) error {

// Set our UID -> Username mapping service

g.SetUIDMapper(uidmap.NewUIDMap(g.Env.GetUIDMapFullNameCacheSize()))

return nil

}

// AutoFork? Standalone? ClientServer? Brew service? This function deals with the

// various run configurations that we can run in.

func configureProcesses(g *libkb.GlobalContext, cl *libcmdline.CommandLine, cmd *libcmdline.Command) (err error) {

g.Log.Debug("+ configureProcesses")

defer func() {

g.Log.Debug("- configureProcesses -> %v", err)

}()

// On Linux, the service configures its own autostart file. Otherwise, no

// need to configure if we're a service.

if cl.IsService() {

g.Log.Debug("| in configureProcesses, is service")

if runtime.GOOS == "linux" {

g.Log.Debug("| calling AutoInstall for Linux")

_, err := install.AutoInstall(g, "", false, 10*time.Second, g.Log)

if err != nil {

return err

}

}

return nil

}

// Start the server on the other end, possibly.

// There are two cases in which we do this: (1) we want

// a local loopback server in standalone mode; (2) we

// need to "autofork" it. Do at most one of these

// operations.

if g.Env.GetStandalone() {

if cl.IsNoStandalone() {

err = client.CantRunInStandaloneError{}

return err

}

svc := service.NewService(g, false /* isDaemon */)

err = svc.SetupCriticalSubServices()

if err != nil {

return err

}

err = svc.StartLoopbackServer()

if err != nil {

return err

}

// StandaloneChatConnector is an interface with only one

// method: StartStandaloneChat. This way we can pass Service

// object while not exposing anything but that one function.

g.StandaloneChatConnector = svc

g.Standalone = true

if pflerr, ok := err.(libkb.PIDFileLockError); ok {

err = fmt.Errorf("Can't run in standalone mode with a service running (see %q)",

pflerr.Filename)

return err

}

return err

}

// After this point, we need to provide a remote logging story if necessary

// If this command specifically asks not to be forked, then we are done in this

// function. This sort of thing is true for the `ctl` commands and also the `version`

// command.

fc := cl.GetForkCmd()

if fc == libcmdline.NoFork {

return configureLogging(g, cl)

}

var newProc bool

if libkb.IsBrewBuild {

// If we're running in Brew mode, we might need to install ourselves as a persistent

// service for future invocations of the command.

newProc, err = install.AutoInstall(g, "", false, 10*time.Second, g.Log)

if err != nil {

return err

}

} else if fc == libcmdline.ForceFork || g.Env.GetAutoFork() {

// If this command warrants an autofork, do it now.

newProc, err = client.AutoForkServer(g, cl)

if err != nil {

return err

}

}

// Restart the service if we see that it's out of date. It's important to do this

// before we make any RPCs to the service --- for instance, before the logging

// calls below. See the v1.0.8 update fiasco for more details. Also, only need

// to do this if we didn't just start a new process.

if !newProc {

if err = client.FixVersionClash(g, cl); err != nil {

return err

}

}

// Ignore error

if err = client.WarnOutdatedKBFS(g, cl); err != nil {

g.Log.Debug("| Could not do kbfs versioncheck: %s", err)

}

g.Log.Debug("| After forks; newProc=%v", newProc)

if err = configureLogging(g, cl); err != nil {

return err

}

// This sends the client's PATH to the service so the service can update

// its PATH if necessary. This is called after FixVersionClash(), which

// happens above in configureProcesses().

if err = configurePath(g, cl); err != nil {

// Further note -- don't die here. It could be we're calling this method

// against an earlier version of the service that doesn't support it.

// It's not critical that it succeed, so continue on.

g.Log.Debug("Configure path failed: %v", err)

}

return nil

}

func configureLogging(g *libkb.GlobalContext, cl *libcmdline.CommandLine) error {

g.Log.Debug("+ configureLogging")

defer func() {

g.Log.Debug("- configureLogging")

}()

// Whether or not we autoforked, we're now running in client-server

// mode (as opposed to standalone). Register a global LogUI so that

// calls to G.Log() in the daemon can be copied to us. This is

// something of a hack on the daemon side.

if !g.Env.GetDoLogForward() || cl.GetLogForward() == libcmdline.LogForwardNone {

g.Log.Debug("Disabling log forwarding")

return nil

}

protocols := []rpc.Protocol{client.NewLogUIProtocol(g)}

if err := client.RegisterProtocolsWithContext(protocols, g); err != nil {

return err

}

logLevel := keybase1.LogLevel_INFO

if g.Env.GetDebug() {

logLevel = keybase1.LogLevel_DEBUG

}

logClient, err := client.GetLogClient(g)

if err != nil {

return err

}

arg := keybase1.RegisterLoggerArg{

Name: "CLI client",

Level: logLevel,

}

if err := logClient.RegisterLogger(context.TODO(), arg); err != nil {

g.Log.Warning("Failed to register as a logger: %s", err)

}

return nil

}

// configurePath sends the client's PATH to the service.

func configurePath(g *libkb.GlobalContext, cl *libcmdline.CommandLine) error {

if cl.IsService() {

// this only runs on the client

return nil

}

return client.SendPath(g)

}

func HandleSignals(g *libkb.GlobalContext) {

c := make(chan os.Signal, 1)

// Note: os.Kill can't be trapped.

signal.Notify(c, os.Interrupt, syscall.SIGTERM)

mctx := libkb.NewMetaContextTODO(g)

for {

s := <-c

if s != nil {

mctx.Debug("trapped signal %v", s)

// if the current command has a Stop function, then call it.

// It will do its own stopping of the process and calling

// shutdown

if stop, ok := cmd.(client.Stopper); ok {

mctx.Debug("Stopping command cleanly via stopper")

stop.Stop(keybase1.ExitCode_OK)

return

}

// if the current command has a Cancel function, then call it:

if canc, ok := cmd.(client.Canceler); ok {

mctx.Debug("canceling running command")

if err := canc.Cancel(); err != nil {

mctx.Warning("error canceling command: %s", err)

}

}

mctx.Debug("calling shutdown")

_ = g.Shutdown(mctx)

mctx.Error("interrupted")

keybaseExit(3)

}

}

}

// stripFieldsFromAppStatusError is an error prettifier. By default, AppStatusErrors print optional

// fields that were problematic. But they make for pretty ugly error messages spit back to the user.

// So strip that out, but still leave in an error-code integer, since those are quite helpful.

func stripFieldsFromAppStatusError(e error) error

|

func startProfile(g *libkb.GlobalContext) {

if os.Getenv("KEYBASE_PERIODIC_MEMPROFILE") == "" {

return

}

interval, err := time.ParseDuration(os.Getenv("KEYBASE_PERIODIC_MEMPROFILE"))

if err != nil {

g.Log.Debug("error parsing KEYBASE_PERIODIC_MEMPROFILE interval duration: %s", err)

return

}

go func() {

g.Log.Debug("periodic memory profile enabled, will dump memory profiles every %s", interval)

for {

time.Sleep(interval)

g.Log.Debug("dumping periodic memory profile")

f, err := ioutil.TempFile("", "keybase_memprofile")

if err != nil {

g.Log.Debug("could not create memory profile: ", err)

continue

}

debug.FreeOSMemory()

runtime.GC() // get up-to-date statistics

if err := pprof.WriteHeapProfile(f); err != nil {

g.Log.Debug("could not write memory profile: ", err)

continue

}

f.Close()

g.Log.Debug("wrote periodic memory profile to %s", f.Name())

var mems runtime.MemStats

runtime.ReadMemStats(&mems)

g.Log.Debug("runtime mem alloc: %v", mems.Alloc)

g.Log.Debug("runtime total alloc: %v", mems.TotalAlloc)

g.Log.Debug("runtime heap alloc: %v", mems.HeapAlloc)

g.Log.Debug("runtime heap sys: %v", mems.HeapSys)

}

}()

}

|

{

if e == nil {

return e

}

if ase, ok := e.(libkb.AppStatusError); ok {

return fmt.Errorf("%s (code %d)", ase.Desc, ase.Code)

}

return e

}

|

traits1.rs

|

// traits1.rs

// Time to implement some traits!

//

// Your task is to implement the trait

// `AppendBar' for the type `String'.

//

// The trait AppendBar has only one function,

// which appends "Bar" to any object

// implementing this trait.

trait AppendBar {

fn append_bar(self) -> Self;

}

impl AppendBar for String {

//Add your code here

fn append_bar(self) -> Self {

self + &String::from("Bar")

}

}

fn main() {

let s = String::from("Foo");

let s = s.append_bar();

println!("s: {}", s);

}

#[cfg(test)]

mod tests {

use super::*;

#[test]

fn

|

() {

assert_eq!(String::from("Foo").append_bar(), String::from("FooBar"));

}

#[test]

fn is_BarBar() {

assert_eq!(

String::from("").append_bar().append_bar(),

String::from("BarBar")

);

}

}

|

is_FooBar

|

api.ts

|

import axios from 'axios'

import moment from 'moment'

import {

Article,

ArticleMeta,

BlockNode,

BlockValue,

Collection,

PageChunk,

RecordValue,

UnsignedUrl,

} from '../api/types'

async function post<T>(url: string, data: any): Promise<T> {

return axios.post(`https://www.notion.so/api/v3${url}`, data)

.then(res => res.data)

}

const propertiesMap = {

name: 'R>;m',

tags: 'X<$7',

publish: '{JfZ',

date: ',n,"',

}

const getFullBlockId = (blockId: string): string => {

if (blockId.match('^[a-zA-Z0-9]+$')) {

return [

blockId.substr(0, 8),

blockId.substr(8, 4),

blockId.substr(12, 4),

blockId.substr(16, 4),

blockId.substr(20, 32),

].join('-')

}

return blockId

}

const loadPageChunk = (

pageId: string,

count: number,

cursor = { stack: [] },

): Promise<PageChunk> => {

const data = {

chunkNumber: 0,

cursor,

limit: count,

pageId: getFullBlockId(pageId),

verticalColumns: false,

}

return post('/loadPageChunk', data)

}

const queryCollection = (

collectionId: string,

collectionViewId: string,

query: any,

): Promise<Collection> => {

const data = {

collectionId: getFullBlockId(collectionId),

collectionViewId: getFullBlockId(collectionViewId),

loader: {

type: 'table',

},

query: undefined,

}

if (query !== null) {

data.query = query

}

return post('/queryCollection', data)

}

const getPageRecords = async (pageId: string): Promise<RecordValue[]> => {

const limit = 50

const result = []

let cursor = { stack: [] }

do {

const pageChunk = await Promise.resolve(loadPageChunk(pageId, limit, cursor))

for (const id of Object.keys(pageChunk.recordMap.block)) {

if (pageChunk.recordMap.block.hasOwnProperty(id)) {

const item = pageChunk.recordMap.block[id]

if (item.value.alive) {

result.push(item)

}

}

}

cursor = pageChunk.cursor

} while (cursor.stack.length > 0)

return result

}

const loadTablePageBlocks = async (collectionId: string, collectionViewId: string) => {

const pageChunkValues = await loadPageChunk(collectionId, 100)

const recordMap = pageChunkValues.recordMap

const tableView = recordMap.collection_view[getFullBlockId(collectionViewId)]

const collection = recordMap.collection[Object.keys(recordMap.collection)[0]]

return queryCollection(collection.value.id, collectionViewId, tableView.value.query)

}

// tslint:disable-next-line:no-unused

const printTreeLevel = (

root: {

value: { id: string }

children: []

},

level: number,

): void => {

let indent = ''

for (let i = 0; i < level; i++) {

indent += ' '

}

console.log(indent + root.value.id)

for (const c of root.children) {

printTreeLevel(c, level + 1)

}

}

const countTreeNode = (root: BlockNode) => {

let count = 1

for (const c of root.children) {

count += countTreeNode(c)

}

return count

|

}

const recordValueListToBlockNodes = (list: RecordValue[]) => {

type DicNode = {

children: Map<string, DicNode>

record: RecordValue

}

const recordListToDic = (recordList: RecordValue[]): Map<string, DicNode> => {

const findNode = (dic: Map<string, DicNode>, id: string): DicNode | null => {

if (dic.has(id)) {

const result = dic.get(id)

return result ? result : null

}

for (const [, entryValue] of dic) {

const find = findNode(entryValue.children, id)

if (find !== null) {

return find

}

}

return null

}

const dic = new Map()

recordList.forEach((item, idx) => {

const itemId = item.value.id

const itemParentId = item.value.parent_id

console.log(`${idx}: id: ${itemId} parent: ${itemParentId}`)

const node = {

record: item,

children: new Map(),

}

dic.forEach((entryValue, key) => {

if (entryValue.record.value.parent_id === itemId) {

node.children.set(key, entryValue)

dic.delete(key)

}

})

const parent = findNode(dic, itemParentId)

if (parent !== null) {

parent.children.set(itemId, node)

} else {

dic.set(itemId, node)

}

})

return dic

}

const convertDicNodeToBlockNode = (dicNode: DicNode): BlockNode => {

const result: BlockNode[] = []

dicNode.children.forEach(v => {

result.push(convertDicNodeToBlockNode(v))

})

return {

value: dicNode.record.value,

children: result,

}

}

const dicTree = recordListToDic(list)

const result: BlockNode[] = []

dicTree.forEach(v => {

result.push(convertDicNodeToBlockNode(v))

})

console.log(result.map(it => countTreeNode(it)))

return result

}

const getNameFromBlockValue = (value: BlockValue): string => {

const properties = value.properties

if (properties !== undefined) {

const nameValue = properties[propertiesMap.name]

if (nameValue !== undefined && nameValue.length > 0) {

return nameValue[0][0]

}

}

return ''

}

const getDateFromBlockValue = (value: BlockValue): number => {

let mom = moment(value.created_time)

const properties = value.properties

if (properties !== undefined) {

const dateValue = properties[propertiesMap.date]

if (dateValue !== undefined) {

const dateString = dateValue[0][1][0][1].start_date

mom = moment(dateString, 'YYYY-MM-DD')

}

}

return mom.unix()

}

const getTagsFromBlockValue = (value: BlockValue): string[] => {

let result = []

const properties = value.properties

if (properties !== undefined) {

const tagValue = properties[propertiesMap.tags]

if (tagValue !== undefined && tagValue.length > 0) {

result = tagValue[0][0].split(',')

}

}

return result

}

const blockValueToArticleMeta = (block: BlockValue): ArticleMeta => {

return {

name: getNameFromBlockValue(block),

tags: getTagsFromBlockValue(block),

date: getDateFromBlockValue(block),

id: block.id,

title: block.properties ? block.properties.title[0] : undefined,

createdDate: moment(block.created_time).unix(),

lastModifiedDate: moment(block.last_edited_time).unix(),

cover: block.format,

}

}

const getArticle = async (pageId: string): Promise<Article> => {

const chunk = await getPageRecords(pageId)

const tree = recordValueListToBlockNodes(chunk)

const meta = blockValueToArticleMeta(tree[0].value)

return {

meta,

blocks: tree[0].children,

}

}

const getArticleMetaList = async (tableId: string, viewId: string) => {

const result = await loadTablePageBlocks(tableId, viewId)

const blockIds = result.result.blockIds

const recordMap = result.recordMap

return blockIds

.map((it: string) => recordMap.block[it].value)

.map((it: BlockValue) => blockValueToArticleMeta(it))

}

const getSignedFileUrls = async (data: UnsignedUrl) => {

return post('/getSignedFileUrls', data)

}

export default { getArticle, getArticleMetaList, getSignedFileUrls }

| |

vtbl.rs

|

use inline_object::{DrawingContext, InlineObjectContainer};

use std::mem;

use std::panic::catch_unwind;

use std::sync::Arc;

use winapi::Interface;

use winapi::ctypes::c_void;

use winapi::shared::guiddef::{IsEqualIID, REFIID};

use winapi::shared::minwindef::{BOOL, FLOAT, ULONG};

use winapi::shared::winerror::{E_FAIL, E_NOTIMPL, HRESULT, SUCCEEDED, S_OK};

use winapi::um::dwrite::{IDWriteInlineObject, IDWriteInlineObjectVtbl, IDWriteTextRenderer,

DWRITE_BREAK_CONDITION, DWRITE_INLINE_OBJECT_METRICS,

DWRITE_OVERHANG_METRICS};

use winapi::um::unknwnbase::{IUnknown, IUnknownVtbl};

use wio::com::ComPtr;

pub static INLINE_OBJECT_VTBL: IDWriteInlineObjectVtbl = IDWriteInlineObjectVtbl {

parent: IUnknownVtbl {

QueryInterface: query_interface,

AddRef: add_ref,

Release: release,

},

Draw: draw,

GetMetrics: get_metrics,

GetOverhangMetrics: get_overhang_metrics,

GetBreakConditions: get_break_conditions,

};

pub unsafe extern "system" fn

|

(

this: *mut IUnknown,

iid: REFIID,

ppv: *mut *mut c_void,

) -> HRESULT {

if IsEqualIID(&*iid, &IUnknown::uuidof()) {

add_ref(this);

*ppv = this as *mut _;

return S_OK;

}

if IsEqualIID(&*iid, &IDWriteInlineObject::uuidof()) {

add_ref(this);

*ppv = this as *mut _;

return S_OK;

}

return E_NOTIMPL;

}

unsafe extern "system" fn add_ref(this: *mut IUnknown) -> ULONG {

let ptr = this as *const InlineObjectContainer;

let arc = Arc::from_raw(ptr);

mem::forget(arc.clone());

let count = Arc::strong_count(&arc);

mem::forget(arc);

count as ULONG

}

unsafe extern "system" fn release(this: *mut IUnknown) -> ULONG {

let ptr = this as *const InlineObjectContainer;

let arc = Arc::from_raw(ptr);

let count = Arc::strong_count(&arc);

mem::drop(arc);

count as ULONG - 1

}

unsafe extern "system" fn draw(

this: *mut IDWriteInlineObject,

ctx: *mut c_void,

renderer: *mut IDWriteTextRenderer,

origin_x: FLOAT,

origin_y: FLOAT,

is_sideways: BOOL,

is_rtl: BOOL,

effect: *mut IUnknown,

) -> HRESULT {

match catch_unwind(move || {

let obj = &*(this as *const InlineObjectContainer);

// Take a reference to the object for working with later

assert!(!renderer.is_null());

(*renderer).AddRef();

let renderer = ComPtr::from_raw(renderer);

// If there's a client effect, wrap it

let client_effect = if !effect.is_null() {

(*effect).AddRef();

Some(ComPtr::from_raw(effect))

} else {

None

};

let context = DrawingContext {

client_context: ctx,

renderer,

origin_x,

origin_y,

is_sideways: is_sideways != 0,

is_right_to_left: is_rtl != 0,

client_effect,

};

match obj.obj.draw(&context) {

Ok(()) => S_OK,

Err(err) if !SUCCEEDED(err.0) => err.0,

Err(_) => E_FAIL,

}

}) {

Ok(result) => result,

Err(_) => E_FAIL,

}

}

unsafe extern "system" fn get_metrics(

this: *mut IDWriteInlineObject,

metrics: *mut DWRITE_INLINE_OBJECT_METRICS,

) -> HRESULT {

match catch_unwind(move || {

let obj = &*(this as *const InlineObjectContainer);

let m = match obj.obj.get_metrics() {

Ok(metrics) => metrics,

Err(err) if !SUCCEEDED(err.0) => return err.0,

Err(_) => return E_FAIL,

};

let metrics = &mut *metrics;

metrics.width = m.width;

metrics.height = m.height;

metrics.baseline = m.baseline;

metrics.supportsSideways = m.supports_sideways as BOOL;

S_OK

}) {

Ok(result) => result,

Err(_) => E_FAIL,

}

}

unsafe extern "system" fn get_overhang_metrics(

this: *mut IDWriteInlineObject,

metrics: *mut DWRITE_OVERHANG_METRICS,

) -> HRESULT {

match catch_unwind(move || {

let obj = &*(this as *const InlineObjectContainer);

let m = match obj.obj.get_overhang_metrics() {

Ok(metrics) => metrics,

Err(err) if !SUCCEEDED(err.0) => return err.0,

Err(_) => return E_FAIL,

};

let metrics = &mut *metrics;

metrics.left = m.left;

metrics.top = m.top;

metrics.right = m.right;

metrics.bottom = m.bottom;

S_OK

}) {

Ok(result) => result,

Err(_) => E_FAIL,

}

}

unsafe extern "system" fn get_break_conditions(

this: *mut IDWriteInlineObject,

before: *mut DWRITE_BREAK_CONDITION,

after: *mut DWRITE_BREAK_CONDITION,

) -> HRESULT {

match catch_unwind(move || {

let obj = &*(this as *const InlineObjectContainer);

let (b, a) = match obj.obj.get_break_conditions() {

Ok(result) => result,

Err(err) if !SUCCEEDED(err.0) => return err.0,

Err(_) => return E_FAIL,

};

*before = b as u32;

*after = a as u32;

S_OK

}) {

Ok(result) => result,

Err(_) => E_FAIL,

}

}

|

query_interface

|

main.rs

|

use bytemuck::{Pod, Zeroable};

use std::{borrow::Cow, mem};

use wgpu::util::DeviceExt;

#[path = "../framework.rs"]

mod framework;

const MAX_BUNNIES: usize = 1 << 20;

const BUNNY_SIZE: f32 = 0.15 * 256.0;

const GRAVITY: f32 = -9.8 * 100.0;

const MAX_VELOCITY: f32 = 750.0;

#[repr(C)]

#[derive(Clone, Copy, Pod, Zeroable)]

struct Globals {

mvp: [[f32; 4]; 4],

size: [f32; 2],

pad: [f32; 2],

}

#[repr(C, align(256))]

#[derive(Clone, Copy, Zeroable)]

struct Locals {

position: [f32; 2],

velocity: [f32; 2],

color: u32,

_pad: u32,

}

/// Example struct holds references to wgpu resources and frame persistent data

struct Example {

global_group: wgpu::BindGroup,

local_group: wgpu::BindGroup,

pipeline: wgpu::RenderPipeline,

bunnies: Vec<Locals>,

local_buffer: wgpu::Buffer,

extent: [u32; 2],

}

impl framework::Example for Example {

fn init(

config: &wgpu::SurfaceConfiguration,

_adapter: &wgpu::Adapter,

device: &wgpu::Device,

queue: &wgpu::Queue,

) -> Self {

let shader = device.create_shader_module(&wgpu::ShaderModuleDescriptor {

label: None,

source: wgpu::ShaderSource::Wgsl(Cow::Borrowed(include_str!(

"../../../wgpu-hal/examples/halmark/shader.wgsl"

))),

});

let global_bind_group_layout =

device.create_bind_group_layout(&wgpu::BindGroupLayoutDescriptor {

entries: &[

wgpu::BindGroupLayoutEntry {

binding: 0,

visibility: wgpu::ShaderStages::VERTEX,

ty: wgpu::BindingType::Buffer {

ty: wgpu::BufferBindingType::Uniform,

has_dynamic_offset: false,

min_binding_size: wgpu::BufferSize::new(mem::size_of::<Globals>() as _),

},

count: None,

},

wgpu::BindGroupLayoutEntry {

binding: 1,

visibility: wgpu::ShaderStages::FRAGMENT,

ty: wgpu::BindingType::Texture {

sample_type: wgpu::TextureSampleType::Float { filterable: true },

view_dimension: wgpu::TextureViewDimension::D2,

multisampled: false,

},

count: None,

},

wgpu::BindGroupLayoutEntry {

binding: 2,

visibility: wgpu::ShaderStages::FRAGMENT,

ty: wgpu::BindingType::Sampler(wgpu::SamplerBindingType::Filtering),

count: None,

},

],

label: None,

});

let local_bind_group_layout =

device.create_bind_group_layout(&wgpu::BindGroupLayoutDescriptor {

entries: &[wgpu::BindGroupLayoutEntry {

binding: 0,

visibility: wgpu::ShaderStages::VERTEX,

ty: wgpu::BindingType::Buffer {

ty: wgpu::BufferBindingType::Uniform,

has_dynamic_offset: true,