repo_name | issue_id | text |

|---|---|---|

OMS-NetZero/FAIR-pro | 239097191 | Title: Basic Implementation of CH4 and N2O

Question:

username_0: Seems to make sense to add both of these at the same time as they are dependent on one another. By "basic", I mean fixed lifetimes for both, all equations and data will be taken from IPCC, mainly from AR5 8.SM. My plan is to do them in as similar a manner as to how Carbon is currently implemented to make the whole code as clear as possible. I'll work in the branch FAIR-pro/FAIR-basic-development/Additional_GHG_Implementation, and will comment fairly exhaustively as I code.

- [ ] Add in all additional parameters to the definition required: M_emissions, N_emissions, M_concs, N_concs, M_life, N_life, M_0, N_0. There may be some unit conversions needed (such as ppm_gtc, but I'll add these as necessary).

- [ ] Add in checks for CH4 and N2O being emissions or concentrations driven (I'm a little wary as this will likely lengthen the program by quite a bit, however, I'll try and be as concise as possible). _I think I'll start by requiring the program is either entirely concentrations driven or entirely emissions driven otherwise it will end up very long. I'll raise an error if concentrations are supplied for some gases and emissions for others._

- [ ] Add in a check that all the emissions or concentrations timeseries are the same length, raise error if not.

- [ ] Create np.zeros in which CH4 and N2O concentrations will be stored, I'll call these M and N for simplicity.

- [ ] Add lines to compute the first timestep concentrations, based on emissions or concentrations, in a manner very similar to CO2.

- [ ] Add in two extra definitions that just contain the equations for RF due to CH4 and N2O- I feel this should make the code clearer than just adding them to the RF line since they are both pretty long.

- [ ] Add the relevant terms to the RF (in both first ts and rest of run).

FAIR should now calculate the temperature including the other gases- no alterations need to be made to the temperature calculations I think.

- [ ] Add M and N concentrations as outputs.
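The input checks and the fixed-lifetime update in the checklist above can be sketched as follows. This is a minimal illustration, not FAIR's code: the dict-based input layout, the function names, and the generic one-box decay equation are all assumptions rather than anything taken from the repository or from AR5 8.SM.

```python
import math


def driving_mode(emissions, concentrations):
    """Return 'emissions' or 'concentrations'; reject mixed or ragged inputs.

    Both arguments are dicts mapping a gas name to a list of yearly values,
    or to None when that gas was not supplied in that form (hypothetical layout).
    """
    given_e = {g: v for g, v in emissions.items() if v is not None}
    given_c = {g: v for g, v in concentrations.items() if v is not None}
    if given_e and given_c:
        raise ValueError("supply either emissions or concentrations, not both")
    series = given_e or given_c
    if not series:
        raise ValueError("no input timeseries supplied")
    if len({len(v) for v in series.values()}) != 1:
        raise ValueError("all input timeseries must have the same length")
    return "emissions" if given_e else "concentrations"


def step_concentration(c_prev, emission, lifetime, c_0, conc_per_emission):
    """Advance one gas by one year using a fixed lifetime (generic one-box model).

    The excess over the baseline c_0 decays exponentially; the year's emission
    is converted to concentration units and added on top.
    """
    decay = math.exp(-1.0 / lifetime)
    return c_0 + (c_prev - c_0) * decay + emission * conc_per_emission
```

With zero emissions and a starting concentration equal to the baseline, `step_concentration` leaves the value unchanged, which is a quick sanity check on the update.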

Answers:

username_1: Looks great. The only suggestion I'd make is that to maintain some semblance of backwards compatibility you only return M and N if M and N emissions/concentrations were supplied as inputs?

username_0: Yes, that's a good point, I'll make sure that happens when I get there.

Status: Issue closed

|

google/exposure-notifications-verification-server | 701354303 | Title: User pagination

Question:

username_0: Users of the verification server may have hundreds to thousands of users, which may eventually make our /users page unusable. Consider including pagination / filtering to manage a larger user set.

Context:

https://github.com/google/exposure-notifications-verification-server/issues/517

/kind enhancement

Answers:

username_0: /assign |

zhiyb/MinecraftScripts | 253621767 | Title: Add date and time to the log

Question:

username_0: Log entries such as:

`Fetching manifest...

Latest snapshot: 1.12.1 (2017-08-03T12:40:39+00:00)

File server/1.12.1.jar already exists

Fetching manifest...

Latest snapshot: 1.12.1 (2017-08-03T12:40:39+00:00)

File server/1.12.1.jar already exists

Fetching manifest...

Latest snapshot: 1.12.1 (2017-08-03T12:40:39+00:00)

File server/1.12.1.jar already exists

Fetching manifest...

Latest snapshot: 1.12.1 (2017-08-03T12:40:39+00:00)

File server/1.12.1.jar already exists

./server_update.sh: line 30: echo: write error: No space left on device

Fetching manifest...

Latest snapshot: 1.12.1 (2017-08-03T12:40:39+00:00)

File server/1.12.1.jar already exists

./server_update.sh: line 30: echo: write error: No space left on device`

The time at which the error occurred is not recorded. It would be helpful to print the date and time in the log.
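The request amounts to prefixing every log line with a timestamp. A minimal sketch of that idea in Python follows. It is not the change made in the repository, whose update script is a shell script, and the helper name `stamp` is hypothetical.

```python
from datetime import datetime, timezone


def stamp(line, now=None):
    """Prefix a log line with an ISO 8601 UTC timestamp."""
    now = now or datetime.now(timezone.utc)
    return f"[{now.isoformat(timespec='seconds')}] {line}"


print(stamp("Fetching manifest..."))
```

With each line stamped this way, the moment the "No space left on device" error first appeared could be read directly from the log.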

Status: Issue closed

Answers:

username_1: Please test 56a18a1

username_0: Thanks, it's very nice. |

coredns/coredns | 525611599 | Title: can not

Question:

username_0: https://coredns.io/2017/07/25/compile-time-enabling-or-disabling-plugins/

https://coredns.io/explugins/pdsql/

Answers:

username_1: External plugins are independently maintained.

Please raise the issue in the external plugin's repository.

Status: Issue closed

|

PhyloStar/CogDetect | 231408917 | Title: Scores None in align_pairs

Question:

username_0: The scores array that is passed as an argument in update_pmi_dict code at line no. 271 in dataio.py code is set to scores.

If `scores` is None, then the Online algorithm does not weigh a character pair by the similarity score of the word pair. A weighted version yields more stable results than an unweighted version. |

caolan/async | 328855783 | Title: async queue example should show how to handle errors

Question:

username_0: Looking at the docs for async.queue

https://caolan.github.io/async/docs.html#queue

```

// create a queue object with concurrency 2

var q = async.queue(function(task, callback) {

console.log('hello ' + task.name);

callback();

}, 2);

// assign a callback

q.drain = function() {

console.log('all items have been processed');

};

```

is there an

```js

q.onError = function(err){

}

```

handler that we can use?

I don't think q.drain is an error-first callback, and I don't want to pass a callback for each q.push or q.unshift call; I just want to use one error-first callback.

This should exist and be in the docs, right?

Status: Issue closed

Answers:

username_1: https://caolan.github.io/async/docs.html#QueueObject

`q.error` exists, and there is an optional callback you can pass to `q.push()`.

username_0: @username_1 it needs to be documented in the example, that is the bug. How can I add it to the example? How do I make a PR for the docs?

username_1: The example is in the JSDoc comment in the `queue` source.

username_0: https://github.com/caolan/async/pull/1540

sounds good |

CovertLab/arrow | 393571052 | Title: Suspend alternative propensity calculation 'form's

Question:

username_0: Playing with Numba - I don't think it likes passing around function handles. I get performance hits when trying to wrap `propensity` and `choose` with `@numba.jit`, but if I pull out the `form` option, I get roughly a four-fold speed-up. There's probably a way around this but I think it's going to be easier to step back before moving forward - we also don't have any non-default test cases for the `form` feature. @username_1 thoughts?

(Numba [FAQs](https://numba.pydata.org/numba-doc/dev/user/faq.html) suggest that this is an active area for improvement.)

Answers:

username_1: Yeah I'm happy to walk back the `form` feature if it is holding us back, feel free! It was for a feature Heejo needed, but it turns out she doesn't need it anymore

Status: Issue closed

username_0: Addressed via #28. |

nmwsharp/geometry-central | 792911274 | Title: Any interest in feature preserving mesh smoothing algorithms for triangular meshes?

Question:

username_0: Would there be any interest in an implementation of this algorithm within geometry central?

http://mesh.brown.edu/dgp/pdfs/Jones-sg03.pdf

I have a naive implementation that I would be happy to try to clean up and submit as a PR.

But, maybe a better question is this: Is there a plan already in the works for a suite of smoothing algorithms within the library? If so, what would that look like? Or is it already there somewhere and I've just missed it? |

TGNThump/AbandonedRuins | 700848592 | Title: Script raised revive events triggered by other mods

Question:

username_0: ### Overview

script_raised_revive events are being triggered by other mods, including the base game mod for pasting blueprints and bot construction, causing assembly machine recipe locking and weaponry force changing.

Thread: https://mods.factorio.com/mod/abandoned_ruins/discussion/5f5da16ed8561730a72f9c73

### Environment

- Factorio 1.0

- Abandoned Ruins 1.2.0

Status: Issue closed |

solo-io/gloo | 428917122 | Title: Need better error checking in glooctl for malformed manifests

Question:

username_0: ```yaml

apiVersion: gloo.solo.io/v1

kind: Upstream

metadata:

labels:

name: my-service-name

upstreamSpec:

kube:

serviceName: my-service-name

serviceNamespace: namespace-name

servicePort: 1234

```

missing `spec:` before `upstreamSpec:` causes

```

[~/charts]$ glooctl get upstream

panic: runtime error: invalid memory address or nil pointer dereference

[signal SIGSEGV: segmentation violation code=0x1 addr=0x0 pc=0x24d8af0]

goroutine 1 [running]:

github.com/solo-io/gloo/projects/gloo/cli/pkg/printers.upstreamType(0xc0007efc00, 0x0, 0x0)

/private/tmp/glooctl-20190329-19689-1njlz4k/src/github.com/solo-io/gloo/projects/gloo/cli/pkg/printers/upstream.go:43 +0x20

github.com/solo-io/gloo/projects/gloo/cli/pkg/printers.UpstreamTable(0xc000894000, 0x66, 0x70, 0x3481920, 0xc000010010)

/private/tmp/glooctl-20190329-19689-1njlz4k/src/github.com/solo-io/gloo/projects/gloo/cli/pkg/printers/upstream.go:22 +0x220

github.com/solo-io/gloo/projects/gloo/cli/pkg/helpers.PrintUpstreams.func1(0x2fe90a0, 0xc0003c7980, 0x3481920, 0xc000010010, 0x0, 0x0)

/private/tmp/glooctl-20190329-19689-1njlz4k/src/github.com/solo-io/gloo/projects/gloo/cli/pkg/helpers/print.go:16 +0x93

github.com/solo-io/gloo/vendor/github.com/solo-io/solo-kit/pkg/utils/cliutils.PrintList(0x0, 0x0, 0x0, 0x0, 0x2fe90a0, 0xc0003c7980, 0x31cb8a8, 0x3481920, 0xc000010010, 0x0, ...)

/private/tmp/glooctl-20190329-19689-1njlz4k/src/github.com/solo-io/gloo/vendor/github.com/solo-io/solo-kit/pkg/utils/cliutils/printer.go:81 +0x282

github.com/solo-io/gloo/projects/gloo/cli/pkg/helpers.PrintUpstreams(0xc000894000, 0x66, 0x70, 0x0, 0x0)

/private/tmp/glooctl-20190329-19689-1njlz4k/src/github.com/solo-io/gloo/projects/gloo/cli/pkg/helpers/print.go:14 +0xb0

github.com/solo-io/gloo/projects/gloo/cli/pkg/cmd/get.Upstream.func1(0xc000112500, 0x4a6ef60, 0x0, 0x0, 0x0, 0x0)

/private/tmp/glooctl-20190329-19689-1njlz4k/src/github.com/solo-io/gloo/projects/gloo/cli/pkg/cmd/get/upstream.go:23 +0x1dc

github.com/solo-io/gloo/vendor/github.com/spf13/cobra.(*Command).execute(0xc000112500, 0x4a6ef60, 0x0, 0x0, 0x0, 0x0)

/private/tmp/glooctl-20190329-19689-1njlz4k/src/github.com/solo-io/gloo/vendor/github.com/spf13/cobra/command.go:762 +0xb21

github.com/solo-io/gloo/vendor/github.com/spf13/cobra.(*Command).ExecuteC(0xc000566500, 0xc000112500, 0x0, 0x0)

/private/tmp/glooctl-20190329-19689-1njlz4k/src/github.com/solo-io/gloo/vendor/github.com/spf13/cobra/command.go:852 +0x677

github.com/solo-io/gloo/vendor/github.com/spf13/cobra.(*Command).Execute(0xc000566500, 0x0, 0x0)

/private/tmp/glooctl-20190329-19689-1njlz4k/src/github.com/solo-io/gloo/vendor/github.com/spf13/cobra/command.go:800 +0x3b

main.main()

/private/tmp/glooctl-20190329-19689-1njlz4k/src/github.com/solo-io/gloo/projects/gloo/cli/cmd/main.go:18 +0xde

``` |

typescript-eslint/typescript-eslint | 1184007827 | Title: Docs: Website sponsor data includes low-donation spam

Question:

username_0: ### Suggested Changes

See discussion in #4750: there are some bad actors we've reported to OpenCollective who donate <=$5 just to get on our ad space. Not nice.

If we filter our sponsors to just those who have donated, say, either $10/month or a total of $50, we'd get rid of these bad actors. We also have past sponsors such as Sentry who gave larger one-time donations that could be put on there.
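The proposed threshold can be sketched as a simple filter. This is only an illustration of the rule described above: the field names `monthly` and `total` are hypothetical and do not reflect the OpenCollective data format or the website's actual build script.

```python
def is_listed_sponsor(sponsor, min_monthly=10, min_total=50):
    """Keep a sponsor who gives at least min_monthly per month or min_total overall."""
    return (
        sponsor.get("monthly", 0) >= min_monthly
        or sponsor.get("total", 0) >= min_total
    )


# Hypothetical sample data, in dollars.
sponsors = [
    {"name": "small-recurring", "monthly": 5, "total": 15},
    {"name": "steady", "monthly": 10, "total": 30},
    {"name": "one-time", "monthly": 0, "total": 500},
]
listed = [s["name"] for s in sponsors if is_listed_sponsor(s)]
print(listed)
```

Under these thresholds the $5/month account is dropped, while both the steady monthly sponsor and the large one-time donor remain.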

### Affected URL(s)

https://typescript-eslint.io |

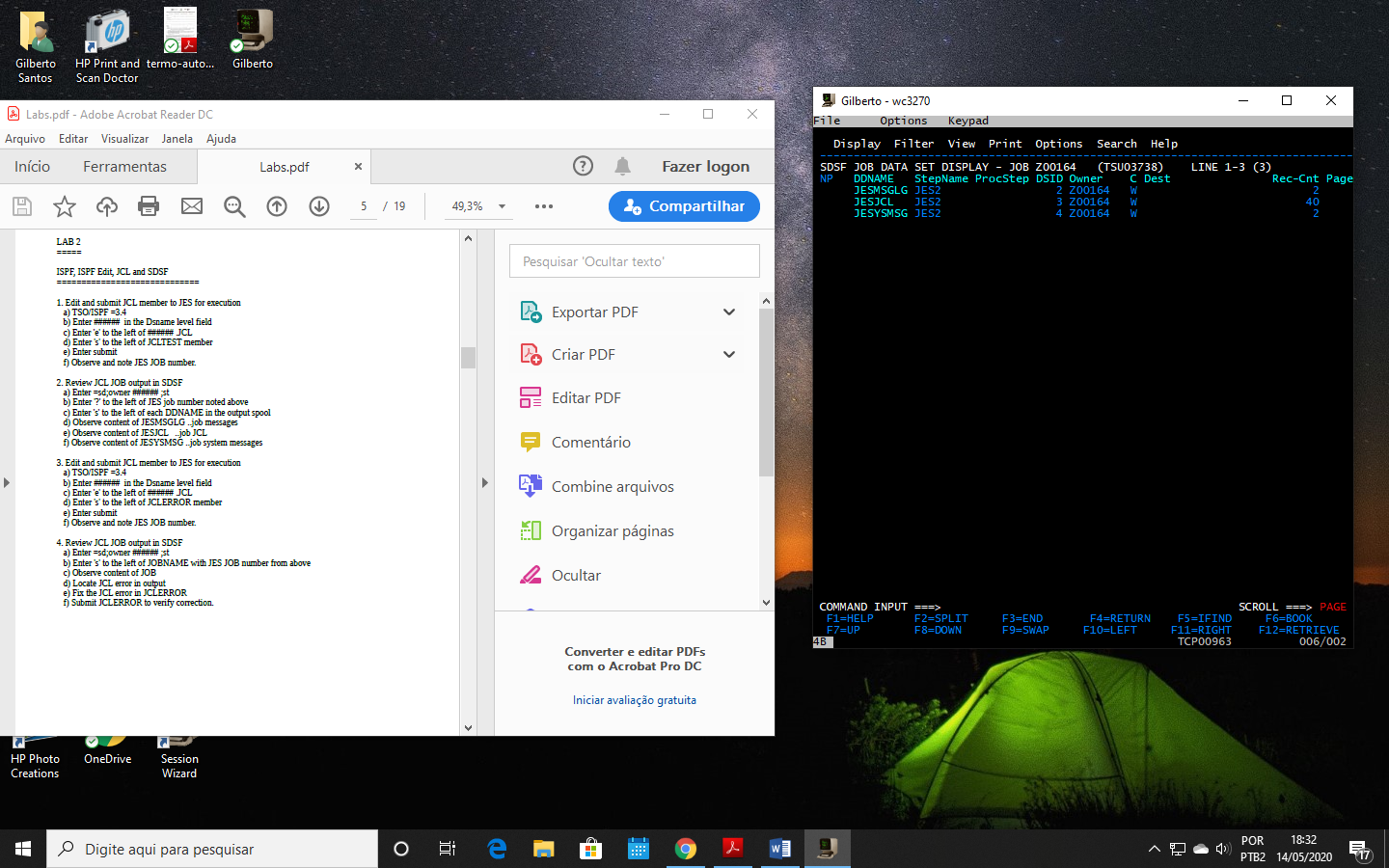

ArctosDB/arctos | 404926280 | Title: report needed: taxa used in IDs by a collection which do not have a preferred classification

Question:

username_0: for #1419

sql:

```

select

taxon_name.scientific_name

from

identification_taxonomy,

identification,

cataloged_item,

collection,

taxon_name

where

identification_taxonomy.identification_id=identification.identification_id and

identification.collection_object_id=cataloged_item.collection_object_id and

cataloged_item.collection_id=collection.collection_id and

identification_taxonomy.taxon_name_id=taxon_name.taxon_name_id and

collection.guid_prefix='{guid_prefix}' and

taxon_name.taxon_name_id not in

(select taxon_name_id from taxon_term where

taxon_term.taxon_name_id = taxon_name.taxon_name_id and

taxon_term.source=collection.PREFERRED_TAXONOMY_SOURCE

)

;

```

Answers:

username_1: Can you give me another way to search for these four taxa without a preferred classification for DMNS: Inv?

The SQL doesn't bring up anything - at least the way I ran it.

username_0: It should work there without the trailing semicolon, and you'll need to put in your actual GUID_Prefix.

```

select

taxon_name.scientific_name

from

identification_taxonomy,

identification,

cataloged_item,

collection,

taxon_name

where

identification_taxonomy.identification_id=identification.identification_id and

identification.collection_object_id=cataloged_item.collection_object_id and

cataloged_item.collection_id=collection.collection_id and

identification_taxonomy.taxon_name_id=taxon_name.taxon_name_id and

collection.guid_prefix='DMNS:Inv' and

taxon_name.taxon_name_id not in

(select taxon_name_id from taxon_term where

taxon_term.taxon_name_id = taxon_name.taxon_name_id and

taxon_term.source=collection.PREFERRED_TAXONOMY_SOURCE

)

;

SCIENTIFIC_NAME

------------------------------------------------------------------------------------------------------------------------

Nitidella gausapata

Cerithium septemstriatum

Gemmula gilchristie

Helicostyla stabilis

```

Status: Issue closed

username_1: Thanks. It worked.

username_2: Still getting the same invalid character error when I try Dusty's code. It would be nice to click on the preferred taxonomy error and be sent directly to a table of the specimens with the errors. Any idea what I'm doing wrong?

username_3: for #1419

sql:

```

select

taxon_name.scientific_name

from

identification_taxonomy,

identification,

cataloged_item,

collection,

taxon_name

where

identification_taxonomy.identification_id=identification.identification_id and

identification.collection_object_id=cataloged_item.collection_object_id and

cataloged_item.collection_id=collection.collection_id and

identification_taxonomy.taxon_name_id=taxon_name.taxon_name_id and

collection.guid_prefix='{guid_prefix}' and

taxon_name.taxon_name_id not in

(select taxon_name_id from taxon_term where

taxon_term.taxon_name_id = taxon_name.taxon_name_id and

taxon_term.source=collection.PREFERRED_TAXONOMY_SOURCE

)

;

```

username_3: @username_0 is the above possible?

username_0: The error is from the semicolon and the next line. Here's the code by itself

```

select

taxon_name.scientific_name

from

identification_taxonomy,

identification,

cataloged_item,

collection,

taxon_name

where

identification_taxonomy.identification_id=identification.identification_id and

identification.collection_object_id=cataloged_item.collection_object_id and

cataloged_item.collection_id=collection.collection_id and

identification_taxonomy.taxon_name_id=taxon_name.taxon_name_id and

collection.guid_prefix='UCSC:Herp' and

taxon_name.taxon_name_id not in

(select taxon_name_id from taxon_term where

taxon_term.taxon_name_id = taxon_name.taxon_name_id and

taxon_term.source=collection.PREFERRED_TAXONOMY_SOURCE

)

```

It finds nothing as of now.

Yes the purpose of this issue is to define and prioritize a report.

username_3: Just turn that code into a tool in Low Quality Data?

username_1: This report could use a modification or additional report.

It is showing as low quality data 779 taxa without a preferred classification. Actually, these are the 780 taxa that are not in WoRMS (via Arctos) so they default to our second source Arctos. Most of them are fossils or terrestrials that WoRMS does not include. This is helpful to know how many taxa are using Arctos classifications.

For the low quality data report, can we modify this to look for classifications from all listed sources before reporting that there is no preferred classification?

username_0: From https://github.com/ArctosDB/arctos/issues/3541

Suggest we change this to "missing from FLAT" which

1. Is easy, and

2. Finds "doesn't have useful data" which seems much more informative.

username_1: Sorry, but what does "missing from FLAT" mean?

Will the report show a list of taxa used in IDs that have no (preferred) classification?

username_0: A classification might include a remark and nothing else; that would not be detected in a "taxon has classification" test.

Etc., etc., etc. - "something there" is what I could potentially detect, that's it, and we know that plenty of classifications do in fact have problems.

"Something in flat" is essentially "has a classification, and it does something useful."

```

select scientific_name from flat where guid_prefix='DMNS:Inv' and phylum is null group by scientific_name order by scientific_name;

```

finds nothing - all of your IDs found something to use for phylum, yay you!

```

arctosprod@arctos>> select scientific_name from flat where guid_prefix='DMNS:Inv' and family is null group by scientific_name order by scientific_name;

scientific_name

-------------------

Ammonitida

Bryozoa

Caridea

Decapoda

Demospongiae

Foraminifera

Gordius

Heterobranchia

Patellogastropoda

Porifera

(10 rows)

```

For those, the scripts have gone through your preferred sources and couldn't find a family value. If any of those are families or below, there's something wrong with all of your preferred sources. If they're not (I think not?) then your preferred classifications supply something useful for all of your records.

username_1: That helps. I always just search the collections periodically for records where the Kingdom (or phylum etc.) is NULL since SQL isn't my first language. It sounds like that's the best way for me for continue to look for problem records.

Yes, the ones with a NULL family are known to be that way. We can't ID them to any lower level at this time.

username_0: That's the same data as what I'm thinking, just from a more taxa-centric viewpoint.

Yes the Dashboard-->Bare Names thing needs rewritten; it's still working under the idea that a collection will prefer exactly one Source.

Some of those are probably a legacy of that, and some may be names used in nonaccepted IDs. The classifications attached to those don't really DO anything so I don't think that's a problem; if it is, maybe it's at least a rare (or strange) enough problem that it can be dealt with via SQL on a case-by-case basis.

username_1: @username_0 I think we can close this assuming that you have a rewrite of the Dashboard "Bare Names" (and same for the cheat sheet) on your To Do list. I'll leave closing it up to you.

username_3: @username_0 the link for taxa without a classification in the something random thingee

goes to this issue, but I think it should now go here - https://github.com/ArctosDB/documentation-wiki/blob/gh-pages/_sql_cheats/taxa_without_classification.markdown

username_0: I can rewrite the query (I think!), but it would be very expensive and I don't think it can be made to run in the UI. Suggest checking computed terms in flat - https://github.com/ArctosDB/arctos/issues/1894#issuecomment-816080112

username_3: Well, how does Arctos know there are two names with no classification? And if it knows that, why can't it tell me what they are? If we can't make it easy for people to fix "low quality data" we might as well not tell them there is any.

username_0: Slowly. FLAT exists so the expensive computations don't have to happen at runtime.

"Has a classification" and "has a useful classification" are wildly different things. Flat gets at "useful" (eg, has expected ranks), along with performing reasonably well. I don't understand the complaint - this is a more informative approach, yay everybody?! https://github.com/ArctosDB/arctos/issues/1894#issuecomment-816080112

username_3: But the query above only looks for "phylum"? Nobody has time to check all the potential levels of every possible classification?

username_3: Keep a list as it goes? Is that asking too much?

username_0: Hu?

username_3: Then why mention family?

```

select scientific_name from flat where guid_prefix='DMNS:Inv' and family is null group by scientific_name order by scientific_name;

scientific_name

```

username_0: Family is just one term/rank that some collections/disciplines seem to care about.

I'd just replace family with other flat-terms and run multiple queries, but I'm not sure I understand what you're asking for here so that may not be useful. What precisely are the goals?

This will get at (or very near) "no classification data" if that's the question.

```

select scientific_name from flat where guid_prefix='DMNS:Inv' and

phylclass is null and

kingdom is null and

phylum is null and

phylorder is null and

family is null and

genus is null and

species is null and

subspecies is null and

author_text is null and

nomenclatural_code is null and

infraspecific_rank is null and

subfamily is null and

tribe is null and

subtribe is null

```
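Since the same check is meant to be repeated with different flat terms, the query text could be generated rather than edited by hand. The following is a rough sketch under assumptions: the helper name is hypothetical, the `%s` placeholder assumes a DB-API driver with the `format` paramstyle, and the column names are the ones used in the queries above.

```python
def bare_name_query(terms):
    """Build a query for names in flat where every given term is null.

    Column names are interpolated into the SQL, so each one is checked to be
    a plain identifier; the guid_prefix is left as a bound parameter.
    """
    for term in terms:
        if not term.isidentifier():
            raise ValueError(f"not a valid column name: {term!r}")
    conditions = " and\n  ".join(f"{term} is null" for term in terms)
    return (
        "select scientific_name from flat\n"
        "where guid_prefix = %s and\n  " + conditions + "\n"
        "group by scientific_name order by scientific_name"
    )


print(bare_name_query(["kingdom", "phylum", "family"]))
```

Passing a single term such as `["family"]` reproduces the per-rank check, while passing the full list approximates the "no classification data" test.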

username_3: That works for this and I think would be good for most cases. I'll play with it and see if anything needs added. Thanks!

username_0: Aha! That's where we should be starting - that report is almost certainly not working correctly (https://github.com/ArctosDB/arctos/issues/1894#issuecomment-816236745) - so 2 questions

1. What (if anything) should we be checking, and

2. Then what?

username_3: Just what it says - "bare names" a name used as an identification that has no classification in any of the collection's preferred sources.

username_0: There's a new report in next release

<img width="291" alt="Screen Shot 2021-10-01 at 8 18 46 AM" src="https://user-images.githubusercontent.com/5720791/135645380-ca5a9b9e-a5b5-48b5-bb6f-050ba2bc0a5d.png">

and the dashboard-creator-thing is no longer looking for taxonomy problems because I can't find a way to do so which doesn't return lots of false positives. It should be easy enough to reintroduce that if someone eventually identifies a specific need.

Status: Issue closed

|

juj/fbcp-ili9341 | 712061773 | Title: Screen doesn't fit display

Question:

username_0: I'm relatively sure I'm having the same problem as issue #112, however, I didn't quite understand the fix.

``bcm_host_get_peripheral_address: 0xfe000000, bcm_host_get_peripheral_size: 25165824, bcm_host_get_sdram_address: 0xc0000000

BCM core speed: current: 333333333hz, max turbo: 500000000hz. SPI CDIV: 30, SPI max frequency: 16666667hz

Allocated DMA channel 7

Allocated DMA channel 1

Enabling DMA channels Tx:7 and Rx:1

DMA hardware register file is at ptr: 0xb532d000, using DMA TX channel: 7 and DMA RX channel: 1

DMA hardware TX channel register file is at ptr: 0xb532d700, DMA RX channel register file is at ptr: 0xb532d100

Resetting DMA channels for use

DMA all set up

Initializing display

Resetting display at reset GPIO pin 24

Creating SPI task thread

InitSPI done

DISPLAY_FLIP_ORIENTATION_IN_SOFTWARE: Swapping width/height to update display in portrait mode to minimize tearing.

Relevant source display area size with overscan cropped away: 768x1360.

Source GPU display is 768x1360. Output SPI display is 240x240 with a drawable area of 240x240. Applying scaling factor horiz=0.18x & vert=0.18x, xOffset: 52, yOffset: 0, scaledWidth: 136, scaledHeight: 240

Creating dispmanX resource of size 136x240 (aspect ratio=0.566667).

GPU grab rectangle is offset x=0,y=0, size w=136xh=240, aspect ratio=0.566667

All initialized, now running main loop...``

How do I get the display to correctly fit the screen?


Status: Issue closed |

vim/vim | 194862264 | Title: jobs: close_cb and exit_cb are called during processing of out_cb

Question:

username_0: ```vim

call ch_logfile('/tmp/job.log')

let g:lines = []

function Out_cb(channel, data)

echom "CALLBACK" string(a:channel) string(a:data)

sleep 200m

echom "\n"

let g:lines += [a:data]

endfunction

function Close_cb(channel)

let g:lines += ['closed']

echom "CLOSE" string(a:channel)

echom "\n"

endfunction

let job = job_start(['sh', '-c', 'sleep 0.1; echo 1'], {

\ 'out_cb': 'Out_cb',

\ 'close_cb': 'Close_cb',

\ })

sleep 200m

echom "lines" string(g:lines)

```

`vim -u t-job-exit-before-stdout.vim`:

```

CALLBACK channel 0 open '1'

CLOSE process 27123 dead

^@

^@

lines ['closed', '1']

```

I think this is rather unexpected, and that the exit/close callbacks should only be invoked after the stdout/stderr (output) handlers are finished.

Answers:

username_1: Well, why do you invoke :sleep in the callback? Perhaps you ran into

this with some other command?

We should probably change this, but it should not be a big problem.


username_0: I have noticed this with Neomake's tests, where the call to the debug logging seems to trigger this, and in particular the `redraw` therein (https://github.com/neomake/neomake/blob/c65e4fd8c9d412887e2cf83a20f94eb184799856/autoload/neomake/utils.vim#L52).

But using `redraw` instead of `sleep` in the test case here does not trigger it.

(As for Neomake's test: see https://github.com/neomake/neomake/commit/dc16de05686ee5f8b63cc6eb91bca34631bbc7c7#diff-1ceaba5126b0cf477e72f87cb2767af0L775 for the workaround, and https://github.com/neomake/neomake/blob/c65e4fd8c9d412887e2cf83a20f94eb184799856/autoload/neomake/utils.vim#L22 for the core of the debug message function.)

username_0: Came across this again - it is still an issue with Vim 8.0.586.

username_2: I ran into this when porting my plugin from Neovim to Vim. I am not using any sleep or redraw, only calling curl with jobstart, and by the time cb_out runs the channel is already closed.

username_3: I came across the same issue.

I think it happens because `out_cb` runs slow code that exceeds Vim's timeout. That would explain why a plain `redraw` may not trigger the issue while `sleep` does.

My current workaround is to `sleep` after the original job command, for example changing `curl balabala` to `curl balabala && sleep 1`, but that's tedious and not well designed. |

trentm/node-bunyan | 176041520 | Title: Mute logging when testing

Question:

username_0: It would be great to have the possibility to turn off logging when testing an application,

for example in node.js:

```js

const bunyan = require('bunyan');

if(process.env.NODE_ENV === 'test') {

const log = bunyan.createLogger({

mute: true, // proposed option

name: 'myapp' // required

});

}

```

Answers:

username_1: You can choose the log level you want to actually log in the logger's streams

```javascript

if (process.env.NODE_ENV === 'test') {

log = bunyan.createLogger({

name: 'Some app',

streams: [{

stream: process.stdout,

level: 'fatal' // Only logs when the level is fatal or higher

}]

});

}

```

I guess it should be enough for your use cases.

If you want to "mute" a chunk of code, you could do something like

```javascript

var oldLog = [];

function muteOn () {

oldLog.push(log);

log = bunyan.createLogger({

name: 'Some app',

streams: [{

stream: process.stdout,

level: 'fatal'

}]

});

}

function muteOff () {

if (oldLog.length) {

log = oldLog[0];

oldLog = [];

}

}

...

muteOn();

...

muteOff();

```

username_2: Thanks for the answer, @username_1.

@username_0 See also this note about a potential future "off" level above fatal that would be more explicit: https://github.com/username_2/node-bunyan/pull/148#issuecomment-53232979

For now, however, using some number higher than `bunyan.FATAL` should work.

Status: Issue closed

|

top-think/think | 441603163 | Title: Please stop using "topthink/framework": "5.1.*" in composer.json; pin each release to its matching version

Question:

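username_0: The newly released 5.1.36 affects my code,

so I pinned my composer.json to "topthink/think": "5.1.35".

But this think project itself requires "topthink/framework": "5.1.*",

which makes my version pin completely useless!

I always end up with the latest topthink/framework!

Doing it this way effectively cripples Composer!

Also, what did you do in 5.1.36? All my $model->count() calls are broken. I'll open a separate issue for that.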

Answers:

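username_0: Please don't use a version constraint like 5.1.*. Use an exact version number and update it every time you tag a release, so that the versions of the think project and the framework project stay exactly in sync. Otherwise it is really painful for projects like mine that are incompatible with your updates. I really don't dare to upgrade casually along with you; everything is version-pinned.

Every time I release, I have to manually run composer require "topthink/framework": "5.1.35", which is very annoying.

username_1: The odd thing is, why would you upgrade the think package at all?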

Status: Issue closed

username_2: Please maintain `composer.lock` in your repository. |

jbcool17/MyHandyScripts | 153239772 | Title: Watch folder and upload to drive

Question:

username_0: I've got some experience with the drive api and google's 2-step authentication api.

I know how to watch folders with node scripts (NOOOODEEE) or gulp?

What do you think?

PS: you and me <- (not in a while) are the only ones doing stuff on github from our class!

Answers:

username_1: Nice the dish!! Have a crack.

Yea haven't messed around with that one in a while. what i'm trying to do is create a work flow for when I export a mix(audio file) from Reason. I want it to convert to mp3 then upload to my google drive so i can listen to it later, all in one step.

The problem that I ran into is that the script executes as soon as the audio file is starting to be created, so the file ends up being corrupt. I gotta figure out how to run the script once the file finishes exporting from reason.
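One common way around this is to wait until the exported file has stopped growing before converting and uploading it. The sketch below shows that heuristic only; the function name and parameters are hypothetical, and it is not code from this repository. An exporter that pauses for longer than the polling window could still fool it.

```python
import os
import time


def wait_until_stable(path, interval=1.0, stable_checks=3, timeout=300.0):
    """Block until the file's size has stopped changing, then return that size."""
    deadline = time.monotonic() + timeout
    last_size = -1
    unchanged = 0
    while time.monotonic() < deadline:
        try:
            size = os.path.getsize(path)
        except OSError:
            size = -1  # file has not been created yet
        if size >= 0 and size == last_size:
            unchanged += 1
            if unchanged >= stable_checks:
                return size
        else:
            unchanged = 0  # still growing (or missing): start counting again
        last_size = size
        time.sleep(interval)
    raise TimeoutError(f"{path} did not settle within {timeout} seconds")
```

The watcher would call `wait_until_stable(path)` when it first sees the new file and only then start the mp3 conversion and upload.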

Always gotta keep up with the git, Hub style. NoooOOOOooOde! Also gotta get back into the WebGL soon. been too long. |

jlippold/tweakCompatible | 418241270 | Title: `GlowBadge` working on iOS 12.1.1

Question:

username_0: ```

{

"packageId": "com.sassoty.glowbadge",

"action": "working",

"userInfo": {

"arch32": false,

"packageId": "com.sassoty.glowbadge",

"deviceId": "iPhone10,3",

"url": "http://cydia.saurik.com/package/com.sassoty.glowbadge/",

"iOSVersion": "12.1.1",

"packageVersionIndexed": true,

"packageName": "GlowBadge",

"category": "Tweaks",

"repository": "BigBoss",

"name": "GlowBadge",

"installed": "1.3-6",

"packageIndexed": true,

"packageStatusExplaination": "A matching version of this tweak for this iOS version could not be found. Please submit a review if you choose to install.",

"id": "com.sassoty.glowbadge",

"commercial": false,

"packageInstalled": true,

"tweakCompatVersion": "0.1.3",

"shortDescription": "Replace app badges with icon glow!",

"latest": "1.3-6",

"author": "Sassoty",

"packageStatus": "Unknown"

},

"base64": "<KEY>

"chosenStatus": "working",

"notes": ""

}

```

Status: Issue closed |

mapbox/mapbox-navigation-android | 239803133 | Title: Engine considers end of first leg as arrival (multi-leg navigation doesn't work)

Question:

username_0: **Platform:** Android 7.1.2

**SDK version:** Latest SNAPSHOT

### Steps to trigger behavior

1. Set a route with more than 1 leg

2. Navigation stops at the end of the first leg

This is closely related to the PR I sent about waypoint support, as multi-leg routes are often routes with waypoints (but I believe there are other scenarios where a route can have multiple legs).

I will soon land a PR for this.

### Expected behavior

Navigation should not end until the last leg.

### Actual behavior

Navigation stops at leg index 0.

Answers:

username_1: Duplicate of #27.

Status: Issue closed

|

axotion/laravel-dotpay | 298556333 | Title: [Question] When and how to catch the callback?

Question:

username_0: Hi, thanks for the package, it's very useful. :)

I have a few quick questions about the callback, if you can give me some pointers.

1. Does Dotpay perform callbacks in test mode?

2. What kind of route should I prepare for it? POST or GET?

3. At what point in the payment is the callback performed? Can I wait at the final stage of the payment until Dotpay makes the cURL request?

Answers:

username_1: 1. Yes, it does. You need to register your test account:

https://ssl.dotpay.pl/test_seller/test/registration/

2. Use a POST route: the callback arrives as a POST request sent via cURL, while the user is redirected via the URL.

3. The cURL callback is performed once the user has paid or failed to pay.

Status: Issue closed

|

webmin/webmin | 98381649 | Title: Squid - missing edit config files manually

Question:

username_0: There is no option to edit config files manually like in other modules?

I think it's worth adding it, right!

Answers:

username_1: Good idea - I've added a page for that.

Status: Issue closed

username_0: Thanks, Jamie, but either I'm missing something and simply can't see it, or it's not there after I updated the Squid module?

username_1: Oops, I forgot to check in one file. |

microsoft/BotFramework-Composer | 564827439 | Title: Question: How to access a JSON inner value?

Question:

username_0: The weather tutorial gets the following JSON response from the weather service:

```json

{

"id": 500,

"main": "Rain",

"description": "light rain",

"icon": "http://openweathermap.org/img/wn/[email protected]",

"weather": "Rain",

"temp": 31,

"high": 33,

"low": 30,

"rain": true,

"city": "Schenectady"

}

```

It then stores the content in `dialog.weather` and gets the weather and temperature using `The weather is @{dialog.weather.weather} and the temp is @{dialog.weather.temp}°`

I'm trying to use [OpenWeather](https://openweathermap.org/) service which returns the following JSON:

``` json

{

"coord": {

"lon": -9.13,

"lat": 38.72

},

"weather": [

{

"id": 300,

"main": "Drizzle",

"description": "light intensity drizzle",

"icon": "09d"

}

],

"base": "stations",

"main": {

"temp": 288.12,

"feels_like": 287.16,

"temp_min": 287.15,

"temp_max": 288.71,

"pressure": 1026,

"humidity": 93

},

"visibility": 10000,

"wind": {

"speed": 3.1,

"deg": 210

},

"clouds": {

"all": 75

},

"dt": 1581595588,

"sys": {

"type": 1,

"id": 6901,

"country": "PT",

"sunrise": 1581579052,

"sunset": 1581617449

},

"timezone": 0,

"id": 2267057,

"name": "Lisbon",

"cod": 200

}

```

I also store the content in `dialog.weather` and then try to get the same info using `The weather is @{dialog.weather.weather.main} and the temp is @{dialog.weather.main.temp}°`. I get the following error: `common.lg:Error occurs when evaluating expression bfdactivity-738194(): Error occurs when evaluating expression ‘dialog.weather.weather.main’: dialog.weather.weather.main is evaluated to null`

If I use this instead: `The weather is @{dialog.weather.weather} and the temp is @{dialog.weather.main}°`. I don't get an error but the following: `The weather is [ { “id”: 802, “main”: “Clouds”, “description”: “scattered clouds”, “icon”: “03d” } ] and the temp is { “temp”: 16.05, “feels_like”: 16.23, “temp_min”: 15, “temp_max”: 16.67, “pressure”: 1025, “humidity”: 87 }°`.

Answers:

username_1: @username_0, could you try this: `The weather is @{dialog.weather.weather[0].main} and the temp is @{dialog.weather.main.temp}`. The `weather` property is an array, so you need to query the item by index.
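
The same array-versus-object distinction can be checked outside Composer. The following is a minimal Python sketch, not Composer's expression language; it uses a trimmed copy of the OpenWeather response quoted in the question, and the variable names are illustrative only:

```python
import json

# Trimmed copy of the OpenWeather payload quoted in the question.
response = json.loads("""
{
  "weather": [
    {"id": 300, "main": "Drizzle", "description": "light intensity drizzle", "icon": "09d"}
  ],
  "main": {"temp": 288.12, "feels_like": 287.16},
  "name": "Lisbon"
}
""")

# "weather" is a list, so its first element must be selected by index
# before reading "main"; the top-level "main" is a plain object.
condition = response["weather"][0]["main"]
temperature = response["main"]["temp"]

print(f"The weather is {condition} and the temp is {temperature}")
```

Reading `response["weather"]["main"]` directly would fail here for the same reason the original expression evaluated to null: a list has no `main` key.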

username_0: @username_1 Thank you so much for the help!

Just for reference, using this card:

```

[ThumbnailCard

title = Weather for @{dialog.weather.name}

text = The weather is @{dialog.weather.weather[0].main} and @{dialog.weather.main.temp}° (feels like @{dialog.weather.main.feels_like}°)

image = http://openweathermap.org/img/wn/@{dialog.weather.weather[0].icon}@2x.png

]

```

I now get the following:

<img width="493" alt="Screen Shot 2020-02-17 at 10 01 54" src="https://user-images.githubusercontent.com/534533/74643447-a1238980-516c-11ea-9b3a-709c089fec7a.png">

Status: Issue closed

|

nanomsg/nng | 321028426 | Title: want to nni_aio_stop without blocking

Question:

username_0: In general, we often do not need to block when we are shutting things down. We would like to shut down all activity on a pipe, ensuring that nothing can be restarted on it, without necessarily needing to wait for callbacks to complete.

To this end, nni_aio_stop() could be modified to take a boolean, indicating whether to wait or not.

Of course, nni_aio_fini() must wait, and anything that is going to ensure that it is safe to free underlying resources must also wait.

Answers:

username_0: This would be useful with reapers -- we can "stop" everything without waiting, before we submit things to the reaper. This ensures that an orderly cleanup is begun, and then only the reaper needs to worry about blocking for it.

username_0: We have instead taken the route of a new call, nni_aio_close(). This function is much like a non-blocking nni_aio_stop(), but it causes nni_aio_begin() to return NNG_ECLOSED in addition to immediately scheduling a completion callback (avoiding the case of a potentially orphaned callback).

Evidence is that this addresses a potential race condition responsible for sometimes crashing programs in the pipedesc_arm callback.

Status: Issue closed

|

fesoliveira014/yanve | 554709614 | Title: Forward+ Rendering

Question:

username_0: Forward+ is an advanced rendering technique that combines the advantages of deferred rendering and forward rendering, allowing for thousands of lights while enabling the use of different materials and translucent objects.

Resources:

- [Forward+: Bringing Deferred Lighting to the Next Level](https://takahiroharada.files.wordpress.com/2015/04/forward_plus.pdf)

- [Forward+ Renderer](https://github.com/bcrusco/Forward-Plus-Renderer/blob/master/Forward-Plus/Forward-Plus/) |

electron-userland/electron-installer-windows | 431012927 | Title: "TypeError: expected author to be a string" in my app & electron-installer-windows/example

Question:

username_0: Creating package (this may take a while)

electron-installer-windows Reading package metadata from dist/poopie-win32-ia32/resources/app/package.json +0ms

electron-installer-windows Error creating package: expected author to be a string +3ms

TypeError: expected author to be a string

at module.exports (/usr/local/lib/node_modules/electron-installer-windows/node_modules/parse-author/index.js:14:11)

at common.readMetadata.then.pkg (/usr/local/lib/node_modules/electron-installer-windows/src/installer.js:121:26)

at <anonymous> 'TypeError: expected author to be a string\n at module.exports (/usr/local/lib/node_modules/electron-installer-windows/node_modules/parse-author/index.js:14:11)\n at common.readMetadata.then.pkg (/usr/local/lib/node_modules/electron-installer-windows/src/installer.js:121:26)\n at <anonymous>'

npm ERR! Linux 4.15.0-36-generic

npm ERR! argv "/usr/bin/node" "/usr/bin/npm" "run" "set32"

npm ERR! node v8.10.0

npm ERR! npm v3.5.2

npm ERR! code ELIFECYCLE

npm ERR! [email protected] set32: `electron-installer-windows --src dist/poopie-win32-ia32/ --dest dist/installers/ia32/ --config config.json`

npm ERR! Exit status 1

npm ERR!

npm ERR! Failed at the [email protected] set32 script 'electron-installer-windows --src dist/poopie-win32-ia32/ --dest dist/installers/ia32/ --config config.json'.

npm ERR! Make sure you have the latest version of node.js and npm installed.

npm ERR! If you do, this is most likely a problem with the poopie package,

npm ERR! not with npm itself.

npm ERR! Tell the author that this fails on your system:

npm ERR! electron-installer-windows --src dist/poopie-win32-ia32/ --dest dist/installers/ia32/ --config config.json

npm ERR! You can get information on how to open an issue for this project with:

npm ERR! npm bugs poopie

npm ERR! Or if that isn't available, you can get their info via:

npm ERR! npm owner ls poopie

npm ERR! There is likely additional logging output above.

npm ERR! Please include the following file with any support request:

npm ERR! /home/dewartestserver/dev/electron-installer-windows/example/npm-debug.log

npm ERR! Linux 4.15.0-36-generic

npm ERR! argv "/usr/bin/node" "/usr/bin/npm" "run" "build"

npm ERR! node v8.10.0

npm ERR! npm v3.5.2

npm ERR! code ELIFECYCLE

npm ERR! [email protected] build: `npm run clean && npm run exe32 && npm run set32 && npm run exe64 && npm run set64`

npm ERR! Exit status 1

npm ERR!

npm ERR! Failed at the [email protected] build script 'npm run clean && npm run exe32 && npm run set32 && npm run exe64 && npm run set64'.

[omitted some final boilerplate]

**What did you do? Please include the configuration you are using for `electron-installer-windows`.**

in project folder: npm install --save-dev electron-installer-windows

package.json:

{

"name": "safety-valve-cache-testing",

"productName": "Safety valve app-srv",

"version": "0.1.2",

"main": "main.js",

"devDependencies": {

"electron": "^4.1.1",

"electron-cli": "^0.2.8",

"electron-installer-windows": "^1.1.1",

"electron-packager": "^13.1.1"

},

"scripts": {

"package-mac": "electron-packager . --overwrite --platform=darwin --arch=x64 --icon=assets/icons/mac/icon.icns --prune=true --ignore=assets --out=release-builds",

"package-win": "electron-packager . safety-valve-training-tool --overwrite --asar=true --platform=win32 --arch=all --icon=assets/icons/win/icon.ico --prune=true --ignore=assets --out=release-builds --version-string.CompanyName=Dewar --version-string.FileDescription=Dewar --version-string.ProductName=\"Safety Valve Cache Testing\"",

[Truncated]

"setup-win32": "electron-installer-windows --src release-builds/safety-valve-training-tool-win32-ia32 --dest release-builds/installers/win32",

"setup-win64": "electron-installer-windows --src release-builds/safety-valve-training-tool-win32-ia64 --dest release-builds/installers/win64"

},

"author": {

"name": "Dewarcommunications",

"email": "[removed]",

"url": "[removed]"

}

}

----------

^^^ Note "author": "name": is one string.

npm run setup-win32

**What did you expect to happen?**

Compile a windows installer exe into [project]/release-builds/installers/win32/

**What actually happened?**

Similar "TypeError: expected author to be a string [...]" as above output of electron-installer-windows/example (!) and mentioned in prior issue(s) -- as previously reported, changing the name to a string (no spaces) does not fix the issue.

Edit: OK, I misunderstood the "string" thing (package.json "author" stuff should be on one line without brackets, per https://docs.npmjs.com/files/package.json#people-fields-author-contributors).

After fixing that, I'm now getting a mono "Error creating package with NuGet"
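
For reference, the npm documentation linked above describes a single-string form for people fields, `Name <email> (url)`. Here is a small Python sketch of collapsing the object form into that string; the helper name `author_to_string` and the sample values are hypothetical and not taken from this issue:

```python
def author_to_string(author: dict) -> str:
    """Collapse an npm-style author object into 'Name <email> (url)'."""
    parts = [author["name"]]
    # email and url are optional in the people-field format
    if author.get("email"):
        parts.append(f"<{author['email']}>")
    if author.get("url"):
        parts.append(f"({author['url']})")
    return " ".join(parts)


# Placeholder values for illustration only.
example = {
    "name": "Example Author",
    "email": "author@example.com",
    "url": "https://example.com",
}
print(author_to_string(example))
```

The resulting string is what would go in `"author"` in place of the bracketed object.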

Status: Issue closed

Answers:

username_0: Built a package after sifting through a lot of errors and fixing them. |

dresden-elektronik/deconz-rest-plugin | 656556585 | Title: IKEA Blind Stopped Working - Now Firmware Update Killed ConBeeII

Question:

username_0: <!--

- Use this issue template to report a bug in the deCONZ REST-API.

- If you want to report a bug for the Phoscon App, please head over to: https://github.com/dresden-elektronik/phoscon-app-beta

- If you're unsure if the bug fits into this issue tracker, please ask for advice in our Discord chat: https://discord.gg/QFhTxqN

- Please make sure you're running the latest version of deCONZ: https://github.com/dresden-elektronik/deconz-rest-plugin/releases

-->

## Describe the bug

<!--

Describe the issue you are experiencing here to communicate to the

maintainers. Tell us what you were trying to do and what happened.

Help us understand the issue by providing valuable context.

-->

In the last couple of days, my IKEA blind has stopped responding.

I have factory reset the blind, re-paired it to Deconz, and recharged the battery. Nothing has worked. I then tried updating Deconz to the latest but that hasn't helped either.

I can see the device in VNC and on the web app, but if I try to adjust it from the web app or from Home Assistant nothing happens. On Home Assistant I get a "Failed to call service cover/close_cover. /lights/2 resource, /lights/2, not available" and on Node-Red I get an API error.

I'm running my system in Docker. It has been working fine for a while.

If I manually call the "cover" service in HA, I see this error in the logs:

I also see this in the logs constantly.

Also, in VNC, the device is sitting with a green flashing indicator. All other devices flash blue.

How can I troubleshoot please? The logs for the add-on don't really show much..

I have tried to update the firmware on the ConbeeII but now Deconz won't connect to it.

## Steps to reproduce the behaviour

<!--

If the problem is reproducible, list the steps here:

1. Go to '...'

2. Click on '....'

3. Scroll down to '....'

4. Observed error

If the problem can't be reproduced and is sporadic, please provide some details

on how often and when the issue happens.

-->

Adjust brightness of IKEA blind in Phoscon App - nothing happens

## Expected behavior

<!--

If applicable, describe what you expected to happen.

-->

Blind should open or close.

[Truncated]

12:54:07:650 COM: --dev: /dev/ttyACM0 (ConBee II)

12:54:08:229 device state timeout ignored in state 2

12:54:09:279 device state timeout ignored in state 2

12:54:10:331 device state timeout ignored in state 2

12:54:10:454 try to reconnect to network try=8

12:54:11:954 device state timeout ignored in state 2

12:54:12:954 device state timeout ignored in state 2

12:54:13:954 device state timeout (handled)

12:54:13:994 device disconnected reason: 1, index: 0

12:54:14:454 wait reconnect 15 seconds

12:54:14:454 void zmMaster::handleStateIdle(zmMaster::MasterEvent) not connected goto OFF state

12:54:14:454 device state timeout ignored in state 1

12:54:15:453 failed to reconnect to network try=9

12:54:15:453 wait reconnect 14 seconds`

## Additional context

<!--

If relevant, add any other context about the problem here, like network size, number of routers and end-devices

and what kind of devices/brands are in the network.

-->

Answers:

username_0: Seems like the issue I am having now is the same as this one https://github.com/dresden-elektronik/deconz-rest-plugin/issues/2702

username_0: The firmware update wouldn't work on Hassio, so I created an Ubuntu VM on my PC and flashed it there. Downgrading the firmware doesn't resolve the issue but does complete successfully. Here are the logs.

`chris@chris-VirtualBox:/usr/share/applications$ deCONZ --dbg-info=2 --dbg-zdp=1 --dbg-zcl=1 --db-aps=1 --dbg-http=1

libpng warning: iCCP: known incorrect sRGB profile

14:01:34:989 HTTP Server listen on address 0.0.0.0, port: 8080, root: /usr/share/deCONZ/webapp/

14:01:34:991 CTRL. 3.22.014:01:35:013 COM: /dev/ttyACM0 : ConBee II (0x1CF1/0x0030)

14:01:35:013 ZCLDB init file /home/chris/.local/share/dresden-elektronik/deCONZ/zcldb.txt

14:01:35:052 parent process bash

14:01:35:052 gw run mode: normal

14:01:35:052 GW sd-card image version file does not exist: /home/chris/.local/share/dresden-elektronik/deCONZ/gw-version

14:01:35:052 DB sqlite version 3.22.0

14:01:35:052 DB PRAGMA page_count: 30

14:01:35:052 DB PRAGMA page_size: 4096

14:01:35:052 DB PRAGMA freelist_count: 0

14:01:35:052 DB file size 122880 bytes, free pages 0

14:01:35:052 DB PRAGMA user_version: 6

14:01:35:052 DB cleanup

14:01:35:052 DB create temporary views

14:01:35:052 DB view [0] created

14:01:35:052 DB view [1] created

14:01:35:052 DB view [2] created

14:01:35:052 DB view [3] created

14:01:35:052 sql exec SELECT apikey,devicetype,createdate,lastusedate,useragent FROM auth

14:01:35:052 sql exec SELECT key FROM config2

14:01:35:052 sql exec SELECT key,value FROM config2

14:01:35:052 Load config UTC: 2020-07-14T11:07:46 from db.

14:01:35:052 Load config announceinterval: 10 from db.

14:01:35:053 Load config announceurl: http://dresden-light.appspot.com/discover from db.

14:01:35:053 Load config apiversion: 2.05.77 from db.

14:01:35:053 Load config bridgeid: 00212EFFFF04F8BA from db.

14:01:35:053 Load config datastoreversion: 60 from db.

14:01:35:053 Load config dhcp: true from db.

14:01:35:053 Load config discovery: true from db.

14:01:35:053 Load config factorynew: false from db.

14:01:35:053 Load config fwneedupdate: false from db.

14:01:35:053 Load config fwupdatestate: idle from db.

14:01:35:053 Load config fwversion: 0x264a0700 from db.

14:01:35:053 Load config gateway: 127.0.0.1 from db.

14:01:35:053 Load config group0: 65520 from db.

14:01:35:053 Load config groupdelay: 50 from db.

14:01:35:053 Load config gwpassword: <PASSWORD> from db.

14:01:35:053 Load config gwusername: delight from db.

14:01:35:053 Load config homebridge: not-managed from db.

14:01:35:053 Load config homebridge-pin: from db.

14:01:35:053 Load config homebridgeupdate: false from db.

14:01:35:053 Load config homebridgeupdateversion: from db.

14:01:35:053 Load config homebridgeversion: from db.

14:01:35:053 Load config ipaddress: 10.0.2.15 from db.

14:01:35:053 Load config linkbutton: false from db.

14:01:35:053 Load config localtime: 2020-07-14T12:07:46 from db.

14:01:35:053 Load config mac: 38:60:77:7c:53:18 from db.

14:01:35:053 Load config modelid: deCONZ from db.

14:01:35:053 Load config name: Phoscon-GW from db.

14:01:35:053 Load config netmask: 255.0.0.0 from db.

14:01:35:053 Load config networkopenduration: 60 from db.

14:01:35:053 Load config otauactive: false from db.

14:01:35:053 Load config otaustate: off from db.

14:01:35:053 Load config panid: 0 from db.

14:01:35:053 Load config permitjoin: 0 from db.

14:01:35:053 Load config permitjoinfull: 0 from db.

[Truncated]

14:02:02:788 wait reconnect 3 seconds

14:02:03:789 wait reconnect 2 seconds

14:02:04:791 Daylight now: solarNoon, status: 170, daylight: 1, dark: 0

14:02:04:791 wait reconnect 1 seconds

14:02:04:798 COM: /dev/ttyACM0 : ConBee II (0x1CF1/0x0030)

14:02:04:798 auto connect com /dev/ttyACM0

14:02:04:825 Serial com connected

14:02:05:302 device state timeout ignored in state 2

14:02:05:789 try to reconnect to network try=2

14:02:07:288 device state timeout ignored in state 2

14:02:08:289 device state timeout ignored in state 2

14:02:09:296 device state timeout ignored in state 2

14:02:09:813 COM: /dev/ttyACM0 : ConBee II (0x1CF1/0x0030)

14:02:10:346 device state timeout ignored in state 2

14:02:10:793 try to reconnect to network try=3

14:02:12:290 device state timeout (handled)

14:02:12:290 MASTER kill cmd 0x08 (ERROR)

14:02:12:290 MASTER kill cmd 0x08 (ERROR)

14:02:12:343 Serial com disconnected, reason: 1

14:02:12:343 device disconnected reason: 1, index: 0`

username_1: Have you used version .78? I see .77 in the log...

username_0: I downloaded it this morning and that was the latest... There's no update for the hassio addon.

username_2: @username_0 What version is your HA addon :)?

username_0: 5.3.6. It was 5.3.5 (I think) but as part of my troubleshooting for the IKEA blind I upgraded. Which has only made things worse.

username_2: Okay.

Can you please provide me:

- The output of : https://www.home-assistant.io/integrations/deconz/#debugging-integration that logging

- A screenshot of your configuration page of the HA addon.

username_0: At the moment I have uninstalled the deconz addon for Hassio as part of the troubleshooting I have been trying.

I have Deconz installed on a Windows PC and an Ubuntu VM. I used the Ubuntu VM as the firmware upgrade wouldn't complete on Hassio. Neither the Ubuntu VM nor the Windows PC can connect to the ConBeeII since upgrading the firmware.

username_2: https://github.com/dresden-elektronik/deconz-rest-plugin/wiki/Update-deCONZ-manually

Did you follow that guide and read through it properly?

username_0: Yes, the update does complete successfully, so it says, but the Deconz application will no longer connect.

username_2: The page says:

**Warning: It is strongly advised to use a native installation of one of the update methods. Virtual Machines _may_ work but are not supported, using a VM while trying to update firmware is on your own risk.**

I suggest you to use the deCONZ windows version to update.

username_0: OK so I have the Hassio addon installed, I can VNC to Deconz and I can see the devices. Great :)

However, when I go to the Phoscon webapp I'm missing all the lights (understandable) and when I go to Gateway it's blank...

username_3: Have you tried with another browser ? or with deleting cache ?

On my side I have this bug when I use the wrong ip (the deconz on windows without conbee, instead of using the Pi ip)

username_0: I was just trying that when you replied :) Seems HA cached the page. I'm back in now so I can restore my backup.

username_4: Looks like the REST API resources haven't been created (the nodes still show the NWK address instead of the resource name). Also note the descriptors for the two end devices in the middle haven't been read. Looks like you lost the deCONZ database. Best restore that from backup. Alternatively, you'll need to re-pair each device. Best search for devices in Phoscon and read the _Basic_ cluster attributes in the GUI. When the name of the node changes, the resource has been created and Phoscon should show the device. Make sure to wake the end devices when reading the _Basic_ cluster and read the _Simple Descriptor(s)_ from the left drop-down menu to see the clusters.

username_0: Thanks. I only understood some of that, but restoring the backup is exactly what I was just doing. I can see all the names in GUI now and I can see switches and sensors in Phoscon, but not lights... it's empty.

username_0:

The greyed out devices are expected, they died a while ago and I haven't got round to fixing them.

username_3: I think it can solve your "invisible" light in Phoscon.

For the grayed-out Xiaomi devices, I think you can re-include them ^^, for them I think it's dead.

username_0: The lights came back, I didn't change anything :)

username_3: joke application ^^

username_0: OK so things aren't stable. When I try to re-pair a switch (that's greyed out) Deconz crashes. The VNC server closes and I can't control any devices.

Log file from docker.

[Copy of addon_core_deconz.xlsx](https://github.com/dresden-elektronik/deconz-rest-plugin/files/4920199/Copy.of.addon_core_deconz.xlsx)

username_2: Could you provide this in a txt file? This reads really bad.

I've asked you before: Can you show me a screenshot of your configuration page of the addon?

username_2: Edited my last comment a bit.

username_0: I could, but I'll have to convert the file. That's how it was outputted from Docker.

The documents say I can use dev/tty*

Here's the config page.

username_0: Bear in mind my system was working perfectly fine, until the blind stopped working a couple of days ago.

I used to use the full string but it would display my device as a RaspBee not ConBeeII.

username_0: OK changing it to the full device string seems to have fixed the crashing. Weird.

username_2: This also is a HA Addon issue.

username_0: That's fine, It was the addon people that sent me here without fully understanding my issue.

Everything seems to be working now. Even the blind has started working, even though nothing had changed to stop it from working in the first place.

username_2: @username_0 Where did they send you here?

Can you provide me a url/name?

username_0: I posted in their github first and they closed it and pointed me here.

Tbh I don't know what the root cause was. In troubleshooting the blind not working I made things worse, so additional steps were needed to recover. It's all working now :) thank you.

username_2: Can you provide me the URL of that issue :)?

username_0: I opened two :)

https://github.com/home-assistant/hassio-addons/issues/1460#event-3543256625

https://github.com/home-assistant/hassio-addons/issues/1461#issuecomment-658134820

username_2: Found it already:)

Closed #3044

Status: Issue closed

username_0: Yes.

username_2: This is a Synology VM...

You could've just renamed it to .txt.

username_0: It's a Docker VM on Synology.

I could have, that's what I said about converting it. It wasn't needed anyway. |

gang1998/SEBC | 226934367 | Title: Welcome to SEBC

Question:

username_0: It appears your repository files have not yet been published, and the labels and milestones are not yet configured.

Please ask me or @godiswc for assistance if you need it.

Answers:

username_1: I have created the milestones and updated the labels, but I can't use git push to update my repository. I also created a branch sebc-shanghai-2017. When I run the command, I get the error below:

$ git push –u origin sebc-shanghai-2017

error: src refspec origin does not match any.

error: src refspec sebc-shanghai-2017 does not match any.

error: failed to push some refs to '–u'

Is there anything I missed? Thanks

username_1: I resolved the issues by reinitializing the repository.

Status: Issue closed

|

korlibs/korge | 1094864941 | Title: java.lang.IllegalStateException: Can't find resource '/com/soywiz/korge/intellij/generator/gradlew'

Question:

username_0: Upon creating a new Korge project, I receive the following message. I am running IntelliJ Ultimate Edition 2021.3.1 on openSUSE Tumbleweed and Java 17. The korge plugin version is 2.1.1.6.

`java.lang.IllegalStateException: Can't find resource '/com/soywiz/korge/intellij/generator/gradlew'

at com.soywiz.korge.intellij.KorgeResources.getBytes(KorgeResources.kt:6)

at com.soywiz.korge.intellij.module.KorgeModuleConfig.generate$lambda-3$getFileFromGenerator(KorgeModuleConfig.kt:33)

at com.soywiz.korge.intellij.module.KorgeModuleConfig.generate(KorgeModuleConfig.kt:37)

at com.soywiz.korge.intellij.module.KorgeModuleBuilder$setupRootModel$1$1.invokeSuspend(KorgeModuleBuilder.kt:47)

at kotlin.coroutines.jvm.internal.BaseContinuationImpl.resumeWith(ContinuationImpl.kt:33)

at kotlinx.coroutines.DispatchedTask.run(DispatchedTask.kt:106)

at kotlinx.coroutines.EventLoopImplBase.processNextEvent(EventLoop.common.kt:274)

at kotlinx.coroutines.BlockingCoroutine.joinBlocking(Builders.kt:85)

at kotlinx.coroutines.BuildersKt__BuildersKt.runBlocking(Builders.kt:59)

at kotlinx.coroutines.BuildersKt.runBlocking(Unknown Source)

at kotlinx.coroutines.BuildersKt__BuildersKt.runBlocking$default(Builders.kt:38)

at kotlinx.coroutines.BuildersKt.runBlocking$default(Unknown Source)

at com.soywiz.korge.intellij.module.KorgeModuleBuilder$setupRootModel$1.invoke(KorgeModuleBuilder.kt:46)

at com.soywiz.korge.intellij.module.KorgeModuleBuilder$setupRootModel$1.invoke(KorgeModuleBuilder.kt:43)

at com.soywiz.korge.intellij.util.UtilsKt$backgroundTask$1.run(Utils.kt:103)

at com.intellij.openapi.progress.impl.CoreProgressManager.startTask(CoreProgressManager.java:436)

at com.intellij.openapi.progress.impl.ProgressManagerImpl.startTask(ProgressManagerImpl.java:120)

at com.intellij.openapi.progress.impl.CoreProgressManager.lambda$runProcessWithProgressAsync$5(CoreProgressManager.java:496)

at com.intellij.openapi.progress.impl.ProgressRunner.lambda$submit$3(ProgressRunner.java:244)

at com.intellij.openapi.progress.impl.CoreProgressManager.lambda$runProcess$2(CoreProgressManager.java:188)

at com.intellij.openapi.progress.impl.CoreProgressManager.lambda$executeProcessUnderProgress$12(CoreProgressManager.java:624)

at com.intellij.openapi.progress.impl.CoreProgressManager.registerIndicatorAndRun(CoreProgressManager.java:698)

at com.intellij.openapi.progress.impl.CoreProgressManager.computeUnderProgress(CoreProgressManager.java:646)

at com.intellij.openapi.progress.impl.CoreProgressManager.executeProcessUnderProgress(CoreProgressManager.java:623)

at com.intellij.openapi.progress.impl.ProgressManagerImpl.executeProcessUnderProgress(ProgressManagerImpl.java:66)

at com.intellij.openapi.progress.impl.CoreProgressManager.runProcess(CoreProgressManager.java:175)

at com.intellij.openapi.progress.impl.ProgressRunner.lambda$submit$4(ProgressRunner.java:244)

at java.base/java.util.concurrent.CompletableFuture$AsyncSupply.run(CompletableFuture.java:1700)

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128)

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:628)

at java.base/java.util.concurrent.Executors$PrivilegedThreadFactory$1$1.run(Executors.java:668)

at java.base/java.util.concurrent.Executors$PrivilegedThreadFactory$1$1.run(Executors.java:665)

at java.base/java.security.AccessController.doPrivileged(Native Method)

at java.base/java.util.concurrent.Executors$PrivilegedThreadFactory$1.run(Executors.java:665)

at java.base/java.lang.Thread.run(Thread.java:829)` |

ScottishCovidResponse/SCRCIssueTracking | 645758427 | Title: Clearly separate inference and prediction configuration

Question:

username_0: Every run of the EERA model uses a common `parameters.ini` file to supply its configuration. This file contains parameters used both in prediction mode and inference mode. This is confusing, as it is not clear which parameters are required for any particular run.

The inference and prediction configuration should be clearly separated, either by separating them into different sections of the file, or alternatively by having them in separate files.

In addition, the I/O functionality in the code should read in only the inference or the prediction configuration, depending on the mode. Otherwise the input data structures will contain uninitialised parameters, and it will not be obvious which ones.
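
The separation proposed above (distinct sections, with the I/O code reading only what the current mode needs) could look roughly like the Python sketch below. The section names `[common]`, `[inference]` and `[prediction]`, the keys, and the `load_mode_config` helper are all hypothetical and are not taken from the EERA model's actual `parameters.ini` or code:

```python
import configparser

# Hypothetical layout; keys and values are placeholders.
EXAMPLE_INI = """
[common]
seed = 1

[inference]
n_particles = 100

[prediction]
n_days = 30
"""


def load_mode_config(text: str, mode: str) -> dict:
    """Return the common settings plus only those for the requested mode."""
    if mode not in ("inference", "prediction"):
        raise ValueError(f"unknown mode: {mode}")
    parser = configparser.ConfigParser()
    parser.read_string(text)
    settings = dict(parser["common"])
    settings.update(parser[mode])
    return settings


print(load_mode_config(EXAMPLE_INI, "prediction"))
```

With this shape, a prediction run never sees inference-only keys, so no field is left silently uninitialised.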

Related to, but more specific than #437<issue_closed>

Status: Issue closed |

lazychaser/laravel-nestedset | 142301799 | Title: New features for v3 for Laravel 5.1 LTS

Question:

username_0: Hi,

I am wondering if the new (and future) features implemented for v4 will be implemented in v3 as well? Due to LTS and project requirements, I am unable to upgrade to 5.2.

Multi-tenancy will be extremely welcome in my current project.

Answers:

username_1: Sorry, no new features will be implemented in v3. Only bug fixes if any occur.

Status: Issue closed

|

BlackArch/blackarch-config-awesome | 342523000 | Title: Errors in /etc/xdg/awesome/rc.lua

Question:

username_0: It seems that some of the code in the configuration file is no longer valid.

When I type mod4 + r:

I get the following error, which pops up in a red box in the top right corner of my screen:

`/etc/xdg/awesome/rc.lua 6466: attempt to index a nil value (field '?')`

Would you have any idea what could be the cause of this error?

Here is some information regarding my install:

awesome -version:

awesome v4.2 (Human after all)

• Compiled against Lua 5.3.4 (running with Lua 5.3)

• D-Bus support: ✔

• execinfo support: ✔

• xcb-randr version: 1.5

• LGI version: 0.9.2 |

automatiko-io/automatiko-engine | 921061973 | Title: Create service repositories to be equipped with useful service task implementations to enable simple reuse

Question:

username_0: Many times users require access to certain service types out of the box that can be easily used within the workflows.

Automatiko should build up a service repository that can easily grow with useful operations to be declared in the workflow and simply used instead of requiring them to be implemented every time.

To start with

- email sending based on template and attachments

- creating zip archives with selected variables

- upload variables into cloud storage like google drive, s3<issue_closed>

Status: Issue closed |

scambra/devise_invitable | 28363208 | Title: mysterious generate_token method

Question:

username_0: `generate_token` is invoked here: https://github.com/username_2/devise_invitable/blob/master/lib/devise_invitable/model.rb#L291

However I can't find any definition of such a method in the devise_invitable, devise, or rails codebases.

I also searched for various things to see if it's generated at runtime and didn't find anything.

I also poked around in Pry and it seems to be undefined.

```

app(dev)> cd User

app(dev)> show-method generate_token

Error: Couldn't locate a definition for generate_token!

app(dev)> show-method invitation_token

From: /Users/john/medstro/devise_invitable/lib/devise_invitable/model.rb @ line 290:

Owner: Devise::Models::Invitable::ClassMethods

Visibility: public

Number of lines: 3

def invitation_token

generate_token(:invitation_token)

end

app(dev)> generate_token(:invitation_token)

NoMethodError: undefined method `generate_token' for User(no database connection):Class

from /Users/john/.rbenv/versions/2.1.1/lib/ruby/gems/2.1.0/gems/activerecord-4.0.3/lib/active_record/dynamic_matchers.rb:22:in `method_missing'

```

Answers:

username_1: I'm using devise_invitable 1.1.8 and here the generate_token method is called but an exception is raised since it can't be found anywhere. This totally breaks the gem and I can't invite any user. I'm running Devise 3.5.10 which is as high as I can go considering other dependencies. Can I upgrade devise_invitable or should I downgrade it? Any recommendations?

username_2: You should upgrade devise_invitable to the latest version |

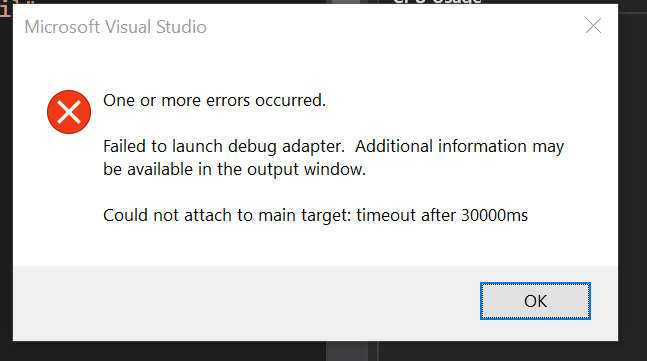

dotnet/aspnetcore | 592213881 | Title: .NET Core Debug Blazor Web Assembly in Chrome leads to forever spinning chrome page with about:blank in address bar

Question:

username_0: ### Describe the bug

In VS Code, starting ".NET Core Launch (Blazor Standalone)" and then starting

".NET Core Debug Blazor Web Assembly in Chrome" leads to a forever-loading Chrome page with about:blank in the address bar.

### To Reproduce

I followed the tutorial to get started with the template Microsoft.AspNetCore.Components.WebAssembly.Templates::3.2.0-preview3.20168.3

https://docs.microsoft.com/en-us/aspnet/core/blazor/get-started?view=aspnetcore-3.1&tabs=visual-studio-code

Then I moved on to the debugging instructions:

https://docs.microsoft.com/en-us/aspnet/core/blazor/debug?view=aspnetcore-3.1

The project is the one created by

```dotnet new blazorwasm -o WebApplication1```

Without a single change

In VS Code, after starting ".NET Core Launch (Blazor Standalone)" and then ".NET Core Debug Blazor Web Assembly in Chrome", it opens a Chrome page with about:blank which spins forever and never leads to the website.

Putting a breakpoint in the razor pages (for instance, FetchData.razor on currentCount++;) shows "Unbound breakpoint".

At this point, if I press F12 in Chrome, the page loads, but debugging still does not work.

Breakpoints start to show the message "No symbols have been loaded for this document".

### Further technical details

- dotnet --info

```
.NET Core SDK (reflecting any global.json):

Version: 3.1.201

Commit: <PASSWORD>b4ae7

Runtime Environment:

OS Name: Windows

OS Version: 10.0.18362

OS Platform: Windows

RID: win10-x64

Base Path: C:\Program Files\dotnet\sdk\3.1.201\

Host (useful for support):

Version: 3.1.3

Commit: <PASSWORD>

.NET Core SDKs installed:

3.1.201 [C:\Program Files\dotnet\sdk]

.NET Core runtimes installed:

Microsoft.AspNetCore.App 3.1.3 [C:\Program Files\dotnet\shared\Microsoft.AspNetCore.App]

Microsoft.NETCore.App 3.1.1 [C:\Program Files\dotnet\shared\Microsoft.NETCore.App]

Microsoft.NETCore.App 3.1.3 [C:\Program Files\dotnet\shared\Microsoft.NETCore.App]

Microsoft.WindowsDesktop.App 3.1.1 [C:\Program Files\dotnet\shared\Microsoft.WindowsDesktop.App]

Microsoft.WindowsDesktop.App 3.1.3 [C:\Program Files\dotnet\shared\Microsoft.WindowsDesktop.App]

To install additional .NET Core runtimes or SDKs:

https://aka.ms/dotnet-download

```

- IDE VS Code 1.43.2

- Chrome Version 80.0.3987.162 (Official Build) (64-bit)

Answers:

username_1: @username_0 thanks for contacting us.

We'll look into your issue and get back to you.

username_2: @username_4 can you please have a look at this? Thanks!

username_3: Same here.

Stuck on "about:blank" spinning. If I look at VS Code's call stack window, I can see ".NET Core Debug Blazor Web Assembly in Chrome" with "blank" under it.

After a while (I'm guessing a debug timeout), the page does load, but the call stack in VS Code still says "blank" and breakpoints are obviously not being hit.

I also tried with a hosted app created using this: dotnet new blazorwasm --hosted -o TestApp

Same result.

Maybe interesting: if I open up the dev tools in Chrome (F12), the page does load. I'm guessing that it requeries the page and thus doesn't wait for the debugger to attach?

But yeah, debugging is unusable with VS Code for now, and I'd rather not install a preview version of Visual Studio; I've had too many issues in the past with preview versions "sticking" around and causing problems. So I'm kinda stuck, debugging-wise.

username_4: @username_0 / @username_3 how are you running the VSCode bits? Aka, which launch configurations are you invoking and in what order?

@username_7 the debugging instructions on the get started page for Blazor WASM VSCode aren't great. Could we get those updated?

username_4: @username_5 do you have any idea why opening chrome dev tools would then allow the Debug Enabled chrome instance to load? Is there something @username_0 can do to capture more log information to diagnose further?

username_5: Yep: setting `trace: true` in the launch.json will cause the extension to capture verbose logs. The file location will be printed to the debug console, sharing that should shed some light.
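As a rough sketch of the suggestion above, the Chrome launch configuration in `.vscode/launch.json` with tracing switched on might look like the fragment below. Only the `trace` setting and the configuration name come from this thread; the `type` and `url` values are assumptions for illustration and should be left as they are in your existing configuration.

```jsonc
{
    // Name as it appears in this thread's launch configurations.
    "name": ".NET Core Debug Blazor Web Assembly in Chrome",
    // Assumed values; keep whatever your existing configuration uses.
    "type": "pwa-chrome",
    "request": "launch",
    "url": "https://localhost:5001",
    // ...keep the remaining fields of your existing configuration unchanged...

    // The setting suggested above: capture verbose logs.
    "trace": true
}
```

The location of the resulting log file is printed to the debug console once the session starts.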

username_3: I'm following the instructions found at https://devblogs.microsoft.com/aspnet/blazor-webassembly-3-2-0-preview-3-release-now-available/

So I'm starting .NET Core Launch (Blazor Standalone) first, then once that's running, I'm starting .NET Core Debug Blazor Web Assembly in Chrome.

Here's the verbose log I grabbed by adding "trace": true; hopefully it is what you need.

[verbose log.txt](https://github.com/dotnet/aspnetcore/files/4459686/verbose.log.txt)

username_5: The normal process is that we get a target for about:blank, attach to it, and issue a Page.navigate, and then when it loads we're ready to go. We try to do this in the log that nlz sent, but notice that there's never a response to the Page.navigate CDP call. The request/response pair should look like:

Looking at the logs, I wonder if something isn't quite connecting right inside the Blazor proxy. I also noticed that none of the requests issued to the page's target after Page.navigate (like Runtime.enable and Debugger.enable, which should each get an empty successful reply) have responses. The requests/responses that nlz has appear to be equivalent to the ones I have up until Page.navigate.

Is there a way to retrieve telemetry/trace logs from the proxy @username_4?
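The symptom described above (requests sent to the page's target that never get a reply) can be checked mechanically. Below is a minimal Python sketch, assuming the trace has already been reduced to a list of CDP-style messages in which a request carries `id` and `method`, a response carries the same `id` without `method`, and ids are unique within the trace. The message list, the `T1`/`S1` identifiers, and the URL are hypothetical, and this is not the actual log format written by the VS Code extension.

```python
from typing import Any


def find_unanswered(messages: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Return requests that never received a response with the same id."""
    pending: dict[int, dict[str, Any]] = {}
    for msg in messages:
        if "id" not in msg:
            continue  # an event (method without id), not a request or response
        if "method" in msg:
            pending[msg["id"]] = msg  # a request: remember it until answered
        else:
            pending.pop(msg["id"], None)  # a response: its request is answered
    return list(pending.values())


# Hypothetical, simplified trace: the attach call is answered,
# everything from Page.navigate onwards is not.
trace = [
    {"id": 1, "method": "Target.attachToTarget", "params": {"targetId": "T1"}},
    {"id": 1, "result": {"sessionId": "S1"}},
    {"id": 2, "method": "Page.navigate", "params": {"url": "https://localhost:5001"}},
    {"id": 3, "method": "Runtime.enable"},
    {"id": 4, "method": "Debugger.enable"},
]

stuck = [m["method"] for m in find_unanswered(trace)]
print(stuck)  # ['Page.navigate', 'Runtime.enable', 'Debugger.enable']
```

If the first unanswered request is Page.navigate, that matches the pattern described above and would point at whatever sits between the debugger and the page, rather than at the launch order.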

username_0: I went back to the exact same project, and after deleting obj and bin it works. I can now debug Blazor WebAssembly from Visual Studio Code. This is weird...

username_4: Sadly I'm not familiar with the proxy side of things. @username_8 could you assist?

username_3: For the record, I tried deleting the obj/bin folders as suggested by @username_0 and this did NOT fix it for me.

I'll be happy to help with whatever is needed (logs, troubleshooting, etc).

username_6: Same issue here but with Visual Studio Preview Version shown below

With the following launchSettings Profile

With this exception after a 30 s wait:

```

Request starting HTTP/1.1 GET http://localhost:56261/_framework/debug/ws-proxy?browser=ws%3A%2F%2Flocalhost%3A27931%2Fdevtools%2Fbrowser%2F4a007c8e-3691-43b1-885e-ccdb38c71e2d

WsProxy Starting on ws://localhost:27931/devtools/browser/4a007c8e-3691-43b1-885e-ccdb38c71e2d

WsProxy: IDE waiting for connection on ws://localhost:27931/devtools/browser/4a007c8e-3691-43b1-885e-ccdb38c71e2d

WsProxy: Client connected on ws://localhost:27931/devtools/browser/4a007c8e-3691-43b1-885e-ccdb38c71e2d

WsProxy::Run: Exception System.AggregateException: One or more errors occurred. (The remote party closed the WebSocket connection without completing the close handshake.)

---> System.Net.WebSockets.WebSocketException (0x80004005): The remote party closed the WebSocket connection without completing the close handshake.

---> Microsoft.AspNetCore.Connections.ConnectionResetException: An existing connection was forcibly closed by the remote host.

---> System.Net.Sockets.SocketException (10054): An existing connection was forcibly closed by the remote host.

at Microsoft.AspNetCore.Server.Kestrel.Transport.Sockets.Internal.SocketAwaitableEventArgs.<GetResult>g__ThrowSocketException|7_0(SocketError e)

at Microsoft.AspNetCore.Server.Kestrel.Transport.Sockets.Internal.SocketAwaitableEventArgs.GetResult()

at Microsoft.AspNetCore.Server.Kestrel.Transport.Sockets.Internal.SocketConnection.ProcessReceives()

at Microsoft.AspNetCore.Server.Kestrel.Transport.Sockets.Internal.SocketConnection.DoReceive()

--- End of inner exception stack trace ---

at System.IO.Pipelines.PipeCompletion.ThrowLatchedException()

at System.IO.Pipelines.Pipe.GetReadResult(ReadResult& result)

at System.IO.Pipelines.Pipe.GetReadAsyncResult()

at System.IO.Pipelines.Pipe.DefaultPipeReader.GetResult(Int16 token)

at Microsoft.AspNetCore.Server.Kestrel.Core.Internal.Http.HttpRequestStream.ReadAsyncInternal(Memory`1 buffer, CancellationToken cancellationToken)

at System.Net.WebSockets.ManagedWebSocket.EnsureBufferContainsAsync(Int32 minimumRequiredBytes, CancellationToken cancellationToken, Boolean throwOnPrematureClosure)

at System.Net.WebSockets.ManagedWebSocket.ReceiveAsyncPrivate[TWebSocketReceiveResultGetter,TWebSocketReceiveResult](Memory`1 payloadBuffer, CancellationToken cancellationToken, TWebSocketReceiveResultGetter resultGetter)

at System.Net.WebSockets.ManagedWebSocket.ReceiveAsyncPrivate[TWebSocketReceiveResultGetter,TWebSocketReceiveResult](Memory`1 payloadBuffer, CancellationToken cancellationToken, TWebSocketReceiveResultGetter resultGetter)

at WsProxy.WsProxy.ReadOne(WebSocket socket, CancellationToken token)

--- End of inner exception stack trace ---

at System.Threading.Tasks.Task.ThrowIfExceptional(Boolean includeTaskCanceledExceptions)

at System.Threading.Tasks.Task`1.GetResultCore(Boolean waitCompletionNotification)

at WsProxy.WsProxy.Run(Uri browserUri, WebSocket ideSocket)