repo_name (string, lengths 4 to 136) | issue_id (string, lengths 5 to 10) | text (string, lengths 37 to 4.84M) |

|---|---|---|

GoogleCloudPlatform/nodejs-docs-samples | 700182135 | Title: iot manager list command with null information

Question:

username_0: Running `node manager.js listDevices my-node-registry`, I get a list of my devices with some information, but some of the fields are null. For example:

```

Current devices in registry:

...

Device 3: { credentials: [],

metadata: {},

id: 'pcTest',

name: '',

numId: '123456789123456',

lastHeartbeatTime: null,

lastEventTime: null,

lastErrorTime: null,

lastErrorStatus: null,

config: null,

lastConfigAckTime: null,

state: null,

lastConfigSendTime: null,

blocked: false,

lastStateTime: null,

logLevel: 'LOG_LEVEL_UNSPECIFIED',

gatewayConfig: null }

Device 4: { credentials: [],

metadata: {},

id: 'pcTest2',

name: '',

numId: '123456789123456',

lastHeartbeatTime: null,

lastEventTime: null,

lastErrorTime: null,

lastErrorStatus: null,

config: null,

lastConfigAckTime: null,

state: null,

lastConfigSendTime: null,

blocked: false,

lastStateTime: null,

logLevel: 'LOG_LEVEL_UNSPECIFIED',

gatewayConfig: null }

```

Why are some parameters null when Google Cloud Platform has the information, such as the heartbeat time? Is it an IAM permission problem or an API limit?

Thanks

Answers:

username_1: Hi there! Would you mind sharing your code to triage the issue? Thanks!

username_0: Hi:

The code is the example code from iot/manager in this repository. I just run the command with my own parameters.

username_1: Hi @username_0,

Without knowing how many devices or requests you've made, I can't say for sure if it's a quota issue. I've linked the documentation [here](https://cloud.google.com/iot/quotas) just in case.

A couple of ideas I have off the top of my head are: (i) have you confirmed the devices are correctly recording the information, and are storing it? (ii) perhaps you needed to wait for the information to become available?

I'm also involving @sgreenberg who may know more!

username_2: @username_0 It is working as intended. It has nothing to do with IAM. It just indicates that these variables are not initialized.

username_0: hi:

I've attached three images: the first shows the listDevices command (the reason for this ticket); the second shows getDevice, which returns device information including lastHeartbeatTime; and the third is a screenshot from GCP confirming that the data exists.

I need an API that checks whether all my devices are alive (without sending any additional state or telemetry message, just the MQTT keep-alive ping, i.e. the heartbeat). listDevices is my best option; my second option is to use listDevices to get all devices and then check them one by one with getDevice. I checked the example code and it looks fine, which is why I think the problem may be in GCP or the Google API.

What do you think: could the Google API be the problem?
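The fallback described above (list every device, then fetch each one individually) could be sketched as follows. This is a minimal illustration in Python, not the sample's code: `list_devices` and `get_device` are hypothetical callables standing in for the real API calls, and only the `id` and `lastHeartbeatTime` field names are taken from the output shown in this thread.

```python
def devices_missing_heartbeat(list_devices, get_device):
    """Return the ids of devices whose full record has no lastHeartbeatTime.

    list_devices() -> iterable of dicts with an "id" key (summary view).
    get_device(device_id) -> dict holding the full device record.
    Both callables are placeholders for real API calls.
    """
    missing = []
    for summary in list_devices():
        detail = get_device(summary["id"])
        # A null or absent heartbeat is treated as "never seen alive".
        if not detail.get("lastHeartbeatTime"):
            missing.append(summary["id"])
    return missing
```

Note that this issues one detail request per device, so for large registries the quota page linked earlier in the thread becomes relevant.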

username_2: @username_0 Hmmm, you are right. Let me investigate a bit more whether it is service related or sample related.

Status: Issue closed

username_3: Looks like you were able to resolve the issue. Closing, please re-open if needed. |

Multiverse/Multiverse-Core | 648043617 | Title: Individual chats per world

Question:

username_0: Suggestion:

I've looked for other plugins that would do this. It's self-explanatory: each world has its own chat, and you can't see the entire server chat. I've seen a plugin that does this, but it isn't maintained, and I think it would be a great addition to MV core.

Answers:

username_0: So I've tried the unmaintained plugin: https://www.spigotmc.org/resources/perworldchat.29897/ . It half works: you can list the worlds that should share a single chat, but it doesn't work for connected worlds (a world with an End and a Nether). If you're going to use their source code, take this into consideration.

username_1: There are many chat plugins out there that have this feature, such as Chat Control, Chat Manager, DeluxeChat, VentureChat, and probably more. So there isn't much use in MV creating a chat plugin.

Status: Issue closed

|

ContinuumIO/anaconda-issues | 211012278 | Title: cv2.imshow() is not working on ipython console !!!

Question:

username_0: /Users/travis/build/skvark/opencv-python/opencv/modules/highgui/src/window.cpp:583: error: (-2) The function is not implemented. Rebuild the library with Windows, GTK+ 2.x or Carbon support. If you are on Ubuntu or Debian, install libgtk2.0-dev and pkg-config, then re-run cmake or configure script in function cvShowImage

Answers:

username_1: Same problem. I have tried two methods:

1. Recompile OpenCV with GTK

2. `conda install -c https://conda.anaconda.org/menpo opencv3`

Neither works for me.

username_0: @username_1 doesn't work

username_2: Mine is the same. Can anyone help me, please?

error: /io/opencv/modules/highgui/src/window.cpp:668: error: (-2) The function is not implemented. Rebuild the library with Windows, GTK+ 2.x or Carbon support. If you are on Ubuntu or Debian, install libgtk2.0-dev and pkg-config, then re-run cmake or configure script in function cvStartWindowThread

username_1: I have found the reason: I had multiple OpenCV installations. Use `cv2.__file__` to confirm which OpenCV is being used, then uninstall the ones that are not used. |
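Alongside the `cv2.__file__` check, duplicate installations can be looked for without importing `cv2` at all. The sketch below uses only the standard library; `find_opencv_distributions` is a hypothetical helper name. It is an assumption that the duplicates register Python package metadata (as pip wheels do); a conda-installed build may not show up here.

```python
from importlib import metadata


def find_opencv_distributions():
    """List (name, version) for installed distributions whose name mentions OpenCV."""
    found = set()
    for dist in metadata.distributions():
        meta = dist.metadata
        name = (meta.get("Name") if meta is not None else None) or ""
        if "opencv" in name.lower():
            found.add((name, dist.version))
    return sorted(found)


if __name__ == "__main__":
    # More than one entry suggests competing OpenCV builds in this environment.
    for name, version in find_opencv_distributions():
        print(name, version)
```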

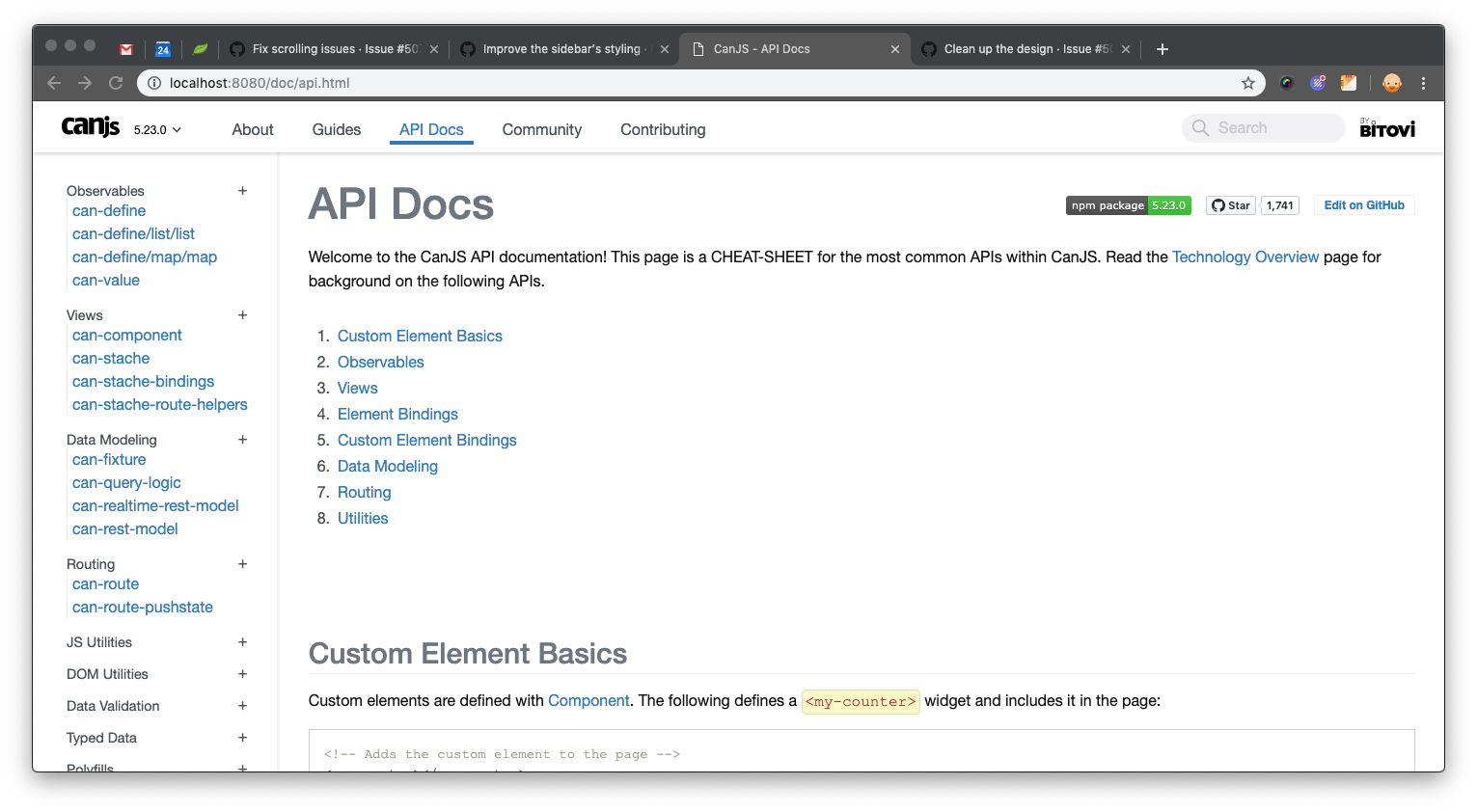

canjs/bit-docs-html-canjs | 436428947 | Title: Clean up the design

Question:

username_0: - [ ] Get rid of Lato

- [ ] Either change the direction of the vertical shadow between the left sidebar & content, or make it a solid gray line?

- [ ] Add spacing to the bottom of the left sidebar to avoid this:

<img width="300" alt="Screen Shot 2019-04-23 at 3 45 39 PM" src="https://user-images.githubusercontent.com/10070176/56620768-e9226000-65de-11e9-94c8-23be2fa20d96.png">

- [ ] Fix the dropdown at 1000px wide

<img width="572" alt="Screen Shot 2019-04-23 at 3 15 59 PM" src="https://user-images.githubusercontent.com/10070176/56620821-153de100-65df-11e9-932f-8acdc1fb1ae9.png">

- [ ] Vertical alignment: make the logo, version, & links share the same baseline? [And same thing with the other stuff in the nav?]

<img width="246" alt="Screen Shot 2019-04-23 at 3 48 59 PM" src="https://user-images.githubusercontent.com/10070176/56620918-6a79f280-65df-11e9-988b-ccf81533db6b.png">

- [ ] Horizontal alignment: tighten the space between the main nav links, use padding instead of margin so the link target area is bigger, & make the spacing between things more cohesive (right now it’s _30px | logo | 15px | version | 60px | nav links_ on the left and _search input | 20px | By Bitovi | 10px_ on the right)

- [ ] Make comps for what the “By Bitovi” logo would look like with black text (just By black, By gray and Bitovi black, & both black)

- [ ] The X in the search input feels huge to me but it looks like it’s the same size as the magnifying glass. Maybe consider making it smaller, or less thick, or…?

- [ ] Consider how we could make the hover & selected states more consistent

<img width="134" alt="Screen Shot 2019-04-23 at 4 03 15 PM" src="https://user-images.githubusercontent.com/10070176/56621459-63ec7a80-65e1-11e9-96ce-4d995c8266e0.png">

Answers:

username_1: RE: sidebar remove shadow and add gray line

<img width="1920" alt="Screen Shot 2019-04-24 at 7 35 49 AM" src="https://user-images.githubusercontent.com/4571457/56656587-e3bb2900-6663-11e9-88d9-6ed33fcf7dad.png">

Option 2 - make sidebar background a light gray color

<img width="1920" alt="Screen Shot 2019-04-24 at 7 36 13 AM" src="https://user-images.githubusercontent.com/4571457/56656597-e87fdd00-6663-11e9-91d5-0fc4102eedb5.png">

username_1: By Bitovi mods

username_1:

Status: Issue closed

|

BabylonJS/Spector.js | 925905860 | Title: Seeing only the last frame with Chrome extension

Question:

username_0: Hey, when using the SpectorJS Chrome extension, it shows only the last frame, and empty ones before it.

Any suggestion for solving it?

Answers:

username_1: In this case the frame buffers are not of a renderable type.

Do you have a repro to confirm ?

username_0: @username_1

What makes you know that?

As it can be seen in the image, the last frame is created from several meshes, but it was not possible to see with the frames, the process that created it (For example which mesh was rendered before others)

username_1: This is one of the only reasons we would not capture a frame buffer. It might happen for a shadow map, for instance. You could look into the frame buffer setup for the draw call to confirm.

Status: Issue closed

|

andreamazza89/amaze | 573275525 | Title: Take a step from within the maze rather than looking at a map of it

Question:

username_0: At the moment, the `takeAStep` api is from the point of view of someone looking down at the maze map.

This is to expose another endpoint, which allows a player to take a step from within the maze; this will require the app tracking the player's direction.

In summary, after this story we should have something like:

- `takeAStepOnTheMap` -> which takes an absolute direction of `North | West | South | East`

- `takeAStepInTheMaze` -> which takes a relative direction of `Forwards | Backwards | Right | Left`
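One way to relate the two endpoints, assuming the app tracks which way the player is facing, is to resolve a relative direction into an absolute one before taking the step. The sketch below is in Python purely for illustration; `resolve_direction` and the enum names are hypothetical and not taken from the amaze codebase.

```python
from enum import Enum


class Absolute(Enum):
    # Listed clockwise so that a quarter turn to the right is "+1".
    NORTH = 0
    EAST = 1
    SOUTH = 2
    WEST = 3


class Relative(Enum):
    # Value = number of clockwise quarter turns from the current facing.
    FORWARDS = 0
    RIGHT = 1
    BACKWARDS = 2
    LEFT = 3


def resolve_direction(facing: Absolute, move: Relative) -> Absolute:
    """Turn a relative step into the absolute direction it corresponds to."""
    return Absolute((facing.value + move.value) % 4)
```

With this mapping, `takeAStepInTheMaze` could delegate to the same logic as `takeAStepOnTheMap` once the direction has been resolved.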

Answers:

username_0: Could be cool for this to be the same Mutation, but with a parameter that specifies which way around you want to communicate the direction. |

joferkington/mplstereonet | 896062939 | Title: Problem with ax.set_azimuth_ticks()

Question:

username_0: I was updating some stereonet subplots in Jupyter (that used to look fine) and after running the cell the degrees around the stereonets did not plot correctly as they used to. Instead of plotting around the edge of the stereonets, they plotted all around the frame of the four subplots. I then tried on a single stereonet and it was better, but the degrees were positioned quite far away from the edge of the stereonet and I could not adjust that position.

Answers:

username_1: I encountered exactly the same issue today, and I am using Spyder. The last time I used mplstereonet was in December 2020 and back then the error did not occur. Here is an example of the ticks and labels:

username_2: Ok, took me a while to figure out what was going on. I can suggest two workarounds to fix this issue:

1. If "ax" is your stereonet axis, add the line "ax._polar.set_position(ax.get_position())" before calling plt.show()

2. Downgrade matplotlib to version <3.4.0

mplstereonet makes a hidden polar axis within the stereonet axis as a bit of a kludge to add azimuth tick labels.

The issue is in mplstereonet/stereonet_axes.py line 291. Prior to matplotlib 3.4.0, fig.add_axes() used to detect if you were attempting to create Axes with the same keyword arguments as already-existing Axes in the current Figure, and if so, it would return the existing Axes. Now it will create a new axis. So changes to the size of the stereonet axis aren't matched by changes in the polar axis anymore. Workaround 1 manually matches any size changes that have occurred in the parent axis.

The matplotlib functionality change is documented here:

https://matplotlib.org/stable/users/prev_whats_new/whats_new_3.4.0.html#changes-to-behavior-of-axes-creation-methods-gca-add-axes-add-subplot |

ZJONSSON/node-etl | 429391888 | Title: ElasticSearch should retry with backoffs if transport error

Question:

username_0: If too much data gets written to ES, it will sometimes fail with this error:

https://stackoverflow.com/questions/37855803/rejected-execution-of-org-elasticsearch-transport-transportservice-error

It'd be nice if this was handled by the node-etl library and automatically retried (after backoffs) |
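The requested behaviour is a standard retry loop with exponential backoff. The sketch below shows the idea in Python for illustration only; node-etl is a Node.js library and this is not its API. `retry_with_backoff`, its parameters, and the default delays are all hypothetical, and real code would catch only the specific rejection error rather than every exception.

```python
import random
import time


def retry_with_backoff(operation, max_attempts=5, base_delay=0.5,
                       max_delay=30.0, sleep=time.sleep):
    """Call operation(); on failure wait and retry, doubling the delay each time."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except Exception:  # assumption: narrow this to the transport error in practice
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            delay = min(max_delay, base_delay * (2 ** attempt))
            # Jitter spreads out retries from concurrent writers.
            sleep(delay * random.uniform(0.5, 1.0))
```

The `sleep` parameter is injectable so the loop can be exercised without actually waiting.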

Azure/azure-functions-host | 754232401 | Title: proxies route value = 'null' when running inside kubernetes

Question:

username_0: I am using Azure Functions proxies to handle CORS requests in a K8 Cluster and my `proxies.json` looks like this.

```

{

"$schema": "http://json.schemastore.org/proxies",

"proxies": {

"proxy1": {

"matchCondition": {

"methods": [

"OPTIONS"

],

"route": "{*path}"

},

"responseOverrides": {

"response.statusCode": "200",

"response.headers.Access-Control-Allow-Origin": "*",

"response.headers.Access-Control-Allow-Methods": "GET, PUT, POST, DELETE, HEAD, OPTIONS",

"response.headers.Access-Control-Allow-Headers": "*",

"response.headers.Access-Control-Allow-Credentials": "true"

},

"debug": true

}

}

}

```

I have built my Docker container, and when I run the container locally the proxy works as expected when the OPTIONS calls are made. I see the following output:

```

dbug: Microsoft.AspNetCore.Routing.RouteBase[1]

Request successfully matched the route with name 'proxy1' and template '{*path}'

info: Function.proxy1[1]

Executing 'Functions.proxy1' (Reason='This function was programmatically called via the host APIs.', Id=27a6aa40-ca1f-4a56-b1b5-23b9721d1793)

info: Function.proxy1.User[0]

Executing request via Azure Function Proxies

info: Function.proxy1[2]

Executed 'Functions.proxy1' (Succeeded, Id=27a6aa40-ca1f-4a56-b1b5-23b9721d1793, Duration=2ms)

dbug: Microsoft.AspNetCore.Routing.RouteConstraintMatcher[1]

```

However, the same container running in my K8 Cluster gives this output when the OPTIONS request is made

```

dbug: Microsoft.Azure.WebJobs.Script.Workers.Rpc.RpcFunctionInvocationDispatcher[0]

FUNCTIONS_WORKER_RUNTIME=dotnet. Will shutdown all the worker channels that started in placeholder mode

info: Host.General[316]

Host lock lease acquired by instance ID '0000000000000000000000003FA04801'.

dbug: Microsoft.AspNetCore.Routing.RouteConstraintMatcher[1]

Route value '(null)' with key 'httpMethod' did not match the constraint 'Microsoft.AspNetCore.Routing.Constraints.HttpMethodRouteConstraint'

dbug: Microsoft.AspNetCore.Routing.RouteConstraintMatcher[1]

Route value '(null)' with key 'httpMethod' did not match the constraint 'Microsoft.AspNetCore.Routing.Constraints.HttpMethodRouteConstraint'

dbug: Microsoft.AspNetCore.Routing.RouteConstraintMatcher[1]

Route value '(null)' with key 'httpMethod' did not match the constraint 'Microsoft.AspNetCore.Routing.Constrai

```

You can see that the route value is `'(null)'` in the output. I am guessing this must have something to do with the load balancer? Does anyone know when the route value would be null when running inside Kubernetes?

Answers:

username_0: @pragnagopa any update on this?

username_1: Hi @username_0, apologies for the delayed response. Were you able to find a solution? Do let us know if a follow-up is required. The issue was somehow lost in the trace. |

asrob-uc3m/robotDevastation-developer-manual | 218310809 | Title: Introduce programming style conventions

Question:

username_0: Started as an idea while taking a closer look at emacs modelines ([robotDevastation#96](https://github.com/asrob-uc3m/robotDevastation/issues/96#issuecomment-290517327)), it could be expanded to encompass further coding style rules.

Answers:

username_0: I find the [official documentation](https://git-scm.com/docs/gitattributes) a bit too sparing of details in this respect, but it might be possible to handle/enforce the indentation thing in a `.gitattributes` file (see [YARP/.gitattributes](https://github.com/robotology/yarp/blob/v2.3.68/.gitattributes)).

username_0: Consider adding header inclusion guidelines:

* asrob-uc3m/robotDevastation@be2a7de

* asrob-uc3m/robotDevastation@e109550

username_1: Maybe it's okay to point to https://github.com/roboticslab-uc3m/best-practices for now. |

PaddlePaddle/Paddle | 269882299 | Title: cnn_output_size Calculation error using dilation in python api

Question:

username_0: The origin func in python api as follows:

```

import math

def cnn_output_size(img_size, filter_size, padding, stride, caffe_mode):
    # Spatial output size of a convolution; caffe_mode rounds down, otherwise up.
    output = (2 * padding + img_size - filter_size) / float(stride)
    if caffe_mode:
        return 1 + int(math.floor(output))
    else:
        return 1 + int(math.ceil(output))

```

This doesn't take dilation into account.
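A dilation-aware variant could look like the sketch below. It is not the fix that landed in Paddle; it assumes the usual convention that a filter of size `k` with dilation `d` covers `d * (k - 1) + 1` input positions, and the name `cnn_output_size_dilated` and the `dilation` parameter are hypothetical.

```python
import math


def cnn_output_size_dilated(img_size, filter_size, padding, stride,
                            caffe_mode, dilation=1):
    # A dilated filter spans dilation * (filter_size - 1) + 1 input positions.
    effective_filter = dilation * (filter_size - 1) + 1
    output = (2 * padding + img_size - effective_filter) / float(stride)
    if caffe_mode:
        return 1 + int(math.floor(output))
    return 1 + int(math.ceil(output))
```

With `dilation=1` the effective filter size equals `filter_size`, so the function reduces to the original calculation.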

Status: Issue closed |

radicaled/dart-tools | 79513531 | Title: Error reporting's red is difficult to read

Question:

username_0: In styles/dart-tool.less you are using `@text-color-error` for error reporting, but this color is difficult to read on Atom's dark UI theme. Maybe change it to something lighter like `#FD4848`; I've found that easier on my eyes.

Answers:

username_1: 0.9.18 uses the linter package, which has a different linting UI. Is the color still an issue? |

stephanstapel/ZUGFeRD-csharp | 667657723 | Title: ram:BasisAmount in comfort profile

Question:

username_0: The validation of the XML says that ram:BasisAmount (in SpecifiedSupplyChainTradeAgreement) will not be evaluated in the comfort profile.

In previous versions, the BasisAmount was not written either.

Status: Issue closed |

ampproject/amphtml | 717728457 | Title: [amp-story] Allow players to control system UI controls

Question:

username_0: For the amp-story-player, we would like to control the position & visibility of UI elements of the amp-story's system layer (speaker, sharing...).

Since amp-story knows best where to position them in different scenarios (page-attachment open, tweet expanded, etc...), it's appropriate for the player to send a message to the story with the required configuration, and the story takes care of the layout logic.

#### Player to story message

`player.sendRequest('updateSystemUI', config);`

#### Config API

(based on https://github.com/ampproject/amphtml/issues/30031)

```

“header” (optional)

“buttons”: array of objects, each one containing:

“name”: a string with the name of the button. If the name is part of the default set of buttons (close / (un)mute / share) the following fields are optional but overridable.

“icon”: (required when not part of the default set of buttons) accepts URLS and `data:image` URIs like base64 images and svgs.

“event”: (required when not part of the default set of buttons) custom event that will be dispatched when clicked.

“visibility”: “hidden” or “visible”

“position”: “end” or “start” string values

“footer” (coming soon - not available for dev preview)

“logo” (coming soon - not available for dev preview)

```

Example:

```

{

"header": { // (Optional)

"buttons": [ // Required.

{

"name": "mute", // If name is part of default set: the default event/behavior and icon will be used, but can be overridden.

"icon": "icon.jpg", // Supports URLS and `data:image` URIs like base64 images and svgs.

},

{

"name": "close",

"position": "start", // Values: "start" or "end".

},

{

"name": "customButton", // If not part of default set, the following fields will be required.

"icon": "data:image/svg+xml;charset=utf-8,<svg ... ",

"event": "customEvent", // Event that will be dispatched when clicked.

}

]

}

}

```
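The rules in the Config API description (custom buttons need `icon` and `event`; `visibility` and `position` take fixed values) can be checked mechanically before the message is sent. The sketch below is in Python for illustration only and is not part of amp-story or the player. `validate_system_ui_config` is a hypothetical helper, and the exact names in `DEFAULT_BUTTONS` are an assumption, since the proposal only lists "close / (un)mute / share".

```python
# Assumed names for the default button set; the proposal only says
# "close / (un)mute / share".
DEFAULT_BUTTONS = {"close", "mute", "unmute", "share"}


def validate_system_ui_config(config):
    """Return a list of problems; an empty list means the config looks valid."""
    header = config.get("header")
    if header is None:
        return []  # "header" is optional
    buttons = header.get("buttons")
    if not isinstance(buttons, list):
        return ['"header.buttons" is required and must be an array']

    problems = []
    for index, button in enumerate(buttons):
        name = button.get("name")
        if not isinstance(name, str):
            problems.append(f"button #{index}: missing string 'name'")
            continue
        if name not in DEFAULT_BUTTONS:
            # Custom buttons must say what to draw and what to dispatch.
            for field in ("icon", "event"):
                if field not in button:
                    problems.append(f"button '{name}': '{field}' is required")
        if button.get("visibility") not in (None, "hidden", "visible"):
            problems.append(f"button '{name}': invalid visibility")
        if button.get("position") not in (None, "start", "end"):
            problems.append(f"button '{name}': invalid position")
    return problems
```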

#### Listening to Custom Events

Players could then subscribe to events coming from the buttons using the existing viewer message `documentStateUpdate` and checking if it is a player UI event.

```

playerMessaging.registerHandler('documentStateUpdate', (event, data) => {
  if (data.state === 'PLAYER_UI_EVENT') {
    console.log(data.value); // `amp-story-player-close` (Close button was clicked!)
  }
});

```

@ampproject/wg-stories

Answers:

username_1: nit: should we do the listening for `documentStateUpdate` and re-trigger a javascript event? That way the only code necessary is standard event listening (either vanilla JS or from the publisher's framework of choice)

If the `documentStateUpdate` event doesn't match one specified by the UI Controls API, we can just continue to propagate it.

username_0: Yep, that's what I had in mind for the `amp-story-player`. I didn't mention it in this issue because I think @username_2 wanted this to be something that any publisher could reference to and implement in their own viewer/players.

username_0: Sounds good. Landed on controls, will update all the APIs.

username_2: That all sounds good!

We can make the footer decision later once we know more about our plans but it's great that this API will let us pass its configuration as well, if needed.

username_0: Closed by #30502

Status: Issue closed

|

BEEmod/BEE2-items | 198389141 | Title: Cube Dropper compile failure

Question:

username_0: Looks like something with the Cube Colorizer. What does that even do?

```

[ERROR] cond.core.check_all(): Error in condition:

Traceback (most recent call last):

File "F:\Git\BEE2.4\src\conditions\__init__.py", line 463, in check_all

File "F:\Git\BEE2.4\src\conditions\__init__.py", line 348, in test

File "F:\Git\BEE2.4\src\conditions\__init__.py", line 515, in check_flag

ValueError: "coloredcube" is not a valid condition flag!

```

Answers:

username_1: It lets you colour cubes, so they have a coloured stripe on them to make it possible to tell different droppers and cubes apart (for puzzles where you need to fizzle cubes strategically).

Status: Issue closed

username_0: But what if I don't want that? The puzzle only has 1 cube.

username_1: It only does something when you have that item attached to the cube - except that your compiler version doesn't have the code for it, so it errors out. |

socrata/opendatanetwork.com | 123473065 | Title: idea: use stackexchange for support and to build community

Question:

username_0: I feel like the site needs a way for people to a) ask questions as well as b) provide feedback.

For a few reasons, I think (a) can be covered by StackExchange much like `openfda` has leveraged it. We can also start to try to build a community of people to answer each other's questions and drive organic traffic to the ODN site. I, personally, am willing to monitor the `opendatanetwork` tag on SE if we decide to do this.

For (b), I think a Github Issues or email would be better.

Answers:

username_1: What's the status of this idea now that ODN is open source and Github issues is now used on dev.socrata.com?

Status: Issue closed

|

wfxr/forgit | 896882085 | Title: when using "ga" to git add: zsh:11: parse error near `else', no diff in right box

Question:

username_0: <!-- ISSUES NOT FOLLOWING THIS TEMPLATE WILL BE CLOSED AND DELETED -->

<!-- Check all that apply [x] -->

## Check list

- [x] I have read through the [README](https://github.com/username_2/forgit/blob/master/README.md)

- [x] I have the latest version of forgit

- [x] I have searched through the existing issues

## Environment info

- OS

- [x] Linux

- [ ] Mac OS X

- [ ] Windows

- [ ] Others:

- Shell

- [ ] bash

- [ ] zsh

- [x] fish

## Problem / Steps to reproduce

In any git repo that I've tried, when I run `ga`, I don't see a diff in the right box, just

```

zsh:11: parse error near `else'

```

I installed with the `omf` method

Answers:

username_1: will you try typing `fish` before you try `ga` again, just to be sure you're running it in fish? It's strange to see a zsh error while running the fish version of this plugin! I suspect a configuration error.

Is this just with `ga`, or with other commands too?

username_0: I am running fish for sure, but I see that the SHELL variable is set to /bin/zsh, and if I try to set it with fish as a universal variable (that persists over reboots), it tells me it's overshadowed by a global variable (that is set for all sessions but doesn't persist) of the same name. So somewhere in my config something is setting that variable. If I just set the variable for that session only I can override the global value and `ga` works without any issues. I'll hunt that configuration down on my side and I'll close this issue

Status: Issue closed

username_1: @username_2 Have you ever seen anything like that?

Good luck @username_0, be sure to post a comment if you figure it out, may help someone in the future :)

username_2: I haven't seen this error. The error message looks like the `fish` plugin was sourced in the `zsh` shell for some reason.

username_1: Very strange, for sure!!

username_0: I couldn't find why the SHELL variable was set as a global variable anywhere. I have a setup at work where I can't chsh (it gets reset on reboots) so I tell alacritty and guake to run fish instead of the default shell. Maybe zsh still gets called and sets itself as SHELL, or maybe that's just set at login because my default shell is zsh.

My solution was to have `set -gx SHELL /usr/bin/fish` in `~/.config/fish/config.fish` to overwrite it, and it works well. Maybe forgit or something called by forgit reads SHELL at some point to know what it's running on, even if it has been installed via omf ? I imagine my case of having SHELL not match with the shell that's running is not very common

username_1: Very strange! Well, glad you got to the bottom of it. Don't hesitate to reach out if you find any more issues

username_1: @username_0, interestingly enough, this just started happening with me too when I switched to a new job!

I will dig in at some point and see if we can fix this on the forgit side. I can't figure out why I can't update my shell with `chsh`. I figured that would fix it. I'm going to use your hack for now.

Super strange


username_1: @username_0 I think I have found the fix! It seems like you have to do a little legwork for fish to be identified as a shell

First, make sure fish is listed as a shell in /etc/shells. Here is a quick one liner that can help you do that.

```

which fish | sudo tee -a /etc/shells

```

Then you need to change the shell for your user

```

chsh -s (which fish)

```

You then need to close out of your terminal session, but then when you open a new one everything was good for me!

Will you confirm this works and get back to me?
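Before appending to /etc/shells, it may be worth checking whether the shell is already listed, since `tee -a` adds the line unconditionally. A minimal sketch in Python, assuming the usual /etc/shells layout of one path per line with `#` comments; `is_registered_shell` is a hypothetical helper name:

```python
def is_registered_shell(shells_text, shell_path):
    """True if shell_path appears as an entry in /etc/shells-style text."""
    for line in shells_text.splitlines():
        entry = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if entry == shell_path:
            return True
    return False
```

It could be fed the contents of /etc/shells together with the path printed by `which fish`.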

username_0: I tried but it didn't work for me. I don't think I can change my shell at my work, or rather the shell is always overwritten when I reboot. I don't have that issue on my personal computer though, and fish is recognized as a shell all on its own. I'll just keep this little hack for now

Status: Issue closed

|

agda/agda | 1007379268 | Title: The Agda mode’s version (2.6.3) does not match that of agda (2.6.2).

Question:

username_0: Hey guys,

I've installed the agda2-mode from melpa. Looking inside `/home/username_0/.emacs.d/elpa/agda2-mode-20210903.1114/agda2-mode.el` I see this: `(defvar agda2-version "2.6.3"` The Agda version available via cabal (and listed on Hackage) is 2.6.2.

How can I make sure that I am using matching versions? Is there a way to downgrade the agda2-mode to 2.6.2?

Thanks,

Vladimir
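The mismatch described above can be detected by reading the version that `agda2-mode.el` declares and comparing it with the installed Agda version. The sketch below is a hypothetical Python helper, not something shipped with Agda; it assumes the `(defvar agda2-version "...")` form quoted in this issue and takes the Agda version as a plain string supplied by the caller.

```python
import re

# Matches the declaration quoted in the issue: (defvar agda2-version "2.6.3"
_VERSION_RE = re.compile(r'\(defvar\s+agda2-version\s+"([^"]+)"')


def mode_version(elisp_source):
    """Extract the version string declared in agda2-mode.el, or None."""
    match = _VERSION_RE.search(elisp_source)
    return match.group(1) if match else None


def versions_match(elisp_source, agda_version):
    """True when the Emacs mode declares exactly the given Agda version."""
    return mode_version(elisp_source) == agda_version
```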

Answers:

username_1: Installing agda should also install agda-mode. You shouldn't need to use melpa for that.

username_2: I'd like to ask `melpa` to take down `agda2-mode`, since `agda-mode` is always installed together with `agda`.

@username_0: Does @username_1's reply solve your problem?

username_0: I am not sure what you mean by that. Are you saying that installing agda with cabal will also install agda-mode for emacs? How does it know about the peculiarities of my emacs setup? Which version of agda-mode does it pick then?

What I don't understand is why would the master branch of agda and agda mode use different versions?

username_2: The stable version of Agda on Hackage is 2.6.2. So if you really need an experimental version of Agda, then you need to `git clone` this repo and compile inside the repo.

username_0: I see, thanks, guys. That melpa alternative is so confusing, maybe it could be mentioned somewhere in the docs? I do think though that the melpa package just grabs the master and installs, so if master version of agda-mode was matching master agda version, that all would work as expected.

username_2: Yeah, it does not make sense to install `agda-mode` from `melpa` at all, since it just downloads `*.el` files directly from `master`. I have asked them to take it off.

username_0: What is the reason for having different versions of the master branch between agda and agda-mode? Agda master is 2.6.2, agda-mode under the same branch is 2.6.3. Should not that be the same version and 2.6.3 be "experimental" or something, but not "master"?

username_1: Agda master is 2.6.3: https://github.com/agda/agda/blob/master/Agda.cabal#L2

username_0: Oh, I see. Maybe the issue then is that it's not been updated on hackage yet:

username_3: Hackage does not have Agda 2.6.3 as it has not yet been released. The `master` branch always corresponds to the version of Agda that's currently under development.

username_0: I understand. I guess then the melpa installer is making some wrong assumptions, it should not pick the latest (master), but match the version on hackage, which is a bit harder to do, I can imagine...

username_3: This is exactly why installing `agda` also automatically installs `agda-mode`, so you do not have to bother making sure you have the right version.

username_1: And would still be wrong if people install an older version of Agda

(via their OS's package manager for instance).

username_0: If everybody stuck to a convention that is easiest to maintain across many standards, that would not be necessary to have a custom installer. Such as, if master always matched the version on hackage for example. I can imagine that there are reasons not to do so, which resulted in yet another standard (custom installer). I am not saying it's wrong, it's commonplace. But it's not particularly good either, from the point of view of modularity and separation of concerns for example.

username_4: When the Emacs mode was made available via MELPA @username_5 wrote that he was "available for any questions or concerns on this" (#3360).

username_5: If I understand correctly the issue now is that:

1. melpa fetches agda mode from master.

2. the user installs agda stable from hackage (maybe cabal install or something).

3. master agda-mode is incompatible with hackage version

So a solution would be to change the recipe on melpa not to use master, but instead to use a stable version as determined by the agda project. For example, the release tarball may work (need to filter out nightly).

I have to look into the recipe system to see if it's possible.

It may even be possible to modify that recipe to get it from hackage (although I suspect the releases on github are easier).

Please let me know if you have any other questions or concerns I may have forgotten to address here :slightly_smiling_face:

username_1: Users may install an older version than the latest available on Hackage

e.g. because they are working with a textbook that is pinned to a specific

version. AFAIU whatever decision is made on melpa's side will be incompatible

with that scenario.

I'm not sure agda-mode needs to be on melpa at all: as we have seen, it is

tied to the corresponding version of Agda & automatically installed when Agda is.

username_5: First of all I'd like to point out that this hasn't been an issue to date.

The issue opened here is for a difference in minor versions,

which will show up in output path but aside from a little confusing shouldn't cause issues.

These patches have landed since I put it on melpa:

+ https://github.com/agda/agda/commit/c79e052a984b5775f2652867ad14c164c89cfdf8#diff-e77962711f1ff4164544af64cc9f08419e5ffc8e33911d84c186998beb215e65

+ https://github.com/agda/agda/commit/98d7f27e8bc5fde716aad7aac62c57080f5564ef#diff-e77962711f1ff4164544af64cc9f08419e5ffc8e33911d84c186998beb215e65

+ https://github.com/agda/agda/commit/a38bf00d7192aad854eb7f434ddd76eca476f647#diff-e77962711f1ff4164544af64cc9f08419e5ffc8e33911d84c186998beb215e65

+ https://github.com/agda/agda/commit/997ca3a842ab743cce69f6aeaef604605644b904#diff-e77962711f1ff4164544af64cc9f08419e5ffc8e33911d84c186998beb215e65 <-- only this one would be noticed

+ https://github.com/agda/agda/commit/1f75261df2dc085dcb0235f6dff346ec62120836#diff-e77962711f1ff4164544af64cc9f08419e5ffc8e33911d84c186998beb215e65

It seems a bit extreme to remove agda-mode from melpa for a hypothetical

issue which hasn't caused any reported problems.

---

But, if you're writing a text book,

I suggest you use a dedicated tool for software pinning,

like nix, docker or vagrant.

I don't think it's reasonable to assume a version conflict will happen

to *just* the emacs agda-mode and agda.

Agda, like any other great software project, is built on the shoulders of many giants.

For example if using the haskell backend you depend on whatever version of ghc,

and then whatever version of libc for the runtime executable, dynamically linked.

I just named some of many of such dependencies,

You can have conflicts on all of these.

Unless of course agda is a statically linked binary,

but it's [not](https://github.com/agda/agda/blob/master/src/full/Agda/Compiler/MAlonzo/Compiler.hs#L208)

cabal will not really help you with this because it's not designed to pin a software system.

cabal is a tool for developers to build haskell software.

It assumes you know how to resolve version conflicts and puts all of

the burden on the user.

For example there is no way for cabal to express a version of libc to be used,

or a version for emacs.

If you're speaking in terms of decades, I wouldn't consider the emacs

elisp api that stable

(which is why the melpa maintainer insisted on adding emacs versions).

On wayland for example the [elisp api changes](https://lwn.net/Articles/843896/).

nix, docker and vagrant can pin software in such a way that this isn't an issue.

I'd be happy to help you setup a nix shell for a book if you're writing

that.

NixOS simply absorbs the entire melpa package archive,

*and* the hackage archive to provide a consistent world state.

Removing the agda-mode package from melpa would break that.

But it would also not really protect users from version

conflicts because some of the many native dependencies may

become incompatible.

Besides, most emacs users are sort of aware of potential

version mismatches when using melpa,

which is why this particular issue was opened up in the first place

(why would you otherwise check?).

Trying to protect this class of users by making it more

difficult to install agda-mode seems ineffective.

I didn't mean to write an essay,

but let me know if I missed some concerns. 🙂

username_1: > I suggest you use a dedicated tool for software pinning,

> like nix, docker or vagrant.

The tool we use is `cabal` and it installs both `agda` and `agda-mode`.

I don't know of anyone advising users to install `agda-mode` from melpa

instead of using the one provided by the install.

username_4: When you write "`agda-mode`" above, do you mean the Emacs Agda mode or the Haskell program called `agda-mode`? I assume that MELPA is only used to install the Emacs Agda mode, and not the Haskell program.

Is it not possible to have several (released) versions of the Agda Emacs mode in MELPA?

username_6: I'd be happy to learn about a convention that names the branch for the latest release. Usually `master` isn't the latest release, but the _head_, the latest development version. At least in the Haskell universe, this has been commonly adopted practice.

The difference is only when to bump the version:

1. Some leave the version number on `master` the same as the latest release until the next release. This does not reflect reality and is confusing for all that install from `master`: The version number promises a feature set described in the notes of the latest release, but the feature set has actually moved on.

2. Thus, we bump the version number on `master` immediately after the release. This reflects the reality that the version on `master` might differ in its feature set from the released version.

Whenever I encountered a project doing 1., the developers never had strong arguments for their practice and were easily convinced to switch to style 2.

I acknowledge that there is no convention to name the branch with the latest release. What often works is to look for the `vN.M.L` tags and pick the one with the highest version number `N.M.L`.
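
As a rough sketch of that tag-picking rule (Python, purely illustrative): the helper name `latest_release_tag` and the sample tag list are assumptions, not anything from the Agda or MELPA tooling, and the pattern only accepts the three-component `vN.M.L` shape mentioned above. Versions are compared numerically, so `v2.6.10` sorts above `v2.6.2`.

```python
import re

# Matches exactly the vN.M.L shape; anything else (nightly, rc tags) is ignored.
TAG_PATTERN = re.compile(r"^v(\d+)\.(\d+)\.(\d+)$")


def latest_release_tag(tags):
    """Return the vN.M.L tag with the highest version, or None if none match."""
    candidates = []
    for tag in tags:
        match = TAG_PATTERN.match(tag)
        if match:
            # Compare as integers, not strings, so 10 > 2.
            version = tuple(int(part) for part in match.groups())
            candidates.append((version, tag))
    if not candidates:
        return None
    return max(candidates)[1]


# Hypothetical tag list, for illustration only.
print(latest_release_tag(["v2.6.1", "nightly", "v2.6.10", "v2.6.2"]))
```

A recipe that wanted "latest stable" rather than `master` could apply the same rule to the output of `git tag`.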

username_6: I am not familiar with `nix`, so help is appreciated!

Recently @username_7 contributed a nix flake, but it does not include the `agda-mode`:

https://github.com/agda/agda/blob/78ea6d259b10d4751f96924e418dbc1c8cd8b638/flake.nix#L12

I think it would be nice if we maintained a nix-description of the environment that Agda assumes. Then you could restore it when you fetch an older version of Agda from the repo (or maybe from stackage/hackage).

username_7: For the most part, this is just the cabal file. If you want something more concrete, then assuming we test the flake build (ideally including the test suite) on CI then the combination of flake.nix + flake.lock would suffice for this. |

mvandersteen/ha-jemenaoutlook | 357891253 | Title: Error while setting up platform energyeasy

Question:

username_0: Getting the following stack trace.

My sensor data is as per your example with my username and password added. My username is an email address so I've enclosed the username with single quotes (').

I'm using this for https://energyeasy.ue.com.au/ which is using the same platform as https://electricityoutlook.jemena.com.au/

The only thing I've modified is `HOST = 'https://energyeasy.ue.com.au'`.

```

2018-09-07 11:54:44 ERROR (MainThread) [homeassistant.components.sensor] Error while setting up platform energyeasy

Traceback (most recent call last):

File "/usr/local/lib/python3.6/site-packages/homeassistant/helpers/entity_platform.py", line 128, in _async_setup_platform

SLOW_SETUP_MAX_WAIT, loop=hass.loop)

File "/usr/local/lib/python3.6/asyncio/tasks.py", line 358, in wait_for

return fut.result()

File "/usr/local/lib/python3.6/concurrent/futures/thread.py", line 56, in run

result = self.fn(*self.args, **self.kwargs)

File "/config/custom_components/sensor/energyeasy.py", line 148, in setup_platform

jemenaoutlook_data.get_data()

File "/config/custom_components/sensor/energyeasy.py", line 236, in get_data

self._fetch_data()

File "/config/custom_components/sensor/energyeasy.py", line 228, in _fetch_data

self.client.fetch_data()

File "/config/custom_components/sensor/energyeasy.py", line 502, in fetch_data

self._data.update(self._get_tariffs())

File "/config/custom_components/sensor/energyeasy.py", line 326, in _get_tariffs

"controlled_load_cost": self._strip_currency(data["controlledLoadCost"]),

KeyError: 'controlledLoadCost'

```
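
A possible workaround, sketched under the unconfirmed assumption that the energyeasy response simply omits `controlledLoadCost` for accounts without a controlled load: read the key with `dict.get` and skip the conversion when it is absent. Both function names below are hypothetical stand-ins, not the component's actual code.

```python
def strip_currency(value):
    """Hypothetical stand-in for the component's _strip_currency helper."""
    return float(str(value).replace("$", "").replace(",", ""))


def controlled_load_cost(data):
    """Return the controlled-load cost, or None when the key is missing."""
    raw = data.get("controlledLoadCost")  # no KeyError if the key is absent
    return None if raw is None else strip_currency(raw)


print(controlled_load_cost({}))
print(controlled_load_cost({"controlledLoadCost": "$0.15"}))
```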

Answers:

username_1: How did you solve this? I am currently facing a similar problem |

fhstp-mfg/mobilot | 169108851 | Title: Reposition StationComponent info button

Question:

username_0: We should reposition the StationComponent info button, since it takes up quite some space and the WYSIWYG editor, for example, doesn't fit anymore.

Any volunteers? 😄

Screenshot taken on iPhone 5s:

Answers:

username_1: I'll do it!

username_0: @username_1 thank you 😃

Status: Issue closed

username_1: Done! but we should redesign the editor anyway! |

ropensci/iheatmapr | 395793228 | Title: add_row_dendro details

Question:

username_0: Hello, can we color the dendrogram just like those in these pictures?

<img width="1005" alt="skaermbillede-2017-09-22-kl -11 10 06" src="https://user-images.githubusercontent.com/24263046/50671699-77fa7500-100e-11e9-851d-b6332f6c8b1c.png">

Answers:

username_1: Nope not at the moment! I suspect the first style of coloring would be easier to achieve. I think this would be a good capability to add, but am unlikely to do that myself anytime soon (but would be happy to accept PR or give guidance to someone wanting to try implementing). |

klarna/klarna-mobile-sdk | 760614341 | Title: LICENSE file is missing but still specified in podspec

Question:

username_0: **Describe the bug**

My company uses the `cocoapods-acknowledgements` plugin. After pod update to 2.0.30, I see this warning:

```

[!] Unable to read the license file `LICENSE` for the spec `KlarnaMobileSDK (2.0.30)`

```

The podspec still specifies a LICENSE file, but the file appears to have been deleted sometime after 2.0.28.

**To Reproduce**

Steps to reproduce the behavior:

1. `pod update KlarnaMobileSDK`

**Expected behavior**

LICENSE file should be available. Or `license` section in podspec should be removed or fixed.

Answers:

username_1: Hi @username_0. Thanks for reaching out about this warning. We have already uploaded the LICENSE file again; sorry for any inconvenience. Please try again and let us know how it goes.

Steps to update the pod:

- pod deintegrate

- pod cache clean --all

- pod install --repo-update

Status: Issue closed

username_0: Worked! |

pytorch/glow | 500189463 | Title: Can anyone provide complete detailed information of installing Glow in Ubuntu 16.0.4

Question:

username_0: Please provide installation guide for Glow

Ubuntu 16.0.4

I have installed LLVM 8.0.1 and Clang 8.0.1, but I am unable to configure Glow on my system. It would be very helpful to get detailed info!

Answers:

username_1: Hi @username_0, we have instructions [here](https://github.com/pytorch/glow#ubuntu), though they may be a bit out of date. If you can follow those instructions and provide more information/logs on what is causing you issues we can help try to debug. Thanks!

username_0: Hi @username_1 ,

I followed the same instructions, but the build hung my system:

/Desktop$ cd LLVM/

~/Desktop/LLVM$ cd glow/

~/Desktop/LLVM/glow$ cd build_Debug/

~/Desktop/LLVM/glow/build_Debug$ sudo ninja all

[10/107] Linking CXX executable bin/lenet-loader

At this step the system stops responding. Can you guide me through this?

Previously I got many errors like ERROR: without a user defined constructor

ninja subcommand failed.

username_1: Hm, I don't think you need sudo to run ninja. Can you try building a specific binary, for example `ninja InterpreterOperatorTest`, and then post the build log?

username_0: I finally installed Glow. Can you tell me how to run programs using Glow?

username_1: Great! We have a wiki section on how to run things [here](https://github.com/pytorch/glow#testing-and-running). Going to close this issue as it has been resolved.

Status: Issue closed

|

staudt/Genius | 177609712 | Title: Error found

Question:

username_0: I do not press a button when the game starts, but it thinks I do. Help, please! On the home menu it's fine.

Answers:

username_1: Are you using ports 1 or 2? If so, in many Arduinos they are also used for the internal LED, which can send extra signals, triggering button presses. Try ONLY using ports 3 and higher for both digital and analog. Let me know if that works ;)

username_0: I'm using ports 9, 10 and 11. I am using a smaller chip than an Arduino Uno, so I'm thinking that there is not enough memory. Also, I found a simpler version and that worked. :)

username_2: Hi @username_0, if you are using a protoboard like the one in the image, try joining the negative rails of the two boards.

Status: Issue closed

|

abarnes26/quantified-self-express-backend | 308384060 | Title: Create Endpoint (get all foods for a meal)

Question:

username_0: a get request to "/meals/:meal_id/foods" should return a list of all foods associated with that meal

Status: Issue closed


|

schulz3000/deepstreamNet | 258213069 | Title: Set an array as a new record

Question:

username_0: Hi,

looks like you can not set an array as a record. The DeepStreamRecordBase must be a JContainer. Should it be a JToken so it can support arrays ? Alternatively throw an exception if you try and set an array.

When you try and set an array this line does not merge anything

Data.Merge(item, jsonMergeSettings);

Here is a test

```

[Test]
public async Task TestSetArray()
{
    var name = Guid.NewGuid().ToString();
    var key = @"my/testrecord/" + name;

    // A bare array (not an object) as the record payload
    var obj = new JArray();
    obj.Add("hello");

    using (var client1 = await GetConnection("chris", "test"))
    {
        var record1 = await client1.Records.GetRecordAsync(key);
        var res = await client1.Records.SetWithAckAsync(record1, obj);
        Assert.IsTrue(res);

        // The serialized record should start with '[' if the array was stored
        var json = JsonConvert.SerializeObject(record1);
        Assert.IsTrue(json.StartsWith("["), "Should be an array");
    }
}

``` |

kangwonlee/reposetman | 383699785 | Title: update subtrees in parallel

Question:

username_0: * now a857554 can process chapters in subtrees

* Update is currently in single process

* Process multiple users at once instead, as parallel subtree pulls may not work for multiple chapters of one participant

Status: Issue closed

Answers:

username_0: 4b91090adfc970b8f4e9acb28b3231287795d70a resolves this issue |

emar10/uweather | 378395282 | Title: Swift optional chaining

Question:

username_0: Look into how you could potentially use optional chaining to prevent having to nest `if let`s and `if <var> != nil`s

https://github.com/emar10/uweather/blob/d477062e1c38dfaf2a5a4af9c90cb2db2f405014/uWeather/WeatherModel.swift#L43 |

agehring/CxFlow-Demo | 860075043 | Title: CX Heap_Inspection @ web/Areas/Identity/Pages/Account/Manage/ManageNavPages.cs [agehring-alt]

Question:

username_0: **Heap_Inspection** issue exists @ **web/Areas/Identity/Pages/Account/Manage/ManageNavPages.cs** in branch **username_0-alt**

*Method "ChangePassword"; at line 13 of web\Areas\Identity\Pages\Account\Manage\ManageNavPages.cs defines ChangePassword, which is designated to contain user passwords. However, while plaintext passwords are later assigned to ChangePassword, this variable is never cleared from memory.*

Severity: Medium

CWE:244

[Vulnerability details and guidance](https://cwe.mitre.org/data/definitions/244.html)

[Internal Guidance](https://checkmarx.atlassian.net/wiki/spaces/AS/pages/79462432/Remediation+Guidance)

[Checkmarx](http://CXSAST-9/CxWebClient/ViewerMain.aspx?scanid=1000035&projectid=15&pathid=9)

[Training](https://cxa.codebashing.com/courses/)

[Recommended Fix](http://CXSAST-9/CxWebClient/ScanQueryDescription.aspx?queryID=3772&queryVersionCode=94864652&queryTitle=Heap_Inspection)

Lines: [13](https://github.com/username_0/CxFlow-Demo/blob/username_0-alt/web/Areas/Identity/Pages/Account/Manage/ManageNavPages.cs#L13)

---

[Code (Line #13):](https://github.com/username_0/CxFlow-Demo/blob/username_0-alt/web/Areas/Identity/Pages/Account/Manage/ManageNavPages.cs#L13)

```

public static string ChangePassword => "<PASSWORD>";

```

---

Status: Issue closed |

maciejtarmas/AlleBlock | 261870570 | Title: Loan info on OLX

Question:

username_0: e.g. here: https://www.olx.pl/oferta/dzialka-inwestycyjna-gospodarstwo-CID3-IDoEAhU.html#f56ce9749e

Status: Issue closed

Answers:

username_1: Thanks, removed :) |

kevin-brown/blog.kevin-brown.com | 185128531 | Title: What is the licence of code snippets on your blog?

Question:

username_0: Hello!

What is license of code snippets on your blog? Could it be MIT or GPL3? :-)

Thank you for answers!

Answers:

username_1: Which snippets specifically are you looking for the license for?

I'll go back through the posts I've done and determine what the license needs to be. And then I'll add a note in the footer about licensing.

username_0: Hello,

right now I'm very interested in https://blog.username_1.com/programming/2014/09/24/combining-autotools-and-setuptools.html . I would like to use it in a GPLv3 project called [FreeIPA](http://www.freeipa.org/).

Thank you for considering this!

Status: Issue closed

|

pondokit/deJacetre | 423557428 | Title: ENV Example Missing

Question:

username_0: When pushing to GitHub, committing .env is indeed discouraged because it contains data; .env.example, on the other hand, can be pushed because it holds no data.

Anyone using the project for the first time is bound to be confused, because the downloaded source has no .env.example |

log2timeline/plaso | 436236406 | Title: Crash in image_export.py with missing file_entry

Question:

username_0: Traceback, from an older version of Plaso:

```

Traceback (most recent call last):

File "/usr/bin/image_export.py", line 57, in <module>

if not Main():

File "/usr/bin/image_export.py", line 36, in Main

tool.ProcessSources()

File "/usr/lib/python2.7/dist-packages/plaso/cli/image_export_tool.py", line 770, in ProcessSources

skip_duplicates=self._skip_duplicates)

File "/usr/lib/python2.7/dist-packages/plaso/cli/image_export_tool.py", line 338, in _ExtractWithFilter

skip_duplicates=skip_duplicates)

File "/usr/lib/python2.7/dist-packages/plaso/cli/image_export_tool.py", line 279, in _ExtractFileEntry

for data_stream in file_entry.data_streams:

AttributeError: 'NoneType' object has no attribute 'data_streams'

```

This looks to be because OpenFileEntry in dfvfs can return None.

Answers:

username_0: Also an issue in https://github.com/log2timeline/plaso/blob/master/plaso/filters/file_entry.py#L398 as we check for False in the results, and not for None.
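
A minimal sketch of the kind of guard this implies, assuming only what the traceback shows: the opened file entry may be `None`, and a real entry exposes a `data_streams` attribute. The function name `iter_data_streams` is hypothetical and this is not the actual plaso fix.

```python
def iter_data_streams(file_entry):
    """Yield the data streams of a file entry, or nothing if it is None."""
    if file_entry is None:
        # For example a broken symbolic link: there is nothing to extract.
        return
    yield from file_entry.data_streams


print(list(iter_data_streams(None)))
```

The same idea applies to the filter check: compare the result against `None` explicitly rather than only against `False`.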

username_0: Ran into another crash while trying to debug this:

```

Traceback (most recent call last):

File "./tools/image_export.py", line 57, in <module>

if not Main():

File "./tools/image_export.py", line 36, in Main

tool.ProcessSources()

File "/usr/lib/python2.7/dist-packages/plaso/cli/image_export_tool.py", line 791, in ProcessSources

skip_duplicates=self._skip_duplicates)

File "/usr/lib/python2.7/dist-packages/plaso/cli/image_export_tool.py", line 350, in _ExtractWithFilter

extraction_engine.knowledge_base, artifact_filters, filter_file)

File "/usr/lib/python2.7/dist-packages/plaso/engine/engine.py", line 328, in BuildFilterFindSpecs

artifact_filter_names, environment_variables=environment_variables)

File "/usr/lib/python2.7/dist-packages/plaso/engine/artifact_filters.py", line 97, in BuildFindSpecs

definition, environment_variables)

File "/usr/lib/python2.7/dist-packages/plaso/engine/artifact_filters.py", line 129, in _BuildFindSpecsFromArtifact

self._knowledge_base.user_accounts)

File "/usr/lib/python2.7/dist-packages/plaso/engine/artifact_filters.py", line 218, in _BuildFindSpecsFromFileSourcePath

path_glob, path_separator, user_accounts):

File "/usr/lib/python2.7/dist-packages/plaso/engine/path_helper.py", line 232, in ExpandUsersVariablePath

path_segments, path_separator, user_accounts)

File "/usr/lib/python2.7/dist-packages/plaso/engine/path_helper.py", line 99, in _ExpandUsersVariablePathSegments

path_segments, path_separator, user_accounts)

File "/usr/lib/python2.7/dist-packages/plaso/engine/path_helper.py", line 64, in _ExpandUsersHomeDirectoryPathSegments

if cls._IsWindowsDrivePathSegment(user_path_segments[0]):

IndexError: list index out of range

```

username_0: It turns out the file causing the original crash is a broken symbolic link, so this was probably triggered by the behavior changed in https://github.com/log2timeline/dfvfs/issues/388

username_1: Changes merged, closing issue.

Status: Issue closed

|

hasura/graphql-engine | 1016567074 | Title: Increase in Hasura idle connections kill database

Question:

username_0: ### Version Information

Server Version: 2.0.7

### Environment

Docker

### What is the expected behaviour?

Do not create 15+ connections if we don’t need them.

### What is the current behaviour?

Hasura is creating too many connections and is crashing the database.

### How to reproduce the issue?

I’m not sure

<img width="1366" alt="PNG image" src="https://user-images.githubusercontent.com/52385564/136070008-ef199916-2c50-4477-a6e0-008016afdad7.png">

Answers:

username_0: Update: When you do a BIG query, the query works. But it’s after the fact that makes it fail and blow up.

username_1: The number of connections you see may be because of the connection pool idle timeout. Hasura has a default idle timeout of 180s which means that after a connection is created, it will hold the connection for at max 180s so that it may be reused by another query. You can reduce it if you want by editing the source configuration.

The database unresponsiveness may be due to some heavy query. What is the CPU/mem of your Postgres db?

username_2: @username_0 I am currently facing the exact same issue. Database sessions (opened from the Hasura IP) increase until a complete freeze. Have you made any progress identifying the root cause of this issue? Any "patch" like what @username_1 suggests?

username_1: @username_2 Database sessions usually increase in the following situations:

1. Load increases on Hasura because of requests (this will spawn new connections limited by the connection pool setting per instance, on Cloud there can be auto-scaling as well). This can also be because of a burst in event trigger deliveries.

2. Some query is taking up lots of locks and blocking other queries (this is usually the case when some long running query or DDL is performed)

3. It's normal to see many IDLE sessions after a burst of traffic because of connection idle timeout (for reuse of sessions).

The best way to diagnose the root cause is to see the activity on the database during this burst. Do you have any info on this?

username_2: @username_1 thanks, it was indeed right after a large number of events were triggered. However, is there any way we can prevent this from happening ? I set the idle timeout to 60 seconds, though I'm not sure this will fix the issue ? Our app was unusable after this, because database was unreachable. |

slms4redd/portal | 97498707 | Title: Build error

Question:

username_0: Skipping the Maven tests during install (so as not to run into issue 2) gets you a little further, to the following mvn error:

-----------------------------------------------------

[INFO] ------------------------------------------------------------------------

[ERROR] BUILD ERROR

[INFO] ------------------------------------------------------------------------

[INFO] Internal error in the plugin manager executing goal 'ro.isdc.wro4j:wro4j-maven-plugin:1.7.6:run': Unable to load the mojo 'ro.isdc.wro4j:wro4j-maven-plugin:1.7.6:run' in the plugin 'ro.isdc.wro4j:wro4j-maven-plugin'. A required class is missing: org/codehaus/plexus/util/Scanner

org.codehaus.plexus.util.Scanner

[INFO] ------------------------------------------------------------------------

Answers:

username_0: The cause is the version of Maven in use: at least Maven 3.0 is required. For further information please refer to: http://stackoverflow.com/questions/13181322/wro4j-maven-plugin-required-class-is-missing

Status: Issue closed

|

goatfungus/NMSSaveEditor | 1074427873 | Title: After opening save my object count for bases is minimal

Question:

username_0: After I merely opened my NMS save file with the save editor, this happened on every slot: I can make a base, but I can place only about 45 objects before I can't place any more, whereas before the limit was 3000 objects. So the save editor basically broke my game for now; hopefully you guys can fix it so I can fix my save. I actually only copied slot 1, edited it and saved it as slot 2. I'm not sure why just reading the slot broke it, but it did. |

ably/ably-java | 137648765 | Title: Library doesn't seem to serialise Map objects properly

Question:

username_0: I'm using the following code to send a presence update:

Map<String,Boolean> payload = new HashMap<>();

payload.put("isTyping",false);

try {

sessionChannel.presence.update(payload,null);

} catch (AblyException e) {

e.printStackTrace();

}

This translates to the following MsgPack hex:

`89A6616374696F6E0EA26964AD4D3754536F6A4A5A35532D3331A5636F756E7415A76368616E6E656CAB6D6F62696C653A63686174AD6368616E6E656C53657269616CB0396335333838303037353736313A3733AC636F6E6E656374696F6E4964AA4D3754536F6A4A5A3553B0636F6E6E656374696F6E53657269616C2CA974696D657374616D70CF000001533354B1D2A870726573656E63659184A8636C69656E744964A573616E6479AC636F6E6E656374696F6E4964AA4D3754536F6A4A5A3553A6616374696F6E04A464617461B07B6973547970696E673D66616C73657D`

Which translates to:

`{"action":14,"id":"M7TSojJZ5S-31","count":21,"channel":"mobile:chat","channelSerial":"9c53880075761:73","connectionId":"M7TSojJZ5S","connectionSerial":44,"timestamp":null,"presence":[{"clientId":"sandy","connectionId":"M7TSojJZ5S","action":4,"data":"{isTyping=false}"}]}`.

However, when ably.js does a similar thing (I'm testing via the Heroku app), the MsgPack hex is:

`89A6616374696F6E0EA26964AD6E554158777A664E33522D3232A5636F756E7412A76368616E6E656CAB6D6F62696C653A63686174AD6368616E6E656C53657269616CB0396335333838303037353736313A3737AC636F6E6E656374696F6E4964AA6E554158777A664E3352B0636F6E6E656374696F6E53657269616C30A974696D657374616D70CF000001533359AAE1A870726573656E63659185A8636C69656E744964A27363AC636F6E6E656374696F6E4964AA6E554158777A664E3352A6616374696F6E04A8656E636F64696E67A46A736F6EA464617461B27B226973547970696E67223A66616C73657D`

Which translates to:

`{"action":14,"id":"nUAXwzfN3R-22","count":18,"channel":"mobile:chat","channelSerial":"9c53880075761:77","connectionId":"nUAXwzfN3R","connectionSerial":48,"timestamp":null,"presence":[{"clientId":"sc","connectionId":"nUAXwzfN3R","action":4,"encoding":"json","data":"{\"isTyping\":false}"}]}`

The Java lib doesn't seem to create a valid JSON (IIRC JSON doesn't contain equals signs), that the JS lib can successfully parse. I set breakpoints in the JS presence handler and they do not trigger.

As a consequence of these differences, the Cordova sample app does not recognise presence messages sent from the Android sample app.

The inverse is true - the Android lib successfully parses and displays "isTyping" presence updates coming from JS.

@username_1 @username_2 @username_4

Answers:

username_1: Thanks @username_0 we'll get Gokhan to look at this shortly.

@username_3 this reconfirms our fear that some libraries are not as interoperable as we expect. The related issue is https://github.com/ably/wiki/issues/22, we should consider prioritising this.

@username_4 in the mean time, can you try and figure out why the Hashmap is being encoded as `{isTyping=false}` please and provide a fix?

username_2: Yes, but this case is simply a deviation from the spec, not a difference in encoding that we would only detect by testing different libraries back-to-back.

username_3: So I'm very far from being a java dev, but gson can apparently serialise a HashMap into json [just fine](http://stackoverflow.com/a/12156646). Why not just call `gson.toJson` on anything we get that isn't a `byte[]` or a `String`, wrap it in a try-catch, and let gson throw an exception if it's fed something it can't serialize?

username_2: That's possible but that same approach doesn't work when deserialising - we would have to pick something canonical to deserialise to, and the end result would not be the same as the original value.

I think it is more useful on the decode side to allow clients to define their own Gson-serialisable types, and have a way for them to decode specifically to that type, instead of to a generic collection.

username_0: So I tried using a JSONObject like this:

JSONObject payload = new JSONObject();

payload.put("isTyping", false);

That generates a proper JSON as the data: `{"action":14,"id":"zu4ekVHDa6-6","count":21,"channel":"mobile:chat","channelSerial":"e478831912432:13","connectionId":"zu4ekVHDa6","connectionSerial":11,"timestamp":null,"presence":[{"clientId":"sa1","connectionId":"zu4ekVHDa6","action":4,"data":"{\"isTyping\":false}"}]}`

However, my JS presence handler sees the data as a string, instead of an object :/

I noticed that the JS lib sends an additional `"encoding":"json"`member in the presence message, but it's not present in the message that the Java lib sends.

Is this behavior OK?

username_4: @username_0 [this](https://github.com/ably/ably-java/blob/bcde67a28a3b9a5547ac1763e78fa7c54ff88981/lib/src/main/java/io/ably/lib/types/BaseMessage.java#L218) is the root of your problem. We are packing Java `Object.toString()` representation to data field, when we have a subclass of java object. As @username_2 pointed out, we are only properly serialising JSONObject instances to JSON serialised String. For now, using JSONObject is the safest way to use with our library.

@username_2, would you like me to update public method signature to accept only JSONObject as a data argument?

username_2: No.

The library supports raw `data` - which is either a `String` or `byte[]`. This is simply passed unchanged into the structure that gets packed.

The library also supports JSON-encoding of complex types, if it recognises that they can be JSON-encoded. The current implementation requires that these are instances of `JsonElement`, meaning that they are encodable using the gson library: then it encodes the value to the JSON text and also sets the `encoding` member of the message to indicate that it is JSON (and must therefore be decoded by the client).

In the current implementation, if the `data` is not either a `String` or `byte[]` or `JSONElement` then it is coerced to a `String` (in the line linked above) and sent **as a String** to other clients. The `encoding` member is not set, and so it is not decoded by any recipient, and so it is delivered as a `String`.

So @username_4 it is definitely not valid to limit `data` to just a `JSONObject`. The correct behaviour, as I indicated above, is not to coerce values to `String` but to reject them if they are not either a `String`, or `byte[]` or are naturally JSON-encodable.

And @username_0 the demo needs to pass an object that the library will JSON-encode explicitly, not just implicitly coerce to a `String`.
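
The rule described above can be sketched roughly as follows. This is a Python analog for illustration only, not the ably-java API: the name `encode_data` and the returned `(payload, encoding)` pair are assumptions. Strings and bytes pass through unchanged, JSON-encodable containers are serialised and flagged with `encoding = "json"`, and anything else is rejected instead of being silently coerced to a string.

```python
import json


def encode_data(data):
    """Return (payload, encoding) for a message's data, or raise TypeError."""
    if isinstance(data, (str, bytes)):
        # Raw data: passed through unchanged, no encoding flag.
        return data, None
    if isinstance(data, (dict, list)):
        # Complex type: serialise to JSON text and mark it so the
        # recipient knows to decode it back into an object.
        return json.dumps(data, separators=(",", ":")), "json"
    # Reject rather than coerce, which is what produced "{isTyping=false}".
    raise TypeError("unsupported data type: " + type(data).__name__)


print(encode_data({"isTyping": False}))
```

With the flag set, a receiving client can tell a JSON payload from a plain string that merely looks like JSON.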

username_1: @username_2 to avoid this unexpected coerced-string behaviour, I definitely vote we reject them. @username_4 can you prioritise getting that to work and issue a PR for this?

username_0: OK, I got the presence updates working.

In my last comment I was using Android's native [org.json.JSONObject](http://developer.android.com/reference/org/json/JSONObject.html) which gets coerced to string as the library checks against `com.google.gson.JsonObject`.

Once I switched over to using GSON's Json objects everything started working fine.

username_1: Ok, that's good @username_0. It would be good to prevent other developers from hitting this snag @username_4 if you can help

username_5: Just bumping this as a user just had problems with this. Maybe this should be prioritized? See ably/wiki#22.

username_2: @username_5 I'm not sure what there is to fix other than a documentation issue? I don't think the java lib should be supporting all available JSON libraries; we picked one (GSON). So if you want to pass `data` values to the library and you want it serialised as JSON, your `data` must implement the relevant interface (eg `com.google.gson.JsonObject`).

username_2: @username_5 ok, agreed

username_1: @username_5 can you help ensure that we reject invalid objects that don't match the expected type then?

username_5: @username_1 Yep, I'll give it a try.

Status: Issue closed

|

jgthms/bulma | 244796601 | Title: Navbar center?

Question:

username_0: <!-- PLEASE READ THE FOLLOWING INSTRUCTIONS -->

Is it about Bulma or about the Docs?

Yes

<!-- Is it a bug/feature/question or do you need help? -->

No

<!-- If it's a bug, is it a browser bug? -->

### Overview of the problem

<!-- UNCOMMENT THE APPROPRIATE LINES -->

This is about the Bulma **CSS framework**

This is about the Bulma **Docs**

I'm using Bulma **version** [0.4.3]

My **browser** is: Chrome

<!-- This is a **Sass** issue: I'm using version [x.x.x] -->

<!-- I am sure this issue is **not a duplicate**? -->

### Description

<!-- Description of the bug, enhancement, or question -->

### Steps to Reproduce

N/A

<!--

1. First Step

2. Second Step

3. and so on...

-->

### Expected behavior

<!-- What you expected to happen -->

### Actual behavior

<!-- What actually happened -->

My issue is that I used `nav-center` in previous versions of Bulma and loved it for logo use cases as well as content display. Is there an equivalent in the newest version of Bulma with the new navbar?

Status: Issue closed

Answers:

username_1: #839 |

commercialhaskell/stack | 97290775 | Title: docker: stack runghc should pass stdin/stdout through

Question:

username_0: ```

$ cat echo.hs

main = interact id

$ echo 'hello' | runghc echo.hs

hello

$ echo 'hello' | stack runghc echo.hs

```

If stack uses its default (non-docker) configuration, then this works as expected, with output `hello`. If stack is configured to run inside a docker container, then there is no output.

Stack version: 6adb449f1083a0c4768924d59956b46486b31be7

Answers:

username_1: This is an unfortunate case where we can't get a Docker container to behave like a normal process in all cases, and we're stuck between two non-ideal solutions when stdin/stdout/stderr are not connected to a terminal:

1) Use `docker run -i` (but not `-t`), in which case piping standard input works (also nice for running in an Emacs shell) but docker processes never exit when run in Bamboo and other Java-based tools.

2) Use `docker run` (without `-i` or `-t`), in which case Bamboo works but piping standard input doesn't.

So we ended up switching to option (2). We never did any deep investigation into why Bamboo triggers this problem, so it would be better to have a way to correct the underlying issue.
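
The two options above differ only in whether `-i` is passed to `docker run`. A rough sketch of that choice follows; it is not stack's actual code (stack is written in Haskell), and `DockerArgs`, `runArgs`, and the image name used with it are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

class DockerArgs {
    /**
     * Builds a `docker run` argument list.
     * interactive=true adds -i (keep stdin open); tty=true adds -t (allocate a pseudo-TTY).
     */
    static List<String> runArgs(boolean interactive, boolean tty,
                                String image, List<String> command) {
        List<String> args = new ArrayList<>();
        args.add("docker");
        args.add("run");
        if (interactive) {
            args.add("-i"); // option (1): piping stdin works
        }
        if (tty) {
            args.add("-t");
        }
        args.add(image);
        args.addAll(command);
        return args;
    }
}
```

Option (1) corresponds to `runArgs(true, false, ...)` and option (2) to `runArgs(false, false, ...)`; exposing the flag as a setting would let users pick whichever trade-off suits their environment.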

Status: Issue closed

username_2: I'm reading this as wontfix, closing. |

cs-education/classTranscribe | 394540171 | Title: [a11y] Landmarks - Courses

Question:

username_0: Courses URL: https://classtranscribe.ncsa.illinois.edu/courses

All elements should be contained in landmarks. This supports assistive technologies in organizing a page’s content for users who can’t see/scan the page’s layout (see [WCAG 2.0 SC 2.4.1 Bypass Blocks][1]).

This can be implemented either with [HTML5][2] (`<main>`, `<nav>`, `<header>`, `<footer>`, etc.) or [ARIA][3] (`role=main`, `role=navigation`, `role=banner`, `role=contentinfo`, etc.). There should be at least a “main” landmark.

Related: #56, #59

[1]: https://www.w3.org/TR/UNDERSTANDING-WCAG20/navigation-mechanisms-skip.html

[2]: http://www.w3.org/TR/html5/sections.html

[3]: http://www.w3.org/TR/WCAG20-TECHS/ARIA11 |

vaadin/flow | 439981946 | Title: Generic DnD is broken in Firefox starting drag inside shadow dom

Question:

username_0: FF issue: https://bugzilla.mozilla.org/show_bug.cgi?id=1521471

Plan: investigate a workaround

Answers:

username_0: The workarounds did not help enough to be worth adding to Flow.

Thus the generic DnD feature has been moved to its own module `flow-dnd`, which will be released with Flow 2.0 but will not be added to the platform in 14.0.

Once the FF issue mentioned above has been fixed, we can include the `flow-dnd` module in the platform in a new minor release of 14. So if you don't need Firefox support for your Flow app using DnD, you can take the `flow-dnd` module and add it to your Vaadin 14 project.

The only workaround for FF that worked was to force the shadow DOM polyfill to be used instead of native support, by implementing `PageConfigurator` in the main layout and:

```

public void configurePage(InitialPageSettings settings) {

if (settings.getBrowser().isFirefox()) {

settings.addInlineWithContents(InitialPageSettings.Position.PREPEND,

" if (window.customElements) window.customElements.forcePolyfill = true;"

+ " ShadyDOM = { force: true };",

InitialPageSettings.WrapMode.JAVASCRIPT);

}

}

```

It should be noted that this can reduce the performance of the application severely.

Keeping this issue open until the FF bug has been fixed.

username_1: To test with latest Firefox, which contains the fix.

Status: Issue closed

|

kubeflow/manifests | 520230848 | Title: e2e presubmit needs to be updated to use kfctl from kubeflow/kfctl

Question:

username_0: The presubmit for kubeflow/manifests tries to build kfctl and deploy Kubeflow.

This is now failing because kfctl has moved to kubeflow/kfctl but the test hasn't been updated yet.

We need to update the test. We should model it on

https://github.com/kubeflow/kfctl/blob/6c3fbeaf081a481d4324ea1ac1748eb7c6edae82/prow_config.yaml#L20

We probably want to replace the test with a py_func workflow that is imported from a different repo rather than duplicating the test.

As a short-term fix, we should remove the existing e2e presubmit test.

Related to kubeflow/kfctl#7

Answers:

username_0: #660 has been merged.

Here's a presubmit indicating the E2E tests were triggered and run.

https://prow.k8s.io/view/gcs/kubernetes-jenkins/pr-logs/pull/kubeflow_manifests/675/kubeflow-manifests-presubmit/1206529100970201088/

Status: Issue closed

|

RelativeMedia/blog.relative.media | 120758927 | Title: letsencrypt simp_le needs to be updated

Question:

username_0: Notice the cd, the account_key.json and the email field. All of those need to be added to the script for it to work.

````

DOMAIN=%%DOMAIN%%;

sudo mkdir /etc/nginx/ssl/${DOMAIN};

sudo chmod 700 /etc/nginx/ssl/${DOMAIN};

cd /etc/nginx/ssl/${DOMAIN};

sudo simp_le -d ${DOMAIN}:/tmp/letsencrypt -f account_key.json -f key.pem -f cert.pem -f fullchain.pem --email "<EMAIL>" && sudo service nginx reload;

sudo chmod -R 400 /etc/nginx/ssl/${DOMAIN}/;

````

Answers:

username_1: The email is optional, and `account_key.json` is only needed if you don't cd into the ssl dir. I do know that you'd need to drop into a root shell in order to cd into `/etc/nginx/ssl/${DOMAIN}`. I might add that instead; otherwise all of those flags need the full path.

Status: Issue closed

username_0: Actually, if you check, none of them accepts a full path anymore. They only work with relative paths now.

I spent about an hour trying to get it working with the full path, and in the end I gave up and used relative paths, as it expects certain params.

username_1: Yeah, good point. I opted to drop into a root shell to run the commands, which should be sufficient. I updated the post. |

rlwhitcomb/utilities | 1122380953 | Title: Calc `===` operator is basically unusable with numbers

Question:

username_0: With recent changes to make numbers BigInteger as much as possible, it seems that code like:

`loop over 0..4 { if $_ === 0 ... }`

never works because somehow the loop variables are never EXACTLY equal to the constants.
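
The thread does not say why the comparison fails. One plausible cause, assumed here purely for illustration, is that a strict equality check also compares runtime types, so a `BigInteger` loop variable never matches a constant stored as a different numeric type. The sketch below is not Calc's implementation; `StrictEquals`, `strictEquals`, and `numericEquals` are hypothetical names.

```java
import java.math.BigDecimal;
import java.math.BigInteger;

class StrictEquals {
    /** Strict comparison: same runtime class AND equal value. */
    static boolean strictEquals(Object a, Object b) {
        return a != null && b != null
                && a.getClass() == b.getClass()
                && a.equals(b);
    }

    /** Value-only comparison: normalizes both numbers to BigDecimal first. */
    static boolean numericEquals(Number a, Number b) {
        BigDecimal left = new BigDecimal(a.toString());
        BigDecimal right = new BigDecimal(b.toString());
        return left.compareTo(right) == 0;
    }
}
```

Under this assumption, `strictEquals(BigInteger.ZERO, Integer.valueOf(0))` is false even though both represent zero, while `numericEquals` treats them as equal. Normalizing numeric constants to the same type as the loop variable before a strict comparison would avoid the mismatch.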

Status: Issue closed |

ionic-team/ionic-framework | 675724489 | Title: feat: root config for each component to target platform

Question:

username_0: <!-- Before submitting an issue, please consult our docs (https://ionicframework.com/docs/). -->

<!-- Please make sure you are posting an issue pertaining to the Ionic Framework. If you are having an issue with the Ionic Appflow services (Ionic View, Ionic Deploy, etc.) please consult the Ionic Appflow support portal (https://ionic.zendesk.com/hc/en-us) -->

<!-- Please do not submit support requests or "How to" questions here. Instead, please use one of these channels: https://forum.ionicframework.com/ or http://ionicworldwide.herokuapp.com/ -->

<!-- ISSUES MISSING IMPORTANT INFORMATION MAY BE CLOSED WITHOUT INVESTIGATION. -->

# Feature Request

**Ionic version:**

<!-- (For Ionic 1.x issues, please use https://github.com/ionic-team/ionic-v1) -->

<!-- (For Ionic 2.x & 3.x issues, please use https://github.com/ionic-team/ionic-v3) -->

[x] **5.x**

**Describe the Feature Request**

<!-- A clear and concise description of what the feature request is. Please include if your feature request is related to a problem. -->

I would like to change mode for each component through config.

ex

```

IonicModule.forRoot({

IonToolBarMode: 'ios',

IonSearchBarMode: 'ios',

IonBackButtonMode: 'ios'

})

```

**Describe Preferred Solution**

<!-- A clear and concise description of what you want to happen. -->

option1.

```

IonicModule.forRoot({

IonToolBarMode: 'ios',

IonSearchBarMode: 'ios',

IonBackButtonMode: 'ios'

})

```

option2.

```

IonicModule.forRoot({

iosModeComponent: ['IonToolBarMode', 'IonSearchBarMode'],

mdModeComponent: ['IonBackButtonMode']

})

```

**Describe Alternatives**

<!-- A clear and concise description of any alternative solutions or features you've considered. -->

**Related Code**

<!-- If you are able to illustrate the feature request with an example, please provide a sample application via an online code collaborator such as [StackBlitz](https://stackblitz.com), or [GitHub](https://github.com). -->

**Additional Context**

<!-- List any other information that is relevant to your issue. Stack traces, related issues, suggestions on how to add, use case, Stack Overflow links, forum links, screenshots, OS if applicable, etc. -->

Answers:

username_1: Thanks for the feature request. I am going to close this as per-platform config is already possible in Ionic Framework. Please see: https://ionicframework.com/docs/angular/config#per-platform-config

Status: Issue closed

username_0: The config option does not have an ionToolbar option to set its mode to `ios` for all platforms.

username_1: Can you please describe your use case for this feature? Typically we recommend setting the config globally in this instance.

username_0: In my application, I want to use mode="ios" for all toolbars. It is almost done, but I hate having to go to each page and component to add mode="ios" on ion-toolbar, or basically any ion component.

Why am I using mode="ios"? With it, my ion-title can be centered even if I add more icons at the start and end, which is not possible in the MD spec. |

open-mpi/mtt | 229187791 | Title: default_check_profile.ini BAT test checks for hardcoded kernel version

Question:

username_0: While testing out the Dockerized Jenkins console, the default_check_profile.ini test was failing due to checking for the wrong kernel version, which was hardcoded into the INI file.

The fix is to parameterize the kernel version within the INI and within the console, and to require users to change this parameter to match their machine.
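
The idea of replacing a hardcoded kernel version with a user-supplied parameter can be sketched as follows. This is a language-neutral illustration written in Java, not MTT's code; `KernelCheck`, `kernelMatches`, and the `expected_kernel_version` key are hypothetical.

```java
import java.util.Properties;

class KernelCheck {
    /** Hypothetical config key that users must set to match their machine. */
    static final String KEY = "expected_kernel_version";

    /**
     * Compares the configured kernel version with the one actually reported.
     * Fails fast when the parameter is missing instead of falling back to a hardcoded value.
     */
    static boolean kernelMatches(Properties config, String actualKernel) {
        String expected = config.getProperty(KEY);
        if (expected == null || expected.trim().isEmpty()) {
            throw new IllegalStateException("Set " + KEY + " to match this machine");
        }
        return expected.trim().equals(actualKernel.trim());
    }
}
```

On a JVM, the actual value could come from `System.getProperty("os.version")`; in a shell-based test it would more likely come from `uname -r`.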

Status: Issue closed |

orlp/slotmap | 381895339 | Title: Make hash function of SparseSecondaryMap configurable

Question: