repo_name

stringlengths 4

136

| issue_id

stringlengths 5

10

| text

stringlengths 37

4.84M

|

|---|---|---|

crewjam/saml | 665486839 | Title: IdP wants to know whether crewjam/saml can handle this...I have no clue.

Question:

username_0: I used the SAML package to provide authentication to my app. One of the IdPs asked me this question: "One issue I noticed in the metadata is that it sets WantAssertionsSigned="true"; our default, and the recommended best practice, is for the IdP to sign the entire response, which makes signing the enclosed assertion redundant. Do you know if the SP software can properly deal with that, i.e. requiring the response to be properly signed, instead of the assertion? Our IdP will sign both the response and the assertion if so requested, but a requirement to sign the assertion suggests a possible flaw in the software."

Does anyone know the answer?

Many thanks.

Status: Issue closed

Answers:

username_1: Yes, either should work. |

strapi/strapi | 390686661 | Title: Relation tables not created when multi many-to-many relations

Question:

username_0: <!-- ⚠️ If you do not respect this template your issue will be closed. -->

<!-- =============================================================================== -->

<!-- ⚠️ If you are not using the current Strapi release, you will be asked to update. -->

<!-- Please see the wiki for guides on upgrading to the latest release. -->

<!-- =============================================================================== -->

<!-- ⚠️ Make sure to browse the opened and closed issues before submitting your issue. -->

<!-- ⚠️ Before writing your issue make sure you are using:-->

<!-- Node 10.x.x -->

<!-- npm 6.x.x -->

<!-- The latest version of Strapi. -->

**Information**

- **Node.js version**: 11.4.0<!-- Please ensure you are using the Node LTS version (v10) -->

- **NPM version**: 6.4.1

- **Strapi version**: 3.0.0-alpha.16<!-- Please make sure you are on the latest version -->

- **Database**: Postgres

- **Operating system**: MacOS

**What is the current behavior?**

When I create two many-to-many relations on a Content Type, only the first one is created in the Postgres database.

**Steps to reproduce the problem**

- Create two Content Types (`post` and `tags` for example)

- Create a post

- Create a many-to-many relation between posts and tags

- Create a many-to-many relation between post and users

- Edit the post

```

{ error: relation "pages_posts__posts_pages" does not exist

at Connection.parseE (/Users/username_0/Desktop/blog/node_modules/pg/lib/connection.js:554:11)

at Connection.parseMessage (/Users/username_0/Desktop/blog/node_modules/pg/lib/connection.js:379:19)

at Socket.<anonymous> (/Users/username_0/Desktop/blog/node_modules/pg/lib/connection.js:119:22)

at Socket.emit (events.js:189:13)

at Socket.EventEmitter.emit (domain.js:441:20)

at addChunk (_stream_readable.js:288:12)

at readableAddChunk (_stream_readable.js:269:11)

at Socket.Readable.push (_stream_readable.js:224:10)

at TCP.onStreamRead [as onread] (internal/stream_base_commons.js:145:17)

From previous event:

at Client_PG._query (/Users/username_0/Desktop/blog/node_modules/knex/lib/dialects/postgres/index.js:240:12)

at Client_PG.query (/Users/username_0/Desktop/blog/node_modules/knex/lib/client.js:192:17)

at Runner.<anonymous> (/Users/username_0/Desktop/blog/node_modules/knex/lib/runner.js:138:36)

From previous event:

at /Users/username_0/Desktop/blog/node_modules/knex/lib/runner.js:47:21

From previous event:

at Runner.run (/Users/username_0/Desktop/blog/node_modules/knex/lib/runner.js:33:30)

at Builder.Target.then (/Users/username_0/Desktop/blog/node_modules/knex/lib/interface.js:23:43)

at processImmediate (timers.js:632:19)

at process.topLevelDomainCallback (domain.js:120:23)

name: 'error',

length: 124,

severity: 'ERROR',

code: '42P01',

[Truncated]

position: '158',

internalPosition: undefined,

internalQuery: undefined,

where: undefined,

schema: undefined,

table: undefined,

column: undefined,

dataType: undefined,

constraint: undefined,

file: 'parse_relation.c',

line: '1180',

routine: 'parserOpenTable' }

```

**What is the expected behavior?**

The relation table should be created.

**Suggested solutions**

If you move the second relation up in `Page.settings.json`, it will be created.

Answers:

username_1: Is there any news here?

username_2: Same problem here, it's really blocking us.

Any progress on this @username_5 ?

username_3: Same problem here :

https://github.com/strapi/strapi/issues/2185#issuecomment-464808490

Also the pages related are not saved as they should be on a MySQL DB. Everything is fine on MongoDB.

Any news about this ?

username_4: seeing the same issue here.. any updates?

Status: Issue closed

username_5: It has been fixed in the beta version

username_6: When will this be released?

Is there a known temporary resolution? I've taken a look at the commit in the Beta branch for this and it looks like a significant change.

Thanks

username_7: @username_6 within this month if everything goes to plan. And yes the beta is a huge change. |

sendgrid/docs | 877516377 | Title: Domain authentication steps for GODADDY are incorrect

Question:

username_0: Sender Authentication -> Domain Authentication

Instructions specific to GoDaddy DNS hosting - the instructions say that the host value should include the root domain.

For example - example.com

The instructions for godaddy.com say that the 3 CNAME HOST / Values should be

| TYPE | HOST | VALUE |

|-------|:-------|:--------|

|CNAME|em####.example.com|u11111111.wl222.sendgrid.net|

|CNAME|s1._domainkey.example.com|s1.domainkey.u11111111.wl222.sendgrid.net|

|CNAME|s2._domainkey.example.com |s2.domainkey.u22222222.wl222.sendgrid.net|

For godaddy, this is incorrect.

These instructions for godaddy should instead be:

| TYPE | HOST | VALUE |

|-------|:-------|:--------|

|CNAME|em####|u11111111.wl222.sendgrid.net|

|CNAME|s1._domainkey|s1.domainkey.u11111111.wl222.sendgrid.net|

|CNAME|s2._domainkey|s2.domainkey.u22222222.wl222.sendgrid.net|

## Supporting link

https://www.godaddy.com/community/Managing-Domains/DNS-TXT-CNAME-records-are-still-not-getting-propagate/m-p/153099
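The correction above amounts to stripping the root domain from each HOST value before entering it, because GoDaddy appends the domain on its own. A minimal sketch of that conversion follows; `toRelativeHost` is a hypothetical helper name, and returning `@` for the bare root domain is an assumption based on common DNS panel conventions, not something stated in this issue.

```js
// Hypothetical helper: converts a fully qualified host into the relative
// form expected by DNS panels that append the root domain automatically.
function toRelativeHost(host, rootDomain) {
  // Normalize: trim whitespace, drop a trailing dot, compare case-insensitively.
  const normalizedHost = host.trim().replace(/\.$/, "").toLowerCase();
  const normalizedRoot = rootDomain.trim().replace(/\.$/, "").toLowerCase();

  // Assumption: the bare root domain is written as "@".
  if (normalizedHost === normalizedRoot) return "@";

  const suffix = "." + normalizedRoot;
  // Strip the root domain only when it is really the suffix of the host.
  return normalizedHost.endsWith(suffix)
    ? normalizedHost.slice(0, -suffix.length)
    : normalizedHost;
}

console.log(toRelativeHost("s1._domainkey.example.com", "example.com")); // s1._domainkey
console.log(toRelativeHost("s2._domainkey.example.com ", "example.com")); // s2._domainkey
```

A host that is already relative, such as `s1._domainkey`, passes through unchanged, so applying the helper twice is harmless.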

Answers:

username_1: Yes, this should be made much clearer in the SendGrid docs; I lost 2 days trying to configure it. There is a notice that some providers do this, but I would have expected that, if they do, it would also be visible, showing something like s1._domainkey.example.com.example.com. That is not the case with GoDaddy. So make it clear in your docs that GoDaddy does this. |

prettier/prettier-vscode | 272924403 | Title: False negatives when saving JSON files

Question:

username_0:

This happens every time I save any JSON file.

If I disable Prettier, it stops happening.

Here's my `.prettierrc`:

```

{

"useTabs": true,

"printWidth": 80,

"singleQuote": true,

"trailingComma": "none",

"bracketSpacing": true,

"jsxBracketSameLine": false,

"parser": "babylon",

"semi": true

}

```

If it matters, I have both `.prettierrc` and `.prettierrc.json` in my repo (various people on the team use various editors, some need one or the other). They are identical.

Answers:

username_1: this seems strange to me.

I can't say which one will be loaded. @username_2 should know that.

username_0: Plugins for atom, sublime, and vscode all need to work the same. This configuration was the best way we were able to do that without having to ask every developer to modify settings after installing the plugin.

username_2: Which version of prettier are you using?

username_3: @username_2 Same problem here with a fresh installation of VSCode and prettier extension:

```

$ yarn info prettier | grep version

version: '1.8.2',

```

In my case, removing `parser` did not help. I also tried the `flow` option and got the same error. Can I help you more somehow?

username_3: Oops, `yarn info` is not the right command to find the actual installed version. It's `1.8.2` nonetheless:

```

$ yarn list --pattern prettier

yarn list v1.2.0

├─ [email protected]

├─ [email protected]

└─ [email protected]

✨ Done in 2.24s.

$ cat node_modules/prettier/package.json | grep version

"version": "1.8.2",

```

username_2: Does the name of the file you are formatting end with `.json`?

username_3: I was formatting the `package.json` file of my project. I said previously that the `parser` option was not changing anything in my case, but I realized later that I had a workspace setting that was setting the `parser` option.

But right now, I'm not able to get formatting to work anywhere, so I'm unable to reproduce for now. I'm looking into it and will report once I'm able to make it work again :)

username_3: Ok, after restarting VSCode, I was able to format again. I removed `parser` setting from both my workspace and user settings file.

Still the same problem.

username_1: @username_3 please share your settings, package.json/prettier configs

username_4: Perhaps related. With `parser: babylon` and vscode-prettier 1.6.1, I'm finding that a json file which looks like this:

```json

[

{

"id": "9147C594-02B0-4752-B569-3244EE1F7C26"

}

]

```

is prettified on save to:

```json

[

{

id: '9147C594-02B0-4752-B569-3244EE1F7C26',

}

]

```

The underlying project has `prettier: ^1.12.1` but I don't think that matters.
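One way to read the output shown in this comment: a `parser` value set globally in the config appears to be applied to every file, so a `.json` file gets parsed and printed as JavaScript. The toy model below illustrates that precedence; `resolveParser` and the extension table are hypothetical and are not Prettier's actual implementation.

```js
// Toy model only: these names are hypothetical, not Prettier internals.
const PARSER_BY_EXTENSION = { ".js": "babylon", ".jsx": "babylon", ".json": "json" };

function resolveParser(filePath, config = {}) {
  // A parser forced in the config wins for every file, whatever its extension.
  if (config.parser) return config.parser;

  const dot = filePath.lastIndexOf(".");
  const extension = dot === -1 ? "" : filePath.slice(dot);

  // Without a forced parser, fall back to inference from the extension.
  return PARSER_BY_EXTENSION[extension] || "babylon";
}

console.log(resolveParser("data.json", { parser: "babylon" })); // babylon
console.log(resolveParser("data.json", {}));                    // json
```

Under this reading, the JavaScript-style output for a JSON file follows from the forced `babylon` parser rather than from the file's contents.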

For now, adding json files to my prettierignore until I figure out the issue. |

boostorg/beast | 583400092 | Title: file_body succeeds opening bad file path

Question:

username_0: I'm running into an issue when the server receives a bogus file path like ".//v1/mapview/". file_body is able to open that path without error (see below), later on in Async Write an error code is set with message "Is a directory".

Should file_body.open check if the path is a directory?

```

// the following code snippet succeeds to open bogus ".//v1/mapview/"

beast::error_code ec;

http::file_body::value_type body;

body.open(response->data.c_str(), beast::file_mode::scan, ec);

if(ec == beast::errc::no_such_file_or_directory || !body.is_open()) {

return send(_defaultController.prepareNotFound(std::move(req), response->data));

}

// Handle an unknown error

if (ec) {

return send(_defaultController.prepareServerError(std::move(req), ec.message()));

}

```

Answers:

username_1: That's perplexing... which implementation is it using for `file`?

username_0: I've updated the code snippet with the method preparing the file response.

username_0: I should probably mention that ./v1/mapview is an existing path on the server. A URL to a nonexistent path like /v1/mapview/test is correctly handled as file not found.

username_2: Hmm, this is an interesting one.

A file could be a number of things - symlink, Unix device, etc.

Should the file body interface deal in file names (and therefore have to disambiguate) or file descriptors?

username_0: My interpretation is that the file modes described in file_base.hpp need to be considered in file_body.open(). E.g. if file mode is beast::file_mode::scan or beast::file_mode::read then file_body.open() should fail in case the file is not readable.

username_1: Beast _File_ is never intended for directories

username_0: Should it then fail to open?

username_2: @username_0 I'll have a design discussion with Vinnie about the behaviour of our object. I fear that like all seemingly simple design decisions, this one will end up more nuanced than was originally imagined.

In the meantime, are you comfortable adding a workaround in your code to `stat` the file and behave accordingly?

username_0: @username_2 Sure, that will work for me. I already have a workaround in place and was raising it as a general topic.

username_2: I've had a think about this.

The #1 concern around sending files is security. Are you sending the file you intend to send? This applies whether you are acting as client or server.

Checking the program's intent is well beyond the scope of Beast. Beast has to assume that if you offer a file-name, then the expectation is that the file exists, is of the correct type, and that you do intend to send it.

It is therefore my view that the application should already have checked that the file exists (and may be legally transmitted). I see no reason to change Beast's behaviour here.

I'll leave the issue open for a few more hours in case someone wants to argue, but I am minded to close it.

username_0: I can follow your thoughts on this generally. From an API user's perspective, though, it seems counterintuitive given what the library appears to offer:

// Attempt to open the file

beast::error_code ec;

http::file_body::value_type body;

body.open(response->data.c_str(), beast::file_mode::scan, ec);

// Handle the case where the file doesn't exist

if(ec == beast::errc::no_such_file_or_directory || !body.is_open()) {

return send(_defaultController.prepareNotFound(std::move(req), response->data));

}

I mean the above code reads like: open the file at the path, otherwise handle the case where there is no such file or directory. It doesn't seem very intuitive that this check is never done.

username_2: Can you suggest an alternative that we can implement without breaking compatibility?

username_0: Putting all the pieces together (what I as a user would assume, the design decisions, and what Vinnie said: "Beast File is never intended for directories"), I think that after a call to open(), body.is_open() should return false for directories.

Arguably, it changes the result. But in my opinion this is not an API change, but rather a corrective measure due to the expectation that file_body is by definition dealing with files only, and an attempt to open a directory should by this definition never have succeeded.

That said, I can live with any decision.

username_1: it isn't meant for directories

username_2: Are you saying that if the call fails, it should be a guarantee that is_open() should return false?

This would seem reasonable to me.

username_0: Yes. |

runelite/runelite | 1017858053 | Title: Add blighted super restore to the "Show prayer dose indicator" prayer plugin option

Question:

username_0: **Is your feature request related to a problem? Please describe.**

Currently normal prayer potions or super restores show a glow around the prayer orb whenever a prayer dose can be used without spilling anything using the option "Show prayer dose indicator" in the Prayer plugin. However the blighted super restore is not supported by this option. [...]

**Describe the solution you'd like**

Add the blighted super restore to the supported items. |

comraq/My-Tetris | 123168430 | Title: Separate frame into 2 classes

Question:

username_0: frame class should just contain the canvas handling methods along with x,y coordinates corresponding to the canvas pixels

a separate game class to handle the number of actual tetris rows, columns, and block movements

Answers:

username_0: resolved with the latest commit

Status: Issue closed

|

microsoft/reverse-proxy | 765337604 | Title: Routing with YARP

Question:

username_0: ### Describe the bug

I'm not actually sure this is a bug rather than a misunderstanding on my part. I have the default webapi template and I have set up a project with YARP. Basically I want to visit http://poxy-address/api/weatherforecast and have it proxy to http://weatherapi/weatherforecast

### To Reproduce

<!--

Create a new webapi project and a new YARP project. Add the following config to YARP

```json

"ReverseProxy": {

"Routes": [

{

"RouteId": "weatherroute",

"ClusterId": "weather1",

"Match": {

"Path": "/api/"

}

}

],

"Clusters": {

"weather1": {

"Destinations": {

"weather1/destination1": {

"Address": "http://localhost:5033"

}

}

}

}

}

```

When I try to visit http://proxy/api/weatherforecast, the reply I get is 404 Not Found.

### Further technical details

- .Net 5.0

- Linux

Status: Issue closed |

cilium/cilium | 930399916 | Title: CI: Suite-k8s-1.21.K8sFQDNTest Validate that multiple specs are working correctly

Question:

username_0: ## CI failure

```

/home/jenkins/workspace/Cilium-PR-K8s-1.21-kernel-4.9/src/github.com/cilium/cilium/test/ginkgo-ext/scopes.go:518

Can't connect to to a valid target when it should work

Expected command: kubectl exec -n default app2-58757b7dd5-nrwln -- curl --path-as-is -s -D /dev/stderr --fail --connect-timeout 5 --max-time 20 --retry 5 http://vagrant-cache.ci.cilium.io -w "time-> DNS: '%{time_namelookup}(%{remote_ip})', Connect: '%{time_connect}',Transfer '%{time_starttransfer}', total '%{time_total}'"

To succeed, but it failed:

Exitcode: 28

Err: exit status 28

Stdout:

time-> DNS: '0.000019()', Connect: '0.000000',Transfer '0.000000', total '5.002315'

Stderr:

command terminated with exit code 28

```

DNS resolution of external FQDNs is tricky to handle in tests since the failures could be transient.

[eedec955_K8sFQDNTest_Validate_that_multiple_specs_are_working_correctly.zip](https://github.com/cilium/cilium/files/6718076/eedec955_K8sFQDNTest_Validate_that_multiple_specs_are_working_correctly.zip)

Answers:

username_1: I found that the coredns pod in this environment is configured with endpoint-routes while the rest of the endpoints were not, which suggests a link to #16717 (if not the same root cause). I used this technique: https://github.com/cilium/cilium/issues/16717#issuecomment-871794749

username_2: According to [the CI dashboard](https://datastudio.google.com/s/jmn3FXS9X04), could be the same root cause as https://github.com/cilium/cilium/issues/16713#issuecomment-873293963, since it started failing more often around the same time (June 24th).

Status: Issue closed

|

grpc/grpc-go | 239353596 | Title: Possible goroutine/connection leak

Question:

username_0: Please answer these questions before submitting your issue.

### What version of gRPC are you using?

server: grpc-go 1.4.0, client: Python grpcio 1.4.0

### What version of Go are you using (`go version`)?

go1.8.3

### What operating system (Linux, Windows, …) and version?

Ubuntu 16.04

### What did you do?

Prior to grpc-go 1.3, I'd see connection leakage (they seem to be closed by the client w/o the server knowing about it), and goroutines would start leaking. Post 1.3, the keepalive functionality helped close those "abandoned" connections.

I've currently set the keepalive parameters to: MaxConnectionIdle: 1 hr, Time: 1 hr.

Oddly the rate of leakage happens faster when RPC transaction counts are low. Not sure if this is something w/ grpc on the Python side.

<img width="1036" alt="screen shot 2017-06-28 at 10 20 06 am" src="https://user-images.githubusercontent.com/124396/27669678-ef37954e-5c3c-11e7-9bfc-c0adf9ec76da.png">

<img width="353" alt="screen shot 2017-06-28 at 10 20 37 am" src="https://user-images.githubusercontent.com/124396/27669680-ef3f1bf2-5c3c-11e7-92a1-bb91ae809e65.png">

### What did you expect to see?

Fairly steady connection/goroutine count.

### What did you see instead?

Goroutine count seems to go up as RPC transactions per minute goes down.

Answers:

username_1: Hey,

Thanks for the detailed representation of the problem. However, the data seems very hard to believe. The number of goroutines seems to be decreasing proportionally to the increase in the number of RPCs. This can't be the case. Would you mind vetting the graphs further?

Also, it'd be really helpful if you could provide a reproduction of this issue.

username_0: @username_1 It's odd to me as well. My only guess so far is that the Python/C-core is cycling channel connections frequently when activity is low, causing more broken connections, which only get cleaned up by the keepalive on the server side after an hour.

username_1: That doesn't explain why the goroutines would increase during inactivity. Broken connections don't spawn new goroutines on the Go server.

Status: Issue closed

username_1: Since it's really hard to pursue this without a reliable reproduction, and since we haven't noticed anything similar, I'm going to go ahead and close this issue. Feel free to get back to us if you find something more or perhaps a reproduction. Thanks for your time and effort. |

AntonOkryb/File_Commander | 475021116 | Title: Remarks

Question:

username_0: 25 days late -12.5

You don't count the number of files or the directory size -5

Everything flickers terribly. I did show you how to do partial redrawing -2

When trying to enter a folder without access, the program crashes -2

https://github.com/AntonOkryb/File_Commander/blob/master/FileCommander/Program.cs#L22 - Main is large; it should be split into methods and even classes -1

https://github.com/AntonOkryb/File_Commander/blob/master/FileCommander/Program.cs#L45 - why do this every time? Doing it on switching is enough -1

https://github.com/AntonOkryb/File_Commander/blob/master/FileCommander/Program.cs#L62 - this is better moved into the panel itself. Let it handle its own keys -1

https://github.com/AntonOkryb/File_Commander/blob/master/FileCommander/Program.cs#L82 - the help should describe the shortcuts that are not documented

https://github.com/AntonOkryb/File_Commander/blob/master/FileCommander/Program.cs#L90 - the comment is out of place. -2 It is very confusing.

https://github.com/AntonOkryb/File_Commander/blob/master/FileCommander/CPanel.cs#L212 - you were already naming methods with a capital letter, you shouldn't have stopped -0.5

https://github.com/AntonOkryb/File_Commander/blob/master/FileCommander/CPanel.cs#L23 - properties are named with a capital letter -0.5

https://github.com/AntonOkryb/File_Commander/blob/master/FileCommander/CPanel.cs#L43 - C# has properties for things like this -1

https://github.com/AntonOkryb/File_Commander/blob/master/FileCommander/CLocalMenu.cs#L11 - you usually write access modifiers, but here you omitted them. Be consistent -0.5

https://github.com/AntonOkryb/File_Commander/blob/master/FileCommander/CCommon.cs#L110 - this can be rewritten nicely with LINQ

The project is not accepted yet. |

Sylius/Sylius | 276578131 | Title: Corrupted shipping methods

Question:

username_0: hello

I opened those entities

http://demo.sylius.org/admin/shipping-methods/16339/edit

http://demo.sylius.org/admin/shipping-methods/16337/edit

I removed "calculator" from the HTML and saved.

The result is **Server has encountered some errors**, and it's no longer possible to edit these entities.

sorry :)

Answers:

username_1: I think it just indicates that we're missing `NotBlank` validation on `ShippingMethod::$calculator` ;)

username_2: Should be solved by #9764.

username_1: Should be fixed now, thank you, @username_0 for reporting this issue :)

Status: Issue closed

|

izhangzhihao/intellij-rainbow-brackets | 501859839 | Title: Crash with nginx.conf files

Question:

username_0: ## Expected Behavior

Well, I guess the plugin shouldn't crash?

## Current Behavior

The plugin crashes every time I open a nginx.conf file. This started happening ever since I installed the nginx support plugin (https://plugins.jetbrains.com/plugin/4415-nginx-support)

Ironically, it does mark the brackets in nginx.conf files when they're both enabled, but doesn't when I disable the nginx-support plugin (it also doesn't crash in this instance).

## Possible Solution

I've disabled the nginx-support plugin.

## Code snippet for reproduce (for bugs)

```

java.lang.RuntimeException: java.lang.NoSuchMethodException: net.ishchenko.idea.nginx.annotator.NginxAnnotatingVisitor.<init>()

at com.intellij.util.ExceptionUtil.rethrow(ExceptionUtil.java:116)

at com.intellij.util.ReflectionUtil.newInstance(ReflectionUtil.java:408)

at com.intellij.util.ReflectionUtil.newInstance(ReflectionUtil.java:376)

at com.intellij.codeInsight.daemon.impl.ThreadLocalAnnotatorMap.cloneTemplates(ThreadLocalAnnotatorMap.java:46)

at com.intellij.codeInsight.daemon.impl.ThreadLocalAnnotatorMap.get(ThreadLocalAnnotatorMap.java:66)

at com.intellij.codeInsight.daemon.impl.CachedAnnotators.get(CachedAnnotators.java:31)

at com.intellij.codeInsight.daemon.impl.DefaultHighlightVisitor.runAnnotators(DefaultHighlightVisitor.java:105)

at com.intellij.codeInsight.daemon.impl.DefaultHighlightVisitor.visit(DefaultHighlightVisitor.java:86)

at com.intellij.codeInsight.daemon.impl.GeneralHighlightingPass.runVisitors(GeneralHighlightingPass.java:351)

at com.intellij.codeInsight.daemon.impl.GeneralHighlightingPass.lambda$collectHighlights$5(GeneralHighlightingPass.java:284)

at com.intellij.codeInsight.daemon.impl.GeneralHighlightingPass.analyzeByVisitors(GeneralHighlightingPass.java:311)

at com.intellij.codeInsight.daemon.impl.GeneralHighlightingPass.lambda$analyzeByVisitors$6(GeneralHighlightingPass.java:314)

at com.github.username_1.rainbow.brackets.visitor.RainbowHighlightVisitor.analyze(RainbowHighlightVisitor.kt:32)

at com.intellij.codeInsight.daemon.impl.GeneralHighlightingPass.analyzeByVisitors(GeneralHighlightingPass.java:314)

at com.intellij.codeInsight.daemon.impl.GeneralHighlightingPass.lambda$analyzeByVisitors$6(GeneralHighlightingPass.java:314)

at com.intellij.codeInsight.daemon.impl.DefaultHighlightVisitor.analyze(DefaultHighlightVisitor.java:70)

at com.intellij.codeInsight.daemon.impl.GeneralHighlightingPass.analyzeByVisitors(GeneralHighlightingPass.java:314)

at com.intellij.codeInsight.daemon.impl.GeneralHighlightingPass.collectHighlights(GeneralHighlightingPass.java:281)

at com.intellij.codeInsight.daemon.impl.GeneralHighlightingPass.collectInformationWithProgress(GeneralHighlightingPass.java:225)

at com.intellij.codeInsight.daemon.impl.ProgressableTextEditorHighlightingPass.doCollectInformation(ProgressableTextEditorHighlightingPass.java:84)

at com.intellij.codeHighlighting.TextEditorHighlightingPass.collectInformation(TextEditorHighlightingPass.java:55)

at com.intellij.codeInsight.daemon.impl.PassExecutorService$ScheduledPass.lambda$null$1(PassExecutorService.java:429)

at com.intellij.openapi.application.impl.ApplicationImpl.tryRunReadAction(ApplicationImpl.java:1106)

at com.intellij.codeInsight.daemon.impl.PassExecutorService$ScheduledPass.lambda$doRun$2(PassExecutorService.java:422)

at com.intellij.openapi.progress.impl.CoreProgressManager.registerIndicatorAndRun(CoreProgressManager.java:591)

at com.intellij.openapi.progress.impl.CoreProgressManager.executeProcessUnderProgress(CoreProgressManager.java:537)

at com.intellij.openapi.progress.impl.ProgressManagerImpl.executeProcessUnderProgress(ProgressManagerImpl.java:59)

at com.intellij.codeInsight.daemon.impl.PassExecutorService$ScheduledPass.doRun(PassExecutorService.java:421)

at com.intellij.codeInsight.daemon.impl.PassExecutorService$ScheduledPass.lambda$run$0(PassExecutorService.java:397)

at com.intellij.openapi.application.impl.ReadMostlyRWLock.executeByImpatientReader(ReadMostlyRWLock.java:164)

at com.intellij.openapi.application.impl.ApplicationImpl.executeByImpatientReader(ApplicationImpl.java:204)

at com.intellij.codeInsight.daemon.impl.PassExecutorService$ScheduledPass.run(PassExecutorService.java:395)

at com.intellij.concurrency.JobLauncherImpl$VoidForkJoinTask$1.exec(JobLauncherImpl.java:161)

at java.base/java.util.concurrent.ForkJoinTask.doExec(ForkJoinTask.java:290)

at java.base/java.util.concurrent.ForkJoinPool$WorkQueue.topLevelExec(ForkJoinPool.java:1020)

at java.base/java.util.concurrent.ForkJoinPool.scan(ForkJoinPool.java:1656)

at java.base/java.util.concurrent.ForkJoinPool.runWorker(ForkJoinPool.java:1594)

at java.base/java.util.concurrent.ForkJoinWorkerThread.run(ForkJoinWorkerThread.java:177)

```

## Your Environment

* Plugin version: 5.23

* IDE & Operating System version, comment your env as below(go to "About IntelliJ IDEA" -> click the "copy" icon):

```

IntelliJ IDEA 2019.2.3 (Ultimate Edition)

Build #IU-192.6817.14, built on September 24, 2019

Licensed to <NAME>

You have a perpetual fallback license for this version

Subscription is active until September 14, 2020

Runtime version: 11.0.4+10-b304.69 amd64

VM: OpenJDK 64-Bit Server VM by JetBrains s.r.o

Linux 4.15.0-43-generic

GC: ParNew, ConcurrentMarkSweep

Memory: 1886M

Cores: 8

Registry: compiler.automake.allow.when.app.running=true, ide.tree.ui.experimental=false, ide.balloon.shadow.size=0

Non-Bundled Plugins: HOCON Converter, Lombook Plugin, String Manipulation, com.github.redfoos.logstash-intellij-plugin, com.microsoft.vso.idea, com.shellcheck, com.thvardhan.gradianto, org.jetbrains.plugins.hocon, org.jetbrains.kotlin, com.chrisrm.idea.MaterialThemeUI, com.intellij.plugins.html.instantEditing, com.jetbrains.php, org.intellij.scala, Dart, io.flutter, username_1.rainbow.brackets, Pythonid, com.intellij.kubernetes, com.jetbrains.edu, de.mariushoefler.flutter_enhancement_suite, org.zalando.intellij.swagger

```

Answers:

username_1: First of all, this is a bug in the 'Nginx-Support' plugin. You can report an issue [here](https://github.com/ishchenko/idea-nginx).

As a workaround, you could disable Rainbow Brackets for the nginx language by following [these instructions](https://github.com/username_1/intellij-rainbow-brackets#disable-rainbow-brackets-for-specific-languages)

```xml

<application>

<component name="RainbowSettings">

<option name="languageBlacklist">

<array>

<option value="nginx" />

</array>

</option>

</component>

</application>

```

Status: Issue closed

|

goharbor/harbor | 836373244 | Title: Unable to send emails using Office 365 SMTP server.

Question:

username_0: **Expected behavior and actual behavior:**

Unable to send emails using Office 365 SMTP server.

**Steps to reproduce the problem:**

* Email Server: smtp.office365.com

* Email Server Port: 587

* Email Username: username

* Email Password: <PASSWORD>

* Email From: admin <<EMAIL>>

* Email SSL: True

* Verify Certificate: True

Reference: https://support.microsoft.com/en-us/office/pop-imap-and-stmp-settings-8361e398-8af4-4e97-b147-6c6c4ac95353

**Versions:**

- harbor version: v2.2.0-ec0ba116

- docker engine version: -

- docker-compose version: -

**Additional context:**

- **Log Files**

```

2021-03-19T21:19:19Z [ERROR] [/core/api/email.go:113]: failed to ping email server: tls: first record does not look like a TLS handshake

2021-03-19T21:19:21Z [ERROR] [/core/api/email.go:113]: failed to ping email server: tls: first record does not look like a TLS handshake

2021-03-19T21:22:34Z [ERROR] [/core/api/email.go:113]: failed to ping email server: tls: first record does not look like a TLS handshake

```
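The `first record does not look like a TLS handshake` message typically appears when a client starts a TLS handshake immediately while the server is still speaking plaintext SMTP, which is how a submission port that expects STARTTLS behaves. The sketch below maps the conventional SMTP ports to the TLS mode a client would normally use; `smtpTlsMode` is a hypothetical helper, not Harbor code, and how Harbor's "Email SSL" option maps onto these modes is an assumption.

```js
// Hypothetical helper for illustration; not part of Harbor.
// Maps conventional SMTP ports to the TLS mode a client normally uses.
function smtpTlsMode(port) {
  switch (port) {
    case 465:
      // TLS handshake starts immediately after the TCP connection opens.
      return "implicit-tls";
    case 587:
      // Plaintext greeting first, then the client upgrades with STARTTLS.
      return "starttls";
    case 25:
      // Relay port: plaintext, with STARTTLS only if the server offers it.
      return "starttls-if-offered";
    default:
      return "unknown";
  }
}

console.log(smtpTlsMode(587)); // starttls
console.log(smtpTlsMode(465)); // implicit-tls
```

With smtp.office365.com on port 587, a client that opens with an immediate TLS handshake would receive the plaintext SMTP greeting instead of a TLS record, which is consistent with the log lines above.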

#5757

Answers:

username_1: Hi. You can also reproduce it by setting port 25 and checking/unchecking Email SSL. If you disable it, it will try TLS, but core.log throws:

`core[684]: 2021-04-20T11:47:44Z [ERROR] [/core/api/email.go:113]: failed to ping email server: 535 5.7.8 Error: authentication failed: no mechanism available`

If you enable SSL on port 25, it throws the error already reported above.

username_2: We are also suffering from this.

username_3: Same here.

username_4: We have the same issue using Office365 smtp.office365.com on Port 587

```

2021-12-10T23:55:41Z [ERROR] [/core/api/email.go:115]: failed to ping email server: 504 5.7.4 Unrecognized authentication type [CO1PR15CA0097.namprd15.prod.outlook.com]

2021-12-10T23:51:01Z [ERROR] [/core/api/email.go:115]: failed to ping email server: tls: first record does not look like a TLS handshake

2021-12-10T23:49:59Z [ERROR] [/core/api/email.go:115]: failed to ping email server: 504 5.7.4 Unrecognized authentication type [MW4PR03CA0043.namprd03.prod.outlook.com]

```

username_3: I think this issue is really important because many companies use Exchange.

username_5: I'm seeing the same failed ping error as @username_4 when setting up SMTP with Office 365 on port 587. The same credentials work in other applications to send mail via SMTP through Office 365/Exchange. |

mattphillips/deep-object-diff | 715973793 | Title: Comparing object with "hasOwnProperty" keys fails

Question:

username_0: I'm trying to use deep-object-diff to compare different copies of https://github.com/mdn/browser-compat-data, which is a dataset describing the web platform itself. As such, it has [a key called "hasOwnProperty"](https://github.com/mdn/browser-compat-data/blob/d3e87462355e61d24aa9bf8398b0be2d1ef61306/javascript/builtins/Object.json#L930) to describe that method. deep-object-diff uses code like `obj.hasOwnProperty(key)` a lot, and `obj.hasOwnProperty` will in this case be an object in the BCD data, not `Object.prototype.hasOwnProperty`.

This causes deep-object-diff to throw an exception, the first place being here.

https://github.com/username_3/deep-object-diff/blob/45549c8225fc21c178dc6370e8028e4d08498e7b/src/diff/index.js#L12

Simplified a bit, here's a subset of the data:

```json

{

"javascript": {

"builtins": {

"Object": {

"hasOwnProperty": {

"__compat": "an object with more stuff here"

}

}

}

}

}

```

Repro script showing the problem:

```js

const { diff } = require("deep-object-diff");

diff({"hasOwnProperty": 1}, {"hasOwnProperty": 2});

```

This will throw "Uncaught TypeError: r.hasOwnProperty is not a function".

A possible fix for this would be to add a `hasOwnProperty` wrapper to https://github.com/username_3/deep-object-diff/blob/master/src/utils/index.js and always use that, but there may be other ways.

Answers:

username_1: https://eslint.org/docs/rules/no-prototype-builtins would catch that.

username_2: @username_0 Review #59?

<sup>(Posting this because I think you don't get a notification when a PR links to your issue.)</sup>

username_0: @username_2 thanks for posting the fix, I've tried it and can confirm it fixes my problem.

Status: Issue closed

username_3: Available in [v1.1.5](https://www.npmjs.com/package/deep-object-diff/v/1.1.5) |

openssl/openssl | 361140116 | Title: set_ciphersuites function should be static or renamed ssl_internal_set_ciphersuites

Question:

username_0: See ssl_ciph.c:1304

`int set_ciphersuites(STACK_OF(SSL_CIPHER) **currciphers, const char *str)`

This should be static and renamed ssl_internal_set_ciphersuites ...

Answers:

username_1: I can make that change for you. However, there are other static int functions which don't carry the `internal` in their name. So I would leave the name unchanged if that's ok for you.

username_1: E.g. `static int update_cipher_list_by_id(...)` and `static int update_cipher_list(...)`.

username_1: See #7253.

Status: Issue closed

|

DynamikArray/Ciima | 506043225 | Title: Close and Cancel buttons on Inventory Dialog Form

Question:

username_0: Add close and cancel buttons, along with helper text to the Inventory dialog form.

Status: Issue closed

Answers:

username_0: We've gone ahead and reworked the inline editing and have built a better solution. beebb3aa8daeced9e4c29b8d41e780f385a99ab7 |

catboost/catboost | 863678039 | Title: Predicting after ranking fails / is poorly documented

Question:

username_0: Problem: Predicting after ranking fails / is poorly documented

catboost version: 0.24.4 (Python 3.8.5)

Operating System: Ubuntu 20.04

CPU: Intel i9 i9-9900K

I have set up a pool `train` and a pool `test` like so:

```

train = Pool(X_train.values, y_train.values, group_id=g_train)

```

Where `X_train` contains my features, `y_train` contains my scores, and `g_train` contains all the groups. Everything is sorted to match the group order.

I create the model and fit it like so:

```

model = CatBoost({"loss_function":"YetiRank"})

model.fit(train, eval_set=(test), use_best_model=True, early_stopping_rounds=100)

```

Then when I try to predict:

```

model.predict(test, prediction_type="Class")

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/home/sakuya/.local/lib/python3.8/site-packages/catboost/core.py", line 2033, in predict

return self._predict(data, prediction_type, ntree_start, ntree_end, thread_count, verbose, 'predict')

File "/home/sakuya/.local/lib/python3.8/site-packages/catboost/core.py", line 1981, in _predict

predictions = self._base_predict(data, prediction_type, ntree_start, ntree_end, thread_count, verbose)

File "/home/sakuya/.local/lib/python3.8/site-packages/catboost/core.py", line 1310, in _base_predict

return self._object._base_predict(pool, prediction_type, ntree_start, ntree_end, thread_count, verbose)

File "_catboost.pyx", line 4308, in _catboost._CatBoost._base_predict

File "_catboost.pyx", line 4323, in _catboost._CatBoost._base_predict

File "_catboost.pyx", line 1456, in _catboost.transform_predictions

File "_catboost.pyx", line 5086, in _catboost._convert_to_visible_labels

IndexError: index 0 is out of bounds for axis 0 with size 0

```

My groups are strings, and there are 5 groups in total. Which makes predicting probability even more confusing, because I only get 2 outputs per row instead of the 5 I was expecting:

```

model.predict(test, prediction_type="Probability")

array([[0.53883141, 0.46116859],

[0.52118767, 0.47881233],

[0.53749065, 0.46250935],

...,

[0.54306105, 0.45693895],

[0.52692491, 0.47307509],

[0.52297527, 0.47702473]])

```

I expected to see predicted classes per row, and predicted probabilities for each class per row. But I don't get either of those.

Where is this documented? The msrank guide only covers training a model for ranking, and nowhere does it even mention how to use the model afterwards.

Answers:

username_1: You're right.

1) Documentation and tutorials do not make it clear how to predict for ranking problems.

In fact, a model trained for ranking just predicts one real value per sample (corresponding to prediction type `RawFormulaVal`), and you should rank samples according to the predicted value (i.e. the `predict` function does not return a rank, only a value that you can use to rank samples).

Something like this (for a single group):

```py

from operator import itemgetter

...

predictions = model.predict(X_group)

...

# print group samples with predictions, ranked by predictions

print (sorted(zip(predictions, X_group), key=itemgetter(0)))

```

Prediction does not use group or label data, only features, so it is enough to pass only the feature data to `predict`. A full `Pool` is also accepted, but only its feature data is used for prediction.

You can predict for all groups in the dataset in one call to `predict`, but additional postprocessing in your (user) code is then required: sort the samples in each group by predicted value to get the ranked samples.
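
The per-group post-processing described above can be sketched in plain Python. This is only an illustration: `rank_within_groups` is a hypothetical helper, not part of catboost, and it assumes that a higher predicted value means a better rank. The scores would normally come from `model.predict(...)`; here they are hard-coded toy values.

```python
from collections import defaultdict


def rank_within_groups(group_ids, predictions, descending=True):
    """Return {group_id: [row indices ordered by predicted value]}.

    Hypothetical helper: group_ids and predictions are parallel sequences,
    one entry per row of the dataset that was passed to predict().
    """
    by_group = defaultdict(list)
    for row, (group, score) in enumerate(zip(group_ids, predictions)):
        by_group[group].append((score, row))

    ranked = {}
    for group, scored_rows in by_group.items():
        # Sort only on the score; assumes higher score = more relevant.
        scored_rows.sort(key=lambda pair: pair[0], reverse=descending)
        ranked[group] = [row for _, row in scored_rows]
    return ranked


# Toy data standing in for g_test and model.predict(test).
group_ids = ["a", "a", "b", "a", "b"]
predictions = [0.2, 0.9, -0.1, 0.5, 0.3]
print(rank_within_groups(group_ids, predictions))
```

Each group's list holds row indices into the original data, so the ranked features or labels can be looked up afterwards.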

2) prediction types `Class` and `Probability` make sense only for Classification problems. In case of non-Classification problems error message should clearly state that. We'll fix this inconsistency. |

gphotosuploader/google-photos-api-client-go | 474417482 | Title: Refactor how Google response is checked

Question:

username_0: We are relying on the status message in the response; we should use the status code instead, as suggested [here](https://github.com/googleapis/google-api-go-client/blob/master/GettingStarted.md).

https://github.com/gphotosuploader/google-photos-api-client-go/blob/f16c909f308c4a17c89cecc894cc9597cdf4cc2a/lib-gphotos/client.go#L326<issue_closed>

Status: Issue closed |

SlicerIGT/SlicerIGT | 167883256 | Title: MarkupsToModel auto update checkbox on makes using the module error-prone

Question:

username_0: It's quite unexpected (and unprecedented in Slicer) that simply switching to a module initiates processing.

Also I lost data by removing the automatically created model while auto-update was still on.

I think it would be better if the auto-update checkbox was off by default.

Answers:

username_1: @username_2 has been working on a complete GUI rework - it's almost-ready for integration (https://github.com/SlicerIGT/SlicerIGT/pull/90). We should test the behavior again after his changes have been integrated.

Probably the issue is that "None" option is not enabled in the output node selector and so it selects the first model node in the scene.

username_0: OK thanks! I wasn't aware of the UI rework.

Why is auto-update on by default? I think the problem is not (only) the None option, but more the auto-update, as I suggest in the ticket title.

username_1: Auto-update is good, because that's what you need most of the time. Auto-update is enabled by default in Fiducial registration wizard, too, and nobody complained. The problem is that the module randomly selects a node as you enter the module and you don't have a chance to turn off auto-update or choose a different output node.

username_0: Adding the None options would help for sure.

I think it is very unusual in Slicer that changing the selection in a node combobox immediately changes the content of the node without explicit action (be it enabling auto-update or clicking manual update). I didn't see any module operating like this before outside SlicerIGT.

username_1: Yes, SlicerIGT is "unusual" because it mainly operates on nodes that are changing in real-time, that's why auto-update is the default for most modules (but it can be disabled for a few modules such as for this one).

username_0: If for SlicerIGT's use cases this "real-time behaviour" is better, and it is accepted that the modules in the extension don't work the same way as Slicer core, then auto-update is fine by me.

In this case adding None to the output combobox is enough.

username_2: The GUI update will address this issue by allowing "None" in the output combobox.

username_2: (And "None" will be selected by default)

username_2: Should this be closed?

username_0: Has the above discussed None option been added?

username_2: It was merged in this commit:

https://github.com/SlicerIGT/SlicerIGT/commit/c14531826551f9c0e6ae8e7a5aabaa64bc83ae95

Status: Issue closed

username_0: Thanks! In that case I think this issue can be closed. I'm closing it now. |

nyupcs/pcs-sp21-lab4-server | 840265950 | Title: exploit-main

Question:

username_0: -----BEGIN PGP MESSAGE-----

<KEY>

<KEY>

=xdIZ

-----END PGP MESSAGE-----

Answers:

username_0: My NetID is km3947, <NAME>, and my pub key id is 97E0D099241F8CA3

username_0: -----BEGIN PGP MESSAGE-----

<KEY>

-----END PGP MESSAGE-----

username_0: -----BEGIN PGP PUBLIC KEY BLOCK-----

<KEY>mc<KEY>

-----END PGP PUBLIC KEY BLOCK-----

username_1: This submission has been verified. Well done! |

LBNL-UCB-STI/beam | 384019948 | Title: Harmonize field names for coordinates in output events

Question:

username_0: Right now our events use very different conventions for coordinates, e.g.:

<event type="PathTraversal" start.y="0.02995" end.x="0.02995" start.x="0.03995" .....

<event destinationY="0.01995" originY="0.01995" destinationX="0.01005" ....

This is just plain messy. Please look through all events (note only Beam events have coordinates) and change the format to be consistent with the rest of our event field names and use "start" and "end" in all cases where there are 2 coordinates:

startX, startY

endX, endY

Note, following MATSim convention, please also replace all underscore field names with camelCase, e.g. PathTraversal event is a big offender here.<issue_closed>
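
The requested renaming can be sketched as a small mapping step. This is a hedged illustration in Python rather than Beam's own code: `harmonize` and `to_camel_case` are hypothetical names, mapping `origin*`/`destination*` onto `start*`/`end*` is an assumption drawn from the request above, and `vehicle_type` is a made-up underscore field used only for the demo.

```python
import re

# Coordinate fields named in the issue, mapped to the requested convention.
# Treating origin/destination as start/end is an assumption.
EXPLICIT_RENAMES = {
    "start.x": "startX", "start.y": "startY",
    "end.x": "endX", "end.y": "endY",
    "originX": "startX", "originY": "startY",
    "destinationX": "endX", "destinationY": "endY",
}


def to_camel_case(name):
    """Turn snake_case into camelCase; names without underscores are unchanged."""
    return re.sub(r"_+([A-Za-z0-9])", lambda m: m.group(1).upper(), name)


def harmonize(attributes):
    """Return a copy of an event's attributes with harmonized field names."""
    return {
        EXPLICIT_RENAMES.get(key, to_camel_case(key)): value
        for key, value in attributes.items()
    }


event = {"type": "PathTraversal", "start.y": "0.02995", "end.x": "0.02995",
         "vehicle_type": "car"}  # vehicle_type is a hypothetical field
print(harmonize(event))
```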

Status: Issue closed |

ZacBlanco/hwx-tutorials | 121713166 | Title: Import "Processing streaming data in Hadoop with Apache Storm"

Question:

username_0: http://hortonworks.com/hadoop-tutorial/processing-streaming-data-near-real-time-apache-storm/

Status: Issue closed

Answers:

username_0: Reopening this issue. Closed accidentally

username_0: http://hortonworks.com/hadoop-tutorial/processing-streaming-data-near-real-time-apache-storm/ |

imabug/raddb | 204400920 | Title: Validation errors when adding new survey recommendations

Question:

username_0: validation errors were produced when adding a new survey recommendation. These should not be checked for unless the `resolved` checkbox is set.

Status: Issue closed

Answers:

username_0: Looks like I should be able to do something by using https://laravel.com/docs/5.4/validation#conditionally-adding-rules

Status: Issue closed

|

WarEmu/WarBugs | 179051591 | Title: So, I got all the possible influence and quest rewards for a time.

Question:

username_0: A while back, the same day when I created my sorcerer, I had a very strange bug. When I got to the first destro camp, where you meet your first rally master, and completed all the quests, as well as did the local PQ, I went back to claim my rewards. I noticed suddenly that after having selected my reward, I couldn't turn in the quest. For some time I was confused, until I tried to select the other reward and it seemed to have selected both of them. I clicked accept, and found both of the rewards in my inventory. The same for the influence rewards, I had every single one of them.

I'm still not sure what caused this bug to happen and it was a few weeks ago. I didn't really think to report it back then. I still have all the possible dark elf quest trophies in my inventory.

Status: Issue closed

Answers:

username_1: No longer applies, bug was fixed. |

eclipse/microprofile-open-api | 277151437 | Title: TCK tests for openapi endpoint

Question:

username_0: We will be using the `/openapi` endpoint for most of our TCK tests already, but this issue will track the inclusion of specific endpoint tests that will use different `Accept` headers for json and yaml.

Answers:

username_1: @username_2, @username_4 and I are working on this.

username_2: We'll want to write assertions which cover each of the annotations. I've copied the checklist below.

Annotation list:

- [ ] Callback

- [ ] Callbacks

- [ ] Components

- [ ] Explode

- [ ] ParameterIn

- [ ] ParameterStyle

- [ ] SecuritySchemeIn

- [ ] SecuritySchemeType

- [ ] Extension

- [ ] Extensions

- [ ] ExternalDocumentation

- [ ] Header

- [ ] Contact

- [ ] Info

- [ ] License

- [ ] Link

- [ ] LinkParameter

- [ ] ArraySchema

- [ ] Content

- [ ] DiscriminatorMapping

- [ ] Encoding

- [ ] ExampleObject

- [ ] Schema

- [ ] OpenAPIDefinition

- [ ] Operation

- [ ] Parameter

- [ ] Parameters

- [ ] RequestBody

- [ ] APIResponse

- [ ] APIResponses

- [ ] OAuthFlow

- [ ] OAuthFlows

- [ ] OAuthScope

- [ ] SecurityRequirement

- [ ] SecurityRequirements

- [ ] SecurityScheme

- [ ] SecuritySchemes

- [ ] Server

- [ ] Servers

- [ ] ServerVariable

- [ ] Tag

- [ ] Tags

username_2: Going to start with the Info, Contact and License annotations.

username_3: I am covering Component, Tag, Tags and Header.

username_4: Starting with Schema

username_5: doing

- [ ] Operation

username_1: I'm covering the following annotations:

- ExternalDocumentation

- Server

- Servers

- ServerVariable

username_6: I'll do SecurityScheme and SecurityRequirement

username_0: thanks everyone. I updated the checkboxes based on the comments.

username_2: I'm now working on Operation.

username_6: I'm working on Link, Encoding

username_0: updated checkboxes

username_4: I'll handle Extension, Extensions, Example Object

username_0: updated

username_6: Also doing OAuthFlow, OAuthFlows, OAuthScope, as these relate to SecurityScheme (confirmed this with Jana @username_5).

@username_0 Also completed LinkParameter, and SecuritySchemeIn/SecuritySchemeType (these are enums for SecurityScheme annotation)

username_3: I am working on Content

username_4: I am working on DiscriminatorMapping

username_0: All done - thanks everyone!

Status: Issue closed

|

simonbyrne/WinReg.jl | 282111250 | Title: WinReg.jl uses deprecated Array(::Type{T}, m::Integer)

Question:

username_0: WARNING: Array(::Type{T}, m::Integer) where T is deprecated, use Array{T}(Int(m)) instead.

Stacktrace:

[1] depwarn(::String, ::Symbol) at .\deprecated.jl:70

[2] Array(::Type{UInt8}, ::UInt32) at .\deprecated.jl:57

[3] querykey(::UInt32, ::String) at .\.julia\v0.6\WinReg\src\WinReg.jl:78

[4] querykey(::UInt32, ::String, ::String) at .\.julia\v0.6\WinReg\src\WinReg.jl:113

Answers:

username_1: Now fixed.

Status: Issue closed

|

SiegeEngineers/aoe2techtree | 834851254 | Title: MeleeArmor/PierceArmor differs from Base Melee/Pierce

Question:

username_0: I have found that for some siege units the MeleeArmor/PierceArmor differs from Base Melee/Pierce in the Armours dict.

e.g. Battering Ram

https://github.com/SiegeEngineers/aoe2techtree/blob/master/data/data.json#L13305

```

"MeleeArmor": 0,

...,

"PierceArmor": 180,

```

vs.

```

"Armours": [

{

"Amount": -3,

"Class": 4

},

{

"Amount": 180,

"Class": 3

},

...

],

```

Other siege units affected:

Capped Ram

https://github.com/SiegeEngineers/aoe2techtree/blob/master/data/data.json#L6269

Siege Ram

https://github.com/SiegeEngineers/aoe2techtree/blob/master/data/data.json#L7536

Siege Tower

https://github.com/SiegeEngineers/aoe2techtree/blob/master/data/data.json#L11638
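
A quick way to list such mismatches is sketched below in Python. It is an illustration only: `armour_mismatches` is a hypothetical helper, and it assumes, as the Battering Ram excerpt above suggests, that class 4 in `Armours` is base melee and class 3 is base pierce.

```python
MELEE_CLASS = 4   # assumption: class 4 = base melee armour
PIERCE_CLASS = 3  # assumption: class 3 = base pierce armour


def armour_mismatches(unit):
    """Return {field: (top_level_value, armours_value)} for fields that differ."""
    base = {entry["Class"]: entry["Amount"] for entry in unit.get("Armours", [])}
    mismatches = {}
    for field, armour_class in (("MeleeArmor", MELEE_CLASS),
                                ("PierceArmor", PIERCE_CLASS)):
        top_level = unit.get(field)
        from_armours = base.get(armour_class)
        # Only report when the class exists in Armours and the values disagree.
        if from_armours is not None and top_level != from_armours:
            mismatches[field] = (top_level, from_armours)
    return mismatches


# Values copied from the Battering Ram excerpt in this issue.
battering_ram = {
    "MeleeArmor": 0,
    "PierceArmor": 180,
    "Armours": [{"Amount": -3, "Class": 4}, {"Amount": 180, "Class": 3}],
}
print(armour_mismatches(battering_ram))
```

Running the same check over every unit in `data.json` would show whether the four siege units listed are the only ones affected.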

Answers:

username_1: Those properties are actually named "Displayed[Property]" in the game files.

I guess they only influence what is being displayed in-game, but not what is actually calculated. https://github.com/SiegeEngineers/aoe2techtree/blob/master/scripts/generateDataFiles.py#L569 |

N1NTENDO1999/homepage | 442823798 | Title: Compose additional information blocks

Question:

username_0: You can make the résumé less formal by adding extra blocks that contain useful information but present it in an "entertaining" form. For example: likes and dislikes, strengths and weaknesses, a list of skills or hobbies, infographics or interactive modules, etc. It is worth writing the content of such a block before starting the layout, because its size will affect the placement of elements on the page.<issue_closed>

Status: Issue closed |

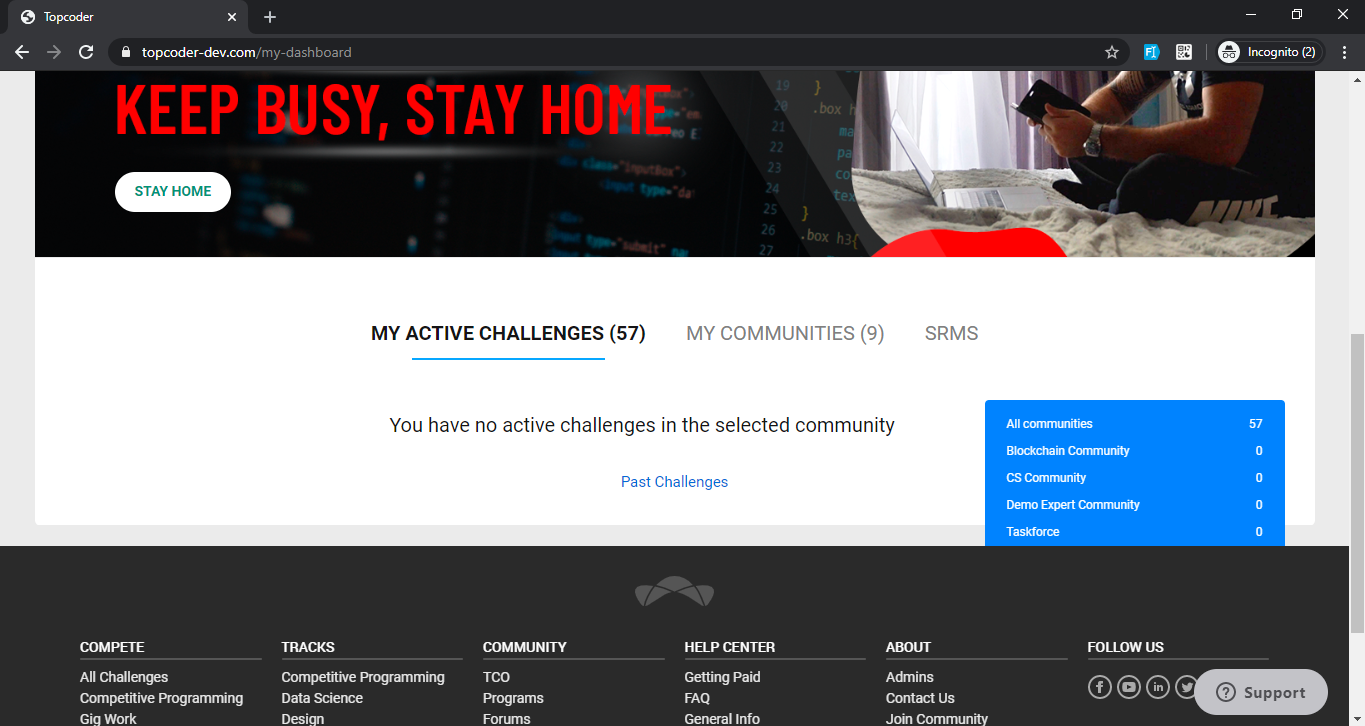

topcoder-platform/community-app | 607245507 | Title: [Dashboard] All the filter options are not displayed when clicking on any filter with 0 challenges.

Question:

username_0: In the filter, when there is at least one challenge, the full list of filter options is displayed, but if there are 0 challenges, the list is hidden.

Screenshots attached, with challenges and without any challenges:

**With Challenges**

**Without challenges**:

Status: Issue closed

Answers:

username_1: This issue is not applicable now. |

upspin/upspin | 243287007 | Title: dir/server: snapshot creation should block until snapshot is created

Question:

username_0: The magic MakeDirectory("TakeSnapshot") that creates a snapshot directory for a user returns immediately. It spins off the creation and returns successfully. This makes it impossible to know when the backup exists (if it ever does!).

I propose it blocks until the snapshot is actually created, for some definition of created.

Answers:

username_1: It's not impossible to know; if you watch the snapshot tree you will see it created. That's how the snapshot tests in package `upspin.io/test` work.

But I agree that this is how it *should* work.

username_2: The goroutine was used because it's a "daemon" that periodically takes snapshots. There is no technical reason it can't block when asked directly by the magic.

username_0: I think watch is overkill. MakeDirectory blocks until the directory is made, and snapshot should too, as it's just a fancier MakeDirectory. |

mitodl/bootcamp-ecommerce | 645873502 | Title: HomePage on CI is broken

Question:

username_0: However it's working on RC so it may not be critical to MVP if we can just fix the home page configuration in the database

Answers:

username_1: Doesn't look totally broken to me, but there's plenty of missing content. We should copy back the content from RC

username_2: @username_1 both of the pages seem alike. I have checked it now.

username_3: Replicated the HomePage contents from RC to CI (https://bootcamp-ecommerce-ci.herokuapp.com/).

Can anyone verify it?

username_3: @username_0 @username_1 any update on this? Can I close it?

Status: Issue closed

username_1: I'm getting 500 errors trying to load the images on the home page. I think this happened before, but I don't remember the solution.

For example, https://bootcamp-ci.odl.mit.edu/images/3fGSOcMgMVRZo0bgtyFX3K6dm8k=/3/fill-475x300/Innovation_LeadershipSMALL.original.jpg?v=061f2c7e48685bdf841f9feb96fa340876626ee1 |

manala/ansible-role-shorewall | 192532066 | Title: cannot parse config.yml

Question:

username_0: No matter what I do, even with an empty

```

manala_shorewall_config:

```

or this simple rule

```

manala_shorewall_config:

rules:

- Access to SSH

- { action: ACCEPT, source: net, dest: fw, proto: tcp, dest_port: 2201 }

```

I always get this error

```

{"failed": true, "msg": "the field 'args' has an invalid value, which appears to include a variable that is undefined. The error was: 'None' has no attribute 'keys'\n\nThe error appears to have been in '/opt/boxen/homebrew/etc/ansible/roles/manala.shorewall/tasks/config.yml': line 2, column 3, but may\nbe elsewhere in the file depending on the exact syntax problem.\n\nThe offending line appears to be:\n\n\n- name: config > File\n ^ here\n"}

```

I am using ansible 2.2 on osx

Answers:

username_1: Our README was outdated; your simple rule should be:

```

manala_shorewall_configs:

  - file: rules

    config:

      # Access to SSH

      - ACCEPT: net fw tcp 2201 - -

```

`manala_shorewall_config` is now only used to configure directives in the `/etc/shorewall/shorewall.conf` file.

Status: Issue closed

|

gokcehan/lf | 925656967 | Title: lf becomes unresponsive after a graphical shell command

Question:

username_0: I have a command in my `lfrc` file like this:

```

cmd img_view ${{

sxiv "$f"

echo "done"

}}

```

Sometimes when I run this command on an image file and quit sxiv afterwards, lf completely freezes (and I only see a blank terminal screen in most cases). I would say this happens about 10-50% of the time. (Sometimes it happens more frequently and sometimes less frequently. I don't know why.)

The weird thing is, when I switch the `echo` to a `notify-send`, this does not happen anymore. (I use dunst, if that is relevant.) (Removing the `echo` command makes no difference.)

The same goes for switching the `$` to a `!` or `%`: the freeze simply does not happen.

There are some other weird niche cases where this also does not happen, e.g. when I have window swallowing activated in my window manager.

This doesn't seem to be related to sxiv, since I get similar behaviour when replacing `sxiv` with `zathura` and viewing PDF files. The problem seems to happen with all graphical programs.

When this happens, the shell has already exited and is no longer alive. (I used htop to check this.) So the issue seems to come from what lf does after a shell command.

Remote commands sent via `lf -remote` also do nothing. This indicates to me that lf is somehow stuck and frozen and not simply "invisible".

Answers:

username_1: Sounds like #621. The problem is (likely) `lf` getting resized while reinitializing.

Status: Issue closed

|

jlippold/tweakCompatible | 414592145 | Title: `JailProtect` working on iOS 12.1.1

Question:

username_0: ```

{

"packageId": "com.julioverne.jailprotect",

"action": "working",

"userInfo": {

"arch32": false,

"packageId": "com.julioverne.jailprotect",

"deviceId": "iPhone8,2",

"url": "http://cydia.saurik.com/package/com.julioverne.jailprotect/",

"iOSVersion": "12.1.1",

"packageVersionIndexed": true,

"packageName": "JailProtect",

"category": "Tweaks",

"repository": "julioverne's Repo",

"name": "JailProtect",

"installed": "0.0~beta4a",

"packageIndexed": true,

"packageStatusExplaination": "This package version has been marked as Likely working based on feedback from users in the community. The current positive rating is 50% with 1 working reports.",

"id": "com.julioverne.jailprotect",

"commercial": false,

"packageInstalled": true,

"tweakCompatVersion": "0.1.0",

"shortDescription": "No Substrate Mode Alternative",

"latest": "0.0~beta4a",

"author": "julioverne",

"packageStatus": "Likely working"

},

"base64": "<KEY>

"chosenStatus": "working",

"notes": ""

}

```<issue_closed>

Status: Issue closed |

kdclaw3/ram-oracle | 426911879 | Title: how to get ORACLE SCHEMA??

Question:

username_0: Please help me to find Oracle schema as it is not working with JDOE .

let matches = ram.match('JDOE','587F72032A3C828E','password');

console.log('The input matches the Oracle Database password: ' + matches + '.');<issue_closed>

Status: Issue closed |

dkahle/ggmap | 748926873 | Title: remove rjson dependency?

Question:

username_0: It doesn't look like it's used:

```

grep -Er "((to|from)JSON)|rjson" ggmap

# ggmap/DESCRIPTION: rjson,

# ggmap/R/ggmap-package.R:#' @importFrom rjson fromJSON

# ggmap/NAMESPACE:importFrom(rjson,fromJSON)

# ggmap/NEWS:New depends - 1. rjson

```

At first I was going to offer to migrate it to `jsonlite`, but it seems that's unnecessary.

Answers:

username_1: Good call, thanks!

Status: Issue closed

|

toptal/chewy | 212687598 | Title: Load results from aggregation & paginate through them

Question:

username_0: Hi guys,

I'm using the following aggregation to remove duplicates from my query based on a certain field:

`scope = scope.aggregations("dedup": {"terms": {"field": "my_id"}, "aggregations": {"dedup_docs": {"top_hits": {"size": 1}}}})`

This works fine, when I look at the new scope, I see that the results (hits) are grouped by my_id in buckets and only the single top result is in the "_source" field - great.

However, how can I paginate through these results? Usually I would simply do a

`scope = scope.per(params[:limit]).page(params[:page]).load`

and then render out the results. But in this case this gives me the original results without the aggregation applied.

I also tried

`scope = scope.aggs.per(params[:limit]).page(params[:page]).load`

but this did not work either.

How can I achieve this?

Many thanks and all the best,

Michael

Answers:

username_1: @username_2 Could you help us with this when you have some time? Thanks!

username_2: Oh guys, sorry for being late. This definitely looks like a bug. To bypass it for now, try to use Kaminari as you would use it for an array. https://github.com/kaminari/kaminari#paginating-a-generic-array-object. Also, you can always submit a patch; that would be really appreciated. If not, I'll find time some day to deal with it. |

PaddlePaddle/Paddle | 246277239 | Title: wutai01 MPI's paddle v

Question:

username_0: Thank you for contributing to PaddlePaddle. Submitting an issue is a great help for us.

Both Chinese and English issues are welcome.

It's hard to solve a problem when important details are missing.

Before submitting the issue, look over the following criteria before handing your request in.

- [ ] Was there a similar issue submitted or resolved before ? You could search issue in the github.

- [ ] Did you retrieve your issue from widespread search engines ?

- [ ] Is my description of the issue clear enough to reproduce this problem?

* If some errors occurred, we need details about `how do you run your code?`, `what system do you use?`, `Are you using GPU or not?`, etc.

* If you use an recording [asciinema](https://asciinema.org/) to show what you are doing to make it happen, that's awesome! We could help you solve the problem more quickly.

- [ ] Is my description of the issue use the github markdown correctly?

* Please use the proper markdown syntaxes for styling all forms of writing, e.g, source code, error information, etc.

* Check out [this page](https://guides.github.com/features/mastering-markdown/) to find out much more about markdown.

Answers:

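username_1: Judging from the config, all of the network's activations are ReLU, so I suspect ReLU is the problem. Model saving is not that fragile.

- During training, ReLU units are fairly fragile and can "die". For example, when a very large gradient flows through a ReLU neuron, the update can push it into a state in which no data point will ever activate it again. If that happens, every gradient flowing through that neuron will be 0 from then on.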
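
The "dead ReLU" effect described above can be illustrated with a toy one-weight neuron. This is a hedged sketch in plain Python, not Paddle code; the inputs, the learning rate, and the helper names are all made up for the demonstration.

```python
def relu(z):
    return z if z > 0 else 0.0


def relu_grad(z):
    # Derivative of ReLU: 1 where the unit is active, 0 otherwise.
    return 1.0 if z > 0 else 0.0


def neuron_gradients(weight, bias, inputs):
    """d(output)/d(weight) of relu(weight * x + bias) for each input x."""
    return [relu_grad(weight * x + bias) * x for x in inputs]


inputs = [0.5, 1.0, 2.0]  # toy, all-positive inputs (hypothetical data)

# Healthy neuron: active on every input, so every gradient is non-zero.
print(neuron_gradients(1.0, 0.0, inputs))

# One oversized update (learning rate 10, gradient 1) drives the weight
# to -9. The pre-activation is now negative for every input, so the
# output and the gradient are both 0 and no later update can recover it.
dead_weight = 1.0 - 10.0 * 1.0
print([relu(dead_weight * x) for x in inputs])
print(neuron_gradients(dead_weight, 0.0, inputs))
```

If every unit feeding an output vector ends up in this state, the vector is all zeros regardless of the input.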

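username_0: Right, I am still verifying whether this is a dead-unit problem caused by ReLU.

However:

1. Training succeeds; the problem only appears at test time;

2. Three runs all showed the same problem;

3. This network has been used this way all along without problems (the data-processing logic is the same, and local runs partly confirm this).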

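username_1: Please revert to the same data, the same config, and the same settings as before, and confirm that this is a Paddle problem. Otherwise we have nothing to go on.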

Status: Issue closed

username_0: ```

I0727 09:40:11.928058 8975 TrainerInternal.cpp:165] Batch=145700 samples=18649600 AvgCost=0 CurrentCost=0 Eval: CurrentEval:

```

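1. The cost is 0 throughout training; this problem has been occurring for a while now;

2. The model was trained on wutai01's MPI V1. The network uses cosine to compute the similarity of two generated vectors, ```cos_sim(a=user_dim, b=view_dim, scale=1)```. When the successfully trained model is used for a test computation locally, both generated vectors come out as zero vectors (I also tested with the training data, with the same result), so the cosine computation fails. Yet the similarity computation was normal during training, so I can only infer that there may be a bug when the cluster saves the model. (In addition, this network works fine in local mode, and it also worked on MPI until recently.)

Link to the MPI job: http://10.87.137.36:8920/fileview.html?path=/home/disk1/normandy/maybach/40968/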
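
Why two all-zero vectors break the cosine step can be shown with a plain-Python sketch. This is not Paddle's `cos_sim` implementation; `cosine_similarity` is a hypothetical stand-in that returns `None` where the real computation would divide by a zero norm.

```python
import math


def cosine_similarity(a, b, scale=1.0):
    """scale * (a . b) / (|a| * |b|), or None when either norm is zero."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        # The similarity is undefined: the formula would divide by zero.
        return None
    return scale * dot / (norm_a * norm_b)


print(cosine_similarity([1.0, 0.0], [1.0, 0.0]))  # identical direction
print(cosine_similarity([0.0, 0.0], [0.0, 0.0]))  # both zero vectors
```

A guard like this only makes the failure visible; it does not explain why the loaded model produces zero vectors in the first place.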

username_2: Is there a trace for the AvgCost=0 CurrentCost=0 problem? It interferes with the diagnosis.

username_1: What is the AvgCost=0 CurrentCost=0 problem?

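username_0: None of the parameters have changed, but the data dictionary has since been updated, so the training data cannot be restored to its earlier state. This seems to have started at the same time as the avgcost=0 currentcost= problem during training; I am waiting for the tanh run to finish to see whether it behaves the same way before drawing a conclusion.

username_1: 1. How do you rule out a data problem?

2. It cannot be that nothing you change affects `avgcost=0 currentcost= 0`. Under what conditions does `avgcost=0 currentcost=0` not appear?

username_1: Identical data-processing logic does not rule out anomalies in the data.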

username_0: ```

I0728 17:24:47.582365 25615 TrainerInternal.cpp:165] Batch=110 samples=56320 AvgCost=0.161602 CurrentCost=0.0999691 Eval: CurrentEval:

I0728 17:24:48.212803 25615 TrainerInternal.cpp:165] Batch=120 samples=61440 AvgCost=0.15781 CurrentCost=0.1161 Eval: CurrentEval:

I0728 17:24:49.328068 25615 TrainerInternal.cpp:165] Batch=130 samples=66560 AvgCost=0.156435 CurrentCost=0.139936 Eval: CurrentEval:

I0728 17:24:50.238536 25615 TrainerInternal.cpp:165] Batch=140 samples=71680 AvgCost=0.155359 CurrentCost=0.141367 Eval: CurrentEval:

I0728 17:24:51.023105 25615 TrainerInternal.cpp:165] Batch=150 samples=76800 AvgCost=0.153028 CurrentCost=0.120404 Eval: CurrentEval:

I0728 17:24:51.955370 25615 TrainerInternal.cpp:165] Batch=160 samples=81920 AvgCost=0.151533 CurrentCost=0.129097 Eval: CurrentEval:

I0728 17:24:52.876628 25615 TrainerInternal.cpp:165] Batch=170 samples=87040 AvgCost=0.150316 CurrentCost=0.130859 Eval: CurrentEval:

I0728 17:24:53.815397 25615 TrainerInternal.cpp:165] Batch=180 samples=92160 AvgCost=0.149469 CurrentCost=0.135065 Eval: CurrentEval:

I0728 17:24:54.696384 25615 TrainerInternal.cpp:165] Batch=190 samples=97280 AvgCost=0.147974 CurrentCost=0.121052 Eval: CurrentEval:

```

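This is the log from running the script locally on part of the data.

On MPI, once sparse_update is turned off, the cost seems to be normal.

Actually, my suspicion is that this is a problem with the Paddle environment on wutai01. So I also tried submitting to the wutai02 and wutai clusters, but those submissions kept failing with a connection error.

username_1: sparse_update is generally used only for the embedding layer. The other layers are no longer sparse.

username_0: Right, it is the sparse layer's setting that I turned off.

username_2: sparse_update is generally used only for the embedding layer. The other layers are no longer sparse.

--

That is what I found when trying it. Another job has a small amount of data, so with the same config and data, trained on a single node, its cost log is normal. See the explanation in this issue: https://github.com/PaddlePaddle/Paddle/issues/2987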

username_3: I'm closing this due to low activity, feel free to reopen.

Status: Issue closed

|

jlippold/tweakCompatible | 484982032 | Title: `TextEmojis` working on iOS 12.4

Question:

username_0: ```

{

"packageId": "se.nosskirneh.textemojis",

"action": "working",

"userInfo": {

"arch32": false,

"packageId": "se.nosskirneh.textemojis",

"deviceId": "iPhone9,2",

"url": "http://cydia.saurik.com/package/se.nosskirneh.textemojis/",

"iOSVersion": "12.4",

"packageVersionIndexed": false,

"packageName": "TextEmojis",

"category": "Tweaks",

"repository": "henrikssonbrothers",

"name": "TextEmojis",

"installed": "1.3~beta3",

"packageIndexed": true,

"packageStatusExplaination": "A matching version of this tweak for this iOS version could not be found. Please submit a review if you choose to install.",

"id": "se.nosskirneh.textemojis",

"commercial": false,

"packageInstalled": true,

"tweakCompatVersion": "0.1.5",

"shortDescription": "Search and input emojis by text shortcodes",

"latest": "1.3~beta3",

"author": "<NAME>",

"packageStatus": "Unknown"

},

"base64": "<KEY>",

"chosenStatus": "working",

"notes": ""

}

```<issue_closed>

Status: Issue closed |

Amertz08/drf_ujson2 | 577893607 | Title: Not working with ujson 2.0.0

Question:

username_0: ujson 2.0.0 has removed ```double_precision``` so this lib crashes now.

https://github.com/ultrajson/ultrajson/releases/tag/2.0.0

Answers:

username_1: Yeah just ran into this myself. Going to do a bug fix release pinning `ujson<2` then implement a fix.
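
One defensive pattern for the removed keyword is sketched below. It is an illustration only, not the fix that shipped in this library: `dumps_without_removed_kwargs` is a hypothetical helper, and it assumes the removed `double_precision` argument surfaces as a `TypeError`. The demo uses the standard library's `json.dumps` as a stand-in, since it also rejects that keyword.

```python
import json


def dumps_without_removed_kwargs(dumps, obj, removed=("double_precision",), **kwargs):
    """Call dumps(obj, **kwargs); on TypeError, retry without removed keywords."""
    try:
        return dumps(obj, **kwargs)
    except TypeError:
        trimmed = {k: v for k, v in kwargs.items() if k not in removed}
        if len(trimmed) == len(kwargs):
            raise  # nothing to strip, so the TypeError has another cause
        return dumps(obj, **trimmed)


# json.dumps stands in for ujson.dumps here (assumption for the demo only).
print(dumps_without_removed_kwargs(json.dumps, {"pi": 3.14}, double_precision=2))
```

Pinning `ujson<2`, as mentioned above, avoids the problem without any code change; a wrapper like this only matters if both major versions need to be supported.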

username_2: Any progress on this one ???

Status: Issue closed

|

turnkeylinux/tracker | 205167109 | Title: LDAP sync for customer user

Question:

username_0: Hey guys, I've managed to enable the LDAP authentication for customer users successfully. Well... Almost.

Customer users can log in using their AD username and AD password, but I have to create a customer user with the exact same username in the OTRS web interface first.

So it appears that authentication is working, but my turnkey OTRS does not sync the users (they are all members of a specific AD-group) to OTRS.

Is there a way to enable all my AD-users to log into OTRS without adding them one by one to the OTRS customer user database manually?

Kind regards

Answers:

username_1: Hi,

This is actually our issue tracker, i.e. the place for reporting bugs and feature requests. Your problem sounds more like something you need support with, which is probably a better fit for our [forums](https://www.turnkeylinux.org/forum/) (unless there is actually a bug causing your issue).

As for your question; I'm almost sure that there is a way, but TBH I have no idea. LDAP is something that I have only a very basic grasp of.

I recall a few years ago, I used a PHP module that allowed a remote LDAP DB to provide authentication to a web app I was working on. I never really understood how it worked, but it did (it was running within a LAN though - not sure if it would be secure running online?) I'm assuming that there is probably some similar Perl module that OTRS could leverage to provide authentication?

Alternatively, perhaps there is some way to have a cron job which updates the local DB with LDAP users?

Anyway, sorry I'm not much help to you. I'm going to close this now. Please feel free to reopen if you discover that it actually is caused by a bug. Also please feel free to open a new thread on the forums.

Status: Issue closed

|

KhronosGroup/SPIRV-Tools | 910463727 | Title: spirv-opt: Support branch flatten?

Question:

username_0: Hi, I come from [SPIRV-Cross](https://github.com/KhronosGroup/SPIRV-Cross/issues/1684).

I'm trying to cross-compile like this: HLSL -> SPIR-V -> SPIRV-OPT -> ESSL

I found that physical loop unrolling works because SPIRV-OPT supports this feature.

But branch flattening is not supported, because a target like ESSL needs an [extension](https://github.com/KhronosGroup/GLSL/blob/master/extensions/ext/GL_EXT_control_flow_attributes.txt) for it, and I think not all mobile devices support this extension.

Will SPIRV-OPT support physical branch flattening in the future?

I think it's still necessary for platforms like mobile.

Status: Issue closed

Answers:

username_0: We decided to use the solution provided in https://github.com/KhronosGroup/SPIRV-Cross/issues/1684

Using control flow hints seems more reasonable for now. |

tastybento/ASkyBlock-Bugs-N-Features | 284914570 | Title: Problem with the challenges!!

Question:

username_0: Hello, the challenges menu opens, but clicking a challenge does nothing, and no error or anything similar is shown. I have seen challenges working on other servers, so I don't know why they don't work in my case. Can you help me, please?

Answers:

username_1: I suspect it's another plugin interfering with the clicking. Remove other plugins one by one until it works.

Status: Issue closed

|

mobxjs/mobx | 1015357522 | Title: Wrong event type in spy when changing an array

Question:

username_0: I am writing a logging library for MobX. When I push to an observable array, MobX generates a spy report with type `splice`. It looks like a mistake.

**Actual outcome:**

The spy report is as follows:

```
observableKind: "array"
object: Array(1)
debugObjectName: "[email protected]"
type: "splice" // <- why is it splice if I used the .push method on the array?
index: 0
removed: Array(0)
added: Array(1)
removedCount: 0
addedCount: 1
spyReportStart: true
```

**Intended outcome:**

Either `push` or `array`; that is up to the library developers. I don't know why the event type is `splice`.
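One possible reading of the report, offered as an assumption rather than a confirmed explanation from the maintainers: any array mutation can be described as a splice, and `push` is the special case that removes nothing and adds at the end. The plain JavaScript sketch below involves no MobX at all; the `report` object is a hypothetical stand-in that only mirrors the field names shown in the spy report above.

```javascript
// Plain array, standing in for the observable array in the report.
const items = [];

// A push is equivalent to a splice at the end that removes nothing.
const index = items.length;
const removed = items.splice(index, 0, 'first');

// Hypothetical summary object mirroring the spy report fields.
const report = {
  type: 'splice',
  index: index,
  removedCount: removed.length,
  addedCount: items.length - index,
};

console.log(report);
```

Under that reading, a logging library could treat a `splice` event whose `index` equals the previous length and whose `removedCount` is 0 as an append.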

**How to reproduce the issue:**

Go to https://codesandbox.io/s/hungry-resonance-hfegb?file=/index.js and open console.

**Versions**

Mobx 6 |

auth0/passport-linkedin-oauth2 | 18000774 | Title: Retrieving user email with `r_emailaddress`?

Question:

username_0: When authenticating with `scope: ['r_emailaddress', 'r_basicprofile']`, the basic profile is returned, but the email value is undefined and the email is missing from the raw JSON response.

Per the [LinkedIn API Docs](http://developer.linkedin.com/documents/authentication) the email requires a call to a different endpoint `GET /people/~/email-address` vs `GET /people/~`. Might there be a configuration step I missed?

```js
{
  provider: 'linkedin',
  id: 'qBP4vNlwf5',
  displayName: '<NAME>',
  name: { familyName: 'Doe', givenName: 'John' },
  emails: [ { value: undefined } ],
  _raw: '{\n "firstName": "John",\n "formattedName": "<NAME>",\n "id": "qBP4vNlwf5",\n "lastName": "Doe",\n "pictureUrl": "http://s.c.lnkd.licdn.com/scds/common/u/images/themes/katy/ghosts/person/ghost_person_60x60_v1.png"\n}',
  _json: {
    firstName: 'John',
    formattedName: '<NAME>',
    id: 'qBP4vNlwf5',
    lastName: 'Doe',
    pictureUrl: 'http://s.c.lnkd.licdn.com/scds/common/u/images/themes/katy/ghosts/person/ghost_person_60x60_v1.png'
  }
}
```

Answers:

username_1: Thanks, it really helped. |

jasmine/jasmine | 372118818 | Title: Error: Expected [ 'Array', 'Contents' ] to be [ 'Array', 'Contents' ].

Question:

username_0: When comparing two arrays in my test framework with `expect(['Array', 'Contents']).toBe(['Array', 'Contents']);`, Jasmine reports the error `Expected [ 'Array', 'Contents' ] to be [ 'Array', 'Contents' ].` Jasmine objects because the two arrays are different objects in memory, regardless of their contents: the first `[ 'Array', 'Contents' ]` is *not* the second `[ 'Array', 'Contents' ]`. While it isn't a huge deal, I would expect some sort of error message explaining that.

## Expected Behavior

I would expect some error message or some way of implying that the base address of my arrays is not the same and therefore they are not the same object.

## Current Behavior

Right now jasmine reports `Expected [ 'Array', 'Contents' ] to be [ 'Array', 'Contents' ]. `

## Possible Solution

A new error message that tells me the base addresses of my arrays are not the same and that they are therefore not the same object. Ideally, it could also suggest using `toEqual` to compare the contents of my arrays instead, perhaps when it notices that their contents are similar.

## Suite that reproduces the behavior (for bugs)

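```javascript
describe("sample", function() {
  it("compares two arrays", function() {
    // Fails: toBe checks object identity, and these are two distinct arrays.
    expect(['Array', 'Contents']).toBe(['Array', 'Contents']);
  });
});
```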

## Context

Again, this isn't a huge deal. Maybe I shouldn't assume the worst of people, but I feel like had I not been more informed and actually known what the issue was, this could have been a very frustrating error to come across. In every way, it looks like the first array should be the second array as reported by Jasmine, and I think it would be a frustrating experience to see something that looks like it should be working, but is still upset and wrong.

Answers:

username_1: If you want a deep equality of your objects you should be using `toEqual` instead of `toBe`. As I write this up _again_ though, you're probably correct, that an additional note for `toBe` that mentions deep equality vs object equality would be useful. I'd be happy to review a pull request to update the failure message for `toBe`.

Hope this helps. Thanks for using Jasmine!
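The difference between the two matchers can be sketched in plain JavaScript, without Jasmine itself. `toBe` compares with `===`, so two separate array literals never match, while `toEqual` compares contents. The `sameElements` helper below is a hypothetical, much simplified stand-in for Jasmine's real deep-equality logic, which handles nested objects and many other cases.

```javascript
// Two array literals with the same contents are still two distinct objects.
const first = ['Array', 'Contents'];
const second = ['Array', 'Contents'];

// Identity comparison, which is what `toBe` relies on.
const sameObject = first === second;

// Hypothetical helper: element-by-element comparison of two flat arrays,
// standing in for the contents comparison that `toEqual` performs.
function sameElements(a, b) {
  return a.length === b.length && a.every((item, i) => item === b[i]);
}
const sameContents = sameElements(first, second);

console.log({ sameObject, sameContents });
```

In a spec, this corresponds to `expect(first).toEqual(second)` passing while `expect(first).toBe(second)` fails.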

Status: Issue closed

|

rscustom/rocksmith-custom-song-toolkit | 780825024 | Title: Cannot download any builds

Question:

username_0: Current build or older builds on builds tab (Windows) on rscustom.net generate same return message:

{"message":"Artifact not found or access denied."}

Answers:

username_1: AppVeyor removes artifacts after 6 months. Since there hasn't been any activity in a while to generate new builds, they've all been removed.

A new build has been pushed, and I published it as a GitHub release, which won't have the same 6-month limitation.

Stable builds should be released on GitHub to avoid this in the future.

Status: Issue closed

|

vanatteveldt/frogr | 64035468 | Title: unable to handle large datasets

Question:

username_0: When a large (>150) character vector is used as input, an error is thrown:

Error in textConnection(output) : all connections are in use

In `frog.R` a textConnection is opened (line 50) but never closed, so the number of open connections keeps growing.

Answers:

username_0: A workaround is to use

install_github("username_0/frogr")

which will be available until the fix is accepted, see pull request #2

username_0: Fixed by 0afd07a8

Status: Issue closed

|

jupyter/notebook | 283723716 | Title: Directory completion causes Jupyter to get stuck when there are lots of files

Question: