| repo_name | issue_id | text |

|---|---|---|

opentoonz/opentoonz | 876871286 | Title: shortcut to select parent column

Question:

username_0: A shortcut to select the parent/children columns linked in the schematic view is another frequently used feature for cut-out animation.

It speeds up the process of animating different parts.

A shortcut to select the parent/children of the elements.

Example from Toon Boom:

Answers:

username_1: The schematic is a great way to select parent/child columns. You can also use the skeleton tool to select the drawing in any column for moving, which looks like the image you shared.

username_0: Just think about a simple button to press instead of going into other menus or tools each time.

Also, being able to click on any node in the chain to go to the desired element.

username_2: A simple shortcut is even more practical.

In Maya you use the arrow keys to move up/down a hierarchy in the viewport (and the left/right keys move you through siblings). |

Azure/azure-sdk-for-python | 707104166 | Title: Data Tables Readme and Samples issues

Question:

username_0: 1.

Section [Link](https://github.com/Azure/azure-sdk-for-python/tree/master/sdk/tables/azure-data-tables#general):

Suggestion:

1) Add:

```python
from azure.data.tables import TableServiceClient
from azure.core.exceptions import HttpResponseError

table_name = 'your_table_name'
```

2) Update `TableServiceClient(connection_string)` to `TableServiceClient.from_connection_string(connection_string)`.

2.

Section [Link](https://github.com/Azure/azure-sdk-for-python/blob/master/sdk/tables/azure-data-tables/samples/sample_insert_delete_entities.py#L62):

Reason:

AttributeError: 'InsertDeleteEntity' object has no attribute 'account_url' and 'access_key'

Suggestion:

Add the class attributes `access_key` and `account_url`:

```python
access_key = os.getenv("AZURE_TABLES_KEY")
account_url = os.getenv("AZURE_TABLES_ACCOUNT_URL")
```

3.

Section [Link](https://github.com/Azure/azure-sdk-for-python/blob/master/sdk/tables/azure-data-tables/samples/async_samples/sample_query_table_async.py#L69):

Reason:

for entity_chosen in queried_entities:

TypeError: 'AsyncItemPaged' object is not iterable.

Suggestion:

Update to:

```python
async for entity_chosen in table_client.query_entities(filter=name_filter, select=["Brand", "Color"]):
    print(entity_chosen)
```

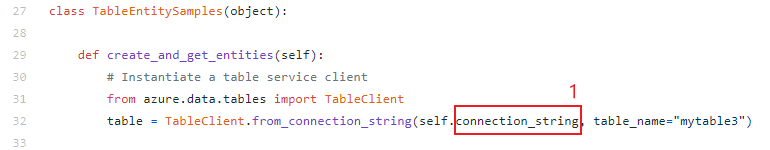

4.

Section [Link](https://github.com/Azure/azure-sdk-for-python/blob/master/sdk/tables/azure-data-tables/samples/sample_update_upsert_merge_entities.py):

Note: The same issues 3 and 4 also occur in the [asynchronous sample](https://github.com/Azure/azure-sdk-for-python/blob/master/sdk/tables/azure-data-tables/samples/async_samples/sample_update_upsert_merge_entities_async.py).

Suggestion 1:

Add a `connection_string` class attribute to the `TableEntitySamples` class:

`connection_string = os.getenv("AZURE_TABLES_CONNECTION_STRING")`

Reason 2:

AttributeError: 'TableEntitySamples' object has no attribute 'set_access_policy' and 'upsert_entities'.

Suggestion 2:

Remove `sample.set_access_policy()` and `sample.upsert_entities()`.

[Truncated]

6.

Section [Link](https://github.com/Azure/azure-sdk-for-python/blob/master/sdk/tables/azure-data-tables/samples/async_samples/sample_update_upsert_merge_entities_async.py#L60):

Suggestion 1:

Update to `await table.create_table()`

Reason 2:

entities = list(table.list_entities())

TypeError: 'AsyncItemPaged' object is not iterable.

Suggestion 2:

Update to:

```python
entities = []
async for n in table.list_entities():
    entities.append(n)
```
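Both `AsyncItemPaged` errors above come from treating an async iterable as a regular one. The self-contained sketch below shows the difference without an Azure account. `fake_list_entities` is a hypothetical stand-in for the async client's `table.list_entities()`; it is not part of `azure-data-tables`.

```python
import asyncio
from typing import AsyncIterator, Dict, List


async def fake_list_entities() -> AsyncIterator[Dict[str, str]]:
    """Hypothetical stand-in for an AsyncItemPaged result such as table.list_entities()."""
    for i in range(3):
        await asyncio.sleep(0)  # simulate awaiting the next page from the service
        yield {"RowKey": str(i), "Brand": "Contoso"}


async def collect_entities() -> List[Dict[str, str]]:
    # An async iterable must be consumed with `async for` inside a coroutine.
    return [entity async for entity in fake_list_entities()]


def show_sync_iteration_fails() -> str:
    # list() only understands the synchronous iterator protocol, so it raises TypeError.
    try:
        list(fake_list_entities())
    except TypeError as exc:
        return str(exc)
    return "no error"


if __name__ == "__main__":
    print(asyncio.run(collect_entities()))
    print(show_sync_iteration_fails())
```

The same rule applies to `query_entities()` and `list_entities()` on the async client: collect results with `async for` (or an async comprehension) inside an `async def`.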

@jongio for notification.

Status: Issue closed |

stoicflame/enunciate | 108645672 | Title: Docs export fail on second run

Question:

username_0: I'm working on a Gradle plugin for Enunciate, so this problem may be because I don't understand the Enunciate programmatic API rather than a bug per se.

I'm trying to generate docs output only, so I add an export for 'docs' to a folder.

On first run, enunciate will generate the documentation. So everything is peachy.

But on second run, it outputs:

```

[ENUNCIATE] Skipping documentation source generation as everything appears up-to-date...

[ENUNCIATE] Unknown artifact 'docs'. Artifact will not be exported.

```

I can see why it would not do anything, as everything is already done (with respect to the Enunciate build dir, anyway).

But it then fails to handle the docs export.

When I delete the export target folder, I would expect a re-export.

Am I missing something?

Answers:

username_1: Fixed at 4f7463f. Thanks for the report.

Status: Issue closed

|

lianlilin/blog | 853581916 | Title: Sorting Algorithms

Question:

username_0: # Bubble sort

O(n^2)

# Selection sort

O(n^2)

# Insertion sort

O(n^2)
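A minimal insertion sort sketch in Python (not taken from the referenced articles): each element is shifted left past the larger elements before it. It is O(n^2) in the worst and average case and O(n) on input that is already sorted.

```python
def insertion_sort(items: list) -> list:
    """Return a sorted copy of items using insertion sort."""
    a = list(items)
    for i in range(1, len(a)):
        key = a[i]
        j = i - 1
        # Shift larger elements one slot right to open a gap for key.
        while j >= 0 and a[j] > key:
            a[j + 1] = a[j]
            j -= 1
        a[j + 1] = key
    return a
```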

# Shell sort
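Shell sort is insertion sort over elements `gap` positions apart, with the gap shrinking to 1. This sketch assumes Shell's original gap sequence n/2, n/4, ..., 1. The running time depends on the gap sequence; with this one the worst case is still O(n^2).

```python
def shell_sort(items: list) -> list:
    """Return a sorted copy of items using Shell's original gap sequence."""
    a = list(items)
    gap = len(a) // 2
    while gap > 0:
        # Gapped insertion sort: each element moves left in steps of `gap`.
        for i in range(gap, len(a)):
            key = a[i]
            j = i
            while j >= gap and a[j - gap] > key:
                a[j] = a[j - gap]
                j -= gap
            a[j] = key
        gap //= 2
    return a
```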

# Merge sort
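A top-down merge sort sketch: split the list in half, sort each half recursively, then merge the two sorted halves. It runs in O(n log n) time and uses O(n) extra space for the merged lists.

```python
def merge_sort(items: list) -> list:
    """Return a sorted copy of items using top-down merge sort."""
    items = list(items)
    if len(items) <= 1:
        return items
    mid = len(items) // 2
    left = merge_sort(items[:mid])
    right = merge_sort(items[mid:])
    # Merge: repeatedly take the smaller head; `<=` keeps the sort stable.
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged
```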

## References

- [Perhaps the best article in the Eastern Hemisphere analyzing the top ten sorting algorithms](https://mp.weixin.qq.com/s?__biz=MzUyNjQxNjYyMg==&mid=2247485556&idx=1&sn=344738dd74b211e091f8f3477bdf91ee&chksm=fa0e67f5cd79eee3139d4667f3b94fa9618067efc45a797b69b41105a7f313654d0e86949607&scene=21#wechat_redirect)

- [So this is how insertion sort and Shell sort work](https://zhuanlan.zhihu.com/p/217904206) |

code4nara/covid19 | 597888870 | Title: Contact information on the "When you're worried about a novel coronavirus infection" print page overlaps with the previous version

Question:

username_0: ## The Problem

- Contact information on the "When you're worried about a novel coronavirus infection" print page overlaps with the previous version

## Screenshot

<!-- If it's a bug, attach a screenshot of the developer tool console -->

## Expected Behavior

- Displays cleanly

## Steps to Reproduce

1. Go to https://stopcovid19.code4nara.org/print/flow/

## Environment / Browser

- macOS

- Chrome

Answers:

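username_0: Probably, when merging, GitHub didn't replace the SVG code but (cleverly?) kept both the old and new versions.

username_1: Is the flow failing to display on the development page a separate issue?

https://upbeat-volhard-740574.netlify.com/naracity

username_0: https://upbeat-volhard-740574.netlify.com/flow

The URL hierarchy on the development page seems to be wrong. That's a separate issue.

username_1: It seems the connection was just slow.

The development page shows the same problem, so this fix should probably resolve it.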

Status: Issue closed


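username_1: It looked like the relevant code would be removed, but nothing changed on the development page. I'll take a closer look tomorrow.

username_0: I checked the latest `development` branch with both `yarn dev` and `yarn run generate:deploy --fail-on-page-error`, and the change appears to be reflected. Maybe it's Netlify's cache or something...?

Reference: [How to clear the Netlify cache \- Qiita](https://qiita.com/taichi0514/items/76800bc801c7759c74bc)

username_1: Clearing the Netlify cache about three times following the reference page made no difference, but after merging a data update the page started displaying correctly.

The cause may be a cache somewhere, but the details are unknown.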

Status: Issue closed

|

rancher/rancher | 725469589 | Title: Monitoring V2 Grafana storage causes "breaks non-root policy" error

Question:

username_0: <!--

Please search for existing issues first, then read https://rancher.com/docs/rancher/v2.x/en/contributing/#bugs-issues-or-questions to see what we expect in an issue

For security issues, please email <EMAIL> instead of posting a public issue in GitHub. You may (but are not required to) use the GPG key located on Keybase.

-->

**What kind of request is this (question/bug/enhancement/feature request):**

Bug

**Steps to reproduce (least amount of steps as possible):**

Install rancher-monitoring with storage selected for Grafana using the `Statefulset template`, and apply the fix for bug #29638.

**Result:**

Volume for grafana will be created but grafana cannot start due to error `Containers with incomplete status: [init-chown-data grafana-sc-datasources]` and `Error: container's runAsUser breaks non-root policy`.

**Other details that may be helpful:**

We use nfs storage with nfs-client-provisioner.

If we add `initChownData.enabled` to grafana while installing rancher-monitoring it works.

**Environment information**

- Rancher version (`rancher/rancher`/`rancher/server` image tag or shown bottom left in the UI):

v2.5.1/k8s 1.19.3

- Installation option (single install/HA):

Single install

<!--

If the reported issue is regarding a created cluster, please provide requested info below

-->

**Cluster information**

- Cluster type (Hosted/Infrastructure Provider/Custom/Imported):

- Machine type (cloud/VM/metal) and specifications (CPU/memory):

- Kubernetes version (use `kubectl version`):

```
Client Version: version.Info{Major:"1", Minor:"18", GitVersion:"v1.18.2", GitCommit:"<PASSWORD>", GitTreeState:"clean", BuildDate:"2020-04-16T11:56:40Z", GoVersion:"go1.13.9", Compiler:"gc", Platform:"linux/amd64"}
Server Version: version.Info{Major:"1", Minor:"19", GitVersion:"v1.19.3", GitCommit:"<PASSWORD>", GitTreeState:"clean", BuildDate:"2020-10-14T12:41:49Z", GoVersion:"go1.15.2", Compiler:"gc", Platform:"linux/amd64"}
```

- Docker version (use `docker version`):

```
Nodes have version from 17.9.1, 18.9.0 and 19.3.5
Rancher server is docker version 17.03.2-ce
```

Answers:

username_1: I hit the same error when installing Monitoring v2 in v2.5-61a8a3691063108bc775c8e4e6f6db971541c253-head

- install Longhorn

- install Monitoring v2 `9.4.201`

- When installing monitoring v2, enable the persistent storage for Grafana

Results:

```
Warning | Failed | Error: container's runAsUser breaks non-root policy
```

<img width="1378" alt="Screen Shot 2020-10-21 at 2 43 41 PM" src="https://user-images.githubusercontent.com/6218999/96791841-0e4b8680-13ae-11eb-8bff-01ba5ab44c54.png">

username_2: I have the same issue, and when using StatefulSet, I'm not allowed to update the "Run as non-root":

`StatefulSet.apps "rancher-monitoring-grafana" is invalid: spec: Forbidden: updates to statefulset spec for fields other than 'replicas', 'template', and 'updateStrategy' are forbidden`

username_3: @username_1 The fix is merged; can you retest this? Also make sure the chart still passes CIS, as I modified some securityContext settings that might impact CIS.

username_1: The bug fix is validated in rancher `v2.5-d87f58ac300192c90377faef694efb796a824520-head` with the latest monitoring v2 chart `9.4.201`.

Monitoring v2 is installed successfully in a hardened cluster and is functional.

<img width="1373" alt="Screen Shot 2020-10-23 at 11 53 58 AM" src="https://user-images.githubusercontent.com/6218999/97042832-7a50fa80-1526-11eb-9fb2-624e3475efef.png">

related UI bug: https://github.com/rancher/dashboard/issues/1689

Status: Issue closed

|

PowerDNS/parent-signals-dot | 606871502 | Title: Is DoT good enough for an AD bit?

Question:

username_0: First, we need to decide on this (and I trust that the WG will pick a fight over this).

Second, if the conclusion is 'yes', we need to write some words on what that means for validators that talk to an upstream resolver, because we cannot forward them DNSKEYs and RRSIGs that allow them to do their own validation.

Answers:

username_1: My thoughts:

We should say something along the lines of "In theory this could partially replace DNSSEC in the future; however, this document does not cover that, due to the complexity it adds given the current abundance of validators that use an upstream resolver. If a future standard does decide to implement this, a concern it should address is how a validator with an upstream resolver can still determine data authenticity."

username_2: So this is a tricky one. In some cases, it may be good enough but not for all cases.

Take an example where an organization generates its zone and hosts its own DNS servers. DoT ensures that the content has not been changed in flight, and given that the same organization creates the zone, you could effectively validate the content. I think it would give you roughly the same level of authenticity as online signing.

Now take a case where an organization owns its zone, DNSSEC-signs it, and has third-party secondaries serving the content. Even though you can authenticate the server, there is no guarantee that the third party is not modifying the content you have given them.

username_3: Agreed. It seems to me that, even with a public key protected by DNSSEC, DoT only protects the transport and that's not enough to authenticate the content, which is what the AD bit is about.

username_1: Let's close this. I do not think this draft should imply that DoT provides verification good enough to set the AD bit.

Status: Issue closed

|

STEllAR-GROUP/phylanx | 325063111 | Title: @Phylanx passes by value, not by reference

Question:

username_0: @Phylanx copies the values in its input arguments instead of passing them by reference. This means we do unnecessary copying.

```python
from phylanx.ast import Phylanx
import numpy as np

def change(n):
    n[3] = 4

av = np.ones((10))
change(av)  # changes av[3] to be 4
print(av)

@Phylanx()
def change2(n):
    n[4] = 5

change2(av)  # produces no change
print(av)
```
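The difference the report describes can be shown with plain NumPy, without Phylanx: an ordinary Python function receives a reference to the caller's array, while copy-in semantics (modelled here by the hypothetical `change_copy`, which copies its argument explicitly) leave the caller's array untouched. This only illustrates the semantics; it is not how Phylanx is implemented.

```python
import numpy as np


def change_in_place(n: np.ndarray) -> None:
    # Python passes a reference to the same ndarray, so this mutates the caller's array.
    n[3] = 4


def change_copy(n: np.ndarray) -> np.ndarray:
    # Hypothetical model of copy-in semantics: the callee works on a private copy
    # and must return it for the caller to see the change.
    local = np.copy(n)
    local[4] = 5
    return local


if __name__ == "__main__":
    av = np.ones(10)
    change_in_place(av)
    print(av)  # av[3] is now 4
    result = change_copy(av)
    print(av[4], result[4])  # caller's av[4] is still 1.0; the copy has 5.0
```

If references cannot cross the interface, returning the modified array, as `change_copy` does, is one way to get the result back to the caller.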

Answers:

username_1: We will never have references across the Python/PhySL interface. I wouldn't even know how to implement that properly in the general case.

username_2: Duplicate of #376 |

jz4o/codingames | 254876083 | Title: Commit the source code completed as of 2017/09/03

Question:

username_0: The following have been completed:

- CLASSIC PUZZLE
  - EASY
    - mars-lander-episode-1
    - defibrillators
    - horse-racing-duals
    - mime-type
    - chuck-norris
    - ascii-art
    - onboarding
    - temperatures
    - power-of-thor-episode-1
    - the-descent
  - MEDIUM
    - don't-panic-episode-1
    - shadows-of-the-knight-episode-1
    - skynet-revolution-episode-1
    - there-is-no-spoon-episode-1
- COMMUNITY PUZZLES
  - the-fastest
  - ddcg-mapper |

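zdj0311/myOwn | 353603376 | Title: Uploading a local project to GitHub

Question:

username_0: 1. Create a local repository (i.e. a folder) and turn it into a Git repository with `git init`.

2. Copy the project into this folder, then add it to the repository with `git add .`.

3. Commit the project to the repository with `git commit -m "commit message"`.

4. After setting up an SSH key on GitHub, create a new remote repository TEST2 and link the local repository to it with `git remote add origin https://github.com/username_0/TEST2.git`.

5. Finally, push the local project to the remote repository (i.e. GitHub) with `git push -u origin master`. |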

GEOS-ESM/MAPL | 631616912 | Title: Bring MAPL_RecordAlarmsIsRinging from CVS

Question:

username_0: We need to bring MAPL_RecordAlarmsIsRinging from the s2sv3 branch of CVS. @yvikhlya reminded me that we need this feature to properly support "dual ocean"

Answers:

username_0: @yvikhlya, I looked again, and this functionality is already in MAPL. There is no need to bring any code from CVS. I also confirmed that your branch, feature/yury/s2s3-merge, compiles successfully. I will wait until some time next week so we can talk, but my plan is to close this issue. |

codacy/codacy-codesniffer | 667721903 | Title: Parameters not showing in the UI

Question:

username_0: Parameters are defined in the `patterns.json`, but not in the `description.json` file.

This makes the UI skip them.

codacy-plugins-test json tests are skipped in CI:

https://github.com/codacy/codacy-codesniffer/blob/5ce7ee089b825543f7e3debf71ab4c09a2e1fc9d/.circleci/config.yml#L38

### Acceptance criteria

No parameters are deleted and codacy-plugins-test json test is enabled and green in CI

Status: Issue closed

Answers:

username_1: This problem should be already fixed in production. |

MerlinDS/Gimmick | 93810862 | Title: Gimmick initialization

Question:

username_0: Add method for post initialization actions.

Add callback that will execute after completion of initialization.

Status: Issue closed

Answers:

username_0: Not implemented yet

username_0: Add method for post initialization actions.

https://github.com/username_0/Gimmick/blob/master/src/org/gimmick/managers/GimmickConfig.as

Add callback that will execute after completion of initialization.

https://github.com/username_0/Gimmick/blob/master/src/org/gimmick/core/GimmickEngine.as#L57-76

Status: Issue closed

|

gardener/autoscaler | 493176804 | Title: Clean up the repo to make rebasing with upstream as automatic as possible

Question:

username_0: ### Issue

The current changes in this fork make it hard to automatically rebase onto upstream changes. Due to this, further manual changes are required every time we want to take in changes from upstream.

The following list is the chronological order of our commits as far as I can see. It needs to be verified to see if I have missed anything.

1. https://github.com/gardener/autoscaler/commit/c828a5be1afcd707421ec3e11dcd41200a848a90

2. https://github.com/gardener/autoscaler/commit/f07b4d50c3767b985b7a484620b52f29a83b59a6

3. https://github.com/gardener/autoscaler/commit/915aca84f5e14e1d9e5390ddc2b28ff0db572d20

4. https://github.com/gardener/autoscaler/commit/40285d16008ab3803fdedd201d27e2f2754a5e80

5. https://github.com/gardener/autoscaler/commit/da5e1bc8188a333a949d51310fa7daf4fe24c913

6. https://github.com/gardener/autoscaler/commit/d3886a5a60e6855b558ff7614703482c341bd14c

7. https://github.com/gardener/autoscaler/commit/13d61043df474a6b8f9eb47144c18e50f813b9f0

8. https://github.com/gardener/autoscaler/commit/5167fdde8e278eaea19ac7a32318e8accc068a2c

9. https://github.com/gardener/autoscaler/commit/1ce7d24eff25cc66a53db70b9e7a191836ddccb0

10. https://github.com/gardener/autoscaler/commit/6f9a4ac90b79ffcb66097bb85246487d8a1af093

11. https://github.com/gardener/autoscaler/commit/b8fb38745265e91ade9376a90d97b2860cbe6823

12. https://github.com/gardener/autoscaler/commit/da7c71678535048676326657533e94c50da23dbc

13. https://github.com/gardener/autoscaler/commit/1ea00cd6b6b9699babe98f3bd936d5c2455a3f0b

14. https://github.com/gardener/autoscaler/commit/7bd5249cd9b485a3c11f9081c38e17ae9cb3e236

15. https://github.com/gardener/autoscaler/commit/a128cc96de5366706083997a0a0e4c6f4b4d2366

16. https://github.com/gardener/autoscaler/commit/333fcb9bcfd5dbed18a5b86da751790a9ba66e1b

17. https://github.com/gardener/autoscaler/commit/ddf86929460aedc9297435d82b5521d9e19ddc3a

18. https://github.com/gardener/autoscaler/commit/55e365efd9e5cade4d72a2e6f8aa8c27ecdf6ab7

19. https://github.com/gardener/autoscaler/commit/0db747e2cbb352ccff4ebca671af28ba89f5ce24

20. https://github.com/gardener/autoscaler/commit/3168a9f9338b6ddc40db8f4f4035000b19b24c6a

21. https://github.com/gardener/autoscaler/commit/aeee88e94e400727a77bcc769a96c3e64812bfd2

22. https://github.com/gardener/autoscaler/commit/1b9574f8685308be4675d215b10321618f73e7ea

23. https://github.com/gardener/autoscaler/commit/dde3cfb5192a645c448c957f8867e77713e94534

24. https://github.com/gardener/autoscaler/commit/3f3a734a5ef72b117648f0f2670b30c8eba37ea0

25. https://github.com/gardener/autoscaler/commit/7f4f7ba2b2cfae8736d7d827a4a072a0f9fa647a

26. https://github.com/gardener/autoscaler/commit/41c804969eace6b6c5a3b26691125c88587baaea

27. https://github.com/gardener/autoscaler/commit/03b20e55ec709595419d5046944ab9524edff8dc

28. https://github.com/gardener/autoscaler/commit/f454c48fa536e42ecaf494043b95c59a59793270

29. https://github.com/gardener/autoscaler/commit/1bfd5b7e9fafe7db1b303e98e02caf618ded3a3a

30. https://github.com/gardener/autoscaler/commit/beb681d5e9e46229481c26709efc5fba128c7b9a

31. https://github.com/gardener/autoscaler/commit/01144f3ab8d273f0eb3ad652cd86e095ad0afee8

32. https://github.com/gardener/autoscaler/commit/bb50fb3af1d369618087b0867686bbf1aa58e2ca

33. https://github.com/gardener/autoscaler/commit/f0ceff81b267ec2c48ee73ad0f2ff03b9749d375

34. https://github.com/gardener/autoscaler/commit/af07a756598f1d96e31239f0e4ba6bf455e540b2

35. https://github.com/gardener/autoscaler/commit/f0810742f73547ccd2ef261be54bdde33b6db497

36. https://github.com/gardener/autoscaler/commit/e33a0c4599f0d2615520e6250bb5dff8e16c16dd

37. https://github.com/gardener/autoscaler/commit/fc07919d001127ea00f759079899fa39e7e9669d

38. https://github.com/gardener/autoscaler/commit/55d7cc1c6dd74b594237de3b7c0ea980c77dd844

### Solution

We need to refactor the repo to avoid some changes (like package renaming, deletion of folders etc.) and mark other unavoidable changes explicitly so that we can automate much of the work while rebasing on upstream.

Answers:

username_1: close in favour of https://github.com/gardener/autoscaler/issues/51

Status: Issue closed

username_2: ### Issue

The current changes in this fork make it hard to automatically rebase to upstream changes. Due to this, further manual changes are required every time we want to take in changes from upstream.

The following list is the chronological order of our commits as far as I can see. It needs to be verified to see if I have missed anything.

1. https://github.com/gardener/autoscaler/commit/c828a5be1afcd707421ec3e11dcd41200a848a90

Deleting addon-resizer and vertical-pod-autoscaler makes it hard to rebase in the future, and we will need to keep rebasing until the machine-api is integrated with cluster-autoscaler. It would be better to retain them until then, so it may be better to remove this commit during the cleanup.

2. https://github.com/gardener/autoscaler/commit/f07b4d50c3767b985b7a484620b52f29a83b59a6

3. https://github.com/gardener/autoscaler/commit/915aca84f5e14e1d9e5390ddc2b28ff0db572d20

4. https://github.com/gardener/autoscaler/commit/40285d16008ab3803fdedd201d27e2f2754a5e80

5. https://github.com/gardener/autoscaler/commit/da5e1bc8188a333a949d51310fa7daf4fe24c913

6. https://github.com/gardener/autoscaler/commit/d3886a5a60e6855b558ff7614703482c341bd14c

7. https://github.com/gardener/autoscaler/commit/13d61043df474a6b8f9eb47144c18e50f813b9f0

8. https://github.com/gardener/autoscaler/commit/5167fdde8e278eaea19ac7a32318e8accc068a2c

9. https://github.com/gardener/autoscaler/commit/1ce7d24eff25cc66a53db70b9e7a191836ddccb0

10. https://github.com/gardener/autoscaler/commit/6f9a4ac90b79ffcb66097bb85246487d8a1af093

11. https://github.com/gardener/autoscaler/commit/b8fb38745265e91ade9376a90d97b2860cbe6823

12. https://github.com/gardener/autoscaler/commit/da7c71678535048676326657533e94c50da23dbc

13. https://github.com/gardener/autoscaler/commit/1ea00cd6b6b9699babe98f3bd936d5c2455a3f0b

14. https://github.com/gardener/autoscaler/commit/7bd5249cd9b485a3c11f9081c38e17ae9cb3e236

15. https://github.com/gardener/autoscaler/commit/a128cc96de5366706083997a0a0e4c6f4b4d2366

Retaining the package name/path would ease future rebases. It might be better to remove this commit during the cleanup.

16. https://github.com/gardener/autoscaler/commit/333fcb9bcfd5dbed18a5b86da751790a9ba66e1b

17. https://github.com/gardener/autoscaler/commit/ddf86929460aedc9297435d82b5521d9e19ddc3a

18. https://github.com/gardener/autoscaler/commit/55e365efd9e5cade4d72a2e6f8aa8c27ecdf6ab7

19. https://github.com/gardener/autoscaler/commit/0db747e2cbb352ccff4ebca671af28ba89f5ce24

20. https://github.com/gardener/autoscaler/commit/3168a9f9338b6ddc40db8f4f4035000b19b24c6a

21. https://github.com/gardener/autoscaler/commit/aeee88e94e400727a77bcc769a96c3e64812bfd2

22. https://github.com/gardener/autoscaler/commit/1b9574f8685308be4675d215b10321618f73e7ea

23. https://github.com/gardener/autoscaler/commit/dde3cfb5192a645c448c957f8867e77713e94534

24. https://github.com/gardener/autoscaler/commit/3f3a734a5ef72b117648f0f2670b30c8eba37ea0

25. https://github.com/gardener/autoscaler/commit/7f4f7ba2b2cfae8736d7d827a4a072a0f9fa647a

26. https://github.com/gardener/autoscaler/commit/41c804969eace6b6c5a3b26691125c88587baaea

27. https://github.com/gardener/autoscaler/commit/03b20e55ec709595419d5046944ab9524edff8dc

Retaining the package name/path would ease future rebases. It might be better to remove this commit during the cleanup.

28. https://github.com/gardener/autoscaler/commit/f454c48fa536e42ecaf494043b95c59a59793270

29. https://github.com/gardener/autoscaler/commit/1bfd5b7e9fafe7db1b303e98e02caf618ded3a3a

30. https://github.com/gardener/autoscaler/commit/beb681d5e9e46229481c26709efc5fba128c7b9a

31. https://github.com/gardener/autoscaler/commit/01144f3ab8d273f0eb3ad652cd86e095ad0afee8

32. https://github.com/gardener/autoscaler/commit/bb50fb3af1d369618087b0867686bbf1aa58e2ca

33. https://github.com/gardener/autoscaler/commit/f0ceff81b267ec2c48ee73ad0f2ff03b9749d375

34. https://github.com/gardener/autoscaler/commit/af07a756598f1d96e31239f0e4ba6bf455e540b2

35. https://github.com/gardener/autoscaler/commit/f0810742f73547ccd2ef261be54bdde33b6db497

36. https://github.com/gardener/autoscaler/commit/e33a0c4599f0d2615520e6250bb5dff8e16c16dd

37. https://github.com/gardener/autoscaler/commit/fc07919d001127ea00f759079899fa39e7e9669d

38. https://github.com/gardener/autoscaler/commit/55d7cc1c6dd74b594237de3b7c0ea980c77dd844

### Solution

We need to refactor the repo to avoid some changes (like package renaming, deletion of folders etc.) and mark other unavoidable changes explicitly so that we can automate much of the work while rebasing on upstream.

Also, it would be a good idea to make commits that deviate from upstream easy to identify. Preferably, keep them together and on top of the upstream commits so that future rebasing goes as smoothly as possible, i.e. we should avoid cherry-picking.

username_2: I think this issue is about the larger rebase, considering all the patches, while the one you linked above is only about one very specific update from upstream.

I'd prefer to keep this open.

username_1: /assign @username_2

username_2: Here's the new branch - https://github.com/gardener/autoscaler/tree/machine-controller-manager-provider

- For a cleaner history.

- For easier rebasing in the future.

This is also now the default branch for the fork.

username_2: cc @rewiko

username_2: /close with the new branch https://github.com/gardener/autoscaler/tree/machine-controller-manager-provider |

waives/waives.net | 501427667 | Title: Pipeline does not complete if `OnPipelineCompleted()` throws

Question:

username_0: The current implementation of `OnPipelineCompleted` does not handle exceptions, and so will cause a deadlock if an exception is thrown here.

```csharp
void OnPipelineComplete()
{
    _onPipelineCompletedUserAction();
    Logger.Info("Pipeline complete");
    taskCompletion.SetResult(true);
}
```
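The usual remedy is to make sure the completion source is resolved on every path, including when the user callback throws. The Python sketch below shows that pattern, with `concurrent.futures.Future` standing in for C#'s `TaskCompletionSource`. The name `make_on_pipeline_complete` and the choice to propagate the exception to the waiter (rather than swallow it) are assumptions, not the fix that was actually made in waives.net.

```python
import logging
from concurrent.futures import Future
from typing import Callable

logger = logging.getLogger("pipeline")


def make_on_pipeline_complete(
    user_action: Callable[[], None], completion: Future
) -> Callable[[], None]:
    """Build a completion handler that always resolves `completion`, even if user_action raises."""

    def on_pipeline_complete() -> None:
        try:
            user_action()
        except Exception as exc:
            # Hand the failure to whoever is waiting instead of leaving them blocked forever.
            logger.exception("OnPipelineCompleted callback raised")
            completion.set_exception(exc)
            return
        logger.info("Pipeline complete")
        completion.set_result(True)

    return on_pipeline_complete
```

A caller blocked on `completion.result()` then gets the callback's exception re-raised instead of waiting indefinitely.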

Status: Issue closed |

saltstack/salt | 182104739 | Title: Install mysql and ensure that a DB exists

Question:

username_0: ### Description of Issue/Question

I've been struggling for tens of hours now trying to create a super simple state that just installs mysql-server, python-mysqldb, mysql-client and creates a DB. I'm completely new to salt, so I might be doing something very wrong, but after chatting with multiple others at #salt on IRC nobody seems to know what the problem is. Others can reproduce the problem too.

I've been told by multiple people that I need reload_modules to make it work, but it doesn't help, it still looks as if python-mysqldb is undetected/not available at runtime(?).

What am I doing wrong? It seems like such a simple task, but I'm stuck and nobody knows.

### Setup

Using 2016.3.3 on Ubuntu 16.04, clean minimal install.

SLS file:

```yaml
database-packages:
  pkg.installed:
    - pkgs:
      - python-mysqldb
      - mysql-server
      - mysql-client
    - reload_modules: true

mysql-is-running:
  service.running:
    - name: mysql
    - enable: True

has-db:
  mysql_database.present:
    - name: example
    - order: last
```

Error:

```
          ID: has-db
    Function: mysql_database.present
        Name: example
      Result: False
     Comment: An exception occurred in this state: Traceback (most recent call last):
                File "/usr/lib/python2.7/dist-packages/salt/state.py", line 1733, in call
                  **cdata['kwargs'])
                File "/usr/lib/python2.7/dist-packages/salt/loader.py", line 1652, in wrapper
                  return f(*args, **kwargs)
                File "/usr/lib/python2.7/dist-packages/salt/states/mysql_database.py", line 55, in present
                  existing = __salt__['mysql.db_get'](name, **connection_args)
                File "/usr/lib/python2.7/dist-packages/salt/modules/mysql.py", line 897, in db_get
                  dbc = _connect(**connection_args)
                File "/usr/lib/python2.7/dist-packages/salt/modules/mysql.py", line 330, in _connect
                  dbc = MySQLdb.connect(**connargs)
                File "/usr/lib/python2.7/dist-packages/pymysql/__init__.py", line 88, in Connect
                  return Connection(*args, **kwargs)
                File "/usr/lib/python2.7/dist-packages/pymysql/connections.py", line 679, in __init__
                  self.connect()
                File "/usr/lib/python2.7/dist-packages/pymysql/connections.py", line 891, in connect
                  self._request_authentication()
                File "/usr/lib/python2.7/dist-packages/pymysql/connections.py", line 1097, in _request_authentication
                  auth_packet = self._read_packet()
                File "/usr/lib/python2.7/dist-packages/pymysql/connections.py", line 966, in _read_packet
                  packet.check_error()
                File "/usr/lib/python2.7/dist-packages/pymysql/connections.py", line 394, in check_error
                  err.raise_mysql_exception(self._data)
```

[Truncated]

mysql-python: Not Installed

pycparser: Not Installed

pycrypto: 2.6.1

pygit2: Not Installed

Python: 2.7.12 (default, Jul 1 2016, 15:12:24)

python-gnupg: Not Installed

PyYAML: 3.11

PyZMQ: 15.2.0

RAET: Not Installed

smmap: 0.9.0

timelib: Not Installed

Tornado: 4.2.1

ZMQ: 4.1.4

System Versions:

dist: Ubuntu 16.04 xenial

machine: x86_64

release: 4.4.0-38-generic

system: Linux

version: Ubuntu 16.04 xenial
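The traceback above shows `MySQLdb.connect(**connargs)` executing inside `pymysql/__init__.py`, and the versions report lists `mysql-python: Not Installed`, so the name `MySQLdb` was apparently served by PyMySQL rather than by python-mysqldb. A small diagnostic sketch (not part of Salt) that reports which file each module name would be loaded from; it assumes Python 3, and on the Python 2.7 minion shown here `imp.find_module` would be the equivalent. A runtime alias such as `pymysql.install_as_MySQLdb()` lives only in `sys.modules` and will not show up in this static lookup.

```python
import importlib.util
from typing import Optional


def locate_module(name: str) -> Optional[str]:
    """Return the file a top-level module would be loaded from, or None if it is not importable."""
    spec = importlib.util.find_spec(name)
    return spec.origin if spec is not None else None


if __name__ == "__main__":
    # Compare where the two MySQL client libraries would be loaded from.
    for name in ("MySQLdb", "pymysql"):
        print(name, "->", locate_module(name) or "not importable")
```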

Answers:

username_1: We need to take a look at why the pymysql plugin isn't working for this, but if you do

`apt-get remove python-pymysql`

before running the above command, your states work correctly. |

Chia-Network/chia-blockchain | 875561589 | Title: [help] Debug.log file

Question:

username_0: I am new to Chia. Please advise me how to fix the problems in my debug.log file. Thanks.

|

hsu-feedback/hsu-vertebrate-reptiles | 752199051 | Title: Monthly VertNet data use report for 2019-12, resource hsu-vertebrate-reptiles

Question:

username_0: Your monthly VertNet data use report is ready!

You can see the HTML rendered version of this report at:

http://tools-usagestats.vertnet-portal.appspot.com/reports/1e165f83-b718-428f-802e-62b0839ea54c/201912/

Raw text and JSON-formatted versions of the report are also available for download from this link.

A copy of the text version has also been uploaded to your GitHub repository under the "reports" folder at:

https://github.com/hsu-feedback/hsu-vertebrate-reptiles/tree/master/reports

A full list of all available reports can be accessed from:

http://tools-usagestats.vertnet-portal.appspot.com/reports/1e165f83-b718-428f-802e-62b0839ea54c/

You can find more information on the reporting system, along with an explanation of each metric, at:

http://www.vertnet.org/resources/usagereportingguide.html

Please post any comments or questions to:

http://www.vertnet.org/feedback/contact.html

Thank you for being a part of VertNet. |

SHU-2016-SummerPractice/AlgorithmExerciseIssues | 173120125 | Title: Encoding Topic 2016-8-25

Question:

username_0: [91. Decode Ways](https://leetcode.com/problems/decode-ways/)

[89. Gray Code](https://leetcode.com/problems/gray-code/)

Status: Issue closed

Answers:

username_1: [91. Decode Ways](https://leetcode.com/problems/decode-ways/)

[89. Gray Code](https://leetcode.com/problems/gray-code/)

username_1: ```js
/**
 * [AC] LeetCode 89 Gray Code
 * @param {number} n
 * @return {number[]}
 */
var grayCode = function(n) {
    if (n <= 0) return [0];
    var ans = ['0', '1'], i, j;
    for (i = 1; i < n; i++) {
        // Reflect: append '1' + each existing code in reverse order,
        // then prefix the original codes with '0'.
        for (j = Math.pow(2, i) - 1; j >= 0; j--) {
            ans.push('1' + ans[j]);
            ans[j] = '0' + ans[j];
        }
    }
    return ans.map(function(s) {
        return parseInt(s, 2);
    });
};
```
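The reflect-and-prefix construction above produces the standard reflected binary Gray code, which also has a closed form: the i-th code is `i ^ (i >> 1)`. A short Python sketch for cross-checking the JavaScript output; `gray_code_reflect` mirrors the same reflection idea for comparison.

```python
from typing import List


def gray_code(n: int) -> List[int]:
    """Reflected binary Gray code via the closed form g(i) = i XOR (i >> 1)."""
    if n <= 0:
        return [0]
    return [i ^ (i >> 1) for i in range(1 << n)]


def gray_code_reflect(n: int) -> List[int]:
    """Same sequence built by reflection: mirror the list and set bit i on the mirrored half."""
    res = [0]
    for i in range(n):
        res += [(1 << i) | x for x in reversed(res)]
    return res
```

Consecutive codes differ in exactly one bit, which is the property LeetCode checks.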

username_2: ## 91 - C#

```csharp
public class Solution
{
    public int NumDecodings(string s)
    {
        // A letter never starts with '0', so a leading '0' makes s undecodable.
        if (string.IsNullOrEmpty(s) || s[0] == '0') return 0;

        // dp[i] = number of ways to decode the prefix s[0..i-1]; dp[0] is the empty prefix.
        int[] dp = new int[s.Length + 1];
        dp[0] = 1;
        dp[1] = 1;
        for (int i = 2; i <= s.Length; i++)
        {
            // Last letter is the single digit s[i-1] ('1'..'9').
            if (s[i - 1] != '0') dp[i] += dp[i - 1];
            // Last letter is the two digits s[i-2..i-1] (10..26).
            int two = (s[i - 2] - '0') * 10 + (s[i - 1] - '0');
            if (two >= 10 && two <= 26) dp[i] += dp[i - 2];
        }
        return dp[s.Length];
    }
}
```
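The '0' edge cases in Decode Ways are easy to get wrong, so a slow but obviously correct reference is handy for cross-checking a DP solution on small inputs. Below is a minimal Python sketch (not part of the original thread; the function name is illustrative). It uses memoised recursion over the same rule: one digit 1-9 or two digits 10-26 form a letter.

```python
from functools import lru_cache


def num_decodings_reference(s: str) -> int:
    """Count decodings of s, where 'A'..'Z' map to '1'..'26'."""

    @lru_cache(maxsize=None)
    def ways(i: int) -> int:
        # Consuming the whole string completes one decoding.
        if i == len(s):
            return 1
        # No letter starts with '0'.
        if s[i] == '0':
            return 0
        total = ways(i + 1)  # take one digit (1-9)
        if i + 1 < len(s) and 10 <= int(s[i:i + 2]) <= 26:
            total += ways(i + 2)  # take two digits (10-26)
        return total

    return ways(0) if s else 0
```

For example, `"1101"` has exactly one decoding (1 10 1), and `"2011"` has two (20 1 1 and 20 11).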

username_2: ## 89 - C#

```csharp
using System.Collections.Generic;

public class Solution
{
    public IList<int> GrayCode(int n)
    {
        List<int> res = new List<int>();
        res.Add(0);
        if (n > 0)
            res.Add(1);
        for (int i = 1; i < n; i++)
        {
            // Mirror the current list and set bit i on each mirrored code.
            for (int j = res.Count - 1; j >= 0; j--)
            {
                res.Add((1 << i) + res[j]);
            }
        }
        return res;
    }
}
``` |

Project-OSRM/osrm-backend | 625094763 | Title: Build Dependencies and Run Dependencies

Question:

username_0: This is not a bug. I just want to know which of the tools below are build dependencies and which are runtime dependencies. I plan to remove the tools that are not needed to run OSRM-Backend.

```
sudo apt install build-essential git cmake pkg-config \
    libbz2-dev libxml2-dev libzip-dev libboost-all-dev \
    lua5.2 liblua5.2-dev libtbb-dev
```

Answers:

username_1: this list looks correct i think

username_0: @username_1 Sir, I didn't mean to ask whether the list is correct. I have successfully installed the OSRM backend with the provided list. Now I want to remove the tools on this list that are not needed while running OSRM. Thanks

username_1: I misunderstood you :) To run OSRM you do not need any of those, *but* you need their runtime counterparts:

* libbz2-1.0

* libxml2

* libzip5

* liblua5.2-0

* libtbb2

* libboost-atomic1.71.0

* libboost-chrono1.71.0

* libboost-date-time1.71.0

* libboost-filesystem1.71.0

* libboost-iostreams1.71.0

* libboost-program-options1.71.0

* libboost-regex1.71.0

* libboost-system1.71.0

* libboost-thread1.71.0

Package names are valid for the latest Ubuntu.

Status: Issue closed

|

moebooru/moebooru | 168599860 | Title: Error: Uncaught TypeError: Cannot read property 'text' of null

Question:

username_0: When I try to batch upload something, I get this error in "user_errors.log":

`Error: Uncaught TypeError: Cannot read property 'text' of null

UA: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/51.0.2704.103 Safari/537.36

URL: https://<website>/

Cookies: wp-settings-1=libraryContent=browse; wp-settings-time-1=1468717497; firstVisit=1468723681719; vote=1; hide-news-ticker=20130822; login=username_0; show_defaults_to_edit=1; mode=view; tag-script=; __utma=240815900.91403891.1468985611.1469975383.1469999523.17; __utmz=240815900.1468985611.1.1.utmcsr=(direct)|utmccn=(direct)|utmcmd=(none); forum_post_last_read_at="2016-07-19T05:13:10.602Z"; country=DE; user_id=1; user_info=1;50;0; has_mail=0; comments_updated=0; mod_pending=0; block_reason=; resize_image=0; show_advanced_editing=0; my_tags=; held_post_count=0; reported_error=1

File: https://<website>/assets/application-7b4990ee69ade56f45feb7871fa4226804d28627e47caa1cbc3b5ef081cf950e.js line 6`

Answers:

username_1: Fixed.

Status: Issue closed

username_0: The problem persists with a different error:

Error: Uncaught TypeError: Cannot read property 'value' of undefined

username_1: That'd be a different bug.

username_0: File: https://<website>/assets/moe-legacy/application-3a4cd5a74b2c27bc0eb8607f13f0849e50353e03eccc0ec4ac8acb542c308ab7.js line 11

username_1: That doesn't help. At the very least, I need to know which page causes the error.

username_0: UA: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/51.0.2704.103 Safari/537.36

URL: https://website/batch/create?url=https%3A%2F%2Fimgur.com%2Fa%2Fanjym%2338&commit=Load+file+index

File: https://website/assets/moe-legacy/application-3a4cd5a74b2c27bc0eb8607f13f0849e50353e03eccc0ec4ac8acb542c308ab7.js line 11

username_1: I can't reproduce the error. I can guess what it is but without precise location of the error it'll take a long time for me to fix (and I don't quite have time for that).

Post the full stack trace from Chrome. Or, even better, run in development mode so I can see which file is causing the error.

username_0: Running in dev mode. Where do I find the error now?

username_1: browser console

username_0: the console shows nothing for me

username_1: You need to visit the page again and do the same action.

username_0: my bad. had to tick the "preserve log" box :D

https://i.imgur.com/jo26HuQ.png

username_1: Should be fixed in 6e04283.

username_0: new errors~

https://i.imgur.com/D9DDrsK.png

username_1: Try a full reload first.

username_0: i did. error persists

username_1: Fixed in e2ab9a5.

username_0: next error

https://i.imgur.com/jJgGZHg.png

username_0: Applied your fix. No more error messages, but it's still not working.

"Status:pending"

username_1: As I said, the error is not related to batch upload.

Check `/job_task`, if it's empty, run `bundle exec rake create_jobs`.

Run `bundle exec rake job:start`.

username_1: try `bundle install && gem pristine -a`

username_0: no more errors.

`/job_task` shows only that its started.

Still pending.

username_0: It keeps looping this entry in development.log:

http://pastebin.com/eRNUxSgw |

szabgab/code-maven.com | 174718246 | Title: Episode date format invalid / Invalid feed.

Question:

username_0: Really enjoying the podcast but I would like to subscribe with Pocket Casts so I don't miss an episode.

Answers:

username_1: This is totally the right place though the actual source code of the site is here: https://github.com/username_1/Perl-Maven I'll look into it soon.

username_1: I think I've fixed it. Please check.

username_0: Thanks. I've checked again and it's giving the same error. I had a look at the feed and noticed a couple of things that may need changing to make it work:

pubDate is missing a comma after the day:

`Sat Sep 3 09:19:24 2016 GMT`

should be

`Sat, Sep 3 09:19:24 2016 GMT`

enclosure type is different:

`<enclosure url="http://code-maven.com/media/cmos-3-joel-berger-mojolicious.mp3" length="23645783" type="audio/x-mp3" />`

should be

`<enclosure url="http://code-maven.com/media/cmos-3-joel-berger-mojolicious.mp3" length="23645783" type="audio/mpeg" />`

Pocket Casts is quite picky about feed formatting, it would seem :)
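For reference, RSS 2.0 says `pubDate` follows the RFC 822 date format. In that format the day of the month comes before the month name, as in `Sat, 03 Sep 2016 09:19:24 GMT`. `audio/mpeg` is the standard MIME type for MP3 files. The minimal Python sketch below is not the site's actual feed code, which lives in the Perl-Maven repository. It produces both fields with the standard library; the helper names are illustrative, and the URL and length are taken from the feed excerpt quoted in this thread.

```python
from datetime import datetime, timezone
from email.utils import format_datetime
import xml.etree.ElementTree as ET


def rss_pub_date(dt: datetime) -> str:
    """Format a timezone-aware datetime as an RFC 822 style pubDate."""
    # usegmt=True requires a UTC datetime and emits the literal "GMT" zone.
    return format_datetime(dt.astimezone(timezone.utc), usegmt=True)


def enclosure(url: str, length_bytes: int) -> str:
    """Build an <enclosure> element for an MP3 file."""
    el = ET.Element("enclosure", url=url, length=str(length_bytes), type="audio/mpeg")
    return ET.tostring(el, encoding="unicode")


published = datetime(2016, 9, 3, 9, 19, 24, tzinfo=timezone.utc)
print(rss_pub_date(published))  # Sat, 03 Sep 2016 09:19:24 GMT
print(enclosure("http://code-maven.com/media/cmos-3-joel-berger-mojolicious.mp3", 23645783))
```

The input datetime must be timezone-aware. A naive datetime would be interpreted as local time by `astimezone`.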

username_1: Does this feed work: http://feeds.5by5.tv/changelog ?

username_1: Could you check the CMOS feed again?

Status: Issue closed

username_1: Phew. 😄 |

pingcap/tidb | 1073181484 | Title: report "update partition record fails" error when upgrade from v4.0.16 to v5.2.0

Question:

username_0: ## Bug Report

Please answer these questions before submitting your issue. Thanks!

### 1. Minimal reproduce step (Required)

Upgrade from v4.0.16 to v5.2.0.

After the upgrade, run the stmtflow test ddl/all.jsonnet. The following error appears in the log:

[2021/12/07 17:08:16.565 +08:00] [INFO] [domain.go:129] ["diff load InfoSchema success"] [currentSchemaVersion=4402] [neededSchemaVersion=4403] ["start time"=898.82µs] [phyTblIDs="[]"] [actionTypes="[]"]

[2021/12/07 17:08:16.567 +08:00] [INFO] [schema_validator.go:291] ["the schema validator enqueue, queue is too long"] ["delta max count"=1024] ["remove schema version"=1863]

[2021/12/07 17:08:16.630 +08:00] [INFO] [domain.go:129] ["diff load InfoSchema success"] [currentSchemaVersion=4403] [neededSchemaVersion=4404] ["start time"=602.692µs] [phyTblIDs="[]"] [actionTypes="[]"]

[2021/12/07 17:08:16.886 +08:00] [INFO] [domain.go:129] ["diff load InfoSchema success"] [currentSchemaVersion=4404] [neededSchemaVersion=4405] ["start time"=4.286041ms] [phyTblIDs="[2515,2516,2517]"] [actionTypes="[8,8,8]"]

[2021/12/07 17:08:16.965 +08:00] [ERROR] [partition.go:1218] ["update partition record fails"] [message="new record inserted while old record is not removed"] [error="EncodeRow error: data and columnID count not match 4 vs 3"]

### 2. What did you expect to see? (Required)

no error

### 3. What did you see instead (Required)

### 4. What is your TiDB version? (Required)

v5.2.0

Answers:

username_0: [tidb-5000.tar.gz](https://github.com/pingcap/tidb/files/7667567/tidb-5000.tar.gz)

username_0: data collected by clinic: "https://clinic.pingcap.com:4433/diag/files?uuid=9813e1e4294438a6-116e126a69cc4232-45efdaf37f025cbd"

username_0: This TiDB node was scaled out after the upgrade.

username_1: PTAL @username_4 @username_2

username_0: The following test cases report this error:

[negative.tar.gz](https://github.com/pingcap/tidb/files/7668728/negative.tar.gz)

table_partition__drop_partition_2

table_partition__drop_partition_3

table_partition__truncate_partition_2

table_partition__truncate_partition_3

username_1: @username_0 What is the configuration of the cluster? I noticed that 'amend transaction' is enabled, but I'm not sure whether it is related to this error.

It would be helpful if we could have the cluster configuration as well.

username_2: This is the same issue as https://github.com/pingcap/tidb/issues/28292

It was introduced in 5.1 by the cherry-pick PR https://github.com/pingcap/tidb/pull/21148

It's a critical problem and not easy to fix, see our internal document https://pingcap.feishu.cn/docs/doccnDrJ22mWwkFi9NkUfzwlADc

username_3: dup of https://github.com/pingcap/tidb/issues/28292

Status: Issue closed

username_0: Also exists in v5.3.1.

username_0: --- /tmp/ddl__negative__table_partition__truncate_partition_2.531154131/a 2022-03-22 07:50:37.956115775 +0000

+++ /tmp/ddl__negative__table_partition__truncate_partition_2.531154131/b 2022-03-22 07:50:37.956115775 +0000

@@ -9,7 +9,7 @@

/* txn */ begin;

-- txn >> 0 rows affected

/* txn */ update t set id = 7 where id = 1;

--- txn >> 2 rows affected

+-- txn >> E1105: EncodeRow error: data and columnID count not match 4 vs 3

/* ddl */ alter table t truncate partition p0;

-- ddl >> 0 rows affected

/* txn */ commit; -- E8028

2022/03/22 07:50:37 [ddl/all.jsonnet#ddl/negative/table_partition__truncate_partition_2] failed: event#11 mismatch: txn:return(/* txn */ update t set id = 7 where id = 1;): expect a result, got (E1105: EncodeRow error: data and columnID count not match 4 vs 3)

github.com/zyguan/tidb-test-util/cmd/stmtflow/core.(*matchHistory).Assert

/go/tidb-test-util/cmd/stmtflow/core/test.go:113

github.com/zyguan/tidb-test-util/cmd/stmtflow/core.(*Test).Assert

/go/tidb-test-util/cmd/stmtflow/core/test.go:46

github.com/zyguan/tidb-test-util/cmd/stmtflow/command.testOne

/go/tidb-test-util/cmd/stmtflow/command/test.go:117

github.com/zyguan/tidb-test-util/cmd/stmtflow/command.Test.func1

/go/tidb-test-util/cmd/stmtflow/command/test.go:72

github.com/spf13/cobra.(*Command).execute

/go/pkg/mod/github.com/spf13/[email protected]/command.go:852

github.com/spf13/cobra.(*Command).ExecuteC

/go/pkg/mod/github.com/spf13/[email protected]/command.go:960

github.com/spf13/cobra.(*Command).Execute

/go/pkg/mod/github.com/spf13/[email protected]/command.go:897

main.main

/go/tidb-test-util/cmd/stmtflow/main.go:29

username_0: --- /tmp/ddl__negative__table_partition__truncate_partition_3.699959318/a 2022-03-22 07:50:38.351116125 +0000

+++ /tmp/ddl__negative__table_partition__truncate_partition_3.699959318/b 2022-03-22 07:50:38.351116125 +0000

@@ -9,7 +9,7 @@

/* txn */ begin;

-- txn >> 0 rows affected

/* txn */ update t set id = 2 where id = 5;

--- txn >> 2 rows affected

+-- txn >> E1105: EncodeRow error: data and columnID count not match 4 vs 3

/* ddl */ alter table t truncate partition p0;

-- ddl >> 0 rows affected

/* txn */ commit; -- E8028

2022/03/22 07:50:38 [ddl/all.jsonnet#ddl/negative/table_partition__truncate_partition_3] failed: event#11 mismatch: txn:return(/* txn */ update t set id = 2 where id = 5;): expect a result, got (E1105: EncodeRow error: data and columnID count not match 4 vs 3)

github.com/zyguan/tidb-test-util/cmd/stmtflow/core.(*matchHistory).Assert

/go/tidb-test-util/cmd/stmtflow/core/test.go:113

github.com/zyguan/tidb-test-util/cmd/stmtflow/core.(*Test).Assert

/go/tidb-test-util/cmd/stmtflow/core/test.go:46

github.com/zyguan/tidb-test-util/cmd/stmtflow/command.testOne

/go/tidb-test-util/cmd/stmtflow/command/test.go:117

github.com/zyguan/tidb-test-util/cmd/stmtflow/command.Test.func1

/go/tidb-test-util/cmd/stmtflow/command/test.go:72

github.com/spf13/cobra.(*Command).execute

/go/pkg/mod/github.com/spf13/[email protected]/command.go:852

github.com/spf13/cobra.(*Command).ExecuteC

/go/pkg/mod/github.com/spf13/[email protected]/command.go:960

github.com/spf13/cobra.(*Command).Execute

/go/pkg/mod/github.com/spf13/[email protected]/command.go:897

main.main

/go/tidb-test-util/cmd/stmtflow/main.go:29

runtime.main

username_0: --- /tmp/ddl__negative__table_partition__drop_partition_3.222860356/a 2022-03-22 07:50:34.110112366 +0000

+++ /tmp/ddl__negative__table_partition__drop_partition_3.222860356/b 2022-03-22 07:50:34.110112366 +0000

@@ -9,7 +9,7 @@

/* txn */ begin;

-- txn >> 0 rows affected

/* txn */ update t set id = 2 where id = 5;

--- txn >> 2 rows affected

+-- txn >> E1105: EncodeRow error: data and columnID count not match 4 vs 3

/* ddl */ alter table t drop partition p0;

-- ddl >> 0 rows affected

/* txn */ commit; -- E8028

2022/03/22 07:50:34 [ddl/all.jsonnet#ddl/negative/table_partition__drop_partition_3] failed: event#11 mismatch: txn:return(/* txn */ update t set id = 2 where id = 5;): expect a result, got (E1105: EncodeRow error: data and columnID count not match 4 vs 3)

github.com/zyguan/tidb-test-util/cmd/stmtflow/core.(*matchHistory).Assert

/go/tidb-test-util/cmd/stmtflow/core/test.go:113

github.com/zyguan/tidb-test-util/cmd/stmtflow/core.(*Test).Assert

/go/tidb-test-util/cmd/stmtflow/core/test.go:46

github.com/zyguan/tidb-test-util/cmd/stmtflow/command.testOne

/go/tidb-test-util/cmd/stmtflow/command/test.go:117

github.com/zyguan/tidb-test-util/cmd/stmtflow/command.Test.func1

/go/tidb-test-util/cmd/stmtflow/command/test.go:72

github.com/spf13/cobra.(*Command).execute

/go/pkg/mod/github.com/spf13/[email protected]/command.go:852

github.com/spf13/cobra.(*Command).ExecuteC

/go/pkg/mod/github.com/spf13/[email protected]/command.go:960

github.com/spf13/cobra.(*Command).Execute

/go/pkg/mod/github.com/spf13/[email protected]/command.go:897

main.main

/go/tidb-test-util/cmd/stmtflow/main.go:29

runtime.main

username_0: 2022/03/22 07:50:31 [ddl/all.jsonnet#ddl/negative/table_partition__drop_partition_1] passed

--- /tmp/ddl__negative__table_partition__drop_partition_2.291812921/a 2022-03-22 07:50:32.535110970 +0000

+++ /tmp/ddl__negative__table_partition__drop_partition_2.291812921/b 2022-03-22 07:50:32.535110970 +0000

@@ -9,7 +9,7 @@

/* txn */ begin;

-- txn >> 0 rows affected

/* txn */ update t set id = 7 where id = 1;

--- txn >> 2 rows affected

+-- txn >> E1105: EncodeRow error: data and columnID count not match 4 vs 3

/* ddl */ alter table t drop partition p0;

-- ddl >> 0 rows affected

/* txn */ commit; -- E8028

2022/03/22 07:50:32 [ddl/all.jsonnet#ddl/negative/table_partition__drop_partition_2] failed: event#11 mismatch: txn:return(/* txn */ update t set id = 7 where id = 1;): expect a result, got (E1105: EncodeRow error: data and columnID count not match 4 vs 3)

github.com/zyguan/tidb-test-util/cmd/stmtflow/core.(*matchHistory).Assert

/go/tidb-test-util/cmd/stmtflow/core/test.go:113

github.com/zyguan/tidb-test-util/cmd/stmtflow/core.(*Test).Assert

/go/tidb-test-util/cmd/stmtflow/core/test.go:46

github.com/zyguan/tidb-test-util/cmd/stmtflow/command.testOne

/go/tidb-test-util/cmd/stmtflow/command/test.go:117

github.com/zyguan/tidb-test-util/cmd/stmtflow/command.Test.func1

/go/tidb-test-util/cmd/stmtflow/command/test.go:72

github.com/spf13/cobra.(*Command).execute

/go/pkg/mod/github.com/spf13/[email protected]/command.go:852

github.com/spf13/cobra.(*Command).ExecuteC

/go/pkg/mod/github.com/spf13/[email protected]/command.go:960

github.com/spf13/cobra.(*Command).Execute

/go/pkg/mod/github.com/spf13/[email protected]/command.go:897

main.main

/go/tidb-test-util/cmd/stmtflow/main.go:29

username_0: Reopening because the issue still exists in v6.0.0.

username_4: I'm still analysing this, but I think there are more issues that can trigger it. I moved the equal-length check for row and colIDs into RemoveRecord, which then fails for several other cases, such as TestInsertOnDuplicateKey, where the row read is longer than the number of colIDs. That test just doesn't use binary logging, which is why it has no issues.

username_4: These tests are also using `set @@tidb_enable_amend_pessimistic_txn = 1` which is not compatible with binlog (according to the [docs](https://docs.pingcap.com/tidb/dev/system-variables#tidb_enable_amend_pessimistic_txn-span-classversion-marknew-in-v407span)).

Also there exists bugs with amending pessimistic transactions (not sure if it is related to this): https://github.com/pingcap/tidb/issues/20996.

I have not found an easily reproducible test case. QA can trigger the issue, but it needs to be reduced for further investigation.

So my current conclusion is that binlog has similar issues (data and columnID count not match), see https://github.com/pingcap/tidb/issues/33608.

And `tidb_enable_amend_pessimistic_txn` is not compatible with binlog, so I will stop investigating for now.

@username_1 & @username_0 Should we keep this open or close it as a duplicate of one of the above bugs? If we keep it open, can we lower the severity? |

tensorflow/tensorflow | 192334826 | Title: Tensorboard not showing charts after upgrade to 0.12rc0

Question:

username_0: Tensorboard charts (scalars, distributions, histograms) don't show up after upgrading to 0.12rc0.

No errors are logged on the server.

Check the images below to get a sense of the problem.

Operating System: Ubuntu 15.10

1. pip installation from here:

Ubuntu/Linux 64-bit, CPU only, Python 3.4

$ export TF_BINARY_URL=https://storage.googleapis.com/tensorflow/linux/cpu/tensorflow-0.12.0rc0-cp34-cp34m-linux_x86_64.whl

2. The output from `python -c "import tensorflow; print(tensorflow.__version__)"`.

0.12.0-rc0

Answers:

username_1: @username_0 We'll look into this this week.

Can you check if this reproduces using python2?

username_0: Same thing on python2.

Don't know if this helps or not but here it goes. The images below show the html generated for both cases.

username_0: I was using an older version of Chromium.

Moved to Firefox and no problems there.

Closing the issue.

Status: Issue closed

username_2: How old was the version of Chromium you were using?

username_0: I was using: Version 48.0.2564.116 Ubuntu 15.10 (64-bit) |

admb-project/admb | 672411842 | Title: Provide more information on "debug" releases or on debugging in general?

Question:

username_0: Thanks @username_1 for the ADMB 12.2 release and for providing a larger set of download options at https://github.com/admb-project/admb/releases.

For those of us less familiar with debugging tools, I think it would be helpful to provide users with more information about the debug versions of ADMB (what does the debug version get you other than a much larger executable?). It could be useful to add a sentence to the release description, some text to the QuickStart documents, or links from those places to a more complete description elsewhere.

Answers:

username_1: Can you check if http://www.admb-project.org/downloads/admb-12.2/QuickStartMacOSZip.html is enough info?

username_0: Looks good to me, thank you.

Status: Issue closed

|

PF4Public/gentoo-overlay | 789768934 | Title: www-plugins/chrome-binary-plugins / dependency conflict

Question:

username_0: Welp... well I just received this warning from emerge about a dependency conflict....

```

WARNING: One or more updates/rebuilds have been skipped due to a dependency conflict:

www-plugins/chrome-binary-plugins:stable

(www-plugins/chrome-binary-plugins-88.0.4324.96:stable/stable::gentoo, ebuild scheduled for merge) USE="" ABI_X86="(64)" conflicts with

~www-plugins/chrome-binary-plugins-87.0.4280.141 required by (www-client/ungoogled-chromium-87.0.4280.141_p1:0/0::pf4public, installed)

```

Widevine rearing its ugly head again. Let me know if I can test anything.

Thanks

Answers:

username_1: Are you trying to install it again, or is it just a warning? It seems that they've bumped chrome-binary-plugins with the arrival of the new Chromium version. I suggest waiting until ungoogled-chromium is bumped to version 88 and then checking whether the warning persists. By the look of it, this seems to be harmless.

Status: Issue closed

username_0: No, it just came up during my emerge world update, thanks for the response :) |

saltstack/salt | 152525557 | Title: No JSON object could be decoded: if diff contains latin1 Umlaut

Question:

username_0: ### Description of Issue/Question

```

Traceback (most recent call last):

File "/tmp/.root_483e1e_salt/salt-call", line 15, in <module>

salt_call()

File "/tmp/.root_483e1e_salt/py2/salt/scripts.py", line 339, in salt_call

client.run()

File "/tmp/.root_483e1e_salt/py2/salt/cli/call.py", line 58, in run

caller.run()

File "/tmp/.root_483e1e_salt/py2/salt/cli/caller.py", line 148, in run

self.opts)

File "/tmp/.root_483e1e_salt/py2/salt/output/__init__.py", line 86, in display_output

display_data = try_printout(data, out, opts)

File "/tmp/.root_483e1e_salt/py2/salt/output/__init__.py", line 39, in try_printout

return get_printout(out, opts)(data).rstrip()

File "/tmp/.root_483e1e_salt/py2/salt/output/json_out.py", line 57, in output

return json.dumps(data, default=repr, indent=4)

File "/usr/lib64/python2.7/json/__init__.py", line 238, in dumps

**kw).encode(obj)

File "/usr/lib64/python2.7/json/encoder.py", line 203, in encode

chunks = list(chunks)

File "/usr/lib64/python2.7/json/encoder.py", line 428, in _iterencode

for chunk in _iterencode_dict(o, _current_indent_level):

File "/usr/lib64/python2.7/json/encoder.py", line 402, in _iterencode_dict

for chunk in chunks:

File "/usr/lib64/python2.7/json/encoder.py", line 402, in _iterencode_dict

for chunk in chunks:

File "/usr/lib64/python2.7/json/encoder.py", line 402, in _iterencode_dict

for chunk in chunks:

File "/usr/lib64/python2.7/json/encoder.py", line 402, in _iterencode_dict

for chunk in chunks:

File "/usr/lib64/python2.7/json/encoder.py", line 384, in _iterencode_dict

yield _encoder(value)

UnicodeDecodeError: 'utf8' codec can't decode byte 0xfc in position 477: invalid start byte

[ERROR ] No JSON object could be decoded

```

### Setup

monitoring.sls (snippet)

```
monitoring__bashrc:
  file.managed:
    - name: {{ pillar['monitoring']['home'] }}/.bashrc
    - source: salt://monitoring/files/.bashrc
```

### Steps to Reproduce Issue

```

salt-ssh w123 state.sls monitoring test=True

```

Since I use `test=True` the problem is reproducible. Otherwise it would disappear after the first call.

### Versions Report

current develop branch af7593ae53b3f7902cad2e14aee0850dc57c2966

### UnicodeError

The old .bashrc contained a latin1 Umlaut. I guess this is the reason.
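Byte `0xfc` is `ü` in Latin-1 (ISO-8859-1), but it is never a valid start byte in UTF-8. That matches the `UnicodeDecodeError` in the traceback. The minimal Python 3 sketch below shows the general idea of decoding file content to text before it reaches the JSON outputter. It assumes a Latin-1 fallback is acceptable, and it is not Salt's own `sdecode` helper; the function name is illustrative.

```python
import json


def to_text(raw: bytes) -> str:
    """Decode bytes as UTF-8, falling back to Latin-1 for legacy files."""
    try:
        return raw.decode("utf-8")
    except UnicodeDecodeError:
        # Latin-1 maps every byte 0x00-0xff to a code point, so this never fails.
        return raw.decode("latin-1")


legacy_line = b"# Gr\xfc\xdfe\n"  # "Grüße" saved as Latin-1
print(json.dumps({"diff": to_text(legacy_line)}))
```

The fallback always succeeds. However, it silently mis-maps bytes written in other legacy encodings, such as cp1252 characters in the 0x80-0x9f range.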

### repr(data) attached

I attached the repr(data) which I created by modifying json_out.py on the remote host.

Answers:

username_0: [data.txt](https://github.com/saltstack/salt/files/244834/data.txt)

username_1: @username_0, thanks for reporting. This should probably be [`sdecode`d](https://github.com/saltstack/salt/blob/v2016.3.0rc2/salt/utils/locales.py#L35) at the `source` parameter in file.managed. |

Code4HR/hrt-bus-api | 83725758 | Title: Alert maintainers when fleet management feed goes down.

Question:

username_0: Companion issue to https://github.com/Code4HR/hrt-bus-finder/issues/145

When the feed is stale (let's say 30+ minutes), it would be helpful if the API could email the maintainers of the project at <EMAIL>

Answers:

username_1: Need to revisit this and automate it, so that users aren't the ones reporting when the feed goes down.

Actionable tasks:

1. Should load (ftp://172.16.58.3/Anrd/hrtrtf.txt)

2. If the data is stale (no changes reported within 5 minutes), notify maintainers.

3. If data is absent (just the headers) notify maintainers

4. If times out, notify maintainers.

This can be accomplished with some sort of cron job, but simple uptime notifications aren't useful.

This task can also fire off an email to <EMAIL>.

username_2: After notification of failure, how long should we wait before we check again / send another notification if its still down?

username_1: Notifications: probably every hour or two. Checking can be as responsive as we want; the feed refreshes every ~5 minutes, so checking every 5 minutes is fine, but we could check every minute.

I figure we could easily stick this in a cron job.
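The checks above can be sketched as pure decision logic for a cron job to call every few minutes. This is a minimal Python sketch, not the code in the hrtStatus repository mentioned later. All names are hypothetical. The 5-minute staleness threshold comes from the task list, and the 2-hour re-notification interval is one choice from the "hour or two" suggested above. Fetching the FTP feed and sending the email are left out.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

STALE_AFTER = timedelta(minutes=5)   # feed normally refreshes about every 5 minutes
RENOTIFY_AFTER = timedelta(hours=2)  # don't email more often than this


@dataclass
class FeedCheck:
    timed_out: bool                  # the fetch did not complete
    data_rows: int                   # rows after the header line(s)
    last_change: Optional[datetime]  # newest timestamp seen in the data


def problem(check: FeedCheck, now: datetime) -> Optional[str]:
    """Describe what is wrong with the feed, or return None if it looks healthy."""
    if check.timed_out:
        return "feed request timed out"
    if check.data_rows == 0:
        return "feed contains only headers"
    if check.last_change is None or now - check.last_change > STALE_AFTER:
        return "feed is stale"
    return None


def should_notify(issue: Optional[str], last_notified: Optional[datetime], now: datetime) -> bool:
    # Notify on a new problem, then at most once per RENOTIFY_AFTER while it persists.
    if issue is None:
        return False
    return last_notified is None or now - last_notified >= RENOTIFY_AFTER
```

Each cron run is a separate process, so `last_notified` has to be persisted between runs (for example in a small state file). Keeping the decision logic free of I/O makes it easy to test.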

username_1: @username_2 wrote something for this, but I don't remember where it's committed. Do you have a copy? I want to mark this done and close it :)

username_2: Hey sorry I completely forgot to reply to this last week, here it is: https://github.com/username_2/hrtStatus |

JoeMayo/LinqToTwitter | 119388809 | Title: Document Exception

Question:

username_0: API calls contain validations on input parameters that throw exceptions. The documentation doesn't cover these cases. The closest thing that exists is to declare whether a parameter is required or not. It would be nice to have a table to show these exceptions.

The Wiki is open for modification, so any help in this area is welcome.

Answers:

username_1: Hello, I ran the test demo to send a message, but got the error "The remote server returned an error: (403) Forbidden". Can you help me?

Status: Issue closed

|

watson-developer-cloud/java-sdk | 227183264 | Title: [Speech-to-text] Websocket remains open after inputstream is closed

Question:

username_0: Hi all,

When I close my input pipe, the WebSocket keeps streaming and eventually times out after 30 seconds of inactivity, throwing the following exception:

SEVERE: Session timed out, no data received in the last 30 seconds.

java.lang.RuntimeException: Session timed out, no data received in the last 30 seconds.

at com.ibm.watson.developer_cloud.speech_to_text.v1.websocket.WebSocketManager$SpeechToTextWebSocketListener.onMessage(WebSocketManager.java:140)

at okhttp3.internal.ws.RealWebSocket.onReadMessage(RealWebSocket.java:307)

at okhttp3.internal.ws.WebSocketReader.readMessageFrame(WebSocketReader.java:222)

at okhttp3.internal.ws.WebSocketReader.processNextFrame(WebSocketReader.java:101)

at okhttp3.internal.ws.RealWebSocket.loopReader(RealWebSocket.java:262)

at okhttp3.internal.ws.RealWebSocket$2.onResponse(RealWebSocket.java:201)

at okhttp3.RealCall$AsyncCall.execute(RealCall.java:141)

at okhttp3.internal.NamedRunnable.run(NamedRunnable.java:32)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

My question is: is there a way to close the WebSocket manually?

Using the latest version:

<dependency>

<groupId>com.ibm.watson.developer_cloud</groupId>

<artifactId>java-sdk</artifactId>

<version>3.8.0</version>

</dependency>

Answers:

username_1: I'm working on this @username_0.

username_0: Thanks @username_1. Looking forward to seeing your solution.

Status: Issue closed

username_1: @username_0 Once I merge #691 it will be in sonatype snapshots.

Check the readme for instructions on how to use the snapshot repository. It would be great if you could validate that this issue is fixed. |

waywardgeek/sonic | 647174533 | Title: Build failure on Mac

Question:

username_0: Undefined symbols for architecture x86_64:

"_fftw_destroy_plan", referenced from:

_sonicAddPitchPeriodToSpectrogram in spectrogram.o

"_fftw_execute", referenced from:

_sonicAddPitchPeriodToSpectrogram in spectrogram.o

"_fftw_plan_dft_r2c_1d", referenced from:

_sonicAddPitchPeriodToSpectrogram in spectrogram.o

ld: symbol(s) not found for architecture x86_64

I had to run make with USE_SPECTROGRAM=0 to get it to build on Mac, but I don't know what functionality this will impact.

Answers:

username_1: Are you on the latest version of sonic? Are you possibly on the [espeak fork](https://github.com/espeak-ng/sonic)?

There was a [fix in 2018](https://github.com/username_2/sonic/commit/0501f109f3eec42120cd209153e58aabdbed8842) in this repo to link in libfftw3. The espeak repo fork is out of date and doesn't have that fix.

The build works for me after that fix. Before the fix, you can see that it failed on

```

gcc -Wall -Wno-unused-function -O3 -ansi -fPIC -pthread -DSONIC_SPECTROGRAM -shared -Wl,-install_name,libsonic.so.0 sonic.o spectrogram.o -o libsonic.so.0.3.0

```

username_2: Hi, Kevin. Sorry I missed your email from June! The spectrogram functionality should be disabled. I'll update the Makefile to set it this way. Espeak does not use the spectrogram feature. I only include this feature since I believe we may be able to significantly improve speech recognition using more efficient and higher quality signal analysis on the front-end.

Also, Espeak should be enhanced to include the heart of the sonic algorithm for high-speed speech (> 2.0 times speedup) directly in the vocoder. I believe most commercial TTS engines have already done this. This improves the speech quality, since sonic introduces about -40 dB of noise. It also reduces the CPU load, which is due to sonic trying to find the best fundamental pitch estimate for every pitch epoch. Espeak's vocoder knows the pitch already.

Bill

username_1: Hey Bill @username_2, thanks for the response and the insight on Espeak! Not a big deal, but minor correction - you actually missed Daniel's message from June. I, Kevin, only commented on this issue a few days ago.

So if Espeak-ng doesn't use the spectrogram feature, will you be disabling the spectrogram in this upstream or in the Espeak-ng fork? Do you have maintainer access to the Espeak-ng forked repo?

username_1: Oh, never mind. I see you've made a [commit](https://github.com/username_2/sonic/commit/4a052d9774387a9d9b4af627f6a74e1694419960) to this repo. I guess somebody needs to fast-forward the Espeak-ng fork to be in sync with this upstream repo. |

GetStream/react-activity-feed | 539791164 | Title: Override LoadingSpinner in StreamApp component

Question:

username_0: As far as I can tell, there is no way to override the Loading Spinner component in the StreamApp component, so it is difficult to customize the loading state of the stream app. The css is also written inline for the LoadingSpinner component, so it can't be targeted and removed via css directly.

Answers:

username_1: Hi @username_0, I have just released version `0.9.25`, which includes a `LoadingIndicator` prop on `FlatFeed` that allows you to override the loading indicator component. I also removed the inline styles, so you can update them as well.

username_0: amazing! thank you!

Status: Issue closed

|

appirio-tech/topcoder-app | 206121465 | Title: Inactive submission button at the Challenge Specs page, when submission phase is late.

Question:

username_0: When submission phase is late (i.e. we are past the deadline for submission, but the submission phase has been re-opened by admin for some very special reason, to allow somebody to contribute past the deadline), the `Submit` button at the Challenge Specs page remains inactive. Submission through the OR page is available though.

Answers:

username_1: @username_0 Is there any test challenge currently active with the above conditions?

username_0: @username_1 Nope. I tried to quickly create one in the remote dev environment, but failed :( I believe, though, that this might be a simple fix we can make without a test. Most probably, the button condition checks that the challenge phase is Submission and that the time until the end of the phase is positive, so we only need to remove the second condition.

I'll look more into creating the test challenge tomorrow.

username_1: @username_0 The current logic simply checks whether `submissionEndDate` is positive and whether the user has registered for the challenge.

It would be better to find out what's causing the issue first and then fix it.
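To make the two conditions discussed above concrete, here is a minimal Python sketch. The `Challenge` fields and function names are hypothetical and do not come from the topcoder-app code base; the sketch only contrasts a deadline-based check (button enabled while the submission end date is still in the future) with a phase-based check (button enabled while the submission phase is open, even when it is late).

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Challenge:
    # Hypothetical fields; the real topcoder-app challenge model differs.
    submission_phase_open: bool
    submission_end_date: datetime
    user_registered: bool


def can_submit_current(ch, now):
    """Deadline-based check: disabled as soon as the end date has passed,
    even if an admin has re-opened the phase."""
    return ch.user_registered and (ch.submission_end_date - now).total_seconds() > 0


def can_submit_proposed(ch):
    """Phase-based check: a late but re-opened submission phase still allows
    submitting, matching what Online Review permits."""
    return ch.user_registered and ch.submission_phase_open


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    late = Challenge(True, now - timedelta(days=1), True)
    print(can_submit_current(late, now))  # False
    print(can_submit_proposed(late))      # True
```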

username_0: @ajefts Can you help me a bit here? I don't know how to make a mock billing account in `www.topcoder-dev.com/direct`, and without a billing account, the development version of Direct does not allow activating challenges.

username_0: @username_1

FYI: I believe that by using https://github.com/appirio-tech/tc-common-tutorials, especially the `docker/direct-app` folder there, you can set up locally everything necessary to test challenge-phase-related functionality. I have not tried it this time, but I believe I used it in a challenge a while ago, and it worked like a charm (once you make it through the setup :)

username_0: ### Update

Test challenge set up at the dev site: https://www.topcoder-dev.com/challenge-details/30050681/?type=develop&noncache=true. The submission phase is manually set to open, but it is late (the phase end date is already in the past).

The `dan_developer` test user is registered for the challenge as a competitor. He can upload a submission using Online Review, but not from the challenge details page, because the button is inactive.

Status: Issue closed

|

dotnet/machinelearning-samples | 473236127 | Title: Unable to install VSIX Installer extension

Question:

username_0: Problem encountered on https://dotnet.microsoft.com/learn/machinelearning-ai/ml-dotnet-get-started-tutorial/install

Operating System: windows

```
26/07/2019 01:46:53 PM - Microsoft VSIX Installer
26/07/2019 01:46:53 PM - -------------------------------------------
26/07/2019 01:46:53 PM - vsixinstaller.exe version:
26/07/2019 01:46:53 PM - 15.9.3041
26/07/2019 01:46:53 PM - -------------------------------------------
26/07/2019 01:46:53 PM - Command line parameters:
26/07/2019 01:46:53 PM - C:\Program Files (x86)\Microsoft Visual Studio\Installer\resources\app\ServiceHub\Services\Microsoft.VisualStudio.Setup.Service\VSIXInstaller.exe,C:\Users\z003v57f\Downloads\MLNET_Model_Builder.vsix
26/07/2019 01:46:53 PM - -------------------------------------------
26/07/2019 01:46:53 PM - Microsoft VSIX Installer
26/07/2019 01:46:53 PM - -------------------------------------------
26/07/2019 01:46:54 PM - Initializing Install...
26/07/2019 01:46:54 PM - Extension Details...
26/07/2019 01:46:54 PM - Identifier : FE96D051-645F-4309-AE99-107A776B0DA2
26/07/2019 01:46:54 PM - Name : ML.NET Model Builder (Preview)
26/07/2019 01:46:54 PM - Author : Microsoft
26/07/2019 01:46:54 PM - Version : 16.0.1907.1703
26/07/2019 01:46:54 PM - Description : Simple UI tool to build custom machine learning models.
26/07/2019 01:46:54 PM - Locale : en-US
26/07/2019 01:46:54 PM - MoreInfoURL :
26/07/2019 01:46:54 PM - InstalledByMSI : False
26/07/2019 01:46:54 PM - SupportedFrameworkVersionRange : [4.5,)
26/07/2019 01:46:54 PM -
26/07/2019 01:46:58 PM - SignatureState : ValidSignature
26/07/2019 01:46:58 PM - SignedBy : Microsoft Corporation
26/07/2019 01:46:58 PM - Certificate Info :
26/07/2019 01:46:58 PM - -------------------------------------------------------
26/07/2019 01:46:58 PM - [Subject] : CN=Microsoft Corporation, OU=OPC, O=Microsoft Corporation, L=Redmond, S=Washington, C=US
26/07/2019 01:46:58 PM - [Issuer] : CN=Microsoft Code Signing PCA 2010, O=Microsoft Corporation, L=Redmond, S=Washington, C=US
26/07/2019 01:46:58 PM - [Serial Number] : 330000026ECE6AE5984BFC96A900000000026E
26/07/2019 01:46:58 PM - [Not Before] : 07/09/2018 02:30:30 AM
26/07/2019 01:46:58 PM - [Not After] : 07/09/2019 02:30:30 AM
26/07/2019 01:46:58 PM - [Thumbprint] : 99B6246883B4B32EA59AE18B36945D205A876800
26/07/2019 01:46:58 PM -
26/07/2019 01:46:58 PM - Supported Products :
26/07/2019 01:46:58 PM - Microsoft.VisualStudio.Community
26/07/2019 01:46:58 PM - Version : [15.0.28307.665,17.0)
26/07/2019 01:46:58 PM - Microsoft.VisualStudio.Enterprise
26/07/2019 01:46:58 PM - Version : [15.0.28307.665,17.0)
26/07/2019 01:46:58 PM - Microsoft.VisualStudio.Pro
26/07/2019 01:46:58 PM - Version : [15.0.28307.665,17.0)
26/07/2019 01:46:58 PM -
26/07/2019 01:46:58 PM - References :
26/07/2019 01:46:58 PM - Prerequisites :
26/07/2019 01:46:58 PM - -------------------------------------------------------
26/07/2019 01:46:58 PM - Identifier : Microsoft.VisualStudio.Component.CoreEditor
26/07/2019 01:46:58 PM - Name : Visual Studio core editor
26/07/2019 01:46:58 PM - Version : [15.0,17.0)
26/07/2019 01:46:58 PM -
26/07/2019 01:46:58 PM - -------------------------------------------------------
26/07/2019 01:46:58 PM - Identifier : Microsoft.VisualStudio.Workload.NetCoreTools
26/07/2019 01:46:58 PM - Name : .NET Core cross-platform development
26/07/2019 01:46:58 PM - Version : [15.0,17.0)
26/07/2019 01:46:58 PM -
26/07/2019 01:46:58 PM - -------------------------------------------------------
26/07/2019 01:46:58 PM - Identifier : Microsoft.NetCore.ComponentGroup.DevelopmentTools.2.1
26/07/2019 01:46:58 PM - Name : .NET Core 2.1 development tools
26/07/2019 01:46:58 PM - Version : [15.0,17.0)
26/07/2019 01:46:58 PM -
26/07/2019 01:46:58 PM - Signature Details...
26/07/2019 01:46:58 PM - Extension is signed with a valid signature.
26/07/2019 01:46:58 PM -
26/07/2019 01:46:58 PM - Searching for applicable products...
26/07/2019 01:46:58 PM - Found installed product - Microsoft Visual Studio Premium 2012
26/07/2019 01:46:58 PM - Found installed product - Microsoft Visual Studio Professional 2012
26/07/2019 01:46:58 PM - Found installed product - Microsoft Visual Studio 2012 Shell (Integrated)
26/07/2019 01:46:58 PM - Found installed product - Global Location
26/07/2019 01:46:58 PM - Found installed product - Visual Studio Enterprise 2017
26/07/2019 01:46:58 PM - VSIXInstaller.NoApplicableSKUsException: This extension is not installable on any currently installed products.
   at VSIXInstaller.ExtensionService.GetInstallableData(String vsixPath, String extensionPackParentName, Boolean isRepairSupported, IStateData stateData, IEnumerable`1& skuData)
   at VSIXInstaller.ExtensionPackService.IsExtensionPack(IStateData stateData, Boolean isRepairSupported)
   at VSIXInstaller.ExtensionPackService.ExpandExtensionPackToInstall(IStateData stateData, Boolean isRepairSupported)
   at VSIXInstaller.App.Initialize(Boolean isRepairSupported)
   at VSIXInstaller.App.Initialize()
   at System.Threading.Tasks.Task`1.InnerInvoke()
   at System.Threading.Tasks.Task.Execute()
--- End of stack trace from previous location where exception was thrown ---
   at Microsoft.VisualStudio.Telemetry.WindowsErrorReporting.WatsonReport.GetClrWatsonExceptionInfo(Exception exceptionObject)
```
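The `Version` entries under "Supported Products" and "Prerequisites" in this log use interval notation: in `[15.0.28307.665,17.0)` the square bracket marks an inclusive lower bound and the parenthesis an exclusive upper bound, while `[4.5,)` has no upper bound. The Python sketch below shows how to read such a range. It assumes dotted numeric versions compared component by component, with missing trailing components treated as zero; it is not VSIXInstaller's own code, which also checks product SKUs and prerequisites, so the log alone does not show which check failed here.

```python
import re

# '[' or '(' , optional lower bound, ',', optional upper bound, ']' or ')'
_INTERVAL = re.compile(r"\s*([\[(])\s*([\d.]*)\s*,\s*([\d.]*)\s*([\])])\s*")


def _parse(version):
    """'15.0.28307.665' -> (15, 0, 28307, 665)."""
    return tuple(int(part) for part in version.split("."))


def _compare(a, b):
    """Return -1, 0 or 1, padding the shorter version with zeros."""
    n = max(len(a), len(b))
    a, b = a + (0,) * (n - len(a)), b + (0,) * (n - len(b))
    return (a > b) - (a < b)


def in_range(version, interval):
    """True if `version` lies in `interval`, e.g. '[15.0.28307.665,17.0)'.
    '[' and ']' are inclusive, '(' and ')' exclusive; an empty bound is unbounded."""
    m = _INTERVAL.fullmatch(interval)
    if m is None:
        raise ValueError(f"not a version interval: {interval!r}")
    open_br, lower, upper, close_br = m.groups()
    v = _parse(version)
    if lower:
        c = _compare(v, _parse(lower))
        if c < 0 or (c == 0 and open_br == "("):
            return False
    if upper:
        c = _compare(v, _parse(upper))
        if c > 0 or (c == 0 and close_br == ")"):
            return False
    return True


if __name__ == "__main__":
    rng = "[15.0.28307.665,17.0)"
    print(in_range("16.2.29123.88", rng))  # True
    print(in_range("17.0", rng))           # False: upper bound is exclusive
    print(in_range("15.0.26228.4", rng))   # False: below the lower bound
    print(in_range("4.7.2", "[4.5,)"))     # True: no upper bound
```

The version strings in the usage lines are illustrative inputs only, not values taken from the reporter's machine.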

Answers:

username_1: Hi @username_0, it appears your issue relates to Model Builder rather than the ML.NET samples. In that case, your issue would be better suited to the Model Builder repo: https://github.com/dotnet/machinelearning-modelbuilder/issues. Please let me know if you are having any issues with the samples/samples repo itself! |

jackofsporks/blockit | 302513976 | Title: Shouldn't there ALWAYS be something there?!?! At least in this particular case where we're in an element. But now we're abstracted!!!!

Question:

username_0:

```js
if (!matchGuaranteed && !match)
```

----

*opened via [imdone.io](https://imdone.io) from a code comment on [20ae47f7](https://github.com/username_0/blockit/commit/20ae47f7) by username_0*

----

https://github.com/username_0/blockit/blob/70af5f49ac3561692612ecc5d71cae8977c5e35d/lib/control/Locator.js#L391-L397

Answers:

username_0: QUESTION Shouldn't there ALWAYS be something there?!?! At least in this particular case where we're in an element. But now we're abstracted!!!! id:62 gh:68 ic:gh

----

https://github.com/username_0/blockit/blob/70af5f49ac3561692612ecc5d71cae8977c5e35d/lib/control/Locator.js#L391-L397

username_0: QUESTION Shouldn't there ALWAYS be something there?!?! At least in this particular case where we're in an element. But now we're abstracted!!!! id:62 gh:68 ic:gh

----

https://github.com/username_0/blockit/blob/11745c0692aa22c71879cbf9f303cf52e27f3ba7/lib/control/Locator.js#L392-L398

username_0: QUESTION Shouldn't there ALWAYS be something there?!?! At least in this particular case where we're in an element. But now we're abstracted!!!! id:62 gh:68 ic:gh

----