| repo_name | issue_id | text |
|---|---|---|

jonathan-laurent/AlphaZero.jl | 970880804 | Title: Performance Docs

Question:

username_0: You should really update the docs and example launch commands to explain which settings give the best performance.

Specifically:

- using `-t <V_cpus>`
- adjusting the batch size to maximize GPU usage

Answers:

username_1: This is a good suggestion.

I think using `-t <V_cpus>` is not necessary with Julia 1.6+, as it uses as many threads as there are CPUs available by default.

But you are right, I could say something about modifying the batch size.

By the way, if you find something missing in the doc, don't hesitate to have a go at adding it yourself and submit a PR!

username_0: OK, once I wrap my head around this and manage to fully train a model, I will :)

Status: Issue closed

|

SharePoint/sp-dev-docs | 604457697 | Title: Are shared documents Lists?

Question:

username_0: ## Category

- [x] Question

- [ ] Typo

- [ ] Additional article idea

## Question

The doc https://docs.microsoft.com/en-us/sharepoint/dev/apis/webhooks/overview-sharepoint-webhooks states that webhooks can only be applied to lists. However, we currently upload our data to the site's root Documents library (in a folder within Shared Documents). When I search for all lists in the site through Graph Explorer, Shared Documents shows up as one of them. So are these shared documents a list as well, and can I apply webhooks to these items?

Answers:

username_1: Hello @username_0

In SharePoint, the term "list" covers both document libraries and lists (e.g. custom lists). The documentation says that webhooks are only enabled for SharePoint list items, which does not exclude document libraries. Since Shared Documents is a document library, you can also apply webhooks to its items.

I hope this clarifies your question.

Regards,

Jarbas
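As an illustration of the answer above, subscribing to a document library should look the same as subscribing to any other list. The sketch below only builds the request for the SharePoint REST endpoint `POST {site}/_api/web/lists('{list-id}')/subscriptions` and sends nothing. The site URL, list GUID and notification URL are hypothetical placeholders, and the 180-day cap reflects our reading of the webhook documentation, so treat it as an assumption.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, Optional, Tuple

# Assumed maximum subscription lifetime for SharePoint list webhooks.
MAX_DAYS = 180


def build_subscription_request(
    site_url: str,
    list_id: str,
    notification_url: str,
    days: int = 90,
    client_state: Optional[str] = None,
    now: Optional[datetime] = None,
) -> Tuple[str, Dict[str, str]]:
    """Return (POST URL, JSON body) for a webhook on a list or document library."""
    if not 1 <= days <= MAX_DAYS:
        raise ValueError(f"days must be between 1 and {MAX_DAYS}")
    now = now or datetime.now(timezone.utc)
    # A document library such as Shared Documents is addressed by its list GUID.
    list_endpoint = f"{site_url.rstrip('/')}/_api/web/lists('{list_id}')"
    body = {
        "resource": list_endpoint,
        "notificationUrl": notification_url,
        "expirationDateTime": (now + timedelta(days=days)).isoformat(),
    }
    if client_state:
        body["clientState"] = client_state  # optional value echoed back in notifications
    return f"{list_endpoint}/subscriptions", body
```

The list GUID for Shared Documents can be read from the same Graph Explorer listing of the site's lists mentioned in the question.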

Status: Issue closed

|

bazelbuild/tulsi | 735871524 | Title: Ease of Install: Brew Cask

Question:

username_0: Tulsi/Bazel folks, thanks for releasing a great tool to the world!

I was wondering if you'd consider making a brew cask for Tulsi. That'd make install and update even smoother.

Thanks,

Chris

Answers:

username_0: Self-edit: maybe even better would be to just use Bazel as the package manager and load Tulsi from the WORKSPACE, like XCHammer intends to?

username_0: After some more thought, perhaps importing through WORKSPACE and generating/running via a rule would be equally easy but more in the spirit of bazel?

username_1: You can add it to your WORKSPACE and run it with Bazel.

```shell
bazel run @build_bazel_tulsi//:tulsi -- -- [flags]
```

username_0: @username_1's suggestion is totally the way to go. Thank you @username_1!

As an example for anyone else reading: just add [rules_apple](https://github.com/bazelbuild/rules_apple) to your WORKSPACE, followed by an http_archive for Tulsi. At the time of writing:

```
# http_archive has to be loaded before it can be used in a WORKSPACE file.
load("@bazel_tools//tools/build_defs/repo:http.bzl", "http_archive")

http_archive(
    name = "build_bazel_tulsi",
    # Grabbing master for this example, so it always points to the latest. Do *not* add a sha256, or you'll break the autoupdate!
    urls = ["https://github.com/bazelbuild/tulsi/archive/master.zip"],
    strip_prefix = "tulsi-master",
)

# You may well need/want to bump this version. Check https://github.com/bazelbuild/tulsi/blob/master/WORKSPACE
http_archive(
    name = "build_bazel_rules_apple",
    sha256 = "734813e44eb5a2fcba5ffd45de9fe5d05325420a5aa1f6c97a3d88fe2c525b17",
    url = "https://github.com/bazelbuild/rules_apple/releases/download/0.21.1/rules_apple.0.21.1.tar.gz",
)

load("@build_bazel_rules_apple//apple:repositories.bzl", "apple_rules_dependencies")

apple_rules_dependencies()

load("@build_bazel_rules_swift//swift:repositories.bzl", "swift_rules_dependencies")

swift_rules_dependencies()  # Needed for Tulsi. Seems to also pull in protobuf

load("@build_bazel_apple_support//lib:repositories.bzl", "apple_support_dependencies")

apple_support_dependencies()
```

I still think it would be great if this were a more official way to install, perhaps with a Starlark function to check for dependencies, rules_apple-style? |

tensorflow/tensorflow | 463271691 | Title: TFlite conversion of Conv1D with dilation !=1

Question:

username_0: **System information**

- Have I written custom code (as opposed to using a stock example script provided in TensorFlow): Yes

- OS Platform and Distribution (e.g., Linux Ubuntu 16.04): Google Colab

- TensorFlow installed from (source or binary): binary

- TensorFlow version (use command below): tensorflow==2.0.0-beta1

- Python version: python3

**Describe the current behavior**

After converting a Conv1D op to TensorFlow Lite, the interpreter cannot allocate tensors:

```
tensorflow/lite/kernels/space_to_batch_nd.cc:96 NumDimensions(op_context.input) != kInputDimensionNum (3 != 4)
Node number 0 (SPACE_TO_BATCH_ND) failed to prepare.
```

**Describe the expected behavior**

Tflite model should be able to load and execute.

**Code to reproduce the issue**

```
!pip install -q tensorflow==2.0.0-beta1

import tensorflow as tf
from tensorflow.keras.layers import Conv1D, Dense, GlobalMaxPooling1D
from tensorflow.keras.models import Model


def get_model():
    inputs = tf.keras.Input(shape=(10, 40))
    # No error when dilation_rate == 1
    x = Conv1D(32, 3, dilation_rate=2, padding="same", use_bias=False)(inputs)
    x = GlobalMaxPooling1D()(x)
    outputs = Dense(2)(x)
    return Model(inputs=[inputs], outputs=[outputs])


model = get_model()
converter = tf.lite.TFLiteConverter.from_keras_model(model)
tflite_model = converter.convert()

with open("./trained_model.tflite", "wb") as f:
    f.write(tflite_model)

interpreter = tf.lite.Interpreter(model_path="./trained_model.tflite")
interpreter.allocate_tensors()  # fails with the SPACE_TO_BATCH_ND error above
```

**Other info / logs**

The problem does not occur when `dilation_rate == 1`.
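For readers unfamiliar with `dilation_rate`: the error message shows that the converted graph contains a SPACE_TO_BATCH_ND node that received a 3-D input where the kernel expects 4-D, which is how the dilated case gets lowered. The pure-Python sketch below (single channel, no bias, stride 1, an illustration rather than TensorFlow's implementation) shows what a dilated 1-D convolution with `'same'` padding computes, with the padding split the way TensorFlow splits it (extra padding on the right).

```python
from typing import List, Sequence


def dilated_conv1d_same(x: Sequence[float], w: Sequence[float], dilation: int = 1) -> List[float]:
    """Single-channel 1-D cross-correlation, stride 1, 'same' padding."""
    if dilation < 1:
        raise ValueError("dilation must be >= 1")
    k = len(w)
    # A dilated kernel of size k spans (k - 1) * dilation + 1 input positions.
    span = (k - 1) * dilation + 1
    total_pad = span - 1
    pad_left = total_pad // 2
    # Zero-pad so the output has the same length as the input.
    padded = [0.0] * pad_left + list(x) + [0.0] * (total_pad - pad_left)
    # Each output taps k inputs that are `dilation` positions apart.
    return [sum(w[j] * padded[i + j * dilation] for j in range(k)) for i in range(len(x))]


print(dilated_conv1d_same([1, 2, 3, 4, 5], [1, 1, 1], dilation=2))
```

With `dilation=2` the 3-tap kernel covers 5 input positions, so each output sums every other element, e.g. the middle output is `1 + 3 + 5`.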

Answers:

username_1: This issue is solved with PRs #28410, #27867 & #28179. Thanks!

Status: Issue closed

username_2: Closing the issue as it has been resolved


username_3: I was able to reproduce the issue with [TF v2.1](https://colab.research.google.com/gist/username_3/5216cdcb0582cc62e6a6518ea5334e2e/2-1-template.ipynb) and [TF-nightly](https://colab.research.google.com/gist/username_3/14ab31228c0693ab78b78ed73f0145d0/tf-nightly.ipynb#scrollTo=ieAW-NK5iqpf) (v2.2.0-dev20200327). Please find the attached gists. Thanks!

username_4: @username_5 can you take a look?

username_5: @username_3 Can you share the sample code?

The example in the original issue works when I try it.

Thanks

username_3: @username_5,

Sure, below are the links to the gists for:

- TF v2.1

https://colab.research.google.com/gist/username_3/5216cdcb0582cc62e6a6518ea5334e2e/2-1-template.ipynb

- TF-nightly

https://colab.research.google.com/gist/username_3/14ab31228c0693ab78b78ed73f0145d0/tf-nightly.ipynb#scrollTo=ieAW-NK5iqpf

username_6: @username_5 Hi, what tensorflow version were you using?

username_5: Thanks @username_3, I can reproduce it on 2.1, but it works with nightly. Can you please retry?

@username_6 I was using tf-nightly.

username_6: I can confirm that this works with `2.2.0-dev20200414`.

username_3: @username_5,

It works without any issues with the latest TF-nightly (v2.2.0-dev20200415). Please find the gist [here](https://colab.research.google.com/gist/username_3/67ba5ecd35433f7e61e5102f8d74611a/30315-tf-nightly.ipynb#scrollTo=ieAW-NK5iqpf). Thanks!

username_5: Thanks for confirming. I am closing the issue. Please feel free to reopen/create a new one if you have any problems.

Thanks

Status: Issue closed

username_7: Issue still exists in TF 2.2 stable. |

idris-lang/Idris-dev | 224571604 | Title: Resource aware types?

Question:

username_0: I'm wondering whether it would be possible to have resource-aware types, similar to RAML (Resource Aware ML)?

Answers:

username_1: Could you give a brief synopsis of what this means / what raml is?

username_0: The raml website explains it a bit better: http://www.raml.co


username_2: @username_0 Edwin made a [Resource DSL](https://edwinb.wordpress.com/2011/09/16/resource-safe-systems-programming-with-embedded-domain-specific-languages/). See also the [Uniqueness Types](http://docs.idris-lang.org/en/latest/reference/uniqueness-types.html) and Linear Types extensions.

Note: Could you kindly use the mailing list or IRC next time for questions, instead of the issue tracker?

Status: Issue closed

|

DaveGamble/cJSON | 315763793 | Title: cJSON is more wasteful of memory

Question:

username_0: `char *valuestring;`, `int valueint;` and `double valuedouble;`: all of the above could be replaced by a single `void *value`.

If `void *` were used, support for uint64 would be better.

Answers:

username_0: cJSON.h

```c
/*
  Copyright (c) 2009-2017 <NAME> and cJSON contributors

  Permission is hereby granted, free of charge, to any person obtaining a copy
  of this software and associated documentation files (the "Software"), to deal
  in the Software without restriction, including without limitation the rights
  to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
  copies of the Software, and to permit persons to whom the Software is
  furnished to do so, subject to the following conditions:

  The above copyright notice and this permission notice shall be included in
  all copies or substantial portions of the Software.

  THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
  IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
  FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
  AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
  LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
  OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN
  THE SOFTWARE.
*/

#ifndef cJSON__h
#define cJSON__h

#ifdef __cplusplus
extern "C"
{
#endif

/* project version */
#define CJSON_VERSION_MAJOR 1
#define CJSON_VERSION_MINOR 7
#define CJSON_VERSION_PATCH 5

#include <stddef.h>

/* cJSON Types: */
#define cJSON_Invalid (0)
#define cJSON_False  (1 << 0)
#define cJSON_True   (1 << 1)
#define cJSON_NULL   (1 << 2)
#define cJSON_INT64  (1 << 3)
#define cJSON_UINT64 (1 << 4)
#define cJSON_DOUBLE (1 << 5)
#define cJSON_String (1 << 6)
#define cJSON_Array  (1 << 7)
#define cJSON_Object (1 << 8)
#define cJSON_Raw    (1 << 9) /* raw json */

#define cJSON_IsReference (1 << 30)
#define cJSON_StringIsConst (1 << 31)

/* The cJSON structure: */
typedef struct cJSON
{
    /* next/prev allow you to walk array/object chains. Alternatively, use GetArraySize/GetArrayItem/GetObjectItem */
    struct cJSON *next;
    struct cJSON *prev;
[Truncated]
    {
        return 0;
    }

    double value = 0;
    value = *((double *)item->value);
    return value;
}

CJSON_PUBLIC(void *) cJSON_malloc(size_t size)
{
    return global_hooks.allocate(size);
}

CJSON_PUBLIC(void) cJSON_free(void *object)
{
    global_hooks.deallocate(object);
}
```

username_1: If only there were a way to concisely show the differences between two versions of a file...

username_2: @username_0 I'm not entirely sure what you are trying to say.

I know that the `cJSON` struct is quite wasteful, this is why I have plans on how to handle that in the future, see https://github.com/DaveGamble/cJSON/issues/63#issuecomment-364743833.

That change would reduce the size to 32-48 bytes on x86_64 and even down to 20 bytes on 16-bit architectures (if I calculated that correctly). **And** it would make it easy to support different types for representing numbers.

And the idea to use a union is not new, see #16.
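To make the size argument concrete, the sketch below uses Python's `ctypes` to lay out the three value members from the question both side by side (as in the quoted struct) and as a union, on whatever platform it runs on. The union is a hypothetical layout for illustration only, not the design in the linked issue comment.

```python
import ctypes

# The three value members quoted in the question.
VALUE_FIELDS = [
    ("valuestring", ctypes.c_char_p),
    ("valueint", ctypes.c_int),
    ("valuedouble", ctypes.c_double),
]


class SeparateFields(ctypes.Structure):
    """Members stored side by side: each one occupies its own storage."""
    _fields_ = VALUE_FIELDS


class ValueUnion(ctypes.Union):
    """Hypothetical union: members overlap, since only one is live at a time."""
    _fields_ = VALUE_FIELDS


def report() -> dict:
    return {
        "separate": ctypes.sizeof(SeparateFields),
        "union": ctypes.sizeof(ValueUnion),
        "void_pointer": ctypes.sizeof(ctypes.c_void_p),
    }


print(report())
```

A single `void *value` is as small as the union inside the struct, but the number it points to still needs storage somewhere, typically an extra heap allocation per value. A union keeps the value inline without that allocation.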

Status: Issue closed

|

akamensky/argparse | 1095590339 | Title: Question about returned parameters (not a bug report)

Question:

username_0: Congratulations on porting the argparse concept from Python. argparse is one of the Python packages I have used heavily, so this was a welcome find. If I find any bugs or have suggestions for enhancements, I'll write up a separate issue.

1. Since `Parser.X` returns something, why return a pointer to that something? Why not simply return the value itself? (Admittedly, argparse is consistent about this, which is always a good thing.)

2. When will argparse be part of the standard library, i.e. importable with `import argparse` without the GitHub reference?

Answers:

username_1: Probably never, as I am not one of the Google developers who decide what goes into the standard library. It is probably better to ask them.
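Question 1 was not answered in the thread. A likely reason, and this is an assumption, is the declare-then-parse design that Go's standard `flag` package also uses (`flag.String` returns a `*string`). When an option is declared, no arguments have been parsed yet, so the parser can only hand back a reference that `Parse` fills in later. The Python sketch below mimics that pattern; `Ref` and `TinyParser` are hypothetical names, not argparse's API.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Ref:
    """Stand-in for a Go pointer: a mutable cell that is filled in later."""
    value: Optional[str] = None


class TinyParser:
    """Hypothetical minimal parser illustrating declare-then-parse."""

    def __init__(self) -> None:
        self._options: Dict[str, Ref] = {}

    def string(self, long_name: str) -> Ref:
        # Nothing has been parsed yet, so return a reference that parse() populates.
        ref = Ref()
        self._options["--" + long_name] = ref
        return ref

    def parse(self, args: List[str]) -> None:
        it = iter(args)
        for arg in it:
            if arg in self._options:
                value = next(it, None)
                if value is None:
                    raise ValueError(f"{arg} expects a value")
                self._options[arg].value = value


parser = TinyParser()
name = parser.string("name")   # declared before parsing: value is still None
parser.parse(["--name", "gopher"])
print(name.value)
```

Returning the value itself from `parser.string(...)` could not work here, because the value only exists after `parse` runs.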

Status: Issue closed

|

composer/composer | 57969097 | Title: HTTP Header

Question:

username_0: Please add the ability to set HTTP headers for individual repositories. Thanks!

Answers:

username_1: What do you mean by http header exactly?

username_0: When adding an individual repository, there should be an option to set the headers sent when curling its packages.json file. This is useful if you want an authenticated, user-based packages.json file (e.g. using an API token).

If you could define header fields for the repository (e.g. API-TOKEN), then you could generate a user-based packages.json file. Moreover, if the repository is served over HTTPS, the header fields would be encrypted too. That would be very useful!

username_1: You can configure that per-domain using the http-basic config https://getcomposer.org/doc/04-schema.md#config for http basic authentication.

username_0: Right, but there is no option to set other header fields

username_1: I think you can actually configure it in the repository using stream options, as in https://getcomposer.org/doc/articles/handling-private-packages-with-satis.md#security, but providing `http` > `header` instead of `ssh2`. I haven't tried it, but it should work unless we do something dumb later on to prevent it (which could well be the case...).

username_0: Do you have an example / does it work? Tried things like:

```json
{
    "type": "composer",
    "url": "http://ex.com/",
    "options": {
        "headers": {
            "API": "asd"
        }
    }
}
```

OR

```json
{
    "type": "composer",
    "url": "http://ex.com/",
    "options": {
        "http": {
            "headers": {
                "API": "asd"
            }
        }
    }
}
```

I tried each variant with both "headers" and "header", and it didn't work :(

username_0: OK, found it :D It already works! But please put that in your documentation! :)

```json
{
    "type": "composer",
    "url": "http://ex.com/",
    "options": {
        "http": {
            "header": [
                "API-TOKEN: asd"
            ]
        }
    }
}
```
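The working variant nests a list of raw `"Name: value"` lines under `options.http.header`. To our understanding this mirrors PHP's `http` stream-context option `header`, which Composer passes the repository `options` through to, but that pass-through is an assumption here. The sketch below generates such a repository entry from a plain mapping. `composer_repository` and `to_json` are hypothetical helpers, not part of Composer.

```python
import json
from typing import Dict, List


def composer_repository(url: str, headers: Dict[str, str]) -> dict:
    """Build a Composer repository entry that sends extra HTTP headers."""
    lines: List[str] = []
    for name, value in headers.items():
        # Reject CR/LF so a value cannot smuggle in additional header lines.
        if any(c in name + value for c in "\r\n"):
            raise ValueError(f"invalid characters in header {name!r}")
        lines.append(f"{name}: {value}")
    return {"type": "composer", "url": url, "options": {"http": {"header": lines}}}


def to_json(repo: dict) -> str:
    return json.dumps(repo, indent=4)


print(to_json(composer_repository("https://ex.com/", {"API-TOKEN": "asd"})))
```

Since the header carries a token, an `https://` repository URL is preferable to `http://`, as noted earlier in the thread.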

Status: Issue closed

|

lovell/sharp | 485099525 | Title: Error: read ECONNRESET

Question:

username_0: info sharp Downloading https://github.com/username_1/sharp-libvips/releases/download/v8.8.1/libvips-8.8.1-win32-x64.tar.gz

D:\NodeBB-master\node_modules\sharp\install\libvips.js:82

throw err;

^

Error: read ECONNRESET

at TLSWrap.onStreamRead (internal/stream_base_commons.js:183:27) {

errno: 'ECONNRESET',

code: 'ECONNRESET',

syscall: 'read'

}

D:\NodeBB-master\node_modules\sharp>if not defined npm_config_node_gyp (node "C:\Program Files\nodejs\node_modules\npm\node_modules\npm-lifecycle\node-gyp-bin\\..\..\node_modules\node-gyp\bin\node-gyp.js" rebuild ) else (node "C:\Program Files\nodejs\node_modules\npm\node_modules\node-gyp\bin\node-gyp.js" rebuild )

Building the projects in this solution one at a time. To enable parallel build, please add the "/m" switch.

VError.cpp

VInterpolate.cpp

VImage.cpp

win_delay_load_hook.cc

LINK : fatal error LNK1104: cannot open file '..\vendor\lib\libvips.lib' [D:\NodeBB-master\node_modules\sharp\build\lib

vips-cpp.vcxproj]

gyp ERR! build error

gyp ERR! stack Error: `C:\Program Files (x86)\MSBuild\14.0\bin\msbuild.exe` failed with exit code: 1

gyp ERR! stack at ChildProcess.onExit (C:\Program Files\nodejs\node_modules\npm\node_modules\node-gyp\lib\build.js:262:23)

gyp ERR! stack at ChildProcess.emit (events.js:200:13)

gyp ERR! stack at Process.ChildProcess._handle.onexit (internal/child_process.js:272:12)

gyp ERR! System Windows_NT 10.0.14393

gyp ERR! command "C:\\Program Files\\nodejs\\node.exe" "C:\\Program Files\\nodejs\\node_modules\\npm\\node_modules\\node-gyp\\bin\\node-gyp.js" "rebuild"

gyp ERR! cwd D:\NodeBB-master\node_modules\sharp

gyp ERR! node -v v12.5.0

gyp ERR! node-gyp -v v3.8.0

gyp ERR! not ok

npm WARN [email protected] requires a peer of eslint@^4.19.1 || ^5.3.0 but none is installed. You must install peer dependencies yourself.

npm WARN [email protected] requires a peer of nodebb-plugin-emoji@^2.0.0 but none is installed. You must install peer dependencies yourself.

npm WARN [email protected] requires a peer of textcomplete@^0.14.2 but none is installed. You must install peer dependencies yourself.

npm ERR! code ELIFECYCLE

npm ERR! errno 1

npm ERR! [email protected] install: `(node install/libvips && node install/dll-copy && prebuild-install) || (node-gyp rebuild && node install/dll-copy)`

npm ERR! Exit status 1

npm ERR!

npm ERR! Failed at the [email protected] install script.

npm ERR! This is probably not a problem with npm. There is likely additional logging output above.

npm ERR! A complete log of this run can be found in:

npm ERR! C:\Users\chen29\AppData\Roaming\npm-cache\_logs\2019-08-26T07_37_06_046Z-debug.log

D:\NodeBB-master>npm install sharp -> installlog.txt

npm ERR! code E404

npm ERR! 404 Not Found - GET https://registry.npmjs.org/- - Not found

npm ERR! 404

npm ERR! 404 '-@latest' is not in the npm registry.

npm ERR! 404 You should bug the author to publish it (or use the name yourself!)

npm ERR! 404

npm ERR! 404 Note that you can also install from a

npm ERR! 404 tarball, folder, http url, or git url.

npm ERR! A complete log of this run can be found in:

npm ERR! C:\Users\chen29\AppData\Roaming\npm-cache\_logs\2019-08-26T07_45_32_153Z-debug.log

Answers:

username_1: `ECONNRESET` is due to a (temporary? firewall?) network problem connecting to https://github.com/.
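If the reset is indeed temporary, retrying the download with exponential backoff is the usual remedy. The sketch below is a generic illustration, not part of sharp's installer. `fetch` stands for any hypothetical download callable, and `sleep` is injectable so the behaviour can be checked without waiting. A persistent failure (for example, a TLS problem on one machine) will still surface after the last attempt.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(
    fetch: Callable[[], T],
    attempts: int = 4,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fetch(); on ConnectionResetError wait base_delay * 2**i and retry."""
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    for i in range(attempts):
        try:
            return fetch()
        except ConnectionResetError:
            if i == attempts - 1:
                raise  # out of attempts: let the caller see the real error
            sleep(base_delay * 2 ** i)
    raise AssertionError("unreachable")
```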

username_0: https://github.com/ is accessible in the web browser, and the firewall is turned off.

How can sharp be installed if sharp and libvips have already been downloaded?

username_0: Yes. It's related to a network problem connecting to https://github.com/ from the Windows command line.

I tested the following command on two different computers. It works on one, but not on the server running NodeBB:

```
D:\11>git clone https://github.com/username_1/sharp
Cloning into 'sharp'...
fatal: unable to access 'https://github.com/username_1/sharp/': Unknown SSL protocol error in connection to github.com:443
```

username_1: Glad you worked it out.

Status: Issue closed

|

magma/magma | 1077131900 | Title: Inconsistent Node version

Question:

username_0: ### Your Environment

- **Version:** Magma v1.6.1

- **Affected Component:** NMS

- **Affected Subcomponent:** all

- **Deployment Environment:** all

### Describe the Issue

There are significant differences between Node versions specified in different places. This likely causes downstream bugs. For example, dependent packages may target one of the referenced versions but not the other.

**To Reproduce**

/magma/nms/packages/magmalte/Dockerfile:

line 1: `FROM node:12.18-alpine as builder`

line 20: `FROM node:10-alpine`

/magma/.github/workflows/nms-workflow.yml

line 99: `node-version: 16`
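A consistency check like the one requested could be automated. The sketch below extracts the Node major version from `FROM node:…` lines in a Dockerfile and from `node-version:` in a GitHub Actions workflow, then reports whether they agree. The function names are hypothetical; the regexes are assumptions that cover only the formats quoted above. Checking `engines` in package.json would need a semver-range parser, which is left out.

```python
import re
from typing import Dict, List

# Assumed formats, matching the lines quoted in the issue.
_DOCKER_FROM = re.compile(r"^\s*FROM\s+node:(\d+)", re.IGNORECASE)
_WORKFLOW = re.compile(r"node-version:\s*['\"]?(\d+)")


def node_majors(dockerfile: str, workflow: str) -> Dict[str, List[int]]:
    """Collect Node major versions mentioned in each file."""
    found: Dict[str, List[int]] = {"dockerfile": [], "workflow": []}
    for line in dockerfile.splitlines():
        m = _DOCKER_FROM.match(line)
        if m:
            found["dockerfile"].append(int(m.group(1)))
    for line in workflow.splitlines():
        m = _WORKFLOW.search(line)
        if m:
            found["workflow"].append(int(m.group(1)))
    return found


def is_consistent(found: Dict[str, List[int]]) -> bool:
    """True when every file that mentions Node uses the same major version."""
    versions = {v for vs in found.values() for v in vs}
    return len(versions) <= 1
```

Run against the three lines above, this sketch finds 12 and 10 in the Dockerfile and 16 in the workflow, so `is_consistent` returns `False`.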

**Expected behavior**

1. The same version of Node should be used in the build and runtime environment

2. The target version of Node should be used by developers when running tests, for example by using nvm

3. The target version of Node should be made known in developer documentation

4. Packages with version dependencies should match the target version of Node

5. The `engines` field in package.json should match the target version. That might include:

- ./nms/package.json

- ./nms/packages/magmalte/package.json

- ./orc8r/cloud/package.json |

OneHalf3544/Japan-crossword | 335178082 | Title: Scale the nonogram image to the window size

Question:

username_0: **Is your feature request related to a problem? Please describe.**

When I choose a big nonogram, it doesn't fit on the screen, and I have to scroll the window content up and down, which is very annoying.

**Describe the solution you'd like**

Automatically scale the content to fit the main window.

Also, we could add an option to hide the metadata parts of a nonogram, which would save a lot of screen space. |
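The scaling rule can be sketched as follows, assuming the board is a grid of square cells and that the available area (the window minus any clue or metadata panels) is known in pixels. `fit_cell_size` and its clamp limits are hypothetical choices for illustration, not part of the project.

```python
def fit_cell_size(cols: int, rows: int, avail_w: int, avail_h: int,
                  min_cell: int = 4, max_cell: int = 32) -> int:
    """Largest integer cell size (px) so a cols x rows grid fits, clamped to limits."""
    if cols <= 0 or rows <= 0:
        raise ValueError("grid must be non-empty")
    # The tighter of the two dimensions decides the cell size.
    size = min(avail_w // cols, avail_h // rows)
    # Clamp: below min_cell the grid no longer fits and scrolling is still needed.
    return max(min_cell, min(max_cell, size))
```

Recomputing this on every window resize keeps large puzzles on screen. Hiding the metadata panels would simply enlarge `avail_w` and `avail_h`.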

Delgan/loguru | 939138194 | Title: not able to show module name

Question:

username_0: ```python

from loguru import logger

logger.info('hello world')

```

It only shows the `<module>` placeholder:

```
2021-07-08 02:07:15.378 | INFO | __main__:<module>:3 - hello world
```

Answers:

username_1: The default format uses `{name}`, which is replaced with the `__name__` variable at runtime. Usually `__name__` is the module name, but that's not the case for your entry file, where it is `__main__` ([see the official Python documentation](https://docs.python.org/3/library/__main__.html)).

The `<module>` you're seeing is meant to be the name of the function from which the log method is called. As your `logger.info()` call is not inside any function, it is said to be in the module itself.

See the documentation about the available `format` fields; maybe you want to use `{file.name}` instead: [The record dict](https://loguru.readthedocs.io/en/stable/api/logger.html#record).
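Both values can be reproduced with the standard library alone. The sketch below runs a snippet as if it were a top-level script and reports the frame's `__name__` global and its code-object name. To our understanding these are roughly the values loguru reads from the caller's frame for `{name}` and `{function}`, but this is not loguru's code, and `what_logger_sees` is a hypothetical helper.

```python
import inspect
from typing import Tuple


def what_logger_sees(source: str, module_name: str = "__main__") -> Tuple[str, str]:
    """Run `source` as a module named `module_name`; it must set `seen`."""
    namespace = {"__name__": module_name, "inspect": inspect}
    exec(compile(source, f"{module_name}.py", "exec"), namespace)
    return namespace["seen"]


# Call site at module level, like `logger.info(...)` in the question.
TOP_LEVEL = (
    "frame = inspect.currentframe()\n"
    "seen = (frame.f_globals['__name__'], frame.f_code.co_name)\n"
)

# The same call site moved inside a function.
IN_FUNCTION = (
    "def greet():\n"
    "    frame = inspect.currentframe()\n"
    "    return (frame.f_globals['__name__'], frame.f_code.co_name)\n"
    "seen = greet()\n"
)

print(what_logger_sees(TOP_LEVEL))
print(what_logger_sees(IN_FUNCTION, "app"))
```

The top-level case yields `__main__` and `<module>`, matching the log line in the question. Moving the call into a function and importing it from another module yields that module's name and the function name instead.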

Status: Issue closed

|

julianhyde/sqlline | 400902797 | Title: Unable to see instruction to quit or read more when run !manual through sqlline CLI

Question:

username_0: I observed this problem while running the `!manual` command from the sqlline CLI.

According to the code at https://github.com/julianhyde/sqlline/commit/f3263e95c4c981515edc02a730d472d8d6d043cd#diff-4151e9ed27b575a9e975057af55c5378R1736, it should display the message `[ Hit "enter" for more ("q" to exit) ]`, but it does not appear in the terminal.

Answers:

username_1: Indeed, it does not. This code is only used on Windows. On Linux/macOS, jline3's `less` implementation is used instead, which is much better.

Unfortunately, `less` does not work well on Windows, which is why the old code is still used there; see, e.g., these issues: https://github.com/jline/jline3/issues/344, https://github.com/jline/jline3/issues/304

username_1: By the way, here is a comment in the code related to this topic:

https://github.com/julianhyde/sqlline/blob/dc969a28f009b18a9dfb1026f96cbec0450338da/src/main/java/sqlline/Commands.java#L1704-L1709
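For reference, the Windows fallback behaviour described above (print a page, show the prompt, continue on Enter, stop on `q`) can be sketched as a simple pager. This is a hypothetical Python illustration, not sqlline's Java code. Input and output are injected as callables so the logic can be tested without a terminal.

```python
from typing import Callable, Iterable

PROMPT = '[ Hit "enter" for more ("q" to exit) ]'


def page(lines: Iterable[str], page_size: int,
         read_reply: Callable[[], str], write: Callable[[str], None]) -> int:
    """Write lines page by page; return how many lines were shown."""
    shown = 0
    for i, line in enumerate(lines):
        # Before starting every page after the first, ask whether to continue.
        if i > 0 and i % page_size == 0:
            write(PROMPT)
            if read_reply().strip().lower() == "q":
                break
        write(line)
        shown += 1
    return shown
```

In an interactive session one would pass `input` and `print`.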

username_0: Thanks! How do I test my changes in https://github.com/julianhyde/sqlline/pull/263 with a CLI command that uses the same function call?

username_1: Could you please explain a bit more what you are going to test that is not covered by the tests from the mentioned issue?

username_0: I want to check that things work as expected when I run a DROP/DELETE command from SQLLine with the confirm flag set to true (#263).

username_0: Also, is this fix in the roadmap?

username_1: I think a way could be added to specify whether symbols should be printed after a key is pressed when a confirmation step is required.

If that matches your expectations, I would suggest renaming the issue. |

ikedaosushi/tech-news | 761856367 | Title: Simpler DAG definitions in Airflow 2.0: Introducing the TaskFlow API | Dentsu Digital Tech Blog | note

Question:

username_0: Simpler DAG definitions in Airflow 2.0! Introducing the TaskFlow API | Dentsu Digital Tech Blog | note

https://ift.tt/33Yvpe1 |

izhangzhihao/intellij-rainbow-brackets | 868645245 | Title: [Auto Generated Report]java.lang.Throwable: Stub index points to a file without PSI: file = jrt:///usr/lib/jvm/jdk-11.0.10!/jdk.scripting.nashorn/jdk/nashorn/internal/objects/NativeJSON$Constructor.class, file type = com.intellij.ide.highlighter.JavaClassFileType@64767844, indexed file type = com.intellij.ide.highlighter.JavaClassFileType@64767844, used scope = com.intellij.psi.impl.search.JavaSourceFilterScope[Module-with-dependencies:bigc-admin-business compile-only:true include-libraries:true include-other-modules:true include-tests

Question:

username_0: - Plugin Name:

- Plugin Version: 6.17

- OS Name: Linux

- OS Version: 5.8.0-50-generic

- Java Version: 11.0.10

- App Name: IDEA

- App Full Name: IntelliJ IDEA

- Is Snapshot: false

- App Build: IU-211.6693.111

- StackTrace:

```
java.lang.Throwable: Stub index points to a file without PSI: file = jrt:///usr/lib/jvm/jdk-11.0.10!/jdk.scripting.nashorn/jdk/nashorn/internal/objects/NativeJSON$Constructor.class, file type = com.intellij.ide.highlighter.JavaClassFileType@64767844, indexed file type = com.intellij.ide.highlighter.JavaClassFileType@64767844, used scope = com.intellij.psi.impl.search.JavaSourceFilterScope[Module-with-dependencies:bigc-admin-business compile-only:true include-libraries:true include-other-modules:true include-tests:true]
    at com.intellij.openapi.diagnostic.Logger.error(Logger.java:161)
    at com.intellij.psi.stubs.StubProcessingHelperBase.processStubsInFile(StubProcessingHelperBase.java:52)
    at com.intellij.psi.stubs.StubIndexImpl.lambda$processElements$2(StubIndexImpl.java:284)
    at com.intellij.psi.stubs.StubIndexImpl.processElements(StubIndexImpl.java:330)
    at com.intellij.psi.impl.PsiShortNamesCacheImpl.processFieldsWithName(PsiShortNamesCacheImpl.java:185)
    at com.intellij.psi.impl.CompositeShortNamesCache.processFieldsWithName(CompositeShortNamesCache.java:228)
    at com.intellij.codeInsight.daemon.impl.quickfix.StaticImportConstantFix.getMembersToImport(StaticImportConstantFix.java:73)
    at com.intellij.codeInsight.daemon.impl.quickfix.StaticImportMemberFix.<init>(StaticImportMemberFix.java:52)
    at com.intellij.codeInsight.daemon.impl.quickfix.StaticImportConstantFix.<init>(StaticImportConstantFix.java:35)
    at com.intellij.codeInsight.daemon.impl.quickfix.DefaultQuickFixProvider.registerFixes(DefaultQuickFixProvider.java:40)
    at com.intellij.codeInsight.daemon.impl.quickfix.DefaultQuickFixProvider.registerFixes(DefaultQuickFixProvider.java:24)
    at com.intellij.codeInsight.quickfix.UnresolvedReferenceQuickFixProvider.registerReferenceFixes(UnresolvedReferenceQuickFixProvider.java:27)
    at com.intellij.codeInsight.daemon.impl.analysis.HighlightMethodUtil.checkAmbiguousMethodCallIdentifier(HighlightMethodUtil.java:800)
    at com.intellij.codeInsight.daemon.impl.analysis.HighlightVisitorImpl.visitReferenceExpression(HighlightVisitorImpl.java:1410)
    at com.intellij.psi.impl.source.tree.java.PsiReferenceExpressionImpl.accept(PsiReferenceExpressionImpl.java:778)
    at com.intellij.codeInsight.daemon.impl.analysis.HighlightVisitorImpl.visit(HighlightVisitorImpl.java:189)
    at com.intellij.codeInsight.daemon.impl.GeneralHighlightingPass.runVisitors(GeneralHighlightingPass.java:335)
    at com.intellij.codeInsight.daemon.impl.GeneralHighlightingPass.lambda$collectHighlights$5(GeneralHighlightingPass.java:268)
    at com.intellij.codeInsight.daemon.impl.GeneralHighlightingPass.analyzeByVisitors(GeneralHighlightingPass.java:294)
    at com.intellij.codeInsight.daemon.impl.GeneralHighlightingPass.lambda$analyzeByVisitors$6(GeneralHighlightingPass.java:297)
    at com.github.izhangzhihao.rainbow.brackets.visitor.RainbowHighlightVisitor.analyze(RainbowHighlightVisitor.kt:35)
    at com.intellij.codeInsight.daemon.impl.GeneralHighlightingPass.analyzeByVisitors(GeneralHighlightingPass.java:297)
    at com.intellij.codeInsight.daemon.impl.GeneralHighlightingPass.lambda$analyzeByVisitors$6(GeneralHighlightingPass.java:297)
    at com.intellij.codeInsight.daemon.impl.analysis.HighlightVisitorImpl.lambda$analyze$1(HighlightVisitorImpl.java:214)
    at com.intellij.codeInsight.daemon.impl.analysis.RefCountHolder.analyze(RefCountHolder.java:369)
    at com.intellij.codeInsight.daemon.impl.analysis.HighlightVisitorImpl.analyze(HighlightVisitorImpl.java:213)
    at com.intellij.codeInsight.daemon.impl.GeneralHighlightingPass.analyzeByVisitors(GeneralHighlightingPass.java:297)
    at com.intellij.codeInsight.daemon.impl.GeneralHighlightingPass.lambda$analyzeByVisitors$6(GeneralHighlightingPass.java:297)
    at com.intellij.codeInsight.daemon.impl.DefaultHighlightVisitor.analyze(DefaultHighlightVisitor.java:96)
    at com.intellij.codeInsight.daemon.impl.GeneralHighlightingPass.analyzeByVisitors(GeneralHighlightingPass.java:297)
    at com.intellij.codeInsight.daemon.impl.GeneralHighlightingPass.collectHighlights(GeneralHighlightingPass.java:265)
    at com.intellij.codeInsight.daemon.impl.GeneralHighlightingPass.collectInformationWithProgress(GeneralHighlightingPass.java:211)
    at com.intellij.codeInsight.daemon.impl.ProgressableTextEditorHighlightingPass.doCollectInformation(ProgressableTextEditorHighlightingPass.java:84)
    at com.intellij.codeHighlighting.TextEditorHighlightingPass.collectInformation(TextEditorHighlightingPass.java:56)
    at com.intellij.codeInsight.daemon.impl.PassExecutorService$ScheduledPass.lambda$doRun$1(PassExecutorService.java:400)
    at com.intellij.openapi.application.impl.ApplicationImpl.tryRunReadAction(ApplicationImpl.java:1096)
    at com.intellij.codeInsight.daemon.impl.PassExecutorService$ScheduledPass.lambda$doRun$2(PassExecutorService.java:393)
    at com.intellij.openapi.progress.impl.CoreProgressManager.registerIndicatorAndRun(CoreProgressManager.java:688)
    at com.intellij.openapi.progress.impl.CoreProgressManager.executeProcessUnderProgress(CoreProgressManager.java:634)
    at com.intellij.openapi.progress.impl.ProgressManagerImpl.executeProcessUnderProgress(ProgressManagerImpl.java:64)
    at com.intellij.codeInsight.daemon.impl.PassExecutorService$ScheduledPass.doRun(PassExecutorService.java:392)
    at com.intellij.codeInsight.daemon.impl.PassExecutorService$ScheduledPass.lambda$run$0(PassExecutorService.java:368)
    at com.intellij.openapi.application.impl.ReadMostlyRWLock.executeByImpatientReader(ReadMostlyRWLock.java:167)
    at com.intellij.openapi.application.impl.ApplicationImpl.executeByImpatientReader(ApplicationImpl.java:178)
    at com.intellij.codeInsight.daemon.impl.PassExecutorService$ScheduledPass.run(PassExecutorService.java:366)
    at com.intellij.concurrency.JobLauncherImpl$VoidForkJoinTask$1.exec(JobLauncherImpl.java:188)
    at java.base/java.util.concurrent.ForkJoinTask.doExec(ForkJoinTask.java:290)
    at java.base/java.util.concurrent.ForkJoinPool$WorkQueue.topLevelExec(ForkJoinPool.java:1020)
    at java.base/java.util.concurrent.ForkJoinPool.scan(ForkJoinPool.java:1656)
    at java.base/java.util.concurrent.ForkJoinPool.runWorker(ForkJoinPool.java:1594)
    at java.base/java.util.concurrent.ForkJoinWorkerThread.run(ForkJoinWorkerThread.java:183)
``` |

TooBug/wemark | 377138608 | Title: Question: after merely adding a usingComponents declaration to the JSON config file, the whole page is broken?

Question:

username_0: I only added a usingComponents declaration to the JSON config file:

```
"usingComponents": {
  "wemark": "utils/wemark/wemark"
},
```

After that, the whole page shows no data at all. I haven't even added the wemark component to the page yet. What is going on?

Answers:

username_1: Please check the detailed error message; otherwise there is no way to troubleshoot this.

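username_0: Thanks for the reply, but it probably isn't caused by your component. I tried other components, and as soon as I declare usingComponents, the whole page outputs nothing at all. Very strange; I wonder whether it's a problem with the Mini Program platform itself.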

Status: Issue closed

|

phansible/phansible | 167637070 | Title: Getting errors on vagrant up

Question:

username_0: Hi,

I'm trying to run a box, and after `vagrant up` I get the following errors:

```
....
==> default: stdin: is not a tty
....
==> default: gpg:
==> default: keyring `/tmp/tmprfhgdqk1/secring.gpg' created
==> default: gpg:
==> default: keyring `/tmp/tmprfhgdqk1/pubring.gpg' created
==> default: gpg:
==> default: requesting key 7BB9C367 from hkp server keyserver.ubuntu.com
==> default: gpg:
==> default: /tmp/tmprfhgdqk1/trustdb.gpg: trustdb created
==> default: gpg:
==> default: key 7BB9C367: public key "Launchpad PPA for Ansible, Inc." imported
==> default: gpg:
==> default: Total number processed: 1
==> default: gpg:
==> default: imported: 1
==> default: (RSA: 1)
....
==> default: dpkg-preconfigure: unable to re-open stdin: No such file or directory
....
==> default: cp:
==> default: cannot stat ‘/vagrant/ansible/inventories/dev’
==> default: : No such file or directory
==> default: cat:
==> default: /vagrant/ansible/files/authorized_keys
==> default: : No such file or directory
==> default: ERROR! the playbook: /vagrant/ansible/playbook.yml could not be found
The SSH command responded with a non-zero exit status. Vagrant
assumes that this means the command failed. The output for this command
should be in the log above. Please read the output to determine what
went wrong.
```

I have always used pre-made Vagrant boxes before; this is the first time I'm creating my own.

Answers:

username_1: @username_0 that's interesting. Could you please share the package you downloaded? By the look of it, the errors indicate you are missing most of the essential files. I'd like to compare what I have with what you got.

username_0: Sure!

Unfortunately I've closed the window with the settings I chose, but here are the 2 packages I got from it:

[phansible_default.zip](https://github.com/phansible/phansible/files/385858/phansible_default.zip)

[phansible_default (1).zip](https://github.com/phansible/phansible/files/385859/phansible_default.1.zip)

username_0: Just made another one in Firefox, here's the file and screenshots:

[phansible__box_php5.4_xdebug.zip](https://github.com/phansible/phansible/files/385890/phansible__box_php5.4_xdebug.zip)

username_1: @username_0 I just tried the first of the zips you linked and it worked for me. I'm running Vagrant 1.8.5 and VirtualBox 5.0.20 at the moment; I'm going to try the latest version of VirtualBox too and report back.

username_1: @username_0 it also works here with VirtualBox 5.1.2 and the same package. Just out of curiosity, what OS are you running? I'm on OS X.

username_0: @username_1 OS X 10.11.5, VirtualBox 5.0.22

I'll try updating VirtualBox later today and will see if that changes anything. :(

username_1: @username_0 please keep me posted. As of now it looks more like a glitch of some sort, mostly related to Ansible itself not detecting the path properly for some weird reason. The zips you shared with me contain all the required files, including the ones your error log says are not found.

username_0: Hm, updated VirtualBox to 5.0.26, still the same. I guess there's probably something wrong with my system?

username_1: @username_0 the latest VirtualBox version is 5.1.2. Yes, the problem appears to be related to something else in your setup. Basically, what is happening in your case is that the shared folder between your host and guest doesn't get shared or, if it does, it suddenly disappears, so Ansible can't find the files it needs.

To be fair, there is one thing that seems strange about your setup, although it doesn't seem to affect my test. Looking at the Vagrantfile you generated, you are sharing the following:

```ruby
config.vm.synced_folder "/Users/vitalibokov/GitHub/", "/vagrant", type: "nfs"
```

And the error message is reporting the following:

```shell

cannot stat ‘/vagrant/ansible/inventories/dev’

```

Now, the thing is that the ansible folder is not in your home folder, right? The ansible folder is in the directory from which you launch (or should launch) `vagrant up`, as you can see in these lines:

```ruby
config.vm.provision "ansible" do |ansible|
  ansible.playbook = "ansible/playbook.yml"
  ansible.inventory_path = "ansible/inventories/dev"
  ansible.limit = 'all'
end
```

So my question is: how are you executing all this? Are the files in the right, expected location, or did you accidentally move things around?

Could you also post a gist of the entire log? I'd like to see at which step your error is actually thrown, also because I can't see that output in my terminal (I'm referring to the gpg import).
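The folder mapping described above can be checked from the host with a small script. This is a sketch, assuming the guest mount point is `/vagrant` and the host side is the folder passed to `config.vm.synced_folder`; `guest_to_host` and `missing_on_host` are hypothetical helper names. If the project actually lives in a subfolder of `/Users/vitalibokov/GitHub/`, every path would be reported as missing, which would be consistent with the `cannot stat` errors.

```python
from pathlib import Path, PurePosixPath

# Guest side of config.vm.synced_folder (assumed to be /vagrant, as in the Vagrantfile).
GUEST_ROOT = PurePosixPath("/vagrant")


def guest_to_host(guest_path, host_root):
    """Translate a guest path under /vagrant into the matching host path."""
    relative = PurePosixPath(guest_path).relative_to(GUEST_ROOT)
    return Path(host_root).joinpath(*relative.parts)


def missing_on_host(guest_paths, host_root):
    """Return the guest paths whose host-side counterpart does not exist."""
    return [p for p in guest_paths if not guest_to_host(p, host_root).exists()]


if __name__ == "__main__":
    needed = [
        "/vagrant/ansible/playbook.yml",
        "/vagrant/ansible/inventories/dev",
        "/vagrant/ansible/files/authorized_keys",
    ]
    # Host side of the synced folder, taken from the generated Vagrantfile.
    print(missing_on_host(needed, "/Users/vitalibokov/GitHub/"))
```

An empty list means every file Ansible needs is visible under `/vagrant`; otherwise the synced folder should point at the directory that contains the `ansible` folder.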

username_1: @username_0 did you have any luck with this? Any progress? |

alpinejs/alpine | 616341171 | Title: Following a coding standard

Question:

username_0: Continuation of #475

What coding standard are you willing to follow?

Answers:

username_1: Some people started a discussion on this in thread #190. At the moment, no coding style is enforced.

username_2: Like @username_1 said, there's an existing issue about this. In addition, the only person with write access to the repository (i.e. the only person who can merge anything) is @calebporzio, so I dunno if automated style checking or even having a style guide is useful in that context.

username_0: Okay-okay.

I think liberalism can kill; following a style guide is a must for a principled developer.

@douglascrockford leads the way with https://github.com/douglascrockford/JSLint

Although it hurts feelings, which matter a lot in a collaborative project!

Status: Issue closed

username_2: Hmm, the velocity of contributions on the project is low, since everything has to be merged by Caleb. In that context, I dunno what value automated style checks bring.

username_0: I think that without a style guide, contributions are merged more easily and code quality is a bit lower. |

intesar/Fx-Test-Data | 317020371 | Title: Fx : vault_findById

Question:

username_0: Project : Fx

Job : Stg

Env : Stg

Region : FxLabs/US_WEST_1

Result : fail

Status Code : 503

Headers : {Cache-Control=[no-cache], Connection=[close], Content-Type=[text/html]}

Endpoint : http://stg1.fxlabs.io/api/v1/vault/

Request :

Response :

null

Logs :

Assertion [@Response.errors == false] failed, expected value [false] but found []

Assertion [@StatusCode == 200] failed, expected value [200] but found [503]

Assertion [@vault_create_init_Response.data.id == @Response.data.id] passed, expected [] and found []

Assertion [@vault_create_init_Request.key == @Response.data.key] failed, expected value [] but found [rxoHR4]

Status: Issue closed

Answers:

username_0: Project : Fx

Job : Stg

Env : Stg

Region : FxLabs/US_WEST_1

Result : pass

Status Code : 200

Headers : {X-Content-Type-Options=[nosniff], X-XSS-Protection=[1; mode=block], Cache-Control=[no-cache, no-store, max-age=0, must-revalidate], Pragma=[no-cache], Expires=[0], X-Frame-Options=[DENY], Set-Cookie=[SESSION=MmYxYzNhYmMtNTE1ZC00ZTllLTk2ZWMtYjQwNGIwYWU5YWQx; Path=/; HttpOnly], Content-Type=[application/json;charset=UTF-8], Transfer-Encoding=[chunked], Date=[Mon, 23 Apr 2018 23:45:37 GMT]}

Endpoint : http://stg1.fxlabs.io/api/v1/vault/8a80827362f4e1660162f4e5f89b0737

Request :

Response :

{

"requestId" : "None",

"requestTime" : "2018-04-23T23:45:37.757+0000",

"errors" : false,

"messages" : [ ],

"data" : {

"id" : "8a80827362f4e1660162f4e5f89b0737",

"createdBy" : "8a80829061c17be10161c18999480002",

"createdDate" : "2018-04-23T23:45:34.875+0000",

"modifiedBy" : "8a80829061c17be10161c18999480002",

"modifiedDate" : "2018-04-23T23:45:34.875+0000",

"version" : null,

"inactive" : false,

"org" : {

"id" : "8a80829061c17be10161c189993d0001",

"createdBy" : "anonymousUser",

"createdDate" : "2018-02-23T07:21:15.837+0000",

"modifiedBy" : "anonymousUser",

"modifiedDate" : "2018-02-23T07:21:15.837+0000",

"version" : null,

"inactive" : false,

"name" : "FxLabs"

},

"key" : "h1On4i",

"val" : "value",

"description" : "",

"visibility" : "PRIVATE"

},

"totalPages" : 1,

"totalElements" : 1

}

Logs :

Assertion [@vault_create_init_Request.key == @Response.data.key] passed, expected [h1On4i] and found [h1On4i]

Assertion [@vault_create_init_Response.data.id == @Response.data.id] passed, expected [8a80827362f4e1660162f4e5f89b0737] and found [8a80827362f4e1660162f4e5f89b0737]

Assertion [@Response.errors == false] passed, expected [false] and found [false]

Assertion [@StatusCode == 200] passed, expected [200] and found [200] |

digio/terraform-google-gitlab-runner | 770909120 | Title: Requested disk size cannot be smaller than the image size (20 GB)

Question:

username_0: ```

Error creating instance: googleapi: Error 400: Invalid value for field 'resource.disks[0].initializeParams.diskSizeGb': '10'. Requested disk size cannot be smaller than the image size (20 GB), invalid

```

Answers:

username_1: Fixed in https://github.com/digio/terraform-google-gitlab-runner/commit/85e8ef21bc7d5f3864f773c462456445467c4189

Status: Issue closed

|

BlueMond/LifeMC | 680416257 | Title: Add tempban counter

Question:

username_0: Add a tempban countdown, or the date/time of revival, to the screen shown when a player is denied access to the server for being dead while tempban is enabled, and also to the screen shown when a player is kicked for having lost their last life. |

LonamiWebs/Telethon | 1118596032 | Title: Unable to run 2 or more sessions

Question:

username_0: I'm trying to parse messages from 4 different chats using 2 different accounts (2 chats per account). When each account runs in a separate program it works, but when both run in the same program nothing appears:

I tried multiple variants, including event loops, but wasn't able to get it working. Could anyone show how this would work with event loops, or some other way?

```python
import asyncio
from telethon import TelegramClient, sync, events
import asyncio
import string
import sys

client1 = TelegramClient('session1', api_id1, api_hash1)
client2 = TelegramClient('session2', api_id1, api_hash1)

#Chats block
chats1 =("chat1",
         "chat2")
chats2 =("chat3",
         "chat4")

@client1.on(events.NewMessage(chats=tuple(chats1)))
async def normal_handler1(event):
    user_message = event.message.to_dict()['message']
    print(user_message)

@client2.on(events.NewMessage(chats=tuple(chats2)))
async def normal_handler2(event):
    user_message = event.message.to_dict()['message']
    print(user_message)

client1.start()
client2.start()

async def main():
    return await asyncio.gather(
        client1.run_until_disconnected(),
        client2.run_until_disconnected()
    )

asyncio.run(main())
```
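A minimal sketch of running both accounts in one program, under an assumption not confirmed in this thread: that the problem is an event-loop mismatch. With Telethon 1.x, the clients above are created (and started through `telethon.sync`) on the default loop, while `asyncio.run()` then creates a new loop for `main()`. Creating and starting both clients inside the coroutine keeps everything on one loop. `run_clients` and `main` are illustrative names, and the credentials and chat names are hypothetical placeholders.

```python
import asyncio


async def run_clients(*clients):
    """Start each client, then keep all of them running concurrently.

    Every client should be created on the loop that runs this coroutine;
    creating them inside the coroutine passed to asyncio.run() ensures that.
    """
    for client in clients:
        # Prompts for login on first use; afterwards the .session file is reused.
        await client.start()
    # Wait on all clients at once instead of blocking on the first one.
    await asyncio.gather(*(client.run_until_disconnected() for client in clients))


async def main():
    # Imported here so run_clients() above can be reused without Telethon installed.
    from telethon import TelegramClient, events

    api_id, api_hash = 12345, "your_api_hash"  # hypothetical placeholders
    client1 = TelegramClient("session1", api_id, api_hash)
    client2 = TelegramClient("session2", api_id, api_hash)

    @client1.on(events.NewMessage(chats=("chat1", "chat2")))
    async def handler1(event):
        print(event.raw_text)

    @client2.on(events.NewMessage(chats=("chat3", "chat4")))
    async def handler2(event):
        print(event.raw_text)

    await run_clients(client1, client2)
```

Run it with `asyncio.run(main())`. The `telethon.sync` import and the module-level `start()` calls are no longer needed, because nothing touches a client before the loop started by `asyncio.run()` exists.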

Answers:

username_1: What's the issue? Is there an error? Are you using the development version?

username_0: The problem is that there is no error; it just doesn't work. There is a similar issue on Stack Overflow: https://stackoverflow.com/questions/70320400/run-multiple-telethon-clients

But I wasn't able to rewrite the code properly for event loops. Could you help me make it work? It would be appreciated.

username_0: @username_1 I still wasn't able to find a solution. Could you help with the code? I really don't know how to rewrite it with event loops to make it work.

username_0: @username_1 do you know how to solve it?

username_2: I just run clients in different threads. |

MiguelRipoll23/homebridge-securitysystem | 534313177 | Title: No Response from Siren switch when Security System is disarmed.

Question:

username_0: When the security system is Off and you hit the Siren switch, I keep getting "No Response" from the switch until I arm the system. Maybe that's because the switch is only there so that the Armed states can trigger automations, but it would be really nice to have a manual toggle even when the security system is off.

Answers:

username_1: This was intended behavior due to #6 (creating an automation without needing to add conditions), but it can be reverted if people here would like a manual trigger button too.

username_0: Manual toggle would be nice but not if it’ll undo the previous work you’ve done.

username_1: A new option could be added to ignore the disarmed state; that would work for both use cases without having to revert the change. I'll get this done soon.

username_1: A new version has been released that adds this option, sorry for the delay.

Status: Issue closed

|

jupyterlab/jupyterlab | 302191853 | Title: pipe operator (%>%) shortcut doesn't work in jupyterlab

Question:

username_0: The pipe operator (%>%) shortcut 'ctrl + shift + M' works in **jupyter notebook**.

But in **jupyterlab**, 'ctrl + shift + M' opens Chrome's 'login' popup instead.

Answers:

username_1: I implemented that in [IRkernel’s kernel.js](https://github.com/IRkernel/IRkernel/blob/dfa7f4ef2c855d289df451cb8af18b3fd0c96fda/inst/kernelspec/kernel.js)

With the new JS API it needs to be done in a different way. I'd appreciate pointers in that direction!

username_2: This behaviour still occurs in both Chrome and MS Edge, which is quite annoying. Apparently there is no solution so far. Is a workaround available (besides just typing the characters)?

username_3: See https://github.com/jupyterlab/jupyterlab/issues/4519 for a way to do this (and a simple change to jlab that needs to happen to make this easy - PRs welcome!).

username_4: @username_3 it would be nice to see this fixed in a stable and sensible way.

All IDEs make it *easy* to customise keyboard shortcuts.

The absence of a customisable shortcut for `<-` and %>% is a put-off for R users.

In my humble view, a way to achieve this *should be offered in JupyterLab*, not pushed down as the add-on implementers' responsibility.

username_3: I think maybe there is a misunderstanding. I'm proposing what we offer in core JupyterLab (see https://github.com/jupyterlab/jupyterlab/issues/4519) is a way for any user to customize a keyboard shortcut to insert any text they want.

username_4: Apologies for any misunderstanding from my part!

username_3: JLab 2 offers a way for a user to customize the keyboard shortcuts to insert any text they like into editors: https://jupyterlab.readthedocs.io/en/stable/getting_started/changelog.html#user-facing-changes
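A sketch of such a custom shortcut, assuming JupyterLab 2+ and its `notebook:replace-selection` command with a `text` argument (the mechanism discussed in #4519; please verify the command id against your JupyterLab version). The helpers below, `text_insert_shortcut` and `shortcut_settings`, are hypothetical names; they only generate the JSON to paste into the Keyboard Shortcuts entry of the Advanced Settings Editor.

```python
import json


def text_insert_shortcut(keys, text, selector=".jp-Notebook"):
    """Build one shortcut entry that replaces the current selection with `text`."""
    return {
        "command": "notebook:replace-selection",
        "selector": selector,
        "keys": [keys],
        "args": {"text": text},
    }


def shortcut_settings(*shortcuts):
    """Serialize entries in the shape of the Keyboard Shortcuts user settings."""
    return json.dumps({"shortcuts": list(shortcuts)}, indent=4)


if __name__ == "__main__":
    print(shortcut_settings(text_insert_shortcut("Ctrl Shift M", " %>% ")))
```

Since Chrome itself may still claim Ctrl+Shift+M, as reported at the top of this issue, a different key combination may be needed in practice.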

Status: Issue closed

|

tarantool/cartridge-cli | 582843666 | Title: drop "rocks pack support"

Question:

username_0: There are several problems in this area:

- cartridge-cli is an additional layer on top of `tarantoolctl rocks pack`, and it's hard to maintain a matching interface.

- rocks pack supports several modes (".all.rock", ".src.rock", ...) and cartridge-cli knows nothing about them.

- `tarantoolctl rocks pack` can depend on Makefile/CMakeLists, and cartridge-cli can't verify the correctness of such files; `cartridge pack` simply has a different packaging flow.

So we have a function that is already completely broken and can produce unpredictable results after packaging. I suggest simply dropping it: if users want to get a ".rock", they should call `tarantoolctl rocks pack` directly.

Status: Issue closed |

ikedaosushi/tech-news | 386834313 | Title: Thoughts on project planning

Question:

username_0: Thoughts on project planning

This article is the day-3 entry of the Hatena Engineer Advent Calendar 2018. Yesterday's article was id:t_kyt's "On MySQL replication with different time_zone settings - 角待ちは対空".

https://ift.tt/2DUm4Ym |

ant-design/pro-components | 982029638 | Title: 🐛[BUG] EditableProTable is a total disaster once expandRowByClick is enabled

Question:

username_0: ### 🐛 Bug description

<!--

Describe the bug in detail so that everyone can understand it

-->

Because expandRowByClick is enabled, editing data (switching focus) or clicking Save or Cancel always toggles the expansion of the current row.

### 📷 Steps to reproduce

Take the official "有子列表格" (table with child rows) example and add expandRowByClick.

<!--

Clearly describe the reproduction steps so that others can see the problem too

-->

### 🏞 Expected result

Pass the event through to onCancel, onSave, etc., so that stopPropagation() can be called manually, or provide some other, better solution.

<!--

Describe the result you originally expected to see

-->

### 💻 Reproduction code

<!--

Provide reproducible code, a repository, or an online example

-->

### © Version information

- ProComponents version: [e.g. 4.0.0]

- umi version

- Browser environment

- Development environment [e.g. mac OS]

### 🚑 Other information

<!--

Other information, such as screenshots, can be pasted here

-->

Status: Issue closed |

redisson/redisson | 874301730 | Title: Putting a value into RMap throws io.netty.util.IllegalReferenceCountException: refCnt: 0

Question:

username_0: <!--

Сonsider Redisson PRO https://redisson.pro version for advanced features and support by SLA.

-->

**Expected behavior**

RMap.put should succeed

**Actual behavior**

RMap.put throws io.netty.util.IllegalReferenceCountException: refCnt: 0 when a Java bean is set as a value in the RMap

**Steps to reproduce or test case**

**Redisson Initialization**

```

private RMap<String, Expression> expressionMap;

this.expressionMap = this.redissonClient.getMap("ExpressionMap");

```

**Expression Class**

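```java
import com.fasterxml.jackson.annotation.JsonInclude;
import lombok.AllArgsConstructor;
import lombok.Builder;
import lombok.Data;
import lombok.NoArgsConstructor;

@Data
@NoArgsConstructor
@AllArgsConstructor
@Builder
@JsonInclude(JsonInclude.Include.NON_NULL)
public class Expression {
    private String expression;
    private boolean custom;
}
```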

**setting key and value**

```

Expression expression = new Expression();

expression.setExpression("a > 20");

expression.setCustom(false);

this.expressionMap.put(uuid, expression);

```

**System Setup**

My application is a Spring Boot application. I tried running it on both the embedded Tomcat and Undertow servers, but the result is the same.

My Spring Boot version is 2.4.3, but I have tried changing it to 2.4.2, and similarly tried Redisson 3.15.3, but the result is the same.

**Exception Trace**

```

io.netty.util.IllegalReferenceCountException: refCnt: 0

at io.netty.buffer.AbstractByteBuf.ensureAccessible(AbstractByteBuf.java:1456) ~[netty-buffer-4.1.59.Final.jar:4.1.59.Final]

at io.netty.buffer.AbstractByteBuf.ensureWritable0(AbstractByteBuf.java:291) ~[netty-buffer-4.1.59.Final.jar:4.1.59.Final]

at io.netty.buffer.AbstractByteBuf.ensureWritable(AbstractByteBuf.java:282) ~[netty-buffer-4.1.59.Final.jar:4.1.59.Final]

at io.netty.buffer.AbstractByteBuf.writeBytes(AbstractByteBuf.java:1075) ~[netty-buffer-4.1.59.Final.jar:4.1.59.Final]

at org.redisson.codec.MarshallingCodec$ByteOutputWrapper.write(MarshallingCodec.java:129) ~[redisson-3.15.3.jar:3.15.3]

at org.jboss.marshalling.SimpleDataOutput.flush(SimpleDataOutput.java:336) ~[jboss-marshalling-2.0.11.Final.jar:2.0.11.Final]

at org.jboss.marshalling.SimpleDataOutput.finish(SimpleDataOutput.java:378) ~[jboss-marshalling-2.0.11.Final.jar:2.0.11.Final]

at org.jboss.marshalling.AbstractMarshaller.finish(AbstractMarshaller.java:126) ~[jboss-marshalling-2.0.11.Final.jar:2.0.11.Final]

at org.redisson.codec.MarshallingCodec.lambda$new$1(MarshallingCodec.java:169) ~[redisson-3.15.3.jar:3.15.3]

at org.redisson.command.CommandAsyncService.encodeMapValue(CommandAsyncService.java:715) ~[redisson-3.15.3.jar:3.15.3]

at org.redisson.RedissonObject.encodeMapValue(RedissonObject.java:305) ~[redisson-3.15.3.jar:3.15.3]

at org.redisson.RedissonMap.putOperationAsync(RedissonMap.java:1336) ~[redisson-3.15.3.jar:3.15.3]

at org.redisson.RedissonMap.putAsync(RedissonMap.java:1322) ~[redisson-3.15.3.jar:3.15.3]

at org.redisson.RedissonMap.put(RedissonMap.java:644) ~[redisson-3.15.3.jar:3.15.3]

```

[Truncated]

idleConnectionTimeout: 10000

connectTimeout: 10000

timeout: 3000

retryAttempts: 3

retryInterval: 1500

password: <PASSWORD>

subscriptionsPerConnection: 5

clientName: null

address: "redis://127.0.0.1:6379"

subscriptionConnectionMinimumIdleSize: 1

subscriptionConnectionPoolSize: 50

connectionMinimumIdleSize: 10

connectionPoolSize: 20

database: 0

dnsMonitoringInterval: 5000

threads: 16

nettyThreads: 32

codec: !<org.redisson.codec.MarshallingCodec> {}

transportMode: "NIO"

```

Answers:

username_1: Unable to reproduce. It seems there is some issue with ByteBuf allocation.

Try adding these settings to the JVM args:

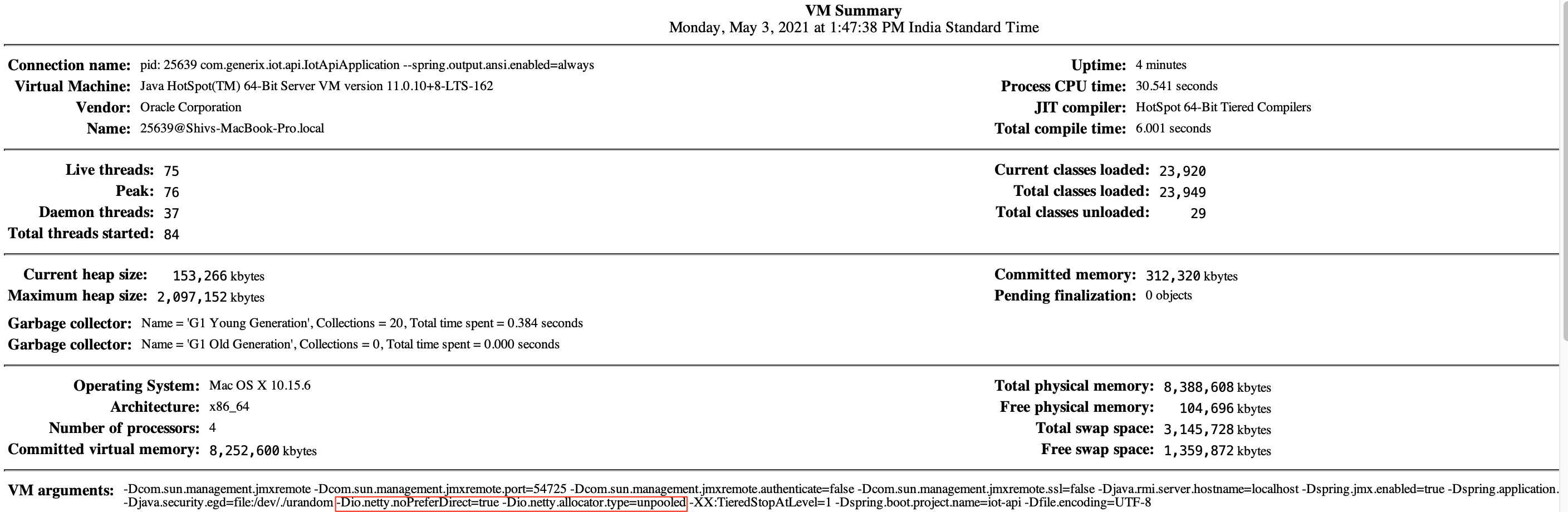

`-Dio.netty.noPreferDirect=true -Dio.netty.allocator.type=unpooled`

username_0: I still see the same error after applying the above JVM arguments.

username_1: Can you provide sample code which I can run?

username_0: Thanks @username_1

Please find the unit test code which is failing on my system

[redission-issue.zip](https://github.com/redisson/redisson/files/6419130/redission-issue.zip)

JUnit failure:

username_1: It works. Here is my output with JDK 13:

```

"C:\Program Files\Java\jdk1.8.0_201\bin\java.exe" -javaagent:C:\Devel\IntelliJ\lib\idea_rt.jar=51554:C:\Devel\IntelliJ\bin -Dfile.encoding=UTF-8 -classpath "C:\Program Files\Java\jdk1.8.0_201\jre\lib\charsets.jar;C:\Program Files\Java\jdk1.8.0_201\jre\lib\deploy.jar;C:\Program Files\Java\jdk1.8.0_201\jre\lib\ext\access-bridge-64.jar;C:\Program Files\Java\jdk1.8.0_201\jre\lib\ext\cldrdata.jar;C:\Program Files\Java\jdk1.8.0_201\jre\lib\ext\dnsns.jar;C:\Program Files\Java\jdk1.8.0_201\jre\lib\ext\jaccess.jar;C:\Program Files\Java\jdk1.8.0_201\jre\lib\ext\jfxrt.jar;C:\Program Files\Java\jdk1.8.0_201\jre\lib\ext\localedata.jar;C:\Program Files\Java\jdk1.8.0_201\jre\lib\ext\nashorn.jar;C:\Program Files\Java\jdk1.8.0_201\jre\lib\ext\sunec.jar;C:\Program Files\Java\jdk1.8.0_201\jre\lib\ext\sunjce_provider.jar;C:\Program Files\Java\jdk1.8.0_201\jre\lib\ext\sunmscapi.jar;C:\Program Files\Java\jdk1.8.0_201\jre\lib\ext\sunpkcs11.jar;C:\Program Files\Java\jdk1.8.0_201\jre\lib\ext\zipfs.jar;C:\Program Files\Java\jdk1.8.0_201\jre\lib\javaws.jar;C:\Program Files\Java\jdk1.8.0_201\jre\lib\jce.jar;C:\Program Files\Java\jdk1.8.0_201\jre\lib\jfr.jar;C:\Program Files\Java\jdk1.8.0_201\jre\lib\jfxswt.jar;C:\Program Files\Java\jdk1.8.0_201\jre\lib\jsse.jar;C:\Program Files\Java\jdk1.8.0_201\jre\lib\management-agent.jar;C:\Program Files\Java\jdk1.8.0_201\jre\lib\plugin.jar;C:\Program Files\Java\jdk1.8.0_201\jre\lib\resources.jar;C:\Program 
Files\Java\jdk1.8.0_201\jre\lib\rt.jar;C:\Downloads\issue\target\classes;C:\Users\root\.m2\repository\org\redisson\redisson-all\3.15.0\redisson-all-3.15.0.jar;C:\Users\root\.m2\repository\org\springframework\boot\spring-boot-devtools\2.3.7.RELEASE\spring-boot-devtools-2.3.7.RELEASE.jar;C:\Users\root\.m2\repository\org\springframework\boot\spring-boot\2.3.7.RELEASE\spring-boot-2.3.7.RELEASE.jar;C:\Users\root\.m2\repository\org\springframework\spring-core\5.2.12.RELEASE\spring-core-5.2.12.RELEASE.jar;C:\Users\root\.m2\repository\org\springframework\spring-jcl\5.2.12.RELEASE\spring-jcl-5.2.12.RELEASE.jar;C:\Users\root\.m2\repository\org\springframework\spring-context\5.2.12.RELEASE\spring-context-5.2.12.RELEASE.jar;C:\Users\root\.m2\repository\org\springframework\boot\spring-boot-autoconfigure\2.3.7.RELEASE\spring-boot-autoconfigure-2.3.7.RELEASE.jar;C:\Users\root\.m2\repository\org\projectlombok\lombok\1.18.14\lombok-1.18.14.jar;C:\Users\root\.m2\repository\org\springframework\boot\spring-boot-starter-web\2.3.7.RELEASE\spring-boot-starter-web-2.3.7.RELEASE.jar;C:\Users\root\.m2\repository\org\springframework\boot\spring-boot-starter\2.3.7.RELEASE\spring-boot-starter-2.3.7.RELEASE.jar;C:\Users\root\.m2\repository\org\springframework\boot\spring-boot-starter-logging\2.3.7.RELEASE\spring-boot-starter-logging-2.3.7.RELEASE.jar;C:\Users\root\.m2\repository\ch\qos\logback\logback-classic\1.2.3\logback-classic-1.2.3.jar;C:\Users\root\.m2\repository\ch\qos\logback\logback-core\1.2.3\logback-core-1.2.3.jar;C:\Users\root\.m2\repository\org\slf4j\slf4j-api\1.7.30\slf4j-api-1.7.30.jar;C:\Users\root\.m2\repository\org\apache\logging\log4j\log4j-to-slf4j\2.13.3\log4j-to-slf4j-2.13.3.jar;C:\Users\root\.m2\repository\org\apache\logging\log4j\log4j-api\2.13.3\log4j-api-2.13.3.jar;C:\Users\root\.m2\repository\org\slf4j\jul-to-slf4j\1.7.30\jul-to-slf4j-1.7.30.jar;C:\Users\root\.m2\repository\jakarta\annotation\jakarta.annotation-api\1.3.5\jakarta.annotation-api-1.3.5.jar;C:\Users\root\.
m2\repository\org\yaml\snakeyaml\1.26\snakeyaml-1.26.jar;C:\Users\root\.m2\repository\org\springframework\boot\spring-boot-starter-json\2.3.7.RELEASE\spring-boot-starter-json-2.3.7.RELEASE.jar;C:\Users\root\.m2\repository\com\fasterxml\jackson\core\jackson-databind\2.11.3\jackson-databind-2.11.3.jar;C:\Users\root\.m2\repository\com\fasterxml\jackson\core\jackson-annotations\2.11.3\jackson-annotations-2.11.3.jar;C:\Users\root\.m2\repository\com\fasterxml\jackson\core\jackson-core\2.11.3\jackson-core-2.11.3.jar;C:\Users\root\.m2\repository\com\fasterxml\jackson\datatype\jackson-datatype-jdk8\2.11.3\jackson-datatype-jdk8-2.11.3.jar;C:\Users\root\.m2\repository\com\fasterxml\jackson\datatype\jackson-datatype-jsr310\2.11.3\jackson-datatype-jsr310-2.11.3.jar;C:\Users\root\.m2\repository\com\fasterxml\jackson\module\jackson-module-parameter-names\2.11.3\jackson-module-parameter-names-2.11.3.jar;C:\Users\root\.m2\repository\org\springframework\boot\spring-boot-starter-tomcat\2.3.7.RELEASE\spring-boot-starter-tomcat-2.3.7.RELEASE.jar;C:\Users\root\.m2\repository\org\apache\tomcat\embed\tomcat-embed-core\9.0.41\tomcat-embed-core-9.0.41.jar;C:\Users\root\.m2\repository\org\glassfish\jakarta.el\3.0.3\jakarta.el-3.0.3.jar;C:\Users\root\.m2\repository\org\apache\tomcat\embed\tomcat-embed-websocket\9.0.41\tomcat-embed-websocket-9.0.41.jar;C:\Users\root\.m2\repository\org\springframework\spring-web\5.2.12.RELEASE\spring-web-5.2.12.RELEASE.jar;C:\Users\root\.m2\repository\org\springframework\spring-beans\5.2.12.RELEASE\spring-beans-5.2.12.RELEASE.jar;C:\Users\root\.m2\repository\org\springframework\spring-webmvc\5.2.12.RELEASE\spring-webmvc-5.2.12.RELEASE.jar;C:\Users\root\.m2\repository\org\springframework\spring-aop\5.2.12.RELEASE\spring-aop-5.2.12.RELEASE.jar;C:\Users\root\.m2\repository\org\springframework\spring-expression\5.2.12.RELEASE\spring-expression-5.2.12.RELEASE.jar;C:\Users\root\.m2\repository\javax\annotation\javax.annotation-api\1.3.2\javax.annotation-api-1.3.2.jar" 
org.issueRedisson.springBoot.StartRedisTestBoot

  .   ____          _            __ _ _
 /\\ / ___'_ __ _ _(_)_ __  __ _ \ \ \ \
( ( )\___ | '_ | '_| | '_ \/ _` | \ \ \ \
 \\/  ___)| |_)| | | | | || (_| |  ) ) ) )
  '  |____| .__|_| |_|_| |_\__, | / / / /
 =========|_|==============|___/=/_/_/_/
 :: Spring Boot ::        (v2.3.7.RELEASE)

2021-05-04 09:09:14.426 INFO 4024 --- [ restartedMain] o.i.springBoot.StartRedisTestBoot : Starting StartRedisTestBoot on Computer with PID 4024 (C:\Downloads\issue\target\classes started by root in C:\Downloads\issue)

2021-05-04 09:09:14.429 INFO 4024 --- [ restartedMain] o.i.springBoot.StartRedisTestBoot : No active profile set, falling back to default profiles: default

2021-05-04 09:09:14.482 INFO 4024 --- [ restartedMain] .e.DevToolsPropertyDefaultsPostProcessor : Devtools property defaults active! Set 'spring.devtools.add-properties' to 'false' to disable

2021-05-04 09:09:14.482 INFO 4024 --- [ restartedMain] .e.DevToolsPropertyDefaultsPostProcessor : For additional web related logging consider setting the 'logging.level.web' property to 'DEBUG'

2021-05-04 09:09:15.417 INFO 4024 --- [ restartedMain] o.s.b.w.embedded.tomcat.TomcatWebServer : Tomcat initialized with port(s): 8080 (http)

2021-05-04 09:09:15.427 INFO 4024 --- [ restartedMain] o.apache.catalina.core.StandardService : Starting service [Tomcat]

2021-05-04 09:09:15.427 INFO 4024 --- [ restartedMain] org.apache.catalina.core.StandardEngine : Starting Servlet engine: [Apache Tomcat/9.0.41]

2021-05-04 09:09:15.486 INFO 4024 --- [ restartedMain] o.a.c.c.C.[Tomcat].[localhost].[/] : Initializing Spring embedded WebApplicationContext

2021-05-04 09:09:15.487 INFO 4024 --- [ restartedMain] w.s.c.ServletWebServerApplicationContext : Root WebApplicationContext: initialization completed in 1004 ms

2021-05-04 09:09:16.145 DEBUG 4024 --- [sson-netty-2-21] o.r.connection.ClientConnectionsEntry : new connection created: RedisConnection@560565971 [redisClient=[addr=redis://127.0.0.1:6379], channel=[id: 0x758d3ca6, L:/127.0.0.1:51625 - R:/127.0.0.1:6379], currentCommand=null]

2021-05-04 09:09:16.145 DEBUG 4024 --- [sson-netty-2-23] o.r.connection.ClientConnectionsEntry : new connection created: RedisConnection@1710482627 [redisClient=[addr=redis://127.0.0.1:6379], channel=[id: 0xfb62d088, L:/127.0.0.1:51627 - R:/127.0.0.1:6379], currentCommand=null]

2021-05-04 09:09:16.145 DEBUG 4024 --- [sson-netty-2-20] o.r.connection.ClientConnectionsEntry : new connection created: RedisConnection@1773270043 [redisClient=[addr=redis://127.0.0.1:6379], channel=[id: 0x25f61d78, L:/127.0.0.1:51626 - R:/127.0.0.1:6379], currentCommand=null]

2021-05-04 09:09:16.145 DEBUG 4024 --- [sson-netty-2-16] o.r.connection.ClientConnectionsEntry : new connection created: RedisConnection@1036087503 [redisClient=[addr=redis://127.0.0.1:6379], channel=[id: 0x51d1db38, L:/127.0.0.1:51631 - R:/127.0.0.1:6379], currentCommand=null]

2021-05-04 09:09:16.145 DEBUG 4024 --- [sson-netty-2-17] o.r.connection.ClientConnectionsEntry : new connection created: RedisConnection@966637890 [redisClient=[addr=redis://127.0.0.1:6379], channel=[id: 0xe5958ec5, L:/127.0.0.1:51633 - R:/127.0.0.1:6379], currentCommand=null]

2021-05-04 09:09:16.145 DEBUG 4024 --- [sson-netty-2-13] o.r.connection.ClientConnectionsEntry : new pubsub connection created: RedisPubSubConnection@790605312 [redisClient=[addr=redis://127.0.0.1:6379], channel=[id: 0x0269ba46, L:/127.0.0.1:51630 - R:/127.0.0.1:6379], currentCommand=null]

2021-05-04 09:09:16.145 DEBUG 4024 --- [sson-netty-2-15] o.r.connection.ClientConnectionsEntry : new connection created: RedisConnection@584908344 [redisClient=[addr=redis://127.0.0.1:6379], channel=[id: 0x3f5fede3, L:/127.0.0.1:51629 - R:/127.0.0.1:6379], currentCommand=null]

2021-05-04 09:09:16.145 DEBUG 4024 --- [sson-netty-2-18] o.r.connection.ClientConnectionsEntry : new connection created: RedisConnection@1323797976 [redisClient=[addr=redis://127.0.0.1:6379], channel=[id: 0x05f3e864, L:/127.0.0.1:51632 - R:/127.0.0.1:6379], currentCommand=null]

2021-05-04 09:09:16.145 DEBUG 4024 --- [sson-netty-2-22] o.r.connection.ClientConnectionsEntry : new connection created: RedisConnection@612699270 [redisClient=[addr=redis://127.0.0.1:6379], channel=[id: 0xe949bdef, L:/127.0.0.1:51628 - R:/127.0.0.1:6379], currentCommand=null]

2021-05-04 09:09:16.145 DEBUG 4024 --- [sson-netty-2-14] o.r.connection.ClientConnectionsEntry : new connection created: RedisConnection@648927375 [redisClient=[addr=redis://127.0.0.1:6379], channel=[id: 0x21914d2f, L:/127.0.0.1:51624 - R:/127.0.0.1:6379], currentCommand=null]

2021-05-04 09:09:16.145 DEBUG 4024 --- [sson-netty-2-19] o.r.connection.ClientConnectionsEntry : new connection created: RedisConnection@753784165 [redisClient=[addr=redis://127.0.0.1:6379], channel=[id: 0x5066cfc9, L:/127.0.0.1:51623 - R:/127.0.0.1:6379], currentCommand=null]

2021-05-04 09:09:16.152 INFO 4024 --- [sson-netty-2-13] o.r.c.pool.MasterPubSubConnectionPool : 1 connections initialized for /127.0.0.1:6379

2021-05-04 09:09:16.155 DEBUG 4024 --- [isson-netty-2-2] o.r.connection.ClientConnectionsEntry : new connection created: RedisConnection@1752841329 [redisClient=[addr=redis://127.0.0.1:6379], channel=[id: 0x245f4c97, L:/127.0.0.1:51634 - R:/127.0.0.1:6379], currentCommand=CommandData [promise=RedissonPromise [promise=ImmediateEventExecutor$ImmediatePromise@3356e82a(incomplete)], command=(PING), params=[], codec=org.redisson.client.codec.StringCodec]]

2021-05-04 09:09:16.155 DEBUG 4024 --- [isson-netty-2-3] o.r.connection.ClientConnectionsEntry : new connection created: RedisConnection@90257875 [redisClient=[addr=redis://127.0.0.1:6379], channel=[id: 0x23fde960, L:/127.0.0.1:51635 - R:/127.0.0.1:6379], currentCommand=CommandData [promise=RedissonPromise [promise=ImmediateEventExecutor$ImmediatePromise@7c414c(success: PONG)], command=(PING), params=[], codec=null]]

2021-05-04 09:09:16.155 DEBUG 4024 --- [isson-netty-2-4] o.r.connection.ClientConnectionsEntry : new connection created: RedisConnection@1505320775 [redisClient=[addr=redis://127.0.0.1:6379], channel=[id: 0xd4f119dc, L:/127.0.0.1:51636 - R:/127.0.0.1:6379], currentCommand=CommandData [promise=RedissonPromise [promise=ImmediateEventExecutor$ImmediatePromise@459d9abb(incomplete)], command=(PING), params=[], codec=org.redisson.client.codec.StringCodec]]

2021-05-04 09:09:16.159 DEBUG 4024 --- [sson-netty-2-10] o.r.connection.ClientConnectionsEntry : new connection created: RedisConnection@134386209 [redisClient=[addr=redis://127.0.0.1:6379], channel=[id: 0x18886829, L:/127.0.0.1:51638 - R:/127.0.0.1:6379], currentCommand=CommandData [promise=RedissonPromise [promise=ImmediateEventExecutor$ImmediatePromise@728e38c3(incomplete)], command=(PING), params=[], codec=org.redisson.client.codec.StringCodec]]

2021-05-04 09:09:16.159 DEBUG 4024 --- [isson-netty-2-8] o.r.connection.ClientConnectionsEntry : new connection created: RedisConnection@1018666403 [redisClient=[addr=redis://127.0.0.1:6379], channel=[id: 0x74f05e90, L:/127.0.0.1:51637 - R:/127.0.0.1:6379], currentCommand=CommandData [promise=RedissonPromise [promise=ImmediateEventExecutor$ImmediatePromise@796491e5(incomplete)], command=(PING), params=[], codec=org.redisson.client.codec.StringCodec]]

2021-05-04 09:09:16.159 DEBUG 4024 --- [isson-netty-2-9] o.r.connection.ClientConnectionsEntry : new connection created: RedisConnection@30448953 [redisClient=[addr=redis://127.0.0.1:6379], channel=[id: 0x46151cdf, L:/127.0.0.1:51639 - R:/127.0.0.1:6379], currentCommand=CommandData [promise=RedissonPromise [promise=ImmediateEventExecutor$ImmediatePromise@1df41459(incomplete)], command=(PING), params=[], codec=org.redisson.client.codec.StringCodec]]

2021-05-04 09:09:16.160 DEBUG 4024 --- [sson-netty-2-12] o.r.connection.ClientConnectionsEntry : new connection created: RedisConnection@71649161 [redisClient=[addr=redis://127.0.0.1:6379], channel=[id: 0xd8430c00, L:/127.0.0.1:51640 - R:/127.0.0.1:6379], currentCommand=CommandData [promise=RedissonPromise [promise=ImmediateEventExecutor$ImmediatePromise@29b716b4(incomplete)], command=(PING), params=[], codec=org.redisson.client.codec.StringCodec]]

2021-05-04 09:09:16.160 DEBUG 4024 --- [sson-netty-2-13] o.r.connection.ClientConnectionsEntry : new connection created: RedisConnection@1780299143 [redisClient=[addr=redis://127.0.0.1:6379], channel=[id: 0x99a32bc5, L:/127.0.0.1:51641 - R:/127.0.0.1:6379], currentCommand=CommandData [promise=RedissonPromise [promise=ImmediateEventExecutor$ImmediatePromise@71caa1f1(incomplete)], command=(PING), params=[], codec=org.redisson.client.codec.StringCodec]]

2021-05-04 09:09:16.162 DEBUG 4024 --- [sson-netty-2-14] o.r.connection.ClientConnectionsEntry : new connection created: RedisConnection@743814143 [redisClient=[addr=redis://127.0.0.1:6379], channel=[id: 0x6c085cde, L:/127.0.0.1:51642 - R:/127.0.0.1:6379], currentCommand=CommandData [promise=RedissonPromise [promise=ImmediateEventExecutor$ImmediatePromise@69572811(incomplete)], command=(PING), params=[], codec=org.redisson.client.codec.StringCodec]]

2021-05-04 09:09:16.162 DEBUG 4024 --- [sson-netty-2-16] o.r.connection.ClientConnectionsEntry : new connection created: RedisConnection@926489535 [redisClient=[addr=redis://127.0.0.1:6379], channel=[id: 0x64a35433, L:/127.0.0.1:51644 - R:/127.0.0.1:6379], currentCommand=CommandData [promise=RedissonPromise [promise=ImmediateEventExecutor$ImmediatePromise@723b833c(incomplete)], command=(PING), params=[], codec=org.redisson.client.codec.StringCodec]]

2021-05-04 09:09:16.162 DEBUG 4024 --- [sson-netty-2-15] o.r.connection.ClientConnectionsEntry : new connection created: RedisConnection@673486152 [redisClient=[addr=redis://127.0.0.1:6379], channel=[id: 0x4f10008b, L:/127.0.0.1:51647 - R:/127.0.0.1:6379], currentCommand=CommandData [promise=RedissonPromise [promise=ImmediateEventExecutor$ImmediatePromise@6d260e28(incomplete)], command=(PING), params=[], codec=org.redisson.client.codec.StringCodec]]

2021-05-04 09:09:16.162 DEBUG 4024 --- [sson-netty-2-17] o.r.connection.ClientConnectionsEntry : new connection created: RedisConnection@1635158518 [redisClient=[addr=redis://127.0.0.1:6379], channel=[id: 0xe6b0611d, L:/127.0.0.1:51646 - R:/127.0.0.1:6379], currentCommand=CommandData [promise=RedissonPromise [promise=ImmediateEventExecutor$ImmediatePromise@4d293cac(incomplete)], command=(PING), params=[], codec=org.redisson.client.codec.StringCodec]]

2021-05-04 09:09:16.163 DEBUG 4024 --- [sson-netty-2-18] o.r.connection.ClientConnectionsEntry : new connection created: RedisConnection@975442831 [redisClient=[addr=redis://127.0.0.1:6379], channel=[id: 0xe99fd6c6, L:/127.0.0.1:51645 - R:/127.0.0.1:6379], currentCommand=CommandData [promise=RedissonPromise [promise=ImmediateEventExecutor$ImmediatePromise@485fd05e(incomplete)], command=(PING), params=[], codec=org.redisson.client.codec.StringCodec]]

2021-05-04 09:09:16.163 DEBUG 4024 --- [sson-netty-2-20] o.r.connection.ClientConnectionsEntry : new connection created: RedisConnection@1665769323 [redisClient=[addr=redis://127.0.0.1:6379], channel=[id: 0x9ba6a183, L:/127.0.0.1:51643 - R:/127.0.0.1:6379], currentCommand=CommandData [promise=RedissonPromise [promise=ImmediateEventExecutor$ImmediatePromise@264b0d66(incomplete)], command=(PING), params=[], codec=org.redisson.client.codec.StringCodec]]

2021-05-04 09:09:16.163 INFO 4024 --- [sson-netty-2-20] o.r.c.pool.MasterConnectionPool : 24 connections initialized for /127.0.0.1:6379

2021-05-04 09:09:16.165 DEBUG 4024 --- [ restartedMain] org.redisson.connection.DNSMonitor : DNS monitoring enabled; Current masters: {redis://127.0.0.1:6379=/127.0.0.1:6379}, slaves: {}

publishing...

2021-05-04 09:09:16.216 DEBUG 4024 --- [ restartedMain] org.redisson.command.RedisExecutor : acquired connection for command (PUBLISH) and params [xxx, PooledUnsafeDirectByteBuf(ridx: 0, widx: 91, cap: 256)] from slot NodeSource [slot=0, addr=null, redisClient=null, redirect=null, entry=null] using node /127.0.0.1:6379... RedisConnection@560565971 [redisClient=[addr=redis://127.0.0.1:6379], channel=[id: 0x758d3ca6, L:/127.0.0.1:51625 - R:/127.0.0.1:6379], currentCommand=null]

2021-05-04 09:09:16.218 DEBUG 4024 --- [sson-netty-2-11] org.redisson.command.RedisExecutor : connection released for command (PUBLISH) and params [xxx, PooledUnsafeDirectByteBuf(ridx: 0, widx: 91, cap: 256)] from slot NodeSource [slot=0, addr=null, redisClient=null, redirect=null, entry=null] using connection RedisConnection@560565971 [redisClient=[addr=redis://127.0.0.1:6379], channel=[id: 0x758d3ca6, L:/127.0.0.1:51625 - R:/127.0.0.1:6379], currentCommand=CommandData [promise=RedissonPromise [promise=ImmediateEventExecutor$ImmediatePromise@1c689972(success: 1)], command=(PUBLISH), params=[xxx, PooledUnsafeDirectByteBuf(ridx: 0, widx: 91, cap: 256)], codec=org.redisson.client.codec.StringCodec]]

published...

2021-05-04 09:09:16.404 INFO 4024 --- [ restartedMain] o.s.s.concurrent.ThreadPoolTaskExecutor : Initializing ExecutorService 'applicationTaskExecutor'

2021-05-04 09:09:16.532 INFO 4024 --- [ restartedMain] o.s.b.d.a.OptionalLiveReloadServer : LiveReload server is running on port 35729

2021-05-04 09:09:16.550 INFO 4024 --- [ restartedMain] o.s.b.w.embedded.tomcat.TomcatWebServer : Tomcat started on port(s): 8080 (http) with context path ''

2021-05-04 09:09:16.559 INFO 4024 --- [ restartedMain] o.i.springBoot.StartRedisTestBoot : Started StartRedisTestBoot in 2.429 seconds (JVM running for 2.803)

2021-05-04 09:09:21.165 DEBUG 4024 --- [sson-netty-2-21] org.redisson.connection.DNSMonitor : Request sent to resolve ip address for master host: 127.0.0.1

2021-05-04 09:09:21.167 DEBUG 4024 --- [sson-netty-2-19] org.redisson.connection.DNSMonitor : Resolved ip: /127.0.0.1 for master host: 127.0.0.1

2021-05-04 09:09:26.167 DEBUG 4024 --- [sson-netty-2-22] org.redisson.connection.DNSMonitor : Request sent to resolve ip address for master host: 127.0.0.1

2021-05-04 09:09:26.167 DEBUG 4024 --- [sson-netty-2-19] org.redisson.connection.DNSMonitor : Resolved ip: /127.0.0.1 for master host: 127.0.0.1

```

username_1: Did you try running it with OpenJDK?

username_0: I am using Oracle JDK 11.0.10. Would you recommend testing this on OpenJDK?

The Spring version you are using is 2.3.7, whereas I am using 2.4.3.

From the logs, it seems you are running on JDK 8.
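To compare the two environments precisely, each side could print the exact runtime instead of inferring it from log output. Below is a minimal sketch, assuming plain Java with no Spring dependency; the class name `JvmInfo` is hypothetical. It reads only standard system properties, so it runs unchanged on both JDK 8 and JDK 11.

```java
// Hypothetical helper: prints the JVM details that matter when comparing
// two environments (e.g. Oracle JDK 11 vs. OpenJDK 8).
public class JvmInfo {

    // Builds a one-line summary from standard system properties,
    // which are available on every JDK version.
    static String describe() {
        return String.format("Java %s (%s), VM: %s %s",
                System.getProperty("java.version"),
                System.getProperty("java.vendor"),
                System.getProperty("java.vm.name"),
                System.getProperty("java.vm.version"));
    }

    public static void main(String[] args) {
        System.out.println(describe());
    }
}
```

If Spring Boot is on the classpath, `org.springframework.boot.SpringBootVersion.getVersion()` can report the Spring Boot version alongside this, which would settle the 2.3.7 vs. 2.4.3 question directly.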