| repo_name | issue_id | text |

|---|---|---|

anjlab/android-inapp-billing-v3 | 109921235 | Title: multidex with google play service lib

Question:

username_0: Dear you,

I am using android-inapp-billing-v3, but I get a multidex error: com/android/vending/billing/IInAppBillingService

I configured `multiDexEnabled true` but still can't resolve this problem. Could you please help me or suggest anything?

thank you so much

tran

Answers:

username_1: Extend your Application class from `MultiDexApplication`?

Status: Issue closed

username_0: Thank you for your support, I solved the problem. Your lib works great; thanks again. |

acceptbitcoincash/acceptbitcoincash | 327247757 | Title: Add 'KiT systems' to the 'Air conditioning equipment and security systems' category

Question:

username_0: Requesting to add 'KiT systems' to the 'Air conditioning equipment and security systems' category.

Details follow:

```yml
- name: KiT systems
  url: https://www.kitsystem.ru/
  img:
  email_address: <EMAIL>
  country: RU
  bch: Yes
  btc: No
  othercrypto: Yes
  doc: https://www.kitsystem.ru/blog/info/oplachivayte-tovary-i-uslugi-kriptovalyutoy
```

Resources for adding this merchant:

Logo Provided:

[Link to KiT systems](https://www.kitsystem.ru/)

You may want to check their Alexa Rank: [https://www.alexa.com/siteinfo/www.kitsystem.ru/](https://www.alexa.com/siteinfo/www.kitsystem.ru/)

- Verify site is legitimate and safe to list.

- Correct data in form if any is inaccurate.

If everything looks okay, Add it to the site:

- Assign to yourself when you begin work.

- Download and resize the image and put it into the proper img folder.

- Add listing alphabetically to proper .yml file.

- Commit changes mentioning this issue number with `closes #[ISSUE NUMBER HERE]`.

Answers:

username_1: I removed the country tag as this vendor does ship to CIS member states. I also added the lang tag because this page is only in Russian. And I added the Facebook page.

Status: Issue closed

|


blunden/DoNotDisturbSync | 449453579 | Title: App missing in Wear OS 2.6

Question:

username_0: Recently updated my watch to Wear OS 2.6 and the app is no longer in the list. Google Play still shows it as installed, though. Also, it's not syncing anymore. This is a great little piece of software, but unfortunately it seems to be broken in newer versions.

Answers:

username_1: It's not working for me either on Wear OS 2.8, I have the same problems listed above.

Status: Issue closed

username_2: Yes, it was removed from the Play Store because it uses legacy APIs.

See my response linked below for how to make it work on newer Wear OS versions before a new version is published.

https://github.com/username_2/DoNotDisturbSync/issues/6#issuecomment-1012545839 |

Holzhaus/mixxx-gh-issue-migration | 873232016 | Title: Cannot export or import playlists, blank file selection dialog

Question:

username_0: I've had this issue since 1.10, and with 1.11 it's still there. Essentially, whenever I try to export a playlist, the file selection dialog appears as a blank window, making it impossible to continue (see attached screenshot).

A couple of notes:

- It seems someone already reported it as bug 887429, which seems to have expired a while ago

- I'm using Ubuntu 13.10, and with 13.04 I could reproduce it as well

- Exporting crates shows exactly the same problem

- Importing a playlist shows a similar problem, although there seems to be a small area in the file dialog where the file system is shown. Nevertheless, it still prevents using the dialog, so playlists cannot be imported either (see the other attached screenshot) |

discordjs/discord.js | 1008345321 | Title: "Unknown Interaction" but the interaction isn't "Unknown"

Question:

username_0: ### Issue description

1. Call `awaitMessageComponent`

2. Call `update` on the returned interaction

### Code sample

```typescript
let battleMenuResponse = await battleMessage.awaitMessageComponent({
  filter, componentType: "SELECT_MENU", time: ms("50 seconds")
})
console.log(battleMenuResponse.update)
await battleMenuResponse.update({ content: "yes" })
```

Console:

```
[AsyncFunction: update]
DiscordAPIError: Unknown interaction
```

This does not make sense. I also logged `battleMenuResponse`, and it logged:

```
SelectMenuInteraction {
  type: 'MESSAGE_COMPONENT',
  id: '892081287748259920',
  applicationId: '886550857926213633',
  channelId: '886479373753004045',
  guildId: '886479373753004042',
```

### discord.js version

13

### Node.js version

16

### Operating system

windows

### Priority this issue should have

High (immediate attention needed)

### Which partials do you have configured?

No Partials

### Which gateway intents are you subscribing to?

GUILDS, GUILD_MEMBERS, GUILD_PRESENCES, GUILD_MESSAGES, GUILD_MESSAGE_REACTIONS

### I have tested this issue on a development release

_No response_

Status: Issue closed |

zhuochun/md-writer | 123983895 | Title: Consider changing some of your default keybindings

Question:

username_0: Your keybindings for indenting lists and continuing lists on Enter override Atom's autocomplete feature. This can be quite a painful issue to track down, because the Atom-global keys for autocompletion (Enter or Tab) simply don't work in any Markdown file due to markdown-writer's bindings. I've fixed it by disabling all of your keybindings and putting this in my keymap:

```cson
"atom-workspace atom-text-editor:not([mini])[data-grammar~='gfm']":
  "ctrl-enter": "markdown-writer:insert-new-line"
  "ctrl-tab": "markdown-writer:indent-list-line"
```

But I wanted to suggest that you change those default bindings too. It may confuse people when they update the package, but I think badness-by-default is not a good idea.

Status: Issue closed

Answers:

username_1: Duplicate of #98. Will be fixed in the next release. |

canjs/can-define-stream | 183565029 | Title: Complete stream API

Question:

username_0: Complete the `stream` property definition behavior from https://github.com/canjs/can-stream/issues/2

```js
stream( stream ) {
  const fooStream = this.stream('foo')
    .map( foo => foo.toUpperCase() );

  return stream
    .merge(fooStream)
    .combineLatest(this.stream('bar'));
},
```

Status: Issue closed |

jetstack/version-checker | 684009823 | Title: Give info statement on runtime if test-all-containers is false

Question:

username_0: If the `test-all-containers` argument is false (the default), chances are that it won't process anything. Therefore, log a clear info message stating that it is running with this option set to false. That gives a better indication of what is going on. |

MiniMau5/AirBNBFakesDataReport | 209045811 | Title: possible text

Question:

username_0: ***Please do not book instant book before contact me!***

mim@ bnbvacation . rentals

Remove the spaces from the email address so you can contact me

All the bookings made without prior contact will be canceled !

To see if the dates are available and so I can send you more photos email me at:

m<EMAIL>. rentals

The calendar is updating so please send me first your dates at:

mim@<EMAIL> .rentals |

elasticdog/transcrypt | 312250931 | Title: Document creating your own adapter

Question:

username_0: Omg I’m so sorry. I had two tabs open on my phone and created all these issues on the wrong repo! I’ll close these when I get on. So sorry about that!


Answers:

username_1: Adapter? Can you explain what you mean by this request?

username_0: Omg I’m so sorry. I had two tabs open on my phone and created all these issues on the wrong repo! I’ll close these when I get on. So sorry about that!


username_1: No problem...I can close them out.

Status: Issue closed

|

dbeaver/dbeaver | 565198502 | Title: Hot key F3 doesn't work the first time - continuance (#7792)

Question:

username_0: **System information:**

Windows 7 (64-bit) Build 7601 (Service Pack 1)

DBeaver 6.3.4.202002011957

**Connection specification:**

PostgreSQL 10.10

PostgreSQL JDBC Driver 42.2.9

**Describe the problem you're observing:**

Occasionally, after the first (initial) press of the F3 hotkey ("SQL Editor") on a connection in the Database Navigator, the "SQL Scripts" window appears briefly and immediately closes. After pressing F3 a second time, it appears again and stays open.

However, clicking "SQL Editor" in the right-click pop-up menu never causes this behaviour.

See https://youtu.be/ALuZm5EDDaU for more details.

Answers:

username_1: thanks for the bug report

username_2: Fixed.

This bug was caused by the new tooltip renderer: it forces a focus change when a tooltip is hidden, which closes the popup panel.

Status: Issue closed

username_3: verified |

skroutz/elasticsearch-analysis-turkishstemmer | 233811311 | Title: How to install

Question:

username_0: How can I install the stemmer plugin on Elastic 5.x? Is it compatible?

Answers:

username_1: Thanks for getting in touch. I've updated our README with a quick installation guide. Yes, it's compatible with ES 5.4. I hope it helps.

Status: Issue closed

|

Azure/azure-sdk-for-net | 238232095 | Title: ArgumentException on Mono

Question:

username_0: We have some components that target .NET Framework 4.6 when built, but run on Linux via Mono. In one of those components, we use the Azure KeyVault client, which uses the `Microsoft.Rest.ServiceClient` class from here.

We had [one exception with ADAL](https://github.com/AzureAD/azure-activedirectory-library-for-dotnet/issues/509), which we have a workaround for and they are fixing for the next version.

However we also have another exception, only when running on Linux:

```

Unhandled Exception:

System.ArgumentException: Value does not fall within the expected range.

at System.Net.Http.Headers.Parser+Token.Check (System.String s) [0x00019] in <f9ac0c719f3449a0aa7ac0136a1ad250>:0

at System.Net.Http.Headers.ProductHeaderValue..ctor (System.String name, System.String version) [0x0000a] in <f9ac0c719f3449a0aa7ac0136a1ad250>:0

at System.Net.Http.Headers.ProductInfoHeaderValue..ctor (System.String productName, System.String productVersion) [0x00006] in <f9ac0c719f3449a0aa7ac0136a1ad250>:0

at Microsoft.Rest.ServiceClient`1[T].get_DefaultUserAgentInfoList () [0x0003f] in <7d943830d9bc44fab4bd01d7a9130a9c>:0

at Microsoft.Rest.ServiceClient`1[T].SetUserAgent (System.String productName, System.String version) [0x00010] in <7d943830d9bc44fab4bd01d7a9130a9c>:0

at Microsoft.Rest.ServiceClient`1[T].InitializeHttpClient (System.Net.Http.HttpClient httpClient, System.Net.Http.HttpClientHandler httpClientHandler, System.Net.Http.DelegatingHandler[] handlers) [0x00092] in <7d943830d9bc44fab4bd01d7a9130a9c>:0

at Microsoft.Rest.ServiceClient`1[T].InitializeHttpClient (System.Net.Http.HttpClientHandler httpClientHandler, System.Net.Http.DelegatingHandler[] handlers) [0x00000] in <7d943830d9bc44fab4bd01d7a9130a9c>:0

at Microsoft.Rest.ServiceClient`1[T]..ctor (System.Net.Http.HttpClientHandler rootHandler, System.Net.Http.DelegatingHandler[] handlers) [0x00006] in <7d943830d9bc44fab4bd01d7a9130a9c>:0

at Microsoft.Rest.ServiceClient`1[T]..ctor (System.Net.Http.DelegatingHandler[] handlers) [0x00006] in <7d943830d9bc44fab4bd01d7a9130a9c>:0

at Microsoft.Azure.KeyVault.KeyVaultClient..ctor (System.Net.Http.DelegatingHandler[] handlers) [0x00000] in <b66b7a9568464c98a370d795acdef11c>:0

at Microsoft.Azure.KeyVault.KeyVaultClient..ctor (Microsoft.Rest.ServiceClientCredentials credentials, System.Net.Http.DelegatingHandler[] handlers) [0x00000] in <b66b7a9568464c98a370d795acdef11c>:0

at Microsoft.Azure.KeyVault.KeyVaultClient..ctor (Microsoft.Azure.KeyVault.KeyVaultClient+AuthenticationCallback authenticationCallback, System.Net.Http.DelegatingHandler[] handlers) [0x00007] in <b66b7a9568464c98a370d795acdef11c>:0

at KeyVaultSecrets.KeyVaultHelper.RetrieveSecret (System.String secretKey) [0x00081] in <23498eee002143eda79164cb7d001b9d>:0

at KeyVaultSecrets.Program.Main (System.String[] args) [0x0001c] in <23498eee002143eda79164cb7d001b9d>:0

[ERROR] FATAL UNHANDLED EXCEPTION: System.ArgumentException: Value does not fall within the expected range.

at System.Net.Http.Headers.Parser+Token.Check (System.String s) [0x00019] in <f9ac0c719f3449a0aa7ac0136a1ad250>:0

at System.Net.Http.Headers.ProductHeaderValue..ctor (System.String name, System.String version) [0x0000a] in <f9ac0c719f3449a0aa7ac0136a1ad250>:0

at System.Net.Http.Headers.ProductInfoHeaderValue..ctor (System.String productName, System.String productVersion) [0x00006] in <f9ac0c719f3449a0aa7ac0136a1ad250>:0

at Microsoft.Rest.ServiceClient`1[T].get_DefaultUserAgentInfoList () [0x0003f] in <7d943830d9bc44fab4bd01d7a9130a9c>:0

at Microsoft.Rest.ServiceClient`1[T].SetUserAgent (System.String productName, System.String version) [0x00010] in <7d943830d9bc44fab4bd01d7a9130a9c>:0

at Microsoft.Rest.ServiceClient`1[T].InitializeHttpClient (System.Net.Http.HttpClient httpClient, System.Net.Http.HttpClientHandler httpClientHandler, System.Net.Http.DelegatingHandler[] handlers) [0x00092] in <7d943830d9bc44fab4bd01d7a9130a9c>:0

at Microsoft.Rest.ServiceClient`1[T].InitializeHttpClient (System.Net.Http.HttpClientHandler httpClientHandler, System.Net.Http.DelegatingHandler[] handlers) [0x00000] in <7d943830d9bc44fab4bd01d7a9130a9c>:0

at Microsoft.Rest.ServiceClient`1[T]..ctor (System.Net.Http.HttpClientHandler rootHandler, System.Net.Http.DelegatingHandler[] handlers) [0x00006] in <7d943830d9bc44fab4bd01d7a9130a9c>:0

at Microsoft.Rest.ServiceClient`1[T]..ctor (System.Net.Http.DelegatingHandler[] handlers) [0x00006] in <7d943830d9bc44fab4bd01d7a9130a9c>:0

at Microsoft.Azure.KeyVault.KeyVaultClient..ctor (System.Net.Http.DelegatingHandler[] handlers) [0x00000] in <b66b7a9568464c98a370d795acdef11c>:0

at Microsoft.Azure.KeyVault.KeyVaultClient..ctor (Microsoft.Rest.ServiceClientCredentials credentials, System.Net.Http.DelegatingHandler[] handlers) [0x00000] in <b66b7a9568464c98a370d795acdef11c>:0

at Microsoft.Azure.KeyVault.KeyVaultClient..ctor (Microsoft.Azure.KeyVault.KeyVaultClient+AuthenticationCallback authenticationCallback, System.Net.Http.DelegatingHandler[] handlers) [0x00007] in <b66b7a9568464c98a370d795acdef11c>:0

```

I've traced the problem to [this code](https://github.com/Azure/azure-sdk-for-net/blob/d6c9636ef100346afee80619beb191d31c5cba07/src/SdkCommon/ClientRuntime/ClientRuntime/ServiceClient.cs#L134-L137), which assumes that it's safe to get the OS name and version from the registry when compiled with `FullNetFx`. However, that directive only tells you that the compilation target was the full framework. It doesn't tell you that the runtime environment is Windows. It's not safe to call Win32 functions from there, yet the `OsName` and `OsVersion` properties do exactly that [here](https://github.com/Azure/azure-sdk-for-net/blob/71c19f830bcaffdacfb043b3f8f4278c39f653b4/src/SdkCommon/ClientRuntime/ClientRuntime/ServiceClient.cs#L64-L100).

I've got a temporary workaround, which is to create fake entries in Mono's emulated registry that return something for these values. The real fix, though, would be to not rely on the registry at all and instead use the information in `System.Environment.OSVersion` for the full framework, or `System.Runtime.InteropServices.RuntimeInformation` if you were to target .NET Standard.

Answers:

username_1: @username_0 thank you for reporting this.

We will include this in our plans for the next release, but it seems you have a workaround that unblocks you by generating the required entries in your virtual hive.

username_0: Yes, thanks. :)

username_2: I'm experiencing this exact issue when using the KeyVaultClient running Mono on macOS.

username_2: @username_0 Do you happen to know how to create fake entries in the Mono virtual registry on macOS? The path seems to be `/Library/Frameworks/Mono.framework/Versions/Current/etc/mono/registry`, but that directory only contains an empty `LocalMachine` directory on my machine.

How exactly do you create the fake entries to get around this issue?

username_2: Never mind, I figured it out! If anyone else is having this problem, you can add the fake registry keys by doing something along these lines:

`Registry.LocalMachine.CreateSubKey("SOFTWARE\\Microsoft\\Windows NT\\CurrentVersion").SetValue("ProductName", "Windows 8.1 Pro")`

username_3: @username_0, I have the same issue while trying to use Azure Service Bus library on Mono on Linux.

How did you do the workaround?

How to add the fake entries in Mono's emulated registry?

username_0: @username_3 - see the comment above yours for how to create them programmatically. Or, if desired, you can create the files manually. They live in `/etc/mono/registry`. I don't want to write a tutorial here, but I'm sure you can find some examples on the web.

username_4: I also stumbled on this problem when creating a `ServiceBusManagementClient` instance (from the [Microsoft.Azure.Management.ServiceBus](https://www.nuget.org/packages/Microsoft.Azure.Management.ServiceBus/) package).

Here is what I did to work around the problem on macOS.

1. Create the fake registry directory structure:

```
$ cd /Library/Frameworks/Mono.framework/Versions/Current/etc/mono/registry/LocalMachine
$ sudo mkdir -p "SOFTWARE/Microsoft/Windows NT/CurrentVersion"
$ cd "SOFTWARE/Microsoft/Windows NT/CurrentVersion"
```

2. Check my actual system values:

```
$ cat /System/Library/CoreServices/SystemVersion.plist
```

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">
<plist version="1.0">
<dict>
    <key>ProductBuildVersion</key>
    <string>16G1314</string>
    <key>ProductCopyright</key>
    <string>1983-2018 Apple Inc.</string>
    <key>ProductName</key>
    <string>Mac OS X</string>
    <key>ProductUserVisibleVersion</key>
    <string>10.12.6</string>
    <key>ProductVersion</key>
    <string>10.12.6</string>
</dict>
</plist>
```

Create a `values.xml` file in the previously created directory structure, i.e. `/Library/Frameworks/Mono.framework/Versions/Current/etc/mono/registry/LocalMachine/SOFTWARE/Microsoft/Windows NT/CurrentVersion`:

```xml
<values>
  <value name="ProductName" type="string">Mac OS X</value>
  <value name="CurrentVersion" type="string">10.12.6</value>
  <value name="CurrentBuild" type="string">16G1314</value>
</values>
```

After saving this file, I was able to successfully create a `ServiceBusManagementClient`.

username_5: This is no longer a problem in the new Azure.Core that replaces this library. All libraries will be moving to be based on Azure.Core over time. Hopefully this year for many of the management libraries. Until then, we recommend using the workaround.

Status: Issue closed

|

Nordstrom/kafka-connect-lambda | 607141368 | Title: AwsRegionProviderChain class not found

Question:

username_0: I get this error when I try to create a connector:

```
java.lang.NoClassDefFoundError: org/apache/commons/logging/LogFactory
    at com.amazonaws.regions.AwsRegionProviderChain.<clinit>(AwsRegionProviderChain.java:33)
    at com.amazonaws.client.builder.AwsClientBuilder.<clinit>(AwsClientBuilder.java:60)
    at com.nordstrom.kafka.connect.lambda.InvocationClient$Builder.<init>(InvocationClient.java:126)
    at com.nordstrom.kafka.connect.lambda.InvocationClientConfig.<init>(InvocationClientConfig.java:55)
    at com.nordstrom.kafka.connect.lambda.LambdaSinkConnectorConfig.<init>(LambdaSinkConnectorConfig.java:52)
    at com.nordstrom.kafka.connect.lambda.LambdaSinkConnector.start(LambdaSinkConnector.java:31)
    at org.apache.kafka.connect.runtime.WorkerConnector.doStart(WorkerConnector.java:110)
    at org.apache.kafka.connect.runtime.WorkerConnector.start(WorkerConnector.java:135)
    at org.apache.kafka.connect.runtime.WorkerConnector.transitionTo(WorkerConnector.java:195)
    at org.apache.kafka.connect.runtime.Worker.startConnector(Worker.java:257)
    at org.apache.kafka.connect.runtime.distributed.DistributedHerder.startConnector(DistributedHerder.java:1183)
    at org.apache.kafka.connect.runtime.distributed.DistributedHerder.access$1400(DistributedHerder.java:125)
    at org.apache.kafka.connect.runtime.distributed.DistributedHerder$15.call(DistributedHerder.java:1199)
    at org.apache.kafka.connect.runtime.distributed.DistributedHerder$15.call(DistributedHerder.java:1195)
    at java.base/java.util.concurrent.FutureTask.run(FutureTask.java:264)
    at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128)
    at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:628)
    at java.base/java.lang.Thread.run(Thread.java:834)
Caused by: java.lang.ClassNotFoundException: org.apache.commons.logging.LogFactory
    at java.base/java.net.URLClassLoader.findClass(URLClassLoader.java:471)
    at java.base/java.lang.ClassLoader.loadClass(ClassLoader.java:589)
    at org.apache.kafka.connect.runtime.isolation.PluginClassLoader.loadClass(PluginClassLoader.java:104)
    at java.base/java.lang.ClassLoader.loadClass(ClassLoader.java:522)
    ... 18 more
```

Connector config:

```
{
  "tasks.max": "1",
  "connector.class": "com.nordstrom.kafka.connect.lambda.LambdaSinkConnector",
  "topics": "<my-topic>",
  "key.converter": "org.apache.kafka.connect.storage.StringConverter",
  "value.converter": "org.apache.kafka.connect.storage.StringConverter",
  "aws.region": "us-east-1",
  "aws.lambda.function.arn": "arn:aws:lambda:us-east-1:<my-arn>",
  "aws.lambda.batch.enabled": "true",
  "aws.lambda.invocation.mode": "ASYNC",
  "aws.lambda.invocation.failure.mode": "DROP"
}
```

v1.2.2 of the connector, and Kafka Connect from Kafka v2.12-2.4.1 on Ubuntu 18.04.04. I get my AWS credentials from the EC2 instance profile.

Answers:

username_1: I do not recognize this. Do you have other Connect plugins installed?

username_0: Yes I have the Confluent S3 and Lambda sinks both installed and working.

username_2: `LogFactory` is a class found in `commons-logging-1.2.jar`, which is usually part of the Kafka Connect distribution. For example, it can be found in `$CONFLUENT_HOME/share/java/kafka` as `commons-logging-1.2.jar`. It is not included in the kafka-connect-lambda jar to avoid version conflicts at runtime.

username_0: Makes sense. Is there anything I can troubleshoot to figure out why it isn't finding the library? I am using some Confluent connector plugins, but I am running the community Kafka Connect binary. The Confluent connector plugins are not distributed as uber JARs, and I notice that `commons-logging` is included in their `lib/` directories. I don't see it in my `kafka/libs`, though.

username_2: You are right. I don't see `commons-logging-1.2.jar` in the Apache tarball, but do see it in several places (including in connector directories) in a Confluent install.

As a work-around, you can put the logging jar file somewhere on your kafka-connect classpath, or in the same directory as the `kafka-connect-lambda.jar`.

We'll look into including the logging jar into a future release.

username_0: Thanks, that work-around worked

username_1: This is addressed with 1.3.0 release.

Status: Issue closed

|

noamt/elasticsearch-grails-plugin | 160325306 | Title: Error on Grails V2.5.0 with Elasticsearch plugin V0.1.0

Question:

username_0: org.springframework.beans.factory.BeanCreationException: Error creating bean with name 'searchableClassMappingConfigurator': Invocation of init method failed; nested exception is java.lang.IllegalArgumentException: Mapper for [applicableSeries] conflicts with existing mapping in other types[object mapping [applicableSeries] can't be changed from nested to non-nested]

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

Caused by: java.lang.IllegalArgumentException: Mapper for [applicableSeries] conflicts with existing mapping in other types[object mapping [applicableSeries] can't be changed from nested to non-nested]

at org.elasticsearch.index.mapper.MapperService.checkNewMappersCompatibility(MapperService.java:363)

at org.elasticsearch.index.mapper.MapperService.merge(MapperService.java:319)

at org.elasticsearch.index.mapper.MapperService.merge(MapperService.java:265)

at org.elasticsearch.cluster.metadata.MetaDataMappingService$2.execute(MetaDataMappingService.java:444)

at org.elasticsearch.cluster.service.InternalClusterService$UpdateTask.run(InternalClusterService.java:388)

at org.elasticsearch.common.util.concurrent.PrioritizedEsThreadPoolExecutor$TieBreakingPrioritizedRunnable.runAndClean(PrioritizedEsThreadPoolExecutor.java:231)

at org.elasticsearch.common.util.concurrent.PrioritizedEsThreadPoolExecutor$TieBreakingPrioritizedRunnable.run(PrioritizedEsThreadPoolExecutor.java:194)

... 3 more

Config:

Status: Issue closed

Answers:

username_0: Other Domain has the same name but different nodes

```
class C{
    static searchable={
        ....
        applicableSeries component: 'inner'
    }
}
``` |

famsf/pecl-pdo-4d | 214881741 | Title: 'fourd.h' file not found

Question:

username_0: `pecl install pdo_4D-0.3`

Generates error:

In file included from /private/tmp/pear/temp/pdo_4d/pdo_4d.c:28:

'fourd.h' file not found

Does anyone know what is causing this?

My PHP version is: 5.6.2

Status: Issue closed |

swsnu/swpp2019-team10 | 520899445 | Title: [New API Needed] About user backend

Question:

username_0: I think we need some modification / additional api:

1. I think our service doesn't **have to** require the user's `age`, `phone_number`, and `gender`. It would be better to keep them as optional fields. We can let users choose whether to submit this additional (non-essential) information when signing up, or edit it later on their `my page`.

2. We need an API that returns a user's `id` when the user's `username` (not `nickname`) is given (`-1` when not found), so that we can develop a duplicate-ID check feature.
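A minimal TypeScript sketch of the lookup contract in item 2, assuming an in-memory list of users. The `User` interface and the `findUserIdByUsername` / `isUsernameTaken` helpers are hypothetical names for illustration only; the team's real backend endpoint is implemented separately and may differ.

```typescript
// Hypothetical shape of a stored user; only the fields relevant to the lookup.
interface User {
  id: number;
  username: string;
  nickname: string;
}

// Returns the id of the user whose `username` (not `nickname`) matches, or -1 if none does.
function findUserIdByUsername(users: readonly User[], username: string): number {
  const match = users.find((u) => u.username === username);
  return match === undefined ? -1 : match.id;
}

// A duplicate-ID check then reduces to comparing the lookup result against -1.
function isUsernameTaken(users: readonly User[], username: string): boolean {
  return findUserIdByUsername(users, username) !== -1;
}
```

For example, given a user `{ id: 7, username: "bob", nickname: "Bobby" }`, looking up `"bob"` returns `7`, while looking up `"Bobby"` returns `-1` because nicknames are deliberately not matched.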

Answers:

username_1: Should I make branch from dev or master?

username_0: I think making branch is good.

I realized we should modify the `POST` api of `signup` to return the dict of

`{"id": int, "username":string, "<PASSWORD>":<PASSWORD>, "phone_number":string, "age":int, "gender":string}`

so that I can implement the uploading profile picture feature.

username_0: I think we need to modify the `signup` POST method to return the dictionary of newly created user information with given ID. Using that we can implement uploading of profile picture.

username_2: I have changed codes @username_0 mentioned.

1. signup post method returns id

2. The signup POST method and the user PUT method don't require ```age```, ```phone_number```, ```gender```.

3. A new signup_dupcheck POST method was added.

url : /api/signup_dupcheck/

requires : content-type = application/json, ```{'username': string}```

returns : json type ```{'id': some integer if username exist, -1 o.w.}``` |

ropensci/tidyhydat | 330731191 | Title: Battery Voltage Information

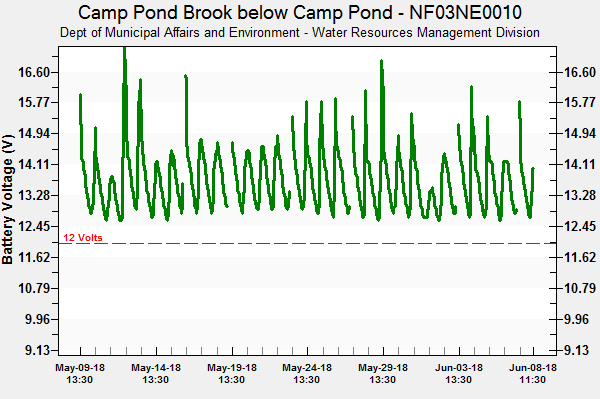

Question:

username_0: Is it possible to view the Battery Voltage data from tidyhydat.

Sometimes the transmission error in flow and level values can be captured using battery voltage information. An example of how battery voltage graph appears is shown below:

Answers:

username_1: Do you have a public-facing data source for this?

username_0: From our provincial site, but not through Water Survey of Canada: Stn# - NF03OB0042


Status: Issue closed

username_1: Until this is a public facing data source it will have to stay out of tidyhydat. |

creativecommons/creativecommons.github.io-source | 568985162 | Title: Back to top button not working on small screens once the user has reached footer.

Question:

username_0: ## Describe the bug

The Back to top button does not work on small screens (around 700px or less) once the user navigates to the footer of the page.

## To Reproduce

Steps to reproduce the behaviour:

1. Visit any page where the Back to top button is visible, scroll down to the footer of the page, and try clicking the Back to top button; it gets stuck.

## Expected behavior

It should scroll back to the top.


Answers:

username_0: @username_1 Whenever the Back to top button floats over the footer, it stops working on **small screen devices**. I have verified this on my phone and also by shrinking the browser window on my laptop.

username_1: Thanks for reporting this @username_0

username_2: Hey! I would like to work on this issue.

username_0: @username_2 please go ahead. To edit the content you will have to go to **templates** folder and then into **layout.html** file.

username_2: Hey, I think that this could be fixed by changing the `z-index` css property of the back-to-top button. But I realised that the CSS files are built and are not part of the repository. How should I modify the css file that is built?

username_1: CSS is built from this file in the repo: https://github.com/creativecommons/creativecommons.github.io-source/blob/master/webpack/sass/main.scss

username_0: @username_2 Yeah, that's perfect.

username_2: Waiting for review!

Status: Issue closed

|

peak/s5cmd | 611167113 | Title: Add 'Why s5cmd' section to README

Question:

username_0: We should keep a list of

- why this tool is written and why we think it's awesome

- why people should prefer it over awscli

- written articles about `s5cmd`. [1](https://aws.amazon.com/blogs/opensource/parallelizing-s3-workloads-s5cmd/), [2](https://medium.com/@joshua_robinson/s5cmd-for-high-performance-object-storage-7071352cc09d), [3](https://medium.com/@joshua_robinson/s5cmd-hits-v1-0-and-intro-to-advanced-usage-37ad02f7e895)

Status: Issue closed

Answers:

username_1: Closed by: #164 |

indiana-university/rivet-source | 400319633 | Title: Combobox/typeahead lookup component

Question:

username_0: ## New component

Another frequent request and Q3 priority is a typeahead lookup component where the user can start typing a search term and be presented with a list of matches from a set of data. This is another design pattern that is very tricky to actually make accessible to screen readers and keyboard-only users. The gov.UK team has done a lot of work and testing on their solution, which they have also open-sourced here:

https://github.com/alphagov/accessible-autocomplete

You can see some examples of the different configuration options here:

https://alphagov.github.io/accessible-autocomplete/examples/

@ahlord did some testing with this a while back, and it seems to be a pretty good solution to a difficult problem. The gov.uk plugin has a lot of nice options including the ability to pass in a `cssNamespace` option that can be used for theming. I've done a bit more on the theming and a simplified use case based on Austin's original prototype here:

https://codepen.io/levimcg/pen/gjRaLB

Answers:

username_1: [mtwagner commented on Oct 25, 2018](https://github.iu.edu/UITS/rivet-source/issues/332#issuecomment-25367)

***

Will the source be able to be the results returned by an AJAX call? Thanks. Sorry about the duplicate.

username_1: [lmcgrana commented on Oct 25, 2018](https://github.iu.edu/UITS/rivet-source/issues/332#issuecomment-25368)

***

@mtwagner No problem at all! That's a good question. My initial thoughts are to make the source/data that gets filtered part of a config option. This one will be a bit different from other Rivet JavaScript components/plugins in that each autocomplete/combo box will need to be instantiated and configured so that you can control things like the data source.

So I'm thinking something like:

```js
// Proposed API: each autocomplete is instantiated with its own config
const myFirstAutocomplete = new RivetAutoComplete({
  data: sourceData,
  // Other config options
});
```

I think we could design it so that `sourceData` is either:

- An array of synchronous/static results (strings), e.g. `['<NAME>', '<NAME>', '<NAME>']`
- A function that returns an array of results (strings)

That way, if `data` in the example above is a function, you could make your AJAX calls there. I haven't given this tons of thought, but that's what I'd like to end up with. I'm still working my way through this one, as it's a real beast in terms of making it accessible/usable for folks using screen readers.
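A language-neutral sketch (written in Python here, not Rivet's JavaScript) of how a `data` option that accepts either a static list or a function could be normalized before filtering. Passing the current query to the function and using a case-insensitive substring match are assumptions for illustration, not part of the proposal.

```python
from typing import Callable, List, Union

# Hypothetical shape of the proposed `data` option: either a static list of
# strings, or a function (e.g. one that performs an AJAX request) returning one.
Source = Union[List[str], Callable[[str], List[str]]]


def get_matches(data: Source, query: str) -> List[str]:
    """Return the items from `data` that contain `query`, ignoring case."""
    # Assumption: a function source receives the current query so it can
    # fetch matching results remotely; a list source is used as-is.
    items = data(query) if callable(data) else data
    needle = query.casefold()
    return [item for item in items if needle in item.casefold()]
```

For example, `get_matches(["Apple", "Apricot", "Banana"], "ap")` returns `["Apple", "Apricot"]`; a function source such as `lambda q: ["Grape", "Grapefruit"]` is called first and its results are filtered the same way, so the component code does not need to care which form it was given.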

Here's a link to a very early prototype I started. There's still a lot of work to be done in terms of accessibility (keyboard navigation, labeling, etc.).

https://codepen.io/levimcg/pen/XPyxyW

Would love to hear some thoughts on how you see it working and what all types of options you'd like to have. Thanks! 🙌

Status: Issue closed

|

lerna/lerna | 350556857 | Title: How to ignore node_modules of internal dependencies on bootstrapping packages

Question:

username_0: First, thank you for the nice monorepo manager. I'm working on Angular applications as my packages. For internal dependencies, when package1 is installed in the `node_modules` of package2 and package1 includes its own `node_modules`, I get an error like "not found index.ts". After adding it in tsconfig.json, I face another issue, "No NgModule metadata found for 'AppModule'", when I try to run or build the app. But if I remove the `node_modules` folder from package2/node_modules/@MyPackages/package1/**node_modules**, everything is fine. So how can I ignore the `node_modules` of internal dependencies when bootstrapping packages?

## Environment

- `"@angular/core": "^6.1.2"`
- `"@angular/cli": "^6.1.3"`
- `"typescript": "~2.9.2"`

| Executable | Version |
| ---: | :--- |
| `lerna --version` | ^2.11.0 |
| `npm --version` | 5.6.0 |
| `node --version` | v8.11.3 |

| OS | Version |
| --- | --- |
| Windows 7 | |


Answers:

username_1: This looks like a TypeScript or Angular bug, not Lerna.

One thing to note is that lerna manages npm packages, not magical ng-packagr artifacts. Wherever your leaf package.json's [`main`](https://docs.npmjs.com/files/package.json#main) property points to is where node will resolve `import` (or `require()`) paths.
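To make the point about `main` concrete, here is a simplified Python sketch of where Node looks when a package directory is required: the `main` field of its package.json, or `index.js` when `main` is absent. It deliberately ignores the newer `exports` field and Node's extension and directory probing, so treat it as an approximation rather than Node's actual resolver.

```python
import posixpath
from typing import Any, Dict


def entry_point(package_dir: str, manifest: Dict[str, Any]) -> str:
    """Approximate the file Node loads for `require(package_dir)`.

    `manifest` is the parsed package.json of that package.
    """
    # Fall back to index.js in the package root when "main" is missing.
    main = manifest.get("main") or "index.js"
    return posixpath.normpath(posixpath.join(package_dir, main))
```

With a hypothetical `{"main": "./dist/index.js"}` in package1's package.json, `entry_point("node_modules/@MyPackages/package1", ...)` points at `node_modules/@MyPackages/package1/dist/index.js`, which is why the built output (not the TypeScript sources) is what other packages end up importing.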

username_0: You're right. This issue appeared after I upgraded Angular from 5 to 6. But can I bootstrap the internal packages while ignoring the `node_modules` folder in Lerna? That approach would solve my issue. Right now I'm doing it manually.

username_1: I'm afraid I don't understand what you mean by "bootstrap internal packages while ignoring `node_modules`".

username_0: Actually, the packages I'm working on are runnable applications as well, and I face the issue only when I want to run each package. I think there is nothing Lerna can do for me, because the concept of Lerna is different from what I expected: Lerna only creates a shortcut (symlink) for each internal dependency. Thanks again for your quick response.

username_1: Ah, yes, that sounds like it. Happy to help clarify usage expectations.

Status: Issue closed

username_2: @username_0, how did you fix it? I got the same error, and for now I'm fixing it by removing node_modules manually |

buildkite/lifecycled | 614403655 | Title: Using lifecycled to handle Autoscaling terminations and spot terminations

Question:

username_0: Is using a single lifecycled daemon to listen for and respond to both Autoscaling terminations and Spot terminations a use case that is expected to work?

I'm seeing some inconsistent results when attempting to use it in this fashion.

When I start the daemon with `--no-spot` it is able to handle the Autoscaling terminations just fine, so thanks! However, when I remove that flag and attempt to have it handle the Spot termination warning events as well, I'm not having success.

Additionally, when I restart the daemon (without changing configurations), sometimes I see it logging that the `spot` listener is polling SQS for messages, which, to me, is unexpected. Other times the `spot` listener logs that it is polling EC2 metadata for spot termination notices, which is closer to what I expect. This happens on the same host without changing configurations; it appears that just stopping and starting the daemon results in different behavior.

Example log excerpt with expected log entries:

```

time="2020-05-07T18:35:39Z" level=info msg="Looking up instance id from metadata service"

time="2020-05-07T18:35:39Z" level=info msg="Starting listener" instanceId=i-instanceid listener=spot

time="2020-05-07T18:35:39Z" level=info msg="Starting listener" instanceId=i-instanceid listener=autoscaling

time="2020-05-07T18:35:39Z" level=info msg="Waiting for termination notices" instanceId=i-instanceid

time="2020-05-07T18:35:39Z" level=debug msg="Creating sqs queue" instanceId=i-instanceid listener=autoscaling queue=lifecycled-i-instanceid

time="2020-05-07T18:35:39Z" level=debug msg="Subscribing queue to sns topic" instanceId=i-instanceid listener=autoscaling topic="arn:aws:sns:us-east-1:account:terminate-hook-name"

time="2020-05-07T18:35:40Z" level=debug msg="Polling sqs for messages" instanceId=i-instanceid listener=autoscaling queueURL="https://sqs.us-east-1.amazonaws.com/account/lifecycled-i-instanceid"

time="2020-05-07T18:35:44Z" level=debug msg="Polling ec2 metadata for spot termination notices" instanceId=i-instanceid listener=spot

time="2020-05-07T18:35:49Z" level=debug msg="Polling ec2 metadata for spot termination notices" instanceId=i-instanceid listener=spot

time="2020-05-07T18:35:54Z" level=debug msg="Polling ec2 metadata for spot termination notices" instanceId=i-instanceid listener=spot

time="2020-05-07T18:35:59Z" level=debug msg="Polling ec2 metadata for spot termination notices" instanceId=i-instanceid listener=spot

time="2020-05-07T18:36:00Z" level=debug msg="Polling sqs for messages" instanceId=i-instanceid listener=autoscaling queueURL="https://sqs.us-east-1.amazonaws.com/account/lifecycled-i-instanceid"

```

Example log excerpt with unexpected log entries:

```

time="2020-05-07T23:09:21Z" level=info msg="Looking up instance id from metadata service"

time="2020-05-07T23:09:21Z" level=info msg="Starting listener" instanceId=i-instanceid listener=spot

time="2020-05-07T23:09:21Z" level=info msg="Starting listener" instanceId=i-instanceid listener=autoscaling

time="2020-05-07T23:09:21Z" level=info msg="Waiting for termination notices" instanceId=i-instanceid

time="2020-05-07T23:09:21Z" level=debug msg="Creating sqs queue" instanceId=i-instanceid listener=autoscaling queue=lifecycled-i-instanceid

time="2020-05-07T23:09:21Z" level=debug msg="Creating sqs queue" instanceId=i-instanceid listener=spot queue=lifecycled-i-instanceid

time="2020-05-07T23:09:21Z" level=debug msg="Subscribing queue to sns topic" instanceId=i-instanceid listener=autoscaling topic="arn:aws:sns:us-east-1:account:terminate-hook-name"

time="2020-05-07T23:09:21Z" level=debug msg="Subscribing queue to sns topic" instanceId=i-instanceid listener=spot topic="arn:aws:sns:us-east-1:account:terminate-hook-name"

time="2020-05-07T23:09:21Z" level=debug msg="Polling sqs for messages" instanceId=i-instanceid listener=spot queueURL="https://sqs.us-east-1.amazonaws.com/account/lifecycled-i-instanceid"

time="2020-05-07T23:09:22Z" level=debug msg="Polling sqs for messages" instanceId=i-instanceid listener=autoscaling queueURL="https://sqs.us-east-1.amazonaws.com/account/lifecycled-i-instanceid"

```

Answers:

username_1: Hmmm, that is odd @username_0. Did #85 fix the issue for you?

username_0: Yes, it did. :)

Status: Issue closed

|

kubevirt/cluster-network-addons-operator | 650127872 | Title: [ref_id:1182]Probes for readiness should succeed [test_id:1200][posneg:positive]with working HTTP probe and http server

Question:

username_0: /kind bug

/triage build-officer

**What happened**:

`[ref_id:1182]Probes for readiness should succeed [test_id:1200][posneg:positive]with working HTTP probe and http server`

`/root/go/src/kubevirt.io/kubevirt/vendor/github.com/onsi/ginkgo/extensions/table/table_entry.go:43 Timed out after 60.000s. Expected <v1.ConditionStatus>: False to equal <v1.ConditionStatus>: True /root/go/src/kubevirt.io/kubevirt/tests/probes_test.go:82`

[Prow job log](https://prow.apps.ovirt.org/view/gcs/kubevirt-prow/pr-logs/pull/kubevirt_kubevirt/3726/pull-kubevirt-e2e-k8s-1.18/1278299846477877248)

**Lane:**

pull-kubevirt-e2e-k8s-1.18

Answers:

username_0: /close

@username_1 - why is this appearing on the CNAO flakefinder report page?

https://storage.googleapis.com/kubevirt-prow/reports/flakefinder/kubevirt/cluster-network-addons-operator/flakefinder-2020-07-02-024h.html

username_1: Because the [job configuration](https://github.com/kubevirt/project-infra/blob/master/github/ci/prow/files/jobs/kubevirt/cluster-network-addons-operator/cluster-network-addons-operator-periodics.yaml) is missing required parameters: `org` and `repo` have to be set, otherwise they both default to `kubevirt`.

username_1: /cc @phoracek @qinqon |

RustAudio/cpal | 834415997 | Title: PipeWire support

Question:

username_0: PipeWire will soon be replacing both JACK and PulseAudio on Linux starting with Fedora 34 which will be released in a few weeks. Arch currently has packages that make it easy to switch to PipeWire. I am unclear about Ubuntu's plans and just [asked them about that](https://bugs.launchpad.net/ubuntu/+source/pipewire/+bug/1802533/comments/36).

PipeWire reimplements the JACK and PulseAudio APIs. Currently the PipeWire developer does not recommend using the new PipeWire API if applications already work well with PipeWire via JACK or PulseAudio. I am not familiar enough with cpal to know, but there might be some benefits to using the PipeWire API instead of using PipeWire via the JACK API.

I have attempted to test cpal's JACK backend with PipeWire but have not gotten it to work. The `pw-uninstalled.sh` script sets the `LD_LIBRARY_PATH` environment variable so PipeWire's reimplementation of libjack is loaded before JACK's original library. I know Rust links statically to other Rust libraries but I am unclear how Rust links to dynamic C libraries. Maybe the `LD_LIBRARY_PATH` trick does not work with Rust?

```

cpal on master is 📦 v0.13.2 via 🦀 v1.49.0

❯ cargo run --release --example beep --features jack

Finished release [optimized] target(s) in 0.03s

Running `target/release/examples/beep`

Output device: default

Default output config: SupportedStreamConfig { channels: 2, sample_rate: SampleRate(44100), buffer_size: Range { min: 170, max: 1466015503 }, sample_format: F32 }

memory allocation of 5404319552844632832 bytes failed

fish: “cargo run --release --example b…” terminated by signal SIGABRT (Abort)

cpal on master is 📦 v0.13.2 via 🦀 v1.49.0

❯ ~/sw/pipewire/pw-uninstalled.sh

Using default build directory: /home/be/sw/pipewire/build

Welcome to fish, the friendly interactive shell

Type `help` for instructions on how to use fish

cpal on master is 📦 v0.13.2 via 🦀 v1.49.0

❯ cargo run --release --example beep --features jack

Finished release [optimized] target(s) in 0.03s

Running `target/release/examples/beep`

Output device: default

Default output config: SupportedStreamConfig { channels: 2, sample_rate: SampleRate(44100), buffer_size: Range { min: 170, max: 1466015503 }, sample_format: F32 }

memory allocation of 5404319552844632832 bytes failed

fish: “cargo run --release --example b…” terminated by signal SIGABRT (Abort)

```

Related discussions regarding PipeWire and PortAudio:

https://gitlab.freedesktop.org/pipewire/pipewire/-/issues/130

https://github.com/PortAudio/portaudio/issues/425

Answers:

username_0: The PipeWire developer is very responsive and working hard on PipeWire every day. If anyone is interested in working on this for cpal, I recommend getting in touch with him through [PipeWire's issue tracker](https://gitlab.freedesktop.org/pipewire/pipewire/-/issues) and/or #pipewire on Freenode.

username_1: I'm running Arch + pipewire{,-jack,-pulse} and the jack example works here. What distro do you use?

```

Running `target/release/examples/beep`

Output device: default

Default output config: SupportedStreamConfig { channels: 2, sample_rate: SampleRate(44100), buffer_size: Range { min: 3, max: 4194304 }, sample_format: F32 }

```

username_1: Oh, actually I made a mistake; the above one is probably using PipeWire's ALSA integration.

When testing JACK you should run with `cargo run --release --example beep --features jack -- --jack`.

username_1: When I run with pipewire-jack it doesn't crash but doesn't make sound either. Strange.

username_1: Seems that we have the same regex problem as PortAudio. Fix incoming.

username_0: Hopefully it doesn't take 3 days to figure out how to find and replace a string in Rust like it did for me in C :laughing:

username_0: I recommend reading the [PipeWire wiki](https://gitlab.freedesktop.org/pipewire/pipewire/-/wikis/FAQ). There is a lot of good information there.

username_1: FYI it's https://github.com/RustAudio/cpal/blob/5cfa09042c629c1ba39bbac1a6ae9278847a9ac1/src/host/jack/stream.rs#L152 and https://github.com/RustAudio/cpal/blob/5cfa09042c629c1ba39bbac1a6ae9278847a9ac1/src/host/jack/stream.rs#L178. But I'm planning to change to a different logic based on [mpv's](https://github.com/mpv-player/mpv/blob/5824d9fff829f8bb2beebe0b82df88c8e0330115/audio/out/ao_jack.c#L146).
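A rough Python sketch of the flag-based idea, assuming mpv-style logic of asking the server for physical input ports when no port name is configured, instead of matching a hardcoded `system:` name. The `Port` type and the port names in the check are illustrative only; this is not the rust-jack or cpal API.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Port:
    name: str
    is_input: bool     # JACK "input" ports receive audio, e.g. speaker outputs
    is_physical: bool  # backed by a hardware device rather than another client


def playback_targets(ports: List[Port], channels: int) -> List[str]:
    """Return up to `channels` physical input ports, whatever they are named.

    Selecting by flags instead of names like "system:playback_1" keeps
    working when the server (e.g. PipeWire's JACK implementation) names
    its hardware ports differently.
    """
    physical = [p.name for p in ports if p.is_input and p.is_physical]
    return physical[:channels]
```

The stream's output ports would then be connected to the returned names in order, one per channel.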

username_0: Hardcoding the `system` ports in JACK is definitely not right and won't work with PipeWire at all. I am wondering why that was done. Was it just a quick hack to get something working, or does this reflect a bigger problem in the cpal API? PortAudio's JACK implementation is quite quirky. Normally JACK applications create all their ports, which any application communicating with the server can connect to arbitrarily. But PortAudio's abstractions flip that relationship upside down, so PortAudio creates a port when the application asks to start a stream; none of the PortAudio application's ports are available for other applications to connect to until the PortAudio application opens the stream. Does cpal's API have the same problem?

username_1: As usual, our development effort is limited and the JACK backend is quite close to a proof-of-concept. We do care about making the abstractions not leaky, though.

We register a port when you create a `Stream`... I hope it's fine? You can then start or stop the `Stream` through the methods.

https://github.com/RustAudio/cpal/blob/5cfa09042c629c1ba39bbac1a6ae9278847a9ac1/src/host/jack/stream.rs#L37

username_0: As long as the application can create JACK/PipeWire ports for other applications or the session manager to connect to without the cpal application necessarily using all of them, I think it can work. There may need to be a mechanism for signaling the cpal application when another application or hardware device has connected to/disconnected from the cpal application's port, but I'm not familiar enough with any of these APIs to be sure about that. That may be related to hotplug support (#373).

username_0: This means that a CPAL application using PipeWire could request a small buffer size for low latency. When the application goes idle, it could call `Stream::pause` and yield control of the buffer size back to PipeWire. This would allow keeping a low-latency application open in the background, pausing it, then watching a YouTube video in Firefox without having to close any applications or mess with reconfiguring anything. If I force a JACK application using the PipeWire JACK reimplementation to use a small buffer size with the `PIPEWIRE_LATENCY` environment variable, YouTube videos in Firefox have glitchy audio while the other application remains running.

JACK has an API somewhat like this with [int jack_deactivate (jack_client_t * client)](https://jackaudio.org/api/group__ClientFunctions.html#ga2c6447b766b13d3aa356aba2b48e51fa), but this does not fit with CPAL's abstractions. To use this, CPAL would need to track the state of each Device's streams and call `jack_deactivate` when all the CPAL Streams for a Device are paused.

Related discussion on the PipeWire issue tracker: https://gitlab.freedesktop.org/pipewire/pipewire/-/issues/722
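One way to picture the bookkeeping described above: a per-device set of playing streams that triggers activation when the first stream starts and deactivation when the last one is paused. This is a hypothetical Python sketch; `DeviceActivation` is not part of cpal, and the `activate`/`deactivate` callbacks merely stand in for calls such as `jack_activate`/`jack_deactivate`.

```python
from typing import Callable, Set


class DeviceActivation:
    """Hypothetical per-device helper: keep the client active only while
    at least one of the device's streams is playing."""

    def __init__(self, activate: Callable[[], None], deactivate: Callable[[], None]) -> None:
        self._activate = activate      # stand-in for e.g. jack_activate()
        self._deactivate = deactivate  # stand-in for e.g. jack_deactivate()
        self._playing: Set[int] = set()

    def play(self, stream_id: int) -> None:
        was_idle = not self._playing
        self._playing.add(stream_id)
        if was_idle:
            self._activate()  # first playing stream re-activates the client

    def pause(self, stream_id: int) -> None:
        if stream_id not in self._playing:
            return  # pausing an already-paused stream changes nothing
        self._playing.remove(stream_id)
        if not self._playing:
            self._deactivate()  # last stream paused: hand latency control back
```

Tracking stream IDs in a set rather than a bare counter makes repeated `play`/`pause` calls on the same stream harmless.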

username_0: @username_1 if you or anyone else works on a new PipeWire backend for CPAL, I would be happy to help test it. I am afraid writing it myself would be above my skill level though.

username_2: It kinda sounds like you have the most domain expertise here. Why not have a go?

username_0: I think that would be a bit ambitious for a first Rust project.

username_0: FWIW, here is SDL's PipeWire backend: https://github.com/libsdl-org/SDL/pull/4094

username_0: This would be great for [Whisperfish](https://gitlab.com/whisperfish/whisperfish/-/issues/73)!

username_3: Any updates on this?

username_1: As far as I have tested, it seems the JACK backend (+pw-jack) works fine for low-latency use cases on PipeWire. If you have any specific thing missing that requires directly integrating with PipeWire, then please let me know with a comment.

username_4: I've been looking at developing a PW backend, but one major problem I see is the main loop.

A PipeWire application requires a main loop object to be created. An application calls the run method, which never returns (it can be terminated, but you do it through sources). This would be a major problem with CPAL and anything consuming it, since it would never allow anything to run beyond the PW main loop. I'm guessing other audio backends do this, but I can't seem to find any way of stepping through the loop without using sources (e.g. timers). Thoughts about getting around this?

username_1: I don't really know, but can you run that loop on another thread? It's common to create additional helper threads in cpal backends.

username_4: I'll look at doing that -- I didn't think of that lol.
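A minimal sketch of the helper-thread approach suggested above, in Python rather than Rust, with a plain queue standing in for the PipeWire main loop and its event sources. None of this is the pipewire-rs API; it only shows the shape: the blocking loop owns its thread, other threads talk to it through a channel, and quitting is itself a message.

```python
import queue
import threading
from typing import List


class LoopThread:
    """Run a blocking event loop on a helper thread so the caller never blocks.

    The loop body is a stand-in for a PipeWire main loop, not the real API.
    """

    _QUIT = object()  # sentinel that plays the role of a "quit" event source

    def __init__(self) -> None:
        self._commands: "queue.Queue[object]" = queue.Queue()
        self.handled: List[str] = []
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def _run(self) -> None:
        # Like a main loop's run(), this only returns when told to quit
        # through one of its event sources (here, the command queue).
        while True:
            command = self._commands.get()
            if command is self._QUIT:
                return
            self.handled.append(command)

    def send(self, command: str) -> None:
        """Hand a command to the loop thread from any other thread."""
        self._commands.put(command)

    def stop(self) -> None:
        """Ask the loop to quit and wait for its thread to finish."""
        self._commands.put(self._QUIT)
        self._thread.join()

    def is_running(self) -> bool:
        return self._thread.is_alive()
```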

username_0: With https://github.com/RustAudio/rust-jack/pull/154 I confirm that the cpal examples work with PipeWire's implementation of JACK.

The `enumerate` example is only listing the ports cpal creates. I don't know if that's an issue with the `enumerate` example or cpal:

```

cpal on HEAD (59ee8ee) [!] is 📦 v0.13.4 via 🦀 v1.56.1

❯ cargo run --release --example enumerate --features jack

Finished release [optimized] target(s) in 0.05s

Running `target/release/examples/enumerate`

Supported hosts:

[Jack, Alsa]

Available hosts:

[Jack, Alsa]

JACK

Default Input Device:

Some("cpal_client_in")

Default Output Device:

Some("cpal_client_out")

Devices:

1. "cpal_client_in"

Default input stream config:

SupportedStreamConfig { channels: 2, sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

All supported input stream configs:

1.1. SupportedStreamConfigRange { channels: 1, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

1.2. SupportedStreamConfigRange { channels: 2, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

1.3. SupportedStreamConfigRange { channels: 4, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

1.4. SupportedStreamConfigRange { channels: 6, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

1.5. SupportedStreamConfigRange { channels: 8, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

1.6. SupportedStreamConfigRange { channels: 16, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

1.7. SupportedStreamConfigRange { channels: 24, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

1.8. SupportedStreamConfigRange { channels: 32, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

1.9. SupportedStreamConfigRange { channels: 48, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

1.10. SupportedStreamConfigRange { channels: 64, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

Default output stream config:

SupportedStreamConfig { channels: 2, sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

All supported output stream configs:

1.1. SupportedStreamConfigRange { channels: 1, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

1.2. SupportedStreamConfigRange { channels: 2, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

1.3. SupportedStreamConfigRange { channels: 4, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

1.4. SupportedStreamConfigRange { channels: 6, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

1.5. SupportedStreamConfigRange { channels: 8, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

1.6. SupportedStreamConfigRange { channels: 16, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

1.7. SupportedStreamConfigRange { channels: 24, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

1.8. SupportedStreamConfigRange { channels: 32, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

1.9. SupportedStreamConfigRange { channels: 48, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

1.10. SupportedStreamConfigRange { channels: 64, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

2. "cpal_client_out"

Default input stream config:

SupportedStreamConfig { channels: 2, sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

All supported input stream configs:

2.1. SupportedStreamConfigRange { channels: 1, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

2.2. SupportedStreamConfigRange { channels: 2, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

2.3. SupportedStreamConfigRange { channels: 4, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

2.4. SupportedStreamConfigRange { channels: 6, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

2.5. SupportedStreamConfigRange { channels: 8, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

2.6. SupportedStreamConfigRange { channels: 16, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

2.7. SupportedStreamConfigRange { channels: 24, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

2.8. SupportedStreamConfigRange { channels: 32, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

2.9. SupportedStreamConfigRange { channels: 48, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

2.10. SupportedStreamConfigRange { channels: 64, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

[Truncated]

SupportedStreamConfig { channels: 2, sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

All supported output stream configs:

2.1. SupportedStreamConfigRange { channels: 1, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

2.2. SupportedStreamConfigRange { channels: 2, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

2.3. SupportedStreamConfigRange { channels: 4, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

2.4. SupportedStreamConfigRange { channels: 6, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

2.5. SupportedStreamConfigRange { channels: 8, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

2.6. SupportedStreamConfigRange { channels: 16, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

2.7. SupportedStreamConfigRange { channels: 24, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

2.8. SupportedStreamConfigRange { channels: 32, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

2.9. SupportedStreamConfigRange { channels: 48, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

2.10. SupportedStreamConfigRange { channels: 64, min_sample_rate: SampleRate(48000), max_sample_rate: SampleRate(48000), buffer_size: Range { min: 1024, max: 1024 }, sample_format: F32 }

ALSA

Default Input Device:

Some("default")

Default Output Device:

Some("default")

Devices:

...

```

username_0: https://github.com/RustAudio/cpal/pull/624 fixes the build of the `jack` feature when using PipeWire.

username_5: I've started working on a PipeWire host here: https://github.com/username_5/cpal/tree/pipewire-host

Any help/thoughts would be appreciated.

I think I'm fairly far along (I haven't pushed some changes yet), but I can't find any way, aside from the PipeWire Stream API, to actually write/read data, and I'm not sure if my node creation code is correct.

username_0: Cool! Could you make a draft pull request?

username_0: The pw_filter API does not have Rust bindings yet. This is in progress: https://gitlab.freedesktop.org/pipewire/pipewire-rs/-/merge_requests/112

username_0: If you're creating pw_nodes manually then I don't think you strictly need pw_filter, but my understanding is that using pw_filter would be easier to implement. |

ppb/pursuedpybear | 605828244 | Title: Gamepad Support

Question:

username_0: We should support gamepads.

* [SDL docs](https://wiki.libsdl.org/CategoryGameController)

* [MDN on gamepad api](https://developer.mozilla.org/en-US/docs/Web/API/Gamepad_API)

This should include:

* Normalization of layout (SDL: [GameControllerDB](https://github.com/gabomdq/SDL_GameControllerDB))

* Hotplug support

* Metadata about button labels

* Multiple device handling

Note that this does not include joysticks, basically because none of the underlying libraries seem to care about them any more.

This might be significantly easier with #97.
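For the layout-normalization item, a rough sketch of reading one GameControllerDB mapping line, assuming the usual SDL mapping-string layout of `GUID,name,key:value,...` with a trailing comma. The sample line in the note below is made up for illustration, and the parser assumes controller names contain no commas.

```python
from typing import Dict, NamedTuple


class ControllerMapping(NamedTuple):
    guid: str
    name: str
    # SDL element name -> raw element, e.g. "a" -> "b0" (button 0),
    # "leftx" -> "a0" (axis 0); extra keys such as "platform" also land here.
    fields: Dict[str, str]


def parse_mapping(line: str) -> ControllerMapping:
    """Parse one GameControllerDB-style line: GUID,name,key:value,..."""
    guid, name, *entries = line.strip().split(",")
    fields: Dict[str, str] = {}
    for entry in entries:
        if not entry:
            continue  # entries typically end with a trailing comma
        key, _, value = entry.partition(":")
        fields[key] = value
    return ControllerMapping(guid, name, fields)
```

Parsing `00000000000000000000000000000000,Example Pad,a:b0,b:b1,leftx:a0,platform:Linux,` yields `fields["a"] == "b0"`, so a game could expose raw button 0 as the normalized "A" button regardless of the device's own numbering.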

Answers:

username_0: Pardon, SDL does have a separate [joystick api](https://wiki.libsdl.org/CategoryJoystick) but the web does not have a distinct API.

username_1: Also, the SDL_GameControllerDatabase: https://github.com/gabomdq/sdl_gamecontrollerdb |

simple-icons/simple-icons | 873097743 | Title:

Question:

username_0: <!--

We won't add non-brand icons or anything related to illegal services.

If in doubt, open an issue and we'll have a look.

Before opening a new issue please search for duplicate or closed

issues or PRs. If you find one for the brand you're requesting then

leave a comment on it or add a reaction to the original post.

When requesting a new icon please provide the following information:

-->

**Brand Name:**

**Website:**

**Alexa rank:**

<!-- The Alexa rank can be retrieved at https://www.alexa.com/siteinfo/

Please see our contributing guidelines for more details on how we

assess a brand's popularity. -->

**Official resources for icon and color:**

<!-- for example media kits, brand guidelines, SVG files, etc. -->

<!-- For more details on our processes please see our contributing

guidelines, which can be found at

https://github.com/simple-icons/simple-icons/blob/develop/CONTRIBUTING.md -->

Answers:

username_1: Hi @username_0 - could you please elaborate on what your issue is?

PostgreSQL is already part of our library, and is actually in discussion for update at the moment (see #5561). I'll look to close this issue out over the weekend as a duplicate, unless there's another reason for you opening it.

Status: Issue closed

|

Azure/custom-script-extension-linux | 507332348 | Title: Does not work for Azure VM scale set

Question:

username_0: Using a command

````
az vmss extension set --resource-group $resourceGroup \
    --vmss-name $ssName \
    --settings customscript.json \
    --protected-settings customscript-protected.json \
    --name CustomScript --publisher Microsoft.Azure.Extensions --version 2.0
````

to install the Custom Script extension and run an initialization script on every Linux (Ubuntu 18.04 LTS) VM in a scale set, but nothing happens. The folder `/var/log/azure/custom-script/` is empty, and waagent.log does not contain a single line pertaining to the Custom Script extension. On the other hand, the equivalent command against a single VM, `az vm extension set`, works fine and does what's required.

Any thoughts on how to fix that?

Answers:

username_0: CustomScript only executes automatically when the VM scale set is created with the automatic instance upgrade option. Otherwise, each instance must be manually upgraded (for example with `az vmss update-instances`) to execute the Custom Script.

Status: Issue closed

|

bagustris/SER_ICSigSys2019 | 913615429 | Title: model.add(LSTM(512, return_sequences=True, input_shape=(100, 34)))

Question:

username_0: Why is input_shape equal to (100, 34)? Does 100 mean time_steps? How should I understand it? Thank you very much!

Answers:

username_0: I am very glad to receive your reply. I got it. Thank you very much!

I would like to ask how long each frame is. Is it between 20 and 30 ms?

username_1: Typically a frame is between 15 and 30 ms long. But in this paper I used 200 ms; see the [save_feature.py code](../blob/master/code/python_files/save_feature.py) in this repo (window_n = 0.2).

So it makes sense that the number of time steps is only 200 sequences.
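In Keras, `input_shape=(100, 34)` for an LSTM excludes the batch dimension and reads as (time steps, features per step). How many time steps an utterance yields depends on the window and hop length. The sketch below illustrates that relationship under assumptions that are not taken from save_feature.py: a 16 kHz sample rate, no overlap for the 200 ms case, a 10 ms hop for the 25 ms case, and utterance lengths chosen for illustration. `num_frames` and `pad_or_truncate` are hypothetical helpers.

```python
SR = 16_000  # assumed sample rate in Hz


def num_frames(n_samples: int, win: int, hop: int) -> int:
    """Number of full analysis windows of `win` samples, advancing by `hop`."""
    if n_samples < win:
        return 0
    return 1 + (n_samples - win) // hop


def pad_or_truncate(frames: list, max_len: int, feat_dim: int) -> list:
    """Force a (time_steps, features) sequence to exactly max_len rows,
    zero-padding short utterances and cutting long ones."""
    frames = frames[:max_len]
    return frames + [[0.0] * feat_dim for _ in range(max_len - len(frames))]


if __name__ == "__main__":
    # 200 ms windows, hop equal to window: a 20 s utterance gives 100 steps.
    print(num_frames(20 * SR, SR * 200 // 1000, SR * 200 // 1000))  # 100
    # 25 ms windows with a 10 ms hop: a 30 s utterance gives ~3000 steps.
    print(num_frames(30 * SR, SR * 25 // 1000, SR * 10 // 1000))  # 2998
```

Padding or truncating every utterance to the same number of rows is one common way to get the fixed first dimension that `input_shape` expects.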

In my other research, I used 25 ms frames, resulting in around 3000 time steps, for instance here:

https://github.com/username_1/ravdess_song_speech/ |

vijayrkn/AzFunTools | 749951545 | Title: Better experience when adding bindings

Question:

username_0: https://twitter.com/gabrymartinez/status/1327695777058189312

One more +1000 for a better experience when adding bindings. Could you please also add this to VS Code?

Answers:

username_0: https://twitter.com/uploadtocloud/status/1327681929597116416

It would be amazing if Visual Studio had better binding support.

E.g. when you type “[Table” VS would suggest/autocomplete the table binding. |

ceres-solver/ceres-solver | 1034409139 | Title: jet.h has some errors

Question:

username_0: When I try to run helloworld.cc, it shows the following error. Could someone please tell me how to fix it?

Answers:

username_1: I am going to close this issue, if you have more questions/concerns please feel free to re-open it.

Status: Issue closed

|

iterative/dvc | 753900428 | Title: dvc install --use-pre-commit doesn't install pre-push and post-checkout

Question:

username_0: ## Bug Report

`dvc install` can't be used if a repository is already using `pre-commit`. While you can use `dvc install --use-pre-commit`, the `pre-push` and `post-checkout` hooks are not added to the Git hooks. This behavior is not documented and feels misleading. It may be a personal opinion, but I would expect there to be a way to add those hooks when using `pre-commit`, and right now that doesn't appear to be the case. To get all of the hooks, I had to delete the `pre-commit` hooks and run the following:

```console

dvc install
pre-commit install
dvc install --use-pre-commit

```

This seems overly difficult and needless.

Please let me know if I am just missing something obvious.

```console

DVC version: 1.10.2 (pip)
---------------------------------
Platform: Python 3.8.2 on macOS-10.16-x86_64-i386-64bit
Supports: http, https, s3
Cache types: reflink, hardlink, symlink
Caches: local
Remotes: s3
Repo: dvc, git

```

Answers:

username_1: This is really a pre-commit behavior thing, and is not DVC specific.

The `--use-pre-commit` flag just modifies your existing `.pre-commit-config.yaml` and adds the DVC configuration. If you are already using pre-commit then you still have to tell pre-commit to install the hook stages you wish to use. By default pre-commit only ever installs the `pre-commit` hook, and will ignore any other stages unless you explicitly tell pre-commit to install them.

So for a repo which is already using pre-commit you should be running:

```

pre-commit install --hook-type pre-push --hook-type post-checkout
dvc install --use-pre-commit

```

(this can be done in either order)
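To confirm which hooks Git will actually call, it is enough to look in `.git/hooks`. This is a minimal sketch, assuming the default hooks directory (it ignores a custom `core.hooksPath`) and the three hook names discussed in this issue; `missing_hooks` is a hypothetical helper, not part of DVC or pre-commit.

```python
from __future__ import annotations

import tempfile
from pathlib import Path

HOOKS = ("pre-commit", "pre-push", "post-checkout")


def missing_hooks(repo_root: str, hooks: tuple[str, ...] = HOOKS) -> list[str]:
    """Return the hook names that have no script in <repo>/.git/hooks."""
    hook_dir = Path(repo_root) / ".git" / "hooks"
    return [h for h in hooks if not (hook_dir / h).is_file()]


if __name__ == "__main__":
    # Demo on a throwaway directory that only has a pre-commit hook,
    # i.e. roughly the state after a plain `pre-commit install`.
    with tempfile.TemporaryDirectory() as tmp:
        hooks = Path(tmp) / ".git" / "hooks"
        hooks.mkdir(parents=True)
        (hooks / "pre-commit").write_text("#!/bin/sh\n")
        print(missing_hooks(tmp))  # ['pre-push', 'post-checkout']
```

Running `missing_hooks(".")` from the repository root should return an empty list once all three hooks are installed.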

username_1: This is something that we can note in the DVC docs though, so I'll transfer this issue to the dvc.org docs repo |

facebook/buck | 517501349 | Title: Kotlin internal modifier wired incorrectly

Question:

username_0: The `-module-name` argument, which determines the name mangling used for Kotlin bytecode generation, is set incorrectly. It currently uses the `invokingRule` short name, which usually results in methods like

`myMethod$src_release()`

This will frequently conflict and allow expanded access from Java.

The documentation states "a Gradle source set (with the exception that the test source set can access the internal declarations of main);"

Gradle uses the fully qualified module. We should probably use the full `invokingRule` without the part after the ":", so that tests and all other targets within one BUCK file have access.

[KotlincToJarStepFactory.java](https://github.com/facebook/buck/blob/master/src/com/facebook/buck/jvm/kotlin/KotlincToJarStepFactory.java)

Answers:

username_0: @IanChilds @kageiit @thalescm @kurtisnelson @leland-takamine

username_1: I believe this issue was fixed by https://github.com/facebook/buck/commit/7ddc43c4ab5883d17208e930207028144ec5b856#diff-311c95e6c277e00e1457a5ba290e0739

```java
private static String getModuleName(BuildTarget invokingRule) {
  return new StringBuilder()
      .append(invokingRule.getBasePath().toString().replace(File.separatorChar, '.'))
      .append(".")
      .append(invokingRule.getShortName())
      .toString();
}
```
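A rough Python rendering of the `getModuleName` logic above makes the difference from the old short-name-only behavior visible. It assumes targets are written as `//base/path:short_name` without a cell prefix and uses `/` as the separator (the Java code uses the platform's `File.separatorChar`); `module_name` is a hypothetical helper, not part of Buck.

```python
def module_name(target: str) -> str:
    """Base path with '/' replaced by '.', then '.', then the short name."""
    if not target.startswith("//") or ":" not in target:
        raise ValueError(f"not a fully qualified target: {target!r}")
    base_path, short_name = target[2:].split(":", 1)
    return f"{base_path.replace('/', '.')}.{short_name}"


if __name__ == "__main__":
    targets = ["//feature/login:src", "//feature/search:src"]
    # Short name only (old behavior): both targets share one module name.
    print({t.split(":", 1)[1] for t in targets})  # {'src'}
    # Base path + short name: the names stay distinct.
    print([module_name(t) for t in targets])  # ['feature.login.src', 'feature.search.src']
```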

Status: Issue closed

|

theloopkr/loopchain | 293179681 | Title: Radiostation RESTful API documentation spec error

Question:

username_0: Hello. I am testing loopchain, and some parts differ from the spec stated in the documentation.

[GET /api/v1/peer/status?peer_id={Peer ID}&group_id={Peer group ID}&channel={channel_name}](https://github.com/theloopkr/loopchain/blob/master/radiostation_proxy_restful_api.md#get-apiv1peerstatuspeer_idpeer%EC%9D%98-idgroup_idpeer%EC%9D%98-group-idchannelchannel_name)

documents the Response Body spec as follows:

```json
{
  "response_code": "int : RES_CODE",
  "data": {
    "made_block_count": "number of blocks created by this peer",
    "status": "connection status of the peer",
    "audience_count": "number of peers subscribed to this peer",
    "consensus": "consensus method (none:0, default:1, siever:2)",
    "peer_id": "peer ID",
    "peer_type": "whether the peer is the leader peer",
    "block_height": "number of blocks created in the blockchain",
    "total_tx": "int: number of transactions created in the blockchain",
    "peer_target": "gRPC target information of the peer"
  }
}
```

However, when I start a test server and send a request to the API, it returns the following:

```json
{
  "made_block_count": 0,
  "status": "Service is online: 0",
  "peer_type": "0",
  "audience_count": "0",
  "consensus": "siever",
  "peer_id": "8ab941<PASSWORD>",
  "block_height": 1,
  "total_tx": 1,
  "peer_target": "192.168.1.80:7103",
  "leader_complaint": 1
}
``` |

dotnet/maui | 626950938 | Title: Is MVU really necessary?

Question:

username_0: I think MAUI should stick with only one way of designing UI, that's: **XAML**

**Blazor syntax** is okay, but MVU seems like a totally unnecessary mess to me. If it is meant to attract Flutter devs, please let them stay with Flutter; DO NOT destroy the beauty of XAML.

Answers:

username_1: Flutter has an entire [page](https://flutter.dev/docs/get-started/flutter-for/xamarin-forms-devs) dedicated to attracting people from Xamarin.Forms. You are saying we should ignore competition. Really?

username_2: Blazor bindings are beautiful! I'm just starting out with them and they offer the simplicity that Flutter does.

username_3: @davidortinau, as I said in the other thread, the MAUI blog post created massive confusion. People now seem to think MVU = view as code/DSL.

But this is completely independent from what MVU is. MVU is perfectly possible with XAML. It has nothing to do with how you write the view.

It's only about creating an immutable model, an update function that takes a model and a message (msg) and builds a new model, and a view function that does not mutate the model directly but sends new commands (messages) into the update loop.
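The loop described above can be made concrete with a toy counter. This is a language-neutral sketch written in Python, not the MAUI or Fabulous API; `Model`, `update`, `view` and `Program` are hypothetical names, and `view` returns a plain dictionary as a stand-in for whatever UI description (code or markup) a framework would use.

```python
from __future__ import annotations

from dataclasses import dataclass, replace
from typing import Callable, Union


@dataclass(frozen=True)
class Model:
    count: int = 0  # frozen: the model is immutable


@dataclass(frozen=True)
class Increment:
    pass


@dataclass(frozen=True)
class Reset:
    pass


Msg = Union[Increment, Reset]


def update(model: Model, msg: Msg) -> Model:
    """Pure function: returns a new Model and never mutates the old one."""
    if isinstance(msg, Increment):
        return replace(model, count=model.count + 1)
    if isinstance(msg, Reset):
        return Model()
    return model


def view(model: Model, dispatch: Callable[[Msg], None]) -> dict:
    """Describe the UI; user actions become messages sent via dispatch."""
    return {
        "label": f"Clicked {model.count} times",
        "on_click": lambda: dispatch(Increment()),
    }


class Program:
    """Minimal loop: dispatch -> update -> new model -> view."""

    def __init__(self, model: Model) -> None:
        self.model = model
        self.ui = view(self.model, self.dispatch)

    def dispatch(self, msg: Msg) -> None:
        self.model = update(self.model, msg)
        self.ui = view(self.model, self.dispatch)


if __name__ == "__main__":
    app = Program(Model())
    app.ui["on_click"]()
    app.ui["on_click"]()
    print(app.ui["label"])  # Clicked 2 times
```

Nothing in this loop depends on how the view is written, which is the point being made: the same `update` function could sit behind a view produced from markup.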

username_4: It is meant for C# and .NET devs.

username_5: It has never been one way only. Code-based UIs have been supported through Xamarin.Forms from the beginning. Making that more approachable makes sense. And by the way: MVU can be used [easily with XAML](https://fsprojects.github.io/Fabulous/Fabulous.StaticView/).

username_0: I know. Sometimes we do write `new Button() { .... }`, but this [post](https://devblogs.microsoft.com/dotnet/introducing-net-multi-platform-app-ui/) (**Image 1**) confused me, and many others, I believe.

username_1: LOL. Imagine a page dedicated to "Windows Forms for WPF devs".

username_6: XAML is just a "tool" on top of the object model... You can use XAML or C#. You can architect your app using MVVM (with or without XAML) or with MVU (to be fair, the examples provided were not "real" MVU, but that is another topic).

If you don't like coded UI or the MVU approach, just ignore it :) There is no need to push back against it.

I don't think this is just to attract Flutter developers. The MVU pattern is on the rise and is very well suited to mobile development.

username_6: Also, coded UI is on the rise... React, Flutter, SwiftUI, etc. are gaining a LOT of popularity.

username_0: # What I wanted to say:

Which will we choose: the **Flutter/Swift/Coded-UI** approach, or **WPF/XAML** with a **GUI Editor** like **Blend for Visual Studio**?

username_5: Sometimes people write entire XF apps without touching XAML – and they are happy about it ;-).

username_0: @username_5

I'm surprised..!! 😢

However, setting them aside, people like me who want **Blend** for Xamarin/MAUI are unhappy:

Android Studio **Motion Editor**

https://developer.android.com/studio/write/motion-editor

https://developer.android.com/studio/images/write/motion_animation_preview.gif

Status: Issue closed

username_3: @username_0 I wonder if you would still want Blend support once you have worked in a system with properly working hot reload. Usually people prefer that a lot.

username_7: Coming from a XAML / Blend background, my initial reaction to UI in code was to recoil, but once I tried it, I saw many benefits that I had simply not considered. Removing the need for features such as converters, resources and similar (which now seem massively overcomplicated, but at the time felt totally reasonable) has made me a real believer in code-first UIs.

username_8: @username_0 - while a capable designer sounds like a great productivity tool, if you've been around for a while, you might have worked on "legacy" codebases where the designer broke and stopped working a few Visual Studio versions back, and you had to understand and edit thousands of lines in .designer.cs by hand. When even the tiniest change (like aligning a button) in such codebases can take a day or two, all those productivity benefits get reconsidered. (I have had those experiences with both WinForms and WebForms.)

@dsyme talks about reliance on heavy tooling in [this talk about Fabulous](https://youtu.be/ZCRYBivH9BM?t=922) with a section dedicated to "The Problem with XAML". Even though Fabulous has lots of problems, it's hard to disagree with many of the points being raised.

username_9: Blazor bindings have multiple quirks and issues:

1- Different syntax for one-way and two-way binding (`Value = @Value` for one-way and `Value-Changed = @Value` for two-way).

2- Binding does not support IValueConverter, so we must convert values inline, which is bad for code reuse.

3- With a viewmodel or model, we can control UI updates through INotifyPropertyChanged, but in Blazor this is done automatically, which is a performance issue.