repo_name | issue_id | text |

|---|---|---|

fede1024/rust-rdkafka | 233936978 | Title: Using messages beyond lifetime of consumer hangs thread

Question:

username_0: If you try to collect messages and use them after dropping the consumer the thread hangs indefinitely.

This test (in `produce_consume_base_test.rs`) reproduces the issue:

```rust

// All produced messages should be consumed.
#[test]
fn test_produce_consume_messages_beyond_consumer_lifetime() {
    let _r = env_logger::init();

    let topic_name = rand_test_topic();
    let _message_map = produce_messages(&topic_name, 100, &value_fn, &key_fn, None, None);

    let mut messages = Vec::new();

    // Drop consumer before checking vector contents
    {
        let mut consumer = create_stream_consumer(&rand_test_group(), None);
        consumer.subscribe(&vec![topic_name.as_str()]).unwrap();

        let _consumer_future = consumer.start()
            .take(100)
            .for_each(|message| {
                match message {
                    Ok(m) => messages.push(m),
                    Err(e) => panic!("Error receiving message: {:?}", e)
                };
                Ok(())
            })
            .wait();
    }

    assert_eq!(100, messages.len());
}

```

Answers:

username_1: Thanks for reporting the issue! This is probably because [the correct termination sequence](https://github.com/edenhill/librdkafka/wiki/Proper-termination-sequence) for librdkafka requires the messages to be destroyed before the consumer or the client is destroyed. This ordering is not currently enforced in rust-rdkafka.

The right approach here is probably to have the lifetime of messages bound to the one of the consumer. I hope it won't make the API much more complicated.

username_1: Each [Message](https://username_1.github.io/rust-rdkafka/rdkafka/message/struct.Message.html) now holds a (phantom) reference to the consumer that created it. Your example won't compile anymore.

Status: Issue closed

|

Azure/autorest | 178407206 | Title: [C#] Generated ServiceClient does not set HttpClient.Timeout nor allow it to be set

Question:

username_0: Pre-AutoRest Azure SDKs (generated using Hyak) set `HttpClient.Timeout` to 300 seconds in the generated `ServiceClient`-derived class constructors. In AutoRest-generated code it is left unset, resulting in the lower default of 100 seconds. This is causing some customers to experience premature timeouts on very long-running operations that would have otherwise succeeded. (One might argue that such operations should be modeled as LROs, but I'm talking about data plane operations here where the vast majority of requests are completed in less than a second.)

Generated ServiceClient-derived classes should restore the old default timeout of 300 seconds and expose a property to allow for changing the timeout.

Status: Issue closed

Answers:

username_1: Adding to the vNext design (should get this along with the stuff storage is doing) |

mlp6/ADPL | 156085205 | Title: gasOn Reporting Oddly

Question:

username_0: Looks like getting the gasOn boolean using the ondemand infrastructure gives us an odd number. In the response below, it's returning `988209275` from ADPLKenyaNorth0437. From the lab electron it was returning `true` as expected. Might this have to do with firmware changes? 0.5.0 on the lab electron and 0.4.8 on the KenyaNorth. Always thought a bool was just the same as an int (all one byte storage in memory set to either a 1 or 0, but perhaps with this firmware that is not the case). Note that this number can't be stored in a byte of storage.

Will investigate. Thoughts @username_1 ?

```

{
  "cmd": "VarReturn",
  "name": "gasOn",
  "result": 988209275,
  "coreInfo": {
    "last_app": "",
    "last_heard": "2016-05-21T05:23:27.979Z",
    "connected": true,
    "last_handshake_at": "2016-05-20T21:44:09.224Z",
    "deviceID": "1b0048000c51343334363138",
    "product_id": 10
  }
}
```
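One way a value like this could arise, sketched in Python. This assumes (nothing in this thread confirms it) that the variable occupies a single byte on the device but is read back as a 32-bit integer, so the extra three bytes come from whatever sits next to it in memory. The neighbouring byte values and the helper `read_as_int32` are made up for illustration.

```python
import struct


def read_as_int32(buffer: bytes, offset: int = 0) -> int:
    """Interpret four bytes starting at `offset` as a little-endian int32."""
    return struct.unpack_from("<i", buffer, offset)[0]


# One byte holding the boolean, followed by three unrelated neighbouring bytes
# (hypothetical values, chosen only for this sketch).
gas_on = True
neighbours = bytes([0xE0, 0xE6, 0x3A])
memory = bytes([gas_on]) + neighbours

as_bool = bool(memory[0])       # reading only the first byte gives the flag
as_int = read_as_int32(memory)  # reading four bytes mixes in the neighbours

print(as_bool, as_int)
```

Reading the same location with the wrong width yields a large number that fits in four bytes but not in one, which would be consistent with the remark above that the value can't be stored in a byte.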

Answers:

username_1: I was able to update the system firmware to 0.5.0 OTA for ADPLKenyaCentral9822. ADPLKenyaNorth0437 timed out during the same operations, so that is still running 0.4.8.

username_1: Just got ``gasOn`` from ADPLKenyaCentral9822 and received a ``true``.

Status: Issue closed

username_1: I think this has also been fixed, so going to close. |

NJIT-CODEPATH-SP-2021-PomodoroFriends/PomodoroFriends | 849814663 | Title: Pomodoro Timer Persistence

Question:

username_0: * User logs in to access previously defined Pomodoro Timers

Must decide how to store timers and manage storage on local android device.

Sqlite?

Must implement custom datatype and fields similar to one defined in schema.

Such datatype must be ready to convert to upload into server side via API.

Status: Issue closed

Answers:

username_1: IMPLEMENTED TIMER SAVING TO PARSE

https://github.com/NJIT-CODEPATH-SP-2021-PomodoroFriends/PomodoroFriends/pull/12 |

crate/crate | 968836763 | Title: Support setting a different endpoint for COPY TO / FROM for DNS-style S3 bucket addresses

Question:

username_0: **Use case**:

I want to be able to export data from CrateDB using `COPY TO / FROM` with (AWS) S3-compatible technologies like Minio, so that I don't rely on AWS for my storage setup.

**Feature description**:

With AWS SDK the endpoint defaults to `<bucket>.s3.amazonaws.com`. In order to use my Minio S3 bucket, I am able to enter my own endpoint in the URI for COPY FROM / TO commands. The ability to use AWS S3 is not affected.

---

Origin: https://community.crate.io/t/export-table-using-copy-to-command-to-alternate-s3-endpoint/246/4

S3 Client Helper: https://github.com/crate/crate/blob/b8f5bfc1ae1d34b0426f2a01217fde663505225b/server/src/main/java/io/crate/external/S3ClientHelper.java

Answers:

username_1: Would be nice to rely on S3-compatible storage. Google Cloud Platform storage has S3 compatibility, and Digital Ocean, Linode, and Vultr also offer S3-compatible object storage.

As-is, I am using s3fs as a bandaid with `file://`.

username_1: Looks like changes would be needed somewhere in these files.

https://github.com/crate/crate/blob/df77bd0147b960da9e905351dc6a8c833c5ac26b/server/src/main/java/io/crate/execution/engine/export/OutputS3.java#L91

https://github.com/crate/crate/blob/df77bd0147b960da9e905351dc6a8c833c5ac26b/server/src/main/java/io/crate/external/S3ClientHelper.java#L88-L118

username_2: Somewhat related to this - which can be done either before or as a follow-up: We should move the COPY/FROM/TO S3 related logic outside of `server` into a dedicated module.

We already have [FileInputFactory](https://github.com/crate/crate/blob/master/server/src/main/java/io/crate/execution/engine/collect/files/FileInputFactory.java) and [FileInput](https://github.com/crate/crate/blob/master/server/src/main/java/io/crate/execution/engine/collect/files/FileInput.java)

Currently these are loaded via the [FileCollectModule](https://github.com/crate/crate/blob/master/server/src/main/java/io/crate/execution/engine/collect/files/FileCollectModule.java), but we could turn this into a `CopyPlugin` or so which uses a pull pattern.

username_0: @username_2 do you think it might be possible to reuse the files shares from the snapshot repositories?

username_2: It would be feasible to factor out some common base between the two, but not sure if we'd gain enough from that to warrant putting in the effort.

username_3: In addition to the above, there are also other S3-compatible storage systems such as [Nutanix Object Storage](https://www.nutanix.com/products/objects) which I'm using in one of my clients (so far it works as expected with utilities such as [s5cmd](https://github.com/peak/s5cmd) and [Cyberduck](https://cyberduck.io/).)

Therefore it'll be very good if CrateDB provides enough flexibility with different S3-compatible object storage systems.

Status: Issue closed

username_4: Closing this issue, all necessary changes are now merged.

The scope of this issue was to delegate the provided S3 endpoints to the AWS SDK. There could still be problems between the SDK and the S3-compatible storage, e.g. #12094. So, please try this feature with the latest nightly build.

The latest nightly build can be found at: https://cdn.crate.io/downloads/releases/nightly/

The steps to install and run: https://crate.io/docs/crate/tutorials/en/latest/install.html#ad-hoc-unix-macos-windows

There are also a few sample COPY FROM/TO statements [here](https://github.com/crate/crate/pull/12052#issue-826108977) and [here](https://github.com/crate/crate/pull/12052#issuecomment-1027158755).

Thank you! |

stevenlovegrove/Pangolin | 452795650 | Title: Can Pangolin be built on QNX?

Question:

username_0: I want to compile Pangolin on QNX. Can Pangolin be built on QNX?

Thanks a lot!

Answers:

username_1: I'm not familiar with QNX - I expect you would at a minimum require some CMake changes, but if it is a Unix flavor and has OpenGL, then you could probably get it to work. If it uses Wayland or X11, then it should be quite straight-forward.

Status: Issue closed

username_2: @username_1 hi. On my QNX system, opengles_v2 and openesl are available. By the way, the graphics framework on QNX is called "screen". Should I implement the display device interface with screen in order to use Pangolin's GUI? |

flauted/TF-DNC | 437298805 | Title: Softmax allocation weighting --> all weightings are constants

Question:

username_0: My own implementation of the DNC with the adjustment invented by <NAME> & <NAME> (at https://ttic.uchicago.edu/~klivescu/MLSLP2017/MLSLP2017_ben-ari.pdf), namely the change of **allocation weighting** to a pure **softmax of non-usage**, has resulted in the **memory matrix,** at every timestep, being filled by a **single word copied over the entire address space**. This happens because the content similarity has the same results for the whole initial memory matrix (zeroes) and for any arbitrary key (with or without masking) and the allocation weighting also starts off by being made of a single constant (initial usage is zeroes).

Interpolating between these gives us the **write weighting**, which is therefore **constant** and the memory is updated using it, resulting in, again, a matrix of a word copied across the entire matrix.

Using tf.Print on the memory matrix with your implementation of the softmax allocation yields the same results. Have you been experimenting with this yourself? If you did, please share any insights. I like the idea of softmax allocation, but afaik, it is not working. (At least not with initial memory of constants and initial usage of constants, which are zeroes)

I've included the tf.Print output in a text file. It contains the state of the memory for the first batch member, where memory length (number of words) = 20 and bit length (word size) = 8, for 19 consecutive timesteps from somewhere in the middle of the forward pass.

[memory_state.txt](https://github.com/username_1/TF-DNC/files/3117970/memory_state.txt)
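The effect described above can be reproduced with a small pure-Python sketch. It uses the standard DNC write equations (write weighting as a gated interpolation of allocation and content weighting, followed by an erase-and-add memory update) with the allocation weighting replaced by a softmax of non-usage. The gate values, key, and word are arbitrary assumptions for illustration, and this is not the code from this repository.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cosine_similarity(a, b, eps=1e-6):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b + eps)


def write_weighting(memory, usage, key, strength, alloc_gate, write_gate):
    # Content weighting: softmax over key/row similarity.
    content = softmax([strength * cosine_similarity(row, key) for row in memory])
    # Allocation weighting: plain softmax of non-usage (the adjustment in question).
    allocation = softmax([1.0 - u for u in usage])
    return [write_gate * (alloc_gate * a + (1.0 - alloc_gate) * c)
            for a, c in zip(allocation, content)]


def write(memory, weights, erase, word):
    # Erase-and-add update applied to every row with its own write weight.
    return [[m * (1.0 - w * e) + w * v for m, e, v in zip(row, erase, word)]
            for row, w in zip(memory, weights)]


N, W = 20, 8                       # memory length and word size, as in the report
memory = [[0.0] * W for _ in range(N)]
usage = [0.0] * N
key = [0.3, -0.1, 0.8, 0.2, -0.5, 0.4, 0.1, -0.7]   # arbitrary
word = [1.0, 0.0, 1.0, 1.0, 0.0, 0.0, 1.0, 0.0]     # arbitrary
erase = [1.0] * W

for _ in range(3):
    weights = write_weighting(memory, usage, key,
                              strength=1.0, alloc_gate=0.5, write_gate=1.0)
    memory = write(memory, weights, erase, word)
    # Usage update without free gates: u <- u + w - u * w
    usage = [u + w - u * w for u, w in zip(usage, weights)]

print(len(set(weights)), all(row == memory[0] for row in memory))
```

Because every row starts identical and usage starts constant, both softmaxes are uniform, the write weighting is constant, and every row receives the same update, so the symmetry is never broken in later steps either.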

Answers:

username_1: That's really interesting. No, I hadn't noticed that and haven't really given it any thought. Your explanation makes a lot of sense. I haven't looked at the DNC in a while so I really can't say if it's due to what you're describing or it's an implementation detail. But disclaimer, I can't guarantee this implementation is correct. Once it converged, I was satisfied. I didn't benchmark the scores. Hope this helps! |

Codeception/Codeception | 169556269 | Title: c3 is copied to project root when codeception/c3 is removed from composer

Question:

username_0: #### What are you trying to achieve?

Remove codeception/c3 from my project by doing `composer remove codeception/c3`

#### What do you get instead?

c3.php is copied to my project root

### Details

* Codeception version: 2.2.3

* PHP Version: 7.0.4

* Operating System: OSX

* Installation type: Composer

* List of installed packages: Sorry, no can do.

* Suite configuration: not applicable

<img width="482" alt="screen shot 2016-08-05 at 10 28 38 am" src="https://cloud.githubusercontent.com/assets/318089/17431051/283e12c4-5af8-11e6-9c81-813f2b894550.png">

Status: Issue closed |

spacetelescope/calcos | 350512610 | Title: Retire Python 2

Question:

username_0: Python 2 will not be maintained past Jan 1, 2020 (see https://pythonclock.org/). Please remove all Python 2 compatibility and move this package to Python 3 only.

For conda recipe (including `astroconda-contrib`), please include the following to prevent packaging it for Python 2 (https://conda.io/docs/user-guide/tasks/build-packages/define-metadata.html?preprocessing-selectors#skipping-builds):

```

build:
  skip: true  # [py2k]

```

Please close this issue if it is irrelevant to your repository. This is an automated issue. *If this is opened in error, please let username_0 know!*

Answers:

username_1: @stscirij this issue can be closed, correct? I believe we moved everything to Python 3 last year.

username_0: To be clear, retiring also means you remove the `six` and compat stuff and also disallow installation of this in Python 2. I don't think that part is in yet. |

JelteF/PyLaTeX | 25714092 | Title: NumPy matrix/array conversion

Question:

username_0: A simple way to convert NumPy matrices and arrays to LaTeX formatted matrices or vectors.

Answers:

username_1: Is this already done? I see the Matrix and VectorName classes in numpy.py.

username_0: Yes, the matrix and vector name classes work. I have kept the issue open because the code above hasn't been included in the project yet.

You could check the example directory for the numpy example if you want to use it yourself. |

phantomas1234/GurobiML | 20673520 | Title: logging to /tmp/GurobiML.log ...

Question:

username_0: ... seems to be the problem with running computations in parallel.

https://github.com/username_0/GurobiML/blob/master/gurobi_mathlink.c#L143

Status: Issue closed

Answers:

username_0: ... seems to be the problem with running computations in parallel.

https://github.com/username_0/GurobiML/blob/master/gurobi_mathlink.c#L143 |

stuartlangridge/ColourPicker | 205872815 | Title: Blurry zoom in the preview

Question:

username_0: The line [\_\_main__.py#L1014](https://github.com/stuartlangridge/ColourPicker/blob/app/pick/__main__.py#L1014) causes everything to be blurry, even though the preview was upscaled before with `GdkPixbuf.InterpType.NEAREST`.

Fun fact: this is getting even worse on HiDPI screens, as the cursor is then scaled up again. |

jhipster/jhipster-kotlin | 444118466 | Title: Use the JHipster release instead of master in CI

Question:

username_0: Currently, we are using JHipster's master branch as the base when testing in the CI.

Instead use JHipster release version for testing the CI. This will make the build more stable.

cc: @username_1 this might be something that interests you ❤️

Answers:

username_1: Yes, switching this value to `release` should normally work (I hope !!):

https://github.com/jhipster/jhipster-kotlin/blob/master/.travis.yml#L46-L48

username_2: It seems that we cannot use release without also altering the copying part, as we re-use the test scripts and examples (more specifically, `$JHI_HOME` should be set appropriately). But I think it is better to build against the specific version we support, as future releases of JHipster could also potentially break the build. @username_0 + @username_1 What do you think?

Status: Issue closed

|

alibaba-fusion/next | 690748842 | Title: [Pagination] Pagination size selector does not automatically adjust its popup position based on where it sits on the page

Question:

username_0: ### Component

Pagination

### Steps to reproduce

The Select example works correctly, but the behavior breaks inside Pagination.

<!-- generated by alibaba-fusion-issue-helper. DO NOT REMOVE -->

<!-- component: Pagination --> |

sul-dlss/system-package-tracker | 153124985 | Title: Standardize more security vulnerability information

Question:

username_0: Now that we have several examples of different data sources, go through the existing mappings and database fields and see if there's more information we could fill in, or fields we could standardize on. Examples: CVEs, standard vulnerability ratings.

Status: Issue closed |

revel/cmd | 656326960 | Title: Mode flag failing

Question:

username_0: When mode flag is specified the app path is incorrect

Answers:

username_0: This isn't a mode flag issue; it is a run issue that depends on `watch=true` vs `watch=false`. When `true`, the application starts the harness using the `start` function (https://github.com/revel/cmd/blob/master/revel/run.go#L140); when `false`, the run command is called directly, which does not properly initiate the run command.

username_0: Fixed in commit https://github.com/revel/cmd/pull/188/commits/ebc9c73ba0099fdbf18ed9223088eedcc81b7f23

```

./revel run revel-test-app prod

Revel executing: run a Revel application

Parsing packages, (may require download if not cached)... Completed

Revel engine is listening on.. localhost:9000

```

Status: Issue closed

|

aaronnech/CheckYourBias | 139188672 | Title: Cannot read property 'category' of undefined on "Your Candidates" page

Question:

username_0: @ravnon and @SonjaKhan were complaining about this issue for a while now, but I was only able to reproduce the bug once. After I deleted all of my rated issues in the database, the bug went away and I was not able to further reproduce it.

As you can see, this message will pop up every time the user tries to access Your Candidates, or tries to change the category on that same page.

HOWEVER... I then examined the rated issues for the users in the database. I found out that those users suffering from this bug had issues that were no longer present in the database. My conjecture based on the seen evidence is that having rated non-existent issues will trigger this error every time. This *can* be dangerous, especially in production if we have to delete (approved) issues from the database for some reason. However, if we maintain the invariant that an issue can be created but never deleted, this, in theory, should not be a problem. Furthermore, new users to the system as of the final release should not experience this problem, so long as we do not delete issues.

It is possible to fix this by tweaking the backend code, but at this stage of development I think it will be easier to clear everybody's rated issues, and avoid deleting any issues unless absolutely necessary. The backend team (@rhwilk @huynick @toddis ) are certainly welcome to try and reproduce this bug (reopen this issue if you choose to do so), but I will say it is not necessary for the final release.

Status: Issue closed

Answers:

username_0: Deleted everybody's rated issues and skipped issues. No user should experience this problem again (unless we delete issues of course). |

RPi-Distro/repo | 352230186 | Title: linux-perf for the 4.14 kernel

Question:

username_0: Can this be added to the stretch release please.

Answers:

username_1: Is this something that needs to be rebuilt for every minor version of the kernel or will a single package work for all 4.14 kernels?

username_0: Sorry - I am not really sure. I was wanting to use perf to track down packet loss and was pointed in the general direction of perf. I haven't hacked on Unix kernel code since about 1977.

However, current versions of perf are distributed as /usr/bin/perf_4.9 - and the actual perf command is a shell script which checks machine version against available binaries. So my guess is that a version called perf_4.14 would do the trick.
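The version check described above can be sketched roughly as follows. This is an illustration in Python rather than the actual wrapper script; the function name `perf_binary_for` and the sample kernel release strings are assumptions.

```python
import re


def perf_binary_for(kernel_release, available):
    """Return the perf_<major>.<minor> name matching a kernel release, or None."""
    match = re.match(r"(\d+)\.(\d+)", kernel_release)
    if match is None:
        return None
    candidate = "perf_{}.{}".format(match.group(1), match.group(2))
    return candidate if candidate in available else None


# Only a 4.9 binary is installed, as in the situation described above.
available = {"perf_4.9"}

on_4_9 = perf_binary_for("4.9.80-v7+", available)     # hypothetical release string
on_4_14 = perf_binary_for("4.14.50-v7+", available)   # hypothetical release string

print(on_4_9, on_4_14)
```

Under this scheme a 4.14 kernel finds no matching binary until a `perf_4.14` is shipped alongside the existing one.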

username_1: Alright, I'll add it to the list, but it's fairly low priority.

username_0: Thanks.

username_2: @username_1 , is there a way for someone to contribute a patch to update the build version for linux-perf? Thanks.

username_1: In debian, linux-perf comes from the linux source package. We don't use that yet, so there's not much we can do for now. It has been on my todo list forever, but something more important always comes along.

If somebody wants to help, I think the debian kernel package needs to be updated to generate each of the pi kernels without unnecessary debian patches to minimise the delta from the kernels distributed by rpi-update, a common package for the overlays and so on and the legacy raspberrypi-kernel package should pull in all the new kernels. It would also need to handle installs to /boot by copying the files from another location in postinst and make sure all the initramfs stuff is handled properly. |

frappe/frappe_docker | 284365371 | Title: Multiple issue with rendering, updating and so on

Question:

username_0: Hi,

I deployed docker image and all of them are running as it should:

```

dockerhost:~/gitrepos/frappe_docker# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

a381e3123a51 frappedocker_frappe "/bin/bash" 20 hours ago Up 10 minutes 0.0.0.0:6787->6787/tcp, 0.0.0.0:8000->8000/tcp, 0.0.0.0:9000->9000/tcp frappe

81b27de35ff4 redis:alpine "docker-entrypoint..." 20 hours ago Up 10 minutes 6379/tcp redis-cache

836037a84ce1 redis:alpine "docker-entrypoint..." 20 hours ago Up 10 minutes 6379/tcp redis-queue

ec758d0d4631 redis:alpine "docker-entrypoint..." 20 hours ago Up 10 minutes 6379/tcp redis-socketio

d97f12fff7ef mariadb "docker-entrypoint..." 20 hours ago Up 10 minutes 0.0.0.0:3307->3306/tcp mariadb

```

# Issue no. 1:

trying to access to external_ip:8000 gives a blank page.

# Issue no. 2:

Trying to update the bench gave the following error:

```

dockerhost:~/gitrepos/frappe_docker# ./dbench -c update

This update will remove Celery config and prepare the bench to use Python RQ.

And it will overwrite Procfile and supervisor.conf.

If you don't know what this means, type Y ;)

Do you want to continue? [y/N]: y

This update will replace ERPNext's Redis configuration files to fix a major security issue.

If you don't know what this means, type Y ;)

Do you want to continue? [y/N]: y

/bin/sh: 1: redis-server: not found

Traceback (most recent call last):

File "/usr/local/bin/bench", line 11, in <module>

load_entry_point('bench', 'console_scripts', 'bench')()

File "/home/frappe/bench-repo/bench/cli.py", line 40, in cli

bench_command()

File "/usr/local/lib/python2.7/dist-packages/click/core.py", line 722, in __call__

return self.main(*args, **kwargs)

File "/usr/local/lib/python2.7/dist-packages/click/core.py", line 697, in main

rv = self.invoke(ctx)

File "/usr/local/lib/python2.7/dist-packages/click/core.py", line 1066, in invoke

return _process_result(sub_ctx.command.invoke(sub_ctx))

File "/usr/local/lib/python2.7/dist-packages/click/core.py", line 895, in invoke

return ctx.invoke(self.callback, **ctx.params)

File "/usr/local/lib/python2.7/dist-packages/click/core.py", line 535, in invoke

return callback(*args, **kwargs)

File "/home/frappe/bench-repo/bench/commands/update.py", line 31, in update

patches.run(bench_path='.')

File "/home/frappe/bench-repo/bench/patches/__init__.py", line 21, in run

result = execute(bench_path)

File "/home/frappe/bench-repo/bench/patches/v3/redis_bind_ip.py", line 10, in execute

generate_config(bench_path)

File "/home/frappe/bench-repo/bench/config/redis.py", line 39, in generate_config

"redis_version": get_redis_version(),

File "/home/frappe/bench-repo/bench/config/redis.py", line 59, in get_redis_version

version_string = subprocess.check_output('redis-server --version', shell=True).decode().strip()

File "/usr/lib/python2.7/subprocess.py", line 574, in check_output

raise CalledProcessError(retcode, cmd, output=output)

subprocess.CalledProcessError: Command 'redis-server --version' returned non-zero exit status 127

```

Answers:

username_1: Issue # 3 reported & not resolved 10 months ago https://discuss.erpnext.com/t/problem-with-bench-update-redis-server-not-found/19793/6

sudo denies permission to update itself!?

Preparing to unpack .../sudo_1.8.16-0ubuntu1.5_amd64.deb ...

Unpacking sudo (1.8.16-0ubuntu1.5) ...

dpkg: error processing archive /var/cache/apt/archives/sudo_1.8.16-0ubuntu1.5_amd64.deb (--unpack):

error setting permissions of './usr/bin/sudo': Permission denied

dpkg-deb: error: subprocess paste was killed by signal (Broken pipe)

username_2: Please close as this is no longer relevant.

Status: Issue closed

|

msys2/MINGW-packages | 463273268 | Title: Request: add lzfse support

Question:

username_0: https://github.com/lzfse/lzfse

Answers:

username_1: Well, you can create the package yourself and open a pull request for it, or wait until someone else wants to do it.

username_2: Should be simple enough to do, I've tested it and it does compile, just a matter of making the package.

username_3: mark this, I may contribute |

mattvonrocketstein/smash | 50607415 | Title: load bash functions from arbitrary source file

Question:

username_0: currently the only way to load bash functions is to have them declared somewhere in the normal bash bootstrap (bashrc, profile, whatever). it might be a good idea to allow loading from arbitrary files

Status: Issue closed |

GoogleChrome/lighthouse | 196410051 | Title: Running the extension inside an incognito window does not open generated report

Question:

username_0: When running Lighthouse on a website in an incognito window, the extension opens the generated report in a new tab with a URL such as `blob:chrome-extension://<EXTENSION-ID>/<REPORT-ID>`. However, incognito disallows accessing this output and displays an HTTP ERROR 404 page. Copying the URL into a normal Chrome window, by contrast, does show the correct report.

I run Lighthouse inside an incognito window because I have other extensions running that I do not want to interfere with my performance tests.

Answers:

username_1: Another reason to possibly switch away from blob urls is that we never revoke them. From multiple runs of LH:

<img width="659" alt="screen shot 2016-12-19 at 8 49 24 am" src="https://cloud.githubusercontent.com/assets/238208/21321610/6554da70-c5ca-11e6-9485-6ea7c3757e22.png">

Perhaps we can put a close handler `onbeforeunload` that calls `URL.revokeObjectURL`. Although if you load that URL again, you'd expect it to still work.

username_0: @patrickhulce Your PR mentioned this issue and it has been merged. Does that mean this issue has been resolved and can be closed? Thanks for your work!

username_1: This one is still open. That PR is for https://github.com/GoogleChrome/lighthouse/issues/1173

username_2: Still reproducible on Chrome 58.0.3016.0 canary with Lighthouse extension 1.5.2. Enabling it in Incognito performs the whole run and then opens a second tab with a blob url that leads to a 404 error.

username_3: Bug still present in Lighthouse 2.2.1 @ Chrome 59, OSX

Status: Issue closed

|

azzlack/Microsoft.AspNet.WebApi.HelpPage.Ex | 113477393 | Title: Translate FluentValidation?

Question:

username_0: Finally found one that works perfectly. Thanks.

In case you are still following this: I saw you got DataAnnotation working everywhere. Is there a way to translate FluentValidation rules attached to a model into the documentation? |

go-gitea/gitea | 235064434 | Title: CodeMirror Keymaps

Question:

username_0: ## Description

**Feature Request**: Allow the user to choose a [keymap](https://github.com/codemirror/CodeMirror/tree/master/keymap) for the web editor, from a dropdown menu.

Answers:

username_0: Shush.

username_1: I think this issue has to be renamed as we no longer use CodeMirror but Monaco Editor

username_0: Then maybe deleted altogether, since the description also has a link to CodeMirror's keymap files.

I couldn't find keymap files for Monaco Editor so, this means that if they still have to be made from scratch by some generous soul, this issue is going nowhere fast.

Let me know if I'm wrong before I close it.

username_1: There are vim bindings: https://github.com/brijeshb42/monaco-vim

Status: Issue closed

|

cdterry87/Proma | 512290917 | Title: Fix issue with duplicate GET requests to pull file list after uploading a file.

Question:

username_0: **Explanation of issue:**

The event listener for 'dataRefresh' is registered in three different components: Project, Issue, and Client. If the event fires in any one of these components, it also triggers in the others if they have already been loaded.
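A minimal sketch of the behaviour, written in Python rather than the project's own front-end code. The `EventBus` class, the request paths, and the per-component event names are all hypothetical; scoping the event name per component is just one possible way to avoid the duplicates, not necessarily the fix used in this project.

```python
from collections import defaultdict


class EventBus:
    """Tiny publish/subscribe bus standing in for a shared front-end event bus."""

    def __init__(self):
        self._listeners = defaultdict(list)

    def on(self, event, handler):
        self._listeners[event].append(handler)

    def emit(self, event, payload=None):
        for handler in list(self._listeners[event]):
            handler(payload)


requests = []  # stands in for the GET requests that reload a file list


def make_component(name, bus, scoped=False):
    # With scoped=True each component listens on its own event name.
    event = "dataRefresh:" + name if scoped else "dataRefresh"
    bus.on(event, lambda _payload: requests.append("GET /" + name.lower() + "/files"))
    return event


# Shared event name: one upload in Project refreshes all three components.
shared = EventBus()
for name in ("Project", "Issue", "Client"):
    make_component(name, shared)
shared.emit("dataRefresh")
shared_count = len(requests)

# Scoped event names: only the component that uploaded the file refreshes.
requests.clear()
scoped = EventBus()
events = {name: make_component(name, scoped, scoped=True)
          for name in ("Project", "Issue", "Client")}
scoped.emit(events["Project"])
scoped_count = len(requests)

print(shared_count, scoped_count)
```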

Answers:

username_0: **Explanation of issue:**

The event listener for 'dataRefresh' is registered in three different components: Project, Issue, and Client. If the event fires in any one of these components, it also triggers in the others if they have already been loaded. |

NakedObjectsGroup/NakedObjectsFramework | 1176950185 | Title: Issue navigating to child property where the foreign key is not the primary key of the parent

Question:

username_0: If this is not the right forum, please let me know. Any insight

The message on the UI is

```

Object does not exist

Message: The requested object might have been deleted by you or another user. If not, please contact your system administrator.

```

And partial output from the server is:

```

System.ArgumentException: Entity type 'BestPracticeCriteriaScore' is defined with a single key property, but 3 values were passed to the 'Find' method.

at Microsoft.EntityFrameworkCore.Internal.EntityFinder`1.FindTracked(Object[] keyValues, IReadOnlyList`1& keyProperties)

at Microsoft.EntityFrameworkCore.Internal.EntityFinder`1.Find(Object[] keyValues)

at Microsoft.EntityFrameworkCore.Internal.EntityFinder`1.Microsoft.EntityFrameworkCore.Internal.IEntityFinder.Find(Object[] keyValues)

at Microsoft.EntityFrameworkCore.DbContext.Find(Type entityType, Object[] keyValues)

at NakedFramework.Persistor.EFCore.Component.EFCoreObjectStore.FindByKeys(Type type, Object[] keys)

at NakedFramework.Core.Component.ObjectPersistor.FindByKeys(Type type, Object[] keys)

at NakedFramework.Facade.Impl.Utility.EntityOidStrategy.GetDomainObject(String[] keys, Type type)

NakedFramework.Facade.Impl.Utility.EntityOidStrategy: 2022-03-22 09:22:06,745 [.NET ThreadPool Worker] WARN NakedFramework.Facade.Impl.Utility.EntityOidStrategy - Domain Object not found keys: 14 4 1 type: SolutionArchitecture.Model.BestPracticeCriteriaScore

NakedObjects.Rest.App.Demo.RestfulObjectsController: 2022-03-22 09:22:06,752 [.NET ThreadPool Worker] ERROR NakedObjects.Rest.App.Demo.RestfulObjectsController - Context: NakedObjects.Rest.App.Demo.RestfulObjectsController.GetObject (Template.Server) State SolutionArchitecture.Model.BestPracticeCriteriaScore;14--4--1;false;

NakedFramework.Facade.Error.ObjectResourceNotFoundNOSException: No such domain object SolutionArchitecture.Model.BestPracticeCriteriaScore-14--4--1: null adapter

```

The system seems to be passing the primary key (14) and the values used as the foreign key in the child object (4 and 1)

See information about the model below. Issue happens when navigating to the SystemBestPracticeScore.BestPracticeCriteriaScore property

```C#

public partial class BestPracticeCriteriaScore

{

public virtual int Id { get; set; }

[Hidden]

public virtual int BestPracticeId { get; set; }

public virtual int Score { get; set; }

public virtual string Criteria { get; set; } = null!;

public virtual BestPractice BestPractice { get; set; } = null!;

public virtual ICollection<SystemBestPracticeScore> SystemBestPracticeScores { get; set; } = new HashSet<SystemBestPracticeScore>();

}

public partial class SystemBestPracticeScore

{

#region Injected Services

//An implementation of this interface is injected automatically by the framework

public IDomainObjectContainer Container { set; protected get; }

#endregion

[Hidden]

public virtual int Id { get; set; }

[Hidden]

public virtual int SystemId { get; set; }

public virtual int BestPracticeId { get; set; }

public virtual int Score { get; set; }

[Truncated]

entity.Property(e => e.Progress)

.HasColumnType("character varying")

.HasColumnName("progress")

.HasComment("detail of progress made since the last assessment");

entity.HasOne(d => d.System)

.WithMany(p => p.SystemBestPracticeScores)

.HasForeignKey(d => d.SystemId)

.OnDelete(DeleteBehavior.Restrict)

.HasConstraintName("system_best_practice_score_fk_system_id");

entity.HasOne(d => d.BestPracticeCriteriaScore)

.WithMany(p => p.SystemBestPracticeScores)

.HasPrincipalKey(p => new {p.BestPracticeId, p.Score})

.HasForeignKey(d => new { d.BestPracticeId, d.Score })

.OnDelete(DeleteBehavior.Cascade)

.HasConstraintName("system_best_practice_score_fk_best_practice_id__score");

});

```

Answers:

username_1: This is the right place to post. Based on a quick look, this does look like a bug in our code.

We think the problem stems from this line in the mapping:

`entity.HasAlternateKey(e => new { e.BestPracticeId, e.Score });`

We've never used `HasAlternateKey` in our own applications, which is why we haven't seen this problem before. Within the framework we use an API function called `GetKeys()` to find the key used to build the URL. However, this method returns both the primary and the alternate key, so it builds a faulty key for the object.

I will flag this as a Bug for now and we will fix it for the next release.

Meantime, to allow you to get going again, I suggest you take the alternate key out of the mapping for the time being.
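
The failure mode described above can be illustrated with a toy model. This is only a sketch, written in Python rather than C#: `KeyInfo`, `build_oid` and the `primary_only` switch are hypothetical names, and the `--` separator is inferred from the id in the log output (`...BestPracticeCriteriaScore-14--4--1`), not taken from the framework's source.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KeyInfo:
    """Hypothetical stand-in for one key that EF reports on an entity type."""
    properties: tuple
    is_primary: bool


def build_oid(type_name, entity, keys, primary_only):
    """Join key values with '--', mimicking the id seen in the log output."""
    values = []
    for key in keys:
        if primary_only and not key.is_primary:
            continue  # skip alternate keys
        values.extend(str(entity[name]) for name in key.properties)
    return f"{type_name}-{'--'.join(values)}"


entity = {"Id": 14, "BestPracticeId": 4, "Score": 1}
keys = [
    KeyInfo(("Id",), is_primary=True),
    KeyInfo(("BestPracticeId", "Score"), is_primary=False),  # alternate key
]

# Using every key reproduces the faulty id; using only the primary key does not.
faulty = build_oid("BestPracticeCriteriaScore", entity, keys, primary_only=False)
fixed = build_oid("BestPracticeCriteriaScore", entity, keys, primary_only=True)
```

With all keys included, the id carries the alternate-key values (4 and 1) after the primary key (14), which matches the id that could not be resolved in the log.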

username_1: Well if EF is introducing an alternate key by convention then the problem is essentially the same. I think we can safely say that _at present_ NakedObjects does not work with alternate keys. It should be possible to fix this - we need to change the way we access the API such that we are just getting the primary key instead of all the keys.

If you want to progress before that ...

I am assuming that you have done this because you want to display different 'identifiers' for associated objects in different contexts. Is that correct? If so, I suggest you just use the object property, with no associated FK property (or with an FK property to the primary key, `Hidden` from the user). Then add a derived (`readonly`) property that displays the desired identifier from the associated object.

username_2: Need to cherry-pick fix back to NO12/NF1

username_1: Stef has fixed the issue, and we have uploaded a new version of the NakedObjects.Server package (v12.0.1) to the Nuget public gallery. Please try this out and report back if it fixes your particular model.

username_0: Thank you. Will this issue be fixed in v13 beta as well soon? I am using the Template Server project downloaded from this repository, but that project is referencing v13 beta packages

username_1: v13.0.0-beta02 released with the fix. Please confirm back that it is now working for you. |

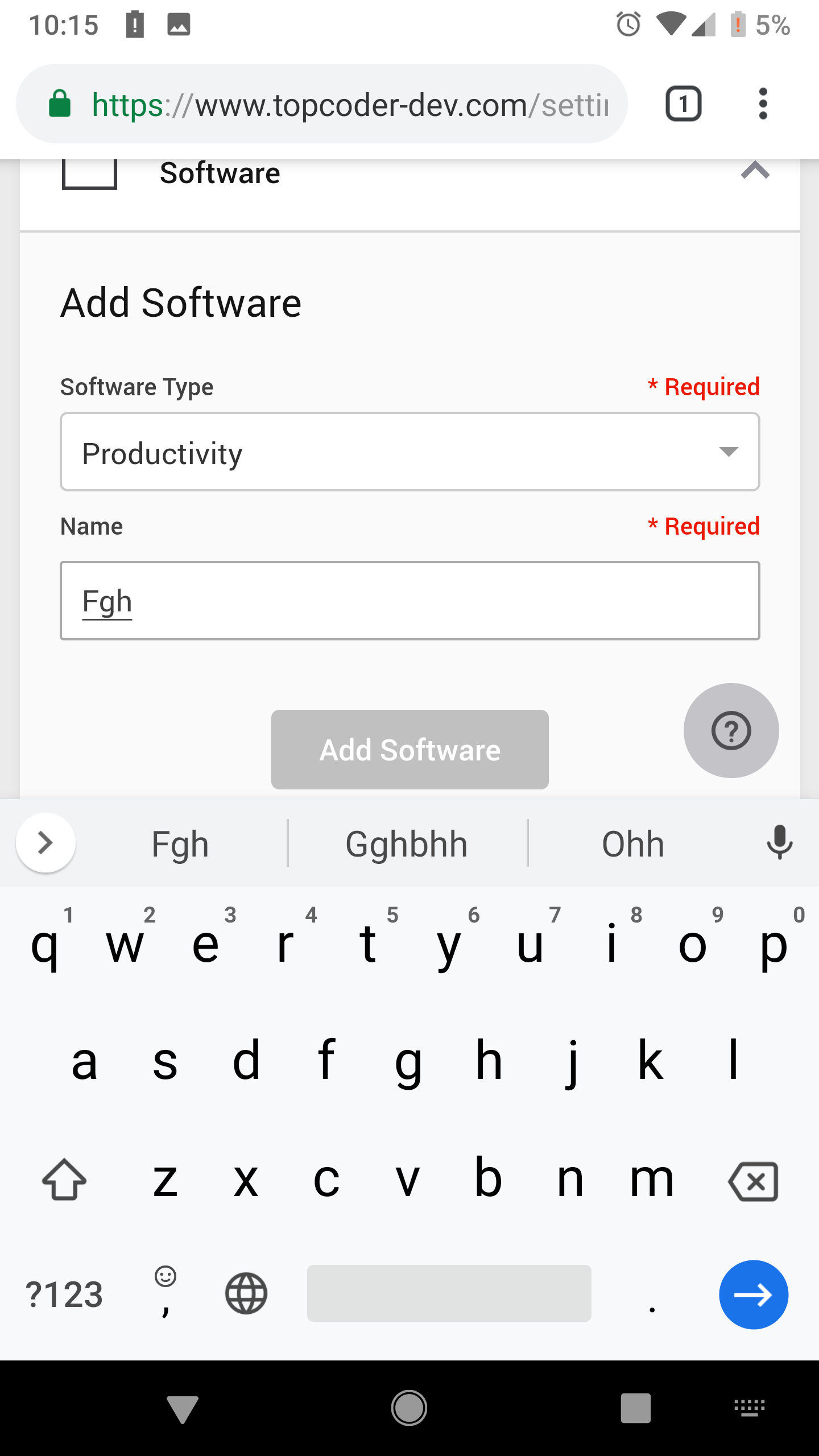

topcoder-platform/community-app | 421763583 | Title: Settings > Tools: Sometimes long time taken to enable the add button after adding an item

Question:

username_0: 1. Open the application and login as TonyJ

2. Hover over the profile avatar > Click Settings

3. Click Tools Tab > Devices/Software/Service Providers/Subscriptions (Any Tab)

4. Add an item

5. Enter data to the empty form again as soon as you created the item

6. Look at the add button and the data in the form

**Actual:** The add button sometimes takes a long time to become enabled after an item is added. The item appears in the list as soon as the user clicks the add button, but the button stays disabled for some time.

**Expected:** The user should be able to add another item as soon as the previous item has been added.

**Reproducibility Rate:** 3/3

**Environment:** Google Pixel XL, Android 9.0 | Browser: Google Chrome 73.0.3683.75

Answers:

username_0:

username_1: can't reproduce

Status: Issue closed

|

miguelgrinberg/Flask-Migrate | 293610653 | Title: Auto generate rev-id

Question:

username_0: One thing that is not very friendly about flask-migrate is that the revision id is a hash of sorts. It is quite hard to track changes in a series if they're identified by a hash value. What if the rev-id could be generated as an increasing number, either by default or as an option?

Answers:

username_0: Just scripted myself into it.
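
The script itself was not posted. A minimal sketch of what such a helper might look like is given below; `next_rev_id` and the zero-padded width are hypothetical choices, and it assumes the existing revision ids have already been collected into a list.

```python
import re


def next_rev_id(existing_ids, width=4):
    """Return the next zero-padded sequential id, ignoring non-numeric ids."""
    numeric = [int(rev) for rev in existing_ids if re.fullmatch(r"\d+", rev)]
    return str(max(numeric, default=0) + 1).zfill(width)


# Hash-style ids such as "a1b2c3" are skipped when looking for the highest number.
example = next_rev_id(["0001", "0002", "a1b2c3"])
```

The result could then be passed to the migration command through its `--rev-id` option, assuming the installed Flask-Migrate and Alembic versions expose that option. A hash id that happens to consist only of digits would be miscounted by this sketch.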

Status: Issue closed

username_1: Flask-Migrate does not create revision ids, that is done by Alembic, so this needs to be discussed in the context of that project.

The idea of hashes as migration ids is not new though, git does the same thing. And sequential numbers do not work well if you have multiple branches. |

dbca-wa/wastd | 668335654 | Title: Entering Occurrences - Report Plant Count section - Estimated area of pop field

Question:

username_0: ## Problem

‘Estimated area of pop’ field in the TSC database which I assume relates to ‘Area of pop m2’ on the Threatened Priority Report Form falls under the Quadrats section of 'report plant count' but should relate to all three sections: Plant Count (Detailed), Plant Count (Simple) and Quadrats.

If it remains under Quadrats it is unlikely people will fill it out if they didn’t use quadrats in their survey.

Are you able to please shuffle the fields around so this field isn't pigeon-holed?<issue_closed>

Status: Issue closed |

ayrtonvwf/lite-admin | 334584225 | Title: Regular size .material-icons inside label

Question:

username_0: The `.material-icons` class creates an element with a font-size slightly bigger. This may be good in most cases, but when it's inside an input's label, it would be better if it kept the label font size.<issue_closed>

Status: Issue closed |

logstash-plugins/logstash-output-elasticsearch | 587342306 | Title: [Doc]Add more explicit documentation for `upsert` and `doc_as_upsert`

Question:

username_0: From #881:

" I think that the `update a document if not already present` only works with the doc_as_upsert option. The upsert option adds data to the document if the document does not exist I think.

**Some more explicit documentation for both upsert and doc_as_upsert would also be helpful**."
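
As a rough illustration of the distinction being asked about, the sketch below models the two options with an in-memory dictionary. It is a toy model of the Elasticsearch update semantics as generally documented, not the plugin's code; the `update` function and its parameters are hypothetical names.

```python
def update(store, doc_id, doc, upsert=None, doc_as_upsert=False):
    """Toy model of an update request with optional upsert behaviour."""
    if doc_id in store:
        store[doc_id].update(doc)  # partial update of the existing document
        return "updated"
    if doc_as_upsert:
        store[doc_id] = dict(doc)  # the update body itself becomes the new document
        return "created"
    if upsert is not None:
        store[doc_id] = dict(upsert)  # a separate body is used for the new document
        return "created"
    raise KeyError(f"document {doc_id!r} does not exist")


store = {}
first = update(store, "1", {"count": 5}, upsert={"count": 0, "new": True})
second = update(store, "1", {"count": 5}, upsert={"count": 0, "new": True})
```

In this model, `upsert` supplies the content used only when the document is missing, while `doc_as_upsert` reuses the update body for that case.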

Answers:

username_0: @robin13 I added this issue for tracking your request from #881. I'd like to sync with you to hear more about the additional you'd like to see in the docs. |

josefnpat/roguecraft-squadron | 358880380 | Title: Ticket Template

Question:

username_0: Using templates can help make sure a lot of the basic questions are answered on a ticket before it is deemed acceptable and something that can be finished before the due date.

**1) Do multiple people need to touch this ticket before the due date?**

I.e., design needs to be done, then handed to a programmer.

A) If this is a waterfall ticket, write down dates each person will get the assets on or before the end of sprint.

B) If this requires input from multiple people, write down the days on which people agree to review or check on the ticket.

**2) How many estimated assets will be required to finish this ticket?**

A) If this is a waterfall ticket mark what assets need to be done first.

B) If fewer than 10 assets, write them down and break them up further if needed.

C) If more than 10 assets, this should either be broken up into another ticket, or the assets should be extremely related, with an estimated time for each asset noted and understood for the time frame. The key point is to write down what assets will be needed and to understand the time constraints.

**3) Does this ticket depend on another ticket?**

A) If this is a waterfall ticket, mark a due date for when assets are expected for that ticket.

B) If this is based on an older ticket, make sure that past ticket is clear and still related.

**4) Can I walk through what I will do first on the ticket?**

A) If you are struggling, the ticket needs more input and clarity.

B) If you are not sure what to do after the first step, try to break down the ticket further (i.e. more clarity)

**5) What will this ticket look like finished? What does it need to be complete?**

A) Can list assets, number of reviews, voting, ready to test, etc.

B) Do you know all the assets listed or will just a few do?

**6) Can I test this ticket?**

A) List a way to test whether it is complete, if testing applies.

B) Does the design seem functional? Do the crop marks look correct? Is it running as expected?

Answers:

username_0: This is in part because I have to make sure I get my tickets done very early in the sprint, so I can hand them off to the programmer halfway through the sprint and leave time to implement.

username_1: @username_0 please move this to the wiki

Status: Issue closed

username_0: Moved to wiki

username_1: https://github.com/username_1/roguecraft-squadron/wiki/Ticket-guide |

bubkoo/html-to-image | 604414745 | Title: Slow Download and Error File when Download From Server

Question:

username_0: I have code:

`htmlToImage.toPng(document.querySelector('.orgchart'))

.then((dataUrl) => {

download(dataUrl, `${fileNameExportHierarchy}.png`, 'image/png');

});`

When I run this locally, there is no problem. But when the code is deployed to the server, the download popup takes too long to appear and the downloaded file is broken, as in this screenshot.

How can I solve this problem?

|

ingenieux/awseb-deployment-plugin | 176714580 | Title: Does not report failed deployment correctly

Question:

username_0: If a deployment failed, EB will return status green with a previous build deployed. This causes the plugin to think deployment is successful, but it is not. It should instead check to make sure the version labels match after the deployment turns green. If they don't match, then the deployment has failed.
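
The check proposed above can be sketched as a small pure function. This is an illustration only, not the plugin's code: `deployment_succeeded` and its parameters are hypothetical names, and the status, health and version label are assumed to have been read from the environment beforehand.

```python
def deployment_succeeded(status, health, deployed_label, expected_label):
    """Treat a deployment as successful only if the expected version is live."""
    if status != "Ready" or health != "Green":
        return False  # environment has not settled yet
    # Green alone is not enough: the environment may have rolled back.
    return deployed_label == expected_label


# Environment is Ready/Green, but still runs the previous version label.
rolled_back = deployment_succeeded(
    "Ready", "Green",
    deployed_label="8eb5aa5-2016-11-14T16:15:08Z",
    expected_label="8eb5aa5-2016-11-14T19:27:31Z",
)
```

Under this rule, an environment that turns green while still running the previous version label is reported as a failed deployment.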

Answers:

username_1:

```

[Deploy Beanstalk] $ /bin/bash -x /tmp/hudson737544988919118642.sh

++ git rev-parse --short HEAD

+ echo GIT_SHORT_HASH=8eb5aa5

++ date -u +%Y-%m-%dT%H:%M:%SZ

+ echo DEPLOY_TIME=2016-11-14T19:27:31Z

[EnvInject] - Injecting environment variables from a build step.

[EnvInject] - Injecting as environment variables the properties file path 'env.props'

[EnvInject] - Variables injected successfully.

AWSEB Deployment Plugin Version 0.3.10

Zipping contents of Root File Object (Deploy Beanstalk) into tmp file awseb-4080026641504629791.zip (includes=, excludes=)

Uploading file awseb-4080026641504629791.zip as s3://elasticbeanstalk-us-east-1-redact/redact/redact-8eb5aa5-2016-11-14T19:27:31Z.zip

Creating application version 8eb5aa5-2016-11-14T19:27:31Z for application redact for path s3://elasticbeanstalk-us-east-1-redact/redact/redact-8eb5aa5-2016-11-14T19:27:31Z.zip

Created version: 8eb5aa5-2016-11-14T19:27:31Z

Using environmentId 'e-rm2ptmfham'

No pending Environment Updates. Proceeding.

Checking health/status of environmentId e-rm2ptmfham attempt 1/30

Environment Status is 'Ready'. Moving on.

Updating environmentId 'e-rm2ptmfham' with Version Label set to '8eb5aa5-2016-11-14T19:27:31Z'

Checking health/status of environmentId e-rm2ptmfham attempt 1/30

Versions reported: (current=8eb5aa5-2016-11-14T16:15:08Z, underDeployment: 8eb5aa5-2016-11-14T19:27:31Z). Should I move on? false

Checking health/status of environmentId e-rm2ptmfham attempt 2/30

Versions reported: (current=8eb5aa5-2016-11-14T16:15:08Z, underDeployment: 8eb5aa5-2016-11-14T19:27:31Z). Should I move on? false

Checking health/status of environmentId e-rm2ptmfham attempt 3/30

Versions reported: (current=8eb5aa5-2016-11-14T16:15:08Z, underDeployment: 8eb5aa5-2016-11-14T19:27:31Z). Should I move on? false

Environment Status is 'Ready' and Health is 'Green'. Moving on.

Deployment marked as 'successful'. Starting post-deployment cleanup.

Cleaning up temporary file /tmp/awseb-4080026641504629791.zip

Finished: SUCCESS

```

and the corresponding EB log:

```

2016-11-14 13:33:38 UTC-0600 ERROR Failed to deploy application.

2016-11-14 13:33:38 UTC-0600 ERROR Service:AmazonCloudFormation, Message:TemplateURL must reference a valid S3 object to which you have access.

2016-11-14 13:29:48 UTC-0600 INFO Environment update is starting.

```

username_2: Hm... interesting. Ok, I'll keep that in mind and push a change for it shortly ok?

username_3: another case with the same problem:

`Versions reported: (current=[Jenkins]-[Integration]-[08-03-2017 1924], underDeployment: [BK-API-17]-[Integration]-[20-03-2017 1242]). Should I move on? false

Mon Mar 20 10:45:08 UTC 2017 [INFO] Started Application Update

Mon Mar 20 10:45:08 UTC 2017 **[ERROR] Deployment Failed: Unexpected Exception**

Mon Mar 20 10:44:59 UTC 2017 [INFO] Deploying new version to instance(s).

Checking health/status of environmentId e-kbhp2qmi3b attempt 2/30

Versions reported: (current=[Jenkins]-[Integration]-[08-03-2017 1924], underDeployment: [BK-API-17]-[Integration]-[20-03-2017 1242]). Should I move on? false

Environment Status is 'Ready' and Health is 'Green'. Moving on.

Deployment marked as 'successful'. Starting post-deployment cleanup.

Cleaning up temporary file C:\Users\ADMINI~1\AppData\Local\Temp\2\awseb-5418100666623237368.zip

Finished: SUCCESS`

username_4: We currently use the following script after deploy-step to verify the correct version was deployed.

where ${VERSION}-timestamp-${BUILD_TIMESTAMP} is our _Version Label Format_

ELB_VERSION=`aws elasticbeanstalk describe-environments --profile our-profile --region our-region --environment-names $ENVIRONMENT --query 'Environments[0].VersionLabel' | sed 's/"//g'`

if [ "$ELB_VERSION" = "${VERSION}-timestamp-${BUILD_TIMESTAMP}" ]; then

echo "correct version is deployed on ELB"

else

echo "ELB version: $ELB_VERSION do not match deployment version: ${VERSION}-timestamp-${BUILD_TIMESTAMP}"

exit 1

fi

Posting as it might be useful for someone.

username_1: In our bot (python) I do something similar:

```

events = client.describe_events(ApplicationName=eb_app, StartTime=deploy_start_time_utc, EnvironmentName=eb_env, Severity='ERROR')['Events']

if len([event for event in events if event['Message'] == 'Failed to deploy application.']) == 0:

register_deployment(eb_env, commit_hash)

else:

exit(1)

``` |

darryldecode/laravelshoppingcart | 558671881 | Title: Remove not working

Question:

username_0: The remove method always removes the first item in the cart, regardless of which item I click. Kindly let me know of any fix.

Code in View:

@foreach(Cart::session(Auth::id())->getContent() as $item)

<a href="{{ route('cosplay.cart.destroy',$item->id) }}" onclick="event.preventDefault(); document.getElementById('delete-form').submit();"><span class="lnr lnr-trash text-theme display-5"></span></a>

<form id="delete-form" action="{{ route('cosplay.cart.destroy',$item->id) }}" method="post" style="display: none;">

@method('DELETE')

@csrf

</form>

@endforeach()

Code in Controller:

public function destroy($id)

{

Cart::session(Auth::id())->remove($id);

Alert::toast('Costume Removed!', 'success')->autoclose(800);

return redirect()->back();

} |

jef/streetmerchant | 757997837 | Title: Trouble adding a store.

Question:

username_0: I followed the instructions [here](https://github.com/username_2/streetmerchant/wiki/Help:-Configuration:-Adding-a-store), and viewed the example [here](https://github.com/username_2/streetmerchant/commit/af96c5f2e808af7496f3c3299e4cf173105de48b), but I keep getting errors like this:

`src/store/lookup.ts(191,4): error TS7053: Element implicitly has an 'any' type because expression of type 'Series' can't be used to index type '{ 3070: number; 3080: number; 3090: number; rx6800: number; rx6800xt: number; rx6900xt: number; ryzen5600: number; ryzen5800: number; ryzen5900: number; ryzen5950: number; sf: number; sonyps5c: number; sonyps5de: number; 'test:series': number; xboxss: number; xboxsx: number; }'.

Property 'throttles' does not exist on type '{ 3070: number; 3080: number; 3090: number; rx6800: number; rx6800xt: number; rx6900xt: number; ryzen5600: number; ryzen5800: number; ryzen5900: number; ryzen5950: number; sf: number; sonyps5c: number; sonyps5de: number; 'test:series': number; xboxss: number; xboxsx: number; }'.`

I'm unsure what I'm doing wrong. So unsure that I can't even form a very well thought out question or provide info that I think will be useful. I don't even know where to start. (Yes, I'm TOTALLY new to Node.JS...)

I DO think this will be useful:

[Store I'm trying to add.](https://flyhoneycomb.com/)

Items I'm trying to add - [1](https://flyhoneycomb.com/products/alpha-flight-controls), https://flyhoneycomb.com/collections/honeycomb-flight-sim-hardware/products/bravo-throttle-quadrant, https://flyhoneycomb.com/collections/honeycomb-flight-sim-hardware/products/airbus-throttle-handles

Happy to provide more info if you need. Just let me know.

Thank you!

Answers:

username_1: having the same problem

username_2: Do you mind posting the code you're trying to add? Specifically the new store? I can help!

username_0: Absolutely! Thanks for writing/releasing such an awesome utility, too. :-D

`import {Store} from './store';

export const Honeycomb: Store = {

labels: {

inStock: {

container: '#product_form_4533457682541',

text: ['Add to cart']

},

},

links: [

{

brand: 'test:brand',

model: 'test:model',

series: 'test:series',

url: 'https://flyhoneycomb.com'

},

{

brand: 'honeycomb',

model: 'AlphaFlightYoke',

series: 'yoke',

url: 'https://flyhoneycomb.com/collections/honeycomb-flight-sim-hardware/products/alpha-flight-controls'

},

{

brand: 'honeycomb',

model: 'BravoThrottleQuadrant',

series: 'quadrant',

url: 'https://flyhoneycomb.com/collections/honeycomb-flight-sim-hardware/products/bravo-throttle-quadrant'

},

{

brand: 'honeycomb',

model: 'ThrottlePackForAirbus',

series: 'throttles',

url: 'https://flyhoneycomb.com/collections/honeycomb-flight-sim-hardware/products/airbus-throttle-handles'

},

],

name: 'honeycomb'

};`

username_3: I drove myself crazy over this one for a long time but figured out how to debug it. I believe there is some confusion here. I'm not a coder at all, but from fiddling around I ran into this. The current error is not a store error but a product error. You need to add the model, series and brand to the store.TS file. You will then most likely get a max price error, so you have to add the max price series to config.ts. Also update the .env file for this series and model in the max price section. After that it should all run.

The confusion I see here is that you're adding a store before adding a model or series for the product. You need to build both, a store and a product, because the flight stick is not a tracked product by default.

I ran into this trying to track the Pulse 3D headset by Sony. Also, running your code from "git" rather than the command prompt will show debug output telling you which code is causing the issue.

username_3: The key to your error btw is this. "Property 'throttles' does not exist on type" Throttles is missing from the Store.ts file.

username_1: Wow totally solved my problem!

Thanks so much

@username_2 should take a look at this

username_2: username_2.codes/streetmerchant/help/general/#adding-a-store

username_0: Hmm...not sure I follow. I added a store (honeycomb.ts) with the code shared with Jef, added the necessary bits into index.ts:

```

import {Evga} from './evga';

import {EvgaEu} from './evga-eu';

import {Galaxus} from './galaxus';

import {Game} from './game';

import {Gamestop} from './gamestop';

// import {Honeycomb} from './honeycomb';

import {Kabum} from './kabum';

import {Mediamarkt} from './mediamarkt';

import {MemoryExpress} from './memoryexpress';

import {MicroCenter} from './microcenter';

import {Mindfactory} from './mindfactory';

```

...(again, commented out so the code will still run), and added all the stuff into store.ts as pasted above. Following Jef's writeup and viewing the example given, as linked to in my initial post, I thought I checked all the boxes. This "readme.md" thing though...this is new.

username_3: OK, let's go one at a time. The readme is located in the root of the streetmerchant folder, where you edit the .env; fix that part first. The other important thing I did was note down my series, model and brand, as all of these need to match across all the files.

See what errors come up after the readme file update.

As for the store, is honeycomb the store? You need to create a store .ts file that reads an element on the web page that indicates whether the item is in stock or not. This is a process in itself, separate from adding another item. That's what I was trying to say: you have two build-outs needed to accomplish this. The current error, however, points to the readme file missing your model, series and brand.

username_3: For the store, the file would be Honeycomb.ts, and it would contain the following. This is Walmart's, for example.

import {Store} from './store';

export const Walmart: Store = {

labels: {

inStock: {

container: '.button.spin-button.prod-ProductCTA--primary.button--primary',

text: ['add to cart']

},

maxPrice: {

container: 'span[class*="price-characteristic"]'

}

},

links: [

{

brand: 'test:brand',

model: 'test:model',

series: 'test:series',

url:

'https://www.walmart.com/ip/Keurig-K-compact-Brewer-Black-Coffee-Maker/806217614'

},

{

brand: 'sony',

model: 'ps5 console',

series: 'sonyps5c',

url: 'https://www.walmart.com/ip/PlayStation5-Console/363472942'

},

{

brand: 'sony',

model: 'ps5 digital',

series: 'sonyps5de',

url:

'https://www.walmart.com/ip/PlayStation5-Console/493824815'

},

{

brand: 'microsoft',

model: 'xbox series x',

series: 'xboxsx',

url: 'https://www.walmart.com/ip/Xbox-Series-X/443574645'

},

{

brand: 'microsoft',

model: 'xbox series s',

series: 'xboxss',

url: 'https://www.walmart.com/ip/Xbox-Series-S/606518560'

},

{

brand: 'corsair',

model: '750 platinum',

series: 'sf',

url: 'https://www.walmart.com/ip/SF750-Power-Supply/197046151'

},

{

brand: 'corsair',

model: '600 platinum',

series: 'sf',

url:

'https://www.walmart.com/ip/Corsair-SF-Series-600W-80-Platinum-Power-Supply/250717047'

},

{

brand: 'amd',

model: '5900x',

series: 'ryzen5900',

url:

'https://www.walmart.com/ip/AMD-Ryzen-9-5900X-12-core-24-thread-Desktop-Processor/159710953'

}

],

name: 'walmart'

};

This file goes in SRC/store/ model.

Status: Issue closed

|

top-think/framework | 166965765 | Title: A question about route configuration

Question:

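username_0: After testing, I found that the framework currently does not support module-level route configuration.

However, when a route configuration is specified in the `extra_config_list` parameter of `config.php`, the `route` configuration file under the module is also loaded.

In that case the `route` configuration does not take effect.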

Answers:

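username_1: Route detection has already finished by the time the module configuration is loaded, so of course it does not take effect.

username_0: @username_1

Then how should I set up routes for a group?

I configured the following rules in the routes: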

```

'[admin]' => [

'index' => 'admin/index/index', // admin home page

'login' => 'admin/login/index', // login page

]

```

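The rules above generate the corresponding URLs correctly, and those URLs can be accessed normally.

But when I visit the admin home page via `http://www.xxx.com/admin/`, the page shown is the content of `/index/index/index.html` rather than the admin home page.

username_1: @username_0 Route groups and modules are not directly related.

username_2: route.php has to be configured in one place. Within a module you can register routes dynamically instead.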

Status: Issue closed

|

chashnikov/IntelliJ-presentation-assistant | 559703622 | Title: Custom shorcuts not displayed

Question:

username_0: Hello.

I have bound the custom shortcut **Main menu | Window | Editor Tabs | Split Vertically** to **ALT + V**.

When I use the shortcut, the **Split Vertically** text is correctly displayed but the shortcut **ALT + V** is not displayed.

I am using **IntelliJ IDEA Ultimate 2019.3.2** and **Presentation Assistant 1.0.3** on **Ubuntu 18.04**.

Answers:

username_1: By default the plugin shows shortcuts from the standard keymaps. If you want to show shortcuts from your own keymap, choose it in File | Settings | Appearance & Behavior | Presentation Assistant.

username_0: Oh perfect, it was the option **Default Copy**.

Thank you :)

Status: Issue closed

|

jump-dev/MathOptInterface.jl | 986101945 | Title: supports AbstractConstraintAttribute broken for bridges

Question:

username_0: HiGHS doesn't support `ConstraintDualStart`, so any bridged constraint shouldn't either. But yet:

```julia

julia> model = MOI.Bridges.full_bridge_optimizer(HiGHS.Optimizer(), Float64)

MOIB.LazyBridgeOptimizer{HiGHS.Optimizer}

with 0 variable bridges

with 0 constraint bridges

with 0 objective bridges

with inner model A HiGHS model with 0 columns and 0 rows.

julia> MOI.supports(model, MOI.ConstraintDualStart(), MOI.ConstraintIndex{MOI.VectorOfVariables,MOI.Nonnegatives})

true

julia> MOI.supports(model.model, MOI.ConstraintDualStart(), MOI.ConstraintIndex{MOI.VectorOfVariables,MOI.Nonnegatives})

false

```

Here's the offending code:

https://github.com/jump-dev/MathOptInterface.jl/blob/9a54ba742ed1f0dbe63f7548c3c960dcac1dc8c1/src/Bridges/bridge_optimizer.jl#L1218-L1234

Answers:

username_1: Here is the fix for a similar problem with the slack bridge https://github.com/jump-dev/MathOptInterface.jl/pull/1383/files

username_0: Yeah is it sufficient to just look at the inner optimizer? If so, why is #1383 still a draft?

username_1: Because it needs tests ^^

username_0: I've opened a few PRs to fix these

- [ ] #1580

- [ ] #1579

- [ ] #1578

- [ ] #1577

- [ ] #1576

- [ ] #1575

- [ ] #1574

I still have the following to go

- [ ] geomean_to_relentr.jl

- [ ] function_conversion.jl

- [ ] det.jl

Status: Issue closed

username_0: Closing because this is now fixed. The IndicatorSOS1Bridge PR is different. |

OHIF/Viewers | 607103271 | Title: OHIF Viewer hangs when Orthanc Dicomweb "StudiesMetadata" set to "MainDicomTags"

Question:

username_0: I'm using the latest versions of Orthanc and OHIF Viewer. When Orthanc DICOMweb's "StudiesMetadata" parameter is set to "MainDicomTags", OHIF Viewer hangs after the fetching-metadata stage when opening a study. Changing the value of the "StudiesMetadata" parameter to "Full" fixes the issue.

Answers:

username_1: I'm not sure I have enough information to action this one way or the other. Generally, the OHIF Viewer should work with and support DICOMWeb features. If you can point which part of the spec we're not handling correctly, then I know we have a bug to fix. If this is a general integration / Orthanc compatibility issue, it may be outside of scope.

username_0: It seems to get stuck after reading all the metadata from Orthanc. It doesn't move on to the next step.

username_1: Unfortunately this is not enough information for me to go off of.

username_2: The reason for this issue can be found here: https://github.com/OHIF/Viewers/issues/1638

I also mentioned my test case there, using Orthanc with MainDicomTags. It's not only Orthanc that has had problems since v3.7.8.

username_1: We'll continue to follow in #1638, as it captures this issue more clearly. |

mher/flower | 165371181 | Title: task not saved to database when running inside a docker

Question:

username_0: I tried to run flower inside a docker container, but the tasks are not properly saved to the database file I specified on the mounted volume. However, there is no problem at all when running flower locally.

my shell command:

flower -A dtasks --port=5555 --db=/path/to/database/file --persistent=True

Actually, some tasks were saved, but only a few. Most of the task records were gone.

I would appreciate any help.

Answers:

username_1: This doesn't sound like a flower-related issue. I'd suggest debugging with a local database file inside docker.

Status: Issue closed

|

allure-framework/allure-python | 464241509 | Title: [feature request]Where could i find the document for Allure python commons?

Question:

username_0: This is amazing work and I tried to use it in my code. But I could not find API documentation or usage instructions for it. All I could find is [this document](https://docs.qameta.io/allure/#_about), which does not cover Allure python commons. Maybe it would be better to maintain documentation for it.

Thanks very much. I am sorry if I have misunderstood something.

#### Please tell us about your environment:

- Allure version: 2.1.0

- Test framework: None

- Allure adaptor: None |

nikitavoloboev/ama | 548546734 | Title: How do you organize todos across multiple apps?

Question:

username_0: According to your wiki, you use Trello, 2Do, and MindNode to store todos, which seem to overlap quite a bit. How do you decide where to put a new todo? And in general, how do you track projects, which might have many todos and other corresponding information?

Answers:

username_1: I use 2Do for most of my tasks. Projects tasks are usually done via GitHub (issues) and sometimes Trello (general projects). MindNode I use as a thinking board. Just put things in there, work on it, then erase the entire map.

Status: Issue closed

|

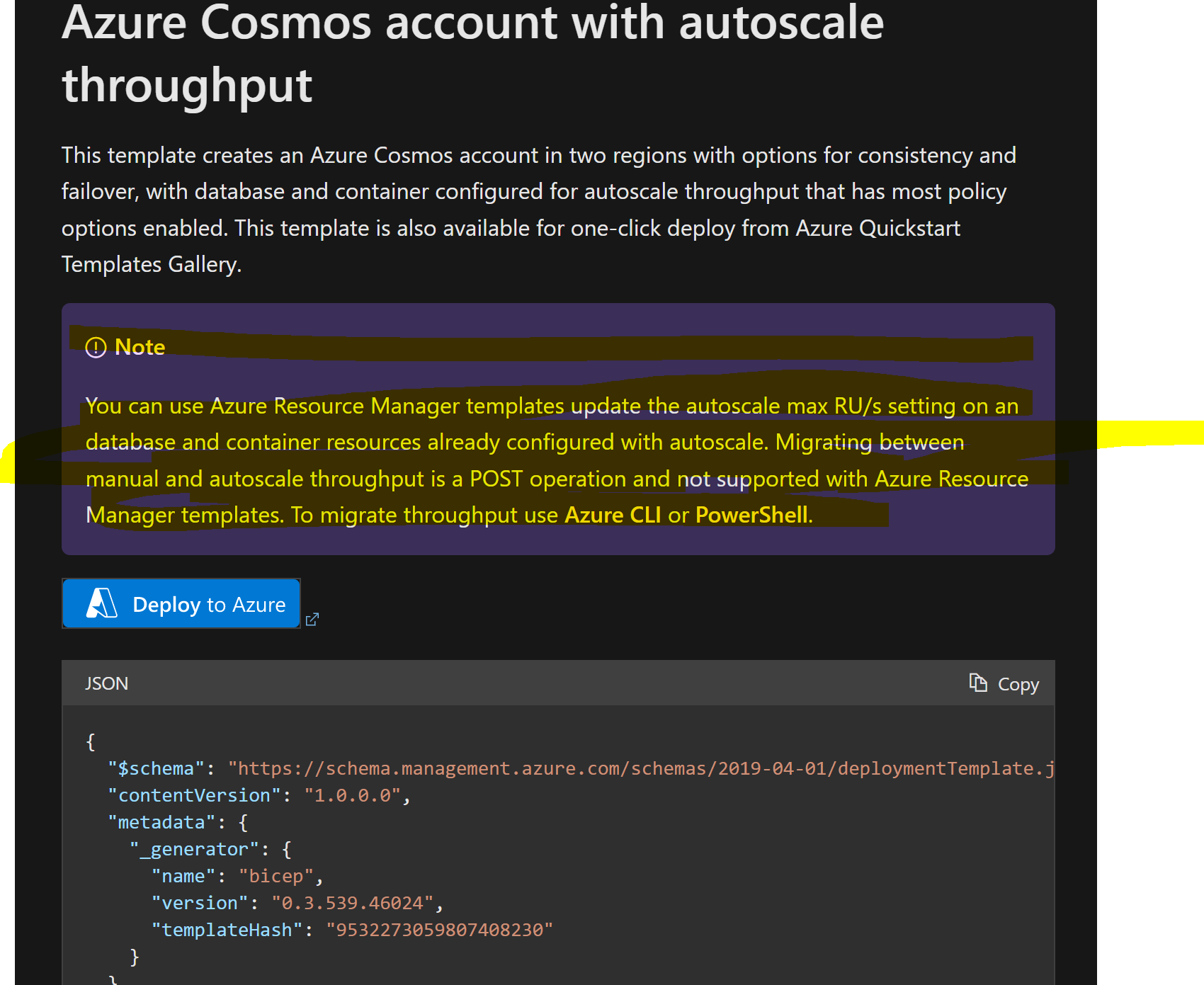

geneontology/go-ontology | 103124519 | Title: Can we strengthen our axiomatization to have spatial disjointness work for occurs_in

Question:

username_0: cc @hdietze @kltm

We currently have spatial disjointness axioms, e.g.

(partOf some nucleus) DisjointFrom (partOf some cytosol)

This will correctly identify any continuant with contradictory partOf axioms

However, this isn't detecting some invalid biology in some of the lego models, where we have the same activity occurring in both nucleus and cytosol

for this we would need

(occursIn some nucleus) DisjointFrom (occursIn some cytosol)

But obviously we don't want to redundantly maintain parallel axioms

Assigned to @username_1 to see if he has any ideas here, I feel I'm missing something, but can't think of the elegant solution. We can certainly write a script that would generate one from the other but ugh.

The other approach would be to declare a superproperty that subsumes both occursIn and partOf, and use that in the axioms, effectively doing double duty (a weaker relation inside an axiom involving negation can result in a stronger axiom).

Within FOL we would just say occursIn -> occursIn o partOf (which is valid for locally reflexive partOf) which would yield the desired inferences, but we can't say this in OWL

unless I'm missing something?

Answers:

username_1: I think I like the new, weaker OP solution. The one aspect that feels hacky is that it relies on a po relation with a domain encompassing continuant and occurrent, even though we would never allow occurrent po continuant. It could fall foul of any axiomatisation added in future to flag this as inconsistent. If we follow this route, the new rel should live in gorel with a gorel URI and a comment on usage.

username_0: I would opt for RO, as we'd want to use it in Uberon, PO and even ENVO

Wherever it lives we would need mechanisms to stop it being used in (non-disjointness) assertions, because there is no way to do the 'push-down' inference to the more specific relation without the ugly [self-restriction pattern](https://github.com/oborel/obo-relations/wiki/ROGuideDraft#interaction-relations) which lies outside EL.

username_1: Are you sure self restriction is outside of EL? Looks like its not: http://www.w3.org/TR/owl2-profiles/#OWL_2_EL_2 (although ELK may not support)

username_0: You're right, it is in EL, but Elk doesn't appear to support it (I didn't test, just going by https://code.google.com/p/elk-reasoner/wiki/OwlFeatures )

Status: Issue closed

username_1: This issue was moved to geneontology/design_patterns#5 |

lillik/magento2-price-decimal | 263642520 | Title: Frontend shows round

Question:

username_0: The frontend shows 4 decimals but rounds every price to 2 decimals (e.g. price 36.9050 in the database becomes 36.9100 on the frontend).

Answers:

username_1: @username_0 Any update on this issue? Seems like a blocking issue

username_2: @username_0 this should be fixed in 1.0.3 |

volcano-sh/volcano | 949492492 | Title: the volcano-development.yaml has an additional configuration parameter

Question:

username_0: When I install with the YAML files, I get an error message when I execute the following command:

kubectl apply -f https://raw.githubusercontent.com/volcano-sh/volcano/master/installer/volcano-development.yaml

err msg:unknown flag: --admission-conf

After I remove this argument, no errors are reported.

Answers:

username_1: I think it's because the latest image is not updated.

/cc @huone1

username_2: @username_0 please use the latest code to build the admission image. Or use the version 1.3.0 installer yaml https://github.com/volcano-sh/volcano/blob/v1.3.0/installer/volcano-development.yaml

username_3: @username_4 please help to update the latest image on Docker Hub.

username_4: OK.

username_0: @username_2 I tried 1.3.0 and it works fine, thx

username_4: /close |

broadinstitute/cromwell | 330911743 | Title: Aborted jobs still submit additional preemptible tasks to JES

Question:

username_0: After I abort a job, VMs are not being appropriately killed. Manually killing the VM does not have the desired effect: if the task was preemptible, PAPI will launch another VM until all of your preemptible tries have been consumed.

Answers:

username_0: Hey @username_1

I am using 31.1, and I aborted them with the REST endpoint first. But a day later VMs were still running. Manually killing these caused the above behaviour.

username_1: Hey Patrick, I just ran a tiny test and was able to confirm jobs getting aborted.

- How many jobs were started from your workflow, and did any of the jobs from your workflow abort?

- Do you have a general sense of the stage your jobs were at when they were aborted? Were they all mostly executing the command when you aborted them?

- Did Cromwell ever report the workflow to have been successfully Aborted? Any errors thrown in the server logs?

Would you mind posting the operation metadata from one of the jobs that you tried aborting using the rest endpoint? Or simply the events reported for that operation?

username_1: Hey @username_0, I'm able to reproduce this behavior today. We will look into why this is happening; there's definitely some path that's not killing jobs upon abort.

username_1: <img width="795" alt="screen shot 2018-06-15 at 9 44 14 am" src="https://user-images.githubusercontent.com/14941133/41471529-e9b665be-7081-11e8-86e3-1a4804d71adf.png">

Workflow status `Aborted`, executionStatus/backendStatus `Running`, PAPI Operation status `done:false`

username_0: @username_1 Thanks a lot for looking into this. I have not really had bandwidth to get those Operation logs yet; I can hopefully look into it later today.

username_1: @username_0 It turned out it was a different issue entirely in our production environment that had the symptoms of abort failures. We've not had success recreating this -- but let us know what you end up observing! |

Mozzo1000/movielst | 372303684 | Title: Remove use of external libraries

Question:

username_0: At the moment, invoking the web interface gets a lot of files from external parties like bootstrap.

These files should probably be downloaded the first time when running web interface and saved in static folder.

Status: Issue closed |

huggingface/tokenizers | 742649134 | Title: AddedVocabulary does not play well with the Model

Question:

username_0: ### Current state

The `AddedVocabulary` adds new tokens on top of the `Model`, making the following assumption: "The Model will never change".

So, this makes a few things impossible:

- Re-training/changing the `Model` after tokens were added

- Adding tokens manually at the very beginning of the vocab, for example before training

- We can't extract these added tokens during the pre-processing step of the training, which would be desirable to fix issues like #438

### Goal

Make the `AddedVocabulary` more versatile and robust toward the change/update of the `Model`, and also capable of having tokens at the beginning and at the end of the vocabulary.

This would probably require that the `AddedVocabulary` is always the one doing the conversion tokens<->ids, providing the vocab etc. effectively letting us keep the `Model` unaware of its existence.

Answers:

username_1: Sorry to have taken forever on this issue! I looked into it and I think I understand the problem now, more or less.

As I understood it, the assumption that "the model never changes" is cemented in this line: https://github.com/huggingface/tokenizers/blob/ae6534f12db021aeed9938d2a46b045dc85fdb77/tokenizers/src/tokenizer/added_vocabulary.rs#L252 => When adding a token to the `AddedVocabulary` the id corresponds to the size of the model's vocab at the time of adding the token, but cannot change anymore if the model's vocab changes afterward.

Currently, the class flow when doing the tokens<->ids conversion is implemented as follows (please correct me if I'm wrong). Ask `TokenizerImpl` to convert `token_to_id`. The `TokenizerImpl` then just forwards the call to its `self.added_vocabulary`, but also passes a read-only reference to `self.model` to `self.added_vocabulary`. Then the `AddedVocabulary` checks if the token is in the Model's vocab (usually `self.vocab`) and, if it is not, checks its own map.
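
A minimal, self-contained sketch of the behavior described above. It is not the library's code: `ToyModel` and `ToyAddedVocabulary` are hypothetical stand-ins, and the lookup order simply follows the description in this comment. It shows how an id fixed at insertion time can collide with a model id once the model's vocab grows.

```rust
use std::collections::HashMap;

/// Hypothetical stand-in for a model; only its vocab matters here.
struct ToyModel {
    vocab: HashMap<String, u32>,
}

impl ToyModel {
    /// Assign ids 0, 1, 2, ... in the order the tokens are given.
    fn from_tokens(tokens: &[&str]) -> Self {
        let vocab = tokens
            .iter()
            .enumerate()
            .map(|(i, t)| (t.to_string(), i as u32))
            .collect();
        ToyModel { vocab }
    }
}

/// Hypothetical stand-in for the added vocabulary; it stores absolute ids.
struct ToyAddedVocabulary {
    added: HashMap<String, u32>,
}

impl ToyAddedVocabulary {
    fn new() -> Self {
        ToyAddedVocabulary { added: HashMap::new() }
    }

    /// The id is derived from the model's vocab size at the time of the call
    /// and is never updated afterwards.
    fn add_token(&mut self, token: &str, model: &ToyModel) -> u32 {
        let id = (model.vocab.len() + self.added.len()) as u32;
        self.added.insert(token.to_string(), id);
        id
    }

    /// Look in the model's vocab first, then in the added tokens.
    fn token_to_id(&self, token: &str, model: &ToyModel) -> Option<u32> {
        model.vocab.get(token).or_else(|| self.added.get(token)).copied()
    }
}
```

If `[NEW]` is added on top of a two-token model it gets id 2; after swapping in a three-token model, the model's third token also has id 2, so both tokens resolve to the same id.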

I see three different approaches for now:

1)

As proposed, to make `AddedVocabulary` more versatile, instead of passing `self.model` to the `AddedVocabulary` we could pass `self.model.vocab` to the `AddedVocabulary` for the `token_to_id` and `id_to_token` methods. This way, we are always aware of the current vocab's size.

Following this approach, I guess it makes more sense then to save "relative" token_ids in `AddedVocabulary` instead of absolute ones for those tokens that are appended to the end of the vocabulary, no? Also, the `add_tokens` method of `AddedVocabulary` would not need a reference to the model anymore. Tokens that are added at the beginning of the vocabulary could get absolute ids and tokens that are appended to the end could get relative ids => so `token_to_id` in `AddedVocabulary` could look something like:

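```rust
use std::collections::HashMap;

impl AddedVocabulary {
    // Sketch only: `begin_added_tokens` (absolute ids) and `end_added_tokens` (relative ids) are proposed fields.
    fn token_to_id(&self, token: &str, vocab: &HashMap<String, u32>) -> Option<u32> {
        if let Some(&id) = vocab.get(token) {
            Some(id)
        } else if let Some(&id) = self.begin_added_tokens.get(token) {
            Some(id)
        } else {
            self.end_added_tokens.get(token).map(|&rel| vocab.len() as u32 + rel)
        }
    }
}
```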

One small problem I see here is that not all models have the same type of "vocabulary". BPE, Wordpiece and Wordlevel all have `self.vocab` and `self.vocab_r`, but Unigram seems to use a `self.tokens_to_ids` `TokenMap`.

2) Or should we give `AddedVocabulary` a mutable reference to the vocab so that the vocab can be changed directly? The previous idea sounds better to me, but not 100% sure.

3) Or a completely different method would be to let the `TokenizerImpl` have more responsibility for `token_to_id`, so that the `TokenizerImpl` class actually checks if the token is in `self.model.vocab` and, if not, just passes the size of `self.model.vocab` to the `AddedVocabulary`'s `token_to_id` method. This approach actually seems like the most logical to me since both `AddedVocabulary` and `Model` are members of `TokenizerImpl`, so that ideally `AddedVocabulary` should not handle any `Model` logic but just get the minimal information from `model.vocab` it needs (which is just the size of the vocab IMO) for `token_to_id` from `TokenizerImpl`.
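
Approach 3) could be sketched roughly as follows. This is an illustration under assumptions, not the library's implementation: `ToyModel`, `ToyAddedVocabulary`, `ToyTokenizer`, `toy_vocab` and `retrain` are hypothetical names, and added tokens are assumed to carry ids relative to the end of the model's vocab.

```rust
use std::collections::HashMap;

/// Helper for the sketch: assign ids 0, 1, 2, ... in the given order.
fn toy_vocab(tokens: &[&str]) -> HashMap<String, u32> {
    tokens
        .iter()
        .enumerate()
        .map(|(i, t)| (t.to_string(), i as u32))
        .collect()
}

/// Hypothetical stand-in for a model.
struct ToyModel {
    vocab: HashMap<String, u32>,
}

/// Hypothetical added vocabulary storing ids relative to the end of the model's vocab.
struct ToyAddedVocabulary {
    relative_ids: HashMap<String, u32>,
}

impl ToyAddedVocabulary {
    /// Only the model's vocab size is needed here, not the model itself.
    fn token_to_id(&self, token: &str, model_vocab_size: usize) -> Option<u32> {
        self.relative_ids
            .get(token)
            .map(|&rel| model_vocab_size as u32 + rel)
    }
}

/// Hypothetical stand-in for `TokenizerImpl`, which owns both parts.
struct ToyTokenizer {
    model: ToyModel,
    added_vocabulary: ToyAddedVocabulary,
}

impl ToyTokenizer {
    fn new(model_tokens: &[&str], added_tokens: &[&str]) -> Self {
        ToyTokenizer {
            model: ToyModel { vocab: toy_vocab(model_tokens) },
            added_vocabulary: ToyAddedVocabulary { relative_ids: toy_vocab(added_tokens) },
        }
    }

    /// Simulate re-training by replacing the model's vocab.
    fn retrain(&mut self, model_tokens: &[&str]) {
        self.model.vocab = toy_vocab(model_tokens);
    }

    /// The tokenizer checks the model first and hands only the vocab size on.
    fn token_to_id(&self, token: &str) -> Option<u32> {
        self.model.vocab.get(token).copied().or_else(|| {
            self.added_vocabulary
                .token_to_id(token, self.model.vocab.len())
        })
    }
}
```

In this sketch the added vocabulary never sees the model, so the id of an added token moves automatically when the model's vocab changes size.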

Both 1) & 3) seem reasonable to me... I would be super happy to hear about anything I have misunderstood, and to get your feedback @username_0 :-)

username_0: Awesome! There's no rush so take the time you need!

I'll add a few pieces of information that may help with the decision:

- Every `Model` is different, and so we can't rely on their internals. The only thing we have to deal with all of them is the interface described in the `trait Model`. Also, any `Model` can be used independently of the `TokenizerImpl` so it makes sense to keep all these methods.

- The `AddedVocabulary` exists because we can't really modify the vocabulary of the `Model` in many cases. For example with `BPE`, we can't add a new token because it would require us to add all the necessary merge operations too, but we can't do it. Same with `Unigram`. So the `AddedVocabulary` works by adding vocabulary on top of the `Model` and it has the responsibility of choosing which one to use.

So I think approach 1) is probably the most likely to work, but we'll have to stick with what's available on `trait Model`.
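
A rough sketch of approach 1) that only goes through a trait instead of a model's internals. `VocabView` is a hypothetical minimal interface standing in for whatever `trait Model` exposes; its method names, `ToyModel`, `toy_model` and `SketchAddedVocabulary` are assumptions made for illustration.

```rust
use std::collections::HashMap;

/// Hypothetical minimal interface; a stand-in for what `trait Model` provides.
trait VocabView {
    fn token_to_id(&self, token: &str) -> Option<u32>;
    fn vocab_size(&self) -> usize;
}

/// Toy model used only to exercise the sketch.
struct ToyModel {
    vocab: HashMap<String, u32>,
}

impl VocabView for ToyModel {
    fn token_to_id(&self, token: &str) -> Option<u32> {
        self.vocab.get(token).copied()
    }

    fn vocab_size(&self) -> usize {
        self.vocab.len()
    }
}

/// Build a toy model with ids 0, 1, 2, ... in the given order.
fn toy_model(tokens: &[&str]) -> ToyModel {
    let vocab = tokens
        .iter()
        .enumerate()
        .map(|(i, t)| (t.to_string(), i as u32))
        .collect();
    ToyModel { vocab }
}

/// Added tokens split as proposed in approach 1).
struct SketchAddedVocabulary {
    begin_added_tokens: HashMap<String, u32>, // absolute ids
    end_added_tokens: HashMap<String, u32>,   // ids relative to the end of the model's vocab
}

impl SketchAddedVocabulary {
    /// Register tokens at the end, with relative ids 0, 1, 2, ...
    fn with_end_tokens(tokens: &[&str]) -> Self {
        let end_added_tokens = tokens
            .iter()
            .enumerate()
            .map(|(i, t)| (t.to_string(), i as u32))
            .collect();
        SketchAddedVocabulary {
            begin_added_tokens: HashMap::new(),
            end_added_tokens,
        }
    }

    /// Resolve a token using only the trait methods of the model.
    fn token_to_id<M: VocabView>(&self, token: &str, model: &M) -> Option<u32> {
        if let Some(id) = model.token_to_id(token) {
            Some(id)
        } else if let Some(&id) = self.begin_added_tokens.get(token) {
            Some(id)
        } else {
            self.end_added_tokens
                .get(token)
                .map(|&rel| model.vocab_size() as u32 + rel)
        }
    }
}
```

How tokens at the beginning would coexist with the model's own ids, and how this state would be serialized, is left open here, as it is in the thread.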

One thing that is very important and might be tricky to handle (I didn't dig this at all for now) is the serialization/deserialization of the `TokenizerImpl` and `AddedVocabulary`. We need to keep all the existing tokenizers out in the wild to keep working, while probably having to add some info to handle whether a token is at the beginning or the end of the vocabulary. (Will probably be necessary to add some tests there.) I'll be able to help on this side of course! |

fullcalendar/fullcalendar | 147232840 | Title: Demos aren't working

Question:

username_0: Demo files are not working because of broken links in head section.

Status: Issue closed

Answers:

username_1: can you point me to the link where you are seeing this?

username_2: I have the same issue when "basic-views.html" loads the following files:

`<link href='../dist/fullcalendar.css' rel='stylesheet' />`

`<link href='../dist/fullcalendar.print.css' rel='stylesheet' media='print' />`

`<script src='../lib/moment/moment.js'></script>`

`<script src='../lib/jquery/dist/jquery.js'></script>`

`<script src='../dist/fullcalendar.js'></script>`

Attach image

username_0: Yes, the demo files don't work because the dist folder doesn't exist, so the only solution is to use the CDN.

http://fullcalendar.io/download/

username_1: yes, use the built download, or if you want to use the dev repo, you'll need to run the build scripts to build the dist folder |

aws/amazon-vpc-cni-k8s | 349678795 | Title: Minutely cronjob fails after swapping out workers with new ASG

Question:

username_0: We use blue/green worker groups to update our AMIs for patching and other fixes. I've noticed that when pods are evicted during the draining, that the CNI starts having problems and the error rate of the cronjob skyrockets unless I delete and re-add it. I see these errors on multiple nodes and not consistently. The job succeeds many times between these errors. This is seemingly a dupe of #59 but I am running v1.1.0 here which supposedly fixed this issue?

With `kubectl get events`, I see these errors:

```