| repo_name | issue_id | text |
|---|---|---|

bazelbuild/rules_rust | 351479687 | Title: rules_rust broken by bazel@HEAD

Question:

username_0: Hello dear rules owners,

I see that bazel@HEAD breaks rules_rust: https://buildkite.com/bazel/bazel-with-downstream-projects-bazel/builds/386#183d2d56-2b16-4010-9373-ef1b3b66b6eb

Would you mind taking a look? Thank you very much!

This is the announcement email:

We are very close to removing the in-memory //tools/defaults package in favor of using @bazel_tools//tools/jdk: and @bazel_tools//tools/cpp: instead.

The changes will be ready with the next release.

Motivation:

//tools/defaults was initially created as a virtual in-memory package that generates its content dynamically from the current configuration. It is no longer needed, because LateBoundAlias can resolve labels dynamically based on the configuration. Keeping //tools/defaults also hurts performance and adds unnecessary code complexity.

All references to //tools/defaults:* targets should be removed or replaced with the corresponding targets in the @bazel_tools//tools/jdk: and @bazel_tools//tools/cpp: packages.

Scope of changes and impact:

No targets in //tools/defaults will exist any more. If any of your BUILD or *.bzl files reference them, Bazel will fail to resolve those references.

Migration plan:

Please replace all occurrences:

//tools/defaults:jdk

-- by @bazel_tools//tools/jdk:current_java_runtime

-- and/or @bazel_tools//tools/jdk:current_host_java_runtime

//tools/defaults:java_toolchain

-- by @bazel_tools//tools/jdk:current_java_toolchain

//tools/defaults:crosstool

-- by @bazel_tools//tools/cpp:current_cc_toolchain

-- and/or @bazel_tools//tools/cpp:current_cc_host_toolchain

-- if you need a reference to libc_top, use @bazel_tools//tools/cpp:current_libc_top
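A small script like the one below could find and update references mechanically. It is only a sketch. It assumes labels appear as plain strings in BUILD and .bzl files. The `migrate` function and its regex are illustrative and are not part of Bazel. Where the announcement offers several options, the sketch uses the first one, so host variants and `current_libc_top` still need a manual choice. Labels with no listed replacement, such as the coverage targets that will no longer be supported, are reported rather than rewritten.

```python
import re

# First-choice replacements from the migration plan (host variants and
# current_libc_top must still be chosen by hand where they apply).
REPLACEMENTS = {
    "//tools/defaults:jdk": "@bazel_tools//tools/jdk:current_java_runtime",
    "//tools/defaults:java_toolchain": "@bazel_tools//tools/jdk:current_java_toolchain",
    "//tools/defaults:crosstool": "@bazel_tools//tools/cpp:current_cc_toolchain",
}

# Any label in the removed //tools/defaults package (simplified name charset).
DEFAULTS_LABEL = re.compile(r"//tools/defaults:[A-Za-z0-9_\-]+")


def migrate(text: str) -> tuple:
    """Rewrite known //tools/defaults labels; return (new_text, unresolved_labels)."""
    unresolved = []

    def substitute(match: re.Match) -> str:
        label = match.group(0)
        if label in REPLACEMENTS:
            return REPLACEMENTS[label]
        unresolved.append(label)  # no replacement listed, left unchanged
        return label

    return DEFAULTS_LABEL.sub(substitute, text), unresolved
```

For example, `migrate('data = ["//tools/defaults:jdk"]')` rewrites the label to `@bazel_tools//tools/jdk:current_java_runtime` and reports nothing as unresolved.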

These targets will not be supported any more:

//tools/defaults:coverage_report_generator

//tools/defaults:coverage_support |

prestodb/presto | 271401118 | Title: Create reusable type mapping tests for JDBC connectors

Question:

username_0: Type mapping between Presto and an external database accessed by a JDBC connector is done in `BaseJdbcClient` (`presto-base-jdbc`). While the implementation is shared, its correctness should be integration-tested against each database we have a connector for (currently: postgresql, sqlserver, mysql). This should be implemented as a reusable test (like `AbstractTestQueries`) that lets a concrete connector disable selected tests, e.g. for unsupported types.

Context https://github.com/prestodb/presto/pull/9285#issuecomment-341991161

Answers:

username_1: Also relevant for the Redshift connector.

username_2: [This and the 4 following tests](https://github.com/prestodb/presto/blob/master/presto-mysql/src/test/java/com/facebook/presto/plugin/mysql/TestMySqlDistributedQueries.java#L97) might be of help/inspiration :)

username_0: @username_2 thanks! Do you recall why the data mapping tests are distributed?

(That's probably why I didn't find them 🙂 )

username_2: No idea, probably because the original tests that were refactored into that DSL were distributed. As far as I see, there's no dependency on the tests being distributed. But maybe @username_3 will know? He started the CHAR/VARCHAR work.

username_3: I don't think there is any particular need for them to be distributed.

username_3: Also, if the tests don't need to be distributed, we could possibly extend `LocalQueryRunner` with a method similar to `Pair<MaterializedResult, Page> LocalQueryRunner::execute`. Then we could additionally test whether the connector writes the correct representation of data types. For instance, we could validate that char blocks returned by the connector don't have trailing spaces.

CC: @username_2

username_2: Don't the current tests check that?

username_3: I don't think so; they only check that the `MaterializedResult` is equal to the inserted value. I don't think that implies the Slice representation is correct, for instance.

username_0: Surely the current tests do not do this. Hence #9282 (the bug was not about incorrect semantics, but about incorrect cast implementation).

Status: Issue closed

|

harfbuzz/harfbuzz | 761149264 | Title: Compilation issue with GHS ARM compiler

Question:

username_0: Here is the error message:

"..\..\..\..\..\..\..\..\..\..\ptf_gfi_core\System\harfbuzz\Dev\LibSources\hb-open-type.hh", line 524: error #304:

no instance of overloaded function

"OT::SortedUnsizedArrayOf<Type>::as_array" matches the argument list

operator hb_sorted_array_t<const Type> () const { return as_array (); }

^

The C++ compiler (C++11-compatible) seems to be strict here: it does not find an `as_array` overload that takes no arguments.

As far as I understand, the cast operator should return `&arrayZ[0]`? So one fix could be to call_array (0)?

Status: Issue closed |

awslabs/aws-crt-nodejs | 876792669 | Title: electron error Error: node_modules/aws-crt/dist/bin/darwin-x64/aws-crt-nodejs

Question:

username_0: Hi,

I am trying to use `aws-iot-device-sdk-v2` in an electron app.

When I run `electron-forge start` I get this error:

```
App threw an error during load
Error: Cannot find module '/Users/jake/Code/mir-kiosk-electron/electron-kiosk/node_modules/aws-crt/dist/bin/darwin-x64/aws-crt-nodejs'
    at webpackEmptyContext (/Users/jake/Code/mir-kiosk-electron/electron-kiosk/.webpack/main/index.js:111944:10)
    at Object.<anonymous> (/Users/jake/Code/mir-kiosk-electron/electron-kiosk/.webpack/main/index.js:112436:91)
    at Object../node_modules/aws-crt/dist/native/binding.js (/Users/jake/Code/mir-kiosk-electron/electron-kiosk/.webpack/main/index.js:112445:30)
```

After some googling, I fixed this by adding the following to `webpack.main.config.js`.

It would be good if I didn't have to use this. I think the problem is that `binding.js` uses `__dirname`.

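```js
// webpack.main.config.js (nodeExternals comes from the webpack-node-externals package)
const nodeExternals = require('webpack-node-externals');

module.exports = {
  // ...rest of the existing configuration...
  node: {
    __dirname: true, // I
  },
  target: "node", // in order to ignore built-in modules like path, fs, etc.
  externals: [nodeExternals()],
};
```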

The problem is that I get a missing-module error when I run the packaged app. I guess `node_modules` is not packaged.

```
Uncaught Exception:
Error: Cannot find module 'macaddress'
```

Do you have any idea as to how to fix it?

https://github.com/username_0/mir-kiosk-electron/tree/master/electron-kiosk

Status: Issue closed

Answers:

username_1: @username_0 How did you solve this?

username_0: Hi,

I made a patch.

https://github.com/username_0/mir-kiosk-electron/blob/node-ipc/electronKiosk/patches/aws-crt%2B1.8.0.patch

https://github.com/awslabs/aws-crt-nodejs/pull/220/commits/44faba53400fb0e993665b0fad79eb4a435fce72

By the way, this does not work on Windows: `aws-crt` is not supported in Electron on Windows. |

linebender/norad | 830401812 | Title: Python bindings and mutation patterns

Question:

username_0: This is a quick sketch of what would be involved in adapting norad so that it would be usable from languages like Python, *as a drop-in replacement for existing libraries*; the particular challenge here is getting norad objects to have the same semantics ('reference semantics') as objects in those languages, and making that work with Rust's ownership model.

This is follow-up from the discussion in https://github.com/fonttools/fonttools/issues/1095.

I am going to make a bunch of assumptions about how some of the python tools work, so please correct me wherever I'm wrong!

My understanding of what we would want in a Python API is basically: everything is an 'object', with reference semantics. If I get a glyph from a layer, change its outline, and then get another copy of that glyph from the layer, the two glyphs will be identical; they point to the same underlying data.

This is not how things currently work in norad; in norad, mutation works through one of two mechanisms, which I'll call "borrowing" and "check-out/check-in":

- *borrowing*: This is how layers currently work in norad. To mutate a layer, you call a method that returns a reference to a layer object, and you can mutate this layer; however you *cannot* hold on to a copy of this layer, or really pass it anywhere else.

- *check-in/check-out*: This is how glyphs currently work: *norad doesn't even let you get a mutable reference to a glyph, at all*. In norad, if you want to mutate a glyph, you get a glyph from a layer, modify it, and then add it back to that layer. You can think of this as a check-out/check-in mechanism.
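To make the difference concrete, here is a plain-Python model of reference semantics next to check-out/check-in. It is only an illustration. `Glyph`, `RefLayer` and `CheckoutLayer` are hypothetical names, not norad's API, and copying on `get` stands in for whatever norad does internally.

```python
import copy
from dataclasses import dataclass, field


@dataclass
class Glyph:
    name: str
    outline: list = field(default_factory=list)


class RefLayer:
    """Reference semantics (the Python expectation): every lookup returns the same object."""

    def __init__(self):
        self._glyphs = {}

    def add(self, glyph):
        self._glyphs[glyph.name] = glyph

    def get(self, name):
        return self._glyphs[name]


class CheckoutLayer:
    """Check-out/check-in: get() hands out a copy; edits only land after insert()."""

    def __init__(self):
        self._glyphs = {}

    def insert(self, glyph):
        self._glyphs[glyph.name] = copy.deepcopy(glyph)

    def get(self, name):
        return copy.deepcopy(self._glyphs[name])
```

With `RefLayer`, appending to `layer.get("a").outline` is visible on the next `get`. With `CheckoutLayer`, it is not visible until the modified glyph is inserted again.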

### A design to support bindings

I think that trying to make norad *as it is currently written* fit into the Python model will be tricky, but I think there's a reasonably straightforward answer: a separate set of types and interfaces explicitly designed to work with Python. This should let us continue to share all of the base types and parsing/validation/serialization logic, while letting us build two separate APIs that respect the two distinct use cases.

So basically: we add a python-specific wrapper, in rust, for each type, like:

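```rust
use std::cell::RefCell;
use std::rc::Rc;

pub struct PyUfo {
    meta: PyMetaInfo,
    font_info: PyFontInfo,
    // ...etc
}

pub struct PyLayer {
    // `Map` stands for whichever map type the layer uses for its glyphs.
    glyphs: Rc<RefCell<Map<String, PyGlyph>>>,
    // ...etc
}

pub struct PyGlyph {
    inner: Rc<RefCell<Glyph>>,
}
```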

etcetera.

Note: This assumes that the glyph is a 'leaf' type, that is, it is the finest-grained object that you're allowed to mutate and expect those mutations to show up elsewhere. This might not be the case; for instance, you might expect to be able to get a `Contour` out of a glyph, change its properties, and have those changes reflected everywhere. In that case we would also need a `PyContour` type, and `PyGlyph` would look more like the `Glyph` that's already in norad.

You can mostly ignore the `Rc<RefCell<_>>` bit. `Rc` means that we're using a `R`eference `c`ounted pointer, and `RefCell` means that access to the inner data is not checked by Rust's borrow checker at compile time; the borrowing rules are enforced at runtime instead.

(`Rc` + `RefCell` assumes that this object will not be shared between OS threads, which seems like a reasonable assumption for Python; if we *do* want that behaviour, we would instead use `Arc` + `Mutex`, which make our reference counts and data access thread-safe.)

### Borrowing problems

One possible concern with this approach involves borrowing expectations; the rule with `RefCell` is that when you actually want to *mutate* the data, you acquire a kind of 'lock'. If this object is already borrowed, you can't get that lock. In practice I think we can avoid this completely by ensuring all of that acquire/release happens on the rust side; I'd have to look into this a bit, though, to make sure. It might mean we have to write something to generate the python bindings ourselves, to ensure that things like setters and getters are doing that borrowing under the covers.

If we *do* have to expose this somehow, what we would do is to just throw a python exception if something was already borrowed. I was initially thinking this would be a larger part of the design, as a sort of safety valve; when folks migrated existing python code to this library they might hit some new exceptions, but I actually think we can probably avoid this altogether?
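In Rust, `RefCell::try_borrow` and `RefCell::try_borrow_mut` return an error instead of panicking, and a binding could turn that error into a Python exception. The dynamic rule itself, any number of shared borrows or exactly one mutable borrow, can be modelled in plain Python. `RefCellLike` and `BorrowError` are hypothetical names used only for this sketch.

```python
from contextlib import contextmanager


class BorrowError(RuntimeError):
    """Raised instead of panicking when a conflicting borrow is requested."""


class RefCellLike:
    """Python model of RefCell's runtime rule: many readers or one writer."""

    def __init__(self, value):
        self._value = value
        self._readers = 0
        self._writing = False

    @contextmanager
    def borrow(self):
        if self._writing:
            raise BorrowError("already mutably borrowed")
        self._readers += 1
        try:
            yield self._value
        finally:
            self._readers -= 1

    @contextmanager
    def borrow_mut(self):
        if self._writing or self._readers:
            raise BorrowError("already borrowed")
        self._writing = True
        try:
            yield self._value
        finally:
            self._writing = False
```

If all acquire/release happens on the Rust side, as suggested above, Python code would only ever see this exception in the rare conflicting case.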

### other thoughts

*an alternative design based on proxy objects*: I think if we want a drop-in replacement for an existing tool written in python, something like what I describe here will be the best route. There are options, like having 'proxy objects' that just hold a reference to the font or layer as well as a method for mapping mutations on themselves to mutations on the shared object. This honestly has a certain nerdy appeal, especially since we could do cool stuff like having a `def delete:` on a `Glyph` object that removes it from the layer and updates the layer_contents, but I think it's probably a bit more complicated and it's a bit less clear to me how well it would work, although I'm more curious as I end this paragraph than I was when I began?

### next steps

This is intended as a sketch, and an actual design will require a bit more thought and research. I'm going to hold off on that work until I have a better sense of how much of a priority this is, and whether it's my priority or someone else's. If @username_1 is interested in doing the work, I'm happy to offer whatever advice and guidance I can. Otherwise, if @davelab6 thinks this is worth a week or two of my time, I'm confident we can get something working pretty quickly; the only part I'm unsure of is how to generate the Python bindings in a way that plays nicely with this interior-mutability pattern.

Answers:

username_1: Another suggestion that Raph had was that the only object you expose to Python is the font, and you pass around a path key to access or mutate deeper structures. So changing the X position of a point is actually done by the moral equivalent of `font.set_value("public.glyphs/a/2/1/x", -5)`.

That may help to put all the locking in the same place.
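Here is a minimal Python sketch of the path-key idea. It assumes the font is held as nested dicts and lists. It also reads the example path as layer / glyph / contour index / point index / attribute, which is an assumption. `Font`, `get_value` and `set_value` are hypothetical names. The single lock is the one place where access is serialized.

```python
import threading


class Font:
    """Hypothetical single entry point: every read or write goes through a path."""

    def __init__(self, data):
        self._data = data
        self._lock = threading.Lock()  # all locking happens here, nowhere else

    @staticmethod
    def _step(node, segment):
        # Lists are indexed by position; everything else is treated as a mapping.
        return node[int(segment)] if isinstance(node, list) else node[segment]

    def get_value(self, path):
        with self._lock:
            node = self._data
            for segment in path.split("/"):
                node = self._step(node, segment)
            return node

    def set_value(self, path, value):
        with self._lock:
            *parents, last = path.split("/")
            node = self._data
            for segment in parents:
                node = self._step(node, segment)
            key = int(last) if isinstance(node, list) else last
            node[key] = value
```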

username_0: @username_1 that sounds like approximately what I was thinking about with 'proxy objects', as an alternative design, although I probably could have expressed it more clearly. :)

username_1: Incidentally we've since discovered that UFO loading is not the bottleneck we thought it was (yay profiling!) so I would not suggest this was a very high priority... What I have with iondrive (creating ufoLib2 objects in Rust) is fast enough for my needs.

username_0: okay, sounds good! |

Icinga/ipl-html | 816261461 | Title: SelectElement default value is ignored

Question:

username_0: When creating/adding a form element of type `select`, the order of options is significant. The order should **not** be significant, i.e. it shouldn't matter if `value` is passed in first.

The following will ignore the default value:

```php
$this->addElement('select', 'name', [
    'value'   => 'bar',
    'options' => [
        'foo' => 'foo',
        'bar' => 'bar'
    ]
]);
```

The following will pre-select `bar` as expected: (Note the different placement of the `value` attribute)

```php
$this->addElement('select', 'name', [
    'options' => [
        'foo' => 'foo',
        'bar' => 'bar'
    ],
    'value'   => 'bar'
]);
```
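One way to make the order irrelevant is to always apply `options` before `value`, whatever order the caller used. The toy Python model below shows that idea. For illustration only, it assumes that a value is kept only when it matches an already-known option. That is one way the reported order dependence could arise, not a description of ipl-html's internals, and all names are hypothetical.

```python
class SelectModel:
    """Toy select element: a value is kept only if it matches a known option."""

    def __init__(self):
        self.options = {}
        self.selected = None

    def set_options(self, options):
        self.options = dict(options)

    def set_value(self, value):
        # The assumed pitfall: with no options yet, the value is dropped.
        self.selected = value if value in self.options else None


def build_select(attributes):
    """Apply 'options' before everything else, whatever order the caller used."""
    select = SelectModel()
    # sorted() is stable, so the remaining attributes keep their relative order.
    for key in sorted(attributes, key=lambda k: 0 if k == "options" else 1):
        if key == "options":
            select.set_options(attributes[key])
        elif key == "value":
            select.set_value(attributes[key])
    return select
```

Both attribute orders from the two snippets above then pre-select `bar`.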

Status: Issue closed |

CollaboratingPlatypus/PetaPoco | 139416988 | Title: Problem when using bracket on order by clause

Question:

username_0: I have a column named `User` in my table and I need to use it in my ORDER BY clause, but `USER` is a reserved word in SQL Server, so I need to use brackets in my SQL query.

My query looks like this:

```sql
SELECT [Level], [Description], [CreatedAt], [User]
FROM MyTable
ORDER BY [User]
```

When I try to execute a query like this in PetaPoco, I get an exception because of the brackets in the ORDER BY clause; if I execute it without brackets, the result is not what I expect.

Answers:

username_1: Until it's fixed, maybe just use a non-reserved alias, like `SELECT [Level], [Description], [CreatedAt], [User] AS MyUser FROM MyTable ORDER BY MyUser`.
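The alias workaround can be tried quickly with Python's built-in `sqlite3`. SQLite is used here only because it ships with Python and accepts SQL Server-style `[bracket]` quoting. This does not exercise PetaPoco's own query handling or SQL Server itself, and the rows are made-up sample data.

```python
import sqlite3


def fetch_ordered_by_user(conn: sqlite3.Connection) -> list:
    """Run the aliased form of the query: ORDER BY uses MyUser, not [User]."""
    return conn.execute(
        "SELECT [Level], [Description], [CreatedAt], [User] AS MyUser "
        "FROM MyTable ORDER BY MyUser"
    ).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE MyTable ([Level] TEXT, [Description] TEXT, [CreatedAt] TEXT, [User] TEXT)"
)
conn.executemany(
    "INSERT INTO MyTable VALUES (?, ?, ?, ?)",
    [
        ("Info", "started", "2016-03-01", "carol"),
        ("Warn", "slow query", "2016-03-02", "alice"),
        ("Error", "failed", "2016-03-03", "bob"),
    ],
)
```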

Status: Issue closed

username_2: Added an integration test. It confirms this has already been fixed. |

dart-lang/language | 888921366 | Title: How does static metaprogramming handle incomplete code for auto-complete?

Question:

username_0: We discussed this in a meeting, but I wanted to capture it in an issue so we don't forget.

Currently, analyzer is happy to analyze incomplete or incorrect programs in order to make auto-complete work while the user is in the middle of typing code. For example:

<img width="543" alt="Screen Shot 2021-05-11 at 3 23 55 PM" src="https://user-images.githubusercontent.com/46275/117892096-f154a300-b26c-11eb-9d80-1cf5e9bea219.png">

Here, even though the class is syntactically incorrect, it can still type check the class enough to show `bar()` as an auto-complete option. If something like macros are applied to that class, though, this can break down. If the analyzer/compiler only applies macros when the code is in a valid state, then auto-complete will stop working while they are typing in a class that uses macros. If analysis can apply macros to incomplete code, then we need to define how that works or what that means.

One idea is that the language basically ignores the issue. It only specifies the behavior of applying macros to correct programs. When the user is in the middle of typing code, analyzer takes their current incomplete program and discards whatever incomplete stuff it needs to get to a valid source file. Then it runs the macros and type checks that. Maybe something like that would work.

Whatever we do, it's critical that static metaprogramming doesn't degrade the IDE user experience.

@username_1 @username_4

Answers:

username_1: @devoncarew, @username_5 and I recently had the first in a series of meetings about some of the UX issues around static metaprogramming and I'm in the process of writing up a doc with what we had thought about. Our intent is to share it with you as soon as it's reasonably coherent as a way of furthering discussions like this. Code completion is one of the topic areas, and I'll be sure to add thoughts about incomplete code.

username_2: Definitely looking forward to @username_1's doc. As someone who's been hacking on the analyzer for 7 years, I have a bunch of thoughts on this topic too. I'll try to give my personal perspective here briefly; I'm happy to follow up off-line if that would be useful.

The issue IMHO is better characterized as how to handle *code with static errors* rather than *incomplete* code. (Some incomplete code is free of static errors and that code can be analyzed normally; some code with static errors isn't incomplete but actually has extra junk, or stuff that's simply wrong (like code that fails to type check) and we need to handle that too). So really what we are talking about here is error recovery.

I like the simplicity of Bob's suggestion that we discard code until we reach a valid program, but I think in order to reduce the amount of discarding we need to do, we will need some escape hatches to allow invalid code to be represented. As a trivial example, if a method's return type is misspelled, we shouldn't just discard that method from the model; the code generator should still be able to see that the method exists. But if it queries its return type, it should either get `dynamic`, or a special "invalid type" with some well-defined behaviors. Personally I favor the "invalid type" approach because it makes it easier to avoid cascading errors, but that may be more implementation effort because currently the analyzer uses `dynamic`.

Similarly, if we allow code generators to peek at the expressions and statements inside a method declaration, it would be nice to have "invalid statement" and "invalid expression" nodes so that we can show the code generator as much of the method body as possible without forcing it to deal directly with the invalid code.
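A toy Python model may help show what an "invalid type" node buys a code generator. In this model a method with a misspelled return type still exists, and only its return type is replaced by a sentinel that the generator can handle gracefully. The introspection classes here are hypothetical and are not the Dart analyzer's API. The generator emits Dart-like text purely for illustration.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class NamedType:
    name: str


@dataclass(frozen=True)
class InvalidType:
    """Stands in for a type that could not be resolved, e.g. a misspelling."""
    source_text: str


@dataclass(frozen=True)
class MethodInfo:
    name: str
    return_type: Union[NamedType, InvalidType]


def generate_wrapper(method: MethodInfo) -> str:
    """A toy code generator that degrades gracefully on invalid types."""
    if isinstance(method.return_type, InvalidType):
        # The method is still visible; only its return type is unknown, so the
        # generator omits the annotation instead of failing or skipping it.
        return f"wrapped_{method.name}() => {method.name}();"
    return f"{method.return_type.name} wrapped_{method.name}() => {method.name}();"
```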

A particularly tricky area is what to do with loops in class hierarchies. The language spec forbids the class hierarchy from looping in order to avoid non-termination of algorithms like type inference, subtype checking, etc. The analyzer's implementation of these algorithms has been carefully written to avoid non-termination even in the presence of class hierarchy loops. I don't think we can expect writers of code generators to exercise the same care, and if we're going to be running user-supplied code generators during analysis, it's really important to avoid non-termination issues. So I think we should ensure that when their code generator queries the code structure, it sees a class hierarchy without loops. We *could* handle this by discarding "extends" and "implements" clauses until no loops remain, but I think this would lead to a poor user experience because code generators often have expectations about the class structure of the code they're processing, and will issue errors if they don't see the class structure they expect. It would be really confusing if our efforts to "fix" class hierarchy loops caused code generators to output nonsense error messages. I think a better approach here is the "invalid type" approach: if there's a loop in the class hierarchy, the analyzer should synthetically change one of the "extends" or "implements" clauses to e.g. "extends <invalid-type>"; that way either the code generator can choose to suppress its error message, or, even if it doesn't and the error message makes it to the user, the name "invalid-type" should give the user better clues about what went wrong.

Side question: in order to fix loops in the class hierarchy, the analyzer will need to make arbitrary choices about which edge to cut. How consistent should its choices be? IMHO it would be best for it to be deterministic and independent of the order of queries from the code generator.
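One deterministic way to choose the edges to cut is to compute all cuts once, before any code generator runs. The sketch does this with a depth-first search over classes in sorted name order, following supertypes in declaration order. Every edge that would close a loop (a DFS back edge) is replaced with `<invalid-type>`, and removing all back edges leaves an acyclic hierarchy. The fixed visiting order is an assumption; any fixed order would make the result independent of query order. The names are hypothetical.

```python
INVALID = "<invalid-type>"


def break_hierarchy_loops(supertypes: dict) -> dict:
    """Return a copy of {class: [supertypes]} with loop-closing edges set to INVALID.

    Classes are visited in sorted order and supertypes in declaration order,
    so the same input always yields the same cuts.
    """
    result = {name: list(supers) for name, supers in supertypes.items()}
    state = {}  # name -> "visiting" | "done"

    def visit(name: str) -> None:
        state[name] = "visiting"
        for i, sup in enumerate(result[name]):
            if state.get(sup) == "visiting":
                result[name][i] = INVALID  # this edge would close a loop
            elif sup in result and sup not in state:
                visit(sup)
        state[name] = "done"

    for name in sorted(result):
        if name not in state:
            visit(name)
    return result
```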

One final note: thus far, we have left the design of error recovery pretty much entirely up to the analyzer team, and kept any discussion of error recovery out of the spec. I think that's a really good general principle because it allows the analyzer team more creative freedom and lets them pivot faster when they learn things about how error recovery works in real-world scenarios. But I think it would be good to classify our errors into some broad categories and specify what categories of invalid code (if any) user-provided code should be expected to deal with. I think it should be something like: user-provided code generators won't ever see syntax errors, class hierarchy loops, or self-referential typedefs. But they may see special "invalid type" nodes. They may also see: unimplemented abstract methods, type mismatches between a class method and the method it overrides, and naming conflicts (e.g. classes with duplicate names). Also, when looking inside method bodies, they may see "invalid expression" and "invalid statement" nodes, unterminated switch cases, mismatched return types, use of uninitialized variables, and failure to return a value from a method with a non-nullable return type.

(That's just my first impression though. I'm fairly open to discussion, especially on that last sentence about method bodies)

username_1: That's an interesting recovery strategy, but it's not consistent with the approach used by the analyzer for other kinds of errors. I'm not saying that it has to be consistent, just making an observation. I think it would be informative to try to write a couple of macros as a concrete way of thinking about which recovery approach would provide the best UX for both end users and for macro authors.

username_0: This is a great discussion. Trying to synthesize your comments, how does something like this sound:

* Tools may run macros even on code that contains some kinds of errors. It's entirely up to tools to choose whether, and in which circumstances, they do. The language doesn't specify the boundary.

* But, if the code isn't valid, it will never end up being run. The macros are only invoked in order to provide a better IDE experience.

* The API that macros use to work with code has a notion of erroneous pieces of code. Introspecting over code in a macro may yield "invalid type", "invalid statement", etc. Macro authors can choose to handle those gracefully to provide a better experience when the macro is invoked on invalid code. If they don't, the macro may fail, which essentially means it generates some cascading compile errors. It's up to tools to decide whether to hide those.

In other words tools *may* eagerly run macros on ill-defined code. The API gives macro authors affordances to handle that gracefully if they so choose. But the resulting code never gets actually executed, so the language doesn't have to get in the business of specifying its behavior.

Does that seem like a reasonable set of trade-offs?

username_3: Why will it stop working?

Consider a macro that generates a data class. Let's assume the input for a macro is declared as a "template class", which the analyzer easily recognizes as a template (as opposed to normal code).

```dart
@dataClass
template class Foo {
  int x;
  String y;
  // etc.
}
```

Further, suppose we already have a state with a valid template. The macro has been called already, and the resulting code is known. What happens when we move the cursor into the definition of the template and start editing?

The straightforward answer is: nothing. The macro doesn't get called when the code of the template is incomplete. But the previous results of a macro are not discarded - at least until the cursor leaves the template block. So all suggestions work as if nothing had happened. If the user exits the block leaving the template in a bad state - I'm not sure even in this case the results of prior macro invocation should be invalidated (thus causing an avalanche of other errors), but maybe.

However, if the macro gets called with incomplete code (as proposed) and has to decide how to handle the errors "gracefully" - it's painful even to think of how the macro has to be coded to behave predictably. The only possibility I see for the macro is to memorize the input and output of the last correct invocation and somehow figure out what has changed and how this affects the result - but why bother? There's no guarantee that this behavior will be more intuitive than the straightforward algorithm outlined above.
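The "keep the last good result" behaviour described above could look roughly like the Python sketch below. `MacroResultCache` is a hypothetical name, and `is_valid` and `macro` stand in for the analyzer's validity check and the macro invocation. While the template is incomplete, the macro is not called, and the previous output keeps serving completions.

```python
from typing import Callable, Optional


class MacroResultCache:
    """Serves the last output produced from a valid input."""

    def __init__(self, macro: Callable[[str], str], is_valid: Callable[[str], bool]):
        self._macro = macro
        self._is_valid = is_valid
        self._last_output: Optional[str] = None
        self.calls = 0  # how many times the macro actually ran

    def output_for(self, source: str) -> Optional[str]:
        if self._is_valid(source):
            self.calls += 1
            self._last_output = self._macro(source)
        # Invalid (e.g. half-typed) input: keep the previous result unchanged.
        return self._last_output
```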

username_1: Yep, that's part of the proposal I'm writing up.

username_4: There is actually one case which I think might fall into the same bucket as what we are discussing here. It is the case where a macro introspects on an API, but the API relies on type inference to be filled in, and the macro itself needs to be run in order for that type inference to work. Consider this class:

```dart
class Thing {
  final name = someMacroGeneratedField;
}
```

If the macro generating `someMacroGeneratedField` introspects on `Thing` and asks for the type of `name`, what does it get?

The issue is actually a lot more subtle/interesting than even this contrived example, because even if `someMacroGeneratedField` can be resolved to some identifier, some other macro could run and change the identifier that should have been resolved to by shadowing it.

This is a general problem that we are working through right now but it seems likely that macros will need to at least have to deal with introspecting on apis where the type is _not yet known_, and never will be whenever that macro runs, because it hasn't been confidently inferred yet.

My assumption at this point is some macros would want to fail and require a type on the left hand side (although this is not ideal, I don't see a good workaround at this time). Other macros might be ok with just treating it as dynamic. I think we need to give them some special notion of an "unknown type" to choose how to handle this.

username_1: I think there's an interesting requirement implied by that statement: while applying the macro M, the introspection API must reveal a state that's consistent with the state the code would be in if the macros were being run for the first time. In other words, if the analyzer caches any macro results and then has to re-apply M, the introspection API can't reveal any code in the cache that would have been generated after M was run the first time.

@devoncarew @username_5

username_5: The requirement that every time is like the first time looks reasonable to me. |

BragaAndrei/testing | 548562966 | Title: The font in the news bar does not work properly

Question:

username_0: Description

Defect nr.ID: 001

Title: Font Text Issues

macOS Mojave version 10.14.6, Safari Version 12.1.2 (14607.3.9)

Pre-condition: The font is shown to the customer in a glitchy way

Steps to reproduce:

1. Go to www.airport.md

2. Scroll down to the news bar

Actual result: The news text font is shown as glitchy

Severity: Low

Priority: 1

Environment

macOS Mojave version 10.14.6, Safari Version 12.1.2 (14607.3.9)

<img width="980" alt="Screen Shot 2020-01-11 at 13 00 17" src="https://user-images.githubusercontent.com/59793996/72218719-c0644100-3546-11ea-9324-60a477fc1e48.png">

Status: Issue closed |

Fatal1ty/mashumaro | 815895565 | Title: Inconsistent checks for invalid value type for str type

Question:

username_0: If a class based on `DataClassDictMixin` has a field of type `str`, it will construct instances from data that contains values of other types for that field, including numbers, lists, and dicts. However, fields of other types, e.g. `int`, do reject incompatible values. I'm not sure whether this is intentional and I'm missing something here, but it seems like unexpected and undesirable behaviour when you want the input data to be validated.

The following example only throws an error on the very last line:

```python
from dataclasses import dataclass

from mashumaro import DataClassDictMixin


@dataclass
class StrType(DataClassDictMixin):
    a: str


# As reported, none of these raise, although the values are not strings.
StrType.from_dict({'a': 1})
StrType.from_dict({'a': [1, 2]})
StrType.from_dict({'a': {'b': 1}})


@dataclass
class IntType(DataClassDictMixin):
    a: int


# Only this call raises.
IntType.from_dict({'a': 'blah'})
```
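As a stopgap, a `__post_init__` type check can reject mismatched values. The sketch below uses only the standard library. `StrictTypesMixin` is a hypothetical name. It checks only annotations that are plain classes such as `str` or `int` and skips generics and string annotations. Whether it fires through mashumaro's `from_dict` depends on `from_dict` building instances via the generated `__init__`, which has not been verified here.

```python
import dataclasses
import typing


class StrictTypesMixin:
    """Hypothetical mixin: type-check plain-class fields after __init__ runs."""

    def __post_init__(self):
        for fld in dataclasses.fields(self):
            expected = fld.type
            # Skip string annotations (e.g. under `from __future__ import
            # annotations`) and generics such as list[int] or Optional[str].
            if typing.get_origin(expected) is not None or not isinstance(expected, type):
                continue
            value = getattr(self, fld.name)
            # bool is a subclass of int, so reject it explicitly for int fields.
            wrong_bool = expected is int and isinstance(value, bool)
            if wrong_bool or not isinstance(value, expected):
                raise TypeError(
                    f"{type(self).__name__}.{fld.name}: expected "
                    f"{expected.__name__}, got {type(value).__name__}"
                )


@dataclasses.dataclass
class StrictStr(StrictTypesMixin):
    a: str


@dataclasses.dataclass
class StrictInt(StrictTypesMixin):
    a: int
```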

Answers:

username_1: There is no strict validation at the moment for the sake of performance. It's not needed in many cases but I'm going to add optional validation. It will be turned on in the field or config options. |

Vulnerator/Vulnerator | 373638609 | Title: Multiple CKL Files do not show up in the Vulnerator Excel Report

Question:

username_0: The Vulnerator tool does not import all finding details into the Vulnerator spreadsheet, which should show multiple entries from several CKL files.

I attempted to import 3 separate CKL files generated from STIG Viewer into Vulnerator to consolidate my findings into one report. The Vulnerator spreadsheet only logged one of the three entries in the mitigation column, leaving the other two out.

For example, if I have three CKL files and all three contain separate comments and finding entries for the same CCI, Vulnerator randomly picks one of the three files and imports only its data into the spreadsheet, rather than importing all three entries into the report.
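The expected behaviour, keeping every file's entry for a CCI instead of one arbitrary entry, can be sketched as a simple group-by. The sketch assumes the findings have already been parsed out of the CKL files into `(source_file, cci, comment)` records. That record shape and the function name are hypothetical, Vulnerator's own parser is not shown, and the CCI and comments in the example are sample data.

```python
from collections import defaultdict
from typing import Dict, Iterable, NamedTuple


class Finding(NamedTuple):
    """One checklist entry after parsing (hypothetical record shape)."""
    source_file: str
    cci: str
    comment: str


def merge_by_cci(findings: Iterable[Finding]) -> Dict[str, str]:
    """Combine the entries for each CCI from every file instead of keeping one."""
    merged = defaultdict(list)
    for finding in findings:
        merged[finding.cci].append(f"{finding.source_file}: {finding.comment}")
    # Input order is preserved, so files appear in the order they were imported.
    return {cci: "\n".join(entries) for cci, entries in merged.items()}
```

For three files that each comment on `CCI-000366`, the report cell would then contain all three comments, each prefixed with its file name.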

Answers:

username_1: @username_0 I've updated how vulnerabilities are parsed for v6.2.0, and am currently verifying the accuracy of all data now - I will ensure that all relevant information is captured for every item in the checklist.

Thank you for the bug report! |

godotengine/godot | 403148414 | Title: Inspector dock changes size when selected

Question:

username_0: <!-- Please search existing issues for potential duplicates before filing yours:

https://github.com/godotengine/godot/issues?q=is%3Aissue

-->

**Godot version:**

5b5db08

**Issue description:**

When clicking the Inspector dock, it changes its size. This affects only the Inspector dock, regardless of where it is docked.

Answers:

username_1: Might be related to #25281.

username_2: I'm trying to fix [#25281](https://github.com/godotengine/godot/issues/25281) and I found that the problem might be related to TabContainer resizing. In tab_container.cpp there are these lines:

```cpp
for (int i = 0; i < tabs.size(); i++) {
    Control *c = tabs[i];
    if (!c->is_visible_in_tree())
        continue;
    Size2 cms = c->get_combined_minimum_size();
    ms.x = MAX(ms.x, cms.x);
    ms.y = MAX(ms.y, cms.y);
}
```

Not skipping invisible tabs solves this for me:

```cpp
for (int i = 0; i < tabs.size(); i++) {
    Control *c = tabs[i];
    Size2 cms = c->get_combined_minimum_size();
    ms.x = MAX(ms.x, cms.x);
    ms.y = MAX(ms.y, cms.y);
}
```
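A small Python model of the loop above may help explain the symptom. Assume, as in a tab container, that only the selected tab is visible. Skipping hidden tabs then makes the computed minimum size depend on which tab is selected, while including every tab keeps it stable. `Tab`, `container_min_size` and `select` are hypothetical names, the running maximum is assumed to start at zero, and the sizes are arbitrary.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Tab:
    min_size: Tuple[int, int]  # (width, height)
    visible: bool


def container_min_size(tabs: List[Tab], include_hidden: bool) -> Tuple[int, int]:
    """Per-axis maximum of the tabs' minimum sizes, like the C++ loop above."""
    width = height = 0
    for tab in tabs:
        if not include_hidden and not tab.visible:
            continue  # the original loop skips tabs that are not visible
        width = max(width, tab.min_size[0])
        height = max(height, tab.min_size[1])
    return (width, height)


def select(tabs: List[Tab], index: int) -> None:
    """Only the current tab is visible."""
    for i, tab in enumerate(tabs):
        tab.visible = i == index
```

Because the visible-only result changes with the selection, the container's minimum size also changes when the selected tab changes. That is consistent with the observation that including every tab hides the symptom rather than addressing why the sizes differ.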

However, I think this only makes the symptoms go away; I need some feedback to go on.

username_3: Related, if not duplicate, to #24572

username_4: Still happens in Godot 3.1 RC1

username_2: Yes, I made a pull request with a possible fix, but I think it was kicked to 3.2.

username_5: @username_2 where is your pull request? I can't find it.

username_2: Here!

https://github.com/godotengine/godot/pull/25353

Status: Issue closed

|

angular/angular | 1165882638 | Title: Tour of Heroes Docs: Missing CSS Styles in src/styles.css

Question:

username_0: ### Description

Tour of Heroes (TOH) Part 0 is missing button styles in `src/styles.css`. As a result, when developers go through the TOH, button CSS styles that are present in the live examples in src/styles.css are not present in the code snippets at the end of the lesson. This is most apparent in [TOH part-2](https://angular.io/tutorial/toh-pt2), where a list of buttons is shown without the expected styles.

### What is the affected URL?

https://stackblitz.com/edit/angular-ivy-3vttek?file=src%2Fstyles.css

### Please provide the steps to reproduce the issue

1. Create a new project as in TOH part 0 and apply the code snippets to it

2. Follow the steps in TOH part 1 and apply the code snippets

3. Follow the steps in TOH part 2 and apply the code snippets

4. View the rendered heroes.component.html: the buttons lack the expected styling

Minimal Reproduction

https://stackblitz.com/edit/angular-ivy-3vttek?file=src%2Fstyles.css

### Please provide the expected behavior vs the actual behavior you encountered

**Expected**: heroes.component.html has the expected button styles applied, as seen in the [live example](https://stackblitz.com/run?file=src/app/heroes/heroes.component.html) for TOH part 2

**Actual**: heroes.component.html does not have button styles applied.

### Please provide a screenshot if possible

Actual

<img width="331" alt="TOH-p2-button-styles-actual" src="https://user-images.githubusercontent.com/20045743/157781153-7268ae58-6ac6-4110-a9a8-a2420ce76340.png">

Expected

<img width="331" alt="TOH-p2-button-styles-expected" src="https://user-images.githubusercontent.com/20045743/157781181-0bdf8ea3-80aa-45a2-a935-1bcaf9f953f2.png">

### Please provide the exception or error you saw

```true

N/A

```

### Is this a browser-specific issue? If so, please specify the device, browser, and version.

```true

N/A

```

Answers:

username_1: There is already a dedicated section in TOH part 2 explaining how to add styles after adding buttons: https://angular.io/tutorial/toh-pt2#style-the-heroes

username_0: @username_1 It explains how to add, but not what to add. Giving a code snippet provides an example for this section and reinforces differences between component-styles and global-styles.

I'd be happy to update this issue to instead suggest a code snippet addition to the Final code review in TOH part 2, rather than TOH part 0, since that flows better with the rest of the documentation.

username_1: The "what to add" part is at the end of the section (with a related explanation of component vs. global styles):

```

Open the heroes.component.css file and paste in the private CSS styles for the HeroesComponent. You'll find them in the [final code review](https://angular.io/tutorial/toh-pt2#final-code-review) at the bottom of this guide.

```

About:

```

I'd be happy to update this issue to instead suggest a code snippet addition to the Final code review in TOH part 2

```

The code snippet is already in the final code review part 2

username_1: Oh, I see, sorry. I thought it was about button styles from the component not being featured in part 2.

Then I would be in favor of fixing it in part 0, as such styles are details beginners shouldn't have to care about. This tutorial should let them focus on the Angular framework itself.

username_0: No worries friend, I could have explained it better.

And agreed 👍🏻

Status: Issue closed

|

amagovpt/kit-selo | 533357570 | Title: Unnecessary reference to specific software

Question:

username_0: The text suggests that using that specific application is necessary to meet that requirement. PDF is an open standard. Testing a PDF's accessibility therefore does not depend on using any particular software. Nor is it limited to how the copy function behaves in that same software. |

quasarframework/quasar | 243213913 | Title: validation for form in the popup Dialog

Question:

username_0: feature suggestion/request

When I create a Dialog with form components, such as:

```js
import { Dialog, Toast } from 'quasar'

Dialog.create({
  title: 'Prompt',
  form: {
    name: {
      type: 'text',
      label: 'Textbox',
      model: ''
    },
    mobilephone: {
      type: 'text',
      label: 'Textbox',
      model: ''
    },
  },
  buttons: [
    'Cancel',
    {
      label: 'Ok',
      handler (data) {
        Toast.create('Returned ' + JSON.stringify(data))
      }
    }
  ]
})
```

Can I do some validation for the mobilephone number?

I need some way to use Vuelidate. Alternatively, can I pass a @blur event handler to mobilephone?

Thanks very much.

Status: Issue closed

Answers:

username_1: Hi,

Check http://beta.quasar-framework.org/components/dialog.html#Prevent-Closing-the-Dialog

This will allow you to use validations and prevent closing the dialog until all data is filled in correctly. If you need something more specific, create your own Modal.

username_2: @username_1, can you show a code snippet? I can't figure out how to validate from dialogs. |

Azure/AKS | 287428773 | Title: Cannot get logs of running pod via kubectl and kube-svc, kubernetes-dashboard restarted many times

Question:

username_0: In an Azure Kubernetes cluster, getting logs from any pod, or running an interactive exec in it, fails with a timeout error. `kubectl get pods --all-namespaces -o wide` shows that the kube-system pods kube-svc and kubernetes-dashboard have restarted many times.

Logs from `kubectl cluster-info dump`:

`==== START logs for container heapster of pod kube-system/heapster-75667786bb-m74tg ====

Request log error: an error on the server ("unknown") has prevented the request from succeeding (get pods heapster-75667786bb-m74tg)

==== END logs for container heapster of pod kube-system/heapster-75667786bb-m74tg ====

==== START logs for container heapster-nanny of pod kube-system/heapster-75667786bb-m74tg ====

I0110 11:57:54.533538 1 pod_nanny.go:56] Invoked by [/pod_nanny --cpu=80m --extra-cpu=0.5m --memory=140Mi --extra-memory=4Mi --threshold=5 --deployment=heapster --container=heapster --poll-period=300000 --estimator=exponential]

I0110 11:57:54.626367 1 pod_nanny.go:68] Watching namespace: kube-system, pod: heapster-75667786bb-m74tg, container: heapster.

I0110 11:57:54.626427 1 pod_nanny.go:69] cpu: 80m, extra_cpu: 0.5m, memory: 140Mi, extra_memory: 4Mi, storage: MISSING, extra_storage: 0Gi

I0110 11:57:54.627246 1 pod_nanny.go:110] Resources: [{Base:{i:{value:80 scale:-3} d:{Dec:<nil>} s:80m Format:DecimalSI} ExtraPerNode:{i:{value:5 scale:-4} d:{Dec:<nil>} s: Format:DecimalSI} Name:cpu} {Base:{i:{value:146800640 scale:0} d:{Dec:<nil>} s:140Mi Format:BinarySI} ExtraPerNode:{i:{value:4194304 scale:0} d:{Dec:<nil>} s:4Mi Format:BinarySI} Name:memory}]

E0110 11:58:24.628329 1 reflector.go:205] k8s.io/contrib/addon-resizer/nanny/kubernetes_client.go:108: Failed to list *v1.Node: Get https://10.0.0.1:443/api/v1/nodes?resourceVersion=0: dial tcp 10.0.0.1:443: i/o timeout

E0110 11:58:54.627514 1 nanny_lib.go:87] timed out waiting for the condition

E0110 11:58:55.629076 1 reflector.go:205] k8s.io/contrib/addon-resizer/nanny/kubernetes_client.go:108: Failed to list *v1.Node: Get https://10.0.0.1:443/api/v1/nodes?resourceVersion=0: dial tcp 10.0.0.1:443: i/o timeout

E0110 11:59:26.629759 1 reflector.go:205] k8s.io/contrib/addon-resizer/nanny/kubernetes_client.go:108: Failed to list *v1.Node: Get https://10.0.0.1:443/api/v1/nodes?resourceVersion=0: dial tcp 10.0.0.1:443: i/o timeout

E0110 11:59:57.630452 1 reflector.go:205] k8s.io/contrib/addon-resizer/nanny/kubernetes_client.go:108: Failed to list *v1.Node: Get https://10.0.0.1:443/api/v1/nodes?resourceVersion=0: dial tcp 10.0.0.1:443: i/o timeout

E0110 12:00:04.628441 1 nanny_lib.go:87] timed out waiting for the condition

E0110 12:00:28.631175 1 reflector.go:205] k8s.io/contrib/addon-resizer/nanny/kubernetes_client.go:108: Failed to list *v1.Node: Get https://10.0.0.1:443/api/v1/nodes?resourceVersion=0: dial tcp 10.0.0.1:443: i/o timeout

E0110 12:00:59.632082 1 reflector.go:205] k8s.io/contrib/addon-resizer/nanny/kubernetes_client.go:108: Failed to list *v1.Node: Get https://10.0.0.1:443/api/v1/nodes?resourceVersion=0: dial tcp 10.0.0.1:443: i/o timeout

E0110 12:01:14.726064 1 nanny_lib.go:87] timed out waiting for the condition

E0110 12:01:30.632856 1 reflector.go:205] k8s.io/contrib/addon-resizer/nanny/kubernetes_client.go:108: Failed to list *v1.Node: Get https://10.0.0.1:443/api/v1/nodes?resourceVersion=0: dial tcp 10.0.0.1:443: i/o timeout

E0110 12:02:01.726960 1 reflector.go:205] k8s.io/contrib/addon-resizer/nanny/kubernetes_client.go:108: Failed to list *v1.Node: Get https://10.0.0.1:443/api/v1/nodes?resourceVersion=0: dial tcp 10.0.0.1:443: i/o timeout

E0110 12:02:24.726631 1 nanny_lib.go:87] timed out waiting for the condition

E0110 12:02:32.727699 1 reflector.go:205] k8s.io/contrib/addon-resizer/nanny/kubernetes_client.go:108: Failed to list *v1.Node: Get https://10.0.0.1:443/api/v1/nodes?resourceVersion=0: dial tcp 10.0.0.1:443: i/o timeout

==== END logs for container heapster-nanny of pod kube-system/heapster-75667786bb-m74tg ====

==== START logs for container kubedns of pod kube-system/kube-dns-v20-6c8f7f988b-t8p97 ====

I0110 11:40:58.678123 1 dns.go:48] version: 1.14.4-2-g5584e04

I0110 11:40:58.703856 1 server.go:70] Using configuration read from directory: /kube-dns-config with period 10s

I0110 11:40:58.703908 1 server.go:113] FLAG: --alsologtostderr="false"

I0110 11:40:58.703919 1 server.go:113] FLAG: --config-dir="/kube-dns-config"

I0110 11:40:58.703926 1 server.go:113] FLAG: --config-map=""

I0110 11:40:58.703931 1 server.go:113] FLAG: --config-map-namespace="kube-system"

I0110 11:40:58.703936 1 server.go:113] FLAG: --config-period="10s"

I0110 11:40:58.703942 1 server.go:113] FLAG: --dns-bind-address="0.0.0.0"

I0110 11:40:58.703947 1 server.go:113] FLAG: --dns-port="10053"

I0110 11:40:58.703954 1 server.go:113] FLAG: --domain="cluster.local."

I0110 11:40:58.703961 1 server.go:113] FLAG: --federations=""

I0110 11:40:58.703968 1 server.go:113] FLAG: --healthz-port="8081"

I0110 11:40:58.703973 1 server.go:113] FLAG: --initial-sync-timeout="1m0s"

I0110 11:40:58.703977 1 server.go:113] FLAG: --kube-master-url=""

I0110 11:40:58.703984 1 server.go:113] FLAG: --kubecfg-file="/config/kubeconfig"

I0110 11:40:58.703988 1 server.go:113] FLAG: --log-backtrace-at=":0"

I0110 11:40:58.704003 1 server.go:113] FLAG: --log-dir=""

I0110 11:40:58.704008 1 server.go:113] FLAG: --log-flush-frequency="5s"

I0110 11:40:58.704012 1 server.go:113] FLAG: --logtostderr="true"

I0110 11:40:58.704017 1 server.go:113] FLAG: --nameservers=""

I0110 11:40:58.704021 1 server.go:113] FLAG: --stderrthreshold="2"

I0110 11:40:58.704026 1 server.go:113] FLAG: --v="2"

I0110 11:40:58.704030 1 server.go:113] FLAG: --version="false"

I0110 11:40:58.704038 1 server.go:113] FLAG: --vmodule=""

I0110 11:40:58.704080 1 server.go:176] Starting SkyDNS server (0.0.0.0:10053)

I0110 11:40:58.704134 1 server.go:200] Skydns metrics not enabled

I0110 11:40:58.704142 1 dns.go:147] Starting endpointsController

I0110 11:40:58.704147 1 dns.go:150] Starting serviceController

I0110 11:40:58.704261 1 logs.go:41] skydns: ready for queries on cluster.local. for tcp://0.0.0.0:10053 [rcache 0]

I0110 11:40:58.704272 1 logs.go:41] skydns: ready for queries on cluster.local. for udp://0.0.0.0:10053 [rcache 0]

I0110 11:40:59.204376 1 dns.go:171] Initialized services and endpoints from apiserver

I0110 11:40:59.204403 1 server.go:129] Setting up Healthz Handler (/readiness)

I0110 11:40:59.204412 1 server.go:134] Setting up cache handler (/cache)

I0110 11:40:59.204438 1 server.go:120] Status HTTP port 8081

[Truncated]

W0110 11:40:15.322895 1 server.go:191] WARNING: all flags other than --config, --write-config-to, and --cleanup are deprecated. Please begin using a config file ASAP.

time="2018-01-10T11:40:15Z" level=warning msg="Running modprobe ip_vs failed with message: ``, error: exec: \"modprobe\": executable file not found in $PATH"

time="2018-01-10T11:40:15Z" level=error msg="Could not get ipvs family information from the kernel. It is possible that ipvs is not enabled in your kernel. Native loadbalancing will not work until this is fixed."

W0110 11:40:15.385440 1 server_others.go:263] Flag proxy-mode="" unknown, assuming iptables proxy

I0110 11:40:15.387256 1 server_others.go:117] Using iptables Proxier.

I0110 11:40:15.554170 1 server_others.go:152] Tearing down inactive rules.

I0110 11:40:15.706742 1 conntrack.go:98] Set sysctl 'net/netfilter/nf_conntrack_max' to 131072

I0110 11:40:15.711494 1 conntrack.go:52] Setting nf_conntrack_max to 131072

I0110 11:40:15.712763 1 conntrack.go:83] Setting conntrack hashsize to 32768

I0110 11:40:15.712986 1 conntrack.go:98] Set sysctl 'net/netfilter/nf_conntrack_tcp_timeout_established' to 86400

I0110 11:40:15.713096 1 conntrack.go:98] Set sysctl 'net/netfilter/nf_conntrack_tcp_timeout_close_wait' to 3600

I0110 11:40:15.731308 1 config.go:202] Starting service config controller

I0110 11:40:15.731336 1 controller_utils.go:1041] Waiting for caches to sync for service config controller

I0110 11:40:15.731544 1 config.go:102] Starting endpoints config controller

I0110 11:40:15.731555 1 controller_utils.go:1041] Waiting for caches to sync for endpoints config controller

I0110 11:40:15.832032 1 controller_utils.go:1048] Caches are synced for endpoints config controller

I0110 11:40:15.832141 1 controller_utils.go:1048] Caches are synced for service config controller

==== END logs for container kube-proxy of pod kube-system/kube-proxy-qj987 ====

==== START logs for container redirector of pod kube-system/kube-svc-redirect-b8hd6 ====

`

Answers:

username_1: I am experiencing the same issue - timeout when retrieving logs.

username_2: I also get timeouts and the request times are very slow :(

username_3: Same issue here in US East.

```
root@aks-default-15610861-0:~# kubectl logs -f tiller-deploy-7b87468d9-p4sv4 -n kube-system
Error from server: Get https://aks-default-15610861-1:10250/containerLogs/kube-system/tiller-deploy-7b87468d9-p4sv4/tiller?follow=true: dial tcp 10.125.8.9:10250: getsockopt: connection timed out
root@aks-default-15610861-0:~# telnet 10.125.8.9 10250
Trying 10.125.8.9...
Connected to 10.125.8.9.
Escape character is '^]'.
^]
telnet> quit
Connection closed.
root@aks-default-15610861-0:~#
```
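The `telnet` probe above can be scripted, for example to check the kubelet port (10250) on every node. Below is a minimal Python sketch using only the standard library; the function name is hypothetical. It only shows whether a TCP handshake succeeds from the machine it runs on. A success from one node, as in the session above, does not prove that the API server can reach the kubelet.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP handshake with host:port completes within `timeout` seconds.

    Same idea as `telnet 10.125.8.9 10250`: a refused, unreachable,
    or timed-out connection returns False.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # ConnectionRefusedError, timeouts and "no route to host" are all OSError subclasses.
        return False


# Example (run it from the machine whose connectivity you want to test):
# print(is_port_open("10.125.8.9", 10250))
```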

In addition, my DNS pods are crashing.

```
root@aks-default-15610861-0:~# kubectl get pods --all-namespaces
NAMESPACE     NAME                                    READY   STATUS             RESTARTS   AGE
kube-system   heapster-2574232661-lxmn7               2/2     Running            0          1h
kube-system   kube-dns-v20-2253765213-djdt9           1/3     CrashLoopBackOff   34         1h
kube-system   kube-dns-v20-2253765213-j9g5r           2/3     CrashLoopBackOff   43         1h
kube-system   kube-proxy-9lkbm                        1/1     Running            0          1h
kube-system   kube-proxy-qnl26                        1/1     Running            0          1h
kube-system   kube-proxy-x56f4                        1/1     Running            0          1h
kube-system   kube-svc-redirect-2kr8h                 1/1     Running            0          1h
kube-system   kube-svc-redirect-wdg8f                 1/1     Running            0          1h
kube-system   kube-svc-redirect-z6h6l                 1/1     Running            0          1h
kube-system   kubernetes-dashboard-6d9c57c89c-xldlg   1/1     Running            0          1h
kube-system   tiller-deploy-7b87468d9-p4sv4           1/1     Running            0          38m
kube-system   tunnelfront-5ff8ddff6d-87t86            1/1     Running            0          1h
```

username_4: Same issue here in eastus.

username_5: Same issue, West Europe

username_6: I was able to connect to my AKS cluster with kubectl, but now it has stopped responding. Whatever kubectl command I try, it gives "Unable to connect to the server: dial tcp: i/o timeout". Any hints on how to get past this? There is no longer any way to monitor my pods :-(

username_7: I am seeing similar issues. I am able to retrieve pods and deployments. However, I can't get logs from any pod, connect to the dashboard, or run busybox inside the cluster.

username_8: I ran into this issue recently in the East US region. I'm using the cluster for testing and am turning the VMs off and back on as part of this testing (to work around other suspected AKS issues). The last time it came back up, `kubectl logs` and `kubectl exec` both failed with the same timeout message as in the original report.

After turning VMs off and back on yet again, `kubectl logs` and `kubectl exec` are now working again.

Status: Issue closed

username_9: Closing due to inactivity. Feel free to re-open if it is still an issue.

username_10: I had this problem, and it turned out that one of my resources was using the subnet dedicated to AKS. Check whether this is the case and, if so, remove that resource.

username_11: I ran into the same problem. I am running on bare metal using kubeadm and Ubuntu 16.x. In my case, the master was unable to access the kubelet because the firewall ports were not open on one of my nodes; opening them resolved the issue.

Commands:

`ufw status` (lists the open ports)

`ufw allow 10250/tcp`

`ufw allow 30000:32767/tcp` |

dlang/dub | 162050070 | Title: single file package, random failure

Question:

username_0: Program exited with code 1

error: the process (/home/basile/bin/dub) has returned the signal 512
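The `512` in this message may be a raw POSIX `wait()` status word rather than a signal number; this is an assumption, since the tool printing the message isn't identified. If so, it encodes a normal exit with code 2, not a signal. On Linux and macOS the exit code is stored in bits 8 to 15 of the status (512 = 2 << 8). A small Python check (POSIX only):

```python
import os


def describe_wait_status(status: int) -> str:
    """Describe a raw POSIX wait() status word."""
    if os.WIFEXITED(status):
        return f"exited with code {os.WEXITSTATUS(status)}"
    if os.WIFSIGNALED(status):
        return f"killed by signal {os.WTERMSIG(status)}"
    return f"other status {status:#x}"


print(describe_wait_status(512))  # exited with code 2
```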

Answers:

username_1: Could it be that two DUB instances are running in parallel? It appears that the compiled executable gets modified while it is still being executed. A possible fix within DUB in that case would be to `rm` the file before invoking the linker.
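Below is a minimal Python sketch of the suggested fix, assuming POSIX semantics; the function name is hypothetical and this is not DUB's code. Unlinking the old output before creating the new one gives the new build a fresh inode. A process that still has the old file open therefore keeps reading the old contents, instead of seeing the file rewritten underneath it. On Windows, unlinking an open file fails.

```python
import os


def replace_by_unlinking(path: str, new_contents: bytes) -> None:
    """Write `new_contents` to `path` as a brand-new file (new inode).

    If `path` already exists it is unlinked first, so any process that still
    has the old file open (or mapped, as a running executable is) keeps
    seeing the old contents. POSIX semantics assumed.
    """
    try:
        os.unlink(path)
    except FileNotFoundError:
        pass
    # O_EXCL: fail rather than write into a file someone recreated meanwhile.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_EXCL, 0o755)
    with os.fdopen(fd, "wb") as f:
        f.write(new_contents)
```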

username_0: That wouldn't make sense. On Linux, executable files are not locked while they run, and we can recompile them even while the previous version is running. I will investigate further, but I'm keeping this issue open as a reminder.

Status: Issue closed

|

sockeqwe/fragmentargs | 242352665 | Title: New release that allows to obtain a bundle from builder

Question:

username_0: Hello @username_1,

Our team really likes fragmentargs and we already use it in multiple projects.

Recently we were looking for a way to use fragmentargs to generate a Bundle for places where we cannot instantiate a Fragment directly.

I have seen that this functionality has been available in the snapshot version since May 2016.

Is there any release planned within the next few days/weeks that will provide this feature in a non-snapshot version?

Best Regards,

Andreas

Answers:

username_1: Oh boy, I'm really sorry ... I have focused my open source time on other projects because they had higher priority for me. We use FragmentArgs version 3 at work and it fits our needs.

There are still some tasks / issues open for a stable 4.0 version: https://github.com/username_1/fragmentargs/milestone/4

The most important ones are #46 #44 #73 #83 #82 and #17 . The remaining ones could also be "fixed" in a minor version like `4.0.1`

I can't give any serious estimate of when I will have time to work on this, but "within a few days" is not possible for me. I think the first week of August is realistic.

If someone wants to contribute, pull requests are very welcome!

username_0: Thanks for the quick reply!

I will try to support you getting tasks/issues fixed for version 4.0 👍

Status: Issue closed

|

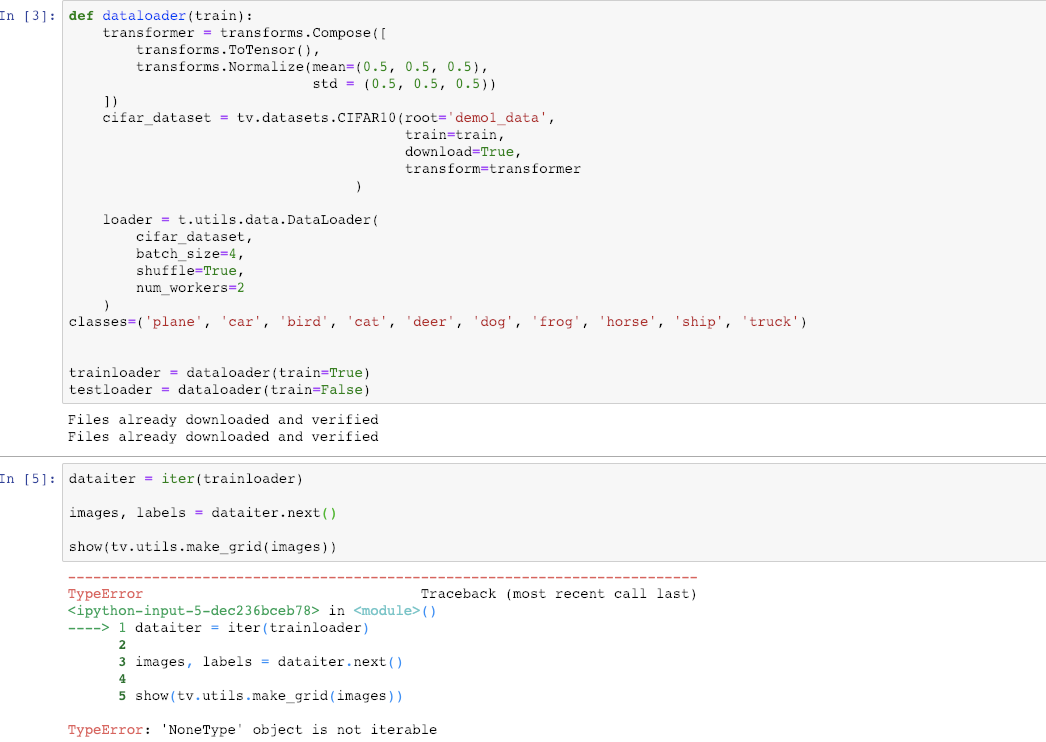

pytorch/pytorch | 399614338 | Title: Running the official demo gives TypeError: 'NoneType' object is not iterable

Question:

username_0: I ran the official demo without modifications.

code:

I don't know why this built-in function raises this error.

Answers:

username_0: Sorry, I've found where I went wrong.

Status: Issue closed

|

don/cordova-plugin-ble-central | 127625798 | Title: iOS does not discover an Android phone

Question:

username_0: Hello,

For a few days I have been trying to figure this out, without success. When I use the scan command, it does not discover my Android phone, although the phone is discovered in my iPad's Bluetooth settings (see screenshot below). I only need to get the UUID; I don't want to connect, write, etc. In general, I only want to discover devices that are in Bluetooth range.

Please help.

Kind regards.

Answers:

username_1: I encountered the same problem. ble.scan is unable to detect an Android or iOS phone. Can anyone help?

username_2: In order for this plugin to discover another phone, that phone needs to be *advertising*. The easiest way to do this is to have the target phone advertising as a Bluetooth Low Energy peripheral. It's also possible to advertise without actually offering services.

username_1: Forgive my ignorance. Is it just a phone setting that makes the phone (Android or iOS) advertise as a peripheral, or is an app necessary to achieve that? If an app needs to be written, are there Cordova plugins available, particularly for Android phones? I think we can do this with native Android code, but I am using Cordova. Thanks.

username_2: For now, I think you need native code to make iOS and Android advertise Bluetooth services. I don't know of any Cordova plugins that do this. I've been working on cordova-plugin-ble-peripheral, but it's not complete.

username_1: Thank you. Hope to see your plugin soon.

Status: Issue closed

|

CatServer/CatServer | 427356593 | Title: Server fails to start after adding Quark

Question:

username_0: After adding Quark, the server fails to start. The official server with Forge starts without problems.

`[12:22:31] [main/INFO] [LaunchWrapper]: Loading tweak class name catserver.server.launcher.username_2Tweaker

[12:22:31] [main/INFO] [LaunchWrapper]: Using primary tweak class name catserver.server.launcher.username_2Tweaker

[12:22:31] [main/INFO] [LaunchWrapper]: Calling tweak class catserver.server.launcher.username_2Tweaker

[12:22:32] [main/INFO] [FML]: Forge Mod Loader version 14.23.5.2815 for Minecraft 1.12.2 loading

[12:22:32] [main/INFO] [FML]: Java is Eclipse OpenJ9 VM, version 1.8.0_202, running on Windows 10:amd64:10.0, installed at C:\Program Files\AdoptOpenJDK\jdk-8.0.202.08\jre

[12:22:32] [main/ERROR] [FML]: Apache Maven library folder was not in the format expected. Using default libraries directory.

[12:22:32] [main/ERROR] [FML]: Full: C:\Users\lxu36\Desktop\username_2\libraries\maven-artifact-3.5.3.jar

[12:22:32] [main/ERROR] [FML]: Trimmed: c:/users/lxu36/desktop/catserver/

[12:22:33] [main/INFO] [FML]: Searching C:\Users\lxu36\Desktop\username_2\.\mods for mods

[12:22:33] [main/INFO] [FML]: Searching C:\Users\lxu36\Desktop\username_2\.\mods\1.12.2 for mods

[12:22:33] [main/WARN] [FML]: Found FMLCorePluginContainsFMLMod marker in AppleCore-mc1.12.2-3.2.0.jar. This is not recommended, @Mods should be in a separate jar from the coremod.

[12:22:33] [main/WARN] [FML]: The coremod AppleCore (squeek.applecore.AppleCore) is not signed!

[12:22:33] [main/WARN] [FML]: Found FMLCorePluginContainsFMLMod marker in Aroma1997Core-1.12.2-2.0.0.2.jar. This is not recommended, @Mods should be in a separate jar from the coremod.

[12:22:33] [main/WARN] [FML]: The coremod aroma1997.core.coremod.CoreMod does not have a MCVersion annotation, it may cause issues with this version of Minecraft

[12:22:33] [main/WARN] [FML]: Found FMLCorePluginContainsFMLMod marker in astralsorcery-1.12.2-1.10.11.jar. This is not recommended, @Mods should be in a separate jar from the coremod.

[12:22:33] [main/WARN] [FML]: The coremod hellfirepvp.astralsorcery.core.AstralCore does not have a MCVersion annotation, it may cause issues with this version of Minecraft

[12:22:33] [main/INFO] [Astral Core]: [AstralCore] Initialized.

[12:22:33] [main/INFO] [FML]: Loading tweaker guichaguri.betterfps.tweaker.BetterFpsTweaker from BetterFps-1.4.8.jar

[12:22:33] [main/WARN] [FML]: Found FMLCorePluginContainsFMLMod marker in BetterWithLib-1.12-1.5.jar. This is not recommended, @Mods should be in a separate jar from the coremod.

[12:22:33] [main/WARN] [FML]: The coremod LoadingPlugin (betterwithmods.library.core.LoadingPlugin) is not signed!

[12:22:33] [main/WARN] [FML]: The coremod com.bloodnbonesgaming.bnbgamingcore.core.BNBGamingCorePlugin does not have a MCVersion annotation, it may cause issues with this version of Minecraft

[12:22:33] [main/WARN] [FML]: The coremod BNBGamingCore (com.bloodnbonesgaming.bnbgamingcore.core.BNBGamingCorePlugin) is not signed!

[12:22:33] [main/INFO] [FML]: Loading tweaker codechicken.asm.internal.Tweaker from ChickenASM-1.12-1.0.2.7.jar

[12:22:33] [main/WARN] [FML]: Found FMLCorePluginContainsFMLMod marker in CTM-MC1.12.2-0.3.3.22.jar. This is not recommended, @Mods should be in a separate jar from the coremod.

[12:22:33] [main/WARN] [FML]: The coremod team.chisel.ctm.client.asm.CTMCorePlugin does not have a MCVersion annotation, it may cause issues with this version of Minecraft

[12:22:33] [main/WARN] [FML]: The coremod CTMCorePlugin (team.chisel.ctm.client.asm.CTMCorePlugin) is not signed!

[12:22:33] [main/WARN] [FML]: Found FMLCorePluginContainsFMLMod marker in Farseek-1.12-2.3.1.jar. This is not recommended, @Mods should be in a separate jar from the coremod.

[12:22:33] [main/WARN] [FML]: The coremod farseek.core.FarseekCoreMod does not have a MCVersion annotation, it may cause issues with this version of Minecraft

[12:22:33] [main/WARN] [FML]: The coremod FarseekCoreMod (farseek.core.FarseekCoreMod) is not signed!

[12:22:33] [main/WARN] [FML]: Found FMLCorePluginContainsFMLMod marker in foamfix-0.10.3-1.12.2.jar. This is not recommended, @Mods should be in a separate jar from the coremod.

[12:22:33] [main/WARN] [FML]: The coremod pl.asie.foamfix.coremod.FoamFixCore does not have a MCVersion annotation, it may cause issues with this version of Minecraft

[12:22:33] [main/WARN] [FML]: Found FMLCorePluginContainsFMLMod marker in Forgelin-1.8.2.jar. This is not recommended, @Mods should be in a separate jar from the coremod.

[12:22:33] [main/WARN] [FML]: The coremod net.shadowfacts.forgelin.preloader.ForgelinPlugin does not have a MCVersion annotation, it may cause issues with this version of Minecraft

[12:22:33] [main/WARN] [FML]: The coremod ForgelinPlugin (net.shadowfacts.forgelin.preloader.ForgelinPlugin) is not signed!

[12:22:34] [main/WARN] [FML]: Found FMLCorePluginContainsFMLMod marker in InventoryTweaks-1.64+dev.146.jar. This is not recommended, @Mods should be in a separate jar from the coremod.

[12:22:34] [main/WARN] [FML]: The coremod invtweaks.forge.asm.FMLPlugin does not have a MCVersion annotation, it may cause issues with this version of Minecraft

[12:22:34] [main/WARN] [FML]: The coremod ivorius.ivtoolkit.IvToolkitLoadingPlugin does not have a MCVersion annotation, it may cause issues with this version of Minecraft

[12:22:34] [main/WARN] [FML]: The coremod IvToolkit (ivorius.ivtoolkit.IvToolkitLoadingPlugin) is not signed!

[12:22:34] [main/INFO] [FML]: Loading tweaker org.spongepowered.asm.launch.MixinTweaker from JustEnoughIDs-1.0.2-26.jar

[12:22:34] [main/WARN] [FML]: The coremod micdoodle8.mods.miccore.MicdoodlePlugin does not have a MCVersion annotation, it may cause issues with this version of Minecraft

[12:22:34] [main/WARN] [FML]: The coremod MicdoodlePlugin (micdoodle8.mods.miccore.MicdoodlePlugin) is not signed!

[12:22:34] [main/ERROR] [FML]: The coremod api.player.forge.PlayerAPIPlugin is requesting minecraft version 1.12.1 and minecraft is 1.12.2. It will be ignored.

[12:22:34] [main/WARN] [FML]: Found FMLCorePluginContainsFMLMod marker in Quark-r1.5-146.jar. This is not recommended, @Mods should be in a separate jar from the coremod.

[12:22:34] [main/WARN] [FML]: The coremod vazkii.quark.base.asm.LoadingPlugin does not have a MCVersion annotation, it may cause issues with this version of Minecraft

[12:22:34] [main/WARN] [FML]: The coremod LoadingPlugin (vazkii.quark.base.asm.LoadingPlugin) is not signed!

[12:22:34] [main/WARN] [FML]: Found FMLCorePluginContainsFMLMod marker in ResourceLoader-MC1.12.1-1.5.3.jar. This is not recommended, @Mods should be in a separate jar from the coremod.

[12:22:34] [main/WARN] [FML]: The coremod lumien.resourceloader.asm.LoadingPlugin does not have a MCVersion annotation, it may cause issues with this version of Minecraft

[12:22:34] [main/WARN] [FML]: The coremod blusunrize.immersiveengineering.common.asm.IELoadingPlugin does not have a MCVersion annotation, it may cause issues with this version of Minecraft

[12:22:34] [main/INFO] [LaunchWrapper]: Loading tweak class name net.minecraftforge.fml.common.launcher.FMLInjectionAndSortingTweaker

[12:22:34] [main/INFO] [LaunchWrapper]: Loading tweak class name guichaguri.betterfps.tweaker.BetterFpsTweaker

[12:22:34] [main/INFO] [LaunchWrapper]: Loading tweak class name codechicken.asm.internal.Tweaker

[12:22:34] [main/INFO] [LaunchWrapper]: Loading tweak class name org.spongepowered.asm.launch.MixinTweaker

[12:22:34] [main/INFO] [mixin]: SpongePowered MIXIN Subsystem Version=0.7.11 Source=file:/C:/Users/lxu36/Desktop/username_2/./mods/JustEnoughIDs-1.0.2-26.jar Service=LaunchWrapper Env=SERVER

[12:22:34] [main/WARN] [FML]: The coremod JEIDLoadingPlugin (org.dimdev.jeid.JEIDLoadingPlugin) is not signed!

[12:22:34] [main/INFO] [mixin]: Compatibility level set to JAVA_8

[12:22:34] [main/INFO] [LaunchWrapper]: Loading tweak class name net.minecraftforge.fml.common.launcher.FMLDeobfTweaker

[12:22:34] [main/INFO] [LaunchWrapper]: Calling tweak class net.minecraftforge.fml.common.launcher.FMLInjectionAndSortingTweaker

[Truncated]

[12:22:38] [main/INFO] [BetterFps]: Patching net.minecraft.block.Block... (aow)

[12:22:39] [main/INFO] [mixin]: A re-entrant transformer 'guichaguri.betterfps.transformers.PatcherTransformer' was detected and will no longer process meta class data

[12:22:39] [main/INFO] [STDOUT]: [team.chisel.ctm.client.asm.CTMTransformer:preTransform:230]: Transforming Class [net.minecraft.block.Block], Method [getExtendedState]

[12:22:39] [main/INFO] [STDOUT]: [team.chisel.ctm.client.asm.CTMTransformer:finishTransform:242]: Transforming net.minecraft.block.Block Finished.

[12:22:39] [main/INFO] [STDOUT]: [pl.asie.patchy.helpers.ConstructorReplacingTransformer$FFMethodVisitor:visitTypeInsn:75]: Replaced NEW for net/minecraft/util/ClassInheritanceMultiMap to pl/asie/foamfix/coremod/common/FoamyClassInheritanceMultiMap

[12:22:39] [main/INFO] [STDOUT]: [pl.asie.patchy.helpers.ConstructorReplacingTransformer$FFMethodVisitor:visitMethodInsn:87]: Replaced INVOKESPECIAL for net/minecraft/util/ClassInheritanceMultiMap to pl/asie/foamfix/coremod/common/FoamyClassInheritanceMultiMap

[12:22:39] [main/INFO] [mixin]: A re-entrant transformer '$wrapper.pl.asie.foamfix.coremod.FoamFixTransformer' was detected and will no longer process meta class data

[12:22:39] [main/INFO] [Astral Core]: [AstralTransformer] Transforming alm : net.minecraft.enchantment.EnchantmentHelper with 1 patches!

[12:22:39] [main/INFO] [Astral Core]: [AstralTransformer] Applied patch PATCHMODIFYENCHANTMENTLEVELS

[12:22:39] [main/INFO] [Astral Core]: [AstralTransformer] Transforming amu : net.minecraft.world.World with 2 patches!

[12:22:39] [main/INFO] [Astral Core]: [AstralTransformer] Skipping PATCHSUNBRIGHTNESSWORLDCLIENT as it can't be applied for side SERVER

[12:22:39] [main/INFO] [Astral Core]: [AstralTransformer] Applied patch PATCHSUNBRIGHTNESSWORLDCOMMON

[12:22:39] [main/INFO] [STDOUT]: [pl.asie.foamfix.coremod.FoamFixTransformer:spliceClasses:112]: Added INTERFACE: pl/asie/foamfix/coremod/patches/IFoamFixWorldRemovable

[12:22:39] [main/INFO] [STDOUT]: [pl.asie.foamfix.coremod.FoamFixTransformer:spliceClasses:149]: Added METHOD: net.minecraft.world.World.foamfix_removeUnloadedEntities

[12:22:39] [main/INFO] [FML]: [Quark ASM] Transforming WorldServer

[12:22:39] [main/INFO] [FML]: [Quark ASM] Applying Transformation to method (Names [areAllPlayersAsleep, func_73056_e, g] Descriptor ()Z / ()Z)

[12:22:39] [main/INFO] [FML]: [Quark ASM] Located Method, patching...

[12:22:39] [main/INFO] [FML]: [Quark ASM] Located patch target node L0

[12:22:39] [main/INFO] [FML]: [Quark ASM] Patch result: true

`

Answers:

username_1: paste.ubuntu.com is a really handy tool.

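username_0: Sorry, this was my first time submitting a bug and I didn't think it through. I deleted it earlier without thinking; I'll try again when I have time.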

Status: Issue closed

|

lampepfl/dotty | 108887623 | Title: AbstractMethodError with outer links

Question:

username_0: Dotty compiled by Dotty can now compile hello world, so I am now looking into the more complicated phases:

```
Exception in thread "main" java.lang.AbstractMethodError: dotty.tools.dotc.transform.PatternMatcher$Translator$OptimizingMatchTranslator.dotty$tools$dotc$transform$PatternMatcher$Translator$MatchTranslator$$$outer()Ldotty/tools/dotc/transform/PatternMatcher$Translator;
```

Answers:

username_0: How to reproduce: compile Dotty with Dotty (this is part of the test suite).

Then compile the following file:

```scala
object A {
  def main(args: Array[String]): Unit = {
    args match { case Array() => println("empty"); case _ => println(args) }
  }
}
```

```
Exception in thread "main" java.lang.AbstractMethodError: dotty.tools.dotc.transform.PatternMatcher$Translator$OptimizingMatchTranslator.dotty$tools$dotc$transform$PatternMatcher$Translator$MatchTranslator$$$outer()Ldotty/tools/dotc/transform/PatternMatcher$Translator;
	at dotty.tools.dotc.transform.PatternMatcher$Translator$MatchTranslator.translateMatch(PatternMatcher.scala:1166)
	at dotty.tools.dotc.transform.PatternMatcher$Translator$OptimizingMatchTranslator.translateMatch(PatternMatcher.scala:75)
	at dotty.tools.dotc.transform.PatternMatcher.transformMatch(PatternMatcher.scala:51)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer.goMatch(TreeTransform.scala:724)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer.transformUnnamed(TreeTransform.scala:1114)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer.liftedTree7$1(TreeTransform.scala:1213)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer.$anonfun$transform$10(TreeTransform.scala:1205)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer$$Lambda$689/746074699.apply(Unknown Source)
	at dotty.tools.dotc.reporting.Reporting.traceIndented(Reporter.scala:148)
	at dotty.tools.dotc.core.Contexts$Context.traceIndented(Contexts.scala:53)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer.transform(TreeTransform.scala:1204)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer.transformUnnamed(TreeTransform.scala:1087)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer.liftedTree7$1(TreeTransform.scala:1213)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer.$anonfun$transform$10(TreeTransform.scala:1205)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer$$Lambda$689/746074699.apply(Unknown Source)
	at dotty.tools.dotc.reporting.Reporting.traceIndented(Reporter.scala:148)
	at dotty.tools.dotc.core.Contexts$Context.traceIndented(Contexts.scala:53)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer.transform(TreeTransform.scala:1204)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer.transformNamed(TreeTransform.scala:1004)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer.liftedTree7$1(TreeTransform.scala:1212)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer.$anonfun$transform$10(TreeTransform.scala:1205)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer$$Lambda$689/746074699.apply(Unknown Source)
	at dotty.tools.dotc.reporting.Reporting.traceIndented(Reporter.scala:148)
	at dotty.tools.dotc.core.Contexts$Context.traceIndented(Contexts.scala:53)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer.transform(TreeTransform.scala:1204)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer.transformStat$2(TreeTransform.scala:1238)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer.$anonfun$662(TreeTransform.scala:1242)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer$$Lambda$690/596470015.apply(Unknown Source)
	at dotty.tools.dotc.core.Decorators$ListDecorator$.loop$4(Decorators.scala:51)
	at dotty.tools.dotc.core.Decorators$ListDecorator$.mapconserve$extension(Decorators.scala:67)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer.transformStats(TreeTransform.scala:1242)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer.transformUnnamed(TreeTransform.scala:1183)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer.liftedTree7$1(TreeTransform.scala:1213)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer.$anonfun$transform$10(TreeTransform.scala:1205)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer$$Lambda$689/746074699.apply(Unknown Source)
	at dotty.tools.dotc.reporting.Reporting.traceIndented(Reporter.scala:148)
	at dotty.tools.dotc.core.Contexts$Context.traceIndented(Contexts.scala:53)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer.transform(TreeTransform.scala:1204)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer.transformNamed(TreeTransform.scala:1011)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer.liftedTree7$1(TreeTransform.scala:1212)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer.$anonfun$transform$10(TreeTransform.scala:1205)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer$$Lambda$689/746074699.apply(Unknown Source)
	at dotty.tools.dotc.reporting.Reporting.traceIndented(Reporter.scala:148)
	at dotty.tools.dotc.core.Contexts$Context.traceIndented(Contexts.scala:53)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer.transform(TreeTransform.scala:1204)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer.transformStat$2(TreeTransform.scala:1238)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer.$anonfun$662(TreeTransform.scala:1242)
[Truncated]
	at dotty.tools.dotc.core.Phases$Phase.runOn(Phases.scala:273)
	at dotty.tools.dotc.transform.TreeTransforms$TreeTransformer.runOn(TreeTransform.scala:474)
	at dotty.tools.dotc.Run.$anonfun$$anonfun$compileUnits$1$1(Run.scala:59)
	at dotty.tools.dotc.Run$$Lambda$194/388357135.applyVoid(Unknown Source)
	at scala.compat.java8.JProcedure1.apply(JProcedure1.java:18)
	at scala.compat.java8.JProcedure1.apply(JProcedure1.java:10)
	at scala.collection.IndexedSeqOptimized$class.foreach(IndexedSeqOptimized.scala:33)
	at scala.collection.mutable.ArrayOps$ofRef.foreach(ArrayOps.scala:186)
	at dotty.tools.dotc.Run.$anonfun$compileUnits$1(Run.scala:57)
	at dotty.tools.dotc.Run$$Lambda$185/501187768.apply$mcV$sp(Unknown Source)
	at scala.compat.java8.JFunction0$mcV$sp.apply(JFunction0$mcV$sp.java:12)
	at dotty.tools.dotc.util.Stats$.monitorHeartBeat(Stats.scala:58)
	at dotty.tools.dotc.Run.compileUnits(Run.scala:65)
	at dotty.tools.dotc.Run.compileSources(Run.scala:49)
	at dotty.tools.dotc.Run.compile(Run.scala:33)
	at dotty.tools.dotc.Driver.doCompile(Driver.scala:21)
	at dotty.tools.dotc.Driver.process(Driver.scala:44)
	at dotty.tools.dotc.Driver.main(Driver.scala:48)
	at dotty.tools.dotc.Main.main(Main.scala)
```

Status: Issue closed

|

shankarpandala/lazypredict | 1005312125 | Title: Custom metric

Question:

username_0: Hi there,

Thank you for this code.

It would be nice if you could provide an example of how to use custom metrics.

Answers:

username_1: I second this. All the examples use `custom_metrics=None`. I tried passing a list of strings based on the metric names in the source code, but had no luck. |

surbhit21/GSoC-2019 | 471522833 | Title: File not found error

Question:

username_0: Hi,

I tried running the optimization, and there seems to be an issue with the unzipping. Here is the error trace:

```
Internal Server Error: /tasks/Optimization/
Traceback (most recent call last):
  File "/home/surbhit/Work/GSoC-2019/venv/lib/python3.6/site-packages/django/core/handlers/exception.py", line 34, in inner
    response = get_response(request)
  File "/home/surbhit/Work/GSoC-2019/venv/lib/python3.6/site-packages/django/core/handlers/base.py", line 115, in _get_response
    response = self.process_exception_by_middleware(e, request)
  File "/home/surbhit/Work/GSoC-2019/venv/lib/python3.6/site-packages/django/core/handlers/base.py", line 113, in _get_response
    response = wrapped_callback(request, *callback_args, **callback_kwargs)
  File "/home/surbhit/Work/GSoC-2019/venv/lib/python3.6/site-packages/django/views/decorators/csrf.py", line 54, in wrapped_view
    return view_func(*args, **kwargs)
  File "/home/surbhit/Work/GSoC-2019/venv/lib/python3.6/site-packages/rest_framework/viewsets.py", line 116, in view
    return self.dispatch(request, *args, **kwargs)
  File "/home/surbhit/Work/GSoC-2019/venv/lib/python3.6/site-packages/rest_framework/views.py", line 495, in dispatch
    response = self.handle_exception(exc)
  File "/home/surbhit/Work/GSoC-2019/venv/lib/python3.6/site-packages/rest_framework/views.py", line 455, in handle_exception
    self.raise_uncaught_exception(exc)
  File "/home/surbhit/Work/GSoC-2019/venv/lib/python3.6/site-packages/rest_framework/views.py", line 492, in dispatch
    response = handler(request, *args, **kwargs)
  File "/home/surbhit/Work/GSoC-2019/GSoC-2019/REST_FindSim/tasks/views.py", line 68, in create
    os.mkdir(os.path.join(BASE_FILE_PATH, 'tsv/')+file_label)
FileNotFoundError: [Errno 2] No such file or directory: 'media/files/tsv/surbhit11563865698.6517096'
```

Answers:

username_1: Hi, I think this happens because os.mkdir() cannot find the directory "media/files/tsv/". I will add this empty directory to the repo. Hopefully that fixes the problem.

username_0: I do have the "media/files/tsv/" directory, and the zip is being stored there, but the specific subfolder (in this case `surbhit11563865698.6517096`) is not created.

username_1: Maybe it's because the path here is a relative path rather than an absolute path?
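A minimal sketch of why a relative path can matter here: the traceback shows `'media/files/tsv/...'` with no leading slash, so Python resolves it against the server process's current working directory, not against the project folder. The helper names `resolve_relative` and `anchored` are hypothetical, for illustration only; the project's real `BASE_FILE_PATH` definition is not shown in the issue.

```python
import os
import tempfile

# Mirrors the relative 'media/files/tsv/' prefix seen in the traceback.
RELATIVE_TSV_DIR = os.path.join("media", "files", "tsv")


def resolve_relative(cwd: str, relative: str) -> str:
    """Return the absolute path `relative` points to when the process runs in `cwd`.

    The original working directory is restored before returning.
    """
    previous = os.getcwd()
    try:
        os.chdir(cwd)
        return os.path.abspath(relative)
    finally:
        os.chdir(previous)


def anchored(base_dir: str, relative: str) -> str:
    """Hypothetical alternative: join `relative` onto a fixed base directory,
    so the result no longer depends on where the server was started."""
    return os.path.join(os.path.abspath(base_dir), relative)


with tempfile.TemporaryDirectory() as dir_a, tempfile.TemporaryDirectory() as dir_b:
    # The same relative string points to two different locations.
    print(resolve_relative(dir_a, RELATIVE_TSV_DIR))
    print(resolve_relative(dir_b, RELATIVE_TSV_DIR))
```

If the server is started from a directory other than the one that contains `media/`, the relative path points somewhere that does not exist.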

username_1: I found the reason: os.mkdir() cannot create a directory when its parent directories don't exist yet. I changed this to os.makedirs(), which also creates the missing intermediate directories. I think this will solve the problem.
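A short sketch of the difference described above: `os.mkdir()` creates only the last path component and raises `FileNotFoundError` when a parent is missing, while `os.makedirs(..., exist_ok=True)` creates every missing intermediate directory. The `make_upload_dir` helper and the `tsv/<label>` layout are assumptions modeled on the path in the traceback, not the project's actual code.

```python
import os
import tempfile


def try_mkdir(path: str) -> bool:
    """Return True if os.mkdir created `path`, False if a parent directory was missing."""
    try:
        os.mkdir(path)  # creates only the final component of `path`
        return True
    except FileNotFoundError:
        return False


def make_upload_dir(base: str, label: str) -> str:
    """Hypothetical helper: create <base>/tsv/<label>, including missing parents."""
    target = os.path.join(base, "tsv", label)
    # exist_ok=True avoids FileExistsError if the folder is already there.
    os.makedirs(target, exist_ok=True)
    return target


with tempfile.TemporaryDirectory() as root:
    nested = os.path.join(root, "tsv", "upload1")
    print(try_mkdir(nested))  # False: <root>/tsv does not exist yet
    print(os.path.isdir(make_upload_dir(root, "upload1")))  # True
```

This only covers directory creation. Later in the thread, the remaining `testop.zip` error went away once the migrations were run.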

username_0: Switching to os.makedirs() did not solve the error on my machine. Is there anything else I need to do besides pulling the latest commit and running it? Also, the folders are created, but they are empty.

```
FileNotFoundError at /tasks/Optimization/
[Errno 2] No such file or directory: 'media/files/tsv/surbhit11563881005.749884/testop.zip'
```

username_1: I think you need to run the migrations. The models have changed, and the tsv files and model files are now uploaded to media/files/tsv/ and media/files/model/ respectively.

Status: Issue closed

username_0: Thanks Chen, migrating worked like a charm. It is running on my machine now. :) |

Zrips/CMI | 426963307 | Title: itemname and itemlore can't find the specified player

Question:

username_0: **Description of issue or feature request:**

I want to use these commands from the console:

/cmi itemlore [playerName] [linenumber] [new lore line]

/cmi itemname [playerName] [new name]

I receive a "player not found" message, even though the player is online and the nickname is correct.

---

**Cmi Version (using`/cmi version`):**

192.168.3.11

**Server Type (Spigot/Paperspigot/etc):**

Paper

**Server Version (using `/ver`):**

Paper(571) 1.13.2

Answers:

username_1: [14:04:32 INFO]: Can't find player with this name!

```

This resulted in the "player not found" message being shown twice.

https://i.imgur.com/dXVy980.png

Status: Issue closed

username_2: The format was changed to `-p:[playerName]` for specifying the player, to simplify player name detection. |

openstates/openstates.org | 302391978 | Title: Bill search does not "remember" the search term used in main search

Question:

username_0: Example flow:

1. On the homepage, search for a term in the header's search box

2. On the search results page, click the `View all bill results` button

3. The bill-search page comes up, but it does not remember the search term (i.e., it displays all bills for the country, unfiltered, and the `Search text` field in the left side panel does not contain the original search term)

Answers:

username_1: dupe of #56

Status: Issue closed

|