repo_name

stringlengths 4

136

| issue_id

stringlengths 5

10

| text

stringlengths 37

4.84M

|

|---|---|---|

pytorch/pytorch | 544843137 | Title: A strange issue about detection of tensor in-place modification

Question:

username_0: I was implementing CTC in pure python PyTorch for fun (and possible modifications). I do alpha computation by modifying a tensor inplace. If torch.logsumexp is used in logadd, everything works fine but slower (especially on CPU). If custom logadd lines are used, I receive:

```

File "ctc.py", line 76, in <module>

custom_ctc_grad, = torch.autograd.grad(custom_ctc.sum(), logits, retain_graph = True)

File "/miniconda/lib/python3.7/site-packages/torch/autograd/__init__.py", line 157, in grad

inputs, allow_unused)

RuntimeError: one of the variables needed for gradient computation has been modified by an inplace operation: [torch.cuda.FloatTensor [4, 10]], which is output 0 of SliceBackward, is at version 17; expected version 16 instead. Hint: enable anomaly detection to find the operation that failed to compute its gradient, with torch.autograd.set_detect_anomaly(True).

```

It is strange because neither logadd version does inplace, so it's not clear why version tracking is much different.

Another issues is absence of fast logsumexp (for two/three arguments) on CPU (related https://github.com/pytorch/pytorch/issues/27522). A custom version is considerably faster on CPU, not sure why. It would be nice if we have all reduction functions supporting lists of tensors to do without temporary torch.stack and not roll custom implementations.

```python

import torch

import torch.nn.functional as F

def logadd(x0, x1, x2):

# everything works if the next line is uncommented

# return torch.logsumexp(torch.stack([x0, x1, x2]), dim = 0)

# keeping the following 4 lines uncommented causes an exception

m = torch.max(torch.max(x0, x1), x2)

m = m.masked_fill(m == float('-inf'), 0)

res = (x0 - m).exp() + (x1 - m).exp() + (x2 - m).exp()

return res.log().add(m)

def ctc_alignment_targets(log_probs, targets, input_lengths, target_lengths, logits, blank = 0):

ctc_loss = F.ctc_loss(log_probs, targets, input_lengths, target_lengths, blank = blank, reduction = 'sum')

ctc_grad, = torch.autograd.grad(ctc_loss, (logits,), retain_graph = True)

temporal_mask = (torch.arange(len(log_probs), device = input_lengths.device, dtype = input_lengths.dtype).unsqueeze(1) < input_lengths.unsqueeze(0)).unsqueeze(-1)

return (log_probs.exp() * temporal_mask - ctc_grad).detach()

def ctc_loss(log_probs, targets, input_lengths, target_lengths, blank : int = 0, reduction : str = 'none', alignment : bool = False):

# https://github.com/pytorch/pytorch/blob/master/aten/src/ATen/native/LossCTC.cpp#L37

# https://github.com/skaae/Lasagne-CTC/blob/master/ctc_cost.py#L162

B = torch.arange(len(targets), device = input_lengths.device)

targets_ = torch.cat([targets, targets[:, :1]], dim = -1)

targets_ = torch.stack([torch.full_like(targets_, blank), targets_], dim = -1).flatten(start_dim = -2)

diff_labels = torch.cat([torch.as_tensor([[False, False]], device = targets.device).expand(len(B), -1), targets_[:, 2:] != targets_[:, :-2]], dim = 1)

zero, zero_padding = torch.tensor(float('-inf'), device = log_probs.device, dtype = log_probs.dtype), 2

log_probs_ = log_probs.gather(-1, targets_.expand(len(log_probs), -1, -1))

log_alpha = torch.full((len(log_probs), len(B), zero_padding + targets_.shape[-1]), zero, device = log_probs.device, dtype = log_probs.dtype)

log_alpha[0, :, zero_padding + 0] = log_probs[0, :, blank]

log_alpha[0, :, zero_padding + 1] = log_probs[0, B, targets_[:, 1]]

for t in range(1, len(log_probs)):

log_alpha[t, :, 2:] = log_probs_[t] + logadd(log_alpha[t - 1, :, 2:], log_alpha[t - 1, :, 1:-1], torch.where(diff_labels, log_alpha[t - 1, :, :-2], zero))

l1l2 = log_alpha[input_lengths - 1, B].gather(-1, torch.stack([zero_padding + target_lengths * 2 - 1, zero_padding + target_lengths * 2], dim = -1))

loss = -torch.logsumexp(l1l2, dim = -1)

if not alignment:

return loss

path = torch.zeros(len(log_alpha), len(B), device = log_alpha.device, dtype = torch.int64)

path[input_lengths - 1, B] = zero_padding + 2 * target_lengths - 1 + l1l2.max(dim = -1).indices

for t in range(len(path) - 1, 1, -1):

indices = path[t]

indices_ = torch.stack([(indices - 2) * diff_labels[B, (indices - zero_padding).clamp(min = 0)], (indices - 1).clamp(min = 0), indices], dim = -1)

path[t - 1] += (indices - 2 + log_alpha[t - 1, B].gather(-1, indices_).max(dim = -1).indices).clamp(min = 0)

return torch.zeros_like(log_alpha).scatter_(-1, path.unsqueeze(-1), 1.0)[..., 3::2]

[Truncated]

builtin_ctc = F.ctc_loss(log_probs, targets, input_lengths, target_lengths, blank = 0, reduction = 'none')

print('Built-in CTC loss seconds:', tictoc() - tic)

ce_ctc = (-ctc_alignment_targets(log_probs, targets, input_lengths, target_lengths, blank = 0, logits = logits) * log_probs)

tic = tictoc()

custom_ctc = ctc_loss(log_probs, targets, input_lengths, target_lengths, blank = 0, reduction = 'none')

print('Custom CTC loss seconds:', tictoc() - tic)

builtin_ctc_grad, = torch.autograd.grad(builtin_ctc.sum(), logits, retain_graph = True)

custom_ctc_grad, = torch.autograd.grad(custom_ctc.sum(), logits, retain_graph = True)

ce_ctc_grad, = torch.autograd.grad(ce_ctc.sum(), logits, retain_graph = True)

print('Device:', device)

print('Log-probs shape:', 'x'.join(map(str, log_probs.shape)))

print('Custom loss matches:', torch.allclose(builtin_ctc, custom_ctc, rtol = rtol))

print('Grad matches:', torch.allclose(builtin_ctc_grad, custom_ctc_grad, rtol = rtol))

print('CE grad matches:', torch.allclose(builtin_ctc_grad, ce_ctc_grad, rtol = rtol))

print(builtin_ctc_grad[:, 0, :], custom_ctc_grad[:, 0, :])

```

Answers:

username_1: Hi,

This happens because in this for-loop:

```python

```

username_0: In other words, logsumexp does allocate new memory (akin to clone()) inside?

Would you have an advise how to rewrite this code to avoid this problem within framework of computing a full `log_alpha` matrix (I have a workaround that does not preserve the full matrix explicitly, but I think the full matrix iterative computation case is also an important one) without cloning and keeping PyTorch version tracking happy? E.g. would prior `torch.unbind()` and later inplace modification work?

username_1: Given that you never actually use `log_alpha` as a full Tensor, I would keep the first dimension in a list. That way, no need to do inplace on Tensor, just change the entry in the list.

username_0: Yeah, the unbind idea was also to this end! I just wanted to make sure that the tensor is allocated as a chunk (if possible) and might even be reused. When dealing with big sequences, these quadratic matrices can be huge, so allocator quirks can bite. That's why I thought of allocating one big storage (within a tensor) and then unbinding. I guess an explicit storage offset computation tricks would allow to use unbind without relying on buggy version tracking of unbind?

username_1: If you do the same allocations repeatedly on CUDA, the allocation is basically for-free (using our caching allocator). So you should not worry too much about this.

username_0: Oh, you are right! :)

Btw the buggy unbind maybe should stay that way, the semantics of untying version tracking is sometimes important and maybe worth exposing to the user (I think I have filed a similar issue but in another context)

Status: Issue closed

username_1: The only issue is that it will silently compute wrong gradients if you modify both the base and an output :'(

We are looking into ways to extend the version check to handle these non-overlapping cases. But that adds a lot of complexity and is quite hard.

Let's continue the discussion in the other issue for version counter improvement.

username_0: Sure @username_1! Thanks for looking into this case!

username_0: @username_1 I remembered also why I wanted to do it inplace: I'm filling only the part of the tensor, the rest must be padded by some padded value. But it should be possible to achieve the same without a giant pre-filled tensor. |

metacurrency/holoSkel | 304433584 | Title: Holo Coherence Call starts March 12 2018 at 04:00PM

Question:

username_0: Title: Holo Coherence Call<br>

<br>

Description: Given the current fluid nature of our organization, and in lieu of our weekly sprint this meeting is intended for: - Celebration - Information sharing - Weekly Intention There will be no Daily Standup on Holo Coherence Call days.<br>

<br>

Location: ceptr.org/salon<br>

<br>

Starts: March 12, 2018 at 04:00PM<br>

<br>

Ends: March 12, 2018 at 05:00PM<br>

<br>

@username_1<issue_closed>

Status: Issue closed |

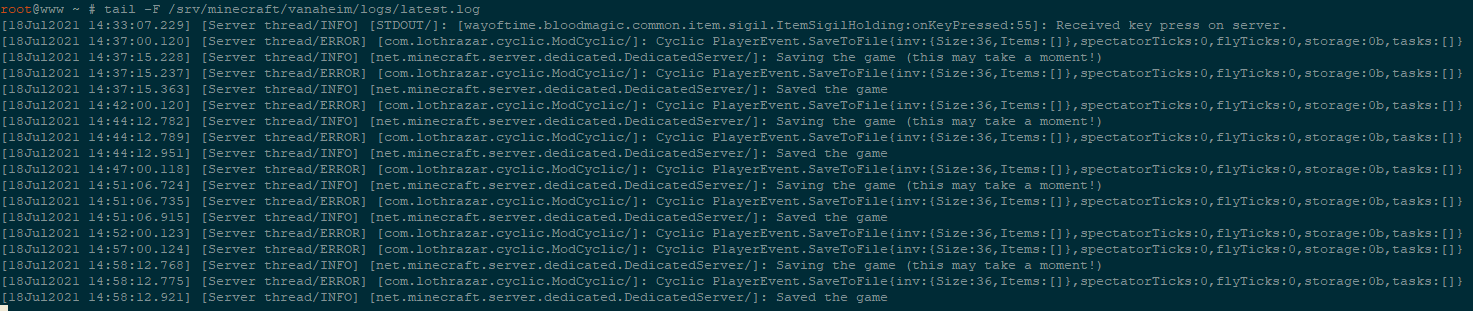

Lothrazar/Cyclic | 947053397 | Title: Server thread error on PlayerEvent.SaveToFile

Question:

username_0: Minecraft Version: 1.16.5

Forge Version: 36.1.32

Mod Version: 1.3.2

Single Player or Server: Server

Describe problem (what you were doing; what happened; what should have happened):

Constant log entries on every `save-all` command: `[Server thread/ERROR] [com.lothrazar.cyclic.ModCyclic/]: Cyclic PlayerEvent.SaveToFile{inv:{Size:36,Items:[]},spectatorTicks:0,flyTicks:0,storage:0b,tasks:[]}`

Log file link:

[latest.log](https://github.com/username_2/Cyclic/files/6836481/latest.log)

Video/images/gifs (direct upload or link):

Answers:

username_1: Having this error in ATM6 1.7.1 modpack (Cyclic-1.16.5-1.3.2)

The error did not occur in ATM6 1.6.14 (Cyclic-1.16.5-1.2.11))

username_0: The offending line of code. Stuff like this ought to be a debug level log entry, not an error.

https://github.com/username_2/Cyclic/blob/d7e9e73618c1edb75a61c288ed121dc66f18aa6d/src/main/java/com/lothrazar/cyclic/event/PlayerDataEvents.java#L45

Status: Issue closed

|

KhronosGroup/MoltenVK | 527735948 | Title: Request support VK_EXT_inline_uniform_block

Question:

username_0: From the [spec](https://www.khronos.org/registry/vulkan/specs/1.1-extensions/html/vkspec.html#VK_EXT_inline_uniform_block), looks like it would be very handy for small descriptors (personally looking at using a storage buffer for dynamic lighting, and would like to use this for the light count). Suspect this should be fairly straightforward to implement via `set[Vertex/Fragment/Compute]Bytes`, which I know is used elsewhere in MoltenVK for various quantities.

Answers:

username_0: To head anyone off who might start this, think I might have this implemented. Just a few gaps to fill and to test.

Status: Issue closed

|

pouchdb/pouchdb | 468404447 | Title: allDocs with startkey

Question:

username_0: ```

const db = new new PouchDB('test')

const doc = {_id: 'user_111', username: 'someone'}

await db.put(doc)

await db.allDocs({startkey: 'user1_'})

// this will get the `doc` above, which should not

```

tested in FireFox 70, chrome 75, same performance.

Answers:

username_1: Just wanna add a little bit more info,

you can play around with this here http://jsbin.com/lotaroqila/edit?html,js,output

The behavior is the same in all pouchdb versions back to 3.6.0 (could not test earlier right now)

Seems unexpected for sure!

username_0: @username_1 Why use such robot rules? |

jlippold/tweakCompatible | 339175826 | Title: `BatteryLife` working on iOS 11.3.1

Question:

username_0: ```

{

"packageId": "com.rbt.batterylife",

"action": "working",

"userInfo": {

"arch32": false,

"packageId": "com.rbt.batterylife",

"deviceId": "iPhone7,2",

"url": "http://cydia.saurik.com/package/com.rbt.batterylife/",

"iOSVersion": "11.3.1",

"packageVersionIndexed": true,

"packageName": "BatteryLife",

"category": "Utilities",

"repository": "BigBoss",

"name": "BatteryLife",

"packageIndexed": true,

"packageStatusExplaination": "This package version has been marked as Working based on feedback from users in the community. The current positive rating is 100% with 8 working reports.",

"id": "com.rbt.batterylife",

"commercial": false,

"packageInstalled": true,

"tweakCompatVersion": "0.0.7",

"shortDescription": "Displays useful information about battery health",

"latest": "1.7.0",

"author": "rbt",

"packageStatus": "Working"

},

"base64": "<KEY>

"chosenStatus": "working",

"notes": ""

}

``` |

ajkerr0/kappa | 178454509 | Title: Missing analytical Hessian calculation

Question:

username_0: Just an idea for a feature that _could_ be added. Very low priority. Since the analytical gradients are calculated, right now the Hessian is found via finite differences.

Analytical Hessian may give only slight enhancements to speed and accuracy.

Answers:

username_0: Refer to http://onlinelibrary.wiley.com/doi/10.1002/(SICI)1096-987X(19960715)17:9%3C1132::AID-JCC5%3E3.0.CO;2-T/abstract for the dihedral contribution

Status: Issue closed

|

Shopify/js-buy-sdk | 166646920 | Title: Fetching products by hanles

Question:

username_0: But, 'product-287' is not a valid product Id and I can only get the actual productId from shopify's dashboard. It could have been great If I could fetch 'product-287' instead.

Answers:

username_1: hey @username_0, your situation is not exactly clear.

however, if it is that you are trying to fetch a product using an identification other than the ID, you may want to consider maintaining a local mapping of this identification to the ID locally on your end.

You also have the option of fetching a product using it's `handle`. The handle is "a human-friendly unique string for the Product automatically generated from its title." e.g A product with title `6 Panel - Lumberjack` can be retrieved using `client.fetchQueryProducts({ handle: '6-panel-lumberjack' })`

username_2: Closing as http://shopify.github.io/js-buy-sdk/api/classes/ShopClient.html#method-fetchQueryProducts exists.

Status: Issue closed

|

nielstron/quantulum3 | 353082290 | Title: Automatically generate SI-prefixed versions of units

Question:

username_0: Generally the tool yet lacks the ability to automatically infer SI-prefixed (kilo, mega, etc) versions of all units.

As not all units ate prefixable (i.e. there are no kiloinches, there are megabytes but no millibytes), prefixes should not be able to be parsed anywhere.

all applicable SI-prefixes for a unit could be defined by a separate list inside units.json. Special or additional entities can either be assigned inside that list or by creating a separate unit.

The value "all" , "positive" (kilo and upwards) and "negative" (milli and downwards) or something similar should be available to reduce redundancy.<issue_closed>

Status: Issue closed |

FWAJL/FieldWorkManager | 96367436 | Title: Create a FT webform for the "Collect Data" button.

Question:

username_0: If there are no field forms, create a webform from the Field Data parameters.

The FT will fill in the form and the data shall be uploaded to the database.

If data has been collected, show the data on the form.

Refresh the page every time the FT clicks "OK" so they can verify their data was collected.

If possible, show fields that were retrieved from the database in red as a way of verifying that data was saved.

Maybe do not use the arrays for this?

Related to Issue #1098. See screenshot.

Answers:

username_1: I don't think so. We would query the data each time.

Please let me know your feedback.

username_2: @username_0

A few other suggestions:

- what about preventing the FT to go to the next location until the current one done?

- show the locations done with a marker like a "checked" green marker icon to clearly show the FT it is done.

username_0: We are already doing this. The PM sees a different color marker at `/activetask/map`. Currently, the condition of having a location being "complete" is tied to forms, so we will need to add having a value entered for each of the field parameters as part of that condition, but this is outside the scope of this Issue. Once I have this working like I want for the PM map, I think we could add it to the FT map.

username_0: I

username_1: So as long as locations not completed are clearly in red as not done, then a FT can move to another location if he can save the data at the particular moment for a given location? Maybe then, ask him, like you said, to write down on paper the values?

username_0: So as long as locations not completed are clearly in red as not done, then a FT can move to another location if he can save the data at the particular moment for a given location? Maybe then, ask him, like you said, to write down on paper the values?

a) the FT can move wherever they need to and the website won't be able to stop them. They may complete the form, partially complete the form, or open the form but enter no values. We have to accommodate all three instances.

b) No. The FT would only be notified if the data (which might be a partially completed form) was not successfully saved.

username_1: Ok, so the most important matter is that the data that the FT inputs and saves is really saved. If he does not complete the job, it is his problem, right?

username_0: If he does not complete the job, it is his problem, right?

That is a good question. The best answer I have is that this needs to be addressed with the Build Measure Learn model. I need to first start recording data in the field and learn from that experience to know what to do when real life conditions arise.

username_0: @username_1

I was thinking about this and I realize that what I want is a very common behavior for web sites, so don't overthink it. For example, suppose I am trying to buy something on the web and I am filling out the "new customer" form. Often times, the login name I pick is already chosen, so the page refreshes and it asks me for a now login name. However, all the other fields that I already filled out, like my address and phone number, are pre-poplutated. Otherwise, I have to fill in all the fields again (very annoying). Same idea here. The FT can partially complete the form at any time then come back and finish.

username_1: @username_0

Ok. Now I think we have all the requirements with your last comment. So, what needs to be done is:

1. FT enters the data in the input(s) of each parameter.

2. FT clicks "OK" or "Save" which initiate a request to the server.

3. On the server, the data is saved to the DB.

4. We handle the response after saving the data to the DB. a. If the data is saved, we can highlight the data saved in red, grey or any way you want using CSS. If the data is not saved, we notify the FT.

5. Every time a FT opens the form to collect data for a or several parameter(s), we load the data saved if any.

That concludes the specification discussion.

Can you validate it (repost the points above modified if necessary) and assign it, please?

Thank you.

username_3: @username_1 @username_0

I've understood the requirement, thank you for explaining it point wise and elaborately. I just have one point to confirm. So the web form in question would have rows with the field analyte name and the text box for entering the parameter. Something like the following?

FA1 [ text box for parameter ]

FA2 [ text box for parameter ]

.....................

.....................

[ Save button ] [ Exit button ]

Please confirm and I'm ready to start with this issue.

Thank you.

username_0: @username_3

Yes.

username_3: @username_0 @username_1

I've coded the new Web form. The old code for showing the PDF is present but commented out. I've coded it exactly like the requirement was listed. So that may serve as steps to test. Code is pushed to `feature_devsupport`.

Please review and let me know. Thank you.

username_0: @username_3

Perfect!

Status: Issue closed

|

MDE4CPP/MDE4CPP | 283498230 | Title: evaluate upper / lower bound

Question:

username_0: add .toLowerFirst() for namespace definition.

Problem: uml-Reflection model(s ecore.uml / uml.uml use) UpperFirst Namespace to differ ecor.ecore/uml.ecore

Answers:

username_0: add .toLowerFirst() for namespace definition.

Problem: uml-Reflection model(s ecore.uml / uml.uml use) UpperFirst Namespace to differ ecor.ecore/uml.ecore

username_1: 1. What has an lower and upper bound (of Property multiplicites) to do with upper / lower case of namespaces?

2. Why is a new solution necessary? Is the already existing solution not acceptable?

username_0: uodates handling of multiplicity

Status: Issue closed

|

vdvm/nginx-watermark | 179476196 | Title: how to use it?

Question:

username_0: I build 1.9.15 with this module

yes with --with-http_image_filter_module

sadly I can't make it run

invalid parameter "watermark" in

my config file has a line like this

image_filter watermark "/var/www/logo2.png" "bottom-right";

also I've tried without quotation marks

Answers:

username_1: This is patch for existing http_image_filter_module

```

cd ..../nginx-$NGINX_VERSION && patch -p0 < ...../nginx-watermark/nginx-watermark.patch

```

(last tested with version 1.10.2)

username_0: Works as you stated. Another question is how you would do a variable?

image_filter watermark /etc/nginx/html/$watermarkUrl center; doesn't work

any combination with variable has problems :(

username_1: Try branch "vars" https://github.com/username_2/nginx-watermark/tree/vars

username_0: Works like a charm. Thank you!

Status: Issue closed

|

Azure/azure-storage-azcopy | 469444798 | Title: Azcopy10 - Is it possilbe to copy files within a ADLS gen2 storage account from one folder to another folder

Question:

username_0: ### Which version of the AzCopy was used?

Azcopy 10.2.1

##### Note: The version is visible when running AzCopy without any argument

### Which platform are you using? (ex: Windows, Mac, Linux)

Windows

### What command did you run?

Azcopy cp Storage_account_A Storage_account_A --recursive true

##### Note: Please remove the SAS to avoid exposing your credentials. If you cannot remember the exact command, please retrieve it from the beginning of the log file.

### What problem was encountered?

failed to parse user input due to error: the inferred source/destination combination is currently not supported. Please post an issue on Github if support for this scenario is desired

### How can we reproduce the problem in the simplest way?

### Have you found a mitigation/solution?

Answers:

username_1: AzCopy can't figure out the type of the source or destination, based on the URLs that you've used for the source and destination. There's a known bug where that happens with HTTP. You can add this to the command line to tell it exactly what you mean:

--from-to BlobBlob

Also try putting double quotes around the two URLs, to make sure they parse correctly.

BTW, you said storage_account_a for both source and dest. Is that right?

username_0: Hello, I am trying to copy files with Azure Data Lake Storage Gen2

Here is how the command would looks like from PS command promopt

PS C:\Program Files (x86)\AzCopy>>> .\azcopy cp "" "https://mkdssdedldev212.dfs.core.windows.net/msdsd-dssds-fssdsdys-edfffl-dev-001/refidfdfned/Asdsds/dbo/DisdsdsdResdsdsursdsde_Adff.txt “https://mkdssdedldev212.dfs.core.windows.net/msdsd-dssds-fssdsdys-edfffl-dev-001/refidfdfned/Asdsds/dbo/DisdsdsdResdsdsursdsde_Adff.txt/.replacedfiles/"

I am getting the following error…. Please help ASAP!

failed to parse user input due to error: the inferred source/destination combination is currently not supported. Please post an issue on Github if support for this scenario is desired

Thanks,

<NAME>

username_0: Just updating the command to make it more clear

PS C:\Program Files (x86)\AzCopy>>> .\azcopy cp "https://mkdssdedldev212.dfs.core.windows.net/msdsd-dssds-fssdsdys-edfffl-dev-001/refidfdfned/Asdsds/dbo/DisdsdsdResdsdsursdsde_Adff.txt" “https://mkdssdedldev212.dfs.core.windows.net/msdsd-dssds-fssdsdys-edfffl-dev-001/refidfdfned/Asdsds/dbo/DisdsdsdResdsdsursdsde_Adff.txt/.replacedfiles/"

I am getting the following error…. Please help ASAP!

failed to parse user input due to error: the inferred source/destination combination is currently not supported. Please post an issue on Github if support for this scenario is desired

Thanks,

<NAME>

Markel - IT

Data Services

4501 Highwoods Parkway, Glen Allen, VA 23060

Direct: (804) 525-7041

www.markelcorp.com<http://www.markelcorp.com/>

From: <NAME>

Sent: Thursday, July 18, 2019 8:58 AM

To: 'Azure/azure-storage-azcopy' <<EMAIL>>; Azure/azure-storage-azcopy <<EMAIL>>

Cc: Author <<EMAIL>>

Subject: RE: [Azure/azure-storage-azcopy] Azcopy10 - Is it possilbe to copy files within a ADLS gen2 storage account from one folder to another folder (#507)

Hello, I am trying to copy files with Azure Data Lake Storage Gen2

Here is how the command would looks like from PS command promopt

PS C:\Program Files (x86)\AzCopy>>> .\azcopy cp "" "https://mkdssdedldev212.dfs.core.windows.net/msdsd-dssds-fssdsdys-edfffl-dev-001/refidfdfned/Asdsds/dbo/DisdsdsdResdsdsursdsde_Adff.txt “https://mkdssdedldev212.dfs.core.windows.net/msdsd-dssds-fssdsdys-edfffl-dev-001/refidfdfned/Asdsds/dbo/DisdsdsdResdsdsursdsde_Adff.txt/.replacedfiles/<https://mkdssdedldev212.dfs.core.windows.net/msdsd-dssds-fssdsdys-edfffl-dev-001/refidfdfned/Asdsds/dbo/DisdsdsdResdsdsursdsde_Adff.txt%20“https:/mkdssdedldev212.dfs.core.windows.net/msdsd-dssds-fssdsdys-edfffl-dev-001/refidfdfned/Asdsds/dbo/DisdsdsdResdsdsursdsde_Adff.txt/.replacedfiles/>"

I am getting the following error…. Please help ASAP!

failed to parse user input due to error: the inferred source/destination combination is currently not supported. Please post an issue on Github if support for this scenario is desired

Thanks,

<NAME>

Status: Issue closed

username_1: Ah, I see. Sorry, I did not realise you were copying between dfs endpoints. Unfortunately, that is not currently supported. For account to account copies, we only support blob endpoints (non-hierarchical).

The reason for the limited support is that interoperability, between blob and dfs, is coming in the Storaeg Service, so that you'll be able to use the existing blob to blob copy feature even on hierarchical accounts. That's why we have not written dfs-to-dfs copy (since soon it won't be needed). In fact, interop just went into preview in some regions a few days ago. Here is the link: https://docs.microsoft.com/en-us/azure/storage/blobs/data-lake-storage-multi-protocol-access

To copy account to account right now (without interop) you'll need to either download the data to disk then upload it to the target (only works for small-to-moderate data volumes, due to disk space requirements) or use something like Azure Data Factory (I think it as Gen2-to-Gen2 copy). Sorry about the limited support right now, and doubly sorry that I didn't previously see "gen2" right there in the subject line of this issue!!!! Yesterday I just followed the link in from the notification email, and didn't read the title!

username_2: Hello there !

2 days ago, interop general availability has been announced:

https://azure.microsoft.com/fr-fr/blog/multi-protocol-access-on-data-lake-storage-now-generally-available/

However I tried an azcopy between two gen2 storage account but it failed woith same error:

"the inferred source/destination combination is not currently supported."

Do you know when it will be fix ?

username_1: Did you use blob endpoint urls for source and destination?

username_2: Hey John ! Thanks for your answer. Indeed I was only using the DFS ones ! Thanks for pointing that !

With blob, it goes further:

```

~/azcopy_windows_amd64_10.3.2

$ export AZCOPY_SPA_CLIENT_SECRET=XXXXXX

$ ./azcopy.exe login --service-principal --application-id XXXXXXX --tenant-id= XXXXXXX

INFO: SPN Auth via secret succeeded.

$ ./azcopy.exe copy 'https://mystorageaccount.blob.dfs.core.windows.net/fs1/test ' 'https://otherstorageaccount.blob.dfs.core.windows.net/fs2/test' --recursive

INFO: Scanning...

INFO: Using OAuth token for authentication.

failed to perform copy command due to error: a SAS token (or S3 access key) is required as a part of the source in S2S transfers, unless the source is a public resource

```

Unfortunately, my company doesn't allow public resources for security reasons.

I will have to figure out how to generate a SAS Token for 2 storages Account. I know how to do it for one, it's easy on the portal. But for 2 storage Account, I need to find... Or maybe you know John ?

Best regards

username_1: OAuth is fine for the destination. You only need a sas for the source account. The easiest place to generate them is in Storage Explorer. Hopefully it will let you generate a blob style one for your adls gen 2 container. If that doesn't work, Powershell might. Remember you need a blob sas url, not an adls gen 2 sas url.

Let us know if your can't get it working

Btw a future version of AzCopy will remove the need for a sas on the source. (by using OAuth for both source and dest) Choose "watch" on our releases page to be notified when it comes out.

username_3: i am trying to Run Azcopy in ADF V2 custom activity command ,but it's not working .

**ADF Command : Azcopy.exe copy @pipeline().parameters.Source @pipeline().parameters.Dest**

May i know how to pass dynamic source and destination path though the ADF pipeline parameters or Varaibles in AZcopy?

username_0: Thank you, coping files from one folder to another folder with ADLS gen2 storage account.

I have couple of more questions:

1) Is it possible to move the files from one folder to another folder thru AzCopy? my requirement is not keep the files in source folder once they have been moved from source folder to destination.

username_1: We don't currently support "move" (i.e. we don't have support for deleting the source). That's an open feature request here: See https://github.com/Azure/azure-storage-azcopy/issues/45

username_2: Error: unknown flag: --log-level

I have added --log-level DEBUG at the end of my command, but I don't more than before, only:

"

INFO: Scanning...

INFO: Using OAuth token for authentication.

"

How Can I debug more the calls ?

I would to know exactly why it hangs, which calls it is making...

username_1: I think both problems are caused by the same thing. The URLs you're using, as you posted above, contain: "accountname.blob.dfs.core.windows.net". They should have blob OR dfs. Remove the dfs section of the URL, and I think both your test scenarios will work.

username_2: Ohh ! Thank you so much John :-) !

I just had a last struggle by understanding a 403 Error.

In fact, it was the IP Range. I had put 0.0.0.0-255.255.255.255 and it didn't worked, I had 403. I then left the field "IP Range" empty and generated the SAS Token and now it work like a charm !

I successfully copied 1 file from 1 Adlsgen2 storage Account to another Adlsgen2 storage Account ! Perfect !

That's awesome :-) Thanks much again John !

Note: To understand 403, there is several things to check (Blob Data Owner Role, Time of SAS Token, IP Range ...), so if there were explanation on the 403, it would be perfect.

username_0: Hello John, Do we still need to use Blob SAS key to move files from one folder to another folder within Gen2 Storage account? Or AzCopy latest version does not require that?

username_1: It will be version 10.4 that gives the feature you're asking for. You can track the pull request here: https://github.com/Azure/azure-storage-azcopy/pull/689 Note that 10.4 includes three big features, of which this is the smallest (and this SAS issue has been held up behind the other two features a little, but the plan is for all three to be in 10.4).

username_0: Thank you. Do you know when 10.4 will be released?

username_1: We don't pre-announce dates, sorry.

username_2: Thanks for the news, what are the 2 others bigs features for azcopy 10.4 please ?

username_1: Preserving properties (including ACLS) when transferring to and from Azure Files; and preserving folder properties (including ACLS) and existence (including empty folders) when transferring between folder-aware sources. (File systems and Azure Files are folder-aware. Blob storage is not because folders there are just virtual).

ACL preservation is highly-demanded, and folder preservation is necessary to make ACL preservation logically consistent.

username_0: Thank you. Do you know when 10.4 will be released?

From: <NAME>

Sent: Tuesday, January 21, 2020 4:23 PM

To: 'Azure/azure-storage-azcopy' <<EMAIL>>

Subject: RE: [Azure/azure-storage-azcopy] Azcopy10 - Is it possilbe to copy files within a ADLS gen2 storage account from one folder to another folder (#507)

Thank you. Do you know when 10.4 will be released?

username_1: We don't announce release dates in advance, sorry. There's still quite a lot of work to do on 10.4.

username_0: Hello John, I am using 10.4 version, but the flowing function is still not working… Copying files from between Gen2 storage accounts, it is still asking for SAS key. Can you please help?

failed to perform copy command due to error: a SAS token (or S3 access key) is required as a part of the source in S2S transfers, unless the source is a public resource

From: <NAME>

Sent: Thursday, January 23, 2020 11:44 AM

To: 'Azure/azure-storage-azcopy' <<EMAIL>>

Subject: RE: [Azure/azure-storage-azcopy] Azcopy10 - Is it possilbe to copy files within a ADLS gen2 storage account from one folder to another folder (#507)

Thank you. Do you know when 10.4 will be released?

From: <NAME>

Sent: Tuesday, January 21, 2020 4:23 PM

To: 'Azure/azure-storage-azcopy' <<EMAIL><mailto:<EMAIL>>>

Subject: RE: [Azure/azure-storage-azcopy] Azcopy10 - Is it possilbe to copy files within a ADLS gen2 storage account from one folder to another folder (#507)

Thank you. Do you know when 10.4 will be released?

username_4: Hi

I want to copy a bunch of ORC files (about 700 of them) from ADLS Gen 2 to Azure Blob.

Is it not possible? Is it too complex?

thanks.

username_1: @username_4 This looks like a new question. I'd suggest you post it as a new issue.

username_5: @username_1 - like other users in this thread we are trying to do s2s copy (from ADLS Gen2 to ADLS Gen2) and can only do so with a SAS token on the source. Allowing users to create SAS tokens forces us to give these users access permissions (far?) beyond "read", which is a concern for our security folks. What is the best practice to do what we need to do? What are the minimal additional access permissions so that users can get SAS tokens? How are your other customers working with what looks to us like a pretty serious limitation?

You also refer to a solution to the issue in version 10.4 - did that solution end up not making it into the release? Has the referenced PR (https://github.com/Azure/azure-storage-azcopy/pull/689) been abandoned?

username_1: @username_5 I'm on a different project now, and no longer involved in AzCopy. (I only saw this because GitHub notified me when you @-mentioned me.)

@username_6 or @amishra-dev should be able to answer your question.

username_5: @username_6 or @amishra-dev - would you be able to help with the questions above?

username_6: Hi @username_5, it seems that there are some confusions here. In general, your customers should not be in the business of creating SAS tokens, as only the account owner (the one with the storage account key) should do it. If you have users who need a SAS token, you should perhaps have your service hand them the tokens ready.

Please create a new issue if you have any further questions. Thanks!

username_5: @username_6: before I create a new issue, please help me with 2 questions:

(1) What is PR https://github.com/Azure/azure-storage-azcopy/pull/689 about? I thought it would support seamless copy based on OAuth authentication only. Am I mistaken?

(2) Are you suggesting that we build a service that provides users with SAS tokens whenever they need one?

username_6: 1) Yes, the PR needs to be reworked but basically the user can authentication with OAuth, and we'd generate an identity SAS for them. They have to have the right permissions on the account.

2) I'm not aware of your application so I cannot say. I made the comment because typically your users shouldn't be the ones generating SAS using account keys. In some applications, the service hands a token to an end user (ex: a mobile app user) who can use it upload/download blobs. You can also use [RBAC with Storage](https://docs.microsoft.com/en-us/azure/storage/common/storage-auth-aad-rbac-portal). |

matrix-org/synapse | 358016305 | Title: VOIP crashes under Python 3

Question:

username_0: ```

2018-09-07 12:33:00,847 - synapse.http.server - 101 - ERROR - b'GET'-83- Failed handle request via <function JsonResource._async_render at 0x7f3dc8904158>: <XForwardedForRequest at 0x7f3dc633c198 method=b'GET' uri=b'/_matrix/client/r0/voi

p/turnServer?' clientproto=b'HTTP/1.1' site=8008>: Traceback (most recent call last):

File "/home/krombel/synapse/venv35/lib/python3.5/site-packages/Twisted-18.7.0-py3.5-linux-x86_64.egg/twisted/internet/defer.py", line 1418, in _inlineCallbacks

result = g.send(result)

File "/home/krombel/synapse/synapse/http/server.py", line 295, in _async_render

callback_return = yield callback(request, **kwargs)

File "/home/krombel/synapse/venv35/lib/python3.5/site-packages/Twisted-18.7.0-py3.5-linux-x86_64.egg/twisted/internet/defer.py", line 1613, in unwindGenerator

return _cancellableInlineCallbacks(gen)

File "/home/krombel/synapse/venv35/lib/python3.5/site-packages/Twisted-18.7.0-py3.5-linux-x86_64.egg/twisted/internet/defer.py", line 1529, in _cancellableInlineCallbacks

_inlineCallbacks(None, g, status)

--- <exception caught here> ---

File "/home/krombel/synapse/synapse/http/server.py", line 81, in wrapped_request_handler

yield h(self, request)

File "/home/krombel/synapse/synapse/http/server.py", line 295, in _async_render

callback_return = yield callback(request, **kwargs)

File "/home/krombel/synapse/venv35/lib/python3.5/site-packages/Twisted-18.7.0-py3.5-linux-x86_64.egg/twisted/internet/defer.py", line 1418, in _inlineCallbacks result = g.send(result)

File "/home/krombel/synapse/synapse/rest/client/v1/voip.py", line 45, in on_GET

mac = hmac.new(turnSecret, msg=username, digestmod=hashlib.sha1)

File "/home/krombel/synapse/venv35/lib/python3.5/hmac.py", line 144, in new return HMAC(key, msg, digestmod)

File "/home/krombel/synapse/venv35/lib/python3.5/hmac.py", line 42, in __init__

raise TypeError("key: expected bytes or bytearray, but got %r" % type(key).__name__)

builtins.TypeError: key: expected bytes or bytearray, but got 'str'

```

Status: Issue closed

Answers:

username_0: Fixed. Thanks @krombel |

GoogleCloudPlatform/container-engine-accelerators | 411068991 | Title: Pod Unschedulable

Question:

username_0: I am getting two errors after deploying my object detection model for prediction using GPUs:

1.PodUnschedulable Cannot schedule pods: Insufficient nvidia

2.PodUnschedulable Cannot schedule pods: com/gpu.

I have followed this post for deploying of my prediction model:

https://github.com/kubeflow/examples/blob/master/object_detection/tf_serving_gpu.md

and [this](https://cloud.google.com/kubernetes-engine/docs/how-to/gpus#installing_drivers) one for installing nvidia drives to my nodes.

I haven't used nvidia-docker.

This is the output of the `kubectl describe pods` command:

```

ame: xyz-v1-5c5b57cf9c-ltw9m

Namespace: default

Node: <none>

Labels: app=xyz

pod-template-hash=1716137957

version=v1

Annotations: <none>

Status: Pending

IP:

Controlled By: ReplicaSet/xyz-v1-5c5b57cf9c

Containers:

xyz:

Image: tensorflow/serving:1.11.1-gpu

Port: 9000/TCP

Host Port: 0/TCP

Command:

/usr/bin/tensorflow_model_server

Args:

--port=9000

--model_name=xyz

--model_base_path=gs://xyz_kuber_app-xyz-identification/export/

Limits:

cpu: 4

memory: 4Gi

nvidia.com/gpu: 1

Requests:

cpu: 1

memory: 1Gi

nvidia.com/gpu: 1

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-b6dpn (ro)

xyz-http-proxy:

Image: gcr.io/kubeflow-images-public/tf-model-server-http-proxy:v20180606-9dfda4f2

Port: 8000/TCP

Host Port: 0/TCP

Command:

python

/usr/src/app/server.py

--port=8000

--rpc_port=9000

--rpc_timeout=10.0

Limits:

cpu: 1

memory: 1Gi

Requests:

cpu: 500m

memory: 500Mi

Environment: <none>

[Truncated]

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal SuccessfulMountVolume 9m51s kubelet, gke-kuberflow-xyz-gpu-pool-a25f1a36-pvf7 MountVolume.SetUp succeeded for volume "boot"

Normal SuccessfulMountVolume 9m51s kubelet, gke-kuberflow-xyz-gpu-pool-a25f1a36-pvf7 MountVolume.SetUp succeeded for volume "root-mount"

Normal SuccessfulMountVolume 9m51s kubelet, gke-kuberflow-xyz-gpu-pool-a25f1a36-pvf7 MountVolume.SetUp succeeded for volume "dev"

Normal SuccessfulMountVolume 9m51s kubelet, gke-kuberflow-xyz-gpu-pool-a25f1a36-pvf7 MountVolume.SetUp succeeded for volume "default-token-n5t8z"

Normal Pulled 7m1s (x4 over 9m50s) kubelet, gke-kuberflow-xyz-gpu-pool-a25f1a36-pvf7 Container image "gke-nvidia-installer:fixed" already present on machine

Normal Created 7m1s (x4 over 9m50s) kubelet, gke-kuberflow-xyz-gpu-pool-a25f1a36-pvf7 Created container

Normal Started 7m1s (x4 over 9m50s) kubelet, gke-kuberflow-xyz-gpu-pool-a25f1a36-pvf7 Started container

Warning BackOff 4m44s (x12 over 8m30s) kubelet, gke-kuberflow-xyz-gpu-pool-a25f1a36-pvf7 Back-off restarting failed container

```

Error log from the pod `kubectl logs nvidia-driver-installer-p8qqj -n=kube-system` :

`Error from server (BadRequest): container "pause" in pod "nvidia-driver-installer-zxn2m" is waiting to start: PodInitializing`

How can I fix this?

Answers:

username_0: It got fixed after I deleted all of the nvidia pods and deleted the node and recreated it and installed the nvidia drivers and plugin again.

Status: Issue closed

|

TechReborn/TechReborn | 545338947 | Title: Easier way to get Chrome

Question:

username_0: Industrial machine frames and casings call for a LOT of chrome. More than what would be collectible without serious grinding.

Example for blast furnace with industrial machine casings:

- 34 industrial machine casing

- 34*6 chrome plates + 34 * 4 chrome plates (industrial machine frame) => 340 Chrome plates

- 9 ruby dust for 1 Chrome dust in Electrolyzer => 3060 Ruby dust

- 2,5 Rubies per Ruby Ore in Industrial Grinder => 7650 Ruby ore

That's... a lot of ruby ore.

Though there are other means of getting ruby dust (for example 3 for 32 redstone dust, Uvarovite dust, or UU-Matter) it still would take days of playing just for a few casings.<issue_closed>

Status: Issue closed |

JanDitzen/xtdcce2 | 531307996 | Title: ARDL with pooled coefficients and id vector with missing

Question:

username_0: Problem xtdcce_m_lrcalc assigns varnames for variables as varname_1...rows(beta vector); need to add id variable argument to lr program.

Answers:

username_0: Fixed error. program now loops through all id names to create output name lists

Status: Issue closed

|

airn/Rentr | 64430153 | Title: Tests: assertNotEqual for get_invalid_rentable?

Question:

username_0: WTF IS THIS BULLSHIT

Answers:

username_1: I don't know why that is there.

username_1: So i changed the assertion but it fails now. I don't understand why, the store invalid test case is is passing and is the exact same code... The only thing I could see is since the rentables aren't getting deleted after every test there is one for the pk we are passing it since it is returning a 200. I did try and make the pk 900000000000 and i still got a 200.

Status: Issue closed

|

summernote/summernote | 160328384 | Title: onPaste -- pulling out images

Question:

username_0: I have an onImageUpload event that works perfectly for images dropped in (it uploads to a server and changes the url as it should). How do I replicate this for pasted images within data from word or other outside programs (onenote, etc) from the clipboard?

#### steps to reproduce

1. Add image and some text to word document

2. Copy images and text

3. Paste in Summernote

#### browser version and os version

What is your browser and OS? Chrome/Firefox, W10

Answers:

username_1: This is something I'd like to implement into summernote-cleaner. It's something I've been playing with, with the plugin. The ability to convert as much of the formatting to HTML (including getting the images). I think this would be a good option to help client's with editing, and save them time, instead of just stripping all the formatting.

username_2: This is one of the feature that I am looking for as well. Any ideas on what is possible and what can be done?

Ideally when you copy paste text and image, the text formatting should be retained and image could be inlined or uploaded, inserting the link for uploaded image.

Thanks

username_3: I have the text and image working separately but I am also working on combining with JS getdata. I am also looking forward to this feature in summernote as it would be time saving not to have to copy all text then copy images separately to paste.

username_1: @username_3 please keep us updated on this, as this is something I've also been trying to work on for client's, and so far without success. If you wish to integrate it into summernote-cleaner, that would be awesome too.

So far I've been able to stop the img tags from being removed, but the image data is gone, so that's a small step I suppose. If I copy from a website text that has referenced images (referenced from a file on a server), that works as expected, but getting inlined or embedded images to remain in the data is another matter.

Anywho, work progresses.

username_3: So far I have been able to use the text/html to retain the html formatting. Since OneNote or possibly word stores the copied and pasted image into a local appdata temp file, need to work on extracting the image and utilizing summernote's pasteHTML or insertImage to workaround Chrome's Not allowed to load local resource message

Warm regards.

username_4: I've added this to my todo list as you can see above. I'll close this now, as it's been open for a while now.

Status: Issue closed

username_5: any update to this, it is really getting messi as the users are pasting images that goes to the Dbs, so far I limited the amount of characters that can be added, but I would like to just filter out images from clipboard on paste, or better to be able to replace images on clipboard with corresponding links. TY

username_6: Hi,

Is this abandoned? There is some progress?

username_4: Not abandoned as such, just been busy with other things.

username_7: @username_4 you have the steps or logic to do the Pulling out of images on paste?

If have, can you share to be implemented by someone?

I am looking for this segregation too but not found a way to identify when images go to OnImageUpload to ignore OnPaste action.

Thank you :-)

username_4: Not yet, sorry, been very busy with other things to be able to sit down and look at this.

username_8: Hello! I'm not understanding what you are saying

username_8: Please the message above was only trying .I want also to integrate summernote in my web site using that method |

facebookresearch/ParlAI | 228986483 | Title: Add Dockerfile

Question:

username_0: Add Dockerfile and a docker image which might make trying out ParlAI easier.

Answers:

username_1: @username_0 Sure, we are working on that.

CC/ @username_2

username_2: Low priority for the moment, but will add when we get the chance.

Status: Issue closed

username_2: closing for now without dockerfile support |

jendiamond/vue2 | 312757972 | Title: Showing the Player Controls Conditionally

Question:

username_0: Show the sections based on whether the game has started or not.

```html

<section class='row controls' v-if ='!gameOn'>

<div class='small-12 columns'>

<button id='start-game'>START NEW GAME</button>

</div>

</section>

<section class='row controls' v-else ='gameOn'>

<div class='small-12 columns'>

<button id='attack'>ATTACK</button>

<button id='special-attack'>SPECIAL ATTACK</button>

<button id='heal'>HEAL</button>

<button id='give-up'>GIVE UP</button>

</div>

</section>

```

```js

new Vue({

el: '#monsterslayer',

data: {

gameOn: false,

playerHealth: 100,

monsterHealth: 100,

},

});

```

Status: Issue closed

Answers:

username_0: Show the sections based on whether the game has started or not.

```html

<section class='row controls' v-if ='!gameOn'>

<div class='small-12 columns'>

<button id='start-game'>START NEW GAME</button>

</div>

</section>

<section class='row controls' v-else ='gameOn'>

<div class='small-12 columns'>

<button id='attack'>ATTACK</button>

<button id='special-attack'>SPECIAL ATTACK</button>

<button id='heal'>HEAL</button>

<button id='give-up'>GIVE UP</button>

</div>

</section>

```

```js

new Vue({

el: '#monsterslayer',

data: {

gameOn: false,

playerHealth: 100,

monsterHealth: 100,

},

});

```

Status: Issue closed

|

haskell-nix/hnix | 1039726911 | Title: `Prelude` module clashes with `base`

Question:

username_0: v0.15.0 exposes a new `Prelude` module that makes it difficult to use `hnix`, e.g.:

```

src/Dhall/Nix.hs:87:8: error:

Ambiguous module name ‘Prelude’:

it was found in multiple packages: base-4.14.3.0 hnix-0.15.0

|

87 | module Dhall.Nix (

| ^^^^^^^^^

```

Could this `Prelude` be made internal or alternatively be exposed under a different name, say, `Nix.Prelude`?

Answers:

username_1: Woops.

Oh, yes.

Was thinking about it while making it.

Would fix it in nearest time.

Probably making it internal would just work.

Actually I was more surprised/impressed that what I had in mind - I made it work. The setup combines the mixing loaded `relude`s `Prelude` & project local `Utils` into 1 `Prelude` module.

Yep. Serious structural thing.

username_1: Ye....

Cabal works only this way & does not allow to not export the `Prelude` :man_shrugging: ...

Probably would need to go `NoImplicitPrelude` route & import it as `Nix.Prelude` everywhere ...

Today is late, would do it tomorrow.

I made a minor release beforehand, so if some stuff like this happens - there is a backup option.

username_0: Sounds like a `Cabal` bug to me. Might be worth reporting.

In any case this isn't an urgent issue for me. `dhall-nix*` can simply continue using v0.14 for now.

username_1: But as an active HNix maintainer - it is one for me. It is a disservice to make Cabal promote a broken release.

The proper thing in these cases - is to demote that version right away.

I going to make a new release & then open a Cabal report ... ("So many houses, so little time")

username_1: Deprecated the `0.15.0`.

username_2: The `0.15.0` version is still being chosen by `cabal` for build plans, so this is forcing all packages using `hnix` to add an upper-bound constraint of `< 0.13`. Is there a timeframe for a fix (and what is the fix.. rolling back to `Prelude`?)?

username_0: Can you give an example?

It might be helpful to add an impossible constraint like `base < 0` to `v0.15.0`.

username_1: Well. Nix 2.3.15 released with a breakage without documentation & then Nix 2.4 was released with regressions in "stable" features & without increasing the `languageVersion` shipped undocumented changes in the handling of the language. That is not just problem for me, but central Nixpkgs maintainers started & gather signings in the declaration: https://discourse.nixos.org/t/nix-2-4-and-what-s-next/16257 to stop these sudden undocumented top-down breakages

& HNix has & required to follow Nix bug2bug if that is needed & so uses Nix for golden tests. From the tests enabled there is currently breakage in 1 escaping mechanism moment that Nix changed. Because it is not documented, I hunted through the Nix git logs & found no explicit place where escaping was changed, & read through a bunch of C++ at the best of my possibilities, tried to bisect the issue, but you get the picture. The is a need for someone who knows C++ better than me.

I tried to adjust the HNix behavior in https://github.com/haskell-nix/hnix/pull/1009 but failed. & then I decided that if nobody really cares for this - I better do some work in HLS then, which I do currently.

Seems like HNix does the representation of this by running the `iterNValue` directly. I'd loved to see the C++ code - to know in what part of the process the escaping changes.

username_1: & I'd personally put those tests as broken for a time & worked on other things. Because there is a number of new tests to take from Nix that do work & other ones are easier to do for me.

username_1: Set a suggested impossible bound on the `0.15`.

username_2: The main project is not public, but here's a simple reproduction. First, the basic setup of a method to test this:

```

$ mkdir hnix-test

$ cd hnix-test

$ cabal --version

cabal-install version 3.6.0.0

compiled using version 3.6.1.0 of the Cabal library

$ ghc --version

The Glorious Glasgow Haskell Compilation System, version 8.10.7

$ cabal init

...

$ cabal run

...

Hello, Haskell!

```

Then I add hnix as a dependency. With no constraints, cabal selects 0.14.0.5, but alas, this does not build:

```

$ sed -i -e '/build-depends:/s/build-depends: .*/build-depends: base, hnix/' hnix-test.cabal

$ cabal run

Build profile: -w ghc-8.10.7 -O1

...

- hnix-0.14.0.5 (lib) (requires download & build)

- megaparsec-9.1.0 (lib)

...

Starting hnix-0.14.0.5 (lib)

Building hnix-0.14.0.5 (lib)

Failed to build hnix-0.14.0.5

...

[32 of 50] Compiling Paths_hnix ( dist/build/autogen/Paths_hnix.hs, dist/build/Paths_hnix.o, dist/build/Paths_hnix.dyn_o )

dist/build/autogen/Paths_hnix.hs:66:22: error:

* 'last' works with 'NonEmpty', not ordinary lists.

...

66| | isPathSeparator (last dir) = dir ++ fname

```

It works fine for hnix 0.12.0.1:

```

$ cabal run --constraint 'hnix < 0.13'

...

- hnix-0.12.0.1 (lib) (requires download & build)

- megaparsec-9.0.1 (lib)

...

Hello, Haskell!

```

Trying 0.13.1 fails:

```

$ cabal run --constraint 'hnix < 0.14'

...

- hnix-0.13.1 (lib) (requires download & build)

- megaparsec-9.0.1 (lib)

...

[same error as 0.14: 'last' works with 'NonEmpty', not ordinary lists. at line 66 of Paths_hnix.hs]

```

[Truncated]

```

$ sed -i -e '/build-depends:/s/build-depends: .*/build-depends: base, hnix, megaparsec, servant/' hnix-test.cabal

$ cabal run --constraint 'hnix >= 0.11'

...

- servant-0.18.3 (lib) (requires build)

- hnix-0.15.0 (lib) (requires build)

...

Building library for hnix-0.15.0..

[ 1 of 52] Compiling Nix.Utils ( src/Nix/Utils.hs, dist/build/Nix/Utils.o, dist/build/Nix/Utils.o, dist/build/Nix/Utils.dyn_o )

[ 2 of 52] Compiling Prelude ( src/Prelude.hs, dist/build/Prelude.o, dist/build/Prelude.dyn_o )

[ 3 of 52] Compiling Paths_hnix ( dist/build/autogen/Paths_hnix.hs, dist/build/Paths_hnix.o, dist/build/Paths_hnix.dyn_o )

dist/build/autogen/Paths_hnix.hs:66:22: error:

* 'last' works with 'NonEmpty', not ordinary lists

...

```

Based on the above, it appears that:

* all of the releases after 0.12.0.1 are failing (due the switch to `relude` ?) and

* there are some package combinations that will select hnix 0.15.0 without explicit upper-bounds preventing this

username_1: `0.15` supported `megaparsec` `0.9.2`.

That may be the thing that makes it choose it.

What the hell is `Path_hnix` error, if that module is autogenerated.

I would look into it when I have time & would try to make a release soon, but it is probably no earlier then I'm a week.

But since I currenly do not know that is the cause of the syndrome - do not know if new release would fix the autogeneration of the module.

username_0: @username_2 If you `cabal update`, do you still get build plans with `hnix-0.15`? You shouldn't.

Regarding the weird build failures in `Paths_hnix.hs`, I think you're running into a `cabal` bug. See https://github.com/haskell/cabal/blob/master/release-notes/cabal-install-3.6.2.0.md#significant-changes.

If you update your `cabal` installation, it should be gone.

username_2: Running a new `cabal update` seems to resolve the selection of 0.15:

```

$ cabal update 'hackage.haskell.org,2021-12-03T15:52:06Z'

$ cabal run --constraint 'hnix >= 0.11'

...

- hnix-0.15.0

...

$ cabal update 'hackage.haskell.org,2021-12-04T14:47:15Z'

$ cabal run --constraint 'hnix >= 0.11'

...

- hnix-0.14.0.5

...

```

Also, upgrading cabal from 3.6.0.0 to 3.6.2.0 does fix the `Paths_hnix` compile problem and I can successfully build the 0.14.0.5 version.

Interestingly, without the lower bound constraint and when an unconstrained `megaparsec` is part of the build dependencies, cabal still chooses the very old `hnix-0.2.1` (and version 9.2.0 of `megaparsec`). Removing `megaparsec` changes the build plan back to 0.14.0.5. Shrug.

Thank you both, @username_0 and @username_1 for your help on this issue (and your work on hnix). I now have a better plan for using a much more recent version of hnix than 0.12.

username_1: @username_2 Thank you for contributing information & reporting the situation.

Thank you Simon for help here, he is closer to the center of the Haskell stack & knows the specifics. Do not know your current situation hope you would get a living out of it.

Marking these messages as resolved.

This topic overall is still open, as somebody (I) still needs to unbork the bork.

username_1: Currently resolved in `master`.

username_1: Released `0.16.0`, which uses `NoImplicitPrelude` & prelude is in `Nix.Prelude`. |

redisson/redisson | 1042029916 | Title: Redisson client should be created even without all connections being initialized

Question:

username_0: The problem we are facing now with Redisson is that we create the Redisson client at the application start-up and quite often we get an error like the following:

```

Unable to init enough connections amount! Only 49 of 50 were initialized.

```

or for a different use case:

```

Unable to init enough connections amount! Only 1 of 2 were initialized.

```

This leads to the client not being created.

We don't want to retry synchronously because it would slow down the application start-up which in turn would slow down the AutoScalingGroup outscaling.

We would like to get an instance of the Redisson client even if none of the connections are initialized. There should be some async retry mechanism for re-establishing the connections.

We currently use a Dummy client as a fallback (when the Redis is not critical)) or manage the client creation async retry by ourselves, but we need to replicate this retry mechanism for each Redisson client (we have different use cases with different Redis clusters).

Would be very useful to have such a functionality built-in. We used to have Aerospike before Redis and Aerospike had such a functionality (you were able to set [this](https://github.com/aerospike/aerospike-client-java/blob/master/client/src/com/aerospike/client/policy/ClientPolicy.java#L206) property to false) |

laravel/lumen-framework | 430188385 | Title: 127.0.0.1 is not reachable when init server with localhost

Question:

username_0: - Lumen Version: 5.8.4

- PHP Version: 7.1.23

### Description:

127.0.0.1:8000 doesn’t work when I init the server with `php -S localhost:8000 -t public`. Only localhost:8000 works.

If I init it with `php -S 127.0.0.1:8000 -t public`, both 127.0.0.1:8000 and localhost:8000 works.

I found this while playing with a nuxt app making calls with axios. Though I’m not sure if issue is from Lumen making the 127.0.0.1 inaccessible. Or axios converting localhost to 127.0.0.1 which make it impossible to access the API server.

Both Lumen and nuxt is freshly install

Answers:

username_1: Both work for me when I try them out. I believe this might be related to something on your machine specifically. Can you first please try one of the following support channels? If you can actually identify this as a bug, feel free to report back and I'll gladly help you out.

- [Laracasts Forums](https://laracasts.com/discuss)

- [Laravel.io Forums](https://laravel.io/forum)

- [StackOverflow](https://stackoverflow.com/questions/tagged/laravel)

- [Discord](https://discordapp.com/invite/KxwQuKb)

- [Larachat](https://larachat.co)

- [IRC](https://webchat.freenode.net/?nick=laravelnewbie&channels=%23laravel&prompt=1)

Thanks!

Status: Issue closed

|

voila-dashboards/voila | 552439674 | Title: voila dashboard on mybinder 500 error

Question:

username_0: My voila dashbaord example that runs on MyBinder stopped working: https://github.com/ismms-himc/codex_dashboard

It used to display the interactive widget correctly, but not it now shows a 500 internal server error. I'm not sure it it is an issue with MyBinder or Voila. I have myself pinned to voila 0.1.9 (https://github.com/ismms-himc/codex_dashboard/blob/master/requirements.txt) so I think it might be a mybinder problem.

The same Codex dashboard is still working on the voila gallery (https://github.com/voila-gallery/voila-gallery.github.io/blob/master/_data/gallery.yaml#L43, https://voila-gallery.org/)

Answers:

username_1: Could it be another package that got upgraded?

Maybe it is not related, but I tried executing your Notebook from the classic Jupyter Notebook on binder and it seems to hang at this cell:

```python

net.load_df(df['tile-neighbor'])

net.normalize(axis='row', norm_type='zscore')

net.clip(-5,5)

net.widget()

net.widget_instance.observe(on_value_change, names='value')

```

username_1: Sorry, actually it works. But it took more than 20 seconds to execute.

I wonder if that would be possible to start Voila from a terminal on binder, so you can see the actual error message in the terminal.

username_2: The one in the gallery uses this commit: https://github.com/ismms-himc/codex_dashboard/commit/b8c8fb820c918d4fc343cf6d8a25bd81cad837c9

While the one in the repo is built from `master`, so as of today this commit: https://github.com/ismms-himc/codex_dashboard/commit/aa7f8fdd5fb3cac5321a064f33097cf725bee5e1

So it's possible the two Binders were built at different times, and something changed in between.

Have you tried to reproduce the issue locally using [repo2docker](https://repo2docker.readthedocs.io/en/latest/)? mybinder.org is usually using the latest version of it: https://github.com/jupyterhub/mybinder.org-deploy/blob/c1440f66c4c05ca421b53c49a045771e963d4d4d/mybinder/values.yaml#L72

username_2: @username_0 have you tried changing the dashboard, for example by updating voila to 0.1.20?

This would trigger a new build on mybinder.org the next time the binder link is used, and might give some more information on whether something was wrong with the previous build.

username_0: Hi @username_2 I updated the version of voila (https://github.com/ismms-himc/codex_dashboard/blob/master/requirements.txt#L3) but now it is getting stuck on cell 9. I'll try to run it locally and let you all know what happens.

username_2: What does cell 9 do?

username_0: It defines a function see: https://github.com/ismms-himc/codex_dashboard/blob/master/index.ipynb

It runs on my computer, but I want to try the repo2docker suggestion you made above.

username_0: The notebook was getting stuck at cell 10, which does the hierarchical clustering and generate the widget.

I tried running the notebook (index.ipynb) using mybinder (https://mybinder.org/v2/gh/ismms-himc/codex_dashboard/master) and the notebook ran correctly. However clicking the voila button in the classic notebook did not render the Voila dashboard correctly (it got stuck at cell 9 again).

Suspecting the mybinder instance ran out of memory, I lowered the number of matrix columns that were being clustered from 5,000 to 3,000 and then the voila button worked correctly. I updated the current notebook to reduce the size of the matrix and now it is rendering correctly using the launch binder button.

So I suspect that the mybinder instance is running out memory.

Status: Issue closed

username_2: Thanks @username_0 for the update.

Memory on mybinder.org Instances is indeed [limited to 1GB](https://mybinder.readthedocs.io/en/latest/faq.html#how-much-memory-am-i-given-when-using-binder).

Ideally there should be some indication when the kernel is not available anymore (https://github.com/voila-dashboards/voila/issues/67). |

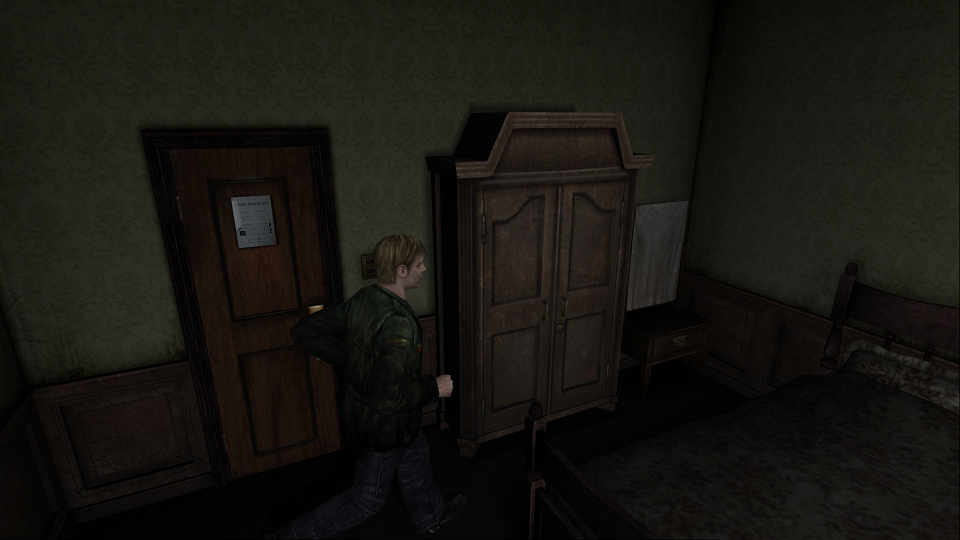

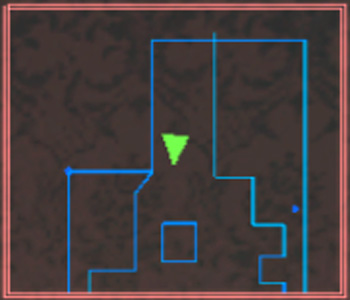

elishacloud/Silent-Hill-2-Enhancements | 421953718 | Title: Invisible wall in Room 312

Question:

username_0: I hope this isn't annoying but..

In the well-known room 312 there is an invisible wall in pass between a bed and a case. Pass rather wide that James could come into it. I do not know for what reason developers did not give such chance. It's a shame at such important point of a game a little. Whether it is possible to correct it? Yes, I do not understand modding of games, so I apologize if my question seems too naive.

Sorry for my bad English.

Answers:

username_1: Hi @username_0 ,

No worries, you're not annoying anyone. :)

As far as I know, I don't believe any one on the team knows how to make new collision boundaries for certain objects.

username_0: Thanks for fast reply:)

username_2: It's possible to find addresses that control collisions of a location by extensive memory scanning (and changing values on the found addresses) and using a hidden minimap as an indicator of change since it draws collisions.

username_2: It's possible to find addresses that control collisions of a location by extensive memory scanning (and changing values on the found addresses) and using a hidden minimap as an indicator of change since it draws collisions. Nekorun says he knows how to activate minimap on PC version.

username_2: Collision data is stored in .cld files. Room 312 data is in `rr91.cld` file.

From what I understand collision data file contains infromation on point pairs that get connected by a line automatically.

This would be a portion (all float values apart from the first several single bytes) that describes one point pair, it has X and Z coordinates for each point and that is needed to move points around:

`01 01 00 00 04 00 00 00 05 00 00 00 00 00 00 00 00 9E 63 47 00 80 F7 C3 00 74 9F 46 00 00 80 3F 00 25 64 47 00 80 F7 C3 00 74 9F 46 00 00 80 3F 00 25 64 47 00 80 F7 C3 00 0C 9E 46 00 00 80 3F 00 9E 63 47 00 80 F7 C3 00 0C 9E 46 00 00 80 3F`

I don't know how to add new data to a .cld file (to create new collision points) that has all the data already stacked together, I can only change existing points position.

Since hidden minimap draws collisions it can be used to track changes made in the file.

I guess some of the room's outer borders or other lines inside the room could be used to create new portion of the area in question.

username_2: https://youtu.be/4LJg80Bs-ww

I used three outer borders that a player doesn't connect with during regular gameplay and one piece of single point geometry that is also unreachable and therefore safe to use without breaking existing room design.

If you like it, I will make a new `rr91.cld` file.

username_1: You put a lot of work into this and the results look great. We should definitely include it then!

username_2: [rr91.zip](https://github.com/username_3/Silent-Hill-2-Enhancements/files/3000501/rr91.zip)

I would advice on using original .cld file for any visual effects research in this room since it's unknown if any effects are tied to the room's collisions (if any of those unreachable borders / single point collisions are needed for something else).

username_1: Thanks for your work here, username_2.

We'll add this to the next update release coming soon. If I turns out we need to revert collision data for other visual effects in this room, we can add the original `rr91.cld` file to a future update to replace the file.

@username_0 you can download username_2's edited file above and place it in `KONAMI\Silent Hill 2\sh2e\bg\rr\`

username_0: Wow, username_2, it is really impressive, thank you👍

Status: Issue closed

|

ppy/osu | 329187686 | Title: In the playlist menu box, dragging down or up on a point in the song lags the game and distorts the audio

Question:

username_0: On the song select box in the top right, obviously the current part of the song can be dragged ahead or behind, but when you drag this yellow bar down, it lags terribly, distorting the audio as well

https://youtu.be/4_OLOe1XrAY

Answers:

username_1: I assume the dragging down causes rapid seeking at nearly the same point.

username_0: That sounds right, it just taxes my hardware when I do it so much that the game lags and the audio also freaks out

username_2: Has since been fixed.

Status: Issue closed

|

angular/material | 58688708 | Title: forms: validation. don't show errors until the user has had a chance to do something

Question:

username_0: When a user opens a page that has a bunch of required fields, all they see is a bunch of red. Not very inviting. I would much rather that validation is hidden until `$touched === true`. I would also like to have the ability to control when validation is shown. I appreciate how helpful angular-material is, but I really like that bootstrap allows me to specify when to show validation errors by requiring me to add the `has-error` class myself. This gives me a lot of flexibility. I haven't looked at the implementation for angular-material, but perhaps I could specify a function which would determine whether validation should be visible for a specific field? However it's done, I just don't want to invite users to my page with a bunch of invalid fields. Let them touch the fields first, then we'll tell them it's invalid if it still is after they've had a chance to do something with it.

Answers:

username_0: Sorry, just realized that it doesn't greet the user with a bunch of red. However, it goes red as soon as focus comes into the input. I would rather wait until `$touched` is set to true which means the user has blurred the input.

username_1: There is a way to specify when to show an error: By using the [`md-is-error` attribute][1].

However there are some issues/bugs with properly detecting the `$touched` state (there are several issues about this, I believe).

[1]: https://material.angularjs.org/#/api/material.components.input/directive/mdInputContainer

username_0: I hadn't ever heard of issues with the `$touched` state. I switched my demo to bootstrap and everything is working really well: http://jsbin.com/zuguxi/edit

username_0: Sorry, just realized I never gave the original example here: http://jsbin.com/cadeko/edit

username_1: This turned out to be longer than anticipated, so long story short:

Due to some bugs in the current implementation:

1. Any input inside an `mdInputContainer` gets set to `touched` upon focus.

2. This state change is not properly propagated (because of improper digest lifecycle handling).

---

This is a bug with setting the touched state of the input. It can be found in [input.js#L183-192][1]:

```js

element

.on('focus', function(ev) {

containerCtrl.setFocused(true);

// Error text should not appear before user interaction with the field.

// So we need to check on focus also

ngModelCtrl.$setTouched();

if ( isErrorGetter() ) containerCtrl.setInvalid(true);

})

```

Here is what is going on:

1. There is the `mdIsError` attribute which can be used to determine when the error should be "applied"/shown.

2. If it is present it is `$parse`d and (incorrectly) used for determining if the error should be applied using the `isErrorGetter()` function.

3. If it is not present, a default function is created that returns `ngModelCtrl.$invalid && ngModelCtrl.$touched`. <-- (this is the case in your example)

4. The `isErrorGetter()` is `$watched` and `setInvalid()` is called on the `mdInputContainer`'s controller according to its return value.

5. For reasons that are not clear to me, upon focusing the input, the following happens:

`ngModelCtrl.$setTouched();`

`if ( isErrorGetter() ) containerCtrl.setInvalid(true);`

As a result of (5):

* The element's state is set to `touched` (although this is not consistent with the semantics of default `$touched`).

* `isErrorGetter()` (which checks the controller's internal state) evaluates to `true`, which results in calling `setInvalid(true)` on the `mdInputContainer`'s controller.

* `mdInputContainer` receives the `md-input-invalid` class, thus stying the containing input with the `warn-500` color (by default `red`).

* Since the event happens outside of Angular's `$digest` cycle and there is not explicit `$apply`, the new state is not properly propagated, thus:

- The input does not receive the `ng-touched` class.

- The `ngShow` on the messages' div is not re-evaluated (it would evaluate to `true` and show the errors, if a digest cycle was properly triggered).

Both of the above can be "fixed" by triggering a digest "manually" from the console (for illustration pusposes only).

[1]: https://github.com/angular/material/blob/efbd414a4d5af7b5144f1d08522e46cc043b627d/src/components/input/input.js#L183-192

username_1: (Needless to say, that the Bootstrap version (which does not rely on `mdInputContainer`) works as expected as it avoids the aforementioned bugs.)

username_0: Thanks for the explanation @username_1. Totally makes sense where this came from. Obviously triggering the digest manually is not an option. In `angular-formly` we have logic like this:

```javascript

// scope.fc = the ngModelController

// scope.options = something that the directive user can manipulate

scope.$watch(function() {

if (typeof scope.options.validation.show === 'boolean') {

return scope.fc.$invalid && scope.options.validation.show;

} else {

return scope.fc.$invalid && scope.fc.$touched;

}

}, function(show) {

options.validation.errorExistsAndShouldBeVisible = show;

scope.showError = show; // <-- just a shortcut for the longer version for use in templates

});

```

This is really handy because in templates, people can do something like this (contrived bootstrap example):

```html

<div class="form-group" ng-class="{'has-error': showError}"> <!-- <-- notice that -->

<label for="my-input" class="control-label">My Label</label>

<input ng-model="my.model" name="myForm" class="form-control" id="my-input" />

<div ng-messages="fc.$error" ng-if="showError"> <!-- <-- and that -->

<!-- messages here -->

</div>

</div>

```

I think the `md-is-error` works great because it gives the same kind of control that `options.validation.show` gives, where you can show errors even if the user hasn't touched an input which is useful sometimes. But the more common case is after the user has interacted with the field.

If it were me, I would do what we're doing in `angular-formly`. Don't explicitly set the `ngModelController` to invalid and `$touched` on focus, but instead only show error state if it's `$invalid && ($touched || isErrorGetter())`.