| repo | pull_number | instance_id | issue_numbers | base_commit | patch | test_patch | problem_statement | hints_text | created_at | PASS_TO_PASS | FAIL_TO_PASS |

|---|---|---|---|---|---|---|---|---|---|---|---|

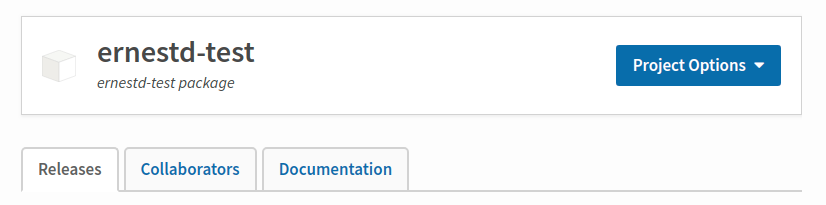

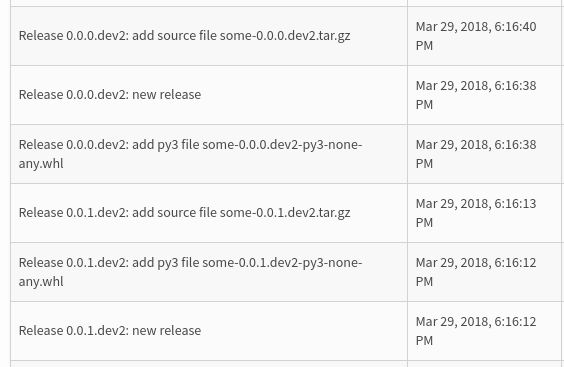

pypi/warehouse | 2,051 | pypi__warehouse-2051 | ["162"] | fb3ec3f9eabed2b60565bcb0efac74f694ac65c8 | diff --git a/warehouse/config.py b/warehouse/config.py

--- a/warehouse/config.py

+++ b/warehouse/config.py

@@ -93,8 +93,11 @@ def activate_hook(request):

return True

-def template_view(config, name, route, template):

- config.add_route(name, route)

+def template_view(config, name, route, template, route_kw=None):

+ if route_kw is None:

+ route_kw = {}

+

+ config.add_route(name, route, **route_kw)

config.add_view(renderer=template, route_name=name)

diff --git a/warehouse/forklift/__init__.py b/warehouse/forklift/__init__.py

--- a/warehouse/forklift/__init__.py

+++ b/warehouse/forklift/__init__.py

@@ -45,3 +45,11 @@ def includeme(config):

"doc_upload",

domain=forklift,

)

+

+ if forklift:

+ config.add_template_view(

+ "forklift.index",

+ "/",

+ "upload.html",

+ route_kw={"domain": forklift},

+ )

diff --git a/warehouse/routes.py b/warehouse/routes.py

--- a/warehouse/routes.py

+++ b/warehouse/routes.py

@@ -21,6 +21,10 @@ def includeme(config):

# Simple Route for health checks.

config.add_route("health", "/_health/")

+ # Internal route to make it easier to force a particular status for

+ # debugging HTTPException templates.

+ config.add_route("force-status", "/_force-status/{status:[45]\d\d}/")

+

# Basic global routes

config.add_route("index", "/", domain=warehouse)

config.add_route("robots.txt", "/robots.txt", domain=warehouse)

diff --git a/warehouse/views.py b/warehouse/views.py

--- a/warehouse/views.py

+++ b/warehouse/views.py

@@ -14,8 +14,9 @@

from pyramid.httpexceptions import (

HTTPException, HTTPSeeOther, HTTPMovedPermanently, HTTPNotFound,

- HTTPBadRequest,

+ HTTPBadRequest, exception_response,

)

+from pyramid.renderers import render_to_response

from pyramid.view import (

notfound_view_config, forbidden_view_config, view_config,

)

@@ -60,10 +61,41 @@

)

+# 403, 404, 410, 500,

+

+

@view_config(context=HTTPException)

@notfound_view_config(append_slash=HTTPMovedPermanently)

def httpexception_view(exc, request):

- return exc

+ # This special case exists for the easter egg that appears on the 404

+ # response page. We don't generally allow youtube embeds, but we make an

+ # exception for this one.

+ if isinstance(exc, HTTPNotFound):

+ request.find_service(name="csp").merge({

+ "frame-src": ["https://www.youtube-nocookie.com"],

+ "script-src": ["https://www.youtube.com", "https://s.ytimg.com"],

+ })

+

+ try:

+ response = render_to_response(

+ "{}.html".format(exc.status_code),

+ {},

+ request=request,

+ )

+ except LookupError:

+ # We don't have a customized template for this error, so we'll just let

+ # the default happen instead.

+ return exc

+

+ # Copy over the important values from our HTTPException to our new response

+ # object.

+ response.status = exc.status

+ response.headers.extend(

+ (k, v) for k, v in exc.headers.items()

+ if k not in response.headers

+ )

+

+ return response

@forbidden_view_config()

@@ -79,8 +111,7 @@ def forbidden(exc, request, redirect_to="accounts.login"):

# If we've reached here, then the user is logged in and they are genuinely

# not allowed to access this page.

- # TODO: Style the forbidden page.

- return exc

+ return httpexception_view(exc, request)

@view_config(

@@ -311,3 +342,11 @@ def health(request):

# Nothing will actually check this, but it's a little nicer to have

# something to return besides an empty body.

return "OK"

+

+

+@view_config(route_name="force-status")

+def force_status(request):

+ try:

+ raise exception_response(int(request.matchdict["status"]))

+ except KeyError:

+ raise exception_response(404) from None

| diff --git a/tests/unit/forklift/test_init.py b/tests/unit/forklift/test_init.py

--- a/tests/unit/forklift/test_init.py

+++ b/tests/unit/forklift/test_init.py

@@ -26,6 +26,7 @@ def test_includeme(forklift):

get_settings=lambda: settings,

include=pretend.call_recorder(lambda n: None),

add_legacy_action_route=pretend.call_recorder(lambda *a, **k: None),

+ add_template_view=pretend.call_recorder(lambda *a, **kw: None),

)

includeme(config)

@@ -49,3 +50,14 @@ def test_includeme(forklift):

domain=forklift,

),

]

+ if forklift:

+ assert config.add_template_view.calls == [

+ pretend.call(

+ "forklift.index",

+ "/",

+ "upload.html",

+ route_kw={"domain": forklift},

+ ),

+ ]

+ else:

+ assert config.add_template_view.calls == []

diff --git a/tests/unit/test_config.py b/tests/unit/test_config.py

--- a/tests/unit/test_config.py

+++ b/tests/unit/test_config.py

@@ -97,15 +97,20 @@ def test_activate_hook(path, expected):

assert config.activate_hook(request) == expected

-def test_template_view():

+@pytest.mark.parametrize("route_kw", [None, {}, {"foo": "bar"}])

+def test_template_view(route_kw):

configobj = pretend.stub(

add_route=pretend.call_recorder(lambda *a, **kw: None),

add_view=pretend.call_recorder(lambda *a, **kw: None),

)

- config.template_view(configobj, "test", "/test/", "test.html")

+ config.template_view(configobj, "test", "/test/", "test.html",

+ route_kw=route_kw)

- assert configobj.add_route.calls == [pretend.call("test", "/test/")]

+ assert configobj.add_route.calls == [

+ pretend.call(

+ "test", "/test/", **({} if route_kw is None else route_kw)),

+ ]

assert configobj.add_view.calls == [

pretend.call(renderer="test.html", route_name="test"),

]

diff --git a/tests/unit/test_routes.py b/tests/unit/test_routes.py

--- a/tests/unit/test_routes.py

+++ b/tests/unit/test_routes.py

@@ -73,6 +73,7 @@ def add_policy(name, filename):

assert config.add_route.calls == [

pretend.call("health", "/_health/"),

+ pretend.call("force-status", "/_force-status/{status:[45]\d\d}/"),

pretend.call('index', '/', domain=warehouse),

pretend.call("robots.txt", "/robots.txt", domain=warehouse),

pretend.call("opensearch.xml", "/opensearch.xml", domain=warehouse),

diff --git a/tests/unit/test_views.py b/tests/unit/test_views.py

--- a/tests/unit/test_views.py

+++ b/tests/unit/test_views.py

@@ -24,7 +24,7 @@

from warehouse import views

from warehouse.views import (

SEARCH_BOOSTS, SEARCH_FIELDS, current_user_indicator, forbidden, health,

- httpexception_view, index, robotstxt, opensearchxml, search

+ httpexception_view, index, robotstxt, opensearchxml, search, force_status,

)

from ..common.db.accounts import UserFactory

@@ -34,18 +34,79 @@

)

-def test_httpexception_view():

- response = context = pretend.stub()

- request = pretend.stub()

- assert httpexception_view(context, request) is response

+class TestHTTPExceptionView:

+

+ def test_returns_context_when_no_template(self, pyramid_config):

+ pyramid_config.testing_add_renderer("non-existent.html")

+

+ response = context = pretend.stub(status_code=499)

+ request = pretend.stub()

+ assert httpexception_view(context, request) is response

+

+ @pytest.mark.parametrize("status_code", [403, 404, 410, 500])

+ def test_renders_template(self, pyramid_config, status_code):

+ renderer = pyramid_config.testing_add_renderer(

+ "{}.html".format(status_code))

+

+ context = pretend.stub(

+ status="{} My Cool Status".format(status_code),

+ status_code=status_code,

+ headers={},

+ )

+ request = pretend.stub()

+ response = httpexception_view(context, request)

+

+ assert response.status_code == status_code

+ assert response.status == "{} My Cool Status".format(status_code)

+ renderer.assert_()

+

+ @pytest.mark.parametrize("status_code", [403, 404, 410, 500])

+ def test_renders_template_with_headers(self, pyramid_config, status_code):

+ renderer = pyramid_config.testing_add_renderer(

+ "{}.html".format(status_code))

+

+ context = pretend.stub(

+ status="{} My Cool Status".format(status_code),

+ status_code=status_code,

+ headers={"Foo": "Bar"},

+ )

+ request = pretend.stub()

+ response = httpexception_view(context, request)

+

+ assert response.status_code == status_code

+ assert response.status == "{} My Cool Status".format(status_code)

+ assert response.headers["Foo"] == "Bar"

+ renderer.assert_()

+

+ def test_renders_404_with_csp(self, pyramid_config):

+ renderer = pyramid_config.testing_add_renderer("404.html")

+

+ csp = {}

+ services = {"csp": pretend.stub(merge=csp.update)}

+

+ context = HTTPNotFound()

+ request = pretend.stub(find_service=lambda name: services[name])

+ response = httpexception_view(context, request)

+

+ assert response.status_code == 404

+ assert response.status == "404 Not Found"

+ assert csp == {

+ "frame-src": ["https://www.youtube-nocookie.com"],

+ "script-src": ["https://www.youtube.com", "https://s.ytimg.com"],

+ }

+ renderer.assert_()

class TestForbiddenView:

- def test_logged_in_returns_exception(self):

- exc, request = pretend.stub(), pretend.stub(authenticated_userid=1)

+ def test_logged_in_returns_exception(self, pyramid_config):

+ renderer = pyramid_config.testing_add_renderer("403.html")

+

+ exc = pretend.stub(status_code=403, status="403 Forbidden", headers={})

+ request = pretend.stub(authenticated_userid=1)

resp = forbidden(exc, request)

- assert resp is exc

+ assert resp.status_code == 403

+ renderer.assert_()

def test_logged_out_redirects_login(self):

exc = pretend.stub()

@@ -450,3 +511,14 @@ def test_health():

assert health(request) == "OK"

assert request.db.execute.calls == [pretend.call("SELECT 1")]

+

+

+class TestForceStatus:

+

+ def test_valid(self):

+ with pytest.raises(HTTPBadRequest):

+ force_status(pretend.stub(matchdict={"status": "400"}))

+

+ def test_invalid(self):

+ with pytest.raises(HTTPNotFound):

+ force_status(pretend.stub(matchdict={"status": "599"}))

| Properly style the error pages

This includes (but is probably not limited to):

- [ ] 500

- [ ] 404

- [ ] 410 (Maybe? Not sure if we'll ever use it.)

- [ ] 403
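
Each status in the checklist gets its own styled page, and statuses without one keep the default response. The following is a minimal, framework-free sketch of that lookup-with-fallback idea. `TEMPLATES` and `render_error_page` are hypothetical names, and a plain dict stands in for Pyramid's renderer lookup. The patch itself catches `LookupError` from `render_to_response` and copies the status, plus any missing headers, from the exception.

```python
from typing import Dict, Tuple

# Hypothetical registry standing in for the real templates; only these
# statuses have a styled page.
TEMPLATES: Dict[int, str] = {
    403: "<h1>Forbidden</h1>",
    404: "<h1>Not Found</h1>",
    410: "<h1>Gone</h1>",
    500: "<h1>Internal Server Error</h1>",
}


def render_error_page(
    status: str, headers: Dict[str, str], default_body: str
) -> Tuple[str, Dict[str, str], str]:
    """Return (status, headers, body) for an error response."""
    status_code = int(status.split(" ", 1)[0])
    try:
        body = TEMPLATES[status_code]
    except LookupError:  # KeyError is a LookupError subclass
        # No styled page for this status: keep the default response as-is.
        return status, dict(headers), default_body

    # Start from the rendered page's own headers, then copy over any
    # exception headers not already set. Plain dict, so unlike WebOb's
    # header container this check is case-sensitive.
    response_headers = {"Content-Type": "text/html; charset=UTF-8"}
    for key, value in headers.items():
        response_headers.setdefault(key, value)
    return status, response_headers, body
```

A status with no entry, such as 499, falls through to the default body unchanged.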

| One thing that I think would be kind of cute to do, is on the 404 page, embed a link to https://www.youtube.com/watch?v=cWDdd5KKhts as an easter-egg referencing both the "unofficial" name of PyPI and the fact that the skit itself is about a cheeseshop that's missing any cheese... essentially a 404 ;)

Bonus points if we stick the real 404 content at the top, then lead into the embedded youtube with a "And now for something completely different".
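
Embedding the video only works if the Content Security Policy allows YouTube. That is why the patch merges extra `frame-src` and `script-src` sources into the policy for 404 responses only. The sketch below is a hypothetical stand-in for that per-request policy object, assuming a policy is a mapping from directive name to a list of sources. It is not Warehouse's actual CSP service.

```python
from collections import defaultdict
from typing import Dict, Iterable, Optional


class CSPPolicy(defaultdict):
    """Hypothetical per-request policy: directive name -> list of sources."""

    def __init__(self, policy: Optional[Dict[str, Iterable[str]]] = None):
        super().__init__(list)
        self.merge(policy or {})

    def merge(self, extra: Dict[str, Iterable[str]]) -> None:
        # Extend each directive's source list instead of replacing it, so
        # the 404 page adds YouTube without dropping the existing sources.
        for directive, sources in extra.items():
            self[directive].extend(sources)

    def header_value(self) -> str:
        # Serialize as "directive src1 src2; directive src1 ..."
        return "; ".join(
            " ".join([directive, *sources])
            for directive, sources in sorted(self.items())
        )


# Usage: a base policy, then the 404-only additions from the patch.
csp = CSPPolicy({"default-src": ["'none'"], "script-src": ["'self'"]})
csp.merge({
    "frame-src": ["https://www.youtube-nocookie.com"],
    "script-src": ["https://www.youtube.com", "https://s.ytimg.com"],
})
```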

| 2017-05-28T20:24:20Z | [] | [] |

pypi/warehouse | 2,054 | pypi__warehouse-2054 | ["1912"] | f2b4484e374f3de0acff717bef90d0522083336d | diff --git a/warehouse/config.py b/warehouse/config.py

--- a/warehouse/config.py

+++ b/warehouse/config.py

@@ -284,11 +284,14 @@ def configure(settings=None):

renderers.JSON(sort_keys=True, separators=(",", ":")),

)

+ # Configure retry support.

+ config.add_settings({"retry.attempts": 3})

+ config.include("pyramid_retry")

+

# Configure our transaction handling so that each request gets its own

# transaction handler and the lifetime of the transaction is tied to the

# lifetime of the request.

config.add_settings({

- "tm.attempts": 3,

"tm.manager_hook": lambda request: transaction.TransactionManager(),

"tm.activate_hook": activate_hook,

"tm.annotate_user": False,

diff --git a/warehouse/tasks.py b/warehouse/tasks.py

--- a/warehouse/tasks.py

+++ b/warehouse/tasks.py

@@ -21,6 +21,7 @@

import celery

import celery.backends.redis

import pyramid.scripting

+import pyramid_retry

import transaction

import venusian

@@ -56,7 +57,8 @@ def run(*args, **kwargs):

try:

return original_run(*args, **kwargs)

except BaseException as exc:

- if request.tm._retryable(exc.__class__, exc):

+ if (isinstance(exc, pyramid_retry.RetryableException)

+ or pyramid_retry.IRetryableError.providedBy(exc)):

raise obj.retry(exc=exc)

raise

| diff --git a/tests/unit/test_config.py b/tests/unit/test_config.py

--- a/tests/unit/test_config.py

+++ b/tests/unit/test_config.py

@@ -319,6 +319,7 @@ def __init__(self):

),

]

] + [

+ pretend.call("pyramid_retry"),

pretend.call("pyramid_tm"),

pretend.call("pyramid_services"),

pretend.call("pyramid_rpc.xmlrpc"),

@@ -365,8 +366,8 @@ def __init__(self):

]

assert configurator_obj.add_settings.calls == [

pretend.call({"jinja2.newstyle": True}),

+ pretend.call({"retry.attempts": 3}),

pretend.call({

- "tm.attempts": 3,

"tm.manager_hook": mock.ANY,

"tm.activate_hook": config.activate_hook,

"tm.annotate_user": False,

@@ -377,7 +378,7 @@ def __init__(self):

},

}),

]

- add_settings_dict = configurator_obj.add_settings.calls[1].args[0]

+ add_settings_dict = configurator_obj.add_settings.calls[2].args[0]

assert add_settings_dict["tm.manager_hook"](pretend.stub()) is \

transaction_manager

assert configurator_obj.add_tween.calls == [

diff --git a/tests/unit/test_tasks.py b/tests/unit/test_tasks.py

--- a/tests/unit/test_tasks.py

+++ b/tests/unit/test_tasks.py

@@ -18,6 +18,7 @@

from celery import Celery

from pyramid import scripting

+from pyramid_retry import RetryableException

from warehouse import tasks

from warehouse.config import Environment

@@ -240,7 +241,7 @@ def run(arg_, *, kwarg_=None):

assert request.tm.__exit__.calls == [pretend.call(None, None, None)]

def test_run_retries_failed_transaction(self):

- class RetryThisException(Exception):

+ class RetryThisException(RetryableException):

pass

class Retry(Exception):

@@ -259,7 +260,6 @@ def run():

tm=pretend.stub(

__enter__=pretend.call_recorder(lambda *a, **kw: None),

__exit__=pretend.call_recorder(lambda *a, **kw: None),

- _retryable=pretend.call_recorder(lambda *a, **kw: True),

),

)

@@ -273,9 +273,6 @@ def run():

assert request.tm.__exit__.calls == [

pretend.call(Retry, mock.ANY, mock.ANY),

]

- assert request.tm._retryable.calls == [

- pretend.call(RetryThisException, mock.ANY),

- ]

def test_run_doesnt_retries_failed_transaction(self):

class DontRetryThisException(Exception):

@@ -294,7 +291,6 @@ def run():

tm=pretend.stub(

__enter__=pretend.call_recorder(lambda *a, **kw: None),

__exit__=pretend.call_recorder(lambda *a, **kw: None),

- _retryable=pretend.call_recorder(lambda *a, **kw: False),

),

)

@@ -308,9 +304,6 @@ def run():

assert request.tm.__exit__.calls == [

pretend.call(DontRetryThisException, mock.ANY, mock.ANY),

]

- assert request.tm._retryable.calls == [

- pretend.call(DontRetryThisException, mock.ANY),

- ]

def test_after_return_without_pyramid_env(self):

obj = tasks.WarehouseTask()

| Update pyramid-tm to 2.0

There's a new version of [pyramid-tm](https://pypi.python.org/pypi/pyramid-tm) available.

You are currently using **1.1.1**. I have updated it to **2.0**

These links might come in handy: <a href="http://pypi.python.org/pypi/pyramid_tm">PyPI</a> | <a href="https://pyup.io/changelogs/pyramid-tm/">Changelog</a> | <a href="http://docs.pylonsproject.org/projects/pyramid-tm/en/latest/">Homepage</a>

### Changelog

>

>### 2.0

>^^^^^^^^^^^^^^^^

>Major Features

>~~~~~~~~~~~~~~

>- The ``pyramid_tm`` tween has been moved **over** the ``EXCVIEW`` tween.

> This means the transaction is open during exception view execution.

> See https://github.com/Pylons/pyramid_tm/pull/55

>- Added a ``pyramid_tm.is_tm_active`` and a ``tm_active`` view predicate

> which may be useful in exception views that require access to the database.

> See https://github.com/Pylons/pyramid_tm/pull/60

>Backward Incompatibilities

>~~~~~~~~~~~~~~~~~~~~~~~~~~

>- The ``tm.attempts`` setting has been removed and retry support has been moved

> into a new package named ``pyramid_retry``. If you want retry support then

> please look at that library for more information about installing and

> enabling it. See https://github.com/Pylons/pyramid_tm/pull/55

>- The ``pyramid_tm`` tween has been moved **over** the ``EXCVIEW`` tween.

> If you have any hacks in your application that are opening a new transaction

> inside your exception views then it's likely you will want to remove them

> or re-evaluate when upgrading.

> See https://github.com/Pylons/pyramid_tm/pull/55

>- Drop support for Pyramid < 1.5.

>Minor Features

>~~~~~~~~~~~~~~

>- Support for Python 3.6.
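
Once `tm.attempts` is gone, retrying becomes the job of a separate layer. That layer re-runs the work only when the failure is marked as retryable. The following is a minimal sketch of that pattern using only the standard library. `RetryableError` and `run_with_retries` are hypothetical names, not the `pyramid_retry` API. The patch itself checks `isinstance(exc, pyramid_retry.RetryableException)` or `pyramid_retry.IRetryableError.providedBy(exc)`.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


class RetryableError(Exception):
    """Hypothetical marker: failures of this type may be retried."""


def run_with_retries(func: Callable[[], T], attempts: int = 3) -> T:
    """Call func, making up to `attempts` total tries on RetryableError."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return func()
        except RetryableError:
            if attempt == attempts:
                raise  # out of attempts: surface the last error
            # otherwise loop around and try again
    raise AssertionError("unreachable")
```

Any exception that is not a `RetryableError` propagates on the first attempt, mirroring how the task wrapper in the patch re-raises non-retryable errors.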

*Got merge conflicts? Close this PR and delete the branch. I'll create a new PR for you.*

Happy merging! 🤖

| This probably needs to wait until Pyramid 1.9 is released so that we can switch to pyramid_retry to handle retry logic. | 2017-05-29T18:10:36Z | [] | [] |

pypi/warehouse | 2,473 | pypi__warehouse-2473 | ["2471"] | da5dc63136c4f351c2ac8d4e93878db7ea8caabe | diff --git a/warehouse/admin/views/projects.py b/warehouse/admin/views/projects.py

--- a/warehouse/admin/views/projects.py

+++ b/warehouse/admin/views/projects.py

@@ -24,6 +24,9 @@

from warehouse.accounts.models import User

from warehouse.packaging.models import Project, Release, Role, JournalEntry

from warehouse.utils.paginate import paginate_url_factory

+from warehouse.forklift.legacy import MAX_FILESIZE

+

+ONE_MB = 1024 * 1024 # bytes

@view_config(

@@ -101,7 +104,13 @@ def project_detail(project, request):

)

]

- return {"project": project, "maintainers": maintainers, "journal": journal}

+ return {

+ "project": project,

+ "maintainers": maintainers,

+ "journal": journal,

+ "ONE_MB": ONE_MB,

+ "MAX_FILESIZE": MAX_FILESIZE

+ }

@view_config(

@@ -218,18 +227,27 @@ def set_upload_limit(project, request):

# If the upload limit is an empty string or othrwise falsy, just set the

# limit to None, indicating the default limit.

if not upload_limit:

- project.upload_limit = None

+ upload_limit = None

else:

try:

- project.upload_limit = int(upload_limit)

+ upload_limit = int(upload_limit)

except ValueError:

raise HTTPBadRequest(

- f"Invalid value for upload_limit: {upload_limit}, "

+ f"Invalid value for upload limit: {upload_limit}, "

f"must be integer or empty string.")

+ # The form is in MB, but the database field is in bytes.

+ upload_limit *= ONE_MB

+

+ if upload_limit < MAX_FILESIZE:

+ raise HTTPBadRequest(

+ f"Upload limit can not be less than the default limit of "

+ f"{MAX_FILESIZE / ONE_MB}MB.")

+

+ project.upload_limit = upload_limit

+

request.session.flash(

- f"Successfully set the upload limit on {project.name!r} to "

- f"{project.upload_limit!r}",

+ f"Successfully set the upload limit on {project.name!r}.",

queue="success",

)

| diff --git a/tests/unit/admin/views/test_projects.py b/tests/unit/admin/views/test_projects.py

--- a/tests/unit/admin/views/test_projects.py

+++ b/tests/unit/admin/views/test_projects.py

@@ -354,21 +354,21 @@ def test_sets_limitwith_integer(self, db_request):

flash=pretend.call_recorder(lambda *a, **kw: None),

)

db_request.matchdict["project_name"] = project.normalized_name

- db_request.POST["upload_limit"] = "12345"

+ db_request.POST["upload_limit"] = "90"

views.set_upload_limit(project, db_request)

assert db_request.session.flash.calls == [

pretend.call(

- "Successfully set the upload limit on 'foo' to 12345",

+ "Successfully set the upload limit on 'foo'.",

queue="success"),

]

- assert project.upload_limit == 12345

+ assert project.upload_limit == 90 * views.ONE_MB

def test_sets_limit_with_none(self, db_request):

project = ProjectFactory.create(name="foo")

- project.upload_limit = 12345

+ project.upload_limit = 90 * views.ONE_MB

db_request.route_path = pretend.call_recorder(

lambda *a, **kw: "/admin/projects/")

@@ -381,13 +381,13 @@ def test_sets_limit_with_none(self, db_request):

assert db_request.session.flash.calls == [

pretend.call(

- "Successfully set the upload limit on 'foo' to None",

+ "Successfully set the upload limit on 'foo'.",

queue="success"),

]

assert project.upload_limit is None

- def test_sets_limit_with_bad_value(self, db_request):

+ def test_sets_limit_with_non_integer(self, db_request):

project = ProjectFactory.create(name="foo")

db_request.matchdict["project_name"] = project.normalized_name

@@ -395,3 +395,12 @@ def test_sets_limit_with_bad_value(self, db_request):

with pytest.raises(HTTPBadRequest):

views.set_upload_limit(project, db_request)

+

+ def test_sets_limit_with_less_than_minimum(self, db_request):

+ project = ProjectFactory.create(name="foo")

+

+ db_request.matchdict["project_name"] = project.normalized_name

+ db_request.POST["upload_limit"] = "20"

+

+ with pytest.raises(HTTPBadRequest):

+ views.set_upload_limit(project, db_request)

| Admin package upload size text control should use MB instead of bytes

Context: https://github.com/pypa/warehouse/pull/2470#issuecomment-334852617

Decision:

> The input in should be in MB and should just be converted to/from bytes in the backend.

Also:

> It also might be a good idea to flash an error and redirect back to the detail page if you try to set a limit less than the current default minimum.
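
On the backend, the decision above amounts to two steps. First, multiply the submitted megabytes by `1024 * 1024`. Second, refuse anything below the default limit. The following is a standalone sketch of that logic with these assumptions:

- `parse_upload_limit` is a hypothetical helper.
- `DEFAULT_LIMIT` is a placeholder value chosen only for illustration. The patch imports the real one as `MAX_FILESIZE` from `warehouse.forklift.legacy`.
- `ValueError` stands in for the `HTTPBadRequest` the view raises.

```python
from typing import Optional

ONE_MB = 1024 * 1024  # bytes, as in the patch

# Placeholder for this sketch only; the real default comes from
# warehouse.forklift.legacy.MAX_FILESIZE.
DEFAULT_LIMIT = 60 * ONE_MB


def parse_upload_limit(raw: str, default_limit: int = DEFAULT_LIMIT) -> Optional[int]:
    """Convert a form value in MB to a byte limit, or None for the default."""
    if not raw:
        return None  # empty input means "use the default limit"
    try:
        megabytes = int(raw)
    except ValueError:
        raise ValueError(
            f"Invalid value for upload limit: {raw}, "
            f"must be integer or empty string."
        ) from None
    limit = megabytes * ONE_MB  # the form is in MB, storage is in bytes
    if limit < default_limit:
        raise ValueError(
            f"Upload limit cannot be less than the default limit of "
            f"{default_limit // ONE_MB}MB."
        )
    return limit
```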

Additionally:

> [The form field] would be a bit nicer as <input type="number" min={{ THE MINIMUM SIZE }} ...> instead of as a text field. It might also make sense to include step="10" or something.

| @dstufft I'm a PyPA member but not a member on this project so I can't assign myself. | 2017-10-06T21:39:21Z | [] | [] |

pypi/warehouse | 2,524 | pypi__warehouse-2524 | ["2489"] | 4419dcd714a7f29294e7f4ca03bb75a42ee40216 | diff --git a/warehouse/accounts/forms.py b/warehouse/accounts/forms.py

--- a/warehouse/accounts/forms.py

+++ b/warehouse/accounts/forms.py

@@ -29,6 +29,18 @@ class CredentialsMixin:

"a username with under 50 characters."

)

),

+ # the regexp below must match the CheckConstraint

+ # for the username field in accounts.models.User

+ wtforms.validators.Regexp(

+ r'^[a-zA-Z0-9][a-zA-Z0-9._-]*[a-zA-Z0-9]$',

+ message=(

+ "The username is invalid. Usernames "

+ "must be composed of letters, numbers, "

+ "dots, hyphens and underscores. And must "

+ "also start and finish with a letter or number. "

+ "Please choose a different username."

+ )

+ )

],

)

| diff --git a/tests/unit/accounts/test_forms.py b/tests/unit/accounts/test_forms.py

--- a/tests/unit/accounts/test_forms.py

+++ b/tests/unit/accounts/test_forms.py

@@ -311,6 +311,28 @@ def test_username_exists(self):

"Please choose a different username."

)

+ @pytest.mark.parametrize("username", ['_foo', 'bar_', 'foo^bar'])

+ def test_username_is_valid(self, username):

+ form = forms.RegistrationForm(

+ data={"username": username},

+ user_service=pretend.stub(

+ find_userid=pretend.call_recorder(lambda _: None),

+ ),

+ recaptcha_service=pretend.stub(

+ enabled=False,

+ verify_response=pretend.call_recorder(lambda _: None),

+ ),

+ )

+ assert not form.validate()

+ assert (

+ form.username.errors.pop() ==

+ "The username is invalid. Usernames "

+ "must be composed of letters, numbers, "

+ "dots, hyphens and underscores. And must "

+ "also start and finish with a letter or number. "

+ "Please choose a different username."

+ )

+

def test_password_strength(self):

cases = (

("foobar", False),

| Validate username format before attempting to flush it to the db

Validate the username format before trying to save it to the database, using the same logic that the database already enforces.
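
Checking the format in the form means a bad username produces a form error instead of an `IntegrityError` at flush time. The following is a minimal sketch of that check. The pattern is copied from the patch's `wtforms.validators.Regexp`, and `is_valid_username` is a hypothetical helper.

```python
import re

# Pattern copied from the patch's Regexp validator, which is meant to
# mirror the accounts_user_valid_username check constraint.
USERNAME_RE = re.compile(r"^[a-zA-Z0-9][a-zA-Z0-9._-]*[a-zA-Z0-9]$")


def is_valid_username(username: str) -> bool:
    # fullmatch (rather than match) also rejects a trailing newline,
    # which "$" on its own would let through.
    return USERNAME_RE.fullmatch(username) is not None
```

As written, the pattern requires at least two characters, so a one-character username fails it. Whether that matches the database constraint exactly is worth checking.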

---

https://sentry.io/python-software-foundation/warehouse-production/issues/258353802/

```

IntegrityError: new row for relation "accounts_user" violates check constraint "accounts_user_valid_username"

File "sqlalchemy/engine/base.py", line 1182, in _execute_context

context)

File "sqlalchemy/engine/default.py", line 470, in do_execute

cursor.execute(statement, parameters)

IntegrityError: (psycopg2.IntegrityError) new row for relation "accounts_user" violates check constraint "accounts_user_valid_username"

(30 additional frame(s) were not displayed)

...

File "warehouse/raven.py", line 41, in raven_tween

return handler(request)

File "warehouse/cache/http.py", line 69, in conditional_http_tween

response = handler(request)

File "warehouse/cache/http.py", line 33, in wrapped

return view(context, request)

File "warehouse/accounts/views.py", line 226, in register

form.email.data

File "warehouse/accounts/services.py", line 152, in create_user

self.db.flush()

IntegrityError: (psycopg2.IntegrityError) new row for relation "accounts_user" violates check constraint "accounts_user_valid_username"

DETAIL: Failing row contains

```

| 2017-10-21T13:19:53Z | [] | [] |

|

pypi/warehouse | 2,574 | pypi__warehouse-2574 | ["2217"] | ef47a00b7d16c94244ab1991957aea9b90e12dd3 | diff --git a/warehouse/legacy/api/simple.py b/warehouse/legacy/api/simple.py

--- a/warehouse/legacy/api/simple.py

+++ b/warehouse/legacy/api/simple.py

@@ -10,6 +10,8 @@

# See the License for the specific language governing permissions and

# limitations under the License.

+

+from packaging.version import parse

from pyramid.httpexceptions import HTTPMovedPermanently

from pyramid.view import view_config

from sqlalchemy import func

@@ -73,7 +75,7 @@ def simple_detail(project, request):

request.response.headers["X-PyPI-Last-Serial"] = str(project.last_serial)

# Get all of the files for this project.

- files = (

+ files = sorted(

request.db.query(File)

.options(joinedload(File.release))

.filter(

@@ -84,8 +86,8 @@ def simple_detail(project, request):

.with_entities(Release.version)

)

)

- .order_by(File.filename)

- .all()

+ .all(),

+ key=lambda f: (parse(f.version), f.packagetype)

)

return {"project": project, "files": files}

| diff --git a/tests/unit/legacy/api/test_simple.py b/tests/unit/legacy/api/test_simple.py

--- a/tests/unit/legacy/api/test_simple.py

+++ b/tests/unit/legacy/api/test_simple.py

@@ -161,3 +161,64 @@ def test_with_files_with_serial(self, db_request):

"files": files,

}

assert db_request.response.headers["X-PyPI-Last-Serial"] == str(je.id)

+

+ def test_with_files_with_version_multi_digit(self, db_request):

+ project = ProjectFactory.create()

+ releases = [

+ ReleaseFactory.create(project=project)

+ for _ in range(3)

+ ]

+ release_versions = [

+ "0.3.0rc1", "0.3.0", "0.3.0-post0", "0.14.0", "4.2.0", "24.2.0"

+ ]

+

+ for release, version in zip(releases, release_versions):

+ release.version = version

+

+ tar_files = [

+ FileFactory.create(

+ release=r,

+ filename="{}-{}.tar.gz".format(project.name, r.version),

+ packagetype="sdist"

+ )

+ for r in releases

+ ]

+ wheel_files = [

+ FileFactory.create(

+ release=r,

+ filename="{}-{}.whl".format(project.name, r.version),

+ packagetype="bdist_wheel"

+ )

+ for r in releases

+ ]

+ egg_files = [

+ FileFactory.create(

+ release=r,

+ filename="{}-{}.egg".format(project.name, r.version),

+ packagetype="bdist_egg"

+ )

+ for r in releases

+ ]

+

+ files = []

+ for files_release in \

+ zip(egg_files, wheel_files, tar_files):

+ files += files_release

+

+ db_request.matchdict["name"] = project.normalized_name

+ user = UserFactory.create()

+ je = JournalEntryFactory.create(name=project.name, submitted_by=user)

+

+ # Make sure that we get any changes made since the JournalEntry was

+ # saved.

+ db_request.db.refresh(project)

+ import pprint

+ pprint.pprint(simple.simple_detail(project, db_request)['files'])

+ pprint.pprint(files)

+

+ assert simple.simple_detail(project, db_request) == {

+ "project": project,

+ "files": files,

+ }

+

+ assert db_request.response.headers["X-PyPI-Last-Serial"] == str(je.id)

| Improve sorting on simple page

I'd like to submit a patch for this but I have a few questions :)

First I'll describe what I'd like to do...

## sort by version number

See https://pypi.org/simple/pre-commit/

You'll notice that `0.10.0` erroneously sorts *before* `0.2.0` (I'd like to fix this)
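
The misordering comes from comparing versions as strings. A short illustration, assuming purely numeric dotted versions (`numeric_key` is a hypothetical helper):

```python
versions = ["0.2.0", "0.10.0", "0.1.0"]

# String comparison goes character by character, so "0.10.0" lands before
# "0.2.0" because "1" < "2".
print(sorted(versions))  # ['0.1.0', '0.10.0', '0.2.0']


def numeric_key(version: str) -> tuple:
    # Compare each dot-separated component as an integer. Only valid for
    # purely numeric versions: int() raises ValueError on "1.0rc1".
    return tuple(int(part) for part in version.split("."))


print(sorted(versions, key=numeric_key))  # ['0.1.0', '0.2.0', '0.10.0']
```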

## investigation

I've found the code which does this sorting [here](https://github.com/pypa/warehouse/blob/3bdfe5a89cc9a922ee97304c98384c24822a09ee/warehouse/legacy/api/simple.py#L76-L89)

This seems to just sort by filename, but by inspecting and viewing [this page](https://pypi.org/simple/pre-commit-mirror-maker/) I notice it seems to ignore `_` vs. `-` (which is good, that's what I want to continue to happen but I'm just not seeing it from the code!)

## other questions

The `File` objects which come back from the database contain a `.version` attribute that I'd like to use to participate in sorting, my main question is: **Can I depend on this version to be a valid [PEP440](https://www.python.org/dev/peps/pep-0440/) version and use something like `pkg_resources.parse_version`?**

I'd basically like to replicate something close to the sorting which @chriskuehl's [dumb-pypi](https://github.com/chriskuehl/dumb-pypi) does [here](https://github.com/chriskuehl/dumb-pypi/blob/fd0f93fc2e82cbd9bae41b3c60c5f006b2319c60/dumb_pypi/main.py#L77-L91).

Thanks in advance :)

---

**Good First Issue**: This issue is good for first time contributors. If there is not a corresponding pull request for this issue, it is up for grabs. For directions for getting set up, see our [Getting Started Guide](https://warehouse.pypa.io/development/getting-started/). If you are working on this issue and have questions, please feel free to ask them here, [`#pypa-dev` on Freenode](https://webchat.freenode.net/?channels=%23pypa-dev), or the [pypa-dev mailing list](https://groups.google.com/forum/#!forum/pypa-dev).

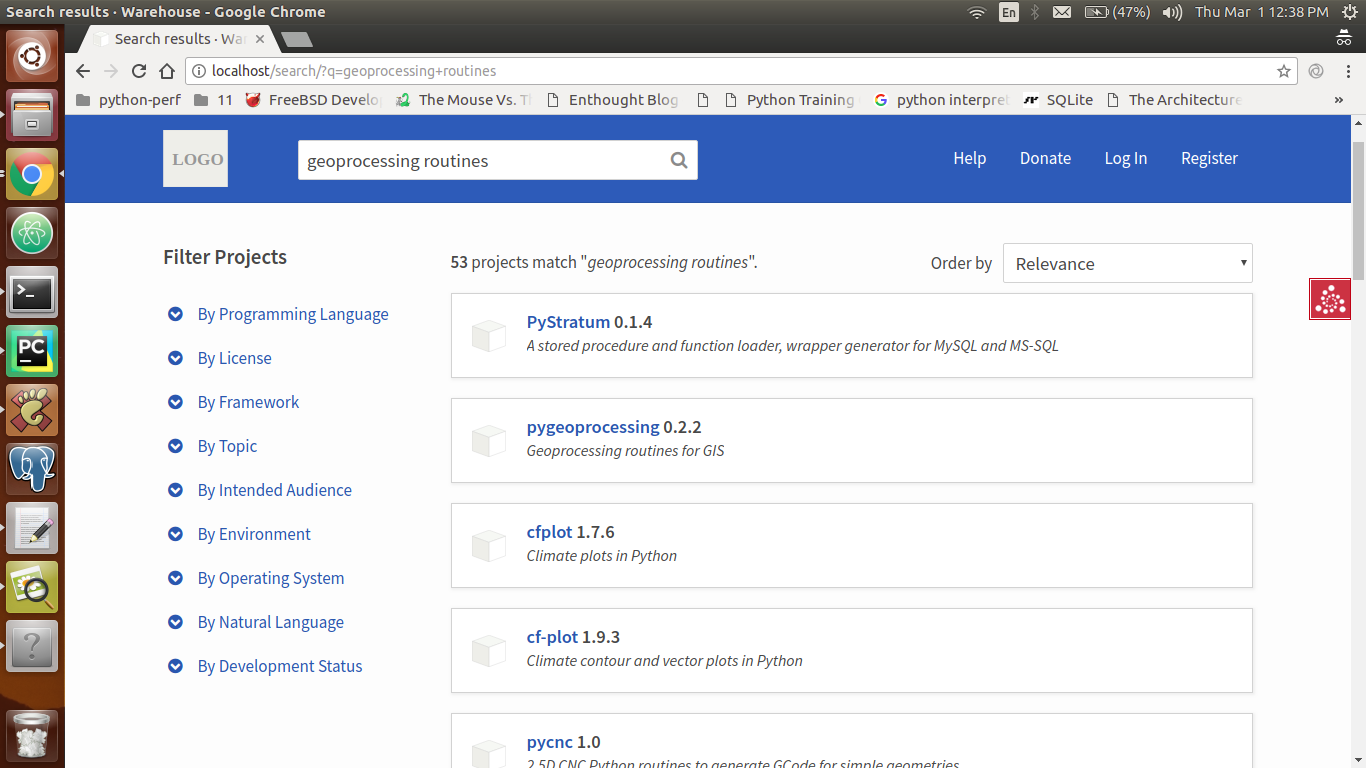

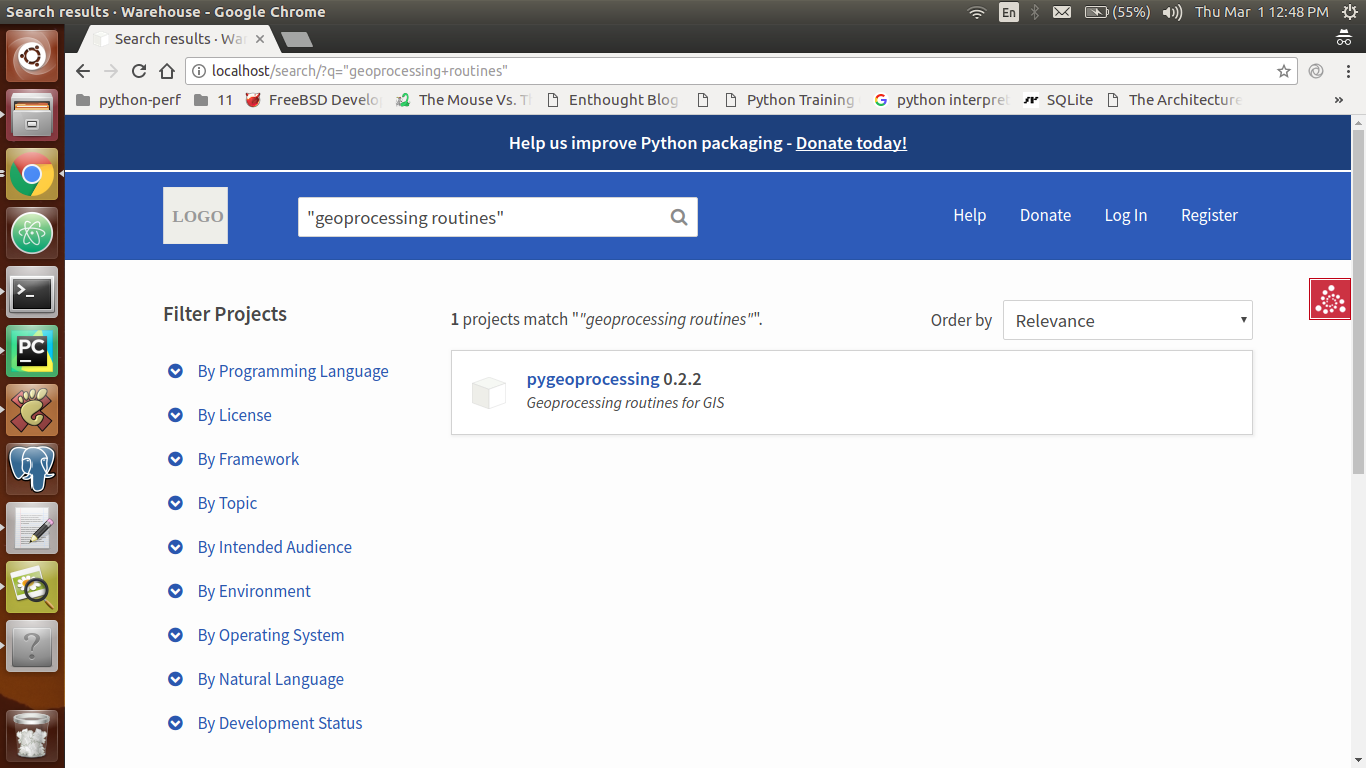

| It does just sort by filename, largely because the order doesn't *really* matter, as long as it's stable between page loads. That being said, I wouldn't turn down a PR that does this. The version attributes are not guaranteed to be PEP 440 compatible (some of them are old enough that they predate PEP 440). Ideally you'd use ``packaging.version.parse``, which will handle that case fine.
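
Because stored versions may not be valid PEP 440, a sort key has to tolerate strings it cannot parse. Note that `packaging` 22.0 and later dropped `LegacyVersion`, so `packaging.version.parse()` now raises `InvalidVersion` on such strings instead of handling them.

The following is a stdlib-only sketch of a tolerant composite key ordered by version, then `packagetype`, as in the patch. It rests on these assumptions:

- `FileRow` and `file_sort_key` are hypothetical.
- Only plain dotted numbers count as parseable, so pre-releases such as `0.3.0rc1` also fall into the unparsed group.
- A real implementation would use a full PEP 440 parser.

```python
import re
from typing import NamedTuple, Tuple

# Simplified "N(.N)*" release pattern; real PEP 440 parsing is richer.
_NUMERIC = re.compile(r"\d+(?:\.\d+)*")


class FileRow(NamedTuple):
    """Hypothetical stand-in for the File rows the simple page lists."""
    filename: str
    version: str
    packagetype: str


def file_sort_key(f: FileRow) -> Tuple:
    if _NUMERIC.fullmatch(f.version):
        numeric = tuple(int(part) for part in f.version.split("."))
        return (1, numeric, "", f.packagetype)
    # Versions this sketch cannot parse go first, ordered as plain strings,
    # so sorting never raises on legacy version strings.
    return (0, (), f.version, f.packagetype)
```

The leading 0/1 flag keeps the two groups apart, so integers are never compared with strings.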

@dstufft if it sorts by filename, I would expect the following sorting on this page: https://pypi.org/simple/pre-commit-mirror-maker/

```python

[

'pre-commit-mirror-maker-0.1.0.tar.gz',

'pre-commit-mirror-maker-0.1.1.tar.gz',

'pre-commit-mirror-maker-0.1.2.tar.gz',

'pre-commit-mirror-maker-0.2.0.tar.gz',

'pre-commit-mirror-maker-0.3.0.tar.gz',

'pre-commit-mirror-maker-0.3.1.tar.gz',

'pre-commit-mirror-maker-0.3.2.tar.gz',

'pre-commit-mirror-maker-0.4.0.tar.gz',

'pre_commit_mirror_maker-0.3.2-py2-none-any.whl',

'pre_commit_mirror_maker-0.4.0-py2-none-any.whl',

'pre_commit_mirror_maker-0.4.0-py3-none-any.whl',

]

```

but the wheel files are properly sorted alongside their source distributions -- does postgres normalize this?
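
One possible explanation, not confirmed in this thread, is that the database collation does not separate `_` and `-` the way a plain code-point sort does. The sketch below only demonstrates one thing: a comparison that normalizes `_` to `-` before sorting reproduces the interleaving described. It does not model how PostgreSQL collations actually work.

```python
filenames = [
    "pre-commit-mirror-maker-0.3.1.tar.gz",
    "pre-commit-mirror-maker-0.3.2.tar.gz",
    "pre-commit-mirror-maker-0.4.0.tar.gz",
    "pre_commit_mirror_maker-0.3.2-py2-none-any.whl",
    "pre_commit_mirror_maker-0.4.0-py2-none-any.whl",
    "pre_commit_mirror_maker-0.4.0-py3-none-any.whl",
]

# Plain code-point order: "_" (0x5F) sorts after "-" (0x2D), so every
# wheel lands after every sdist.
codepoint_order = sorted(filenames)

# Treating "_" like "-" groups each wheel with its sdist instead.
normalized_order = sorted(filenames, key=lambda name: name.replace("_", "-"))
```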

I don't honestly recall the specifics of how it sorts other than it was by filename.

Awesome, thanks for the prompt replies :) I'll do some more digging! | 2017-11-12T14:46:03Z | [] | [] |

pypi/warehouse | 2,705 | pypi__warehouse-2705 | ["2654", "956"] | 63fff23ea23ff67d3535404567c26123a93c8bb0 | diff --git a/warehouse/accounts/auth_policy.py b/warehouse/accounts/auth_policy.py

--- a/warehouse/accounts/auth_policy.py

+++ b/warehouse/accounts/auth_policy.py

@@ -34,7 +34,7 @@ def unauthenticated_userid(self, request):

# want to locate the userid from the IUserService.

if username is not None:

login_service = request.find_service(IUserService, context=None)

- return login_service.find_userid(username)

+ return str(login_service.find_userid(username))

class SessionAuthenticationPolicy(_SessionAuthenticationPolicy):

diff --git a/warehouse/accounts/views.py b/warehouse/accounts/views.py

--- a/warehouse/accounts/views.py

+++ b/warehouse/accounts/views.py

@@ -323,15 +323,6 @@ def reset_password(request, _form_class=ResetPasswordForm):

return {"form": form}

-@view_config(

- route_name="accounts.edit_gravatar",

- renderer="accounts/csi/edit_gravatar.csi.html",

- uses_session=True,

-)

-def edit_gravatar_csi(user, request):

- return {"user": user}

-

-

def _login_user(request, userid):

# We have a session factory associated with this request, so in order

# to protect against session fixation attacks we're going to make sure

diff --git a/warehouse/config.py b/warehouse/config.py

--- a/warehouse/config.py

+++ b/warehouse/config.py

@@ -362,6 +362,9 @@ def configure(settings=None):

# Register our authentication support.

config.include(".accounts")

+ # Register logged-in views

+ config.include(".manage")

+

# Allow the packaging app to register any services it has.

config.include(".packaging")

diff --git a/warehouse/manage/__init__.py b/warehouse/manage/__init__.py

new file mode 100644

--- /dev/null

+++ b/warehouse/manage/__init__.py

@@ -0,0 +1,15 @@

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+

+def includeme(config):

+ pass

diff --git a/warehouse/manage/forms.py b/warehouse/manage/forms.py

new file mode 100644

--- /dev/null

+++ b/warehouse/manage/forms.py

@@ -0,0 +1,57 @@

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+import wtforms

+

+from warehouse import forms

+

+

+class RoleNameMixin:

+

+ role_name = wtforms.SelectField(

+ 'Select a role',

+ choices=[

+ ('Owner', 'Owner'),

+ ('Maintainer', 'Maintainer'),

+ ],

+ validators=[

+ wtforms.validators.DataRequired(message="Must select a role"),

+ ]

+ )

+

+

+class UsernameMixin:

+

+ username = wtforms.StringField(

+ validators=[

+ wtforms.validators.DataRequired(message="Must specify a username"),

+ ]

+ )

+

+ def validate_username(self, field):

+ userid = self.user_service.find_userid(field.data)

+

+ if userid is None:

+ raise wtforms.validators.ValidationError(

+ "No user found with that username. Please try again."

+ )

+

+

+class CreateRoleForm(RoleNameMixin, UsernameMixin, forms.Form):

+

+ def __init__(self, *args, user_service, **kwargs):

+ super().__init__(*args, **kwargs)

+ self.user_service = user_service

+

+

+class ChangeRoleForm(RoleNameMixin, forms.Form):

+ pass
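`CreateRoleForm` above receives its `user_service` through a keyword-only parameter (it follows `*args`), and `UsernameMixin.validate_username` rejects names the service cannot resolve. A framework-free sketch of that pattern; `CreateRoleFormSketch` and the local `ValidationError` are hypothetical stand-ins, not the WTForms API:

```python
class ValidationError(ValueError):
    """Hypothetical stand-in for wtforms.validators.ValidationError."""


class CreateRoleFormSketch:
    """Hypothetical, framework-free analogue of CreateRoleForm."""

    def __init__(self, *args, user_service, **kwargs):
        # Declared after *args, user_service is keyword-only: callers must
        # pass user_service=..., so it can never be mistaken for form data.
        self.args = args
        self.kwargs = kwargs
        self.user_service = user_service

    def validate_username(self, username):
        # Same rule as UsernameMixin.validate_username: unknown names fail.
        if self.user_service.find_userid(username) is None:
            raise ValidationError(
                "No user found with that username. Please try again."
            )
```

Passing the service only by keyword keeps the positional slot free for the request's form data (`request.POST`), mirroring how the view constructs the form.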

diff --git a/warehouse/manage/views.py b/warehouse/manage/views.py

new file mode 100644

--- /dev/null

+++ b/warehouse/manage/views.py

@@ -0,0 +1,257 @@

+# Licensed under the Apache License, Version 2.0 (the "License");

+

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+from collections import defaultdict

+

+from pyramid.httpexceptions import HTTPSeeOther

+from pyramid.security import Authenticated

+from pyramid.view import view_config

+from sqlalchemy.orm.exc import NoResultFound

+

+from warehouse.accounts.interfaces import IUserService

+from warehouse.accounts.models import User

+from warehouse.manage.forms import CreateRoleForm, ChangeRoleForm

+from warehouse.packaging.models import JournalEntry, Role

+

+

+@view_config(

+ route_name="manage.profile",

+ renderer="manage/profile.html",

+ uses_session=True,

+ effective_principals=Authenticated,

+)

+def manage_profile(request):

+ return {}

+

+

+@view_config(

+ route_name="manage.projects",

+ renderer="manage/projects.html",

+ uses_session=True,

+ effective_principals=Authenticated,

+)

+def manage_projects(request):

+ return {}

+

+

+@view_config(

+ route_name="manage.project.settings",

+ renderer="manage/project.html",

+ uses_session=True,

+ permission="manage",

+ effective_principals=Authenticated,

+)

+def manage_project_settings(project, request):

+ return {"project": project}

+

+

+@view_config(

+ route_name="manage.project.roles",

+ renderer="manage/roles.html",

+ uses_session=True,

+ require_methods=False,

+ permission="manage",

+)

+def manage_project_roles(project, request, _form_class=CreateRoleForm):

+ user_service = request.find_service(IUserService, context=None)

+ form = _form_class(request.POST, user_service=user_service)

+

+ if request.method == "POST" and form.validate():

+ username = form.username.data

+ role_name = form.role_name.data

+ userid = user_service.find_userid(username)

+ user = user_service.get_user(userid)

+

+ if (request.db.query(

+ request.db.query(Role).filter(

+ Role.user == user,

+ Role.project == project,

+ Role.role_name == role_name,

+ )

+ .exists()).scalar()):

+ request.session.flash(

+ f"User '{username}' already has {role_name} role for project",

+ queue="error"

+ )

+ else:

+ request.db.add(

+ Role(user=user, project=project, role_name=form.role_name.data)

+ )

+ request.db.add(

+ JournalEntry(

+ name=project.name,

+ action=f"add {role_name} {username}",

+ submitted_by=request.user,

+ submitted_from=request.remote_addr,

+ ),

+ )

+ request.session.flash(

+ f"Added collaborator '{form.username.data}'",

+ queue="success"

+ )

+ form = _form_class(user_service=user_service)

+

+ roles = (

+ request.db.query(Role)

+ .join(User)

+ .filter(Role.project == project)

+ .all()

+ )

+

+ # TODO: The following lines are a hack to handle multiple roles for a

+ # single user and should be removed when fixing GH-2745

+ roles_by_user = defaultdict(list)

+ for role in roles:

+ roles_by_user[role.user.username].append(role)

+

+ return {

+ "project": project,

+ "roles_by_user": roles_by_user,

+ "form": form,

+ }

+

+

+@view_config(

+ route_name="manage.project.change_role",

+ uses_session=True,

+ require_methods=["POST"],

+ permission="manage",

+)

+def change_project_role(project, request, _form_class=ChangeRoleForm):

+ # TODO: This view was modified to handle deleting multiple roles for a

+ # single user and should be updated when fixing GH-2745

+

+ form = _form_class(request.POST)

+

+ if form.validate():

+ role_ids = request.POST.getall('role_id')

+

+ if len(role_ids) > 1:

+ # This user has more than one role, so just delete all the ones

+ # that aren't what we want.

+ #

+ # TODO: This branch should be removed when fixing GH-2745.

+ roles = (

+ request.db.query(Role)

+ .filter(

+ Role.id.in_(role_ids),

+ Role.project == project,

+ Role.role_name != form.role_name.data

+ )

+ .all()

+ )

+ removing_self = any(

+ role.role_name == "Owner" and role.user == request.user

+ for role in roles

+ )

+ if removing_self:

+ request.session.flash(

+ "Cannot remove yourself as Owner", queue="error"

+ )

+ else:

+ for role in roles:

+ request.db.delete(role)

+ request.db.add(

+ JournalEntry(

+ name=project.name,

+ action=f"remove {role.role_name} {role.user_name}",

+ submitted_by=request.user,

+ submitted_from=request.remote_addr,

+ ),

+ )

+ request.session.flash(

+ 'Successfully changed role', queue="success"

+ )

+ else:

+ # This user only has one role, so get it and change the type.

+ try:

+ role = (

+ request.db.query(Role)

+ .filter(

+ Role.id == request.POST.get('role_id'),

+ Role.project == project,

+ )

+ .one()

+ )

+ if role.role_name == "Owner" and role.user == request.user:

+ request.session.flash(

+ "Cannot remove yourself as Owner", queue="error"

+ )

+ else:

+ request.db.add(

+ JournalEntry(

+ name=project.name,

+ action="change {} {} to {}".format(

+ role.role_name,

+ role.user_name,

+ form.role_name.data,

+ ),

+ submitted_by=request.user,

+ submitted_from=request.remote_addr,

+ ),

+ )

+ role.role_name = form.role_name.data

+ request.session.flash(

+ 'Successfully changed role', queue="success"

+ )

+ except NoResultFound:

+ request.session.flash("Could not find role", queue="error")

+

+ return HTTPSeeOther(

+ request.route_path('manage.project.roles', name=project.name)

+ )
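The multiple-role branch of `change_project_role` above reduces to a small decision: delete every role the user holds except the requested one, unless that would drop the requester's own Owner role. A framework-free sketch of that rule, using a hypothetical `RoleRow` type in place of `Role`; unlike the view, which flashes an error and redirects, this sketch raises `PermissionError`:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RoleRow:
    """Hypothetical stand-in for a Role row."""
    id: int
    username: str
    role_name: str


def plan_role_change(user_roles, new_role_name, current_username):
    """Return the rows to delete when collapsing a user's roles to one.

    Every role other than new_role_name is removed, unless one of those
    is an Owner role held by the requesting user.
    """
    to_delete = [r for r in user_roles if r.role_name != new_role_name]
    if any(
        r.role_name == "Owner" and r.username == current_username
        for r in to_delete
    ):
        raise PermissionError("Cannot remove yourself as Owner")
    return to_delete
```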

+

+

+@view_config(

+ route_name="manage.project.delete_role",

+ uses_session=True,

+ require_methods=["POST"],

+ permission="manage",

+)

+def delete_project_role(project, request):

+ # TODO: This view was modified to handle deleting multiple roles for a

+ # single user and should be updated when fixing GH-2745

+

+ roles = (

+ request.db.query(Role)

+ .filter(

+ Role.id.in_(request.POST.getall('role_id')),

+ Role.project == project,

+ )

+ .all()

+ )

+ removing_self = any(

+ role.role_name == "Owner" and role.user == request.user

+ for role in roles

+ )

+

+ if not roles:

+ request.session.flash("Could not find role", queue="error")

+ elif removing_self:

+ request.session.flash("Cannot remove yourself as Owner", queue="error")

+ else:

+ for role in roles:

+ request.db.delete(role)

+ request.db.add(

+ JournalEntry(

+ name=project.name,

+ action=f"remove {role.role_name} {role.user_name}",

+ submitted_by=request.user,

+ submitted_from=request.remote_addr,

+ ),

+ )

+ request.session.flash("Successfully removed role", queue="success")

+

+ return HTTPSeeOther(

+ request.route_path('manage.project.roles', name=project.name)

+ )
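`manage_project_roles` above groups roles with a `defaultdict(list)` keyed by username, and the `Role.__gt__` hack in `warehouse/packaging/models.py` lets a caller pick the "highest" role per user. Defining only `__gt__` is enough for `max()`, which compares candidates with `>`. A self-contained sketch with a hypothetical `RoleStub` class in place of the SQLAlchemy model:

```python
from collections import defaultdict
from dataclasses import dataclass

ROLE_ORDER = ["Maintainer", "Owner"]  # lowest to highest, as in Role.__gt__


@dataclass
class RoleStub:
    """Hypothetical stand-in for warehouse.packaging.models.Role."""
    username: str
    role_name: str

    def __gt__(self, other):
        return ROLE_ORDER.index(self.role_name) > ROLE_ORDER.index(other.role_name)


def group_by_user(roles):
    # Same grouping as the view: one list of roles per username.
    roles_by_user = defaultdict(list)
    for role in roles:
        roles_by_user[role.username].append(role)
    return roles_by_user


def highest_roles(roles):
    # max() relies on RoleStub.__gt__ to rank Owner above Maintainer.
    return {
        username: max(user_roles).role_name
        for username, user_roles in group_by_user(roles).items()
    }
```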

diff --git a/warehouse/packaging/models.py b/warehouse/packaging/models.py

--- a/warehouse/packaging/models.py

+++ b/warehouse/packaging/models.py

@@ -59,6 +59,16 @@ class Role(db.Model):

user = orm.relationship(User, lazy=False)

project = orm.relationship("Project", lazy=False)

+ def __gt__(self, other):

+ '''

+ Temporary hack to allow us to only display the 'highest' role when

+ there are multiple for a given user

+

+ TODO: This should be removed when fixing GH-2745.

+ '''

+ order = ['Maintainer', 'Owner'] # from lowest to highest

+ return order.index(self.role_name) > order.index(other.role_name)

+

class ProjectFactory:

@@ -147,8 +157,10 @@ def __acl__(self):

for role in sorted(

query.all(),

key=lambda x: ["Owner", "Maintainer"].index(x.role_name)):

- acls.append((Allow, role.user.id, ["upload"]))

-

+ if role.role_name == "Owner":

+ acls.append((Allow, str(role.user.id), ["manage", "upload"]))

+ else:

+ acls.append((Allow, str(role.user.id), ["upload"]))

return acls

@property
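The patch converts user ids to strings in two places: `unauthenticated_userid` now returns `str(...)`, and `Project.__acl__` emits `str(role.user.id)`. Principal matching is a membership test, so a `uuid.UUID` never matches its own string form. A simplified, hypothetical sketch of a first-match ACL walk (not Pyramid's `ACLAuthorizationPolicy`, which also handles `Deny`, context lineage, and single-string permissions) shows why both sides must use the same type:

```python
import uuid

ALLOW = "Allow"


def project_acl(roles):
    """Build ACEs like the patched Project.__acl__: Owners get manage+upload."""
    acl = []
    for user_id, role_name in roles:
        if role_name == "Owner":
            acl.append((ALLOW, str(user_id), ["manage", "upload"]))
        else:
            acl.append((ALLOW, str(user_id), ["upload"]))
    return acl


def permits(acl, principals, permission):
    """Simplified first-match ACL check (hypothetical, not Pyramid's code)."""
    for action, principal, permissions in acl:
        if principal in principals and permission in permissions:
            return action == ALLOW
    return False


if __name__ == "__main__":
    owner = uuid.uuid4()
    acl = project_acl([(owner, "Owner")])
    print(permits(acl, {str(owner)}, "manage"))  # string principal matches
    print(permits(acl, {owner}, "manage"))  # raw UUID does not
```

Because this sketch only emits `Allow` entries, the Owner-first sort in the real `__acl__` does not change the outcome here.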

diff --git a/warehouse/routes.py b/warehouse/routes.py

--- a/warehouse/routes.py

+++ b/warehouse/routes.py

@@ -97,11 +97,36 @@ def includeme(config):

"/account/reset-password/",

domain=warehouse,

)

+

+ # Management (views for logged-in users)

+ config.add_route("manage.profile", "/manage/profile/", domain=warehouse)

+ config.add_route("manage.projects", "/manage/projects/", domain=warehouse)

config.add_route(

- "accounts.edit_gravatar",

- "/user/{username}/edit_gravatar/",

- factory="warehouse.accounts.models:UserFactory",

- traverse="/{username}",

+ "manage.project.settings",

+ "/manage/project/{name}/settings/",

+ factory="warehouse.packaging.models:ProjectFactory",

+ traverse="/{name}",

+ domain=warehouse,

+ )

+ config.add_route(

+ "manage.project.roles",

+ "/manage/project/{name}/collaboration/",

+ factory="warehouse.packaging.models:ProjectFactory",

+ traverse="/{name}",

+ domain=warehouse,

+ )

+ config.add_route(

+ "manage.project.change_role",

+ "/manage/project/{name}/collaboration/change/",

+ factory="warehouse.packaging.models:ProjectFactory",

+ traverse="/{name}",

+ domain=warehouse,

+ )

+ config.add_route(

+ "manage.project.delete_role",

+ "/manage/project/{name}/collaboration/delete/",

+ factory="warehouse.packaging.models:ProjectFactory",

+ traverse="/{name}",

domain=warehouse,

)

diff --git a/warehouse/views.py b/warehouse/views.py

--- a/warehouse/views.py

+++ b/warehouse/views.py

@@ -16,10 +16,12 @@

HTTPException, HTTPSeeOther, HTTPMovedPermanently, HTTPNotFound,

HTTPBadRequest, exception_response,

)

+from pyramid.exceptions import PredicateMismatch

from pyramid.renderers import render_to_response

from pyramid.response import Response

from pyramid.view import (

- notfound_view_config, forbidden_view_config, view_config,

+ notfound_view_config, forbidden_view_config, exception_view_config,

+ view_config,

)

from elasticsearch_dsl import Q

from sqlalchemy import func

@@ -107,6 +109,7 @@ def httpexception_view(exc, request):

@forbidden_view_config()

+@exception_view_config(PredicateMismatch)

def forbidden(exc, request, redirect_to="accounts.login"):

# If the forbidden error is because the user isn't logged in, then we'll

# redirect them to the log in page.

| diff --git a/tests/unit/accounts/test_auth_policy.py b/tests/unit/accounts/test_auth_policy.py

--- a/tests/unit/accounts/test_auth_policy.py

+++ b/tests/unit/accounts/test_auth_policy.py

@@ -11,6 +11,7 @@

# limitations under the License.

import pretend

+import uuid

from pyramid import authentication

from pyramid.interfaces import IAuthenticationPolicy

@@ -74,7 +75,7 @@ def test_unauthenticated_userid_with_userid(self, monkeypatch):

add_vary_cb = pretend.call_recorder(lambda *v: vary_cb)

monkeypatch.setattr(auth_policy, "add_vary_callback", add_vary_cb)

- userid = pretend.stub()

+ userid = uuid.uuid4()

service = pretend.stub(

find_userid=pretend.call_recorder(lambda username: userid),

)

@@ -83,7 +84,7 @@ def test_unauthenticated_userid_with_userid(self, monkeypatch):

add_response_callback=pretend.call_recorder(lambda cb: None),

)

- assert policy.unauthenticated_userid(request) is userid

+ assert policy.unauthenticated_userid(request) == str(userid)

assert extract_http_basic_credentials.calls == [pretend.call(request)]

assert request.find_service.calls == [

pretend.call(IUserService, context=None),

diff --git a/tests/unit/accounts/test_views.py b/tests/unit/accounts/test_views.py

--- a/tests/unit/accounts/test_views.py

+++ b/tests/unit/accounts/test_views.py

@@ -559,15 +559,6 @@ def test_reset_password(self, db_request, user_service, token_service):

]

-class TestClientSideIncludes:

-

- def test_edit_gravatar_csi_returns_user(self, db_request):

- user = UserFactory.create()

- assert views.edit_gravatar_csi(user, db_request) == {

- "user": user,

- }

-

-

class TestProfileCallout:

def test_profile_callout_returns_user(self):

diff --git a/tests/unit/manage/__init__.py b/tests/unit/manage/__init__.py

new file mode 100644

--- /dev/null

+++ b/tests/unit/manage/__init__.py

@@ -0,0 +1,11 @@

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

diff --git a/tests/unit/manage/test_forms.py b/tests/unit/manage/test_forms.py

new file mode 100644

--- /dev/null

+++ b/tests/unit/manage/test_forms.py

@@ -0,0 +1,71 @@

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+import pretend

+import pytest

+import wtforms

+

+from webob.multidict import MultiDict

+

+from warehouse.manage import forms

+

+

+class TestCreateRoleForm:

+

+ def test_creation(self):

+ user_service = pretend.stub()

+ form = forms.CreateRoleForm(user_service=user_service)

+

+ assert form.user_service is user_service

+

+ def test_validate_username_with_no_user(self):

+ user_service = pretend.stub(

+ find_userid=pretend.call_recorder(lambda userid: None),

+ )

+ form = forms.CreateRoleForm(user_service=user_service)

+ field = pretend.stub(data="my_username")

+

+ with pytest.raises(wtforms.validators.ValidationError):

+ form.validate_username(field)

+

+ assert user_service.find_userid.calls == [pretend.call("my_username")]

+

+ def test_validate_username_with_user(self):

+ user_service = pretend.stub(

+ find_userid=pretend.call_recorder(lambda userid: 1),

+ )

+ form = forms.CreateRoleForm(user_service=user_service)

+ field = pretend.stub(data="my_username")

+

+ form.validate_username(field)

+

+ assert user_service.find_userid.calls == [pretend.call("my_username")]

+

+ @pytest.mark.parametrize(("value", "expected"), [

+ ("", "Must select a role"),

+ ("invalid", "Not a valid choice"),

+ (None, "Not a valid choice"),

+ ])

+ def test_validate_role_name_fails(self, value, expected):

+ user_service = pretend.stub(

+ find_userid=pretend.call_recorder(lambda userid: 1),

+ )

+ form = forms.CreateRoleForm(

+ MultiDict({

+ 'role_name': value,

+ 'username': 'valid_username',

+ }),

+ user_service=user_service,

+ )

+

+ assert not form.validate()

+ assert form.role_name.errors == [expected]

diff --git a/tests/unit/manage/test_views.py b/tests/unit/manage/test_views.py

new file mode 100644

--- /dev/null

+++ b/tests/unit/manage/test_views.py

@@ -0,0 +1,505 @@

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+import uuid

+

+import pretend

+

+from pyramid.httpexceptions import HTTPSeeOther

+from webob.multidict import MultiDict

+

+from warehouse.manage import views

+from warehouse.accounts.interfaces import IUserService

+from warehouse.packaging.models import JournalEntry, Role

+

+from ...common.db.packaging import ProjectFactory, RoleFactory, UserFactory

+

+

+class TestManageProfile:

+

+ def test_manage_profile(self):

+ request = pretend.stub()

+

+ assert views.manage_profile(request) == {}

+

+

+class TestManageProjects:

+

+ def test_manage_projects(self):

+ request = pretend.stub()

+

+ assert views.manage_projects(request) == {}

+

+

+class TestManageProjectSettings:

+

+ def test_manage_project_settings(self):

+ request = pretend.stub()

+ project = pretend.stub()

+

+ assert views.manage_project_settings(project, request) == {

+ "project": project,

+ }

+

+

+class TestManageProjectRoles:

+

+ def test_get_manage_project_roles(self, db_request):

+ user_service = pretend.stub()

+ db_request.find_service = pretend.call_recorder(

+ lambda iface, context: user_service

+ )

+ form_obj = pretend.stub()

+ form_class = pretend.call_recorder(lambda d, user_service: form_obj)

+

+ project = ProjectFactory.create(name="foobar")

+ user = UserFactory.create()

+ role = RoleFactory.create(user=user, project=project)

+

+ result = views.manage_project_roles(

+ project, db_request, _form_class=form_class

+ )

+

+ assert db_request.find_service.calls == [

+ pretend.call(IUserService, context=None),

+ ]

+ assert form_class.calls == [

+ pretend.call(db_request.POST, user_service=user_service),

+ ]

+ assert result == {

+

+ "project": project,

+ "roles_by_user": {user.username: [role]},

+ "form": form_obj,

+ }

+

+ def test_post_new_role_validation_fails(self, db_request):

+ project = ProjectFactory.create(name="foobar")

+ user = UserFactory.create(username="testuser")

+ role = RoleFactory.create(user=user, project=project)

+

+ user_service = pretend.stub()

+ db_request.find_service = pretend.call_recorder(

+ lambda iface, context: user_service

+ )

+ db_request.method = "POST"

+ form_obj = pretend.stub(validate=pretend.call_recorder(lambda: False))

+ form_class = pretend.call_recorder(lambda d, user_service: form_obj)

+

+ result = views.manage_project_roles(

+ project, db_request, _form_class=form_class

+ )

+

+ assert db_request.find_service.calls == [

+ pretend.call(IUserService, context=None),

+ ]

+ assert form_class.calls == [

+ pretend.call(db_request.POST, user_service=user_service),

+ ]

+ assert form_obj.validate.calls == [pretend.call()]

+ assert result == {

+ "project": project,

+ "roles_by_user": {user.username: [role]},

+ "form": form_obj,

+ }

+

+ def test_post_new_role(self, db_request):

+ project = ProjectFactory.create(name="foobar")

+ user = UserFactory.create(username="testuser")

+

+ user_service = pretend.stub(

+ find_userid=lambda username: user.id,

+ get_user=lambda userid: user,

+ )

+ db_request.find_service = pretend.call_recorder(

+ lambda iface, context: user_service

+ )

+ db_request.method = "POST"

+ db_request.POST = pretend.stub()

+ db_request.remote_addr = "10.10.10.10"

+ db_request.user = UserFactory.create()

+ form_obj = pretend.stub(

+ validate=pretend.call_recorder(lambda: True),

+ username=pretend.stub(data=user.username),

+ role_name=pretend.stub(data="Owner"),

+ )

+ form_class = pretend.call_recorder(lambda *a, **kw: form_obj)

+ db_request.session = pretend.stub(

+ flash=pretend.call_recorder(lambda *a, **kw: None),

+ )

+

+ result = views.manage_project_roles(

+ project, db_request, _form_class=form_class

+ )

+

+ assert db_request.find_service.calls == [

+ pretend.call(IUserService, context=None),

+ ]

+ assert form_obj.validate.calls == [pretend.call()]

+ assert form_class.calls == [

+ pretend.call(db_request.POST, user_service=user_service),

+ pretend.call(user_service=user_service),

+ ]

+ assert db_request.session.flash.calls == [

+ pretend.call("Added collaborator 'testuser'", queue="success"),

+ ]

+

+ # Only one role is created

+ role = db_request.db.query(Role).one()

+

+ assert result == {

+ "project": project,

+ "roles_by_user": {user.username: [role]},

+ "form": form_obj,

+ }

+

+ entry = db_request.db.query(JournalEntry).one()

+

+ assert entry.name == project.name

+ assert entry.action == "add Owner testuser"

+ assert entry.submitted_by == db_request.user

+ assert entry.submitted_from == db_request.remote_addr

+

+ def test_post_duplicate_role(self, db_request):

+ project = ProjectFactory.create(name="foobar")

+ user = UserFactory.create(username="testuser")

+ role = RoleFactory.create(

+ user=user, project=project, role_name="Owner"

+ )

+

+ user_service = pretend.stub(

+ find_userid=lambda username: user.id,

+ get_user=lambda userid: user,

+ )

+ db_request.find_service = pretend.call_recorder(

+ lambda iface, context: user_service

+ )

+ db_request.method = "POST"

+ db_request.POST = pretend.stub()

+ form_obj = pretend.stub(

+ validate=pretend.call_recorder(lambda: True),

+ username=pretend.stub(data=user.username),

+ role_name=pretend.stub(data=role.role_name),

+ )

+ form_class = pretend.call_recorder(lambda *a, **kw: form_obj)

+ db_request.session = pretend.stub(

+ flash=pretend.call_recorder(lambda *a, **kw: None),

+ )

+

+ result = views.manage_project_roles(

+ project, db_request, _form_class=form_class

+ )

+

+ assert db_request.find_service.calls == [

+ pretend.call(IUserService, context=None),

+ ]

+ assert form_obj.validate.calls == [pretend.call()]

+ assert form_class.calls == [

+ pretend.call(db_request.POST, user_service=user_service),

+ pretend.call(user_service=user_service),

+ ]

+ assert db_request.session.flash.calls == [

+ pretend.call(

+ "User 'testuser' already has Owner role for project",

+ queue="error",

+ ),

+ ]

+

+ # No additional roles are created

+ assert role == db_request.db.query(Role).one()

+

+ assert result == {

+ "project": project,

+ "roles_by_user": {user.username: [role]},

+ "form": form_obj,

+ }

+

+

+class TestChangeProjectRoles:

+

+ def test_change_role(self, db_request):

+ project = ProjectFactory.create(name="foobar")

+ user = UserFactory.create(username="testuser")

+ role = RoleFactory.create(

+ user=user, project=project, role_name="Owner"

+ )

+ new_role_name = "Maintainer"

+

+ db_request.method = "POST"

+ db_request.user = UserFactory.create()

+ db_request.remote_addr = "10.10.10.10"

+ db_request.POST = MultiDict({

+ "role_id": role.id,

+ "role_name": new_role_name,

+ })

+ db_request.session = pretend.stub(

+ flash=pretend.call_recorder(lambda *a, **kw: None),

+ )

+ db_request.route_path = pretend.call_recorder(

+ lambda *a, **kw: "/the-redirect"

+ )

+

+ result = views.change_project_role(project, db_request)

+

+ assert role.role_name == new_role_name

+ assert db_request.route_path.calls == [

+ pretend.call('manage.project.roles', name=project.name),

+ ]

+ assert db_request.session.flash.calls == [

+ pretend.call("Successfully changed role", queue="success"),

+ ]

+ assert isinstance(result, HTTPSeeOther)

+ assert result.headers["Location"] == "/the-redirect"

+

+ entry = db_request.db.query(JournalEntry).one()

+

+ assert entry.name == project.name

+ assert entry.action == "change Owner testuser to Maintainer"

+ assert entry.submitted_by == db_request.user

+ assert entry.submitted_from == db_request.remote_addr

+

+ def test_change_role_invalid_role_name(self, pyramid_request):

+ project = pretend.stub(name="foobar")

+

+ pyramid_request.method = "POST"

+ pyramid_request.POST = MultiDict({

+ "role_id": str(uuid.uuid4()),

+ "role_name": "Invalid Role Name",

+ })

+ pyramid_request.route_path = pretend.call_recorder(

+ lambda *a, **kw: "/the-redirect"

+ )

+

+ result = views.change_project_role(project, pyramid_request)

+

+ assert pyramid_request.route_path.calls == [

+ pretend.call('manage.project.roles', name=project.name),

+ ]

+ assert isinstance(result, HTTPSeeOther)

+ assert result.headers["Location"] == "/the-redirect"

+

+ def test_change_role_when_multiple(self, db_request):

+ project = ProjectFactory.create(name="foobar")

+ user = UserFactory.create(username="testuser")

+ owner_role = RoleFactory.create(

+ user=user, project=project, role_name="Owner"

+ )

+ maintainer_role = RoleFactory.create(

+ user=user, project=project, role_name="Maintainer"

+ )

+ new_role_name = "Maintainer"

+

+ db_request.method = "POST"

+ db_request.user = UserFactory.create()

+ db_request.remote_addr = "10.10.10.10"

+ db_request.POST = MultiDict([

+ ("role_id", owner_role.id),

+ ("role_id", maintainer_role.id),

+ ("role_name", new_role_name),

+ ])

+ db_request.session = pretend.stub(

+ flash=pretend.call_recorder(lambda *a, **kw: None),

+ )

+ db_request.route_path = pretend.call_recorder(

+ lambda *a, **kw: "/the-redirect"

+ )

+

+ result = views.change_project_role(project, db_request)

+

+ assert db_request.db.query(Role).all() == [maintainer_role]

+ assert db_request.route_path.calls == [

+ pretend.call('manage.project.roles', name=project.name),

+ ]

+ assert db_request.session.flash.calls == [

+ pretend.call("Successfully changed role", queue="success"),

+ ]

+ assert isinstance(result, HTTPSeeOther)

+ assert result.headers["Location"] == "/the-redirect"

+

+ entry = db_request.db.query(JournalEntry).one()

+

+ assert entry.name == project.name

+ assert entry.action == "remove Owner testuser"

+ assert entry.submitted_by == db_request.user

+ assert entry.submitted_from == db_request.remote_addr

+

+ def test_change_missing_role(self, db_request):

+ project = ProjectFactory.create(name="foobar")

+ missing_role_id = str(uuid.uuid4())

+

+ db_request.method = "POST"

+ db_request.user = pretend.stub()

+ db_request.POST = MultiDict({

+ "role_id": missing_role_id,

+ "role_name": 'Owner',

+ })

+ db_request.session = pretend.stub(

+ flash=pretend.call_recorder(lambda *a, **kw: None),

+ )

+ db_request.route_path = pretend.call_recorder(

+ lambda *a, **kw: "/the-redirect"

+ )

+

+ result = views.change_project_role(project, db_request)

+

+ assert db_request.session.flash.calls == [

+ pretend.call("Could not find role", queue="error"),

+ ]

+ assert isinstance(result, HTTPSeeOther)

+ assert result.headers["Location"] == "/the-redirect"

+

+ def test_change_own_owner_role(self, db_request):

+ project = ProjectFactory.create(name="foobar")

+ user = UserFactory.create(username="testuser")

+ role = RoleFactory.create(

+ user=user, project=project, role_name="Owner"

+ )

+

+ db_request.method = "POST"

+ db_request.user = user

+ db_request.POST = MultiDict({

+ "role_id": role.id,

+ "role_name": "Maintainer",

+ })

+ db_request.session = pretend.stub(

+ flash=pretend.call_recorder(lambda *a, **kw: None),

+ )

+ db_request.route_path = pretend.call_recorder(

+ lambda *a, **kw: "/the-redirect"

+ )

+

+ result = views.change_project_role(project, db_request)

+

+ assert db_request.session.flash.calls == [

+ pretend.call("Cannot remove yourself as Owner", queue="error"),

+ ]

+ assert isinstance(result, HTTPSeeOther)

+ assert result.headers["Location"] == "/the-redirect"

+

+ def test_change_own_owner_role_when_multiple(self, db_request):

+ project = ProjectFactory.create(name="foobar")

+ user = UserFactory.create(username="testuser")

+ owner_role = RoleFactory.create(

+ user=user, project=project, role_name="Owner"

+ )

+ maintainer_role = RoleFactory.create(

+ user=user, project=project, role_name="Maintainer"

+ )

+

+ db_request.method = "POST"

+ db_request.user = user

+ db_request.POST = MultiDict([

+ ("role_id", owner_role.id),

+ ("role_id", maintainer_role.id),

+ ("role_name", "Maintainer"),

+ ])

+ db_request.session = pretend.stub(

+ flash=pretend.call_recorder(lambda *a, **kw: None),

+ )

+ db_request.route_path = pretend.call_recorder(

+ lambda *a, **kw: "/the-redirect"

+ )

+

+ result = views.change_project_role(project, db_request)

+

+ assert db_request.session.flash.calls == [

+ pretend.call("Cannot remove yourself as Owner", queue="error"),

+ ]

+ assert isinstance(result, HTTPSeeOther)

+ assert result.headers["Location"] == "/the-redirect"

+

+

+class TestDeleteProjectRoles:
+
+    def test_delete_role(self, db_request):
+        project = ProjectFactory.create(name="foobar")
+        user = UserFactory.create(username="testuser")
+        role = RoleFactory.create(
+            user=user, project=project, role_name="Owner"
+        )
+
+        db_request.method = "POST"
+        db_request.user = UserFactory.create()
+        db_request.remote_addr = "10.10.10.10"
+        db_request.POST = MultiDict({"role_id": role.id})
+        db_request.session = pretend.stub(
+            flash=pretend.call_recorder(lambda *a, **kw: None),
+        )
+        db_request.route_path = pretend.call_recorder(
+            lambda *a, **kw: "/the-redirect"
+        )
+
+        result = views.delete_project_role(project, db_request)
+
+        assert db_request.route_path.calls == [
+            pretend.call('manage.project.roles', name=project.name),
+        ]
+        assert db_request.db.query(Role).all() == []
+        assert db_request.session.flash.calls == [
+            pretend.call("Successfully removed role", queue="success"),
+        ]
+        assert isinstance(result, HTTPSeeOther)
+        assert result.headers["Location"] == "/the-redirect"
+
+        entry = db_request.db.query(JournalEntry).one()
+
+        assert entry.name == project.name
+        assert entry.action == "remove Owner testuser"
+        assert entry.submitted_by == db_request.user
+        assert entry.submitted_from == db_request.remote_addr
+
+    def test_delete_missing_role(self, db_request):
+        project = ProjectFactory.create(name="foobar")
+        missing_role_id = str(uuid.uuid4())
+
+        db_request.method = "POST"
+        db_request.user = pretend.stub()
+        db_request.POST = MultiDict({"role_id": missing_role_id})
+        db_request.session = pretend.stub(
+            flash=pretend.call_recorder(lambda *a, **kw: None),
+        )
+        db_request.route_path = pretend.call_recorder(
+            lambda *a, **kw: "/the-redirect"
+        )
+
+        result = views.delete_project_role(project, db_request)
+
+        assert db_request.session.flash.calls == [
+            pretend.call("Could not find role", queue="error"),
+        ]
+        assert isinstance(result, HTTPSeeOther)
+        assert result.headers["Location"] == "/the-redirect"
+
+    def test_delete_own_owner_role(self, db_request):
+        project = ProjectFactory.create(name="foobar")
+        user = UserFactory.create(username="testuser")
+        role = RoleFactory.create(
+            user=user, project=project, role_name="Owner"
+        )
+
+        db_request.method = "POST"
+        db_request.user = user
+        db_request.POST = MultiDict({"role_id": role.id})
+        db_request.session = pretend.stub(
+            flash=pretend.call_recorder(lambda *a, **kw: None),
+        )
+        db_request.route_path = pretend.call_recorder(
+            lambda *a, **kw: "/the-redirect"
+        )
+
+        result = views.delete_project_role(project, db_request)
+
+        assert db_request.session.flash.calls == [
+            pretend.call("Cannot remove yourself as Owner", queue="error"),
+        ]
+        assert isinstance(result, HTTPSeeOther)
+        assert result.headers["Location"] == "/the-redirect"
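The tests above that expect "Cannot remove yourself as Owner" all exercise one rule. A minimal sketch of the check they imply, assuming the view refuses a change or deletion whenever any targeted role is an Owner role held by the requesting user. `RoleRecord`, `removes_own_ownership`, and `removal_journal_action` are hypothetical names, not Warehouse's API, and the real views may be structured differently. The journal helper only reproduces the `"remove Owner testuser"` string asserted in `test_delete_role`.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class RoleRecord:
    """Hypothetical stand-in for a Role row: who holds which role."""
    user_id: str
    username: str
    role_name: str  # e.g. "Owner" or "Maintainer"


def removes_own_ownership(roles: Iterable[RoleRecord], requester_id: str) -> bool:
    """Return True if any targeted role is an Owner role held by the requester.

    Assumed rule, inferred from the tests: such a change or deletion is
    refused with the flash message "Cannot remove yourself as Owner".
    """
    return any(
        role.role_name == "Owner" and role.user_id == requester_id
        for role in roles
    )


def removal_journal_action(role: RoleRecord) -> str:
    """Journal action text in the shape asserted by test_delete_role."""
    return f"remove {role.role_name} {role.username}"


owner = RoleRecord("u1", "testuser", "Owner")
maintainer = RoleRecord("u1", "testuser", "Maintainer")

# Demoting yourself while holding both roles is refused.
print(removes_own_ownership([owner, maintainer], requester_id="u1"))  # True
# Another user removing testuser's Owner role is allowed.
print(removes_own_ownership([owner], requester_id="u2"))  # False
print(removal_journal_action(owner))  # remove Owner testuser
```

Checking every targeted role with `any()` is what makes the multiple-role case fail closed: holding a Maintainer role alongside the Owner role does not let a user demote themselves.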

diff --git a/tests/unit/packaging/test_models.py b/tests/unit/packaging/test_models.py
--- a/tests/unit/packaging/test_models.py
+++ b/tests/unit/packaging/test_models.py
@@ -27,6 +27,21 @@
 )
+class TestRole:
+
+    def test_role_ordering(self, db_request):
+        project = DBProjectFactory.create()
+        owner_role = DBRoleFactory.create(
+            project=project,
+            role_name="Owner",
+        )
+        maintainer_role = DBRoleFactory.create(
+            project=project,
+            role_name="Maintainer",
+        )
+        assert max([maintainer_role, owner_role]) == owner_role
+
+
 class TestProjectFactory:
     @pytest.mark.parametrize(
@@ -95,10 +110,10 @@ def test_acl(self, db_session):
         assert project.__acl__() == [
             (Allow, "group:admins", "admin"),
-            (Allow, owner1.user.id, ["upload"]),
-            (Allow, owner2.user.id, ["upload"]),
-            (Allow, maintainer1.user.id, ["upload"]),
-            (Allow, maintainer2.user.id, ["upload"]),
+            (Allow, str(owner1.user.id), ["manage", "upload"]),
+            (Allow, str(owner2.user.id), ["manage", "upload"]),
+            (Allow, str(maintainer1.user.id), ["upload"]),
+            (Allow, str(maintainer2.user.id), ["upload"]),
         ]
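The model tests above expect two behaviours: `max()` over a project's roles returns the Owner role, and `__acl__()` grants owners `["manage", "upload"]` and maintainers `["upload"]`, keyed by the stringified user id. A minimal sketch of both, assuming an explicit rank table. `RANK`, `PERMISSIONS`, `OrderedRole`, and `project_acl` are hypothetical names; the real `Role` model and `Project.__acl__` may be implemented differently. Pyramid's `Allow` constant is the plain string `"Allow"`, so a local copy stands in for it and the block runs without Pyramid.

```python
import functools
from dataclasses import dataclass
from uuid import UUID, uuid4

# Same value as Pyramid's Allow constant; kept local to avoid the dependency.
ALLOW = "Allow"

# Hypothetical rank table: a higher number means a more privileged role.
RANK = {"Maintainer": 0, "Owner": 1}

# Permissions per role, matching what test_acl asserts.
PERMISSIONS = {"Owner": ["manage", "upload"], "Maintainer": ["upload"]}


@functools.total_ordering
@dataclass(eq=False)  # eq=False keeps identity equality, so two Owners stay distinct
class OrderedRole:
    user_id: UUID
    role_name: str

    def __lt__(self, other):
        if not isinstance(other, OrderedRole):
            return NotImplemented
        return RANK[self.role_name] < RANK[other.role_name]


def project_acl(roles):
    """Admins first, then owners, then maintainers, keyed by str(user_id)."""
    acl = [(ALLOW, "group:admins", "admin")]
    # reverse=True puts Owners first; Python's sort stays stable, so roles
    # of equal rank keep their input order.
    for role in sorted(roles, reverse=True):
        acl.append((ALLOW, str(role.user_id), list(PERMISSIONS[role.role_name])))
    return acl


owner = OrderedRole(uuid4(), "Owner")
maintainer = OrderedRole(uuid4(), "Maintainer")
print(max([maintainer, owner]) is owner)  # True
```

Defining only `__lt__` and letting `functools.total_ordering` derive the rest keeps the rank table as the single source of truth for both `max()` and the ACL ordering.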

diff --git a/tests/unit/test_config.py b/tests/unit/test_config.py
--- a/tests/unit/test_config.py
+++ b/tests/unit/test_config.py
@@ -336,6 +336,7 @@ def __init__(self):
             pretend.call(".cache.http"),
             pretend.call(".cache.origin"),
             pretend.call(".accounts"),
+            pretend.call(".manage"),
             pretend.call(".packaging"),
             pretend.call(".redirects"),
             pretend.call(".routes"),

diff --git a/tests/unit/test_routes.py b/tests/unit/test_routes.py
--- a/tests/unit/test_routes.py
+++ b/tests/unit/test_routes.py
@@ -126,10 +126,41 @@ def add_policy(name, filename):
             domain=warehouse,
         ),
         pretend.call(
-            "accounts.edit_gravatar",
-            "/user/{username}/edit_gravatar/",
-            factory="warehouse.accounts.models:UserFactory",
-            traverse="/{username}",
+            "manage.profile",
+            "/manage/profile/",
+            domain=warehouse
+        ),
+        pretend.call(
+            "manage.projects",
+            "/manage/projects/",
+            domain=warehouse
+        ),
+        pretend.call(
+            "manage.project.settings",
+            "/manage/project/{name}/settings/",
+            factory="warehouse.packaging.models:ProjectFactory",
+            traverse="/{name}",
+            domain=warehouse,
+        ),
+        pretend.call(
+            "manage.project.roles",
+            "/manage/project/{name}/collaboration/",
+            factory="warehouse.packaging.models:ProjectFactory",
+            traverse="/{name}",
+            domain=warehouse,
+        ),
+        pretend.call(
+            "manage.project.change_role",
+            "/manage/project/{name}/collaboration/change/",
+            factory="warehouse.packaging.models:ProjectFactory",
+            traverse="/{name}",
+            domain=warehouse,
+        ),
+        pretend.call(
+            "manage.project.delete_role",
+            "/manage/project/{name}/collaboration/delete/",
+            factory="warehouse.packaging.models:ProjectFactory",