package

stringlengths 1

122

| pacakge-description

stringlengths 0

1.3M

|

|---|---|

anqa-events

|

anqa-events

|

anqa-rest

|

anqa-rest

|

anqr

|

No description available on PyPI.

|

anrg.saga

|

Saga

Saga: Scheduling Algorithms Gathered.

Introduction

This repository contains a collection of scheduling algorithms. The algorithms are implemented in Python using a common interface. Scripts for validating the schedules produced by the algorithms and for comparing their performance are also provided.

Algorithms

The following algorithms are implemented:

Common:
- HEFT: Heterogeneous Earliest Finish Time
- CPoP: Critical Path on Processor
- FastestNode: Schedule all tasks on the fastest node

Stochastic (stochastic task cost, data size, compute speed, and communication strength):
- SHEFT
- Improved SHEFT
- Stochastic HEFT
- Mean HEFT

Usage

Installation

Clone the repository and install the requirements:

pip install anrg.saga

Running the algorithms

The algorithms are implemented as Python modules. The following example shows how to run the HEFT algorithm on a workflow:

import networkx as nx
from saga.common.heft import HeftScheduler

scheduler = HeftScheduler()
network: nx.Graph = ...
task_graph: nx.DiGraph = ...
scheduler.schedule(network, task_graph)
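To make the simplest entry in the list above concrete, here is a minimal, standard-library sketch of the FastestNode idea: every task is placed on the node with the highest compute speed, in dependency order. It does not use the saga API; the function name `fastest_node_schedule` and the plain-dict inputs are assumptions made for illustration only.

```python
from graphlib import TopologicalSorter  # Python 3.9+


def fastest_node_schedule(node_speeds, task_costs, deps):
    """Place every task on the fastest node, one after another.

    node_speeds: {node: speed}, task_costs: {task: cost},
    deps: {task: set of predecessor tasks}. All names are hypothetical.
    Returns a list of (task, node, start, end) tuples.
    """
    fastest = max(node_speeds, key=node_speeds.get)
    schedule, clock = [], 0.0
    # static_order() yields predecessors before their successors.
    for task in TopologicalSorter(deps).static_order():
        duration = task_costs[task] / node_speeds[fastest]
        schedule.append((task, fastest, clock, clock + duration))
        clock += duration
    return schedule


if __name__ == "__main__":
    plan = fastest_node_schedule(
        {"a": 1.0, "b": 2.0},
        {"t1": 4.0, "t2": 2.0},
        {"t1": set(), "t2": {"t1"}},
    )
    for row in plan:
        print(row)
```

Because all tasks share one node, no communication cost appears in this sketch; schedulers such as HEFT exist precisely because spreading tasks over several nodes makes that trade-off matter.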

|

anritsu-lightning

|

anritsu_lightning

Python interface to the Anritsu Lightning 37xxxD VNA

Installation

> pip install anritsu_lightning

Usage

>>> from anritsu_lightning import CommChannel
>>> with CommChannel(address=6) as vna:
...     vna.ch3.parameter = "S21"
...     s21 = vna.read(channel=3, data_status="corrected")
>>>

It is also possible to read the S-parameters in Touchstone SnP format.

>>> from anritsu_lightning import CommChannel
>>> with CommChannel(address=6) as vna:
...     vna.measurement_setup.start = 40e6  # Hz
...     vna.measurement_setup.stop = 20e9  # Hz
...     vna.measurement_setup.data_points = 401
...     vna.ch1.parameter = "S11"
...     vna.ch1.graph_type = "log magnitude"
...     vna.ch1.graph_scale = 20.0  # dB/div
...     vna.ch2.parameter = "S12"
...     vna.ch3.parameter = "S21"
...     vna.ch3.graph_type = "log magnitude"
...     vna.ch3.graph_scale = 2.0  # dB/div
...     vna.ch4.parameter = "S22"
...     vna.display_mode = "dual channels 1 & 3"
...     with open(<file>, "wt") as f:
...         f.write(vna.get_s2p(previous=False))
>>>

It is also possible to use the markers to find the -3 dB point of a filter. In this example, the VNA measured the -3 dB bandwidth of a Mini-Circuits VLF-1000+ low pass filter with a nominal specification of 1.3 GHz. The VNA was already set up to measure S21 on channel 3 between 40 MHz and 5 GHz.

In [1]: from anritsu_lightning import CommChannel
In [2]: cc = CommChannel(address=6)
In [3]: vna = cc.get_instrument()
In [4]: vna.markers.mode = "normal"
In [5]: vna.markers.enable([1, 2])
In [6]: vna.markers.set_active(1)
In [7]: vna.markers.set_xaxis_location(1, "40 MHz")
In [8]: vna.markers.delta_reference = 1
In [9]: vna.markers.set_active(2)
In [10]: bw = vna.markers.search("-3 dB", reference="delta reference", timeout=5000)
In [11]: print(f"{bw/1e9:.2f}GHz")
1.26GHz

Supported features:
- Measurement setup: frequency sweep, data points, etc.
- Channel setup: parameter (S11, S12, ...), graph type, etc.
- Graph setup: scale, reference, offset
- Data transfer: channel data, screen bitmap, S2P file
- Markers

|

anritsu-ms2090a-ams

|

No description available on PyPI.

|

anritsu-pwrmtr

|

anritsu_pwrmtr

Python interface to the Anritsu power meters

Installation

> pip install anritsu_pwrmtr

Usage

For ML243xA models that use GPIB:

>>> from anritsu_pwrmtr import CommChannel
>>> with CommChannel(13) as pm:
...     pm.ch1.read()
...
-10.1

For MA243x0A models that use USB:

>>> from anritsu_pwrmtr import CommChannel
>>> with CommChannel('<USB0::0x...::RAW>') as pm:
...     pm.read()
...
-10.1

Supported models:
- ML243xA
- MA243x0A

Supported features:
- Channel configuration for Readout mode
- Sensor calibration and zeroing
- Measuring power in Readout mode

|

ans

|

ANS: Ambient Noise Seismology

ans is a Python wrapper for ambient noise seismology tasks, with a GUI for easier configuration of ambient-noise seismology projects. Its backend depends on a Perl interpreter, GMT (Generic Mapping Tools), and SAC (Seismic Analysis Code), as well as Python modules including ObsPy. ans has been tested successfully on Python 3.6.* and 3.8.*.

Version 0.0.1

This version includes all the commands and tools required for generating Rayleigh wave (ZZ and RR cross-correlations) and Love wave (TT cross-correlation component) Empirical Green's Functions (EGFs). A brief description of the most useful CLI commands is given below:

- $> ans init <maindir>

Description: initialize ans project at project main directory i.e. <maindir>

- $> ans config

$> ans config --maindir <maindir>

Description: open the program GUI to configure the ans project

Note: default <maindir> is current working directory

- $> ans download stations

Description: download the list of available stations for the region boundary and dates previously set using the GUI.

Datacenters, desired station components, etc. should also be set using '$> ans config'.

- $> ans download metadata

Description: download station metadata files (xml file format) that will be used for

instrument response removal and updating sac headers.

- $> ans download mseeds

Description: main data acquisition module; download seismograms in mseed format

- $> ans mseed2sac <mseeds_dir> <sacs_dir>

Description: convert mseed to sac files while applying the listed processing steps

in project configuration mseed2sac tab (i.e., '$> ans config').

<mseeds_dir>: input mseed dataset directory

<sacs_dir>: output sac files dataset directory

- $> ans sac2ncf <sacs_dir> <ncfs_dir>

Description: process the input sacfiles in <sacs_dir> and output NCFs (noise cross-correlation functions)

while applying the list of sac2ncf processes defined in project configuration file.

Note: At this step it is necessary that all sac headers are updated and this can be done by either

performing instrument response removal or adding "write headers" process to the list of processes.

- $> ans ncf2egf <ncfs> <egfs_dir>

<ncfs>: either path to the <ncfs_dir> (full stack EGFs) or an ASCII datalist containing a one-column data format

list of paths to event directories (seasonal EGFs; e.g., "14001000000" i.e. 2014/01/01)

<egfs_dir>: path to output stacked EGF

|

ansaittua

|

ansaittua

Earned Value Management (Finnish: ansaittua arvonhallintaa) when coding swiftly.

License: MIT

Documentation

User and developer documentation of ansaittua.

Bug Tracker

Feature requests and bug reports are best entered in the todos of ansaittua.

Primary Source repository

The primary source repository of ansaittua is at sourcehut, a collection of tools useful for software development.

Status

Experimental.

Note: The default branch is default.

|

ansaotuvi

|

Open-source program for casting Tử vi (Vietnamese astrology) star charts: ansaotuvi

[x] Fork the code from the doannguyen/lasotuvi repository

[x] Update the libraries to their new versions

[x] Deploy ansaotuvi and ansaotuvi-website to PyPI

[x] Test-run a project that integrates ansaotuvi-website

[x] Adjust the stars' miếu/hãm positions and five-element (ngũ hành) assignments

[-] Standardize star positions and the chart-casting logic

|

ansaotuvi-website

|

Open-source program for casting Tử vi (Vietnamese astrology) star charts: ansaotuvi

This is a wrapper of ansaotuvi into a Django application.

Create a virtual environment and activate it:

pip install virtualenv
virtualenv .env

If you are a *nix user:

source .env/bin/activate

If you are a Windows user, just type

.env/Scripts/activate.bat

and make sure you are working in the command prompt (cmd.exe), not PowerShell.

Now you are working in the virtual environment.

If you do not have a Django project, install Django and the ansaotuvi application:

pip install django ansaotuvi_website

If you already have a Django project, just install the ansaotuvi_website application:

pip install ansaotuvi_website

Add the ansaotuvi_website application to your INSTALLED_APPS by adding:

# settings.py
INSTALLED_APPS = [
    # Your other applications
    'ansaotuvi_website',
]

Add a route to urls.py:

# urls.py
from django.urls import path, include

urlpatterns = [
    # ....
    path('ansaotuvi/', include('ansaotuvi_website.urls'))
]

Here is a tutorial that shows how to add the ansaotuvi app to a Django project.

Hope this helps!

|

ansar-connect

|

ansar-connect

The ansar-connect library implements sophisticated asynchronous network messaging. It builds on the features of the ansar-encode (https://pypi.org/project/ansar-encode) and ansar-create (https://pypi.org/project/ansar-create) libraries to integrate network messaging into the asynchronous model of execution. The result of this approach is that sending a message across a network is no different to sending a message between any two async objects. There is also complete "portability" of messages - an application data object read from the disk using ansar.encode can immediately be sent across a network, with zero additional effort.

This essential messaging capability is combined with the multi-process capabilities of ansar.create, to deliver practical, distributed computing. Designs for software solutions can now consider:

- multi-process compositions that run on a host,
- compositions of processes distributed across a LAN,
- and compositions where processes may be anywhere on the Internet.

Smaller projects will use this library to deliver multi-process solutions on a single host. The library will arrange for all the network messaging that binds the processes together into a single operational unit.

The most ambitious projects will involve processes spread across the Internet, e.g. between desktop PCs at different branches of an organization, or between head-office servers and mobile personnel on laptops, or between those same servers and SBCs operating as weather stations. As long as there is a reasonably up-to-date Python runtime (>=3.10) and Internet connectivity, this library will arrange for full, asynchronous messaging between any 2 members of a composition.

Features

- Implements full-duplex network messaging over asynchronous sockets.
- Inherits the complete type-sophistication of ansar.encode.
- Seamlessly extends the async model of operation to span local and wide-area networks.

|

ansar-create

|

ansar-create

The ansar-create library uses multi-threading and multi-processing to solve difficult software challenges such as concurrency, interruption and cancellation. It wraps those platform facilities in a standard runtime model, giving developers the ability to express that type of software in a clear and consistent manner.

This type of software is often referred to as asynchronous, event-driven or reactive software. It acknowledges the fundamental fact that significant events can occur at any time, and that software must be able to respond to those events in a reliable and timely manner.

Features

- Based on a standard model for complex software operations (SDL)
- Uniform management of threads, processes and state machines
- Built-in runtime facilities such as timers and logging.
- Persistent application configuration.
- Process orchestration.
- Development automation.

|

ansar-encode

|

ansar-encode

The ansar-encode library provides for the convenient storage and recovery of application data using system files. Files are created using standard encodings - the default is JSON - and are human readable. Complex application data can be stored, including containers, instances of classes and object graphs.

Features

- Broad suite of primitive types, e.g. integers, floats, strings, times and enumerations.
- Structured data, e.g. an 8-by-8 table of user-defined class instances.
- Recovered data is fully-typed, e.g. reading a class User produces a User instance.
- Graphs and graphs that include cycles, e.g. circular lists, syntax trees and state diagrams.
- Polymorphism, e.g. read an object of unknown type.
- Type-checking.
- Plain text files.
- Managed folders of files.
- Object versioning.

|

ansatz

|

ansatz

A physics Python library

|

anscenter

|

backend-module

Main backend module, which is used for developing web-app logic and deploying AI models.

Installation

Run the following to install:

pip install anscenter

Usage

from anscester.predict import MachineLearningModel

# Generate model
model = MachineLearningModel()

# Using Example
data_input = [1.0, 2.0, 3.0, 4.0]
result = model.predict(data_input)
print(result)

Developing HelloG

To install helloG, along with the tools you need to develop and run tests, run the following in your virtualenv:

$ pip install anscenter[dev]

|

anschlusspruefer

|

This is a dummy package intended to prevent any dependency confusion attacks against our internal Python packages at SBB. For now this is the only foolproof way to prevent some dependency confusion attacks until the following PEP has been implemented: PEP-708. For any questions regarding this package feel free to reach out [email protected].

|

ansciier

|

Ansciier

Mimic video or image to your terminal

Overview

How to use it

First you need python 3. I recommend the latest version, but I think it would run on older versions too.

Install the package with $ pip install ansciier (or pip3 if pip points to a Python 2 version).

Run it with $ ansciier /path/to/image-or-video.mp4.

Type $ ansciier -h for help.

Example usage

$ ansciier ~/Videos/rickroll.mp4 --aspect-ratio 20:9 --fps 24

The command above draws frames from rickroll.mp4 in the Videos folder with an aspect ratio of 20:9 and a maximum of 24 fps.

$ ansciier ~/Video/frame{0}.png --ascii --char @ --dim 200x50 --start-frame 59

This command draws images with the @ character at a 200x50 square block dimension, starting from frame59.png, then frame60.png, until it reaches the highest frame number. It automatically finds the last frame when --last-frame is not specified, and it continues if a frame is missing.

$ ansciier camera

will use your camera.

Note: Only truecolor terminals are supported (because the majority of terminals nowadays are truecolor, I think you already have one).

Should work on Windows and Linux.

|

ansel

|

Codecs for reading/writing documents in the ANSEL character set.

Free software: MIT license

Documentation: https://python-ansel.readthedocs.io.

Features

- Adds support for character set encodings ANSEL (ANSI/NISO Z39.47) and GEDCOM.
- Re-orders combining characters for consistency with the ANSEL specification.

Credits

This package was created with Cookiecutter and the audreyr/cookiecutter-pypackage project template.

|

anserializer

|

anserializer

A module for serializing and deserializing complex data structures to/from JSON. It allows the user to (de)serialize a complex dictionary/list structure in one go by defining serializers/deserializers for arbitrary sets of classes.

Tested with python3.

Serializer can be used either instantiated or non-instantiated.

Install

pip3 install anserializer

Examples

Instantiated example

from anserializer import Serializer, DatetimeSerializer, ObjectSerializer

class MyObjectClass(object):
    pass

# instantiate the serializer
s = Serializer([ DatetimeSerializer(), ObjectSerializer(MyObjectClass) ])

# create object

o = MyObjectClass()

print(o)

# serialize object

x = s.get_serialized(o)

print(x)

# deserialize object

o = s.get_deserialized(x)

print(o)

Non-instantiated example

from anserializer import Serializer, DatetimeSerializer, ObjectSerializer

class MyObjectClass(object):
    pass

# put our list of serializer classes available for use into a variable

serializers = [ DatetimeSerializer(), ObjectSerializer(MyObjectClass) ]

# create object

o = MyObjectClass()

print(o)

# serialize object

x = Serializer.serialize(o, serializers)

print(x)

# deserialize object

o = Serializer.deserialize(x, serializers)

print(o)

Allow children to be serialized by a serializer defined for their ancestor

from anserializer import Serializer, DatetimeSerializer, ObjectSerializer

class MyObjectClass(object):
    pass

# instantiate the serializer
s = Serializer([ DatetimeSerializer(), ObjectSerializer(object) ], serialize_children=True)

# create object

o = MyObjectClass()

print(o)

# serialize object

x = s.get_serialized(o)

print(x)

Use your own serializer

from anserializer import Serializer, DatetimeSerializer, ObjectSerializer, BaseSerializer

class MyObjectClass(object):
    pass

# create your serializer
class MySerializer(BaseSerializer):
    def __init__(self):
        # raw string so the regex escapes are not treated as string escapes
        super().__init__([MyObjectClass], r'^!MyObjectClass\(\)$')

    def serialize(self, obj):
        # do the magic and return a serialized element
        return { '!MyObjectClass()': {
                # insert object data here
            }
        }

    def deserialize(self, serialized_obj):
        # do the magic and return an object with the data given in serialized format
        kwargs = {}
        return MyObjectClass(**kwargs)

# instantiate the serializer

s = Serializer([ DatetimeSerializer(), MySerializer(), ObjectSerializer(object) ])

# create object

o = MyObjectClass()

print(o)

# serialize object

x = s.get_serialized(o)

print(x)
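The tagged-dictionary idea visible in the custom serializer above (a key such as '!MyObjectClass()' wrapping the object data) can be sketched with the standard library alone. The following is a minimal illustration and not anserializer's implementation; the `Point` class, the `!Point` and `!datetime` tags, and the helper names are all hypothetical.

```python
import json
from datetime import datetime


class Point:
    """Hypothetical user class used only for this illustration."""

    def __init__(self, x, y):
        self.x = x
        self.y = y


def to_jsonable(obj):
    # Called by json.dumps for objects it cannot encode natively.
    if isinstance(obj, datetime):
        return {"!datetime": obj.isoformat()}
    if isinstance(obj, Point):
        return {"!Point": {"x": obj.x, "y": obj.y}}
    raise TypeError(f"cannot serialize {type(obj).__name__}")


def from_jsonable(d):
    # Called by json.loads for every decoded dict, innermost first.
    if set(d) == {"!datetime"}:
        return datetime.fromisoformat(d["!datetime"])
    if set(d) == {"!Point"}:
        return Point(**d["!Point"])
    return d


def dumps(obj):
    return json.dumps(obj, default=to_jsonable)


def loads(text):
    return json.loads(text, object_hook=from_jsonable)


if __name__ == "__main__":
    text = dumps({"when": datetime(2020, 1, 2, 3, 4, 5), "where": Point(1, 2)})
    print(text)
    print(loads(text))
```

A single tag key per dictionary keeps decoding unambiguous: ordinary dictionaries pass through untouched, and only dictionaries whose sole key is a known tag are turned back into objects.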

|

anser-module-upload

|

Failed to fetch description. HTTP Status Code: 404

|

ansh997

|

SpreadG

SpreadG is a small wrapper around the Google Sheets API (v4) and gspread 3.6.0 to provide more convenient access to Google Sheets from Python scripts and plot graphs with the help of Matplotlib.

Turn on the API, download an OAuth client ID as a JSON file, and start working.

Installation

# pip install -r requirement.txt
pip install spreadg

This will also install google-api-python-client and its dependencies, notably httplib2 and oauth2client, as required dependencies.

Quickstart

from spreadg import *

data = dataloader('credentials.json', "Sheetname")
df = to_df(data)
fig = plotter(df, X, y)
fig.savefig("something.png")

author: Himanshu Pal
mailto: palhimanshu997[at]gmail[.]com

|

anshika

|

This package has Multiple Accounts.

|

anshils

|

No description available on PyPI.

|

anshitsu

|

Anshitsu

A tiny digital photographic utility.

"Anshitsu" means a darkroom in Japanese.

Install

Run this command in an environment where Python 3.10 or higher is installed.

We have tested it on Windows, Mac, and Ubuntu on GitHub Actions, but we have not tested it on Macs with Apple Silicon, so please use it at your own risk on Macs with Apple Silicon.

pip install anshitsu

Usage

It is as described in the following help.

INFO: Showing help with the command 'anshitsu -- --help'.

NAME
    anshitsu - Process Runnner for Command Line Interface

SYNOPSIS
    anshitsu PATH <flags>

DESCRIPTION
    This utility converts the colors of images such as photos.
    If you specify a directory path, it will convert the image files in the specified directory.
    If you specify a file path, it will convert the specified file.
    If you specify an option, the specified conversion will be performed.
    Tosaka mode is a mode that expresses the preference of Tosaka-senpai, a character in "Kyūkyoku Chōjin R", for "photos taken with Tri-X that look like they were burned onto No. 4 or No. 5 photographic paper".
    Only use floating-point numbers when using this mode; numbers around 2.4 will make it look right.

POSITIONAL ARGUMENTS
    PATH
        Type: str
        Directory or File Path

FLAGS
    --colorautoadjust=COLORAUTOADJUST
        Type: bool
        Default: False
        Use colorautoadjust algorithm. Defaults to False.
    --colorstretch=COLORSTRETCH
        Type: bool
        Default: False
        Use colorstretch algorithm. Defaults to False.
    --grayscale=GRAYSCALE
        Type: bool
        Default: False
        Convert to grayscale. Defaults to False.
    --invert=INVERT
        Type: bool
        Default: False
        Invert color. Defaults to False.
    --tosaka=TOSAKA
        Type: Optional[Optional]
        Default: None
        Use Tosaka mode. Defaults to None.
    --outputrgb=OUTPUTRGB
        Type: bool
        Default: False
        Outputs a monochrome image in RGB. Defaults to False.
    --noise=NOISE
        Type: Optional[Optional]
        Default: None
        Add Gaussian noise. Defaults to None.

NOTES
    You can also use flags syntax for POSITIONAL ARGUMENTS

If a directory is specified in the path, an out directory will be created in the specified directory, and the converted JPEG and PNG images will be stored in PNG format.

If you specify a JPEG or PNG image file as the path, an out directory will be created in the directory where the image is stored, and the converted image will be stored in PNG format.

Note: If you specify a file in any other format in the path, be aware there is no error handling. The program will terminate abnormally.

Algorithms

The following algorithms are available in this tool.

RGBA to RGB Convert

Converts an image that contains Alpha, such as RGBA, to image data that does not contain Alpha. Transparent areas will be filled with white.

This algorithm is performed on any image file.

invert

Inverts the colors of an image using Pillow's built-in algorithm.

In the case of negative film, color conversion that takes into account the film base color is not performed, but we plan to follow up with a feature to be developed in the future.

colorautoadjust

We will use the "automatic color equalization" algorithm described in the following paper to apply color correction.

This process is more time consuming than the algorithm used in "colorstretch", but it can reproduce more natural colors.

(References)

A. Rizzi, C. Gatta and D. Marini, "A new algorithm for unsupervised global and local color correction.", Pattern Recognition Letters, vol. 24, no. 11, 2003.

colorstretch

The "gray world" and "stretch" algorithms described in the following paper are combined to apply color correction.

This process is faster than the algorithm used in "colorautoadjust".

(References)

D. Nikitenko, M. Wirth and K. Trudel, "Applicability Of White-Balancing Algorithms to Restoring Faded Colour Slides: An Empirical Evaluation.", Journal of Multimedia, vol. 3, no. 5, 2008.

grayscale

Convert a color image to grayscale using the algorithm described in the following article.

Python でグレースケール(grayscale)化

Note: This article is written in Japanese.

Tosaka mode

Tosaka mode is a mode that expresses the preference of Tosaka-senpai, a character in "Kyūkyoku Chōjin R", for "photos taken with Tri-X that look like they were burned onto No. 4 or No. 5 photographic paper".

Only use floating-point numbers when using this mode; numbers around 2.4 will make it look right.

When this mode is specified, color images will also be converted to grayscale.

outputrgb

Outputs a monochrome image in RGB.

noise

Add Gaussian noise.

To add noise, you need to specify a floating-point number; a value of about 10.0 will be just right.

Special Thanks

We are using the following libraries.

shunsukeaihara/colorcorrect

|

anshls

|

Failed to fetch description. HTTP Status Code: 404

|

anshudi

|

No description available on PyPI.

|

anshukak

|

No description available on PyPI.

|

anshupdf

|

This is our home page.

|

ansi

|

ANSI

Various ANSI escape codes, used in moving the cursor in a text console or rendering coloured text.

Example

Print something in bold yellow on a red background:

>>> from ansi.colour import fg, bg

>>> from ansi.colour.fx import reset

>>> msg = (bg.red, fg.yellow, 'Hello world!', reset)

>>> print(''.join(map(str, msg)))

...

If you like syntactic sugar, you may also do:

>>> from ansi.colour import fg, bg

>>> print(bg.red(fg.yellow('Hello world!')))

...

Also, 256 RGB colours are supported:

>>> from ansi.colour.rgb import rgb256

>>> from ansi.colour.fx import reset

>>> msg = (rgb256(0xff, 0x80, 0x00), 'hello world', reset)

>>> print(''.join(map(str, msg)))

...

If you prefer to use American English instead:

>>> from ansi.color import ...

References

https://www.ecma-international.org/publications-and-standards/standards/ecma-48/

Requirements

Ansi requires python 3.6 and supports typing.
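For readers who want to see what such helpers ultimately emit, here is a minimal standard-library sketch that builds the ECMA-48 SGR (Select Graphic Rendition) sequences by hand. It is not the `ansi` package's implementation; the `sgr` and `bold_yellow_on_red` helpers and the constant names are hypothetical.

```python
ESC = "\x1b["  # Control Sequence Introducer (ESC followed by "[")

# Standard SGR codes: 0 resets, 1 is bold, 33 is yellow text, 41 is red background.
RESET, BOLD, FG_YELLOW, BG_RED = 0, 1, 33, 41


def sgr(*codes: int) -> str:
    """Return an SGR escape sequence for the given numeric codes."""
    return f"{ESC}{';'.join(str(c) for c in codes)}m"


def bold_yellow_on_red(text: str) -> str:
    """Wrap text so it renders bold yellow on red, then reset attributes."""
    return f"{sgr(BOLD, FG_YELLOW, BG_RED)}{text}{sgr(RESET)}"


if __name__ == "__main__":
    print(bold_yellow_on_red("Hello world!"))
```

Ending with the reset sequence matters: without it, the terminal keeps the colours for everything printed afterwards.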

|

ansi256colors

|

ansi256-colors

A tool to print and demo ansi color codes for a 256 color terminal.

Install

Install using pip:

pip install ansi256colors

This will install ansi256 to your python bin. You can then call the script so long as that is in your path.

Usage

> ansi256 -h

usage: ansi256 [-h] {print-table,test,write} ...

a tool for printing, testing, and exporting ansi color escapes

positional arguments:

{print-table,test,write}

print-table print a table of the ansi color codes

test test color codes on a string

write write a zsh-rc style file that exports all color codes

options:

-h, --help show this help message and exit

Printing Tables

> ansi256 print-table --help

usage: ansi256 print-table [-h] [-f [0-255]] [-b [0-255]] {fg,bg,both}

positional arguments:

{fg,bg,both} specify whether to print foreground, background, or both color code tables

options:

-h, --help show this help message and exit

-f [0-255], --foreground [0-255]

specify a foreground color to be on top of the background table

-b [0-255], --background [0-255]

specify a background color to be the background of the foreground table

For example:

ansi256 print-table both

ansi256 print-table -b 218 fg

Printing Tests

> ansi256 test -h

usage: ansi256 test [-h] [-f [0-255]] [-b [0-255]] TEXT

positional arguments:

TEXT text to test

options:

-h, --help show this help message and exit

-f [0-255], --foreground [0-255]

specify the foreground color code (0-255)

-b [0-255], --background [0-255]

specify the background color code (0-255)

For example:

ansi256 test -b 218 -f 196 "This is a test of red on pink"

Writing RC files

> ansi256 write -h

usage: ansi256 write [-h] FILE

positional arguments:

FILE file to write the exports to

options:

-h, --help show this help message and exit

> ansi256 write testrc

> head -5 testrc

export COLOR0_FG=$'%{\e[38;5;0m%}'

export COLOR0_BG=$'%{\e[48;5;0m%}'

export COLOR1_FG=$'%{\e[38;5;1m%}'

export COLOR1_BG=$'%{\e[48;5;1m%}'

export COLOR2_FG=$'%{\e[38;5;2m%}'
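The export lines above follow a regular pattern: `38;5;N` selects foreground colour N and `48;5;N` selects background colour N in the 256-colour palette, wrapped in zsh's `%{ ... %}` prompt markers. As a rough sketch of how such lines could be generated (this is not the tool's source; the `color_exports` function is hypothetical):

```python
from __future__ import annotations


def color_exports(n: int) -> list[str]:
    """Return the zsh export lines for palette entry n (0-255)."""
    if not 0 <= n <= 255:
        raise ValueError("colour code must be in the range 0-255")
    # Doubled braces produce literal { and }; "\\e" produces the two characters \e.
    return [
        f"export COLOR{n}_FG=$'%{{\\e[38;5;{n}m%}}'",
        f"export COLOR{n}_BG=$'%{{\\e[48;5;{n}m%}}'",
    ]


if __name__ == "__main__":
    for code in range(3):
        for line in color_exports(code):
            print(line)
```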

|

ansi2html

|

ansi2html

Convert text with ANSI color codes to HTML or to LaTeX.

Inspired by and developed off of the work of pixelbeat and blackjack.

Read the docs for more information.

Example - Python API

import sys
from ansi2html import Ansi2HTMLConverter

conv = Ansi2HTMLConverter()
ansi = "".join(sys.stdin.readlines())
html = conv.convert(ansi)

Example - Shell Usage

$ ls --color=always | ansi2html > directories.html
$ sudo tail /var/log/messages | ccze -A | ansi2html > logs.html
$ task rc._forcecolor:yes limit:0 burndown | ansi2html > burndown.html

See the list of full options with:

$ ansi2html --help

Get this project:

$ pip3 install ansi2html

Source: https://github.com/pycontribs/ansi2html/

pypi: https://pypi.org/project/ansi2html/

License

ansi2html is licensed LGPLv3+.

Credits

Author: Ralph Bean

Contributor: Robin Schneider

|

ansi2image

|

ANSI to Image

A Python lib to convert ANSI text to Image

ANSI2Image officially supports Python 3.8+.

Main features

Read ANSI file (or ANSI stdin) and save an image (JPG or PNG)

Installation

pip3 install --upgrade ansi2image

Help

ANSI to image v0.1.1 by Helvio Junior
ANSI to Image convert ANSI text to an image.
https://github.com/helviojunior/ansi2image

positional arguments:
    [filename]               File path or - to stdin

Options:
    -o --output [filename]   image output file.
    --font [font]            font type. (default: JetBrains Mono Regular).
    --font-list              List all supported font family and variations
    -h, --help               show help message and exit
    -v                       Specify verbosity level (default: 0). Example: -v, -vv, -vvv
    --version                show current version

ANSI reference

https://en.wikipedia.org/wiki/_ANSI_escape_code

|

ansi2txt

|

ansi2txt

ansi to plain text converter

Related

Here are some related projects:

- colorized-logs
- strip-ansi-cli

Acknowledgements

This code base is a translation/port of the ansi2txt.c code base from colorized-logs to Python3 and Bash. This project came about because I liked the original ansi2txt's output but did not want to have to compile it or ship binaries around. I ported ansi2txt.c → ansi2txt.py but then came across an environment without python so went ansi2txt.py → ansi2txt.sh.

License

AGPLv3 AND MIT

Running Tests

To run tests, run the following command:

bats ansi2txt.bats
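To illustrate what "ansi to plain text" means in practice, here is a small regex-based sketch. It is not a port of ansi2txt and handles only CSI sequences (ESC followed by "[", parameters, and a final byte), not OSC or other escape families; the `strip_ansi` name is hypothetical.

```python
import re

# CSI sequence: ESC [ , parameter bytes 0x30-0x3F, intermediate bytes
# 0x20-0x2F, and one final byte 0x40-0x7E (per ECMA-48).
ANSI_CSI = re.compile(r"\x1b\[[0-?]*[ -/]*[@-~]")


def strip_ansi(text: str) -> str:
    """Remove CSI escape sequences (colours, cursor moves) from text."""
    return ANSI_CSI.sub("", text)


if __name__ == "__main__":
    coloured = "\x1b[1;31mred\x1b[0m plain"
    print(strip_ansi(coloured))
```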

|

ansi2utf8

|

# ansi2utf8

ANSI to UTF-8

**Usage: a2u FILE [NAME]**

----

## Installation

> pip install ansi2utf8

```shell
a2u file.txt
a2u file.txt ~file.txt
```

## Todo

- a2u FILEURL [NAME]

|

ansibeautifier

|

ANSI Beautifier

This is a beautifier project to beautify your Python command-line or GUI applications. It uses ANSI Escape codes (\u001b) to color the text.

Installation

pip install ansibeautifier

Usage

from ansibeautifier import Beautifier

b = Beautifier()
print(b.red(text="Hello World", bright=False))

Returns Hello World in red but not bright, as we have given the bright=False parameter. The default bright parameter is False.

Variable Usage

You can also store the colored text in a variable:

from ansibeautifier import Beautifier

b = Beautifier()
text = b.green(text="Hello World", bright=False)
print(text)

This also prints the same result but in a pythonic way!

Colors supported

- Green - ansibeautifier.Beautifier.green()
- Red - ansibeautifier.Beautifier.red()
- Blue - ansibeautifier.Beautifier.blue()
- Black - ansibeautifier.Beautifier.black()
- White - ansibeautifier.Beautifier.white()
- Yellow - ansibeautifier.Beautifier.yellow()
- Magenta - ansibeautifier.Beautifier.magenta()
- Cyan - ansibeautifier.Beautifier.cyan()

Other functions

ansibeautifier.Beautifier.always_<color_name>()

It colors the terminal in which you run the Python code. It can be reset by using the ansibeautifier.Beautifier.reset_foreground_color() function.

from ansibeautifier import Beautifier

b = Beautifier()
print(b.always_white(text="Hello World"))
print(b.reset_foreground_color())

ansibeautifier.Beautifier.background_<color_name>()

It colors the background of the text to the color specified. It can be nullified by the ansibeautifier.Beautifier.reset_background_color() function.

from ansibeautifier import Beautifier

b = Beautifier()
print(b.background_black(text="Hello World", bright=True))
print(b.reset_colors())

ansibeautifier.Beautifier.always_background_<color_name>()

It colors the background of the terminal and the text specified.

from ansibeautifier import Beautifier

b = Beautifier()
print(b.always_background_cyan(text="Hello World"))
print(b.reset_colors())

ansibeautifier.Beautifier.bold()

It returns bold text. It can be neutralized by the ansibeautifier.Beautifier.reset_intensity() function.

from ansibeautifier import Beautifier

b = Beautifier()
print(b.bold(text="Hello World"))
print(b.reset_intensity())

It returns Hello World in bold.

ansibeautifier.Beautifier.underline()

It returns underlined text. It can also be neutralized by the ansibeautifier.Beautifier.reset_intensity() function.

from ansibeautifier import Beautifier

b = Beautifier()
print(b.underline(text="Hello World", always=True))
print(b.reset_intensity())

This underlines all the text in the terminal and the text given.

ansibeautifier.Beautifier.reverse()

It returns text that looks highlighted.

from ansibeautifier import Beautifier

b = Beautifier()
print(b.reverse(text="Hello World", always=True))
print(b.reset_intensity())

ansibeautifier.Beautifier.conceal()

It returns hidden text, and you can unhide it with the ansibeautifier.Beautifier.reset_intensity() function.

import time
from ansibeautifier import Beautifier

b = Beautifier()
print("Hello World")
print(b.conceal(text="Hello World"))
print("Hello World")
time.sleep(0.5)
print(b.reset_intensity())

Try this!

Thanks for reading

If you want the source code you can visit my GitHub page: https://github.com/BoughtData92730/ansibeautifier

|

ansibel

|

No description available on PyPI.

|

ansibell

|

No description available on PyPI.

|

ansibilo

|

Ansibilo is a set of tools for Ansible. It provides:

- facto, an Ansible module to create secrets and select unused ports on the remote host (and reuse them at the next run).
- A command line interface to export the Ansible inventory in different formats.
- Some filter and callback plugins.
- A Sphinx extension which allows including a graph of the inventory in the documentation.

Installation

Install the package from PyPI, using pip:

pip install ansibilo

Or from GitHub:

git clone git://github.com/Polyconseil/ansibilo

Links

Sources: http://github.com/Polyconseil/ansibilo

Documentation: http://readthedocs.org/docs/ansibilo/

Package: http://pypi.python.org/pypi/ansibilo/

|

ansiblator

|

Ansiblator
==========

Ansiblator - makes Ansible api more Pythonic

This wrapper allows an easier way to use Ansible in Python. Chain commands without playbooks, more like Fabric. With this, Ansible can be more powerful, and it lets you chain commands with Python commands. Ansible documentation is at http://docs.ansible.com/. The API is now trying to feel like Fabric, but it's still not complete, so there will be some changes.

Get started
===========

For installation you can download the package from https://pypi.python.org/pypi/ansiblator, unpack it, and use::

    python setup.py install

or install by pip::

    pip install ansiblator

Quickstart
==========

For the quickest example you can just create your ansible host file named ansible_hosts inside your home directory, or give the full path to the file. Ansiblator mainly uses a file such as ~/ansible_hosts.

code::

    import ansiblator.api as an
    ans = an.Ansiblator()
    ret = ans.local("uname -a", now=True, use_shell=True)
    ans.run("uname -a", now=True)
    ans.runner("uptime")
    ans.run_all()
    ans.copy(src="/tmp/aabc.csv", dest="/tmp/", pattern="pc", now=True)

specify ansible hosts file and select pattern::

    ans = an.Ansiblator(inventory="/tmp/ansible_file", pattern="pc")

use dictionary to create inventory::

    inv = {'pc': [{'ssh_host': '192.168.0.10', 'ssh_user': 'test_user', 'su_user': 'root'},
                  {'ssh_host': '192.168.0.12', 'ssh_user': 'test_user2', 'su_pass': 'paasswd', 'su_user': 'root'}]}
    ans = an.Ansiblator(inventory=inv)
    ans.run("uname -a", now=True)

prepare commands and run after::

    ans = an.Ansiblator(run_at_once=False)
    ans.get(src="/tmp/file", dest="/tmp/")
    ans.get(src="/tmp/file2", dest="/tmp/")
    ans.run_all()

make custom class::

    class Automatization(an.Ansiblator):

        def update_server(self, su=True, sudo=False):
            self.run("apt-get update", su=su, sudo=sudo)
            self.run("apt-get upgrade -y", su=su, sudo=sudo)

use custom class and more patterns together::

    ans = Automatization(pattern=['servers', 'production', 'test', 'pc'])
    ans.update_server()

With this, you can create full commands or functions, just pass them a pattern, and run at the end.

Need all modules inside Ansible?::

    ans = an.Ansiblator()
    ans.get_all_modules()
    # now you should be able to do
    ans.user(name="hugo")
    # or even
    ans.pip(name="six", virtualenv="/tmp/venv", virtualenv_site_packages="yes")

More information
================

Ansiblator automatically saves the returned JSON values for actual runs, so you can use them for testing and conditions. For example

testing::

    return_code = ans.local("uname -a", now=True, use_shell=True)
    return_code['contacted']

or

    return_code = ans.local(["uname", "-a"], now=True, use_shell=False)
    return_code['contacted']

Todo
====

- make more tests
- improve logging
- improve DictToInventory mapper, so more options are possible, such as groups and so on

Changes
=======

- ability to run on more patterns
- fixes on more runs
- run all modules on ansible

Info
====

For more information you can consult the functions or the actual Ansible documentation. More information can also be found at http://www.pripravto.cz. You can also contact us there.

|

ansible

|

Ansible

Ansible is a radically simple IT automation system. It handles configuration management, application deployment, cloud provisioning, ad-hoc task execution, network automation, and multi-node orchestration. Ansible makes complex changes like zero-downtime rolling updates with load balancers easy. More information on the Ansible website.

This is the ansible community package. The ansible python package contains a set of independent Ansible collections that are curated by the community, and it pulls in ansible-core. The ansible-core python package contains the core runtime and CLI tools, such as ansible and ansible-playbook, while the ansible package contains extra modules, plugins, and roles.

ansible follows semantic versioning. Each major version of ansible depends on a specific major version of ansible-core and contains specific major versions of the collections it includes.

Design Principles

- Have an extremely simple setup process and a minimal learning curve.
- Manage machines quickly and in parallel.
- Avoid custom-agents and additional open ports, be agentless by leveraging the existing SSH daemon.
- Describe infrastructure in a language that is both machine and human friendly.
- Focus on security and easy auditability/review/rewriting of content.
- Manage new remote machines instantly, without bootstrapping any software.
- Allow module development in any dynamic language, not just Python.
- Be usable as non-root.
- Be the easiest IT automation system to use, ever.

Use Ansible

You can install a released version of Ansible with pip or a package manager. See our Installation guide for details on installing Ansible on a variety of platforms.

Reporting Issues

Issues with plugins and modules in the Ansible package should be reported on the individual collection’s issue tracker. Issues with ansible-core should be reported on the ansible-core issue tracker. Issues with the ansible package build process or serious bugs or vulnerabilities in a collection that are not addressed after opening an issue in the collection’s issue tracker should be reported on ansible-build-data’s issue tracker.

Refer to the Communication page for a list of support channels if you need assistance from the community or are unsure where to report your issue.

Get Involved

- Read Community Information for ways to contribute to and interact with the project, including mailing list information and how to submit bug reports and code to Ansible or Ansible collections.
- Join a Working Group, an organized community devoted to a specific technology domain or platform.
- Talk to us before making larger changes to avoid duplicate efforts. This not only helps everyone know what is going on, but it also helps save time and effort if we decide some changes are needed.
- For a list of email lists, Matrix and IRC channels, and Working Groups, see the Communication page.

Coding Guidelines

We document our Coding Guidelines in the Developer Guide. We also suggest you review:

- Developing modules checklist
- Collection contributor guide

Branch Info

- The Ansible package is a ‘batteries included’ package that brings in ansible-core and a curated set of collections. Ansible uses semantic versioning (for example, Ansible 5.6.0).
- The Ansible package has only one stable branch, called ‘latest’ in the documentation.
- See Ansible release and maintenance for information about active branches and their corresponding ansible-core versions.
- Refer to the ansible-build-data repository for the exact versions of ansible-core and collections that are included in each ansible release.

Roadmap

Based on team and community feedback, an initial roadmap will be published for a major version (example: 5, 6). The Ansible Roadmap details what is planned and how to influence the roadmap.

Authors

Ansible was created by Michael DeHaan and has contributions from over 4700 users (and growing). Thanks everyone!

Ansible is sponsored by Red Hat, Inc.

License

GNU General Public License v3.0 or later

See COPYING for the full license text.

|

ansible-1password-lookup-plugin

|

1Password Local Lookup Plugin

This is a simple lookup plugin that searches for secrets in a local 1Password database (B5.sqlite format). It uses the onepassword-local-search python module, which greatly improves performance over querying the 1Password servers directly.

Requirements

You require:

- python 3.7
- onepassword-local-search module

pip3 install onepassword-local-search

Example Playbook

- hosts: servers
  roles:
    - role: mickaelperrin.ansible-onepassword-local-lookup-plugin
  tasks:
    - debug:
        msg: "{{ lookup('onepassword_local', 'p6iyvjqv4xdxw52hsacpkq4rgi', field='name') }}"
    - debug:
        msg: "{{ lookup('onepassword_local', 'c3264cef-1e5e-4c96-a192-26729539f3f5', field='your_custom_field') }}"
    - debug:
        msg: "{{ lookup('onepassword_local', '1234567890', field='password') }}"

Custom uuid feature

The uuid in 1Password changes when you move an item from one vault to another. To prevent this issue, a custom uuid mapping feature has been implemented.

You need to add to each item a field named UUID (in capitals).

Then run op-local mapping update to generate the mapping table relationship.

You can display the UUID mapping by running op-local mapping list.

As we migrated from Lastpass to 1Password, we have also implemented a UUID mapping feature related to a field named LASTPASS_ID. If the uuid given is 100% numeric, the search query will be performed over this field.

Tests

Tests are managed by pytest for the python part and molecule for the ansible part, with docker as driver.

mkvirtualenv3 ansible-onepassword-local-lookup-plugin
pip install -r requirements/dev.txt

Pytest

pytest

Molecule

Ensure that the docker service is up and running

molecule test

License

GPLv3
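
The numeric rule for LASTPASS_ID described above can be sketched in a few lines of Python. This is only an illustration of the stated rule, not the plugin's code: `search_field` is a hypothetical helper, and the label returned for non-numeric identifiers is an assumption, since the README does not say how native and custom uuids are told apart.

```python
def search_field(item_id: str) -> str:
    """Pick the field to search for a given identifier.

    Hypothetical helper, not part of the plugin. Only the numeric rule
    comes from the README; the "uuid" label for everything else is an
    assumption standing in for a native or custom-mapped uuid lookup.
    """
    # "100% numeric" is read here as: ASCII digits only, and not empty.
    if item_id.isascii() and item_id.isdigit():
        return "LASTPASS_ID"
    return "uuid"


# Identifiers taken from the example playbook.
for item_id in ("p6iyvjqv4xdxw52hsacpkq4rgi",
                "c3264cef-1e5e-4c96-a192-26729539f3f5",
                "1234567890"):
    print(item_id, "->", search_field(item_id))
```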

|

ansible2puml

|

ansible2puml

About ansible2puml

Create a PlantUML activity diagram from playbooks and roles through python. A .puml file with the PlantUML syntax is generated, along with a link to display the diagram as PNG.

Requirements

Python version >3.6

Install package

Install via pypi

pip install ansible2puml

Install via git

pip install git+https://github.com/ProfileID/ansible2puml

How to

Playbook

ansible2puml --source play.yml --destination play.puml

Example

Source: example-playbook.yml

|

ansible-aisnippet

|

ansible-aisnippet

Features

Quickstart

Install python package

Install the latest version of ansible-aisnippet with pip or pipx

pip install ansible-aisnippet

Usage

You must have an openai.api_key. You can create it from the openai website.

export OPENAI_KEY=<your token>
ansible-aisnippet --help

Usage: ansible-aisnippet [OPTIONS] COMMAND [ARGS]...

╭─ Options ──────────────────────────────────────────────────────╮
│ --version             -v  Show the application's version and   │
│                           exit.                                │
│ --install-completion      Install completion for the current   │
│                           shell.                               │
│ --show-completion         Show completion for the current      │
│                           shell, to copy it or customize the  │
│                           installation.                        │
│ --help                    Show this message and exit.          │
╰────────────────────────────────────────────────────────────────╯
╭─ Commands ─────────────────────────────────────────────────────╮
│ generate  Ask ChatGPT to write an ansible task using a         │
│           template                                             │
╰────────────────────────────────────────────────────────────────╯

Generate task(s)

ansible-aisnippet can generate one or several ansible tasks from a description or from a filetasks file.

ansible-aisnippet generate --help

Usage: ansible-aisnippet generate [OPTIONS] [TEXT]

Ask ChatGPT to write an ansible task using a template

╭─ Arguments ──────────────────────────────────────────────────────────────────────╮
│ text      [TEXT]  A description of task to get [default: Install package htop]   │
╰──────────────────────────────────────────────────────────────────────────────────╯
╭─ Options ────────────────────────────────────────────────────────────────────────╮
│ --verbose     -v        verbose mode                                             │
│ --filetasks   -f  PATH  [default: None]                                          │
│ --outputfile  -o  PATH  [default: None]                                          │
│ --playbook    -p        Create a playbook                                        │
│ --help                  Show this message and exit.                              │
╰──────────────────────────────────────────────────────────────────────────────────╯

Generate a task

export OPENAI_KEY=<your token>

ansible-aisnippet generate "execute command to start /opt/application/start.sh create /var/run/test.lock"

Building prefix dict from the default dictionary ...
Loading model from cache /tmp/jieba.cache
Loading model cost 0.686 seconds.
Prefix dict has been built successfully.

name: Execute command to start /opt/application/start.sh create /var/run/test.lock
ansible.builtin.command:
  chdir: /opt/application
  cmd: ./start.sh && touch /var/run/test.lock
  creates: /var/run/test.lock
  removes: ''

Generate several tasks

ansible-aisnippet can generate several tasks from a file. The file is a YAML file which contains a list of tasks and blocks.

Ex:

- task: Install package htop, nginx and net-tools with generic module
- task: Copy file from local file /tmp/toto to remote /tmp/titi set mode 0666 owner bob group www
  register: test
- name: A block
  when: test.rc == 0
  block:
    - task: wait for port 6300 on localhost timeout 25
  rescue:
    - task: Execute command /opt/application/start.sh creates /var/run/test.lock
- task: Download file from https://tmp.io/test/ set mode 0640 and force true

This file produces this result:

export OPENAI_KEY=<your token>
ansible-aisnippet generate -f test.yml -p

Building prefix dict from the default dictionary ...
Loading model from cache /tmp/jieba.cache
Loading model cost 0.671 seconds.
Prefix dict has been built successfully.

Result:

- name: Playbook generated with chatgpt
  hosts: all
  gather_facts: true
  tasks:
    - name: Install package htop, nginx and net-tools
      ansible.builtin.yum:
        name:
          - htop
          - nginx
          - net-tools
        state: present
    - name: Copy file from local file /tmp/toto to remote /tmp/titi
      ansible.builtin.copy:
        src: /tmp/toto
        dest: /tmp/titi
        mode: '0666'
        owner: bob
        group: www
      register: test
    - name: A block
      when: test.rc == 0
      block:
        - name: Wait for port 6300 on localhost timeout 25
          ansible.builtin.wait_for:
            host: 127.0.0.1
            port: '6300'
            timeout: '25'
      rescue:
        - name: Execute command /opt/application/start.sh creates /var/run/test.lock
          ansible.builtin.command:
            chdir: /tmp/test
            cmd: /opt/application/start.sh
            creates: /var/run/test.lock
    - name: Download file from https://tmp.io/test/
      ansible.builtin.get_url:
        backup: false
        decompress: true
        dest: /tmp/test
        force: true
        group: root
        mode: '0640'
        owner: root
        timeout: '10'
        tmp_dest: /tmp/test
        url: https://tmp.io/test/
        validate_certs: true

|

ansible-alicloud

|

No description available on PyPI.

|

ansible-alicloud-bak

|

No description available on PyPI.

|

ansible-alicloud-module-utils

|

No description available on PyPI.

|

ansible-anonymizer

|

Library to clean up Ansible tasks from any Personally Identifiable Information (PII)

Free software: Apache Software License 2.0

Anonymized fields

- Credit Card number
- email address
- IP address
- MAC address
- US SSN
- US phone number
- YAML comment
- password value, when the field name is identified as being sensitive
- user name from home directory path

Usage

The library can be used to remove the PII from a multi level structure:

from ansible_anonymizer.anonymizer import anonymize_struct

example = [{"name": "foo bar", "email": "[email protected]"}]
anonymize_struct(example)
# [{'name': 'foo bar', 'email': '[email protected]'}]

But you can also anonymize a block of text:

from ansible_anonymizer.anonymizer import anonymize_text_block

some_text = """
- name: a task
  a_module:
    secret: foobar
"""
anonymize_text_block(some_text)
# '\n- name: a task\n  a_module:\n    secret: "{{ secret }}"\n'

You can also use the ansible-anonymizer command:

ansible-anonymizer my-secret-file

Customize the anonymized strings

By default, the variables are anonymized with a string based on the name of the field. You can customize it with the value_template parameter:

from ansible_anonymizer.anonymizer import anonymize_struct
from string import Template

original = {"password": "$RvEDSRW#R"}
value_template = Template("_${variable_name}_")
anonymize_struct(original, value_template=value_template)
# {'password': '_password_'}

Limitations

anonymize_text_block() relies on its own text parser, which only supports a subset of YAML features. Because of this, it may not be able to identify some PII. When possible, use anonymize_struct, which accepts a Python structure instead.

The Anonymizer is not a silver bullet and it’s still possible to see PII going through the filters.
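
The value_template example relies on Python's standard string.Template, so the substitution step can be tried without the library installed. The sketch below shows only that step; `render_placeholder` is a hypothetical helper, and how ansible-anonymizer fills `variable_name` internally is assumed from the example above rather than taken from its source.

```python
from string import Template

# Same template as in the README example.
value_template = Template("_${variable_name}_")


def render_placeholder(field_name: str) -> str:
    """Hypothetical helper: build the replacement string for one field."""
    # substitute() raises KeyError if the template uses a name we do not pass.
    return value_template.substitute(variable_name=field_name)


print(render_placeholder("password"))  # _password_
```

A template that names any other placeholder would raise KeyError with substitute(); Template.safe_substitute() leaves unknown placeholders untouched instead.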

|

ansibleapi

|

Ansible API is a REST based front-end to run Ansible Playbooks in a very lightweight server.Current features are:Query the API for what roles/playbooks are availableRun an Ansible Playbook from the API

|

ansible-api

|

ansible-api v0.5.1

A RESTful HTTP API for Ansible

python version >= 3.7

What is it?

Ansible is a radically simple IT automation system.

If you want to use it but do not like the CLI, you can try ansible-api. It lets you use Ansible through a RESTful HTTP API and a realtime processing message channel (WebSocket API), so you can see all the details.

Changelog

0.5.1

- add sha256 encryption support for signature (thx: jbackman)
- fit for latest ansible (v2.8.6) and ansible-runner (v1.4.2)
- add more error event capture in response

0.5.0 replace tornado with sanic, more lightweight (python>=3.7)

0.3.0 use ansible-runner as middleware

0.2.6 adapt to ansible 2.6.4 and add asynchronous mode

0.2.2 optimize log

0.2.1 optimize log and allow multiple instances on the same host

0.2.0 support websocket, remove code that intruded into ansible

Structure chart

How to install

[preparatory work] python version >= 3.7 (uses asyncio features)

pip3 install ansible-api

How to start it

default configuration: /etc/ansible/api.cfg

start: ansible-api -c [Configfile, Optional] -d [Daemon Mode, Optional]

eg: ansible-api -c /etc/ansible/api.cfg -d > /dev/null &

How to prepare your data

HTTP API Usage

|

ansible-apply

|

Ansible-apply takes the tasks file spec as its first argument:

ansible-apply role.name

ansible-apply role.name/some_tasks

ansible-apply https://some/playbook.yml

ansible-apply ./playbook.yml

It will automatically download the playbook or role if not found.

Then, the command takes any number of hosts and inventory variables on the command line:

ansible-apply role.name server1 server2 update=true

Finally, any arguments passed with dashes are forwarded to the ansible-playbook command it generates, but named args must use the = notation, and not a space, so as not to confuse the command line parser:

# works:

ansible-apply role.name server2 update=true --become-user=root

# does not:

ansible-apply role.name server2 update=true --become-user root
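
A rough Python sketch of why the space form fails: if arguments are sorted into hosts, variables, and forwarded options purely by their shape, a bare `root` is indistinguishable from a host name. `split_apply_args` is a hypothetical helper written for illustration; it is an assumption about the kind of parsing involved, not ansible-apply's actual code.

```python
def split_apply_args(argv):
    """Split an ansible-apply style command line into its parts.

    Hypothetical helper for illustration only. Returns the tasks spec,
    the hosts, the inventory variables, and the options to forward.
    """
    spec, rest = argv[0], argv[1:]
    hosts, variables, forwarded = [], {}, []
    for arg in rest:
        if arg.startswith("-"):
            # Dashed arguments are forwarded as-is.
            forwarded.append(arg)
        elif "=" in arg:
            # key=value pairs become inventory variables.
            key, value = arg.split("=", 1)
            variables[key] = value
        else:
            # Anything else is taken to be a host.
            hosts.append(arg)
    return spec, hosts, variables, forwarded


works = split_apply_args(
    ["role.name", "server2", "update=true", "--become-user=root"])
fails = split_apply_args(
    ["role.name", "server2", "update=true", "--become-user", "root"])

print(works)  # "root" stays attached to --become-user
print(fails)  # "root" ends up in the host list
```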

|

ansible-argspec-gen

|

This package contains code for Ansible argument specification program. Its main

audience are Ansible module maintainers that would like to reduce the

duplication in their modules by generating the argument specification directly

from the module’s user documentation.

Quickstart

Documentation extractor is published on PyPI and we can install it using pip:

$ pip install ansible-argspec-gen[base] # This will install ansible-base

$ pip install ansible-argspec-gen[ansible] # This will install ansible

$ pip install ansible-argspec-gen # We need to install ansible or

# ansible-base ourselves

If the previous command did not fail, we are ready to start updating our modules. When we use the generator for the first time, we need to perform the following three steps:

1. Add two comments to the module’s source that will mark the location for the generated code. By default, the generator searches for the # AUTOMATIC MODULE ARGUMENTS comment, but this can be changed with the --marker command-line parameter.
2. Run the generator, possibly in dry-run and diff mode first to spot any issues.
3. Remove any hand-written remnants that are not needed anymore.

For example, let us assume that the first few lines of our module’s main function look like this before the generator run:

def main():

# AUTOMATIC MODULE ARGUMENTS

# AUTOMATIC MODULE ARGUMENTS

module = AnsibleModule(

If we run the generator now in check mode with difference printing switched on, we will get back something like this:

$ ansible-argspec-gen --diff --dry-run plugins/modules/route.py

--- ../ansible_collections/steampunk/nginx_unit/plugins/modules/route.py.old

+++ ../ansible_collections/steampunk/nginx_unit/plugins/modules/route.py.new

@@ -359,6 +359,52 @@

def main():

# AUTOMATIC MODULE ARGUMENTS

+ argument_spec = {

+ "global": {"default": False, "type": "bool"},

+ "name": {"type": "str"},

+ "socket": {"type": "path"},

+ "state": {

+ "choices": ["present", "absent"],

+ "default": "present",

+ "type": "str",

+ },

+ }

+ required_if = [("global", False, ("name",)), ("state", "present", ("steps",))]

# AUTOMATIC MODULE ARGUMENTS

module = AnsibleModule(

Once we are happy with the proposed changes, we can write them to the file:

$ ansible-argspec-gen plugins/modules/route.py

If we update the module’s documentation, we can simply rerun the previous command and the generator will take care of updating the specification. Note that the generator will overwrite the content between the markers, so make sure you do not manually modify that part of the file or you will lose the changes on the next update.

Writing module documentation

Generating argument specification for the AnsibleModule class should work

on any module that has a documentation. But getting the generator to produce

other parameters such as conditional requirements takes a bit of work.

In order to generate a required_if specification, our parameters need to have a sentence in their description that fits the template required if I({param_name}) is C({param_value}). The next example:

options:

name:

description:

- Name of the resource. Required if I(state) is C(present).

will produce the following specification:

required_if = [("state", "present", ("name", ))]

Another thing that the generator knows how to produce is the mutually_exclusive specification. The pattern that the generator is looking for in this case is Mutually exclusive with I({param1}), I({param2}), and I({param3}), where the number of parameters that we can specify is not limited. Example:

options:

processes:

description:

- Dynamic process limits.

- Mutually exclusive with I(no_processes).

no_processes:

description:

- Static process limit.

- Mutually exclusive with I(processes).

This will produce:

mutually_exclusive = [("no_processes", "processes")]

Development setup

Getting the development environment up and running is relatively simple if we have pipenv installed:

$ pipenv update

To test the extractor, we can run:

$ pipenv run ansible-argspec-gen
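
The two documentation patterns described above (required if I(...) is C(...) and Mutually exclusive with I(...)) lend themselves to a small regular-expression sketch. The code below is a minimal illustration under stated assumptions, not the generator's implementation: `extract_conditions` and the three regexes are hypothetical, the options are passed as an already-parsed dictionary, and values are kept as strings (the real tool evidently converts some of them, such as False in the diff shown earlier).

```python
import re

# Hypothetical patterns modelled on the sentence templates in the README.
REQUIRED_IF = re.compile(r"required if I\((\w+)\) is C\(([^)]*)\)", re.IGNORECASE)
MUTUALLY_EXCLUSIVE = re.compile(r"mutually exclusive with ([^.]*)", re.IGNORECASE)
PARAM = re.compile(r"I\((\w+)\)")


def extract_conditions(options):
    """Derive required_if and mutually_exclusive from option descriptions."""
    required_if = []
    exclusive = set()
    for name, spec in options.items():
        for sentence in spec.get("description", []):
            for param, value in REQUIRED_IF.findall(sentence):
                required_if.append((param, value, (name,)))
            match = MUTUALLY_EXCLUSIVE.search(sentence)
            if match:
                # Sort the names so both sides of a pair yield the same group.
                group = {name, *PARAM.findall(match.group(1))}
                exclusive.add(tuple(sorted(group)))
    return required_if, sorted(exclusive)


options = {
    "name": {"description": [
        "Name of the resource. Required if I(state) is C(present)."]},
    "processes": {"description": [
        "Dynamic process limits.",
        "Mutually exclusive with I(no_processes)."]},
    "no_processes": {"description": [
        "Static process limit.",
        "Mutually exclusive with I(processes)."]},
}

required_if, mutually_exclusive = extract_conditions(options)
print(required_if)
print(mutually_exclusive)
```

Collecting the exclusive groups in a set of sorted tuples is what keeps the pair from being reported twice, once from each parameter's description.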

|

ansible-art

|

A simple tool to apply role of ansible

|

ansible-autodoc

|

ansible-autodoc

Generate documentation from annotated playbooks and roles using templates.

Note: this project is currently in Beta; issues, ideas and pull requests are welcome.

Features

- allow to document playbook projects and roles
- use templates to generate and maintain the documentation
- extended functions when documenting:
  - tags: the autodoc will search for used tags in the project

Getting started

# install

pip install ansible-autodoc

# print help

ansible-autodoc -h

# print parsed annotation results in the cli

ansible-autodoc -p all path/to/role_or_playbook

# generate README file based on annotations

ansible-autodoc [path/to/project]

notes:

- you can use grip to see the live changes.
- this only runs with python 3; if you still have python 2.x, use pip3

Annotations

Use the following annotations in your playbooks and roles.

meta: use @meta to annotate the metadata of the playbook or role, like author. Useful metadata:

- author: (self explanatory)
- description: playbook / role description
- name: to define a different role/project name instead of the folder name
- license: (self explanatory)
- email: (self explanatory)

# @meta author: Author Name
# @meta description: Project description

todo: use @todo to annotate improvements, bugs etc.

# @todo bug: bug description
# @todo improvement: improvement

action: use @action to annotate the actions performed by the playbook/role.

# @action install # this action describes the installation
# @action # this action does not have a section, only description

tags: use @tag to annotate tags. This is a special annotation: it not only searches for annotations, but also for tags used in the project, and adds them to the generated output.

# @tag tagname # tag description

variables: use @var to annotate configuration variables.

# @var my_var: default_value # description of the variable

example: after every annotation, you can define an example block linked to that annotation. In this case the example is part of the var annotation.

# @var my_var: default_value # description of the variable
my_var: default_value
# @example # the hash is needed due to the parser constraints
# my_var:
# - subitem: string
# - subitem2: string
# @end

Templates

ansible-autodoc comes with 3 templates out of the box. The default is "readme"; you can change this in the configuration.

If you want to create your own project-specific templates, see the template documentation.

If a file already exists in the output, you will be prompted to overwrite or abort.

README

The default "readme" template generates a README.md file in the root of the project, detailing the sections:

- title and description
- actions
- tags
- variables
- todos
- license
- author information

You can extend this by creating a file "_readme_doby.md" in the root of your project; it will be included in the rendered README just after the initial description.

Doc and README

The "doc_and_readme" template is an extended template intended for playbook projects with several roles. It generates a minimal README.md file and a documentation subfolder "doc" with more detailed information.

You can extend this by creating a file "_readme_doby.md" in the root of your project; it will be included in the rendered README just after the initial description.

The files created in the documentation folder cover:

- tags: list all tags classified by roles
- variables: list all variables classified by roles
- todo: list all todo actions classified by roles
- report: provides a report of the project and useful information during development

You can extend the documentation in this folder; just keep in mind that generated files will be overwritten.

Command line

The "cliprint" template is used to display the content when you use the command line print parameter "-p".

Configuration

You can create a configuration file "autodoc.config.yaml" in the root of your project in order to modify several behaviours. See the sample config file for more details:

# role or project with playbooks

$ cd <project>

# create sample configuration (optional)

# you can pass the options as parameters too

$ ansible-autodoc --sample-doc > autodoc.config.yaml
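
The annotation lines shown in this description share one shape: a comment marker, an @kind, an optional key, an optional value after a colon, and an optional trailing description after a second hash. A minimal Python sketch of splitting such a line is given below. `parse_annotation` is a hypothetical helper written for illustration; it is not ansible-autodoc's parser and it ignores multi-line @example ... @end blocks.

```python
def parse_annotation(line):
    """Split one '# @kind key: value # description' comment line.

    Hypothetical helper for illustration; returns None for lines that
    are not annotations.
    """
    text = line.strip()
    if not text.startswith("#"):
        return None
    text = text[1:].strip()
    if not text.startswith("@"):
        return None
    # Everything after the next hash is the free-text description.
    body, _, description = text[1:].partition("#")
    # The first colon separates the key from its value.
    head, _, value = body.partition(":")
    kind, _, key = head.strip().partition(" ")
    return {
        "kind": kind,
        "key": key.strip(),
        "value": value.strip(),
        "description": description.strip(),
    }


for sample in (
    "# @meta author: Author Name",
    "# @action install # this action describes the installation",
    "# @var my_var: default_value # description of the variable",
    "my_var: default_value",
):
    print(parse_annotation(sample))
```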

|

ansible-autodoc-fork

|

ansible-autodoc

Generate documentation from annotated playbooks and roles using templates.

Note: this project is currently in Beta; issues, ideas and pull requests are welcome.

Features

- allow to document playbook projects and roles
- use templates to generate and maintain the documentation
- extended functions when documenting:
  - tags: the autodoc will search for used tags in the project

Getting started

# install

pip install ansible-autodoc

# print help

ansible-autodoc -h

# print parsed annotation results in the cli

ansible-autodoc -p all path/to/role_or_playbook

# generate README file based on annotations

ansible-autodoc [path/to/project]

notes:

- you can use grip to see the live changes.
- this only runs with python 3; if you still have python 2.x, use pip3

Annotations

Use the following annotations in your playbooks and roles.

meta: use @meta to annotate the metadata of the playbook or role, like author. Useful metadata:

- author: (self explanatory)
- description: playbook / role description
- name: to define a different role/project name instead of the folder name
- license: (self explanatory)
- email: (self explanatory)

# @meta author: Author Name
# @meta description: Project description

todo: use @todo to annotate improvements, bugs etc.

# @todo bug: bug description
# @todo improvement: improvement

action: use @action to annotate the actions performed by the playbook/role.

# @action install # this action describes the installation
# @action # this action does not have a section, only description

tags: use @tag to annotate tags. This is a special annotation: it not only searches for annotations, but also for tags used in the project, and adds them to the generated output.

# @tag tagname # tag description

variables: use @var to annotate configuration variables.

# @var my_var: default_value # description of the variable

example: after every annotation, you can define an example block linked to that annotation. In this case the example is part of the var annotation.

# @var my_var: default_value # description of the variable
my_var: default_value
# @example # the hash is needed due to the parser constraints
# my_var:
# - subitem: string
# - subitem2: string
# @end

Templates

ansible-autodoc comes with 3 templates out of the box. The default is "readme"; you can change this in the configuration.

If you want to create your own project-specific templates, see the template documentation.

If a file already exists in the output, you will be prompted to overwrite or abort.

README

The default "readme" template generates a README.md file in the root of the project, detailing the sections:

- title and description
- actions
- tags
- variables
- todos
- license
- author information

You can extend this by creating a file "_readme_doby.md" in the root of your project; it will be included in the rendered README just after the initial description.

Doc and README

The "doc_and_readme" template is an extended template intended for playbook projects with several roles. It generates a minimal README.md file and a documentation subfolder "doc" with more detailed information.

You can extend this by creating a file "_readme_doby.md" in the root of your project; it will be included in the rendered README just after the initial description.

The files created in the documentation folder cover:

- tags: list all tags classified by roles
- variables: list all variables classified by roles
- todo: list all todo actions classified by roles
- report: provides a report of the project and useful information during development

You can extend the documentation in this folder; just keep in mind that generated files will be overwritten.

Command line

The "cliprint" template is used to display the content when you use the command line print parameter "-p".

Configuration

You can create a configuration file "autodoc.config.yaml" in the root of your project in order to modify several behaviours. See the sample config file for more details:

# role or project with playbooks

$ cd <project>

# create sample configuration (optional)

# you can pass the options as parameters too

$ ansible-autodoc --sample-doc > autodoc.config.yaml

|

ansibleawx-client

|

Ansible AWX Client

Donate to help keep this project maintained

Summary

This is an unofficial python API client for Ansible AWX.

Requirements

requests

Quick Start Guide

Install Ansible AWX Client

pip install ansibleawx-client

Initialize API Client

You can do this with your username and password or using your Token.

Initialize client with your username and password

import ansibleawx

API_URL = "http://my-ansibleawx.com/api/v2"

client = ansibleawx.Api("username", "password", api_url=API_URL)

Initialize client with your token

import ansibleawx

API_URL = "http://my-ansibleawx.com/api/v2"
TOKEN = "AAAAAAAAAAAAAAAAAAAAAAAAAAAAAA"

client = ansibleawx.Api(api_url=API_URL, token=TOKEN)

Examples

Get Inventories

# to get all inventories
response = client.get_inventories()

# to get specific inventory by id
response = client.get_inventories(1)

Get Jobs Templates

# to get all jobs templates
response = client.get_jobs_templates()

# to get specific job template by id
response = client.get_jobs_templates(1)

Launch Job Template by id

response = client.launch_job_template(1)

Relaunch Job by id

response = client.relaunch_job(1)

Cancel Job by id

response = client.cancel_job(1)

Function Reference

Consult the Ansible Tower documentation for more details.

|

ansible-base

|

Ansible

Ansible is a radically simple IT automation system. It handles configuration management, application deployment, cloud provisioning, ad-hoc task execution, network automation, and multi-node orchestration. Ansible makes complex changes like zero-downtime rolling updates with load balancers easy. More information on the Ansible website.

Design Principles

- Have a dead simple setup process and a minimal learning curve.
- Manage machines very quickly and in parallel.
- Avoid custom-agents and additional open ports, be agentless by leveraging the existing SSH daemon.
- Describe infrastructure in a language that is both machine and human friendly.
- Focus on security and easy auditability/review/rewriting of content.
- Manage new remote machines instantly, without bootstrapping any software.
- Allow module development in any dynamic language, not just Python.
- Be usable as non-root.
- Be the easiest IT automation system to use, ever.

Use Ansible

You can install a released version of Ansible via pip, a package manager, or our release repository. See our installation guide for details on installing Ansible on a variety of platforms.

Red Hat offers supported builds of Ansible Engine.

Power users and developers can run the devel branch, which has the latest features and fixes, directly. Although it is reasonably stable, you are more likely to encounter breaking changes when running the devel branch. We recommend getting involved in the Ansible community if you want to run the devel branch.

Get Involved

- Read Community Information for all kinds of ways to contribute to and interact with the project, including mailing list information and how to submit bug reports and code to Ansible.
- Join a Working Group, an organized community devoted to a specific technology domain or platform.
- Submit a proposed code update through a pull request to the devel branch.
- Talk to us before making larger changes to avoid duplicate efforts. This not only helps everyone know what is going on, it also helps save time and effort if we decide some changes are needed.
- For a list of email lists, IRC channels and Working Groups, see the Communication page.

Coding Guidelines

We document our Coding Guidelines in the Developer Guide. We particularly suggest you review:

- Contributing your module to Ansible
- Conventions, tips and pitfalls

Branch Info

- The devel branch corresponds to the release actively under development.
- The stable-2.X branches correspond to stable releases.
- Create a branch based on devel and set up a dev environment if you want to open a PR.
- See the Ansible release and maintenance page for information about active branches.

Roadmap

Based on team and community feedback, an initial roadmap will be published for a major or minor version (ex: 2.7, 2.8). The Ansible Roadmap page details what is planned and how to influence the roadmap.

Authors

Ansible was created by Michael DeHaan and has contributions from over 4700 users (and growing). Thanks everyone!

Ansible is sponsored by Red Hat, Inc.

License

GNU General Public License v3.0 or later

See COPYING to see the full text.

|

ansible-bender

|

ansible-bender

This tool bends containers using Ansible playbooks and turns them into container images. It has a pluggable builder selection: it is up to you to pick the tool which will be used to construct your container image. Right now the only supported builder is buildah. More to come in the future. Ansible-bender (ab) relies on Ansible connection plugins for performing builds.

tl;dr Ansible is the frontend, buildah is the backend.

The concept is described in the following blog posts:

- Building containers with buildah and ansible.
- Ansible and Podman Can Play Together Now.

Looking for maintainers ❤

This project doesn't have an active maintainer right now who would watch issues daily.

If you are a user of ansible-bender and are familiar with Python, please consider becoming a maintainer.

Features

- You can build your container images with buildah as a backend.
- Ansible playbook is your build recipe.
- You are able to set various image metadata via CLI or as specific Ansible vars:
  - working directory
  - environment variables
  - labels
  - user
  - default command
  - exposed ports
- You can do volume mounts during build.
- Caching mechanism:
  - Every task result is cached as a container image layer.
  - You can turn this off with --no-cache.
  - You can disable caching from a certain point by adding a tag no-cache to a task.
- You can stop creating new image layers by adding the tag stop-layering to a task.
- If an image build fails, it's committed and named with a suffix -[TIMESTAMP]-failed (so you can take a look inside and resolve the issue).
- The tool tries to find a python interpreter inside the base image.
- You can push images you built to remote locations such as:
  - a registry, a tarball, docker daemon, ...
  - podman push is used to perform the push.

Documentation

You can read more about this project in the documentation:

- Documentation home
- Interface
- Installation
- Configuration
- Usage
- Caching and Layering mechanism
- Contribution guide
- Ansible-bender in OKD

|

ansible-builder

|

Ansible Builder

Ansible Builder is a tool that automates the process of building execution environments using the schemas and tooling defined in various Ansible Collections and by the user.

See the readthedocs page for ansible-builder at: https://ansible-builder.readthedocs.io/en/stable/

Get Involved:

- We use GitHub issues to track bug reports and feature ideas.
- Want to contribute? Check out our guide.
- Join us in the #ansible-builder channel on Libera.chat IRC.
- For the full list of Ansible email lists, IRC channels and working groups, check out the Ansible Mailing lists page of the official Ansible documentation.

Code of Conduct

We ask all of our community members and contributors to adhere to the Ansible code of conduct. If you have questions, or need assistance, please reach out to our community team

[email protected] License v2.0

|

ansible-bundle

|

# ansible-bundle

Small tool for automatically downloading roles and libs, à la Gemfile.

# Preamble

As many roles have changed their configurations over time, anyone might want to use a specific version of a role (for instance, a commit, a branch, or a tag). Moreover, a complex playbook could need different versions of the same role.

This app will download roles (bundles, from now on) from their repositories before launching a playbook. _That means that a role should be in its own repository_.

# Prerequisites

- Ansible. Any version.
- Git >= 1.8.5
- Python 2 >= 2.6

# Installation

## The easy way: pip

`sudo pip install ansible-bundle`

## The not-that-easy way: from code

- Download [latest release](../../archive/master.zip)
- Run `sudo python setup.py`

# Syntax

`ansible-bundle FILEYAML [ansible-playbook-options] [ansible-bundle-options]`

In addition to the ansible-playbook parameters, ansible-bundle accepts these:

- `--bundle-clean-roles` Will clean roles and library directories before download (*)
- `--bundle-dry` Shows what will be run (as it won't download anything, it also won't search for dependencies)
- `--bundle-deps-only` Don't run the playbook, just satisfy dependencies.
- `--bundle-disable-color` Useful for non-interactive consoles
- `--bundle-workers` Concurrent connections when downloading/updating roles. Default: 1
- `--bundle-safe-update` Don't clean existing roles. (*)

(*) If both `bundle-clean-roles` and `bundle-safe-update` are set, `bundle-clean-roles` will take effect.

# Configuration

ansible-bundle expects to find a `[bundle]` section in ansible.cfg, which may contain some of the command line parameters:

- workers
- verbosity
- safe

And the following extra options:

- `url`: URL where the roles are located. For example, if role `apache` is in `github.com/foo/roles/apache`, the `url` should be set to `github.com/foo/roles`. Default is 'https://github.com'

## bundle.cfg example

    [bundle]
    url='[email protected]:devopsysadmin/ansible-roles'
    workers=5
    verbosity=1

# Example of use

Given the following playbook (site.yml):

    - include: site-common.yml
      tags:
        - common
    - hosts: all
      roles:
        - [email protected]
        - { role: apache, version: '2.4' }

Running `ansible-bundle site.yml` will search for roles in the `site-common.yml` file and add them to the download queue, which already includes postgresql 1.0 and apache master.

Please note that each role is intended to be in its own repository, not in a folder.

# Changelog

## v 0.6

- Syntax role/version changes to role@version. This simplifies the configuration in ansible.cfg and allows branch names such as feature/something in role version. If a versioned role with the previous syntax is found, it will complain with a deprecation warning. The previous syntax will be obsoleted in v 0.7.

# Author

David Pedersen (david.3pwood AT gmail DOT com)

# License

GNU Public License v2 (GPLv2)
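
# Sketch: reading a role@version entry

The v 0.6 changelog explains that `role@version` replaced `role/version` so that branch names containing slashes (such as feature/something) can be used as versions. The snippet below is only an illustration of that idea, not ansible-bundle's actual parser: the `RoleSpec` type, the `parse_role_spec` function and the role names are hypothetical, and it assumes the string is split at the first `@`.

```python
from typing import NamedTuple, Optional


class RoleSpec(NamedTuple):
    """Hypothetical container for a parsed role entry."""
    name: str
    version: Optional[str]  # None means no version was pinned


def parse_role_spec(spec: str) -> RoleSpec:
    """Split 'role@version' at the first '@' (assumed behaviour).

    Everything after the '@' is kept verbatim, so a version may
    contain slashes, e.g. a branch named 'feature/something'.
    """
    name, separator, version = spec.strip().partition("@")
    if not name:
        raise ValueError(f"missing role name in {spec!r}")
    if separator and not version:
        raise ValueError(f"missing version after '@' in {spec!r}")
    return RoleSpec(name, version or None)


pinned = parse_role_spec("webserver@feature/something")
unpinned = parse_role_spec("webserver")
```

With the old `role/version` form, a slash inside the branch name would have been ambiguous, which is the reason the changelog gives for the switch.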

|

ansible-butler

|

ansible-butler

Butler CLI for Ansible projects

Functions

| Object | Action | Description |
|---|---|---|
| directory | init | initialize an ansible directory |
| directory | clean | cleanup an ansible directory |
| ee | init | initialize an execution environment directory for ansible-builder |
| ee | dependencies[deps] | parse the dependency tree based on execution environment definition (or collection requirements) |
| role | list | list roles |
| role | dependencies[deps] | Build a dependency graph between roles in a specified directory |
| role | clean | clean role directory structure (remove empty yml files & dirs) |
| role | mk-readme | auto generate readme based on role meta and basic yml info |
| playbook | update | map legacy module names to FQCNs |
| playbook | list-collections[lc] | list collections used in a playbook (following include_* directives) |

Usage

Usage:

ansible-butler directory init [<dir>] [--config=PATH]

ansible-butler directory clean [<dir>] [--skip-roles]

ansible-butler ee init [<dir>] [--config=PATH]

ansible-butler ee [dependencies|deps] [--config=PATH] [<name>]

ansible-butler role list [--roles-path=PATH] [<name> --recursive]

ansible-butler role [dependencies|deps] [--roles-path=PATH]

ansible-butler role clean [--roles-path=PATH] [<name> --recursive]

ansible-butler role mk-readme [--roles-path=PATH] [<name> --recursive]

ansible-butler playbook update [--context=CONTEXT] [--config=PATH] [<name>] [--recursive] [--force]

ansible-butler playbook [list-collections|lc] [--context=CONTEXT] [--config=PATH] [<name>] [--recursive] [--force]

Arguments:

name name of target (accepts glob patterns)

dir path to directory [default: ./]

Options:

-h --help Show this screen

-r --recursive Apply glob recursively [default: False]

-f --force Make file changes in place

--config=PATH Path to config file

--roles-path=PATH Path to roles directory [default: ./roles]

--context=CONTEXT Path to context directory [default: ./]

--skip-roles Flag to skip cleaning roles

Examples

Initialize Ansible Directory

    ansible-butler directory init ./sandbox
    ansible-butler directory init ./sandbox --config=~/configs/ansible-butler.yml

Clean an Ansible Directory

    ansible-butler directory clean ./sandbox
    ansible-butler directory clean ./sandbox --skip-roles

Initialize Execution Environment Directory

    ansible-butler ee init ./ee-windows
    ansible-butler ee init ./ee-windows --config=~/configs/ansible-butler.yml

Inspect Execution Environment Dependencies

    ansible-butler ee dependencies execution-environment.yml
    ansible-butler ee deps requirements.yml --config=~/configs/ansible-butler.yml

Clean Roles

    ansible-butler role clean my-role-1
    ansible-butler role clean my-role-*

List Roles

    ansible-butler role list
    ansible-butler role list ansible_collections/namespace/collection/roles

Generate Dependency Graph

    ansible-butler role deps
    ansible-butler role deps ansible_collections/namespace/collection/roles

Generate README

    ansible-butler role mk-readme my-role-1
    ansible-butler role mk-readme my-role-*

Update Playbooks

    ansible-butler playbook update --context=./playbooks -r
    ansible-butler playbook update legacy-*.yml
    ansible-butler playbook update -f

List Collections

    ansible-butler playbook list-collections --context=./playbooks -r
    ansible-butler playbook lc example-playbook.yml

Configuration

Create an .ansible-butler.yml in one or more of the following locations:

/etc/ansible-butler/ ## least precedence

~/

./ ## highest precedence

You can also specify a specific path at runtime via the --config option.

    # Example Configuration Schema
    execution_environment:
      init:
        version: 2
        ansible_config: ansible.cfg
        ee_base_image: quay.io/ansible/ansible-runner:latest
        ee_builder_image: quay.io/ansible/ansible-builder:latest
        prepend_build_steps:
          - ...
        append_build_steps:
          - ...
    directory:
      init:
        folders:
          - name: plugins
            folders: ...
            files:
              - README.md
        files:
          - playbook.yml
    role:
      dependencies:
        output_fmt: html # [html,json]
        output_dest: graph.html
        include_tests: false
        master_node: role-common-setup
        initial_direction: downstream
        title: ansible-butler roles dependency graph
        title_text_color: white
        title_background_color: black
        tree_options: # Customize the color palette
          circleStrokeColor: '#2b8f91'
          linkStrokeColor: '#dddddd'
          closedNodeCircleColor: '#9bd3d4'
          openNodeCircleColor: white
          cyclicNodeColor: '#FF4242'
          missingNodeColor: '#CC0100'
          maxDepthNodeColor: '#FF5850'
    playbook:
      update:
        modules:
          smart_device:
            redirect: zjleblanc.kasa.smart_device
          custom_module:
            redirect: company.it.custom_module

🔗 Default configuration file
🔗 Example adding test plugins directory
🔗 Example adding module redirects

Troubleshooting

ansible-butler: command not found

check the $PATH environment variable and ensure that ~/.local/bin is included

License

GNU General Public License

Author Information

Zach LeBlanc
Red Hat
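
Sketch: layering configuration by precedence

The Configuration section lists three locations, from least to highest precedence. One way to picture that ordering is a merge in which later files override earlier ones. The snippet below is a minimal sketch of that idea and not ansible-butler's own code: the function names are hypothetical, the configs are assumed to be already parsed into dictionaries, and whether the real tool merges nested keys or replaces whole sections is an assumption here.

```python
from functools import reduce
from typing import Any, Dict, Iterable

Config = Dict[str, Any]


def deep_merge(base: Config, override: Config) -> Config:
    """Return a new dict where values from `override` win over `base`.

    Nested dictionaries are merged key by key (an assumption);
    any other value type is replaced outright.
    """
    merged = dict(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = value
    return merged


def merge_in_precedence_order(configs: Iterable[Config]) -> Config:
    """Fold configs ordered from least to highest precedence."""
    return reduce(deep_merge, configs, {})


# Stand-ins for /etc/ansible-butler/, ~/ and ./ (contents are made up).
system_wide = {"role": {"dependencies": {"output_fmt": "html", "include_tests": False}}}
user_home: Config = {}
project_dir = {"role": {"dependencies": {"output_fmt": "json"}}}

effective = merge_in_precedence_order([system_wide, user_home, project_dir])
```

In this sketch the project-level file changes only `output_fmt`, while `include_tests` is inherited from the system-wide file.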

|

ansible-cached-lookup

|

ansible-cached-lookup

An Ansible lookup plugin that caches the results of any other lookup, most useful in group/host vars.

By default, Ansible evaluates any lookups in a group/host var whenever the var is accessed. For example, given a group/host var:

    content: "{{ lookup('pipe', 'a-very-slow-command') }}"

any tasks that access content (e.g. in a template) will re-evaluate the lookup, which adds up very quickly. See ansible/ansible#9263.

Installation

Pick a name that you want to use to call this plugin in Ansible playbooks. This documentation assumes you're using the name cached.

    pip install ansible-cached-lookup

Create a lookup_plugins directory in the directory in which you run Ansible.

By default, Ansible will look for lookup plugins in a lookup_plugins folder adjacent to the running playbook. For more information on this, or to change the location where Ansible looks for lookup plugins, see the Ansible docs.

Create a file called cached.py (or whatever name you picked) in the lookup_plugins directory, with one line:

    from ansible_cached_lookup import LookupModule

Contributing

To run the tests, run tox.

To format code to pass tox -e lint, run tox -e format.
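
Sketch: why caching a lookup helps

The description above says a lookup in a group/host var is re-evaluated on every access, and that the plugin caches the result of any other lookup. The snippet below illustrates that idea in plain Python. It is not the plugin's implementation: the `CachingLookup` class and `slow_pipe` function are hypothetical stand-ins, and keying the cache on the lookup name plus its terms is an assumption.

```python
from typing import Callable, Dict, Hashable, List, Tuple

LookupFn = Callable[..., List[str]]


class CachingLookup:
    """Wrap named lookup functions and remember results by (name, terms)."""

    def __init__(self, lookups: Dict[str, LookupFn]) -> None:
        self._lookups = lookups
        self._cache: Dict[Tuple[str, Tuple[Hashable, ...]], List[str]] = {}

    def run(self, name: str, *terms: Hashable) -> List[str]:
        key = (name, terms)
        if key not in self._cache:
            # Only the first access pays for the underlying lookup.
            self._cache[key] = self._lookups[name](*terms)
        return self._cache[key]


calls: List[str] = []


def slow_pipe(command: str) -> List[str]:
    """Stand-in for an expensive lookup; records each real invocation."""
    calls.append(command)
    return [f"output of {command}"]


cached = CachingLookup({"pipe": slow_pipe})
first = cached.run("pipe", "a-very-slow-command")
second = cached.run("pipe", "a-very-slow-command")
```

After both accesses, `calls` holds a single entry: the second access is served from the cache instead of running the slow command again.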

|

ansible-cmdb

|

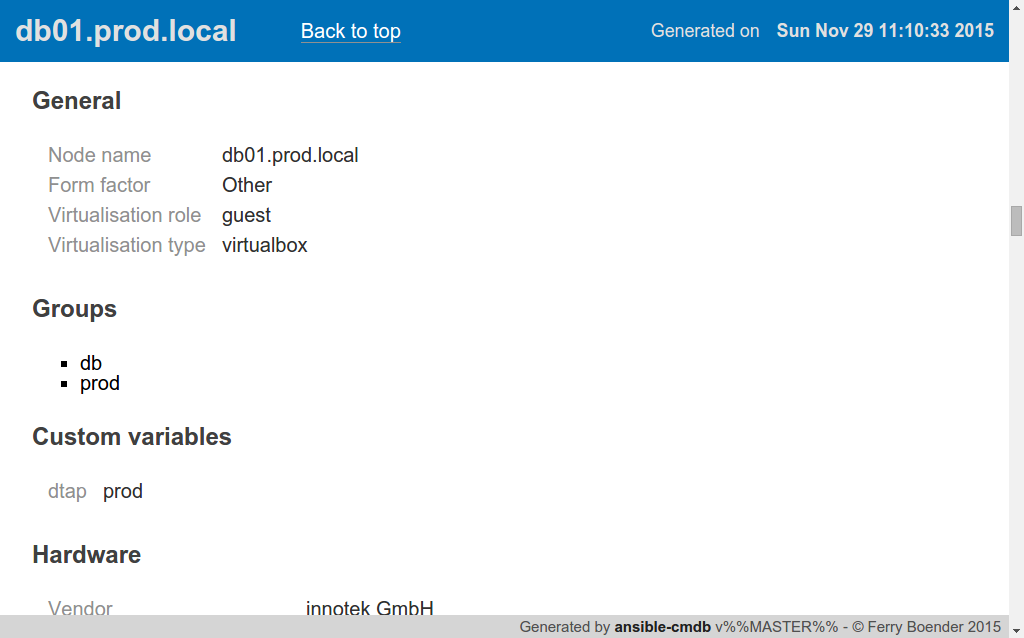

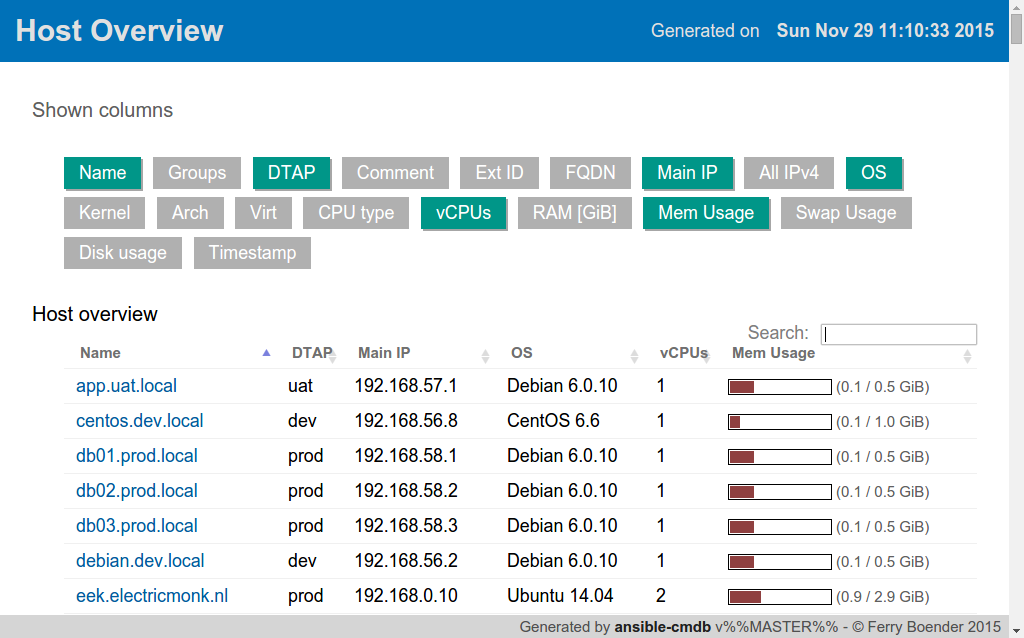

About

Ansible-cmdb takes the output of Ansible’s fact gathering and converts it into a static HTML overview page (and other things) containing system configuration information.

It supports multiple types of output (html, csv, sql, etc) and extending