url

stringlengths 58

61

| repository_url

stringclasses 1

value | labels_url

stringlengths 72

75

| comments_url

stringlengths 67

70

| events_url

stringlengths 65

68

| html_url

stringlengths 46

51

| id

int64 599M

1.47B

| node_id

stringlengths 18

32

| number

int64 1

5.33k

| title

stringlengths 1

276

| user

dict | labels

list | state

stringclasses 2

values | locked

bool 1

class | assignee

dict | assignees

list | milestone

dict | comments

list | created_at

stringlengths 20

20

| updated_at

stringlengths 20

20

| closed_at

stringlengths 20

20

⌀ | author_association

stringclasses 3

values | active_lock_reason

null | draft

bool 2

classes | pull_request

dict | body

stringlengths 0

228k

⌀ | reactions

dict | timeline_url

stringlengths 67

70

| performed_via_github_app

null | state_reason

stringclasses 3

values | is_pull_request

bool 2

classes |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/datasets/issues/3800

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/3800/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/3800/comments

|

https://api.github.com/repos/huggingface/datasets/issues/3800/events

|

https://github.com/huggingface/datasets/pull/3800

| 1,155,620,761 |

PR_kwDODunzps4zvkjA

| 3,800 |

Added computer vision tasks

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/53175384?v=4",

"events_url": "https://api.github.com/users/merveenoyan/events{/privacy}",

"followers_url": "https://api.github.com/users/merveenoyan/followers",

"following_url": "https://api.github.com/users/merveenoyan/following{/other_user}",

"gists_url": "https://api.github.com/users/merveenoyan/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/merveenoyan",

"id": 53175384,

"login": "merveenoyan",

"node_id": "MDQ6VXNlcjUzMTc1Mzg0",

"organizations_url": "https://api.github.com/users/merveenoyan/orgs",

"received_events_url": "https://api.github.com/users/merveenoyan/received_events",

"repos_url": "https://api.github.com/users/merveenoyan/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/merveenoyan/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/merveenoyan/subscriptions",

"type": "User",

"url": "https://api.github.com/users/merveenoyan"

}

|

[] |

closed

| false | null |

[] | null |

[] |

2022-03-01T17:37:46Z

|

2022-03-04T07:15:55Z

|

2022-03-04T07:15:55Z

|

CONTRIBUTOR

| null | false |

{

"diff_url": "https://github.com/huggingface/datasets/pull/3800.diff",

"html_url": "https://github.com/huggingface/datasets/pull/3800",

"merged_at": "2022-03-04T07:15:55Z",

"patch_url": "https://github.com/huggingface/datasets/pull/3800.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3800"

}

|

The previous PR was in my fork, so I thought it would be easier to open it from a branch. Added computer vision task datasets according to HF tasks.

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3800/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/3800/timeline

| null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3799

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/3799/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/3799/comments

|

https://api.github.com/repos/huggingface/datasets/issues/3799/events

|

https://github.com/huggingface/datasets/pull/3799

| 1,155,356,102 |

PR_kwDODunzps4zus9R

| 3,799 |

Xtreme-S Metrics

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/23423619?v=4",

"events_url": "https://api.github.com/users/patrickvonplaten/events{/privacy}",

"followers_url": "https://api.github.com/users/patrickvonplaten/followers",

"following_url": "https://api.github.com/users/patrickvonplaten/following{/other_user}",

"gists_url": "https://api.github.com/users/patrickvonplaten/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/patrickvonplaten",

"id": 23423619,

"login": "patrickvonplaten",

"node_id": "MDQ6VXNlcjIzNDIzNjE5",

"organizations_url": "https://api.github.com/users/patrickvonplaten/orgs",

"received_events_url": "https://api.github.com/users/patrickvonplaten/received_events",

"repos_url": "https://api.github.com/users/patrickvonplaten/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/patrickvonplaten/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/patrickvonplaten/subscriptions",

"type": "User",

"url": "https://api.github.com/users/patrickvonplaten"

}

|

[] |

closed

| false | null |

[] | null |

[

"@lhoestq - if you could take a final review here this would be great (if you have 5min :-) ) ",

"Don't think the failures are related but not 100% sure",

"Yes the CI fail is unrelated - you can ignore it"

] |

2022-03-01T13:42:28Z

|

2022-03-16T14:40:29Z

|

2022-03-16T14:40:26Z

|

MEMBER

| null | false |

{

"diff_url": "https://github.com/huggingface/datasets/pull/3799.diff",

"html_url": "https://github.com/huggingface/datasets/pull/3799",

"merged_at": "2022-03-16T14:40:26Z",

"patch_url": "https://github.com/huggingface/datasets/pull/3799.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3799"

}

|

**Added datasets (TODO)**:

- [x] MLS

- [x] Covost2

- [x] Minds-14

- [x] Voxpopuli

- [x] FLoRes (need data)

**Metrics**: Done

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3799/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/3799/timeline

| null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3798

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/3798/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/3798/comments

|

https://api.github.com/repos/huggingface/datasets/issues/3798/events

|

https://github.com/huggingface/datasets/pull/3798

| 1,154,411,066 |

PR_kwDODunzps4zrl5Y

| 3,798 |

Fix error message in CSV loader for newer Pandas versions

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/47462742?v=4",

"events_url": "https://api.github.com/users/mariosasko/events{/privacy}",

"followers_url": "https://api.github.com/users/mariosasko/followers",

"following_url": "https://api.github.com/users/mariosasko/following{/other_user}",

"gists_url": "https://api.github.com/users/mariosasko/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/mariosasko",

"id": 47462742,

"login": "mariosasko",

"node_id": "MDQ6VXNlcjQ3NDYyNzQy",

"organizations_url": "https://api.github.com/users/mariosasko/orgs",

"received_events_url": "https://api.github.com/users/mariosasko/received_events",

"repos_url": "https://api.github.com/users/mariosasko/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/mariosasko/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mariosasko/subscriptions",

"type": "User",

"url": "https://api.github.com/users/mariosasko"

}

|

[] |

closed

| false | null |

[] | null |

[] |

2022-02-28T18:24:10Z

|

2022-02-28T18:51:39Z

|

2022-02-28T18:51:38Z

|

CONTRIBUTOR

| null | false |

{

"diff_url": "https://github.com/huggingface/datasets/pull/3798.diff",

"html_url": "https://github.com/huggingface/datasets/pull/3798",

"merged_at": "2022-02-28T18:51:38Z",

"patch_url": "https://github.com/huggingface/datasets/pull/3798.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3798"

}

|

Fix the error message in the CSV loader for `Pandas >= 1.4`. To fix this, I directly print the current file name in the for-loop. An alternative would be to use a check similar to this:

```python

csv_file_reader.handle.handle if datasets.config.PANDAS_VERSION >= version.parse("1.4") else csv_file_reader.f

```

CC: @SBrandeis

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 1,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 1,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3798/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/3798/timeline

| null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3797

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/3797/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/3797/comments

|

https://api.github.com/repos/huggingface/datasets/issues/3797/events

|

https://github.com/huggingface/datasets/pull/3797

| 1,154,383,063 |

PR_kwDODunzps4zrgAD

| 3,797 |

Reddit dataset card contribution

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/56791604?v=4",

"events_url": "https://api.github.com/users/anna-kay/events{/privacy}",

"followers_url": "https://api.github.com/users/anna-kay/followers",

"following_url": "https://api.github.com/users/anna-kay/following{/other_user}",

"gists_url": "https://api.github.com/users/anna-kay/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/anna-kay",

"id": 56791604,

"login": "anna-kay",

"node_id": "MDQ6VXNlcjU2NzkxNjA0",

"organizations_url": "https://api.github.com/users/anna-kay/orgs",

"received_events_url": "https://api.github.com/users/anna-kay/received_events",

"repos_url": "https://api.github.com/users/anna-kay/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/anna-kay/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/anna-kay/subscriptions",

"type": "User",

"url": "https://api.github.com/users/anna-kay"

}

|

[] |

closed

| false | null |

[] | null |

[] |

2022-02-28T17:53:18Z

|

2022-03-01T12:58:57Z

|

2022-03-01T12:58:57Z

|

CONTRIBUTOR

| null | false |

{

"diff_url": "https://github.com/huggingface/datasets/pull/3797.diff",

"html_url": "https://github.com/huggingface/datasets/pull/3797",

"merged_at": "2022-03-01T12:58:56Z",

"patch_url": "https://github.com/huggingface/datasets/pull/3797.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3797"

}

|

Description tags for webis-tldr-17 added.

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3797/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/3797/timeline

| null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3796

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/3796/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/3796/comments

|

https://api.github.com/repos/huggingface/datasets/issues/3796/events

|

https://github.com/huggingface/datasets/pull/3796

| 1,154,298,629 |

PR_kwDODunzps4zrOQ4

| 3,796 |

Skip checksum computation if `ignore_verifications` is `True`

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/47462742?v=4",

"events_url": "https://api.github.com/users/mariosasko/events{/privacy}",

"followers_url": "https://api.github.com/users/mariosasko/followers",

"following_url": "https://api.github.com/users/mariosasko/following{/other_user}",

"gists_url": "https://api.github.com/users/mariosasko/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/mariosasko",

"id": 47462742,

"login": "mariosasko",

"node_id": "MDQ6VXNlcjQ3NDYyNzQy",

"organizations_url": "https://api.github.com/users/mariosasko/orgs",

"received_events_url": "https://api.github.com/users/mariosasko/received_events",

"repos_url": "https://api.github.com/users/mariosasko/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/mariosasko/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mariosasko/subscriptions",

"type": "User",

"url": "https://api.github.com/users/mariosasko"

}

|

[] |

closed

| false | null |

[] | null |

[] |

2022-02-28T16:28:45Z

|

2022-02-28T17:03:46Z

|

2022-02-28T17:03:46Z

|

CONTRIBUTOR

| null | false |

{

"diff_url": "https://github.com/huggingface/datasets/pull/3796.diff",

"html_url": "https://github.com/huggingface/datasets/pull/3796",

"merged_at": "2022-02-28T17:03:46Z",

"patch_url": "https://github.com/huggingface/datasets/pull/3796.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3796"

}

|

This will speed up loading for datasets with a large number of data files (which can easily happen with `imagefolder`, for instance).
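A minimal sketch of the idea, assuming a hypothetical helper `record_checksums` (not the library's actual function): when `ignore_verifications` is set, the per-file hash is never computed, so the cost no longer grows with the number and size of data files.

```python
import hashlib
from pathlib import Path
from typing import Dict, Iterable, Optional


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large files are never read into memory at once."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def record_checksums(
    paths: Iterable[Path], ignore_verifications: bool = False
) -> Dict[str, Optional[str]]:
    """Hypothetical helper: map each path to its checksum, or to None when skipped."""
    return {
        str(p): None if ignore_verifications else sha256_of_file(p)
        for p in paths
    }
```

With `ignore_verifications=True` no file is opened at all, which is where the saving would come from for folders containing many images.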

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3796/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/3796/timeline

| null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3795

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/3795/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/3795/comments

|

https://api.github.com/repos/huggingface/datasets/issues/3795/events

|

https://github.com/huggingface/datasets/issues/3795

| 1,153,261,281 |

I_kwDODunzps5EvV7h

| 3,795 |

can not flatten natural_questions dataset

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/38466901?v=4",

"events_url": "https://api.github.com/users/Hannibal046/events{/privacy}",

"followers_url": "https://api.github.com/users/Hannibal046/followers",

"following_url": "https://api.github.com/users/Hannibal046/following{/other_user}",

"gists_url": "https://api.github.com/users/Hannibal046/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/Hannibal046",

"id": 38466901,

"login": "Hannibal046",

"node_id": "MDQ6VXNlcjM4NDY2OTAx",

"organizations_url": "https://api.github.com/users/Hannibal046/orgs",

"received_events_url": "https://api.github.com/users/Hannibal046/received_events",

"repos_url": "https://api.github.com/users/Hannibal046/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/Hannibal046/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Hannibal046/subscriptions",

"type": "User",

"url": "https://api.github.com/users/Hannibal046"

}

|

[

{

"color": "d73a4a",

"default": true,

"description": "Something isn't working",

"id": 1935892857,

"name": "bug",

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug"

}

] |

closed

| false |

{

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/lhoestq",

"id": 42851186,

"login": "lhoestq",

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"type": "User",

"url": "https://api.github.com/users/lhoestq"

}

|

[

{

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/lhoestq",

"id": 42851186,

"login": "lhoestq",

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"type": "User",

"url": "https://api.github.com/users/lhoestq"

}

] | null |

[

"same issue. downgrade it to a lower version.",

"Thanks for reporting, I'll take a look tomorrow :)"

] |

2022-02-27T13:57:40Z

|

2022-03-21T14:36:12Z

|

2022-03-21T14:36:12Z

|

NONE

| null | null | null |

## Describe the bug

After downloading the natural_questions dataset, I cannot flatten it, given that `annotations` contains `long answer` and `short answer` fields.

## Steps to reproduce the bug

```python

from datasets import load_dataset

dataset = load_dataset('natural_questions',cache_dir = 'data/dataset_cache_dir')

dataset['train'].flatten()

```

## Expected results

A dataset with `long_answer` as a feature

## Actual results

Traceback (most recent call last):

File "temp.py", line 5, in <module>

dataset['train'].flatten()

File "/Users/hannibal046/anaconda3/lib/python3.8/site-packages/datasets/fingerprint.py", line 413, in wrapper

out = func(self, *args, **kwargs)

File "/Users/hannibal046/anaconda3/lib/python3.8/site-packages/datasets/arrow_dataset.py", line 1296, in flatten

dataset._data = update_metadata_with_features(dataset._data, dataset.features)

File "/Users/hannibal046/anaconda3/lib/python3.8/site-packages/datasets/arrow_dataset.py", line 536, in update_metadata_with_features

features = Features({col_name: features[col_name] for col_name in table.column_names})

File "/Users/hannibal046/anaconda3/lib/python3.8/site-packages/datasets/arrow_dataset.py", line 536, in <dictcomp>

features = Features({col_name: features[col_name] for col_name in table.column_names})

KeyError: 'annotations.long_answer'

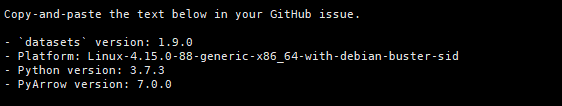

## Environment info

<!-- You can run the command `datasets-cli env` and copy-and-paste its output below. -->

- `datasets` version: 1.8.13

- Platform: MBP

- Python version: 3.8

- PyArrow version: 6.0.1
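For illustration only, here is a dependency-free sketch of what flattening a nested feature schema means. `flatten_features` and the placeholder type strings are hypothetical and are not the `datasets` implementation. The traceback above suggests the flattened table had a column named `annotations.long_answer` that was missing from the features mapping, so the lookup raised `KeyError`; the sketch shows that the two sides only agree when they are flattened in the same way.

```python
from typing import Any, Dict


def flatten_features(features: Dict[str, Any], prefix: str = "") -> Dict[str, Any]:
    """Recursively turn nested dicts into a flat dict with dotted key names."""
    flat: Dict[str, Any] = {}
    for name, value in features.items():
        full_name = f"{prefix}.{name}" if prefix else name
        if isinstance(value, dict):
            flat.update(flatten_features(value, full_name))
        else:
            flat[full_name] = value
    return flat


# Placeholder schema; the real natural_questions features are richer than this.
nested = {
    "id": "string",
    "annotations": {"long_answer": "struct", "yes_no_answer": "int64"},
}
flat = flatten_features(nested)

# Column names a flattened table would expose for this schema.
column_names = ["id", "annotations.long_answer", "annotations.yes_no_answer"]
missing_in_nested = [c for c in column_names if c not in nested]
missing_in_flat = [c for c in column_names if c not in flat]
```

Looking up the dotted column names in the unflattened mapping fails for both `annotations.*` columns, while the flattened mapping resolves all of them.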

|

{

"+1": 1,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 1,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3795/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/3795/timeline

| null |

completed

| false |

https://api.github.com/repos/huggingface/datasets/issues/3794

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/3794/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/3794/comments

|

https://api.github.com/repos/huggingface/datasets/issues/3794/events

|

https://github.com/huggingface/datasets/pull/3794

| 1,153,185,343 |

PR_kwDODunzps4zniT4

| 3,794 |

Add Mahalanobis distance metric

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/17574157?v=4",

"events_url": "https://api.github.com/users/JoaoLages/events{/privacy}",

"followers_url": "https://api.github.com/users/JoaoLages/followers",

"following_url": "https://api.github.com/users/JoaoLages/following{/other_user}",

"gists_url": "https://api.github.com/users/JoaoLages/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/JoaoLages",

"id": 17574157,

"login": "JoaoLages",

"node_id": "MDQ6VXNlcjE3NTc0MTU3",

"organizations_url": "https://api.github.com/users/JoaoLages/orgs",

"received_events_url": "https://api.github.com/users/JoaoLages/received_events",

"repos_url": "https://api.github.com/users/JoaoLages/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/JoaoLages/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/JoaoLages/subscriptions",

"type": "User",

"url": "https://api.github.com/users/JoaoLages"

}

|

[] |

closed

| false | null |

[] | null |

[] |

2022-02-27T10:56:31Z

|

2022-03-02T14:46:15Z

|

2022-03-02T14:46:15Z

|

CONTRIBUTOR

| null | false |

{

"diff_url": "https://github.com/huggingface/datasets/pull/3794.diff",

"html_url": "https://github.com/huggingface/datasets/pull/3794",

"merged_at": "2022-03-02T14:46:14Z",

"patch_url": "https://github.com/huggingface/datasets/pull/3794.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3794"

}

|

Mahalanobis distance is a very useful metric to measure the distance from one datapoint X to a distribution P.

In this PR I implement the metric in a simple way with the help of numpy only.

Similar to the [MAUVE implementation](https://github.com/huggingface/datasets/blob/master/metrics/mauve/mauve.py), we can make this metric accept texts as input and encode them with a featurize model, if that is desirable.
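As a rough illustration of the quantity involved, the squared Mahalanobis distance from a point x to a distribution with mean μ and covariance Σ is (x − μ)ᵀ Σ⁻¹ (x − μ). The sketch below is dependency-free and is not the code from this PR (which uses numpy); the function names are hypothetical, and it assumes the distribution is estimated from a list of reference samples with a non-singular sample covariance.

```python
from typing import List, Sequence

Vector = Sequence[float]


def mean_vector(samples: Sequence[Vector]) -> List[float]:
    """Column-wise mean of the reference samples."""
    n = len(samples)
    return [sum(col) / n for col in zip(*samples)]


def covariance_matrix(samples: Sequence[Vector], mu: Vector) -> List[List[float]]:
    """Unbiased sample covariance (divides by n - 1)."""
    n, dim = len(samples), len(mu)
    cov = [[0.0] * dim for _ in range(dim)]
    for row in samples:
        diff = [v - m for v, m in zip(row, mu)]
        for i in range(dim):
            for j in range(dim):
                cov[i][j] += diff[i] * diff[j] / (n - 1)
    return cov


def solve(a: Sequence[Vector], b: Vector) -> List[float]:
    """Solve a @ y = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("covariance matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    y = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * y[j] for j in range(i + 1, n))
        y[i] = (m[i][n] - tail) / m[i][i]
    return y


def mahalanobis_squared(x: Vector, reference: Sequence[Vector]) -> float:
    """Squared distance from x to the distribution estimated from `reference`."""
    mu = mean_vector(reference)
    diff = [v - m for v, m in zip(x, mu)]
    # Solving the linear system avoids forming the inverse covariance explicitly.
    weighted = solve(covariance_matrix(reference, mu), diff)
    return sum(d * w for d, w in zip(diff, weighted))
```

Taking the square root of the result gives the distance itself. With numpy the same computation reduces to a few lines, which is presumably why the PR relies on it.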

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3794/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/3794/timeline

| null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3793

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/3793/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/3793/comments

|

https://api.github.com/repos/huggingface/datasets/issues/3793/events

|

https://github.com/huggingface/datasets/pull/3793

| 1,150,974,950 |

PR_kwDODunzps4zfdL0

| 3,793 |

Docs new UI actions no self hosted

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/30755778?v=4",

"events_url": "https://api.github.com/users/LysandreJik/events{/privacy}",

"followers_url": "https://api.github.com/users/LysandreJik/followers",

"following_url": "https://api.github.com/users/LysandreJik/following{/other_user}",

"gists_url": "https://api.github.com/users/LysandreJik/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/LysandreJik",

"id": 30755778,

"login": "LysandreJik",

"node_id": "MDQ6VXNlcjMwNzU1Nzc4",

"organizations_url": "https://api.github.com/users/LysandreJik/orgs",

"received_events_url": "https://api.github.com/users/LysandreJik/received_events",

"repos_url": "https://api.github.com/users/LysandreJik/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/LysandreJik/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/LysandreJik/subscriptions",

"type": "User",

"url": "https://api.github.com/users/LysandreJik"

}

|

[] |

closed

| false | null |

[] | null |

[

"It seems like the doc can't be compiled right now because of the following:\r\n\r\n```\r\nTraceback (most recent call last):\r\n File \"/usr/local/bin/doc-builder\", line 33, in <module>\r\n sys.exit(load_entry_point('doc-builder', 'console_scripts', 'doc-builder')())\r\n File \"/__w/datasets/datasets/doc-builder/src/doc_builder/commands/doc_builder_cli.py\", line 39, in main\r\n args.func(args)\r\n File \"/__w/datasets/datasets/doc-builder/src/doc_builder/commands/build.py\", line 95, in build_command\r\n build_doc(\r\n File \"/__w/datasets/datasets/doc-builder/src/doc_builder/build_doc.py\", line 361, in build_doc\r\n anchors_mapping = build_mdx_files(package, doc_folder, output_dir, page_info)\r\n File \"/__w/datasets/datasets/doc-builder/src/doc_builder/build_doc.py\", line 200, in build_mdx_files\r\n raise type(e)(f\"There was an error when converting {file} to the MDX format.\\n\" + e.args[0]) from e\r\nTypeError: There was an error when converting datasets/docs/source/package_reference/table_classes.mdx to the MDX format.\r\nexpected string or bytes-like object\r\n```",

"The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/datasets/pr_3793). All of your documentation changes will be reflected on that endpoint.",

"This is due to the injection of docstrings from PyArrow. I think I can fix that by moving all the docstrings and fix them manually.",

"> It seems like the doc can't be compiled right now because of the following:\r\n\r\nit is expected since there is something I need to change on doc-builder side.\r\n\r\n> This is due to the injection of docstrings from PyArrow. I think I can fix that by moving all the docstrings and fix them manually.\r\n\r\n@lhoestq I will let you know if we need to change it manually.\r\n\r\n@LysandreJik thanks a lot for this PR! I only had one question [here](https://github.com/huggingface/datasets/pull/3793#discussion_r816100194)",

"> @lhoestq I will let you know if we need to change it manually.\r\n\r\nIt would be simpler to change it manually anyway - I don't want our documentation to break if PyArrow has documentation issues",

"For some reason it fails when `Installing node dependencies` when running `npm ci` from the `kit` directory, any idea why @mishig25 ?",

"Checking it rn",

"It's very likely linked to an OOM error: https://github.com/huggingface/transformers/pull/15710#issuecomment-1051737337"

] |

2022-02-25T23:48:55Z

|

2022-03-01T15:55:29Z

|

2022-03-01T15:55:28Z

|

MEMBER

| null | false |

{

"diff_url": "https://github.com/huggingface/datasets/pull/3793.diff",

"html_url": "https://github.com/huggingface/datasets/pull/3793",

"merged_at": "2022-03-01T15:55:28Z",

"patch_url": "https://github.com/huggingface/datasets/pull/3793.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3793"

}

|

Removes the need to have a self-hosted runner for the dev documentation

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3793/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/3793/timeline

| null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3792

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/3792/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/3792/comments

|

https://api.github.com/repos/huggingface/datasets/issues/3792/events

|

https://github.com/huggingface/datasets/issues/3792

| 1,150,812,404 |

I_kwDODunzps5EmAD0

| 3,792 |

Checksums didn't match for dataset source

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/13174842?v=4",

"events_url": "https://api.github.com/users/rafikg/events{/privacy}",

"followers_url": "https://api.github.com/users/rafikg/followers",

"following_url": "https://api.github.com/users/rafikg/following{/other_user}",

"gists_url": "https://api.github.com/users/rafikg/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/rafikg",

"id": 13174842,

"login": "rafikg",

"node_id": "MDQ6VXNlcjEzMTc0ODQy",

"organizations_url": "https://api.github.com/users/rafikg/orgs",

"received_events_url": "https://api.github.com/users/rafikg/received_events",

"repos_url": "https://api.github.com/users/rafikg/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/rafikg/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/rafikg/subscriptions",

"type": "User",

"url": "https://api.github.com/users/rafikg"

}

|

[

{

"color": "E5583E",

"default": false,

"description": "Related to the dataset viewer on huggingface.co",

"id": 3470211881,

"name": "dataset-viewer",

"node_id": "LA_kwDODunzps7O1zsp",

"url": "https://api.github.com/repos/huggingface/datasets/labels/dataset-viewer"

}

] |

closed

| false | null |

[] | null |

[

"Same issue with `dataset = load_dataset(\"dbpedia_14\")`\r\n```\r\nNonMatchingChecksumError: Checksums didn't match for dataset source files:\r\n['https://drive.google.com/uc?export=download&id=0Bz8a_Dbh9QhbQ2Vic1kxMmZZQ1k']",

"I think this is a side-effect of #3787. The checksums won't match because the URLs have changed. @rafikg @Y0mingZhang, while this is fixed, maybe you can load the datasets as such:\r\n\r\n`data = datasets.load_dataset(\"wiki_lingua\", name=language, split=\"train[:2000]\", ignore_verifications=True)`\r\n`dataset = load_dataset(\"dbpedia_14\", ignore_verifications=True)`\r\n\r\nThis will, most probably, skip the verifications and integrity checks listed [here](https://huggingface.co/docs/datasets/loading_datasets.html#integrity-verifications)",

"Hi! Installing the `datasets` package from master (`pip install git+https://github.com/huggingface/datasets.git`) and then redownloading the datasets with `download_mode` set to `force_redownload` (e.g. `dataset = load_dataset(\"dbpedia_14\", download_mode=\"force_redownload\")`) should fix the issue.",

"Hi @rafikg and @Y0mingZhang, thanks for reporting.\r\n\r\nIndeed it seems that Google Drive changed their way to access their data files. We have recently handled that change:\r\n- #3787\r\n\r\nbut it will be accessible to users only in our next release of the `datasets` version.\r\n- Note that our latest release (version 1.18.3) was made before this fix: https://github.com/huggingface/datasets/releases/tag/1.18.3\r\n\r\nIn the meantime, as @mariosasko explained, you can incorporate this \"fix\" by installing our library from the GitHub master branch:\r\n```shell\r\npip install git+https://github.com/huggingface/datasets#egg=datasets\r\n```\r\nThen, you should force the redownload of the data (before the fix, you are just downloading/caching the virus scan warning page, instead of the data file):\r\n```shell\r\ndata = datasets.load_dataset(\"wiki_lingua\", name=language, split=\"train[:2000]\", download_mode=\"force_redownload\")",

"@albertvillanova by running:\r\n```\r\npip install git+https://github.com/huggingface/datasets#egg=datasets\r\ndata = datasets.load_dataset(\"wiki_lingua\", name=language, split=\"train[:2000]\", download_mode=\"force_redownload\", ignore_verifications=True)\r\n```\r\n\r\nI had a pickle error **UnpicklingError: invalid load key, '<'** in this part of code both `locally and on google colab`:\r\n\r\n```\r\n\"\"\"Yields examples.\"\"\"\r\nwith open(filepath, \"rb\") as f:\r\n data = pickle.load(f)\r\nfor id_, row in enumerate(data.items()):\r\n yield id_, {\"url\": row[0], \"article\": self._process_article(row[1])}\r\n```\r\n",

"This issue impacts many more datasets than the ones mention in this thread. Can we post # of downloads for each dataset by day (by successes and failures)? If so, it should be obvious which ones are failing.",

"I can see this problem too in xcopa, unfortunately installing the latest master (1.18.4.dev0) doesn't work, @albertvillanova .\r\n\r\n```\r\nfrom datasets import load_dataset\r\ndataset = load_dataset(\"xcopa\", \"it\")\r\n```\r\n\r\nThrows\r\n\r\n```\r\nin verify_checksums(expected_checksums, recorded_checksums, verification_name)\r\n 38 if len(bad_urls) > 0:\r\n 39 error_msg = \"Checksums didn't match\" + for_verification_name + \":\\n\"\r\n---> 40 raise NonMatchingChecksumError(error_msg + str(bad_urls))\r\n 41 logger.info(\"All the checksums matched successfully\" + for_verification_name)\r\n 42 \r\n\r\nNonMatchingChecksumError: Checksums didn't match for dataset source files:\r\n['https://github.com/cambridgeltl/xcopa/archive/master.zip']\r\n```",

"Hi @rafikg, I think that is another different issue. Let me check it... \r\n\r\nI guess maybe you are using a different Python version that the one the dataset owner used to create the pickle file...",

"@kwchurch the datasets impacted for this specific issue are the ones which are hosted at Google Drive.",

"@afcruzs-ms I think your issue is a different one, because that dataset is not hosted at Google Drive. Would you mind open another issue for that other problem, please? Thanks! :)",

"@albertvillanova just to let you know that I tried it locally and on colab and it is the same error",

"There are many many datasets on HugggingFace that are receiving this checksum error. Some of these datasets are very popular. There must be a way to track these errors, or to do regression testing. We don't want to catch each of these errors on each dataset, one at a time.",

"@rafikg I am sorry, but I can't reproduce your issue. For me it works OK for all languages. See: https://colab.research.google.com/drive/1yIcLw1it118-TYE3ZlFmV7gJcsF6UCsH?usp=sharing",

"@kwchurch the PR #3787 fixes this issue (generated by a change in Google Drive service) for ALL datasets with this issue. Once we make our next library release (in a couple of days), the fix will be accessible to all users that update our library from PyPI.",

"By the way, @rafikg, I discovered the URL for Spanish was wrong. I've created a PR to fix it:\r\n- #3806 ",

"I have the same problem with \"wider_face\" dataset. It seems that \"load_dataset\" function can not download the dataset from google drive.\r\n",

"still getting this issue with datasets==2.2.2 for \r\ndataset_fever_original_dev = load_dataset('fever', \"v1.0\", split=\"labelled_dev\")\r\n(this one seems to be hosted by aws though)\r\n\r\nupdate: also tried to install from source to get the latest 2.2.3.dev0, but still get the error below (and also force-redownloaded)\r\n\r\nupdate2: Seems like this issues is linked to a change in the links in the specific fever datasets: https://fever.ai/\r\n\"28/04/2022\r\nDataset download URLs have changed\r\nDownload URLs for shared task data for FEVER, FEVER2.0 and FEVEROUS have been updated. New URLS begin with https://fever.ai/download/[task name]/[filename]. All resource pages have been updated with the new URLs. Previous dataset URLs may not work and should be updated if you require these in your scripts. \"\r\n\r\n=> I don't know how to update the links for HF datasets - would be great if someone could update them :) \r\n\r\n```\r\n\r\nDownloading and preparing dataset fever/v1.0 (download: 42.78 MiB, generated: 38.39 MiB, post-processed: Unknown size, total: 81.17 MiB) to /root/.cache/huggingface/datasets/fever/v1.0/1.0.0/956b0a9c4b05e126fd956be73e09da5710992b5c85c30f0e5e1c500bc6051d0a...\r\n\r\nDownloading data files: 100%\r\n6/6 [00:07<00:00, 1.21s/it]\r\nDownloading data:\r\n278/? [00:00<00:00, 2.34kB/s]\r\nDownloading data:\r\n278/? [00:00<00:00, 1.53kB/s]\r\nDownloading data:\r\n278/? [00:00<00:00, 7.43kB/s]\r\nDownloading data:\r\n278/? [00:00<00:00, 5.54kB/s]\r\nDownloading data:\r\n278/? [00:00<00:00, 6.19kB/s]\r\nDownloading data:\r\n278/? 
[00:00<00:00, 7.51kB/s]\r\nExtracting data files: 100%\r\n6/6 [00:00<00:00, 108.05it/s]\r\n\r\n---------------------------------------------------------------------------\r\n\r\nNonMatchingChecksumError Traceback (most recent call last)\r\n\r\n[<ipython-input-20-92ec5c728ecf>](https://localhost:8080/#) in <module>()\r\n 27 # get labels for fever-nli-dev from original fever - only works for dev\r\n 28 # \"(The labels for both dev and test are hidden but you can retrieve the label for dev using the cid and the original FEVER data.)\"\" https://github.com/easonnie/combine-FEVER-NSMN/blob/master/other_resources/nli_fever.md\r\n---> 29 dataset_fever_original_dev = load_dataset('fever', \"v1.0\", split=\"labelled_dev\")\r\n 30 df_fever_original_dev = pd.DataFrame(data={\"id\": dataset_fever_original_dev[\"id\"], \"label\": dataset_fever_original_dev[\"label\"], \"claim\": dataset_fever_original_dev[\"claim\"], \"evidence_id\": dataset_fever_original_dev[\"evidence_id\"]})\r\n 31 df_fever_dev = pd.merge(df_fever_dev, df_fever_original_dev, how=\"left\", left_on=\"cid\", right_on=\"id\")\r\n\r\n4 frames\r\n\r\n[/usr/local/lib/python3.7/dist-packages/datasets/utils/info_utils.py](https://localhost:8080/#) in verify_checksums(expected_checksums, recorded_checksums, verification_name)\r\n 38 if len(bad_urls) > 0:\r\n 39 error_msg = \"Checksums didn't match\" + for_verification_name + \":\\n\"\r\n---> 40 raise NonMatchingChecksumError(error_msg + str(bad_urls))\r\n 41 logger.info(\"All the checksums matched successfully\" + for_verification_name)\r\n 42 \r\n\r\nNonMatchingChecksumError: Checksums didn't match for dataset source files:\r\n['https://s3-eu-west-1.amazonaws.com/fever.public/train.jsonl', 'https://s3-eu-west-1.amazonaws.com/fever.public/shared_task_dev.jsonl', 'https://s3-eu-west-1.amazonaws.com/fever.public/shared_task_dev_public.jsonl', 'https://s3-eu-west-1.amazonaws.com/fever.public/shared_task_test.jsonl', 
'https://s3-eu-west-1.amazonaws.com/fever.public/paper_dev.jsonl', 'https://s3-eu-west-1.amazonaws.com/fever.public/paper_test.jsonl']\r\n```\r\n",

"I think this has to be fixed on the google drive side, but you also have to delete the bad stuff from your local cache. This is not a great design, but it is what it is.",

"We have fixed the issues with the datasets:\r\n- wider_face: by hosting their data files on the HuggingFace Hub (CC: @HosseynGT)\r\n- fever: by updating to their new data URLs (CC: @MoritzLaurer)",

"The yelp_review_full datasets has this problem as well and can't be fixed with the suggestion.",

"This is a super-common failure mode. We really need to find a better workaround. My solution was to wait until the owner of the dataset in question did the right thing, and then I had to delete my cached versions of the datasets with the bad checksums. I don't understand why this happens. Would it be possible to maintain a copy of the most recent version that was known to work, and roll back to that automatically if the checksums fail? And if the checksums fail, couldn't the system automatically flush the cached versions with the bad checksums? It feels like we are blaming the provider of the dataset, when in fact, there are things that the system could do to ease the pain. Let's take these error messages seriously. There are too many of them involving too many different datasets.",

"the [exams](https://huggingface.co/datasets/exams) dataset also has this issue and the provided fix above doesn't work",

"Same for [DART dataset](https://huggingface.co/datasets/dart):\r\n```\r\nNonMatchingChecksumError: Checksums didn't match for dataset source files:\r\n['https://raw.githubusercontent.com/Yale-LILY/dart/master/data/v1.1.1/dart-v1.1.1-full-train.json', 'https://raw.githubusercontent.com/Yale-LILY/dart/master/data/v1.1.1/dart-v1.1.1-full-dev.json', 'https://raw.githubusercontent.com/Yale-LILY/dart/master/data/v1.1.1/dart-v1.1.1-full-test.json']\r\n```",

"same for multi_news dataset",

"- @thesofakillers the issue with `exams` was fixed on 16 Aug by this PR:\r\n - #4853\r\n- @Aktsvigun the issue with `dart` has been transferred to the Hub: https://huggingface.co/datasets/dart/discussions/1\r\n - and fixed by PR: https://huggingface.co/datasets/dart/discussions/2\r\n- @Carol-gutianle the issue with `multi_news` have been transferred to the Hub as well: https://huggingface.co/datasets/multi_news/discussions/1\r\n - not reproducible: maybe you should try to update `datasets`\r\n\r\nFor information to everybody, we are removing the checksum verifications (that were creating a bad user experience). This will be in place in the following weeks."

] |

2022-02-25T19:55:09Z

|

2022-10-12T13:33:26Z

|

2022-02-28T08:44:18Z

|

NONE

| null | null | null |

## Dataset viewer issue for 'wiki_lingua*'

**Link:** *link to the dataset viewer page*

`data = datasets.load_dataset("wiki_lingua", name=language, split="train[:2000]")`

*short description of the issue*

```

NonMatchingChecksumError: Checksums didn't match for dataset source files:

['https://drive.google.com/uc?export=download&id=11wMGqNVSwwk6zUnDaJEgm3qT71kAHeff']

```

Am I the one who added this dataset? No

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3792/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/3792/timeline

| null |

completed

| false |

https://api.github.com/repos/huggingface/datasets/issues/3791

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/3791/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/3791/comments

|

https://api.github.com/repos/huggingface/datasets/issues/3791/events

|

https://github.com/huggingface/datasets/pull/3791

| 1,150,733,475 |

PR_kwDODunzps4zevU2

| 3,791 |

Add `data_dir` to `data_files` resolution and misc improvements to HfFileSystem

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/47462742?v=4",

"events_url": "https://api.github.com/users/mariosasko/events{/privacy}",

"followers_url": "https://api.github.com/users/mariosasko/followers",

"following_url": "https://api.github.com/users/mariosasko/following{/other_user}",

"gists_url": "https://api.github.com/users/mariosasko/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/mariosasko",

"id": 47462742,

"login": "mariosasko",

"node_id": "MDQ6VXNlcjQ3NDYyNzQy",

"organizations_url": "https://api.github.com/users/mariosasko/orgs",

"received_events_url": "https://api.github.com/users/mariosasko/received_events",

"repos_url": "https://api.github.com/users/mariosasko/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/mariosasko/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mariosasko/subscriptions",

"type": "User",

"url": "https://api.github.com/users/mariosasko"

}

|

[] |

closed

| false | null |

[] | null |

[] |

2022-02-25T18:26:35Z

|

2022-03-01T13:10:43Z

|

2022-03-01T13:10:42Z

|

CONTRIBUTOR

| null | false |

{

"diff_url": "https://github.com/huggingface/datasets/pull/3791.diff",

"html_url": "https://github.com/huggingface/datasets/pull/3791",

"merged_at": "2022-03-01T13:10:42Z",

"patch_url": "https://github.com/huggingface/datasets/pull/3791.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3791"

}

|

As discussed in https://github.com/huggingface/datasets/pull/2830#issuecomment-1048989764, this PR adds a QOL improvement to easily reference the files inside a directory in `load_dataset` using the `data_dir` param (very handy for ImageFolder because it avoids globbing, but also useful for the other loaders). Additionally, it fixes the issue with `HfFileSystem.isdir`, which would previously always return `False`, and aligns the path-handling logic in `HfFileSystem` with `fsspec.GitHubFileSystem`.

|

{

"+1": 2,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 2,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3791/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/3791/timeline

| null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3790

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/3790/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/3790/comments

|

https://api.github.com/repos/huggingface/datasets/issues/3790/events

|

https://github.com/huggingface/datasets/pull/3790

| 1,150,646,899 |

PR_kwDODunzps4zedMa

| 3,790 |

Add doc builder scripts

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/lhoestq",

"id": 42851186,

"login": "lhoestq",

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"type": "User",

"url": "https://api.github.com/users/lhoestq"

}

|

[] |

closed

| false | null |

[] | null |

[

"I think we're only missing the hosted runner to be configured for this repository and we should be good",

"Regarding the self-hosted runner, I actually encourage using the approach defined here: https://github.com/huggingface/transformers/pull/15710, which doesn't leverage a self-hosted runner. This prevents queuing jobs, which is important when we expect several concurrent jobs.",

"Opened a PR for that on your branch here: https://github.com/huggingface/datasets/pull/3793"

] |

2022-02-25T16:38:47Z

|

2022-03-01T15:55:42Z

|

2022-03-01T15:55:41Z

|

MEMBER

| null | false |

{

"diff_url": "https://github.com/huggingface/datasets/pull/3790.diff",

"html_url": "https://github.com/huggingface/datasets/pull/3790",

"merged_at": "2022-03-01T15:55:41Z",

"patch_url": "https://github.com/huggingface/datasets/pull/3790.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3790"

}

|

I added the three scripts:

- build_dev_documentation.yml

- build_documentation.yml

- delete_dev_documentation.yml

I got them from `transformers` and made a few changes:

- I removed the `transformers`-specific dependencies

- I changed all the paths to be "datasets" instead of "transformers"

- I passed the `--library_name datasets` arg to the `doc-builder build` command (according to https://github.com/huggingface/doc-builder/pull/94/files#diff-bcc33cf7c223511e498776684a9a433810b527a0a38f483b1487e8a42b6575d3R26)

cc @LysandreJik @mishig25

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3790/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/3790/timeline

| null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3789

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/3789/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/3789/comments

|

https://api.github.com/repos/huggingface/datasets/issues/3789/events

|

https://github.com/huggingface/datasets/pull/3789

| 1,150,587,404 |

PR_kwDODunzps4zeQpx

| 3,789 |

Add URL and ID fields to Wikipedia dataset

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova"

}

|

[] |

closed

| false | null |

[] | null |

[

"Do you think we have a dedicated branch for all the changes we want to do to wikipedia ? Then once everything looks good + we have preprocessed the main languages, we can merge it on the `master` branch",

"Yes, @lhoestq, I agree with you.\r\n\r\nI have just created the dedicated branch [`update-wikipedia`](https://github.com/huggingface/datasets/tree/update-wikipedia). We can merge every PR (once validated) to that branch; once all changes are merged to that branch, we could create the preprocessed datasets and then merge the branch to master. ",

"@lhoestq I guess you approve this PR?"

] |

2022-02-25T15:34:37Z

|

2022-03-04T08:24:24Z

|

2022-03-04T08:24:23Z

|

MEMBER

| null | false |

{

"diff_url": "https://github.com/huggingface/datasets/pull/3789.diff",

"html_url": "https://github.com/huggingface/datasets/pull/3789",

"merged_at": "2022-03-04T08:24:23Z",

"patch_url": "https://github.com/huggingface/datasets/pull/3789.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3789"

}

|

This PR adds the URL field, so that we conform to proper attribution, required by their license: provide credit to the authors by including a hyperlink (where possible) or URL to the page or pages you are re-using.

About the conversion from title to URL, I found that apart from replacing blanks with underscores, some other special characters must also be percent-encoded (e.g. `"` to `%22`): https://meta.wikimedia.org/wiki/Help:URL

Therefore, I ended up using the `urllib.parse.quote` function. This additionally percent-encodes non-ASCII characters, but Wikimedia docs say these are equivalent:

> For the other characters either the code or the character can be used in internal and external links, they are equivalent. The system does a conversion when needed.

> [[%C3%80_propos_de_M%C3%A9ta]]

> is rendered as [À_propos_de_Méta](https://meta.wikimedia.org/wiki/%C3%80_propos_de_M%C3%A9ta), almost like [À propos de Méta](https://meta.wikimedia.org/wiki/%C3%80_propos_de_M%C3%A9ta), which leads to this page on Meta with in the address bar the URL

> [http://meta.wikipedia.org/wiki/%C3%80_propos_de_M%C3%A9ta](https://meta.wikipedia.org/wiki/%C3%80_propos_de_M%C3%A9ta)

> while [http://meta.wikipedia.org/wiki/À_propos_de_Méta](https://meta.wikipedia.org/wiki/%C3%80_propos_de_M%C3%A9ta) leads to the same.

Fix #3398.
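
The title-to-URL conversion described above can be sketched roughly as follows. This is only an illustration, not the code from this PR: the helper name `title_to_url`, its `lang` parameter, and the `https://{lang}.wikipedia.org/wiki/` base URL are assumptions made for the example.

```python
from urllib.parse import quote


def title_to_url(title: str, lang: str = "en") -> str:
    """Hypothetical helper: build a page URL from an article title."""
    # Page URLs use underscores where the title has blanks.
    path = title.replace(" ", "_")
    # quote() percent-encodes special characters (e.g. '"' -> %22) and
    # non-ASCII characters (as UTF-8 bytes); "_" is left untouched.
    # The base URL below is an assumption for illustration only.
    return f"https://{lang}.wikipedia.org/wiki/{quote(path)}"


print(title_to_url("À propos de Méta"))
```

With the example title from the Wikimedia docs quoted above, the path component should come out as `%C3%80_propos_de_M%C3%A9ta`.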

CC: @geohci

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3789/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/3789/timeline

| null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3788

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/3788/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/3788/comments

|

https://api.github.com/repos/huggingface/datasets/issues/3788/events

|

https://github.com/huggingface/datasets/issues/3788

| 1,150,375,720 |

I_kwDODunzps5EkVco

| 3,788 |

Only-data dataset loaded unexpectedly as validation split

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova"

}

|

[

{

"color": "d73a4a",

"default": true,

"description": "Something isn't working",

"id": 1935892857,

"name": "bug",

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug"

}

] |

open

| false | null |

[] | null |

[

"I see two options:\r\n1. drop the \"dev\" keyword since it can be considered too generic\r\n2. improve the pattern to something more reasonable, e.g. asking for a separator before and after \"dev\"\r\n```python\r\n[\"*[ ._-]dev[ ._-]*\", \"dev[ ._-]*\"]\r\n```\r\n\r\nI think 2. is nice. If we agree on this one we can even decide to require the separation for the other split keywords \"train\", \"test\" etc.",

"Yes, I had something like that on mind: \"dev\" not being part of a word.\r\n```\r\n\"[^a-zA-Z]dev[^a-zA-Z]\"",

"Is there a reason why we want that regex? It feels like something that'll still be an issue for some weird case. \"my_dataset_dev\" doesn't match your regex, \"my_dataset_validation\" doesn't either ... Why not always \"train\" unless specified?",

"The regex is needed as part of our effort to make datasets configurable without code. In particular we define some generic dataset repository structures that users can follow\r\n\r\n> ```\r\n> \"[^a-zA-Z]*dev[^a-zA-Z]*\"\r\n> ```\r\n\r\nunfortunately our glob doesn't support \"^\": \r\n\r\nhttps://github.com/fsspec/filesystem_spec/blob/3e739db7e53f5b408319dcc9d11e92bc1f938902/fsspec/spec.py#L465-L479",

"> \"my_dataset_dev\" doesn't match your regex, \"my_dataset_validation\" doesn't either ... Why not always \"train\" unless specified?\r\n\r\nAnd `my_dataset_dev.foo` would match the pattern, and we also have the same pattern but for the \"validation\" keyword so `my_dataset_validation.foo` would work too",

"> The regex is needed as part of our effort to make datasets configurable without code\r\n\r\nThis feels like coding with the filename ^^'",

"This is still much easier than having to write a full dataset script right ? :p"

] |

2022-02-25T12:11:39Z

|

2022-02-28T11:22:22Z

| null |

MEMBER

| null | null | null |

## Describe the bug

As reported by @thomasw21 and @lhoestq, a dataset containing only a data file whose name matches the pattern `*dev*` will be returned as the VALIDATION split, even if this is not the desired behavior, e.g. a file named `datosdevision.jsonl.gz`.
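
To make the mismatch concrete, here is a small sketch comparing the loose `*dev*` pattern with the separator-based patterns proposed in the comments. It uses Python's standard `fnmatch` module purely as a stand-in; the library's actual file resolution goes through its own glob implementation, so this is an approximation, and `matches_any` and the two pattern lists are names invented for the example.

```python
from fnmatch import fnmatchcase

# Loose keyword pattern reported in this issue.
LOOSE = ["*dev*"]
# Separator-based patterns suggested in the discussion.
STRICT = ["*[ ._-]dev[ ._-]*", "dev[ ._-]*"]


def matches_any(filename, patterns):
    """Hypothetical helper: True if the file name matches any glob pattern."""
    return any(fnmatchcase(filename, p) for p in patterns)


for name in ["datosdevision.jsonl.gz", "my_dataset_dev.jsonl", "dev-00000.jsonl"]:
    print(name, matches_any(name, LOOSE), matches_any(name, STRICT))
```

Under these assumptions, `datosdevision.jsonl.gz` matches only the loose pattern, while the two file names that use "dev" as a separate token match both.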

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3788/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/3788/timeline

| null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/3787

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/3787/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/3787/comments

|

https://api.github.com/repos/huggingface/datasets/issues/3787/events

|

https://github.com/huggingface/datasets/pull/3787

| 1,150,235,569 |

PR_kwDODunzps4zdE7b

| 3,787 |

Fix Google Drive URL to avoid Virus scan warning

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova"

}

|

[] |

closed

| false | null |

[] | null |

[

"Thanks for this @albertvillanova!",

"Once this PR merged into master and until our next `datasets` library release, you can get this fix by installing our library from the GitHub master branch:\r\n```shell\r\npip install git+https://github.com/huggingface/datasets#egg=datasets\r\n```\r\nThen, if you had previously tried to load the data and got the checksum error, you should force the redownload of the data (before the fix, you just downloaded and cached the virus scan warning page, instead of the data file):\r\n```shell\r\nload_dataset(\"...\", download_mode=\"force_redownload\")\r\n```",

"Thanks, that solved a bunch of problems we had downstream!\r\ncf. https://github.com/ElementAI/picard/issues/61"

] |

2022-02-25T09:35:12Z

|

2022-03-04T20:43:32Z

|

2022-02-25T11:56:35Z

|

MEMBER

| null | false |

{

"diff_url": "https://github.com/huggingface/datasets/pull/3787.diff",

"html_url": "https://github.com/huggingface/datasets/pull/3787",

"merged_at": "2022-02-25T11:56:35Z",

"patch_url": "https://github.com/huggingface/datasets/pull/3787.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3787"

}

|

This PR fixes, in the datasets library instead of in every specific dataset, the issue of downloading the Virus scan warning page instead of the actual data file for Google Drive URLs.

Fix #3786, fix #3784.

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 1,

"laugh": 0,

"rocket": 0,

"total_count": 1,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3787/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/3787/timeline

| null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3786

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/3786/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/3786/comments

|

https://api.github.com/repos/huggingface/datasets/issues/3786/events

|

https://github.com/huggingface/datasets/issues/3786

| 1,150,233,067 |

I_kwDODunzps5Ejynr

| 3,786 |

Bug downloading Virus scan warning page from Google Drive URLs

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova"

}

|

[

{

"color": "d73a4a",

"default": true,

"description": "Something isn't working",

"id": 1935892857,

"name": "bug",

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug"

}

] |

closed

| false |

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova"

}

|

[

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova"

}

] | null |

[

"Once the PR merged into master and until our next `datasets` library release, you can get this fix by installing our library from the GitHub master branch:\r\n```shell\r\npip install git+https://github.com/huggingface/datasets#egg=datasets\r\n```\r\nThen, if you had previously tried to load the data and got the checksum error, you should force the redownload of the data (before the fix, you just downloaded and cached the virus scan warning page, instead of the data file):\r\n```shell\r\nload_dataset(\"...\", download_mode=\"force_redownload\")\r\n```"

] |

2022-02-25T09:32:23Z

|

2022-03-03T09:25:59Z

|

2022-02-25T11:56:35Z

|

MEMBER

| null | null | null |

## Describe the bug

Recently, some issues were reported with URLs from Google Drive, where we were downloading the Virus scan warning page instead of the data file itself.

See:

- #3758

- #3773

- #3784
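
For anyone checking whether a previously downloaded file is affected, a rough sketch of a local check is shown below. It is not part of the `datasets` library: `looks_like_html` and `probe_size` are hypothetical names, and the heuristic simply assumes that a warning page starts with an HTML doctype or `<html` tag, whereas a real data file (pickle, archive, JSON lines) does not.

```python
def looks_like_html(path, probe_size=512):
    """Hypothetical check: does the file start like an HTML page?"""
    # Read only the first bytes; data files can be large.
    with open(path, "rb") as f:
        head = f.read(probe_size).lstrip().lower()
    # Assumption: a warning page begins with a doctype or <html> tag.
    return head.startswith((b"<!doctype html", b"<html"))
```

If such a check returns `True` for a file that should contain data, forcing a redownload after upgrading is the remedy described in the comments on this issue.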

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3786/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/3786/timeline

| null |

completed

| false |

https://api.github.com/repos/huggingface/datasets/issues/3785

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/3785/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/3785/comments

|

https://api.github.com/repos/huggingface/datasets/issues/3785/events

|

https://github.com/huggingface/datasets/pull/3785

| 1,150,069,801 |

PR_kwDODunzps4zciES

| 3,785 |

Fix: Bypass Virus Checks in Google Drive Links (CNN-DM dataset)

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/58678541?v=4",

"events_url": "https://api.github.com/users/AngadSethi/events{/privacy}",

"followers_url": "https://api.github.com/users/AngadSethi/followers",

"following_url": "https://api.github.com/users/AngadSethi/following{/other_user}",

"gists_url": "https://api.github.com/users/AngadSethi/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/AngadSethi",

"id": 58678541,

"login": "AngadSethi",

"node_id": "MDQ6VXNlcjU4Njc4NTQx",

"organizations_url": "https://api.github.com/users/AngadSethi/orgs",

"received_events_url": "https://api.github.com/users/AngadSethi/received_events",

"repos_url": "https://api.github.com/users/AngadSethi/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/AngadSethi/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/AngadSethi/subscriptions",

"type": "User",

"url": "https://api.github.com/users/AngadSethi"

}

|

[] |

closed

| false | null |

[] | null |

[

"Thank you, @albertvillanova!",

"Got it. Thanks for explaining this, @albertvillanova!\r\n\r\n> On the other hand, the tests are not passing because the dummy data should also be fixed. Once done, this PR will be able to be merged into master.\r\n\r\nWill do this 👍",

"Hi ! I think we need to fix the issue for every dataset. This can be done simply by fixing how we handle Google Drive links, see my comment https://github.com/huggingface/datasets/pull/3775#issuecomment-1050970157",

"Hi @lhoestq! I think @albertvillanova has already fixed this in #3787",

"Cool ! I missed this one :) thanks",

"No problem!",

"Hi, @AngadSethi, I think that once:\r\n- #3787 \r\n\r\nwas merged, issue:\r\n- #3784 \r\n\r\nwas also fixed.\r\n\r\nTherefore, I think this PR is no longer necessary. I'm closing it. Let me know if you agree.",

"Yes, absolutely @albertvillanova! I agree :)"

] |

2022-02-25T05:48:57Z

|

2022-03-03T16:43:47Z

|

2022-03-03T14:03:37Z

|

NONE

| null | false |

{

"diff_url": "https://github.com/huggingface/datasets/pull/3785.diff",

"html_url": "https://github.com/huggingface/datasets/pull/3785",

"merged_at": null,

"patch_url": "https://github.com/huggingface/datasets/pull/3785.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3785"

}

|

This commit fixes the issue described in #3784. Appending an extra query parameter, `confirm=t`, to Google Drive links bypasses the virus check, so the datasets can be downloaded.

Original link: https://drive.google.com/uc?export=download&id=0BwmD_VLjROrfTHk4NFg2SndKcjQ

New link: https://drive.google.com/uc?export=download&id=0BwmD_VLjROrfTHk4NFg2SndKcjQ&confirm=t

Fixes #3784
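
The URL change described in this PR can be sketched as a small helper. This is only an illustration of the idea, not the code from this PR or from #3787: the function name `add_drive_confirm_param` and the host check are assumptions made for the example.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_drive_confirm_param(url: str) -> str:
    """Return `url` with `confirm=t` appended if it is a Google Drive link.

    Hypothetical helper for illustration; not part of the `datasets` API.
    """
    parts = urlsplit(url)
    # Leave non-Google-Drive URLs untouched.
    if parts.hostname != "drive.google.com":
        return url
    # Keep the existing query parameters in their original order.
    query = dict(parse_qsl(parts.query, keep_blank_values=True))
    # Do not override a `confirm` value that is already present.
    query.setdefault("confirm", "t")
    return urlunsplit(parts._replace(query=urlencode(query)))


original = "https://drive.google.com/uc?export=download&id=0BwmD_VLjROrfTHk4NFg2SndKcjQ"
print(add_drive_confirm_param(original))
```

Parsing the query string instead of concatenating `&confirm=t` avoids adding the parameter twice and also handles links that have no query string yet.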

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/3785/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/3785/timeline

| null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3784

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/3784/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/3784/comments

|

https://api.github.com/repos/huggingface/datasets/issues/3784/events

|

https://github.com/huggingface/datasets/issues/3784

| 1,150,057,955 |

I_kwDODunzps5EjH3j

| 3,784 |

Unable to Download CNN-Dailymail Dataset

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/58678541?v=4",

"events_url": "https://api.github.com/users/AngadSethi/events{/privacy}",

"followers_url": "https://api.github.com/users/AngadSethi/followers",

"following_url": "https://api.github.com/users/AngadSethi/following{/other_user}",

"gists_url": "https://api.github.com/users/AngadSethi/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/AngadSethi",

"id": 58678541,

"login": "AngadSethi",

"node_id": "MDQ6VXNlcjU4Njc4NTQx",

"organizations_url": "https://api.github.com/users/AngadSethi/orgs",

"received_events_url": "https://api.github.com/users/AngadSethi/received_events",

"repos_url": "https://api.github.com/users/AngadSethi/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/AngadSethi/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/AngadSethi/subscriptions",

"type": "User",

"url": "https://api.github.com/users/AngadSethi"

}

|

[

{

"color": "d73a4a",

"default": true,

"description": "Something isn't working",

"id": 1935892857,

"name": "bug",

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug"

}

] |

closed

| false |

{

"avatar_url": "https://avatars.githubusercontent.com/u/58678541?v=4",

"events_url": "https://api.github.com/users/AngadSethi/events{/privacy}",

"followers_url": "https://api.github.com/users/AngadSethi/followers",

"following_url": "https://api.github.com/users/AngadSethi/following{/other_user}",

"gists_url": "https://api.github.com/users/AngadSethi/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/AngadSethi",

"id": 58678541,

"login": "AngadSethi",

"node_id": "MDQ6VXNlcjU4Njc4NTQx",

"organizations_url": "https://api.github.com/users/AngadSethi/orgs",

"received_events_url": "https://api.github.com/users/AngadSethi/received_events",

"repos_url": "https://api.github.com/users/AngadSethi/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/AngadSethi/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/AngadSethi/subscriptions",

"type": "User",

"url": "https://api.github.com/users/AngadSethi"

}

|

[

{

"avatar_url": "https://avatars.githubusercontent.com/u/58678541?v=4",

"events_url": "https://api.github.com/users/AngadSethi/events{/privacy}",

"followers_url": "https://api.github.com/users/AngadSethi/followers",

"following_url": "https://api.github.com/users/AngadSethi/following{/other_user}",

"gists_url": "https://api.github.com/users/AngadSethi/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/AngadSethi",

"id": 58678541,

"login": "AngadSethi",

"node_id": "MDQ6VXNlcjU4Njc4NTQx",

"organizations_url": "https://api.github.com/users/AngadSethi/orgs",

"received_events_url": "https://api.github.com/users/AngadSethi/received_events",

"repos_url": "https://api.github.com/users/AngadSethi/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/AngadSethi/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/AngadSethi/subscriptions",

"type": "User",

"url": "https://api.github.com/users/AngadSethi"

}

] | null |

[

"#self-assign",

"@AngadSethi thanks for reporting and thanks for your PR!",

"Glad to help @albertvillanova! Just fine-tuning the PR, will comment once I am able to get it up and running 😀",

"Fixed by:\r\n- #3787"

] |

2022-02-25T05:24:47Z

|

2022-03-03T14:05:17Z

|