Poverty, vulnerability, and the middle class in Latin America

Marco Stampini, Marcos Robles, Mayra Sáenz, Pablo Ibarrarán & Nadin Medellín

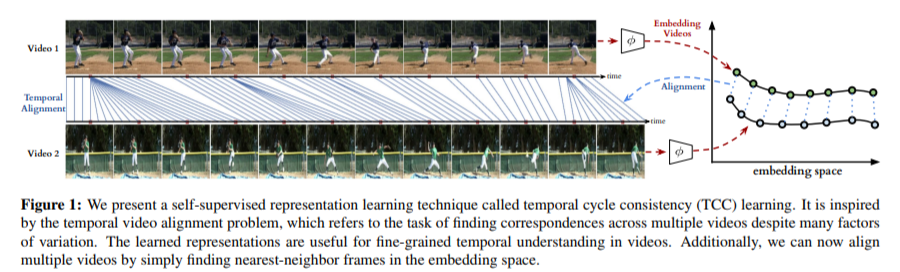

Between 2000 and 2013, Latin America considerably reduced poverty (from 46.3 to 29.7 % of the population). In this paper, we use synthetic panels to show that, despite progress, the region remains characterized by substantial vulnerability that also affects the rising middle class. More specifically, we find that 65 % of those with daily income between $4 and 10, and 14 % of those in the middle class, experience poverty at least once over a 10-year period. Furthermore, chronic poverty remains widespread (representing 91 and 50 % of extreme and moderate poverty, respectively). Differences between rural and urban areas are substantial. Urban areas, which are now home to most of the moderate poor and vulnerable, are characterized by higher income mobility, particularly upward mobility. These findings have important implications for the design of effective social safety nets. Such safety nets need to mix long-term interventions for the chronic poor, especially in rural areas, with flexible short-term support to a large group of transient poor and vulnerable, particularly in urban areas.

In recent years, Latin America has made remarkable progress in the reduction of poverty and inequality. Between 2000 and 2013, the percentage of the population living on less than $2.5 per capita per day decreased from 28.8 to 15.9 %, while the share of the population living on less than $4 dropped from 46.3 to 29.7 %. Over the same period, the region has also managed to reduce its unfortunately distinctive inequality: the Gini coefficient of the income distribution fell from 0.57 to 0.51.

These improvements were largely driven by sustained economic growth, which led to an expansion of the middle class. However, despite these positive trends, the region is still home to 92 million extreme poor and 77 million moderate poor. In addition, most of those that exited poverty joined the vulnerable class and are still at substantial risk of falling into poverty (Fig. 1).

Fig. 1 Income distribution in Latin America (2000–2013), region aggregate

The trends in the incidence and depth of poverty, however, do not fully capture poverty dynamics, i.e., its duration and how often families enter and exit poverty. This information is very important for the design of effective social safety nets, particularly as far as targeting and recertification are concerned. Frequent movements in and out of poverty imply the need for flexible safety net entry and exit rules.

The analysis of poverty dynamics and income mobility in developing countries has received relatively limited attention, largely due to the lack of adequate longitudinal data. Recently, Ferreira et al. (2013) and Vakis et al. (2015) have analyzed intra-generational mobility in Latin America, with a focus, respectively, on the middle class and the chronic poor. Their analysis is based on the synthetic panel methodology developed by Dang et al. (2014), which is the same one we employ in this paper. The two works construct two-period transition matrices [1995–2010 in Ferreira et al. (2013), 2004–2012 in Vakis et al. (2015)] and define the chronic poor as those that were poor in both years. Their analysis only captures mobility from the first period to the last period, and not yearly mobility in between the two. Consequently, it depicts the vulnerable and the middle class as consolidated in their position (with a low probability of experiencing poverty).

In this paper, we generate 10-year synthetic panels for a large sample of Latin American countries, and use them to estimate yearly movements in and out of poverty from 2003 to 2013. We provide a novel classification of households based on poverty duration, which distinguishes chronic poor, transient poor, future poor, and never poor. The future poor include those that initially belonged to the vulnerable, middle, and high-income classes, and experienced poverty at any time over the following decade.

We find that 65 % of the vulnerable (i.e., those with daily income between $4 and 10), and 14 % of those in the middle class (with daily income between $10 and 50) of 2003, experienced poverty at least once during the period 2004–13. At the same time, chronic poverty remains widespread, accounting for 91 and 50 % of extreme and moderate poverty, respectively. Differences between rural and urban areas are substantial. Urban areas, which are now home to most moderate poor and vulnerable, are characterized by higher (particularly upward) income mobility.

The remainder of the paper is organized as follows. Section 2 defines poverty and vulnerability, and describes the data and the methodology employed for constructing synthetic panels and forecasting poverty dynamics. Section 3 presents the trends in poverty reduction and shows that the Latin American region is highly heterogeneous in the stage and speed of the socioeconomic transition towards the middle class. Section 4 analyzes poverty dynamics, including transition matrices and poverty duration, and discusses household characteristics of the chronic and transient poor. Section 5 highlights the main differences between urban and rural poverty. Section 6 concludes by summarizing key findings and policy implications. Throughout the paper, we discuss the policy implications of the findings for the design and implementation of social safety nets, with a particular focus on the targeting and recertification processes.

Six annexes provide additional information on data, methods, sensitivity analyses, and country results. Annex 1 lists the data sources. Annex 2 summarizes the existing literature comparing results from genuine panel data with non-parametric, parametric, and point estimate synthetic-panel methods. Annex 3 shows the bounds of selected estimates, providing a visual representation of the quality of our results. Annex 4 presents sensitivity analyses of key chronic poverty results to the adoption of alternative poverty lines. Annex 5 shows that the variation in the share of urban population is limited between 2003 and 2013, which is necessary for the validity of the rural/urban breakdown of the dynamic analyses based on the pseudo panel methodology. Finally, Annex 6 presents country-specific poverty profiles.

Data and methodology

Non-technical readers can skip this section with no prejudice to their ability to understand the rest of the paper.

We look at poverty through two lenses, one that focuses on depth and the other on duration. The former is static, and analyzes a picture of poverty through the value of daily per-capita income (expressed in 2005 dollars adjusted to reflect purchasing power parity). It divides the population into five groups: (i) the extreme poor, with income below $2.5; (ii) the moderate poor, between $2.5 and 4; (iii) the vulnerable, between $4 and 10; (iv) the middle class, between $10 and 50 [as in López-Calva and Ortiz-Juárez (2011)]; and (v) the high-income class, above $50.

The $2.5 line corresponds to the median of the official extreme poverty lines in Latin American countries (CEDLAS and World Bank 2012), and has already been used in regional studies (World Bank 2014). It is higher than the international extreme poverty line of $1.25 used by Ravallion et al. (2008), which corresponds to the mean of the official extreme poverty lines of the 15 poorest countries in the world. The use of a higher line reflects the relatively more advanced stage of socioeconomic development (and the higher price levels) of the Latin American region. Similar considerations hold for the $4 poverty line. The vulnerable class is defined by López-Calva and Ortiz-Juárez (2011), as having a per capita daily income between $4 and 10, which is empirically observed to imply a probability greater than 10 % of falling into poverty.
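The five income classes defined above can be sketched as a simple threshold rule. This is a minimal illustration rather than the authors' code: the function name `income_class` is hypothetical, and the treatment of incomes that fall exactly on a threshold is an assumption, since the text only states "below", "between", and "above".

```python
def income_class(daily_income_ppp: float) -> str:
    """Map daily per-capita income (2005 PPP dollars) to one of five classes.

    Assumption: an income exactly on a threshold goes to the higher class,
    except at 50, which stays in the middle class because the high-income
    class is defined as strictly above 50.
    """
    if daily_income_ppp < 2.5:
        return "extreme poor"
    if daily_income_ppp < 4:
        return "moderate poor"
    if daily_income_ppp < 10:
        return "vulnerable"
    if daily_income_ppp <= 50:
        return "middle class"
    return "high income"


# Hypothetical incomes, for illustration only.
classes = [income_class(v) for v in (1.0, 3.0, 7.0, 20.0, 60.0)]
```
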

The second lens is dynamic and focuses on the duration of poverty. It divides the population into four groups: (i) the chronic poor, who are poor (either extreme or moderate) in the first year of analysis, and in five or more years over the following decade; (ii) the transient poor, who are poor in the first year, and again in four or fewer years over the following decade; (iii) the future poor, who are either vulnerable, middle class, or high income in the first year of analysis, but experience poverty in at least 1 year during the following 10 years; (iv) the never poor, who are always above the $4 poverty line.

Conditioning the definition of chronic and transient poverty on being poor in the first year guarantees that the sum of extreme and moderate poverty equals the sum of chronic and transient poverty. In other words, the incidence of poverty does not change, no matter if one looks at it through the lenses of depth or through those of duration.
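The duration-based classification can be sketched as follows. This is an illustrative reading of the four definitions, not the authors' implementation: the function name `duration_class` and the input layout (one income for the first year followed by ten yearly incomes) are assumptions.

```python
POVERTY_LINE = 4.0  # daily per-capita income, 2005 PPP dollars


def duration_class(incomes):
    """Classify a household from 11 yearly incomes (year 0, then 10 years)."""
    if len(incomes) != 11:
        raise ValueError("expected the first year plus the following 10 years")
    poor_in_first_year = incomes[0] < POVERTY_LINE
    # Number of poor years over the following decade (first year excluded).
    poor_years_after = sum(y < POVERTY_LINE for y in incomes[1:])
    if poor_in_first_year:
        # Chronic: five or more poor years; transient: four or fewer.
        return "chronic poor" if poor_years_after >= 5 else "transient poor"
    # Not poor in the first year: future poor if poor at least once later.
    return "future poor" if poor_years_after >= 1 else "never poor"


# Hypothetical income paths, for illustration only.
always_poor = [3.0] * 11
late_riser = [3.0] * 7 + [5.0] * 4
one_bad_year = [6.0, 3.5] + [6.0] * 9
```

Because the first two categories are both conditioned on being poor in the first year, every household that is poor in year 0 ends up in exactly one of them, which is why the chronic and transient shares add up to the initial poverty headcount.
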

The analysis focusing on the static definition of poverty is based on observed micro-data from 216 cross-sectional household surveys collected between 2000 and 2013 in 18 Latin American countries (see Annex 1). These data are from IDB's Harmonized Data Bank of Household Surveys from Latin America and the Caribbean (also known as IDB's Sociometro). Regional estimates of the incidence of poverty are obtained by imputing missing values for years with no survey, then calculating population-weighted averages of country estimates.
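The population-weighted averaging step can be illustrated with a short sketch. The function name, country labels, and numbers below are hypothetical, and the imputation of missing survey years is left out because the text does not specify the method used.

```python
def population_weighted_average(rates, populations):
    """Average country poverty rates, weighting each country by population."""
    total_population = sum(populations[c] for c in rates)
    weighted_sum = sum(rates[c] * populations[c] for c in rates)
    return weighted_sum / total_population


# Hypothetical countries and values, for illustration only.
rates = {"A": 0.20, "B": 0.40}         # poverty headcount ratios
populations = {"A": 30.0, "B": 10.0}   # millions of people
regional_rate = population_weighted_average(rates, populations)
```
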

The data are representative both at the national level and at the urban–rural level, with the exception of Argentina, Uruguay, and Venezuela. In Argentina, the household survey is only urban. In Uruguay, rural areas have been surveyed only since 2006; we restrict the analysis to urban areas to ensure comparability over the period 2000–2013. In Venezuela, since 2004, the survey does not contain a variable that allows separating rural and urban areas.

Given the unavailability of real-panel data sets, in which the same households are surveyed across time, the analysis of poverty duration is based on the construction of synthetic panels à la Dang et al. (2014). This method was originally designed to analyze transitions in and out of poverty based on two (or more) rounds of cross-sectional data. In addition, the literature, so far, has focused on proving the reliability of the methodology (Cruces et al. 2011; Fields and Viollaz 2013; Haynes et al. 2013). Our objective is slightly different, as we aim to investigate poverty duration, or more specifically, the number of years in which a family has been poor over a decade. For this purpose, we calculate yearly point estimates of per-capita income based on yearly cross-sectional data.

The sample is made up of families surveyed in the first year (t = 0). For each of the following 10 years (s = 1, …, 10), we estimate per-capita income using time-invariant variables observed in t = 0, coefficients estimated in t = s, and empirical residuals. The methodology assumes a linear structure of the income equation, and is based on the following two assumptions: (i) households do not change, which ensures that time-invariant variables observed in t = 0 can be used to estimate income in t = s, and (ii) the correlation of the error terms across time (\(\varepsilon_{t = 0}\) and \(\varepsilon_{t = s}\)) is not negative. The second assumption is reasonable, given that income shocks show persistence over time, and factors leading to a negative correlation of income over time are unlikely to apply to all households at the same time. The methodology requires estimating the following equations:

$$y_{i,t} = \beta^{\prime}_{t} x_{i,t} + \varepsilon_{i,t} \quad {\text{for }}t = 0, 1, \ldots, 10 \tag{1}$$

i.e., for the first period and for the following 10 years, where \(y_{i,t}\) is the logarithm of household i's per-capita income at time t, and \(x_{i,t}\) is a vector of variables measuring household i's characteristics at time t. Our specification of the model includes variables typically employed in the literature (Dang et al. 2014; Cruces et al. 2011), plus statistically significant variables at the regional level. The vector x contains the following variables:

Household head characteristics: sex, age, age squared, years of schooling, years of schooling squared, and agricultural work;

Region (first-level administrative country subdivision) characteristics: average years of schooling of the household heads, and proportion of workers in agriculture;

Geographic controls: rural–urban residence;

Retrospective regressors at regional level in the initial year (2003): inequality (standard deviation of log income), extreme poverty headcount ($2.5 a day), average per capita income, average household size, and average years of schooling of the household heads.

The regressions produce 11 estimates of vectors β and ε (\(\hat{\beta }_{t}\) and \(\hat{\varepsilon }_{t}\), one for each time period). They also produce 11 estimates of the error term variance (\(\hat{\sigma }_{{\varepsilon_{t} }}\)). These parameters are used to produce the synthetic-panel estimates of yearly per-capita income.

Following Cruces et al. (2011), Fields and Viollaz (2013), and Haynes et al. (2013), we use the "non-parametric" version of the method, i.e., we make no assumptions on the structural form of the joint distribution of the error terms. Two extreme assumptions on the non-parametric time correlation of the error terms lead to a lower and an upper bound estimate of per-capita income mobility. At one extreme, one can assume zero correlation between \(\varepsilon_{t = 0}\) and \(\varepsilon_{t = s}\), i.e., that the two error terms are independent of each other. The logarithm of per capita income of household i in t = s (\(\hat{y}_{i,t = s}^{U}\)) is estimated as follows:

$$\hat{y}_{i,t = s}^{U} = \hat{\beta }_{t = s}^{'} x_{i,t = 0} + \hat{\tilde{\varepsilon }}_{i,t = s} \tag{2}$$

where the superscript U indicates uncorrelated error terms, and \(\hat{\tilde{\varepsilon }}_{i,t = s}\) is the mean of 50 random draws (with replacement) from the vector of estimated residuals in t = s. At the other extreme, one can assume perfect correlation between \(\varepsilon_{t = 0}\) and \(\varepsilon_{t = s}\). Under this assumption, the logarithm of per capita income of household i in t = s (\(\hat{y}_{i,t = s}^{C}\)) is estimated as follows:

$$\hat{y}_{i,t = s}^{C} = \hat{\beta }_{t = s}^{'} x_{i,t = 0} + \gamma \hat{\varepsilon }_{i,t = 0} \tag{3}$$

where the superscript C indicates correlated error terms, \(\hat{\varepsilon }_{i,t = 0}\) is household i's (time-invariant) empirical error term estimated in t = 0, and \(\gamma = \hat{\sigma }_{{\varepsilon_{t = 0} }} /\hat{\sigma }_{{\varepsilon_{t = s} }}\) is a scale factor.

Dang et al. (2014) and Cruces et al. (2011) show that: (i) Eq. (2) produces upper-bound estimates of income mobility, due to the high variation in the error term, and overestimates people's movements in and out of poverty; (ii) Eq. (3) produces lower-bound estimates of income mobility, due to the constant error term, and underestimates poverty transitions; and (iii) the average of (2) and (3) approximates well the observed income mobility, providing a satisfactory estimation of movements in and out of poverty. This last point is shown empirically by comparing synthetic-panel estimates of mobility with those observed in genuine panel data. We, therefore, calculate point estimates of per-capita income of the households in the synthetic panel as follows:

$$\hat{y}_{i,t = s} = \hat{\beta }_{t = s}^{'} x_{i,t = 0} + \frac{{\left( {\hat{\tilde{\varepsilon }}_{i,t = s} + \gamma \hat{\varepsilon }_{i,t = 0} } \right)}}{2}. \tag{4}$$

Recently, Dang and Lanjouw (2013) have developed a point estimate synthetic panel approach, which generalizes the use of non-parametric and parametric methods to produce point estimates of poverty transitions. This could have been used, as an alternative to Eq. (4), to produce the estimates of poverty duration presented in this paper. We preferred to rely on Eq. (4), because the two methods have been shown to be empirically equivalent in terms of accuracy, and because Dang and Lanjouw (2013) indicate that the point-estimate methodology is most accurate when short time periods are analyzed. The existing literature comparing results from genuine panel data with non-parametric, parametric, and point estimate synthetic-panel methods is summarized in Annex 2.
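The three estimators in Eqs. (2) to (4) can be sketched for a single household as follows. This is a minimal illustration under stated assumptions, not the authors' code: the function name, argument names, and all input values are hypothetical, the coefficients and residuals are taken as already estimated, and the scale factor is computed with the ratio exactly as written in the text.

```python
import random
from statistics import mean


def synthetic_log_income(beta_s, x_0, eps_0, residuals_s, sigma_0, sigma_s,
                         rng, n_draws=50):
    """Return (uncorrelated, correlated, point) log-income estimates in t = s."""
    # Fitted part: coefficients estimated in t = s applied to the
    # household characteristics observed in t = 0.
    fitted = sum(b * x for b, x in zip(beta_s, x_0))
    # Eq. (2): mean of n_draws residuals drawn with replacement from t = s.
    eps_tilde = mean(rng.choice(residuals_s) for _ in range(n_draws))
    # Eq. (3): the household's own t = 0 residual, rescaled by gamma
    # (ratio taken as stated in the text).
    gamma = sigma_0 / sigma_s
    y_uncorrelated = fitted + eps_tilde
    y_correlated = fitted + gamma * eps_0
    # Eq. (4): same fitted part, with the two error terms averaged.
    y_point = fitted + (eps_tilde + gamma * eps_0) / 2
    return y_uncorrelated, y_correlated, y_point


# Hypothetical inputs, for illustration only.
rng = random.Random(0)
y_u, y_c, y_p = synthetic_log_income(
    beta_s=[1.0, 0.5], x_0=[1.0, 2.0], eps_0=0.4,
    residuals_s=[-0.3, 0.1, 0.2], sigma_0=1.0, sigma_s=2.0, rng=rng)
```

By construction, the point estimate is the midpoint of the two bounding estimates for each household and year, which is the property the averaging in Eq. (4) relies on.
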

As a result, our analysis of income mobility is based on income observed in 2003 along with income estimated for the years between 2004 and 2013. The quality of the predictions is essential to guarantee that results are credible. As is usual practice, we carefully ensured the quality of the fit (value of R-squared, significance of coefficients, over-fitting). In Annex 3, we show for selected countries that the upper and lower bounds of predicted incomes produce poverty rates that are very close to the rates directly observed from household surveys. We also show the bounds for the estimates of chronic poverty, transient poverty, and future poverty in selected countries.

Our methodology can only be applied to 12 countries that have household survey data for each year between 2003 and 2013 (Argentina, Brazil, Colombia, Costa Rica, Dominican Republic, Ecuador, Honduras, Panama, Paraguay, Peru, El Salvador, and Uruguay). Regional numbers were obtained by pooling micro-data from the 12 countries and using survey weights.

Static analysis: a heterogeneous and still vulnerable region

Latin America is a very heterogeneous region in terms of the stage of socioeconomic transition, defined as the process of transition out of poverty towards the middle class. Countries such as Argentina, Chile, and Uruguay are at an advanced stage, being mainly made up of the middle and high-income classes (with the incidence of poverty around 10 %). On the other hand, countries such as Guatemala and Honduras are at the earliest stages: almost half of their population still lives in extreme poverty, and the incidence of overall poverty exceeds 65 % (Table 1).

Table 1 Income distribution in Latin American countries (2000–2013).

One feature, however, is common to all countries: the large size of the vulnerable class. This represents in most cases 30–40 % of the population, suggesting that an important share of the population remains at substantial risk of falling into poverty. Countries with low poverty rates and large middle classes are no exception. In these countries, the vulnerable class is the back end of the socioeconomic transition; while in the poorest countries, the vulnerable class leads the way.

Country heterogeneity is also high in the speed of the socioeconomic transition. For example, Colombia and Ecuador reduced the incidence of poverty by more than 25 percentage points (pp), and expanded their middle and upper classes by more than 15 pp (Table 1). In contrast, progress was sluggish in Mexico and the Dominican Republic, despite the fact that these countries started from poverty headcounts around 40 %.

Table 2 summarizes the region's heterogeneity by classifying the Latin American countries based on the stage and speed of their socioeconomic transition. In the countries in the upper-left cell, for example, Nicaragua and El Salvador, the poor still represent the largest share of the population, and poverty reduction has been relatively slow (less than 25 % between 2000 and 2013). These are the countries with the highest need for reforming and/or expanding the social safety net, as poverty is widespread and resilient. These are also the countries with fewer financial resources for its implementation, so efficiency should be at the top of their policy agenda.

Table 2 Categorization of Latin American countries, by income distribution and poverty reduction (2000–2013).

Dynamic analysis: poverty is still largely chronic

Income mobility between 2003 and 2013 was considerable. Poverty reduction was the net effect of many exiting poverty, while fewer were falling back. Upward mobility was particularly high for those that started in moderate poverty.

Most of the moderate poor rose to the vulnerable class, and a few (6 %) made it to the middle class (Table 3). In contrast, 73 % of those that were initially extreme poor were still poor after a decade, although many enjoyed less severe poverty and another quarter rose to the vulnerable class. As may be expected (as they started from higher initial living standards), the vulnerable also enjoyed less upward mobility. Only 28 % of them rose to the middle class, while 62 % remained in the initial income category and 10 % fell into poverty.
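Transition shares of this kind are row percentages of a two-period cross-tabulation. The sketch below shows one way to compute them from weighted household records; the function name, the record layout, and the sample records are hypothetical and do not reproduce the paper's data.

```python
from collections import defaultdict


def transition_matrix(records):
    """Row percentages of end-year class by start-year class.

    records: iterable of (start_class, end_class, survey_weight) tuples.
    """
    weights = defaultdict(lambda: defaultdict(float))
    for start, end, weight in records:
        weights[start][end] += weight
    matrix = {}
    for start, row in weights.items():
        row_total = sum(row.values())
        # Each row sums to 100: where did households from this class end up?
        matrix[start] = {end: 100 * w / row_total for end, w in row.items()}
    return matrix


# Hypothetical weighted households, for illustration only.
records = [
    ("moderate poor", "vulnerable", 1.0),
    ("moderate poor", "moderate poor", 1.0),
    ("vulnerable", "vulnerable", 3.0),
    ("vulnerable", "middle class", 1.0),
]
matrix = transition_matrix(records)
```
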

Table 3 Poverty transition matrix in Latin America (2003–2013), region aggregate.

Chronic poverty was widespread among both the extreme and the moderate poor. Many of those that were initially moderate poor, despite enjoying a high likelihood of rising to the vulnerable class in 2013, were poor in at least 5 years over the period 2004–2013. This may be explained by an ascending trajectory that only rose above the poverty line in the last part of the period of analysis.

On average, 91 % of extreme poverty was chronic (Table 4), with very little country heterogeneity (Fig. 2). In almost all countries with available data, about 90 % or more of the extreme poor in 2003 remained poor in at least five of the following 10 years. The only exceptions were Argentina and Uruguay, for which data are urban only.

Table 4 Poverty duration in Latin America (2003–2013), region aggregate.

Fig. 2 Chronic poverty in Latin American countries (2003–2013)

More surprisingly, half of the moderate poor in 2003 were also chronically poor. This has important implications for the design and implementation of social safety nets. In particular, it implies that long-term interventions are needed not only for the extreme poor, but also for an important share of those in moderate poverty. In this respect, however, country heterogeneity was substantial (Fig. 2). For example, while extreme poverty was equally chronic in Ecuador and Colombia, in the former moderate poverty was much more transient than in the latter.

An important share of the vulnerable and, more surprisingly, of the middle class experienced poverty over the period 2004–13. More precisely, 65 % of the vulnerable group and 14 % of the middle class of 2003 were poor at least once over the following decade. We call these families "future poor", a group whose share ranged from 10 % of the population in Argentina to 38 % in Costa Rica (Fig. 3). Finally, the share of the population that was never poor ranged from 8 % in Honduras to 57 % in Uruguay (Fig. 3).

Fig. 3 Poverty dynamics in Latin American countries (2003–2013)

The identification of the chronic and transient poor in the samples of 2003 allows investigating the household characteristics that are associated with different poverty durations. In other words, it allows studying who are the chronic poor, how they differ from the transient poor and, for comparison, from the non-poor. We address these questions by looking at Paraguay and Honduras, two countries at different stages of the socio-economic transition.

Household characteristics of the chronic poor broadly mimic those that, in the literature, are commonly associated with extreme poverty. They include larger household size, more children, lower levels of education, more engagement in self-employment, less wage employment, and residence in rural areas. Table 5 reports average household characteristics by dynamic poverty status in Paraguay and Honduras. Despite a few differences between the two countries, most patterns are common and the similarities are striking. In both countries, for example, chronically poor households had no member with complete tertiary education. In Honduras, they did not even have any member with complete secondary education; while in Paraguay, only one in six chronically poor households had one member with this level of schooling. Their likelihood of living in rural areas was ten times higher than among the non-poor. Self-employment decreased and wage employment grew as one moved from chronic poverty to non-poverty. The low level of human capital and the remote location suggest that, at least among the chronic poor, the graduation strategies with which many Latin American countries are attempting to complement the social safety nets have a low probability of being successful.

Table 5 Household Characteristics in Paraguay and Honduras (2003), by Poverty Status.

Differences between urban and rural areas

The region has undergone a process of urbanization, which has been slowing down in recent years. The urban share of the population grew from 49 % in 1960 to 80 % in 2013, and is expected to reach 83 % in 2025 (ECLAC 2013). In our sample of countries, this figure increased from 72 % in 2000 to 74 % in 2013 (Table 6, panel B). In this context, it is extremely important to understand the different urban–rural trends in poverty reduction, as when it comes to poverty, cities and countryside remain two worlds apart.

Table 6 Geographic profile of poverty in Latin America (2000–2013), region aggregate.

Extreme and overall poverty have decreased substantially in both urban and rural areas. Yet, in the latter, one-third of the population still lives in extreme poverty, and the incidence of total poverty exceeds 50 % (Table 6, panel A).

The growth of a middle class is an eminently urban phenomenon. In rural areas, poverty reduction has been accompanied by an expansion of the vulnerable class, and only 13 % of the population had per-capita income above $10 in 2013. In contrast, the size of the vulnerable class remained fairly constant in urban areas. This is where the middle class expanded more rapidly (by over 10 pp).

As a result, the rural nature of poverty has intensified, with a substantial increase of the share of poor living in rural areas. While in 2000 the rural areas were home to 54 % of the extreme poor and 30 % of the moderate poor, these figures increased to 58 and 38 %, respectively, in 2013. In addition, the rural share of vulnerable expanded, and only the high-income class became more urban during the period of analysis.

In 2013, the majority of the extreme poor lived in urban areas in only four countries (Brazil, Chile, Colombia, and the Dominican Republic). In contrast, with few exceptions, moderate poverty was fairly equally distributed between urban and rural areas (Fig. 4). This suggests that long-term social safety net programs are best suited for rural areas, while short-term interventions are equally needed in urban and rural areas.

Fig. 4 Rural percentage of poverty and population in Latin American countries (2013)

In the near future, in urban areas, poverty is expected to give way to a rising middle class (Fig. 5). This forecast is obtained by combining economic growth and demographic projections with the estimated growth elasticity of poverty. While the size of the vulnerable class will remain fairly stable (at around 40 % of the urban population), by 2025 the incidence of urban poverty is expected to fall to 13 %. The middle class will rise to represent 42 % of the urban population.
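The text does not spell out the projection formula, so the following is only a generic sketch of how a growth elasticity of poverty is commonly applied: the elasticity is read as the percentage change in the poverty rate per 1 % of income growth, and the change is compounded year by year. The function name and all numbers are hypothetical and are not the paper's estimates.

```python
def project_poverty_rate(rate_now, elasticity, annual_growth, years):
    """Compound a poverty rate forward under a constant growth elasticity.

    Assumption: each year the rate changes by elasticity * annual_growth
    in relative terms (for example, -1.0 * 0.02 means a 2 % relative fall).
    """
    rate = rate_now
    for _ in range(years):
        rate *= 1 + elasticity * annual_growth
    return rate


# Hypothetical values, for illustration only.
rate_next_year = project_poverty_rate(
    rate_now=0.20, elasticity=-1.0, annual_growth=0.02, years=1)
```
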

Fig. 5 Poverty, vulnerability, and middle class in Latin America (2000–2025), region aggregate

In contrast, the growth of the middle class will be slow in rural areas. Poverty will be mostly replaced by vulnerability. The vulnerable class is expected to become the single largest group in 2021, and grow to 47 % of the rural population in 2025.

Failing to account for substantial rural to urban migration may bias the results of the poverty dynamic analysis based on the pseudo-panel methodology (which assumes that household characteristics, including residence in rural or urban areas, are constant over time). For example, one may overestimate the number of poverty episodes in rural areas, if some rural families have migrated to urban areas and have managed, through migration, to transition to the vulnerable class. The direction of the bias will depend on who migrates (usually not the poorest) and how migration affects their income.

In Annex 5, we analyze the variation in the proportion of urban population over the period 2003–2013. We show that its magnitude is small, and does not threaten the validity of our findings. This result is consistent with the slowdown in the process of urbanization documented in CELADE—Population Division of ECLAC (2015).

The urban and rural poverty dynamics analyses confirm that most of the mobility out of poverty took place in urban areas. In the cities, 35 % of the extreme poor and 73 % of the moderate poor in 2003 had exited poverty after 10 years, against only 15 and 53 % in rural areas (Table 7). A similar pattern can be observed for upward mobility from the vulnerable class. Symmetrically, the risk of falling from the middle class to the vulnerable class or into poverty was more than double in rural than in urban areas (44 versus 21 %). This may also be due to the differential urban–rural impacts of the world recession in the second part of the period of analysis.

Table 7 Urban and rural poverty transition matrices in Latin America (2003–2013), region aggregate.

Rural areas are characterized by high incidence of chronic poverty and future poverty. 99 % of the extreme poor and 78 % of those that were moderate poor in 2003 experienced chronic poverty between 2004 and 2013. Furthermore, 86 % of the vulnerable and 37 % of the middle class were poor at least once during the period of analysis. The picture is relatively rosier in urban areas, where "only" 86 % of extreme poverty and 42 % of moderate poverty were chronic, and where "only" 62 % of the vulnerable experienced at least one episode of poverty (Fig. 6).

Fig. 6 Urban and rural poverty dynamics in Latin America (2003–2013), region aggregate

Cross-country analysis shows that Ecuador and Panama present the widest gap between rural and urban areas, a result that is driven by the highly transient nature of urban moderate poverty. Variability is limited in the percentage of extreme poor that are chronic poor, both in rural and urban areas. More differences emerge when looking at the percentage of moderate poor that experience chronic poverty. This is particularly the case in urban areas. While in El Salvador 71 % of the urban moderate poor experience chronic poverty over the following decade, the same happens to only one in every five urban moderate poor in Ecuador and Panama. This indicates that in these countries, urban moderate poverty is particularly transient (Fig. 7). Complete data by country are presented in Annex 6, for the 12 countries for which we can construct synthetic panels.

Fig. 7 Urban and rural chronic poverty in Latin American countries (2003–2013)

Conclusions and implications for the design and implementation of social safety nets

In the absence of information on poverty dynamics, development practitioners frequently assume that extreme poverty is chronic and rural, while moderate poverty is transient and urban. Similarly, they tend to expect that the vulnerable are at risk of falling into poverty, while the middle class has reached a safe place and no longer needs a social safety net.

In this paper, we construct synthetic panels and analyze poverty dynamics for a large sample of Latin American countries, with the aim of providing policy makers and development practitioners (engaged in project design) with estimates of the duration of poverty. While the availability of real long panel data would allow refining and deepening the analysis, we believe that our results constitute a useful proxy and hope they will stimulate further data collection and research. Our analysis contributes to debunking a few common assumptions.

First, we show that chronic poverty is also widespread among the moderate poor. This type of poverty, characterized by long duration, accounts for 91 % of extreme poverty and, surprisingly, 50 % of moderate poverty. As expected, chronic poverty is more frequent in rural areas, where 99 % of the extreme poor and as many as 78 % of the moderate poor are chronic poor.

Second, we show that the middle class, too, is still exposed to a substantial risk of falling back into poverty. More specifically, we find that 14 % of those who belonged to the middle class in 2003 experienced at least one poverty episode during the following decade.

Our results differ from those of Ferreira et al. (2013) and Vakis et al. (2015), although they are based on the application of a similar synthetic panel methodology. These authors analyze mobility between two periods only, and find that the vulnerable and the middle class are more consolidated in their status. For example, Ferreira et al. (2013, Table 4.1) estimate that only 2.7 % of the vulnerable and 0.5 % of the middle class fall back into poverty.

Our findings have important implications for the design and implementation of social safety nets. First, they suggest that interventions that target the rural poor and the urban extreme poor need to adopt a long-term perspective. The frequent recertification of the beneficiaries might not be needed and, probably, represents a loss of administrative and financial resources.

Second, our findings suggest that interventions that target the urban moderate poor need to adopt flexible entry and exit rules in response to this group's high income mobility. Targeting mechanisms based on proxy means tests are unlikely to perform satisfactorily. The Brazilian model based on declared income may represent a better alternative, if it can be coupled with frequent recertification and electronic audits of eligibility based on crossing information from the roster of beneficiaries with other sources of administrative data (e.g., social security contributions, ownership of assets).

Third, we show that the chronic poor have extremely low levels of human capital and live in rural areas with limited opportunities for wage employment. Human capital and access to wage employment are key factors for escaping poverty. Consequently, our findings suggest that, at least for this group, graduation strategies aimed at increasing income-generation capacity have low probabilities of success.Footnote 15

Finally, the finding that both the vulnerable and the middle class are likely to experience poverty in the future implies that social safety nets remain relevant for many who are currently out of poverty.Footnote 16

A caveat is worth mentioning. Our dynamic analysis is based on 12 countries for which data are available. Further work is needed to incorporate results for more countries and increase the representativeness of our findings.

For an analysis of the key drivers of poverty reduction in Peru, see Robles and Robles (2014).

Targeting is the process of identification of poor and vulnerable beneficiaries, as opposed to universal entitlement to benefits. Recertification is the periodic verification of beneficiaries' living standards, to assess whether they still qualify for receiving the benefits.

See Jalan and Ravallion (1998), Baulch and Hoddinott (2000), Davis and Stampini (2002), Hulme and Shepherd (2003), Dercon and Shapiro (2007), Fields et al. (2007), Stampini and Davis (2009), Ferreira et al. (2013), Vakis et al. (2015). What is missing in this literature is the analysis of poverty or income dynamics with long panels made of consecutive years. Robles and Saenz (2015) have started to fill this gap; using synthetic panels (similar to those employed in this paper) and a discrete-time hazard model, they identify the factors associated with long-term poverty and exit from poverty in a sample of Latin American countries.

We refer to social safety nets as the systems of social protection for the poor and vulnerable. In the Inter-American Development Bank Strategic Framework Document on Social Protection and Poverty, this is defined as "(i) efficient redistributive programs that contribute to human capital development; and (ii) delivery of services for social inclusion, in particular those aimed at early childhood development and at-risk youth" (IDB 2014). The findings of this paper are particularly relevant for the design and implementation of redistributive programs, such as conditional cash transfers (CCTs), whose duration and level of benefits should depend on poverty duration and depth.

The 5-year threshold, like any alternative threshold, is somewhat arbitrary. However, the results presented in this paper are generally robust to the adoption of alternative values.

These are the 18 countries that regularly execute household surveys and share their databases with the IDB. We lament not being able to include Caribbean countries, for which such data is not available.

Other synthetic or pseudo-panel approaches are those that track cohorts of individuals or households over repeated cross-sectional surveys (Deaton 1985), and those that recover the stochastic process from cross sectional data and generate individual income dynamics (Bourguignon et al. 2004).

We follow Canavire and Robles (2013), who, using this kind of panels and non-parametric duration models, analyze the sequencing and duration of the episodes of poverty.

Dang et al. (2014) and Cruces et al. (2011) show that the method performs well irrespective of the forecasting direction, i.e. that estimates of mobility are very similar if one predicts per-capita income in each year based on the sample of families that are surveyed in the last year.

Dang et al. (2014) suggest that standard errors for the bounds can be estimated by bootstrapping. This involves bootstrap resampling from the original cross-sections while accounting for survey weights (footnote 14). Similarly, we could obtain standard errors for our point estimates by complementing Dang et al.'s suggested procedure with the application of the delta method.

Brazil does not have a household survey for 2010. In order to include it in the dynamic analysis, we considered mobility over the period 2002–2013. It is important to highlight the caveat that our dynamic analysis is based on twelve countries with available data. Among the excluded countries is Mexico, which accounts for an important share of the population of the region. The exclusion of Mexico is due to the fact that the Encuesta Nacional sobre Ingresos y Gastos de los Hogares is carried out every two years, while the Encuesta Nacional de Ocupación y Empleo is yearly but has been nationally representative only since 2005.

Table 3 presents the two-point transition matrix, similar to Table 4.1 of Ferreira et al. (2013) and Table 1 of Vakis et al. (2015), using a larger number of income groups and extending the time period to 2013.

In Annex 4, we perform sensitivity analysis and show that these key results are robust to the adoption of alternative poverty lines.

It is relevant to acknowledge that the term urban refers to human settlements of very different sizes (Satterthwaite 2010), which may range from as few as 2500 to as many as several million inhabitants. Despite this heterogeneity, in this paper we use the terms urban areas and cities as synonyms.

For a review of the experience with recertification and graduation in Latin American conditional cash transfer programs, see Medellín et al. (2015).

For an estimate of the demand for social safety nets in Latin American countries, see Ibarraran et al. (2016).

Given our definition of the income variables, our poverty estimates may differ from the official ones and from those calculated by other institutions that use the same household surveys.

Baulch B, Hoddinott J (eds) (2000) Economic mobility and poverty dynamics in developing countries. Frank Cass, London

Bourguignon F, Goh, C, Kim D (2004) Estimating Individual Vulnerability to Poverty with Pseudo-Panel Data. World Bank Policy Research Working Paper No. 3375

CELADE—Population Division of ECLAC (2015). Latin America: Long term population estimates and projections 1950–2100. The 2015 Revision. http://www.cepal.org/es/estimaciones-proyecciones-poblacion-largo-plazo-1950-2100

Canavire G, Robles M (2013) Non-parametric analysis of poverty duration using repeated cross-section data. An application for Peru. The Inter-American Development Bank, Mimeo

Center for Distributional, Labor and Social Studies (CEDLAS) and World Bank. 2012. A guide to the SEDLAC: Socioeconomic Database for Latin America and the Caribbean. La Plata. http://sedlac.econo.unlp.edu.ar/eng/methodology.php

Cohen J (1988) Statistical power analysis for the behavioral sciences, 2nd edn. Lawrence Erlbaum, Hillsdale

Cruces G, Lanjouw P, Lucchetti L, Perova E, Vakis R, Viollaz M (2011) Intra-generational mobility and repeated cross-sections. A three-country validation exercise. World Bank Policy Research Working Paper No. 5916

Dang H, Lanjouw P, Luoto J, McKenzie D (2014) Using repeated cross-sections to explore movements into and out of poverty. J Dev Econ 107:112–128

Dang HA, Lanjouw P (2013) Measuring poverty dynamics with synthetic panels based on cross-sections. World Bank Policy Research Working Paper No. 6504

Davis B, Stampini M (2002) Pathways towards prosperity in Nicaragua: an analysis of panel households in the 1998 and 2001 LSMS Surveys. United Nations Food and Agriculture Organization, ESA Working Paper 02-10

Deaton A (1985) Panel data from time series of cross-sections. J Econom 30:109–126

Dercon S, Shapiro JS (2007) Moving on, staying behind, getting lost: lessons on poverty mobility from longitudinal data. In: Narayan D, Petesch P (eds) Moving out of poverty. World Bank

Ferreira FHG, Messina J, Rigolini J, López-Calva LF, Lugo MA, Vakis R (2013) Economic mobility and the rise of the Latin American middle class. World Bank, Washington, DC

Fields G, Viollaz M (2013) Can the limitations of panel datasets be overcome by using pseudo-panels to estimate income mobility? http://www.iza.org/conference_files/worldb2013/fields_g370.pdf

Fields G, Duval-Hernandez R, Freije-Rodriguez S, Sanchez-Puerta ML (2007) Intergenerational income mobility in Latin America. Journal of LACEA. Latin American and Caribbean Economic Association

Haynes M, Martinez A, Tomaszewski W, Western M (2013) Measuring income mobility using pseudo-panel data. Philipp Stat 62(2):71–99

Hulme D, Shepherd A (2003) Conceptualizing chronic poverty. World Dev 31(3):403–423

Ibarraran P, Medellín N, Perez B, Jara P, Parsons J, Stampini M (2016) Más inclusión social: lecciones de Europa y perspectivas para América Latina. Monograph n. 359. Inter-American Development Bank, Washington DC. https://publications.iadb.org/handle/11319/7486

Inter-American Development Bank. 2014. Strategic Framework Document on Social Protection and Health. http://www.iadb.org/document.cfm?id=39211762

Jalan J, Ravallion M (1998) Transient poverty in postreform rural China. J Comp Econ 26:338–357

López-Calva LF, Ortiz-Juarez E (2011) A vulnerability approach to the definition of the middle class. Policy Research Working Paper Series 5902, The World Bank. Later published as López-Calva, L.F. and Ortiz-Juarez, E. 2014. A vulnerability approach to the definition of the middle class. J Econ Inequal 12(1):23–47

Medellín N, Villa Lora JM, Ibarraran P, Stampini M (2015) Moving ahead: recertification and exit strategies in conditional cash transfer programs. Monograph n. 348. Inter-American Development Bank, Washington DC. http://publications.iadb.org/handle/11319/7359

Ravallion M, Chen S, Sangraula P (2008) Dollar a day revisited. World Bank Policy Research Working Paper No. 4620

Robles A, Robles M (2014) Workforce heterogeneity and decomposing welfare changes. The Inter-American Development Bank, Mimeo

Robles M, Saenz M (2015) The dynamics of poverty spells in Latin America. Mimeo, The Inter-American Development Bank

Satterthwaite D (2010) Urban myths and the mis-use of data that underpin them. In: Beall J, Guha-khasnobis B, Kanbur R (eds) Urbanization and development: multidisciplinary perspectives. Oxford Scholarship Online. doi:10.1093/acprof

Stampini M, Davis B (2009) Discerning transient from chronic poverty in Nicaragua: measurement with a two-period panel data set. Eur J Dev Res 18(1):105–130

The United Nations Economic Commission for Latin America and the Caribbean (ECLAC). 2013. Long term population estimates and projections 1950–2100. 2013 Revision. CELADE-Population Division of ECLAC. http://www.cepal.org/celade/proyecciones/basedatos_BD.htm

Vakis R, Rigolini J, Lucchetti L (2015) Left behind: chronic poverty in Latin America and the Caribbean, overview. World Bank, Washington, DC

World Bank (2014) Social gains in the balance: a fiscal policy challenge for Latin America and the Caribbean. Washington DC

This report has been prepared with funds from the IDB economic and sector work "Social Protection beyond Conditional Cash Transfers: Challenges and Alternatives" (RG-K1374). We thank Ferdinando Regalia, Norbert Schady, Susan Parker, and two anonymous referees for useful comments and suggestions. Remaining errors are ours only. The content and findings of this paper reflect the opinions of the authors and not those of the IDB, its Board of Directors or the countries they represent.

Social Protection and Health Division of the Inter-American Development Bank (IDB), Washington, DC, USA

Marco Stampini, Pablo Ibarrarán & Nadin Medellín

Front Office of the Social Sector of the IDB, Washington, DC, USA

Marcos Robles & Mayra Sáenz

Correspondence to Marco Stampini.

Annex 1: Data sources

IDB's Harmonized Data Bank of Household Surveys from Latin America and the Caribbean (also known as IDB's Sociometro) contains harmonized household data sets for Latin American and Caribbean countries starting from the late 1980s. Variable names, definitions, and contents are kept constant across countries and time. Table 8 shows the number of data sets used for the preparation of this paper.

Table 8 Data sets used in this paper, by Country, 2000–2013

Although it is well known that per-capita consumption is a better proxy for well-being, we use per-capita income, because few countries in the region routinely conduct surveys with a consumption module, while all of them include questions on income. We calculate per-capita income by dividing total household income by the number of household members, without using any adult equivalence scale.

Income components are reported after-tax whenever possible. Extraordinary income sources are not considered. Similarly, we do not include the implicit rent from owned or occupied housing, because not all countries capture the information that allows estimating it.Footnote 17 As is common practice in academic and official studies, we do not make any imputation for missing, null or outlying values in addition to those already contained in the data sets provided by the national statistical offices. Finally, we do not make adjustments for differences in urban–rural prices.
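As a rough illustration of the income definition above, the following sketch computes per-capita income without an adult equivalence scale and compares it with the $2.5 and $4 daily poverty lines and the $10 upper bound of the vulnerable class used in the paper. The function names, the assumption that income is already expressed in dollars per day, and the treatment of values that fall exactly on a line are illustrative choices, not the authors' code.

```python
def per_capita_income(total_household_income, household_size):
    """Total household income divided by the number of members.

    No adult equivalence scale is applied: every member counts as one.
    """
    if household_size <= 0:
        raise ValueError("household_size must be positive")
    return total_household_income / household_size


def income_class(daily_per_capita_income):
    """Classify a daily per-capita income against the $2.5, $4 and $10 lines.

    Assigning values that lie exactly on a line to the higher group
    is an assumption made for this sketch.
    """
    if daily_per_capita_income < 2.5:
        return "extreme poverty"
    if daily_per_capita_income < 4.0:
        return "moderate poverty"
    if daily_per_capita_income < 10.0:
        return "vulnerable class"
    return "above the vulnerable class"
```

For instance, a hypothetical household of four with a total income of 12 dollars per day has a per-capita income of 3 dollars and would be counted among the moderate poor.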

Annex 2: Synthetic-panel methodology

Table 9 summarizes the existing literature comparing results from genuine panel data with non-parametric, parametric, and point estimate synthetic-panel methods. All results reported are based on the use of household time-invariant characteristics, sub-national controls, and region fixed effects, consistently with the definition of our own model. They show that the estimates based on the average of the bounds (Eq. (4)) approximate well the estimates based on genuine panel data, irrespective of the length of the period analyzed (2 years in Peru, versus 10 in Chile), the width of the bounds, the type of poverty transition, and the number of replications used to obtain the upper bound (50, 100, 500). These estimates are found to be as accurate as those obtained with either the parametric approach (for Indonesia and Vietnam) or the point estimate approach (Bosnia-Herzegovina).

Table 9 Summary of the literature comparing estimates from synthetic and genuine panel data

Annex 3: Estimate bounds

See Figs. 8, 9, 10.

Observed versus predicted extreme poverty headcounts in selected countries

Observed versus predicted poverty headcounts in selected countries

Bounds for the estimates of transient poverty, chronic poverty and future poverty in selected LAC countries

Annex 4: Sensitivity analysis of the percentage of chronic poverty to the adoption of alternative poverty lines

Poverty lines are defined with a degree of arbitrariness. To show that our findings are robust to the adoption of alternative poverty lines, we perform sensitivity analysis of our key chronic poverty results. In Sect. 4, we showed that chronic poverty accounted for 91 % of extreme poverty and 50 % of moderate poverty. This occurs when using a $2.5 extreme poverty line and a $4 moderate poverty line. In Figs. 11 and 12, we verify how these findings change when considering poverty lines that are 20 % lower or higher.

Percentage of extreme poor that are chronic poor, sensitivity analysis to the adoption of alternative poverty lines

Percentage of moderate poor that are chronic poor, sensitivity analysis to the adoption of alternative poverty lines

With lower poverty lines, i.e., using a $2 extreme poverty line and a $3.2 moderate poverty line, we find that chronic poverty accounted for 87 % of extreme poverty and 41 % of moderate poverty. With higher poverty lines, i.e., using a $3 extreme poverty line and a $4.8 moderate poverty line, chronic poverty accounted for 94 % of extreme poverty and 58 % of moderate poverty.

Overall, the higher the poverty lines, the higher are the percentages of both extreme and moderate poverty that are found to be chronic. The result is less sensitive for extreme than for moderate poverty. Finally, different poverty lines do not alter the order of magnitude of our key results, nor country rankings.

Annex 5: Variation in the percentage of urban population

As a proxy for rural-to-urban migration, we test whether the percentage of urban population has changed significantly over the period 2003–2013, which is the timeframe of our poverty dynamics analysis. Given the large size of our samples, the standard error of each sample mean tends to be very close to zero. As a consequence, the t test is likely to reject the null hypothesis of equality of means even when the difference is trivial (producing type I, i.e., false positive, errors).

We therefore adopt an alternative hypothesis testing framework and conduct an effect size analysis, in which differences are measured in units of the standard deviation (which, unlike the standard error, does not shrink as the sample size grows). Specifically, we use Cohen's d (Cohen 1988), which is equal to the difference between the two sample means, divided by the standard deviation of the pooled samples. Cohen explains that absolute values of d around 0.2 reflect a small effect size, while 0.5 is a medium effect size and 0.8 indicates a large effect size. The last column of Table 10 shows that the absolute values of Cohen's d are all smaller than 0.2 in our sample of countries, suggesting that rural-to-urban migration is not likely to threaten the validity of our poverty dynamic results.
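The effect size described above can be sketched in a few lines. This is a minimal illustration, not the authors' code: it assumes the usual pooled standard deviation (the two sample variances weighted by their degrees of freedom), ignores survey weights, and uses the hypothetical function name `cohens_d`.

```python
import math
from statistics import mean, variance


def cohens_d(sample_a, sample_b):
    """Difference in sample means divided by the pooled standard deviation.

    Assumes unweighted samples with at least two observations each.
    """
    n_a, n_b = len(sample_a), len(sample_b)
    # Pooled variance: sample variances weighted by their degrees of freedom.
    pooled_var = (
        (n_a - 1) * variance(sample_a) + (n_b - 1) * variance(sample_b)
    ) / (n_a + n_b - 2)
    return (mean(sample_b) - mean(sample_a)) / math.sqrt(pooled_var)
```

In the setting of this annex, `sample_a` and `sample_b` would be 0/1 urban indicators for the first and last survey year. Because the denominator is a standard deviation rather than a standard error, the statistic does not grow mechanically with the sample size.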

Table 10 Effect size analysis of the variation in the percentage of urban population.

Annex 6: Country profiles

Country profiles—Argentina.

Extreme poverty

Moderate poverty

Vulnerable class

High income

Incidence in 2000—total – – – – – –

Urban 14.9 15.4 36.5 31.3 2.0 100.0

Rural – – – – – –

Urban 4.0 6.9 34.4 52.5 2.2 100.0

Share rural in 2000 – – – – – –

Transition probabilities—total

Extreme poverty – – – – – –

Moderate poverty – – – – – –

Vulnerable class – – – – – –

Middle class – – – – – –

High income – – – – – –

Transition probabilities—urban

Extreme poverty 5.9 20.5 63.7 7.3 2.7 100.0

Moderate poverty 0.5 2.0 65.2 31.2 1.2 100.0

Vulnerable class 0.1 0.6 27.2 71.1 1.0 100.0

Middle class 0.0 0.1 2.6 90.7 6.7 100.0

High income 0.0 0.0 0.0 33.3 66.7 100.0

Transition probabilities—rural

% of chronic poverty—total – – –

Urban 45.7 1.2 27.7

Rural – – –

% future poor—total – – – –

Urban 25.6 2.5 0.0 16.3

Rural – – – –

– Not available

Chronic poor

Transient poor

Future poor

Never poor

% of population 11.1 29.0 9.8 50.1 100.0

Male household head 0.476 0.483 0.507 0.476 0.481

Household size 6.222 5.294 4.389 3.577 4.448

Number of children (aged 0–5) 1.096 0.709 0.524 0.284 0.521

Adult members

Self-employed 0.348 0.342 0.349 0.309 0.327

Salaried 1.060 1.147 1.372 1.233 1.202

Unemployed 0.538 0.388 0.205 0.178 0.282

Inactive 0.851 0.980 0.854 0.825 0.876

Primary education or less 0.527 0.355 0.321 0.144 0.265

Incomplete secondary educ. 0.759 0.725 0.632 0.386 0.550

Complete secondary educ. 0.298 0.495 0.537 0.582 0.521

Incomplete tertiary educ. 0.136 0.250 0.263 0.514 0.371

Complete tertiary educ. 0.025 0.122 0.113 0.512 0.306

Rural (share) – – – – –

Country Profiles—Brazil.

Incidence in 2001—total 27.1 16.8 32.5 21.3 2.3 100.0

Rural 54.3 18.3 21.9 5.3 0.3 100.0

Rural 26.5 17.5 37.6 17.8 0.6 100.0

Share rural in 2001 32.4 17.6 10.9 4.0 2.1 16.2

Extreme poverty 36.2 37.0 26.0 0.7 0.0 100.0

Moderate poverty 5.9 23.7 65.9 4.5 0.0 100.0

Middle class 0.1 0.8 22.1 75.6 1.4 100.0

% of chronic poverty—total 94.6 51.3 77.8

Urban 92.8 48.2 73.4

Rural 98.4 65.6 89.8

% future poor—total 65.2 11.5 0.3 42.1

Urban 63.5 11.0 0.3 40.0

Rural 79.1 23.0 0.0 67.5

% of population 27.5 10.0 25.0 37.5 100.0

Rural (share) 0.310 0.123 0.125 0.044 0.145

Country Profiles—Colombia. Source: authors' calculations based on household survey data from IDB's Sociometro

Moderate poverty 12.6 29.9 53.4 3.3 0.8 100.0

Vulnerable class 3.5 12.6 59.1 23.7 1.0 100.0

Extreme poverty 67.6 21.6 9.2 0.1 1.5 100.0

Vulnerable class 9.6 27.1 56.6 6.4 0.2 100.0

High income 0.0 0.0 0.0 3.9 96.1 100.0

Country Profiles—Costa Rica.

Urban 8.7 11.6 40.0 37.9 1.9 100.0

Incidence in 2013—total 8.5 10.6 37.7 39.2 4.0 100.0

Share rural in 2000 66.5 54.0 42.1 21.0 11.8 41.3

Moderate poverty 26.8 19.2 40.9 11.9 1.3 100.0

Vulnerable class 10.0 13.8 42.2 33.1 0.8 100.0

% of population 21.6 7.4 37.7 33.3 100.0

Country Profiles—Dominican Republic.

Share rural in 2013 45.1 39.0 29.9 15.8 1.4 32.6

Extreme poverty 57.6 31.9 10.4 0.1 100.0

Moderate poverty 21.5 40.7 37.2 0.6 100.0

Vulnerable class 4.3 22.5 67.6 5.6 100.0

Middle class 0.0 2.0 55.2 42.7 100.0

High income 0.0 0.0 56.3 43.7 100.0

Country Profiles—Ecuador.

Country Profiles—Honduras.

Incidence in 2013—total 49.5 17.0 24.9 8.5 0.2 100.0

Extreme poverty 89.0 8.0 2.6 0.0 0.4 100.0

Vulnerable class 21.1 25.7 48.2 4.6 0.4 100.0

Middle class 9.5 23.4 52.6 14.5 0.0 100.0

% of population 64.3 4.1 23.6 8.0 100.0

Country Profiles—Panama.

Moderate poverty 2.2 13.3 72.6 11.9 0.0 100.0

Country Profiles—Peru.

High income 0.0 0.0 20.1 70.5 9.4 100.0

Country Profiles—Paraguay.

% future poor—total 84.3 40.6 13.8 67.6

Rural 97.8 80.1 26.0 91.3

Country Profiles—El Salvador.

High income 0.0 0.9 3.3 90.7 5.0 100.0

Country Profiles—Uruguay.

% of chronic poverty—total –

Urban 31.4 2.3 – 19.2

% of population 7.3 21.7 13.6 57.3 100.0

Stampini, M., Robles, M., Sáenz, M. et al. Poverty, vulnerability, and the middle class in Latin America. Lat Am Econ Rev 25, 4 (2016) doi:10.1007/s40503-016-0034-1

Poverty dynamics

Transitory and chronic poverty

Panel data

Synthetic panels | CommonCrawl |

\begin{definition}[Definition:Integral Element of Algebra/Definition 4]

Let $A$ be a commutative ring with unity.

Let $f : A \to B$ be a commutative $A$-algebra.

Let $b\in B$.

The element $b$ is '''integral''' over $A$ {{iff}} there exists a faithful $A \sqbrk b$-module whose restriction of scalars to $A$ is finitely generated.

\end{definition} | ProofWiki |

\begin{document}

\title{A non-overlapping domain decomposition method for incompressible Stokes equations with continuous pressure} \pagestyle{myheadings} \thispagestyle{plain} \markboth{JING LI AND XUEMIN TU}{DOMAIN DECOMPOSITION FOR INCOMPRESSIBLE STOKES}

\begin{abstract} A non-overlapping domain decomposition algorithm is proposed to solve the linear system arising from mixed finite element approximation of incompressible Stokes equations. A continuous finite element space for the pressure is used. In the proposed algorithm, Lagrange multipliers are used to enforce continuity of the velocity component across the subdomain boundary. The continuity of the pressure component is enforced in the primal form, i.e., neighboring subdomains share the same pressure degrees of freedom on the subdomain interface and no Lagrange multipliers are needed. After eliminating all velocity variables and the independent subdomain interior parts of the pressures, a symmetric positive semi-definite linear system for the subdomain boundary pressures and the Lagrange multipliers is formed and solved by a preconditioned conjugate gradient method. A lumped preconditioner is studied and the condition number bound of the preconditioned operator is proved to be independent of the number of subdomains for fixed subdomain problem size. Numerical experiments demonstrate the convergence rate of the proposed algorithm. \end{abstract}

{\bf keywords} domain decomposition, incompressible Stokes, FETI-DP, BDDC

{\bf AMS} 65F10, 65N30, 65N55

\section{Introduction}

Domain decomposition methods have been studied extensively for solving incompressible Stokes equations and similar saddle-point problems; see, e.g., \cite{Kla98, 8pavwid, li05, paulo2003, Doh04, li06, kim06, Tu:2005:BPP, Tu:2005:BPD, LucaOlofStef}. In many of those works, special care needs to be taken to deal with the divergence-free constraints across subdomain boundaries, which often leads to large coarse level problems. A large coarse level problem becomes a bottleneck in large scale parallel computations, and additional efforts in the algorithm are needed to reduce its impact, cf.~\cite{Tu:2004:TLB,Tu:2005:TLB,Tudd16,Klawonn:2005:IFM,Dohrmann:2005:ABP,KimTu,Tu:2011:TLBS}. Some recent progress has been made by Dohrmann and Widlund~\cite{Doh09, Doh10} for almost incompressible elasticity, where the coarse level space is built from discrete subdomain saddle-point harmonic extensions of certain subdomain interface cut-off functions and its dimension is much smaller than those in previous studies. Kim and Lee~\cite[with Park]{kim11, kim102, kim10} studied both the FETI-DP and BDDC algorithms for incompressible Stokes equations where a lumped preconditioner is used and reduction in the dimension of the coarse level space is also achieved.

In most of the above-mentioned applications and analyses of domain decomposition methods for incompressible Stokes equations, the mixed finite element space contains discontinuous pressures. Using discontinuous pressures in domain decomposition methods is natural: the pressure components can be decomposed conveniently into independent subdomains, and no continuity of pressures across the subdomain boundary needs to be enforced. However, a large class of mixed finite elements used for solving incompressible Stokes and Navier-Stokes equations have continuous pressures, e.g., the well known Taylor-Hood type~\cite{Taylor}. There have been a variety of approaches using continuous pressures in domain decomposition methods for solving incompressible Stokes equations, e.g., by Goldfeld \cite{pauloPhD}, by \v{S}\'istek {\em et al.} \cite{sis11}, and by Benhassine and Bendali \cite{ben10}. In their work, an indefinite system of linear equations needs to be solved, either by a generalized minimal residual method or simply by a conjugate gradient method. To the best of our knowledge, no scalable convergence rate has been proved analytically for any of those approaches using continuous pressures.

In this paper, we propose a non-overlapping domain decomposition algorithm for solving incompressible Stokes equations with continuous pressure finite element space. The scalability of its convergence rate is proved. In this algorithm, the subdomain boundary velocities are dealt with in the same way as in the FETI-DP method: a few for each subdomain are selected as the coarse level primal variables, which are shared by neighboring subdomains; the others are subdomain independent and Lagrange multipliers are used to enforce their continuity. The subdomain boundary pressure degrees of freedom are all in the primal form. They are shared by neighboring subdomains and no Lagrange multipliers are needed for their continuity. After eliminating all velocity variables and the independent subdomain interior parts of the pressures, the system for the subdomain boundary pressures and the Lagrange multipliers is shown to be symmetric positive semi-definite. A preconditioned conjugate gradient method with a lumped preconditioner is studied. Condition number bounds as strong as those for the scalar elliptic case are established. In the proposed algorithm and in the estimate of its condition number bound, no additional coarse level variables, except those necessary for solving scalar elliptic problems, are required for incompressible Stokes problems. The resulting coarse level problem is also symmetric positive definite.

To stay focused on the purpose of this paper, the discussion of the proposed algorithm and its analysis are based on two-dimensional problems, even though the same approach can be extended to the three-dimensional case without substantial obstacles. It is also worth pointing out that the domain decomposition algorithm and its analysis presented in this paper apply equally well, with only minor modifications, to the case where discontinuous pressures are used in the mixed finite element space.

The remainder of this paper is organized as follows. The finite element discretization of the incompressible Stokes equation is introduced in Section \ref{section:FEM}. A domain decomposition approach is described in Section~\ref{section:DDM}. The system for the subdomain boundary pressures and the Lagrange multipliers is derived in Section~\ref{section:Gmatrix}. Section \ref{section:techniques} provides some techniques used in the condition number bound estimate. In Section~\ref{section:lumped}, a lumped preconditioner is proposed and a scalable condition number bound of the preconditioned operator is established. At the end, in Section~\ref{section:numerics}, numerical results for solving a two-dimensional incompressible Stokes problem are shown to demonstrate the convergence rate of the proposed algorithm.

\section{Finite element discretization} \label{section:FEM}

We consider solving the following incompressible Stokes problem on a bounded, two-dimensional polygonal domain $\Omega$ with a Dirichlet boundary condition, \begin{equation} \label{equation:Stokes} \left\{ \begin{array}{rcll} -\Delta {\bf u} + \nabla p & = & {\bf f}, & \mbox{ in } \Omega \mbox{ , } \\ -\nabla \cdot {\bf u} & = & 0, & \mbox{ in } \Omega \mbox{ , } \\ {\bf u} & = & {\bf u}_{\partial \Omega}, & \mbox{ on } \partial \Omega \mbox{ , }\\ \end{array}\right. \end{equation} where the boundary data ${\bf u}_{\partial \Omega}$ satisfies the compatibility condition $\int_{\partial \Omega} {\bf u}_{\partial \Omega} \cdot {\bf n} = 0$. For simplicity, we assume that ${\bf u}_{\partial \Omega} = {\bf 0}$ without losing any generality.

The weak solution of \EQ{Stokes} is given by: find $\vvec{u} \in \left(H^1_0(\Omega)\right)^2 = \{ \vvec{v} \in (H^1(\Omega))^2 ~ \big| ~ \vvec{v} = \vvec{0} \mbox{ on } \partial \Omega \}$ and $p \in L^2(\Omega)$, such that \begin{equation} \label{equation:bilinear} \left\{ \begin{array}{lcll} a(\vvec{u}, \vvec{v}) + b(\vvec{v}, p) & = & (\vvec{f}, \vvec{v}), & \forall \vvec{v}\in \left(H^1_0(\Omega)\right)^2 , \\ [0.5ex] b(\vvec{u}, q) & = & 0, & \forall q \in L^2(\Omega) \mbox{ , } \\ \end{array} \right. \end{equation} where \[ a(\vvec{u}, \vvec{v})= \int_{\Omega} \nabla{\bf u} \cdot \nabla{\bf v}, \quad b(\vvec{u},q) = -\int_{\Omega} (\nabla \cdot \vvec{u}) q, \quad (\vvec{f}, \vvec{v}) = \int_{\Omega} \vvec{f} \cdot \vvec{v}. \] We note that the solution of \EQ{bilinear} is not unique: the pressure $p$ is determined only up to an additive constant.

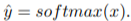

A modified Taylor-Hood mixed finite element is used in this paper to solve \EQ{bilinear}. The domain $\Omega$ is triangulated into shape-regular elements of characteristic size $h$. The pressure finite element space, $Q \subset L^2(\Omega)$, is taken as the space of continuous piecewise linear functions on the triangulation. The velocity finite element space, $\vvec{W} \subset \left(H^1_0(\Omega)\right)^2$, is formed by the continuous piecewise linear functions on the finer triangulation obtained by dividing each triangle into four subtriangles by connecting the midpoints of its edges. A demonstration of this mixed finite element on a triangulation of a square domain is shown in Figure~\ref{figure:TaylorHood}.

\begin{figure}

\caption{ A modified Taylor-Hood mixed finite element}

\label{figure:TaylorHood}

\end{figure}

The finite element solution $(\vvec{u}, p) \in \vvec{W} \bigoplus Q$ of \EQ{bilinear} satisfies \begin{equation} \label{equation:matrix} \left[ \begin{array}{cccc} A & B^T \\ B & 0 \\ \end{array} \right] \left[ \begin{array}{c} {\bf u} \\ p \\ \end{array} \right] = \left[ \begin{array}{l} {\bf f} \\ 0 \\ \end{array} \right] , \end{equation} where $A$, $B$, and $\vvec{f}$ represent respectively the restrictions of $a( \cdot , \cdot )$, $b(\cdot, \cdot )$ and $(\vvec{f} , \cdot)$ to the finite-dimensional spaces $\vvec{W}$ and $Q$. We use the same notation in this paper to represent both a finite element function and the vector of its nodal values.

The coefficient matrix in \EQ{matrix} is rank deficient. $A$ is symmetric positive definite. The kernel of $B^T$, denoted by $Ker(B^T)$, is the space of all constant pressures in $Q$. The range of $B$, denoted by $Im(B)$, is orthogonal to $Ker(B^T)$ and is the subspace of $Q$ consisting of all vectors with zero average. The solution of \EQ{matrix} always exists and is uniquely determined when the pressure is considered in the quotient space $Q/Ker(B^T)$. In this paper, when $q \in Q/Ker(B^T)$, $q$ always has zero average. For a more general right-hand side vector $({\bf f}, ~ g)$ given in \EQ{matrix}, the existence of its solution requires that $g \in Im(B)$, i.e., $g$ has zero average.
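
The zero-average requirement can be seen directly. Let $1_p \in Q$ denote the vector with value $1$ on each entry (a notation introduced here only for this remark), so that $B^T 1_p = {\bf 0}$, and let $\left< \cdot, \cdot \right>$ denote the inner product of two vectors. If the second block row of the system reads $B {\bf u} = g$, then \[ \left< 1_p, g \right> = \left< 1_p, B {\bf u} \right> = \left< B^T 1_p, {\bf u} \right> = 0, \] i.e., the entries of $g$ must sum to zero.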

The modified Taylor-Hood mixed finite element space $\vvec{W} \times Q$, as shown in Figure~\ref{figure:TaylorHood}, is inf-sup stable in the sense that there exists a positive constant $\beta$, independent of $h$, such that \begin{equation} \label{equation:infsup} \sup_{\vvec{w} \in \vvec{W}} \frac{b(\vvec{w},q)}{|\vvec{w}|_{H^1}} \geq \beta \|q\|_{L^2}, \hspace{0.5cm} \forall q \in Q/Ker(B^T), \end{equation} cf.~\cite[Chapter III, \S 7]{braess}, or equivalently in matrix/vector form, \begin{equation} \label{equation:infsupMatrix} \sup_{{\bf w} \in {\bf W}} \frac{\left< q, B \vvec{w} \right>^2}{\left< \vvec{w}, A \vvec{w} \right>} \geq \beta^2 \left< q, Z q \right>, \hspace{0.5cm} \forall q \in Q/Ker(B^T). \end{equation}

Here, as always in this paper, $\left< \cdot, \cdot \right>$ represents the inner product of two vectors. The matrix $Z$ represents the mass matrix defined on the pressure finite element space $Q$, i.e., for any $q \in Q$, $\|q\|_{L^2}^2 = \left< q, Z q \right>$. It is easy to see, cf.~\cite[Lemma B.31]{Toselli:2004:DDM}, that $Z$ is spectrally equivalent to $h^2 I$ for two-dimensional problems, where $I$ represents the identity matrix of the same dimension, i.e., there exist positive constants $c$ and $C$, such that \begin{equation} \label{equation:massmatrix} c h^2 I \leq Z \leq C h^2 I. \end{equation} Here, as in other places of this paper, $c$ and $C$ represent generic positive constants which are independent of the mesh size $h$ and the subdomain diameter $H$ (discussed in the following section).
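
The equivalence of \EQ{infsup} and \EQ{infsupMatrix} can be checked by identifying each term with its matrix counterpart. For $\vvec{w} \in \vvec{W}$ and $q \in Q$, \[ b(\vvec{w}, q) = \left< q, B \vvec{w} \right>, \quad |\vvec{w}|_{H^1}^2 = a(\vvec{w}, \vvec{w}) = \left< \vvec{w}, A \vvec{w} \right>, \quad \|q\|_{L^2}^2 = \left< q, Z q \right>. \] Since $\vvec{W}$ is a linear space, the supremum in \EQ{infsup} is nonnegative, and squaring both sides gives \EQ{infsupMatrix}. Combined with the lower bound in \EQ{massmatrix}, this also yields \[ \sup_{{\bf w} \in {\bf W}} \frac{\left< q, B \vvec{w} \right>^2}{\left< \vvec{w}, A \vvec{w} \right>} \geq c \beta^2 h^2 \left< q, q \right>, \hspace{0.5cm} \forall q \in Q/Ker(B^T). \]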

\section{A non-overlapping domain decomposition approach} \label{section:DDM}

The domain $\Omega$ is decomposed into $N$ non-overlapping polygonal subdomains $\Omega_i$, $i = 1, 2, \ldots, N$. Each subdomain is the union of a bounded number of elements, with the diameter of the subdomain on the order of $H$. The nodes on the interface of neighboring subdomains match across the subdomain boundaries $\Gamma = {(\cup\partial\Omega_i)} \backslash \partial\Omega$. $\Gamma$ is composed of subdomain edges, which are regarded as open subsets of $\Gamma$, and of the subdomain vertices, which are end points of edges.

The velocity and pressure finite element spaces ${\bf W}$ and $Q$ are decomposed into \[ {\bf W} = {\bf W}_I \bigoplus {\bf W}_{\Gamma}, \quad Q = Q_I \bigoplus Q_\Gamma, \] where ${\bf W}_I$ and $Q_I$ are direct sums of independent subdomain interior velocity spaces ${\bf W}^{(i)}_I$, and interior pressure spaces $Q^{(i)}_I$, respectively, i.e., $$ {\bf W}_I = \bigoplus_{i=1}^{N}{\bf W}^{(i)}_I, \quad Q_I = \bigoplus_{i=1}^{N}Q^{(i)}_I. $$ ${\bf W}_{\Gamma}$ and $Q_\Gamma$ are subdomain boundary velocity and pressure spaces, respectively. All functions in ${\bf W}_{\Gamma}$ and $Q_\Gamma$ are continuous across the subdomain boundaries $\Gamma$; their degrees of freedom are shared by neighboring subdomains.

To formulate our domain decomposition algorithm, we introduce a partially sub-assembled subdomain boundary velocity space $\vvec{{\widetilde{W}}}_{\Gamma}$, \[ \vvec{{\widetilde{W}}}_{\Gamma} = \vvec{W}_{\Pi} \bigoplus \vvec{W}_{\Delta} = \vvec{W}_{\Pi} \bigoplus \left( \bigoplus_{i=1}^N \vvec{W}^{(i)}_\Delta \right). \] Here, $\vvec{W}_{\Pi}$ is the continuous, coarse level, primal velocity space which is typically spanned by subdomain vertex nodal basis functions, and/or by interface edge basis functions with constant values, or with values of positive weights on these edges. The primal, coarse level velocity degrees of freedom are shared by neighboring subdomains. The complementary space $\vvec{W}_{\Delta}$ is the direct sum of independent subdomain dual interface velocity spaces $\vvec{W}_{\Delta}^{(i)}$, which correspond to the remaining subdomain boundary velocity degrees of freedom and are spanned by basis functions which vanish at the primal degrees of freedom. Thus, an element in the space $\vvec{{\widetilde{W}}}_{\Gamma}$ typically has a continuous primal velocity component and a discontinuous dual velocity component.

The functions ${\bf w}_{\Delta}$ in ${\bf W}_{\Delta}$ are in general not continuous across $\Gamma$. To enforce their continuity, we define a signed Boolean matrix $B_\Delta$ with entries in $\{0,1,-1\}$. On each row of $B_\Delta$, there are only two non-zero entries, $1$ and $-1$, corresponding to the same velocity degree of freedom on a subdomain boundary node, but attributed to two neighboring subdomains, such that for any ${\bf w}_{\Delta}$ in ${\bf W}_{\Delta}$, each row of $B_\Delta {\bf w}_{\Delta} = 0$ implies that these two degrees of freedom from the two neighboring subdomains are the same. When non-redundant continuity constraints are enforced, $B_\Delta$ has full row rank. We denote the range of $B_\Delta$ applied on ${\bf W}_{\Delta}$ by $\Lambda$, the vector space of the Lagrange multipliers.

In order to define a certain subdomain boundary scaling operator, we introduce a positive scaling factor $\delta^{\dagger}(x)$ for each node $x$ on the subdomain boundary $\Gamma$. Let ${\cal N}_x$ be the number of subdomains sharing $x$, and we simply take $\delta^{\dagger}(x) = 1/{\cal N}_x$. In applications, these scaling factors will depend on the heat conduction coefficient and the first of the Lam\'{e} parameters for scalar elliptic problems and the equations of linear elasticity, respectively; see \cite{kla02,kla06}. Given such scaling factors at the subdomain boundary nodes, we can define a scaled operator $B_{\Delta, D}$. We note that each row of $B_\Delta$ has only two nonzero entries, $1$ and $-1$, corresponding to the same subdomain boundary node $x$. Multiplying each entry by the scaling factor $\delta^{\dagger}(x)$ gives us $B_{\Delta, D}$.
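
To illustrate, consider a single dual velocity degree of freedom at a node $x$ shared by exactly two subdomains, so that ${\cal N}_x = 2$ and $\delta^{\dagger}(x) = 1/2$ (a simplified setting assumed here only for illustration). With the two local copies ordered as $(w_1, w_2)$, the corresponding rows are \[ B_\Delta = \left[ \begin{array}{cc} 1 & -1 \end{array} \right], \quad B_{\Delta, D} = \left[ \begin{array}{cc} 1/2 & -1/2 \end{array} \right], \] so that $B_\Delta (w_1, w_2)^T = w_1 - w_2$ is the jump across the interface and $B_{\Delta, D} B_\Delta^T = 1$. Moreover, for any scalar $a$, \[ B_\Delta^T B_{\Delta, D} \left[ \begin{array}{c} a \\ -a \end{array} \right] = B_\Delta^T a = \left[ \begin{array}{c} a \\ -a \end{array} \right], \] i.e., $B_\Delta^T B_{\Delta, D}$ leaves unchanged any vector whose two local values are negatives of each other.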

Solving the original fully assembled linear system~\EQ{matrix} is then equivalent to: find $\left( {\bf u}_I, ~p_I, ~{\bf u}_{\Delta}, ~{\bf u}_{\Pi}, ~p_{\Gamma}, ~\lambda \right) \in {\bf W}_I \bigoplus Q_I \bigoplus {\bf W}_{\Delta} \bigoplus {\bf W}_\Pi \bigoplus Q_\Gamma \bigoplus \Lambda$, such that \begin{equation} \label{equation:bigeq} \left[ \begin{array}{cccccc} A_{II} & B_{II}^T & A_{I \Delta} & A_{I \Pi} & B_{\Gamma I}^T & 0 \\[0.8ex] B_{II} & 0 & B_{I \Delta} & B_{I \Pi} & 0 & 0 \\[0.8ex] A_{\Delta I}& B_{I \Delta} ^T& A_{\Delta\Delta} & A_{\Delta \Pi} & B_{\Gamma \Delta}^T& B_{\Delta}^T\\[0.8ex] A_{\Pi I} & B_{I \Pi}^T & A_{\Pi \Delta} & A_{\Pi \Pi} & B_{\Gamma \Pi}^T & 0 \\[0.8ex] B_{\Gamma I}& 0 & B_{\Gamma \Delta} & B_{\Gamma \Pi} & 0 & 0 \\[0.8ex] 0 & 0 & B_{\Delta} & 0 & 0 & 0 \end{array} \right] \left[ \begin{array}{c} {\bf u}_I \\[0.8ex] p_I \\[0.8ex] {\bf u}_{\Delta} \\[0.8ex] {\bf u}_{\Pi} \\[0.8ex] p_{\Gamma} \\[0.8ex] \lambda \end{array} \right] = \left[ \begin{array}{l} {\bf f}_I \\[0.8ex] 0 \\[0.8ex] {\bf f}_{\Delta} \\[0.8ex] {\bf f}_\Pi \\[0.8ex] 0 \\[0.8ex] 0 \end{array} \right] \mbox{ , } \end{equation} where the sub-blocks in the coefficient matrix represent the restrictions of $A$ and $B$ in~\EQ{matrix} to appropriate subspaces. The leading three-by-three block can be made block diagonal with each diagonal block representing one independent subdomain problem.

Corresponding to the one-dimensional null space of~\EQ{matrix}, we consider a vector of the form $\left( {\bf u}_I,~p_I, ~{\bf u}_\Delta, ~ {\bf u}_\Pi, ~p_\Gamma, ~\lambda \right) = \left( {\bf 0},~1_{p_I},~{\bf 0}, ~{\bf 0}, ~1_{p_\Gamma}, \lambda \right)$, where $1_{p_I} \in Q_I$ and $1_{p_\Gamma} \in Q_\Gamma$ represent vectors with value $1$ on each entry. Substituting it into \EQ{bigeq} gives zero blocks on the right-hand side, except at the third block \begin{equation} \label{equation:fdelta} {\bf f}_{\Delta} = [B_{I\Delta}^T ~~ B_{\Gamma\Delta}^T]\left[\begin{array}{c}1_{p_I}\\ 1_{p_\Gamma}\end{array}\right]+B^T_\Delta \lambda. \end{equation} The first term on the right-hand side represents the line integral of the normal component of the velocity finite element basis functions across the subdomain boundary on neighboring subdomains. Corresponding to the same subdomain boundary velocity degree of freedom, their values on the two neighboring subdomains are negatives of each other. Therefore \[ [B_{I\Delta}^T ~~ B_{\Gamma\Delta}^T]\left[\begin{array}{c}1_{p_I}\\ 1_{p_\Gamma}\end{array}\right] = B^T_\Delta B_{\Delta,D}[B_{I\Delta}^T ~~ B_{\Gamma\Delta}^T]\left[\begin{array}{c}1_{p_I}\\ 1_{p_\Gamma}\end{array}\right], \] from which we know that $\vvec{f}_\Delta = {\bf 0}$, for \[ \lambda =-B_{\Delta,D}[B_{I\Delta}^T ~~ B_{\Gamma\Delta}^T]\left[\begin{array}{c}1_{p_I}\\ 1_{p_\Gamma}\end{array}\right]. \] Therefore, a basis of the one-dimensional null space of \EQ{bigeq} is \begin{equation} \label{equation:bignull} \left( \begin{array}{cccccc} 0, & 1_{p_I}, & 0, & 0, & 1_{p_\Gamma}, & -B_{\Delta,D}[B_{I\Delta}^T ~~ B_{\Gamma\Delta}^T]\left[\begin{array}{c}1_{p_I}\\ 1_{p_\Gamma}\end{array}\right] \end{array} \right). \end{equation}

\section{A reduced symmetric positive semi-definite system} \label{section:Gmatrix}

The system \EQ{bigeq} can be reduced to a Schur complement problem for the variables $\left(p_{\Gamma}, ~\lambda \right)$. Since the leading four-by-four block of the coefficient matrix in \EQ{bigeq} is invertible, the variables $\left( {\bf u}_I, ~p_I, ~{\bf u}_{\Delta}, ~{\bf u}_{\Pi} \right)$ can be eliminated and we obtain \begin{equation} \label{equation:spd} G \left[ \begin{array}{c} p_\Gamma \\[0.8ex] \lambda \end{array} \right] ~ = ~ g, \end{equation} where \begin{equation} \label{equation:Gmatrix} G = \left[ \begin{array}{cccc} B_{\Gamma I} & 0 & B_{\Gamma \Delta} & B_{\Gamma \Pi} \\[0.8ex] 0 & 0 & B_{\Delta} & 0 \end{array} \right] \left[ \begin{array}{cccc} A_{II} & B_{II}^T & A_{I \Delta} & A_{I \Pi} \\[0.8ex] B_{II} & 0 & B_{I \Delta} & B_{I \Pi} \\[0.8ex] A_{\Delta I} & B_{I \Delta} ^T & A_{\Delta\Delta} & A_{\Delta \Pi} \\[0.8ex] A_{\Pi I} & B_{I \Pi}^T & A_{\Pi \Delta} & A_{\Pi \Pi} \end{array} \right]^{-1} \left[ \begin{array}{cc} B_{\Gamma I}^T & 0 \\[0.8ex] 0 & 0 \\[0.8ex] B_{\Gamma \Delta}^T & B_{\Delta}^T \\[0.8ex] B_{\Gamma \Pi}^T & 0 \end{array} \right], \end{equation} and \begin{equation} \label{equation:gvec} g = \left[ \begin{array}{cccc} B_{\Gamma I} & 0 & B_{\Gamma \Delta} & B_{\Gamma \Pi} \\[0.8ex] 0 & 0 & B_{\Delta} & 0 \end{array} \right] \left[ \begin{array}{cccc} A_{II} & B_{II}^T & A_{I \Delta} & A_{I \Pi} \\[0.8ex] B_{II} & 0 & B_{I \Delta} & B_{I \Pi} \\[0.8ex] A_{\Delta I} & B_{I \Delta} ^T & A_{\Delta\Delta} & A_{\Delta \Pi} \\[0.8ex] A_{\Pi I} & B_{I \Pi}^T & A_{\Pi \Delta} & A_{\Pi \Pi} \end{array} \right]^{-1} \left[ \begin{array}{l} {\bf f}_I \\[0.8ex] 0 \\[0.8ex] {\bf f}_{\Delta} \\[0.8ex] {\bf f}_\Pi \end{array} \right]. \end{equation}
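
The elimination step can be sketched in compact form, using shorthand introduced here only for this remark. Denote by $\widetilde{A}$ the leading four-by-four block of the coefficient matrix in \EQ{bigeq}, by $B_C$ its last two block rows restricted to the first four block columns, and set $x = \left( {\bf u}_I, ~p_I, ~{\bf u}_{\Delta}, ~{\bf u}_{\Pi} \right)$, $y = \left( p_{\Gamma}, ~\lambda \right)$, and $\widetilde{f} = \left( {\bf f}_I, ~0, ~{\bf f}_{\Delta}, ~{\bf f}_\Pi \right)$. Then \EQ{bigeq} reads \[ \widetilde{A} x + B_C^T y = \widetilde{f}, \quad B_C x = 0. \] Solving the first equation for $x = \widetilde{A}^{-1} \left( \widetilde{f} - B_C^T y \right)$ and substituting into the second gives \[ B_C \widetilde{A}^{-1} B_C^T \, y = B_C \widetilde{A}^{-1} \widetilde{f}, \] which is \EQ{spd} with $G = B_C \widetilde{A}^{-1} B_C^T$ and $g = B_C \widetilde{A}^{-1} \widetilde{f}$, in agreement with \EQ{Gmatrix} and \EQ{gvec}.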