Datasets:

language:
- id
- su
- ja
- jv
- min
- br
- ga
- es
- pt
- 'no'
- mn
- ms
- zh
- ko
- ta
- ben
- si
- bg
- ro
- ru
- am
- orm
- ar
- ig
size_categories:
- 1K<n<10K
task_categories:
- question-answering
pretty_name: cvqa
dataset_info:
  features:
  - name: image
    dtype: image
  - name: ID
    dtype: string
  - name: Subset
    dtype: string
  - name: Question
    dtype: string
  - name: Translated Question
    dtype: string
  - name: Options
    sequence: string
  - name: Translated Options
    sequence: string
  - name: Label
    dtype: int64
  - name: Category
    dtype: string
  - name: Image Type
    dtype: string
  - name: Image Source
    dtype: string
  - name: License
    dtype: string
  splits:
  - name: test
    num_bytes: 4623568847.008
    num_examples: 9752
  download_size: 4558394647
  dataset_size: 4623568847.008
configs:
- config_name: default
  data_files:
  - split: test
    path: data/test-*

About CVQA

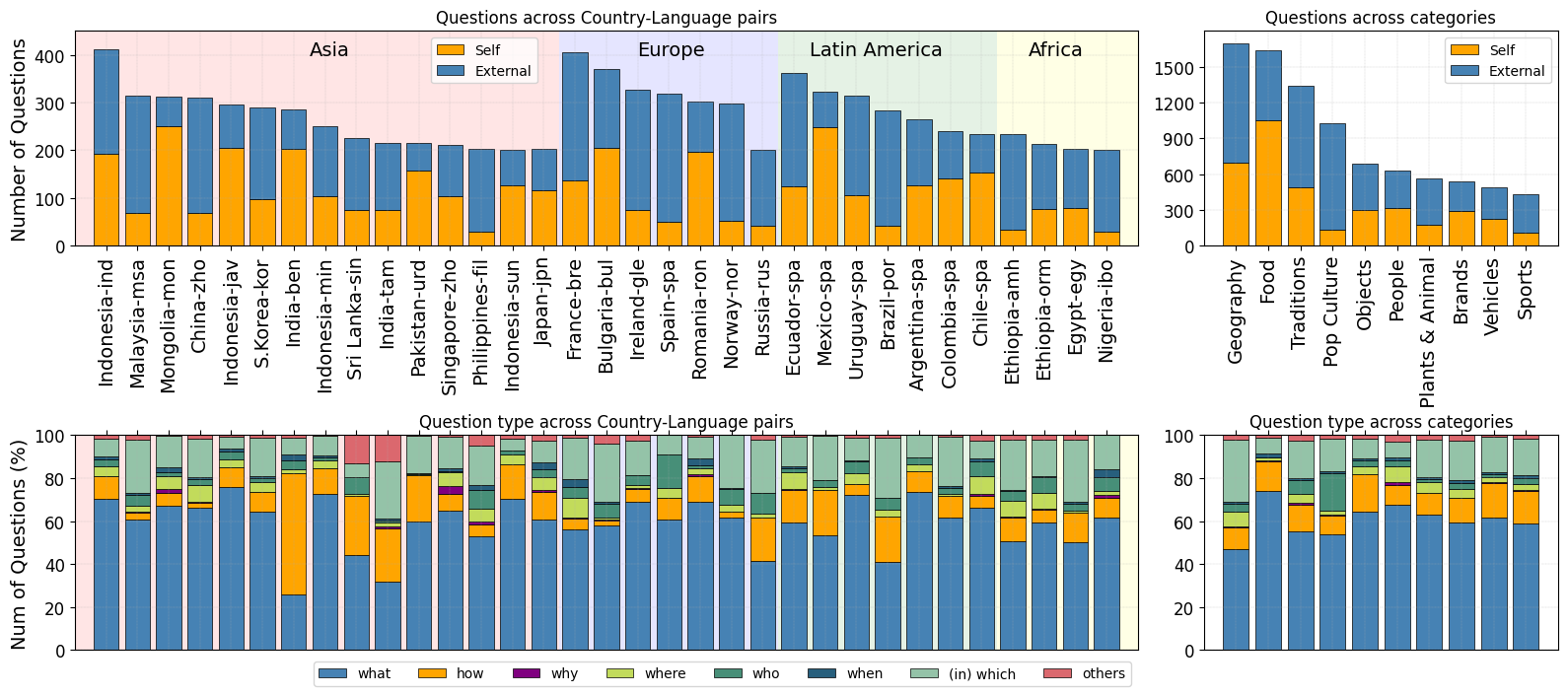

CVQA is a culturally diverse multilingual VQA benchmark consisting of over 9,000 questions from 33 country-language pairs. The questions in CVQA are written in both the native languages and English, and are categorized into 10 diverse categories.

Paper: https://arxiv.org/abs/2406.05967

This dataset is designed for use as a test set. To evaluate your model's performance, submit your predictions to the CVQA leaderboard. CVQA was constructed through a collaborative effort led by a team of researchers from MBZUAI. Read more about CVQA in the paper linked above.

Dataset Structure

Data Instances

An example from the test split looks as follows:

{'image': <PIL.JpegImagePlugin.JpegImageFile image mode=RGB size=2048x1536 at 0x7C3E0EBEEE00>,
 'ID': '5919991144272485961_0',
 'Subset': "('Japanese', 'Japan')",
 'Question': '写真に写っているキャラクターの名前は? ',
 'Translated Question': 'What is the name of the object in the picture? ',
 'Options': ['コスモ星丸', 'ミャクミャク', ' フリービー ', 'ハイバオ'],
 'Translated Options': ['Cosmo Hoshimaru','MYAKU-MYAKU','Freebie ','Haibao'],
 'Label': -1,
 'Category': 'Objects / materials / clothing',
 'Image Type': 'Self',
 'Image Source': 'Self-open',
 'License': 'CC BY-SA'
}

Data Fields

The data fields are:

- image: The image referenced by the question.
- ID: A unique ID for the given sample.
- Subset: A language-country pair.
- Question: The question in the local language.
- Translated Question: The question in English.
- Options: A list of possible answers to the question in the local language.
- Translated Options: A list of possible answers to the question in English.
- Label: Always -1. Refer to our leaderboard to obtain your model's performance.
- Category: The category of the given sample.
- Image Type: Self or External, indicating whether the image was taken by the annotator or comes from the internet.
- Image Source: If the image type is Self, this is either Self-open or Self-research_only, meaning that the image may be used for commercial purposes or for research purposes only, respectively. If the image type is External, this is the link to the external source.
- License: The license that applies to the image.
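As a rough illustration of how these fields fit together, the sketch below parses the Subset string and turns one record into a lettered multiple-choice prompt. The helper names `parse_subset` and `build_prompt`, and the prompt layout itself, are hypothetical and not part of CVQA; the sample record is copied from the example instance above with the image omitted.

```python
import ast
import string


def parse_subset(subset: str) -> tuple[str, str]:
    """Turn a Subset string such as "('Japanese', 'Japan')" into (language, country)."""
    language, country = ast.literal_eval(subset)
    return language, country


def build_prompt(record: dict, use_english: bool = False) -> str:
    """Format one record as a lettered multiple-choice prompt (hypothetical layout)."""
    question_key = "Translated Question" if use_english else "Question"
    options_key = "Translated Options" if use_english else "Options"
    # Some questions and options carry stray whitespace, so strip before formatting.
    lines = [record[question_key].strip()]
    for letter, option in zip(string.ascii_uppercase, record[options_key]):
        lines.append(f"{letter}. {option.strip()}")
    return "\n".join(lines)


# Record copied from the example instance (image omitted for brevity).
sample = {
    "Subset": "('Japanese', 'Japan')",
    "Question": "写真に写っているキャラクターの名前は? ",
    "Translated Question": "What is the name of the object in the picture? ",
    "Options": ["コスモ星丸", "ミャクミャク", " フリービー ", "ハイバオ"],
    "Translated Options": ["Cosmo Hoshimaru", "MYAKU-MYAKU", "Freebie ", "Haibao"],
    "Label": -1,
}

print(parse_subset(sample["Subset"]))
print(build_prompt(sample, use_english=True))
```

Because Label is always -1 in the released data, a prompt built this way can only be scored by submitting the model's predictions for evaluation.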

Dataset Creation

Source Data

The images in CVQA come either from existing external sources or from the contributors' own photos. This information is recorded in the 'Image Type' and 'Image Source' columns. Images from external sources retain their original licensing, whereas images from contributors are licensed according to each contributor's decision.

All the questions are hand-crafted by annotators.

Data Annotation

Data creation follows two general steps: question formulation and validation. During question formulation, annotators are asked to write a question, with one correct answer and three distractors. Questions must be culturally nuanced and relevant to the image. Annotators are asked to mask sensitive information and text that can easily give away the answers. During data validation, another annotator is asked to check and validate whether the images and questions adhere to the guidelines.
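The one-answer-plus-three-distractors layout suggests a simple sanity check before running a model over the data. The sketch below assumes every record carries exactly four options in both languages and a Label of -1; `check_record` is a hypothetical helper, not part of the CVQA tooling.

```python
def check_record(record: dict) -> list[str]:
    """Return a list of structural problems found in one record (empty if none)."""
    problems = []
    # One correct answer plus three distractors gives four options per language.
    for key in ("Options", "Translated Options"):
        if len(record[key]) != 4:
            problems.append(f"{key}: expected 4 entries, found {len(record[key])}")
    # The released test set hides the gold answer behind a placeholder label.
    if record["Label"] != -1:
        problems.append(f"Label: expected -1, found {record['Label']}")
    return problems


good = {
    "Options": ["コスモ星丸", "ミャクミャク", " フリービー ", "ハイバオ"],
    "Translated Options": ["Cosmo Hoshimaru", "MYAKU-MYAKU", "Freebie ", "Haibao"],
    "Label": -1,
}
bad = {
    "Options": ["a", "b", "c"],
    "Translated Options": ["a", "b", "c", "d"],
    "Label": 2,
}

print(check_record(good))
print(check_record(bad))
```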

You can learn more about our annotation protocol and guidelines in our paper.

Annotators

Annotators were required to be fluent speakers of the language in question and familiar with the cultures of the locations for which they provided data. Our annotators are predominantly native speakers, and around 89% have resided in the respective country for over 16 years.

Licensing Information

Note that each question has its own license. All data here is free to use for research purposes, but not every entry is permissible for commercial use.
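One way to separate entries by permitted use is to read the 'Image Type' and 'Image Source' columns described under Data Fields. The sketch below is a minimal illustration under that reading; the function name and its three return values are hypothetical, and for externally sourced images it only flags that the License column and the original source need to be checked.

```python
def commercial_use_status(record: dict) -> str:
    """Classify a record as 'allowed', 'research_only', or 'check_license'."""
    if record["Image Type"] == "Self":
        if record["Image Source"] == "Self-open":
            return "allowed"
        if record["Image Source"] == "Self-research_only":
            return "research_only"
    # External images keep their original licensing, so defer to the License column.
    return "check_license"


records = [
    {"Image Type": "Self", "Image Source": "Self-open", "License": "CC BY-SA"},
    {"Image Type": "Self", "Image Source": "Self-research_only", "License": "CC BY-SA"},
    # Hypothetical external entry; the URL is a placeholder, not a real CVQA source.
    {"Image Type": "External", "Image Source": "https://example.org/photo", "License": "CC BY"},
]

statuses = [commercial_use_status(r) for r in records]
print(statuses)
```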

Bibtex citation

@misc{romero2024cvqaculturallydiversemultilingualvisual,

title={CVQA: Culturally-diverse Multilingual Visual Question Answering Benchmark},

author={David Romero and Chenyang Lyu and Haryo Akbarianto Wibowo and Teresa Lynn and Injy Hamed and Aditya Nanda Kishore and Aishik Mandal and Alina Dragonetti and Artem Abzaliev and Atnafu Lambebo Tonja and Bontu Fufa Balcha and Chenxi Whitehouse and Christian Salamea and Dan John Velasco and David Ifeoluwa Adelani and David Le Meur and Emilio Villa-Cueva and Fajri Koto and Fauzan Farooqui and Frederico Belcavello and Ganzorig Batnasan and Gisela Vallejo and Grainne Caulfield and Guido Ivetta and Haiyue Song and Henok Biadglign Ademtew and Hernán Maina and Holy Lovenia and Israel Abebe Azime and Jan Christian Blaise Cruz and Jay Gala and Jiahui Geng and Jesus-German Ortiz-Barajas and Jinheon Baek and Jocelyn Dunstan and Laura Alonso Alemany and Kumaranage Ravindu Yasas Nagasinghe and Luciana Benotti and Luis Fernando D'Haro and Marcelo Viridiano and Marcos Estecha-Garitagoitia and Maria Camila Buitrago Cabrera and Mario Rodríguez-Cantelar and Mélanie Jouitteau and Mihail Mihaylov and Mohamed Fazli Mohamed Imam and Muhammad Farid Adilazuarda and Munkhjargal Gochoo and Munkh-Erdene Otgonbold and Naome Etori and Olivier Niyomugisha and Paula Mónica Silva and Pranjal Chitale and Raj Dabre and Rendi Chevi and Ruochen Zhang and Ryandito Diandaru and Samuel Cahyawijaya and Santiago Góngora and Soyeong Jeong and Sukannya Purkayastha and Tatsuki Kuribayashi and Thanmay Jayakumar and Tiago Timponi Torrent and Toqeer Ehsan and Vladimir Araujo and Yova Kementchedjhieva and Zara Burzo and Zheng Wei Lim and Zheng Xin Yong and Oana Ignat and Joan Nwatu and Rada Mihalcea and Thamar Solorio and Alham Fikri Aji},

year={2024},

eprint={2406.05967},

archivePrefix={arXiv},

primaryClass={cs.CV},

url={https://arxiv.org/abs/2406.05967},

}