| repo | number | state | title | body | created_at | closed_at | comments |

|---|---|---|---|---|---|---|---|

transformers | 13,328 | closed | Licenses for Helsinki-NLP models | Some of the models on the Hugging Face Hub under the Helsinki-NLP organization are listed under the Apache 2.0 license, but most are listed without a license.

Example of a model without a license:

https://huggingface.co/Helsinki-NLP/opus-mt-en-de

Only 371 models are tagged with a license here:

https://huggingface.co/models?license=license:apache-2.0&sort=downloads&search=helsinki-nlp

Is this omission intentional, or are all models in the repo actually intended to be Apache-licensed? If so, would it be possible to update them with license info? | 08-30-2021 09:49:59 | 08-30-2021 09:49:59 | @jorgtied might be able to answer this (and then we can programmatically update all models if needed)

Thanks!

(also cc @sshleifer and @patil-suraj for visibility)<|||||>

They come with a CC-BY 4.0 license.

Jörg


<|||||>Thanks a lot, Jörg 🙏. I'll update the repos programmatically tomorrow morning.<|||||>Done.

For reference, here's the script I've run (depends on https://github.com/huggingface/huggingface_hub/pull/339 to be able to run it using `huggingface_hub`): https://gist.github.com/julien-c/b2dcde5df5d5e41ad7c4b594cb54aba3
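One way a bulk update like this could rewrite each model card is sketched below. This is not the code from the gist: it assumes the license lives in the YAML front matter of each repo's `README.md` under a top-level `license:` key, that `cc-by-4.0` is the Hub identifier for CC-BY 4.0, and `set_license` is a hypothetical helper. Downloading and re-uploading the card is left out.

```python
def set_license(readme: str, license_id: str = "cc-by-4.0") -> str:
    """Return `readme` with `license: <license_id>` in its YAML front matter."""
    lines = readme.splitlines()
    if lines and lines[0].strip() == "---":
        # Locate the closing "---" of the existing front matter block.
        end = next((i for i in range(1, len(lines)) if lines[i].strip() == "---"), None)
        if end is None:
            raise ValueError("unterminated YAML front matter")
        # Drop any existing top-level license key; the new one is appended below.
        meta = [line for line in lines[1:end] if not line.startswith("license:")]
        body = lines[end + 1:]
    else:
        # No front matter yet: create one containing only the license.
        meta, body = [], lines
    return "\n".join(["---", *meta, f"license: {license_id}", "---", *body]) + "\n"


if __name__ == "__main__":
    card = "---\nlanguage: en\nlicense: apache-2.0\n---\n# opus-mt-en-de\n"
    print(set_license(card))
```

Rewriting the key rather than appending blindly keeps the helper idempotent, so re-running the batch job does not duplicate the `license:` line.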

And here's a partial list of the generated commits (full list attached to the gist):

```

https://huggingface.co/Helsinki-NLP/opus-mt-bg-en/commit/3a34359f5781368c7748219c2868ffd065f24df0

https://huggingface.co/Helsinki-NLP/opus-mt-bg-fi/commit/04d4dd3690cc730690da31b45745fb3f74198b0f

https://huggingface.co/Helsinki-NLP/opus-mt-bg-sv/commit/7f2c7cc3887492a080441266c63b20fd13497e56

https://huggingface.co/Helsinki-NLP/opus-mt-bi-en/commit/feb365f89ee1f47cad4f1581896b80ae88978983

https://huggingface.co/Helsinki-NLP/opus-mt-bi-es/commit/40001c75cc73df30ac2ffe45d8c3f224ee17781b

https://huggingface.co/Helsinki-NLP/opus-mt-bi-fr/commit/31712329599ad7b50590cd35299ccc8d94029122

https://huggingface.co/Helsinki-NLP/opus-mt-bi-sv/commit/fa443f611486bd359dee28a2ef896a03ca81e515

https://huggingface.co/Helsinki-NLP/opus-mt-bzs-en/commit/4a0238e6463445a99590c0abe7aed5f2f95e064d

https://huggingface.co/Helsinki-NLP/opus-mt-bzs-es/commit/b03449222edb29b8497af1df03c30782995912f5

https://huggingface.co/Helsinki-NLP/opus-mt-bzs-fi/commit/26a623904cfb745bdc48f4e62f4de8ec0f0f0bbb

https://huggingface.co/Helsinki-NLP/opus-mt-bzs-fr/commit/5f69cdba6de378f61042d90ed0a19f3047837ea1

https://huggingface.co/Helsinki-NLP/opus-mt-bzs-sv/commit/2a12941aeaeaa78979240cfcb1d63e44958af76f

https://huggingface.co/Helsinki-NLP/opus-mt-ca-en/commit/22113f5e0e8e89677d6e0142e55c85402eecb455

https://huggingface.co/Helsinki-NLP/opus-mt-ca-es/commit/3b93f0ccce95f7d8c7a78d56ec5c658271f6d244

https://huggingface.co/Helsinki-NLP/opus-mt-ceb-es/commit/94ff5e6902541d95fc1890e7e5e185477d922271

https://huggingface.co/Helsinki-NLP/opus-mt-ceb-fi/commit/8c5cdaa45a8ef959061c6d97a7f118e2714725bc

https://huggingface.co/Helsinki-NLP/opus-mt-ceb-fr/commit/90d773c1774988007f9fd8f44477de8d5ee310b6

https://huggingface.co/Helsinki-NLP/opus-mt-ceb-sv/commit/bf1810fb698cbeb2a7beeecb96917557ece3158f

https://huggingface.co/Helsinki-NLP/opus-mt-chk-en/commit/d9a7fad4fdc70b734457a5eee20835d8899e7415

https://huggingface.co/Helsinki-NLP/opus-mt-chk-es/commit/c41790360ecb70331ba71c881db1c592b0923502

https://huggingface.co/Helsinki-NLP/opus-mt-chk-fr/commit/6db3456d236063ccbb97abdea52dc574da37a898

https://huggingface.co/Helsinki-NLP/opus-mt-chk-sv/commit/de1bf0196adc388148bb52c5388fd795c46191b6

https://huggingface.co/Helsinki-NLP/opus-mt-crs-de/commit/f0552c0fcef8dc8b03acc5ecf9c170a3a9356ca1

https://huggingface.co/Helsinki-NLP/opus-mt-crs-en/commit/7ee4bb979dd28886b7d98f890298c4548e84a847

https://huggingface.co/Helsinki-NLP/opus-mt-crs-es/commit/808d78b9c72092991bba047542192f26c3bff3b8

https://huggingface.co/Helsinki-NLP/opus-mt-crs-fi/commit/e61325e6904fe87fbad3e6d978dca63fb4e766ba

https://huggingface.co/Helsinki-NLP/opus-mt-crs-fr/commit/341ed6222bcb84709acf9b8a3d5d57991b350c5e

https://huggingface.co/Helsinki-NLP/opus-mt-crs-sv/commit/a338a7e5ef9b876f1edc63b0af6c6cd11e6a7611

https://huggingface.co/Helsinki-NLP/opus-mt-cs-de/commit/f5a1b1443dc5381df3a0a83d790b3c2eb16cf811

https://huggingface.co/Helsinki-NLP/opus-mt-cs-en/commit/186ab5dff3e18ca970a492525c0ca4b398d525ab

https://huggingface.co/Helsinki-NLP/opus-mt-cs-fi/commit/d60a357cfb2c4d1df38b43f2fafe34dbff0199cf

https://huggingface.co/Helsinki-NLP/opus-mt-cs-fr/commit/3040852ec5404c1da928602fa1ec636b6ddf9a2e

https://huggingface.co/Helsinki-NLP/opus-mt-cs-sv/commit/ab967fe66d1c0d4f9403ae0b4c97c06ae8947b89

https://huggingface.co/Helsinki-NLP/opus-mt-csg-es/commit/9742b7a5ed07cb69c4051567686b2e1ace50b061

https://huggingface.co/Helsinki-NLP/opus-mt-csn-es/commit/c3086bbf7d9101947a5a07d286cb9ccc533f9e0a

https://huggingface.co/Helsinki-NLP/opus-mt-cy-en/commit/775c85089bc7a55c8203bff544e9fa34cd4ba7ca

https://huggingface.co/Helsinki-NLP/opus-mt-da-de/commit/2e4d10f7054f579178b167e5082b0e57726eee44

https://huggingface.co/Helsinki-NLP/opus-mt-da-en/commit/8971eb3839ec41bddd060128b9b83038bb43fd96

https://huggingface.co/Helsinki-NLP/opus-mt-da-es/commit/59b50e55d16babe69b0facb1fb1c4dfb175328fe

https://huggingface.co/Helsinki-NLP/opus-mt-da-fi/commit/a2e614cb32e2b0fa09c5c1dcaba8122d9d647b18

https://huggingface.co/Helsinki-NLP/opus-mt-da-fr/commit/186e4c938bc1744a9ddbd67073fe572c93a494c8

https://huggingface.co/Helsinki-NLP/opus-mt-de-ZH/commit/93d4bc065a572a35ab1f1110ffeccc9740444a42

https://huggingface.co/Helsinki-NLP/opus-mt-de-ase/commit/09e461fdf799287e13c7c48df0573fd89273b1bd

https://huggingface.co/Helsinki-NLP/opus-mt-de-bcl/commit/628737ef8907e7d2db7989660f413420cfad41f5

https://huggingface.co/Helsinki-NLP/opus-mt-de-bi/commit/7c40aed9a4611cec93aa9560f2bb99e49e895789

https://huggingface.co/Helsinki-NLP/opus-mt-de-bzs/commit/30ed515b4d391e1f98cefdbf5f6fcc340c979fce

https://huggingface.co/Helsinki-NLP/opus-mt-de-crs/commit/b9de144126655b973cd8cf74a5651ac999e551a2

https://huggingface.co/Helsinki-NLP/opus-mt-de-cs/commit/683666e07ca027d76af9ac23c0902b29084a0d18

https://huggingface.co/Helsinki-NLP/opus-mt-de-da/commit/bccfbee95d55ba1333fd447f67574453eba5d948

https://huggingface.co/Helsinki-NLP/opus-mt-de-de/commit/7be6c82bcda2cf76f48ba1f730baeeebcbcb172d

https://huggingface.co/Helsinki-NLP/opus-mt-de-ee/commit/42218c447d3da4a8836adb6de710d06bbad480c9

https://huggingface.co/Helsinki-NLP/opus-mt-de-efi/commit/1309ccb2f74acba991a654adf4ff1363a577d51b

https://huggingface.co/Helsinki-NLP/opus-mt-de-el/commit/ad3da773c26cf72780d46b4a75333226a19760e4

https://huggingface.co/Helsinki-NLP/opus-mt-de-en/commit/6137149949ac01d19d8eeef6e35d32221dabc8e4

https://huggingface.co/Helsinki-NLP/opus-mt-de-eo/commit/9188e5326cba934d553fcb0150a9e88de140a286

https://huggingface.co/Helsinki-NLP/opus-mt-de-es/commit/d6bff091731341b977e4ca7294d2c309a2ca11e4

https://huggingface.co/Helsinki-NLP/opus-mt-de-et/commit/55157cd448f864a87992b80aef23f95546a0280c

https://huggingface.co/Helsinki-NLP/opus-mt-de-fi/commit/bbd50eeefdc1e26d75f6a806495192b55878c04a

https://huggingface.co/Helsinki-NLP/opus-mt-de-fj/commit/596580a8225fb340357d25cd38639fed5d662681

https://huggingface.co/Helsinki-NLP/opus-mt-de-fr/commit/6aa8c4011488513f5575b235ce75d6d795d90b35

https://huggingface.co/Helsinki-NLP/opus-mt-de-gaa/commit/0722f96d5ce2e9fd6b2e0df3987105a78d062d1c

https://huggingface.co/Helsinki-NLP/opus-mt-de-gil/commit/56bb25bf50c7b8268c9fd1ec8f8124e54631af59

https://huggingface.co/Helsinki-NLP/opus-mt-de-guw/commit/7a441fe0e9e7c4c430889b46b3b4541005c93bb1

https://huggingface.co/Helsinki-NLP/opus-mt-de-ha/commit/5a241c2d7ce3f36d42b7bbd7f563bd0da651d480

https://huggingface.co/Helsinki-NLP/opus-mt-de-he/commit/44d42278e67bf34bd1c0a8dcca06c6525eca6263

https://huggingface.co/Helsinki-NLP/opus-mt-de-hil/commit/4f0571df9d70e36af0435f1368a03cd059750c40

https://huggingface.co/Helsinki-NLP/opus-mt-de-ho/commit/6f07189ef39e3e609a24c45936c40e30fd6b3ef8

https://huggingface.co/Helsinki-NLP/opus-mt-de-hr/commit/d1b7e5205290af5c36e8be8cd6d73f6b5d9bba5f

https://huggingface.co/Helsinki-NLP/opus-mt-de-ht/commit/2d296463f4735961ca4512271b415aacf7c0ba91

https://huggingface.co/Helsinki-NLP/opus-mt-de-hu/commit/4b30440320ea86d33b6927fe70c46e20f671da86

https://huggingface.co/Helsinki-NLP/opus-mt-de-ig/commit/862152c08618d17ff651fc7df9145d81519ba9f7

https://huggingface.co/Helsinki-NLP/opus-mt-de-ilo/commit/e9260adbaa77c85f5a0203460399c1cec12357c1

https://huggingface.co/Helsinki-NLP/opus-mt-de-iso/commit/d3d1caff0521142085ee7faa07112ce593803734

https://huggingface.co/Helsinki-NLP/opus-mt-de-it/commit/cd2319a082a7be0dd471fe62701ae557a71833c2

https://huggingface.co/Helsinki-NLP/opus-mt-de-kg/commit/495d68528e086b0ccea38761513241152e4f217f

https://huggingface.co/Helsinki-NLP/opus-mt-de-ln/commit/05dd393385fb99c42d5849c22cef67931922eff3

https://huggingface.co/Helsinki-NLP/opus-mt-de-loz/commit/efc9fe11206c281704056c9c3eda0b42f1cf43a0

https://huggingface.co/Helsinki-NLP/opus-mt-de-lt/commit/e0105109d696baf37e2a4cca511a46f59fa97707

https://huggingface.co/Helsinki-NLP/opus-mt-de-lua/commit/319b94b75439b497c0860a3fc80a34ecacb597a0

https://huggingface.co/Helsinki-NLP/opus-mt-de-mt/commit/0d71c2c09e3838d7276288da102f7e66d2d24032

https://huggingface.co/Helsinki-NLP/opus-mt-de-niu/commit/6b15b26f7d7752bfde0368809479c544880174cd

https://huggingface.co/Helsinki-NLP/opus-mt-de-nl/commit/da037ec1ad70f9d79735c287d418c00158b55b68

https://huggingface.co/Helsinki-NLP/opus-mt-de-nso/commit/fbd9a40fa66f610b52855ad16263d4ea32c8bd7c

https://huggingface.co/Helsinki-NLP/opus-mt-de-ny/commit/595549133dfde470a3ea04e93674ff1c90c5ac5a

https://huggingface.co/Helsinki-NLP/opus-mt-de-pag/commit/f03679f6d038388c5a0a40918acc4bf6406cac28

https://huggingface.co/Helsinki-NLP/opus-mt-de-pap/commit/6c57622b7e815f9e1cb24f6e1f9a09b58627f0b7

https://huggingface.co/Helsinki-NLP/opus-mt-de-pis/commit/ddfb8177ff0559adc697171c2c4c7704921bd4ec

https://huggingface.co/Helsinki-NLP/opus-mt-de-pl/commit/67458bb97566391315397d8e0aa5f14f774bd238

https://huggingface.co/Helsinki-NLP/opus-mt-de-pon/commit/d18f29c5ef79abbca40d53e34b94c8514ffd6235

https://huggingface.co/Helsinki-NLP/opus-mt-ee-de/commit/5e01b793901fec6acbcaf6b35e9e0873d7190147

https://huggingface.co/Helsinki-NLP/opus-mt-ee-en/commit/a69e3d990dc8b84d8d727b9502c20511a50233ed

https://huggingface.co/Helsinki-NLP/opus-mt-ee-es/commit/976bee3eb2616b35a55d6e6467ca2d211ba68d49

https://huggingface.co/Helsinki-NLP/opus-mt-ee-fi/commit/8547cfc9f2c5ef75f00c78ef563eef59fc0204ee

https://huggingface.co/Helsinki-NLP/opus-mt-ee-fr/commit/066e2a847a6098c2a999d6db7a1f50b878578c8e

https://huggingface.co/Helsinki-NLP/opus-mt-ee-sv/commit/8170bc4af3be1e3633e37ef4180cada5eb177b2c

https://huggingface.co/Helsinki-NLP/opus-mt-efi-de/commit/cedf2694630c1ee2ea1d75dffead02c4dc49ef80

https://huggingface.co/Helsinki-NLP/opus-mt-efi-en/commit/0bf437954f943da3d49a172b6f91aa7157c3525a

https://huggingface.co/Helsinki-NLP/opus-mt-efi-fi/commit/02877c2ef68a205047cde71b4b376ffcc565e4a7

https://huggingface.co/Helsinki-NLP/opus-mt-efi-fr/commit/7b528531e45c04716015e7c211ef2b74817ff438

https://huggingface.co/Helsinki-NLP/opus-mt-efi-sv/commit/c02cd07b017c7c71d4583dbd6050dfee383a1cf0

https://huggingface.co/Helsinki-NLP/opus-mt-el-fi/commit/aef52d8c3cc2129847cf9ea84c62a5e7b9bb41bc

https://huggingface.co/Helsinki-NLP/opus-mt-el-fr/commit/b00ba91c42b2f20768228b179f01274048158001

https://huggingface.co/Helsinki-NLP/opus-mt-el-sv/commit/e8894cf2f5713e1cc68fe7710636ecc4b4dc99d7

https://huggingface.co/Helsinki-NLP/opus-mt-en-CELTIC/commit/69fe75e42d848a1b30f968800ff94783e3ed8fe2

https://huggingface.co/Helsinki-NLP/opus-mt-en-ROMANCE/commit/92870a2f094c444064c7a568c25eef6971e07b03

https://huggingface.co/Helsinki-NLP/opus-mt-en-af/commit/c6a79302395db2b59af8b15f4016081a66095ace

https://huggingface.co/Helsinki-NLP/opus-mt-en-bcl/commit/fdda7e146d903da0f4da8895800c52bdcfa07ecc

https://huggingface.co/Helsinki-NLP/opus-mt-en-bem/commit/7d0c704d934f400158d645345a7ed27c6cfe73e8

https://huggingface.co/Helsinki-NLP/opus-mt-en-ber/commit/cad15de24b5374102d6dd95619d0c4011102dcce

https://huggingface.co/Helsinki-NLP/opus-mt-en-bi/commit/b3e9ed52697fffab06a733a23c37d843a3464976

https://huggingface.co/Helsinki-NLP/opus-mt-en-bzs/commit/2b7c7d345202d17dd7f42850eae846e4d11b6fda

https://huggingface.co/Helsinki-NLP/opus-mt-en-ca/commit/81d80b5921b66885e45c3b27615752da4b511b40

https://huggingface.co/Helsinki-NLP/opus-mt-en-ceb/commit/a5e0a21b4e9db37945be9cd5977573b53cd95999

https://huggingface.co/Helsinki-NLP/opus-mt-en-chk/commit/a57e025c3f8a7a9b20968190b6a6db234ef1541a

https://huggingface.co/Helsinki-NLP/opus-mt-en-crs/commit/1f25af1f9d1c0680005a9f0d16ed8bb412784c32

https://huggingface.co/Helsinki-NLP/opus-mt-en-cs/commit/7cba4a7e3daff13c48fc2fcd740ef0711b1dd075

https://huggingface.co/Helsinki-NLP/opus-mt-en-cy/commit/038aee0304224b119582e0258c0dff2bc1c1c411

https://huggingface.co/Helsinki-NLP/opus-mt-en-da/commit/9786126ba34f1f86636af779ef13557bd9d1b246

https://huggingface.co/Helsinki-NLP/opus-mt-en-de/commit/6c00b328d3da7183582a4928b638b24a4a14a79f

https://huggingface.co/Helsinki-NLP/opus-mt-en-ee/commit/45d6ef20f2aac6de3ad001d7452ff5243f25f219

https://huggingface.co/Helsinki-NLP/opus-mt-en-efi/commit/08b5f78e0bb66e8e1940fe1eb976a5b9de276f84

https://huggingface.co/Helsinki-NLP/opus-mt-en-el/commit/cd8ab0896f1d0598007ba5266a0a30884fed71de

https://huggingface.co/Helsinki-NLP/opus-mt-en-eo/commit/20a8920034dfbb6b2e5909f5065a32d6b1b5990b

https://huggingface.co/Helsinki-NLP/opus-mt-en-et/commit/f696ce2db3f802cf4dd723ea97b2af1eda90c7e9

https://huggingface.co/Helsinki-NLP/opus-mt-en-fi/commit/627fe90df5c335be61521cd89c68f62e2bdce050

https://huggingface.co/Helsinki-NLP/opus-mt-en-fj/commit/2c98ee541817946993595aa514f12804b6c95efc

https://huggingface.co/Helsinki-NLP/opus-mt-en-fr/commit/a8fbc1c711cb6263e8a20c5229b210cc05c57ff0

https://huggingface.co/Helsinki-NLP/opus-mt-en-gaa/commit/2f75e3d8bc190f8e0e412beecf00e564c40e33c4

```<|||||>Looking great! Thank you for the quick resolution of this!<|||||>I have a question: the [OPUS-MT GitHub page](https://github.com/Helsinki-NLP/OPUS-MT-train/tree/master) says that all pretrained models are under the CC-BY 4.0 license, but on Hugging Face many OPUS-MT models have the Apache 2.0 license. For example, [this model](https://huggingface.co/Helsinki-NLP/opus-mt-it-fr) on Hugging Face has the Apache 2.0 license, while the download from the OPUS-MT GitHub page ([here](https://github.com/Helsinki-NLP/OPUS-MT-train/tree/master/models/it-fr)) includes the CC-BY 4.0 license among its files. Is this an error, or did I miss something?<|||||>Hi @niedev, thanks for raising this! Could you open a discussion on the respective checkpoint pages on the hub? |

transformers | 13,327 | closed | Wrong weight initialization for TF t5 model | ## Environment info

- `transformers` version: 4.9.2

- Platform: Linux-4.15.0-142-generic-x86_64-with-glibc2.29

- Python version: 3.8.10

- PyTorch version (GPU?): not installed (NA)

- Tensorflow version (GPU?): 2.5.0 (True)

- Flax version (CPU?/GPU?/TPU?): 0.3.4 (gpu)

- Jax version: 0.2.18

- JaxLib version: 0.1.69

- Using GPU in script?: Yes

- Using distributed or parallel set-up in script?: yes

### Who can help

@patil-suraj

@patrickvonplaten

## Information

Model I am using: Pre-training T5-base

The problem arises when using:

* [ ] the official example scripts: (give details below)

* [X] my own modified scripts: (give details below): added the T5 data collator and a Keras Adafactor optimizer to run_mlm.py

The task I am working on is:

* [X] my own task or dataset: pre-training T5-base on the OSCAR dataset (as in the Flax example)

## Expected behavior

Before I changed the weight initialization to a normal distribution (as in `transformers/src/transformers/models/t5/modeling_flax_t5.py`), the loss got stuck at 4.5 (unlike the Flax behaviour). After updating the initialization, I get the same behaviour as in Flax and reach a loss below 2.

Example:

In the Flax code (class `FlaxT5DenseReluDense`, lines 95-96):

```python
wi_init_std = self.config.initializer_factor * (self.config.d_model ** -0.5)
wo_init_std = self.config.initializer_factor * (self.config.d_ff ** -0.5)
```

In the TF code, the default Keras initializer is used instead. My suggested fix:

```python
wi_initializer = tf.keras.initializers.RandomNormal(
    mean=0.0, stddev=config.initializer_factor * (config.d_model ** -0.5)
)
wo_initializer = tf.keras.initializers.RandomNormal(
    mean=0.0, stddev=config.initializer_factor * (config.d_ff ** -0.5)
)
self.wi = tf.keras.layers.Dense(config.d_ff, use_bias=False, name="wi", kernel_initializer=wi_initializer)
self.wo = tf.keras.layers.Dense(config.d_model, use_bias=False, name="wo", kernel_initializer=wo_initializer)
```

The same applies to the initialization of all other weights and embeddings.
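The standard deviations involved can be worked out up front. The sketch below assumes the formulas used by PyTorch's `T5PreTrainedModel._init_weights` as I recall them (the `wi`/`wo` terms match the Flax lines quoted above; please check the attention and embedding terms against your installed version) and uses T5-base sizes (`d_model=768`, `d_ff=3072`, `d_kv=64`, `num_heads=12`) as the example. `t5_init_stddevs` is a hypothetical helper; each value could be passed as `stddev` to `tf.keras.initializers.RandomNormal`.

```python
from typing import Dict


def t5_init_stddevs(
    d_model: int, d_ff: int, d_kv: int, num_heads: int, initializer_factor: float = 1.0
) -> Dict[str, float]:
    """Std-devs of the zero-mean normal initializers for T5 weight matrices."""
    f = initializer_factor
    return {
        "shared_embedding": f * 1.0,
        "ff_wi": f * d_model ** -0.5,  # same as wi_init_std in the Flax code
        "ff_wo": f * d_ff ** -0.5,     # same as wo_init_std in the Flax code
        "attn_q": f * (d_model * d_kv) ** -0.5,
        "attn_k": f * d_model ** -0.5,
        "attn_v": f * d_model ** -0.5,
        "attn_o": f * (num_heads * d_kv) ** -0.5,
    }


if __name__ == "__main__":
    # T5-base dimensions.
    for name, std in t5_init_stddevs(768, 3072, 64, 12).items():
        # In TF: tf.keras.initializers.RandomNormal(mean=0.0, stddev=std)
        print(f"{name}: {std:.5f}")
```

For T5-base, `wi` gets a std of about 0.036 and `wo` about 0.018, far from what a Glorot-style default would give for these shapes, which is consistent with the training difference reported above.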

| 08-30-2021 07:50:28 | 08-30-2021 07:50:28 | I agree! Would you like to open a PR to fix it? :-)<|||||>Will try to do it in the coming days<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored. |

transformers | 13,326 | closed | Wav2Vec2ForCTC is not BaseModelOutput | On the website of huggingface: https://huggingface.co/transformers/model_doc/wav2vec2.html#wav2vec2forctc

it says Wav2Vec2ForCTC returns a `BaseModelOutput`.

But it actually returns a `CausalLMOutput`, which has no `last_hidden_state` attribute (its fields are `loss`, `logits`, `hidden_states` and `attentions`).

Its return type should be documented as `CausalLMOutput`: https://huggingface.co/transformers/main_classes/output.html#causallmoutput

**The description of the returns of Wav2Vec2ForCTC on the website:**

<img width="1031" alt="130951325-9fd86ab4-4b2a-4965-b4bf-88b2cc556b46" src="https://user-images.githubusercontent.com/15671418/131300701-11106f9c-ab2a-42b8-8c35-9a7418e37474.png">

**The error when accessing the last hidden state of Wav2Vec2ForCTC:**

<img width="663" alt="130951343-eb4655a3-af57-4a2f-a387-0fa628f854dc" src="https://user-images.githubusercontent.com/15671418/131300840-107609a5-6d30-4d89-a0e1-ae003feb3934.png">

**The description of CausalLMOutput, which Wav2Vec2ForCTC should return:**

<img width="1077" alt="130951334-093e1df0-6207-45f0-b803-76b276f17f7b" src="https://user-images.githubusercontent.com/15671418/131300953-a8eaf063-db8d-46f3-836e-4baf534e1554.png">

@patrickvonplaten | 08-30-2021 07:20:28 | 08-30-2021 07:20:28 | Hi @patrickvonplaten , I read the source code and found the wav2vecctc only conducts word-level tokenization. Does it support ctc fine-tuning on grapheme level or character level? Thanks.<|||||>Oh yeah that's a typo in the docs indeed, but it's already fixed on master I think :-)

See: https://huggingface.co/transformers/master/model_doc/wav2vec2.html#wav2vec2forctc<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored. |

transformers | 13,325 | closed | Handling tag with no prefix for aggregation_strategy in TokenClassificationPipeline | # 🚀 Feature request

Previously, the `grouped_entities` parameter would handle entities with no prefix (like "PER" instead of "B-PER") and would correctly group similar adjacent entities. With the new `aggregation_strategy` parameter, this is no longer the case.

## Motivation

In some simple models, the prefix adds complexity that is not always required. Because of this, we are forced to add a prefix to make aggregation work even when the model does not need it.

## Your contribution

| 08-29-2021 21:09:09 | 08-29-2021 21:09:09 | cc @Narsil <|||||>Hi @jbpolle what do you mean `correctly` ? We should not have changed behavior there, but indeed it's not part of the testing right now, so there might be some issues.

Could you provide a small script on an older transformers version that displays the intended behavior ?<|||||>Hello Nicolas,

Here is what it looks like now in the "Hosted inference API" panel:

This is from my model here:

https://huggingface.co/Jean-Baptiste/camembert-ner?text=Je+m%27appelle+jean-baptiste+et+je+vis+%C3%A0+montr%C3%A9al

In the previous version, it would display "jean-baptiste PER" and "Montreal LOC".

However, I renamed my entities in the config.json file to I-PER, I-ORG, …, which I believe should fix this issue.

Before that, the entities were just PER, LOC, …

I hope this helps,

Thank you,

Jean-Baptiste


<|||||>Adding missing screenshot in previous message:

<img width="541" alt="PastedGraphic-1" src="https://user-images.githubusercontent.com/51430205/131381199-64fa35a0-05dd-4233-a3de-e53307bd6f71.png">

<|||||>I went back to `4.3.3` and I can see that the splitting was exactly the same (no grouping when tags didn't include B- or I-).

The fact that the widget's cache probably wasn't cleared is still an issue; I'm clearing it.<|||||>I was working on 4.3.2 and here is how this was working:

But now in 4.9:

And even when playing with the new `aggregation_strategy` parameter, I can't get the previous results.

Anyway, it's fixed in my case by adding the prefix, so don't hesitate to close the ticket.

Thank you, <|||||>OK, I must have tested it wrong before. I can confirm: this is indeed because the default for tags wasn't made explicit, but it did behave as `I-`.

Code was:

```python

entity["entity"].split("-")[0] != "B"

```

Which resolves to `"PER" != "B"` (so an unprefixed tag was treated as a continuation), whereas now the default tag is explicitly set to `B-`:

https://github.com/huggingface/transformers/blob/master/src/transformers/pipelines/token_classification.py#L413
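To make the two behaviours concrete, here is a simplified pure-Python sketch of tag parsing and grouping in which an unprefixed tag defaults to `I-` (the pre-June behaviour described above). It is not the pipeline's implementation: it ignores subword merging, scores and character offsets, and assumes each token is a dict with hypothetical keys `"word"` and `"entity"`. `get_tag` and `group_entities` are illustrative helpers.

```python
from typing import Dict, List, Optional, Tuple


def get_tag(entity_name: str) -> Tuple[str, str]:
    """Split a label like "B-PER" into ("B", "PER")."""
    if entity_name.startswith("B-"):
        return "B", entity_name[2:]
    if entity_name.startswith("I-"):
        return "I", entity_name[2:]
    # No B-/I- prefix (e.g. plain "PER"): treat it as a continuation,
    # mirroring the old `split("-")[0] != "B"` check.
    return "I", entity_name


def group_entities(tokens: List[Dict[str, str]]) -> List[Dict[str, Optional[str]]]:
    """Merge consecutive tokens that share an entity type.

    A new group starts on a "B" tag or when the entity type changes;
    "O" tokens close the current group and are dropped.
    """
    groups: List[Dict[str, Optional[str]]] = []
    words: List[str] = []
    current: Optional[str] = None

    def flush() -> None:
        if words:
            groups.append({"entity_group": current, "word": " ".join(words)})
            words.clear()

    for tok in tokens:
        if tok["entity"] == "O":
            flush()
            current = None
            continue
        bi, tag = get_tag(tok["entity"])
        if bi == "B" or tag != current:
            flush()
        words.append(tok["word"])
        current = tag
    flush()
    return groups


if __name__ == "__main__":
    toks = [{"word": "Jean", "entity": "PER"}, {"word": "Baptiste", "entity": "PER"},
            {"word": "vit", "entity": "O"}, {"word": "Montréal", "entity": "LOC"}]
    print(group_entities(toks))
```

With the default switched to `B-`, every unprefixed token would start its own group, which matches the regression reported in this issue.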

The fix would be easy, but I am unsure about reverting this now that it was merged on June 6th.

Tagging a core maintainer for advice on how to handle this. @LysandreJik

We would also need to run some numbers on the Hub to get an idea of the number of affected repos.<|||||>I would fix it to behave the same as it was in v4.3.2, as this is the expected behavior when using `grouped_entities`.<|||||>PR opened. https://github.com/huggingface/transformers/pull/13493 |

transformers | 13,324 | closed | distilbert-flax | # What does this PR do?

DistilBert Flax



## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests), Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the [documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and [here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag members/contributors who may be interested in your PR.


@VictorSanh @patrickvonplaten | 08-29-2021 11:09:54 | 08-29-2021 11:09:54 | Great Great job @kamalkraj!

I think the only major thing left to update is the docs in the modeling file (at the moment they look like the PyTorch docs, but they should be Flax :-))

<|||||>@patrickvonplaten

Thanks for the review.

I made the changes according to your review. <|||||>Hi @kamalkraj, I'm also really interested in that PR - thanks for adding it :hugs:

Do you also plan to add a script for the distillation process (like it is done in the ["old" script](https://github.com/huggingface/transformers/tree/master/examples/research_projects/distillation))? I would like to re-distill some of my previous DistilBERT models (I don't have access to multi-GPU setups, only to TPUs at the moment).<|||||>Hi @stefan-it,

I will go through the scripts and ping you.

I have multi-GPU access. Which TPU do you use? v3-8 ?<|||||>```

JAX_PLATFORM_NAME=cpu RUN_SLOW=1 pytest tests/test_modeling_flax_distilbert.py::FlaxDistilBertModelIntegrationTest::test_inference_no_head_absolute_embedding

```

passes and the code looks good :-) Ready to merge IMO :tada: !

@patil-suraj the slow test doesn't pass on TPU since DistilBERT has pretty extreme activations in the forward pass, like a couple of other models. In general, we need to think a bit about how to adapt the slow tests depending on whether they're run on TPU or not...<|||||>Great work @kamalkraj! |

transformers | 13,323 | closed | Documentation mismatch in Preprocessing data | ## Environment info


- `transformers` version: 4.10.0.dev0

- Platform: macOS-10.16-x86_64-i386-64bit

- Python version: 3.9.2

- PyTorch version (GPU?): 1.9.0 (False)

- Tensorflow version (GPU?): 2.6.0 (False)

- Flax version (CPU?/GPU?/TPU?): 0.3.4 (cpu)

- Jax version: 0.2.19

- JaxLib version: 0.1.70

- Using GPU in script?: No

- Using distributed or parallel set-up in script?: No

### Who can help

@sgugger @SaulLu


## Information

There seems to be a conflict between [Utilities for tokenizers](https://huggingface.co/transformers/internal/tokenization_utils.html?highlight=truncation#transformers.tokenization_utils_base.PreTrainedTokenizerBase.__call__) and [Preprocessing data](https://huggingface.co/transformers/preprocessing.html?highlight=truncation#everything-you-always-wanted-to-know-about-padding-and-truncation).

For **`truncation=True`**, **Preprocessing data** states "truncate to a maximum length specified by the max_length argument or the maximum length accepted by the model if no max_length is provided (max_length=None). This will only truncate the first sentence of a pair if a pair of sequences (or a batch of pairs of sequences) is provided."

whereas for the same setting, **Utilities for tokenizers** states "Truncate to a maximum length specified with the argument max_length or to the maximum acceptable input length for the model if that argument is not provided. This will truncate token by token, removing a token from the longest sequence in the pair if a pair of sequences (or a batch of pairs) is provided.".


## Expected behavior

In the Preprocessing data documentation, `truncation_strategy=True` should correspond to `longest_first` instead of `only_first`.


| 08-29-2021 04:46:39 | 08-29-2021 04:46:39 | Indeed. Would you mind opening a PR with the change? |

transformers | 13,322 | closed | DistilGPT2 code from pytorch-transformers does not work in transformers, I made a basic example | How would I convert this to the new version of transformers? Or is it possible to somehow use DistilGPT2 with pytorch-transformers?

```python
use_transformers = True

if use_transformers:
    import torch
    from transformers import GPT2Tokenizer, GPT2Model, GPT2LMHeadModel

    tokenizer1 = GPT2Tokenizer.from_pretrained('distilgpt2', cache_dir="/var/software/Models/")
    model1 = GPT2LMHeadModel.from_pretrained('distilgpt2', cache_dir="/var/software/Models/")
    model1.eval()
    model1.to('cuda')

    text = "Who was Jim Henson ?"
    indexed_tokens = tokenizer1.encode(text)
    tokens_tensor = torch.tensor([indexed_tokens])
    tokens_tensor = tokens_tensor.to('cuda')

    with torch.no_grad():
        predictions_1 = model1(tokens_tensor)
    print(predictions_1)

else:
    import torch
    from pytorch_transformers import GPT2Tokenizer, GPT2Model, GPT2LMHeadModel

    tokenizer1 = GPT2Tokenizer.from_pretrained('gpt2', cache_dir="/var/software/Models/")  # cache_dir=None
    model1 = GPT2LMHeadModel.from_pretrained('gpt2', cache_dir="/var/software/Models/")
    model1.eval()
    model1.to('cuda')

    text = "Who was Jim Henson ?"
    indexed_tokens = tokenizer1.encode(text)
    tokens_tensor = torch.tensor([indexed_tokens])
    tokens_tensor = tokens_tensor.to('cuda')

    with torch.no_grad():
        predictions_1 = model1(tokens_tensor)
    print(predictions_1)
```

When I try, I get an error. I tried to follow the guide but don't understand what the new tokenizer does differently.

| 08-29-2021 02:38:20 | 08-29-2021 02:38:20 | This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored. |

transformers | 13,321 | closed | Add missing module __spec__ | # What does this PR do?


This PR adds a missing `__spec__` object when importing the library that would be `None` otherwise.

Fixes #12904
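Why a missing `__spec__` matters can be shown with a standalone sketch: `importlib.util.find_spec` raises `ValueError` for a module in `sys.modules` whose `__spec__` is `None`, which is what a `types.ModuleType` subclass has by default. `MiniLazyModule` below is a hypothetical, simplified stand-in for a lazy module (not the actual `_LazyModule`); like the change discussed in this PR, it takes an optional `module_spec` argument.

```python
import importlib
import importlib.machinery
import importlib.util
import sys
import types
from typing import Dict, List, Optional


class MiniLazyModule(types.ModuleType):
    """Hypothetical, simplified lazy module; not the transformers `_LazyModule`."""

    def __init__(
        self,
        name: str,
        import_structure: Dict[str, List[str]],
        module_spec: Optional[importlib.machinery.ModuleSpec] = None,
    ):
        super().__init__(name)
        # Map each exported attribute to the module that defines it.
        self._attr_to_module = {
            attr: module for module, attrs in import_structure.items() for attr in attrs
        }
        # Without an explicit spec, ModuleType leaves __spec__ as None.
        self.__spec__ = module_spec

    def __getattr__(self, attr: str):
        # Only called when normal lookup fails, i.e. the attribute is not loaded yet.
        if attr not in self._attr_to_module:
            raise AttributeError(f"module {self.__name__!r} has no attribute {attr!r}")
        value = getattr(importlib.import_module(self._attr_to_module[attr]), attr)
        setattr(self, attr, value)  # cache it so the import happens only once
        return value


name = "mini_lazy_demo"
sys.modules[name] = MiniLazyModule(name, {"json": ["dumps"]})
try:
    importlib.util.find_spec(name)
except ValueError as err:
    print(err)  # mini_lazy_demo.__spec__ is None

spec = importlib.machinery.ModuleSpec(name, None)
sys.modules[name] = MiniLazyModule(name, {"json": ["dumps"]}, module_spec=spec)
print(importlib.util.find_spec(name) is spec)  # True
print(sys.modules[name].dumps({"a": 1}))       # {"a": 1}
```

Making `module_spec` optional keeps existing call sites that don't pass a spec working, at the cost of those modules still having `__spec__ = None`.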

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [x] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.


| 08-28-2021 18:18:49 | 08-28-2021 18:18:49 | Thanks for your PR!

As you can see, this makes all the tests fail because you changed the init of `_LazyModule` without adapting all the places where it's used (the intermediate inits of each model). I'm not sure whether those intermediate inits need to pass along the spec attribute or not; if they do, you should add it to each one of them (don't forget the model template as well), and if they don't, you should make that argument optional.<|||||>@sgugger Thanks for looking at it! I changed the `module_spec` arg to be optional, as I don't see why the other intermediate inits would need it.<|||||>Great! One last thing: could you run `make style` on your branch to solve the code quality issue?<|||||>Last thing caught by the CI now that the style is correct: your new test file will never be run by the CI. Since it's linked to `_LazyModule` defined in file_utils, could you move it to `test_file_utils`? Thanks a lot.<|||||>Is this one ready to be merged and published? |

transformers | 13,320 | closed | examples: only use keep_linebreaks when reading TXT files | Hi,

this is a follow-up (bug-fix) PR for #13150.

It turns out, as reported in #13312, that the `keep_linebreaks` argument only works when the Datasets extension is `text`.

I used this logic to pass the `keep_linebreaks` argument only when the extension is `text`, simplified as:

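```python
from datasets import load_dataset

# Hypothetical input file; run_clm.py infers the loader from the file extension.
data_files = {"train": "train.txt"}
extension = data_files["train"].split(".")[-1]
if extension == "txt":
    extension = "text"

dataset_args = {}
if extension == "text":
    dataset_args["keep_linebreaks"] = True
dataset = load_dataset(extension, data_files=data_files, **dataset_args)
print(dataset["train"][0])
```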

When `keep_linebreaks` is set to `True` and a text file is read, the output looks like:

```python
{'text': 'Heute ist ein schöner Tach\n'}
```

With `keep_linebreaks` set to `False`, the output looks like:

```python
{'text': 'Heute ist ein schöner Tach'}
```

So the proposed approach with the `dataset_args` argument works. I also checked that all examples work when passing a CSV dataset.
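A minimal sketch of the behaviour shown above, assuming the plain-text loader splits the file into lines and optionally keeps the line endings. This mimics the observable output only, not the `datasets` implementation; `read_text_samples` and `build_dataset_args` are hypothetical helpers.

```python
from typing import Dict, List


def read_text_samples(content: str, keep_linebreaks: bool = False) -> List[Dict[str, str]]:
    """One sample per line; optionally keep each line's trailing line break."""
    return [{"text": line} for line in content.splitlines(keepends=keep_linebreaks)]


def build_dataset_args(extension: str, keep_linebreaks: bool) -> Dict[str, bool]:
    """Only the plain-text loader accepts keep_linebreaks, so CSV/JSON get no extra kwargs."""
    return {"keep_linebreaks": keep_linebreaks} if extension == "text" else {}


content = "Heute ist ein schöner Tach\n"
print(read_text_samples(content, keep_linebreaks=True)[0])   # {'text': 'Heute ist ein schöner Tach\n'}
print(read_text_samples(content, keep_linebreaks=False)[0])  # {'text': 'Heute ist ein schöner Tach'}
print(build_dataset_args("csv", keep_linebreaks=True))       # {}
```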

| 08-28-2021 10:20:16 | 08-28-2021 10:20:16 | |

transformers | 13,319 | closed | neptune.ai logger: add ability to connect to a neptune.ai run | A single line is changed.

When the `NEPTUNE_RUN_ID` environment variable is set, Neptune will log into the previous run with ID `NEPTUNE_RUN_ID`.

trainer: @sgugger

| 08-28-2021 10:18:25 | 08-28-2021 10:18:25 | Thanks a lot for your PR! |

transformers | 13,318 | closed | Errors when fine-tuning RAG on cloud env | Hi the team,

I'm trying to fine-tune RAG with [the scripts you provided](https://github.com/huggingface/transformers/tree/9ec0f01b6c3aff4636869aee735859fb6f89aa98/examples/research_projects/rag). My environment is cloud servers (4 V100s with 48G GRAM), and I always get this error when running the fine-tuning:

> RuntimeError: Error in faiss::Index* faiss::read_index(faiss::IOReader*, int) at /__w/faiss-wheels/faiss-wheels/faiss/faiss/impl/index_read.cpp:480: Error: 'ret == (size)' failed: read error in <cache path>: 6907889358 != 16160765700 (Success)

It seems like the error comes from faiss, and I don't know how to interpret it (do the sizes not match?). I used this command for the fine-tuning:

```bash
python run_rag_ft.py \
    --data_dir /msmarco \
    --output_dir ./msmarco_rag \
    --model_name_or_path facebook/rag-sequence-nq \
    --model_type rag_sequence \
    --fp16 \
    --gpus 4 \
    --distributed_retriever pytorch \
    --num_retrieval_workers 4 \
    --profile \
    --do_train \
    --do_predict \
    --n_val -1 \
    --train_batch_size 8 \
    --eval_batch_size 1 \
    --max_source_length 128 \
    --max_target_length 40 \
    --val_max_target_length 40 \
    --test_max_target_length 40 \
    --label_smoothing 0.1 \
    --dropout 0.1 \
    --attention_dropout 0.1 \
    --weight_decay 0.001 \
    --adam_epsilon 1e-08 \
    --max_grad_norm 0.1 \
    --lr_scheduler polynomial \
    --learning_rate 3e-05 \
    --num_train_epochs 2 \
    --warmup_steps 500 \
    --gradient_accumulation_steps 1
```

Nothing special; I just use my own data (MSMARCO). I stuck to PyTorch for the distributed retriever and have not yet tested the Ray version. Could that be the problem?

I cannot run this on my local machines because of OOM errors (two GPUs with 24G GRAM).

I think @patrickvonplaten could help me on this. Thanks!

| 08-28-2021 09:41:37 | 08-28-2021 09:41:37 | Hey @DapangLiu,

We sadly don't actively maintain the `research_projects` folder except for Wav2Vec2. Could you try to use the forum: https://discuss.huggingface.co/ instead? <|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored. |

transformers | 13,317 | closed | How to use the pretraining task of ProphetNet | I want to use the pretraining task of ProphetNet, which recovers the masked span of the input sentence.

I followed the instructions in Figure 1 of the paper.

For example, the input is `But I [MASK][MASK] my life for some lovin\' and some gold`, and I only recover the first `[MASK]` (the sentence is from the pretraining corpus BookCorpus).

I used the following code:

```python
from transformers import ProphetNetTokenizer, ProphetNetForConditionalGeneration

tokenizer = ProphetNetTokenizer.from_pretrained('prophetnet')
model = ProphetNetForConditionalGeneration.from_pretrained('prophetnet')

# the sentence is from the pretraining corpus BookCorpus
input_ids = tokenizer('But I traded all my life for some lovin\' and some gold', return_tensors="pt")['input_ids']
# id of the target word "traded" (position 2), saved before it is overwritten
mask_id = input_ids[0][2]
# overwrite positions 2-3 ("traded all") in the encoder input
input_ids[0][2:4] = tokenizer.pad_token_id
decoder_input_ids = tokenizer('[MASK][MASK] I', return_tensors="pt")['input_ids']
# the way of MASS: decoder_input_ids = tokenizer('[MASK][MASK][MASK]', return_tensors="pt")['input_ids']
output = model(input_ids=input_ids, decoder_input_ids=decoder_input_ids)
# raw logits at decoder position 2 (not normalized probabilities)
probs = output.logits[0][2]
# the rank of the target word in the vocabulary
print((probs[mask_id] < probs).sum())
```
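The last line of the snippet counts how many vocabulary entries score strictly higher than the target token, i.e. the target's 0-based rank (0 means it is the top prediction). Because softmax is monotonic, ranking raw logits gives the same result as ranking probabilities. A plain-Python equivalent, with a hypothetical `target_rank` helper:

```python
from typing import Sequence


def target_rank(scores: Sequence[float], target_id: int) -> int:
    """0-based rank of target_id: the number of entries that score strictly higher."""
    target = scores[target_id]
    return sum(1 for score in scores if score > target)


logits = [0.1, 2.0, 0.5, 1.0]
print(target_rank(logits, 1))  # 0 -> top prediction
print(target_rank(logits, 3))  # 1
```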

However, the rank of `traded` is 15182 among 30522 words.

I also tried different masked words and masked spans, but the results were all unexpected.

So, is there an error in the way I recover the mask? @patrickvonplaten | 08-28-2021 09:02:04 | 08-28-2021 09:02:04 | cc @qiweizhen<|||||>@StevenTang1998 - could you maybe try to use the forum: https://discuss.huggingface.co/ for such questions. I haven't played around with the model enough to give a qualified answer here sadly :-/ <|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored. |

transformers | 13,316 | closed | Squeeze and Excitation Network | # What does this PR do?

This PR implements an optional Squeeze-and-Excitation block in BERT and the modules copied from it (RoBERTa, ELECTRA, Splinter and LayoutLM) in PyTorch.

Fixes #11998

Additional tests have been added to the corresponding test scripts and the docs updated.
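For readers unfamiliar with the block, here is a dependency-free sketch of a generic squeeze-and-excitation block (squeeze by averaging, excitation through a bottleneck FC-ReLU-FC-sigmoid, then channel-wise rescaling), applied to a (sequence length × channels) matrix. Which dimension the PR treats as channels and where the block sits inside BERT are not shown here; the helper names and weights are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def _matvec(weights: Matrix, vector: List[float]) -> List[float]:
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]


def squeeze_excite(x: Matrix, w1: Matrix, w2: Matrix) -> Matrix:
    """x: seq_len rows of C channels; w1: (C // r) x C; w2: C x (C // r), r = reduction ratio."""
    seq_len, channels = len(x), len(x[0])
    # Squeeze: summarize each channel by its mean over the sequence.
    z = [sum(row[c] for row in x) / seq_len for c in range(channels)]
    # Excitation: bottleneck FC -> ReLU -> FC -> sigmoid gives one gate per channel.
    hidden = [max(0.0, h) for h in _matvec(w1, z)]
    gates = [1.0 / (1.0 + math.exp(-g)) for g in _matvec(w2, hidden)]
    # Scale: reweight every position's channels by the gates.
    return [[row[c] * gates[c] for c in range(channels)] for row in x]


x = [[2.0, 4.0], [6.0, 8.0]]
# With an all-zero first layer every gate is sigmoid(0) = 0.5, so x is simply halved.
print(squeeze_excite(x, w1=[[0.0, 0.0]], w2=[[1.0], [1.0]]))  # [[1.0, 2.0], [3.0, 4.0]]
```

The gates depend on the whole sequence, so the block can learn to emphasize some channels and suppress others globally.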

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [x] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [x] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [x] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed.

@LysandreJik | 08-28-2021 05:38:51 | 08-28-2021 05:38:51 | Hi,

Thanks for your PR! However, I don't think that we want to add this block to files of other models. It's more appropriate to add a new SesameBERT model (if pretrained weights are available), or add it under the `research_projects` directory.

cc @LysandreJik <|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored.<|||||>Hi @AdityaDas-IITM, are you interested in working on this PR (making it a research project instead)?<|||||>Hey @NielsRogge, Yes I'll get started on it soon<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored. |

transformers | 13,315 | closed | Current trainer.py doesn't support beam search | # 🚀 Feature request

Currently I can't find any support for beam search in trainer.py: to begin with, it doesn't even import the BeamScorer or BeamHypotheses classes, and `evaluation_loop` and `prediction_loop` don't use any beam search logic internally. This is misleading because the `predict` and `evaluate` functions in trainer_seq2seq.py set `self._num_beams` to a passed-in hyperparameter, but that value isn't used by the parent `predict` or `evaluate` functions. The run_summarization.py script also includes a beam search hyperparameter that isn't used. What would be the simplest way to have the evaluation and prediction steps run and evaluate beam search?

## Motivation

Beam search is critical for evaluating seq2seq methods. HuggingFace must have a trainer that integrates with beam search; I'm just not sure where it is exposed or how that integration works. For reference, the `prediction_step` at https://github.com/huggingface/transformers/blob/master/src/transformers/trainer.py#L2342 doesn't perform beam search, and it is what gets called to obtain the loss, logits and labels for each step in evaluation. Thus it isn't actually searching over the possible beams; it only evaluates each next step in the dataloader.

## Your contribution

I would look further into how the beam search utilities are used in other models. The code exists (https://github.com/huggingface/transformers/blob/master/src/transformers/generation_utils.py#L1612); I just wonder why trainer.py isn't calling it in the evaluate or predict functions. Is there a reason for that? @patil-suraj
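To illustrate the difference between a single forward pass and an actual search, here is a toy, dependency-free beam search over a hand-written next-token distribution. It is a sketch, not the `generation_utils` implementation: there is no length penalty, batching or early-stopping logic, and `toy_model` is a made-up distribution chosen so that greedy decoding (one beam) misses the best sequence.

```python
import math
from typing import Callable, Dict, List, Tuple

Seq = Tuple[int, ...]


def beam_search(
    next_log_probs: Callable[[Seq], Dict[int, float]],
    num_beams: int,
    max_len: int,
    eos_id: int,
) -> Tuple[Seq, float]:
    """Return the best sequence (by summed token log-probs) found with `num_beams` beams."""
    beams: List[Tuple[Seq, float]] = [((), 0.0)]
    finished: List[Tuple[Seq, float]] = []
    for _ in range(max_len):
        # Expand every live beam by every possible next token.
        candidates = [
            (prefix + (tok,), score + lp)
            for prefix, score in beams
            for tok, lp in next_log_probs(prefix).items()
        ]
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for prefix, score in candidates:
            if prefix[-1] == eos_id:
                finished.append((prefix, score))  # hypothesis is complete
            else:
                beams.append((prefix, score))
            if len(beams) == num_beams:
                break  # keep only the top `num_beams` unfinished hypotheses
        if not beams:
            break
    finished.extend(beams)  # unfinished hypotheses still count when max_len is hit
    return max(finished, key=lambda c: c[1])


TOY = {(): {1: 0.6, 2: 0.4}, (1,): {0: 0.5, 3: 0.5}, (2,): {0: 0.9, 3: 0.1}}


def toy_model(prefix: Seq) -> Dict[int, float]:
    probs = TOY.get(prefix, {0: 1.0})  # any other prefix just emits EOS (id 0)
    return {tok: math.log(p) for tok, p in probs.items()}


greedy = beam_search(toy_model, num_beams=1, max_len=5, eos_id=0)
beam = beam_search(toy_model, num_beams=2, max_len=5, eos_id=0)
print(greedy[0], round(math.exp(greedy[1]), 2))  # (1, 0) 0.3
print(beam[0], round(math.exp(beam[1]), 2))      # (2, 0) 0.36
```

Greedy decoding commits to token 1 because it is locally most likely, while two beams keep token 2 alive long enough to find the higher-probability sequence.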

| 08-28-2021 01:00:24 | 08-28-2021 01:00:24 | This post on the forum will answer your question: https://discuss.huggingface.co/t/trainer-vs-seq2seqtrainer/3145/2?u=nielsr<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored. |

transformers | 13,314 | closed | neptune.ai logger: utilize `rewrite_logs` in `NeptuneCallback` as in `WandbCallback` | A single line is changed:

it applies the `rewrite_logs` conversion that was missing from the Neptune logger, as already done in the W&B logger.
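A sketch of what that conversion does, assuming the helper puts `eval_`-prefixed keys under an `eval/` namespace and every other key under `train/` (check `rewrite_logs` in `transformers.integrations` for the exact behaviour of your version). These `/`-separated keys are presumably what end up as nested (namespaced) variables in the logger. `rewrite_logs_sketch` is a hypothetical name.

```python
from typing import Dict


def rewrite_logs_sketch(logs: Dict[str, float]) -> Dict[str, float]:
    """Namespace Trainer log keys: eval_* -> eval/*, everything else -> train/*."""
    eval_prefix = "eval_"
    rewritten = {}
    for key, value in logs.items():
        if key.startswith(eval_prefix):
            rewritten["eval/" + key[len(eval_prefix):]] = value
        else:
            rewritten["train/" + key] = value
    return rewritten


print(rewrite_logs_sketch({"loss": 1.5, "eval_loss": 1.2}))  # {'train/loss': 1.5, 'eval/loss': 1.2}
```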

trainer: @sgugger | 08-27-2021 23:50:49 | 08-27-2021 23:50:49 | It turns out the neptune.ai UI doesn't support charts for nested logged variables. |

transformers | 13,313 | closed | [Testing] Add Flax Tests on GPU, Add Speech and Vision to Flax & TF tests | # What does this PR do?


This PR does two things:

- 1. Adds Flax to the daily slow tests on GPU and adds flax tests on GPU. There is a TF Docker image that works well for JAX on GPU - see: https://github.com/google/jax/discussions/6338 . I think it's easiest to just use this image for now until there is an official JAX docker image for GPU.

- 2. We now have slow tests in both TF and Flax that require `soundfile` (TFHubert, TFWav2Vec2, FlaxWav2Vec2, ...). Also, FlaxViT in Flax requires the `vision` package IMO. A new `tf-flax-speech` extra is added so that installing the dependencies for TF's and Flax's speech models doesn't pull in torch along with torchaudio, and it is added to all the tests.

Also it is very likely that some slow tests in Flax will fail at the moment since they have been written to pass on TPU. If ok, @patil-suraj and I can fix them one-by-one after getting a report from the daily slow tests - we'll probably have to add some `if-else` statements depending on the backend there...

Second, we don't have any multi-GPU or multi-TPU tests for Flax at the moment, but I already enable the Flax tests on multi-GPU here. I'll add a multi-GPU/multi-TPU test for all Flax models next week (cc @patil-suraj)

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.


| 08-27-2021 22:25:45 | 08-27-2021 22:25:45 | > Looks great! Mostly left nitpicks. I'm fine with seeing which tests failed on a first run and then adapting/removing failed tests.

>

> Do you have a number in mind regarding total runtime?

All non-slow tests together took 1h20, we don't have that many slow tests in Flax at the moment - so I'd assume that the total runtime would be something like 1h40<|||||>Running all the jitted tests takes a lot of time (but they're quite important IMO) <|||||>Ok sounds good! |

transformers | 13,312 | closed | Having problem Pre-training GPT models | ## Environment info

- `transformers` version: 4.9.2

- Platform: Linux-5.4.104+-x86_64-with-Ubuntu-18.04-bionic

- Python version: 3.7.11

- PyTorch version (GPU?): 1.9.0+cu102 (True)

- Tensorflow version (GPU?): 2.6.0 (True)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

- Using GPU in script?: <fill in>

- Using distributed or parallel set-up in script?: <fill in>

### Who can help


## Information

Model I am using (Bert, XLNet ...): EleutherAI/gpt-neo-2.7B

The problem arises when using:

* [X ] the official example scripts: (give details below)

* [ ] my own modified scripts: (give details below)

The tasks I am working on is:

* [ ] an official GLUE/SQUaD task: (give the name)

* [X ] my own task or dataset: (give details below)

## To reproduce

I used a CSV file in which each line contains one sample.

Steps to reproduce the behavior:

My input: `!python /content/transformers/examples/pytorch/language-modeling/run_clm.py --model_name_or_path EleutherAI/gpt-neo-2.7B --train_file /content/df.csv --output_dir /tmp/test-clm`

I also tried using the no-trainer version, but it still doesn't work.

What am I doing wrong?

What I got back:

```
Traceback (most recent call last):
  File "/content/transformers/examples/pytorch/language-modeling/run_clm.py", line 520, in <module>
    main()
  File "/content/transformers/examples/pytorch/language-modeling/run_clm.py", line 291, in main
    cache_dir=model_args.cache_dir,
  File "/usr/local/lib/python3.7/dist-packages/datasets/load.py", line 830, in load_dataset
    **config_kwargs,
  File "/usr/local/lib/python3.7/dist-packages/datasets/load.py", line 710, in load_dataset_builder
    **config_kwargs,
  File "/usr/local/lib/python3.7/dist-packages/datasets/builder.py", line 271, in __init__
    **config_kwargs,
  File "/usr/local/lib/python3.7/dist-packages/datasets/builder.py", line 370, in _create_builder_config
    builder_config = self.BUILDER_CONFIG_CLASS(**config_kwargs)
TypeError: __init__() got an unexpected keyword argument 'keep_linebreaks'
```
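The `TypeError` comes from passing a keyword that the CSV builder's config does not declare. A minimal reproduction with plain dataclasses, assuming the builder configs behave like dataclasses with fixed fields (the two config classes here are hypothetical stand-ins, not the `datasets` classes):

```python
from dataclasses import dataclass


@dataclass
class TextConfigSketch:
    """Hypothetical stand-in for the plain-text loader's config."""
    encoding: str = "utf-8"
    keep_linebreaks: bool = False


@dataclass
class CsvConfigSketch:
    """Hypothetical stand-in for the CSV loader's config: no keep_linebreaks field."""
    encoding: str = "utf-8"
    sep: str = ","


config_kwargs = {"keep_linebreaks": True}
print(TextConfigSketch(**config_kwargs))  # TextConfigSketch(encoding='utf-8', keep_linebreaks=True)
try:
    CsvConfigSketch(**config_kwargs)
except TypeError as err:
    print(err)  # ...got an unexpected keyword argument 'keep_linebreaks'
```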

## Expected behavior

I just want to further train the GPT model.

notebook: https://colab.research.google.com/drive/1bk8teH0Egu-gAmBC_zlvUifMHS7y_SyM?usp=sharing

Any help is much appreciated | 08-27-2021 20:37:02 | 08-27-2021 20:37:02 | Probably caused by this PR: #13150

cc @stefan-it <|||||>I'm looking into it right now :)<|||||>Oh no, this is only happening when using CSV files as input :thinking:

@mosh98 As a very quick workaround, could you try to "convert" your csv file into a normal text file (file extension .txt) and then re-run the training :thinking: <|||||>The `keep_linebreaks` argument is only implemented for text files in :hugs: Datasets:

https://github.com/huggingface/datasets/blob/67574a8d74796bc065a8b9b49ec02f7b1200c172/src/datasets/packaged_modules/text/text.py#L19

For CSV it is not available:

https://github.com/huggingface/datasets/blob/67574a8d74796bc065a8b9b49ec02f7b1200c172/src/datasets/packaged_modules/csv/csv.py<|||||>I'm working on a fix now (so that `keep_linebreaks` is only used when the file extension is `.txt`)<|||||>Sure, I can try that. I do have a question though:

When I convert my CSV into a text file, how should I organize it so that each line is used as a sample? And what command do I have to use when I run the script?

At the moment, each row in the CSV file is an individual sample.<|||||>You can use the same structure (one individual sample per line) for the text file.

Command would be pretty much the same, but you need to use the file ending `.txt`, so that the training script will infer the correct extension for the `load_dataset` argument :)<|||||>Thank you @stefan-it, the script works now! I'm running out of CUDA memory though, but I think that's unrelated to the actual script and more to do with my device.

Thanks Again!
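A minimal sketch of the CSV-to-text workaround discussed above, assuming the CSV has a header row and the samples live in a column named `text` (adjust `text_column` otherwise). Embedded line breaks are collapsed so that each sample stays on a single line; the helper names are hypothetical.

```python
import csv
import io
from typing import List


def csv_rows_to_lines(csv_text: str, text_column: str = "text") -> List[str]:
    """Turn each CSV row into a single-line training sample, skipping empty ones."""
    lines = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        # Collapse embedded line breaks and repeated whitespace into single spaces.
        sample = " ".join(row[text_column].split())
        if sample:
            lines.append(sample)
    return lines


def csv_file_to_txt(csv_path: str, txt_path: str, text_column: str = "text") -> int:
    """Write one sample per line to txt_path and return the number of samples."""
    with open(csv_path, newline="", encoding="utf-8") as src:
        lines = csv_rows_to_lines(src.read(), text_column)
    with open(txt_path, "w", encoding="utf-8") as dst:
        dst.writelines(line + "\n" for line in lines)
    return len(lines)


# Hypothetical paths based on the command in the report:
# csv_file_to_txt("/content/df.csv", "/content/df.txt")
```

The resulting `.txt` file can then be passed via `--train_file`, so that `run_clm.py` selects the text loader from the extension.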

|

transformers | 13,311 | closed | [Feature request] Introduce GenericTransformer to ease deployment of custom models to the Hub | # 🚀 Feature request

Introduce a GenericTransformer model that can handle many different variants and tweaks of the Transformer architecture. There are 2 ways of doing this and I'm not 100% sure of which one would better suit HF:

1. Introduce a GenericTransformerModel with many different options (extensive config file), such as different positional embeddings or attention variants. The modeling code would constantly be updated by HF or contributions from the community and would be included in each release of the library itself. Backward compatibility would not necessarily be an issue if all new additions were disabled by default in the config class. Also, the model could be designed in a modular way to ease the addition of new variants (see torchtext's MHA container https://github.com/pytorch/text/blob/main/torchtext/nn/modules/multiheadattention.py).

2. Allow users to submit code following HF's interfaces alongside checkpoints. GenericTransformerModel would dynamically download and load code from the hub.

I think the first one would be more convenient to avoid third-party dependencies and potentially unsafe code. The second one would be way more flexible, though.
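Option 1's backward-compatibility argument can be sketched with a config whose variant switches all default to the vanilla architecture, so that a config saved before an option existed still loads and behaves as before. The class and field names are hypothetical.

```python
from dataclasses import asdict, dataclass


@dataclass
class GenericTransformerConfigSketch:
    """Hypothetical config: every variant switch defaults to the vanilla Transformer."""
    num_layers: int = 12
    hidden_size: int = 768
    num_attention_heads: int = 12
    # Variant options added over time; the defaults reproduce the original behaviour,
    # so configs saved before an option existed still load unchanged.
    position_embedding_type: str = "absolute"
    attention_variant: str = "full"
    use_pre_layer_norm: bool = False


# A config saved before the variant options were introduced:
old_config = {"num_layers": 6, "hidden_size": 512, "num_attention_heads": 8}
config = GenericTransformerConfigSketch(**old_config)
print(asdict(config))
# {'num_layers': 6, 'hidden_size': 512, 'num_attention_heads': 8,
#  'position_embedding_type': 'absolute', 'attention_variant': 'full', 'use_pre_layer_norm': False}
```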

## Motivation

An important point of the HF Transformers library philosophy is outlined in the README of the repo:

> Why shouldn't I use transformers?

> This library is not a modular toolbox of building blocks for neural nets. The code in the model files is not refactored with additional abstractions on purpose, so that researchers can quickly iterate on each of the models without diving into additional abstractions/files.

To clarify, this feature request does NOT intend to modify this philosophy, which clearly has many advantages. Instead, it aims to alleviate one of the drawbacks of this philosophy: the difficulty of sharing custom models, even if these models just introduce small tweaks (see https://github.com/stanford-crfm/mistral/issues/85, https://github.com/huggingface/transformers/pull/12243).

This would hopefully encourage researching different variants and combinations. In case one variant stabilized as a well-defined architecture that was worth using, then it might be considered to add it to the library the "classical" way, having a specific class, documentation, etc.

## Your contribution

I can't allocate time to this at the moment. Sorry about that.

| 08-27-2021 19:43:26 | 08-27-2021 19:43:26 | cc @sgugger regarding 2. :)<|||||>The plan is to add in the coming weeks support for custom models directly in the AutoModel classes, with the user providing the code of their models in a modeling file in the same repository on the model hub (same for custom tokenizers).

ETA for this feature should be end of next week.<|||||>> The plan is to add in the coming weeks support for custom models directly in the AutoModel classes, with the user providing the code of their models in a modeling file in the same repository on the model hub (same for custom tokenizers).

>

> ETA for this feature should be end of next week.

Perfect, thanks!<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored.<|||||>PR https://github.com/huggingface/transformers/pull/13467 introduced a first version of what you were asking for @jordiae! Let us know if it works for you :) <|||||>> PR #13467 introduced a first version of what you were asking for @jordiae! Let us know if it works for you :)

Cool! Thanks! |

transformers | 13,310 | closed | :bug: fix small model card bugs | # What does this PR do?


- `model_index` ➡️ `model-index`

- `metric` ➡️ `metrics`

- Metrics Dict ➡️ List of Metrics Dicts

These changes fix the problem of user-provided evaluation metrics not showing up on model pages pushed to the Hub from the Trainer.
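For illustration, the corrected layout can be sketched as a plain Python dict. This is a hypothetical sketch, not the Trainer's actual card-writing code. The helper names `metrics_dict_to_list` and `build_model_index` are made up, and the nested `results`/`task` keys are assumed from the Hub's model-index metadata convention. Only the hyphenated `model-index` key, the plural `metrics` key, and the list-of-dicts shape come from the PR description.

```python
from typing import Any, Dict, List


def metrics_dict_to_list(metrics: Dict[str, float]) -> List[Dict[str, Any]]:
    # Old shape: {"accuracy": value}; new shape: one dict per metric, inside a list.
    return [{"name": name, "type": name, "value": value} for name, value in metrics.items()]


def build_model_index(model_name: str, task_type: str, metrics: Dict[str, float]) -> Dict[str, Any]:
    # Hyphenated "model-index" key, plural "metrics" key holding a list of dicts.
    return {
        "model-index": [
            {
                "name": model_name,
                "results": [
                    {
                        "task": {"type": task_type},
                        "metrics": metrics_dict_to_list(metrics),
                    }
                ],
            }
        ]
    }
```

Serialized to YAML, a dict like this would form the metadata header of the model card.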

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests), Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the [documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and [here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag members/contributors who may be interested in your PR.


| 08-27-2021 17:46:33 | 08-27-2021 17:46:33 | good catch and thanks for working on this<|||||>Here's a repo I created with it: [nateraw/vit-base-beans-demo-v3](https://huggingface.co/nateraw/vit-base-beans-demo-v3) |

transformers | 13,309 | closed | Fixing a typo in the data_collator documentation | # Fixed a typo in the documentation | 08-27-2021 16:49:45 | 08-27-2021 16:49:45 | |

transformers | 13,308 | closed | [Large PR] Entire rework of pipelines. | # What does this PR do?

tl;dr: Make pipeline code much more consistent and enable large speedups with GPU inference.

# GPU pipeline

With pipelines set up the way they currently are, it's hard to keep the GPU 100% busy, because we don't enable the use of `DataLoader` (in PyTorch), which is necessary to keep the CPU tokenizing the next items while the GPU processes the current one.

We cannot realistically use the current API to maximize utilization:

```python
for item in dataset:
    # item == "This is some test" for instance
    output = pipe(item)
    # output == {"label": "POSITIVE", "score": 0.99}
```

So we need to change the API to something closer to what `DataLoader` does, i.e. accept an iterable. That lets CPU worker threads process the next items while the GPU is busy with the current one, so the GPU can stay close to 100% utilized.

```python
for output in pipe(dataset):
    # output == {"label": "POSITIVE", "score": 0.99}
    pass
```
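The overlap that `DataLoader` workers provide can be sketched with the standard library alone: a background thread runs the CPU step (e.g. tokenization) and fills a bounded queue while the main thread runs the device step. This is a minimal illustration under assumptions, not how the pipeline is implemented. `prefetching_map`, `cpu_step` and `device_step` are hypothetical names, and in Python the overlap only pays off when the device step spends most of its time outside the interpreter, as a GPU forward pass largely does.

```python
import queue
import threading
from typing import Callable, Iterable, Iterator

_DONE = object()  # marks the end of the input stream


def prefetching_map(
    items: Iterable,
    cpu_step: Callable,
    device_step: Callable,
    max_prefetch: int = 4,
) -> Iterator:
    """Yield device_step(cpu_step(item)) for each item, in order.

    cpu_step runs in a background thread, so it can work on upcoming items
    while device_step processes the current one.
    """
    buffer = queue.Queue(maxsize=max_prefetch)  # bounded: at most max_prefetch items ahead
    errors = []

    def producer() -> None:
        try:
            for item in items:
                buffer.put(cpu_step(item))  # e.g. tokenization on the CPU
        except Exception as exc:  # hand the error to the consumer instead of hanging
            errors.append(exc)
        finally:
            buffer.put(_DONE)

    threading.Thread(target=producer, daemon=True).start()
    while True:
        prepared = buffer.get()
        if prepared is _DONE:
            if errors:
                raise errors[0]
            return
        yield device_step(prepared)  # e.g. the model forward pass on the GPU
```

Order is preserved because a single producer feeds a FIFO queue. If the consumer stops early, the daemon producer thread may stay blocked on `put`, which is acceptable for a sketch but not for production code.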

To make that change possible, we **need** to better separate what happens on the CPU from what happens on the GPU.

The proposed approach is to split the pipeline's `__call__` into 3 distinct methods:

- `preprocess`: takes the original pipeline input and outputs a dict of everything necessary to run, for instance, `model(**model_inputs)` (or a `generate` call, i.e. the work that will actually involve the GPU).

- `forward`: in most cases, a simple call to the model's forward method, but it can be more complex depending on the pipeline. It needs to be separate from the other two because this is where the GPU might be used, so we can encapsulate more logic around it in the base class (`no_grad`, sending tensors to and retrieving them from the GPU, etc.).

- `postprocess`: usually turns the logits into something more user-friendly for the task at hand. Again, this is usually pretty fast and should happen on the CPU (fast enough that running it in a separate thread doesn't really matter).

To increase consistency across pipelines, ALL pipelines will have to implement these 3 methods and should not override `__call__` (with the exceptions discussed in the consistency section).

They should also be readable on their own: the outputs of `preprocess` should be **exactly** what is sent to `forward`, and what `forward` returns should be exactly the input of `postprocess`. So:

```python
model_inputs = pipe.preprocess(item)
model_outputs = pipe.forward(model_inputs)
outputs = pipe.postprocess(model_outputs)
```

will always be perfectly valid, even if not the most efficient.
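A toy version of the split, with a plain function standing in for the model, shows the contract: each stage's output is exactly the next stage's input, so calling the three methods by hand matches `__call__`. `ToyPipeline` and its fake tokenization are hypothetical, and the single-input/list-input handling in `__call__` is one assumed way to apply the "single item in, single item out" rule discussed below.

```python
from typing import Any, Callable, Dict, List, Union


class ToyPipeline:
    """Hypothetical sketch of the preprocess -> forward -> postprocess split."""

    def __init__(self, model: Callable[..., float]) -> None:
        self.model = model

    def preprocess(self, text: str) -> Dict[str, Any]:
        # CPU work: turn raw input into model inputs (a fake "tokenization").
        return {"input_ids": [len(word) for word in text.split()]}

    def forward(self, model_inputs: Dict[str, Any]) -> Dict[str, Any]:
        # The only step that would touch the GPU; a real base class could wrap
        # this in torch.no_grad() and move tensors to/from the device here.
        return {"logits": self.model(**model_inputs)}

    def postprocess(self, model_outputs: Dict[str, Any]) -> Dict[str, Any]:
        # CPU work: turn raw logits into a user-friendly result.
        score = model_outputs["logits"]
        return {"label": "POSITIVE" if score >= 0 else "NEGATIVE", "score": score}

    def __call__(self, inputs: Union[str, List[str]]):
        # Single input -> single output; list input -> list output.
        if isinstance(inputs, list):
            return [self(item) for item in inputs]
        return self.postprocess(self.forward(self.preprocess(inputs)))
```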

# Consistency of pipelines

Right now, pipelines are quite inconsistent in their returned outputs.

- Some have parameters to change the output format (this is fine)

- Most pipelines accept lists of items and will return a list of outputs, but:

  - Some will return a single item whenever the input was a one-element list (regardless of what the input originally was)

  - Some handle this better and return a single item only if a single item was sent

- Some use lists as batches and some don't, leading to slowdowns at best, OOM errors on large lists, and overall pretty poor efficiency on GPU (more info: https://github.com/huggingface/transformers/issues/13141, https://github.com/huggingface/transformers/pull/11251)

Batching on GPU looks like what speeds things up, but at inference time that's not really the case: batching in ML exists mostly because of gradients, where it makes gradient descent smoother. At inference, speed is really tied to overall GPU utilization, and using `DataLoader` is the key part here. Nonetheless, depending on the actual hardware, pipeline, and input data, batching *can* sometimes be used efficiently, so the new design should enable it. However, it shouldn't be done the way it is currently set up (some pipelines batch, some don't, with no overall consistency); it should live in a separate layer from the data-processing part of the pipeline.
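A sketch of batching as its own layer: items are still preprocessed and postprocessed one at a time, and only the forward step sees batches. `batched`, `collate` and `run_with_batching` are hypothetical helpers (a real `collate` would pad and stack tensors), shown only to illustrate the separation of concerns.

```python
from itertools import islice
from typing import Any, Callable, Dict, Iterable, Iterator, List


def batched(iterable: Iterable, batch_size: int) -> Iterator[List]:
    """Yield lists of up to batch_size items; the last batch may be shorter."""
    iterator = iter(iterable)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def collate(model_inputs: List[Dict[str, Any]]) -> Dict[str, List[Any]]:
    """Merge per-item dicts into one dict of lists (a real version would pad/stack tensors)."""
    return {key: [inputs[key] for inputs in model_inputs] for key in model_inputs[0]}


def run_with_batching(
    items: Iterable,
    preprocess: Callable[[Any], Dict[str, Any]],
    forward_batch: Callable[[Dict[str, List[Any]]], List[Any]],
    postprocess: Callable[[Any], Any],
    batch_size: int = 8,
) -> Iterator[Any]:
    # preprocess/postprocess stay per item; only the forward step sees a batch.
    prepared = (preprocess(item) for item in items)
    for batch in batched(prepared, batch_size):
        for model_output in forward_batch(collate(batch)):  # one output per item
            yield postprocess(model_output)
```

With this layering, the batch size becomes a tuning knob independent of each pipeline's data-processing code.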

Because of the inconsistencies mentioned above, this refactor will include some `__call__` methods that adjust the return type to match the previous behavior (`preprocess`, `forward` and `postprocess` are mostly pure, while `__call__` handles backward compatibility).

# Parameter handling

Another cause of concern for pipelines was parameter handling. Most parameters were passed to the `__call__` method, but some were passed to `__init__`, and some to both.

That meant you had to look through the docs to find out whether you needed to do

```python
pipe = pipeline(..., num_beams=2)
outputs = pipe(item)

# or

pipe = pipeline(...)
outputs = pipe(item, num_beams=2)
```

The goal of this PR is to make that explicit: BOTH will be supported and have exactly the same behavior.

To do that, we introduced a new mandatory method, `set_parameters`, which is called in the same way from both `__init__` and `__call__`, so either place always works.

1. Because this new `set_parameters` is a standard method, we can use it to properly reject unexpected keywords with a real error instead of silently ignoring them.

2. Because `__init__` and `__call__` now (roughly) live only in the base class, we can capture parameters much better, so there's no extra layer of parameter guessing (is it a tokenization param, a model param, a pipeline param?). Each method captures everything it needs and passes on the rest; the last method in the chain is `set_parameters`, which might accept specific parameters or accept everything (like `**generate_kwargs`, so ultimately `generate` has the final word).

3. Because `set_parameters` will be called at least twice and we don't know which call will carry actual values, it needs to be written in a somewhat odd way. What most pipelines do is simply default each argument to `None`: if the argument is `None`, we know the caller didn't supply it, so we don't override the current value (the default is defined in `__init__` if dynamic, or directly on the class if static). This doesn't work, however, when `None` is a valid choice for a parameter. This is true **only** for a `zero-shot-classification` test, where we specifically check that passing `None` as a value raises an error (so it could probably be changed, but that would be backward incompatible as far as tests go). For those cases, more complex logic is required.

4. Because we're now using `self` to hold parameters, running a pipeline through threading mechanisms might lead to some oddities (but people most likely aren't using one pipeline across different threads, and probably shouldn't be). Other options are possible, but they would mean passing the parameters through all 3 functions (`preprocess`, `forward` and `postprocess`), reducing readability IMHO for debatable gains.
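The `None`-default pattern from point 3, combined with the strict signature from point 1, can be sketched as follows. `ToyParamPipeline`, `num_beams` and `max_length` are illustrative assumptions, not the actual pipeline classes; a pipeline that forwards everything to `generate` would take `**generate_kwargs` instead of a fixed signature.

```python
from typing import Any, Dict, Optional


class ToyParamPipeline:
    """Hypothetical pipeline whose parameters can be set at init time or call time."""

    def __init__(self, **kwargs: Any) -> None:
        # Defaults, overridden only by values a caller actually supplies.
        self.num_beams = 1
        self.max_length = 20
        self.set_parameters(**kwargs)

    def set_parameters(self, num_beams: Optional[int] = None, max_length: Optional[int] = None) -> None:
        # None means "not supplied by this caller", so the current value is kept.
        # There is no **kwargs, so a misspelled keyword raises TypeError instead of being ignored.
        if num_beams is not None:
            self.num_beams = num_beams
        if max_length is not None:
            self.max_length = max_length

    def __call__(self, text: str, **kwargs: Any) -> Dict[str, Any]:
        self.set_parameters(**kwargs)
        return {"text": text, "num_beams": self.num_beams, "max_length": self.max_length}
```

Because values are stored on `self` (point 4), a value passed at call time also sticks for later calls on the same instance.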

# Results

Here is where we currently stand performance-wise.

Benchmark code:

```python

from transformers import pipeline

from transformers.pipelines.base import KeyDataset

import datasets

import tqdm

pipe = pipeline("automatic-speech-recognition", model="facebook/wav2vec2-base-960h", device=0)

dataset = datasets.load_dataset("superb", name="asr", split="test")

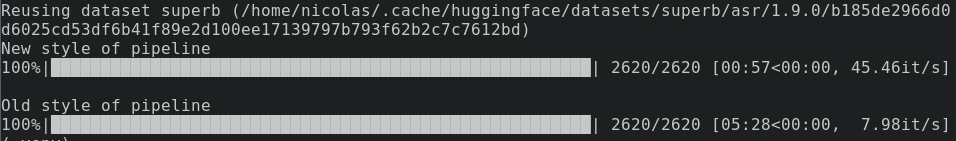

print("New style of pipeline")

for out in tqdm.tqdm(pipe(KeyDataset(dataset, "file"))):

pass

print("Old style of pipeline")

for item in tqdm.tqdm(dataset):

out = pipe(item["file"])

```

Speed (done on old suffering GTX 970):

## Backward compatibility

We're currently at 100% backward compatibility as far as the tests go. However, we are not 100% backward compatible overall.