repo | number | state | title | body | created_at | closed_at | comments |

|---|---|---|---|---|---|---|---|

transformers | 12,826 | closed | Allow the use of tensorflow datasets having UNKNOWN_CARDINALITY for TFTrainer | # 🚀 Feature request


I am currently working on a project that requires Hugging Face and TensorFlow. I created a Hugging Face dataset and converted it into a TensorFlow dataset using `from_generator()` instead of `from_tensor_slices()`, which the example in the docs uses. My dataset is very big, so converting it into tensors with a dictionary comprehension takes a long time. `from_generator()` handles this well by processing the data on the fly. TensorFlow allows training on datasets with UNKNOWN_CARDINALITY, and I would like to suggest supporting the same in TFTrainer.
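The cardinality behaviour described here can be reproduced with plain `tf.data`. This is an illustrative sketch only: the toy generator, its `input_ids` field, and the example count are assumptions, not taken from the issue, and `output_signature` assumes TensorFlow 2.4 or newer. A generator-backed dataset reports unknown cardinality; when the number of examples is known up front, `tf.data.experimental.assert_cardinality` attaches it so code that needs the length in advance can read it.

```python
import tensorflow as tf

NUM_EXAMPLES = 10  # assumed size of the toy dataset

def gen():
    # Toy stand-in for a Hugging Face dataset streamed on the fly.
    for i in range(NUM_EXAMPLES):
        yield {"input_ids": [i, i + 1, i + 2]}, i % 2

ds = tf.data.Dataset.from_generator(
    gen,
    output_signature=(
        {"input_ids": tf.TensorSpec(shape=(3,), dtype=tf.int32)},
        tf.TensorSpec(shape=(), dtype=tf.int32),
    ),
)

# A generator-backed dataset cannot know its own length.
unknown = int(ds.cardinality())

# If the length is known, assert it so that length queries work again.
ds_known = ds.apply(tf.data.experimental.assert_cardinality(NUM_EXAMPLES))
known = int(ds_known.cardinality())
batched = int(ds_known.batch(4).cardinality())  # ceil(10 / 4) batches

print(unknown == int(tf.data.experimental.UNKNOWN_CARDINALITY), known, batched)
```

Keras `fit()` can consume the generator-backed dataset either way; asserting the cardinality mainly helps code that must know the number of steps beforehand, such as learning-rate schedules or progress bars.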

| 07-21-2021 09:43:19 | 07-21-2021 09:43:19 | Hi! We're actually trying to deprecate the use of TFTrainer at the moment in favour of Keras - there will be a deprecation warning about this in the next release of Transformers. Using Keras you can train with a generator or tf.data.Dataset without problems.

In addition, we're looking to improve our Tensorflow integration in other ways, such as by making it easier to load huggingface datasets as Tensorflow datasets, which might allow us to retain the cardinality information regardless. This second part is still a work in progress, though!<|||||>> Hi! We're actually trying to deprecate the use of TFTrainer at the moment in favour of Keras - there will be a deprecation warning about this in the next release of Transformers. Using Keras you can train with a generator or tf.data.Dataset without problems.

>

> In addition, we're looking to improve our Tensorflow integration in other ways, such as by making it easier to load huggingface datasets as Tensorflow datasets, which might allow us to retain the cardinality information regardless. This second part is still a work in progress, though!

That's great! Looking forward to the next release! :smile:<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored. |

transformers | 12,825 | closed | TFBertModel much slower on GPU than BertModel | ## Environment info

- `transformers` version: 4.8.2

- Platform: Linux-5.8.0-55-generic-x86_64-with-glibc2.31

- Python version: 3.9.5

- PyTorch version (GPU?): 1.9.0 (True)

- Tensorflow version (GPU?): 2.5.0 (True)

- Using GPU in script?: yes

- Using distributed or parallel set-up in script?: no

### Who can help

@LysandreJik, @Rocketknight1

## Information

Hello,

I have a GPU performance issue using BertModel with PyTorch 1.9.0 (OK) and TensorFlow 2.5.0 (very slow).

I'm trying to get vectors for each token of a sentence. The following minimal working example takes 15 seconds to process an (identical) sentence 2000 times (on an RTX 3090, cudatoolkit 11.1.74). GPU usage is about 30%.

```python
import time

text = "this is a very simple sentence for which I need word piece vectors ."

def test_torch():
    import torch
    from transformers import BertModel, BertTokenizer

    tokenizer = BertTokenizer.from_pretrained("bert-base-multilingual-cased")
    model = BertModel.from_pretrained("bert-base-multilingual-cased")
    model = model.cuda()
    start = time.time()
    for x in range(2000):
        words = tokenizer.cls_token + text + tokenizer.sep_token  # add [CLS] and [SEP] around sentence
        tokenids = tokenizer.convert_tokens_to_ids(tokenizer.tokenize(words))
        #print(tokenids)
        token_tensors = torch.tensor([tokenids])
        with torch.no_grad():
            last_layer_features = model(token_tensors.cuda())[0]
            #print(last_layer_features)
    end = time.time()
    print("%d seconds" % (end - start))
```

whereas using Tensorflow the same takes 130 seconds (and the GPU usage is about 4%):

```python
def test_tf2():
    import tensorflow as tf
    from transformers import TFBertModel, BertTokenizer

    with tf.device("/gpu:0"):
        tokenizer = BertTokenizer.from_pretrained("bert-base-multilingual-cased")
        model = TFBertModel.from_pretrained("bert-base-multilingual-cased")
        #model = model.gpu() # does not exist
        start = time.time()
        for x in range(2000):
            words = tokenizer.cls_token + text + tokenizer.sep_token  # add [CLS] and [SEP] around sentence
            tokenids = tokenizer.convert_tokens_to_ids(tokenizer.tokenize(words))
            token_tensors = tf.convert_to_tensor([tokenids])
            #with torch.no_grad():
            last_layer_features = model(token_tensors.gpu(), training=False)[0]
        end = time.time()
        print("%d seconds" % (end - start))
```

So obviously I do not copy things correctly to the GPUs in the Tensorflow example. Does anyone have an idea what is wrong here?

| 07-21-2021 09:04:23 | 07-21-2021 09:04:23 | This is more of a TF issue than a Transformers one - eager TF can be quite inefficient, especially when you're repeatedly calling it with small inputs. In TF you can 'compile' a Python function or model call - this is analogous to using TorchScript in PyTorch, but is much more common and integrated into the main library - e.g. Keras almost always compiles models before running them.

I tested your code with compilation and I got a speedup of 8x-10x, so I'd recommend using that. You can just add `model = tf.function(model)` before the loop, or for even higher performance on recent versions of TF you can use `model = tf.function(model, jit_compile=True)`. Alternatively, all of our models are Keras models, and if you run using the Keras API methods like fit() and predict(), all of the details of compilation and feeding data will be handled for you, and performance will usually be very good.
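Why compilation interacts with input length can be sketched with plain TensorFlow: a `tf.function` is traced again for every new input shape it sees, so sentences of varying length keep paying the tracing cost, while padding to one fixed length (or declaring an `input_signature` with `None` dimensions) lets a single trace be reused. In the sketch, `tf.reduce_sum` is a hypothetical stand-in for the model call, the token ids are made up, and the counter is a Python side effect that only runs during tracing.

```python
import tensorflow as tf

def make_counted_fn(input_signature=None):
    """Wrap a toy computation in tf.function and count how often it is traced."""
    counter = {"traces": 0}

    def fn(token_ids):
        counter["traces"] += 1  # Python code: executes only while tracing
        return tf.reduce_sum(token_ids, axis=-1)  # stand-in for model(token_ids)

    return tf.function(fn, input_signature=input_signature), counter

def pad_to(ids, length, pad_id=0):
    # Right-pad a list of token ids; pad_id=0 is an assumption (BERT's [PAD] id).
    return ids + [pad_id] * (length - len(ids))

sentences = [[101, 7, 8, 102], [101, 7, 8, 9, 10, 102], [101, 5, 102]]
max_len = max(len(s) for s in sentences)

# 1) Unpadded inputs: each new length triggers a fresh trace.
fn_a, count_a = make_counted_fn()
for ids in sentences:
    fn_a(tf.constant([ids], dtype=tf.int32))

# 2) Padded to a common length: one shape, one trace.
fn_b, count_b = make_counted_fn()
for ids in sentences:
    fn_b(tf.constant([pad_to(ids, max_len)], dtype=tf.int32))

# 3) Unpadded, but with a shape-polymorphic signature: also one trace.
fn_c, count_c = make_counted_fn(
    input_signature=[tf.TensorSpec(shape=(None, None), dtype=tf.int32)]
)
for ids in sentences:
    fn_c(tf.constant([ids], dtype=tf.int32))

print(count_a["traces"], count_b["traces"], count_c["traces"])
```

With `jit_compile=True`, XLA generally compiles per concrete shape as well, which is another reason fixed-length padding tends to pay off there.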

For more, I wrote a reddit post on the Keras approach with our library [here](https://www.reddit.com/r/MachineLearning/comments/ok81v4/n_tf_keras_and_transformers/), including links to example scripts.

You can also see a guide on using `tf.function` as a call or a decorator [here](https://www.tensorflow.org/guide/function).<|||||>Note that this code is a little bit sloppy - you'll ordinarily want to write the whole function that does what you want, and then call or decorate that function with `tf.function`, as explained in the TF docs. `tf.function(model)` works fine for this small example, but will cause problems if you then later want to use the model object for other things, such as using the Keras API with it.<|||||>Thanks for your reply! I tried `tf.function(model)` and it works nicely. However, my MWE was too minimal: in reality every sentence differs in wording and length. So I had to add padding to make sure that all sentences have an equal (maximal) length, and now my "real" code runs as fast as PyTorch. Apparently PyTorch pads automagically, but in TensorFlow I have to do it manually. Anyway, it works!<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored. |

transformers | 12,824 | closed | Can't use padding in Wav2Vec2Tokenizer. TypeError: '<' not supported between instances of 'NoneType' and 'int'. | # **Questions & Help**

## Details

I'm trying to get a tensor of labels from a text in order to train a Wav2Vec2ForCTC model from scratch, but `pad_token_id` is apparently `None`, even though I've set a `pad_token` in my tokenizer.

This is my code:

```python
# Generating the Processor
from transformers import Wav2Vec2CTCTokenizer
from transformers import Wav2Vec2FeatureExtractor
from transformers import Wav2Vec2Processor

tokenizer = Wav2Vec2CTCTokenizer("./vocab.json", unk_token = "[UNK]", pad_token = "[PAD]", word_delimiter_token="|")
feature_extractor = Wav2Vec2FeatureExtractor(feature_size=1, sampling_rate=sampling_rate, padding_value=0.0, do_normalize=True, return_attention_mask=False)
processor = Wav2Vec2Processor(feature_extractor=feature_extractor, tokenizer=tokenizer)

with processor.as_target_processor():
    batch["labels"] = processor(batch["text"], padding = True, max_length = 1000, return_tensors="pt").input_ids
```

Error message is this:

```
---------------------------------------------------------------------------
TypeError Traceback (most recent call last)
<ipython-input-8-45831c0137f6> in <module>
9
10 # Processing
---> 11 data = prepare(data)
12 data["input"] = data["input"][0]
13 data["input"] = np.array([inp.T.reshape(12*4096) for inp in data["input"]])

<ipython-input-4-aaba15f24a61> in prepare(batch)
29 # Texts
30 with processor.as_target_processor():
---> 31 batch["labels"] = processor(batch["text"], padding = True, max_length = 1000, return_tensors="pt").input_ids
32
33 return batch

~/anaconda3/lib/python3.8/site-packages/transformers/models/wav2vec2/processing_wav2vec2.py in __call__(self, *args, **kwargs)
115 the above two methods for more information.
116 """
--> 117 return self.current_processor(*args, **kwargs)
118
119 def pad(self, *args, **kwargs):

~/anaconda3/lib/python3.8/site-packages/transformers/tokenization_utils_base.py in __call__(self, text, text_pair, add_special_tokens, padding, truncation, max_length, stride, is_split_into_words, pad_to_multiple_of, return_tensors, return_token_type_ids, return_attention_mask, return_overflowing_tokens, return_special_tokens_mask, return_offsets_mapping, return_length, verbose, **kwargs)
2252 if is_batched:
2253 batch_text_or_text_pairs = list(zip(text, text_pair)) if text_pair is not None else text
-> 2254 return self.batch_encode_plus(
2255 batch_text_or_text_pairs=batch_text_or_text_pairs,
2256 add_special_tokens=add_special_tokens,

~/anaconda3/lib/python3.8/site-packages/transformers/tokenization_utils_base.py in batch_encode_plus(self, batch_text_or_text_pairs, add_special_tokens, padding, truncation, max_length, stride, is_split_into_words, pad_to_multiple_of, return_tensors, return_token_type_ids, return_attention_mask, return_overflowing_tokens, return_special_tokens_mask, return_offsets_mapping, return_length, verbose, **kwargs)
2428
2429 # Backward compatibility for 'truncation_strategy', 'pad_to_max_length'
-> 2430 padding_strategy, truncation_strategy, max_length, kwargs = self._get_padding_truncation_strategies(
2431 padding=padding,
2432 truncation=truncation,

~/anaconda3/lib/python3.8/site-packages/transformers/tokenization_utils_base.py in _get_padding_truncation_strategies(self, padding, truncation, max_length, pad_to_multiple_of, verbose, **kwargs)
2149
2150 # Test if we have a padding token
-> 2151 if padding_strategy != PaddingStrategy.DO_NOT_PAD and (not self.pad_token or self.pad_token_id < 0):
2152 raise ValueError(
2153 "Asking to pad but the tokenizer does not have a padding token. "

TypeError: '<' not supported between instances of 'NoneType' and 'int'
```

I've also tried setting the pad token with `tokenizer.pad_token = "[PAD]"`. It didn't work.
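One cause worth ruling out (an assumption; the thread never confirms it): `Wav2Vec2CTCTokenizer` looks tokens up in `vocab.json` and, as far as I can tell, falls back to the `[UNK]` id when a token is missing. If neither `[PAD]` nor `[UNK]` is a key in the file, `pad_token_id` would end up as `None`, which matches the `NoneType < int` comparison in the traceback. The helpers below are hypothetical and simply check a vocabulary for the special tokens passed to the constructor.

```python
import json

def missing_special_tokens(vocab, special_tokens=("[UNK]", "[PAD]", "|")):
    """Return the special tokens that have no id in the vocabulary dict."""
    return [tok for tok in special_tokens if tok not in vocab]

def check_vocab_file(path, special_tokens=("[UNK]", "[PAD]", "|")):
    # vocab.json maps token strings to integer ids, e.g. {"a": 0, "b": 1, ...}
    with open(path, encoding="utf-8") as f:
        vocab = json.load(f)
    return missing_special_tokens(vocab, special_tokens)

# Toy vocabulary that forgot the special tokens (illustrative only).
toy_vocab = {"a": 0, "b": 1, "c": 2, "|": 3}
print(missing_special_tokens(toy_vocab))  # ['[UNK]', '[PAD]']
```

If tokens are reported missing, adding them to the dict before writing `vocab.json` (for example `vocab["[UNK]"] = len(vocab)` followed by `vocab["[PAD]"] = len(vocab)`) should give the tokenizer valid ids.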

Does anyone know what I'm doing wrong? Thanks. | 07-21-2021 09:03:14 | 07-21-2021 09:03:14 | @mrcolorblind It's difficult to reproduce your error as many of the variables in your code snippet aren't set and I don't know where `"./vocab.json"` comes from.

However the following code snippet works:

```python
from transformers import Wav2Vec2CTCTokenizer, Wav2Vec2FeatureExtractor, Wav2Vec2Processor

tokenizer = Wav2Vec2CTCTokenizer.from_pretrained("facebook/wav2vec2-base-960h")
feature_extractor = Wav2Vec2FeatureExtractor(feature_size=1, sampling_rate=16000, padding_value=0.0, do_normalize=True, return_attention_mask=False)
processor = Wav2Vec2Processor(feature_extractor=feature_extractor, tokenizer=tokenizer)

with processor.as_target_processor():
    labels = processor(["hello", "hey", "a"], padding = True, max_length = 1000, return_tensors="pt").input_ids

```<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored.<|||||>@patrickvonplaten @patrickvonplaten I have the same error here, any help?<|||||>@patrickvonplaten me too. any help?<|||||>Could you guys please add a reproducible code snippet that I can debug? :-) Thanks!<|||||>I am getting the same error when I am trying to use gpt2 tokenizer. I am trying to fine tune bert2gpt2 encoder decoder model with your training scripts here: https://huggingface.co/patrickvonplaten/bert2gpt2-cnn_dailymail-fp16

I tried transformers 4.15.0 and 4.6.0; neither of them worked. |

transformers | 12,823 | closed | Fix generation docstrings regarding input_ids=None | # What does this PR do?

The docstrings incorrectly describe what happens if no input_ids are passed. This PR changes the docstrings so that the actual behavior is described.

## Before submitting

- [x] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

Documentation: @sgugger

| 07-21-2021 08:57:22 | 07-21-2021 08:57:22 | Thanks for reviewing!

The merge conflict has been resolved. |

transformers | 12,822 | closed | label list in MNLI dataset | ## Environment info

- `transformers` version:

- Platform: centos7.2

- Python version: Python3.6.8

- PyTorch version (GPU?): None

- Tensorflow version (GPU?): None

- Using GPU in script?: None

- Using distributed or parallel set-up in script?: None

### Who can help

Models:

- albert, bert, xlm: @LysandreJik

## Information

Model I am using bert-base-uncased-mnli

The problem arises when using:

* [ ] the official example scripts: (give details below)

* [x] my own modified scripts: (give details below)

The tasks I am working on is:

* [x] an official GLUE/SQUaD task: MNLI

* [ ] my own task or dataset:

## To reproduce

When processing the label list for the MNLI task, I noticed that `label_list` is defined differently in Hugging Face Transformers and Hugging Face Datasets.

### label list in datasets

If I load my data via datasets:

```python
import datasets
from datasets import load_dataset, load_metric

raw_datasets = load_dataset("glue", 'mnli')
print(raw_datasets['validation_matched'].features['label'].names)
```

It returns:

```
['entailment', 'neutral', 'contradiction']
```

And label is also mentioned in document: https://huggingface.co/datasets/glue.

```
mnli
premise: a string feature.
hypothesis: a string feature.
label: a classification label, with possible values including entailment (0), neutral (1), contradiction (2).
idx: a int32 feature.
```

### label list in transformers

But in huggingface transformers:

```python
import transformers

processor = transformers.glue_processors['mnli']()
label_list = processor.get_labels()
print(label_list)
```

It returns:

```
['contradiction', 'entailment', 'neutral']
```

### label configs

I checked the config used in datasets which is downloaded from https://raw.githubusercontent.com/huggingface/datasets/1.8.0/datasets/glue/glue.py.

The definition of `label_classes` is:

```
label_classes=["entailment", "neutral", "contradiction"],
```

And in transformers master, it is defined in this function: https://github.com/huggingface/transformers/blob/15d19ecfda5de8c4b50e2cd3129a16de281dbd6d/src/transformers/data/processors/glue.py#L247

It's confusing that the same MNLI task uses a different label order in datasets and transformers. I would expect it to be the same in both.
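Until both sides agree, label ids have to be translated between the two orders. `datasets` offers `Dataset.align_labels_with_mapping` for this (mentioned in the replies); the plain-Python sketch below only illustrates what such a remapping does, using the two orders printed above. The helper names are hypothetical, and treating `-1` as "no label" is an assumption based on how unlabeled GLUE rows are commonly marked in `datasets`.

```python
DATASETS_ORDER = ["entailment", "neutral", "contradiction"]      # features['label'].names
TRANSFORMERS_ORDER = ["contradiction", "entailment", "neutral"]  # glue_processors['mnli']().get_labels()

def build_id_remap(src_names, dst_names):
    """Map each label id in src_names' order to the id of the same name in dst_names."""
    dst_index = {name: i for i, name in enumerate(dst_names)}
    if set(src_names) != set(dst_index):
        raise ValueError("label sets differ; cannot remap")
    return {i: dst_index[name] for i, name in enumerate(src_names)}

def remap_labels(labels, remap, missing=-1):
    # Leave unlabeled examples (assumed to be marked -1) untouched.
    return [label if label == missing else remap[label] for label in labels]

remap = build_id_remap(DATASETS_ORDER, TRANSFORMERS_ORDER)
print(remap)                               # {0: 1, 1: 2, 2: 0}
print(remap_labels([0, 1, 2, -1], remap))  # [1, 2, 0, -1]
```

Remapping by label *name* rather than by position is the point: it stays correct whichever side changes its order.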

| 07-21-2021 08:46:48 | 07-21-2021 08:46:48 | cc @sgugger @lhoestq, and I think @lewtun was also interested in that at some point.<|||||>Note that the label_list in Transformers is deprecated and should not be used anymore.<|||||>Indeed the order between a model's labels and those in a dataset can differ, which is why we've added a `Dataset.align_labels_with_mapping` function in this PR: https://github.com/huggingface/datasets/pull/2457<|||||>Hi @lewtun, @sgugger. Thanks for the quick reply. So `label_list` won't be supported in transformers in the future and should be handled by datasets, right?<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored.<|||||>Hi,

Some of the examples in the dev_mismatched file don't have labels. How should we compute accuracy on them?

|

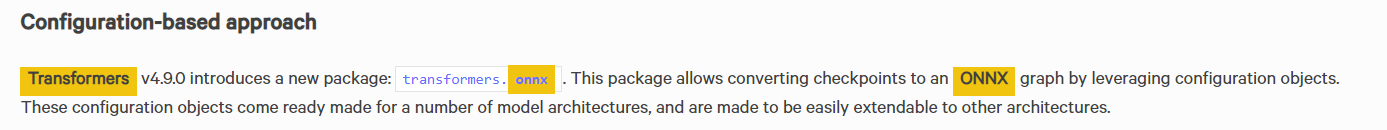

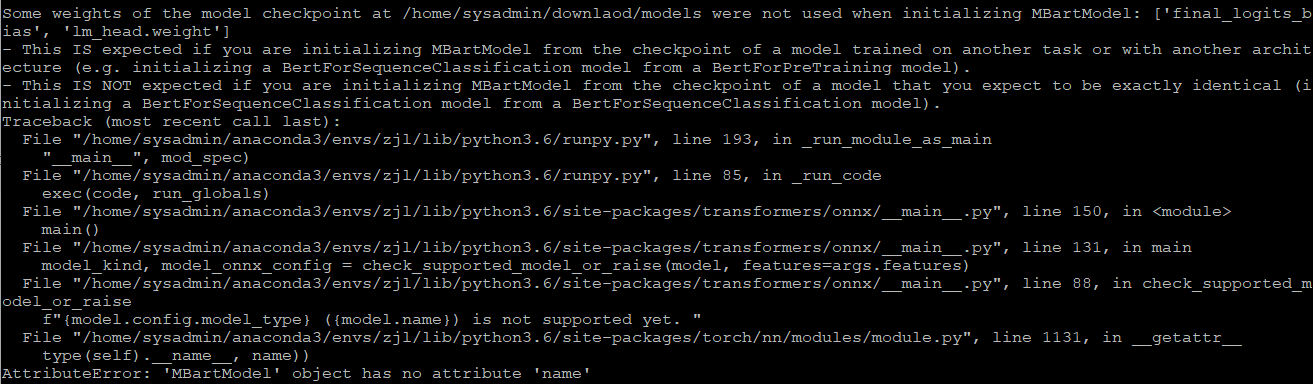

transformers | 12,821 | closed | Any example to accelerate BART/MBART model with onnx runtime? | # 🚀 Feature request

`transformers.onnx` provides a simple way to convert BART/mBART models to ONNX, but there is no example of how these models can actually be executed with ONNX Runtime. Because some exported models may raise various errors, could you please provide some examples of using ONNX Runtime to execute them?
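Pending an official example, here is a minimal sketch of running an exported model with ONNX Runtime. It assumes the model was exported with `python -m transformers.onnx` to a file such as `onnx/model.onnx` (hypothetical path) and that the tokenizer returns NumPy arrays. A frequent source of errors is feeding inputs the exported graph does not declare (ONNX Runtime rejects unknown input names), so the hypothetical `build_onnx_feed` helper builds the feed from the session's own input list.

```python
def build_onnx_feed(input_names, encoded):
    """Select exactly the inputs the ONNX graph declares from a tokenizer output dict."""
    missing = [name for name in input_names if name not in encoded]
    if missing:
        raise KeyError(f"tokenizer output lacks graph inputs: {missing}")
    return {name: encoded[name] for name in input_names}

def run_onnx(model_path, encoded):
    # Imported lazily so build_onnx_feed can be used without onnxruntime installed.
    import onnxruntime as ort

    session = ort.InferenceSession(model_path, providers=["CPUExecutionProvider"])
    input_names = [inp.name for inp in session.get_inputs()]
    # output_names=None asks ONNX Runtime for every graph output.
    return session.run(None, build_onnx_feed(input_names, encoded))

# Usage sketch (needs transformers, onnxruntime and an exported model):
# from transformers import AutoTokenizer
# tok = AutoTokenizer.from_pretrained("facebook/bart-base")
# outputs = run_onnx("onnx/model.onnx", dict(tok("Hello world", return_tensors="np")))

# Toy tokenizer output with one extra key the graph is assumed not to declare.
encoded = {"input_ids": [[0, 100, 2]], "attention_mask": [[1, 1, 1]], "token_type_ids": [[0, 0, 0]]}
print(sorted(build_onnx_feed(["input_ids", "attention_mask"], encoded)))
```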

| 07-21-2021 08:43:59 | 07-21-2021 08:43:59 | `transformers.onnx` provides a simple way to convert HF models to ONNX models, but there are no examples of how to execute them with ONNX Runtime. Some ONNX models actually hit errors when executed with ONNX Runtime. Could you please provide some examples and experiments on running them with ONNX Runtime? |

transformers | 12,820 | closed | fix typo in gradient_checkpointing metadata | help for [ModelArguments.gradient_checkpointing] should be

"If True, use gradient checkpointing to save memory

at the expense of slower backward pass."

not "Whether to freeze the feature extractor layers of the model."

(which is duplicated from [freeze_feature_extractor])
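For context, the example scripts declare these options as dataclass fields whose `metadata["help"]` string becomes the command-line help text. A minimal sketch of the corrected pair of fields follows; the defaults are illustrative assumptions, not copied from the script.

```python
from dataclasses import dataclass, field, fields

@dataclass
class ModelArguments:
    # Defaults below are illustrative assumptions.
    freeze_feature_extractor: bool = field(
        default=True,
        metadata={"help": "Whether to freeze the feature extractor layers of the model."},
    )
    gradient_checkpointing: bool = field(
        default=False,
        metadata={
            "help": "If True, use gradient checkpointing to save memory at the expense of slower backward pass."
        },
    )

help_texts = {f.name: f.metadata["help"] for f in fields(ModelArguments)}
for name, text in help_texts.items():
    print(f"--{name}: {text}")
```

With transformers installed, passing this class to `HfArgumentParser` would surface these strings in `--help`, which is why a copy-pasted help string is misleading to users.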

# What does this PR do?



Fixes Typo

## Before submitting

- [x] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests), Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the [documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and [here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

@patrickvonplaten


| 07-21-2021 08:29:09 | 07-21-2021 08:29:09 | |

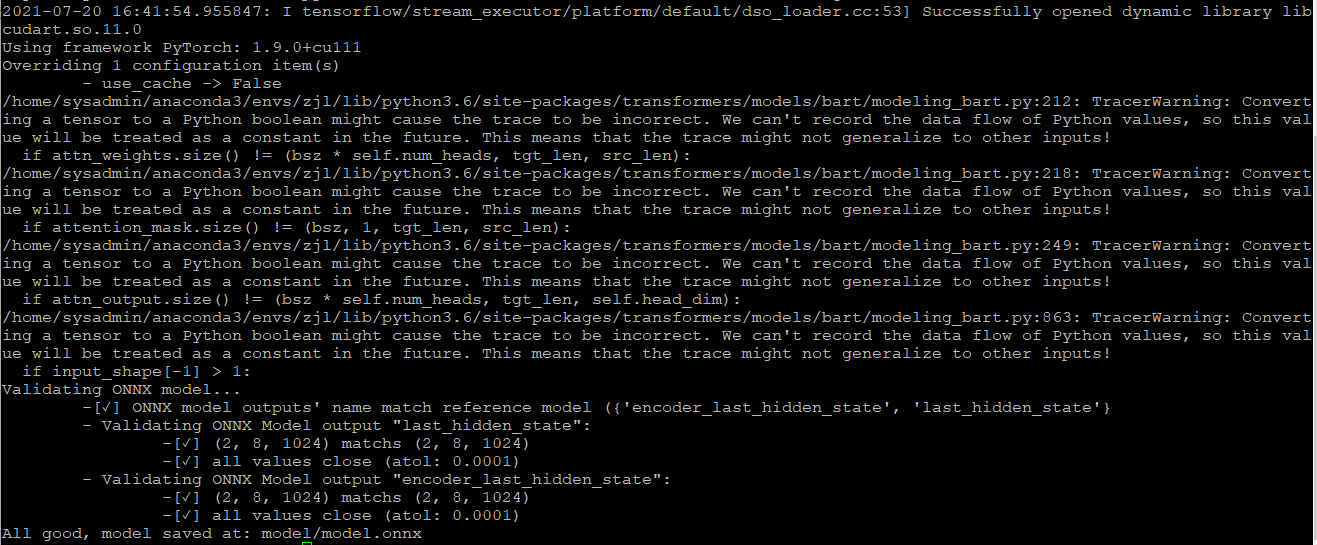

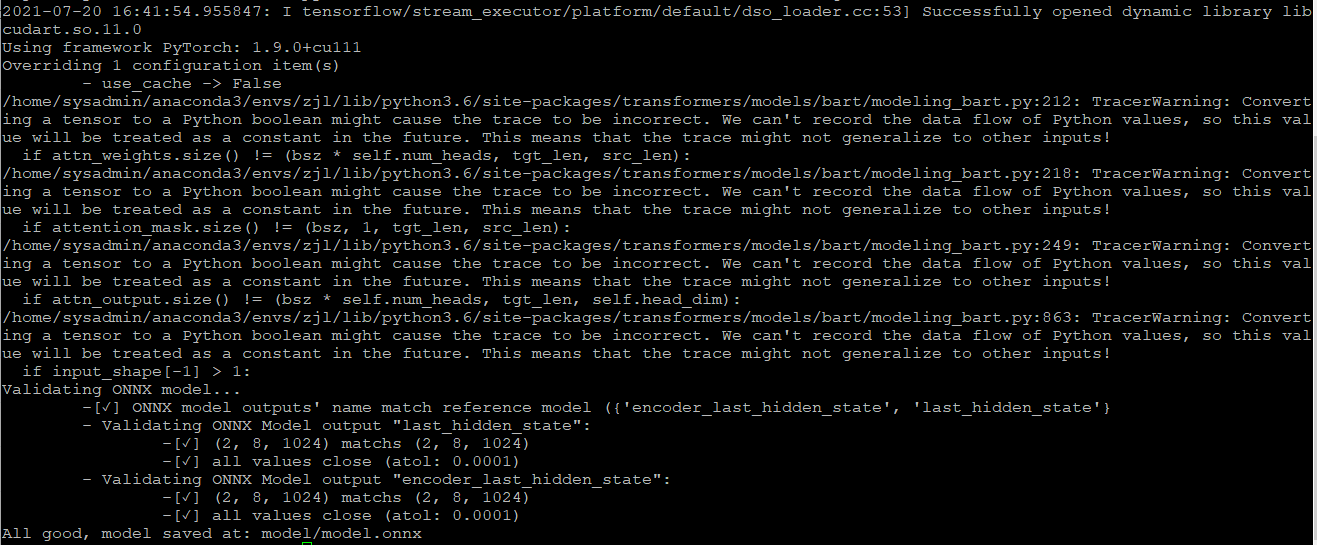

transformers | 12,819 | closed | Converting a tensor to a python boolean might cause the trace to be incorrect. We can't record the data flow of python values | ## Environment info


- `transformers` version:

- Platform: CENTOS 8.

- Python version: 3.7

- PyTorch version (GPU?): 1.9.0 + cuda

- Tensorflow version (GPU?): No

- Using GPU in script?: No

- Using distributed or parallel set-up in script?: No

### Who can help

@patrickvonplaten, @patil-suraj @LysandreJik

## Information

I converted the Hugging Face BART model to an ONNX model using `transformers.onnx` (thanks for the excellent tool). However, the warnings in the title appeared during conversion, and I am worried they may affect the model's performance.
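Per the reply below, in the BART export these warnings come from shape assertions, which are harmless as long as inputs respect them. The toy module here (not from transformers) shows the case the warning actually guards against: control flow that depends on tensor *values* is frozen into the trace, so the traced model silently replays whichever branch the example input took.

```python
import warnings

import torch

class BranchOnData(torch.nn.Module):
    """Toy module whose control flow depends on tensor values (illustrative only)."""

    def forward(self, x):
        if x.sum() > 0:  # tensor -> Python bool: this is what emits the TracerWarning
            return x * 2
        return x * -1

model = BranchOnData()
with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")
    traced = torch.jit.trace(model, torch.ones(3))  # traced with a positive input

tracer_warned = any(issubclass(w.category, torch.jit.TracerWarning) for w in caught)

negative = -torch.ones(3)
eager_out = model(negative)    # eager mode takes the second branch: [1., 1., 1.]
traced_out = traced(negative)  # the trace replays the recorded first branch: [-2., -2., -2.]
print(tracer_warned, eager_out.tolist(), traced_out.tolist())
```

An assertion such as "input shape must match" only fails or passes and does not change the computed values, which is why that flavour of the warning can usually be ignored.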

| 07-21-2021 06:38:33 | 07-21-2021 06:38:33 | Hi @leoozy, you can safely disregard these warnings. They should only apply to assertions we make to ensure that the input shape passed is correct.<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored. |

transformers | 12,818 | closed | Refer warmup_ratio when setting warmup_num_steps. | # What does this PR do?

This PR fixes a bug where the DeepSpeed scheduler ignores the `warmup_ratio` argument even though it does use the `warmup_steps` argument. This contradicts the warning message "Both warmup_ratio and warmup_steps given, warmup_steps will override any effect of warmup_ratio during training" defined in training_args.py L.700.

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests), Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the [documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and [here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Since this PR relates to the DeepSpeed integration, I hope @stas00 can review it. | 07-21-2021 04:19:28 | 07-21-2021 04:19:28 | Thank you for your PR, @tsuchm

I'm trying to understand what you're trying to accomplish and could use some help from you.

Did I understand you correctly that you're saying that Deepspeed integration ignores the `--warmup_ratio` argument and you're proposing how to integrate it? I agree that it could be integrated.

In which case the logic should be the same as in:

https://github.com/huggingface/transformers/blob/cabcc75171650f9131a4cf31c62e1f102589014e/src/transformers/trainer.py#L825-L828

so that `--warmup_steps` takes precedence and we are matching HF Trainer consistently.

The part that I didn't get is what does this have to do with the warning from:

https://github.com/huggingface/transformers/blob/cabcc75171650f9131a4cf31c62e1f102589014e/src/transformers/training_args.py#L724-L727

Thank you.<|||||>> Did I understand you correctly that you're saying that Deepspeed integration ignores the --warmup_ratio argument and you're proposing how to integrate it? I agree that it could be integrated.

Yes, your understanding is correct.

> In which case the logic should be the same as in:

Yes, my PR also aimed to reproduce the code you pointed to, although I agree that it is not exactly equivalent.

However, if you, the development team, want these two fragments to be exactly the same, I think it is better to abstract them into a function than to copy the code. Unfortunately, I do not know the code base well enough to do that abstraction.

> The part that I didn't get is what does this have to do with the warning from:

I am sorry for the confusing comment. I quoted the warning above simply to show that this behavior (the DeepSpeed scheduler ignoring the `warmup_ratio` argument) does not match what we users expect. I don't think the warning message itself needs fixing.<|||||>I totally agree on all accounts. Thank you for the clarification.

Would you like me to push the changes into your PR branch or would you rather try to do it yourself with my guidance?

Basically this code:

```python
warmup_steps = (
    self.args.warmup_steps
    if self.args.warmup_steps > 0
    else math.ceil(num_training_steps * self.args.warmup_ratio)
)
```

can be made into a method around the same place, e.g. `def get_warmup_steps(self, num_training_steps)`, and then we can call it in both places, in the same way.
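A minimal standalone sketch of the proposed helper, written as a free function so it can be tested in isolation. The signature is hypothetical; in the real code the values would come from `self.args`, as in the snippet above.

```python
import math

def get_warmup_steps(num_training_steps, warmup_steps=0, warmup_ratio=0.0):
    """An explicit warmup_steps wins; otherwise derive the count from warmup_ratio."""
    return (
        warmup_steps
        if warmup_steps > 0
        else math.ceil(num_training_steps * warmup_ratio)
    )

print(get_warmup_steps(1000, warmup_ratio=0.25))                   # 250
print(get_warmup_steps(1000, warmup_steps=50, warmup_ratio=0.25))  # 50
print(get_warmup_steps(10, warmup_ratio=0.06))                     # 1 (rounded up)
```

Keeping the precedence rule in one function means the HF scheduler and the DeepSpeed scheduler cannot drift apart again.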

Let me know what you prefer.

P.S. Maybe it belongs best in `TrainingArguments` - let's ask @sgugger where he prefers such a wrapper to reside. But for now, edit it in place as I suggested if you'd like to try to work it out by yourself.

<|||||>I agree with you. I added a new method to the `TrainingArguments` class that returns the number of warmup steps. Since my environment uses version 4.8.2, I confirmed that my proposal works on 4.8.2 and then ported it to the main branch.

Could you review my PR?<|||||>a gentle note to reviewers: please don't forget the docs.

Fixed here: https://github.com/huggingface/transformers/pull/12830<|||||>I'm sorry I missed the documentation inside `deepspeed`; the main point of the PR was that it was documented a certain way in `training_args.py` and not enforced.<|||||>That's alright, @sgugger. I was just asleep and didn't have a chance to review.

My understanding is that the main intention of this PR was to fix the missing functionality in the Deepspeed integration, and in the process other parts of the ecosystem were touched.<|||||>Thanks to all reviewers. I have finally succeeded in reproducing the results of previous research that used a warmup strategy. |

transformers | 12,817 | closed | tensor size mismatch in NER.py | Hi,

I am trying to classify tokens for a task similar to NER. I am using the following code:

transformers/examples/pytorch/token-classification/run_ner.py

My input data is in JSON format for both train and test. When I perform classification using BioBERT, it runs without any issue. When I use BERT base (cased or uncased), I get the following error in the middle of the training:

```
Traceback (most recent call last):
  File "/home/tv349/PharmaBERT/transformers/examples/pytorch/token-classification/run_ner.py", line 530, in <module>
    main()
  File "/home/tv349/PharmaBERT/transformers/examples/pytorch/token-classification/run_ner.py", line 463, in main
    train_result = trainer.train(resume_from_checkpoint=checkpoint)
  File "/home/tv349/.conda/envs/HF/lib/python3.8/site-packages/transformers/trainer.py", line 1269, in train
    tr_loss += self.training_step(model, inputs)
  File "/home/tv349/.conda/envs/HF/lib/python3.8/site-packages/transformers/trainer.py", line 1754, in training_step
    loss = self.compute_loss(model, inputs)
  File "/home/tv349/.conda/envs/HF/lib/python3.8/site-packages/transformers/trainer.py", line 1786, in compute_loss
    outputs = model(**inputs)
  File "/home/tv349/.local/lib/python3.8/site-packages/torch/nn/modules/module.py", line 889, in _call_impl
    result = self.forward(*input, **kwargs)
  File "/home/tv349/.conda/envs/HF/lib/python3.8/site-packages/transformers/models/bert/modeling_bert.py", line 1712, in forward
    outputs = self.bert(
  File "/home/tv349/.local/lib/python3.8/site-packages/torch/nn/modules/module.py", line 889, in _call_impl
    result = self.forward(*input, **kwargs)
  File "/home/tv349/.conda/envs/HF/lib/python3.8/site-packages/transformers/models/bert/modeling_bert.py", line 984, in forward
    embedding_output = self.embeddings(
  File "/home/tv349/.local/lib/python3.8/site-packages/torch/nn/modules/module.py", line 889, in _call_impl
    result = self.forward(*input, **kwargs)
  File "/home/tv349/.conda/envs/HF/lib/python3.8/site-packages/transformers/models/bert/modeling_bert.py", line 221, in forward
    embeddings += position_embeddings
RuntimeError: The size of tensor a (607) must match the size of tensor b (512) at non-singleton dimension 1
```

Also, this is how I run the script:

```
python transformers/examples/pytorch/token-classification/run_ner.py \
  --model_name_or_path $model_path \
  --train_file $train_file \
  --validation_file $validation_file \
  --output_dir $root_output_dir \
  --overwrite_output_dir \
  --do_train \
  --do_eval
```

I don't think the problem is in my input data or settings, because everything works for BioBERT but fails for BERT base.

Could you please let me know what I can do to avoid this issue?

Thanks! | 07-21-2021 02:57:35 | 07-21-2021 02:57:35 | cc @sgugger <|||||>Mmm the problem looks like it's coming from the position embedding with an input of size 607, which is larger than 512 (the maximum handled apparently).<|||||>Thanks for the response. But why do you think it works for BioBert and not BERT base?<|||||>Will it help if I provide my training data?<|||||>Anything that could helps us reproduce the issue would help, yes.<|||||>> But why do you think it works for BioBert and not BERT base?

It seems this is happening because of the [`model_max_length`] property in `tokenizer_config.json`. As far as I understand from the [docs], setting this property makes the tokenizer truncate the tokens to that length during tokenization (please correct me if I am wrong).

The [`tokenizer_config.json` of biobert] sets `model_max_length=512`, but for bert-base ([cased] or [uncased]), `model_max_length` is not specified.
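To make the failure mode concrete, here is a small pure-Python sketch. It assumes a BERT-style model with 512 position embeddings. `position_ids` and `truncate` are hypothetical stand-ins, not Transformers APIs. The first mimics the position-embedding lookup that fails once a sequence is longer than the table. The second mimics tokenizer truncation to `model_max_length`, simplified to plain slicing (real tokenizers also keep special tokens such as `[SEP]`).

```python
from typing import List, Optional

# Assumption: bert-base has max_position_embeddings = 512 (one row per position).
MAX_POSITION_EMBEDDINGS = 512


def position_ids(seq_len: int, max_positions: int = MAX_POSITION_EMBEDDINGS) -> List[int]:
    """Hypothetical stand-in for the position-embedding lookup.

    A sequence longer than the embedding table cannot be embedded, which is
    what the 607-vs-512 RuntimeError in the traceback is reporting.
    """
    if seq_len > max_positions:
        raise ValueError(
            f"sequence length {seq_len} exceeds {max_positions} position embeddings"
        )
    return list(range(seq_len))


def truncate(input_ids: List[int], model_max_length: Optional[int]) -> List[int]:
    """Hypothetical stand-in for tokenizer truncation (plain slicing only)."""
    if model_max_length is None:
        # No limit configured (the bert-base case in this thread): nothing is cut.
        return input_ids
    return input_ids[:model_max_length]


long_example = list(range(607))  # a 607-token input, as in the traceback

# With model_max_length=512 (as in BioBERT's tokenizer_config.json) the input fits.
print(len(position_ids(len(truncate(long_example, 512)))))  # 512

# Without it, all 607 positions reach the embedding table.
try:
    position_ids(len(truncate(long_example, None)))
except ValueError as err:
    print(err)  # sequence length 607 exceeds 512 position embeddings
```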

[`model_max_length`]: https://huggingface.co/transformers/internal/tokenization_utils.html?highlight=model_max_length#transformers.tokenization_utils_base.PreTrainedTokenizerBase

[docs]: https://huggingface.co/transformers/internal/tokenization_utils.html?highlight=model_max_length#transformers.tokenization_utils_base.PreTrainedTokenizerBase

[`tokenizer_config.json` of biobert]: https://huggingface.co/dmis-lab/biobert-v1.1/blob/main/tokenizer_config.json

[cased]: https://huggingface.co/bert-base-cased/blob/main/tokenizer_config.json

[uncased]: https://huggingface.co/bert-base-uncased/blob/main/tokenizer_config.json<|||||>Yes, that is very likely the case. I think the script is simply missing a `max_seq_length` parameter (like there is for text classification for instance) that the user should set when the `model_max_length` is either too large or simply missing.

I will add that tomorrow.<|||||>Adding model_max_length=512 to the tokenizer_config.json solved the issue. Thank you very much!<|||||>@sgugger that would be a great idea. I was looking for that option but couldn't find it. Thank you very much for your consideration. |

transformers | 12,816 | closed | [debug] DebugUnderflowOverflow doesn't work with DP | As reported in https://github.com/huggingface/transformers/issues/12815 `DebugUnderflowOverflow` breaks under DP since the model gets new references to model sub-modules/params on replication and the old references are needed to track the model layer names.

It might be possible to find a workaround, most likely by overriding `torch.nn.parallel.data_parallel.replicate` to refresh the model references after the replication. At the moment this is not required, though, since DDP (or a single GPU) works just fine.

So this PR adds a clean assert when DP is used, instead of a confusing exception, and updates the docs.

Fixes: https://github.com/huggingface/transformers/issues/12815

@sgugger | 07-21-2021 01:18:58 | 07-21-2021 01:18:58 | |

transformers | 12,815 | closed | DebugUnderflowOverflow crashes with Multi-GPU training | ## Environment info


- `transformers` version: 4.8.2

- Platform: Linux-4.15.0-29-generic-x86_64-with-Ubuntu-18.04-bionic

- Python version: 3.6.9

- PyTorch version (GPU?): 1.9.0+cu102 (True)

- Tensorflow version (GPU?): not installed (NA)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

- Using GPU in script?: Yes

- Using distributed or parallel set-up in script?: Yes

### Who can help

@stas00

@sgugger


## Information

Model I am using (Bert, XLNet ...): Bart (but this is inessential)

The problem arises when using:

* [ ] the official example scripts: (give details below)

* [x] my own modified scripts: (give details below)

The tasks I am working on is:

* [ ] an official GLUE/SQUaD task: (give the name)

* [x] my own task or dataset: (give details below)

## To reproduce

Steps to reproduce the behavior:

1. Instantiate a `debug_utils.DebugUnderflowOverflow` for a model (I do this with `debug="underflow_overflow"` to a `TrainingArguments`).

2. Train using multi-GPU setup.

3. The debug hook that `DebugUnderflowOverflow` adds to run after `forward()` will crash because of a bad lookup in the class's `module_names` dict.

This does not happen on single-GPU training.

Here's an example that crashes if I run on my (4-GPU) machine, but does **not** crash if I restrict to a single GPU (by calling `export CUDA_VISIBLE_DEVICES=0` before invoking the script):

```
from torch.utils.data import Dataset
from transformers import (BartForConditionalGeneration, BartModel, BartConfig,
                          Seq2SeqTrainingArguments, Seq2SeqTrainer)

class DummyDataset(Dataset):
    def __len__(self):
        return 5

    def __getitem__(self, idx):
        return {'input_ids': list(range(idx, idx+3)),
                'labels': list(range(idx, idx+3))}

def main():
    train_dataset = DummyDataset()

    config = BartConfig(vocab_size=10, max_position_embeddings=10, d_model=8,
                        encoder_layers=1, decoder_layers=1,
                        encoder_attention_heads=1, decoder_attention_heads=1,
                        decoder_ffn_dim=8, encoder_ffn_dim=8)
    model = BartForConditionalGeneration(config)

    args = Seq2SeqTrainingArguments(output_dir="tmp", do_train=True,
                                    debug="underflow_overflow")

    trainer = Seq2SeqTrainer(model=model, args=args, train_dataset=train_dataset)
    trainer.train()

if __name__ == '__main__':
    main()
```

I get the following stack trace on my multi-GPU machine:

```
Traceback (most recent call last):
  File "./kbp_dbg.py", line 29, in <module>
    main()
  File "./kbp_dbg.py", line 26, in main
    trainer.train()
  File "[...]/transformers/trainer.py", line 1269, in train
    tr_loss += self.training_step(model, inputs)
  File "[...]/transformers/trainer.py", line 1762, in training_step
    loss = self.compute_loss(model, inputs)
  File "[...]/transformers/trainer.py", line 1794, in compute_loss
    outputs = model(**inputs)
  File "[...]/torch/nn/modules/module.py", line 1051, in _call_impl
    return forward_call(*input, **kwargs)
  File "[...]/torch/nn/parallel/data_parallel.py", line 168, in forward
    outputs = self.parallel_apply(replicas, inputs, kwargs)
  File "[...]/torch/nn/parallel/data_parallel.py", line 178, in parallel_apply
    return parallel_apply(replicas, inputs, kwargs, self.device_ids[:len(replicas)])
  File "[...]/torch/nn/parallel/parallel_apply.py", line 86, in parallel_apply
    output.reraise()
  File "[...]/torch/_utils.py", line 425, in reraise
    raise self.exc_type(msg)
KeyError: Caught KeyError in replica 0 on device 0.
Original Traceback (most recent call last):
  File "[...]/torch/nn/parallel/parallel_apply.py", line 61, in _worker
    output = module(*input, **kwargs)
  File "[...]/torch/nn/modules/module.py", line 1071, in _call_impl
    result = forward_call(*input, **kwargs)
  File "[...]/transformers/models/bart/modeling_bart.py", line 1308, in forward
    return_dict=return_dict,
  File "[...]/torch/nn/modules/module.py", line 1071, in _call_impl
    result = forward_call(*input, **kwargs)
  File "[...]/transformers/models/bart/modeling_bart.py", line 1173, in forward
    return_dict=return_dict,
  File "[...]/torch/nn/modules/module.py", line 1071, in _call_impl
    result = forward_call(*input, **kwargs)
  File "[...]/transformers/models/bart/modeling_bart.py", line 756, in forward
    inputs_embeds = self.embed_tokens(input_ids) * self.embed_scale
  File "[...]/torch/nn/modules/module.py", line 1076, in _call_impl
    hook_result = hook(self, input, result)
  File "[...]/transformers/debug_utils.py", line 246, in forward_hook
    self.create_frame(module, input, output)
  File "[...]/transformers/debug_utils.py", line 193, in create_frame
    self.expand_frame(f"{self.prefix} {self.module_names[module]} {module.__class__.__name__}")
KeyError: Embedding(10, 8, padding_idx=1)
```

And, again, it completes totally fine if you restrict visibility to a single GPU via `CUDA_VISIBLE_DEVICES` before running this.

Having looked into it, I strongly suspect what's happening is the following:

- the `DebugUnderflowOverflow` instantiated in `Trainer.train` populates a `module_names` dict from nn.Modules to names in its constructor [(link)](https://github.com/huggingface/transformers/blob/master/src/transformers/debug_utils.py#L182)

- If multi-gpu training is enabled, `nn.DataParallel` is called (I think [here in trainer.py](https://github.com/huggingface/transformers/blob/master/src/transformers/trainer.py#L943)).

- This calls `torch.nn.parallel.replicate` in its forward pass [(link)](https://github.com/pytorch/pytorch/blob/master/torch/nn/parallel/data_parallel.py#L167), which I believe calls `nn.Module._replicate_for_data_parallel()` [here](https://github.com/pytorch/pytorch/blob/master/torch/nn/parallel/replicate.py#L115), replicating the model once per GPU.

- This results in different `hash()` values for the replicated `nn.Module` objects across different GPUs, in general.

- Finally, the `module_names` lookup in `create_frame()` will crash on the GPUs, since the differently replicated `nn.Module` objects on which the forward-pass hooks are called now have different `hash()` values after multi-GPU replication.

(You can confirm that a module has different hash values after replication via the following snippet):

```
import torch

m = torch.nn.Embedding(7, 5)
m2 = m._replicate_for_data_parallel()
print(hash(m))
print(hash(m2))
```

Which will print something like:

```
8730470757753
8730462809617
```
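The same mechanism can be reproduced without PyTorch or GPUs. This sketch assumes only what the analysis above states: modules use Python's default identity-based hashing, and replication creates new objects. `FakeModule` and `replicate` are hypothetical stand-ins. The latter loosely imitates a replica built by copying the original object's `__dict__`.

```python
class FakeModule:
    """Stand-in for torch.nn.Module: no custom __eq__/__hash__, so dict keys are identity-based."""

    def __init__(self, kind: str):
        self.kind = kind


def replicate(module: FakeModule) -> FakeModule:
    """Hypothetical stand-in for per-GPU replication: a new object with copied state."""
    replica = FakeModule.__new__(FakeModule)
    replica.__dict__ = module.__dict__.copy()
    return replica


embed = FakeModule("Embedding")
# Lookup table in the style of module_names, built once from the original model.
module_names = {embed: "model.encoder.embed_tokens"}

replica = replicate(embed)
print(module_names[embed])       # model.encoder.embed_tokens
print(replica in module_names)   # False: same state, but a different object
try:
    module_names[replica]        # what the forward hook effectively does on a replica
except KeyError:
    print("KeyError, as in the DataParallel traceback")
```

The replica carries identical attributes, but a dict keyed by module objects only finds the exact object it was built from.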

I'm not sure what the best way to fix this is. If there's a way to instantiate a different `DebugUnderflowOverflow` for each GPU after replication, that might solve this issue (since per-replica hashes would presumably be consistent then), but I'm not sure if that's feasible or the best way to do this.

One could also just make the miscreant f-string construction in `create_frame` use `.get()` with a default for the `module_names` lookup, rather than square brackets, but this would probably make the debugging traces too uninformative. So I figured I'd just open a bug report.

## Expected behavior

I'd expect it to either not crash or print a fatal `overflow/underflow debug unsupported for multi-GPU` error or something. I originally thought this was a bug in the model code rather than a multi-GPU thing.

| 07-20-2021 21:59:35 | 07-20-2021 21:59:35 | Thank you for the great report and the analysis of the problem, @kpich.

I will have a look a bit later when I have time to solve this, including your suggestion.

Meanwhile, any special reason why you're using DP and not DDP (`torch.distributed.launch`)? There each GPU instantiates its own everything and it will work correctly. <|||||>No, only reason I'm using DP rather than DDP is I'm using the `Trainer` framework with its default behaviors on a multi-GPU machine. Using DDP is a good suggestion, thanks!<|||||>DDP is typically faster than DP (as long as the interconnect is fast), please see: https://huggingface.co/transformers/performance.html#dp-vs-ddp<|||||>As a first step, here is a simple reproduction of the OP report with this cmd w/ 2 gpus over DP:

```
export BS=16; rm -r output_dir; PYTHONPATH=src USE_TF=0 CUDA_VISIBLE_DEVICES=0,1 python \
examples/pytorch/translation/run_translation.py --model_name_or_path t5-small --output_dir \
output_dir --adam_eps 1e-06 --do_train --label_smoothing 0.1 --learning_rate 3e-5 \
--logging_first_step --logging_steps 500 --max_source_length 128 --max_target_length 128 \
--val_max_target_length 128 --num_train_epochs 1 --overwrite_output_dir \
--per_device_train_batch_size $BS --predict_with_generate --sortish_sampler --source_lang en \
--target_lang ro --dataset_name wmt16 --dataset_config "ro-en" --source_prefix "translate English to \
Romanian: " --warmup_steps 50 --max_train_samples 50 --debug underflow_overflow
```

gives a similar error.<|||||>Nothing comes to mind as a simple fix at the moment, so for now let's just do a clean assert as you suggested. https://github.com/huggingface/transformers/pull/12816

If someone is stuck and can't use DDP we will revisit this.

And of course, if you or someone would like to work on an actual solution it'd be very welcome. I don't see `nn.DataParallel` having any hooks, so most likely this will require overriding `torch.nn.parallel.data_parallel.replicate` to refresh the model references after the replication. You can see the source code here: https://pytorch.org/docs/stable/_modules/torch/nn/parallel/data_parallel.html#DataParallel So it can be done, but it is not really worth it, IMHO. |
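The idea of refreshing the model references after replication can be sketched in pure Python. It uses hypothetical stand-ins rather than the real `torch.nn.parallel.replicate`, so it only demonstrates the bookkeeping: rebuild the module-to-name table from each replica's own module tree instead of reusing the table built from the original model. This is an untested illustration of the idea, not what the linked PR does (that PR only adds an assert).

```python
from typing import Dict, Iterator, Tuple


class FakeModule:
    """Stand-in for nn.Module: identity-hashed, with named child modules."""

    def __init__(self, **children: "FakeModule"):
        self._modules: Dict[str, "FakeModule"] = dict(children)

    def named_modules(self, prefix: str = "") -> Iterator[Tuple[str, "FakeModule"]]:
        # Yield this module and all descendants with dotted names (root is "").
        yield prefix, self
        for name, child in self._modules.items():
            child_prefix = f"{prefix}.{name}" if prefix else name
            yield from child.named_modules(child_prefix)


def replicate(module: FakeModule) -> FakeModule:
    """Hypothetical deep replica: every module in the tree becomes a new object."""
    return FakeModule(**{name: replicate(child) for name, child in module._modules.items()})


def build_module_names(model: FakeModule) -> Dict[FakeModule, str]:
    # Module -> dotted-name table, in the spirit of the one the debug class builds.
    return {module: name for name, module in model.named_modules()}


model = FakeModule(encoder=FakeModule(embed_tokens=FakeModule()))
original_names = build_module_names(model)

replica = replicate(model)
replica_embed = replica._modules["encoder"]._modules["embed_tokens"]

print(replica_embed in original_names)    # False: the stale table misses the replica
refreshed = build_module_names(replica)   # rebuild the table per replica
print(refreshed[replica_embed])           # encoder.embed_tokens
```

The names are structural (their position in the tree), so they can be recomputed on any replica. Only the object-keyed table goes stale.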

transformers | 12,814 | closed | minor mistake in the documentation of XLMTokenizer | On [this page](https://huggingface.co/transformers/model_doc/xlm.html#xlmtokenizer), the default value of `cls_token` is given as `</s>` whereas it should be `<s>`.

Please fix it.

| 07-20-2021 21:10:38 | 07-20-2021 21:10:38 | This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored. |

transformers | 12,813 | closed | Update pyproject.toml | You don't support Python 3.5, so I've bumped the black `target-version` to `'py36'`. | 07-20-2021 20:12:19 | 07-20-2021 20:12:19 | |

transformers | 12,812 | closed | Expose get_config() on ModelTesters | This exposes the `get_config()`method on `XXXModelTester`s. It makes it accessible in a platform-agnostic way, so that utilities may use that value to obtain a tiny configuration for tests even if `torch` isn't installed.

Part of the pipeline refactor, which needs to have tiny configurations for all models. | 07-20-2021 13:57:39 | 07-20-2021 13:57:39 | |
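Here is a rough sketch of the pattern. It uses hypothetical names (`TinyBertConfig`, `BertModelTester`) and illustrative sizes rather than the actual test classes. `get_config()` only builds a configuration object, so a utility can call it without importing any deep-learning framework.

```python
from dataclasses import dataclass


@dataclass
class TinyBertConfig:
    """Hypothetical stand-in for a framework-independent model configuration."""

    vocab_size: int
    hidden_size: int
    num_hidden_layers: int
    num_attention_heads: int


class BertModelTester:
    """Hypothetical tester: only get_config() is needed to obtain a tiny configuration."""

    def __init__(self, vocab_size: int = 99, hidden_size: int = 32,
                 num_hidden_layers: int = 2, num_attention_heads: int = 4):
        self.vocab_size = vocab_size
        self.hidden_size = hidden_size
        self.num_hidden_layers = num_hidden_layers
        self.num_attention_heads = num_attention_heads

    def get_config(self) -> TinyBertConfig:
        # No torch/tf import here, so this works even when no framework is installed.
        return TinyBertConfig(
            vocab_size=self.vocab_size,
            hidden_size=self.hidden_size,
            num_hidden_layers=self.num_hidden_layers,
            num_attention_heads=self.num_attention_heads,
        )

    def prepare_config_and_inputs(self):
        # The framework-specific part (creating tensors) stays separate from get_config().
        raise NotImplementedError("needs a deep-learning framework")


tiny_config = BertModelTester().get_config()
print(tiny_config.hidden_size)  # 32
```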

transformers | 12,811 | closed | Add _CHECKPOINT_FOR_DOC to all models | This adds the `_CHECKPOINT_FOR_DOC` variable for all models that do not have it. This value is used in the pipeline tests refactoring, where all models/tokenizers compatible with a given pipeline are tested. To that end, it is important to have a source of truth as to which checkpoint is well maintained, which should be the case of the `_CHECKPOINT_FOR_DOC` that is used in the documentation. | 07-20-2021 13:46:30 | 07-20-2021 13:46:30 | |

transformers | 12,810 | closed | [CLIP/docs] add and fix examples | # What does this PR do?

Adds examples to the PyTorch model docstrings and fixes rendering issues in the Flax model docstrings. | 07-20-2021 12:14:03 | 07-20-2021 12:14:03 | |

transformers | 12,809 | closed | [Longformer] Correct longformer docs | # What does this PR do?


Fixes # (issue)

## Before submitting

- [x] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.


| 07-20-2021 11:36:01 | 07-20-2021 11:36:01 | |

transformers | 12,808 | closed | https://huggingface.co/facebook/detr-resnet-101-panoptic model has 250 classes ? | COCO data has 80 classes.

Why does the model have 250 classes?

id2label: {

"0": "LABEL_0",

"1": "LABEL_1",

"2": "LABEL_2",

"3": "LABEL_3",

"4": "LABEL_4",

"5": "LABEL_5",

"6": "LABEL_6",

"7": "LABEL_7",

"8": "LABEL_8",

"9": "LABEL_9",

"10": "LABEL_10",

"11": "LABEL_11",

"12": "LABEL_12",

"13": "LABEL_13",

"14": "LABEL_14",

"15": "LABEL_15",

"16": "LABEL_16",

"17": "LABEL_17",

"18": "LABEL_18",

"19": "LABEL_19",

"20": "LABEL_20",

"21": "LABEL_21",

"22": "LABEL_22",

"23": "LABEL_23",

"24": "LABEL_24",

"25": "LABEL_25",

"26": "LABEL_26",

"27": "LABEL_27",

"28": "LABEL_28",

"29": "LABEL_29",

"30": "LABEL_30",

"31": "LABEL_31",

"32": "LABEL_32",

"33": "LABEL_33",

"34": "LABEL_34",

"35": "LABEL_35",

"36": "LABEL_36",

"37": "LABEL_37",

"38": "LABEL_38",

"39": "LABEL_39",

"40": "LABEL_40",

"41": "LABEL_41",

"42": "LABEL_42",

"43": "LABEL_43",

"44": "LABEL_44",

"45": "LABEL_45",

"46": "LABEL_46",

"47": "LABEL_47",

"48": "LABEL_48",

"49": "LABEL_49",

"50": "LABEL_50",

"51": "LABEL_51",

"52": "LABEL_52",

"53": "LABEL_53",

"54": "LABEL_54",

"55": "LABEL_55",

"56": "LABEL_56",

"57": "LABEL_57",

"58": "LABEL_58",

"59": "LABEL_59",

"60": "LABEL_60",

"61": "LABEL_61",

"62": "LABEL_62",

"63": "LABEL_63",

"64": "LABEL_64",

"65": "LABEL_65",

"66": "LABEL_66",

"67": "LABEL_67",

"68": "LABEL_68",

"69": "LABEL_69",

"70": "LABEL_70",

"71": "LABEL_71",

"72": "LABEL_72",

"73": "LABEL_73",

"74": "LABEL_74",

"75": "LABEL_75",

"76": "LABEL_76",

"77": "LABEL_77",

"78": "LABEL_78",

"79": "LABEL_79",

"80": "LABEL_80",

"81": "LABEL_81",

"82": "LABEL_82",

"83": "LABEL_83",

"84": "LABEL_84",

"85": "LABEL_85",

"86": "LABEL_86",

"87": "LABEL_87",

"88": "LABEL_88",

"89": "LABEL_89",

"90": "LABEL_90",

"91": "LABEL_91",

"92": "LABEL_92",

"93": "LABEL_93",

"94": "LABEL_94",

"95": "LABEL_95",

"96": "LABEL_96",

"97": "LABEL_97",

"98": "LABEL_98",

"99": "LABEL_99",

"100": "LABEL_100",

"101": "LABEL_101",

"102": "LABEL_102",

"103": "LABEL_103",

"104": "LABEL_104",

"105": "LABEL_105",

"106": "LABEL_106",

"107": "LABEL_107",

"108": "LABEL_108",

"109": "LABEL_109",

"110": "LABEL_110",

"111": "LABEL_111",

"112": "LABEL_112",

"113": "LABEL_113",

"114": "LABEL_114",

"115": "LABEL_115",

"116": "LABEL_116",

"117": "LABEL_117",

"118": "LABEL_118",

"119": "LABEL_119",

"120": "LABEL_120",

"121": "LABEL_121",

"122": "LABEL_122",

"123": "LABEL_123",

"124": "LABEL_124",

"125": "LABEL_125",

"126": "LABEL_126",

"127": "LABEL_127",

"128": "LABEL_128",

"129": "LABEL_129",

"130": "LABEL_130",

"131": "LABEL_131",

"132": "LABEL_132",

"133": "LABEL_133",

"134": "LABEL_134",

"135": "LABEL_135",

"136": "LABEL_136",

"137": "LABEL_137",

"138": "LABEL_138",

"139": "LABEL_139",

"140": "LABEL_140",

"141": "LABEL_141",

"142": "LABEL_142",

"143": "LABEL_143",

"144": "LABEL_144",

"145": "LABEL_145",

"146": "LABEL_146",

"147": "LABEL_147",

"148": "LABEL_148",

"149": "LABEL_149",

"150": "LABEL_150",

"151": "LABEL_151",

"152": "LABEL_152",

"153": "LABEL_153",

"154": "LABEL_154",

"155": "LABEL_155",

"156": "LABEL_156",

"157": "LABEL_157",

"158": "LABEL_158",

"159": "LABEL_159",

"160": "LABEL_160",

"161": "LABEL_161",

"162": "LABEL_162",

"163": "LABEL_163",

"164": "LABEL_164",

"165": "LABEL_165",

"166": "LABEL_166",

"167": "LABEL_167",

"168": "LABEL_168",

"169": "LABEL_169",

"170": "LABEL_170",

"171": "LABEL_171",

"172": "LABEL_172",

"173": "LABEL_173",

"174": "LABEL_174",

"175": "LABEL_175",

"176": "LABEL_176",

"177": "LABEL_177",

"178": "LABEL_178",

"179": "LABEL_179",

"180": "LABEL_180",

"181": "LABEL_181",

"182": "LABEL_182",

"183": "LABEL_183",

"184": "LABEL_184",

"185": "LABEL_185",

"186": "LABEL_186",

"187": "LABEL_187",

"188": "LABEL_188",

"189": "LABEL_189",

"190": "LABEL_190",

"191": "LABEL_191",

"192": "LABEL_192",

"193": "LABEL_193",

"194": "LABEL_194",

"195": "LABEL_195",

"196": "LABEL_196",

"197": "LABEL_197",

"198": "LABEL_198",

"199": "LABEL_199",

"200": "LABEL_200",

"201": "LABEL_201",

"202": "LABEL_202",

"203": "LABEL_203",

"204": "LABEL_204",

"205": "LABEL_205",

"206": "LABEL_206",

"207": "LABEL_207",

"208": "LABEL_208",

"209": "LABEL_209",

"210": "LABEL_210",

"211": "LABEL_211",

"212": "LABEL_212",

"213": "LABEL_213",

"214": "LABEL_214",

"215": "LABEL_215",

"216": "LABEL_216",

"217": "LABEL_217",

"218": "LABEL_218",

"219": "LABEL_219",

"220": "LABEL_220",

"221": "LABEL_221",

"222": "LABEL_222",

"223": "LABEL_223",

"224": "LABEL_224",

"225": "LABEL_225",

"226": "LABEL_226",

"227": "LABEL_227",

"228": "LABEL_228",

"229": "LABEL_229",

"230": "LABEL_230",

"231": "LABEL_231",

"232": "LABEL_232",

"233": "LABEL_233",

"234": "LABEL_234",

"235": "LABEL_235",

"236": "LABEL_236",

"237": "LABEL_237",

"238": "LABEL_238",

"239": "LABEL_239",

"240": "LABEL_240",

"241": "LABEL_241",

"242": "LABEL_242",

"243": "LABEL_243",

"244": "LABEL_244",

"245": "LABEL_245",

"246": "LABEL_246",

"247": "LABEL_247",

"248": "LABEL_248",

"249": "LABEL_249"

}

And what are the actual names of the 250 classes?

## Environment info


- `transformers` version:

- Platform:

- Python version:

- PyTorch version (GPU?):

- Tensorflow version (GPU?):

- Using GPU in script?:

- Using distributed or parallel set-up in script?:

### Who can help


## Information

Model I am using (Bert, XLNet ...):

The problem arises when using:

* [ ] the official example scripts: (give details below)

* [ ] my own modified scripts: (give details below)

The tasks I am working on is:

* [ ] an official GLUE/SQUaD task: (give the name)

* [ ] my own task or dataset: (give details below)

## To reproduce

Steps to reproduce the behavior:

1.

2.

3.


## Expected behavior


| 07-20-2021 11:28:56 | 07-20-2021 11:28:56 | Yes, the authors just set it to a sufficiently high number to make sure all classes are covered. See https://github.com/facebookresearch/detr/issues/175#issue-672014097

Note that for the panoptic model, one combines the COCO instance classes with stuff classes (background things like trees, streets, sky,...). <|||||>thanks a lot bro.<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored. |
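For the DETR panoptic question above, one option for getting readable names instead of `LABEL_i` is to build `id2label` yourself. This pure-Python sketch only illustrates the bookkeeping. It assumes you already have a partial mapping from class index to name (for example from the COCO panoptic annotation file) and that class indices follow the COCO category ids. Every unused slot of the 250-way head keeps a placeholder. The three names shown are illustrative only.

```python
from typing import Dict


def fill_id2label(known: Dict[int, str], num_labels: int) -> Dict[int, str]:
    """Return an id2label dict covering every output slot of the classification head.

    Slots without a known name keep the generic "LABEL_<i>" placeholder,
    matching the default names shown in the config above.
    """
    return {i: known.get(i, f"LABEL_{i}") for i in range(num_labels)}


# Illustrative subset only (assumes class indices follow COCO category ids).
known_names = {1: "person", 2: "bicycle", 3: "car"}
id2label = fill_id2label(known_names, num_labels=250)
print(len(id2label), id2label[1], id2label[249])  # 250 person LABEL_249
```

The resulting dict could then be assigned to the model's `config.id2label`, with the inverse mapping in `config.label2id`.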

transformers | 12,807 | open | Model Request: Blenderbot 2.0 | # 🌟 New model addition

## Model description

Facebook released Blenderbot 2.0, a chatbot that builds on RAG and Blenderbot 1.0. It can save interactions for later reference and use web search to find information on the web.

https://parl.ai/projects/blenderbot2/

## Open source status

* [x] the model implementation is available: in Parl.ai

* [x] the model weights are available: https://parl.ai/docs/zoo.html#wizard-of-internet-models

* [ ] who are the authors: (mention them, if possible by @gh-username)

| 07-20-2021 11:21:52 | 07-20-2021 11:21:52 | I am interested in working on this. Can we use RAG-end2end?<|||||>Is there any news regarding Blenderbot 2.0 with huggingface? <|||||>Any new updates? It's been three months.<|||||>Does anyone want to work together on this?<|||||>I'm interested too. FYI https://github.com/JulesGM/ParlAI_SearchEngine.<|||||>+1<|||||>+1 GitHub - shamanez


<|||||>+1 is there any progress for this?<|||||>Hi @AlafateABULIMITI Yes, we are considering adding this model. But since this is a complex model it might take a couple of weeks to fully integrate it. <|||||>+1 <|||||>Really excited for it! I think even without a search engine implementation people would use it, though examples wouldn't hurt!

Also, btw, someone did a simple search engine implementation already here: https://github.com/JulesGM/ParlAI_SearchEngine<|||||>Thank you for sharing this @Darth-Carrotpie <|||||>Is anyone currently working on this or is it's inclusion just being discussed?<|||||>I have started working on it. Should have a PR soon!<|||||>I should probably also mention that using Newspaper (https://pypi.org/project/newspaper3k/) to extract webpage text is slightly cleaner than the custom solution provided in JulesGM's search server implementation! <|||||>@patil-suraj It has been two months since your last post on Blender 2.0. Do you know when it will be included in Transformers? <|||||>Blenderbot2 is obsolete now. See Seeker https://parl.ai/projects/seeker/

Unfortunately, these conversational AIs seem to be a combination of several models and/or APIs, so I understand why they are hard to integrate into Hugging Face's transformers.

I wrote something to deploy Seeker and its API on Kubernetes:

https://louis030195.medium.com/deploy-seeker-search-augmented-conversational-ai-on-kubernetes-in-5-minutes-81a61aa4e749<|||||>> Blenderbot2 is obsolete now. See Seeker https://parl.ai/projects/seeker/

>

> Unfortunately, these conversational AIs seems to be a combination bunch of models and/or APIs, so I understand that they enter with difficulty in Huggingface's transformers.

>

> I wrote something to deploy Seeker and its API on Kubernetes: https://louis030195.medium.com/deploy-seeker-search-augmented-conversational-ai-on-kubernetes-in-5-minutes-81a61aa4e749

Blenderbot 2 has memory as well as search engine capabilities, so the potential to remember chat topics between sessions. Unless I'm missing something, SeeKeR doesn't seem to have this feature, so I wouldn't call Blenderbot completely obsolete just yet...<|||||>Blenderbot 3 is out now: https://parl.ai/projects/bb3/#models<|||||>Any progress? With ChatGPT and Sparrow, it seems like chatbots are increasing in both relevance and importance in NLP.<|||||>> I have started working on it. Should have a PR soon!

@patil-suraj Could you please create a draft PR or something so that others can pick up where you left off?<|||||>(It seems like he's switched over to working on `diffusers` in the meantime...)<|||||>@ArthurZucker @younesbelkada (the ML engineers currently in charge of the text models)<|||||>Do we have any updates?<|||||>Patil is no longer working on this issue: he's working on diffusers instead. There don't seem to be any active contributors to this issue at the moment.

I would like to contribute at some point, but I'm swamped with my other to-do items.<|||||>Hey, there has not been any progress here! This is currently not planned by anyone on our team, so if you want to pick it up, feel free to do so. Also, since the `BlenderBot` model is already supported, unless the architecture is very different, adding the model should be pretty easy with `transformers-cli add-new-model-like` 😉

But BlenderBot is a pretty complex model, so this is not a good first issue; it is better suited to someone who has already added a model! 🚀 In that case we'll gladly support you and be there to help!

<|||||>+1<|||||>I guess BlenderBot 3x is also out!

https://twitter.com/ylecun/status/1667196416043925505

It might be a good idea to try porting the dataset over to Huggingface and fine-tuning a better base model... |

transformers | 12,806 | closed | Fix tokenizer saving during training with `Trainer` | **EDIT:** If you have already read this message, you can see the update directly in [this message](https://github.com/huggingface/transformers/pull/12806#issuecomment-899570500)

# What does this PR do?

Fixes #12762.

As shown in this issue, a training run launched with the `Trainer` object can fail when saving the tokenizer if the tokenizer belongs to a class that has both a slow and a fast version but was instantiated from a folder containing only the files needed to initialize the fast version.

I think it is important to solve this problem because this case will happen "more often" with the addition of the new feature to train new tokenizers from a known architecture.

To be even more precise, it does not fail in all cases: it depends on how the slow version implements the `save_vocabulary` method. For example, `BertTokenizer` knows how to generate the slow files, but `AlbertTokenizer` copies them from the folder where the tokenizer was initialized.
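To illustrate the distinction: a WordPiece vocabulary like BERT's `vocab.txt` is essentially an ordered token list (one token per line, in id order), so it can be written back out from a token-to-id mapping such as the one a fast tokenizer holds. The helper below, `write_wordpiece_vocab`, is hypothetical and is not the library's `save_vocabulary`; it only sketches why regeneration is possible in the BERT case. A SentencePiece model file, by contrast, is a serialized model rather than a plain list, so a copy-based `save_vocabulary` has nothing to fall back on when that file was never on disk.

```python
import os
import tempfile
from typing import Dict


def write_wordpiece_vocab(vocab: Dict[str, int], directory: str) -> str:
    """Hypothetical helper: write a BERT-style vocab.txt (one token per line, ordered by id)."""
    path = os.path.join(directory, "vocab.txt")
    with open(path, "w", encoding="utf-8") as f:
        for expected_id, (token, token_id) in enumerate(sorted(vocab.items(), key=lambda kv: kv[1])):
            # The line number *is* the id, so a gap in the ids would silently shift tokens.
            if token_id != expected_id:
                raise ValueError(f"non-consecutive id {token_id} for {token!r}")
            f.write(token + "\n")
    return path


vocab = {"[PAD]": 0, "[UNK]": 1, "hello": 2, "##s": 3}
with tempfile.TemporaryDirectory() as tmp:
    vocab_path = write_wordpiece_vocab(vocab, tmp)
    with open(vocab_path, encoding="utf-8") as f:
        lines = f.read().splitlines()
print(lines)  # ['[PAD]', '[UNK]', 'hello', '##s']
```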

My PR proposes a new test in the trainer tests that reproduces this failing case.

The fix I propose is open for discussion because it changes the generated files in some cases (Bert's, for example), but it seems to me to be the simplest one.

If we want the behavior to stay unchanged for the Bert tokenizer, we would need a way to know whether the `save_vocabulary` method is able to generate the slow version from the fast version. I'm not sure I see a simple way to do this, unless we use a `try`/`except` block. An alternative I see would be to add a property to all tokenizer classes indicating whether the `save_vocabulary` method can run even when the slow-version files are not present in the initial folder.
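The `try`/`except` alternative could look roughly like the sketch below. Everything in it is hypothetical: `save_with_fallback` and the two toy writer functions stand in for the real saving methods, and the slow writer deliberately fails the way a copy-based `save_vocabulary` would when its source file is missing. The fast file is always written; the slow files are attempted and skipped on error.

```python
import os
import tempfile
from typing import Callable, List

# A writer saves some files into a directory and returns their paths.
Writer = Callable[[str], List[str]]


def save_with_fallback(save_fast: Writer, save_slow: Writer, directory: str) -> List[str]:
    """Hypothetical: always write the fast files; add the slow files only if that succeeds."""
    written = save_fast(directory)
    try:
        written += save_slow(directory)
    except (OSError, ValueError) as err:
        # A failure here only means the slow files are unavailable, not that saving failed.
        print(f"Skipping slow tokenizer files: {err}")
    return written


def toy_fast_writer(directory: str) -> List[str]:
    path = os.path.join(directory, "tokenizer.json")
    with open(path, "w", encoding="utf-8") as f:
        f.write("{}")
    return [path]


def toy_copying_slow_writer(directory: str) -> List[str]:
    # Mimics a writer that copies its original spm file, which was never on disk.
    raise FileNotFoundError("spiece.model not found in the initial folder")


with tempfile.TemporaryDirectory() as tmp:
    saved = save_with_fallback(toy_fast_writer, toy_copying_slow_writer, tmp)
    names = sorted(os.listdir(tmp))
print([os.path.basename(p) for p in saved])  # ['tokenizer.json']
```

A design caveat this makes visible: a broad `except` can also swallow genuine bugs in the slow writer, which is one argument for an explicit capability property instead.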

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).
- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests), Pull Request section?
- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link to it if that's the case.
- [ ] Did you make sure to update the documentation with your changes? Here are the [documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and [here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).
- [x] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. @LysandreJik and @sgugger, I would love to hear your thoughts on this PR, even though it is certainly not in its final form.


| 07-20-2021 11:20:46 | 07-20-2021 11:20:46 | I don't think this is the right fix: it just hides the problem, since `tokenizer.save_pretrained()` should always work. And it should always save all the possible files, to make it easy for users to share their tokenizer with other users who might not have the `tokenizers` or the `sentencepiece` library installed.

Adding a new class property that tells the tokenizer whether it can save the vocabulary from the fast tokenizer sounds like the best fix to me, with `try`/`except` blocks in the `save_pretrained` method as the second-best option.<|||||>**Update**: I have therefore redone this PR to take @sgugger's feedback into account. This time, I propose adding a new `can_save_slow_tokenizer` attribute to all fast tokenizers:

- if the fast tokenizer is able to rebuild the slow tokenizer's files, the attribute is set to `True`

- otherwise it is set to `False`

I use this attribute in `_save_pretrained` of `PreTrainedTokenizerFast` so that, with the default arguments:

- if possible the slow and fast files are saved

- otherwise only fast files are saved

For information, all tokenizers whose slow version uses `spm` now have the `can_save_slow_tokenizer` attribute set to `False`; all other tokenizers keep it at `True`.
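The default-argument behavior described above reduces to a small decision rule. The following is a minimal sketch of that rule, not the actual `_save_pretrained` code: `plan_tokenizer_save` is a hypothetical helper, and its `legacy_format` parameter is an assumption modeled on the option of that name accepted by `save_pretrained` (`None` for the default, `True` for slow files only, `False` for the fast file only).

```python
from typing import Optional, Tuple


def plan_tokenizer_save(
    can_save_slow_tokenizer: bool, legacy_format: Optional[bool] = None
) -> Tuple[bool, bool]:
    """Hypothetical helper returning (save_slow_files, save_fast_file).

    legacy_format=None models the default arguments: save both formats when the
    slow files can be rebuilt, otherwise only the fast tokenizer file.
    """
    save_slow = legacy_format in (None, True) and can_save_slow_tokenizer
    save_fast = legacy_format in (None, False)
    if legacy_format is True and not save_slow:
        # Explicitly asking for slow files that cannot be produced is an error, not a silent skip.
        raise ValueError("slow tokenizer files cannot be rebuilt from the fast files")
    return save_slow, save_fast


# A BERT-like tokenizer can regenerate its slow files, so the defaults save both formats.
print(plan_tokenizer_save(True))   # (True, True)
# An spm-based tokenizer loaded from fast files only: the fast file alone is saved.
print(plan_tokenizer_save(False))  # (False, True)
```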

Failing tests: I don't think they are related to the current PR, but I'll have a closer look :thinking: <|||||>You'll probably need to rebase for the tests to pass.<|||||>@sgugger, @LysandreJik, thank you very much for your reviews! I believe I have applied all your suggestions, and after the rebase all the tests pass :smile: <|||||>In that case, feel free to merge your PR :-) |

transformers | 12,805 | closed | What is the data format of transformers language modeling run_clm.py fine-tuning? | I am using run_clm.py to fine-tune gpt2 with the following command:

```
python run_clm.py \
--model_name_or_path gpt2 \
--train_file train1.txt \
--validation_file validation1.txt \
--do_train \
--do_eval \
--output_dir /tmp/test-clm
```

The training data is as follows:

[train1.txt](https://github.com/huggingface/transformers/files/6847229/train1.txt)

[validation1.txt](https://github.com/huggingface/transformers/files/6847234/validation1.txt)

The following error always appears:

```
[INFO|modeling_utils.py:1354] 2021-07-20 17:37:01,399 >> All the weights of GPT2LMHeadModel were initialized from the model checkpoint at gpt2.
If your task is similar to the task the model of the checkpoint was trained on, you can already use GPT2LMHeadModel for predictions without further training.
Running tokenizer on dataset: 100%|██████████| 1/1 [00:00<00:00, 90.89ba/s]
Running tokenizer on dataset: 100%|██████████| 1/1 [00:00<00:00, 333.09ba/s]
Grouping texts in chunks of 1024: 0%| | 0/1 [00:00<?, ?ba/s]
Traceback (most recent call last):
  File "D:/NLU/tanka-reminder-suggestion/language_modeling/run_clm.py", line 492, in <module>
    main()
  File "D:/NLU/tanka-reminder-suggestion/language_modeling/run_clm.py", line 407, in main
    desc=f"Grouping texts in chunks of {block_size}",
  File "D:\lib\site-packages\datasets\dataset_dict.py", line 489, in map
    for k, dataset in self.items()
  File "D:\lib\site-packages\datasets\dataset_dict.py", line 489, in <dictcomp>
    for k, dataset in self.items()
  File "D:\lib\site-packages\datasets\arrow_dataset.py", line 1673, in map
    desc=desc,
  File "D:\lib\site-packages\datasets\arrow_dataset.py", line 185, in wrapper
    out: Union["Dataset", "DatasetDict"] = func(self, *args, **kwargs)
  File "D:\lib\site-packages\datasets\fingerprint.py", line 397, in wrapper
    out = func(self, *args, **kwargs)
  File "D:\lib\site-packages\datasets\arrow_dataset.py", line 2024, in _map_single
    writer.write_batch(batch)
  File "D:\lib\site-packages\datasets\arrow_writer.py", line 388, in write_batch
    pa_table = pa.Table.from_pydict(typed_sequence_examples)
  File "pyarrow\table.pxi", line 1631, in pyarrow.lib.Table.from_pydict
  File "pyarrow\array.pxi", line 332, in pyarrow.lib.asarray
  File "pyarrow\array.pxi", line 223, in pyarrow.lib.array
  File "pyarrow\array.pxi", line 110, in pyarrow.lib._handle_arrow_array_protocol
  File "D:\lib\site-packages\datasets\arrow_writer.py", line 100, in __arrow_array__
    if trying_type and out[0].as_py() != self.data[0]:
  File "pyarrow\array.pxi", line 1076, in pyarrow.lib.Array.__getitem__
  File "pyarrow\array.pxi", line 551, in pyarrow.lib._normalize_index
IndexError: index out of bounds
```

Is the format of my training data incorrect? Please help me, thanks! | 07-20-2021 09:43:30 | 07-20-2021 09:43:30 | The format seems correct. From the [language modeling page](https://github.com/huggingface/transformers/tree/master/examples/pytorch/language-modeling):

> If your dataset is organized with one sample per line, you can use the --line_by_line flag (otherwise the script concatenates all texts and then splits them in blocks of the same length).

So, as you're not specifying that flag, it concatenates the text. Perhaps your dataset is just too small to be grouped into chunks?<|||||>Hi @NielsRogge, thanks for your reply. This `run_clm.py` does not have a `--line_by_line` flag; my `transformers` version is 4.8.2.
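The concatenate-then-split behavior discussed above can be illustrated with a minimal, standalone sketch. It is modeled on the grouping step that `run_clm.py` logs as "Grouping texts in chunks of 1024", but it is a simplified re-implementation, not the script's exact code: token ids from every text are concatenated, and only whole blocks of `block_size` tokens are kept. A corpus shorter than one block therefore yields no examples at all, and an empty batch like that would plausibly explain the `out[0]` `IndexError` in the traceback. Under this sketch's assumptions, more training text or a smaller block size (`run_clm.py` exposes a `--block_size` option) would give the grouping step at least one full block.

```python
from typing import Dict, List


def group_texts(examples: Dict[str, List[List[int]]], block_size: int) -> Dict[str, List[List[int]]]:
    """Concatenate every tokenized text, then cut the result into equal blocks.

    Simplified sketch: the trailing remainder shorter than block_size is dropped.
    """
    # Flatten each column (e.g. "input_ids", "attention_mask") into one long list.
    concatenated = {k: [tok for seq in seqs for tok in seq] for k, seqs in examples.items()}
    first_key = next(iter(concatenated))
    # Keep only as many tokens as fill complete blocks.
    total_length = (len(concatenated[first_key]) // block_size) * block_size
    result = {
        k: [t[i : i + block_size] for i in range(0, total_length, block_size)]
        for k, t in concatenated.items()
    }
    # For causal LM training the labels are a copy of the inputs (GPT-2's LM head shifts them internally).
    result["labels"] = [list(block) for block in result["input_ids"]]
    return result


# Two short "texts" totalling 10 token ids.
batch = {"input_ids": [[1, 2, 3, 4, 5, 6], [7, 8, 9, 10]]}

print(len(group_texts(batch, block_size=1024)["input_ids"]))  # 0: too short for a single block
print(group_texts(batch, block_size=4)["input_ids"])          # [[1, 2, 3, 4], [5, 6, 7, 8]]
```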