repo | number | state | title | body | created_at | closed_at | comments |

|---|---|---|---|---|---|---|---|

transformers | 11,925 | closed | BERT pretraining: [SEP] vs. Segment Embeddings? | I’m confused about the differences between the intent of the [SEP] tokens and Segment Embeddings applied to the input of BERT during pretraining.

As far as I’ve understood, the [SEP] tokens are inserted between sentences A and B so that the model can distinguish between the two sentences for BERT’s Next-Sentence Prediction pretraining task. Similarly, the Segment Embeddings are added to the input embeddings to alter the input, creating another opportunity for the model to learn that sentences A and B are distinct things.

However, these seem to serve the same purpose. Why can’t BERT be trained on only Segment Embeddings, omitting [SEP] tokens? What additional information do [SEP] tokens conceptually provide, that the Segment Embeddings don’t?

Furthermore, [SEP] tokens aren’t used directly anyways. NSP is trained on the [CLS] embeddings, which I understand to sort of represent an embedding of sentence continuity. | 05-28-2021 12:10:21 | 05-28-2021 12:10:21 | From the BERT paper: "We differentiate the sentences in two ways. First, we separate them with a special token ([SEP]). Second, we add a learned embedding to every token indicating whether it belongs to sentence A or sentence B."
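To make the two mechanisms in that quote concrete, here is a minimal sketch of how a sentence pair is laid out. The helper name `build_pair_inputs` and the whitespace tokenization are illustrative assumptions, not the library's tokenizer; the layout (`[CLS] A [SEP] B [SEP]`, segment id 0 for the first span and 1 for the second) follows the convention described in the BERT paper.

```python
def build_pair_inputs(sentence_a, sentence_b):
    """Lay out a sentence pair in the BERT style (illustrative helper, not a library API)."""
    tokens_a = sentence_a.split()  # assumption: whitespace split instead of WordPiece
    tokens_b = sentence_b.split()
    # Mechanism 1: explicit separator tokens between and after the sentences.
    tokens = ["[CLS]"] + tokens_a + ["[SEP]"] + tokens_b + ["[SEP]"]
    # Mechanism 2: one segment id per token; [CLS] and the first [SEP] belong to segment 0.
    segment_ids = [0] * (len(tokens_a) + 2) + [1] * (len(tokens_b) + 1)
    return tokens, segment_ids


tokens, segment_ids = build_pair_inputs("the cat sat", "it purred")
for token, segment in zip(tokens, segment_ids):
    print(f"{token:>7}  segment {segment}")
```

The separator occupies its own position in the sequence, while the segment id is added to every token's embedding, so the two signals overlap in content but reach the model in different ways.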

Deep learning, as you may know, is a lot of experimenting, and in this case, it was a design choice. I guess you could try to omit the [SEP] token, perhaps it doesn't add much information to the model. Or omit the token type embeddings, and check whether the results are significantly different.

To give another example, people are experimenting with all kinds of position encodings (including absolute ones, as in BERT, relative ones, as in T5, sinusoidal ones, as in the original Transformer, and now rotary embeddings, as in the new RoFormer paper)...

So the question you're asking is a genuine research question :) <|||||>Thank you for the quick answer, good to know! I was suspecting it might be something along these lines :)<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored. |

transformers | 11,924 | closed | Test optuna and ray | Run the slow tests for optuna and ray

cc @richardliaw @amogkam | 05-28-2021 11:51:57 | 05-28-2021 11:51:57 | |

transformers | 11,923 | closed | Trainer.predict using customized model.predict function? | I am using the [Trainer](https://huggingface.co/transformers/main_classes/trainer.html) to train a sentence-bert model with triplet-loss. Then I want to do some inference. How to call Trainer.predict using custom model.predict function?

I use `model.forward()` to calculate loss in training stage. But I want to use a customized `model.predict()` to calculate prediction results based on `model.forward()` (e.g., model.forward() -> embedding -> other method to calculate prediction instead of the loss function)

I saw the `prediction_step()` function just called `outputs = model(**inputs)` to get `(loss, logits, labels)`

Is there any good method to do that? | 05-28-2021 11:22:22 | 05-28-2021 11:22:22 | Can't you just subclass the Trainer class and write your own `predict`?<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored. |

transformers | 11,922 | closed | get_ordinal(local=True) replaced with get_local_ordinal() in training_args.py | ## Fixed

Wrong method call fixed. Modified according to:

https://pytorch.org/xla/release/1.8.1/_modules/torch_xla/core/xla_model.html

TPU training as called by the following or similar scripts now works:

```bash
python xla_spawn.py \
    --num_cores=8 \
    language-modeling/run_mlm.py \
    --train_file $TRAIN_FILE \
    --model_name_or_path bert-base-uncased \
    --output_dir $OUTPUT_DIR \
    --overwrite_output_dir True \
    --do_train True
```

## Discussed/approved

https://github.com/huggingface/transformers/issues/11910

## Who can review?

@sgugger

| 05-28-2021 09:50:49 | 05-28-2021 09:50:49 | Thanks a lot! |

transformers | 11,921 | closed | ProphetNetForConditionalGeneration model isn't returning all objects properly | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version: 4.6.1

- Platform: Google Colab

- Python version:

- PyTorch version (GPU?): GPU

- Tensorflow version (GPU?):

- Using GPU in script?: Yes

- Using distributed or parallel set-up in script?: No

### Who can help

- text generation: @patrickvonplaten

## Information

The model I am using Prophetnet:

The problem arises when using:

* my own modified scripts: [My notebook](https://colab.research.google.com/drive/1rmZyTXsdEDDpx8tbX-Gt6Uj9NuQi92VK?usp=sharing)

The tasks I am working on is:

* an official SQUaD task: Question Generation

## To reproduce

Steps to reproduce the behavior:

1. Just run the notebook

2. After running a single inference I am only getting 4 objects while I should get loss and other objects.

## Expected behavior

After running a single inference I am only getting 4 objects while I should get loss and other objects. @patrickvonplaten

| 05-28-2021 08:34:09 | 05-28-2021 08:34:09 | What are all the objects you expect to get? The `loss` is only returned if you pass the labels to the model - otherwise it cannot compute any loss. Please check out the [return statement of ProphetNetForConditionalGeneration's forward method for more information](https://huggingface.co/transformers/model_doc/prophetnet.html#transformers.ProphetNetForConditionalGeneration.forward). <|||||>Thank you, it worked @LysandreJik |

transformers | 11,920 | closed | Remove redundant `nn.log_softmax` in `run_flax_glue.py` | # What does this PR do?

`optax.softmax_cross_entropy` expects unscaled logits, so it already calls `nn.log_softmax` ([here](https://github.com/deepmind/optax/blob/master/optax/_src/loss.py#L166)). `nn.log_softmax` is idempotent so mathematically it shouldn't have made a difference.
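The idempotence claim can be checked numerically. The sketch below uses a hand-written NumPy `log_softmax` rather than the Flax or optax implementation, so it only illustrates the math; the sample logits are made up.

```python
import numpy as np


def log_softmax(x):
    """Numerically stable log-softmax over the last axis (plain NumPy stand-in)."""
    shifted = x - x.max(axis=-1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))


logits = np.array([[2.0, -1.0, 0.5], [0.0, 0.0, 3.0]])
once = log_softmax(logits)
twice = log_softmax(once)

# After one application the exponentials already sum to 1 per row,
# so the log-normalizer of the second application is 0 and nothing changes.
print(np.allclose(once, twice))
```

The extra call is therefore redundant work rather than a numerical bug.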

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

@marcvanzee @patrickvonplaten

| 05-28-2021 07:34:51 | 05-28-2021 07:34:51 | Great catch @n2cholas! Could you also remove the line:

```python

import flax.linen as nn

```

to make our code quality checks happy? Happy to merge right after :-)<|||||>Done @patrickvonplaten! |

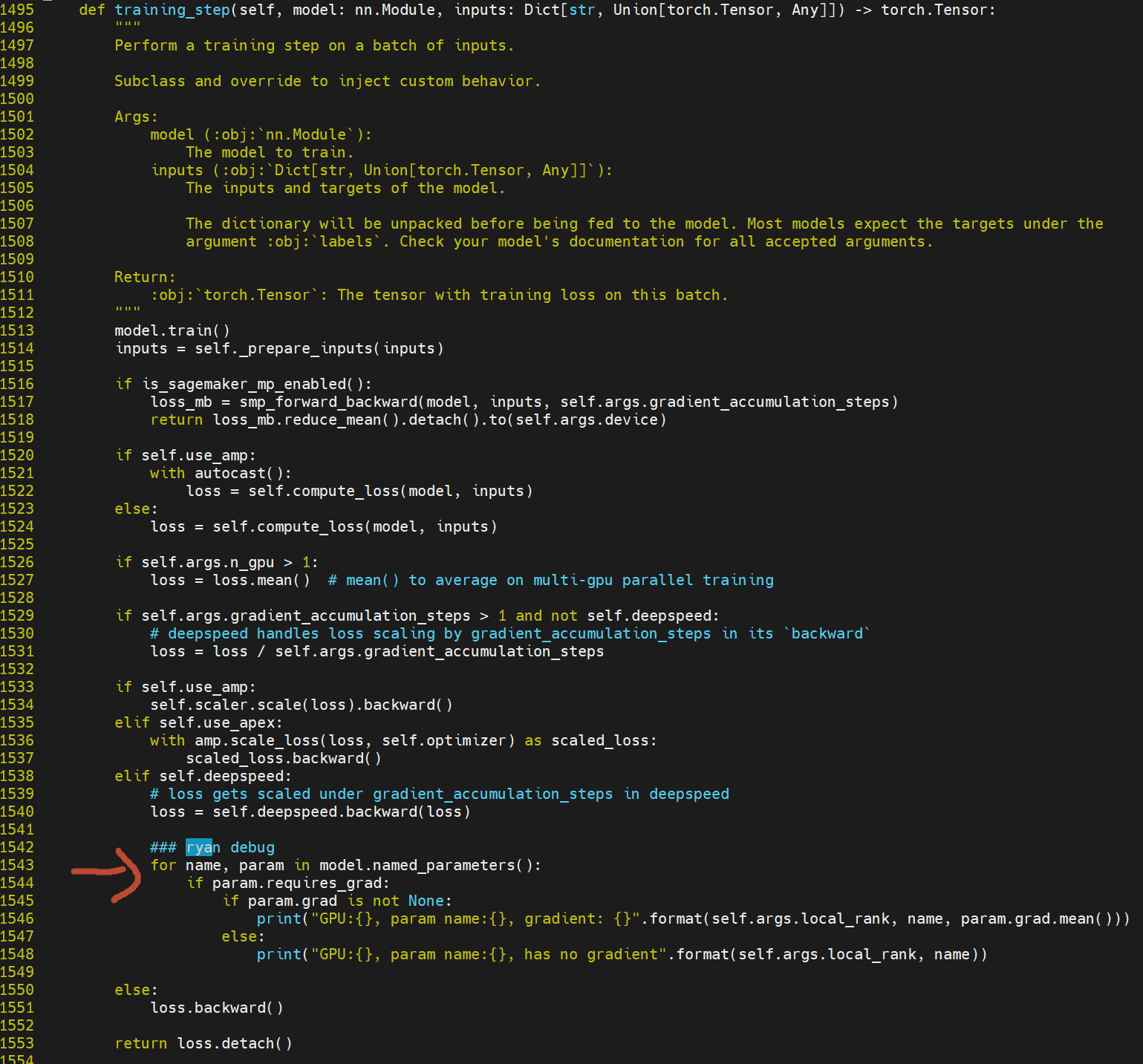

transformers | 11,919 | closed | Trainer reported loss is wrong when using DeepSpeed and gradient_accumulation_steps > 1 | ## Environment info

- `transformers` version: 4.7.0.dev0

- Platform: Windows-10-10.0.19041-SP0

- Python version: 3.8.0

- PyTorch version (GPU?): 1.8.1 (True)

- Tensorflow version (GPU?): not installed (NA)

- Using GPU in script?: <fill in>

- Using distributed or parallel set-up in script?: no but using DeepSpeed on a single node

### Who can help

@stas00, @sgugger (trainer.py)

### See Also

https://github.com/microsoft/DeepSpeed/issues/1107

## Information

Model I am using (Roberta)

The problem arises when using:

* [ ] the official example scripts: (give details below)

* [x] my own modified scripts: (give details below)

The tasks I am working on is:

* [ ] an official GLUE/SQUaD task: (give the name)

* [ ] my own task or dataset: (give details below)

* [ ] pretraining a Language Model (wikipedia and bookcorpus datasets)

## To reproduce

Steps to reproduce the behavior:

1. run scripts to pretrain a model with DeepSpeed on a single node with 1 GPU for N steps (gradient_accum_steps=1)

2. run scripts to pretrain a model with DeepSpeed on a single node with 1 GPU for N steps (gradient_accum_steps=8)

3. note the vast difference in **loss** reported on console by trainer.py

## Expected behavior

reported loss for any number of gradient_accum_steps, nodes, or GPUs should be the mean of all losses; the same order of magnitude as shown when training with gradient_accum_steps=1, on a single node, with a single GPU.

| 05-28-2021 05:54:56 | 05-28-2021 05:54:56 | Please note that the fix should involve ignoring the return value of `deepspeed.backward()` in this [line](https://github.com/huggingface/transformers/blob/8d171628fe84bdf92ee40b5375d7265278180f14/src/transformers/trainer.py#L1754). Or at least not updating loss with this return value since it is the scaled loss value, similar to `scaled_loss` in this [line](https://github.com/huggingface/transformers/blob/8d171628fe84bdf92ee40b5375d7265278180f14/src/transformers/trainer.py#L1750)<|||||>Aaaah! We had two different definitions of scaled here, I now fully understand the issue. I was thinking of scaled as scaled by the gradient accumulation steps factor, not scaled as scaled by the loss scaling factor. This is an easy fix to add, will do that in a bit.<|||||>> Please note that the fix should involve ignoring the return value of `deepspeed.backward()` in this [line](https://github.com/huggingface/transformers/blob/8d171628fe84bdf92ee40b5375d7265278180f14/src/transformers/trainer.py#L1754). Or at least not updating loss with this return value since it is the scaled loss value, similar to `scaled_loss` in this [line](https://github.com/huggingface/transformers/blob/8d171628fe84bdf92ee40b5375d7265278180f14/src/transformers/trainer.py#L1750)

@tjruwase, could you please review your suggestion, since I see the deepspeed code doing scaling by GAS only. Please see:

https://github.com/microsoft/DeepSpeed/blob/c697d7ae1cf5a479a8a85afa3bf9443e7d54ac2b/deepspeed/runtime/engine.py#L1142-L1143

Am I missing something?

And running tests I don't see any problem with the current code.<|||||>@stas00, you are right, my suggestion here is not correct. I initially thought that the problem was the deepspeed code scaling by GAS and exposing the scaled value to the client (HF). But based on your and @sgugger's findings, it seems there is nothing to do if HF is fine with `deepspeed.backward()` returning the GAS-scaled loss.
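To illustrate what "GAS-scaled" means here, a plain-Python sketch (not DeepSpeed or Trainer code; the function name and the loss values are made up): each micro-batch loss is divided by the number of gradient accumulation steps, so any single scaled value looks small, but the sum over one accumulation window equals the mean loss.

```python
def accumulate(micro_batch_losses, gas):
    """Scale each micro-batch loss by 1/gas and sum them (hypothetical helper)."""
    scaled = [loss / gas for loss in micro_batch_losses]
    return scaled, sum(scaled)


micro_losses = [2.0, 4.0, 6.0, 8.0]  # illustrative per-micro-batch losses
scaled, accumulated = accumulate(micro_losses, gas=4)
mean_loss = sum(micro_losses) / len(micro_losses)

print(scaled)                  # each entry is 1/gas of the raw loss
print(accumulated, mean_loss)  # the accumulated value matches the mean
```

Reporting a single scaled value would understate the loss by a factor of `gas`, while reporting the accumulated sum gives the expected mean.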

Sounds like this issue can be closed, once @rfernand2 agrees. <|||||>Yes, sounds good to me.<|||||>Closing as the same report on Deepspeed side has been closed https://github.com/microsoft/DeepSpeed/issues/1107

|

transformers | 11,918 | closed | [Flax] Return Attention from BERT, ELECTRA, RoBERTa and GPT2 | # What does this PR do?

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

Fixes # https://github.com/huggingface/transformers/issues/11901

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [x] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [x] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @LysandreJik

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @n1t0, @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

HF projects:

- datasets: [different repo](https://github.com/huggingface/datasets)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

| 05-28-2021 05:06:06 | 05-28-2021 05:06:06 | 🎉 |

transformers | 11,917 | closed | [Flax][WIP] Addition of Flax-Wav2Vec Model | # What does this PR do?

This PR is for the addition of Wav2Vec Model

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

@patil-suraj | 05-28-2021 04:51:35 | 05-28-2021 04:51:35 | Cool PR! For next steps, we should write the missing classes and remove everything which is related to:

```

feat_extract_norm="group"

do_stable_layer_norm=True

```

(These config parameters are only used for https://huggingface.co/facebook/wav2vec2-base-960h which is the oldest of the wav2vec2 models)

Also, it would be very important to add tests in `modeling_flax_wav2vec2.py` <|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored.<|||||>Closing this in favor of https://github.com/huggingface/transformers/pull/12271 |

transformers | 11,916 | closed | Wrong perplexity when evaluate the megatron-gpt2. | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version: 4.7.0.dev0

- Platform: Linux-5.4.0-1046-azure-x86_64-with-debian-buster-sid

- Python version: 3.6.10

- PyTorch version (GPU?): 1.8.1+cu111 (True)

- Tensorflow version (GPU?): not installed (NA)

- Using GPU in script?: Yes

- Using distributed or parallel set-up in script?: No

### Who can help

@jdemouth @LysandreJik @sgugger

<!-- Your issue will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @Rocketknight1

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

Model hub:

- for issues with a model report at https://discuss.huggingface.co/ and tag the model's creator.

HF projects:

- datasets: [different repo](https://github.com/huggingface/datasets)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

## Information

Model I am using gpt2(megatron-gpt2-345m):

The problem arises when using:

* [x] the official example scripts: (give details below)

* [ ] my own modified scripts: (give details below)

The tasks I am working on is:

* [x] an official language-modeling task: (transformers/examples/pytorch/language-modeling/run_clm.py )

* [ ] my own task or dataset: (give details below)

## To reproduce

Steps to reproduce the behavior:

1. Follow the steps given by [huggingface](https://huggingface.co/nvidia/megatron-gpt2-345m) to convert the megatron-lm model to huggingface model.

+ export MYDIR=/mnt/reproduce

+ git clone https://github.com/huggingface/transformers.git $MYDIR/transformers

+ mkdir -p $MYDIR/nvidia/megatron-gpt2-345m

+ wget --content-disposition https://api.ngc.nvidia.com/v2/models/nvidia/megatron_lm_345m/versions/v0.0/zip -O $MYDIR/nvidia/megatron-gpt2-345m/checkpoint.zip

+ python3 $MYDIR/transformers/src/transformers/models/megatron_gpt2/convert_megatron_gpt2_checkpoint.py $MYDIR/nvidia/megatron-gpt2-345m/checkpoint.zip

Here I hit the error: *"io.UnsupportedOperation: seek. You can only torch.load from a file that is seekable. Please pre-load the data into a buffer like io.BytesIO and try to load from it instead."* I worked around it as follows:

- unzip $MYDIR/nvidia/megatron-gpt2-345m/checkpoint.zip,

- change lines **209-211** of transformers/src/transformers/models/megatron_gpt2/convert_megatron_gpt2_checkpoint.py to

```python
# Open the unzipped checkpoint as a regular (seekable) file before loading it.
with open(args.path_to_checkpoint, "rb") as pytorch_dict:
    input_state_dict = torch.load(pytorch_dict, map_location="cpu")
```

- python3 $MYDIR/transformers/src/transformers/models/megatron_gpt2/convert_megatron_gpt2_checkpoint.py $MYDIR/nvidia/megatron-gpt2-345m/release/mp_rank_00/model_optim_rng.pt

+ git clone https://huggingface.co/nvidia/megatron-gpt2-345m/

+ mv $MYDIR/nvidia/megatron-gpt2-345m/release/mp_rank_00/pytorch_model.bin $MYDIR/nvidia/megatron-gpt2-345m/release/mp_rank_00/config.json $MYDIR/megatron-gpt2-345m/

2. Run the run_clm.py evaluation on wikitext-2; the script is given in the [README](https://github.com/huggingface/transformers/blob/master/examples/pytorch/language-modeling/README.md).

```bash
CUDA_VISIBLE_DEVICES=0 python $MYDIR/transformers/examples/pytorch/language-modeling/run_clm.py \
    --model_name_or_path $MYDIR/megatron-gpt2-345m \
    --dataset_name wikitext \
    --dataset_config_name wikitext-2-raw-v1 \
    --do_eval \
    --output_dir /mnt/logs/evaluation/megatron/wikitext-2
```

3. The results are shown below; the perplexity is far too high (I also tested on other datasets, and the perplexity is similarly large):

``` txt

[INFO|trainer_pt_utils.py:907] 2021-05-28 04:17:49,817 >> ***** eval metrics *****

[INFO|trainer_pt_utils.py:912] 2021-05-28 04:17:49,817 >> eval_loss = 11.63

[INFO|trainer_pt_utils.py:912] 2021-05-28 04:17:49,817 >> eval_runtime = 0:00:22.85

[INFO|trainer_pt_utils.py:912] 2021-05-28 04:17:49,817 >> eval_samples = 240

[INFO|trainer_pt_utils.py:912] 2021-05-28 04:17:49,817 >> eval_samples_per_second = 10.501

[INFO|trainer_pt_utils.py:912] 2021-05-28 04:17:49,817 >> eval_steps_per_second = 1.313

[INFO|trainer_pt_utils.py:912] 2021-05-28 04:17:49,817 >> perplexity = 112422.0502

```
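For reference, the perplexity in this log is consistent with simply exponentiating the reported evaluation loss, which is how the example script appears to derive it. A quick check, using the rounded `eval_loss` from the output above:

```python
import math

eval_loss = 11.63  # rounded value copied from the log above
perplexity = math.exp(eval_loss)
print(f"perplexity = {perplexity:.1f}")

# Relative gap to the logged value; small because the log rounds eval_loss to two decimals.
logged_perplexity = 112422.0502
print(abs(perplexity - logged_perplexity) / logged_perplexity)
```

So the perplexity is not miscomputed from the loss; the loss itself is the abnormally high quantity.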

<!-- If you have code snippets, error messages, stack traces please provide them here as well.

Important! Use code tags to correctly format your code. See https://help.github.com/en/github/writing-on-github/creating-and-highlighting-code-blocks#syntax-highlighting

Do not use screenshots, as they are hard to read and (more importantly) don't allow others to copy-and-paste your code.-->

## Expected behavior

I want to convert my megatron-lm model checkpoints into huggingface. Please help me.

<!-- A clear and concise description of what you would expect to happen. -->

| 05-28-2021 04:23:15 | 05-28-2021 04:23:15 | We’ll try to reproduce the issue on our side. We’ll keep you posted. Thanks!<|||||>> We’ll try to reproduce the issue on our side. We’ll keep you posted. Thanks!

Thanks for your help!

<|||||>We (NVIDIA engineers) were able to reproduce strange perplexity results and we are trying to identify the root cause. We will update you as we know more. Thanks for reporting the issue and for the reproducer.<|||||>Hi,

I think #12004 is a related issue |

transformers | 11,915 | closed | RuntimeError: The size of tensor a (716) must match the size of tensor b (512) at non-singleton dimension 1 | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version: 4.6.0.dev0

- Platform: windows 7

- Python version: 3.8.8

- PyTorch version (GPU?):

- Tensorflow version (GPU?): 2.4.1 (False)

- Using GPU in script?: No

- Using distributed or parallel set-up in script?: No

## Information

Model I am using (Bert, XLNet ...): Bert

The problem arises when using:

* [√] the official example scripts: (give details below)

`python run_ner.py --model_name_or_path nlpaueb/legal-bert-base-uncased --train_file ***.json --validation_file ***.json --output_dir /tmp/*** --do_train --do_eval`

* [ ] my own modified scripts: (give details below)

The tasks I am working on is: NER

* [ ] an official GLUE/SQUaD task: (give the name)

* [√] my own task or dataset: (give details below)

## Error

```txt
  File "run_ner.py", line 504, in <module>
    main()
  File "run_ner.py", line 446, in main
    train_result = trainer.train(resume_from_checkpoint=checkpoint)
  File "D:\Tool\Install\Python\lib\site-packages\transformers\trainer.py", line 1240, in train
    tr_loss += self.training_step(model, inputs)
  File "D:\Tool\Install\Python\lib\site-packages\transformers\trainer.py", line 1635, in training_step
    loss = self.compute_loss(model, inputs)
  File "D:\Tool\Install\Python\lib\site-packages\transformers\trainer.py", line 1667, in compute_loss
    outputs = model(**inputs)
  File "D:\Tool\Install\Python\lib\site-packages\torch\nn\modules\module.py", line 727, in _call_impl
    result = self.forward(*input, **kwargs)
  File "D:\Tool\Install\Python\lib\site-packages\transformers\models\bert\modeling_bert.py", line 1679, in forward
    outputs = self.bert(
  File "D:\Tool\Install\Python\lib\site-packages\torch\nn\modules\module.py", line 727, in _call_impl
    result = self.forward(*input, **kwargs)
  File "D:\Tool\Install\Python\lib\site-packages\transformers\models\bert\modeling_bert.py", line 964, in forward
    embedding_output = self.embeddings(
  File "D:\Tool\Install\Python\lib\site-packages\torch\nn\modules\module.py", line 727, in _call_impl
    result = self.forward(*input, **kwargs)
  File "D:\Tool\Install\Python\lib\site-packages\transformers\models\bert\modeling_bert.py", line 207, in forward
    embeddings += position_embeddings
RuntimeError: The size of tensor a (716) must match the size of tensor b (512) at non-singleton dimension 1
  5%|██▏ | 12/231 [03:07<56:54, 15.59s/it]
```

| 05-28-2021 03:43:43 | 05-28-2021 03:43:43 | This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored. |

transformers | 11,914 | closed | How to get back the identified words from LayoutLMForTokenClassification? | I am using LayoutLMForTokenClassification as described [here](https://github.com/NielsRogge/Transformers-Tutorials/blob/master/LayoutLM/Fine_tuning_LayoutLMForTokenClassification_on_FUNSD.ipynb). In the end, the tutorial shows an annotated image with identified classes for various tokens.

How can I get back the original words as well to be annotated along with the labels?

I tried to read the words with tokenizer.decode(input_ids).split(" ") but the tokenizer broke words into multiple tokens, which it wasn't supposed to. So, I have more words/outputs/boxes than I am supposed to have.

| 05-28-2021 03:16:30 | 05-28-2021 03:16:30 | Hi,

A solution for this can be the following (taken from my [Fine-tuning BERT for NER notebook](https://github.com/NielsRogge/Transformers-Tutorials/blob/master/BERT/Custom_Named_Entity_Recognition_with_BERT_only_first_wordpiece.ipynb)):

In my notebook for `LayoutLMForTokenClassification`, only the label for the first word piece of each word matters. In HuggingFace Transformers, a tokenizer takes an additional parameter called `return_offsets_mapping` which can be set to `True` to return the (char_start, char_end) for each token.

You can use this to determine whether a token is the first wordpiece of a word, or not. As we are only interested in the label of the first wordpiece, you can assign its label to be the label for the entire word.
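A minimal sketch of that selection step, in plain Python so it runs without a model. It assumes the tokenizer was called on pre-split words (`is_split_into_words=True`), in which case each token's offsets are relative to its own word: a first wordpiece starts at character 0 and special tokens get the offset `(0, 0)`. The function name and the sample offsets and labels are made up for illustration.

```python
def word_level_labels(offset_mapping, token_labels):
    """Keep only the label of the first wordpiece of each word (hypothetical helper)."""
    kept = []
    for (start, end), label in zip(offset_mapping, token_labels):
        # (0, 0) marks a special token such as [CLS] or [SEP]; start > 0 marks a continuation piece.
        if start == 0 and end != 0:
            kept.append(label)
    return kept


# Illustrative input: [CLS], "inv", "##oice", "total", [SEP]
offsets = [(0, 0), (0, 3), (3, 7), (0, 5), (0, 0)]
token_labels = ["O", "B-QUESTION", "B-QUESTION", "O", "O"]
words = ["invoice", "total"]

for word, label in zip(words, word_level_labels(offsets, token_labels)):
    print(word, label)
```

Because exactly one label survives per word, the result lines up one-to-one with the original word list and its bounding boxes.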

Do you understand?

<|||||>I do. Thanks. I tried doing this by referring to your BERT code. But I am getting this error, unfortunately.

Apologies if I messed up. This is the first time that I am working with transformers.<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored. |

transformers | 11,913 | closed | Inference for pinned model keeps loading | I have pinned the enterprise model: `ligolab/DxRoberta`

This model is pinned for instant start.

When I try the Inference API `fill-mask` task, it responds instantly

However, when I try API call to embedding pipeline https://api-inference.huggingface.co/pipeline/feature-extraction/ligolab/DxRoberta , I keep getting the message: `{'error': 'Model ligolab/DxRoberta is currently loading', 'estimated_time': 20}` and status does not change with time.

API call to embedding pipeline was working yesterday when I tested it.

| 05-28-2021 01:22:25 | 05-28-2021 01:22:25 | After I unpinned the model, the embeddings pipeline started working again (unless you did something on the back end)<|||||>I have repeated the experiment: pinned model - embedding pipeline API returns "loading" status; unpinned model - embedding pipeline returns valid results (after model 'warm up'). It looks like the inference API to the embedding pipeline stops working if the model is pinned for instant inference access. <|||||>Tagging @Narsil for visibility (API support issues are best handled over email if possible!)<|||||>Thanks for tagging me, the `ligolab/DxRoberta` is defined as a `fill-mask` by default, so that was what was being pinned down, leading to the issues you were encountering. You can override that by changing the `pipeline_tag` in the model card (if you want).

There is currently no way to specify the `task` when pinning, so I did it manually for now ! You should be good to go !<|||||>If we change `pipeline_tag `do we still need to use API endpoint `/pipeline/feature-extraction/ ` ?<|||||>No if you change the default tag, then the regular route /models/{MODEL} will work ! <|||||>@Narsil , could you share a snippet of using `pipeline_tag` in the card? I don't recall seeing this option in the documentation https://github.com/huggingface/model_card.<|||||>Just `pipeline_tag: xxx`, see https://huggingface.co/docs#how-is-a-models-type-of-inference-api-and-widget-determined |

transformers | 11,912 | closed | Distillation of Pegasus using Pseudo labeling |

### Who can help

@sgugger

@patrickvonplaten

Models:

- Distillation of Pegasus

## Information

The model I am using (google/pegasus-xsum):

The problem:

- Trying to implement Pegasus Distillation using [Pseudo Labeling](https://github.com/huggingface/transformers/blob/master/examples/research_projects/seq2seq-distillation/precomputed_pseudo_labels.md) in [PRE-TRAINED SUMMARIZATION DISTILLATION](https://arxiv.org/pdf/2010.13002v2.pdf)

- By copying layers from the Teacher model, freezing the positional, token embeddings, all Encoder layers

- The model was trained for two epochs on the XSum dataset using a cross-entropy loss between the student's logits and the output generated by the teacher model

- Generating outputs from the student model gives repeated words and poor generation, although the loss decreases from 8 to 0.7647 in training and 0.5424 in validation

```txt

[have have have have have have have have have have have have have have have have have have have have have have have have have have have have have have have have have have have have have have have have have have have have have wing wing wing wing wing wing wing wing wing wing wing wing wing']

```

How can I improve the generation of the model?

| 05-27-2021 23:06:27 | 05-27-2021 23:06:27 | |

transformers | 11,911 | closed | Fix a condition in test_generate_with_head_masking | Fix a glitch in a condition in `test_generate_with_head_masking`, i.e.

```diff

- if set(head_masking.keys()) < set([*signature.parameters.keys()]):

+ if set(head_masking.keys()) > set([*signature.parameters.keys()]):

continue

```
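As a reminder of what the two operators in this diff mean for Python sets, here is a small sketch: `<` tests for a proper subset and `>` for a proper superset. The argument names are only examples chosen for illustration and are not copied from the test.

```python
head_masking = {"head_mask", "decoder_head_mask", "cross_attn_head_mask"}

# Hypothetical forward signatures: one accepts every mask argument, one only some.
full_signature = {"input_ids", "attention_mask", "head_mask",
                  "decoder_head_mask", "cross_attn_head_mask"}
partial_signature = {"input_ids", "attention_mask", "head_mask"}

print(head_masking < full_signature)     # True: every mask key is accepted, plus extra params
print(head_masking > full_signature)     # False: the mask keys do not contain "input_ids"
print(head_masking < partial_signature)  # False: "decoder_head_mask" is missing from it
print(head_masking > partial_signature)  # False: neither set contains the other
print(head_masking < head_masking)       # False: equal sets are not *proper* subsets
```

When neither set contains the other, both comparisons are `False`, so swapping the operator changes which models the `continue` branch skips.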

This PR also fixes usage of head_mask for bigbird_pegasus and speech2text models.

**Reviewer:** @patrickvonplaten | 05-27-2021 20:48:53 | 05-27-2021 20:48:53 | |

transformers | 11,910 | closed | xla_spawn.py: xm.get_ordinal() got an unexpected keyword argument 'local' | ## Environment info

- `transformers` version: 4.7.0.dev0

- Platform: Linux-5.4.109+-x86_64-with-Ubuntu-18.04-bionic (working on Colab with TPU)

- Python version: 3.7.10

- PyTorch version (GPU?): 1.8.1+cu101 (False)

- Tensorflow version (GPU?): 2.5.0 (False)

- Using GPU in script?: No, using TPU

- Using distributed or parallel set-up in script?: number_of_cores = 8

### Who can help

@sgugger, @patil-suraj

## Information

Model I am using: Bert

The problem arises when using:

* [ ] the official example scripts:

```shell

python /transformers/examples/pytorch/xla_spawn.py --num_cores=8 \

/transformers/examples/pytorch/language-modeling/run_mlm.py (--run_mlm.py args)

```

The tasks I am working on is:

* Pretraining BERT with TPU

## To reproduce

Steps to reproduce the behavior:

1. install necessary packages:

```shell

pip install git+https://github.com/huggingface/transformers

cd /content/transformers/examples/pytorch/language-modeling

pip install -r requirements.txt

pip install cloud-tpu-client==0.10 https://storage.googleapis.com/tpu-pytorch/wheels/torch_xla-1.8.1-cp37-cp37m-linux_x86_64.whl

```

2. run xla_spawn with minimal args passed to run_mlm: specify a small .txt TRAIN_FILE and an OUTPUT_DIR:

```shell

python xla_spawn.py \

--num_cores=8 \

language-modeling/run_mlm.py \

--train_file $TRAIN_FILE \

--model_name_or_path bert-base-uncased \

--output_dir $OUTPUT_DIR \

--overwrite_output_dir True \

--do_train True

```

I get this error (for different TPU cores):

```text

Exception in device=TPU:0: get_ordinal() got an unexpected keyword argument 'local'

Traceback (most recent call last):

File "/usr/local/lib/python3.7/dist-packages/torch_xla/distributed/xla_multiprocessing.py", line 329, in _mp_start_fn

_start_fn(index, pf_cfg, fn, args)

File "/usr/local/lib/python3.7/dist-packages/torch_xla/distributed/xla_multiprocessing.py", line 323, in _start_fn

fn(gindex, *args)

File "/content/drive/My Drive/Thesis/transformers/examples/pytorch/language-modeling/run_mlm.py", line 493, in _mp_fn

main()

File "/content/drive/My Drive/Thesis/transformers/examples/pytorch/language-modeling/run_mlm.py", line 451, in main

train_result = trainer.train(resume_from_checkpoint=checkpoint)

File "/usr/local/lib/python3.7/dist-packages/transformers/trainer.py", line 1193, in train

self.state.is_local_process_zero = self.is_local_process_zero()

File "/usr/local/lib/python3.7/dist-packages/transformers/trainer.py", line 1784, in is_local_process_zero

return self.args.local_process_index == 0

File "/usr/local/lib/python3.7/dist-packages/transformers/file_utils.py", line 1605, in wrapper

return func(*args, **kwargs)

File "/usr/local/lib/python3.7/dist-packages/transformers/training_args.py", line 864, in local_process_index

return xm.get_ordinal(local=True)

TypeError: get_ordinal() got an unexpected keyword argument 'local'

```

## Expected behavior

The training should run without errors. I achieved this by simply replacing line 864 of /transformers/training_args.py:

```python

return xm.get_ordinal(local=True)

```

with:

```python

return xm.get_local_ordinal()

```

Following torch docs at:

https://pytorch.org/xla/release/1.5/_modules/torch_xla/core/xla_model.html

If this is the correct syntax (and this behaviour is not due to something wrong in my environment), this easy fix should be enough. My model trained correctly.
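As an illustration of the replacement described above, a small helper can try the keyword form first and fall back to `get_local_ordinal()` when it is rejected. This is only a sketch under assumptions: `local_ordinal` and `_FakeXm` are hypothetical names introduced here, and in a real TPU environment `xm` would be the `torch_xla.core.xla_model` module rather than a stub.

```python
def local_ordinal(xm):
    """Return the local ordinal from an `xm`-like module across API variants."""
    try:
        # Form used by training_args.py at the time of this report.
        return xm.get_ordinal(local=True)
    except TypeError:
        # Form that worked in the reporter's environment.
        return xm.get_local_ordinal()


class _FakeXm:
    """Hypothetical stub that rejects `local=`, like the traceback above."""

    def get_ordinal(self, defval=0):
        return 3

    def get_local_ordinal(self, defval=0):
        return 1


ordinal = local_ordinal(_FakeXm())
```

One caveat of this design: any other `TypeError` raised inside `get_ordinal` would also trigger the fallback, so replacing the call directly, as done in the report, is the simpler choice when the installed version is known.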

| 05-27-2021 20:06:19 | 05-27-2021 20:06:19 | Thanks for the catch! Since you have the proper fix indeed, would you like to make a PR with it?<|||||>Done, thanks!<|||||>Closed by #11922 |

transformers | 11,909 | closed | FlaxGPTNeo Draft PR | # What does this PR do?

Add FlaxGPTNeo to HuggingFace Models!

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

Fixes # (issue)

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @LysandreJik

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @n1t0, @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

HF projects:

- datasets: [different repo](https://github.com/huggingface/datasets)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

| 05-27-2021 20:00:18 | 05-27-2021 20:00:18 | Hey @zanussbaum,

Regarding on how to proceed with the implementation, could you maybe post your questions here and tag @patil-suraj and @patrickvonplaten so that we can move forward? :-)<|||||>Hey @patrickvonplaten, I actually chatted with Suraj this morning and

cleared my questions up about the Self Attention Module. I am working on

implementing it and hope to have something out this weekend!


|

transformers | 11,908 | closed | Fine tuning with transformer models for Regression tasks | - `transformers` version: Bert, Albert, openai-gpt2

- Tensorflow version (GPU?): 2.5.0

## Information

Model I am using : Bert, Albert, openai-gpt2

The problem arises when using:

* [x] my own modified scripts: (give details below) <br>

- performed fine tuning

The tasks I am working on is:

* [x] my own task or dataset: (give details below)<br>

- I have been trying to fine-tune BERT, ALBERT, and GPT-2 models on my regression task, but I keep getting unwanted results, described below: <br>

- I tried two approaches: <br>

1. I used the CLS token embeddings and fine-tuned my entire custom model, but it produced the same seemingly random number over and over across my output matrix.<br>

2. I simply passed the CLS token embeddings to a feed-forward NN. In this case it also produced a seemingly random number, and no learning was observed.<br>

<br>

**What could be the solution to this problem? Are there any issues with transformers with respect to regression?** | 05-27-2021 19:40:44 | 05-27-2021 19:40:44 | I think it's better to ask this question on the [forum](https://discuss.huggingface.co/) rather than here. For example, all questions related to training BERT for regression can be found [here](https://discuss.huggingface.co/search?q=bert%20regression). |

transformers | 11,907 | closed | Add conversion from TF to PT for Tapas retrieval models | # What does this PR do?

Table Retrieval models based on Tapas as described [here](https://arxiv.org/pdf/2103.12011.pdf) just got published in the [Tapas repository](https://github.com/google-research/tapas). The existing conversion function does not work with the retrieval models, so I added support to convert them to PyTorch.

Unfortunately, this only converts the language model without the down projection layer. However, I think this might still be useful to some people who, for instance, want to fine-tune the pre-trained models.

Unfortunately, I do not have the time at the moment to add the down projection layer myself.

## Who can review?

@NielsRogge

| 05-27-2021 17:35:11 | 05-27-2021 17:35:11 | Thanks for this, it's certainly something I'd like to add in the future. The TAPAS team seems quite active, they released [yet another paper involving TAPAS](https://arxiv.org/abs/2106.00479) (to work on larger tables).<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored.<|||||>@NielsRogge I just quickly reviewed this script and it looks fine. Is it possible to know why this PR became stale and was automatically closed? Is there anything wrong with the script I should be aware of?

I'm currently working on finetuning the TAPAS retrieval model for a research project, just wanted to have your thoughts on this before running the script and uploading the model to the Huggingface hub.<|||||>@jonathanherzig Just wanted to confirm with you, in this case `bert` is the table encoder and `bert_1` is the question encoder, right? <|||||>Hi @xhlulu ,

Sorry, but I am not familiar with the implementation details in this version of TAPAS... probably @NielsRogge can help.

Best,

Jonathan<|||||>No worries, thanks Jonathan! |

transformers | 11,906 | closed | Added Sequence Classification class in GPTNeo | # Added Sequence Classification Class in GPT Neo Model

Fixes #11811

## Before submitting

- [x] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [x] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case. #11811

- [x] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [x] Did you write any new necessary tests?

## Who can review?

@patrickvonplaten @patil-suraj @sgugger

| 05-27-2021 15:10:21 | 05-27-2021 15:10:21 | |

transformers | 11,905 | closed | Customize pretrained model for model hub | Hi community,

I would like to add a mean pooling step inside a custom SentenceTransformer class derived from the model sentence-transformers/stsb-xlm-r-multilingual, so that this extra step no longer has to be done after getting the token embeddings.

My aim is to push this custom model to the model hub. Without this custom step, it is trivial, as shown below:

```

from transformers import AutoTokenizer, AutoModel

#### Simple export ####

## Instantiate the model

model_name = "sentence-transformers/stsb-xlm-r-multilingual"

tokenizer = AutoTokenizer.from_pretrained(model_name)

model = AutoModel.from_pretrained(model_name)

## Save the model and tokenizer files into cloned repository

model.save_pretrained("path/to/repo/clone/your-model-name")

tokenizer.save_pretrained("path/to/repo/clone/your-model-name")

```

However, after defining my custom class SentenceTransformerCustom I can’t manage to push on model hub the definition of this class:

```

import transformers

import torch

#### Custom export ####

## 1. Load feature-extraction pipeline with specific sts model

model_name = "sentence-transformers/stsb-xlm-r-multilingual"

pipeline_name = "feature-extraction"

nlp = transformers.pipeline(pipeline_name, model=model_name, tokenizer=model_name)

tokenizer = nlp.tokenizer

## 2. Setting up a simple torch model, which inherits from the XLMRobertaModel model. The only thing we add is a weighted summation over the token embeddings and a clamp to prevent zero-division errors.

class SentenceTransformerCustom(transformers.XLMRobertaModel):
    def __init__(self, config):
        super().__init__(config)
        # Naming alias for ONNX output specification
        # Makes it easier to identify the layer
        self.sentence_embedding = torch.nn.Identity()

    def forward(self, input_ids, attention_mask):
        # Get the token embeddings from the base model
        token_embeddings = super().forward(
            input_ids,
            attention_mask=attention_mask
        )[0]
        # Stack the pooling layer on top of it
        input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
        sum_embeddings = torch.sum(token_embeddings * input_mask_expanded, 1)
        sum_mask = torch.clamp(input_mask_expanded.sum(1), min=1e-9)
        return self.sentence_embedding(sum_embeddings / sum_mask)

## 3. Create the custom model based on the config of the original pipeline

# from_pretrained is a classmethod and loads the config itself, so no instance is needed first
model = SentenceTransformerCustom.from_pretrained(model_name)

## 4. Save the model and tokenizer files into cloned repository

model.save_pretrained("/home/matthieu/Deployment/HF/stsb-xlm-r-multilingual")

tokenizer.save_pretrained("/home/matthieu/Deployment/HF/stsb-xlm-r-multilingual")

```
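The pooling step in `forward` above amounts to a masked mean over token vectors. Below is a dependency-free sketch of that arithmetic for a single sentence, using plain lists instead of tensors; `masked_mean` and the sample values are made up for illustration and are not part of the model code.

```python
def masked_mean(token_embeddings, attention_mask, eps=1e-9):
    """Average token vectors where the mask is 1; padding positions are ignored."""
    dim = len(token_embeddings[0])
    summed = [0.0] * dim
    for vector, keep in zip(token_embeddings, attention_mask):
        for i in range(dim):
            summed[i] += vector[i] * keep
    # Clamp the denominator so an all-padding input does not divide by zero.
    count = max(float(sum(attention_mask)), eps)
    return [value / count for value in summed]


tokens = [[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]  # last row is padding
mask = [1, 1, 0]
sentence_vector = masked_mean(tokens, mask)
```

The padding row is multiplied by zero and excluded from the count, which is the same role `input_mask_expanded` and `torch.clamp` play in the class above.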

Do I need to place this custom class definition inside a specific .py file ? Or is there anything to do in order to correctly import this custom class from model hub?

Thanks! | 05-27-2021 14:04:14 | 05-27-2021 14:04:14 | Maybe of interest to @nreimers <|||||>Hi @Matthieu-Tinycoaching

I was sadly not able to re-produce your error. Have you uploaded such a model to the hub? Could you post the link here?

And how does your code look like to load the model?<|||||>Hi @nreimers

I retried with including the custom class definition when loading the model and it worked.

|

transformers | 11,904 | closed | 'error': 'Model Matthieu/stsb-xlm-r-multilingual is currently loading' | Hello,

I have pushed on model hub (https://huggingface.co/Matthieu/stsb-xlm-r-multilingual) a pretrained sentence transformer model (https://huggingface.co/sentence-transformers/stsb-xlm-r-multilingual).

However, when trying to get a prediction via the API_URL I still get the following error:

`{'error': 'Model Matthieu/stsb-xlm-r-multilingual is currently loading', 'estimated_time': 44.49033436}`

How could I deal with this problem?

Thanks!
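One common way to handle a response like the one above is to wait for roughly the reported `estimated_time` and ask again. The sketch below assumes a caller-supplied `send` function that performs the HTTP request and returns the decoded JSON; `query_with_retry` and its parameters are hypothetical names, not part of any Hugging Face client.

```python
import time


def query_with_retry(send, max_attempts=5, sleep=time.sleep):
    """Call `send()` until the response is no longer a "currently loading" error."""
    response = send()
    for _ in range(max_attempts - 1):
        loading = isinstance(response, dict) and "estimated_time" in response
        if not loading:
            break
        # Wait roughly as long as the API estimates, then ask again.
        sleep(response["estimated_time"])
        response = send()
    return response
```

In practice `send` would wrap whatever call produced the error above, for example a POST to the API_URL with the same headers and payload. Passing `sleep` as a parameter keeps the helper easy to exercise without real delays.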

| 05-27-2021 13:59:55 | 05-27-2021 13:59:55 | This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored. |

transformers | 11,903 | closed | Problem when freezing all GPT2 model except the LM head | ## Environment info

- `transformers` version: 4.6.1

- Platform: Linux-4.15.0-142-generic-x86_64-with-debian-stretch-sid

- Python version: 3.7.7

- PyTorch version (GPU?): 1.5.1 (True)

- Tensorflow version (GPU?): not installed (NA)

- Using GPU in script?: No

- Using distributed or parallel set-up in script?: No

### Who can help

Models:

- gpt2: @patrickvonplaten, @LysandreJik

## Information

Model I am using (Bert, XLNet ...): GPT2

The problem arises when using:

[*] the official example scripts: (give details below)

When I try to print all the named parameters of GPT2 model with LM head, `model.lm_head` does not appear in the list.

In my experiment, I tried to freeze all the parameters except the lm head, however, the lm head is frozen together when model.transformer.wte is frozen.

## To reproduce

Steps to reproduce the behavior:

1. Load model

```

from transformers import AutoModelForCausalLM

gpt2 = AutoModelForCausalLM.from_pretrained("gpt2")

```

2. freeze the transformer part

```

for p in gpt2.transformer.parameters():

p.requires_grad=False

```

or just:

```

for p in gpt2.transformer.wte.parameters():

p.requires_grad=False

```

3. check lm_head

```

for p in gpt2.lm_head.parameters():

print(p.requires_grad)

```

and the output of the third step is False.

4. When I try printing all the named parameters

```

components = [k for k,v in gpt2.named_parameters()]

print(components)

```

The output is as follows:

['transformer.wte.weight', 'transformer.wpe.weight', 'transformer.h.0.ln_1.weight', 'transformer.h.0.ln_1.bias', 'transformer.h.0.attn.c_attn.weight', 'transformer.h.0.attn.c_attn.bias', 'transformer.h.0.attn.c_proj.weight', 'transformer.h.0.attn.c_proj.bias', 'transformer.h.0.ln_2.weight', 'transformer.h.0.ln_2.bias', 'transformer.h.0.mlp.c_fc.weight', 'transformer.h.0.mlp.c_fc.bias', 'transformer.h.0.mlp.c_proj.weight', 'transformer.h.0.mlp.c_proj.bias', 'transformer.h.1.ln_1.weight', 'transformer.h.1.ln_1.bias', 'transformer.h.1.attn.c_attn.weight', 'transformer.h.1.attn.c_attn.bias', 'transformer.h.1.attn.c_proj.weight', 'transformer.h.1.attn.c_proj.bias', 'transformer.h.1.ln_2.weight', 'transformer.h.1.ln_2.bias', 'transformer.h.1.mlp.c_fc.weight', 'transformer.h.1.mlp.c_fc.bias', 'transformer.h.1.mlp.c_proj.weight', 'transformer.h.1.mlp.c_proj.bias', 'transformer.h.2.ln_1.weight', 'transformer.h.2.ln_1.bias', 'transformer.h.2.attn.c_attn.weight', 'transformer.h.2.attn.c_attn.bias', 'transformer.h.2.attn.c_proj.weight', 'transformer.h.2.attn.c_proj.bias', 'transformer.h.2.ln_2.weight', 'transformer.h.2.ln_2.bias', 'transformer.h.2.mlp.c_fc.weight', 'transformer.h.2.mlp.c_fc.bias', 'transformer.h.2.mlp.c_proj.weight', 'transformer.h.2.mlp.c_proj.bias', 'transformer.h.3.ln_1.weight', 'transformer.h.3.ln_1.bias', 'transformer.h.3.attn.c_attn.weight', 'transformer.h.3.attn.c_attn.bias', 'transformer.h.3.attn.c_proj.weight', 'transformer.h.3.attn.c_proj.bias', 'transformer.h.3.ln_2.weight', 'transformer.h.3.ln_2.bias', 'transformer.h.3.mlp.c_fc.weight', 'transformer.h.3.mlp.c_fc.bias', 'transformer.h.3.mlp.c_proj.weight', 'transformer.h.3.mlp.c_proj.bias', 'transformer.h.4.ln_1.weight', 'transformer.h.4.ln_1.bias', 'transformer.h.4.attn.c_attn.weight', 'transformer.h.4.attn.c_attn.bias', 'transformer.h.4.attn.c_proj.weight', 'transformer.h.4.attn.c_proj.bias', 'transformer.h.4.ln_2.weight', 'transformer.h.4.ln_2.bias', 'transformer.h.4.mlp.c_fc.weight', 
'transformer.h.4.mlp.c_fc.bias', 'transformer.h.4.mlp.c_proj.weight', 'transformer.h.4.mlp.c_proj.bias', 'transformer.h.5.ln_1.weight', 'transformer.h.5.ln_1.bias', 'transformer.h.5.attn.c_attn.weight', 'transformer.h.5.attn.c_attn.bias', 'transformer.h.5.attn.c_proj.weight', 'transformer.h.5.attn.c_proj.bias', 'transformer.h.5.ln_2.weight', 'transformer.h.5.ln_2.bias', 'transformer.h.5.mlp.c_fc.weight', 'transformer.h.5.mlp.c_fc.bias', 'transformer.h.5.mlp.c_proj.weight', 'transformer.h.5.mlp.c_proj.bias', 'transformer.h.6.ln_1.weight', 'transformer.h.6.ln_1.bias', 'transformer.h.6.attn.c_attn.weight', 'transformer.h.6.attn.c_attn.bias', 'transformer.h.6.attn.c_proj.weight', 'transformer.h.6.attn.c_proj.bias', 'transformer.h.6.ln_2.weight', 'transformer.h.6.ln_2.bias', 'transformer.h.6.mlp.c_fc.weight', 'transformer.h.6.mlp.c_fc.bias', 'transformer.h.6.mlp.c_proj.weight', 'transformer.h.6.mlp.c_proj.bias', 'transformer.h.7.ln_1.weight', 'transformer.h.7.ln_1.bias', 'transformer.h.7.attn.c_attn.weight', 'transformer.h.7.attn.c_attn.bias', 'transformer.h.7.attn.c_proj.weight', 'transformer.h.7.attn.c_proj.bias', 'transformer.h.7.ln_2.weight', 'transformer.h.7.ln_2.bias', 'transformer.h.7.mlp.c_fc.weight', 'transformer.h.7.mlp.c_fc.bias', 'transformer.h.7.mlp.c_proj.weight', 'transformer.h.7.mlp.c_proj.bias', 'transformer.h.8.ln_1.weight', 'transformer.h.8.ln_1.bias', 'transformer.h.8.attn.c_attn.weight', 'transformer.h.8.attn.c_attn.bias', 'transformer.h.8.attn.c_proj.weight', 'transformer.h.8.attn.c_proj.bias', 'transformer.h.8.ln_2.weight', 'transformer.h.8.ln_2.bias', 'transformer.h.8.mlp.c_fc.weight', 'transformer.h.8.mlp.c_fc.bias', 'transformer.h.8.mlp.c_proj.weight', 'transformer.h.8.mlp.c_proj.bias', 'transformer.h.9.ln_1.weight', 'transformer.h.9.ln_1.bias', 'transformer.h.9.attn.c_attn.weight', 'transformer.h.9.attn.c_attn.bias', 'transformer.h.9.attn.c_proj.weight', 'transformer.h.9.attn.c_proj.bias', 'transformer.h.9.ln_2.weight', 
'transformer.h.9.ln_2.bias', 'transformer.h.9.mlp.c_fc.weight', 'transformer.h.9.mlp.c_fc.bias', 'transformer.h.9.mlp.c_proj.weight', 'transformer.h.9.mlp.c_proj.bias', 'transformer.h.10.ln_1.weight', 'transformer.h.10.ln_1.bias', 'transformer.h.10.attn.c_attn.weight', 'transformer.h.10.attn.c_attn.bias', 'transformer.h.10.attn.c_proj.weight', 'transformer.h.10.attn.c_proj.bias', 'transformer.h.10.ln_2.weight', 'transformer.h.10.ln_2.bias', 'transformer.h.10.mlp.c_fc.weight', 'transformer.h.10.mlp.c_fc.bias', 'transformer.h.10.mlp.c_proj.weight', 'transformer.h.10.mlp.c_proj.bias', 'transformer.h.11.ln_1.weight', 'transformer.h.11.ln_1.bias', 'transformer.h.11.attn.c_attn.weight', 'transformer.h.11.attn.c_attn.bias', 'transformer.h.11.attn.c_proj.weight', 'transformer.h.11.attn.c_proj.bias', 'transformer.h.11.ln_2.weight', 'transformer.h.11.ln_2.bias', 'transformer.h.11.mlp.c_fc.weight', 'transformer.h.11.mlp.c_fc.bias', 'transformer.h.11.mlp.c_proj.weight', 'transformer.h.11.mlp.c_proj.bias', 'transformer.ln_f.weight', 'transformer.ln_f.bias']

## Expected behavior

<!-- A clear and concise description of what you would expect to happen. -->

May I ask what the connection is between the LM head and the wte layer, and is it possible to freeze the GPT2 model except for the LM head? | 05-27-2021 13:21:26 | 05-27-2021 13:21:26 | Hi! In GPT-2, as with most models, the LM head is tied to the embeddings: it has the same weights.
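The effect of tying can be pictured with a toy model in plain Python. This is a sketch, not the GPT-2 implementation: `Param`, `Layer`, and `TinyLM` are hypothetical classes, and the only assumption carried over is that the head and the embedding hold the same parameter object and that shared parameters are listed once.

```python
class Param:
    """Toy stand-in for a trainable tensor."""

    def __init__(self, name):
        self.name = name
        self.requires_grad = True


class Layer:
    def __init__(self, weight):
        self.weight = weight


class TinyLM:
    """Toy model whose output head reuses the embedding weight."""

    def __init__(self):
        shared = Param("wte.weight")
        self.wte = Layer(shared)
        self.lm_head = Layer(shared)  # tied: same object, not a copy

    def named_parameters(self):
        # Report each distinct parameter object once, under the first name seen.
        seen, result = set(), []
        for name, layer in (("wte", self.wte), ("lm_head", self.lm_head)):
            if id(layer.weight) not in seen:
                seen.add(id(layer.weight))
                result.append(name + ".weight")
        return result


model = TinyLM()
model.wte.weight.requires_grad = False  # freeze only the embedding
head_trainable = model.lm_head.weight.requires_grad
listed = model.named_parameters()
```

Because both layers point at one object, freezing `wte` also freezes the head, and the parameter list shows only the embedding name, which matches both observations in the report.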

You can play around with the `tie_word_embeddings` configuration option, but your LM head will be randomly initialized.<|||||>Thank you very much! |

transformers | 11,902 | closed | [Flax] return attentions | # What does this PR do?


<!-- Remove if not applicable -->

Fixes # (issue)

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.


| 05-27-2021 12:08:52 | 05-27-2021 12:08:52 | |

transformers | 11,901 | closed | [Flax] Add attention weights outputs to all models | # 🚀 Feature request

At the moment we cannot return a list of attention weight outputs in Flax as we can do in PyTorch.

In PyTorch, there is an `output_attentions` boolean in the forward call of every model, see [here](https://github.com/huggingface/transformers/blob/42fe0dc23e4a7495ebd08185f5850315a1a12dc0/src/transformers/models/bert/modeling_bert.py#L528), which, when set to True, collects all attention weights and returns them as a tuple.

In PyTorch, the attention weights are returned (if `output_attentions=True`) from the self-attention layer *e.g.* here: https://github.com/huggingface/transformers/blob/42fe0dc23e4a7495ebd08185f5850315a1a12dc0/src/transformers/models/bert/modeling_bert.py#L331 and then passed with the outputs.

Currently, this is not implemented in Flax and needs to be done. At the moment the function [`dot_product_attention`](https://github.com/google/flax/blob/6fb839c640de80f887580a533b222c6dddf04c0d/flax/linen/attention.py#L109) is used in every Flax model which makes it impossible to retrieve the attention weights, see [here](https://github.com/huggingface/transformers/blob/42fe0dc23e4a7495ebd08185f5850315a1a12dc0/src/transformers/models/bert/modeling_flax_bert.py#L244). However recently the Flax authors refactored this function into a smaller one called [`dot_product_attention_weights`](https://github.com/google/flax/blob/6fb839c640de80f887580a533b222c6dddf04c0d/flax/linen/attention.py#L37) which would allow us to correctly retrieve the attention weights if needed. To do so all `dot_product_attention` functions should be replaced by `dot_product_attention_weights`, followed by a `jnp.einsum`, see [here](https://github.com/google/flax/blob/6fb839c640de80f887580a533b222c6dddf04c0d/flax/linen/attention.py#L162) so that we can retrieve the attention weights.

Next, the whole `output_attentions` logic should be implemented for all Flax models, analogous to `output_hidden_states`.
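The split proposed above (compute the weights first, then contract them with the values) can be sketched in plain Python for a single query. This is an illustration only and not Flax code: `attention_weights` stands in for the role of `dot_product_attention_weights`, the list comprehension in `attend` stands in for the `jnp.einsum` contraction, and both function names are hypothetical.

```python
import math


def attention_weights(query, keys):
    """Softmax of scaled dot products between one query and each key."""
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * k for q, k in zip(query, key)) for key in keys]
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values, output_attentions=False):
    """Weighted sum of values; optionally also return the weights."""
    weights = attention_weights(query, keys)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return (output, weights) if output_attentions else (output,)


out, attn = attend(
    [1.0, 0.0],
    [[1.0, 0.0], [1.0, 0.0]],
    [[2.0, 0.0], [4.0, 0.0]],
    output_attentions=True,
)
```

Keeping the weights as a separate intermediate value is what makes it possible to return them alongside the output when `output_attentions` is set.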

| 05-27-2021 11:11:26 | 05-27-2021 11:11:26 | Also, adding this feature will require us to bump up the Flax dependency to `>=0.3.4` for `flax` in https://github.com/huggingface/transformers/blob/master/setup.py<|||||>I am starting with Bert.<|||||>Hi @patrickvonplaten @patil-suraj https://github.com/huggingface/transformers/pull/11918 |

transformers | 11,900 | closed | [Community Notebooks] Add Emotion Speech Notebook | # What does this PR do?


<!-- Remove if not applicable -->

Adds a notebook for Emotion Classification

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.


| 05-27-2021 09:44:33 | 05-27-2021 09:44:33 | Amazing notebook @m3hrdadfi ! |

transformers | 11,899 | closed | Provides an option to select the parallel mode of the Trainer. | # 🚀 Feature request

Provides an option to select the parallel mode of the Trainer.

## Motivation

With multiple GPUs, Trainer uses `nn.DataParallel` for parallel computing by default; however, this approach results in high memory usage on the first GPU. Please provide an API to switch to `nn.parallel.DistributedDataParallel`. Also, is there an option to turn off parallel computing for the `Trainer.predict()` function?

## Your contribution

<!-- Is there any way that you could help, e.g. by submitting a PR?

Make sure to read the CONTRIBUTING.MD readme:

https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md -->

@sgugger | 05-27-2021 09:03:05 | 05-27-2021 09:03:05 | This is already implemented, it just depends on how you launch your training script. To use distributed data parallel, you have to launch it with `torch.distributed.launch`.<|||||>> This is already implemented, it just depends on how you launch your training script. To use distributed data parallel, you have to launch it with `torch.distributed.launch`.

Hi~

How can I do this in Jupyter?

Also, what should I do if I use `DataParallel` for training and only want to use one GPU for prediction?

`DataParallel` requires dropping the last incomplete batch, which is not allowed in the prediction phase.

Thanks

<|||||>You can't do this directly in jupyter, you have to launch a script using the pytorch utilities (it's not a Trainer limitation, it's a PyTorch one). You can completely predict in parallel with the `Trainer`, it will complete the last batch to make it the same size as the others and then truncate the predictions.<|||||>> You can't do this directly in jupyter, you have to launch a script using the pytorch utilities (it's not a Trainer limitation, it's a PyTorch one). You can completely predict in parallel with the `Trainer`, it will complete the last batch to make it the same size as the others and then truncate the predictions.

But we can't truncate predictions because it's a contest or a client's demand and we need all the test results we can get.

As a demo, #11833

https://github.com/huggingface/transformers/issues/11833<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored. |
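
The pad-then-truncate behaviour described in the comments above can be illustrated with a minimal, framework-free sketch. It assumes that padding is done by wrapping around to the start of the dataset and that predictions are gathered in rank order; the names `pad_indices`, `shard`, and `predict_all` are hypothetical and are not part of the `Trainer` API. The point is only that truncation removes the padded duplicates, not real test results.

```python
import math


def pad_indices(num_samples, num_replicas):
    """Return sample indices padded by wrap-around so they split evenly across replicas."""
    if num_samples == 0:
        return [], 0
    per_replica = math.ceil(num_samples / num_replicas)
    total = per_replica * num_replicas
    # Indices past the end of the dataset wrap around to the beginning.
    return [i % num_samples for i in range(total)], per_replica


def shard(indices, per_replica, rank):
    """Contiguous slice of the padded indices handled by one replica."""
    return indices[rank * per_replica:(rank + 1) * per_replica]


def predict_all(samples, num_replicas, predict_fn):
    """Simulate per-replica prediction, gather in rank order, then drop the padding."""
    indices, per_replica = pad_indices(len(samples), num_replicas)
    gathered = []
    for rank in range(num_replicas):
        gathered.extend(predict_fn(samples[i]) for i in shard(indices, per_replica, rank))
    # Only the padded duplicates at the end are removed.
    return gathered[:len(samples)]
```

With 10 samples and 4 replicas, each replica processes 3 items (12 in total), and the 2 wrapped-around duplicates are cut off after gathering, so every original sample still gets exactly one prediction.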

transformers | 11,898 | closed | multi gpu errors | I want to train on multiple GPUs, but it raises an error:

model = nn.DataParallel(model)

model = model.cuda()

model.train_model(train_df, eval_data=eval_df)

torch.nn.modules.module.ModuleAttributeError: 'DataParallel' object has no attribute 'train_model'

So how can I use multiple GPUs? | 05-27-2021 08:02:08 | 05-27-2021 08:02:08 | Is this related to `transformers`? <|||||>Yes, this is simpletransformers, and I find multi-GPU training with transformers hard to run. <|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored. |

transformers | 11,897 | closed | Fix Tensorflow Bart-like positional encoding | # What does this PR do?


<!-- Remove if not applicable -->

Fixes #11724

## Before submitting

- [x] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [x] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [x] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

@patrickvonplaten

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.


| 05-27-2021 04:45:16 | 05-27-2021 04:45:16 | |

transformers | 11,896 | closed | Update deepspeed config to reflect hyperparameter search parameters | # What does this PR do?

This PR adds a few lines of code to the Trainer so that it rebuilds the Deepspeed config when running hyperparameter_search. As is, if you run hyperparameter_search while using Deepspeed, the TrainingArguments are updated but the Deepspeed config is not. The two become out of sync, and Deepspeed effectively ignores any hyperparameter search trial parameters that are set via the Deepspeed config.

This fixes #11894
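
As a rough illustration of the kind of synchronization described above (not the actual code in this PR), the sketch below copies trial values into a Deepspeed-style config dictionary. The helper name `sync_ds_config` and the trial keys are assumptions made for illustration; `optimizer.params.lr`, `optimizer.params.weight_decay`, and `train_micro_batch_size_per_gpu` are used here as the corresponding Deepspeed config entries.

```python
import copy


def sync_ds_config(ds_config, trial_params):
    """Return a copy of ds_config with entries overridden by trial_params.

    Hypothetical helper: it only shows how a stale config could be brought
    back in line with the hyperparameters chosen for one search trial.
    """
    config = copy.deepcopy(ds_config)  # leave the caller's config untouched
    optimizer_params = config.setdefault("optimizer", {}).setdefault("params", {})
    if "learning_rate" in trial_params:
        optimizer_params["lr"] = trial_params["learning_rate"]
    if "weight_decay" in trial_params:
        optimizer_params["weight_decay"] = trial_params["weight_decay"]
    if "per_device_train_batch_size" in trial_params:
        config["train_micro_batch_size_per_gpu"] = trial_params["per_device_train_batch_size"]
    return config
```

Rebuilding the config from a fresh copy for every trial, rather than mutating a shared dictionary, avoids values from one trial leaking into the next.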

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [x] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case. --> https://github.com/huggingface/transformers/issues/11894

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

I ran the Deepspeed tests and Trainer tests locally; everything passed except for `test_stage3_nvme_offload` but I think that was a hardware compatibility issue on my local machine.

## Who can review?

@stas00 (and maybe whoever implemented hyperparameter_search() in the Trainer)

| 05-27-2021 04:43:22 | 05-27-2021 04:43:22 | Code quality check passes on my local machine 🤔

Happy to change formatting if necessary, just not sure what to change.<|||||>You probably have a different version of `black`.

Please try:

```

cd transformers

pip install -e .[dev]

make fixup

```

this should re-align the versions.<|||||>Turns out I just ran the style check on the wrong branch the first time; my bad.

Should be fixed now. <|||||>the doc job failure is unrelated - we will re-run it when other jobs finish - the CI has been quite flakey...<|||||>Thanks for your PR! |

transformers | 11,895 | closed | Small error in documentation / Typo | The documentation for the BART decoder layer states that the hidden states and the encoder hidden states are expected in "(seq_len, batch, embed_dim)" format, whereas "(batch, seq_len, embed_dim)" is what is actually expected. This led to a bit of confusion, so it would be great if it were corrected! :)

Relevant lines:

https://github.com/huggingface/transformers/blob/996a315e76f6c972c854990e6114226a91bc0a90/src/transformers/models/bart/modeling_bart.py#L373

https://github.com/huggingface/transformers/blob/996a315e76f6c972c854990e6114226a91bc0a90/src/transformers/models/bart/modeling_bart.py#L376

@patrickvonplaten | 05-27-2021 03:40:12 | 05-27-2021 03:40:12 | Thanks for spotting. Feel free to open a PR to fix this :)

By the way, I see you're the main author of MDETR (amazing work!). I'm currently adding DETR to the repo (see #11653), so if you are up to help me add MDETR to the repo, feel free to reach out :)

<|||||>Ooh thanks :D That sounds great, I'd be happy to help :) Will send you an email. |

transformers | 11,894 | closed | Deepspeed integration ignores Optuna trial parameters in hyperparameter_search | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version: 4.6.1

- Platform: Linux-4.19.0-16-cloud-amd64-x86_64-with-glibc2.10

- Python version: 3.8.10

- PyTorch version (GPU?): 1.8.1+cu111 (True)